Significance

As a fundamental concept in information theory, mutual information has been commonly applied to quantify the dependence between variables. However, existing estimators are statistically unstable because they depend on a set of tuning parameters. We develop a jackknife approach that requires no predetermined tuning parameters. The proposed approach enjoys several appealing theoretical properties and has stable numerical performance.

Keywords: jackknifed estimation, mutual information, statistical dependence, kernel density estimation

Abstract

Quantifying the dependence between two random variables is a fundamental issue in data analysis, and thus many measures have been proposed. Recent studies have focused on the renowned mutual information (MI) [Reshef DN, et al. (2011) Science 334:1518–1524]. However, “Unfortunately, reliably estimating mutual information from finite continuous data remains a significant and unresolved problem” [Kinney JB, Atwal GS (2014) Proc Natl Acad Sci USA 111:3354–3359]. In this paper, we examine the kernel estimation of MI and show that the bandwidths involved should be equalized. We consider a jackknife version of the kernel estimate with equalized bandwidth and allow the bandwidth to vary over an interval. We estimate the MI by the largest value among these kernel estimates and establish the associated theoretical underpinnings.

A key issue in data science is how to measure the dependence between two random variables. Pearson’s correlation coefficient (1) provides a powerful measure for linear dependence, but it is incapable of detecting nonlinear association (2, 3). Thus, many other measures have been introduced to quantify complex dependence. For example, Gretton et al. (4) proposed a kernel-based independence criterion that uses the squared Hilbert–Schmidt norm of the cross-covariance operator. Székely, Rizzo, and Bakirov (5) introduced the distance correlation (dCor) which does not involve any nonparametric estimation and is free of tuning parameters. Heller, Heller, and Gorfine (6) proposed a rank-based distance measure which demonstrates good numerical performance. Although many different measures have been proposed, the mutual information introduced by Claude Shannon in 1948 (7) is not replaceable and is still of great research interest. As a fundamental measure of dependence, mutual information (MI) possesses several desirable properties and can be interpreted intuitively (8). These advantages secure MI as a very powerful measure of nonlinear dependence with very wide applications in data analysis. As such, studies have focused on its mathematical properties and its estimation efficiency (2, 9, 10).

For continuous data, there are three typical groups of estimation for MI. The first group is the “bins” method that discretizes the continuous data into different bins and estimates MI from the discretized data (11, 12). The asymptotic performance for this bins method is systematically analyzed in ref. 13. The second group is based on estimates of probability density functions, for example, the histogram estimator of ref. 14, the kernel density estimator (KDE) of ref. 15, the B-spline estimator of ref. 16, and the wavelet estimator of ref. 17. To reduce the bias at the boundary region, ref. 18 introduced the mirrored KDE and derived its exponential concentration bound. Recently, ref. 19 further applied the ensemble method in kernel estimation and derived the ensemble estimator. The third group is based on the relationship between the MI and entropies. One of the most popular estimations in this group is the k-nearest neighbors (kNN) estimator introduced in ref. 20, which was extended in ref. 10, leading to the introduction of the Kraskov–Stögbauer–Grassberger (KSG) estimator. This estimator is further discussed in refs. 21–23.

Although many different approaches have been considered, the estimation of MI, especially for continuous data, relies heavily on the choice of the tuning parameters involved such as the number of bins, the bandwidth in kernel density estimation, and the number of neighbors in the kNN estimator. As a consequence, the corresponding estimators may be very unstable or seriously biased. However, little research has been done on the selection of these parameters. In this paper, we focus on the KDE approach (24, 25), which involves at least four bandwidth matrices. Experience with tests for independence suggests that the bandwidths should be set equal. Equalization of bandwidths also helps us reduce the bias at the boundary region and thus improve the efficiency of estimation. To free the estimation from bandwidth selection, we consider a jackknife version of MI (called JMI) and show that JMI has asymptotically a unique maximum with respect to the equalized bandwidth. We adopt the maximum value as our estimator of MI and provide the necessary statistical underpinnings. Interestingly, for the very special case of independent random variables, JMI enjoys a consistency rate higher than that of root-n. Numerically, we compare the estimation efficiency of JMI vs. that of other estimation methods that include the mixed KSG of ref. 23, the copula-based KSG of ref. 21, and other KDE methods. We also construct a test for independence (2, 3, 26) based on the JMI and compare it with several popular methods, such as the dCor of ref. 5, the maximal information coefficient (MIC) of ref. 9, and the Heller–Heller–Gorfine (HHG) test of ref. 6. These comparisons demonstrate the superior performance of JMI.

MI and Its Kernel Estimation

Consider two random variables $X = (x_1, \dots, x_P)^\top$ and $Y = (y_1, \dots, y_Q)^\top$. Let us focus on the case that their joint probability density function exists. MI is defined as

$$\mathrm{MI}(X, Y) = \int f_{XY}(x, y) \log \frac{f_{XY}(x, y)}{f_X(x)\, f_Y(y)} \, dx \, dy,$$

where $f_X(x)$, $f_Y(y)$, and $f_{XY}(x, y)$ are the density functions of $X$, $Y$, and $(X, Y)$, respectively. This definition can be easily extended to other types of random variables that may not have density functions (13). As a measure of complex dependence, MI possesses the following desirable properties. It is always nonnegative, i.e., $\mathrm{MI}(X, Y) \ge 0$, and equality holds if and only if the two variables are independent. Moreover, the stronger is the dependence between two variables, the larger is the MI. MI is also invariant under strictly monotonic variable transformations. More recently, Kinney and Atwal (2) proved that MI satisfies the so-called self-equitability, indicating that MI places the same importance on linear and nonlinear dependence.
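As a small numerical illustration of these properties, the sketch below evaluates the discrete analogue of the definition (a sum over a joint probability table instead of an integral over densities). The function name `mutual_information` and the three example tables are hypothetical choices made here for illustration; they do not come from the paper.

```python
import numpy as np

def mutual_information(joint):
    """MI (in nats) of a discrete joint probability table."""
    joint = np.asarray(joint, dtype=float)
    joint = joint / joint.sum()              # normalise, in case counts were given
    px = joint.sum(axis=1, keepdims=True)    # marginal of the row variable
    py = joint.sum(axis=0, keepdims=True)    # marginal of the column variable
    mask = joint > 0                         # cells with zero mass contribute 0
    return float(np.sum(joint[mask] * np.log(joint[mask] / (px * py)[mask])))

independent = np.outer([0.3, 0.7], [0.4, 0.6])       # joint = product of marginals
dependent = np.array([[0.45, 0.05], [0.05, 0.45]])   # partial dependence
identical = np.array([[0.5, 0.0], [0.0, 0.5]])       # one variable determines the other

mi_independent = mutual_information(independent)
mi_dependent = mutual_information(dependent)
mi_identical = mutual_information(identical)
```

In this toy setting the independent table gives an MI of zero up to rounding, and the value grows as the dependence strengthens, reaching $\log 2$ when one binary variable determines the other.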

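Let $(X_i, Y_i)$ with $X_i = (x_{i1}, \dots, x_{iP})^\top$ and $Y_i = (y_{i1}, \dots, y_{iQ})^\top$, $i = 1, \dots, n$, be independent samples from $(X, Y)$. Let $|\cdot|$ represent the determinant of a matrix. Consider the following multivariate KDEs:

$$\hat f_X(x) = \frac{1}{n} \sum_{j=1}^{n} K_{H_X}(X_j - x), \qquad \hat f_Y(y) = \frac{1}{n} \sum_{j=1}^{n} K_{H_Y}(Y_j - y), \qquad \hat f_{XY}(x, y) = \frac{1}{n} \sum_{j=1}^{n} K_{B_X}(X_j - x)\, K_{B_Y}(Y_j - y).$$

Here $H_X$, $H_Y$, $B_X$, and $B_Y$ are diagonal bandwidth matrices with $H_X = \mathrm{diag}(h_{X,1}, \dots, h_{X,P})$, $H_Y = \mathrm{diag}(h_{Y,1}, \dots, h_{Y,Q})$, $B_X = \mathrm{diag}(b_{X,1}, \dots, b_{X,P})$, and $B_Y = \mathrm{diag}(b_{Y,1}, \dots, b_{Y,Q})$; typically, for diagonal matrix $H$, $K_H(x) = |H|^{-1} K(H^{-1} x)$, where $K$ is a P-dimensional symmetric density function. Based on these estimators, the KDE of MI is

$$\widehat{\mathrm{MI}} = \frac{1}{n} \sum_{i=1}^{n} \log \frac{\hat f_{XY}(X_i, Y_i)}{\hat f_X(X_i)\, \hat f_Y(Y_i)}. \tag{1}$$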

This estimator is consistent under some mild conditions. However, as pointed out in ref. 15, its numerical performance is heavily influenced by the choice of bandwidths. Another problem is the notorious boundary effect, which becomes more serious because the marginal density estimates appear in the denominator.
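The sensitivity to bandwidths can be seen in a minimal sketch of a plug-in (resubstitution) KDE estimate of MI for univariate data. The Gaussian kernel, the function names `gaussian_kernel` and `kde_mi`, the argument names `h_x`, `h_y`, `b_x`, `b_y`, and the simulated data are all assumptions made here for illustration; this is not the authors' implementation.

```python
import numpy as np

def gaussian_kernel(diff, h):
    """Univariate Gaussian kernel K_h(d) = K(d / h) / h."""
    return np.exp(-0.5 * (diff / h) ** 2) / (h * np.sqrt(2.0 * np.pi))

def kde_mi(x, y, h_x, h_y, b_x, b_y):
    """Resubstitution KDE estimate of MI for univariate x and y.

    h_x, h_y: bandwidths of the two marginal density estimates.
    b_x, b_y: bandwidths of the joint (product-kernel) density estimate.
    """
    dx = x[:, None] - x[None, :]          # pairwise differences X_i - X_j
    dy = y[:, None] - y[None, :]
    f_x = gaussian_kernel(dx, h_x).mean(axis=1)
    f_y = gaussian_kernel(dy, h_y).mean(axis=1)
    f_xy = (gaussian_kernel(dx, b_x) * gaussian_kernel(dy, b_y)).mean(axis=1)
    return float(np.mean(np.log(f_xy / (f_x * f_y))))

rng = np.random.default_rng(0)
n = 500
x = rng.normal(size=n)
y = 0.8 * x + 0.6 * rng.normal(size=n)    # correlation 0.8; true MI = -0.5 * log(1 - 0.8**2)

# Same data, three different bandwidths (used for all four arguments).
estimates = {h: kde_mi(x, y, h, h, h, h) for h in (0.1, 0.3, 1.0)}
```

Printing `estimates` shows how strongly the value depends on the bandwidth: a small bandwidth inflates the estimate because each point contributes to its own density estimate, while a large bandwidth smooths the dependence away.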

In checking the independence between and , we usually compare the product of frequencies in hypercubes and with the frequency in their intersection . Let denote the number of elements in a set. By taking , where is the indicator function of set , those frequencies are, respectively,

and

Thus, the ratio of comparison is

Note that the bandwidth matrices corresponding to in both estimators of and are the same, and the same is true for the case of . We therefore argue that and should be imposed on the KDE. With these equalizations of bandwidths, the joint and marginal densities are well defined; i.e., and , which is an important feature in the definition of MI. Another important motivation for equalizing the bandwidths is that it can automatically reduce the estimation bias at the boundary region; see theoretical justification in the next section.
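The boundary argument can be illustrated with a short calculation for independent Uniform(0, 1) variables. The sketch assumes a Gaussian kernel (an assumption made here, not a choice stated in the text) and works with the exact expected values of the kernel estimates rather than with simulated data; the function names are hypothetical.

```python
from math import erf, sqrt

def norm_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))

def expected_kde_uniform(u, h):
    """E[f_hat(u)] for a Gaussian-kernel KDE of Uniform(0, 1) data with bandwidth h."""
    return norm_cdf((1.0 - u) / h) - norm_cdf(-u / h)

def expected_ratio(u, v, h_marg, b_joint):
    """E[joint KDE] / (E[marginal KDE] * E[marginal KDE]) for independent uniforms.

    The joint estimate uses a product kernel with bandwidth b_joint in each
    coordinate; both marginal estimates use bandwidth h_marg.
    """
    joint = expected_kde_uniform(u, b_joint) * expected_kde_uniform(v, b_joint)
    marg = expected_kde_uniform(u, h_marg) * expected_kde_uniform(v, h_marg)
    return joint / marg

interior = expected_kde_uniform(0.5, 0.1)   # close to the true density 1
boundary = expected_kde_uniform(0.0, 0.1)   # close to 0.5: half the kernel mass lies outside [0, 1]

# Near the corner (0.02, 0.02) the true ratio is 1 because the variables are independent.
ratio_equal = expected_ratio(0.02, 0.02, h_marg=0.1, b_joint=0.1)
ratio_unequal = expected_ratio(0.02, 0.02, h_marg=0.05, b_joint=0.1)
```

With equal bandwidths the boundary bias of the joint estimate cancels against that of the marginals, so `ratio_equal` is exactly 1; with unequal bandwidths `ratio_unequal` falls well below 1 near the corner. This is only an illustration of the mechanism in the simplest case, not a substitute for the theoretical justification.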

Jackknife Estimation of MI

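Marginal transformation is an efficient way to improve the estimation in Eq. 1 and to reduce the technical complexity (27, 28). We consider the uniform transformation $U = (F_{X,1}(x_1), \dots, F_{X,P}(x_P))^\top$ and $V = (F_{Y,1}(y_1), \dots, F_{Y,Q}(y_Q))^\top$, where $F_{X,p}$ and $F_{Y,q}$ are the cumulative distribution functions of the $p$th component $x_p$ of $X$ and the $q$th component $y_q$ of $Y$, respectively. It is easy to see that $\mathrm{MI}(U, V) = \mathrm{MI}(X, Y)$. Use $c_U(u)$, $c_V(v)$, and $c_{UV}(u, v)$ to denote the copula density functions of $U$, $V$, and $(U, V)$, respectively. For observed data, the corresponding transformation is $U_i = (\hat F_{X,1}(x_{i1}), \dots, \hat F_{X,P}(x_{iP}))^\top$ and $V_i = (\hat F_{Y,1}(y_{i1}), \dots, \hat F_{Y,Q}(y_{iQ}))^\top$, with $\hat F_{X,p}$ and $\hat F_{Y,q}$ denoting the empirical cumulative distribution functions of $x_p$ and $y_q$, respectively.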
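The empirical version of this transformation can be sketched in a few lines. The function name `empirical_cdf_transform` is hypothetical, and the convention used here (the fraction of observations less than or equal to each value, applied column by column) is one common definition of the empirical cumulative distribution function; the paper's own code may scale the ranks differently.

```python
import numpy as np

def empirical_cdf_transform(sample):
    """Map each column of `sample` to (0, 1] through its empirical CDF."""
    sample = np.asarray(sample, dtype=float)
    if sample.ndim == 1:
        sample = sample[:, None]          # treat a vector as a single column
    n = sample.shape[0]
    out = np.empty_like(sample)
    for k in range(sample.shape[1]):
        col = sample[:, k]
        # number of observations <= each value, divided by n
        out[:, k] = np.searchsorted(np.sort(col), col, side="right") / n
    return out

x = np.array([3.0, 1.0, 2.0, 2.0])
u = empirical_cdf_transform(x)
u_after_exp = empirical_cdf_transform(np.exp(x))   # strictly increasing map of x
```

Because the transformed values depend on the data only through their order, `u` and `u_after_exp` coincide, which mirrors the invariance of MI under strictly monotonic transformations.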

A main problem with the KDE is the selection of bandwidths. In the literature, many selection methods have been proposed (29–31). Although good properties have been proved for these selectors in the estimation of density function, applying them to the estimation of MI does not work well (15, 32).

Jackknife Estimation.

Note that MI is zero when the two random variables are independent, and generally bigger MIs imply stronger dependence. Thus, the bandwidths should be selected to maximize MI to detect possible dependence. This idea was also applied in statistical tests (33, 34). However, maximizing MI tends to overfit the MI, making it possibly infinite. Instead, we use the jackknife estimation (27, 35). Let

| (2) |

with bandwidth matrices , , , , and

We estimate MI by the maximum of the jackknife estimate in Eq. 2 over its bandwidth arguments. With four bandwidth matrices as arguments, this maximization is not easy. However, as suggested above, the bandwidths in the calculation should be set equal. The bias expansion in Theorem 1 also indicates that this equalization of bandwidths in Eq. 2 is helpful for counteracting boundary bias.

For theoretical analysis, we define an “oracle” estimator

Note that is exactly 0 when the two variables are independent and, by the central limit theorem, it is root-n consistent when and . As is actually not obtainable, hereafter we use it only as a benchmark for asymptotic analysis.

Theorem 1.

Under general regularity conditions (SI Appendix, section C, Assumptions A.1, A.2, A.5, and A.6) on functions , , , and and bandwidth matrices, we have

where is given in SI Appendix, Eq. S1. Furthermore,

The first part of Theorem 1 indicates that a kernel-based estimate of a copula density has a bias of the same order as the bandwidth. It is caused by the boundary points since the kernel density estimation has a much faster consistency rate for the interior points (36). The second part concerns , which involves a divisor in the form of a product of two marginal copula densities; it shows that its bias depends on the difference of bandwidths, i.e., and . If the commonly used selectors (24, 25, 31, 37) are adopted to select the bandwidths separately, then and are of order while and are of order and , respectively. Consequently, the kernel estimator of MI with those bandwidths would suffer a bias of order . However, if we equalize the bandwidths, the leading term in the bias will be eliminated automatically. To further simplify the bandwidth selection, we also restrict and with the identity matrix. This restriction is reasonable since each component of and is uniformly distributed on after the transformation. Thus, we consider a jackknife estimator of MI with one common bandwidth,

Our final estimator is

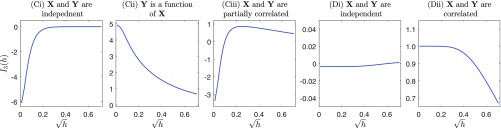

Since this estimation procedure involves a maximization problem, the existence of a global maximum is crucial for computation. With a different relationship between and , the objective function possesses three typical shapes: (i) When and are independent of each other, is an increasing function bounded by 0 as in Fig. 1 Ci; the maximum is achieved with a large and tends to 0. (ii) When and are functionally dependent (i.e., one is a function of the other), the objective function is monotone decreasing as in Fig. 1 Cii, suggesting a zero-valued bandwidth and that the estimated information tends to infinity. (iii) When and are partially correlated, is a unimodal function as shown in Fig. 1 Ciii. These shapes are further justified by Theorem 2. In particular, when and are partially correlated, Theorem 2 indicates that the unique maximum is achieved at .

Fig. 1.

Typical shapes of function . In Ci, Cii, and Ciii, at least one of and is a continuous variable; in Di and Dii, both and are discrete.
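The overall procedure (uniform transformation, leave-one-out kernel estimates with one common bandwidth, then maximization over the bandwidth) can be sketched as follows for univariate variables. This is a rough illustration under stated assumptions, not the authors' implementation: the Gaussian kernel, the bandwidth grid, and the names `ecdf`, `loo_mi`, and `jmi_sketch` are choices made here, and the reference code is the one in the authors' repository.

```python
import numpy as np

def ecdf(a):
    """Empirical CDF evaluated at the sample points."""
    return np.searchsorted(np.sort(a), a, side="right") / a.size

def gauss(d, h):
    """Gaussian kernel with bandwidth h (an assumed kernel choice)."""
    return np.exp(-0.5 * (d / h) ** 2) / (h * np.sqrt(2.0 * np.pi))

def loo_mi(u, v, h):
    """Leave-one-out kernel estimate of MI with one common bandwidth h."""
    n = u.size
    ku = gauss(u[:, None] - u[None, :], h)
    kv = gauss(v[:, None] - v[None, :], h)
    np.fill_diagonal(ku, 0.0)             # drop the i = j term: the jackknife step
    np.fill_diagonal(kv, 0.0)
    c_u = ku.sum(axis=1) / (n - 1)        # marginal estimate at U_i without point i
    c_v = kv.sum(axis=1) / (n - 1)
    c_uv = (ku * kv).sum(axis=1) / (n - 1)  # joint estimate, same bandwidth
    return float(np.mean(np.log(c_uv / (c_u * c_v))))

def jmi_sketch(x, y, grid=None):
    """Maximise the leave-one-out estimate over a grid of common bandwidths."""
    if grid is None:
        grid = np.geomspace(0.02, 1.0, 30)   # hypothetical search grid
    u, v = ecdf(x), ecdf(y)
    values = np.array([loo_mi(u, v, h) for h in grid])
    best = int(np.argmax(values))
    return float(values[best]), float(grid[best])

rng = np.random.default_rng(1)
n = 300
x = rng.normal(size=n)
mi_dep, h_dep = jmi_sketch(x, x + 0.5 * rng.normal(size=n))   # dependent pair
mi_ind, h_ind = jmi_sketch(x, rng.normal(size=n))             # independent pair
```

On such simulated data the maximized value should be clearly positive for the dependent pair and close to zero for the independent pair, in line with the shapes described above. A grid search is used only for simplicity; any one-dimensional optimizer could replace it.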

Theorem 2.

Under general regularity conditions (SI Appendix, section C, Assumptions A.1, A.5, and A.7) on functions , , , and bandwidth,

a) if and satisfies the regularity condition A.2 in SI Appendix, section C, then

where and are two nonnegative constants given in SI Appendix, section C. In particular, when and are independent.

b) if , and and are functionally dependent and satisfy regularity condition A.3 in SI Appendix, section C, then

Although JMI is defined for continuous random variables, it also applies to the discrete random variables and mixed random variables (with neither purely continuous distributions nor purely discrete distributions). If both and are discrete, depends on as shown in Fig. 1 Di or Dii: If they are independent, changes only marginally with ; otherwise remains unchanged when is smaller than a certain value and is decreasing thereafter, suggesting any bandwidth that is small enough will give the same estimator. If and have a mixture of continuous components and discrete components, depends on in the same way as in Fig. 1 Ci, Cii, and Ciii. More details about extension of JMI to discrete case can be found in SI Appendix, section G.

Compared with existing methods such as mirrored KDE (18), ensemble KDE (19), copula-based KSG (21), and mixed KSG (23), JMI has several advantages. First, it is more computationally efficient since only one common bandwidth is introduced. Second, the procedure is completely data driven, and we provide a stable selection procedure for bandwidth so that no tuning parameter needs to be predetermined. Third, our method does not necessitate boundary correction and yet it retains the same estimation efficiency because the boundary biases are eliminated automatically. Finally and most importantly, taking the unique maximum value makes JMI numerically stable.

Consistency of the Estimation.

We have the following results for the consistency of our estimator.

Theorem 3.

Under general regularity conditions (SI Appendix, section C, Assumptions A.1, A.5, and A.8) on functions , , , and bandwidth,

a) if and satisfies regularity condition A.2 in SI Appendix, section C, then

b) if and satisfies regularity condition A.4 in SI Appendix, section C, then

Note that is the oracle estimator of MI with root-n consistency. When and are both univariate random variables, Theorem 3a indicates that JMI has, in general, the same root-n consistency as , which is the minimax rate (38) for estimating MI. Interestingly, for the special case when and are independent, and the estimator converges to 0 at rate , which is even faster than root-n. We exploit this faster consistency rate for the independent case to yield a test for independence with high local power as shown in SI Appendix, section F.

Test for Independence

The proposed JMI estimator is a natural test statistic for independence. Tests based on asymptotic distributions require data with large sample size. In contrast, the permutation technique can give a precise distribution for even small samples (39–41). For a random sample of $n$ observations, $(X_i, Y_i)$, $i = 1, \dots, n$, from $(X, Y)$, let $(\pi(1), \dots, \pi(n))$ be a random permutation of $(1, \dots, n)$. Based on data $(X_i, Y_{\pi(i)})$, $i = 1, \dots, n$, calculate the jackknife estimator, denoted by $\hat I^{*}$. On repeating the above procedure $B$ times, $\hat I^{*}_1, \dots, \hat I^{*}_B$ are obtained. The distribution of the JMI estimator under the null hypothesis $H_0$: $X$ and $Y$ are independent, can be approximated by the empirical distribution of $\hat I^{*}_1, \dots, \hat I^{*}_B$. At significance level $\alpha$, we reject the null hypothesis when the JMI estimate based on the original data is greater than the $(1 - \alpha)$th quantile of $\hat I^{*}_1, \dots, \hat I^{*}_B$.
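The permutation procedure can be sketched generically, with the test statistic passed in as a function. To keep the example short and fast, the absolute Pearson correlation is used below as a stand-in statistic; in the setting of this paper the JMI estimate would take its place. The names `permutation_test` and `abs_corr`, the number of permutations, and the simulated data are assumptions made for illustration.

```python
import numpy as np

def permutation_test(x, y, statistic, n_perm=200, alpha=0.05, seed=0):
    """Permutation test of independence based on an arbitrary statistic.

    Returns (reject, observed, threshold), where threshold is the
    (1 - alpha) quantile of the statistic over permuted samples.
    """
    rng = np.random.default_rng(seed)
    observed = statistic(x, y)
    null_stats = np.empty(n_perm)
    for b in range(n_perm):
        # Permuting y breaks any dependence between x and y.
        null_stats[b] = statistic(x, y[rng.permutation(y.shape[0])])
    threshold = np.quantile(null_stats, 1.0 - alpha)
    return bool(observed > threshold), float(observed), float(threshold)

def abs_corr(x, y):
    """Stand-in statistic: absolute Pearson correlation (not the JMI)."""
    return abs(np.corrcoef(x, y)[0, 1])

rng = np.random.default_rng(42)
x = rng.normal(size=100)
y = x + 0.5 * rng.normal(size=100)        # strongly dependent on x
reject, observed, threshold = permutation_test(x, y, abs_corr)
```

For this strongly dependent pair the observed statistic lies far above the permutation threshold, so the null hypothesis of independence is rejected. Only the rejection rule described in the text is implemented; no p-value convention is assumed.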

Simulation Study

Estimation of MI.

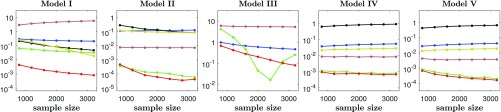

In this section, we compare the efficiency of the JMI estimator with that of existing estimators of MI. As shown in ref. 23, the mixed KSG has the best performance among the three kNN-based estimators they considered and outperforms the partitioning method in their simulation studies. We thus include the mixed KSG as a representative of kNN-based methods in the comparison. As the copula-based KSG (21) makes the same marginal transformation as ours, it is also included in the comparison. To illustrate the stability of the bandwidth selection of JMI, we compare its performance with that of mirrored KDE (18) and other KDE methods that select bandwidths, respectively, by the rule-of-thumb method (THUMB) and the plug-in method (PLUG-IN). We use the same models as in ref. 23 and their variations for multivariate cases, all together nine models, to evaluate the estimation methods. Details of these models are listed in SI Appendix, section D. Similar to ref. 23, the mean-squared errors (MSEs) of different methods for different models and sample sizes are calculated based on 250 replications. The results for the first five models are plotted in Fig. 2, while those for the other four models are shown in SI Appendix, section E. It can be seen that the other KDE methods have much bigger MSE than JMI, possibly due to the fact that unequalized bandwidths tend to cause boundary estimation bias. Copula-based KSG also performs badly as it is not applicable directly to discrete random variables. Mixed KSG shows satisfactory performance in all of the models but is worse than JMI, especially for models I and III. In comparison, our JMI has the smallest MSE for almost all models across different sample sizes, indicating its superior performance.

Fig. 2.

In each panel, the lines represent MSEs of different estimation methods based on 250 replications. Correspondence between colors and the methods is as follows: JMI in red, mixed KSG in green, rule of thumb KDE in blue, plug-in KDE in black, mirrored KDE in dark yellow, and copula-based KSG in brown. For model III, the plug-in method and the mirrored KDE method are not calculated due to their excessive computational complexity.

Test for Independence.

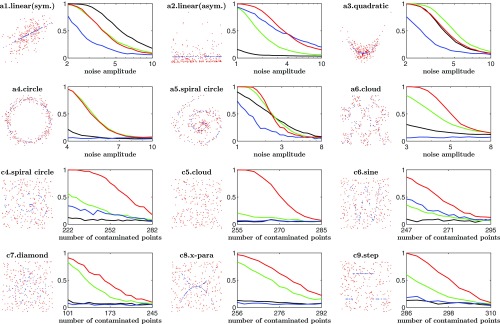

Many statistical tests have been proposed for independence. As demonstrated in ref. 3, HHG of ref. 6 usually has the best performance among all of the existing methods. To ease visualization, we include only dCor of ref. 5, HHG of ref. 6, and MIC of ref. 9 in this simulation study. We examine 16 models that were used in refs. 2, 3, and 6 which include both univariate models and multivariate models. For each model, we further consider the additive noise and the case where the data are contaminated with pure noise. Details of these models are listed in SI Appendix, section D. Similar to ref. 2, we simulate data with sample size and 25 different magnitudes of noise level. For models with additive noise, we increase the noise level by changing the noise ratio amplitude (NR), while for models with contaminations, we raise the noise level by introducing more contaminating observations. We plot the power curves for some of the results in Fig. 3, and the others are in SI Appendix, section E.

Fig. 3.

In each pair of panels, Left shows the model and the data, and Right shows the power of tests at significance level : Models a1–a6 with additive noise are in Upper panels, and models c4–c9 with contaminated noise are in Lower panels. For power curves, correspondence between colors and different methods is as follows: red for JMI, green for HHG, blue for MIC, and black for dCor.

We summarize the results as follows. dCor performs well only for linear models with symmetric additive noise, but poorly for the other models. Similar to results in refs. 2 and 3, MIC performs very well in testing independence for the high-frequency sine model but it fails in other models. In most cases, HHG has relatively better performance than MIC and dCor, which is consistent with the findings in ref. 3. Generally, JMI appears to be the most stable test for independence. It has similar performance to HHG for models with additive noise but has clear superiority for data with contaminated observations.

Discussion

In this paper, we have introduced a jackknife kernel estimation for the MI (JMI). Inspired by statistical tests for independence and guided by asymptotic analysis, we propose that the bandwidths involved in the estimation should be set equal. For measuring the dependence, we estimate the MI using its maximum value with respect to the equalized bandwidth. JMI is completely data driven and does not incur predetermined tuning parameters. It enjoys very good statistical properties such as automatic bias correction and high local power in testing for independence. The superiority of JMI is also demonstrated through simulation studies. We attribute the good performance of JMI to two factors: (i) the definition of MI itself and (ii) our estimation method that enjoys several advantages mentioned above.

Materials and Methods

“Mixed KSG” was calculated by the codes in ref. 23. “Copula-based KSG” was based on the algorithm described in ref. 21. “Mirrored KDE” was computed by the algorithm introduced in ref. 42. For the other methods, the simulations were conducted in R. “dCor” and “MIC” were estimated using packages “energy” and “Minerva,” respectively. The HHG test was carried out using the package “HHG.” For kernel methods, the rule of thumb used the optimal Gaussian bandwidth given in ref. 24; bandwidths for “PLUG-IN” were calculated using the package “ks.” “JMI” was calculated by the procedure discussed in SI Appendix, section B. For estimation efficiency, MSE for each model and sample size was calculated based on 250 replications. For the test of independence, we adopted the permutation test discussed above. For each sample, we randomly permuted observations of to generate samples under the null hypothesis. The power curve for each model and at each noise level was calculated based on 1,000 simulations. Our calculation codes are available at https://github.com/XianliZeng/JMI.

Acknowledgments

We are most grateful to the editor and two referees for their meticulous review, valuable comments, and constructive suggestions, which have led to a substantial improvement of this paper. Y.X. is partially supported by MOE AcRF Grant of Singapore R-155-000-193-114.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

Data deposition: All calculation codes used in this study have been deposited in GitHub, https://github.com/XianliZeng/JMI.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1715593115/-/DCSupplemental.

References

- 1. Pearson K. Note on regression and inheritance in the case of two parents. Proc R Soc Lond. 1895;58:240–242.

- 2. Kinney JB, Atwal GS. Equitability, mutual information, and the maximal information coefficient. Proc Natl Acad Sci USA. 2014;111:3354–3359. doi: 10.1073/pnas.1309933111.

- 3. Ding AA, Li Y. Copula correlation: An equitable dependence measure and extension of Pearson’s correlation. 2013. arXiv:1312.7214.

- 4. Gretton A, Bousquet O, Smola A, Schölkopf B. Measuring statistical dependence with Hilbert-Schmidt norms. In: Jain S, Simon HU, Tomita E, editors. International Conference on Algorithmic Learning Theory. Springer; Berlin: 2005. pp. 63–77.

- 5. Székely GJ, Rizzo ML, Bakirov NK. Measuring and testing dependence by correlation of distances. Ann Stat. 2007;35:2769–2794.

- 6. Heller R, Heller Y, Gorfine M. A consistent multivariate test of association based on ranks of distances. Biometrika. 2012;100:503–510.

- 7. Shannon CE. A mathematical theory of communication. Bell Labs Tech J. 1948;27:379–423.

- 8. Cover TM, Thomas JA. Elements of Information Theory. 2nd Ed Wiley-Interscience; New York: 2006.

- 9. Reshef DN, et al. Detecting novel associations in large data sets. Science. 2011;334:1518–1524. doi: 10.1126/science.1205438.

- 10. Kraskov A, Stögbauer H, Grassberger P. Estimating mutual information. Phys Rev E. 2004;69:066138. doi: 10.1103/PhysRevE.69.066138.

- 11. Bialek W, Rieke F, Van Steveninck RDR, Warland D. Reading a neural code. Science. 1991;252:1854–1857. doi: 10.1126/science.2063199.

- 12. Strong SP, Koberle R, van Steveninck RRDR, Bialek W. Entropy and information in neural spike trains. Phys Rev Lett. 1998;80:197–200.

- 13. Paninski L. Estimation of entropy and mutual information. Neural Comput. 2003;15:1191–1253.

- 14. Fraser AM, Swinney HL. Independent coordinates for strange attractors from mutual information. Phys Rev A. 1986;33:1134–1140. doi: 10.1103/physreva.33.1134.

- 15. Moon YI, Rajagopalan B, Lall U. Estimation of mutual information using kernel density estimators. Phys Rev E. 1995;52:2318–2321. doi: 10.1103/physreve.52.2318.

- 16. Daub CO, Steuer R, Selbig J, Kloska S. Estimating mutual information using b-spline functions–an improved similarity measure for analysing gene expression data. BMC Bioinformatics. 2004;5:118. doi: 10.1186/1471-2105-5-118.

- 17. Peter AM, Rangarajan A. Maximum likelihood wavelet density estimation with applications to image and shape matching. IEEE Trans Image Process. 2008;17:458–468. doi: 10.1109/TIP.2008.918038.

- 18. Singh S, Póczos B. Exponential concentration of a density functional estimator. In: Ghahramani Z, Welling M, Cortes C, Lawrence ND, Weinberger KQ, editors. Advances in Neural Information Processing Systems 27. Curran Associates, Inc.; Red Hook, NY: 2014. pp. 3032–3040.

- 19. Moon KR, Sricharan K, Hero AO. Ensemble estimation of mutual information. In: Durisi G, Studer C, editors. 2017 IEEE International Symposium on Information Theory (ISIT) IEEE, Aachen; Germany: 2017. pp. 3030–3034.

- 20. Singh H, Misra N, Hnizdo V, Fedorowicz A, Demchuk E. Nearest neighbor estimates of entropy. Am J Math Manag Sci. 2003;23:301–321.

- 21. Pál D, Póczos B, Szepesvàri C. Estimation of Rényi entropy and mutual information based on generalized nearest-neighbor graphs. In: Lafferty JD, Williams CKI, Shawe-Taylor J, Zemel RS, Culotta A, editors. Advances in Neural Information Processing Systems 23. Curran Associates, Inc.; Red Hook, NY: 2010. pp. 1849–1857.

- 22. Gao S, Ver Steeg G, Galstyan A. Efficient estimation of mutual information for strongly dependent variables. In: Lebanon G, Vishwanathan SVN, editors. Artificial Intelligence and Statistics. Proceedings of Machine Learning Research; San Diego: 2015. pp. 277–286.

- 23. Gao W, Kannan S, Oh S, Viswanath P. Estimating mutual information for discrete-continuous mixtures. In: Guyon I, et al., editors. Advances in Neural Information Processing Systems. Vol 30. Curran Associates, Inc.; Red Hook, NY: 2017. pp. 5986–5997.

- 24. Silverman BW. Density Estimation for Statistics and Data Analysis. Vol 26 CRC Press; Boca Raton, FL: 1986.

- 25. Scott DW. Multivariate Density Estimation: Theory, Practice, and Visualization. Wiley; New York: 2015.

- 26. Khan S, et al. Relative performance of mutual information estimation methods for quantifying the dependence among short and noisy data. Phys Rev E. 2007;76:026209. doi: 10.1103/PhysRevE.76.026209.

- 27. Hong Y, White H. Asymptotic distribution theory for nonparametric entropy measures of serial dependence. Econometrica. 2005;73:837–901.

- 28. Geenens G, Charpentier A, Paindaveine D. Probit transformation for nonparametric kernel estimation of the copula density. Bernoulli. 2017;23:1848–1873.

- 29. Bowman AW. An alternative method of cross-validation for the smoothing of density estimates. Biometrika. 1984;71:353–360.

- 30. Scott DW, Terrell GR. Biased and unbiased cross-validation in density estimation. J Am Stat Assoc. 1987;82:1131–1146.

- 31. Sheather SJ, Jones MC. A reliable data-based bandwidth selection method for kernel density estimation. J R Stat Soc Ser B Methodol. 1991;53:683–690.

- 32. Steuer R, Kurths J, Daub CO, Weise J, Selbig J. The mutual information: Detecting and evaluating dependencies between variables. Bioinformatics. 2002;18:S231–S240. doi: 10.1093/bioinformatics/18.suppl_2.s231.

- 33. Horowitz JL, Spokoiny VG. An adaptive rate optimal test of a parametric mean regression model against a nonparametric alternative. Econometrica. 2001;69:599–631.

- 34. Heller R, Heller Y, Kaufman S, Brill B, Gorfine M. Consistent distribution-free K-sample and independence tests for univariate random variables. J Machine Learn Res. 2016;17:1–54.

- 35. Efron B, Stein C. The jackknife estimate of variance. Ann Stat. 1981;9:586–596.

- 36. Fan J, Gijbels I. Local Polynomial Modelling and its Applications: Monographs on Statistics and Applied Probability. Vol 66 Chapman and Hall; London: 1996.

- 37. Wand MP, Jones MC. Kernel Smoothing. Chapman and Hall/CRC; Boca Raton, FL: 1994.

- 38. Kandasamy K, Krishnamurthy A, Poczos B, Wasserman L. Nonparametric von Mises estimators for entropies, divergences and mutual informations. In: Cortes C, Lawrence ND, Lee DD, Sugiyama M, Garnett R, editors. Advances in Neural Information Processing Systems. Curran Associates, Inc.; Red Hook, NY: 2015. pp. 397–405.

- 39. Fisher RA. The Design of Experiments. Oliver and Boyd; Edinburgh, London: 1935.

- 40. Manly BF. Randomization, Bootstrap and Monte Carlo Methods in Biology. Vol 70 Chapman and Hall/CRC; Boca Raton, FL: 2006.

- 41. Edgington E, Onghena P. Randomization Tests. Chapman and Hall/CRC; Boca Raton, FL: 2007.

- 42. Singh S, Póczos B. Generalized exponential concentration inequality for Rényi divergence estimation. In: Xing EP, Jebara T, editors. International Conference on Machine Learning. Proceedings of Machine Learning Research; Beijing, China: 2014. pp. 333–341.
