Abstract

We present a novel family of nonparametric omnibus tests of the hypothesis that two unknown but estimable functions are equal in distribution when applied to the observed data structure. We develop these tests, which generalize the maximum mean discrepancy tests described in Gretton et al. [2006], using recent developments from the higher-order pathwise differentiability literature. Despite their complex derivation, the associated test statistics can be expressed rather simply as U-statistics. We study the asymptotic behavior of the proposed tests under the null hypothesis and under both fixed and local alternatives. We provide examples to which our tests can be applied and show that they perform well in a simulation study. As an important special case, our proposed tests can be used to determine whether an unknown function, such as the conditional average treatment effect, is equal to zero almost surely.

Keywords: higher order pathwise differentiability, maximum mean discrepancy, omnibus test, equality in distribution, infinite dimensional parameter

1. Introduction

In many scientific problems, it is of interest to determine whether two particular functions are equal to each other. In many settings these functions are unknown and may be viewed as features of a data-generating mechanism from which observations can be collected. As such, these functions can be learned from available data, and estimates of these respective functions can then be compared. To reduce the risk of deriving misleading conclusions due to model misspecification, it is appealing to employ flexible statistical learning tools to estimate the unknown functions. Unfortunately, inference is usually extremely difficult when such techniques are used, because the resulting estimators tend to be highly irregular. In such cases, conventional techniques for constructing confidence intervals or computing p-values are generally invalid, and a more careful construction, as exemplified by the work presented in this article, is required.

To formulate the problem statistically, suppose that n independent observations O1, O2, …, On are drawn from a distribution P0 known only to lie in the nonparametric statistical model, denoted by $\mathcal{M}$. Let $\mathcal{O}$ denote the support of P0, and suppose that P ↦ RP and P ↦ SP are parameters mapping from $\mathcal{M}$ onto the space of univariate bounded real-valued measurable functions defined on $\mathcal{O}$. For brevity, we will write $R_0 \equiv R_{P_0}$ and $S_0 \equiv S_{P_0}$. Our objective is to test the null hypothesis

$$H_0:\ R_0(O) \overset{d}{=} S_0(O)$$

versus the complementary alternative $H_1$: not $H_0$, where O follows the distribution P0 and the symbol $\overset{d}{=}$ denotes equality in distribution. We note that $H_0$ holds if R0 ≡ S0, i.e. R0(O) = S0(O) almost surely, but not conversely. The case where S0 ≡ 0 is of particular interest since then the null simplifies to R0(O) = 0 almost surely. Because P0 is unknown, R0 and S0 are not readily available. Nevertheless, the observed data can be used to estimate P0 and hence each of R0 and S0. The approach we propose applies to functionals within a specified class described later.

Before presenting our general approach, we describe some motivating examples. Consider the data structure O = (W, A, Y) ~ P, where W is a collection of covariates, A is a binary treatment indicator, and Y is a bounded outcome, i.e., there exists a universal constant c such that, for all P ∈ $\mathcal{M}$, P(|Y| ≤ c) = 1. Note that, in our examples, the condition that Y is bounded cannot easily be relaxed, as the parameter from Gretton et al. [2006] on which we base our testing procedure requires that the quantities under consideration have compact support.

- Example 1: Random sample size variant of the two-sample test from Gretton et al. [2006].

- If and , the null hypothesis corresponds to Y|A = 1 and Y|A = 0 sharing the same distribution. This setting differs from that of Gretton et al. [2006], who studied the case where the numbers of subjects with A = 0 and A = 1 are both fixed; here, these numbers are treated as random, while the total number of observed subjects is fixed. This is the simplest example that we give in this work. In particular, it is our only example in which the functions RP and SP do not rely on the (unknown) data-generating distribution P.

- Example 2: Testing a null conditional average treatment effect.

- If $R_P(o) = E_P(Y \mid A = 1, W = w) - E_P(Y \mid A = 0, W = w)$ and SP ≡ 0, the null hypothesis corresponds to the absence of a conditional average treatment effect. This definition of RP corresponds to the so-called blip function introduced by Robins [2004], which plays a critical role in defining optimal personalized treatment strategies [Chakraborty and Moodie, 2013].

- Example 3: Testing for equality in distribution of regression functions in two populations.

- Suppose the setting of the previous example, but where A represents membership in population 0 or 1. If $R_P(o) = E_P(Y \mid A = 1, W = w)$ and $S_P(o) = E_P(Y \mid A = 0, W = w)$, the null hypothesis corresponds to the conditional mean functions of the outcome in the two populations, evaluated at a random draw of the covariate, having the same distribution. We note here that our formulation treats the selection of individuals from either population as random rather than fixed, so that population-specific sample sizes (as opposed to the total sample size) are themselves random. The same interpretation could also be used for the previous example, which then tests whether the two regression functions are equal almost surely.

- Example 4: Testing a null covariate effect on average response.

- Suppose now that the data unit only consists of O = (W, Y). If $R_P(o) = E_P(Y \mid W = w)$ and SP ≡ 0, the null hypothesis corresponds to the outcome Y having conditional mean zero in all strata of covariates. This may be of interest when zero has a special meaning for the outcome, such as when the outcome is the profit over some period.

- Example 5: Testing a null variable importance.

- Suppose again that O = (W, Y), where now W = (W(1), …, W(K)). Denote by W(−k) the vector (W(i) : 1 ≤ i ≤ K, i ≠ k). Setting $R_P(o) = E_P(Y \mid W = w)$ and $S_P(o) = E_P(Y \mid W^{(-k)} = w^{(-k)})$, the null hypothesis corresponds to W(k) having null variable importance in the presence of W(−k) with respect to the conditional mean of Y given W, in the sense that EP(Y | W) = EP(Y | W(−k)) almost surely. This is true because, if $H_0$ holds, the latter random variables have equal variance and, since both have mean EP(Y), equal second moments, so that by the law of total expectation

$$E_P\{[R_P(O) - S_P(O)]^2\} = E_P[R_P(O)^2] - E_P[S_P(O)^2] = 0,$$

implying that RP(O) = SP(O) almost surely. Thus, a test of $H_0$ is equivalent to a test of almost sure equality between RP and SP in this example. We will show in Section 5 that our approach cannot be directly applied to this example, but that a simple extension yields a valid test.
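The identity behind Example 5, namely E{[R(O) − S(O)]²} = E[R(O)²] − E[S(O)²] when R(O) = E(Y | W) and S(O) = E(Y | W(−k)), can be checked on a toy discrete example. The Python sketch below uses a hypothetical regression function on W = (W(1), W(2)) ∈ {0, 1}², uniformly distributed covariates and k = 2; all numbers are made up purely for illustration.

```python
# Hypothetical conditional mean E(Y | W = (w1, w2)); W is assumed uniform on {0, 1}^2.
MU = {(0, 0): 0.1, (0, 1): 0.5, (1, 0): -0.2, (1, 1): 0.4}
PROB = {w: 0.25 for w in MU}


def s_fun(w):
    """E(Y | W1 = w1): probability-weighted average of MU over W2 within the stratum W1 = w1."""
    stratum = [v for v in MU if v[0] == w[0]]
    mass = sum(PROB[v] for v in stratum)
    return sum(PROB[v] * MU[v] for v in stratum) / mass


def expect(f):
    """Expectation of f(W) under the distribution PROB."""
    return sum(PROB[w] * f(w) for w in MU)


lhs = expect(lambda w: (MU[w] - s_fun(w)) ** 2)
rhs = expect(lambda w: MU[w] ** 2) - expect(lambda w: s_fun(w) ** 2)
print(lhs, rhs)  # the two sides agree, as implied by the tower property
```

If MU did not depend on W2, both sides would be zero, which is exactly the null of no variable importance.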

Gretton et al. [2006] investigated the related problem of testing equality between two distributions in a two-sample problem. They proposed estimating the maximum mean discrepancy (hereafter referred to as MMD), a non-negative quantitative summary of the relationship between the two distributions. In particular, the MMD between distributions P1 and P2 for observations X is defined as

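$$\mathrm{MMD}(\mathcal{F}, P_1, P_2) \equiv \sup_{f \in \mathcal{F}} \left\{ E_{P_1}[f(X)] - E_{P_2}[f(X)] \right\}. \qquad (1)$$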

Defining the MMD relies on selecting a function class $\mathcal{F}$. Gretton et al. [2006] propose selecting $\mathcal{F}$ to be the unit ball in a reproducing kernel Hilbert space. If the kernel defining this space is a so-called universal kernel and the support of X under P1 and P2 is compact, they showed that the MMD is zero if and only if the two distributions are equal. They also observe that the Gaussian kernel is a universal kernel. Gretton et al. also investigated related problems using this technique [see, e.g., Gretton et al., 2009, 2012a, Sejdinovic et al., 2013]. In this work, we also utilize the MMD as a parsimonious summary of equality but consider the more general problem wherein the null hypothesis relies on unknown functions R0 and S0 indexed by the data-generating distribution P0.
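To make the two-sample MMD concrete, the following Python sketch computes the classical unbiased estimate of the squared MMD for univariate samples using the Gaussian kernel k(x, y) = exp(−(x − y)²/(2σ²)). The kernel parametrization, the function names and the simulated data are assumptions made for illustration; this is the fixed-sample-size statistic of Gretton et al., not the estimator developed in this paper. In the usage lines, group membership is random, as in Example 1, and the statistic is simply computed conditional on the realized group sizes.

```python
import numpy as np


def gaussian_gram(x, y, sigma=1.0):
    """Matrix of k(x_i, y_j) = exp(-(x_i - y_j)^2 / (2 sigma^2)) for univariate samples."""
    diff = np.subtract.outer(np.asarray(x, dtype=float), np.asarray(y, dtype=float))
    return np.exp(-diff**2 / (2.0 * sigma**2))


def mmd2_unbiased(x, y, sigma=1.0):
    """Unbiased estimate of the squared MMD between the laws of x and y."""
    m, n = len(x), len(y)
    if m < 2 or n < 2:
        raise ValueError("each sample needs at least two observations")
    kxx = gaussian_gram(x, x, sigma)
    kyy = gaussian_gram(y, y, sigma)
    kxy = gaussian_gram(x, y, sigma)
    # Within-sample terms average over distinct pairs only (diagonal removed).
    within_x = (kxx.sum() - np.trace(kxx)) / (m * (m - 1))
    within_y = (kyy.sum() - np.trace(kyy)) / (n * (n - 1))
    between = kxy.mean()
    return within_x + within_y - 2.0 * between


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    a = rng.binomial(1, 0.5, size=1000)    # random group membership, as in Example 1
    y = rng.uniform(-1.0, 1.0, size=1000)  # bounded outcome
    print(mmd2_unbiased(y[a == 1], y[a == 0]))              # same law: near zero
    print(mmd2_unbiased((y + a)[a == 1], (y + a)[a == 0]))  # shifted group: clearly positive
```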

Other investigators have proposed omnibus tests of hypotheses of the form $H_0$ versus $H_1$. In the setting of Example 2 above, the work presented in Racine et al. [2006] and Lavergne et al. [2015] is particularly relevant. The null hypothesis of interest in these papers is that the equality $E_{P_0}(Y \mid A, W) = E_{P_0}(Y \mid W)$ holds almost surely. If individuals have a nontrivial probability of receiving treatment in all strata of covariates, this null hypothesis is equivalent to $H_0$. In both of these papers, kernel smoothing is used to estimate the required regression functions, so that key smoothness assumptions are needed for their methods to yield valid conclusions. The method we present does not hinge on any particular class of estimators and therefore does not rely on these assumptions.

To develop our approach, we use techniques from the higher-order pathwise differentiability literature [see, e.g., Pfanzagl, 1985, Robins et al., 2008, van der Vaart, 2014, Carone et al., 2014]. Despite the elegance of the theory presented by these various authors, it has been unclear whether these higher-order methods are truly useful in infinite-dimensional models since most functionals of interest fail to be even second-order pathwise differentiable in such models. This is especially troublesome in problems in which under the null the first-order derivative of the parameter of interest (in an appropriately defined sense) vanishes, since then there seems to be no theoretical basis for adjusting parameter estimates to recover parametric rate asymptotic behavior. At first glance, the MMD parameter seems to provide one such disappointing example, since its first-order derivative indeed vanishes under the null. The latter fact is a common feature of problems wherein the null value of the parameter is on the boundary of the parameter space. It is also not an entirely surprising phenomenon, at least heuristically, since the MMD achieves its minimum of zero under the null hypothesis. Nevertheless, we are able to show that this parameter is indeed second-order pathwise differentiable under the null hypothesis – this is a rare finding in infinite-dimensional models. As such, we can employ techniques from the recent higher-order pathwise differentiability literature to tackle the problem at hand.

This paper is organized as follows. In Section 2, we formally present our parameter of interest, the squared MMD between two unknown functions, and establish asymptotic representations for this parameter based on its higher-order differentiability, which, as we show, holds even when the MMD involves estimation of unknown nuisance parameters. In Section 3, we discuss estimation of this parameter and the corresponding hypothesis test, and study its asymptotic behavior under the null. We study the consistency of our proposed test under fixed and local alternatives in Section 4. We revisit our examples in Section 5 and provide an additional example in which we can still make progress using our techniques even though our regularity conditions fail. In Section 6, we present results from a simulation study to illustrate the finite-sample performance of our test, and we end with concluding remarks in Section 7.

Supplementary Appendix A reviews higher-order pathwise differentiability. Supplementary Appendix B gives a summary of the empirical U−process results from Nolan and Pollard [1988] that we build upon. All proofs can be found in Supplementary Appendix C.

2. Properties of maximum mean discrepancy

2.1. Definition

For a distribution P and mappings T and U, we define

| (2) |

and set Ψ(P) ≡ ΦP(R, R) − 2ΦP(R, S) + ΦP(S, S). The MMD between the distributions of RP(O) and SP(O) when O ~ P, defined in Eq. 1 using $\mathcal{F}$ to be the unit ball in the RKHS generated by the Gaussian kernel with unit bandwidth, is given by $\sqrt{\Psi(P)}$ and is always well-defined because Ψ(P) is non-negative. Indeed, denoting by ψ0 the true parameter value Ψ(P0), Theorem 3 of Gretton et al. [2006] establishes that ψ0 equals zero if $H_0$ holds and is otherwise strictly positive. Though Gretton et al. [2006] restrict attention to two-sample problems, their proof of this result relies only on properties of Ψ and therefore holds regardless of the sampling design. Their proof relies on the fact that two random variables X and Y with compact support are equal in distribution if and only if E[f(Y)] = E[f(X)] for every continuous function f, and uses techniques from the theory of reproducing kernel Hilbert spaces [see, e.g., Berlinet and Thomas-Agnan, 2011, for a general exposition]. We invite interested readers to consult Gretton et al. [2006], and in particular Theorem 3 therein, for additional details. The definition of the MMD we utilize is based on the univariate Gaussian kernel with unit bandwidth, which is universal in view of Steinwart [2002]. The results we present in this paper can be generalized to the MMD based on a Gaussian kernel of arbitrary bandwidth h by simply rescaling the mappings R and S to R/h and S/h.
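As a numerical companion to this definition, the Python sketch below evaluates a plug-in (V-statistic) version of ΦP(R, R) − 2ΦP(R, S) + ΦP(S, S) from the values R(Oi) and S(Oi), treating R and S as known functions and taking ΦP(T, U) to be the double integral over (o1, o2) of a Gaussian kernel applied to (TP(o1), UP(o2)). The kernel normalization exp(−(u − v)²/(2h²)) is an assumption for illustration and may differ from Eq. (2) by a constant in the exponent; the function names are hypothetical. The last usage line checks the rescaling remark numerically: bandwidth h applied to (R, S) matches unit bandwidth applied to (R/h, S/h).

```python
import numpy as np


def gauss(u, v, h=1.0):
    """Gaussian kernel matrix exp(-(u_i - v_j)^2 / (2 h^2)); the normalization is assumed."""
    return np.exp(-np.subtract.outer(u, v) ** 2 / (2.0 * h**2))


def psi_plugin(r, s, h=1.0):
    """Empirical Phi(R,R) - 2 Phi(R,S) + Phi(S,S) from r_i = R(O_i) and s_i = S(O_i).

    Each Phi is a double average over all pairs (i, j), including i = j, so the
    result is a squared RKHS distance between empirical mean embeddings and is
    therefore nonnegative up to rounding error.
    """
    r = np.asarray(r, dtype=float)
    s = np.asarray(s, dtype=float)
    return gauss(r, r, h).mean() - 2.0 * gauss(r, s, h).mean() + gauss(s, s, h).mean()


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    w = rng.uniform(-1.0, 1.0, size=300)
    r, s = np.sin(w), np.sin(-w)  # equal in distribution because w is symmetric about 0
    print(psi_plugin(r, s), psi_plugin(r, r + 0.5))
    print(psi_plugin(r, s, h=0.5), psi_plugin(r / 0.5, s / 0.5))  # bandwidth rescaling
```

This plug-in is only meant to illustrate Ψ itself; the estimator studied in Section 3 is a U-statistic built from an estimated kernel rather than this V-statistic.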

2.2. First-order differentiability

To develop a test of $H_0$, we will first construct an estimator ψn of ψ0. In order to avoid restrictive model assumptions, we wish to use flexible techniques when estimating P0 and therefore ψ0. To control the operating characteristics of our test, it will be crucial to understand how to construct a parametric-rate estimator of ψ0. For this purpose, it is informative to first investigate the pathwise differentiability of Ψ as a parameter from $\mathcal{M}$ to $\mathbb{R}$.

So far, we have not specified restrictions on the mappings P ↦ RP and P ↦ SP. However, in our developments, we will require these mappings to satisfy certain regularity conditions. Specifically, we will restrict our attention to elements of the class of all mappings T for which there exists some measurable function XT defined on $\mathcal{O}$, e.g. XT(o) = XT(w, a, y) = w, such that

- (S1) TP is a measurable mapping with domain and range contained in [−b, b] for some 0 ≤ b < ∞ independent of P;

- (S2) for all submodels dPt/dP = 1 + th with bounded h with Ph = 0, there exists some δ > 0 and a set with such that, for all , is twice differentiable at t1 with uniformly bounded (in xT) first and second derivatives;

- (S3) for any and submodel dPt/dP = 1 + th for bounded h with Ph = 0, there exists a function uniformly bounded (in P and o) such that for almost all and

Condition (S1) ensures that T is bounded and only relies on a summary measure of an observation O. Condition (S2) ensures that we will be able to interchange differentiation and integration when needed. Condition (S3) is a conditional (and weaker) version of pathwise differentiability in that the typical inner product representation only needs to hold for the conditional distribution of O given XT under P0. We will verify in Section 5 that these conditions hold in the context of the motivating examples presented earlier.

Remark 1. As a caution to the reader, we warn that simultaneously satisfying (S1) and (S3) may at times be restrictive. For example, if the observed data unit is , the parameter

cannot generally satisfy both conditions. In Section 5, we discuss this example further and provide a means to tackle this problem using the techniques we have developed. In our concluding remarks, we discuss a weakening of our conditions, notably by replacing by the linear span of elements in . Considering this larger class significantly complicates the form of the estimator we propose in Section 3. □

We are now in a position to discuss the pathwise differentiability of Ψ. For any elements , we define

and set . Note that ΓP is symmetric for any . For brevity, we will write and Γ0 to denote and , respectively. The following theorem characterizes the first-order behavior of Ψ at an arbitrary .

Theorem 1 (First-order pathwise differentiability of Ψ over ). If , the parameter is pathwise differentiable at with first-order canonical gradient given by .

Under some conditions, it is straightforward to construct an asymptotically linear estimator of ψ0 with influence function , that is, an estimator ψn of ψ0 such that

For example, the one-step Newton-Raphson bias correction procedure [see, e.g., Pfanzagl, 1982] or targeted minimum loss-based estimation [see, e.g., van der Laan and Rose, 2011] can be used for this purpose. If the above representation holds and the variance of is positive, then , where the symbol denotes convergence in distribution and we write . If σ0 is strictly positive and can be consistently estimated, Wald-type confidence intervals for ψ0 with appropriate asymptotic coverage can be constructed.

The situation is more challenging if σ0 = 0. In this case, in probability and typical Wald-type confidence intervals will not be appropriate. Because has mean zero under P0, this happens if and only if . The following corollary provides necessary and sufficient conditions under which σ0 = 0.

Corollary 1 (First-order degeneracy under ). If , it will be the case that σ0 = 0 if and only if either (i) holds, or (ii) R0(O) and S0(O) are degenerate, i.e. almost surely constant but not necessarily equal, with .

The above results rely in part on knowledge of and . It is useful to note that, in some situations, the computation of for a given and can be streamlined. This is the case, for example, if P ↦ TP is invariant to fluctuations of the marginal distribution of XT, as (S3) may seem to suggest. Consider obtaining iid samples of increasing size from the conditional distribution of O given XT = xT under P, so that all individuals have observed XT = xT. Consider the fluctuation submodel for the conditional distribution, where h is uniformly bounded and ∫ h(o)dP(o|xT) = 0. Suppose that (i) P ↦ TP(xT) is differentiable at t = 0 with respect to the above submodel and (ii) this derivative satisfies the inner product representation

for some uniformly bounded function with . If the above holds for all xT, we may take for all o with XT(o) = xT. If is uniformly bounded in P, (S3) then holds.

In summary, the above discussion suggests that, if T is invariant to fluctuations of the marginal distribution of XT, (S3) can be expected to hold if there exists a regular, asymptotically linear estimator of each TP(xT) under iid sampling from the conditional distribution of O given XT = xT implied by P.

Remark 2. If T is invariant to fluctuations of the marginal distribution of XT, one can also expect (S3) to hold if P ↦ ∫ TP(XT(o))dP(o) is pathwise differentiable with canonical gradient uniformly bounded in P and o in the model in which the marginal distribution of XT is known. The canonical gradient in this model is equal to . □

2.3. Second-order differentiability and asymptotic representation

As indicated above, if σ0 = 0, the behavior of Ψ around P0 cannot be adequately characterized by a first-order analysis. For this reason, we must investigate whether Ψ is second-order differentiable. As we discuss below, under $H_0$, Ψ is indeed second-order pathwise differentiable at P0 and admits a useful second-order asymptotic representation.

Theorem 2 (Second-order pathwise differentiability under $H_0$). If and $H_0$ holds, the parameter is second-order pathwise differentiable at P0 with second-order canonical gradient .

It is easy to confirm that Γ0, and thus , is one-degenerate under $H_0$ in the sense that ∫ Γ0(o, o2)dP0(o2) = ∫ Γ0(o1, o)dP0(o1) = 0 for all o. This is shown as follows. For any , the law of total expectation conditional on XU and the fact that yields that

where we have written to denote . Since ∫ f(R0(o))dP0(o) = ∫ f(S0(o))dP0(o) for each bounded measurable function f when $H_0$ holds, this then implies that and under $H_0$. Hence, it follows that ∫ Γ0(o, o2)dP0(o2) = 0 under $H_0$ for any o.

If second-order pathwise differentiability held in a sufficiently uniform sense over $\mathcal{M}$, we would expect

| (3) |

to be a third-order remainder term. However, second-order pathwise differentiability has only been established under the null, and in fact, it appears that Ψ may not generally be second-order pathwise differentiable under the alternative. As such, may not even be defined under the alternative. In writing (3), we either naively set , which is not appropriately centered to be a candidate second-order gradient, or instead take to be the centered extension

Both of these choices yield the same expression above because the product measure $(P - P_0)^2$ is self-centering. The need for an extension renders it a priori unclear whether, as P tends to P0, the behavior of is similar to what is expected under more global second-order pathwise differentiability. Using the fact that $\Psi(P) = P^2\Gamma_P$, we can simplify the expression in (3) to

| (4) |

As we discuss below, this remainder term can be bounded in a useful manner, which allows us to determine that it is indeed third-order.

For all , and , we define

as the remainder from the linearization of T based on the conditional gradient . Typically, is a second-order term. Further consideration of this term in the context of our motivating examples is described in Section 5. Furthermore, we define

For any given function , we denote by the Lp(P0)-norm and use the symbol ≲ to denote ‘less than or equal to up to a positive multiplicative constant’. The following theorem provides an upper bound for the remainder term of interest.

Theorem 3 (Upper bounds on remainder term). For each , the remainder term, admits the following upper bounds:

To develop a test procedure, we will require an estimator of P0, which will play the role of P in the above expressions. It is helpful to think of parametric model theory when interpreting the above result, with the understanding that certain smoothing methods, such as higher-order kernel smoothing, can achieve near-parametric rates in certain settings. In a parametric model where P0 is estimated with (e.g., a maximum likelihood estimator), we could often expect and to be and , respectively, for p ≥ 1. Thus, the above theorem suggests that the approximation error may be in a parametric model under . In some examples, it is reasonable to expect that for a large class of distributions P. In such cases, the upper bound on simplifies to under , which under a parametric model is often .

To make these results more concrete, we consider the special case where RP, SP, , and are smooth mappings of regression functions under P conditional on the d-dimensional covariate W (e.g., as in Example 3; see Section 5). Suppose that all of these regression functions under P0 are at least ℓ-times differentiable. In this case, rates of convergence for the remainder terms are well understood for kernel smoothers using kernels of sufficiently high order. In particular, each regression function estimate converges at rate $n^{-\ell/(2\ell+d)}$ in L2(P0). Under $H_0$, one could rely on being and being . If is second-order, this would generally require ℓ > d, which is more stringent than the usual ℓ > d/2 requirement for standard first-order estimators. If, on the other hand, , then we require that is under $H_0$, which corresponds to requiring ℓ slightly greater than d/2.
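The exponent arithmetic in the preceding paragraph can be checked mechanically. Assuming each nuisance estimator attains the L2(P0) rate n^(−a) with a = ℓ/(2ℓ + d), a product of three such errors is o(n^(−1)) exactly when 3a > 1, and a product of two is o(n^(−1/2)) exactly when 2a > 1/2. The Python sketch below (function names are our own, for illustration) confirms that these reduce to ℓ > d and ℓ > d/2, respectively, using exact rational arithmetic.

```python
from fractions import Fraction


def rate_exponent(ell, d):
    """Exponent a in the assumed L2(P0) convergence rate n^(-a), with a = ell / (2 ell + d)."""
    return Fraction(ell, 2 * ell + d)


def third_order_negligible(ell, d):
    """True when (n^-a)^3 = o(n^-1), the requirement for a negligible third-order remainder."""
    return 3 * rate_exponent(ell, d) > 1


def second_order_negligible(ell, d):
    """True when (n^-a)^2 = o(n^-1/2), the usual requirement for first-order estimators."""
    return 2 * rate_exponent(ell, d) > Fraction(1, 2)


if __name__ == "__main__":
    for ell, d in [(3, 2), (2, 2), (2, 3), (1, 2)]:
        print(ell, d, third_order_negligible(ell, d), second_order_negligible(ell, d))
```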

3. Proposed test: formulation and inference under the null

3.1. Formulation of test

We begin by constructing an estimator of ψ0 from which a test can then be devised. Using the fact that $\Psi(P) = P^2\Gamma_P$, as implied by (4), we note that if Γ0 were known, the U-statistic $\mathbb{P}_n^2\Gamma_0$ would be a natural estimator of ψ0, where $\mathbb{P}_n^2$ denotes the empirical measure that places equal probability mass on each of the n(n − 1) points (Oi, Oj) with i ≠ j. In practice, Γ0 is unknown and must be estimated. This leads to the estimator $\psi_n \equiv \mathbb{P}_n^2\Gamma_n$, where we write $\Gamma_n \equiv \Gamma_{\hat{P}_n}$ for some estimator $\hat{P}_n$ of P0 based on the available data. Since a large value of ψn is inconsistent with $H_0$, we will reject $H_0$ if and only if ψn > cn for some appropriately chosen cutoff cn.
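Given an n × n array whose (i, j) entry is Γn(Oi, Oj), the statistic ψn and the decision rule are straightforward to compute. The Python sketch below assumes that this array has already been formed from an estimate of P0 (the form of Γn is not reproduced here) and that a cutoff q for nψn is available; the function names are hypothetical.

```python
import numpy as np


def u_statistic(gamma):
    """Average of gamma[i, j] over the n(n - 1) ordered pairs with i != j."""
    g = np.asarray(gamma, dtype=float)
    if g.ndim != 2 or g.shape[0] != g.shape[1] or g.shape[0] < 2:
        raise ValueError("expected a square matrix with n >= 2")
    n = g.shape[0]
    return (g.sum() - np.trace(g)) / (n * (n - 1))


def reject_null(gamma, q):
    """Reject H0 when n * psi_n > q, i.e. when psi_n exceeds the cutoff c_n = q / n."""
    n = np.asarray(gamma).shape[0]
    return bool(n * u_statistic(gamma) > q)
```

Dropping the diagonal is what distinguishes this U-statistic from a V-statistic; it removes the terms Γn(Oi, Oi), which would otherwise contribute a nonvanishing bias at the n−1 scale relevant under the null.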

In the nonparametric model considered, it may be necessary, or at the very least desirable, to utilize a data-adaptive estimator of P0 when constructing Γn. Studying the large-sample properties of ψn may then seem particularly daunting since at first glance we may be led to believe that the behavior of ψn – ψ0 is dominated by . However, this is not the case. As we will see, under some conditions, ψn – ψ0 will approximately behave like . Thus, there will be no contribution of to the asymptotic behavior of ψn – ψ0. Though this result may seem counterintuitive, it arises because Ψ(P) can be expressed as P2ΓP with ΓP a second-order gradient (or rather an extension thereof) up to a proportionality constant. More concretely, this surprising finding is a direct consequence of (4).

As further support that ψn is a natural test statistic, even when a data-adaptive estimator of P0 has been used, we note that ψn could also have been derived using a second-order one-step Newton-Raphson construction, as described in Robins et al. [2008]. The latter is given by

where we use the centered extension of as discussed in Section 2.3. Here and throughout, Pn denotes the empirical distribution. It is straightforward to verify that indeed ψn = ψn,NR.

3.2. Inference under the null

3.2.1. Asymptotic behavior

For each , we let be the P0−centered modification of ΓP given by

and denote by . While under $H_0$, this is not true more generally. Below, we use and to respectively denote and evaluated at . Straightforward algebraic manipulations allow us to write

| (5) |

Our objective is to show that n(ψn − ψ0) behaves like as n gets large under $H_0$. In view of (5), this will be true, for example, under conditions ensuring that

- C1) (empirical process and consistency conditions);

- C2) (U−process and consistency conditions);

- C3) (consistency and rate conditions).

We have already argued that C3) is reasonable in many examples of interest, including those presented in this paper. Nolan and Pollard [1987, 1988] developed a formal theory that controls terms of the type appearing in C2). In Supplementary Appendix B.1 we restate specific results from these authors which are useful to study C2). Finally, the following lemma gives sufficient conditions under which C1) holds. We first set .

Lemma 1 (Sufficient conditions for C1)). Suppose that o1 ↦ ∫ Γn(o1, o)dP0(o)/K1n, defined to be zero if K1n = 0, belongs to a P0−Donsker class [van der Vaart and Wellner, 1996] with probability tending to 1. Then, under $H_0$,

and thus C1) holds whenever .

The following theorem describes the asymptotic distribution of nψn under the null hypothesis whenever conditions C1), C2) and C3) are satisfied.

Theorem 4 (Asymptotic distribution under $H_0$). Suppose that C1), C2) and C3) hold. Then, under $H_0$,

$$n\psi_n \rightsquigarrow \sum_{k=1}^{\infty} \lambda_k \left(Z_k^2 - 1\right),$$

where λ1, λ2, … are the eigenvalues of the integral operator h(o) ↦ ∫ Γ0(o1, o)h(o1)dP0(o1) repeated according to their multiplicity, and Z1, Z2, … is a sequence of independent standard normal random variables. Furthermore, all of these eigenvalues are nonnegative under $H_0$.
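Once eigenvalues are available (known or estimated), the (1 − α) quantile of a limit of the form Σk λk(Zk² − 1) can be approximated by Monte Carlo. The Python sketch below assumes a finite list of eigenvalues (an infinite series must be truncated in practice) and uses a fixed seed for reproducibility; the function name is hypothetical.

```python
import numpy as np


def limit_quantile(eigenvalues, alpha=0.05, n_draws=200_000, seed=0):
    """Monte Carlo (1 - alpha) quantile of sum_k lambda_k (Z_k^2 - 1), Z_k iid N(0, 1)."""
    rng = np.random.default_rng(seed)
    draws = np.zeros(n_draws)
    for lam in np.asarray(eigenvalues, dtype=float):
        # Accumulate one term at a time so memory stays O(n_draws).
        draws += lam * (rng.standard_normal(n_draws) ** 2 - 1.0)
    return float(np.quantile(draws, 1.0 - alpha))
```

With a single unit eigenvalue the result should be close to z²0.975 − 1 ≈ 2.84, which gives a quick sanity check on the simulation.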

We note that by employing a sample splitting procedure – namely, estimating Γ0 on one portion of the sample and constructing the U−statistic based on the remainder of the sample – it is possible to eliminate the U−process conditions required for C2). In such a case, satisfaction of C2) only requires convergence of to Γ0 with respect to the -norm. This sample splitting procedure would also allow one to avoid the empirical process conditions in C1): in particular, o ↦ P0Γn(o, ·) would need to converge to zero, but no further requirements would then be needed on Γn for C1) to be satisfied. We also note that the L2(P0) consistency of o ↦ P0Γn(o, ·) and the consistency of are implied by the consistency of Γn for Γ0, and so when sample splitting is used one could replace C1) and C2) by this single consistency condition.
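A sample-splitting version of the statistic can be organized as follows. In this Python sketch, `fit_kernel` is a hypothetical user-supplied routine that estimates the nuisance quantities on one half of the data and returns a function evaluating Γn on pairs of observations from the other half. The U-statistic is then formed on the held-out half only, so its size m, rather than n, is the natural scaling when comparing the statistic with a cutoff.

```python
import numpy as np


def split_u_statistic(data, fit_kernel, seed=0):
    """Fit Gamma_n on a random half of the data; average it over distinct pairs of the other half.

    Returns the statistic and the size m of the evaluation half, so that a test
    can compare m * statistic with the chosen cutoff.
    """
    data = np.asarray(data)
    n = data.shape[0]
    perm = np.random.default_rng(seed).permutation(n)
    train, held_out = data[perm[: n // 2]], data[perm[n // 2 :]]
    gamma = fit_kernel(train)  # hypothetical: returns a callable (a, b) -> matrix of Gamma_n values
    g = np.asarray(gamma(held_out, held_out), dtype=float)
    m = held_out.shape[0]
    return (g.sum() - np.trace(g)) / (m * (m - 1)), m
```

Because the nuisance fit never sees the held-out observations, Γn can be treated as fixed when studying the U-statistic, which is what removes the U-process conditions discussed above.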

We note also that, if sample splitting is not used, then one could replace C1) and C2) by this single consistency condition and the added assumption that Γn belongs to a class with a finite uniform entropy integral. See Supplementary Appendix B.2 for a proof that this suffices to imply the needed empirical process conditions for C1). It is also straightforward to show that controlling the entropy of the class to which Γn may belong also controls the entropy of the class to which the linear transformation of Γn may belong.

Remark 3. In Example 4, sample splitting may prove particularly important when the estimator of is chosen as the minimizer of an empirical risk since in finite samples the bias induced by using the same residuals as those in the definition of may be significant. Thus, without some form of sample splitting, the finite sample performance of ψn may be poor even under the conditions stated in Supplementary Appendix B.1. □

3.2.2. Estimation of the test cutoff

As indicated above, our test consists of rejecting $H_0$ if and only if ψn is larger than some cutoff cn. We wish to select cn to yield a non-conservative test at level α ∈ (0, 1). In view of Theorem 4, denoting by q1−α the 1 − α quantile of the described limit distribution, the cutoff cn should be chosen to be q1−α/n. We thus reject $H_0$ if and only if nψn > q1−α. As described in the following corollary, q1−α admits a very simple form when SP ≡ 0 for all P.

Corollary 2 (Asymptotic distribution under $H_0$, S degenerate). Suppose that C1), C2) and C3) hold, that SP ≡ 0 for all P ∈ $\mathcal{M}$, and that . Then, under $H_0$,

where Z is a standard normal random variable. It follows then that , where z1−α/2 is the (1 − α/2) quantile of the standard normal distribution.
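When the limit reduces to a single term λ(Z² − 1) with λ > 0, as happens when only one eigenvalue in Theorem 4 is nonzero, its (1 − α) quantile is λ(z²1−α/2 − 1), because the event λ(Z² − 1) > λ(z² − 1) is the event |Z| > z. The Python sketch below uses only the standard library and takes λ as an input, since its expression in Corollary 2 is not reproduced here; the function name is hypothetical.

```python
from statistics import NormalDist


def single_term_cutoff(lam, alpha=0.05):
    """(1 - alpha) quantile of lam * (Z^2 - 1) for lam > 0 and Z standard normal."""
    if lam <= 0 or not 0.0 < alpha < 1.0:
        raise ValueError("need lam > 0 and alpha in (0, 1)")
    z = NormalDist().inv_cdf(1.0 - alpha / 2.0)
    return lam * (z * z - 1.0)
```

For α = 0.05 and λ = 1, this returns approximately 2.84, in agreement with a Monte Carlo approximation of the same one-term limit.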

The above corollary gives an expression for q1−α that can easily be consistently estimated from the data. In particular, one can use as an estimator of q1−α, whose consistency can be established under a Glivenko-Cantelli and consistency condition on the estimator of . However, in general, such a simple expression will not exist. Gretton et al. [2009] proposed computing the eigenvalues νk of the centered Gram matrix and then estimating λk by λ̂k = νk/n. In our context, the eigenvalues νk are those of the n × n matrix with entries defined as

| (6) |

Given these n eigenvalue estimates λ̂1, …, λ̂n, one could then simulate from $\sum_{k=1}^{n} \hat{\lambda}_k (Z_k^2 - 1)$ to approximate q1−α. While this seems to be a plausible approach, a formal study establishing regularity conditions under which this procedure is valid is beyond the scope of this paper. We note that it also does not fall within the scope of results in Gretton et al. [2009] since their kernel does not depend on estimated nuisance parameters. We refer the reader to Franz [2006] for possible sufficient conditions under which this approach may be valid. Although no formal guarantee of type I error control is available for this procedure, our simulation results suggest appropriate control in practice (Section 6).
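The eigenvalue approach just described can be sketched in a few lines of Python. The sketch takes as input the n × n matrix whose entries are defined in (6), which we assume has already been computed, uses the scaling λ̂k = νk/n (following the usual convention of Gretton et al. [2009]), and then simulates the weighted sum. As noted above, the validity of this procedure with estimated nuisance parameters has not been formally established; the function names are hypothetical.

```python
import numpy as np


def estimated_eigenvalues(m):
    """lambda_hat_k = nu_k / n, where nu_k are the eigenvalues of the n x n matrix m."""
    m = np.asarray(m, dtype=float)
    n = m.shape[0]
    sym = 0.5 * (m + m.T)  # symmetrize to remove floating-point asymmetry
    return np.linalg.eigvalsh(sym) / n


def eigen_cutoff(m, alpha=0.05, n_draws=100_000, seed=0):
    """Monte Carlo (1 - alpha) quantile of sum_k lambda_hat_k (Z_k^2 - 1)."""
    lam_hat = estimated_eigenvalues(m)
    rng = np.random.default_rng(seed)
    draws = np.zeros(n_draws)
    for lam in lam_hat:
        draws += lam * (rng.standard_normal(n_draws) ** 2 - 1.0)
    return float(np.quantile(draws, 1.0 - alpha))
```

Accumulating one eigenvalue at a time keeps memory proportional to the number of draws rather than to n times the number of draws, which matters when n is in the thousands.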

In practice, it suffices to give a data-dependent asymptotic upper bound on q1−α. We will refer to , which depends on Pn, as an asymptotic upper bound of q1−α if

| (7) |

If q1−α is consistently estimated, one possible choice of is this estimate of q1−α – the inequality above would also become an equality provided the conclusion of Theorem 4 holds. It is easy to derive a data-dependent upper bound with this property using Chebyshev’s inequality. To do so, we first note that

where we have interchanged the variance operation and the limit using the L2 martingale convergence theorem and the last equality holds because λk, k = 1, 2,…, are the eigenvalues of the Hilbert-Schmidt integral operator with kernel . Under mild regularity conditions, can be consistently estimated using . Provided , we find that

| (8) |

where the limit variate has mean zero and unit variance. The following theorem gives a valid choice of .

Theorem 5. Fix α ∈ (0, 1) and suppose that C1), C2) and C3) hold. Then, under $H_0$ and provided in probability, is a valid upper bound in the sense of (7).

The result follows immediately from the one-sided Chebyshev (Cantelli) inequality, which states that P(X > t) ≤ 1/(1 + t²) for any t > 0 and any random variable X with mean zero and unit variance. For α = 0.05, the above demonstrates that a conservative cutoff is . This theorem illustrates concretely that we can obtain a test that asymptotically controls the type I error. In practice, we recommend either using the result of Corollary 2 whenever possible or estimating the eigenvalues of the matrix in (6).
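The conservative cutoff rests on simple arithmetic: setting 1/(1 + t²) = α gives t = (1/α − 1)^(1/2), so t = √19 ≈ 4.36 for α = 0.05. Because each Zk² − 1 has variance 2, the limit in Theorem 4 has variance 2 Σk λk², and a cutoff for nψn of this type is t times the square root of an estimate of that variance. The Python sketch below takes such a variance estimate as input (how it is formed is left open), and its function names are hypothetical.

```python
import math


def cantelli_multiplier(alpha):
    """Smallest t > 0 with 1 / (1 + t^2) <= alpha, namely t = sqrt(1/alpha - 1)."""
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must lie in (0, 1)")
    return math.sqrt(1.0 / alpha - 1.0)


def conservative_cutoff(limit_variance_hat, alpha=0.05):
    """Cutoff for n * psi_n: the Cantelli multiplier times an estimated limit standard deviation."""
    return cantelli_multiplier(alpha) * math.sqrt(limit_variance_hat)
```

For a single unit eigenvalue (limit variance 2), this gives √38 ≈ 6.16, compared with the exact quantile of about 2.84, which shows how conservative the bound can be.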

We note that the condition that the eigenvalues λk are not all zero holds in many but not all examples of interest. Fortunately, the plausibility of this assumption can be evaluated analytically. In Section 5, we show that this condition does not hold in Example 5 and provide a way forward despite this.

4. Asymptotic behavior under the alternative

4.1. Consistency under a fixed alternative

We present two analyses of the asymptotic behavior of our test under a fixed alternative. The first relies on a good estimate of P0. Under this condition, we give an interpretable limit distribution that provides insight into the behavior of our estimator under the alternative. Somewhat surprisingly, as we show, the estimate of P0 need not be close to P0 to obtain an asymptotically consistent test, even if the resulting estimate of ψ0 is nowhere near the truth. In the second analysis, we give more general conditions under which our test is consistent if H1 holds.

4.1.1. Nuisance functions have been estimated well

As we now establish, our test has power against all alternatives P0 except for the fringe cases discussed in Corollary 1 with Γ0 one-degenerate. We first note that

When scaled by n1/2, the leading term on the right-hand side converges in distribution to a mean-zero normal random variable under regularity conditions. The second summand is typically oP(n−1/2) under conditions, for example, on the entropy of the class of plausible realizations of the random function (o1, o2) ↦ Γn(o1, o2) [Nolan and Pollard, 1987, 1988]. In view of the second statement in Theorem 3, the third summand is a second-order term that will often be negligible, even after scaling by n1/2. As such, under certain regularity conditions, the leading term in the representation above determines the asymptotic behavior of ψn, as described in the following theorem.

Theorem 6 (Asymptotic distribution under H1). Suppose that , that , and furthermore, that o ↦ ∫ Γn(o1, o)dP0(o) belongs to a fixed P0−Donsker class with probability tending to 1 while . If H1 holds, we have that , where .

In view of the results of Section 2, τ² coincides with the nonparametric efficiency bound for ψ0, that is, the smallest asymptotic variance attainable by regular, asymptotically linear estimators in a nonparametric model. Hence, ψn is an asymptotically efficient estimator of ψ0 under H1. Sufficient conditions for ∫ Γn(o1, o)dP0(o) to belong to a fixed P0−Donsker class with probability approaching one are given in Supplementary Appendix B.2.

The following corollary is trivial in light of Theorem 6. It establishes that the test is consistent against (essentially) all alternatives provided the needed components of the likelihood are estimated sufficiently well.

Corollary 3 (Consistency under a fixed alternative). Suppose that the conditions of Theorem 6 hold. Furthermore, suppose that τ² > 0 and . Then, under H1, the test is consistent in the sense that

The requirement that is very mild given that q1−α will be finite whenever . As such, we would not expect the estimated cutoff to grow arbitrarily large as sample size grows, at least beyond the extent allowed by our corollary. This suggests that most non-trivial upper bounds satisfying (7) will yield a consistent test.

4.1.2. Nuisance functions have not been estimated well

We now consider the case where the nuisance functions are not estimated well, in the sense that the consistency conditions of Theorem 6 do not hold. In particular, we argue that failure of these conditions does not necessarily undermine the consistency of our test. Let be the estimated cutoff for our test, and suppose that . Suppose also that is asymptotically bounded away from zero in the sense that, for some δ > 0, tends to one. This condition is reasonable given that if H1 holds and is nevertheless a (possibly inconsistent) estimator of P0. Assuming that , which is true under entropy conditions on Γn [Nolan and Pollard, 1987, 1988], we have that

We have accounted for the random term as in the proof of Corollary 3. Of course, this result is less satisfying than Theorem 6, which provides a concrete limit distribution.

4.2. Consistency under a local alternative

We consider local alternatives of the form

where hn → h in L2(P0) for some non-degenerate h and P0 satisfies the null hypothesis H0. Suppose that the conditions of Theorem 4 hold. By Theorem 2.1 of Gregory [1977], we have that

where is the U−statistic empirical measure from a sample of size n drawn from Qn, 〈·, ·〉 is the inner product in L2(P0), Zk and λk are as in Theorem 4, and fk is a normalized eigenfunction corresponding to eigenvalue λk described in Theorem 4. By the contiguity of Qn, the conditions of Theorem 4 yield that the result above also holds with replaced by , our estimator applied to a sample of size n drawn from Qn.

If each λk is non-negative, the limiting distribution under Qn stochastically dominates the asymptotic distribution under P0; furthermore, if 〈fk, h〉 ≠ 0 for some k with λk > 0, this dominance is strict. It is straightforward to show that, under the conditions of Theorem 4, the above holds if and only if , that is, if the sequence of alternatives is not too hard. Suppose that a consistent estimate of q1−α is available. By Le Cam's third lemma, this estimate remains consistent for q1−α even when it is computed on samples of size n drawn from Qn rather than P0. This proves the following theorem.

Theorem 7 (Consistency under a local alternative). Suppose that the conditions of Theorem 4 hold. Then, under and provided lim , the proposed test is locally consistent in the sense that , where is a consistent estimator of q1−α.
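The stochastic-dominance argument behind Theorem 7 can be illustrated numerically. The sketch below approximates the asymptotic rejection probability under a local alternative, assuming that the limit under Qn takes the form Σk λk[(Zk + ak)² − 1] with ak = 〈fk, h〉 (the usual form of such limits under contiguous alternatives, consistent with the role of 〈fk, h〉 above) and that the cutoff is the exact null quantile. The eigenvalues and shifts used are made up for illustration, and the function name is hypothetical.

```python
import numpy as np


def local_rejection_probability(eigenvalues, shifts, alpha=0.05,
                                n_draws=20_000, seed=0):
    """Asymptotic rejection probability under a local alternative.

    Assumes the limit under Q_n is sum_k lam_k * ((Z_k + a_k)**2 - 1), with
    a_k = <f_k, h>, and that the cutoff is the (1 - alpha)-quantile of the
    null limit sum_k lam_k * (Z_k**2 - 1). Both are approximated by Monte Carlo.
    """
    lam = np.asarray(eigenvalues, dtype=float)
    a = np.asarray(shifts, dtype=float)
    rng = np.random.default_rng(seed)
    z = rng.standard_normal((n_draws, lam.size))
    null_draws = (z ** 2 - 1.0) @ lam
    cutoff = np.quantile(null_draws, 1.0 - alpha)
    # Reusing the same normals isolates the effect of the shifts a_k.
    alt_draws = ((z + a) ** 2 - 1.0) @ lam
    return float(np.mean(alt_draws > cutoff))


print(local_rejection_probability([1.0, 0.5], [2.0, 0.0]))  # well above 0.05
print(local_rejection_probability([1.0, 0.0], [0.0, 3.0]))  # about 0.05
```

Shifting only a coordinate whose eigenvalue is zero leaves the rejection probability at α, matching the requirement that 〈fk, h〉 ≠ 0 for some k with λk > 0.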

5. Illustrations

We now return to Examples 2, 3, 4, and 5. We do not return to Example 1 because it has already been well studied; for example, the fixed sample size variant was studied in detail in Gretton et al. [2006]. We first show that Examples 2, 3 and 4 satisfy the regularity conditions described in Section 2. Specifically, we show that all involved parameters R and S belong to under reasonable conditions. Furthermore, we determine explicit remainder terms for the asymptotic representation used in each example and describe conditions under which these remainder terms are negligible. For any , we will use the shorthand notation for in a neighborhood of zero.

Example 2 (Continued).

The parameter S with SP ≡ 0 belongs to trivially, with . Condition (S1) holds with xR(o) = w. Condition (S2) holds using that Rt(w) equals

| (9) |

Since we need only consider uniformly bounded h1 and h2, we see that, for t sufficiently small, Rt(w) is twice continuously differentiable with uniformly bounded derivatives. Condition (S3) is satisfied by

and . If mina P (A = a | W) is bounded away from zero with probability 1 uniformly in P, it follows that is uniformly bounded.

Clearly, we have that . We can also verify that equals

The above remainder is doubly robust in the sense that it is zero if either the treatment mechanism (i.e., the probability of A given W) or the outcome regression (i.e., the expected value of Y given A and W) is correctly specified under P. In a randomized trial where the treatment mechanism is known and specified correctly in P, we have that and thus . More generally, an upper bound for the remainder can be found using the Cauchy-Schwarz inequality, which relates its rate to the product of the L2(P0)-norms of the differences between the treatment mechanisms under P and P0 and between the outcome regressions under P and P0.
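The double robustness described above can be checked numerically. Because the display for this remainder did not survive extraction, the sketch assumes the standard form obtained from an augmented inverse-probability-weighted correction of the outcome regression at each treatment level a, namely (μ − μ0)(a, w)[1 − g0(a | w)/g(a | w)], where μ and g denote the outcome regression and treatment mechanism under P and μ0, g0 those under P0; the blip remainder is the a = 1 term minus the a = 0 term. This is an assumed form, not necessarily the exact remainder derived in the paper, and the function name is hypothetical.

```python
import numpy as np


def blip_remainder(mu, mu0, g, g0):
    """Pointwise remainder of an AIPW-type expansion of w -> mu(1, w) - mu(0, w).

    Assumed form: sum over a in {0, 1} of sign(a) * (mu(a, w) - mu0(a, w))
    * (1 - g0(a | w) / g(a | w)). Each argument has shape (n, 2); column a
    holds the value at treatment level a for n covariate values.
    """
    sign = np.array([-1.0, 1.0])  # arm 0 enters with a minus sign, arm 1 with a plus
    return ((mu - mu0) * (1.0 - g0 / g)) @ sign


rng = np.random.default_rng(1)
n = 1000
mu0 = rng.normal(size=(n, 2))                        # true outcome regression
mu = mu0 + rng.normal(scale=0.3, size=(n, 2))        # misspecified regression
p0 = rng.uniform(0.2, 0.8, size=n)                   # true P0(A = 1 | W)
p = np.clip(p0 + rng.normal(scale=0.05, size=n), 0.1, 0.9)
g0 = np.column_stack([1.0 - p0, p0])
g = np.column_stack([1.0 - p, p])
remainder = blip_remainder(mu, mu0, g, g0)
```

The remainder vanishes when either μ = μ0 or g = g0, and its mean absolute value is bounded, arm by arm, by the product of the empirical L2 norms of the two errors.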

Example 3 (Continued).

For (S1) we take xR = xS = w. Condition (S2) can be verified using an expression similar to that in (9). Condition (S3) is satisfied by

If mina P (A = a | W) is bounded away from zero with probability 1 uniformly in P, both and are uniformly bounded.

Similarly to Example 2, we have that is equal to

The remainder is equal to the above display but with A = 1 replaced by A = 0. The discussion of the doubly robust remainder term from Example 2 applies to these remainders as well.

Example 4 (Continued).

The parameter S is the same as in Example 2. The parameter R satisfies (S1) with xR(o) = w and (S2) by an identity analogous to that used in Example 2. Condition (S3) is satisfied by . By the bounds on Y, is uniformly bounded. Here, the remainder terms are both exactly zero: . Thus, we have that in this example.

The requirement that in Corollary 2, and more generally that there exists a nonzero eigenvalue λj for the limit distribution in Theorem 4 to be nondegenerate, may at times present an obstacle to our goal of obtaining asymptotic control of the type I error. This is the case for Example 5, which we now discuss further. Nevertheless, we show that with a little finesse the type I error can still be controlled at the desired level for the given test. In fact, the test we discuss has type I error converging to zero, suggesting that it may be noticeably conservative in small to moderate samples.

Example 5 (Continued).

In this example, one can take xR = w and xS = w(−k). Furthermore, it is easy to show that

The first-order approximations for R and S are exact in this example, as the remainder terms and are both zero. However, we note that if EP(Y | W) = EP(Y | W(−k)) almost surely, it follows that . This implies that Γ0 ≡ 0 almost surely under H0. As such, under the conditions of Theorem 4, all of the eigenvalues in the limit distribution of nψn in Theorem 4 are zero and nψn → 0 in probability. Because this limit distribution is degenerate at zero, an estimate of its (1 − α)-quantile no longer provides a cutoff that controls the type I error at level α, rendering our proposed test invalid in this example.

Nevertheless, there is a simple albeit unconventional way to repair this example. Let A be a Bernoulli random variable, independent of all other variables, with fixed probability of success p ∈ (0,1). Replace SP with o ↦ EP(Y | A = 1, W(−k) = w(−k)) from Example 3, which then yields

It then follows that and in particular Γ0 is no longer constant. In this case, the limit distribution given in Theorem 4 is non-degenerate. Consistent estimation of q1−α thus yields a test that asymptotically controls type I error. Given that the proposed estimator ψn converges to zero faster than n−1, the probability of rejecting the null approaches zero as sample size grows. In principle, we could have chosen any positive cutoff given that nψn → 0 in probability, but choosing a more principled cutoff seems judicious.

Because p is known, the remainder term is equal to zero. Furthermore, in view of the independence between A and all other variables, one can estimate by regressing Y on W(−k) using all of the data without including the covariate A.

In future work, it may be worth checking whether the parameter is third-order pathwise differentiable under the null and, if so, whether this allows us to construct an α−level test without resorting to an artificial source of randomness.

6. Simulation studies

In simulation studies, we explored the performance of our proposed test in the context of Examples 2, 3 and 4, and compared our method to the approach of Racine et al. [2006], for which software is readily available (see, e.g., the R package np [Hayfield and Racine, 2008]). We also evaluate, in two different scenarios, the performance of significance evaluation based on the eigenvalues of the Gram matrix defined in (6) for Example 3. We report the results of these simulation studies in this section.

In all simulation settings, we consider an adaptive bandwidth selection procedure that is a variant of the median heuristic employed in the classical MMD setting, in which RP and SP do not depend on P [Gretton et al., 2012a]. In that case, the median heuristic sets the bandwidth equal to the median of the entries of the 2n × 2n Euclidean distance matrix of {R(Oi) : i = 1, …, n} ⋃ {S(Oi) : i = 1, …, n}, where the subscript P of R and S has been omitted to emphasize that these functions do not depend on P in the classical MMD setting. In our case, we choose the bandwidth to be equal to the median of the Euclidean distance matrix between scalar or vector-valued observations (see Concluding Remark b in Section 7 for the extension to vector-valued unknown functions) in

This extension is natural in that these quantities are reminiscent of one-step estimators [Pfanzagl, 1982] of the unknown R0 and S0, which should help this procedure account for the uncertainty in the estimates of R0 and S0. Except where specified, every MMD result presented in this section uses this median heuristic to select the bandwidth. We also compare this procedure to a fixed choice of bandwidth in two of our settings.
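The median step of the heuristic reduces to a short computation. In the proposed procedure, the inputs would be the one-step-like transformed values described above rather than R(Oi) and S(Oi); this sketch, whose function name is hypothetical, only illustrates the median itself. Following the wording above, it uses every entry of the 2n × 2n distance matrix, including the zero diagonal; some implementations use only the off-diagonal entries.

```python
import numpy as np


def median_heuristic_bandwidth(r_values, s_values):
    """Median of all entries of the 2n x 2n Euclidean distance matrix of the
    pooled points {r_1, ..., r_n} U {s_1, ..., s_n}.

    Inputs are arrays of shape (n,) for scalar values or (n, d) for vectors.
    The full matrix (zero diagonal included) is used here.
    """
    r = np.asarray(r_values, dtype=float)
    s = np.asarray(s_values, dtype=float)
    if r.ndim == 1:
        r = r[:, None]
    if s.ndim == 1:
        s = s[:, None]
    pooled = np.vstack([r, s])
    diffs = pooled[:, None, :] - pooled[None, :, :]
    dist = np.sqrt(np.sum(diffs ** 2, axis=-1))
    return float(np.median(dist))


print(median_heuristic_bandwidth([0.0, 1.0], [2.0, 3.0]))  # 1.0
```

Multiplying all inputs by a constant multiplies the selected bandwidth by the same constant, which is why a Gaussian kernel with this bandwidth is invariant to a rescaling of R0 and S0.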

6.1. Simulation scenario 1

We use an observed data structure (W, A, Y), where W ≜ (W1, W2, …, W5) is drawn from a standard 5-dimensional normal distribution, A is drawn from a Bernoulli(0.5) distribution, and Y = μ(A, W) + 5ξ(A, W). The different forms of the conditional mean function μ(a, w) are given in Table 1, and ξ(a, w) is a random variate following a Beta distribution with shape parameters α = 3 expit(aw2) and β = 2 expit[(1 − a)w1], shifted to have mean zero, where expit(x) = 1/(1 + exp(−x)).
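The data-generating mechanism of scenario 1 can be sketched as follows. The baseline function m(a, w) in Table 1 did not survive extraction, so the sketch takes μ as an argument and uses a clearly hypothetical placeholder for m; only the distributions of W, A, and ξ follow the description above. W1, …, W5 are stored in columns 0 to 4, and all function names are hypothetical.

```python
import numpy as np


def expit(x):
    return 1.0 / (1.0 + np.exp(-x))


def simulate_scenario1(n, mu, seed=0):
    """Draw (W, A, Y) as in simulation scenario 1.

    W ~ N(0, I_5), A ~ Bernoulli(0.5), and Y = mu(A, W) + 5 * xi(A, W), where
    xi is Beta(3 expit(A W2), 2 expit((1 - A) W1)) shifted to mean zero.
    `mu` must be a vectorized function of (a, w) returning shape (n,).
    """
    rng = np.random.default_rng(seed)
    w = rng.standard_normal((n, 5))           # columns 0..4 hold W1..W5
    a = rng.binomial(1, 0.5, size=n)
    shape1 = 3.0 * expit(a * w[:, 1])         # W2 is column 1
    shape2 = 2.0 * expit((1 - a) * w[:, 0])   # W1 is column 0
    draws = rng.beta(shape1, shape2)
    xi = draws - shape1 / (shape1 + shape2)   # subtract the Beta mean a / (a + b)
    y = mu(a, w) + 5.0 * xi
    return w, a, y


def m_placeholder(a, w):
    """Hypothetical stand-in for the baseline m(a, w) of Table 1 (not recovered)."""
    return np.zeros(a.shape[0])


def mu_1b(a, w):
    """Simulation 1b: m(a, w) + 0.4[a w3 + (1 - a) w4]; W3, W4 are columns 2, 3."""
    return m_placeholder(a, w) + 0.4 * (a * w[:, 2] + (1 - a) * w[:, 3])


w, a, y = simulate_scenario1(1000, mu_1b)
```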

Table 1.

Conditional mean function in each of three simulation settings within simulation scenario 1. Here, , and the third and fourth columns indicate, respectively, whether μ(1, W) and μ(0, W) are equal in distribution or almost surely.

| Setting | μ(a, w) | Equal in distribution | Equal almost surely |
|---|---|---|---|
| Simulation 1a | m(a, w) | × | × |
| Simulation 1b | m(a, w) + 0.4[aw3 + (1 − a)w4] | × | |
| Simulation 1c | m(a, w) + 0.8aw3 | | |

We performed tests of the null in which μ(1, W) is equal to μ(0, W) almost surely and in distribution, as presented in Examples 2 and 3, respectively. Our estimate of P0 was constructed using the knowledge that P0 (A = 1 | W) = 1/2, as would be available, for example, in the context of a randomized trial. The conditional mean function μ(a, w) was estimated using the ensemble learning algorithm Super Learner [van der Laan et al., 2007], as implemented in the SuperLearner package [Polley and van der Laan, 2013]. This algorithm was implemented using 10-fold cross-validation to determine the best convex combination of regression function candidates minimizing mean-squared error using a candidate library consisting of SL.rpart, SL.glm.interaction, SL.glm, SL.earth, and SL.nnet. We used the results of Corollary 2 to evaluate significance for Example 2, and the eigenvalue approach presented in Section 3.2.2 to evaluate significance for Example 3, where we used all of the positive eigenvalues for n = 125 and the largest 200 positive eigenvalues for n > 125 using the rARPACK package [Qiu et al., 2014].
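The eigenvalue step of Section 3.2.2, combined with the truncation rule used here, might look as follows. This sketch assumes that the matrix in (6) is the double-centered matrix of kernel evaluations scaled by 1/n (Section 6.3 refers to a centered Gram matrix). It uses a dense NumPy eigendecomposition rather than the ARPACK-based rARPACK package, and the function name and tolerance are hypothetical.

```python
import numpy as np


def gram_eigenvalue_estimates(gram, max_eigs=200, keep_all_up_to=125, rel_tol=1e-10):
    """Positive eigenvalues of the double-centered matrix H K H / n, largest first.

    K is an n x n symmetric matrix of kernel evaluations and H = I - 11'/n.
    For n <= keep_all_up_to every positive eigenvalue is kept; otherwise only
    the max_eigs largest. Eigenvalues below rel_tol * max(largest magnitude, 1)
    are treated as numerical zeros. Centering and 1/n scaling are assumptions.
    """
    k = np.asarray(gram, dtype=float)
    n = k.shape[0]
    h = np.eye(n) - np.full((n, n), 1.0 / n)
    eig = np.linalg.eigvalsh(h @ k @ h) / n  # returned in ascending order
    tol = rel_tol * max(np.abs(eig).max(), 1.0)
    positive = eig[eig > tol][::-1]          # largest first
    if n > keep_all_up_to:
        positive = positive[:max_eigs]
    return positive
```

The resulting values could then serve as the eigenvalue estimates in the Monte Carlo approximation of q1−α described in Section 3.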

To evaluate the performance of the adaptive bandwidth selection procedure in the context of a test of the equality in distribution of two unknown functions applied to w, namely μ(1, ·) and μ(0, ·), we also ran our procedure at the fixed bandwidths 2ᵏ, k = −2, −1, 0, 1, 2 (that is, 1/4, 1/2, 1, 2, and 4). In the context of a test of the almost sure equality of μ(1, W) and μ(0, W), we compare our adaptive bandwidth selection procedure to fixing the bandwidth at one. The performance of the adaptive bandwidth selection procedure is evaluated in more detail for a null hypothesis of the almost sure equality of two unknown functions in our third simulation scenario.

We ran 1,000 Monte Carlo simulations with samples of size 125, 250, 500, 1000, and 2000, except for the np package, which we only ran for 500 Monte Carlo simulations. For Example 2 we compared our approach with that of Racine et al. [2006] using the npsigtest function from the np package. This requires first selecting a bandwidth, which we did using the npregbw function, specifying that we wanted a local linear estimator and the bandwidth to be selected using the cv.aic method [Hayfield and Racine, 2008].

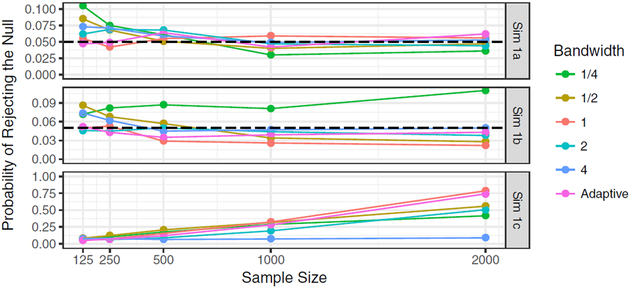

Figure 1 displays the empirical probability of rejecting the null for our test of equality in distribution of μ(1, W) and μ(0, W) in Simulations 1a, 1b and 1c. In particular, we observe that our method properly controls type I error in Simulation 1a when testing the hypothesis that μ(1, W) is equal in distribution to μ(0, W). Type I error is also properly controlled in Simulation 1b, though the fixed bandwidth procedures appear to be conservative at the larger sample sizes. The adaptive bandwidth yielded performance similar to that of the best fixed bandwidth considered, namely 1. Our selection procedure generally picked values averaging around 1.5; at large sample sizes there was little variability around this average, while at smaller sample sizes the selected bandwidths generally fell between 1.25 and 1.75. The adaptive procedure always controlled type I error at or near the nominal level and had power that increased with sample size and was comparable to that of a fixed bandwidth of 1. The largest fixed bandwidth, namely 4, yielded no power at the alternative in Simulation 1c, whereas the smallest fixed bandwidth, namely 1/4, yielded inflated type I error at one of the null distributions, namely Simulation 1b.

Fig. 1.

Empirical probability of rejecting the null when testing the null hypothesis that μ(1, W) is equal in distribution to μ(0, W) (Example 3) in Simulation 1. Table 1 indicates that the null is true in Simulations 1a and 1b, and the alternative is true in Simulation 1c.

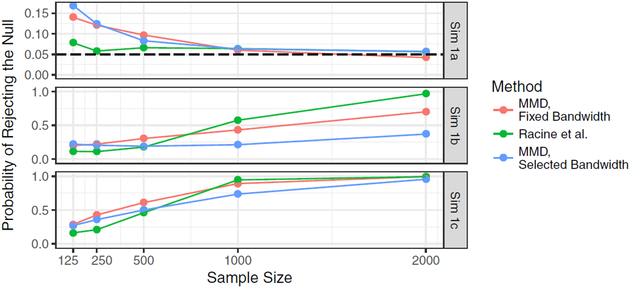

Figure 2 displays the empirical probability of rejecting the null for our approach and for the method implemented in the np package. At smaller sample sizes, our method does not appear to control type I error near the nominal level, likely because we use an asymptotic result to compute the cutoff even when the sample size is small. Nevertheless, as sample size grows, the type I error of our test approaches the nominal level. The fixed unit bandwidth outperforms the median heuristic bandwidth selection procedure in this setting, especially in terms of power in Simulation 1b. Unlike our proposal, Racine et al. [2006] used the bootstrap to evaluate the significance of their test; it would be interesting to see whether a bootstrap procedure also improves our small-sample results. At larger sample sizes, the method of Racine et al. appears to outperform our approach in terms of power in Simulations 1b and 1c. At smaller sample sizes (125, 250, 500), our method achieves higher power than that of Racine et al., but at the cost of double their type I error. Overall, the method of Racine et al. therefore appears to outperform our approach in Simulations 1a, 1b, and 1c when testing the null hypothesis that μ(1, W) – μ(0, W) is almost surely equal to zero. Nonetheless, the generality of our approach allows our test to be applied in more settings than the method of Racine et al.; for example, we are not aware of any other test devised to test the equality in distribution of μ(1, W) and μ(0, W) (Figure 1).

Fig. 2.

Empirical probability of rejecting the null when testing the null hypothesis that μ(1, W) – μ(0, W) is almost surely equal to zero (Example 2) in Simulation 1. Table 1 indicates that the null is true in Simulation 1a, and the alternative is true in Simulations 1b and 1c.

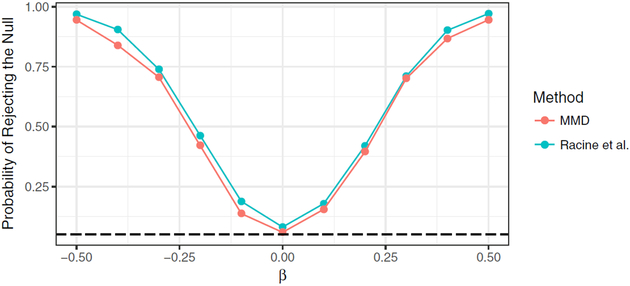

6.2. Simulation scenario 2: comparison with Racine et al. [2006]

We reproduced a simulation study from Section 4.1 of Racine et al. [2006] at sample size n = 100. In particular, we let , where A, W1, and W2 are drawn independently from Bernoulli(0.5), Bernoulli(0.5), and N(0,1) distributions, respectively. The error term ϵ is unobserved and drawn from a N(0,1) distribution independently of all observed variables. The parameter β was varied over values −0.5, −0.4,…, 0.4, 0.5 to achieve a range of distributions. The goal is to test whether E0 (Y | A, W) = E0 (Y | W) almost surely, or equivalently, that μ(1, W) – μ(0, W) = 0 almost surely.

Due to computational constraints, we only ran the 'Bootstrap I test' to evaluate the significance of the method of Racine et al. [2006]. As the authors report, this method is conservative relative to their 'Bootstrap II test' and indeed achieves lower power (but proper type I error control) in their simulations.

Except for two minor modifications, our implementation of the method in Example 2 is the same as in Simulation 1. First, for a fair comparison with Racine et al. [2006], we estimated P0(A = 1 | W) rather than treating it as known, using the same Super Learner library with the 'family=binomial' setting to account for the fact that A is binary. Second, we scaled the function μ(1, w) – μ(0, w) by a factor of 5 to ensure that most of the probability mass of R0 falls between −1 and 1 (around 99% when β = 0). Even with this scaling, Y is not bounded, although our regularity conditions require it to be. Nonetheless, an evaluation of our method under violations of our assumptions can itself be informative.

Figure 3 displays the empirical null rejection probability of our test as well as that of Racine et al. [2006]. In this setup, used by the authors themselves to showcase their test procedure, our method performs comparably to their proposal, with slightly lower type I error (closer to nominal) and slightly lower power.

Fig. 3.

Empirical probability of rejecting the null when testing the null hypothesis that μ(1, W) – μ(0, W) is almost surely equal to zero in Simulation 2.

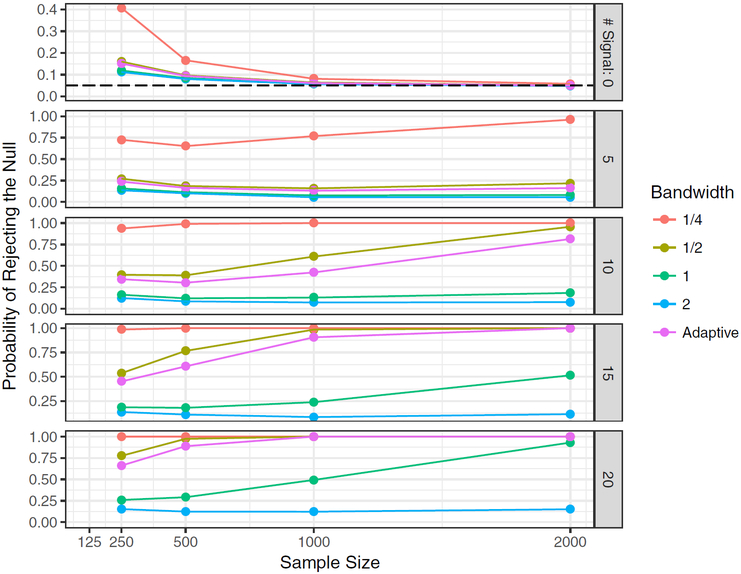

6.3. Simulation scenario 3: higher dimensions

We also explored the performance of our method as extended to tackle higher-dimensional hypotheses, as discussed in Section 7. To do this, we used the same distribution as in Simulation 1 but with Y now a 20-dimensional random variable. Our objective was to test whether μ(1, W) – μ(0, W) is equal to (0, 0, …, 0) almost surely, where μ(a, w) = (μ1(a, w), …, μ20(a, w)) with μj(a, w) the conditional mean of Yj given A = a and W = w. Conditional on A and W, the coordinates of Y are independent. We varied the number of coordinates that represent signal and noise. For signal coordinate j, given A and W, 20Yj was drawn from the same conditional distribution as Y given A and W in Simulation 1c. For noise coordinate j, given A and W, 20Yj was drawn from the same conditional distribution as Y given A and W in Simulation 1a.

Relative to Simulation 1, each coordinate of the outcome has thus been scaled to one twentieth of the size of the outcome in Simulation 1. Apart from the adaptive bandwidth selection procedure discussed at the beginning of this section, we considered defining the MMD with a Gaussian kernel with bandwidths of 1/4, 1/2, 1, and 2. Equivalently, these correspond to bandwidths 5, 10, 20, and 40 had the outcome not been scaled by 1/20.
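The equivalence between the two sets of bandwidths holds because the Gaussian kernel depends on its arguments only through the distance between them divided by the bandwidth. The sketch below checks this numerically, assuming the parameterization exp(−‖x − y‖²/(2h²)); the points are arbitrary and the function name is hypothetical.

```python
import numpy as np


def gaussian_kernel(x, y, bandwidth):
    """Gaussian kernel exp(-||x - y||^2 / (2 * bandwidth^2)) along the last axis.

    This parameterization is an assumption; any kernel that depends on
    ||x - y|| / bandwidth behaves the same way under rescaling.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    sq_dist = np.sum((x - y) ** 2, axis=-1)
    return np.exp(-sq_dist / (2.0 * bandwidth ** 2))


rng = np.random.default_rng(0)
x, y = rng.standard_normal((2, 20))  # two arbitrary 20-dimensional outcomes
for h in (0.25, 0.5, 1.0, 2.0):
    scaled = gaussian_kernel(x / 20.0, y / 20.0, h)
    unscaled = gaussian_kernel(x, y, 20.0 * h)  # bandwidths 5, 10, 20, 40
    print(h, np.isclose(scaled, unscaled))       # True for every h
```

By contrast, keeping the same bandwidth after rescaling the outcome changes the kernel values, which is why a fixed bandwidth is not scale invariant.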

We ran the same Super Learner to estimate μ(1,w) as in Simulation 1, and we again treated the probability of treatment given covariates as known. We evaluated significance by estimating all of the positive eigenvalues of the centered Gram matrix for n = 125 and the largest 200 positive eigenvalues of the centered Gram matrix for n > 125.

Figure 4 displays the empirical probability of rejecting the null for our proposed MMD method. We did not include the results for sample size 125 in the figure because type I error control was too poor: for example, with zero signal coordinates, the probability of rejection was 0.24 for bandwidth 1 and 0.33 for bandwidth 1/2. The adaptive bandwidth method performs comparably to the procedure that fixes the bandwidth at 1/2 a priori. This is consistent with the fact that, across all signal levels and sample sizes, the selected bandwidth was tightly concentrated around 1/2 in all Monte Carlo repetitions: the minimal selected bandwidth was 0.49 and the maximal selected bandwidth was 0.59. Among the fixed bandwidths considered, 1/2 and 1/4 seem to be the best at the largest sample sizes (1000, 2000), with the tradeoff that a bandwidth of 1/4 increases power (substantially for a signal of 5) at the cost of inflated type I error. At smaller sample sizes, the bandwidth of 1/4 yields unacceptably inflated type I error (0.4 at n = 250 and 0.15 at n = 500). Our adaptive bandwidth procedure appears to control type I error well at moderate to large sample sizes (i.e., n ≥ 500). Overall, this simulation shows that our method has power that increases with sample size and with the number of coordinates j for which μj(1, W) – μj(0, W) is not almost surely equal to zero. The only setting in which our adaptive bandwidth procedure appears to be outperformed by a fixed bandwidth is at n = 2000: a fixed bandwidth of 1/4 attains the nominal type I error level at this sample size but dramatically outperforms the adaptive bandwidth when the signal is 5. This discrepancy disappears when the signal is 10.

Fig. 4.

Probability of rejecting the null when testing the null hypothesis that μ(1, W) – μ(0, W) is almost surely equal to zero in Simulation 3.

7. Concluding remarks

We have presented a novel approach to test whether two unknown functions are equal in distribution. Our proposal explicitly allows, and indeed encourages, the use of flexible, data-adaptive techniques for estimating these unknown functions as an intermediate step. Our approach is centered upon the notion of maximum mean discrepancy, as introduced in Gretton et al. [2006], since the MMD provides an elegant means of contrasting the distributions of these two unknown quantities. In their original paper, these authors showed that the MMD, which in their context is used to test whether two probability distributions are equal based on n random draws from each distribution, can be estimated using a U− or V−statistic. Under the null hypothesis, this U− or V−statistic is degenerate and converges to the true parameter value at the fast n−1 rate. Under the alternative, it converges at the standard n−1/2 rate. Because this parameter is a mean over a product distribution from which the data were observed, it is not surprising that a U− or V−statistic yields a good estimate of the MMD. What is surprising is that we were able to construct an estimator with these same rates even when the null hypothesis involves unknown functions that can only be estimated at slower rates. To accomplish this, we used recent developments from the higher-order pathwise differentiability literature. Our simulation studies indicate that our asymptotic results are meaningful in finite samples, and that in specific examples for which other methods exist, our methods can perform comparably to these established, tailor-made methods. Of course, the great appeal of our proposal is that it applies to a much wider class of problems.

In our simulation study, we adapted the median heuristic for selecting the Gaussian kernel bandwidth to our setting, in which R0 and S0 are unknown. In some settings, this bandwidth selection procedure performed well compared to specifying a fixed bandwidth, though we also noted settings in which the adaptive procedure underperformed relative to a fixed bandwidth. An advantage of this adaptive bandwidth selection procedure over a fixed bandwidth (e.g., the unit bandwidth) is that it yields a procedure that is invariant to a rescaling of the unknown functions R0 and S0. For the classical MMD setting in which R0 and S0 are known functions, Gretton et al. [2012b] showed that other bandwidth selection procedures can outperform the median heuristic. One such procedure involves selecting the bandwidth to maximize an estimate of the power of the test of the null hypothesis of equality in distribution of R0(O) and S0(O), subject to a constraint on the estimated type I error. Extending these procedures to our setting where R0 and S0 are unknown is an important area for future research.

We conclude with several possible extensions of our method that may further increase its applicability and appeal.

- (a) Although this condition is satisfied in all but one of our examples, requiring R and S to be in can be somewhat restrictive. Nevertheless, it appears that this condition may be weakened by instead requiring membership in , the class of all parameters T for which there exist some M < ∞ and elements T1, T2, …, TM in such that . While the results in our paper can be established in a similar manner for functions in this generalized class, the expressions for the involved gradients are considerably more complicated. Specifically, we find that, for with and , the quantity equals

In particular, we note the need for conditional expectations with respect to and in the definition of Γ, which could render the implementation of our method more difficult. While we believe this extension is promising, its practicality remains to be investigated.

- (b) While our paper focuses on univariate hypotheses, our results can be generalized to higher dimensions. Suppose that P ↦ RP and P ↦ SP are ℝd-valued functions on . The class of allowed such parameters can be defined similarly as , with all original conditions applying componentwise. The MMD for the vector-valued parameters R and S using the Gaussian kernel is given by , where for any we set

It is not difficult to show then that, for any , is given by

where Id denotes the d−dimensional identity matrix and A′ denotes the transpose of a given vector A. Using these objects, the method and results presented in this paper can be replicated in higher dimensions rather easily.

- (c) Our results can be used to develop confidence sets for infinite dimensional parameters by test inversion. Consider a parameter T satisfying our conditions. Then one can test whether is equal in distribution to zero for any fixed function f that does not rely on P. Under the conditions given in this paper, a 1 – α confidence set for T0 is given by all functions f for which we do not reject at level α. The blip function from Example 2 is a particularly interesting example, since a confidence set for this parameter can be mapped into a confidence set for the sign of the blip function, i.e., the optimal individualized treatment strategy [Robins, 2004]. We would hope that the omnibus nature of the test implies that the confidence set does not contain functions f that are "far away" from T0, in contrast to a test that has no power against certain alternatives. Formalization of this claim is an area of future research.

- (d) To improve upon our proposal for nonparametrically testing variable importance via the conditional mean function, as discussed in Section 5, it may be fruitful to consider the related Hilbert-Schmidt independence criterion [Gretton et al., 2005]. Higher-order pathwise differentiability may prove useful to estimate and infer about this discrepancy measure.
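For orientation on Concluding Remark (b), the sketch below computes the classical unbiased (U-statistic) estimate of the squared MMD with a Gaussian kernel for vector-valued inputs, in the setting where R and S are known and are applied to the same observations, giving paired values R(Oi) and S(Oi). It is not the estimator proposed in this paper, which adds correction terms based on estimated gradients; the function name and the kernel parameterization exp(−‖u − v‖²/(2h²)) are assumptions.

```python
import numpy as np


def mmd2_u_statistic(x, y, bandwidth):
    """Unbiased U-statistic estimate of the squared MMD between the laws of
    x_i and y_i, using a Gaussian kernel exp(-||u - v||^2 / (2 * bandwidth^2)).

    x and y have shape (n,) or (n, d); row i of each comes from observation i.
    Kernel h(z_i, z_j) = k(x_i, x_j) + k(y_i, y_j) - k(x_i, y_j) - k(x_j, y_i).
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    if x.ndim == 1:
        x = x[:, None]
    if y.ndim == 1:
        y = y[:, None]

    def gram(a, b):
        sq = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1)
        return np.exp(-sq / (2.0 * bandwidth ** 2))

    n = x.shape[0]
    h = gram(x, x) + gram(y, y) - gram(x, y) - gram(y, x)
    np.fill_diagonal(h, 0.0)  # a U-statistic averages over pairs i != j only
    return float(h.sum() / (n * (n - 1)))
```

Under the null, this statistic is degenerate and fluctuates around zero at the n−1 scale, whereas it stays bounded away from zero when the two distributions differ.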

Supplementary Material

Acknowledgement

The authors thank Noah Simon for helpful discussions. Alex Luedtke was supported by the Department of Defense (DoD) through the National Defense Science & Engineering Graduate Fellowship (NDSEG) Program. Marco Carone was supported by a Genentech Endowed Professorship at the University of Washington. Mark van der Laan was supported by NIH grant R01 AI074345–06.

Footnotes

Supplementary Appendices

Supplementary Appendix A reviews first- and second-order pathwise differentiability. Supplementary Appendix B.1 contains U−process results from Nolan and Pollard [1987, 1988] that are useful in our context, and Supplementary Appendix B.2 contains an empirical process result used to establish the Donsker condition that was assumed under H1. Supplementary Appendix C contains proofs for the results in the main text.

Contributor Information

Alexander R. Luedtke, Vaccine and Infectious Disease Division, Fred Hutchinson Cancer Research Center, Seattle, WA, USA, aluedtke@fredhutch.org

Marco Carone, Department of Biostatistics, University of Washington, Seattle, WA, USA.

Mark J. van der Laan, Division of Biostatistics, University of California, Berkeley, Berkeley, CA, USA

References

- Berlinet A and Thomas-Agnan C. Reproducing kernel Hilbert spaces in probability and statistics. Springer Science & Business Media, 2011.

- Carone M, Díaz I, and van der Laan MJ. Higher-order Targeted Minimum Loss-based Estimation. Technical report, Division of Biostatistics, University of California, Berkeley, 2014.

- Chakraborty B and Moodie EE. Statistical Methods for Dynamic Treatment Regimes. Springer, Berlin Heidelberg New York, 2013.

- Franz C. Discrete approximation of integral operators. Proceedings of the American Mathematical Society, 134(8):2437–2446, 2006.

- Gregory GG. Large sample theory for U-statistics and tests of fit. The Annals of Statistics, pages 110–123, 1977.

- Gretton A, Bousquet O, Smola A, and Schölkopf B. Measuring statistical dependence with Hilbert-Schmidt norms. In Algorithmic Learning Theory, pages 63–77. Springer, 2005.

- Gretton A, Borgwardt KM, Rasch M, Schölkopf B, and Smola AJ. A kernel method for the two-sample-problem. In Advances in Neural Information Processing Systems, pages 513–520, 2006.

- Gretton A, Fukumizu K, Harchaoui Z, and Sriperumbudur BK. A fast, consistent kernel two-sample test. In Advances in Neural Information Processing Systems, pages 673–681, 2009.

- Gretton A, Borgwardt KM, Rasch MJ, Schölkopf B, and Smola A. A kernel two-sample test. The Journal of Machine Learning Research, 13(1):723–773, 2012a.

- Gretton A, Sejdinovic D, Strathmann H, Balakrishnan S, Pontil M, Fukumizu K, and Sriperumbudur BK. Optimal kernel choice for large-scale two-sample tests. In Advances in Neural Information Processing Systems, pages 1205–1213, 2012b.

- Hayfield T and Racine JS. Nonparametric Econometrics: The np Package. Journal of Statistical Software, 27(5), 2008. URL http://www.jstatsoft.org/v27/i05/.

- Lavergne P, Maistre S, and Patilea V. A significance test for covariates in nonparametric regression. Electronic Journal of Statistics, 9:643–678, 2015.

- Nolan D and Pollard D. U-processes: rates of convergence. The Annals of Statistics, 15(2):780–799, 1987.

- Nolan D and Pollard D. Functional Limit Theorems for U-Processes. The Annals of Probability, 16(3):1291–1298, 1988. ISSN 0091-1798. doi: 10.1214/aop/1176991691.

- Pfanzagl J. No Title. Springer, Berlin Heidelberg New York, 1982.

- Pfanzagl J. Asymptotic expansions for general statistical models, volume 31. Springer-Verlag, 1985.

- Polley E and van der Laan MJ. SuperLearner: super learner prediction, 2013. URL http://cran.r-project.org/package=SuperLearner.

- Qiu Y, Mei J, and authors of the ARPACK library. See file AUTHORS for details. rARPACK: R wrapper of ARPACK for large scale eigenvalue/vector problems, on both dense and sparse matrices, 2014. URL http://cran.r-project.org/package=rARPACK.

- Racine JS, Hart J, and Li Q. Testing the significance of categorical predictor variables in nonparametric regression models. Econometric Reviews, 25(4):523–544, 2006.

- Robins JM. Optimal structural nested models for optimal sequential decisions. In Lin DY and Heagerty P, editors, Proceedings of the Second Seattle Symposium in Biostatistics, volume 179, pages 189–326, 2004.

- Robins JM, Li L, Tchetgen E, and van der Vaart AW. Higher order influence functions and minimax estimation of non-linear functionals. In Essays in Honor of David A. Freedman, IMS Collections, Probability and Statistics, pages 335–421. Springer, New York, 2008.

- Sejdinovic D, Sriperumbudur B, Gretton A, and Fukumizu K. Equivalence of distance-based and RKHS-based statistics in hypothesis testing. The Annals of Statistics, 41(5):2263–2291, 2013.

- Steinwart I. On the influence of the kernel on the consistency of support vector machines. The Journal of Machine Learning Research, 2:67–93, 2002.

- van der Laan MJ and Rose S. Targeted Learning: Causal Inference for Observational and Experimental Data. Springer, New York, New York, 2011.

- van der Laan MJ, Polley E, and Hubbard A. Super Learner. Statistical Applications in Genetics and Molecular Biology, 6(1):Article 25, 2007.

- van der Vaart AW. Higher order tangent spaces and influence functions. Statistical Science, 29(4):679–686, 2014.

- van der Vaart AW and Wellner JA. Weak convergence and empirical processes. Springer, Berlin Heidelberg New York, 1996.
