Abstract

Continuous attractor models of working-memory store continuous-valued information in continuous state-spaces, but are sensitive to noise processes that degrade memory retention. Short-term synaptic plasticity of recurrent synapses has previously been shown to affect continuous attractor systems: short-term facilitation can stabilize memory retention, while short-term depression possibly increases continuous attractor volatility. Here, we present a comprehensive description of the combined effect of both short-term facilitation and depression on noise-induced memory degradation in one-dimensional continuous attractor models. Our theoretical description, applicable to rate models as well as spiking networks close to a stationary state, accurately describes the slow dynamics of stored memory positions as a combination of two processes: (i) diffusion due to variability caused by spikes; and (ii) drift due to random connectivity and neuronal heterogeneity. We find that facilitation decreases both diffusion and directed drifts, while short-term depression tends to increase both. Using mutual information, we evaluate the combined impact of short-term facilitation and depression on the ability of networks to retain stable working memory. Finally, our theory predicts the sensitivity of continuous working memory to distractor inputs and provides conditions for stability of memory.

Author summary

The ability to transiently memorize positions in the visual field is crucial for behavior. Models and experiments have shown that such memories can be maintained in networks of cortical neurons with a continuum of possible activity states, that reflects the continuum of positions in the environment. However, the accuracy of positions stored in such networks will degrade over time due to the noisiness of neuronal signaling and imperfections of the biological substrate. Previous work in simplified models has shown that synaptic short-term plasticity could stabilize this degradation by dynamically up- or down-regulating the strength of synaptic connections, thereby “pinning down” memorized positions. Here, we present a general theory that accurately predicts the extent of this “pinning down” by short-term plasticity in a broad class of biologically plausible network models, thereby untangling the interplay of varying biological sources of noise with short-term plasticity. Importantly, our work provides a novel theoretical link from the microscopic substrate of working memory—neurons and synaptic connections—to observable behavioral correlates, for example the susceptibility to distracting stimuli.

Introduction

Information about past environmental stimuli can be stored and retrieved seconds later from working memory [1, 2]. Strikingly, this transient storage is achieved for timescales of seconds with neuronal dynamics and synaptic transmission operating mostly on time scales of tens of milliseconds and shorter [3]. An influential hypothesis of neuroscience is that working memory emerges from recurrently connected cortical neuronal networks: memories are retained by self-generating cortical activity through positive feedback [4–7], thereby bridging the time scales from milliseconds (neuronal dynamics) to seconds (behavior).

Sensory stimuli are often embedded in a physical continuum: for example, positions of objects in the visual field are continuous, as are frequencies of auditory stimuli, or the position of somatosensory stimuli on the body. Ideally, the organization of cortical working memory circuits should reflect the continuous nature of sensory information [3]. A class of cortical working memory models able to store continuously structured information is that of continuous attractors, characterized by a continuum of meta-stable states, which can be used to retain memories over delay periods much longer than those of the single network constituents [8]. Continuous attractors were proposed as theoretical models for cortical working memory [9–11], path integration [12–14], and other cortical functions [15–17] (see e.g. [3, 18–21] for recent reviews), well before experimental evidence was found in cortical networks [22] and the limbic system [18, 23]. The one-dimensional ring-attractor in the fly responsible for self-orientation [24, 25] is a particularly intriguing example.

Continuous attractor models have been successfully employed in the context of visuospatial working memory to explain behavioral performance [26–29], to predict the effects of neuromodulation [30, 31], or the implications of cognitive impairment [32, 33]. However, in networks with heterogeneities, the continuum of memory states quickly breaks down, since noise and heterogeneities break, transiently or permanently, the crucial symmetry necessary for continuous attractors [10, 11, 13, 16, 34–40]. For example, the stochasticity of neuronal spiking (“fast noise”) leads to transient asymmetries that randomly displace encoded memories along the continuum of states [10, 11, 35, 37, 39, 40], leading, averaged over many trials, to diffusion of encoded information. More drastically, introducing fixed asymmetries (“frozen noise”) due to network heterogeneities causes a directed drift of memories and a collapse of the continuum of attractive states to a set of discrete states. Examples of heterogeneities in biological scenarios include the sparsity of recurrent connections [13, 36], or randomness in neuronal parameters [36] and values of recurrent weights [16, 34, 38]. Since both (fast) noise and heterogeneities are expected in cortical settings, the feasibility of continuous attractors as computational systems of the brain has been called into question [3, 6, 41].

The question then arises whether short-term plasticity of recurrent synaptic connections can rescue the feasibility of continuous attractor models. In particular, short-term depression has a strong effect on the directed drift of attractor states in rate models [42, 43], but no strong conclusions were drawn in a spiking network implementation [44]. Short-term facilitation, on the other hand, increases the retention time of memories in continuous attractor networks with noise-free [38] and, as shown in parallel to this study, noisy [45] rate neurons. In simulations of continuous attractors implemented with spiking neurons for a fixed set of parameters, facilitation was reported to cause slow drift [46, 47] and a reduced amount of diffusion [47]. However, despite the large number of existing studies, several fundamental questions remain unanswered. What are the quantitative effects of short-term facilitation in more complex neuronal models and across facilitation parameters? How does short-term depression influence the strength of diffusion and drift, and how does it interplay with facilitation? Do phenomena reported in rate networks persist in spiking networks? Finally, can a single theory be used to predict all of the effects observed in simulations?

Here, we present a comprehensive description of the effects of short-term facilitation and depression on noise-induced displacement of one-dimensional continuous attractor models. Extending earlier theories for diffusion [39, 40, 45] and drift [38], we derive predictions of the amount of diffusion and drift in ring-attractor models of randomly firing neurons with short-term plasticity, providing, for the first time, a general description of bump displacement in the presence of both short-term facilitation and depression. Our theory is formulated as a rate model with noise, but since the gain-function of the rate model can be chosen to match that of integrate-and-fire models, our theory is also a good approximation for a large class of heterogeneous networks of integrate-and-fire models as long as the network as a whole is close to a stationary state. The theoretical predictions of the noisy rate model are validated against simulations of ring-attractor networks realized with spiking integrate-and-fire neurons. In both theory and simulation, we find that facilitation and depression play antagonistic roles: facilitation tends to decrease both diffusion and drift while depression increases both. We show that these combined effects can still yield reduced diffusion and drift, which increases the retention time of memories. Importantly, since our theory is, to a large degree, independent of the microscopic network configurations, it can be related to experimentally observable quantities. In particular, our theory predicts the sensitivity of networks with short-term plasticity to distractor stimuli.

Results

We investigated, in theory and simulations, the effects of short-term synaptic plasticity (STP) on the dynamics of ring-attractor models consisting of N excitatory neurons with distance-dependent and symmetric excitation, and global (uniform) inhibition provided by a population of inhibitory neurons (Fig 1A). For simplicity, we describe neurons in terms of firing rates, but our theory can be mapped to more complex neurons with spiking dynamics. An excitatory neuron i with 0 ≤ i < N is assigned an angular position θi = 2π·i/N − π ∈ [−π, π), where we identify the bounds of the interval to form a ring topology (Fig 1A). The firing rate ϕi (in units of Hz) for each excitatory neuron i (0 ≤ i < N) is given as a function of the neuronal input:

$$\phi_i(t) = F\big(J_i(t),\, J_{\mathrm{inh}}(t)\big) \tag{1}$$

Here, the input-output relation F relates the dimensionless excitatory Ji and inhibitory Jinh inputs of neuron i to its firing rate. This represents a rate-based simplification of the possibly complex underlying neuronal dynamics [48]. We assume that the excitatory input Ji(t) to neuron i at time t is given by a sum over all presynaptic neurons

$$J_i(t) = \sum_{j=0}^{N-1} w_{ij}\, s_j(t) \tag{2}$$

where wij sj(t) describes the total activation of synaptic input from the presynaptic neuron j onto neuron i. The maximal strength wij of recurrent excitatory-to-excitatory connections is chosen to be local in the angular arrangement of neurons, such that connections are strongest to nearby excitatory neurons (Fig 1A, red lines). The momentary input also depends on the synaptic activation variables sj, to be defined below. Finally, connections to and from inhibitory neurons are assumed to be uniform and global (all-to-all) (Fig 1A, blue lines), thereby providing non-selective inhibitory input Jinh to excitatory neurons.
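The structure described by Eqs (1) and (2) can be sketched in a few lines. This is a minimal illustration, not the network used in the paper: the evenly spaced positions, the Gaussian weight profile (`w_max`, `sigma`), the rectified-linear stand-in for F, and the `gain` value are all assumptions chosen for readability.

```python
import numpy as np

def ring_positions(n):
    """Evenly spaced angular positions on [-pi, pi) (assumed layout)."""
    return 2.0 * np.pi * np.arange(n) / n - np.pi

def ring_distance(a, b):
    """Signed angular difference a - b, wrapped to [-pi, pi)."""
    return (a - b + np.pi) % (2.0 * np.pi) - np.pi

def local_weights(theta, w_max=1.0, sigma=0.5):
    """Assumed Gaussian profile: E-E weights are strongest between nearby neurons."""
    d = ring_distance(theta[:, None], theta[None, :])
    return w_max * np.exp(-0.5 * (d / sigma) ** 2)

def rates(w, s, j_inh, gain=10.0):
    """J_i = sum_j w_ij s_j, followed by an assumed rectified-linear F."""
    j_exc = w @ s
    return gain * np.maximum(j_exc - j_inh, 0.0)

theta = ring_positions(64)
w = local_weights(theta)
# Hypothetical synaptic activations forming a bump centered at position 0.
s = np.exp(-0.5 * (ring_distance(theta, 0.0) / 0.3) ** 2)
phi = rates(w, s, j_inh=1.0)
```

Uniform inhibition enters only through the scalar `j_inh`, which is why it silences neurons far from the bump without shifting the position of the peak.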

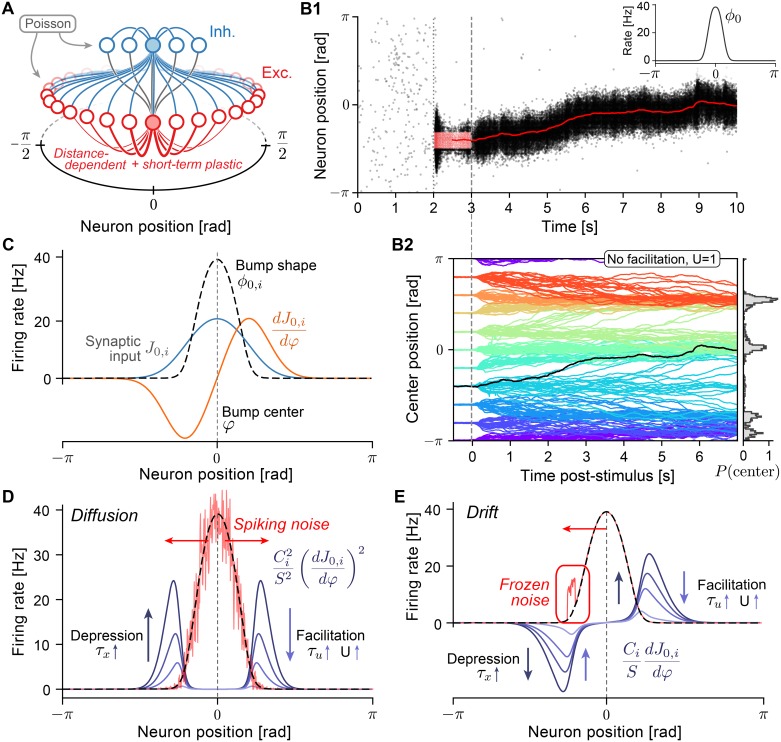

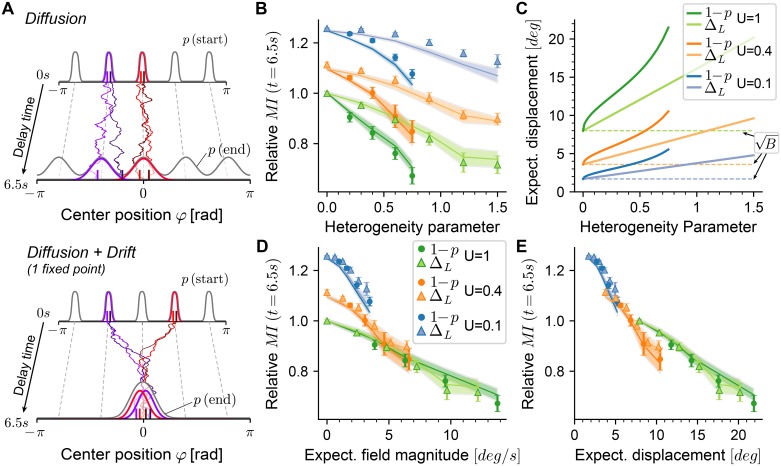

Fig 1. Drift and diffusion in ring-attractor models with short-term plasticity.

A Excitatory (E) neurons (red circles) are distributed on a ring with coordinates in [−π, π]. Excitatory-to-excitatory (E-E) connections (red lines) are distance-dependent, symmetric, and subject to short-term plasticity (facilitation and depression, see Eq (3)). Inhibitory (I) neurons (blue circles) project to all E and I neurons (blue lines) and receive connections from all E neurons (gray lines). Only outgoing connections from shaded neurons are displayed. In simulations with integrate-and-fire neurons, each neuron also receives noisy excitatory spike input generated by independent homogeneous Poisson processes. B1 Example simulation: E neurons fire asynchronously and irregularly at low rates until (dotted line) a subgroup of E neurons is stimulated (external cue), causing them to spike at elevated rates (red dots, input was centered at 0, starting at t = 2s for 1s). During and after (dashed line) the stimulus, a bump state of elevated activity forms and sustains itself after the external cue is turned off. The spatial center of the population activity is estimated from the momentary firing rates (red line, plotted from t = 2.5s onward). Inset: Activity profile in the bump state, centered at 0. B2 Center positions of 20 repeated spiking simulations for 10 different initial cue positions each for a network with short-term depression (U = 1, τx = 150ms). Random E-E connections (with connection probability p = 0.5) lead to directed drift in addition to diffusion. Right: Normalized histogram (200 bins) of final positions at time t = 13.5s. C Illustration of quantities used in theoretical calculations. Neurons in the bump fire at rates ϕ0,i (dashed black line, compare to B1, inset) due to the steady-state synaptic input J0,i (blue line). Movement of the bump center causes a change of the synaptic input (orange line). D Diffusion along the attractor manifold is calculated (see Eq (5)) as a weighted sum of the neuronal firing rates in the bump state (dashed black line). Spiking noise (red line) is illustrated as a random deviation from the mean rate with variance proportional to the rate. The symmetric weighting factors (blue lines, shown for varying U) are non-zero at the flanks of the firing rate profile. Stronger short-term depression and weaker facilitation increase the magnitude of weighting factors. E Deterministic drift is calculated as a weighted sum (see Eq (7)) of systematic deviations of firing rates from the bump state (frozen noise): a large positive firing rate deviation in the left flank (red line) will cause movement of the center position to the left (red arrow) because the weighting factors (blue lines, shown for varying U) are asymmetric.

As a model of STP, we assume that excitatory-to-excitatory connections are subject to short-term facilitation and depression, which we implemented using a widely adopted model of short-term synaptic plasticity [49]. The outgoing synaptic activations sj of neuron j are modeled by the following system of ordinary differential equations:

| (3) |

The synaptic time scale τs governs the decay of the synaptic activations. The timescale of recovery τx is the main parameter of depression. While the recovery from facilitation is controlled by the timescale τu, the parameter 0 < U ≤ 1 controls the baseline strength of unfacilitated synapses as well as the timescale of their strengthening. For fixed τu, we consider smaller values of U to lead to a “stronger” effect of facilitation, and take U = 1 as the limit of non-facilitating synapses.
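To make the roles of τs, τx, τu and U concrete, the sketch below integrates one commonly used rate-based form of this family of STP models for a single synapse driven at a constant rate. The specific equations in `stp_step` are an assumption (a standard Tsodyks-Markram-style formulation) and may differ in detail from Eq (3); the default parameter values and the forward-Euler scheme are likewise illustrative.

```python
def stp_step(s, u, x, rate, dt, U=0.2, tau_u=0.65, tau_x=0.15, tau_s=0.1):
    """One forward-Euler step of an ASSUMED rate-based STP model.

    s: synaptic activation, u: utilization (facilitation), x: resources (depression).
    Times are in seconds, the presynaptic rate in Hz.
    """
    ds = -s / tau_s + u * x * rate
    du = -(u - U) / tau_u + U * (1.0 - u) * rate
    dx = -(x - 1.0) / tau_x - u * x * rate
    return s + dt * ds, u + dt * du, x + dt * dx

def simulate_stp(rate, t_end=5.0, dt=1e-4, **params):
    """Drive one synapse at a constant rate, starting from rest (s=0, u=U, x=1)."""
    s, u, x = 0.0, params.get("U", 0.2), 1.0
    for _ in range(int(t_end / dt)):
        s, u, x = stp_step(s, u, x, rate, dt, **params)
    return s, u, x

# With U = 1 the utilization u never leaves 1, i.e. the synapse does not facilitate.
s_fac, u_fac, x_fac = simulate_stp(20.0, U=0.2)
s_dep, u_dep, x_dep = simulate_stp(20.0, U=1.0)
```

In this assumed form, a smaller U gives the utilization more room to grow above its baseline during sustained firing, which matches the convention in the text that smaller U means stronger facilitation.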

As a reference implementation of this model, we simulated networks of spiking conductance-based leaky-integrate-and-fire (LIF) neurons with (spike-based) short-term plastic synaptic transmission (Fig 1B1, see Spiking network model in Materials and methods for details). For these networks, under the assumption that neurons fire with Poisson statistics and the network is in a stationary state, neuronal firing can be approximated by the input-output relation F of Eq (1) [50, 51] (see Firing rate approximation in Materials and methods), which allows us to map the network into the general framework of Eqs (1) and (2). In the stationary state, synaptic depression will lead to a saturation of the synaptic activation variables sj at a constant value as firing rates increase. This nonlinear behavior enables spiking networks to implement bi-stable attractor dynamics with relatively low firing rates [46, 52] similar to saturating NMDA synapses [11, 47]. Since we found that without depression (for τx → 0) the bump state was not stable at low firing rates (in agreement with [52]), we always keep the depression timescale τx at positive values.
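The saturation of the synaptic activation with increasing firing rate can be checked with a short calculation. The closed-form steady state below follows from setting the time derivatives to zero in an assumed Tsodyks-Markram-style rate model (the same hypothetical form as a standard textbook formulation, not necessarily identical to Eq (3)); parameter defaults are illustrative.

```python
import numpy as np

def steady_state_activation(rate, U=1.0, tau_u=0.65, tau_x=0.15, tau_s=0.1):
    """Stationary synaptic activation of an ASSUMED rate-based STP model.

    rate in Hz, time constants in seconds.
    """
    u = U * (1.0 + tau_u * rate) / (1.0 + U * tau_u * rate)  # facilitation variable
    x = 1.0 / (1.0 + u * tau_x * rate)                       # depression variable
    return tau_s * u * x * rate

rates_hz = np.array([1.0, 10.0, 50.0, 200.0, 1000.0])
s_inf = steady_state_activation(rates_hz)
ceiling = 0.1 / 0.15  # tau_s / tau_x: the value approached at very high rates
```

Under these assumptions the activation grows almost linearly at low rates and levels off near `tau_s / tau_x`, so the ceiling rises without bound as τx approaches zero, consistent with the observation that depression is needed for a stable bump at low rates.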

Particular care was taken to ensure that networks display nearly identical bump shapes (similar to Fig 1B1, inset; see also S1 Fig), which required the re-tuning of network parameters (recurrent conductance parameters and the width of distance-dependent connections; see Optimization of network parameters in Materials and methods) for each combination of the STP parameters above.

Simulations with spiking integrate-and-fire neurons generally show a bi-stability between a non-selective state and a bump state. In the non-selective state, all excitatory neurons emit action potentials asynchronously and irregularly at roughly identical and low firing rates (Fig 1B1, left of dotted line). The bump state can be evoked by stimulating excitatory neurons localized around a given position by additional external input (Fig 1B1, red dots). After the external cue is turned off, a self-sustained firing rate profile (“bump”) emerges (Fig 1B1, right of dashed line, and inset) that persists until the network state is again changed by external input. For example, a short and strong uniform excitatory input to all excitatory neurons causes a transient increase in inhibitory feedback that is strong enough to return the network to the uniform state [11].

During the bump state, fast fluctuations in the firing of single neurons transiently break the perfect symmetry of the firing rate profile and introduce small random displacements along the attractor manifold, which become apparent as a random walk of the center position. If the simulation is repeated for several trials, the bump has the same shape in each trial, but information on the center position is lost in a diffusion-like process. We additionally included varying levels of biologically plausible sources of heterogeneity (frozen noise) in our networks: random connectivity between excitatory neurons (E-E) and heterogeneity of the single neuron properties of the excitatory population [36], realized as a random distribution of leak reversal potentials. Heterogeneities make the bump drift away from its initial position in a directed manner. For example, the bump position in the randomly connected (p = 0.5) network of Fig 1B1 shows a clear upwards drift towards center positions around 0. Repeated simulations of the same attractor network with bumps initialized at different positions provide a more detailed picture of the combined drift and diffusion dynamics: bump center trajectories are systematically biased towards a few stable fixed points (Fig 1B2) around which they are distributed for longer simulation times (histogram in Fig 1B2, t = 13.5s). The theory developed in this paper aims at analyzing the above phenomena of drift and diffusion of the bump center.
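Tracking the bump center requires an estimator that respects the ring topology. One common choice, shown here as an assumption since the estimator used for the simulations is specified in Materials and methods, is the population vector: the angle of the rate-weighted sum of unit vectors pointing at each neuron's position.

```python
import numpy as np

def bump_center(rates, theta):
    """Population-vector estimate of the bump center, returned in (-pi, pi]."""
    z = np.sum(rates * np.exp(1j * theta))
    return float(np.angle(z))

theta = 2.0 * np.pi * np.arange(128) / 128 - np.pi
# Hypothetical bump-shaped rate profile centered at 1.0 rad.
example_rates = np.exp(4.0 * np.cos(theta - 1.0))
center = bump_center(example_rates, theta)
```

Because the average is taken on the unit circle rather than on the angles themselves, a bump that straddles the ±π boundary is decoded correctly.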

Theory of diffusion and drift with short-term plasticity

To untangle the observed interplay between diffusion and drift and investigate the effects of short-term plasticity, we derived a theory that reduces the microscopic network dynamics to a simple one-dimensional stochastic differential equation for the bump state. The theory yields analytical expressions for diffusion coefficients and drift fields that depend on short-term plasticity parameters, the shape of the firing rate profile of the bump, and the neuron model chosen to implement the attractor.

First, we assume that the system of Eq (3) together with the network Eqs (1) and (2) has a 1-dimensional manifold of meta-stable states, i.e. the network is a ring-attractor network as described in the introduction. This entails that the network dynamics permit the existence of a family of solutions that can be described as a self-sustained and symmetric bump of firing rates ϕ0,i(φ) = F(J0,i(φ)) with corresponding inputs J0,i(φ) (for 0 ≤ i < N). Importantly, the center φ of the bump can be located at any arbitrary position on the ring. For example, if ϕ0,i(0) is a solution with input J0,i(0), then the same firing rate profile shifted along the ring to any other center φ is also a solution, with the correspondingly shifted input. This solution is illustrated in Fig 1C for a bump centered at φ = 0. Second, we assume that the number N of excitatory neurons is large (N → ∞), such that we can think of the possible positions φ as a continuum. Third, we assume that network heterogeneities are small enough to capture their effect as a linear (first order) perturbation to the stable bump state. Our final assumption is that neuronal firing is noisy, with spike trains following Poisson statistics, and that we are able to replace the shot-noise of Poisson spiking by white Gaussian noise with the same mean and autocorrelation, similar to earlier work [39, 53]; see Diffusion in Materials and methods, and Discussion. Under these assumptions, we are able to reduce the network dynamics to a one-dimensional Langevin equation, describing the dynamics of the center φ(t) of the firing rate profile (see Analysis of drift and diffusion with STP in Materials and methods):

$$\dot{\varphi}(t) = \sqrt{B}\,\eta(t) + A(\varphi) \tag{4}$$

Here, η(t) is white Gaussian noise with zero mean and correlation function 〈η(t)η(t′)〉 = δ(t − t′).
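A one-dimensional Langevin equation with a diffusion strength B and a drift field A(φ) can be integrated numerically with the Euler-Maruyama scheme. The sketch below is one straightforward way to do so; the step size, the wrapping to [−π, π), and the example drift field are assumptions made for illustration.

```python
import numpy as np

def wrap(phi):
    """Map angles onto the ring [-pi, pi)."""
    return (phi + np.pi) % (2.0 * np.pi) - np.pi

def simulate_center(phi0, drift, B, t_end, dt=1e-3, n_trials=1, seed=0):
    """Euler-Maruyama integration of d(phi) = A(phi) dt + sqrt(B) dW on a ring.

    drift: callable returning A(phi) for an array of positions.
    Returns an array of shape (n_steps + 1, n_trials).
    """
    rng = np.random.default_rng(seed)
    n_steps = int(round(t_end / dt))
    phi = np.full(n_trials, float(phi0))
    traj = np.empty((n_steps + 1, n_trials))
    traj[0] = phi
    for k in range(n_steps):
        noise = rng.standard_normal(n_trials)
        phi = wrap(phi + drift(phi) * dt + np.sqrt(B * dt) * noise)
        traj[k + 1] = phi
    return traj

# Hypothetical drift field with stable fixed points at 0 and at +/- pi.
example = simulate_center(0.5, lambda p: -0.1 * np.sin(2.0 * p),
                          B=0.01, t_end=1.0, n_trials=5)
```

With the drift set to zero, the variance of the final positions across trials grows as B times the elapsed time, which provides a quick sanity check of the noise scaling `sqrt(B * dt)`.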

The first term is diffusion characterized by a diffusion strength B, which describes the random displacement of bump center positions due to fluctuations in neuronal firing. For A(φ) = 0 this term causes diffusive displacement of the center φ(t) from its initial position φ(t0), with a mean (over realizations) squared displacement of positions 〈[φ(t) − φ(t0)]²〉 = B ⋅ (t − t0) that, during an initial phase, increases linearly with time [14, 54, 55], before saturating due to the circular domain of possible center positions [39]. Our theory shows (see Diffusion in Materials and methods) that the coefficient B can be calculated as a weighted sum over the neuronal firing rates (Fig 1D)

$$B = \sum_{i=0}^{N-1} \left(\frac{C_i}{S}\,\frac{dJ_{0,i}}{d\varphi}\right)^{2} \phi_{0,i} \tag{5}$$

where dJ0,i/dφ is the change of the input to neuron i under shifts of the center position (Fig 1C, orange line), and S is a normalizing constant that also tends to increase with the synaptic time constant τs.

The analytical factors Ci express the spatial dependence of the diffusion coefficient on the short-term plasticity parameters through

| (6) |

Note that in Brownian motion, the diffusion constant is usually defined as D = B/2.

The dependence of the single summands in Eq (5) on short-term plasticity parameters is visualized in Fig 1D, where we see that: a) due to the squared spatial derivative of the bump shape and the squared factors Ci/S, the important contributions to the sum arise primarily from the flanks of the bump; b) for a fixed bump shape, summands increase with stronger short-term depression (larger τx) and decrease with stronger short-term facilitation (smaller U, larger τu).
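The weighted sum for the diffusion strength can be evaluated directly once the bump shape is known. The sketch below assumes the form B = Σi (Ci/S · dJ0,i/dφ)² ϕ0,i suggested by the description above (squared derivative, squared factor Ci/S, weighted by the firing rate); the factors `c` and the constant `s_norm` are taken as given inputs because their expressions are not reproduced here, and the Gaussian bump is purely illustrative.

```python
import numpy as np

def diffusion_strength(c, s_norm, dj_dphi, phi0):
    """Weighted sum over firing rates (ASSUMED form of the diffusion strength).

    c: per-neuron STP factors C_i, s_norm: normalization S,
    dj_dphi: change of each neuron's input under a shift of the bump center,
    phi0: steady-state firing rates in the bump state (Hz).
    """
    weights = (np.asarray(c) / s_norm * np.asarray(dj_dphi)) ** 2
    return float(np.sum(weights * np.asarray(phi0)))

# Illustrative Gaussian bump centered at 0 (all shapes and values hypothetical).
theta = 2.0 * np.pi * np.arange(64) / 64 - np.pi
j0 = np.exp(-0.5 * (theta / 0.5) ** 2)
dj_dphi = theta / 0.5 ** 2 * j0          # derivative of J0(theta - phi) w.r.t. phi at phi = 0
phi0 = 40.0 * j0
summands = (dj_dphi / 5.0) ** 2 * phi0   # per-neuron contributions for C_i = 1, S = 5
B = diffusion_strength(np.ones(64), 5.0, dj_dphi, phi0)
```

Inspecting `summands` shows the point made in the text: the contribution vanishes at the bump center, where the derivative is zero, and far from the bump, where the rate is zero, leaving the flanks as the dominant source of diffusion.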

The second term in Eq (4) is the drift field A(φ), which describes deterministic drifts due to the inclusion of heterogeneities. For heterogeneity caused by variations in neuronal reversal potentials and random network connectivity, we calculate (see Frozen noise in Materials and methods) systematic deviations Δϕi(φ) of the single neuronal firing rates from the steady-state bump shape that depend on the current position φ of the bump center. In Drift in Materials and Methods, we show that the drift field is then given by a weighted sum over the firing rate deviations:

$$A(\varphi) = \sum_{i=0}^{N-1} \frac{C_i}{S}\,\frac{dJ_{0,i}}{d\varphi}\,\Delta\phi_i(\varphi) \tag{7}$$

with weighting factors depending on the spatial derivative of the bump shape and the parameters of the synaptic dynamics through the same factors Ci/S. This is illustrated in Fig 1E: in contrast to Eq (5), summands are now asymmetric with respect to the bump center, since the spatial derivative is not squared.
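The asymmetry of the drift weighting can be illustrated numerically. The sketch assumes the form A = Σi (Ci/S) · dJ0,i/dφ · Δϕi implied by the description above; the factors `c`, the constant `s_norm`, the Gaussian bump, and the two perturbations are hypothetical inputs chosen to show the sign behavior.

```python
import numpy as np

def drift_field(c, s_norm, dj_dphi, delta_phi):
    """Weighted sum over firing-rate deviations (ASSUMED form of the drift)."""
    return float(np.sum(np.asarray(c) / s_norm * np.asarray(dj_dphi)
                        * np.asarray(delta_phi)))

theta = 2.0 * np.pi * np.arange(64) / 64 - np.pi
j0 = np.exp(-0.5 * (theta / 0.5) ** 2)        # illustrative bump input centered at 0
dj_dphi = theta / 0.5 ** 2 * j0               # derivative of J0(theta - phi) w.r.t. phi at phi = 0

# Extra firing on the left flank only, versus a perturbation symmetric about the center.
left_flank = np.exp(-0.5 * ((theta + 0.5) / 0.1) ** 2)
a_left = drift_field(np.ones(64), 1.0, dj_dphi, left_flank)
a_symmetric = drift_field(np.ones(64), 1.0, dj_dphi, j0)
```

With these conventions `a_left` is negative, i.e. excess firing in the left flank moves the center to the left as in Fig 1E, while a perturbation that is symmetric about the center produces essentially no drift.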

Analytical considerations

To calculate the diffusion and drift terms of the last section, we assume the number of neurons N to be large enough to treat the center position φ as continuous: this allows us (similar to [39]) to derive projection vectors (see Projection of dynamics onto the attractor manifold in Materials and methods) that yield the dynamics of the center position. However, the actual projection yields sums over the system size N, whose scaling we made explicit (see System size scaling in Materials and methods). For the diffusion strength (cf. Eq (5)) we find a scaling as 1/N, in agreement with earlier work [11, 14, 36, 39, 46]. For drift fields caused by random connectivity, we find a leading-order scaling that depends on both the connectivity parameter p and the system size N, whereas drift fields due to heterogeneity of leak potentials (and other heterogeneous single-neuron parameters) follow their own scaling with N (see System size scaling in Materials and methods for the expressions), both in accordance with earlier results [16, 36, 38, 46].

In addition to reproducing the previously known scaling with the system size N, our theory exposes the scaling of both drift and diffusion with the parameters τx, τu, and U of short-term depression and facilitation via the analytical pre-factors Ci/S appearing in Eqs (5) and (7). Our result extends the calculation of the diffusion constant [39] to synaptic dynamics with short-term plasticity: in the limiting case of no facilitation and depression (U → 1, τx → 0ms), the pre-factor reduces to Ci = 1 and the normalization factor S simplifies to a sum over neurons that involves the derivative of the firing rate of neuron i at its steady-state input J0,i. For static synapses we thereby recover the known result for diffusion [39, Eq. S18], but also add an analogous relation for the drift. Our approach relies on the existence of a stationary bump state (which is stable for large noise-free homogeneous networks), around which we calculate drift and diffusion as perturbations. Following earlier work [11, 50, 52], we use in our simulations with spiking integrate-and-fire neurons a slow synaptic time constant (τs = 100ms) as an approximation of recurrent (NMDA mediated) excitation. While our theory captures the effects of changing this time constant τs in the pre-factors Ci/S, we did not check in simulations whether the bump state remains stable and whether our theory remains valid for very short synaptic time constants τs.

Finally, two limiting cases are worth highlighting. First, for strong facilitation (U → 0) the pre-factors Ci/S approach a limiting form, indicating that (i) this limit will leave residual drift and diffusion which (ii) will both be controlled by the time constants for facilitation (τu) and synaptic transmission (τs), with no dependence upon depression. Second, for vanishing facilitation (U → 1 and τu → 0) we find that the normalization factor S will tend to zero if the depression time constant τx is increased to a finite value τx,c. Through the pre-factors Ci/S this, in turn, yields exploding diffusion and drift terms (see S8 Fig). While this is a general feature of bump systems with short-term depression, the exact value of the critical time constant τx,c depends on the firing rates and neural implementation of the bump state (see section 6 in S1 Text): for the spiking network investigated here, we find a critical time constant τx,c = 223.9ms (see S8 Fig). In networks with both facilitation and depression, the critical τx,c increases as facilitation becomes stronger (see S8 Fig).

Prediction of continuous attractor dynamics with short-term plasticity

To demonstrate the accuracy of our theory, we chose random connectivity as a first source of frozen variability. Random connectivity was realized in simulations by retaining only a random fraction 0 < p ≤ 1 (connection probability) of excitatory-to-excitatory (E-E) connections. The uniform connections from and to inhibitory neurons are taken as all-to-all, since making these random or sparse would have only indirect effects on the dynamics of the bump center positions.
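Retaining a random fraction p of the E-E connections amounts to applying a fixed Bernoulli mask to the weight matrix. The helper below is a minimal sketch of that step; whether and how the surviving weights are rescaled is not stated in this section, so none is applied here, and the function name and seed handling are hypothetical.

```python
import numpy as np

def sparsify(w, p, seed=0):
    """Keep each connection independently with probability p (frozen noise).

    The same seed reproduces the same instantiation of random connectivity.
    No rescaling of the surviving weights is applied (assumption).
    """
    rng = np.random.default_rng(seed)
    mask = rng.random(w.shape) < p
    return w * mask

w_full = np.ones((200, 200))
w_half = sparsify(w_full, p=0.5, seed=3)
```

Because the mask is drawn once and then held fixed, it breaks the rotational symmetry of the weights permanently, which is what distinguishes this frozen noise from the trial-to-trial variability of spiking.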

Our theory accurately predicts the drift-fields A(φ) (see Eq (7)) induced by frozen variability in networks with short-term plasticity (Fig 2). Briefly, for each neuron 0 ≤ i < N, we treat each realization of frozen variability as a perturbation Δi around the perfectly symmetric system and use an expansion to first order of the input-output relation F to calculate the resulting changes in firing rates (see Frozen noise for details):

| (8) |

The resulting terms are then used in Eq (7) to predict the magnitude of the drift field A(φ) for any center position φ, which will, importantly, depend on STP parameters. The same approach can be used to predict drift fields induced by heterogeneous single neuron parameters [36] (see next sections) and additive noise on the E-E connection weights [16, 38].
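The first-order expansion can be illustrated with a toy input-output relation. In this sketch the perturbation is treated as a small additive change `delta` of the input and F is replaced by a softplus function; both choices are assumptions for illustration and are not the relation used for the spiking network.

```python
import numpy as np

def rate_function(j):
    """Assumed smooth input-output relation (softplus), standing in for F."""
    return np.log1p(np.exp(j))

def linearized_deviation(j0, delta, eps=1e-6):
    """First-order estimate of the rate change: F'(J0) * delta.

    The slope is obtained by a central finite difference.
    """
    slope = (rate_function(j0 + eps) - rate_function(j0 - eps)) / (2.0 * eps)
    return slope * delta

j0 = np.linspace(-2.0, 2.0, 9)
small = np.full(9, 0.01)
large = np.full(9, 0.5)
err_small = np.max(np.abs(rate_function(j0 + small) - rate_function(j0)
                          - linearized_deviation(j0, small)))
err_large = np.max(np.abs(rate_function(j0 + large) - rate_function(j0)
                          - linearized_deviation(j0, large)))
```

The error of the linear estimate grows roughly quadratically with the size of the perturbation, which is the generic reason a first-order theory loses accuracy as heterogeneities become stronger.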

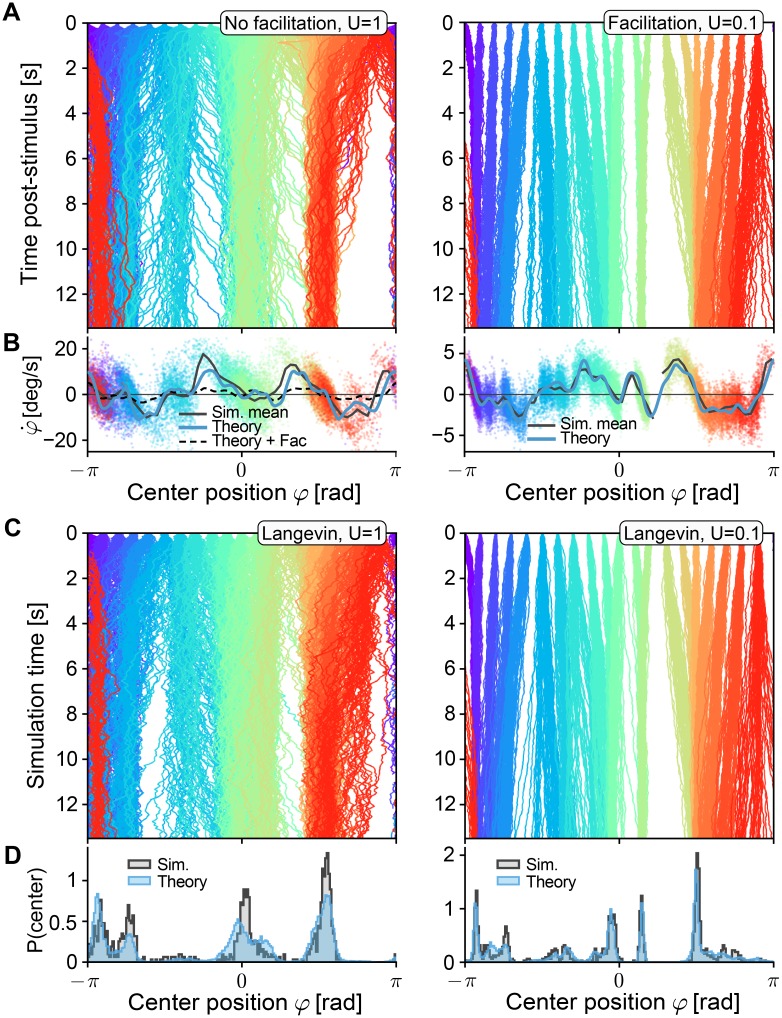

Fig 2. Drift field predictions for varying short-term facilitation.

All networks have the same instantiation of random connectivity (p = 0.5), similar to Fig 1B1. A Centers of excitatory population activity for 50 repetitions of 13.5s delay activity, for 20 different positions of initial cues (cue is turned off at t = 0) colored by position of the cues. Left: no facilitation (U = 1). Right: with facilitation (U = 0.1). B Drift field as a function of the bump position. The theoretical prediction (blue line, see Eq (7)) of the drift field is compared to velocity estimations along the trajectories shown in A, colored by the line they were estimated from. The thick black line shows the binned mean of data points in 60 bins. Left: no facilitation (U = 1); for comparison, the theoretical prediction for the case U = 0.1 is plotted as a thin dashed line. Right: with facilitation (U = 0.1). C Trajectories under the same conditions as in A, but obtained by forward-integrating the one-dimensional Langevin equation, Eq (4). D Normalized histograms of final positions at time t = 13.5s for data from spiking simulations (gray areas, data from A) and forward solutions of the Langevin equations (blue areas, data from C). Other STP parameters were: τu = 650ms, τx = 150ms.

We first simulated spiking networks with only short-term depression and without facilitation (Fig 2A, left, same network as in Fig 1B1), for one instantiation of random (p = 0.5) connectivity. Numerical estimates of the drift in spiking simulations (by measuring the displacement of bumps over time as a function of their position, see Spiking simulations in Materials and methods for details) yielded drift-fields in good agreement with the theoretical prediction (Fig 2B, left). At points where the drift field prediction crosses from positive to negative values (e.g. Fig 2B, left), we expect stable fixed points of the center position dynamics in agreement with simulation results, which show trajectories converging to these points. Similarly, unstable fixed points (negative-to-positive crossings) can be seen to lead to a separation of trajectories (e.g. Fig 2A, left). In regions where the positional drifts are predicted to lie close to zero (e.g. Fig 2A, left, φ = 0) the effects of diffusive dynamics are more pronounced. Finally, numerical integration of the full 1-dimensional Langevin equation Eq (4) with coefficients predicted by Eqs (5)–(7) produces trajectories with dynamics very similar to the full spiking network (Fig 2C, left). When comparing the center positions after 13.5s of delay activity between the full spiking simulation and the simple 1-dimensional Langevin system, we found very similar distributions of final positions (Fig 2D, left, compare to Fig 1B2, histogram). Our theory thus produces an accurate approximation of the dynamics of center positions in networks of spiking neurons with STP, thereby reducing the complex dynamics of the whole network to a simple equation. It should be noted that, in regions with strong drift or steep negative-to-positive crossings, the numerically estimated drift-fields deviate from the theory due to under-sampling of these regions as trajectories move quickly through them, yielding fewer data points. In Short-term plasticity controls drift we additionally show that the theory, as it relies on a linear expansion of the effects of heterogeneities on the neuronal firing rates, generally tends to over-predict drift-fields as heterogeneities become stronger.
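The rule that positive-to-negative crossings of the drift field are stable fixed points and negative-to-positive crossings are unstable can be applied to any sampled drift field. The helper below is a small sketch; the function name, the linear interpolation of the crossing, and the sinusoidal example field are assumptions, and grid points where the field is exactly zero are not treated specially.

```python
import numpy as np

def classify_fixed_points(phi, a):
    """Locate sign changes of a sampled drift field a(phi).

    Returns (stable, unstable): lists of interpolated crossing positions.
    Positive-to-negative crossings attract trajectories, the reverse repel them.
    """
    stable, unstable = [], []
    for k in range(len(phi) - 1):
        if a[k] * a[k + 1] < 0.0:
            # Linear interpolation of the zero between two neighboring samples.
            root = phi[k] - a[k] * (phi[k + 1] - phi[k]) / (a[k + 1] - a[k])
            (stable if a[k] > 0.0 else unstable).append(float(root))
    return stable, unstable

phi_grid = np.linspace(-3.0, 3.0, 600)
stable, unstable = classify_fixed_points(phi_grid, -np.sin(2.0 * phi_grid))
```

For this example field the only attracting point inside the sampled interval is at 0, flanked by two repelling points near ±π/2, mirroring the convergence and separation of trajectories described above.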

Introducing strong short-term facilitation (U = 0.1) reduces the predicted drift fields (Fig 2B, left, dashed line), which resemble a scaled-down version of the drift-field for the unfacilitated case. We confirmed this theoretical prediction by simulations including facilitation (Fig 2A, right): the resulting drift fields show significant reduction of speeds (Fig 2B, right) while zero crossings remained similar to the unfacilitated network, similar to the results in [38]. Theoretical predictions of the drift fields with bump shapes extracted from these simulations again show an accurate prediction of the dynamics (Fig 2B, right). Thus, as before, forward integrating the simple 1-dimensional Langevin-dynamics yields trajectories (Fig 2C, right) highly similar to those of the full spiking network, with closely matching distributions of final positions (Fig 2D, right), indicative of a matching strength of diffusion. In summary, our theory predicts the effects of STP on the joint dynamics of diffusion and drift due to network heterogeneities, which we will show in detail in the next sections.

Short-term plasticity controls diffusion

To isolate the effects of STP on diffusion, we simulated networks without frozen noise for various STP parameters. For each combination of parameters, we simulated 1000 repetitions of 13.5s delay activity (after cue offset) distributed across 20 uniformly spaced initial cue positions (see Fig 3A for an example). From these simulations, the strength of diffusion was estimated by measuring the growth of variance (over repetitions) of the distance of the center position from its initial position as a function of time (see Spiking simulations in Materials and methods for details). For all parameters considered, this growth was well fit by a linear function (e.g. Fig 3A, inset), the slope of which we compared to the theoretical prediction obtained from the diffusion strength B (Eq (5)).
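The estimation procedure described above can be sketched as follows. This is a minimal illustration on synthetic, drift-free random walks rather than on spiking data; `B_true`, the number of repetitions and the time step are hypothetical. The sketch measures displacements from the first sample, whereas the analysis in the paper measures them from the position at t = 0.5s.

```python
import math
import random


def simulate_diffusion(B, n_reps, n_steps, dt, rng):
    """Drift-free random walks; returns the displacement from the start at every step."""
    trajectories = []
    for _ in range(n_reps):
        phi, traj = 0.0, [0.0]
        for _ in range(n_steps):
            phi += math.sqrt(B * dt) * rng.gauss(0.0, 1.0)
            traj.append(phi)
        trajectories.append(traj)
    return trajectories


def estimate_diffusion(trajectories, dt):
    """Slope of a least-squares line fitted to the mean squared displacement over time."""
    n_reps = len(trajectories)
    n_points = len(trajectories[0])
    times = [k * dt for k in range(n_points)]
    # Mean squared displacement across repetitions at each time point.
    msd = [sum(traj[k] ** 2 for traj in trajectories) / n_reps for k in range(n_points)]
    t_mean = sum(times) / n_points
    m_mean = sum(msd) / n_points
    cov = sum((t - t_mean) * (m - m_mean) for t, m in zip(times, msd))
    var = sum((t - t_mean) ** 2 for t in times)
    return cov / var


B_true = 0.02  # hypothetical diffusion strength in rad^2/s
rng = random.Random(0)
trajectories = simulate_diffusion(B_true, n_reps=1000, n_steps=100, dt=0.05, rng=rng)
B_est = estimate_diffusion(trajectories, dt=0.05)
```

With enough repetitions, `B_est` should lie close to `B_true`; bootstrapping over repetitions, as used for the error bars in Fig 3, would give a confidence interval for the slope.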

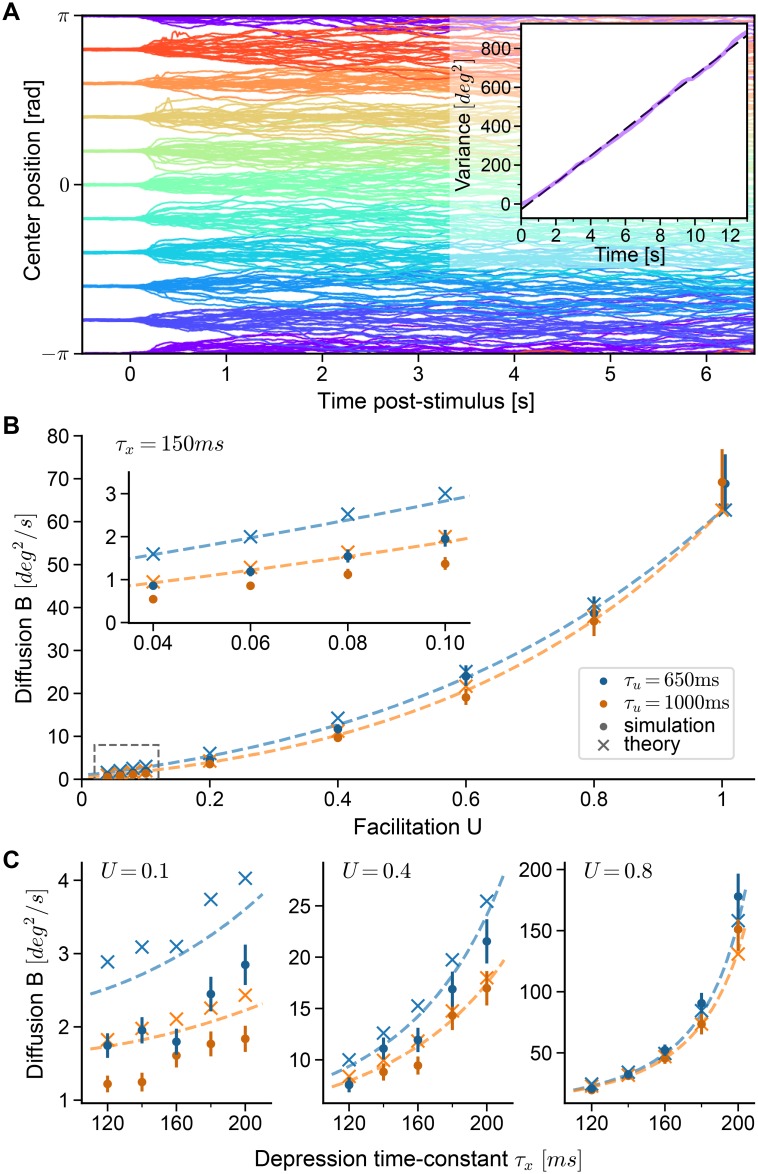

Fig 3. Diffusion on continuous attractors is controlled by short-term plasticity.

A Center positions of 20 repeated simulations of the reference network (U = 1, τx = 150ms) for 10 different initial cue positions each. Inset: Estimated variance of deviations of center positions φ(t) from their positions φ(0.5) at t = 0.5s (purple) as a function of time (〈[φ(t) − φ(0.5)]²〉), together with linear fit (dashed line). The slope of the dashed line yields an estimate of B (Eq (5)). B,C Diffusion strengths estimated from simulations (dots, error bars show 95% confidence interval, estimated by bootstrapping) compared to theory. Dashed lines show the theoretical prediction using firing rates measured from the reference network (U = 1, τx = 150ms), while crosses are theoretical estimates using firing rates measured for each set of STP parameters separately. B Diffusion strength as a function of facilitation parameter U. Inset shows zoom of region indicated in the dashed area in the lower left. Increasing the facilitation time constant from τu = 650ms (blue) to τu = 1s (orange) affects diffusion only slightly. In panels A and B, the depression time constant is τx = 150ms. C Diffusion strength as a function of depression time constant τx. Results for three different values of U are shown (note the change in scale). Colors indicate the two different values for the facilitation time constant also used in panel B.

We find that facilitation and depression control the amount of diffusion along the attractor manifold in an antagonistic fashion (Fig 3B and 3C). First, increasing facilitation by lowering the facilitation parameter U from its baseline U = 1 (no facilitation) towards U = 0, while keeping the depression time constant τx = 150ms fixed, decreases the measured diffusion strength by more than an order of magnitude (Fig 3B, dots). On the other hand, increasing the facilitation time constant τu from τu = 650ms to τu = 1000ms (Fig 3B, blue and orange dots, respectively) only slightly reduces diffusion. Our theory further predicts that increasing the facilitation time constant above τu = 1s will not lead to large reductions in the magnitude of diffusion (see S2 Fig). Second, we find that increasing the depression time constant τx for fixed U, thereby slowing down recovery from depression, leads to an increase of the measured diffusion (Fig 3C). More precisely, increasing the depression time constant from τx = 120ms to τx = 200ms leads only to slight increases in diffusion for strong facilitation (U = 0.1), but to a much larger increase for weak facilitation (U = 0.8).

For a comparison of these simulations with our theory, we used two different approaches. First, we estimated the diffusion strength by using the precise shape of the stable firing rate profile extracted separately for each network with different sets of parameters. This first comparison with simulations confirms that the theory closely describes the dependence of diffusion on short-term plasticity for each parameter set (Fig 3B, crosses). The observed effects could arise directly from changes in STP parameters for a fixed bump shape, or indirectly since STP parameters also influence the shape of the bump. To separate such direct and indirect effects, we used for a second comparison a theory with fixed bump shape, i.e. the bump shape measured in a “reference network” (U = 1, τx = 150ms) and extrapolated curves by changing only STP parameters in Eq (5). This leads to very similar predictions (Fig 3B, dashed lines) and supports the following conclusions: a) the diffusion to be expected in attractor networks with similar observable quantities (mainly, the bump shape) depends only on the short-term plasticity parameters; b) the bump shapes in the family of networks we have investigated are sufficiently similar to be approximated by measurement in a single reference network. It should be noted that the theory tends to slightly over-estimate the amount of diffusion, especially for small facilitation U (see Fig 3B and 3C left). This may be because slower bump movement decreases the firing irregularity of flank neurons, which deviates from the Poisson firing assumption of our theory (see Discussion). However, given the simplifying assumptions needed to derive the theory, the match to the spiking network is surprisingly accurate.

Short-term plasticity controls drift

Having established that our theory is able to predict the effect of STP on diffusion, as well as drift for a single instantiation of random connectivity, we wondered how different sources of heterogeneity (frozen noise) would influence the drift of the bump. We considered two sources of heterogeneity: first, random connectivity as introduced above, and second, heterogeneity of the leak reversal potentials of excitatory neurons, which are given by VL + ΔL, where ΔL is normally distributed with zero mean and standard deviation σL [36]. The resulting drift fields can be obtained by calculating the perturbations to the firing rates of neurons with Eq (8) (see Frozen noise in Materials and methods for details).

The theory developed so far allowed us to predict drift-fields for a given realization of frozen noise, controlled by the noise parameters p (for random connectivity) and σL (for heterogeneous leak reversal-potentials) (see S3 Fig for a comparison of predicted drift fields to those measured in simulations for varying STP parameters and varying strengths of frozen noises). We wondered whether we could take the level of abstraction of our theory one step further, by predicting the magnitude of drift fields from the frozen noise parameters only, independently of a specific realization. First, the expectation of drift fields under the distributions of the frozen noises vanishes for any given position: 〈A(φ)〉frozen = 0, where the expectation 〈.〉frozen is taken over both noise parameters. We thus turned to the expected squared magnitude of drift fields under the distributions of these parameters (see Squared field magnitude in Materials and methods for the derivation):

| (9) |

where s0,j is the steady-state synaptic activation. Here, we introduced the derivatives of the input-output relation with respect to the noise sources that appear in Eq (8): is the derivative with respect to the steady state synaptic input, and is the derivative with respect to the perturbation in the leak potential. In Squared field magnitude in Materials and Methods, we show that Eq (9) is independent of the center position φ, and can be estimated from simulations as the variance of the drift field across positions, averaged over an ensemble of network instantiations.

We defined the root of the expected squared magnitude of Eq (9) as the expected field magnitude:

$$\sqrt{\langle A^2 \rangle_{\text{frozen}}} \tag{10}$$

This quantity predicts the magnitude of the deviations of drift-fields from zero that are expected from the parameters that control the frozen noise. In analogy to the standard deviation for random variables, it predicts the standard deviation of the fields. To compare this quantity to simulations, we varied both heterogeneity parameters. First, the connectivity parameter p was varied between 0.25 and 1. Second, for heterogeneities in leak reversal-potentials, we chose values for the standard deviation σL of leak-reversal potentials between 0mV and 1.5mV, which led to a similar range of drift magnitudes as those of randomly connected networks. For each combination of heterogeneities and STP parameters (networks had either random connections or heterogeneous leaks) we then realized 18–20 networks, for which we simulated 400 repetitions of 6.5s of delay activity each (20 uniformly spaced positions of the initial cue). We then estimated the drift-field numerically by recording displacements of bump centers along their trajectories (as in Fig 2A and 2B) and measured the standard deviation of the resulting fields across all positions.
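The numerical estimate described above can be sketched as follows. This is a minimal illustration on synthetic Langevin trajectories, not the analysis code of the study: the drift field `true_drift`, the diffusion strength, the number of bins and the units (radians, seconds) are hypothetical, and the binning of displacements by position is one plausible reading of the procedure detailed in Materials and methods.

```python
import math
import random

TWO_PI = 2.0 * math.pi


def wrap(phi):
    """Map an angle (or an angular difference) to [-pi, pi)."""
    return (phi + math.pi) % TWO_PI - math.pi


def true_drift(phi):
    """Hypothetical drift field in rad/s used to generate test data."""
    return -0.1 * math.sin(phi)


def simulate(n_starts, n_steps, dt, B, rng):
    """Langevin trajectories from uniformly spaced initial positions."""
    trajectories = []
    for i in range(n_starts):
        phi = -math.pi + TWO_PI * i / n_starts
        traj = [phi]
        for _ in range(n_steps):
            phi = wrap(phi + true_drift(phi) * dt + math.sqrt(B * dt) * rng.gauss(0.0, 1.0))
            traj.append(phi)
        trajectories.append(traj)
    return trajectories


def estimate_drift_field(trajectories, dt, n_bins=20):
    """Average displacement per unit time, binned by the position at the start of each step."""
    sums, counts = [0.0] * n_bins, [0] * n_bins
    for traj in trajectories:
        for a, b in zip(traj[:-1], traj[1:]):
            k = min(int((a + math.pi) / TWO_PI * n_bins), n_bins - 1)
            sums[k] += wrap(b - a) / dt  # shortest signed displacement on the ring
            counts[k] += 1
    return [s / c if c else float("nan") for s, c in zip(sums, counts)]


def field_magnitude(field):
    """Standard deviation of the estimated field across populated position bins."""
    values = [v for v in field if not math.isnan(v)]
    mean = sum(values) / len(values)
    return math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))


dt = 0.01
rng = random.Random(2)
trajectories = simulate(n_starts=200, n_steps=100, dt=dt, B=1e-4, rng=rng)
field = estimate_drift_field(trajectories, dt)
centers = [-math.pi + TWO_PI * (k + 0.5) / 20 for k in range(20)]
magnitude = field_magnitude(field)
```

For a sinusoidal field of amplitude 0.1 the standard deviation across positions is close to 0.1/√2, which `magnitude` should approximately recover. Bins that trajectories cross quickly receive fewer samples, which is the under-sampling effect noted for regions of strong drift.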

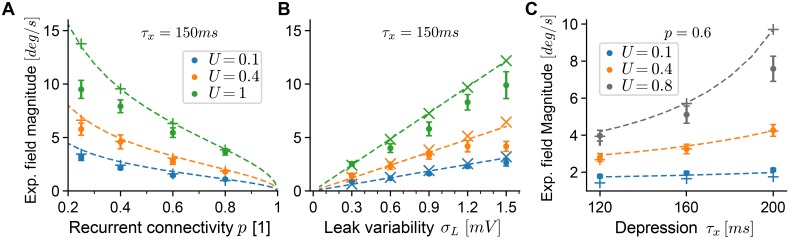

Similar to the analysis of diffusion above, we find that facilitation and depression exert antagonistic control over the magnitude of drift fields. In both simulations and theory, we find (Fig 4A and 4B) that the expected field magnitude decreases as the effect of facilitation is increased from unfacilitated networks (U = 1) through intermediate levels of facilitation (U = 0.4) to strongly facilitating networks (U = 0.1). Our theory predicts this effect surprisingly well, which we validated twofold (as for the diffusion magnitude). First, we used Eq (10) with all parameters and coefficients estimated from each spiking simulation separately (Fig 4A and 4B, plus-signs and crosses). Second, we extrapolated the theoretical prediction by using coefficients in Eq (9) from the unfacilitated reference network only (U = 1, τx = 150ms) but changed the facilitation and heterogeneity parameters (Fig 4A and 4B, dashed lines). The largest differences between the extrapolated and full theory are seen for U < 1 and randomly connected networks (p < 1), which we found to result from the fact that bump shapes for these networks tended to be slightly reduced under random and sparse connectivity (e.g. the top firing rate is reduced to ∼ 35Hz for U = 0.1, p = 0.25). Generally, as noise levels increase, our theory tends to over-estimate the squared magnitude of fields, since we rely on a linear expansion of perturbations to the firing rates to calculate fields (Eq (8)). Such deviations are expected as the magnitude of firing rate perturbations increases, and could be counter-acted by including higher-order terms. Since in the theory facilitation (and depression) only scales the firing rate perturbations (Eq (7)), these deviations can also be observed across facilitation parameters. Finally, we performed a similar analysis to investigate the effect of short-term depression on drift fields. Here, we varied the depression time constant τx for randomly connected networks with p = 0.6, by simulating networks with combinations of short-term plasticity parameters from U ∈ {0.1, 0.4, 0.8} and τx ∈ {120ms, 160ms, 200ms} (Fig 4C). We find that an increase of the depression time constant leads to an increased magnitude of drift fields, which again is well predicted by our theory.

Fig 4. Drift field magnitude is controlled by short-term plasticity.

A Expected magnitude of drift fields as a function of the sparsity parameter p of recurrent excitatory-to-excitatory connections. Dots are the standard deviation of fields estimated from 400 trajectories (see main text) of each network, averaged over 18–20 realizations for each noise parameter and facilitation setting (error bars show the 95% confidence interval of the mean). Theoretical predictions (dashed lines) are given by Eq (10) extrapolated from the reference network (U = 1, τx = 150ms). For validation, we also estimated Eq (10) with coefficients measured from each simulated network separately (plus signs). The depression time constant was τx = 150ms. B Same as in panel A, with heterogeneous leak-reversal potentials as the source of frozen noise. Validation predictions are plotted as crosses. C Same as in panels A,B but varying the depression time constant τx for a fixed level of frozen noise (random connectivity, p = 0.6). In all panels, the facilitation time constant was τu = 650ms.

Short-term plasticity controls memory retention

The theory developed in previous sections shows that diffusion and drift of the bump center φ are controlled antagonistically by short-term depression and facilitation. In a working memory setup, we can view the attractor dynamics as a noisy communication channel [56] that maps a set of initial positions φ(t = 0s) (time of the cue offset in the attractor network) to associated final positions φ(t = 6.5s), after a memory retention delay of 6.5s. We used the distributions of initial and (associated) final positions to investigate the combined impact of diffusion and drift on the retention of memories (Fig 5A). Because of diffusion, distributions of positions will widen over time, which degrades the ability to distinguish different initial positions of the bump center (Fig 5A, top). Additionally, directed drift of the dynamics will contract distributions of different initial positions around the same fixed points, making them essentially indistinguishable when read out (Fig 5A, bottom).

Fig 5. Short-term facilitation increases memory retention.

A Illustration of the effects of diffusion (top) and additional drift (bottom) on the temporal evolution of distributions of initial positions p(start) towards distributions of final positions p(end) over 6.5s of delay activity. The bump is always represented by its center position φ. Two peaks in the distribution of initial positions φ(0) and their corresponding final positions φ(6.5) are highlighted by colors (purple, red), together with example trajectories of the center positions. Top: Diffusion symmetrically widens the initial distribution. Bottom: Strong drift towards one single fixed point of bump centers (φ = 0) makes the origin of trajectories indistinguishable. B Normalized mutual information (MI, see text for details) of distributions of initial and final bump center positions in working memory networks for different STP parameters and heterogeneity parameters (blue: strong facilitation, see legend in panel D). Dots and triangles are average MI (18–20 realizations, error bars show 95% CI) obtained from spiking network simulations. Lines show average MI calculated from Langevin dynamics for the same networks, repetitions and realizations (see text, shaded area shows 95% CI). Heterogeneity parameters are σL (triangles, in units of mV) and 1 − p (circles), where p is the connection probability. C Expected displacement |Δφ|(1s) for the same networks as in panel B. Dashed lines indicate displacement induced by diffusion only (), solid lines show the total displacement (including displacement due to drift, calculated as the expected field magnitude ). D Same as panel B, with x-axis showing the expected field magnitude. E Same as panel B, with x-axis showing the expected displacement. In panels B-D, all STP parameters except U were kept constant at τu = 650ms, τx = 150ms.

As a numerical measure of the ability of such systems to retain memories over the delay period, we turned to mutual information (MI), which provides a measure of the amount of information contained in the readout position about the initially encoded position [57, 58]. To measure MI from simulations (see Mutual information measure in Materials and methods), we analyzed network simulations for varying short-term facilitation parameters (U) and magnitudes of frozen noises (p and σL) (same data set as Fig 4A and 4B). We recorded the center positions encoded in the network at the time of cue-offset (t = 0) and after 6.5s of delay activity, and used binned histograms (100 bins) to calculate discrete probability distributions of initial (t = 0) and final positions (t = 6.5). For each trajectory simulated in networks of spiking integrate-and-fire neurons, we then generated a trajectory starting at the same initial position by using the Langevin equation Eq (4) that describes the drift and diffusion dynamics of center positions. The MI calculated from the resulting distributions of final positions (again at t = 6.5) serves as the theoretical prediction for each network. As a reference, we used the spiking network without facilitation (U = 1, τu = 650ms, τx = 150ms) and no frozen noises (p = 1, σL = 0mV) and normalized the MI of all other networks (both for spiking simulations and theoretical predictions) with respect to the reference, yielding the measure of relative MI presented in Fig 5B–5E.
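A histogram-based MI estimate of this kind can be sketched as follows. This is a minimal plug-in estimator under stated assumptions: positions are angles in [−π, π), the 100 bins are uniform, and the two test cases (perfect retention and complete collapse onto one fixed point) as well as the number of repetitions are hypothetical. The estimator actually used in the study is specified in Materials and methods and may differ, for example in how finite-sample bias is handled.

```python
import math
from collections import Counter


def mutual_information(initial, final, n_bins=100):
    """Plug-in estimate (in bits) of the MI between binned initial and final positions."""

    def bin_index(phi):
        k = int((phi + math.pi) / (2.0 * math.pi) * n_bins)
        return min(max(k, 0), n_bins - 1)

    pairs = [(bin_index(a), bin_index(b)) for a, b in zip(initial, final)]
    n = len(pairs)
    joint = Counter(pairs)
    p_initial = Counter(i for i, _ in pairs)
    p_final = Counter(j for _, j in pairs)
    # Sum of p(i, j) * log2(p(i, j) / (p(i) * p(j))) over occupied bins.
    return sum(
        (c / n) * math.log2(c * n / (p_initial[i] * p_final[j]))
        for (i, j), c in joint.items()
    )


# 20 uniformly spaced cue positions, 50 repetitions each (hypothetical numbers).
cues = [-math.pi + 2.0 * math.pi * (k + 0.5) / 20 for k in range(20)]
initial = [c for c in cues for _ in range(50)]

# Perfect retention: every final position equals its initial position.
mi_perfect = mutual_information(initial, initial)
# Complete collapse: all bumps end at a single fixed point.
mi_collapsed = mutual_information(initial, [0.0] * len(initial))
# Relative MI, normalized to a reference (here the perfect-retention case).
relative_mi = mi_collapsed / mi_perfect
```

The two limiting cases bracket the behavior illustrated in Fig 5A: diffusion alone lowers the MI gradually, whereas strong drift towards a single fixed point removes all information about the initial position.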

We found that the relative MI decreased compared to the reference network as network heterogeneities were introduced (Fig 5B, green). This was expected, since directed drift caused by heterogeneities leads to a loss of information about initial positions. There were two effects of increased short-term facilitation (by decreasing the parameter U). First, diffusion was reduced, which was visible in a vertical shift of the relative MI for facilitated networks (Fig 5B, orange and blue, at 0 heterogeneity). Second, the effects of frozen noise decreased with increasing facilitation, which was visible in the slopes of the MI decrease (see also S4 Fig). The MI obtained by integration of the Langevin equations (see above) matched that of the simulations well (Fig 5B, lines). From earlier results, we expected the drift-fields to be slightly over-estimated by the theory as the heterogeneity parameters increase (Fig 4), which would lead to an under-estimation of MI. We did observe this here, although for U = 1 the effect was slightly counter-balanced by the under-estimated level of diffusion (cf. Fig 3B, right), which we expected to increase the MI. For networks with stronger facilitation (U = 0.1), we systematically over-estimated diffusion (cf. Fig 3, left), and therefore under-estimated MI.

Using our theory, we were able to simplify the functional dependence between MI, short-term plasticity, and frozen noise. Combining the effects of both diffusion and drift into a single quantity for each network, we replaced the field A(φ) by our theoretical prediction in Eq (4) and forward integrated the differential equation for a time interval Δt = 1s, to arrive at the expected displacement in 1s:

| (11) |

This quantity describes the expected absolute value of displacement of center positions during 1s: it increases as a function of the frozen noise distribution parameters (Fig 5C), but even in the absence of frozen noise it is nonzero due to diffusion. Plotting the MI data as a function of the first term only () shows that the MI curves collapse onto a single curve for each facilitation parameter (Fig 5D). Finally, plotting the MI data against |Δφ|(1s), we find that all data collapse onto nearly a single curve (Fig 5E). Thus, the effects of the two sources of frozen noise (corresponding to 〈A²〉frozen) and diffusion (corresponding to B) are unified into a single quantity |Δφ|(1s).

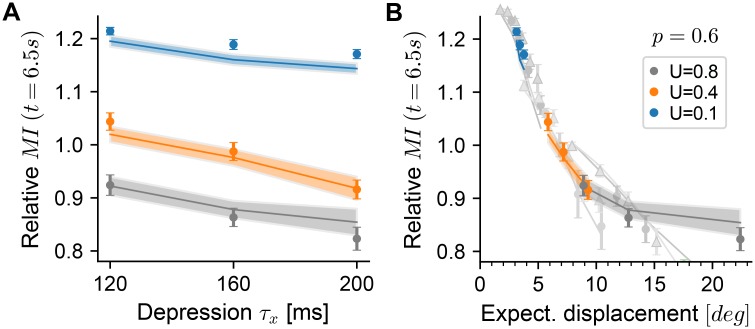

We performed the same analyses on a large set of network simulations with fixed random connectivity (p = 0.6) and varying STP parameters for both depression (τx) and facilitation (U) (same data set as in Fig 4C). Increasing the short-term depression time constant τx leads to decreased relative MI with a positive offset induced through stronger facilitation (Fig 6A, blue line). Calculating the expected displacement for these network configurations collapsed the data points mostly onto the same curve as earlier (Fig 6B). For strong depression combined with weak facilitation (τx = 200ms, U = 0.8), the drop-off of the relative MI saturates earlier, indicating that for these strongly diffusive networks the effect on MI may not be sufficiently captured by its relationship to |Δφ|(1s).

Fig 6. Short-term depression decreases memory retention.

A Same as Fig 5B, for network simulations with varying τx and U (see legend in panel B). MI is normalized to the same value as there. B Same as panel A, with x-axis showing the expected displacement. Gray data points and lines are the data plotted in Fig 5E. The facilitation time constant was kept constant at τu = 650ms.

Linking theory to experiments: Distractors and network size

The abstraction of our theory condenses the complex dynamics of bump attractors in spiking integrate-and-fire networks into a high-level description of a few macroscopic features, which in turn allows matching the theory to behavioral experiments. Here, we demonstrate how such quantitative links could be established using two different features: 1) the sensitivity of the working memory circuit to distractors, and 2) the stability of working memory expressed by the expected displacement. We stress that our model is a simplified description of biological circuits, in which several further sources of variability and also dynamical processes influencing displacement should be expected (see Discussion). Thus, at the current level of simplification, the results presented in this section should be seen as proofs of principle rather than quantitative predictions for a cortical setting.

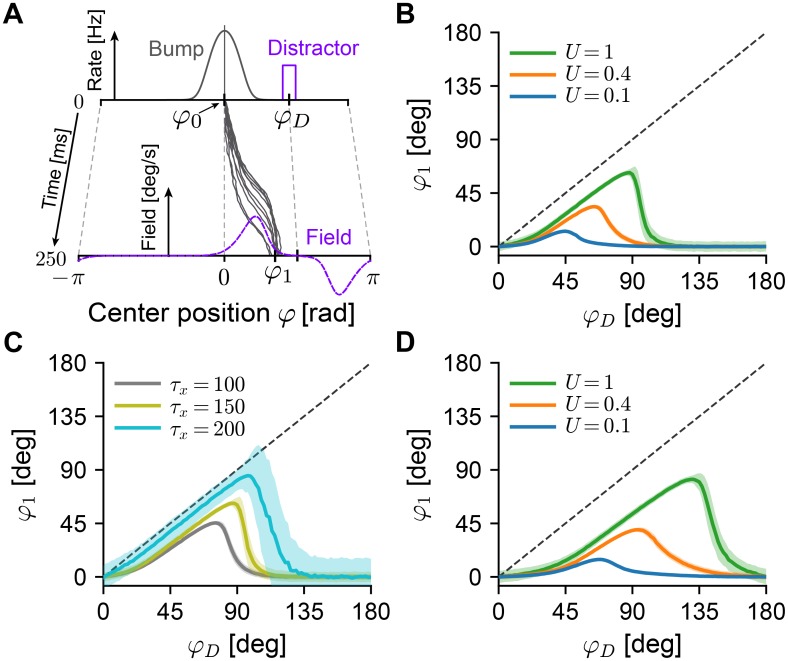

Predicting the sensitivity to distractor inputs

In a biological setting, drifts introduced by network heterogeneities (frozen noise) could be significantly reduced by (long-term) plasticity [36]. To measure the intrinsic stability of continuous attractor models, earlier studies [11, 47, 59] have proposed to use distractor inputs (Fig 7A): when a short external input centered around a position φD is provided to the network, the center position of an existing bump state will be biased towards the distracting input, with stronger biases appearing for closer distractors. In the context of our theory, we consider a weak distractor as an additional heterogeneity that induces drift. Therefore, the time scale of bump drift caused by distractor-induced heterogeneity enables us to link our theory to behavioral experiments [59].

Fig 7. Effect of short-term plasticity on distractor inputs.

A While a bump (“Bump”) is centered at an initial angle φ0 (chosen to be 0), additional external input causes neurons centered around the position φD to fire at elevated rates (“Distractor”). The theory predicts the shape and magnitude of the induced drift field (“Field”) and the mean bump center φ1 after 250ms of distractor input. Gray trajectories are example simulations of bump centers of the corresponding Langevin equation Eq (4). B Mean final positions φ1 of bump centers (1000 repetitions, shaded areas show 1 standard deviation) as a function of the distractor input location φD. Increased short-term facilitation (blue: strong facilitation, U = 0.1; orange: intermediate facilitation, U = 0.4; green: no facilitation U = 1) leads to less displacement due to the distractor input. Other STP parameters were kept constant at τu = 650ms, τx = 150ms. C Same as panel B, for three different depression time constants τx, while keeping U = 0.8, τu = 650ms fixed. D Same as panel B, with a broader bump half-width (σg = 0.8rad ≈ 45.8 deg). All other panels use the same bump half-width as in the rest of the study (σg = 0.5rad ≈ 28.7 deg) (see S1 Fig).

Our theory can readily yield quantitative predictions for the distractor paradigm. To accommodate distractor inputs in the theory, we assume that they cause some units i to fire at elevated rates ϕ0,i + Δϕi, which will introduce a drift field according to Eq (7) (Fig 7A, purple dashed line). The resulting dynamics (Eq (4)) of diffusion and drift during the presentation of the distractor input then allow us to calculate the expected shift of center positions as a function of all network parameters, including those of short-term plasticity. Repeating this paradigm for varying positions of the distractor inputs (see Distractor analysis in Materials and methods for details), our theory predicts that strong facilitation will strongly decrease both the effect and radial reach of distractor inputs (Fig 7B, blue), when compared to the unfacilitated system (Fig 7B, green), in qualitative agreement with simulation results involving a related (cell-intrinsic) stabilization mechanism [47]. Conversely, we predict that longer recovery from short-term depression tends to increase the sensitivity to distractors (Fig 7C). The total displacement caused by a distractor input is found by integrating the resulting dynamics of Eq (4) over the stimulus duration. As such, the magnitude of the displacement will increase both with the amplitude and the duration of the distractor input. Finally, our theory demonstrates that the bump shape, in particular the width of the bump, influences the radial reach of distractor inputs (Fig 7D).

Relating displacement to network size in working memory networks

The simple theoretical measure of expected displacement |Δφ|(1s) introduced in the last section can be related to behavioral experiments: a value of |Δφ|(1s) = 1.0 deg lies in the upper range of experimentally reported deviations due to diffusive and systematic errors in behavioral studies [60, 61]. What are the microscopic circuit compositions that can attain such a (high) level of working memory stability? In particular, since an increase in network size can reduce diffusion [11] and the effects of random heterogeneities [16, 36, 38, 46], we turned to the question: which network size would be needed to yield this level of stability in a one-dimensional continuous memory system?

To address the question of network size, we extended our theory to include the size N of the excitatory population as an explicit parameter (see System size scaling in Materials and methods for details). Using numerical coefficients in Eq (4) extracted from the spiking simulation of a reference network (U = 1, τx = 150ms and NE = 800), we extrapolated the theory by changing the system size N and short-term plasticity parameters. We then constrained parameters of our theory by published data (Table 1). Short-term plasticity parameters were based on two groups of strongly facilitating synapses found in a study of mammalian (ferret) prefrontal cortex [62]. The same study reported a general probability p = 0.12 of pyramidal cells to be connected. However, for pairs of pyramidal cells that were connected by facilitating synapses, the study found a high probability of reciprocal connections (prec = 0.44): thus if neuron A was connected to neuron B (with probability p), neuron B was connected to neuron A with high probability (prec), resulting in a non-random connectivity. To approximate this in the random connectivities supported by our theory, we evaluated a second, slightly elevated, level of random connectivity that has the same mean connection probability as the non-random connectivity with these additional reciprocal connections: p + p ⋅ prec = 0.1728. For the standard deviation of leak reversal-potentials σL, we used values measured in two studies [63, 64].

Table 1. Upper bounds on system-sizes for stable continuous attractor memory in prefrontal cortex.

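| STP parameters | Δφ(1s) | p | σL | Network size N |
|---|---|---|---|---|
| U = 0.17, τu = 563ms, τx = 242ms [62, E1b] | 1.0 deg [60, 61] | 0.12 [62] | 1.7mV [63, RS] | 79 504 |
| U = 0.17, τu = 563ms, τx = 242ms | 1.0 deg | 0.12 | 2.4mV [64, fa-RS] | 127 465 |
| U = 0.17, τu = 563ms, τx = 242ms | 1.0 deg | 0.1728 [62] | 1.7mV | 79 047 |
| U = 0.17, τu = 563ms, τx = 242ms | 1.0 deg | 0.1728 | 2.4mV | 127 205 |
| U = 0.17, τu = 563ms, τx = 242ms | 0.5 deg [60] | 0.12 | 2.4mV | 507 607 |
| U = 0.35, τu = 482ms, τx = 163ms [62, E1a] | 1.0 deg | 0.12 | 1.7mV | 102 292 |
| U = 0.35, τu = 482ms, τx = 163ms | 1.0 deg | 0.12 | 2.4mV | 163 896 |
| U = 0.35, τu = 482ms, τx = 163ms | 1.0 deg | 0.1728 | 1.7mV | 101 836 |
| U = 0.35, τu = 482ms, τx = 163ms | 1.0 deg | 0.1728 | 2.4mV | 163 638 |
| U = 0.35, τu = 482ms, τx = 163ms | 0.5 deg | 0.12 | 2.4mV | 653 350 |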

Theoretical predictions of Eq (11) optimized for the number of excitatory neurons N that are needed to achieve a given level of expected displacement |Δφ|(1s) under given parameters of short-term plasticity and frozen noises. RS: regular spiking pyramidal cells, fa-RS: fast-adapting regular spiking pyramidal cells.

The resulting theory makes quantitative predictions for combinations of network size N and all other parameters that yield the desired levels of working memory stability (Table 1, see also S5 Fig). Network sizes were all smaller than 10⁶ neurons, with values depending most strongly on the value of the facilitation parameter U and the magnitude of the leak reversal-potential heterogeneities σL. Since the expected field magnitude scales weakly () with the recurrent connectivity p, increasing p led only to comparatively small decreases in the predicted network sizes. Finally, we see that increasing the reliability of networks comes at a high cost: decreasing the expected displacement to |Δφ|(1s) = 0.5 deg [60] increases the required number of neurons by nearly a factor of 4 for both facilitation settings we investigated. Nevertheless, these network sizes still lie within anatomically reasonable ranges [65].
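The numbers quoted here can be checked directly against Table 1. The short sketch below only repeats arithmetic on values copied from the table and from the text; the dictionary layout and variable names are illustrative.

```python
# Network sizes N copied from Table 1 (p = 0.12, sigma_L = 2.4mV),
# keyed by synapse group and target displacement in degrees.
sizes = {
    "E1b": {1.0: 127_465, 0.5: 507_607},
    "E1a": {1.0: 163_896, 0.5: 653_350},
}

# Factor by which N grows when the target displacement is halved from 1.0 deg to 0.5 deg.
ratios = {name: n[0.5] / n[1.0] for name, n in sizes.items()}

# Elevated connection probability that accounts for reciprocal connections.
p, p_rec = 0.12, 0.44
p_elevated = p + p * p_rec

for name, ratio in ratios.items():
    print(f"{name}: N(0.5 deg) / N(1.0 deg) = {ratio:.2f}")
print(f"p + p * p_rec = {p_elevated:.4f}")
```

Both ratios fall just below 4, in line with the statement that halving the expected displacement requires nearly four times as many neurons.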

In summary, we have provided a proof of principle that the high-level description of our theory can be used to predict network sizes, by exposing features that can be constrained by experimental measurements. Given the simplifying assumptions of our models and the sources of variability that we could include at this stage, continuous attractor networks with realistic values for the strength of facilitation and depression of recurrent connections could achieve sufficient stability, even in the presence of biological variability.

Discussion

We presented a theory of drift and diffusion in continuous working memory models, exemplified on a one-dimensional ring attractor model. Our framework generalizes earlier approaches calculating the effects of fast noise by projection onto the attractor manifold [37, 39, 40] by including the effects of short-term plasticity (see [45] for a similar analysis for facilitation only). Our approach further extends earlier work on drift in continuous attractors with short-term plasticity [38] to include diffusion and the dynamics of short-term depression. Our theory predicts that facilitation makes continuous attractors robust against the influences of both dynamic noise (introduced by spiking variability) and frozen noise (introduced by biological variability) whereas depression has the opposite effect. We use this theory to provide, together with simulations, a novel quantitative analysis of the interaction of facilitation and depression with dynamic and frozen noise. We have confirmed the quantitative predictions of our theory in simulations of a ring-attractor implemented in a network model of spiking integrate-and-fire neurons with synaptic facilitation and depression, and found theory and simulation to be in good quantitative agreement.

In Section Short-term plasticity controls memory retention, we demonstrated the effects of STP on the information retained in continuous working memory. Using our theoretical predictions of drift and diffusion we were able to derive the expected displacement |Δφ| as a function of STP parameters and the frozen noise parameters, which provides a simple link between the resulting Langevin dynamics of bump centers and mutual information (MI) as a measure of working memory retention. Our results can be generalized in several directions. First, the choice of 1s of forward integrated time for |Δφ| (Eq (11)) was arbitrary. While a choice of ∼ 2s lets the curves in Fig 5E collapse slightly better, we chose 1s to avoid further heuristics. Second, we expect values of MI to decrease as the length of the delay period is increased. Our choice of 6.5s is comparable to delay periods often considered in behavioral experiments (usually 3-6s) [61, 66, 67]. However, a more rigorous link between the MI measure and the underlying attractor dynamics would be desirable. Indeed, for noisy channels governed by Fokker-Planck equations, this might be feasible [68], but goes beyond the scope of this work.

In Section Linking theory to experiments: Distractors and network size, we demonstrated that the high-level description of the microscopic dynamics obtained by our theory allows its parameters to be constrained by experiments. Considering that our model is a simplified description of its biological counterparts (see next paragraph), these demonstrations are to be seen as a proof of principle as opposed to quantitative predictions. However, since distractor inputs can be implemented in silico as well as in behavioral experiments (see e.g. [59]), they could eventually provide a quantitative link between continuous attractor models and working memory systems, by matching the resulting distraction curves. Our theory goes beyond previous models in which these distraction curves had to be extracted through repeated microscopic simulations for single parameter settings [47]. We further used our theory to derive bounds on network parameters, in particular the size of networks, that lead to “tolerable” levels of drift and diffusion in the simplified model. For large magnitudes of frozen noise our theory tends to over-estimate the expected magnitude of drift-fields slightly (cf. Fig 4). Thus, we expect the predictions made here to be upper bounds on network parameters needed to achieve a certain expected displacement. Finally, while the predictions of our theory might deviate from biological networks, they could be applied to accurately characterize the stability of, and the effects of inputs to, bump attractor networks implemented in neuromorphic hardware for robotics applications [69].

Our results show that strong facilitation (small values of U) not only slows down directed drift [38], but also efficiently suppresses diffusion in spiking continuous attractor models. However, in delayed response tasks involving saccades, which presumably involve continuous attractors in the prefrontal cortex [11, 22], one does observe an increase of variability in time [66]: quickly accumulating systematic errors (akin to drift) [61] as well as more slowly increasing variable errors (with variability growing linearly in time, akin to diffusion) have been reported [60]. Indeed, there are several other possible sources of variability in cortical working memory circuits, which we did not consider here. In particular, we expect that heterogeneous STP parameters [62], noisy synaptic transmission and STP [70] or noisy recurrent weights [38] (see Random and heterogeneous connectivity in Materials and methods), for example, will induce further drift and diffusion beyond the effects discussed in this paper. Additionally, variable errors might be introduced elsewhere in the pathway between visual input and motor output (but see [71]) or by input from other noisy local circuits during the delay period [72]. Note that we excluded AMPA currents from the recurrent excitatory interactions [11]. However, since STP acts by presynaptic scaling of neurotransmitter release, it will act symmetrically on both AMPA and NMDA receptors, so that an analytical approach similar to the one presented here is expected to work.

Several additional dynamical mechanisms might also influence the stability of continuous attractor working memory circuits. For example, intrinsic neuronal currents that modulate the neuronal excitability [47] or firing-rate adaptation [73] affect bump stability. These and other effects could be accommodated in our theoretical approach by including their linearized dynamics in the calculation of the projection vector (cf. Projection of dynamics onto the attractor manifold in Materials and methods). Fast corrective inhibitory feedback has also been shown to stabilize spatial working memory systems in balanced networks [74]. On the timescale of hours to days, homeostatic processes counteract the drift introduced by frozen noise [36]. Finally, inhibitory connections that are distance-dependent [11] and show short-term plasticity [75] could also influence bump dynamics.

We have focused here on ring-attractor models that obtain their stable firing-rate profile due to perfectly symmetric connectivity. Our approach can also be employed to analyze ring-attractor networks with short-term plasticity in which weights show (deterministic or stochastic) deviations from symmetry (see Frozen noise in Materials and methods for stochastic deviations). Although not investigated here, continuous line-attractors arising through a different weight-symmetry should be amenable to similar analyses [39]. Finally, it should be noted that adequate structuring of the recurrent connectivity can also positively affect the stability of continuous attractors [14]. For example, translational asymmetries included in the structured heterogeneity can break the continuous attractor into several isolated fixed points, which can lead to decreased diffusion along the attractor manifold [58].

We provided evidence that short-term synaptic plasticity controls the sensitivity of attractor networks to both fast diffusive and frozen noise. Control of short-term plasticity via neuromodulation [76] would thus represent an efficient “crank” for adapting the time scales of computations in such networks. For example, while cortical areas might be specialized to operate in certain temporal domains [7, 77], we show that increasing the strength of facilitation in a task-dependent fashion could yield slower and more stable dynamics, without changing the network connectivity. On the other hand, modulating the time scales of STP could provide higher flexibility in resetting facilitation-stabilized working memory systems to prepare them for new inputs [47], although there might be evidence for residual effects of facilitation between trials [45, 78]. By changing the properties of presynaptic calcium entry [79], inhibitory modulation mediated via GABAB and adenosine A1 receptors can lead to increased facilitatory components in rodent cerebellar [80] and avian auditory synapses [81]. Dopamine, serotonin and noradrenaline have all been shown to differentially modulate short-term depression (and facilitation when blocking GABA receptors) at sensorimotor synapses [82]. Interestingly, next to short-term facilitation on the timescale of seconds, other dynamic processes up-regulate recurrent excitatory synaptic connections in prefrontal cortex [62]: synaptic augmentation and post-tetanic potentiation operate on longer time scales (up to tens of seconds), and might be able to support working memory function [83]. While the long time scales of these processes might again render putative short-term memory networks inflexible, there is evidence that they might also be under tight neuromodulatory control [84]. 
Finally, any changes in recurrent STP properties of continuous attractors (without retuning networks as done here) will also lead to changes in the stable firing rate profiles, with further effects on their dynamical stability (see final section of the Discussion). This interplay of effects remains to be investigated in more detail.

Comparison to earlier work

Similar to an earlier theoretical approach using a simplified rate model [38], we find that the slowing of drift by facilitation depends mainly on the facilitation parameter U, while the time constant τu has a less pronounced effect. While the approach of [38] relied on the projection of frozen noise onto the derivative of the first spatial Fourier mode of the bump shape along the ring, here we reproduce and extend this result (1) for arbitrary neuronal input-output relations and (2) with a more detailed spatial projection that involves the full synaptic dynamics and the bump shape. While our theory can also accommodate noisy recurrent connection weights as frozen noise, as used in [38] (see Frozen noise in Materials and methods for derivations), the drifts generated by these heterogeneities were generally small compared to diffusion and the other sources of heterogeneity.

A second study investigated short-term facilitation and showed that it reduces drift and diffusion in a spiking network, for a fixed setting of U (although the model of short-term facilitation differs slightly from the one employed here) [47]. Contrary to what we find here, these authors find that an increase in τu leads to increased diffusion, while we find that an increase over the range they investigated (∼ 0.5s − 4s) would decrease the diffusion by a factor of nearly two. More precisely, for our shape of the bump state (which we keep fixed) we predict a reduction from ∼ 26 to ∼ 16 deg²/s for a similar setting of facilitation U. These differences might arise from an increasing width of the bump attractor profile for growing facilitation time constants in [47], which would then lead to increased diffusion in our model. Whether this effect persists under the two-equation model of saturating NMDA synapses used there remains to be investigated. Finally, increasing the time constant of recurrent NMDA conductances has been shown to also reduce diffusion [47], in agreement with our theory, according to which the normalization constant S increases with τs [39].
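
To put the two predicted diffusion strengths on the scale of a single trial, the following worked example converts them into root-mean-square displacements. It is a rough sketch that assumes purely diffusive motion without drift, the convention ⟨Δφ²⟩ = B t for the diffusion strength B, and a delay of t = 6.5 s as used for the MI measure; both the convention and the choice of t are assumptions made for illustration.

```latex
\sqrt{\langle \Delta\varphi^2 \rangle} = \sqrt{B\,t}, \qquad
\sqrt{26\,\tfrac{\mathrm{deg}^2}{\mathrm{s}} \cdot 6.5\,\mathrm{s}} = \sqrt{169\,\mathrm{deg}^2} = 13\,\mathrm{deg}, \qquad
\sqrt{16\,\tfrac{\mathrm{deg}^2}{\mathrm{s}} \cdot 6.5\,\mathrm{s}} = \sqrt{104\,\mathrm{deg}^2} \approx 10.2\,\mathrm{deg}
```

Because the displacement scales with the square root of B under these assumptions, the reduction in diffusion strength translates into a smaller relative reduction of the typical position error.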

A study performed in parallel to ours [45] used a similar theoretical approach to calculate diffusion with short-term facilitation in a rate-based model with external additive noise, but did not compare the results for varying facilitation parameters. The authors report a short initial transient of stronger diffusion as synapses facilitate, followed by weaker diffusion that is dictated by the fully facilitated synapses. Our theory, by assuming all synaptic variables to be at steady-state, disregards the initial strong phase of diffusion. We also disregarded such initial transients when comparing to simulations (see Numerical methods).

In a study that investigated only a single parameter value for depression (τx = 160ms, no facilitation) in a network of spiking integrate-and-fire neurons similar to the one investigated here, the authors observed no apparent effect of short-term depression on the stability of the bump [44]. In contrast, we find that stronger short-term depression will indeed increase both diffusion and directed drift along the attractor. Our result agrees qualitatively with earlier studies in rate models, which showed that synaptic depression, similar to neuronal adaptation [10, 85], can induce movement of bump attractors [42, 43, 86, 87]. In particular, simple rate models exhibit a regime where the bump state moves with constant speed along the attractor manifold [42]. We did not find any such directed movement in our networks, which could be due to fast spiking noise which is able to cancel directed bump movement [85].

Extensions and shortcomings

The coefficients of Eq (4) give clear predictions as to how drift and diffusion will depend on the shape of the bump state and the neural transfer function F. The relation is not trivial, since the pre-factors Ci and the normalization constant S also depend on the bump shape. For the diffusion strength Eq (5), we explored this relation numerically, by artificially varying the shape of the firing rate profile (while extrapolating other quantities). Although a more thorough analysis remains to be performed, a preliminary analysis shows (see S6 Fig) that diffusion increases both with bump width and top firing rate, consistent with earlier findings [11, 32].

Our theory can be used to predict the shape and effect of drift fields that are generated by localized external inputs such as distractors; see Section Linking theory to experiments: Distractors and network size. Any localized external input (excitatory or inhibitory) will cause a deviation Δϕi from the steady-state firing rates, which, in turn, generates a drift field by Eq (7). This could predict the strength and location of external inputs that are needed to induce continuous shifts of the bump center at given speeds, for example when these attractor networks are designed to track external inputs (see e.g. [10, 88]). It should be noted that in our simple approximation of this distractor scheme, we assume the system to remain at approximately steady-state, i.e. that the bump shape is unaffected by the additional external input, except for a shift of the center position. This assumption will not hold exactly: for example, we expect additional feedback inhibition (through the increased firing of excitatory neurons caused by the distractor input) to decrease bump firing rates. A more in-depth study and comparison to simulations is left for future work.

Our networks of spiking integrate-and-fire neurons are tuned to display balanced inhibition and excitation in the inhibition-dominated uniform state [53, 89], while the bump state relies on positive currents, mediated through strong recurrent excitatory connections (cf. [44] for an analysis). Similar to other spiking network models of this class, this mean-driven bump state shows relatively low variability of the inter-spike intervals of neurons in the bump center [90, 91] (see also next paragraph). Nevertheless, neurons at the flanks of the bump still display variable firing, with statistics close to those expected of Poisson spike trains (see S7 Fig), which may be because the position of the flanks jitters slightly. Since the non-zero contributions to the diffusion strength are constrained to these flanks (cf. Fig 1D), the simple theoretical assumption of Poisson statistics of neuronal firing still matches the spiking network quite well. As discussed in Short-term plasticity controls diffusion, we find that our theory over-estimates the diffusion as bump movement slows down for small values of U. This may be due to a decrease in firing irregularity in stable bumps, in particular in the flank neurons, for which the Poisson assumption then becomes inaccurate.

More recent bump attractor approaches allow networks to perform working memory function with a high firing variability also during the delay period [3], in better agreement with experimental evidence [92]. These networks show bi-stability, where both stable states show balanced excitation and inhibition [90] and the higher self-sustained activity in the delay activity is evoked by an increase in fluctuations of the input currents (noise-driven) rather than an increase in the mean input [93]. This was also reported for a ring-attractor network (with distance-dependent connections between all populations), where facilitation and depression are crucial for irregularity of neuronal activity in the self-sustained state [46]. Application of our approach to these setups is left for future work.

Materials and methods

Analysis of drift and diffusion with STP

For the following, we define a concatenated 3N-dimensional column vector of state variables y = (sᵀ, uᵀ, xᵀ)ᵀ of the system Eq (3). Given a (numerical) solution of the stable firing rate profile we can calculate the stable fixed point of this system by setting the l.h.s. of Eq (3) to zero. This yields steady-state solutions for the synaptic activations, facilitation and depression variables y₀ = (s₀, u₀, x₀):

| (12) |
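
As a hedged illustration of how such a fixed point can be computed, the sketch below solves for the steady state of a single synapse at a given presynaptic firing rate. It assumes that Eq (3) has a Tsodyks-Markram-type form, which is written out in the docstring of `stp_rhs`; this form, the function names, and the parameter values in the usage note are assumptions made for illustration and are not copied from the text.

```python
def stp_rhs(s, u, x, rate, U, tau_u, tau_x, tau_s):
    """Assumed right-hand side of the synaptic dynamics (not taken from the text):

        ds/dt = -s / tau_s + u * x * rate
        du/dt = -(u - U) / tau_u + U * (1 - u) * rate
        dx/dt = (1 - x) / tau_x - u * x * rate

    rate is the presynaptic firing rate in Hz, time constants are in seconds.
    """
    ds = -s / tau_s + u * x * rate
    du = -(u - U) / tau_u + U * (1.0 - u) * rate
    dx = (1.0 - x) / tau_x - u * x * rate
    return ds, du, dx


def stp_steady_state(rate, U, tau_u, tau_x, tau_s):
    """Fixed point (s0, u0, x0) obtained by setting all three derivatives
    of the assumed dynamics in stp_rhs to zero and solving in closed form."""
    u0 = U * (1.0 + tau_u * rate) / (1.0 + U * tau_u * rate)
    x0 = 1.0 / (1.0 + tau_x * u0 * rate)
    s0 = tau_s * u0 * x0 * rate
    return s0, u0, x0


def stp_steady_state_profile(rates, U, tau_u, tau_x, tau_s):
    """Apply the single-synapse solution to every neuron of a firing rate profile."""
    return [stp_steady_state(r, U, tau_u, tau_x, tau_s) for r in rates]
```

For example, `stp_steady_state(40.0, U=0.1, tau_u=0.65, tau_x=0.15, tau_s=0.1)` returns the fixed point for a neuron firing at 40 Hz under these illustrative parameters, and substituting the result back into `stp_rhs` should give derivatives that vanish up to floating-point error.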