Abstract

Mixed methods research, i.e., research that draws on both quantitative and qualitative methods in varying configurations, is well suited to address the increasing complexity of public health problems and their solutions. This review focuses specifically on innovations in mixed methods evaluations of intervention, program or policy (i.e., practice) effectiveness and implementation. The article begins with an overview of the structure, function and process of different mixed methods designs and then provides illustrations of their use in effectiveness studies, implementation studies, and combined effectiveness-implementation hybrid studies. The article then examines four specific innovations: procedures for transforming (or “quantitizing”) qualitative data, applying rapid assessment and analysis procedures in the context of mixed methods studies, development of measures to assess implementation outcomes, and strategies for conducting both random and purposive sampling particularly in implementation-focused evaluation research. The article concludes with an assessment of challenges to integrating qualitative and quantitative data in evaluation research.

Keywords: methodology, evaluation, mixed methods, effectiveness, implementation, hybrid designs

Introduction

As in any field of science, our understanding of the complexity of public health problems and the solutions to these problems has required more complex tools to advance that understanding. Among the tools that have gained increasing attention in recent years in health services research and health promotion and disease prevention are designs that have been referred to as mixed methods. Mixed methods is defined as “research in which the investigator collects and analyzes data, integrates the findings, and draws inferences using both qualitative and quantitative approaches or methods in a single study or program of inquiry” (45). However, we qualify this definition in the following manner. First, integration may occur during the design and data collection phases of the research process in addition to the data analysis and interpretation phases (18). Second, mixed methods is often conducted by a team of investigators rather than a single investigator, with each member contributing specific expertise to the process of integrating qualitative and quantitative methods. Third, a program of inquiry may involve more than one study, but the studies are themselves linked by the challenge of answering a single question or set of related questions. Finally, the use of quantitative and qualitative approaches in combination provides a better understanding of research problems than either approach alone (18, 59, 71). In a mixed method design, each set of methods plays an important role in achieving the overall goals of the project and is enhanced in value and outcome by its ability to offset the weaknesses inherent in the other set and by its “engagement” with the other set of methods in a synergistic fashion (73, 86, 88).

Although mixed methods research is not new (72), the use of mixed methods designs has become increasingly common in the evaluation of the process and outcomes of health care intervention, program or policy effectiveness and their implementation (63, 64, 65, 67). A number of guides for conducting mixed methods evaluations are available (16, 38, 65). In this article, we review some recent innovations in mixed methods evaluations in health services effectiveness and implementation. Specifically, we highlight techniques for “quantitizing” qualitative data; applying rapid assessment procedures to collecting and analyzing evaluation data; developing measures of implementation outcomes; and sampling study participants in mixed methods investigations.

Characteristics of Mixed Methods Designs in Evaluation Research

Several typologies exist in mixed methods designs, including convergent, explanatory, exploratory, embedded, transformative, and multiphase designs (18). These, along with other mixed method designs in evaluation research, can be categorized in terms of their structure, function, and process (1, 4, 63, 65, 73).

Quantitative and qualitative methods may be used simultaneously (e.g., QUAN + qual) or sequentially (e.g., QUAN → qual), with one method viewed as dominant or primary and the other as secondary (e.g., QUAL + quan) (59), although equal weight can be given to both methods (e.g., QUAN + QUAL) (18, 64, 70). Sequencing of methods may also vary according to the phase of the research process, such that quantitative and qualitative data may be collected (dc) simultaneously (e.g., QUANdc + qualdc) but analyzed (da) sequentially (e.g., QUANda → qualda). Data collection and analysis of both methods may also occur in iterative fashion (e.g., QUANdc/da → qualda → QUAN2dc/da).

In evaluation research, mixed methods have been used to achieve different functions. Palinkas and colleagues (63, 65) identified five such functions: 1) convergence, where one type of data is used to validate or confirm conclusions reached from analysis of the other type of data (also known as triangulation), or the sequential quantification of qualitative data (also known as transformation) (18); 2) complementarity, where quantitative data are used to evaluate outcomes while qualitative data are used to evaluate process, or qualitative methods are used to provide depth of understanding and quantitative methods are used to provide breadth of understanding; 3) expansion or explanation, where qualitative methods are used to explain or elaborate upon the findings of quantitative studies, but may also serve as the impetus for follow-up quantitative investigations; 4) development, where one method may be used to develop instruments, concepts or interventions that will enable use of the other method to answer other questions; and 5) sampling (80), or the sequential use of one method to identify a sample of participants for use of the other method.

The process of integrating quantitative and qualitative data occurs in three forms: merging, connecting, and embedding the data (18, 63, 65). In general, quantitative and qualitative data are merged when the two sets of data are used to provide answers to the same questions, connected when used to provide answers to related questions sequentially, and embedded when used to provide answers to related questions simultaneously.

Illustrations of Mixed Methods Designs in Evaluation Research

To demonstrate the variations in structure, function and process of mixed method designs in evaluation, we provide examples of their use in evaluations of intervention or program effectiveness and/or implementation. Some designs are used to evaluate effectiveness or implementation alone, while other designs are used to conduct simultaneous evaluations of both effectiveness and implementation.

Effectiveness studies

Often, mixed methods are applied in the evaluation of program effectiveness in quasi-experimental and experimental designs. For instance, Dannifer and colleagues (25) evaluated the effectiveness of a farmers’ market nutrition education program using focus groups and surveys. Grouped by number of classes attended (none, one class, more than one class), a control group of market shoppers were asked about attitudes, self-efficacy, and behaviors regarding fruit and vegetable preparation and consumption (QUANdc → qualdc). Bivariate and regression analysis examined differences in outcomes as a function of number of classes attended, and qualitative analysis was based on a grounded theory approach (14). By connecting the results (QUANda → qualda), qualitative findings were used to expand results from quantitative analysis with respect to changes in knowledge and attitudes.

In other effectiveness evaluations, quantitative methods are used to evaluate program or intervention outcomes, while mixed methods play a secondary role in evaluation of process. For example, Cook and colleagues (13) proposed to use a stepped wedge randomized design to examine the effect of an alcohol health champions program. A process evaluation will explore the context, implementation and response to the intervention using mixed methods (quandc + qualdc) in which the two types of data are merged (quanda →← qualda) to provide a complementary perspective on these phenomena.

Implementation studies

As with effectiveness studies, studies that focus solely on implementation use mixed methods to evaluate process and outcomes. Hanson and colleagues (41) describe a design for a non-experimental study of a community-based learning collaborative (CBLC) strategy for implementing trauma-focused cognitive behavioral therapy (12) by promoting inter-professional collaboration between child welfare and child mental health service systems. Quantitative data will be used to assess individual and organization level measures of inter-professional collaboration (IC), inter-organizational relationships (IOR), penetration, and sustainability. Mixed quantitative/qualitative data will then be collected and analyzed sequentially for three functions: 1) expansion to provide further explanation of the quantitative findings related to CBLC strategies and activities (i.e., explanations of observed trends in the quantitative results; QUANdc → QUALdc/da); 2) convergence to examine the extent to which interview data support the quantitative monthly online survey data (i.e., validity of the quantitative data; QUANda →← qualda); and 3) complementarity to explore further factors related to sustainment of IC/IOR and penetration/use outcomes over the follow-up period (QUANda + QUALda). Taken together, the results of these analyses will inform further refinement of the CBLC model.

Hybrid designs

Hybrid designs are intended to efficiently and simultaneously evaluate the effectiveness and implementation of an evidence-based practice (EBP). There are three types of hybrid designs (20). Type I designs are primarily focused on evaluating the effectiveness of the intervention in a real-world setting, while assessing implementation is secondary. Type II designs give equal priority to an evaluation of intervention effectiveness and implementation, which may involve a more detailed examination of the implementation process. Type III designs are primarily focused on the evaluation of an implementation strategy and, as a secondary priority, may evaluate intervention effectiveness, especially when intervention outcomes may be linked to implementation outcomes.

In Hybrid 1 designs, quantitative methods are typically used to evaluate intervention or program effectiveness, while mixed methods are used to identify potential implementation barriers and facilitators (37) or to evaluate implementation outcomes such as fidelity, feasibility, and acceptability (29), or reach, adoption, implementation and sustainability (79). For instance, Broder-Fingert and colleagues (7) plan to simultaneously evaluate effectiveness and collect data on implementation of a patient navigation intervention to improve access to services for children with autism spectrum disorders in a two-arm randomized comparative effectiveness trial. A mixed-method implementation evaluation will be structured to achieve three aims that will be carried out sequentially, with each project informing the next (QUALdc/da → QUALdc/da → QUANdc/da). Data will also converge in the final analysis (QUALda →← QUANda) for the purpose of triangulation.

Mixed methods have been used in Hybrid 2 designs to evaluate both process and outcomes of program effectiveness and implementation (19, 50, 78). For instance, Hamilton and colleagues (40) studied an evidence-based quality improvement approach for implementing supported employment services at specialty mental health clinics in a site-level controlled trial at four implementation sites and four control sites. Data collected included patient surveys and semi-structured interviews with clinicians and administrators before, during, and after implementation; qualitative field notes; structured baseline and follow-up interviews with patients; semi-structured interviews with patients after implementation; and administrative data. Qualitative results were merged to contextualize the outcomes evaluation (QUANda/dc + QUALda/dc) for complementarity.

Hybrid 3 designs are similar to the implementation-only studies described above. While quantitative methods are typically used to evaluate effectiveness, mixed methods are used to evaluate both process and outcomes of specific implementation strategies (23, 87). For instance, Lewis et al. (51) conducted a dynamic cluster randomized trial of standardized versus tailored measurement-based care (MBC) implementation in a large provider of community care. Quantitative data were used to compare the effect of standardized versus tailored MBC implementation on clinician- and client-level outcomes. Quantitative measures of MBC fidelity and qualitative data on implementation barriers obtained from focus groups were mixed simultaneously in a QUAL + QUAN structure that served the function of data expansion, for the purposes of evaluation and elaboration, using the process of data connection.

Procedures for Collecting Qualitative Data

Mixed methods evaluations often require timely collection and analysis of data to provide information that can inform the intervention itself or the strategy used to successfully implement the intervention. One such method is a technique developed by anthropologists known as Rapid Assessment Procedures (RAP). This approach is designed to provide depth to the understanding of the event and its community context that is critical to the development and implementation of more quantitative approaches involving the use of survey questionnaires and diagnostic instruments (5, 84).

With a typically shorter turnaround time, qualitative researchers in implementation science have turned toward rapid analysis techniques in which key concepts are identified in advance to structure and focus the inquiry (32, 39). In the rapid analytic approach used by Hamilton (39), main topics (domains) are drawn from interview and focus group guides and a template is developed to summarize transcripts (32, 49). Summaries are analyzed using matrix analysis, and key actionable findings are shared with the implementation team to guide further implementation (e.g., the variable use of implementation strategies) in real time, particularly during phased implementation research such as in a hybrid type II study (20).

Rapid assessment procedures have been used in evaluation studies of healthcare organization and delivery (92). However, with few exceptions (3, 51), they have been used primarily as standalone investigations with no integration with quantitative methods (11, 36, 44, 83, 97). Ackerman and colleagues (3) used “rapid ethnography” to understand efforts to implement secure websites (patient portals) in “safety net” health care systems that provide services for low-income populations. Site visits at four California safety net health systems included interviews with clinicians and executives, informal focus groups with front-line staff, observations of patient portal sign-up procedures and clinic work, review of marketing materials and portal use data, and a brief survey. However, “researchers conducting rapid ethnographies face tensions between the breadth and depth of the data they collect and often need to depend on participants who are most accessible due to time constraints” (93, pp. 321–322).

More recently, the combination of clinical ethnography and rapid assessment procedures has been modified for use in pragmatic clinical trials (66). Known as Rapid Assessment Procedure – Informed Clinical Ethnography or RAPICE, the process begins with preliminary discussions with potential sites, followed by training calls and site visits conducted by the study Principal Investigator acting as a participant observer (PO). During the visit, the PO participates in and observes meetings with site staff, conducts informal or semi-structured interviews to assess implementation progress, collects available documents that record procedures implemented, and completes field notes. Both site-specific logs and domain-specific logs (i.e., trial specific activities, evidence-based intervention implementation, sustainability, and economic considerations) are maintained. Interview transcripts and field notes are subsequently reviewed by the study’s mixed methods consultant (MMC) (98). A discussion ensues until both the PO and the MMC reach consensus as to the meaning and significance of the data (66). This approach is consistent with the pragmatic trial requirement for the minimization of time intensive research methods (89) and the implementation science goal of understanding trial processes that could provide readily implementable intervention models (99).

The use of RAPICE is illustrated by Zatzick and colleagues (99) in an evaluation of the American College of Surgeons national policy requirements and best practice guidelines used to inform the integrated operation of US trauma centers. In a hybrid trial testing the delivery of high-quality screening and intervention for PTSD across US level 1 trauma centers, the study uses implementation conceptual frameworks and RAPICE methods to evaluate the uptake of the intervention model using site visit data.

Procedures for Analyzing Qualitative Data

Intervention and practice evaluations using mixed methods designs generally rely on semi-structured interviews, focus groups and ethnographic observations as a source of qualitative data. However, the demand for rigor in mixed method designs has led to innovative approaches in both the kind of qualitative data collected and how these data are analyzed. One such approach transforms qualitative data into quantitative values, referred to as “quantitizing” qualitative data (56). This approach must adhere simultaneously to the assumptions that govern the collection of qualitative data and those that govern the collection of quantitative data. Caution must be exercised in making certain that the application of one set of assumptions (e.g., ensuring that every participant had an opportunity to answer a question when reporting a frequency or rate) does not violate another set of assumptions (i.e., samples purposively selected to ensure depth of understanding). For instance, quantitized data may be used for purposes of description, but may not necessarily satisfy the requirements for application of statistical tests to ascertain the level of significance of differences across groups.

Three particular approaches to quantifying qualitative data are summarized below.

Concept mapping

The technique of concept mapping (91), where qualitative data elicited from focus groups are quantitized, is an example of convergence through transformation (2, 74). Concept mapping is a structured conceptualization process and a participatory qualitative research method that yields a conceptual framework for how a group views a particular topic. Similar to other methods such as the nominal group technique (NGT, 26) and the Delphi method (26), concept mapping uses inductive and structured small group data collection processes to qualitatively generate different ideas or constructs and then quantitize these data for quantitative analysis. In the case of concept mapping, the qualitative data are used to produce illustrative cluster maps depicting relationships of ideas in the form of clusters.

Concept mapping involves six steps: preparation, generation, structuring, representation, interpretation, and utilization. In the preparation stage, focal areas are identified and criteria for participant selection/recruitment are determined. In the generation stage, participants address the focal question and generate a list of items to be used in subsequent data collection and analysis. In the structuring stage, participants independently organize the list of items generated by sorting the items into piles based on perceived similarity. Each item is then rated in terms of its importance or usefulness to the focal question. In the representation stage, data are entered into specialized concept-mapping computer software (Concept Systems), which provides quantitative summaries and visual representations or concept maps based on multidimensional scaling and hierarchical cluster analysis. In the interpretation stage, participants collectively process and qualitatively analyze the concept maps, assessing and discussing the cluster domains, evaluating items that form each cluster, and discussing the content of each cluster. This leads to a reduction in the number of clusters. Finally, in the utilization stage, findings are discussed to determine how best they inform the original focal question.
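The structuring and representation stages can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the procedure implemented in the Concept Systems software: the pile sorts, item labels, and function names are hypothetical, and the sketch clusters the sorting similarity matrix directly with average linkage rather than first applying multidimensional scaling.

```python
from itertools import combinations

def cooccurrence(sorts, items):
    """Count how many participants placed each pair of items in the same pile."""
    counts = {pair: 0 for pair in combinations(sorted(items), 2)}
    for piles in sorts:                      # one participant's sort
        for pile in piles:                   # items this participant judged similar
            for pair in combinations(sorted(pile), 2):
                counts[pair] += 1
    return counts

def similarity(counts, a, b, n_sorters):
    """Proportion of sorters who grouped items a and b together."""
    return counts[tuple(sorted((a, b)))] / n_sorters

def cluster(items, counts, n_sorters, n_clusters):
    """Average-linkage agglomerative clustering on the similarity matrix."""
    clusters = [[item] for item in sorted(items)]
    while len(clusters) > n_clusters:
        best = None
        for i, j in combinations(range(len(clusters)), 2):
            pairs = [(a, b) for a in clusters[i] for b in clusters[j]]
            avg = sum(similarity(counts, a, b, n_sorters) for a, b in pairs) / len(pairs)
            if best is None or avg > best[0]:
                best = (avg, i, j)
        _, i, j = best                       # merge the two most similar clusters
        clusters[i] = sorted(clusters[i] + clusters[j])
        del clusters[j]
    return clusters

# Hypothetical pile sorts from three participants over five statements.
items = ["s1", "s2", "s3", "s4", "s5"]
sorts = [
    [["s1", "s2", "s3"], ["s4", "s5"]],
    [["s1", "s2"], ["s3", "s4", "s5"]],
    [["s1", "s2", "s3"], ["s4"], ["s5"]],
]
counts = cooccurrence(sorts, items)
clusters = cluster(items, counts, n_sorters=len(sorts), n_clusters=2)
print(clusters)
```

In this toy example the number of clusters is fixed in advance; in the interpretation stage described above, participants would instead review the maps and settle on the number and content of clusters.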

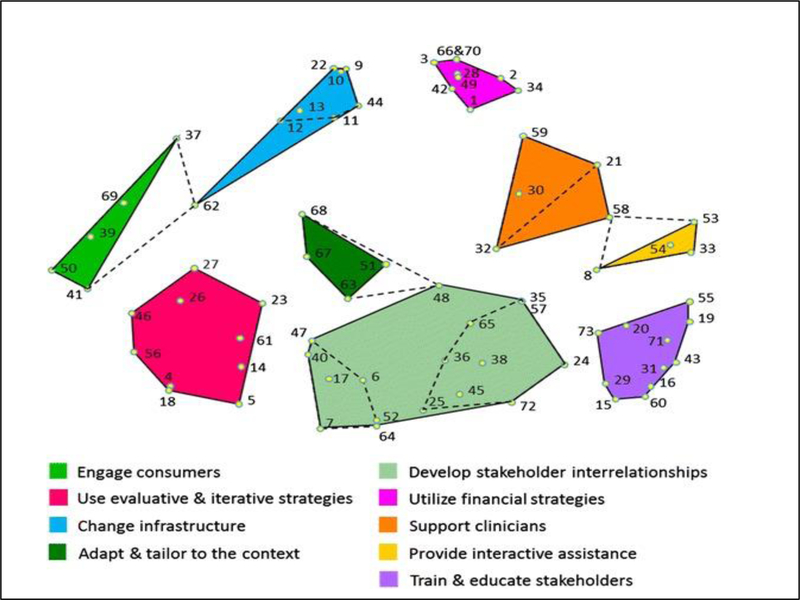

Waltz and colleagues (94) illustrate the use of concept mapping in a study to validate the compilation of discrete implementation strategies identified in the Expert Recommendations for Implementing Change (ERIC) study. Hierarchical cluster analysis supported organizing the 73 strategies into 9 categories (see Figure 1 below).

Figure 1.

Illustration of the graphic output of concept mapping. Point and cluster map of all 73 strategies identified in the ERIC process. Source: Waltz et al. (94).

Qualitative comparative analysis

Another procedure for quantitizing qualitative data that has gained increasing attention in recent years is qualitative comparative analysis (QCA). Developed in the 1980s (75), QCA was designed to study the complexities often observed in social sciences research by examining the nature of relationships. QCA can be used with qualitative data, quantitative data, or a combination of the two, and is particularly helpful in conducting studies that may have a small to medium sample size, but can also be used with large sample sizes (76).

Similar to the constant comparative method used in grounded theory (34) and thematic analysis (53) in which the analyst compares and contrasts incidents or codes to create categories or themes to generate a theory, QCA uses a qualitative approach in that it entails an iterative process and dialogue with the data. Findings in QCA, however, are based on quantitative analyses; specifically a Boolean algebra technique that allows for a reductionist approach interpreted in set-theoretic terms. The underlying purpose in using this method is to identify one or multiple configurations that are sufficient to produce an outcome (see Table 1) with enough consistency to illustrate that the same pathway will continue to produce the outcome, and a coverage score indicating the percentage of cases where a given configuration is applicable. Pathways are interpreted using logical ANDs, logical ORs, and the presence or absence of a condition. Configuration #3 below, for example, would be interpreted as: the presence of conditions A and B when combined with either E or D, but only in the absence of C, is sufficient to produce outcome X.

Table 1.

Development of causal pathways to outcome identified through qualitative comparative analysis

| Original conditions associated with outcome (X) | Causal pathways to outcome (X) identified through QCA |
|---|---|
| A, B, C, D, E → X | 1) A * C * E → X; 2) A * B + D → X; 3) A * B * E + D * ~C → X |

Note: * = logical AND; + = logical OR; ~ = the absence of a condition/outcome.

Based on the type of data being used, the context of what is being studied, and what is already known about a particular area of interest, a researcher will begin by selecting one of two commonly used analyses, crisp-set (csQCA) or fuzzy-set (fsQCA). In csQCA, conditions and the outcome are dichotomized, meaning that a given case’s membership in a condition or outcome is either fully in or fully out (76). Alternatively, membership in a fuzzy set can fall on a three-, four-, or six-point scale or take continuous values, enabling the researcher to qualitatively assess the degree of membership most appropriate for a case on any given condition.
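The difference between crisp and fuzzy membership can be made concrete with a short sketch. It assumes the standard set-theoretic conventions (logical AND as the minimum, logical OR as the maximum, absence as 1 minus membership); the cases, their scores, and the second configuration (D * ~C) are hypothetical and serve only as illustration.

```python
def config_membership(case, present, absent=()):
    """Membership of one case in a configuration.

    Logical AND is taken as the minimum, and the absence of a condition
    as 1 minus its membership. With 0/1 scores this is ordinary Boolean logic.
    """
    scores = [case[c] for c in present] + [1 - case[c] for c in absent]
    return min(scores)

def pathway_membership(case, configurations):
    """Logical OR across alternative configurations, taken as the maximum."""
    return max(config_membership(case, p, a) for p, a in configurations)

# Hypothetical cases: one crisp (fully in or out), one on a four-value fuzzy set.
crisp_case = {"A": 1, "B": 0, "C": 1, "D": 0, "E": 1}
fuzzy_case = {"A": 0.67, "B": 0.33, "C": 0.67, "D": 0.33, "E": 1.0}

config_1 = (("A", "C", "E"), ())   # A * C * E (pathway 1 in Table 1)
config_h = (("D",), ("C",))        # D * ~C (hypothetical, for illustration)

crisp_score = config_membership(crisp_case, *config_1)
fuzzy_score = config_membership(fuzzy_case, *config_1)
either = pathway_membership(fuzzy_case, [config_1, config_h])
print(crisp_score, fuzzy_score, either)
```

The crisp case is either in or out of a configuration, whereas the fuzzy case belongs to it only to the degree of its weakest condition.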

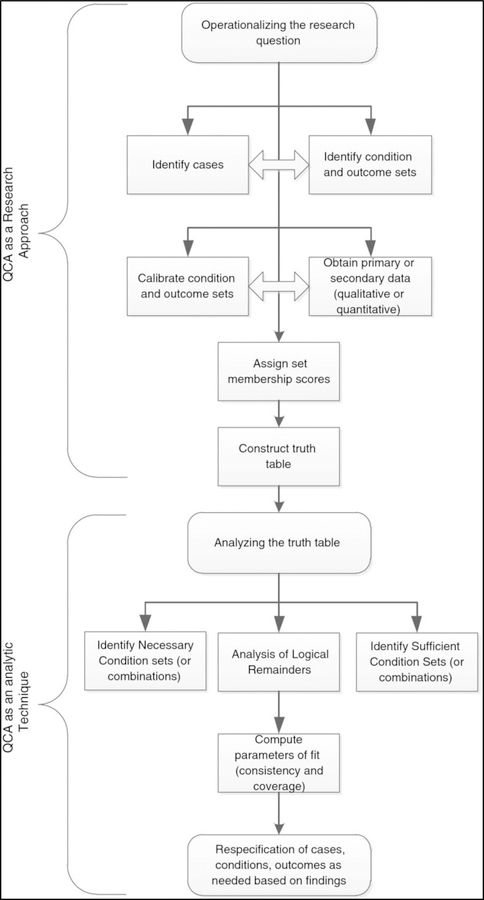

Procedures for conducting a QCA are illustrated in Figure 2 below. Prior to beginning formal analyses, several steps including determining outcomes and conditions, identifying cases, and calibrating membership scores inform the development of a data matrix. QCA relies heavily on substantive knowledge, and decisions made throughout the analytic process are guided by a theoretical framework rather than inferential statistics (76). In the first step, researchers assign weights to constructs based on previous knowledge and theory, rather than basing thresholds on means or medians. The number of conditions is carefully selected, as having as many conditions as cases will result in uniqueness and failure to detect configurations (55). Once conditions have been defined and operationalized, each case can be dichotomized for membership. In crisp-set analysis, cases are classified as having full non-membership (0) or full membership (1) in the given outcome by using a qualitative approach (indirect calibration) or a quantitative approach with log odds (direct calibration) (76).

Figure 2.

QCA as an approach and as an analytic technique

Source: Kane et al. (44), with permission.
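The log-odds (direct) calibration mentioned in the paragraph preceding Figure 2 can be sketched as follows. The sketch assumes the commonly described three-anchor form of direct calibration, in which analyst-chosen thresholds for full non-membership, the crossover point, and full membership are mapped to log odds of -3, 0, and +3; the anchor values, the raw measure, and the function names are hypothetical.

```python
import math

def direct_calibration(value, full_non, crossover, full):
    """Map a raw value to a membership score between 0 and 1 via log odds.

    Assumes three analyst-chosen anchors: full non-membership, the
    crossover point, and full membership (log odds of -3, 0, and +3).
    """
    deviation = value - crossover
    if deviation >= 0:
        log_odds = deviation * (3.0 / (full - crossover))
    else:
        log_odds = deviation * (3.0 / (crossover - full_non))
    return 1.0 / (1.0 + math.exp(-log_odds))

def to_crisp(membership, cutoff=0.5):
    """Dichotomize a membership score for crisp-set analysis (assumed cutoff)."""
    return 1 if membership > cutoff else 0

# Hypothetical raw measure: percentage of work plan objectives completed,
# with anchors set from substantive knowledge rather than the sample mean.
anchors = {"full_non": 20, "crossover": 50, "full": 80}
for raw in (20, 50, 65, 80):
    score = direct_calibration(raw, **anchors)
    print(raw, round(score, 3), to_crisp(score))
```

The anchors carry the theoretical judgment; the arithmetic only spreads cases between them.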

Once a data matrix has been created, formal csQCA can commence using fs/QCA software, R suite, or other statistical packages for configurational comparative methods (a comprehensive list can be found on www.compass.org/software). Analyses should begin with determining whether all conditions originally hypothesized to influence the outcome are, indeed, necessary (81). Using a Boolean algebra algorithm, a truth table is then designed to provide a reduced number of configurations. A truth table may show contradictions (consistency score = .3-.7), indicating that it is not clear whether this configuration is consistent with the outcome (76). Several techniques can be used to resolve such contradictions (77), many of which entail revisiting the operationalization and/or selection of conditions, or reviewing cases for fit.
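A crisp-set truth table of the kind described above can be sketched as a simple grouping of cases by their configuration of conditions. The data matrix and names below are hypothetical, and the sketch lists only configurations that are actually observed; dedicated QCA software also enumerates unobserved combinations and performs the subsequent minimization.

```python
from collections import defaultdict

def truth_table(cases, conditions, outcome):
    """Group crisp-set cases by configuration and score each row."""
    rows = defaultdict(lambda: {"n": 0, "with_outcome": 0})
    for case in cases:
        key = tuple(case[c] for c in conditions)
        rows[key]["n"] += 1
        rows[key]["with_outcome"] += case[outcome]
    table = []
    for key, row in sorted(rows.items()):
        consistency = row["with_outcome"] / row["n"]
        table.append({
            "configuration": dict(zip(conditions, key)),
            "n": row["n"],
            "consistency": consistency,
            # Rows in the .3-.7 range are flagged as contradictions to resolve.
            "contradictory": 0.3 <= consistency <= 0.7,
        })
    return table

# Hypothetical data matrix: two conditions (A, B) and an outcome (X).
cases = [
    {"A": 1, "B": 1, "X": 1},
    {"A": 1, "B": 1, "X": 1},
    {"A": 1, "B": 0, "X": 1},
    {"A": 1, "B": 0, "X": 0},
    {"A": 0, "B": 1, "X": 0},
    {"A": 0, "B": 0, "X": 0},
]
table = truth_table(cases, ["A", "B"], "X")
for row in table:
    print(row)
```

Here the configuration with A present and B absent is flagged as contradictory, which would prompt a return to the cases or to the definition of the conditions.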

Once all contradictions are resolved and assigned full non-membership or full membership on the given outcome, sufficiency analyses can be conducted. Initial sufficiency analysis is usually based on the presence of an outcome. The Quine-McCluskey algorithm produces a logical combination or multiple combinations of conditions that are sufficient for the outcome to occur. Three separate solutions are given: parsimonious, intermediate, and complex. Typically, the intermediate solution is selected for the purposes of interpretation (76). One aim in interpreting findings is to state explicitly that a given combination will almost always produce the given outcome. This is measured by consistency. While a perfect score of 1 indicates that this causal pathway will always be consistent with the outcome, a score ≥ .8 is a strong measure of fit (76, 77). Complementary to consistency is coverage, or the degree to which all cases are explained by a given causal pathway. While there is often a trade-off between these two measures of fit, without high consistency, it is not meaningful to have high coverage (76).
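The two measures of fit can be computed with the set-theoretic formulas commonly used in QCA, in which the overlap between a pathway and the outcome is the sum of the case-wise minima. The sketch below assumes those formulas; the membership scores are hypothetical and happen to be crisp, although the same functions accept fuzzy scores.

```python
def consistency(pathway_scores, outcome_scores):
    """Share of membership in the pathway that is also membership in the outcome."""
    overlap = sum(min(x, y) for x, y in zip(pathway_scores, outcome_scores))
    return overlap / sum(pathway_scores)

def coverage(pathway_scores, outcome_scores):
    """Share of membership in the outcome that is accounted for by the pathway."""
    overlap = sum(min(x, y) for x, y in zip(pathway_scores, outcome_scores))
    return overlap / sum(outcome_scores)

# Hypothetical membership scores for eight cases.
pathway = [1, 1, 1, 1, 1, 0, 0, 0]   # membership in the causal pathway
outcome = [1, 1, 1, 1, 0, 1, 1, 0]   # membership in the outcome

print(round(consistency(pathway, outcome), 2))  # 4 of 5 pathway cases show the outcome
print(round(coverage(pathway, outcome), 2))     # 4 of 6 outcome cases follow the pathway
```

In this example the pathway just reaches the .8 consistency threshold while explaining two thirds of the cases with the outcome, which illustrates the trade-off noted above.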

QCA has increasingly been used in health services research to evaluate program effectiveness and implementation where outcomes are dependent on interconnected structures and practices (15, 28, 30, 46, 47, 48, 90, 92). For instance, Kane and colleagues (43) used QCA to examine the elements of organizational capacity to support program implementation that result in successful completion of public health program objectives in a public health initiative serving 50 communities. The QCA used case study and quantitative data collected from 22 awardee programs to evaluate the Communities Putting Prevention to Work (CPPW) program. The results revealed two combinations for completing most work plan objectives: 1) having experience implementing public health improvements in combination with having a history of collaboration with partners; and 2) not having experience implementing public health improvements in combination with having leadership support.

Implementation frameworks

A third approach to quantitizing qualitative information used in evaluation research has been the coding and scaling of responses to interviews guided by existing implementation frameworks. These techniques call for assigning a numeric value to qualitative responses to questions pertaining to a set of variables believed to be predictive of successful implementation outcomes and then comparing the quantitative values across implementation domain, different implementation sites, or different stakeholder groups involved in implementation (24, 95).

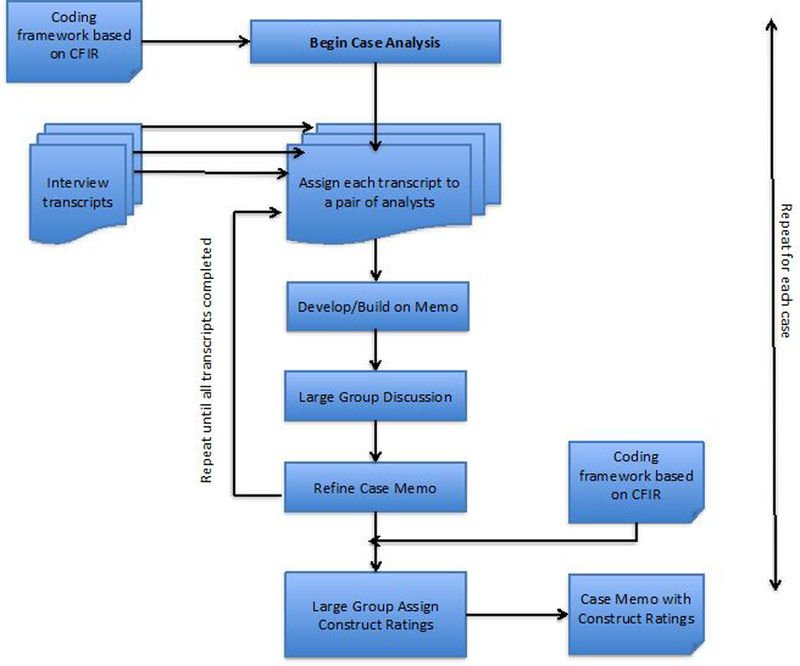

In an illustration of this approach, Damschroder and Lowery (22) embedded the constructs of the Consolidated Framework for Implementation Research (CFIR) (21) in semi-structured interviews conducted to describe factors that explained the wide variation in implementation of MOVE!, a weight management program disseminated nationally to Veterans Affairs (VA) medical centers. Interview transcripts were coded and used to develop a case memo for each facility. Numerical ratings were then assigned to each construct to reflect their valence and their magnitude or strength. This process is illustrated in Figure 3 below. The numerical ratings ranged from −2 (construct is mentioned by two or more interviewees as a negative influence in the organization, an impeding influence on work processes, and/or an impeding influence in implementation efforts) to +2 (construct is mentioned by two or more interviewees as a positive influence in the organization, a facilitating influence on work processes, and/or a facilitating influence in implementation efforts). Of the 31 constructs assessed, 10 strongly distinguished between facilities with low versus high MOVE! implementation effectiveness; 2 constructs exhibited a weak pattern in distinguishing between low versus high effectiveness; 16 constructs were mixed across facilities; and 2 had insufficient data to assess.

Figure 3.

Team-based work flow for case analysis. Source: Damschroder and Lowery, (22).
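Once constructs have been rated on the −2 to +2 scale, the ratings can be compared across groups of facilities. The following sketch is an assumption-laden illustration only: the facilities, ratings, and the use of a simple difference in group means are hypothetical and are not the pattern-based judgments reported by Damschroder and Lowery.

```python
from statistics import mean

VALID_RATINGS = {-2, -1, 0, 1, 2}

def group_means(ratings, groups, construct):
    """Mean rating of one construct within each group of facilities."""
    result = {}
    for group, facilities in groups.items():
        values = [ratings[f][construct] for f in facilities]
        if not all(v in VALID_RATINGS for v in values):
            raise ValueError("ratings must lie on the -2 to +2 scale")
        result[group] = mean(values)
    return result

# Hypothetical ratings: facility -> construct -> rating.
ratings = {
    "site_1": {"leadership_engagement": 2, "available_resources": 1},
    "site_2": {"leadership_engagement": 1, "available_resources": -1},
    "site_3": {"leadership_engagement": -2, "available_resources": 1},
    "site_4": {"leadership_engagement": -1, "available_resources": -1},
}
groups = {"high": ["site_1", "site_2"], "low": ["site_3", "site_4"]}

gaps = {}
for construct in ("leadership_engagement", "available_resources"):
    means = group_means(ratings, groups, construct)
    gaps[construct] = means["high"] - means["low"]
print(gaps)
```

A large gap suggests a construct that separates high from low implementation sites, whereas a gap near zero corresponds to the "mixed" pattern described above.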

In the absence of quantification of the qualitative data in these three analytical approaches, a thematic content analysis approach (43) might have been used for analysis of the data obtained from the small group concept mapping brainstorming sessions or the interviews or focus groups that are part of the QCA. A qualitative framework approach (33) might have been used for analysis of the data obtained from the interviews using the CFIR template. The analysis would be inductive for data collected for the concept mapping exercise, inductive-deductive for data collected for the QCA exercise, and deductive for the data collected for the framework exercise. With the quantification, these data are largely used to describe a framework (concept mapping) that could be used to generate hypotheses (implementation framework) or to test hypotheses (qualitative comparative analysis).

Procedures for Measuring Evaluation Outcomes

In addition to their use to evaluate intervention effectiveness and implementation, mixed methods have increasingly been employed to develop innovative measurement tools. Three such recent efforts are described below.

Stages of Implementation Completion (SIC)

The SIC is an 8-stage assessment tool (9) developed as part of a large-scale randomized implementation trial that contrasted two methods of implementing Treatment Foster Care Oregon (TFCO; formerly Multidimensional Treatment Foster Care) (10), an EBP for youth with serious behavioral problems in the juvenile justice and child welfare systems. The eight stages range from Engagement (Stage 1) with the developers/purveyors in the implementation process to achievement of Competency in program delivery (Stage 8). The SIC was developed to measure a community or organization’s progress and milestones toward successful implementation of the TFCO model regardless of the implementation strategy utilized. Within each of the eight stages, subactivities are operationalized and completion of activities is monitored, along with the length of time taken to complete these activities.
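The two quantities monitored within each stage, completion of activities and time taken, can be summarized with a short sketch. The activity names and dates are hypothetical, and the scoring shown (proportion of activities completed, and days between the first and last completed activity) is an assumption for illustration rather than the official SIC scoring rules.

```python
from datetime import date

def stage_scores(activities):
    """Summarize one stage from {activity name: completion date or None}.

    Assumes the stage has at least one defined activity.
    """
    done = [d for d in activities.values() if d is not None]
    proportion = len(done) / len(activities)
    # Duration is undefined until at least one activity has been completed.
    duration_days = (max(done) - min(done)).days if done else None
    return {"proportion": proportion, "duration_days": duration_days}

# Hypothetical Stage 1 (Engagement) activities for one site.
stage_1 = {
    "site_informed_of_program": date(2020, 1, 6),
    "interest_indicated": date(2020, 1, 20),
    "agreed_to_consider_implementation": None,   # not yet completed
}
scores = stage_scores(stage_1)
print(scores)
```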

In an effort to examine the utility and validity of the SIC, Palinkas and colleagues (64) examined influences on the decisions of administrators of youth-serving organizations to initiate and proceed with implementation of three EBPs: Multisystemic Therapy (42), Multidimensional Family Therapy (52), and TFCO. Guided by the SIC framework, semi-structured interviews were conducted with 19 agency chief executive officers and program directors of 15 youth-serving organizations. Agency leaders’ self-assessments of implementation feasibility and desirability in the stages that occur before (Pre-implementation), during (Implementation), and after (Sustainment) implementation were found to be influenced by several characteristics of the intervention, inner setting and outer setting; some of these were unique to a single phase, whereas others operated in more than one phase. Findings supported the validity of using the SIC to measure implementation of EBPs other than TFCO in a variety of practice settings, identified opportunities for using agency leader models to develop strategies to facilitate implementation of EBPs, and supported using the SIC as a standardized framework for guiding agency leader self-assessments of implementation.

Sustainment Measurement System (SMS)

The development of the SMS to measure sustainment of prevention programs and initiatives is another illustration of the use of mixed methods to develop evaluation tools. Palinkas and colleagues (69, 70) interviewed 45 representatives of 10 grantees and 9 program officers within 4 SAMHSA prevention programs to identify key domains of sustainability indicators (i.e., dependent variables) and requirements or predictors (i.e., independent variables). The conceptualization of “sustainability” was captured using three approaches: semi-structured interviews to identify experiences with implementation and sustainability barriers and facilitators; a free list exercise to identify how participants conceptualized sustainability, program elements they wished to sustain, and requirements to sustain such elements; and a checklist of CFIR constructs assessing how important each item was to sustainment. Interviews were analyzed using a grounded theory approach (14), while free lists and CFIR items were quantitized; the former consisting of rank-ordered weights applied to frequencies of listed items and the latter using a numeric scale ranging from 0 (not important) to 2 (very important) (69). Four sustainability elements were identified by all three data sets (ongoing coalitions, collaborations and networks; infrastructure and capacity to support sustainability; ongoing evaluation of performance and outcomes; and availability of funding and resources) and five elements were identified by two of three data sets (community need for program, community buy-in and support, supportive leadership, presence of a champion, and evidence of positive outcomes).
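The quantitizing and triangulation steps described above can be sketched in Python. The source does not give the exact weighting formula, so this sketch assumes one common choice, Smith's salience index, in which an item's weight falls with its position in each respondent's list. The function names and the example data are hypothetical.

```python
from collections import defaultdict


def free_list_salience(lists):
    """Average rank-weighted frequency of each item across respondents'
    free lists (assumed weighting: Smith's salience index)."""
    totals = defaultdict(float)
    for items in lists:
        n = len(items)
        for position, item in enumerate(items):
            totals[item] += (n - position) / n   # first-listed item gets weight 1.0
    return {item: total / len(lists) for item, total in totals.items()}


def sources_identifying(elements_by_source):
    """Count how many data sources identified each element."""
    counts = defaultdict(int)
    for elements in elements_by_source.values():
        for element in set(elements):
            counts[element] += 1
    return dict(counts)


# Invented free lists from three respondents.
lists = [
    ["funding", "coalitions"],
    ["coalitions", "funding", "evaluation"],
    ["funding"],
]
salience = free_list_salience(lists)

# Invented elements flagged by each of three data sources.
by_source = {
    "interviews": ["funding", "coalitions", "champion"],
    "free lists": ["funding", "coalitions"],
    "CFIR checklist": ["funding", "champion"],
}
convergence = sources_identifying(by_source)
```

An element with a count of 3 in `convergence` would correspond to one identified by all three data sets, and a count of 2 to one identified by two of the three.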

RE-AIM QuEST

Another innovation in the assessment of implementation outcomes is the RE-AIM Qualitative Evaluation for Systematic Translation (RE-AIM QuEST), a mixed methods framework developed by Forman and colleagues (31). The RE-AIM (Reach, Efficacy/Effectiveness, Adoption, Implementation, and Maintenance) framework is often used to monitor the success of intervention effectiveness, dissemination, and implementation in real-life settings (35), and has been used to guide several mixed method implementation studies (6, 50, 54, 79, 82, 85). The RE-AIM QuEST framework represents an attempt to provide guidelines for the systematic application of quantitative and qualitative data for summative evaluations of each of the five dimensions. These guidelines may also be used in conducting formative evaluations to help guide the process of implementation by identifying and addressing barriers in real time.

Forman and colleagues (31) applied this framework for both real-time and retrospective evaluation in a pragmatic cluster RCT of the Adherence and Intensification of Medications (AIM) program. Researchers found that the QuEST framework expanded RE-AIM in three fundamental ways: 1) allowing investigators to understand whether Reach, Adoption and Implementation varied across and within sites; 2) expanding retrospective evaluation of effectiveness by examining why the intervention worked or failed to work and explaining which components of the intervention or the implementation context may have been barriers; and 3) explicating whether or not and in which ways the intervention was maintained. This information permitted researchers to improve implementation during the intervention and inform the design of future interventions.

Procedures for Participant Sampling

Purposeful sampling is widely used in qualitative research for the identification of information-rich cases related to the phenomenon of interest (18, 71). While criterion sampling is used most commonly in implementation research (68), combining sampling strategies may be more appropriate to the aims of implementation research and more consistent with recent developments in quantitative methods (8, 27). Palinkas and colleagues (68) reviewed the principles and practice of purposeful sampling in implementation research, summarized types and categories of purposeful sampling strategies, and provided the following recommendations: 1) use of a single-stage strategy for purposeful sampling for qualitative portions of a mixed methods implementation study should adhere to the same general principles that govern all forms of sampling, qualitative or quantitative; 2) a multistage strategy for purposeful sampling should begin with a broader view with an emphasis on variation or dispersion and move to a narrower view with an emphasis on similarity or central tendencies; 3) selection of a single-stage or multistage purposeful sampling strategy should be based, in part, on how it relates to the probability sample, either for the purpose of answering the same question (in which case a strategy emphasizing variation and dispersion is preferred) or for answering related questions (in which case a strategy emphasizing similarity and central tendencies is preferred); 4) all sampling procedures, whether purposeful or probability, are designed to capture elements of both similarity (i.e., centrality) and differences (i.e., dispersion); and 5) although quantitative data can be generated from a purposeful sampling strategy and qualitative data can be generated from a probability sampling strategy, each set of data is suited to a specific objective and each must adhere to a specific set of assumptions and requirements.
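The second recommendation, moving from variation to similarity across stages, can be illustrated with a minimal Python sketch. It assumes that each candidate site has a single numeric attribute of interest. The functions `maximum_variation` and `typical_cases`, the site labels and the scores are hypothetical, and in practice purposeful selection rests on investigator judgment rather than a fixed rule like this one.

```python
from statistics import median


def maximum_variation(cases, key, k):
    """Stage 1: pick k cases spread evenly across the sorted range of `key`
    (emphasis on dispersion)."""
    ordered = sorted(cases, key=key)
    if k >= len(ordered):
        return ordered
    if k == 1:
        return [ordered[len(ordered) // 2]]
    step = (len(ordered) - 1) / (k - 1)
    return [ordered[round(i * step)] for i in range(k)]


def typical_cases(cases, key, k):
    """Stage 2: pick the k cases closest to the median of `key`
    (emphasis on central tendency)."""
    centre = median(key(c) for c in cases)
    return sorted(cases, key=lambda c: abs(key(c) - centre))[:k]


def score(site):
    """Hypothetical attribute used to order sites (e.g., an adoption score)."""
    return site[1]


# Invented sites with invented scores.
sites = [("A", 10), ("B", 25), ("C", 40), ("D", 55), ("E", 70), ("F", 85), ("G", 100)]

stage1 = maximum_variation(sites, score, 4)   # broad view across the range
stage2 = typical_cases(stage1, score, 2)      # narrow view near the centre
```

Here the first stage draws sites from both ends and the middle of the range, and the second stage narrows that subset to the sites nearest its median.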

Challenges of Integrating Quantitative and Qualitative Methods

Conducting integrated mixed methods research poses several challenges, from design to analysis and dissemination. Given the many methodological configurations possible, as described above, careful thought about optimal design should occur early in the process in order to have the potential to integrate methods when deemed appropriate to answer the research question(s). Considerations must include resources (e.g., time, funding, expertise; see 58), as integrated mixed methods studies tend to be complex and non-linear. After launching an integrated mixed methods study, the team needs to consistently evaluate the extent to which the mixed methods intentions are being realized, as the tendency in this type of study is to work (e.g., collect data) in parallel, even through analysis, only then to find that the sources of data are not reconcilable and the potential of the mixed methods design is not reached. This lack of integration may result in separate publications with quantitative and qualitative results rather than integrated mixed methods papers. Several sets of guidelines and critiques are available to facilitate high-quality integrated mixed methods products (e.g., 17, 60, 61).

Another consideration in integrating the two sets of methods lies in assessing the advantages and disadvantages of doing so with respect to data collection. There are tradeoffs involved with each method introduced here. For instance, Rapid Assessment Procedures enable more time-efficient data collection but require more coordination of multiple data collectors to ensure consistency and reliability. Rapid Assessment Procedure – Informed Clinical Ethnography also enables time-efficient field observation and review procedures that constitute ideal “nimble” mixed method approaches for the pragmatic trial, along with minimizing participant burden, allowing for real-time workflow observations, more opportunities to conduct “repeated measures” of qualitative data through multiple site visits, and greater transparency in the integration of investigator and study participant perspectives on the phenomena of interest. However, it discourages use of semi-structured interviews or focus groups that may allow for the collection of data that would provide greater depth of understanding. Concept mapping offers a structured approach to data collection designed to facilitate quantification and visualization of salient themes or constructs at the expense of a semi-structured approach that may provide greater depth of understanding of the phenomenon of interest. Collection of qualitative data on implementation and sustainment processes and outcomes can be used to validate, complement and expand as well as develop quantitative measures such as the SIC, SMS and RE-AIM QuEST, but can potentially involve additional time and personnel for minimal benefit. The advantages and disadvantages of each method must be weighed when deciding whether or not to use them for evaluation.

Finally, consideration must be given to identifying opportunities for the appropriate use of the innovative methods introduced in this article. Table 2 below outlines the range of mixed method functions, research foci, and study designs for each innovative method. For example, Rapid Assessment Procedures could be used to achieve the functions of convergence, complementarity, expansion and development and to assess both process and outcomes in effectiveness and implementation studies. However, we anticipate that these methods can and should be applied in ways we have yet to anticipate. Similarly, new innovative methods will inevitably be created to accommodate the functions, foci and design of mixed methods evaluations.

Table 2.

Opportunities for use of innovative methods in mixed methods evaluations based on function, focus and design.

| Methods | Mixed method function | Focus | Design |
|---|---|---|---|
| Collecting QUAL Data | | | |
| RAP | Convergence, Complementarity, Expansion, Development | Process, Outcomes | Effectiveness/implementation |
| RAPICE | Convergence, Complementarity, Expansion, Development | Process, Outcomes | Effectiveness/implementation |
| Analyzing (Quantitizing) QUAL Data | | | |
| Concept Mapping | Development | Predictors | Effectiveness/implementation |
| Qualitative Comparative Analysis | Development | Predictors, Outcomes | Effectiveness/implementation |
| Implementation Frameworks | Expansion | Predictors | Implementation |
| Measuring Evaluation Outcomes | | | |
| Stages of Implementation Completion | Development, Convergence, Complementarity, Expansion | Outcomes, Process | Implementation |
| Sustainment Measurement System | Development, Convergence, Complementarity, Expansion | Outcomes, Process | Implementation |
| RE-AIM QuEST | Convergence, Complementarity, Expansion | Outcomes | Effectiveness/implementation |
| Sampling | Sampling | Predictors, Process, Outcomes | Effectiveness/implementation |

Conclusion

As evaluation research evolves as a discipline, the methods used by evaluation researchers must evolve as well. Evaluations are performed to achieve a better understanding of policy, program or practice effectiveness and implementation. They assess not just the outcomes associated with these activities, but the process and the context in which they occur. Mixed methods are central to this evolution (16, 57, 96). As they facilitate innovations in research and advances in the understanding gained from that research, so they must also change, adapt, and evolve. Options for determining suitability of particular designs are becoming increasingly sophisticated and integrated. This review summarizes only a fraction of the innovations currently underway. With each new application of mixed methods in evaluation research, the need for further change, adaptation and evolution becomes apparent. The key to the future of mixed methods research will be to continue building on what has been learned and to replicate designs that produce the most robust outcomes.

Acknowledgments

We are grateful for support from the National Institute on Drug Abuse (NIDA) (R34DA037516–01A1, L Palinkas, PI and P30DA027828, C. Hendricks Brown, PI) and the Department of Veterans Affairs (QUE 15–272, A. Hamilton, PI).

Footnotes

Disclosure Statement

The authors are not aware of any affiliations, memberships, funding, or financial holdings that might be perceived as affecting the objectivity of this review.

References

- 1.Aarons GA, Fettes DL, Sommerfeld DH, Palinkas LA. 2012. Mixed methods for implementation research: application to evidence-based practice implementation and staff turnover in community-based organizations providing child welfare services. Child Maltreat 17:67–79 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Aarons GA, Wells R, Zagursky K, Fettes DL, Palinkas LA. 2009. Advancing a conceptual model of evidence-based practice implementation in child welfare. Am. J. Public Health 99:2087–95 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Ackerman SL, Sarkar U, Tieu L, Handley MA, Schillinger D, et al. 2017. Meaningful use in the safety net: a rapid ethnography of patient portal implementation at five community health centers in California. J. Am. Med. Inform. Assoc 24(5):903–12 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Albright K, Gechter K, Kempe A. 2013. Importance of mixed methods in pragmatic trials and dissemination and implementation research. Acad. Pediatr 13:400–7 [DOI] [PubMed] [Google Scholar]

- 5.Beebe J 1995. Basic concepts and techniques of rapid appraisal. Hum. Org 54:42–51 [Google Scholar]

- 6.Bogart LM, Fu CM, Eyraud J, Cowgill BO, Hawes-Dawson J, et al. 2018. Evaluation of the dissemination of SNaX, a middle school-based obesity prevention intervention, within a large US school district. Transl. Behav. Med XX:XX-XX https://doi:10.109/tbm/bx055 [DOI] [PMC free article] [PubMed]

- 7.Broder-Fingert S, Walls M, Augustyn M, Beidas R, Mandell D, et al. 2018. A hybrid type I randomized effectiveness-implementation trial of patient navigation to improve access to services for children with autism spectrum disorder. BMC Psychiatry 18:79. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Brown CH, Curran G, Palinkas LA, Wells KB, Jones L, et al. 2017. An overview of research and evaluation designs for dissemination and implementation. Ann. Rev. Public Health 38:1–22 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Chamberlain P, Brown C, Saldana L. 2011. Observational measure of implementation progress in community-based settings: the stages of implementation completion (SIC). Implement. Sci 6:116. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Chamberlain P, Mihalic SF (1998). Multidimensional Treatment Foster Care. In Book Eight: Blueprints for Violence Prevention, ed. DS Elliot Boulder CO: Institute of Behavioral Science, University of Colorado at Boulder [Google Scholar]

- 11.Choy I, Kitto S, Adu-Aryee N, Okrainee A. 2013. Barriers to uptake of laparoscopic surgery in a lower-middle-income country. Surg. Endosc 27:4009–15 [DOI] [PubMed] [Google Scholar]

- 12.Cohen JA, Mannarino AP, Deblinger E. 2006. Treating Trauma and Traumatic Grief in Children and Adolescents, New York: Guilford Press [Google Scholar]

- 13.Cook PA, Hargreaves SC, Burns EJ, de Vocht F, Parrott S, et al. 2018. Communities in charge of alcohol (CICA): a protocol for a stepped-wedge randomized control trial of an alcohol health champions programme. BMC Public Health 18:522. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Corbin J, Strauss A. 2008. Basics of Qualitative Research: Techniques and Procedures for Developing Grounded Theory Thousand Oaks, CA: Sage [Google Scholar]

- 15.Cragun D, Pal T, Vadaparampil ST, Baldwin J, Hampel H, DeBate RD. 2016. Qualitative comparative analysis: a hybrid method for identifying factors associated with program effectiveness. J. Mix. Methods Res 10(3):251–72 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Creswell JW, Klassen AC, Plano Clark VL, Clegg-Smith K. 2011. Best Practices for Mixed Methods Research in the Health Sciences Bethesda, MD: Off. Behav. Soc. Sci. Res., Natl. Inst. Health; https://obssr.od.nih.gov/wp-content/uploads/2016/02/Best_Practices_for_Mixed_Methods_Research.pdf [Google Scholar]

- 17.Creswell JW, Tashakkori A. 2007. Editorial: Developing publishable mixed methods manuscripts. J. Mix. Methods Res 1:107–11 [Google Scholar]

- 18.Creswell JW, Plano Clark VL. 2011. Designing and Conducting Mixed Method Research Thousand Oaks, CA: Sage. 2nd ed. [Google Scholar]

- 19.Cully JA, Armento ME, Mott J, Nadorff MR, Naik AD, et al. 2012. Brief cognitive behavioral therapy in primary care: a type-2 randomized effectiveness-implementation trial. Implement. Sci 7:64. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Curran GM, Bauer M, Mittman B, Pyne JM, Stetler C. 2012. Effectiveness-implementation hybrid designs: combining elements of clinical effectiveness and implementation research to enhance public health impact. Medical Care 50(3):217–26 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Damschroeder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. 2009. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci 4:50. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Damschroder LJ, Lowery JC. 2013. Evaluation of a large-scale weight management program using the consolidated framework for implementation research (CFIR). Implement. Sci 8:51. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Damschroder LJ, Moin T, Datta SK, Reardon CM, Steinle N, et al. 2015. Implementation and evaluation of the VA DPP clinical demonstration: protocol for a multi-site non-randomized hybrid effectiveness-implementation type III trial. Implement. Sci 10:68. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Damschroder LJ, Reardon CM, Sperber N, Robinson CH, Fickel JJ, Oddone EZ. 2017. Implementation evaluation of the Telephone Lifestyle Coaching (TLC) program: organizational factors associated with successful implementation. Transl. Behav. Med 7:233–41 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Dannifer R, Abrami A, Rapoport R, Sriphanlop P, Sacks R, Johns M. 2015. A mixed-methods evaluation of a SNAP-Ed farmers’ market-based nutrition education program. J. Nutr. Educ. Behav 47:516–25 [DOI] [PubMed] [Google Scholar]

- 26.Delbecq AL, VandeVen AH, Gustafson DH. 1975. Group Techniques for Program Planning: A guide to Nominal Group and Delphi Processes Glenview, IL: Scott Foresman and Company [Google Scholar]

- 27.Duan N, Bhaumik DK, Palinkas LA, Hoagwood K. 2015. Optimal design and purposeful sampling: twin methodologies for implementation research. Admin. Policy Ment. Health Ment. Health Serv. Res 42:424–32 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Dy SM, Garg P, Nyberg D, 2005. Critical pathway effectiveness: assessing the impact of patient, hospital care, and pathway characteristics using qualitative comparative analysis. Health Serv. Res 40(2):499–516 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Elinder LS, Patterson E, Nyberg G, Norman A. 2018. A Healthy Start Plus for prevention of childhood overweight and obesity in disadvantaged areas through parental support in the school setting – study protocol for a parallel group cluster randomized trial. BMC Public Health 18:459. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Ford EW, Duncan WJ, Ginter PM. 2005. Health departments’ implementation of public health’s core functions: an assessment of health impacts. Pub. Health 119:11–21 [DOI] [PubMed] [Google Scholar]

- 31.Forman J, Heisley M, Damschroder LJ, Kaselitz E, Kerr EA. 2017. Development and application of the RE-AIM QuEST mixed methods framework for program evaluation. Prev. Med. Repts 6:322–8 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Fox AB, Hamilton AB, Frayne SN, Wiltsey-Stirman S, Bean-Mayberry B, et al. 2016. Effectiveness of an evidence-based quality improvement approach to cultural competence training: The Veterans Affairs “Caring for Women Veterans” program. J. Continuing Ed. Health Prof 36:96–103 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Gale NK, Heath G, Cameron E, Rashid S, Redwood S. 2013. Using the framework method for the analysis of qualitative data in multi-disciplinary health research. BMC Med Res Methodology, 13: 117. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Glaser BG, Strauss AL. 1967. The Discovery of Grounded Theory: Strategies for Qualitative Research New York: Aldine de Gruyter. [Google Scholar]

- 35.Glasgow RE, Vogt SM, Bowles TM. 1999. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am. J. Public Health 89(9): 1322–7 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Goepp JG, Meykler S, Mooney NE, Lyon C, Raso R, Julliard K. 2008. Provider insights about palliative care barriers and facilitators: results of a rapid ethnographic assessment. Am. J. Hosp. Palliat. Care 25:309–14 [DOI] [PubMed] [Google Scholar]

- 37.Granholm E, Holden JL, Sommerfeld D, Rufener C, Perivoliotis D, et al. 2015. Enhancing assertive community treatment with cognitive behavioral social skills training for schizophrenia: study protocol for a randomized controlled trial. Trials 16:438. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Greene JC, Caracelli VJ, Graham WF. 1989. Toward a conceptual framework for mixed-method evaluation designs. Educ. Eval. Policy Anal 11(3):255–74 [Google Scholar]

- 39.Hamilton AB. 2013. Rapid Qualitative Methods in Health Services Research: Spotlight on Women’s Health VA HSR&D National Cyberseminar series: Spotlight on Women’s Health; December 2013 [Google Scholar]

- 40.Hamilton AB, Cohen AN, Glover DL, Whelan F, Chemerinski E, et al. 2013. Implementation of evidence-based employment services in specialty mental health. Health Serv. Res 48:100–8 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Hanson RF, Schoenwald S, Saunders BE, Chapman J, Palinkas LA, et al. 2016. Testing the Community-Based Learning Collaborative (CBLC) implementation model: a study protocol. Int. J. Ment. Health Syst 10:52. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Henggler SW, Schoenwald SK, Borduin CM, Rowland MD, Cunningham PB. 2009. Multisystemic Therapy for Antisocial Behavior in Children and Adolescents, New York: Guilford Press. 2nd ed. [Google Scholar]

- 43.Hsieh HF, Shannon SE. 2009. Three approaches to qualitative content analysis. Qual. Health Res 15: 1277–1288. [DOI] [PubMed] [Google Scholar]

- 44.Jayawardena A, Wijayasinghe SR, Tennakoon D, Cook T, Morcuendo JA. 2013. Early effects of a ‘train the trainer’ approach on Ponseti method dissemination: a case study of Sri Lanka. Iowa Orthop. J 33:153–60 [PMC free article] [PubMed] [Google Scholar]

- 45.Journal of Mixed Methods Research. 2018. Description https://au.sagepub.com/en-gb/oce/journal-of-mixed-methods-research/journal201775#description

- 46.Kahwati LC, Lewis MA, Kane H, Williams PA, Nerz P, et al. 2011. Best practices in the Veterans Health Administration’s MOVE! weight management program. Am. J. Prev. Med 41:457–64 [DOI] [PubMed] [Google Scholar]

- 47.Kane H, Hinnant L, Day K, Council M, Tzeng J, et al. 2017. Pathways to program success: a qualitative comparative analysis (QCA) of Communities Putting Prevention to Work case study programs. J. Pub. Health. Manag. Pract 23: 104–11 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Kane H, Lewis MA, Williams PA, Kahwati LC. 2014. Using qualitative comparative analysis to understand and quantify translation and implementation. Transl. Behav. Med 4:201–8 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Koenig CJ, Abraham T, Zamora KA, Hill C, Kelly PA, et al. 2016. Pre-implementation strategies to adapt and implement a veteran peer coaching intervention to improve mental health treatment engagement among rural veterans. J. Rural Health 32(4):418–28 [DOI] [PubMed] [Google Scholar]

- 50.Kozica SL, Lombard CB, Harrison CL, Teede HJ. 2016. Evaluation of a large healthy lifestyle program: informing program implementation and scale-up in the prevention of obesity Implement. Sci 11:151. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Lewis CC, Scott K, Marty CN, Marriott BR, Kroenke K, et al. 2015. Implementing measurement-based care (iMBC) for depression in community mental health: a dynamic cluster randomized trial study protocol. Implement. Sci 10:127. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Liddle HA. 2002. Multidimensional family therapy treatment (MDFT) for adolescent cannabis users: Vol 5 Cannibis youth treatment (CYT) manual series Rockville, MD: Center for Substance Abuse Treatment, Substance Abuse and Mental Health Services Administration [Google Scholar]

- 53.Lincoln YS, Guba EG. 1985. Naturalistic Inquiry Beverly Hills, CA: Sage [Google Scholar]

- 54.Martinez JL, Duncan LR, Rivers SE, Bertoli MC, Latimer-Cheung AE, Salovey P. 2017. Healthy eating for Life English as a second language curriculum: applying the RE-AIM framework to evaluate a nutrition education intervention targeting cancer risk reduction. Transl. Behav. Med 7:657–66 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55.Marx A (2010). Crisp-set qualitative comparative analysis (csQCA) and model specification: Benchmarks for future csQCA applications. Int. J. Mult. Res. Approaches 4(2):138–58 [Google Scholar]

- 56.Miles M, Huberman M. 1994. Qualitative Data Analysis: An Expanded Sourcebook, Thousand Oaks, CA: Sage. 2nd ed. [Google Scholar]

- 57.Moore GF, Audrey S, Barker M, Bond L, Bonell C, et al. 2015. Process evaluation of complex interventions: Medical Research Council guidance. BMJ 19;350:h1258. doi: 10.1136/bmj.h1258. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.Morse JM. 2005. Evolving trends in qualitative research: advances in mixed-method design. Qual. Health Res 15(5):583–5 [DOI] [PubMed] [Google Scholar]

- 59.Morse JM, Niehaus L. 2009. Mixed Method Design: Principles and Procedures Walnut Creek, CA: Left Coast Press [Google Scholar]

- 60.O’Cathain A, Murphy E, Nicholl J. 2008. The quality of mixed methods studies in health services research. J. Health Serv. Res. Policy 13(2):92–8 [DOI] [PubMed] [Google Scholar]

- 61.Onwuegbuzie A, Poth C. 2016. Editors’ afterword: Toward evidence-based guidelines for reviewing mixed methods research manuscripts submitted to journals. Int. J. Qual. Methods 15(1):1–13. 10.1177/1609406916628986 [DOI] [Google Scholar]

- 62.Palinkas LA. 2014. Qualitative and mixed methods in mental health services and implementation research. J. Clin. Child Adolesc. Psychol 43:851–61 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 63.Palinkas LA, Aarons GA, Horwitz SM, Chamberlain P, Hurlburt M, Landsverk J. 2011. Mixed method designs in implementation research. Adm. Policy Ment. Health Ment Health Serv. Res 38:44–53 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 64.Palinkas LA, Campbell M, Saldana L. 2018. Agency leaders’ assessments of feasibility and desirability of implementation of evidence-based practices in youth-serving organizations using the Stages of Implementation Completion. Front. Public Health 6:161. doi: : 10.3389/fpubh.2018.00161 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65.Palinkas LA Cooper BR. 2018. Mixed methods evaluation in dissemination and implementation science In Dissemination and Implementation Research in Health: Translating Science to Practice, ed. Brownson RC, Colditz GA, Proctor EK, pp. 335–53. New York: Oxford University Press. 2nd ed. [Google Scholar]

- 66.Palinkas LA, Darnell D, Zatzick D. 2017. Developing clinical ethnographic implementation methods for rapid assessments in acute care clinical trials. Presented at the 10th Conference on the Science of Dissemination and Implementation, Washington DC, December 5, 2017 [Google Scholar]

- 67.Palinkas LA, Holloway IW, Rice E, Fuentes D, Wu Q, Chamberlain P. 2011. Social networks and implementation of evidence-based practices in public youth-serving systems: a mixed methods study. Implement. Sci 6:113. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 68.Palinkas LA, Horwitz SM, Green CA, Wisdom JP, Duan N, Hoagwood KE. 2015. Purposeful sampling for qualitative data collection and analysis in mixed method implementation research. Admin. Policy Ment. Health Ment Health Serv. Res 42:533–44 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 69.Palinkas LA, Spear S, Mendon S, Villamar J, Brown CH. 2018. Development of a system for measuring sustainment of prevention programs and initiatives. Implement. Sci 13 (Suppl 3):A16 (Abstr.) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 70.Palinkas LA, Spear SE, Mendon SJ, Villamar J, Valente T, et al. 2016. Measuring sustainment of prevention programs and initiatives: a study protocol. Implement. Sci 11:95. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 71.Patton MQ. Qualitative Research and Evaluation Methods 3rd ed. Thousand Oaks, CA: Sage; 2002 [Google Scholar]

- 72.Pelto PJ. 2015. What is so new about mixed methods? Qual. Health Res 25(6):734–45. [DOI] [PubMed] [Google Scholar]

- 73.Pluye P, Hong QN. 2014. Combining the power of stories and the power of numbers: mixed methods research and mixed studies reviews. Ann. Rev. Public Health 35:29–45 [DOI] [PubMed] [Google Scholar]

- 74.Powell BJ, Stanick CF, Stalko HM, Dorsey CN, Weiner BJ, et al. 2017. Toward criteria for pragmatic measurement in implementation research and practice: a stakeholder-driven approach using concept mapping. Implement. Sci 12:118. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 75.Ragin C 1987. The Comparative Method: Moving Beyond Qualitative and Quantitative Strategies Berkeley: University of California Press [Google Scholar]

- 76.Ragin C 2009. Redesigning Social Inquiry: Fuzzy Sets and Beyond Chicago: University of Chicago Press [Google Scholar]

- 77.Rihoux B, Ragin CC. (2008). Configurational Comparative Methods: Qualitative Comparative Analysis (QCA) and Related Techniques (Vol. 51). Thousand Oaks, CA: Sage Publications [Google Scholar]

- 78.Rogers E, Fernandez S, Gillispie C, Smelson D, Hagedorn HJ, et al. 2013. Telephone care coordination for smokers in VA mental health clinics: protocol for a hybrid type-2 effectiveness-implementation trial. Addict. Sci. Clin. Pract 8:7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 79.Rosas LG, Lv N, Xiao L, Lewis MA, Zavill P, et al. 2016. Evaluation of a culturally-adapted lifestyle intervention to treat elevated cardiometabolic risk of Latino adults in primary care (Vida Sana): a randomized controlled trial. Contemp. Clin. Trials 48:30–40 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 80.Sandelowski M 2000. Combining qualitative and quantitative sampling, data collection, and analysis techniques in mixed-method studies. Res. Nurs. Health 23(3):246–55. [DOI] [PubMed] [Google Scholar]

- 81.Schneider CQ, Wagemann C. 2010. Standards of good practice in qualitative comparative analysis (QCA) and fuzzy-sets. Comp. Sociol 9(3):397–418. [Google Scholar]

- 82.Schwingel A, Galvez P, Linares D, Sebastiao E. 2017. Using a mixed-methods RE-AIM framework to evaluate community health programs for older Latinas. J. Aging Health 29(4):551–93.

- 83.Schwitters A, Lederer P, Zilversmit L, Gudo PS, Ramiro I, et al. 2015. Barriers to health care in rural Mozambique: a rapid assessment of planned mobile health clinics for ART. Glob. Health Sci. Pract 3:109–16.

- 84.Scrimshaw SCM, Hurtado E. 1987. Rapid Assessment Procedures for Nutrition and Primary Health Care: Anthropological Approaches to Improving Programme Effectiveness Los Angeles, CA: UCLA Latin American Center

- 85.Shanks CB, Harden S. 2016. A Reach, Effectiveness, Adoption, Implementation, Maintenance Evaluation of weekend backpack food assistance programs. Am. J. Health Promot 30(7):511–20. https://doi.org/10.4278/ajhp.140116-QUAL-28. Epub 2016 Jun 16

- 86.Stange K, Crabtree BF, Miller WL. 2006. Publishing multimethod research. Ann. Fam. Med 4:292–4

- 87.Swindle T, Johnson SL, Whiteside-Mansell L, Curran GM. 2017. A mixed methods protocol for developing and testing implementation strategies for evidence-based obesity prevention in childcare: a cluster randomized hybrid type III trial. Implement. Sci 12:90.

- 88.Teddlie C, Tashakkori A. 2003. Major issues and controversies in the use of mixed methods in the social and behavioral sciences. In Handbook of Mixed Methods in the Social and Behavioral Sciences, ed. Tashakkori A, Teddlie C, pp. 3–50. Thousand Oaks, CA: Sage

- 89.Thorpe KE, Zwarenstein M, Oxman AD, Treweek S, Furberg CD, et al. 2009. A pragmatic-explanatory continuum indicator summary (PRECIS): a tool to help trial designers. J. Clin. Epidemiol 62:464–75

- 90.Thygeson NM, Solberg LI, Asche SE, Fontaine P, Pawlson LG, Scholle SH. 2012. Using fuzzy set qualitative comparative analysis (fs/QCA) to explore the relationship between medical “homeness” and quality. Health Serv. Res 47(1 Pt 1):22–45

- 91.Trochim WM. 1989. An introduction to concept mapping for planning and evaluation. Eval. Prog. Plann 12:1–16

- 92.Van der Kleij RM, Crone MR, Paulussen TG, van de Gaar VM, Reis R. 2015. A stitch in time saves nine? A repeated cross-sectional case study on the implementation of the intersectoral community approach Youth At a Healthy Weight. BMC Public Health 15:1032.

- 93.Vindrola-Padros C, Vindrola-Padros B. 2018. Quick and dirty? A systematic review of the use of rapid ethnographies in healthcare organization and delivery. BMJ Qual. Saf 27:321–30

- 94.Waltz TJ, Powell BJ, Matthieu MM, Damschroder LJ, Chinman MJ, et al. 2015. Use of concept mapping to characterize relationships among implementation strategies and assess their feasibility and importance: results from the Expert Recommendations for Implementing Change (ERIC) study. Implement. Sci 10:109.

- 95.Watts BV, Shiner B, Zubkoff L, Carpenter-Song E, Ronconi JM, Coldwell CM. 2014. Implementation of evidence-based psychotherapies for posttraumatic stress disorder in VA specialty clinics. Psychiatr. Serv 65(5):648–53

- 96.World Health Organization. 2012. Changing Mindsets: Strategy on Health Policy and Systems Research Geneva: WHO.

- 97.Wright A, Sittig DF, Ash JS, Erikson JL, Hickman TT, et al. 2015. Lessons learned from implementing service-oriented clinical decision support at four sites: a qualitative study. Int. J. Med. Inform 84:901–11

- 98.Zatzick D, Rivara F, Jurkovich G, Russo J, Trusz SG, et al. 2011. Enhancing the population impact of collaborative care interventions: mixed method development and implementation of stepped care targeting posttraumatic stress disorder and related comorbidities after acute trauma. Gen. Hosp. Psychiatry 33:123–34

- 99.Zatzick D, Russo J, Darnell D, Chambers DA, Palinkas LA, et al. 2016. An effectiveness-implementation hybrid trial study protocol targeting posttraumatic stress disorder and comorbidity. Implement. Sci 11:58.