Abstract

Electroencephalography (EEG) provides a non-invasive measure of brain electrical activity. Neural population models, where large numbers of interacting neurons are considered collectively as a macroscopic system, have long been used to understand features in EEG signals. By tuning dozens of input parameters describing the excitatory and inhibitory neuron populations, these models can reproduce prominent features of the EEG such as the alpha-rhythm. However, the inverse problem, of directly estimating the parameters from fits to EEG data, remains unsolved. Solving this multi-parameter non-linear fitting problem will potentially provide a real-time method for characterizing average neuronal properties in human subjects. Here we perform unbiased fits of a 22-parameter neural population model to EEG data from 82 individuals, using both particle swarm optimization and Markov chain Monte Carlo sampling. We estimate how much is learned about individual parameters by computing Kullback-Leibler divergences between posterior and prior distributions for each parameter. Results indicate that only a single parameter, that determining the dynamics of inhibitory synaptic activity, is directly identifiable, while other parameters have large, though correlated, uncertainties. We show that the eigenvalues of the Fisher information matrix are roughly uniformly spaced over a log scale, indicating that the model is sloppy, like many of the regulatory network models in systems biology. These eigenvalues indicate that the system can be modeled with a low effective dimensionality, with inhibitory synaptic activity being prominent in driving system behavior.

Author summary

Electroencephalography (EEG), where electrodes are used to measure electric potential on the outside of the scalp, provides a simple, non-invasive way to study brain activity. Physiological interpretation of features in EEG signals has often involved use of collective models of neural populations. These neural population models have dozens of input parameters to describe the properties of inhibitory and excitatory neurons. Being able to estimate these parameters by direct fits to EEG data holds the promise of providing a real-time non-invasive method of inferring neuronal properties in different individuals. However, it has long been impossible to fit these nonlinear, multi-parameter models effectively. Here we describe fits of a 22-parameter neural population model to EEG spectra from 82 different subjects, all exhibiting alpha-oscillations. We show how only one parameter, that describing inhibitory dynamics, is constrained by the data, although all parameters are correlated. These results indicate that inhibitory synaptic activity plays a central role in the generation and modulation of the alpha-rhythm in humans.

Introduction

The classical alpha rhythm is one of the most remarkable features observed in electroencephalogram (EEG) recordings from humans [1, 2]. First discovered by Berger in the 1920s [3, 4] these waxing and waning oscillations of 8—13 Hz, that are prominent during eyes-closed, are a defining feature of the resting EEG and have played a central role in phenomenological descriptions of brain electromagnetic activity during cognition and in behaviour [5]. Despite being discovered almost a century ago, the alpha rhythm remains poorly understood, both in terms of its underlying physiological and dynamical mechanisms as well as its relevance to brain information processing and function. The received view proposes a central role for the thalamus [5] with early theories suggesting that alpha oscillatory activity intrinsic to thalamus ‘drives’ or ‘paces’ overlying cortical tissue [6]. This conception has been modified to suggest that it is feedback reverberant activity between thalamus and cortex which underpins the genesis of alpha band cortical activity [5, 7]. A contrasting hypothesis is that such oscillatory activity arises intrinsically in cortex, emerging purely from the recurrent activity between cortical populations of excitatory and inhibitory neurons. These different hypotheses have motivated a variety of theories for describing the alpha-rhythm [8–12].

Theories of alpha-rhythmogenesis can be divided into two major frameworks: those that take a nominal microscopic perspective by modeling the behaviour of large numbers of synaptically connected biophysically realistic individual neurons [8, 9] and those that take a macroscopic, or mean-field, stance by considering the activity of interacting populations of neurons [13–15]. While the microscopic approach is more fundamental, macroscopic models are better matched to the spatial scale at which the bulk electrophysiological measurement, the EEG, occurs.

Despite their reduced complexity, it is still extremely difficult to estimate the input parameters of neural population models by direct fits to EEG data. Up until now, the use of such models to explain alpha-rhythmogenesis has largely been limited to calculations of the forward problem: manually selecting input parameters such that the model generates alpha oscillations. It is vastly more difficult to solve the inverse problem, where a full set of parameters and their uncertainties are estimated directly from fits to EEG data. Yet solving this inverse problem is crucial if we are to ever regard the inferred parameter values as physiologically meaningful. As we will show, the fundamental challenge in fitting a neural population model is that many different combinations of input parameters can give the same EEG signal. Understanding the nature of such parameter unidentifiability (discussed next) in a neural population model is the major contribution of this paper.

Parameter identifiability and sloppy models

Neural population models are high-order, multi-parameter, dynamical systems. It has long been known that, even in simple dynamical systems, there can exist very different parameter combinations which generate similar predictions [16–27]. This many-to-one mapping between parameter inputs and model observables is referred to as structural unidentifiability, if the predictions are exactly identical, or practical identifiability, if the predictions are nearly identical. Any fitting of an unidentifiable model to data results in large, correlated parameter uncertainties. Many developments in the study of identifiability in differential equation models have been motivated by problems in systems biology involving biomolecular regulatory networks [28–35].

Model unidentifiability is closely related to, though distinct from, model sloppiness. A model is referred to as sloppy if the sensitivity of its predictions for different parameters covers a broad range [36–41]. These sensitivities, quantified by the eigenvalues of the Fisher information matrix, are roughly uniformly spaced over a log scale. This characteristic has been discovered in a variety of nonlinear models and arises from the geometry of nonlinear models projected into data space [37, 38]. Parameters that sensitively affect model predictions are termed ‘stiff’ while those that can be changed with little effect on predictions are termed ‘sloppy’. While sloppy parameters are often unidentifiable as well, the terms are not synonymous [42, 43]. Like unidentifiability, sloppiness has been found to be prevalent in models of biomolecular networks [44–47].

Unidentifiability and sloppiness are pervasive in nonlinear fitting problems, the simplest examples of which are fits to polynomials or to multiple exponentials [36, 38]. Since parameter estimation in differential equation models always involves nonlinear fits (to exponential impulse responses in the time domain or rational transfer functions in the spectral domain, for example), unidentifiability and sloppiness should always be a concern in dynamical systems. This is true even for linear, time-invariant systems [19, 22, 26]. Of course, explicitly nonlinear functions of parameters certainly exacerbate the problem—a nonlinear function at saturation will give the same response for a range of different parameter inputs, for example. Unidentifiabilities also arise when a model supports phenomena at significantly different timescales. For instance, if only dynamics on a slower timescale can be observed, parameters which determine behavior at the faster timescale would not be constrained by data [48].

Unlike in systems biology modeling, in neurophysical modeling there has been little recognition of the problem of unidentifiability, beyond select examples in neural code models [49], a thalamo-cortical neural population model [50], and dynamic causal models [51]. This has been cited in [52] as an example of how approaches used in systems biology can help address problems in computational neuroscience [53]. However, despite this lack of formal discussion, implicit recognition of unidentifiability in computational neuroscience has been widespread, with several studies, including those for models of single neurons [54–58], occulomotor integration [59], and neural populations [60–62], detecting the large, correlated parameter uncertainties that are the hallmark of unidentifiability and sloppiness.

Outline

In this paper we examine identifiability and sloppiness in a well-known neurophysical model [11, 61, 63, 64] with 22 unknown parameters. We concentrate on fitting EEG data exhibiting alpha-oscillations in resting state human subjects in an attempt to understand the mechanistic origin of this prominent, yet still poorly understood, phenomenon. We fit the model to the EEG spectrum from each of 82 subjects using both a particle swarm optimization and Markov chain Monte Carlo method. When viewed across all subjects, only 1 of the original parameters, the decay rate of inhibitory synaptic activity, emerges as being identifiable. This indicates that inhibitory synaptic activity is essential for explaining alpha-rhythmogenesis. Examination of the Fisher information matrix shows that there are ∼5 parameter combinations that are identifiable, a considerable reduction from the original 22. This indicates that, although most parameters are unidentifiable, their values cannot in general be selected arbitrarily to fit the data.

Neural population model

The neural population model used in this paper is well established and has been described previously [11, 65]. Semi-analytical and numerical solutions of these equations have revealed a rich repertoire of physiologically plausible activity including noise driven, limit cycle and chaotic oscillations at the frequency of the mammalian alpha rhythm [11, 61, 66, 67]. Here we use the spatially homogeneous version given by the following coupled set of first and second order ordinary differential equations:

| (1) |

| (2) |

| (3) |

| (4) |

| (5) |

| (6) |

where

| (7) |

These equations describe the interactions between inhibitory and excitatory neuronal populations in a macrocolumn.

Table 1 lists the parameters along with their physiological ranges as assumed by Bojak and Liley [61]. The temporal dynamics of mean soma membrane potentials for the excitatory (he(t)) and inhibitory (hi(t)) populations are described in Eqs (1) and (2). The temporal dynamics of the synaptic activity, Iee(t), Iie(t), Iei(t), and Iii(t), are given by Eqs (3)–(6). The relationship between the mean population firing rate, Sj, and the mean soma membrane potentials of the respective population is given in Eq (7). It has been shown that the local field potential measured in the EEG is linearly proportional to the mean soma membrane potentials of the excitatory populations, he(t) [68, 69].

Table 1. Physiological parameters of the model presented along with their physiological ranges.

| No | Physiological parameter | Symbol | Min | Max |

|---|---|---|---|---|

| 1 | Mean resting membrane potential of the excitatory population | -80 mV | -60 mV | |

| 2 | Mean resting membrane potential of the inhibitory population | -80 mV | -60 mV | |

| 3 | Mean Nernst membrane potential of the excitatory population | -20 mV | 10 mV | |

| 4 | Mean Nernst membrane potential of the inhibitory population | -20 mV | 10 mV | |

| 5 | Maximum mean firing rate of the excitatory population | 0.05/ms | 0.5/ms | |

| 6 | Maximum mean firing rate of the inhibitory population | 0.05/ms | 0.5/ms | |

| 7 | Firing thresholds of the excitatory population | -55 mV | -40 mV | |

| 8 | Firing thresholds of the inhibitory population | -55 mV | -40 mV | |

| 9 | Std. deviation of firing thresholds of the excitatory population | σe | 2 mV | 7 mV |

| 10 | Std. deviation of firing thresholds of the inhibitory population | σi | 2 mV | 7 mV |

| 11 | Passive membrane decay time const. of the excitatory population | τe | 5 ms | 150 ms |

| 12 | Passive membrane decay time const. of the inhibitory population | τi | 5 ms | 150 ms |

| 13,14 | Excitatory postsynaptic potential rate constant | γee, γei | 0.1/ms | 1.0/ms |

| 15,16 | Inhibitory postsynaptic potential rate constant | γie, γii | 0.01/ms | 0.5/ms |

| 17 | Postsynaptic potential amplitude of the excitatory population | Γe | 0.1 mV | 2.0 mV |

| 18 | Postsynaptic potential amplitude of the inhibitory population | Γi | 0.1 mV | 2.0 mV |

| 19 | Rate of the excitatory input to the excitatory population | pee | 0.0 /ms | 10.0/ms |

| 20 | Rate of the excitatory input to the inhibitory population | pei | 0.0 /ms | 10.0/ms |

| 21 | Rate of the inhibitory input to the excitatory population | pie | 0.0/ms(fixed) | |

| 22 | Rate of the inhibitory input to the inhibitory population | pii | 0.0/ms(fixed) | |

| 23 | Total number of connections an excitatory neuron receives from nearby excitatory neurons | 2000 | 5000 | |

| 24 | Total number of connections an inhibitory neuron receives from nearby excitatory neurons | 100 | 1000 | |

| 25 | Total number of connections an excitatory neuron receives from nearby inhibitory neurons | 2000 | 5000 | |

| 26 | Total number of connections an inhibitory neuron receives from nearby inhibitory neurons | 100 | 1000 | |

Among the 26 physiological parameters involved in the model, there are only 22 unknown parameters that are fit to the data (In our implementation, pie and pii are fixed constants, γee = γei ≡ γe, and γie = γii ≡ γi).

Spectral analysis of EEG data

In this study we fit the above model to EEG recordings from 82 different individuals. This data is a subset of a larger dataset which, in its full version, consists of 14 experimental tasks performed by each of the 109 subjects with recordings on 64 electrodes according to the International 10-10 System. The full set, collected and contributed by Schalk et al [70] using the BCI2000 instrumentation system, is available for public access in PhysioNet [71] (https://www.physionet.org/pn4/eegmmidb/).

For the purpose of studying alpha-rhythm, we restricted our analysis to signals from the Oz electrode, selecting data from individuals whose EEG spectrum exhibited clear alpha peaks during the associated eyes-closed task. Welch’s method of averaging the spectra derived from multiple overlapping time segments [72] was used to estimate the single spectrum for a particular individual. This approach improves the precision of the power spectral density estimate by sacrificing some spectral resolution. A one-minute EEG signal associated with a particular individual, sampled at 160 Hz, was divided into segments using a 4-second Hamming window with an overlap of 50%.

Since the computational demands of fitting our model directly to EEG time series data are prohibitive, we fit the EEG spectrum instead. This approach is in accordance with earlier fits of neural population models [50, 61, 62], which involved fewer unknown parameters than we have here, and generally only fit a single EEG spectrum. We are thus assuming stationarity of the system over the one-minute EEG signal, where stationarity here means that it is the parameters that are constant; the states are allowed to vary about a stable fixed point. We furthermore assume that deviations of the state away from the fixed point are small enough to allow linearization of the model. Though parameters are assumed to be constant within a given EEG recording they can of course vary between different recordings (and thus individuals). Because of well-known nonlinearities and nonstationarities in EEG recordings, our linearized model was used in inference procedures only for frequencies between 2 Hz and 20 Hz. It is well known that well over 95% of the spectral power in the resting M/EEG falls below 30 Hz. Indeed, typical estimates of resting M/EEG spectral edge frequency (SEF95) (i.e. the frequency below which 95% of the spectral power is contained) are in the range of 24-26 Hz (see e.g. [73–75]).

To demonstrate the accuracy of our inference methods, we also show an example of parameter estimation from a simulated spectrum where the underlying parameter set is known (and referred to as the ground truth). In order to choose a plausible parameter set for this test, we use the maximum likelihood estimate found for Subject 77 (the estimate found from any other subject would also have been suitable). The simulated spectrum was then calculated by sampling each frequency channel from the gamma-distributed model prediction.

Model fitting and analysis

To examine the identifiability and sloppiness of the neural population model, we fit to an EEG spectrum and estimate the posterior distribution over the 22 unknown parameters. We then characterize the properties of this distribution to diagnose the signatures of unidentifiability and sloppiness. To ensure that our results are not specific to a particular fitting algorithm, we use two independent methods: particle swarm optimization (PSO) and Markov chain Monte Carlo (MCMC). To ensure that our results are not specific to a given individual, we estimate the 22 posterior distributions, using both methods, for each of the 82 different EEG spectra.

A full description of the methods for fitting the data and analyzing the results is given in Section “Methods” where we first describe the procedure for calculating the predicted model spectrum, along with the likelihood function for the spectral estimate. We then outline the two fitting schemes, focusing on how we use them to sample from the 22-dimensional posterior distribution. Finally, we describe use of the Kullback-Leibler divergence (KLD) to summarize how much we learned about individual parameters, and the Fisher information matrix (FIM), to assess the sloppiness and identifiability of the model. Our implementation of the methods and all datasets are publicly available at https://github.com/cds-swinburne/Hartoyo-et-al-2019-DATA-n-CODE.

Results

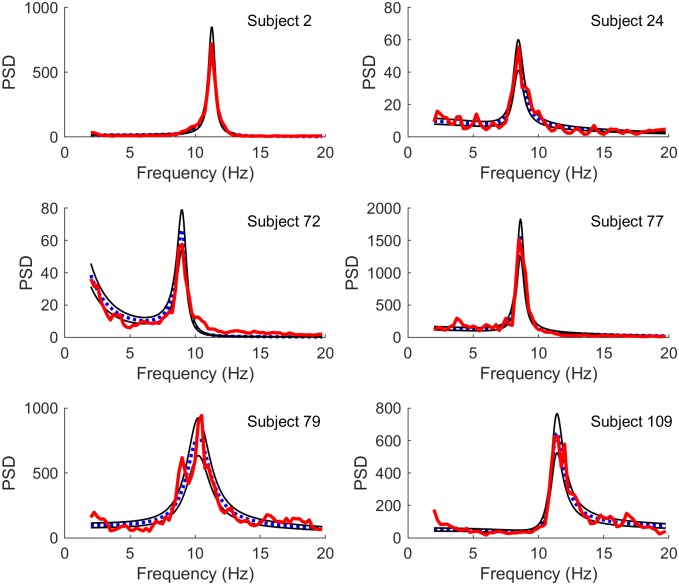

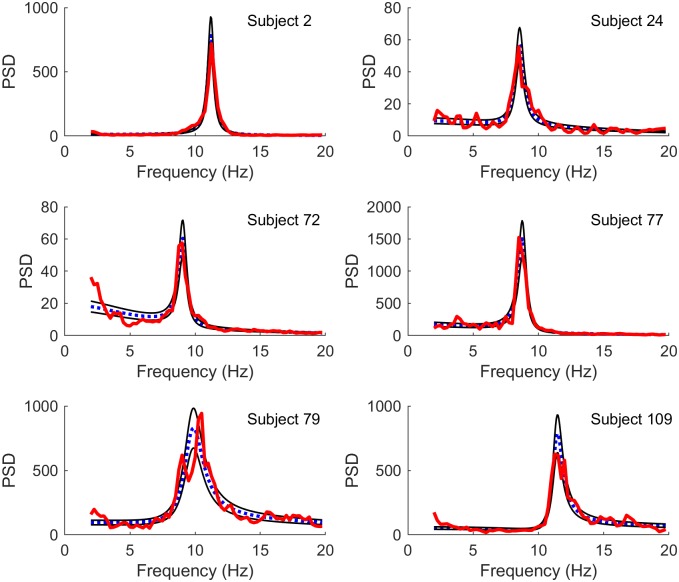

Figs 1 and 2 illustrate best fits using two different methods: PSO, which finds the best fits in least squares (LS) manner, and MCMC, which samples solutions based on maximum likelihood (ML) estimations. Although the fits are generally similar, subtle differences between the two methods can be observed. For example, in subject 72 the the ML fit performs better on regions with lower power but less well in regions with higher power. This difference is expected: while LS are computed over unweighted power spectra, ML favors frequencies with lower variances which typically are those with lower power spectra.

Fig 1. Best fits using least squares.

Comparison of model spectra (blue dotted line) fit to experimental spectra (red thick line) by least squares (LS) minimization using particle swarm optimization, for a select set of subjects. Also shown are the 16% and 84% quantiles based on the gamma distribution for the fitted spectra (thin black lines). The subjects have been selected to show the range of spectra included in the full data set. A comparison of the experimental time series with representative samples of modelled time series for the same subjects is included in S1 Fig.

Fig 2. Best fits using maximum likelihood.

Comparison of model spectra (blue dotted line) fit to experimental spectra (red thick line) by maximum likelihood (ML) estimation using MCMC. Also shown are the 16% and 84% quantiles (thin black lines). The subjects are the same as in Fig 1. It should be noted that LS and ML fits are expected to differ in this case, since the LS fits are more sensitive to deviations in the unweighted spectral power (typically in regions with larger power) whereas the ML fits are more sensitive to deviations in regions where the variance of spectral power is small (typically in regions of lower spectral power).

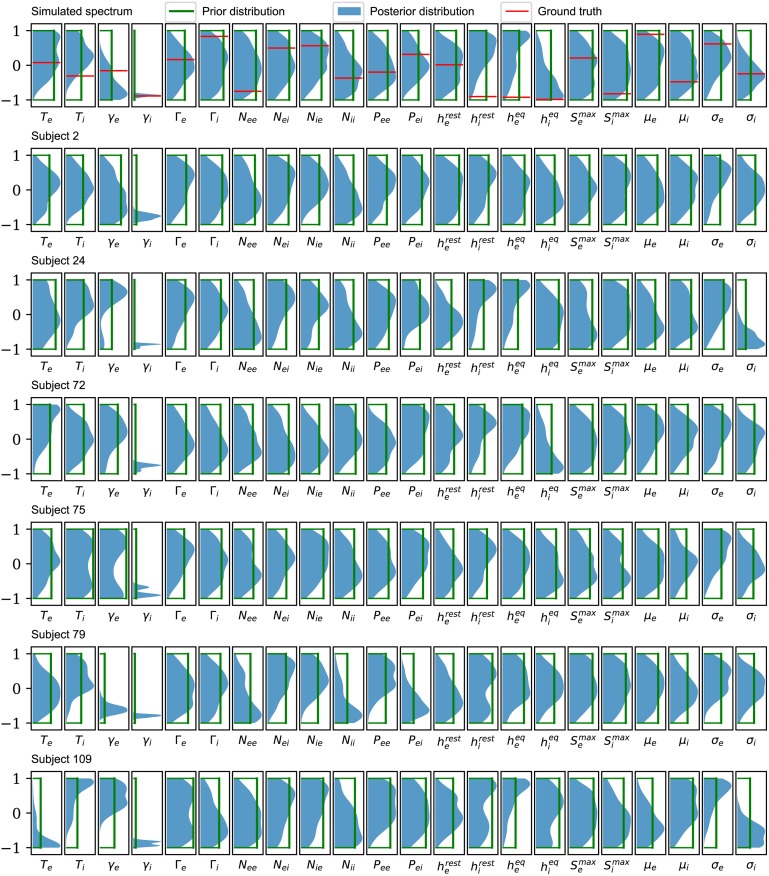

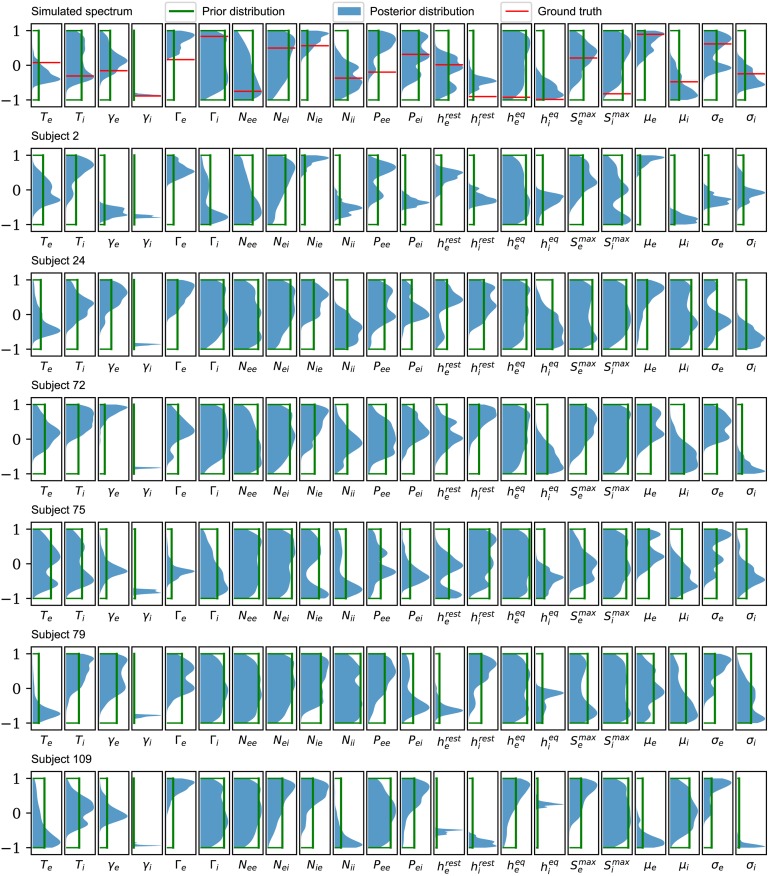

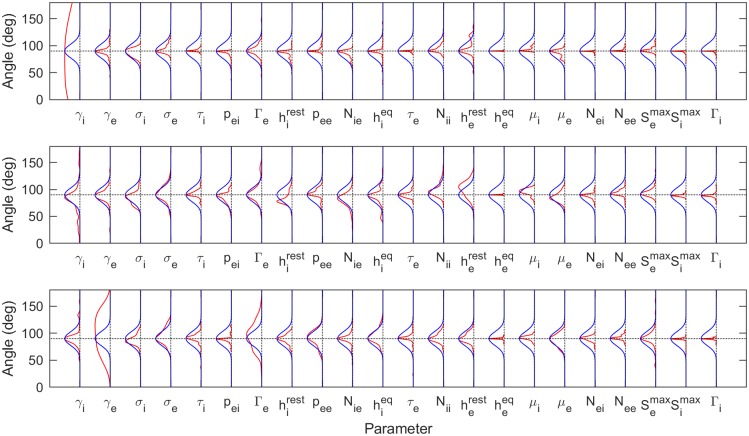

The posterior marginal distributions for each parameter, from a few subjects, are shown in blue in Fig 3 (from PSO sampling) and Fig 4 (from MCMC sampling). These are compared to the uniform prior distributions (green). The top row, which corresponds to analysis of the simulated spectrum, also shows the ground truth value (red). Each parameter is plotted in normalized coordinates, where -1 corresponds to the lower limit of the plausible parameter interval and +1 corresponds to the upper limit (see Table 1).

Fig 3. Posterior distributions based on PSO sampling.

Comparison of the posterior (solid color) and prior marginal (green line) distributions for the selected subjects used in Figs 1 and 2. For the simulated spectrum (first row), the distributions of the parameters are presented against the ground truths for the corresponding parameters (red line). The distributions are based on kernel density estimates from the best 100 of 1000 randomly seeded particle swarm optimizations for each subject. The seeds are uniformly distributed over the allowed parameter ranges. The major result is that, across the full set of 82 subjects, only the parameter γi is significantly constrained. All other parameters have nearly the same uncertainties as the prior.

Fig 4. Posterior distributions based on MCMC sampling.

Comparison of the posterior marginal distributions (solid color) with the prior marginal distributions (green line) for the selected subjects used in Figs 1 and 2. For the simulated spectrum (first row), the distributions of the parameters are presented against the ground truths for the corresponding parameters (red line). Each distribution is based on a kernel density estimate from 1000 samples (sub-sampled from 106MCMC samples). Consistent with the conclusions from PSO sampling, only γi is consistently constrained by the data when viewed across all subjects.

Posterior distributions found using PSO sampling are generally broader than those found using MCMC sampling. This behavior is expected from the differences between the sampling methods: while MCMC sampling can retain correlations between samples even with significant subsampling, the different PSO samples are independent from one another. This demonstrates the superiority of the PSO approach, at least under the sampling conditions employed here. Nevertheless, both methods show that it is the postsynaptic potential rate constant of the inhibitory population, γi, which is consistently constrained by the data across different subjects.

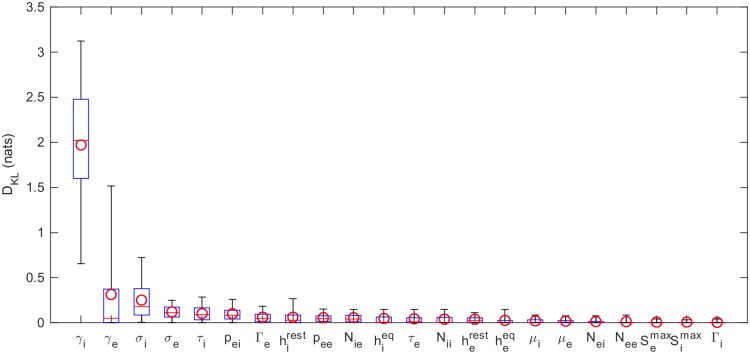

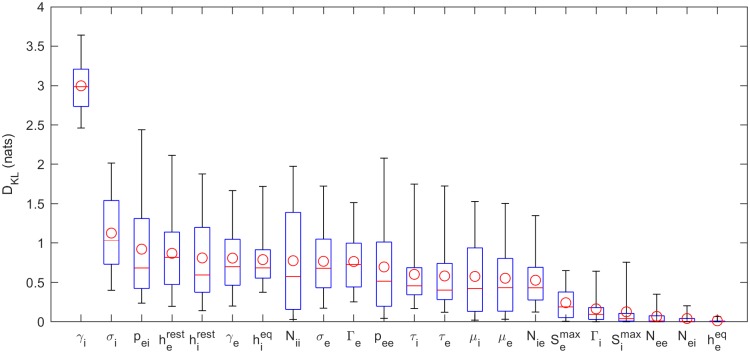

To better quantify how much we have learned about each parameter, the KLDs for each parameter, from all 82 subjects, are shown in Fig 5 (for PSO) and Fig 6 (for MCMC). These confirm that it is γi that is best-constrained by the data. Most other posterior distributions are only slightly narrower than their prior distribution. Furthermore, by analysis of a simulated spectrum (see Table 2), we find that the γi estimate is accurate as well as precise.

Fig 5. KLDs based on PSO samples.

Kullback-Leibler divergences of marginal posterior parameter distributions calculated relative to uniform prior distributions. The posteriors are based on the best 100 of 1000 randomly seeded runs of particle swarm optimization (see Fig 3). The boxes represent the 25% and 75% quartiles; the whiskers represent the 5% and 95% quantiles; the red lines show the median KLDs and the circles the mean KLDs over the full set of 82 subjects.

Fig 6. KLDs based on MCMC samples.

Kullback-Leibler divergences of marginal posterior parameter distributions (see Fig 4). Here kernel density estimates based on 1000 MCMC samples of the posterior parameter distribution are used.

Table 2. Accuracy and precision of parameter estimates in the analysis of the simulated spectrum.

| Parameter | PSO samples | MCMC samples | ||

|---|---|---|---|---|

| Normalized error | SD | Normalized error | SD | |

| 0.0224 | 0.4771 | 0.1198 | 0.3772 | |

| 0.6165 | 0.4785 | 0.2231 | 0.1669 | |

| 0.6402 | 0.5702 | 0.5069 | 0.5779 | |

| 0.2550 | 0.4864 | 0.1516 | 0.2082 | |

| 0.1382 | 0.5405 | 0.0220 | 0.3967 | |

| 0.3847 | 0.5322 | 0.5205 | 0.4957 | |

| μe | 0.4217 | 0.5281 | 0.1260 | 0.2655 |

| μi | 0.2423 | 0.5216 | 0.0613 | 0.3217 |

| σe | 0.2215 | 0.4528 | 0.0972 | 0.3991 |

| σi | 0.0064 | 0.3669 | 0.0459 | 0.2440 |

| τe | 0.1043 | 0.4970 | 0.1640 | 0.2075 |

| τi | 0.3050 | 0.3934 | 0.2444 | 0.4614 |

| γe | 0.1939 | 0.3761 | 0.1747 | 0.3269 |

| γi | 0.0071 | 0.0346 | 0.0156 | 0.0215 |

| Γe | 0.0972 | 0.4558 | 0.2641 | 0.2167 |

| Γi | 0.4104 | 0.5393 | 0.3805 | 0.5452 |

| pee | 0.0708 | 0.4707 | 0.3750 | 0.3335 |

| pei | 0.1199 | 0.4443 | 0.0031 | 0.4528 |

| Nee | 0.3600 | 0.5229 | 0.2575 | 0.5362 |

| Nei | 0.2240 | 0.5255 | 0.1608 | 0.5410 |

| Nie | 0.2087 | 0.4796 | 0.0784 | 0.2057 |

| Nii | 0.1042 | 0.4789 | 0.0108 | 0.3417 |

The accuracy of a parameter estimate is given by its deviation from the known ground truth value. Shown is the mean of this deviation for different sample estimates, normalized to the prior of the parameter. Also shown is the precision, given by the standard deviation of the sample estimates. The values in bold are the top three lowest values for each category. In this single case, the estimate of γi always has the highest precision, for both PSO and MCMC sampling, and one of the highest accuracies.

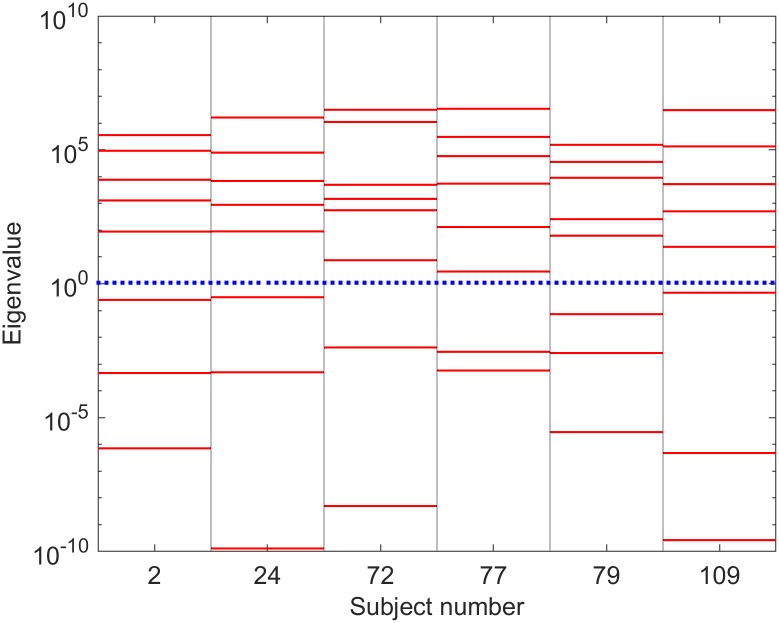

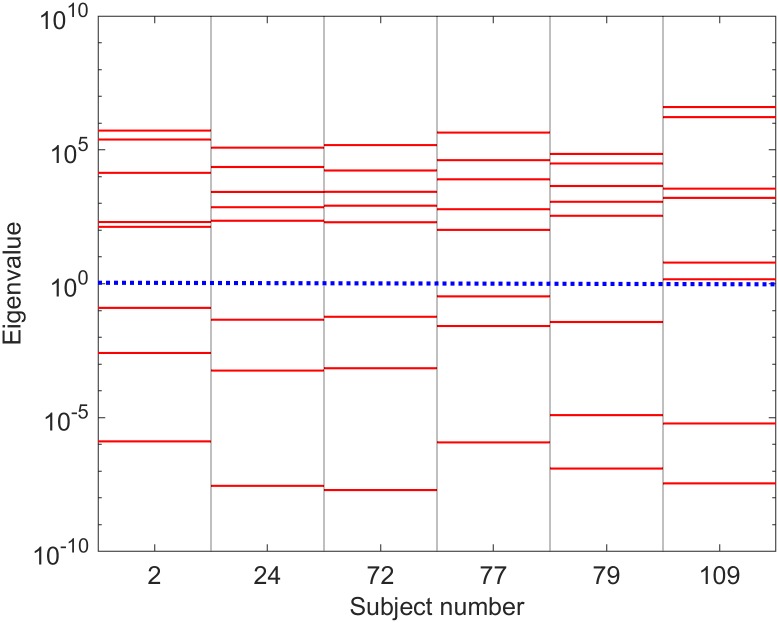

Eigenvalues of the Fisher information matrix (FIM) are shown on log scale for the selected subjects are shown in Fig 7 (for PSO) and Fig 8 (for MCMC), i.e. those computed around LS best fits and ML best fits, respectively. In all cases presented in the figures, the eigenvalues are spread over many decades with approximately uniform spacing over a log scale. This indicates that this neural population model is sloppy [36–41]. Comparison of these eigenspectra across different subjects suggests that there are usually ∼5 identifiable parameter combinations for each subject.

Fig 7. FIM eigenspectra based on LS best fits.

Leading eigenvalues of the FIM for selected subjects. The FIM is numerically calculated using dimensionless increments at the parameters corresponding to a least squares fit to the experimental spectrum. Of the 22 possible eigenvalues, roughly 7 correspond to zero, at least to the numerical accuracy of the eigenvalue estimation routine. Typically 7 of the remaining 15 are too small (relative to the largest eigenvalue) to be reliably calculated using the Matlab™ eig command. The roughly uniform distribution of the eigenvalues on a log scale is a characteristic of a sloppy model. The blue dotted line delineates the separation of identifiable (above the dotted line) from unidentifiable (below the dotted line) regimes [43]. Thus ∼5 parameter combinations are usually identifiable, suggesting that the 22-parameter model can be described using 5 or 6 effective parameters. A comprehensive plot of the FIM eigenspectra for all subjects is included in S2 Fig; the spectra observed above are typical of those seen across the full set of subjects.

Fig 8. FIM eigenspectra based on ML best fits.

This is similar to Fig 7, except here the FIM is calculated around the best fit found from maximum likelihood optimization for each subject’s spectrum.

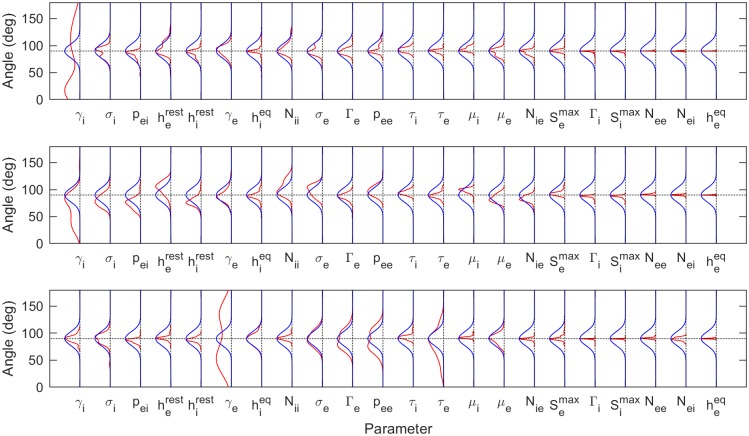

The larger FIM eigenvalues define eigenvector directions corresponding to identifiable parameter combinations. To understand the parameters that contribute the most to each (identifiable) eigenvector we compute the angular distance between each parameter direction and a given eigenvector. The closer the angular distance to 0° or 180°, the greater the parameter contributes to the parameter combination and thus the more identifiable that parameter. Fig 9 (LS fits) and Fig 10 (ML fits) show the distributions, across all 82 subjects, of angular distances between each parameter and the three stiffest parameter combinations (red). For a null comparison, the angular distances to vectors randomly pointed in the 22-dimensional space are also shown (blue). For both LS and ML fits, γi again stands out. It has the greatest contribution to the stiffest parameter direction, once again showing that it is identifiable. Interestingly, the postsynaptic potential rate constant of the excitatory population, γe, dominates the third stiffest parameter combination. This indicates that it may also play an identifiable role in driving system dynamics, though to a lesser extent than γi.

Fig 9. Contributions to the eigenvectors corresponding to 1st, 2nd and 3rd eigenvalues based on LS best fits.

Alignment of the leading eigenvectors relative to each parameter. 0° and 180° represent perfect alignment (maximum contribution) whereas 90° represents orthogonality (no contribution). To compare the 82 subjects, results are presented as angular distributions (red lines). The first row is for the largest eigenvalue, the second row for the second-large eigenvalue, etc. The blue lines show the expected angular distributions for a randomly pointed vector in the 22-dimensional parameter space, illustrating how these are most likely to be orthogonal to any parameter direction. The angles are the inverse cosines of the direction cosines of the vectors. The distributions indicate that the parameters γi and (to a lesser extent) γe may play significant roles in determining the spectral form in their own right. The remaining parameters appear largely in complicated combinations.

Fig 10. Components of eigenvectors corresponding to 1st, 2nd and 3rd eigenvalues based on ML best fits.

As for Fig 9, but using the ML best fits, again showing the significant roles played by the parameters γi and γe.

Discussion

Fitting a neural population model to EEG data is an ill-posed inverse problem, where a wide range of parameter combinations are consistent with the observed spectrum. Our approach to fitting such an unidentifiable model is to generate many samples of parameter estimates, all of which give a good fit to the data, and then characterize the structure of these samples. The steps we used can be summarized as follows:

Optimization with a prior: Using two different optimization methods, particle swarm optimization (PSO) with least squares minimization and Markov chain Monte Carlo (MCMC) with likelihood maximization, we search for parameter combinations which provide a good fit to the data. This required imposing a prior distribution on the parameters to ensure that only solutions within physiologically-plausible ranges were accepted.

Marginal posterior: information gained about parameters individually: To characterize the sampled parameter sets, we started by estimating the marginal posterior distribution of each parameter, using the Kullback-Leibler divergence to separate the information gained from the data from that which was already present in the prior. This showed that only γi, the rate constant associated with inhibitory synaptic activity, had significantly less variability than its prior.

Fisher information matrix: information gained about parameters collectively: The marginal posterior characterizes our knowledge about each parameter individually. It does not capture what the data has taught us about the parameters collectively. Pairwise correlations and pairwise marginal distributions do not help either (See S4 Fig for details). In other words, because of the complexity of the interrelations among parameters, marginal posteriors for individual parameters and even pairs cannot fully describe what we have learned from the data. Clearly the joint posterior distribution has the information we need, but estimating this would require generating a prohibitive number of parameter estimates to adequately sample the 22-dimensional space. We rely instead on the Eigen-analysis of the Fisher Information Matrix (FIM), which examines the second-order behaviour of the model around the best fit solution. The fact that many of the eigenvalues of the FIM are zero or nearly zero indicates that the manifold surrounding the global optimum is essentially flat (zero curvature) in those directions, meaning that certain parameter combinations are impossible to determine from the data. The spacing of the first several eigenvalues is characteristic of the ‘hyper-ribbon’ geometry of sloppy models [37, 38].

Characterization of unidentifiability and sloppiness helps quantify the degree to which a model is over-parameterized. This in turn helps to illuminate how it can be simplified. The existence of correlations between parameter estimates suggests that model complexity can be reduced by grouping together, eliminating, or averaging subsets of parameters. This produces an effective model, with fewer degrees of freedom, without compromising predictive ability.

A number of model reduction techniques have been proposed for dynamical systems, such as balanced truncation [76–78], singular perturbation [79], and the manifold boundary approximation [80, 81]. In physical theory, model reduction techniques such as mean field and renormalization group methods [82] have long been used to quantify the effective parameters in complex physical systems. The concept of entropy, which enumerates the number of (unidentifiable) microstates that are consistent with a single (observable) macrostate, can be thought of as a measure of unidentifiability. Our finding that there are only ∼5 identifiable eigenvalues in the FIM spectrum indicates that the number of effective parameters in our model is only about 5. The challenge is to understand what these effective parameters mean physiologically.

Some insight into their role can be obtained by examining the derivatives of the modelled spectra in the directions of the leading eigenvectors of the Fisher information matrix [83] (See S3 Appendix for details). In broad terms, for most subjects, it appears that the leading eigenvector (effectively the inhibitory decay rate γi) is related to variation in the location of the alpha peak; the second eigenvector is related to the height of the alpha peak compared to the overall background level and (somewhat more loosely) the third eigenvector is associated with the width of the alpha peak. The remaining combinations do not appear to be related to easily identifiable features of the spectrum. Importantly, although each eigenvector could have contributions from all 22 of the original parameters, the leading 3 eigenvectors appear to be influenced by just a few of these (See S5 Fig for details). This indicates that, even though γi is the only parameter that is clearly identifiable, a subset of the other parameters is being constrained as well. This suggests that the essential character of the alpha peak —its position, height and width—is determined by only a few of the original parameters.

Neural population models are coarse-grained approximations to networks of single neurons. When trying to interpret a measurement such as the EEG, our results show that even neural population models are not coarse-grained enough, since most parameters are unidentifiable. The fact that only one of the original parameters, out of 22, is consistently identifiable, a result confirmed by comparisons over 82 subjects and two different fitting routines, would seem to be a bleak result: despite the considerable effort required to fit the model, we appear to still be ignorant of 21 of the 22 parameters. However, when fitting a nonlinear model with many parameters, there is no guarantee that any of them will be identifiable. The fact that one has been found hints that it has a special role.

This has parallels in physical systems where the effective model parameters are the ones that remain identifiable under coarse-graining. For example, it has been shown [39] that in diffusion processes and magnetic phase transitions, most of the microscopic parameters become unidentifiable at macroscopic scales, with only parameters such as the diffusion coefficient and average magnetization emerging unscathed. It has been suggested [84] that there may exist organizing principles that create ‘protectorates’ at mesoscopic scales, corresponding to particular parameters or parameter combinations that are robust to coarse-graining. The suggestion here then is that γi is an effective parameter in neural population models, one that plays a central role in generating and modulating the alpha-rhythm in cortex.

This discovery has potential clinical significance since the majority of agents used to induce a state of surgical anaesthesia are thought to function by altering the time course of postsynaptic inhibition. Being able to determine γi, the postsynaptic inhibitory rate constant, by fitting to EEG data could provide real-time physiological insights into the functional effect of anaesthesia, improving upon standard signal processing methods [85] which are not built on an underlying theory of brain dynamics.

We note that it is likely that the alpha band activity obtained from a single electrode (in our case Oz) represents the superposition of multiple independent, spatially distributed, alpha rhythm generators [86–88]. In future, it may be possible to differentiate between these different sources by jointly fitting the model to signals from multiple electrodes.

We conclude by remarking that there are deep parallels between model identifiability, dynamic compensation [89–91] and evolvability [92] in a dynamical system. If the function of the system is robust, or insensitive, to changes in some of its underlying parameters, it can be impossible to infer those parameters by studying functional observables alone. Thus the study of identifiability and sloppiness is not simply a study of fitting problems but is also an examination of which parameter values are functionally essential and which are not.

Methods

Predicted model spectrum

The model spectrum is calculated from the spatially homogeneous version of the full model equations [11]. We make the additional assumptions that:

In the vicinity of the solutions corresponding to the resting eyes-closed spectra, the system is linearly stable [93, 94].

The excitatory rate constants γee and γei are equal, as are the inhibitory rate constants γii and γie [95].

The measured EEG signal is proportional to the excitatory mean soma membrane potential, he [68, 69].

The linearised system is driven by Gaussian white noise fluctuations on the external excitatory to excitatory signal pee [11].

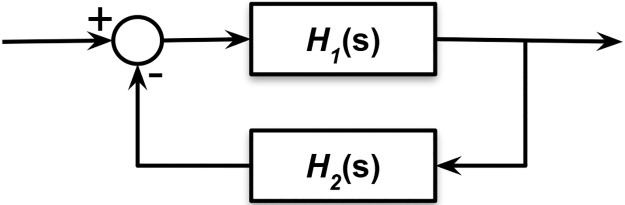

Under these assumptions, it can be shown that the linear system transfer function, T(s), is (to within an overall sign) that of a simple feedback system as shown in Fig 11 involving two third order filters:

| (8) |

| (9) |

| (10) |

Fig 11. The model as a simple feedback system.

The transfer function of the system where both H1(s) and H1(s) are third order filters.

The polynomials k11(s) and k22(s) are linear in s and k33(s) and k55(s) are quadratic in s. The derivation of this result and detailed expressions for the factors appearing in these equations are given in S1 Appendix.

Given that the spectra are assumed to arise from a white noise spectrum filtered by this transfer function, the expected value of the spectral estimate at frequency ω, given a vector of model parameters (θ), has the form:

| (11) |

where the constant α accounts for the unknown driving amplitude and for attenuation due to volume conduction and other (frequency-independent) effects. The value for α is found using a least-squares fit to the measured spectral estimates. The analytic result is:

| (12) |

Likelihood for the predicted model spectrum

For a spectrum of the form described above, with sufficiently high sampling rates and negligible measurement noise, the spectral estimate from the Welch periodogram at each sampled frequency {ωn = 2π fn; n = 1, 2, 3,…,N} is approximately an independent random variable with a known distribution [96]. The exact form is computationally involved and for our immediate purposes we will ignore the effects of window overlap and non-uniform window shape on the resulting distributions. With this simplification the probability distribution function (pdf) for the spectral estimate, Sn, is a gamma distribution:

| (13) |

Here, for non-zero frequencies, the shape parameter K is found from the number of epochs averaged in the periodogram. For zero frequency, replace K with K/2 throughout. The scale parameter is given by

| (14) |

The likelihood function for the vector of spectral estimates, S = [S1 S2 S3 ⋯ SN]T, given the parameter values θ, is then the product of the distributions of the individual spectral estimates:

| (15) |

The constant α is adjusted to give the maximum likelihood fit of the model spectrum to the target experimental spectrum. The analytic result is that

| (16) |

The likelihood based on model parameters alone is then

| (17) |

Fitting schemes

Particle swarm optimization and least squares minimization

The first method we used to find the best fit parameters and their uncertainties is particle swarm optimization (PSO) [97], a standard technique for nonlinear optimization problems. PSO is an optimization algorithm inspired by swarming behavior in nature to process knowledge in the course of searching the best solution in a high-dimensional space . At the individual level a particular particle p in a particular iteration represents a distinct candidate solution whose quality is defined by the cost function. Throughout the iterations the particle moves around in the search-space in the direction and velocity guided by its local best known position as well as the global best known position in such a way that in any iteration p’s velocity is given by:

| (18) |

where ψ is a predefined inertia weight, as proposed by Shi and Eberhart [98], while and are weights given to individual versus social interaction, respectively (here rand(0, cmax) represents a random value between 0 and cmax). The algorithm iteratively updates the global best known position G until the stopping criterion is reached.

PSO was used to estimate the 22-dimensional vector of parameters that minimizes a least squares (LS) cost function C given by the sum of squared residuals between the measured spectrum S and the normalized model spectrum :

| (19) |

Markov chain Monte Carlo and maximum likelihood estimation

In addition to the PSO method, we also used a Markov Chain Monte Carlo (MCMC) approach. For a given measured spectrum, S, the best fit θ was found by maximizing the posterior distribution p(θ|S) given in Eq 20. To simplify the calculation, a local maximum is sought in the vicinity of the MCMC sampled parameter that maximizes the likelihood function (Eq 17) evaluated at the observed spectrum. In practice this starting value is based on the resampled parameter set rather than on the full set and the maximum found using the Matlab® fminsearch command on the negative of the log of the posterior distribution.

Sampling schemes

PSO-based random sampling

We apply an unbiased approach to draw a set of fair samples from a complicated distribution of solutions to a model-parameterization problem. Given a target spectrum to infer the distributions of the estimated parameters from, we carry out the approach by performing the three steps as follows.

Generate samples. We perform this step by using 1000 independent instantiations of the PSO to find 1000 different parameter estimates. In this scheme we use 80 particles for each swarm. Parameter starting points are chosen by random sampling from a uniform distribution over the physiologically-relevant ranges given in Table 1. During the parameter search, each parameter is forced to stay within its physiologically-relevant range by assigning a high cost to particles with values outside these ranges. This is similar to employing a Bayesian prior that is flat over the acceptable parameter range, and zero elsewhere. This step results in a preliminary set of 1000 parameter estimates .

Select the best samples. Not all samples drawn in the first step represent acceptable fits. We only accept the 10 percent of estimates which have the lowest cost functions, since, by inspecting fits to multiple subjects (See S3 Fig) only these consistently correspond to good fits.

Estimate the distributions. Having selected samples of , we construct kernel density estimates of the marginal posterior distributions of the parameters.

Markov chain Monte Carlo sampling

In the MCMC approach we employ an explicitly Bayesian framework, treating the parameters as random variables. Given a known prior distribution, p0(θ), we seek the posterior distribution, conditioned on the observed spectrum S given by

| (20) |

In the absence of an explicit closed form expression for this function, averaged quantities can be estimated in a Monte-Carlo fashion given a sufficiently large set of parameter values drawn randomly from this distribution. To obtain these values we use the Metropolis-Hastings Markov chain Monte Carlo (MCMC) algorithm [99] based on the log likelihood ratio that follows from the likelihood function described before.

| (21) |

The sampled sets obtained for each target spectrum consist of 106 MCMC samples with the step size (of normalised parameter values) adjusted during a burn-in phase of length 40000 to yield an acceptance ratio in the vicinity of 0.25. When appropriate, the long sampled sequence is resampled, typically to yield sequences of length 1000 upon which to base averages. For consistency with the particle swarm approach, the prior distribution is assumed to be uniform over its support.

Kullback-Leibler divergence

A convenient measure of the information gained about individual parameters as a result of the measurement of the spectrum is the Kullback-Leibler divergence (KLD) [100, 101]. Here we use the KLD to measure the change in the marginal posterior distribution of each parameter relative to its marginal prior:

| (22) |

When KLDs are used to measure how posteriors differ from priors based on MCMC samples, the integral is numerically evaluated using marginal distributions approximated by kernel density estimates using 1000 parameter values resampled from the full MCMC sampled parameter set for the given spectrum. The prior distributions are uniform over their support. For consistency, the posterior kernel estimates are truncated to have the same support. The kernel density estimate for a given parameter is sampled at 100 points over its support and the integral estimated numerically. For the PSO samples, due to the limited number of independent samples, the integral is estimated using a 10 bin histogram approximation.

Fisher information matrix

To assess the sloppiness of the model fit, we examine the eigenvalues of the Fisher information matrix (FIM), the definition of which for the pdf P(S|θ) is given by:

| (23) |

In general the integration here could present considerable difficulty, however, for the distribution given by Eq (13), it can be shown that a simplification is possible, resulting in an expression involving only the derivatives of the model spectral estimates, evaluated at the desired parameter values:

| (24) |

(For a derivation of this result, see S2 Appendix.)

The derivatives, with respect to normalised parameters at the LS or ML estimated values, are evaluated numerically using a 5-point finite difference approximation, and the resulting products summed over the sampled frequencies. The Matlab® eig command is used to find the eigenvalues and eigenvectors of the resulting matrices. Numerical experiments with surrogate matrices suggest that the eigenvalues calculated using eig are reliable over some 10 orders of magnitude. For our modelled spectra we expect the FIM to be positive semidefinite and of less than full rank, so negative eigenvalues and eigenvalues smaller than 10−10 times the largest eigenvalue are taken as zero.

Supporting information

The derivation of Eq (8) and detailed expressions for the factors appearing in Eqs (9) and (10).

(PDF)

The derivation of Eq (24) from Eq (23).

(PDF)

Computation and analysis of the directional derivatives of the modelled spectra along the directions of the leading eigenvectors.

(PDF)

Comparisons of time series of the EEG data and representative samples of modelled time series using parameters for the 6 subjects shown in Figs 1 and 2.

(PDF)

The eigenspectra of the Fisher information matrices plotted for all 82 subjects.

(PDF)

The distribution showing how different values of each decile of the relative costs are spread across the 82 subjects.

(PDF)

Absolute values of correlation coefficients and selected pairwise distributions based on MCMC parameter distributions for the 6 selected subjects.

(PDF)

Pseudocolor plots of the ratios of the magnitudes of the components to the L1 norm of the vector for the leading eigenvectors of the FIM (ML parameters) for the full set of 82 subjects.

(PDF)

Data Availability

All EEG data files are available from the PhysioNet database (https://www.physionet.org/pn4/eegmmidb/). The implementation of the methods and all datasets are publicly available at https://github.com/cds-swinburne/Hartoyo-et-al-2019-DATA-n-CODE.

Funding Statement

This work was supported in part by a Swinburne Postgraduate Research Award to AH and in part by an Australian Research Council (https://www.arc.gov.au/) grant FT140101104 to DGH. Computations were performed on the gSTAR/ozSTAR national facilities at Swinburne University of Technology funded by Swinburne and the Australian Government’s Education Investment Fund. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Kropotov JD. Chapter 2—Alpha Rhythms In: Kropotov JD, editor. Quantitative EEG, Event-Related Potentials and Neurotherapy. San Diego: Academic Press; 2009. p. 29–58. Available from: http://www.sciencedirect.com/science/article/pii/B9780123745125000025. [Google Scholar]

- 2. Aminoff MJ. Chapter 3—Electroencephalography: General Principles and Clinical Applications In: Aminoff MJ, editor. Aminoff’s Electrodiagnosis in Clinical Neurology (Sixth Edition) sixth edition ed. London: W.B. Saunders; 2012. p. 37–84. Available from: http://www.sciencedirect.com/science/article/pii/B9781455703081000030. [Google Scholar]

- 3. Berger H. Über das elektrenkephalogramm des menschen. Archiv für psychiatrie und nervenkrankheiten. 1929;87(1):527–570. 10.1007/BF01797193 [DOI] [Google Scholar]

- 4. Berger H. On the electroencephalogram of man. Third Report 1931; Twelfth Report 1937. Translated by Pierre Gloor. Electroencephalogr Clin Neurophysiol. 1931;28(suppl):113–167. [Google Scholar]

- 5. Lozano-Soldevilla D. On the Physiological Modulation and Potential Mechanisms Underlying Parieto-Occipital Alpha Oscillations. Front Comput Neurosci. 2018;12:23 10.3389/fncom.2018.00023 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6. Andersen P, Andersson SA. Physiological basis of the alpha rhythm. New York: Appleton-Century-Crofts; 1968. [Google Scholar]

- 7. Hughes SW, Crunelli V. Thalamic mechanisms of EEG alpha rhythms and their pathological implications. Neuroscientist. 2005;11(4):357–372. 10.1177/1073858405277450 [DOI] [PubMed] [Google Scholar]

- 8. Vijayan S, Ching S, Purdon PL, Brown EN, Kopell NJ. Thalamocortical mechanisms for the anteriorization of alpha rhythms during propofol-induced unconsciousness. J Neurosci. 2013;33(27):11070–11075. 10.1523/JNEUROSCI.5670-12.2013 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9. Jones SR, Pritchett DL, Sikora MA, Stufflebeam SM, Hamalainen M, Moore CI. Quantitative analysis and biophysically realistic neural modeling of the MEG mu rhythm: rhythmogenesis and modulation of sensory-evoked responses. J Neurophysiol. 2009;102(6):3554–3572. 10.1152/jn.00535.2009 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10. Nunez PL, Srinivasan R. Electric Fields of the Brain: The Neurophysics of EEG. 2nd ed Oxford: Oxford University Press; 2005. [Google Scholar]

- 11. Liley DT, Cadusch PJ, Dafilis MP. A spatially continuous mean field theory of electrocortical activity. Network: Computation in Neural Systems. 2002;13(1):67–113. 10.1080/net.13.1.67.113 [DOI] [PubMed] [Google Scholar]

- 12. Robinson PA, Rennie CJ, Rowe DL. Dynamics of large-scale brain activity in normal arousal states and epileptic seizures. Phys Rev E Stat Nonlin Soft Matter Phys. 2002;65(4 Pt 1):041924 10.1103/PhysRevE.65.041924 [DOI] [PubMed] [Google Scholar]

- 13. Liley DTJ, Foster BL, Bojak I. Co-operative populations of neurons: mean field models of mesoscopic brain activity In: Novère NL, editor. Computational Systems Neurobiology. Dordrecht, NL: Springer Science & Business Media; 2012. p. 315–362. [Google Scholar]

- 14. Coombes S. Large-scale neural dynamics: simple and complex. Neuroimage. 2010;52(3):731–739. 10.1016/j.neuroimage.2010.01.045 [DOI] [PubMed] [Google Scholar]

- 15. Deco G, Jirsa VK, Robinson PA, Breakspear M, Friston K. The dynamic brain: from spiking neurons to neural masses and cortical fields. PLoS Comput Biol. 2008;4(8):e1000092 10.1371/journal.pcbi.1000092 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16. Bellman R, Åström KJ. On structural identifiability. Mathematical biosciences. 1970;7(3-4):329–339. 10.1016/0025-5564(70)90132-X [DOI] [Google Scholar]

- 17. Glover K, Willems J. Parametrizations of linear dynamical systems: Canonical forms and identifiability. IEEE Transactions on Automatic Control. 1974;19(6):640–646. 10.1109/TAC.1974.1100711 [DOI] [Google Scholar]

- 18. Grewal M, Glover K. Identifiability of linear and nonlinear dynamical systems. IEEE Transactions on automatic control. 1976;21(6):833–837. 10.1109/TAC.1976.1101375 [DOI] [Google Scholar]

- 19. Reid J. Structural identifiability in linear time-invariant systems. IEEE Transactions on Automatic Control. 1977;22(2):242–246. 10.1109/TAC.1977.1101474 [DOI] [Google Scholar]

- 20. Cobelli C, Distefano JJ 3rd. Parameter and structural identifiability concepts and ambiguities: a critical review and analysis. American Journal of Physiology-Regulatory, Integrative and Comparative Physiology. 1980;239(1):R7–R24. 10.1152/ajpregu.1980.239.1.R7 [DOI] [PubMed] [Google Scholar]

- 21. Travis C, Haddock G. On structural identification. Mathematical Biosciences. 1981;56(3-4):157–173. 10.1016/0025-5564(81)90052-3 [DOI] [Google Scholar]

- 22. Nguyen V, Wood E. Review and unification of linear identifiability concepts. SIAM review. 1982;24(1):34–51. 10.1137/1024002 [DOI] [Google Scholar]

- 23. Ljung L, Glad T. On global identifiability for arbitrary model parametrizations. Automatica. 1994;30(2):265–276. 10.1016/0005-1098(94)90029-9 [DOI] [Google Scholar]

- 24. Němcová J. Structural identifiability of polynomial and rational systems. Mathematical biosciences. 2010;223(2):83–96. 10.1016/j.mbs.2009.11.002 [DOI] [PubMed] [Google Scholar]

- 25. Miao H, Xia X, Perelson AS, Wu H. On identifiability of nonlinear ODE models and applications in viral dynamics. SIAM review. 2011;53(1):3–39. 10.1137/090757009 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26. Stanhope S, Rubin J, Swigon D. Identifiability of linear and linear-in-parameters dynamical systems from a single trajectory. SIAM Journal on Applied Dynamical Systems. 2014;13(4):1792–1815. 10.1137/130937913 [DOI] [Google Scholar]

- 27. Kreutz C. An easy and efficient approach for testing identifiability. Bioinformatics. 2018;34(11):1913–1921. 10.1093/bioinformatics/bty035 [DOI] [PubMed] [Google Scholar]

- 28. Zak DE, Gonye GE, Schwaber JS, Doyle FJ. Importance of input perturbations and stochastic gene expression in the reverse engineering of genetic regulatory networks: insights from an identifiability analysis of an in silico network. Genome research. 2003;13(11):2396–2405. 10.1101/gr.1198103 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29. Raue A, Kreutz C, Maiwald T, Bachmann J, Schilling M, Klingmüller U, et al. Structural and practical identifiability analysis of partially observed dynamical models by exploiting the profile likelihood. Bioinformatics. 2009;25(15):1923–1929. 10.1093/bioinformatics/btp358 [DOI] [PubMed] [Google Scholar]

- 30. Lillacci G, Khammash M. Parameter estimation and model selection in computational biology. PLoS computational biology. 2010;6(3):e1000696 10.1371/journal.pcbi.1000696 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31. Chis OT, Banga JR, Balsa-Canto E. Structural identifiability of systems biology models: a critical comparison of methods. PloS one. 2011;6(11):e27755 10.1371/journal.pone.0027755 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32. Kreutz C, Raue A, Timmer J. Likelihood based observability analysis and confidence intervals for predictions of dynamic models. BMC Systems Biology. 2012;6(1):120 10.1186/1752-0509-6-120 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33. Raue A, Karlsson J, Saccomani MP, Jirstrand M, Timmer J. Comparison of approaches for parameter identifiability analysis of biological systems. Bioinformatics. 2014;30(10):1440–1448. 10.1093/bioinformatics/btu006 [DOI] [PubMed] [Google Scholar]

- 34. Villaverde AF, Barreiro A, Papachristodoulou A. Structural identifiability of dynamic systems biology models. PLoS computational biology. 2016;12(10):e1005153 10.1371/journal.pcbi.1005153 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35. Gábor A, Villaverde AF, Banga JR. Parameter identifiability analysis and visualization in large-scale kinetic models of biosystems. BMC systems biology. 2017;11(1):54 10.1186/s12918-017-0428-y [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36. Waterfall JJ, Casey FP, Gutenkunst RN, Brown KS, Myers CR, Brouwer PW, et al. Sloppy-model universality class and the Vandermonde matrix. Physical review letters. 2006;97(15):150601 10.1103/PhysRevLett.97.150601 [DOI] [PubMed] [Google Scholar]

- 37. Transtrum MK, Machta BB, Sethna JP. Why are nonlinear fits to data so challenging? Physical review letters. 2010;104(6):060201 10.1103/PhysRevLett.104.060201 [DOI] [PubMed] [Google Scholar]

- 38. Transtrum MK, Machta BB, Sethna JP. Geometry of nonlinear least squares with applications to sloppy models and optimization. Physical Review E. 2011;83(3):036701 10.1103/PhysRevE.83.036701 [DOI] [PubMed] [Google Scholar]

- 39. Machta BB, Chachra R, Transtrum MK, Sethna JP. Parameter space compression underlies emergent theories and predictive models. Science. 2013;342(6158):604–607. 10.1126/science.1238723 [DOI] [PubMed] [Google Scholar]

- 40. Transtrum MK, Machta BB, Brown KS, Daniels BC, Myers CR, Sethna JP. Perspective: Sloppiness and emergent theories in physics, biology, and beyond. The Journal of chemical physics. 2015;143(1):07B201_1 10.1063/1.4923066 [DOI] [PubMed] [Google Scholar]

- 41. Raman DV, Anderson J, Papachristodoulou A. Delineating parameter unidentifiabilities in complex models. Physical Review E. 2017;95(3):032314 10.1103/PhysRevE.95.032314 [DOI] [PubMed] [Google Scholar]

- 42. Chis OT, Villaverde AF, Banga JR, Balsa-Canto E. On the relationship between sloppiness and identifiability. Mathematical biosciences. 2016;282:147–161. 10.1016/j.mbs.2016.10.009 [DOI] [PubMed] [Google Scholar]

- 43. White A, Tolman M, Thames HD, Withers HR, Mason KA, Transtrum MK. The limitations of model-based experimental design and parameter estimation in sloppy systems. PLoS computational biology. 2016;12(12):e1005227 10.1371/journal.pcbi.1005227 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44. Brown KS, Sethna JP. Statistical mechanical approaches to models with many poorly known parameters. Physical review E. 2003;68(2):021904 10.1103/PhysRevE.68.021904 [DOI] [PubMed] [Google Scholar]

- 45. Brown KS, Hill CC, Calero GA, Myers CR, Lee KH, Sethna JP, et al. The statistical mechanics of complex signaling networks: nerve growth factor signaling. Physical biology. 2004;1(3):184 10.1088/1478-3967/1/3/006 [DOI] [PubMed] [Google Scholar]

- 46. Gutenkunst RN, Waterfall JJ, Casey FP, Brown KS, Myers CR, Sethna JP. Universally sloppy parameter sensitivities in systems biology models. PLoS computational biology. 2007;3(10):e189 10.1371/journal.pcbi.0030189 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47. Transtrum MK, Qiu P. Bridging mechanistic and phenomenological models of complex biological systems. PLoS computational biology. 2016;12(5):e1004915 10.1371/journal.pcbi.1004915 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48. Zobeley J, Lebiedz D, Kammerer J, Ishmurzin A, Kummer U. A new time-dependent complexity reduction method for biochemical systems In: Transactions on Computational Systems Biology I. Springer; 2005. p. 90–110. [Google Scholar]

- 49. Amarasingham A, Geman S, Harrison MT. Ambiguity and nonidentifiability in the statistical analysis of neural codes. Proceedings of the National Academy of Sciences. 2015;112(20):6455–6460. 10.1073/pnas.1506400112 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50. Hashemi M, Hutt A, Buhry L, Sleigh J. Optimal Model Parameter Estimation from EEG Power Spectrum Features Observed during General Anesthesia. Neuroinformatics. 2018;16(2):231–251. 10.1007/s12021-018-9369-x [DOI] [PubMed] [Google Scholar]

- 51. Arand C, Scheller E, Seeber B, Timmer J, Klöppel S, Schelter B. Assessing parameter identifiability for dynamic causal modeling of fMRI data. Frontiers in neuroscience. 2015;9:43 10.3389/fnins.2015.00043 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52. O’Leary T, Sutton AC, Marder E. Computational models in the age of large datasets. Current opinion in neurobiology. 2015;32:87–94. 10.1016/j.conb.2015.01.006 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53. De Schutter E. Why are computational neuroscience and systems biology so separate? PLoS computational biology. 2008;4(5):e1000078 10.1371/journal.pcbi.1000078 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54. Goldman MS, Golowasch J, Marder E, Abbott L. Global structure, robustness, and modulation of neuronal models. Journal of Neuroscience. 2001;21(14):5229–5238. 10.1523/JNEUROSCI.21-14-05229.2001 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55. Prinz AA, Bucher D, Marder E. Similar network activity from disparate circuit parameters. Nature neuroscience. 2004;7(12):1345 10.1038/nn1352 [DOI] [PubMed] [Google Scholar]

- 56. Huys QJM, Ahrens MB, Paninski L. Efficient Estimation of Detailed Single-Neuron Models. Journal of Neurophysiology. 2006;96(2):872–890. 10.1152/jn.00079.2006 [DOI] [PubMed] [Google Scholar]

- 57. Taylor AL, Goaillard JM, Marder E. How multiple conductances determine electrophysiological properties in a multicompartment model. Journal of Neuroscience. 2009;29(17):5573–5586. 10.1523/JNEUROSCI.4438-08.2009 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58. Doloc-Mihu A, Calabrese RL. Identifying crucial parameter correlations maintaining bursting activity. PLoS computational biology. 2014;10(6):e1003678 10.1371/journal.pcbi.1003678 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 59. Fisher D, Olasagasti I, Tank DW, Aksay ER, Goldman MS. A modeling framework for deriving the structural and functional architecture of a short-term memory microcircuit. Neuron. 2013;79(5):987–1000. 10.1016/j.neuron.2013.06.041 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 60. Rowe DL, Robinson PA, Rennie CJ. Estimation of neurophysiological parameters from the waking EEG using a biophysical model of brain dynamics. Journal of theoretical biology. 2004;231(3):413–433. 10.1016/j.jtbi.2004.07.004 [DOI] [PubMed] [Google Scholar]

- 61. Bojak I, Liley D. Modeling the effects of anesthesia on the electroencephalogram. Physical Review E. 2005;71(4):041902 10.1103/PhysRevE.71.041902 [DOI] [PubMed] [Google Scholar]

- 62.Maybank P, Bojak I, Everitt RG. Fast approximate Bayesian inference for stable differential equation models. arXiv:170600689 [statCO]. 2017.

- 63. Liley DT, Cadusch PJ, Gray M, Nathan PJ. Drug-induced modification of the system properties associated with spontaneous human electroencephalographic activity. Physical Review E. 2003;68(5):051906 10.1103/PhysRevE.68.051906 [DOI] [PubMed] [Google Scholar]

- 64. Liley DT, Foster BL, Bojak I. Co-operative populations of neurons: mean field models of mesoscopic brain activity. In: Computational Systems Neurobiology. Springer; 2012. p. 317–364. 10.1007/978-94-007-3858-4_11 [DOI] [Google Scholar]

- 65. Liley DT, Cadusch PJ, Wright JJ. A continuum theory of electro-cortical activity. Neurocomputing. 1999;26:795–800. 10.1016/S0925-2312(98)00149-0 [DOI] [Google Scholar]

- 66. Bojak I, Liley DT. Self-organized 40 Hz synchronization in a physiological theory of EEG. Neurocomputing. 2007;70(10-12):2085–2090. 10.1016/j.neucom.2006.10.087 [DOI] [Google Scholar]

- 67. van Veen L, Liley DTJ. Chaos via Shilnikov’s Saddle-Node Bifurcation in a Theory of the Electroencephalogram. Phys Rev Lett. 2006;97:208101 10.1103/PhysRevLett.97.208101 [DOI] [PubMed] [Google Scholar]

- 68.Freeman WJ. Mass action in the nervous system; 1975.

- 69. Nunez PL, Srinivasan R, et al. Electric fields of the brain: the neurophysics of EEG. Oxford University Press, USA; 2006. [Google Scholar]

- 70. Schalk G, McFarland DJ, Hinterberger T, Birbaumer N, Wolpaw JR. BCI2000: a general-purpose brain-computer interface (BCI) system. IEEE Transactions on biomedical engineering. 2004;51(6):1034–1043. 10.1109/TBME.2004.827072 [DOI] [PubMed] [Google Scholar]

- 71. Goldberger AL, Amaral LA, Glass L, Hausdorff JM, Ivanov PC, Mark RG, et al. PhysioBank, PhysioToolkit, and PhysioNet: components of a new research resource for complex physiologic signals. Circulation. 2000;101(23):e215–e220. 10.1161/01.CIR.101.23.e215 [DOI] [PubMed] [Google Scholar]

- 72. Welch P. The use of fast Fourier transform for the estimation of power spectra: a method based on time averaging over short, modified periodograms. IEEE Transactions on audio and electroacoustics. 1967;15(2):70–73. 10.1109/TAU.1967.1161901 [DOI] [Google Scholar]

- 73. Rey M, Bastuji H, Garcia-Larrea L, Guillemant P, Mauguière F, Magnin M. Human Thalamic and Cortical Activities Assessed by Dimension of Activation and Spectral Edge Frequency During Sleep Wake Cycles. Sleep. 2007;30(7):907–912. 10.1093/sleep/30.7.907 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 74. Bruhn J M D, Bouillon TW M D, Radulescu L M D, Hoeft A M D Ph D, Bertaccini E M D, Shafer SL M D. Correlation of Approximate Entropy, Bispectral Index, and Spectral Edge Frequency 95 (SEF95) with Clinical Signs of “Anesthetic Depth” during Coadministration of Propofol and Remifentanil. Anesthesiology. 2003;98(3):621–627. 10.1097/00000542-200303000-00008 [DOI] [PubMed] [Google Scholar]

- 75. Schwender D, Daunderer M, Mulzer S, Klasing S, Finsterer U, Peter K. Spectral edge frequency of the electroencephalogram to monitor “depth” of anaesthesia with isoflurane or propofol. British Journal of Anaesthesia. 1996;77(2):179–184. 10.1093/bja/77.2.179 [DOI] [PubMed] [Google Scholar]

- 76. Moore B. Principal component analysis in linear systems: Controllability, observability, and model reduction. IEEE transactions on automatic control. 1981;26(1):17–32. 10.1109/TAC.1981.1102568 [DOI] [Google Scholar]

- 77.Antoulas AC, Sorensen DC. Approximation of large-scale dynamical systems: An overview; 2001.

- 78. Liebermeister W, Baur U, Klipp E. Biochemical network models simplified by balanced truncation. The FEBS journal. 2005;272(16):4034–4043. 10.1111/j.1742-4658.2005.04780.x [DOI] [PubMed] [Google Scholar]

- 79. Surovtsova I, Simus N, Hübner K, Sahle S, Kummer U. Simplification of biochemical models: a general approach based on the analysis of the impact of individual species and reactions on the systems dynamics. BMC systems biology. 2012;6(1):14 10.1186/1752-0509-6-14 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 80. Transtrum MK, Qiu P. Model reduction by manifold boundaries. Physical review letters. 2014;113(9):098701 10.1103/PhysRevLett.113.098701 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 81. Mattingly HH, Transtrum MK, Abbott MC, Machta BB. Maximizing the information learned from finite data selects a simple model. Proceedings of the National Academy of Sciences. 2018;115(8):1760–1765. 10.1073/pnas.1715306115 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 82. Goldenfeld N. Lectures on phase transitions and the renormalization group. CRC Press; 2018. [Google Scholar]

- 83.We would like to express our thanks to an anonymous reviewer for suggesting the directional derivatives of modelled spectra.

- 84. Laughlin RB, Pines D, Schmalian J, Stojković BP, Wolynes P. The middle way. Proceedings of the National Academy of Sciences. 2000;97(1):32–37. 10.1073/pnas.97.1.32 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 85. Schomer DL, Da Silva FL. Niedermeyer’s electroencephalography: basic principles, clinical applications, and related fields. Lippincott Williams & Wilkins; 2012. [Google Scholar]

- 86.Sokoliuk R, Mayhew S, Aquino K, Wilson R, Brookes M, Francis S, et al. Two spatially distinct posterior alpha sources fulfil different functional roles in attention (bioRxiv: 384065). 2018. [DOI] [PMC free article] [PubMed]

- 87. Barzegaran E, Vildavski VY, Knyazeva MG. Fine structure of posterior alpha rhythm in human EEG: Frequency components, their cortical sources, and temporal behavior. Scientific reports. 2017;7(1):8249 10.1038/s41598-017-08421-z [DOI] [PMC free article] [PubMed] [Google Scholar]

- 88. Keitel A, Gross J. Individual human brain areas can be identified from their characteristic spectral activation fingerprints. PLoS biology. 2016;14(6):e1002498 10.1371/journal.pbio.1002498 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 89. Karin O, Swisa A, Glaser B, Dor Y, Alon U. Dynamical compensation in physiological circuits. Molecular systems biology. 2016;12(11):886 10.15252/msb.20167216 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 90. Sontag ED. Dynamic compensation, parameter identifiability, and equivariances. PLoS computational biology. 2017;13(4):e1005447 10.1371/journal.pcbi.1005447 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 91. Villaverde AF, Banga JR. Dynamical compensation and structural identifiability of biological models: Analysis, implications, and reconciliation. PLoS computational biology. 2017;13(11):e1005878 10.1371/journal.pcbi.1005878 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 92. Daniels BC, Chen YJ, Sethna JP, Gutenkunst RN, Myers CR. Sloppiness, robustness, and evolvability in systems biology. Current opinion in biotechnology. 2008;19(4):389–395. 10.1016/j.copbio.2008.06.008 [DOI] [PubMed] [Google Scholar]

- 93. Theiler J, Rapp PE. Re-examination of the evidence for low-dimensional, nonlinear structure in the human electroencephalogram. Electroencephalography and clinical Neurophysiology. 1996;98(3):213–222. 10.1016/0013-4694(95)00240-5 [DOI] [PubMed] [Google Scholar]

- 94. Stam C, Pijn J, Suffczynski P, Da Silva FL. Dynamics of the human alpha rhythm: evidence for non-linearity? Clinical Neurophysiology. 1999;110(10):1801–1813. 10.1016/S1388-2457(99)00099-1 [DOI] [PubMed] [Google Scholar]

- 95. Dafilis MP, Liley DT, Cadusch PJ. Robust chaos in a model of the electroencephalogram: Implications for brain dynamics. Chaos: An Interdisciplinary Journal of Nonlinear Science. 2001;11(3):474–478. 10.1063/1.1394193 [DOI] [PubMed] [Google Scholar]

- 96. Johnson PE, Long DG. The probability density of spectral estimates based on modified periodogram averages. IEEE transactions on signal processing. 1999;47(5):1255–1261. 10.1109/78.757213 [DOI] [Google Scholar]

- 97.Eberhart R, Kennedy J. A new optimizer using particle swarm theory. In: Micro Machine and Human Science, 1995. MHS’95., Proceedings of the Sixth International Symposium on. IEEE; 1995. p. 39–43.

- 98.Shi Y, Eberhart R. A modified particle swarm optimizer. In: Evolutionary Computation Proceedings, 1998. IEEE World Congress on Computational Intelligence., The 1998 IEEE International Conference on. IEEE; 1998. p. 69–73.

- 99. Hastings WK. Monte Carlo Sampling Methods Using Markov Chains and Their Applications. Biometrika. 1970;57(1):97–109. 10.1093/biomet/57.1.97 [DOI] [Google Scholar]

- 100. Kullback S, Leibler RA. On information and sufficiency. The annals of mathematical statistics. 1951;22(1):79–86. 10.1214/aoms/1177729694 [DOI] [Google Scholar]

- 101. Kullback S. Information theory and statistics. Courier Corporation; 1997. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

The derivation of Eq (8) and detailed expressions for the factors appearing in Eqs (9) and (10).

(PDF)

The derivation of Eq (24) from Eq (23).

(PDF)

Computation and analysis of the directional derivatives of the modelled spectra along the directions of the leading eigenvectors.

(PDF)

Comparisons of time series of the EEG data and representative samples of modelled time series using parameters for the 6 subjects shown in Figs 1 and 2.

(PDF)

The eigenspectra of the Fisher information matrices plotted for all 82 subjects.

(PDF)

The distribution showing how different values of each decile of the relative costs are spread across the 82 subjects.

(PDF)

Absolute values of correlation coefficients and selected pairwise distributions based on MCMC parameter distributions for the 6 selected subjects.

(PDF)

Pseudocolor plots of the ratios of the magnitudes of the components to the L1 norm of the vector for the leading eigenvectors of the FIM (ML parameters) for the full set of 82 subjects.

(PDF)

Data Availability Statement

All EEG data files are available from the PhysioNet database (https://www.physionet.org/pn4/eegmmidb/). The implementation of the methods and all datasets are publicly available at https://github.com/cds-swinburne/Hartoyo-et-al-2019-DATA-n-CODE.