Summary

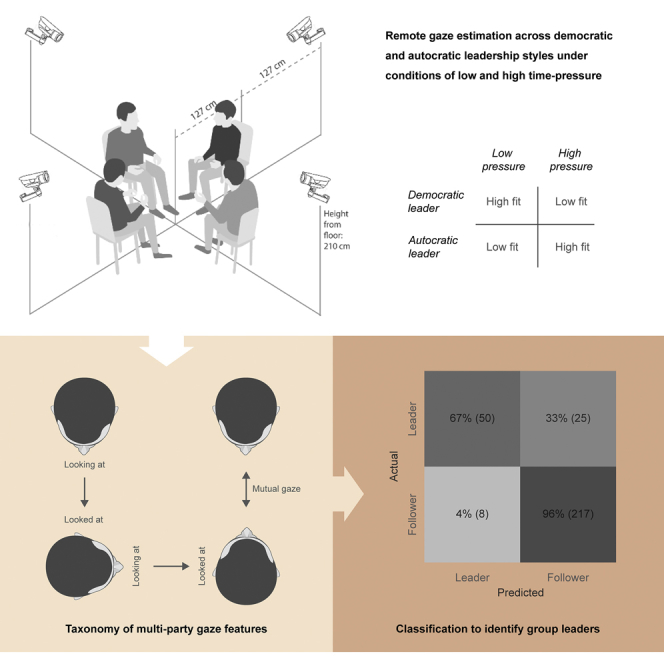

Can social gaze behavior reveal the leader during real-world group interactions? To answer this question, we developed a novel tripartite approach combining (1) computer vision methods for remote gaze estimation, (2) a detailed taxonomy to encode the implicit semantics of multi-party gaze features, and (3) machine learning methods to establish dependencies between leadership and visual behaviors. We found that social gaze behavior distinctively identified group leaders. Crucially, the relationship between leadership and gaze behavior generalized across democratic and autocratic leadership styles under conditions of low and high time-pressure, suggesting that gaze can serve as a general marker of leadership. These findings provide the first direct evidence that group visual patterns can reveal leadership across different social behaviors and validate a promising new method for monitoring natural group interactions.

Subject Areas: Social Interaction, Neuroscience, Behavioral Neuroscience

Graphical Abstract

Highlights

- Leadership shapes gaze dynamics during real-world human group interactions

- Social gaze behavior distinctively identifies group leaders

- Identification generalizes across leadership styles and situational conditions

- Gaze can serve as a general marker of leadership


Introduction

It is commonly believed that leadership is reflected in gaze behavior. Stereotypical thinking links leadership to prolonged gazing toward leaders (Hall et al., 2005) and longer mutual gazing in response to interactions initiated by leaders (Carney et al., 2005). However, evidence for an actual relationship between leadership and social gaze behaviors is limited. To date, investigations on the influence of leadership on gaze behavior have focused on computer-based paradigms that do not provide any opportunity for social interaction (Capozzi and Ristic, 2018, Koski et al., 2015, Risko et al., 2016). The aim of the present study was to develop a novel approach to investigate how leadership shapes gaze dynamics during real-world human group interactions.

Authentic social situations are complex and highly dynamic (Foulsham et al., 2010). What is more, unlike computer-based paradigms, they involve the potential for social interaction and reciprocity. When looking at a representation of a social stimulus (e.g., images of people), individuals need not worry about what their own gaze might be communicating to the stimulus. When looking at real people, in contrast, the eyes not only collect information (encoding function) but also communicate information to others (signaling function; Risko et al., 2016). This dual function of gaze yields an interdependency among multi-agent gaze patterns, which traditional computer-based paradigms, be they static or dynamic scene-viewing tasks, arguably fail to capture (Laidlaw et al., 2011).

Despite a growing understanding of the necessity of studying social cognitive processes in interactive (Schilbach et al., 2013) and complex settings (Frank and Richardson, 2010), little is known about the influence of leadership on gaze-based interactions in unconstrained group interactions. Older studies report that, in dyadic interactions, attribution of power increases as the proportion of looking while speaking increases (Dovidio and Ellyson, 1982, Ellyson et al., 1981, Exline et al., 1975). However, the evidence is inconclusive as to whether gazing decoupled from speaking time identifies leaders (Hall et al., 2005). Moreover, it remains unclear whether the same dynamics constraining dyads also constrain group interactions.

A major reason for the lack of studies investigating group gaze-based interactions is the difficulty of simultaneously tracking transient variations in multi-party gaze features to capture the implicit semantics of social gaze behaviors. In an attempt to overcome these limitations, we developed a novel tripartite approach combining (1) computer vision methods for remote gaze-tracking, (2) a detailed taxonomy to encode the implicit semantics of multi-party gaze features, and (3) advanced machine learning methods to establish dependencies between leadership and visual behaviors during unconstrained group interactions involving four people simultaneously. The basic idea for establishing a relationship between social gaze behavior and leadership was to conceptualize multi-party gaze features as patterns and to treat the analysis as a pattern classification problem: can a classifier applied to the visual behavior pattern of real people interacting in small groups reveal the leader? This is the first question we addressed in the study described here. The second question is whether the relationship between gaze behavior and leadership generalizes across leadership styles and situational conditions; in other words, whether gaze behavior can serve as a general marker of leadership.

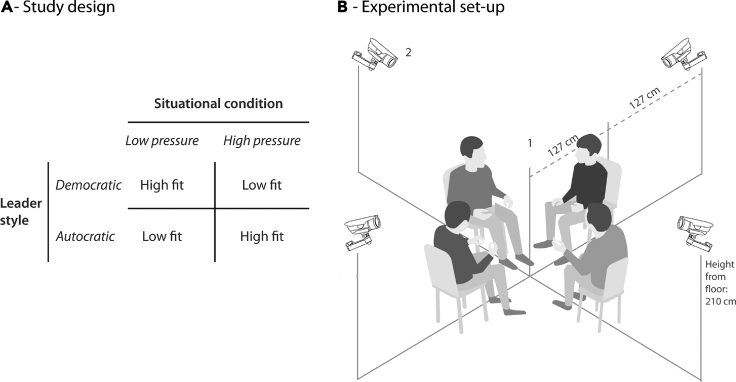

Drawing on ideas from social psychology (Chemers, 2014, Foels et al., 2000, Livi et al., 2008, Northouse, 2016), we analyzed gaze-based interaction dynamics in four leadership settings resulting from the orthogonal manipulation of leadership style (i.e., Democratic versus Autocratic) and situational condition (i.e., Low time-pressure versus High time-pressure). Democratic leadership is expected to be more effective under situational conditions of low time-pressure, whereas autocratic leadership is expected to be more effective under situational conditions of high time-pressure (Fiedler, 2006, Pierro et al., 2003). The orthogonal manipulation of leadership styles and situational conditions resulted in two high-fit conditions (Democratic-Low time-pressure, Autocratic-High time-pressure) and two low-fit conditions (Democratic-High time-pressure, Autocratic-Low time-pressure) (Figure 1A; see also Supplemental Information and Figure S1 for group composition and manipulation checks). Each group, composed of one designated leader and three followers, was assigned a survival task to solve within a limited time (see Figure 1B for the experimental setting). First, using a method for automatically estimating the Visual Focus of Attention (VFOA; Ba and Odobez, 2006, Beyan et al., 2016, Gatica-Perez, 2009, Stiefelhagen et al., 1999), we determined “who looked at whom.” Then, we established a detailed taxonomy of multi-party gaze behaviors and, combining the VFOA of individual group-members, reconstructed the gaze-based interaction dynamics. Next, we probed the actual association between leadership and gaze patterns by asking whether a pattern classification algorithm could discriminate leaders and followers among the group-members. After finding evidence for leadership classification, we finally tested whether the classifier was able to generalize across leadership styles, situational conditions, and time.

Figure 1.

Study Design and Experimental Setting

(A) Study design and manipulation of leadership style and situational condition.

(B) Schematic reproduction of the experimental setting (drawing not to scale). Participants were seated on four equidistant chairs (1), while four individual video-cameras recorded the upper part of their bodies (2).

Results

Extraction of the Visual Focus of Attention

First, using a method for automatically estimating the VFOA (Beyan et al., 2016), we determined “who looked at whom.” To do so, we recorded the visual behavior of 16 groups, each composed of four previously unacquainted individuals, for a maximum of 30 min (mean = 23 min, range = 12–30). Individuals sat on four equidistant chairs (Figure 1B, 1). The visual behavior of each individual was simultaneously captured by four multi-view streaming cameras (1,280 × 1,024 pixel resolution, 20 frames per second) (Figure 1B, 2). In addition, a standard camera (440 × 1,080 pixel resolution, 25 frames per second) was used to capture the whole scene. An automated extraction technique was used to estimate the frame-by-frame VFOA of each participant (Beyan et al., 2016). The SVM classifiers used to model the individual VFOAs achieved an average detection rate of 72% (see “Visual Focus of Attention” in Transparent Methods).

Reconstruction of Group Interaction Dynamics

Having determined the VFOA of each participant, we proceeded to reconstruct the gaze-based interaction dynamics by combining the VFOA of individual group-members. To this end, we derived a detailed taxonomy of multi-party gaze on the basis of the three broad social dimensions classically used in the study of social gaze behavior (Capozzi and Ristic, 2018, Emery, 2000, Jording et al., 2018, Kleinke, 1986, Pfeiffer et al., 2013), here labeled participation, prestige, and mutual engagement (see Pierro et al., 2003). Participation refers to the amount of time that each individual looks at others and indicates the individual's involvement in interactive dynamics (Ellyson and Dovidio, 1985). Prestige refers to the amount of time that each individual is looked at by others and indicates the extent to which one is referred to during an interaction (Feinman et al., 1992). Mutual engagement refers to the amount of time that each individual looks at someone while being looked back at and indicates the individual's engagement in cooperative behaviors (Foddy, 1978). Within these dimensions, we extracted eight multi-party gaze features to comprehensively capture gaze behavior during group interactions (Table 1; see also Data S1 for gaze behavior data).

Table 1.

Gaze Behavior Taxonomy: Description, Operationalization, and Social Dimensions of Visual Features

| Multi-Party Gaze Feature | Operationalization | Indexed on | Dimension |
|---|---|---|---|
| Looking at | Video-frames in which each individual looked at another member while not looked back | Total video-frames | Participation |
| Looked at | Video-frames in which each individual was looked at while not looking back | Total video-frames | Prestige |
| Looked at_multiple | Video-frames in which each individual was looked at by two members simultaneously (see Note), while not looking back at any of them | Total video-frames | Prestige |
| Looked at_Ratio | Ratio between “Looked at” and “Looking at” | NA | Prestige |
| Mutual gaze | Video-frames in which each individual was looking at someone while simultaneously being looked back | Total video-frames | Mutual engagement |
| Mutual gaze_multiple | Video-frames in which each individual was looked at by two members simultaneously (see Note), while looking back at one of them | Total video-frames | Mutual engagement |
| Mutual gaze initiation | Frequency of mutual engagement episodes initiated | Total mutual engagement episodes in each video | Mutual engagement |
| Mutual gaze response time | Video-frames between the initiation of a mutual engagement episode and the reaction of the looked at person | Total video-frames | Mutual engagement |

Note: For both Looked at_multiple and Mutual gaze_multiple, the number of video-frames in which an individual was looked at by three members simultaneously was always zero; the three-member versions of these features were therefore omitted from subsequent analyses.
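The frame-based operationalizations in Table 1 can be illustrated with a minimal Python sketch. It assumes a hypothetical input format in which each video-frame is a list giving, for every group-member, the index of the member they look at (or `None` when they look at nobody). The function name `gaze_features`, the data layout, and the choice to count the two-looker case inside “Looked at” as well are illustrative assumptions, not the authors' pipeline. Only four of the eight features are computed.

```python
from typing import Dict, List, Optional

# Frame[i] is the index of the member that member i looks at, or None.
Frame = List[Optional[int]]


def gaze_features(frames: List[Frame]) -> List[Dict[str, float]]:
    """Return, per member, the proportion of frames showing each gaze feature."""
    if not frames:
        raise ValueError("at least one video-frame is required")
    n_members = len(frames[0])
    counts = [
        {"looking_at": 0, "looked_at": 0, "looked_at_multiple": 0, "mutual_gaze": 0}
        for _ in range(n_members)
    ]
    for frame in frames:
        for i, target in enumerate(frame):
            # Members whose visual focus of attention is member i in this frame.
            lookers = [j for j, t in enumerate(frame) if t == i and j != i]
            if target is not None and target in lookers:
                # i looks at someone who is looking back at i.
                counts[i]["mutual_gaze"] += 1
                continue
            if target is not None:
                # i looks at someone who does not look back.
                counts[i]["looking_at"] += 1
            if lookers:
                # i is looked at without looking back at any of the lookers.
                counts[i]["looked_at"] += 1
                if len(lookers) == 2:
                    counts[i]["looked_at_multiple"] += 1
    total = len(frames)
    return [{name: c / total for name, c in member.items()} for member in counts]


# Two made-up frames for a four-person group.
example = [[1, 0, 0, 0], [None, 0, 0, 1]]
for member, features in enumerate(gaze_features(example)):
    print(member, features)
```

Dividing by the total number of frames mirrors the “Indexed on” column of Table 1 for these four features.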

Leader Classification by Group Visual Behavior

To establish a dependency between visual behavior and leadership, we next trained a linear Support Vector Machine (SVM) to discriminate leaders versus followers on the extracted multi-party gaze features. Classification performance was computed as the resulting average of a leave-one-subject-out cross-validation scheme (Koul et al., 2018).
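The cross-validation scheme can be sketched in a few lines of Python. Each subject's observations are held out in turn, a classifier is fitted on the remaining subjects, and the held-out accuracies are averaged. This is a sketch under stated assumptions: the nearest-centroid rule is only a dependency-free stand-in for the linear SVM used in the study, and the function names, the `(subject, features, label)` layout, and the toy data are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

# (subject id, gaze feature vector, label: 1 = leader, 0 = follower)
Observation = Tuple[str, Sequence[float], int]


def centroid(rows: List[Sequence[float]]) -> List[float]:
    """Feature-wise mean of a non-empty list of feature vectors."""
    return [sum(col) / len(rows) for col in zip(*rows)]


def predict(centroids: Dict[int, List[float]], x: Sequence[float]) -> int:
    """Assign x to the class whose centroid is nearest (squared Euclidean distance)."""
    return min(
        centroids,
        key=lambda label: sum((a - b) ** 2 for a, b in zip(x, centroids[label])),
    )


def leave_one_subject_out(data: List[Observation]) -> float:
    """Hold out each subject in turn and return the mean held-out accuracy."""
    subjects = sorted({subject for subject, _, _ in data})
    accuracies = []
    for held_out in subjects:
        train = [(x, y) for s, x, y in data if s != held_out]
        test = [(x, y) for s, x, y in data if s == held_out]
        # Stand-in for fitting the linear SVM on the training subjects.
        centroids = {
            label: centroid([x for x, y in train if y == label])
            for label in {y for _, y in train}
        }
        hits = sum(predict(centroids, x) == y for x, y in test)
        accuracies.append(hits / len(test))
    return sum(accuracies) / len(accuracies)


# Made-up observations with two features (looking at, looked at).
toy = [
    ("s1", [0.30, 0.70], 1), ("s1", [0.35, 0.75], 1),
    ("s2", [0.60, 0.40], 0), ("s3", [0.55, 0.45], 0),
    ("s4", [0.40, 0.70], 1), ("s5", [0.50, 0.40], 0),
]
print(leave_one_subject_out(toy))
```

Splitting by subject rather than by observation keeps all data from one person on the same side of the train/test boundary, so the estimate is not inflated by person-specific regularities.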

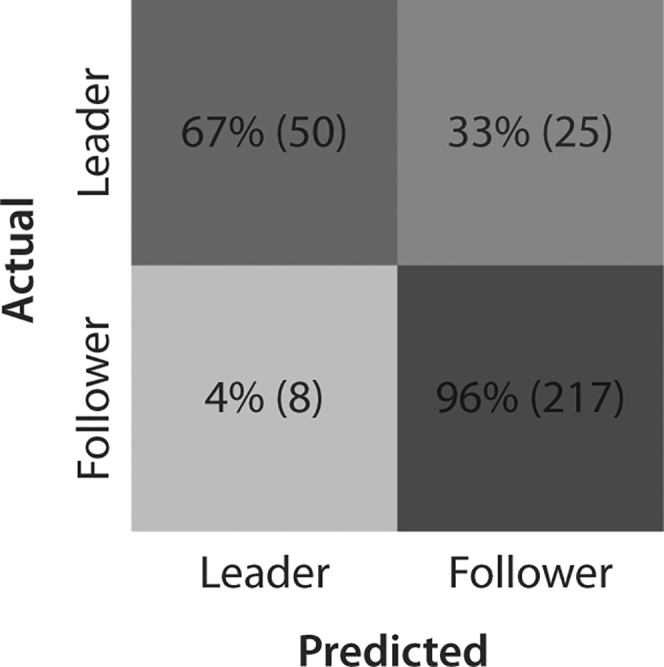

With a cross-validated accuracy of 89%, classification performance was well above the 0.50 chance level (95% confidence interval [CI] = 0.85, 0.92; kappa = 0.68; sensitivity = 0.86; specificity = 0.90; F1 = 0.75; p < 0.001). Figure 2 shows the corresponding confusion matrix.

Figure 2.

Confusion Matrix for the Leaders versus Followers Classification (Full Dataset, N = 300)

Darker shading denotes higher percentages. The actual number of observations is shown in parentheses.
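For readers less familiar with the reported performance measures, the following Python sketch shows how accuracy, sensitivity, specificity, F1, and Cohen's kappa follow from the four cells of a binary confusion matrix. Treating leaders as the positive class is an assumption, the function name is hypothetical, and the counts in the usage example are invented for illustration; they are not the values shown in Figure 2.

```python
from typing import Dict


def classification_metrics(tp: int, fn: int, fp: int, tn: int) -> Dict[str, float]:
    """Summary measures for a binary confusion matrix (leaders = positive class)."""
    n = tp + fn + fp + tn
    accuracy = (tp + tn) / n
    sensitivity = tp / (tp + fn)  # proportion of leaders correctly identified
    specificity = tn / (tn + fp)  # proportion of followers correctly identified
    f1 = 2 * tp / (2 * tp + fp + fn)
    # Agreement expected by chance, given the row and column totals.
    expected = ((tp + fn) * (tp + fp) + (tn + fp) * (tn + fn)) / n ** 2
    kappa = (accuracy - expected) / (1 - expected)
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "f1": f1,
        "kappa": kappa,
    }


# Invented counts: 50 leader and 150 follower observations.
for name, value in classification_metrics(tp=40, fn=10, fp=20, tn=130).items():
    print(f"{name}: {value:.3f}")
```

Kappa is useful alongside accuracy here because leaders and followers are not equally frequent (one leader per three followers), so raw agreement alone can overstate performance.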

To investigate which features were more effective for the classification task, we next computed F-scores (see “Leader classification analysis” in Transparent Methods). F-score provides a measure of how well a single feature at a time can discriminate between different classes. The higher the F-score, the greater the ability of a feature to discriminate between leaders and followers. Table 2 provides an overall view of the discriminative power of each visual feature.

Table 2.

F-Scores and Group Means for Individual Features for Discrimination between Leaders and Followers (Full Dataset)

| Feature | F-Score | Leaders Mean (±SD) | Followers Mean (±SD) |
|---|---|---|---|
| Looking at | 1.800 | 0.36 ± 0.09 | 0.57 ± 0.13 |
| Looked at_Ratio | 1.700 | 2.43 ± 1.07 | 0.85 ± 0.53 |
| Looked at | 1.300 | 0.72 ± 0.18 | 0.43 ± 0.17 |
| Looked at_multiple | 1.300 | 0.28 ± 0.13 | 0.10 ± 0.08 |
| Mutual gaze | 0.780 | 0.41 ± 0.14 | 0.24 ± 0.12 |
| Mutual gaze_multiple | 0.450 | 0.26 ± 0.14 | 0.15 ± 0.10 |
| Mutual gaze response time | 0.350 | 0.13 ± 0.06 | 0.19 ± 0.08 |
| Mutual gaze initiation | 0.085 | 0.27 ± 0.08 | 0.24 ± 0.07 |

Features are ranked based on F-scores, higher values indicating higher contribution to the classification. The unit of measurement for the means is the proportion of frames in which the visual behavior occurred (see Table 1).
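The idea of scoring one feature at a time can be made concrete with a short Python sketch. The exact definition used in the study is given in the Transparent Methods, which are not reproduced here. The code below therefore assumes one common two-class, Fisher-type definition: the squared distances of the two class means from the overall mean, divided by the sum of the within-class sample variances. The function name and input values are hypothetical, and this assumed formula is not guaranteed to reproduce the values in Table 2.

```python
from statistics import mean, variance
from typing import Sequence


def f_score(leaders: Sequence[float], followers: Sequence[float]) -> float:
    """Assumed Fisher-type F-score of a single feature for two classes."""
    overall = mean(list(leaders) + list(followers))
    # Between-class separation: how far each class mean lies from the overall mean.
    between = (mean(leaders) - overall) ** 2 + (mean(followers) - overall) ** 2
    # Within-class spread: sample variances (n - 1 in the denominator).
    within = variance(leaders) + variance(followers)
    return between / within


# Invented values for one feature; well-separated classes give a higher score.
print(f_score([0.2, 0.4], [0.6, 0.8]))
print(f_score([0.2, 0.6], [0.4, 0.8]))
```

Because each feature is scored in isolation, a ranking of this kind does not capture information that only emerges when features are combined, which is what the multivariate classifier exploits.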

Overall, the F-scores and group means in Table 2 suggest that leaders looked less at others and, conversely, were looked at more than followers. Leaders were also involved in, and initiated, more episodes of mutual engagement than followers. In addition, other group-members took less time to respond to leader-initiated than to follower-initiated episodes of mutual engagement.

Generalization across Leadership Styles, Situational Conditions, and Time

To provide direct evidence that the relationship between leadership and visual behavior generalizes across leadership styles and situational conditions, we next applied Multivariate Cross-Classification (MVCC) analysis to our data (Kaplan et al., 2015). In MVCC, a classifier is trained on one set of data and then tested with another set. If the two datasets share the same patterns, then learning should transfer from the training to the testing set (Kaplan et al., 2015, Kriegeskorte, 2011).
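The train-on-one-set, test-on-another logic of MVCC can be sketched as follows. To stay dependency-free, the sketch replaces the linear SVM with a deliberately simple stand-in: a midpoint threshold on a single feature. All names (`fit_midpoint_rule`, `cross_classify`) and the two toy datasets are hypothetical and serve only to show the direction of training and testing.

```python
from typing import Callable, List, Sequence, Tuple

Sample = Tuple[Sequence[float], int]  # (gaze features, label: 1 = leader, 0 = follower)
Model = Callable[[Sequence[float]], int]


def fit_midpoint_rule(train: List[Sample]) -> Model:
    """Stand-in classifier: threshold the first feature midway between class means."""
    leaders = [x[0] for x, y in train if y == 1]
    followers = [x[0] for x, y in train if y == 0]
    mean_leaders = sum(leaders) / len(leaders)
    mean_followers = sum(followers) / len(followers)
    cut = (mean_leaders + mean_followers) / 2
    leader_is_low = mean_leaders < mean_followers
    # Predict "leader" when the sample falls on the leaders' side of the cut.
    return lambda x: int((x[0] < cut) == leader_is_low)


def accuracy(model: Model, test: List[Sample]) -> float:
    """Proportion of test samples that the model labels correctly."""
    return sum(model(x) == y for x, y in test) / len(test)


def cross_classify(
    set_a: List[Sample], set_b: List[Sample], fit: Callable[[List[Sample]], Model]
) -> Tuple[float, float]:
    """Return (train A / test B accuracy, train B / test A accuracy)."""
    return accuracy(fit(set_a), set_b), accuracy(fit(set_b), set_a)


# Made-up "Looking at" proportions for two conditions.
democratic = [([0.35], 1), ([0.55], 0), ([0.60], 0), ([0.58], 0)]
autocratic = [([0.30], 1), ([0.50], 0), ([0.62], 0), ([0.57], 0)]
print(cross_classify(democratic, autocratic, fit_midpoint_rule))
```

Because the model never sees the test condition during fitting, above-chance accuracy in both directions indicates that the pattern separating leaders from followers is shared across the two conditions.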

Following this logic, we first applied MVCC analysis to test generalization across leadership styles. We trained a linear SVM to discriminate leaders based on gaze patterns recorded during group interactions with a designated democratic leader and then tested it on group interactions with a designated autocratic leader. With an accuracy of 88%, cross-classification performance was well above the 0.50 chance level (95% CI = 0.82, 0.93; kappa = 0.66; sensitivity = 0.81; specificity = 0.90; F1 = 0.73; p < 0.001). Train-autocratic and test-democratic led to a similar cross-classification accuracy of 90% (95% CI = 0.84, 0.95; kappa = 0.72; sensitivity = 0.89; specificity = 0.91; F1 = 0.78; p < 0.001).

With a similar logic, we applied MVCC to test generalization across situational conditions. We trained a linear SVM on gaze patterns recorded under high-fit situational conditions (i.e., democratic leaders working in a low time-pressure condition and autocratic leaders working in a high time-pressure condition), and then tested it on group interactions under low-fit situational conditions, and vice versa. Cross-classification performance was once again well above the 0.50 chance level, reaching 94% and 85% for train-high fit and test-low fit (95% CI = 0.89, 0.97; kappa = 0.83; sensitivity = 0.92; specificity = 0.94; F1 = 0.87; p < 0.001) and train-low fit and test-high fit (95% CI = 0.78, 0.91; kappa = 0.54; sensitivity = 0.82; specificity = 0.86; F1 = 0.63; p < 0.001), respectively. Collectively, these data show that multi-party visual behavior supports identification of group leaders across leadership styles (i.e., democratic, autocratic) and situational fit conditions (i.e., high fit, low fit).

Finally, we applied MVCC to test the temporal stability of leadership-related gaze dynamics, that is, whether similar gaze patterns identify leaders over time. To do so, we trained a linear SVM to discriminate leaders based on gaze patterns recorded during the first part of the group task (first half of the video-segments) and then tested it on gaze patterns from the second part of the group task (second half of the video-segments). With an accuracy of 91%, cross-classification performance was well above the 0.50 chance level (95% CI = 0.86, 0.95; kappa = 0.76; sensitivity = 0.90; specificity = 0.92; F1 = 0.81; p < 0.001). Training on the second part and testing on the first part led to a similar cross-classification accuracy of 89% (95% CI = 0.83, 0.94; kappa = 0.68; sensitivity = 0.92; specificity = 0.89; F1 = 0.74; p < 0.001). These results indicate that leadership-related gaze patterns generalized over time.

Discussion

The study of visual behavior as a nonverbal index of leadership has received attention both within evolutionary perspectives seeking out the ancestral foundations of the human propensity to organize into social structures (van Vugt, 2014) and within social neurocognitive perspectives aiming to describe the neural and cognitive processes that enable such structures (Koski et al., 2015). The joint efforts of these disciplines have so far mainly focused on the conditions that predict who will emerge as leader in a particular situation and on the nonverbal cues that signal or predict leadership effectiveness, a computational problem often referred to as “leader index” (Grabo et al., 2017). Albeit important, this approach leaves unaddressed a related but distinct “leader marker” problem: Can the semantics of group visual behavior reveal the leader among group-members?

To address this problem, in the present study, we developed a novel approach combining computer vision methods, a detailed taxonomy of social gaze behaviors, and machine learning methods for pattern classification. We found that social gaze behavior distinctively identified group leaders. Furthermore, leadership identification generalized across different leadership styles and situational conditions. Intriguingly, the features that contributed to classification spanned all three dimensions of social visual behavior: participation, prestige, and mutual engagement. The association of “prestige” with leadership (leaders being looked at more than followers) is consistent with previous findings from computer-based studies. For example, studies investigating gaze allocation in video clips found that people perceived as leaders were fixated more often and for a longer total time compared with people perceived as non-leaders (Foulsham et al., 2010, Gerpott et al., 2018). Could this be because leaders tend to speak more than non-leaders? To address this possibility, we performed an additional MVCC analysis in which we trained a linear SVM to discriminate leaders based on gaze patterns recorded during the video-segments in which the leader spoke the most and then tested it on the video-segments in which a follower spoke the most. Cross-classification results confirmed that speaking time was not the factor driving leader identification (see Supplemental Information).

A novel finding of our study is that leaders looked less at others when compared with followers. We propose that this distinctive visual behavior of leaders may reflect the signaling function of gaze in authentic social situations (Dovidio and Ellyson, 1982, Kalma et al., 1993). That is, thinking their gaze was being monitored by followers, leaders may have implemented a sort of “gaze-based impression management” (Mattan et al., 2017). Similarly, one could hypothesize that followers' recurrent looks toward leaders and promptness to respond to mutual engagement episodes initiated by leaders betrayed a communicative concern, i.e., a wish to communicate their interest in leaders' opinions. These hypotheses could be tested by manipulating participants' beliefs about whether or not their own gaze is viewed by others. To the extent that the visual behavior of group-members reflects gaze-based impression management, one would expect the reported patterns to disappear when people believe that they are not seen by others.

To our knowledge, this is the first study that attempts to provide a full characterization of the relationship between leadership and social gaze behavior during natural group interactions. The novel method utilized in the current study demonstrates that gaze-based group behaviors distinctively identified leaders during natural group interactions. Leaders were looked at more, looked less at others, and elicited more mutual gaze. This pattern was observed over time regardless of leadership style and situational condition, suggesting that gaze can serve as a general marker of leadership. Together with previous findings on body movements (Badino et al., 2014, Chang et al., 2017, D'Ausilio et al., 2012) and paralinguistic behaviors (Gatica-Perez, 2009, Hall et al., 2005, Schmid Mast, 2002), these results demonstrate the significance of non-verbal cues for leadership identification. We expect that future empirical and modeling studies will investigate whether and how different (and possibly correlated) non-verbal features contribute to leader classification. In addition, we anticipate that these findings will inspire new research questions and real-world applications spanning a variety of domains, from business management (Beyan et al., 2018, Beyan et al., 2016) to surveillance and politics (Bazzani et al., 2012).

Limitations of the Study

In the present study, designated leaders were assigned to groups. It will be important for future studies to investigate whether and to what extent the current findings generalize to emergent leadership (e.g., Jiang et al., 2015). In contrast to designated leaders, emergent leaders gain status and respect through engagement with the group and its task. We would expect that, under these conditions, a temporal generalization method using cross-classification over multiple time windows (King and Dehaene, 2014) may identify different gaze-based interaction dynamics depending on the stage of the interaction. The same approach may also reveal how leadership is distributed among group-members across interaction stages.

Methods

All methods can be found in the accompanying Transparent Methods supplemental file.

Acknowledgments

This research was supported by a grant from the European Union's Horizon 2020 Research and Innovation action under grant agreement no. 824160 (EnTimeMent) to C. Becchio and by a grant from the Social Sciences and Humanities Research Council of Canada (SSHRC) to F.C. The funder(s) had no role in this work. We are grateful to Matteo Bustreo, Sebastiano Vascon, Luca Pascolini, and Davide Quarona for support in data acquisition.

Author Contributions

Study design, F.C., A.P., and C. Becchio, with the contribution of S.L. and V.M. Assessment of individual dispositions, F.C., with the contribution of A.P. and S.L. Data acquisition, F.C. Visual Focus of Attention, C. Beyan and V.M. Classification analyses, F.C. and A.K., with the contribution of C. Beyan. Manipulation checks, F.C., with the contribution of A.P. and S.L. Data interpretation, all authors. Manuscript preparation, F.C. and C. Becchio, with the contribution of J.R. and A.P.B.; all authors revised and approved the final version of the manuscript.

Declaration of Interests

The authors report no competing interests.

Published: June 28, 2019

Footnotes

Supplemental Information can be found online at https://doi.org/10.1016/j.isci.2019.05.035.


References

- Ba S.O., Odobez J.-M. A study on Visual Focus of Attention modeling using head pose in a meeting room. In: Renals S., Bengio S., Fiscus J.G., editors. Machine Learning for Multimodal Interaction. Springer; 2006. pp. 75–87. [Google Scholar]; Ba, S.O., Odobez, J.-M.., 2006. A study on Visual Focus of Attention modeling using head pose in a meeting room, in: Renals, S., Bengio, S., Fiscus, J.G. (Eds.), Machine Learning for Multimodal Interaction. Springer, pp. 75-87.

- Badino L., D’Ausilio A., Glowinski D., Camurri A., Fadiga L. Sensorimotor communication in professional quartets. Neuropsychologia. 2014;55:98–104. doi: 10.1016/j.neuropsychologia.2013.11.012. [DOI] [PubMed] [Google Scholar]; Badino, L., D’Ausilio, A., Glowinski, D., Camurri, A., Fadiga, L.., 2014. Sensorimotor communication in professional quartets. Neuropsychologia 55, 98-104. [DOI] [PubMed]

- Bazzani L., Cristani M., Paggetti G., Tosato D., Menegaz G., Murino V. Analyzing groups: a social signaling perspective. In: Shan C., Porikli F., Xiang T., Gong S., editors. Video Analytics for Business Intelligence. Studies in Computational Intelligence. Springer; 2012. pp. 271–305. [Google Scholar]; Bazzani, L., Cristani, M., Paggetti, G., Tosato, D., Menegaz, G., Murino, V.., 2012. Analyzing groups: a social signaling perspective, in: Shan, C., Porikli, F., Xiang, T., Gong, S.. (Eds.), Video Analytics for Business Intelligence. Studies in Computational Intelligence. Springer, pp. 271-305.

- Beyan C., Capozzi F., Becchio C., Murino V. Prediction of the leadership style of an emergent leader using audio and visual nonverbal features. IEEE Trans. Multimed. 2018;20:441–456. [Google Scholar]; Beyan, C., Capozzi, F., Becchio, C., Murino, V.., 2018. Prediction of the leadership style of an emergent leader using audio and visual nonverbal features. IEEE Trans. Multimed. 20, 441-456.

- Beyan C., Carissimi N., Capozzi F., Vascon S., Bustreo M., Pierro A., Becchio C., Murino V. Proceedings of the 18th ACM International Conference on Multimodal Interaction. ACM; 2016. Detecting emergent leader in a meeting environment using nonverbal visual features only; pp. 317–324. [Google Scholar]; Beyan, C., Carissimi, N., Capozzi, F., Vascon, S., Bustreo, M., Pierro, A., Becchio, C., Murino, V.., 2016. Detecting emergent leader in a meeting environment using nonverbal visual features only, in: Proceedings of the 18th ACM International Conference on Multimodal Interaction. ACM, Tokyo, pp. 317-324.

- Capozzi F., Ristic J. How attention gates social interactions. Ann. N. Y. Acad. Sci. 2018;1426:179–198. doi: 10.1111/nyas.13854. [DOI] [PubMed] [Google Scholar]; Capozzi, F., Ristic, J.., 2018. How attention gates social interactions. Ann. N. Y. Acad. Sci. 1426, 179-198. [DOI] [PubMed]

- Carney D.R., Hall J.A., LeBeau L.S. Beliefs about the nonverbal expression of social power. J. Nonverbal. Behav. 2005;29:105–123. [Google Scholar]; Carney, D.R., Hall, J.A., LeBeau, L.S.., 2005. Beliefs about the nonverbal expression of social power. J. Nonverbal. Behav. 29, 105-123.

- Chang A., Livingstone S.R., Bosnyak D.J., Trainor L.J. Body sway reflects leadership in joint music performance. Proc. Natl. Acad. Sci. U S A. 2017;114:E4134–E4141. doi: 10.1073/pnas.1617657114. [DOI] [PMC free article] [PubMed] [Google Scholar]; Chang, A., Livingstone, S.R., Bosnyak, D.J., Trainor, L.J.., 2017. Body sway reflects leadership in joint music performance. Proc. Natl. Acad. Sci. U S A 114, E4134-E4141. [DOI] [PMC free article] [PubMed]

- Chemers M.M. Psychology Press; 2014. An Integrative Theory of Leadership. [Google Scholar]; Chemers, M.M.., 2014. An Integrative Theory of Leadership. Psychology Press.

- D'Ausilio A., Badino L., Li Y., Tokay S., Craighero L., Canto R., Aloimonos Y., Fadiga L. Leadership in orchestra emerges from the causal relationships of movement kinematics. PLoS One. 2012;7:e35757. doi: 10.1371/journal.pone.0035757. [DOI] [PMC free article] [PubMed] [Google Scholar]; D'Ausilio, A., Badino, L., Li, Y., Tokay, S., Craighero, L., Canto, R., Aloimonos, Y., Fadiga, L.., 2012. Leadership in orchestra emerges from the causal relationships of movement kinematics. PLoS One 7, e35757. [DOI] [PMC free article] [PubMed]

- Dovidio J.F., Ellyson S.L. Decoding visual dominance: attributions of power based on relative percentages of looking while speaking and looking while listening. Soc. Psychol. Q. 1982;45:106–113. [Google Scholar]; Dovidio, J.F., Ellyson, S.L.., 1982. Decoding visual dominance: attributions of power based on relative percentages of looking while speaking and looking while listening. Soc. Psychol. Q.. 45, 106-113.

- Ellyson S.L., Dovidio J.F. Springer-Verlag; 1985. Power, Dominance, and Nonverbal Behavior. [Google Scholar]; Ellyson, S.L., Dovidio, J.F.., 1985. Power, Dominance, and Nonverbal Behavior. Springer-Verlag.

- Ellyson S.L., Dovidio J.F., Fehr B.J. Visual behavior and dominance in women and men. In: Mayo C., Henle N.M., editors. Gender and Nonverbal Behavior. Springer; 1981. pp. 63–79. [Google Scholar]; Ellyson, S.L., Dovidio, J.F., Fehr, B.J.., 1981. Visual behavior and dominance in women and men, in: Mayo, C., Henle, N.M. (Eds.), Gender and Nonverbal Behavior. Springer, pp. 63-79.

- Emery N.J. The eyes have it: the neuroethology, function and evolution of social gaze. Neurosci. Biobehav. Rev. 2000;24:581–604. doi: 10.1016/s0149-7634(00)00025-7. [DOI] [PubMed] [Google Scholar]; Emery, N.J.., 2000. The eyes have it: the neuroethology, function and evolution of social gaze. Neurosci. Biobehav. Rev. 24, 581-604. [DOI] [PubMed]

- Exline R.V., Ellyson S.L., Long B. Visual behavior as an aspect of power role relationships. In: Pliner P., Krames L., Alloway T., editors. Vol. 2. Plenum Press; 1975. pp. 21–52. (Nonverbal Communication of Aggression. Advances in the Study of Communication and Affect). [Google Scholar]; Exline, R.V., Ellyson, S.L., Long, B.., 1975. Visual behavior as an aspect of power role relationships, in: Pliner, P., Krames, L., Alloway, T.. (Eds.), Nonverbal Communication of Aggression. Advances in the Study of Communication and Affect, Vol. 2. Plenum Press, pp. 21-52.

- Feinman S., Roberts D., Hsieh K.-F., Sawyer D., Swanson D. A critical review of social referencing in infancy. In: Feinman S., editor. Social Referencing and the Social Construction of Reality in Infancy. Plenum Press; 1992. pp. 15–54. [Google Scholar]; Feinman, S., Roberts, D., Hsieh, K.-F., Sawyer, D., Swanson, D.., 1992. A critical review of social referencing in infancy, in: Feinman, S.. (Ed.), Social Referencing and the Social Construction of Reality in Infancy. Plenum Press, pp. 15-54.

- Fiedler F.E. The contingency model: a theory of leadership effectiveness. In: Levine J.M., Moreland R.L., editors. Small Groups: Key Readings. Psychology Press; 2006. pp. 369–382. [Google Scholar]; Fiedler, F.E.., 2006. The contingency model: a theory of leadership effectiveness, in: Levine, J.M., Moreland, R.L. (Eds.), Small Groups: Key Readings. Psychology Press, pp. 369-382.

- Foddy M. Patterns of gaze in cooperative and competitive negotiation. Hum. Relat. 1978;31:925–938. [Google Scholar]; Foddy, M.., 1978. Patterns of gaze in cooperative and competitive negotiation. Hum. Relat. 31, 925-938.

- Foels R., Driskell J.E., Mullen B., Salas E. The effects of democratic leadership on group member satisfaction: an integration. Small Gr. Res. 2000;31:676–701. [Google Scholar]; Foels, R., Driskell, J.E., Mullen, B., Salas, E.., 2000. The effects of democratic leadership on group member satisfaction: an integration. Small Gr. Res. 31, 676-701.

- Foulsham T., Cheng J.T., Tracy J.L., Henrich J., Kingstone A. Gaze allocation in a dynamic situation: effects of social status and speaking. Cognition. 2010;117:319–331. doi: 10.1016/j.cognition.2010.09.003. [DOI] [PubMed] [Google Scholar]; Foulsham, T., Cheng, J.T., Tracy, J.L., Henrich, J., Kingstone, A.., 2010. Gaze allocation in a dynamic situation: effects of social status and speaking. Cognition 117, 319-331. [DOI] [PubMed]

- Frank T.D., Richardson M.J. On a test statistic for the Kuramoto order parameter of synchronization: an illustration for group synchronization during rocking chairs. Phys. D Nonlinear Phenom. 2010;239:2084–2092.

- Gatica-Perez D. Automatic nonverbal analysis of social interaction in small groups: a review. Image Vis. Comput. 2009;27:1775–1787.

- Gerpott F.H., Lehmann-Willenbrock N., Silvis J.D., van Vugt M. In the eye of the beholder? An eye-tracking experiment on emergent leadership in team interactions. Leadersh. Q. 2018;29:523–532.

- Grabo A., Spisak B.R., van Vugt M. Charisma as signal: an evolutionary perspective on charismatic leadership. Leadersh. Q. 2017;28:473–485.

- Hall J.A., Coats E.J., LeBeau L.S. Nonverbal behavior and the vertical dimension of social relations. Psychol. Bull. 2005;131:898–924. doi: 10.1037/0033-2909.131.6.898.

- Jiang J., Chen C., Dai B., Shi G., Ding G., Liu L., Lu C. Leader emergence through interpersonal neural synchronization. Proc. Natl. Acad. Sci. U S A. 2015;112:4274–4279. doi: 10.1073/pnas.1422930112.

- Jording M., Hartz A., Bente G., Vogeley K. The “social gaze space”: gaze-based communication in triadic interactions. Front. Psychol. 2018;9:226. doi: 10.3389/fpsyg.2018.00226.

- Kalma A.P., Visser L., Peeters A. Sociable and aggressive dominance: personality differences in leadership style? Leadersh. Q. 1993;4:45–64.

- Kaplan J.T., Man K., Greening S.G. Multivariate cross-classification: applying machine learning techniques to characterize abstraction in neural representations. Front. Hum. Neurosci. 2015;9:151. doi: 10.3389/fnhum.2015.00151.

- King J.R., Dehaene S. Characterizing the dynamics of mental representations: the temporal generalization method. Trends Cogn. Sci. 2014;18:203–210. doi: 10.1016/j.tics.2014.01.002.

- Kleinke C.L. Gaze and eye contact: a research review. Psychol. Bull. 1986;100:78–100.

- Koski J.E., Xie H., Olson I.R. Understanding social hierarchies: the neural and psychological foundations of status perception. Soc. Neurosci. 2015;10:527–550. doi: 10.1080/17470919.2015.1013223.

- Koul A., Becchio C., Cavallo A. PredPsych: a toolbox for predictive machine learning-based approach in experimental psychology research. Behav. Res. Methods. 2018;50:1657–1672. doi: 10.3758/s13428-017-0987-2.

- Kriegeskorte N. Pattern-information analysis: from stimulus decoding to computational-model testing. Neuroimage. 2011;56:411–421. doi: 10.1016/j.neuroimage.2011.01.061.

- Laidlaw K.E.W., Foulsham T., Kuhn G., Kingstone A. Potential social interactions are important to social attention. Proc. Natl. Acad. Sci. U S A. 2011;108:5548–5553. doi: 10.1073/pnas.1017022108.

- Livi S., Kenny D.A., Albright L., Pierro A. A social relations analysis of leadership. Leadersh. Q. 2008;19:235–248.

- Mattan B.D., Kubota J.T., Cloutier J. How social status shapes person perception and evaluation: a social neuroscience perspective. Perspect. Psychol. Sci. 2017;12:468–507. doi: 10.1177/1745691616677828.

- Northouse P.G. Leadership: Theory and Practice. Seventh Edition. SAGE Publications; 2016.

- Pfeiffer U.J., Vogeley K., Schilbach L. From gaze cueing to dual eye-tracking: novel approaches to investigate the neural correlates of gaze in social interaction. Neurosci. Biobehav. Rev. 2013;37:2516–2528. doi: 10.1016/j.neubiorev.2013.07.017.

- Pierro A., Mannetti L., De Grada E., Livi S., Kruglanski A.W. Autocracy bias in informal groups under need for closure. Personal. Soc. Psychol. Bull. 2003;29:405–417. doi: 10.1177/0146167203251191.

- Risko E.F., Richardson D.C., Kingstone A. Breaking the fourth wall of cognitive science: real-world social attention and the dual function of gaze. Curr. Dir. Psychol. Sci. 2016;25:70–74.

- Schilbach L., Timmermans B., Reddy V., Costall A., Bente G., Schlicht T., Vogeley K. Toward a second-person neuroscience. Behav. Brain Sci. 2013;36:393–414. doi: 10.1017/S0140525X12000660.

- Schmid Mast M. Dominance as expressed and inferred through speaking time: a meta-analysis. Hum. Commun. Res. 2002;28:420–450.

- Stiefelhagen R., Finke M., Yang J., Waibel A. From gaze to focus of attention. In: Huijsmans D., Smeulders A.W.M., editors. Visual Information and Information Systems: Third International Conference Visual ‘99. Springer; 1999. pp. 765–772.

- van Vugt M. On faces, gazes, votes, and followers: evolutionary psychological and social neuroscience approaches to leadership. In: Decety J., Christen Y., editors. New Frontiers in Social Neuroscience. Springer (IPSEN foundation); 2014. pp. 93–110.
