Abstract

Objective

Although adaptive e-learning environments (AEEs) can provide personalised instruction to health professionals and students, their efficacy remains unclear. Therefore, this review aimed to identify, appraise and synthesise the evidence regarding the efficacy of AEEs in improving knowledge, skills and clinical behaviour in health professionals and students.

Design

Systematic review and meta-analysis.

Data sources

CINAHL, EMBASE, ERIC, PsycINFO, PubMed and Web of Science from the first year of records to February 2019.

Eligibility criteria

Controlled studies that evaluated the effect of an AEE on knowledge, skills or clinical behaviour in health professionals or students.

Screening, data extraction and synthesis

Two authors screened studies, extracted data, assessed risk of bias and coded quality of evidence independently. AEEs were reviewed with regard to their topic, theoretical framework and adaptivity process. Studies were included in the meta-analysis if they had a non-adaptive e-learning environment control group and had no missing data. Effect sizes (ES) were pooled using a random effects model.

Results

From a pool of 10 569 articles, we included 21 eligible studies enrolling 3684 health professionals and students. Clinical topics were mostly related to diagnostic testing, theoretical frameworks were varied and the adaptivity process was characterised by five subdomains: method, goals, timing, factors and types. The pooled ES was 0.70 for knowledge (95% CI −0.08 to 1.49; p=0.08) and 1.19 for skills (95% CI 0.59 to 1.79; p<0.00001). Risk of bias was generally high. Heterogeneity was large in all analyses.

Conclusions

AEEs appear particularly effective in improving skills in health professionals and students. The adaptivity process within AEEs may be more beneficial for learning skills than for learning factual knowledge, which generates less cognitive load. Future research should report more clearly on the design and adaptivity process of AEEs, and target higher-level outcomes, such as clinical behaviour.

PROSPERO registration number

CRD42017065585

Keywords: Computer-assisted instruction, medical education, nursing education, e-learning, meta-analysis

Strengths and limitations of this study.

This is the first systematic review and meta-analysis examining the efficacy of adaptive e-learning environments in improving knowledge, skills and clinical behaviour in health professionals and students.

Strengths of this review include the broad search strategy, and in-depth assessments of the risk of bias and the quality of evidence.

High statistical heterogeneity resulting from clinical and methodological diversity limits the interpretation of findings.

Quantitative results should be treated with caution, given the small number of studies included in the meta-analysis and their risk of bias.

Introduction

The use of information and communication technologies (ICTs) in the education of health professionals and students has become ubiquitous. Indeed, e-learning, defined as the use of ICTs to access educational curriculum and support learning,1 is increasingly present in clinical settings for the continuing education of health professionals,2 3 and in academic settings for the education of health profession students.4 E-learning environments integrate information in the form of text and multimedia (eg, illustrations, animations, videos). They can include both asynchronous (ie, designed for self-study) and synchronous (ie, a class taught by an educator in real time) components.1 Non-adaptive e-learning environments (NEEs), the most widespread type of e-learning environment today, provide standardised training for all learners.5 6 Although they can include instructional design variations (eg, interactivity, feedback, practice exercises), they do not use learners’ characteristics or the data generated during the learning process to personalise training.6–8 This is a missed opportunity: the interaction of health professionals and students with e-learning environments during the learning process generates a large amount of data,9 yet designers of e-learning environments and educators rarely use these data to optimise learning efficacy and efficiency.9

In recent years, educational researchers have striven to develop e-learning environments that take a data-driven and personalised approach to education.10–13 E-learning environments that take into account each learner’s interactions and performance level could anticipate what types of content and resources meet the learner’s needs, potentially increasing learning efficacy and efficiency.13 Adaptive e-learning environments (AEEs) were developed for this purpose. AEEs collect data to build each learner’s profile (eg, navigation behaviour, preferences, knowledge) and use simple techniques (eg, adaptive information filtering, adaptive hypermedia) to implement different types of adaptivity targeting the content, navigation, presentation, multimedia or strategies of the training, thereby providing a personalised learning experience.11 12 In the fields of computer science and educational technology, the term adaptivity refers to the process by which an ICT-based system adapts educational curriculum content, structure or delivery to the profile of a learner.14 Two main methods of adaptivity can be implemented within an AEE. The first method, designed adaptivity, is expert-based: an educator designs the optimal instructional sequence to guide learners to mastery of the learning content. The educator determines how the curriculum will adapt to learners based on a variety of factors, such as knowledge or response time to a question. This method of adaptivity thus relies on the expertise of the educator, who specifies how the technology will react in a particular situation following an ‘if THIS, then THAT’ approach. The second method, algorithmic adaptivity, refers to the use of algorithms to determine, for instance, the extent of the learner’s knowledge and the optimal instructional sequence. Algorithmic adaptivity requires more advanced adaptivity techniques and learner-modelling techniques derived from the fields of computer science and artificial intelligence (eg, Bayesian knowledge tracing, rule-based machine learning, natural language processing).10 15–18
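The contrast between the two methods can be illustrated with a small Python sketch. The rule-based function mirrors designed adaptivity, where an educator fixes the ‘if THIS, then THAT’ rules in advance; the second part uses Bayesian knowledge tracing, one of the learner-modelling techniques named above, to estimate mastery from a learner’s answers. All thresholds, parameter values and function names are hypothetical and are not taken from any of the AEEs reviewed here.

```python
from dataclasses import dataclass


def designed_next_step(score: float, response_time_s: float) -> str:
    """Designed adaptivity: expert-authored 'if THIS, then THAT' rules.

    The thresholds are hypothetical; in a real AEE an educator sets them.
    """
    if score < 0.5:              # IF knowledge is low, THEN remediate
        return "remedial module"
    if response_time_s > 60:     # IF answers are slow, THEN give extra practice
        return "extra practice cases"
    return "next module"


@dataclass
class BKTSkill:
    """Algorithmic adaptivity: Bayesian knowledge tracing for one skill.

    Parameter values are illustrative; real systems estimate them from data.
    """
    p_known: float = 0.2     # P(L0): probability the skill is already mastered
    p_transit: float = 0.15  # P(T): probability of learning at each opportunity
    p_slip: float = 0.1      # P(S): wrong answer despite mastery
    p_guess: float = 0.2     # P(G): right answer without mastery

    def update(self, correct: bool) -> float:
        """Bayesian update on one observed answer, then apply learning."""
        if correct:
            num = self.p_known * (1 - self.p_slip)
            den = num + (1 - self.p_known) * self.p_guess
        else:
            num = self.p_known * self.p_slip
            den = num + (1 - self.p_known) * (1 - self.p_guess)
        posterior = num / den
        self.p_known = posterior + (1 - posterior) * self.p_transit
        return self.p_known


def algorithmic_next_step(skill: BKTSkill, mastery: float = 0.95) -> str:
    """Advance only once the modelled probability of mastery is high enough."""
    return "next module" if skill.p_known >= mastery else "more practice on this skill"


if __name__ == "__main__":
    print(designed_next_step(score=0.4, response_time_s=30))  # remedial module
    skill = BKTSkill()
    for answer in [True, True, False, True, True, True]:
        skill.update(answer)
    print(f"P(mastery) = {skill.p_known:.3f}:", algorithmic_next_step(skill))
```

In the designed version, the educator must anticipate every situation; in the algorithmic version, the learning path emerges from the probabilistic learner model, which is why it depends on parameters estimated from learner data.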

The variability in the degree and complexity of adaptivity within AEEs mirrors the adaptivity that can be observed in non-e-learning educational interventions. Some interventions, such as one-on-one human instruction and small-group classroom instruction, generally have a high degree of adaptivity, since the instructor can adapt their teaching to the individual profiles of learners and consider their feedback.19 Other interventions, such as large-group classroom instruction, generally have a low degree of adaptivity to individual learners. Some interventions, such as paper-based instruction (eg, handouts, textbooks), involve no adaptivity at all.

AEEs have been developed and evaluated primarily in academic settings for students in mathematics, physics and related disciplines, for the acquisition of knowledge and development of cognitive skills (eg, arithmetic calculation). Four meta-analyses reported on the efficacy of AEEs among high school and university students in these fields of study.15–17 20 The results are promising: AEEs are in almost all cases more effective than large-group classroom instruction. In addition, Nesbit et al 21 point out that AEEs are more effective than NEEs. However, despite evidence of the efficacy of AEEs for knowledge acquisition and skill development in areas such as mathematics in high school and university students, their efficacy in improving learning outcomes in health professionals and students has not yet been established. To address this need, we conducted a systematic review and meta-analysis to identify and quantitatively synthesise all comparative studies of AEEs involving health professionals and students.

Systematic review and meta-analysis objective

To systematically identify, appraise and synthesise the best available evidence regarding the efficacy of AEEs in improving knowledge, skills and clinical behaviour in health professionals and students.

Systematic review and meta-analysis questions

We sought to answer the following questions with the systematic review:

What are the characteristics of studies assessing an AEE designed for health professionals’ and students’ education?

What are the characteristics of AEEs designed for health professionals’ or students’ education?

We sought to answer the following question with the meta-analysis:

What is the efficacy of AEEs in improving knowledge, skills and clinical behaviour in health professionals and students in comparison with NEEs and non-e-learning educational interventions?

Methods

We planned and conducted this systematic review following the Effective Practice and Organisation of Care (EPOC) Cochrane Group guidelines,22 and reported it according to the Preferred Reporting Items for Systematic review and Meta-Analysis (PRISMA) standards23 (see online supplementary file 1). We prospectively registered the protocol of this systematic review in the International Prospective Register of Systematic Reviews (PROSPERO) and published it.24 Thus, in this paper, we present an abridged version of the methods, with an emphasis on changes made since the publication of the protocol.


Study eligibility

We included primary research articles reporting the assessment of an AEE with licensed health professionals, students, trainees and residents in any discipline. We defined an AEE as a computer-based learning environment which collects data to build each learner’s profile (eg, navigation behaviour, individual objectives, knowledge), interprets these data through expert input or algorithms, and adapts in real time the content (eg, showing/hiding information), navigation (eg, specific links and paths), presentation (eg, page layout), multimedia presentation (eg, images, videos) or tools (eg, different sets of strategies for different types of learners) to provide a dynamic and evolutionary learning path for each learner.10 14 We used the definitions of each type of adaptivity proposed by Knutov et al.12 We included studies in which AEEs had designed or algorithmic adaptivity, and studies including a co-intervention in addition to adaptive e-learning (eg, paper-based instruction). We included primary research articles in which the comparator was: (1) A NEE. (2) A non-e-learning educational intervention. (3) Another AEE with design variations. While included in the qualitative synthesis of the evidence for descriptive purposes, the third comparator was excluded from the meta-analysis. Outcomes of interest were knowledge, skills and clinical behaviour,25 26 and were defined as follows: (1) Knowledge: subjective (eg, learner self-report) or objective (eg, multiple-choice question knowledge test) assessments of factual or conceptual understanding. (2) Skills: subjective (eg, learner self-report) or objective (eg, faculty ratings) assessments of procedural skills (eg, taking a blood sample, performing cardiopulmonary resuscitation) or cognitive skills (eg, problem-solving, interpreting radiographs) in learners. 
(3) Clinical behaviour: subjective (eg, learner self-report) or objective (eg, chart audit) assessments of behaviours in clinical practice (eg, test ordering).6 In terms of study design, we considered for inclusion all controlled, experimental studies in accordance with the EPOC Cochrane Group guidelines.27

We excluded studies that: (1) Were not published in English or French. (2) Were non-experimental. (3) Were not controlled. (4) Did not report on at least one of the outcomes of interest in this review. (5) Did not have a topic related to the clinical aspects of health.

Study identification

We previously published our search strategy.24 Briefly, we designed a strategy in collaboration with a librarian to search the Cumulative Index to Nursing and Allied Health Literature (CINAHL), the Excerpta Medical Database (EMBASE), the Education Resources Information Center (ERIC), PsycINFO, PubMed and Web of Science for primary research articles published since the inception of each database up to February 2019. The search strategy revolved around three key concepts: ‘adaptive e-learning environments’, ‘health professionals/students’ and ‘effects on knowledge/competence (skills)/behaviour’ (see online supplementary file 2). To identify additional articles, we hand-searched six key journals (eg, British Journal of Educational Technology, Computers and Education) and the reference lists of included primary research articles.


Study selection

We worked independently and in duplicate (GF and M-AM-C or TM) to screen all titles and abstracts for inclusion using the EndNote software V.8.0 (Clarivate Analytics). We resolved disagreements by consensus. We then performed the full-text assessment of potentially eligible articles using the same methodology. Studies were included in the meta-analysis if they had a NEE control group and had no missing data.

Data extraction

One review author (GF) extracted data from included primary research articles using a modified version of the data collection form developed by the EPOC Cochrane Group.28 The main change made to the extraction form was the addition of specific items relating to the AEE assessed in each study. A second review author (TM or M-FD) validated the data extraction forms by checking the contents of each form against the data in the original article and adding comments when changes were needed. For all studies, we extracted the following data items where possible:

The population and setting: study setting, study population, inclusion criteria, exclusion criteria.

The methods: study aim, study design, unit of allocation, study start date and end date, and duration of participation.

The participants: study sample, withdrawals and exclusions, age, sex, level of instruction, number of years of experience as a health professional, practice setting and previous experience using e-learning.

The interventions: name of intervention, theoretical framework, statistical model/algorithm used to generate the learning path, clinical topic, number of training sessions, duration of each training session, total duration of the training, adaptivity subdomains (method, goals, timing, factors, types), mode of delivery, presence of other educational interventions and strategies.

The outcomes: name, time points measured, definition, person measuring, unit of measurement, scales, validation of measurement tool.

The results: results according to our primary (knowledge) and secondary (skills, clinical behaviour) outcomes, comparison, time point, baseline data, statistical methods used and key conclusions.

We contacted the corresponding authors of included primary research articles to request missing data.

Assessment of the risk of bias

We worked independently and in duplicate (GF and TM or M-FD) to assess the risk of bias of included primary research articles using the EPOC risk of bias criteria, based on the data extracted with the data collection form.28 A study was deemed at high risk of bias if the individual criterion ‘random sequence generation’ was scored at ‘high’ or at ‘unclear’ risk of bias.

Data synthesis

First, we synthesised data qualitatively using tables to provide an overview of the included studies, and of the AEEs reported in these studies.

Second, using the Review Manager (RevMan) software V.5.1, we conducted meta-analyses to quantitatively synthesise the efficacy of AEEs versus other educational interventions for each outcome for which data from at least two studies were available (ie, knowledge, skills). We included studies in the meta-analysis if the comparator was not another AEE. For randomised controlled trials (RCTs), we converted each post-test mean and SD to a standardised mean difference (SMD), also known as the Hedges’ g effect size (ES). For cross-over RCTs, we used means pooled across each intervention. We pooled ESs using a random-effects model. Statistical significance was defined by a two-sided α of 0.05.
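The two computations described in this paragraph, converting post-test means and SDs to Hedges’ g and pooling the results with a random-effects model, can be sketched in Python as follows. This is a minimal illustration, not a reproduction of RevMan’s output: it assumes the DerSimonian-Laird estimator of between-study variance and a common large-sample approximation for the variance of g (RevMan’s exact variance formula may differ slightly), and the study summaries in the usage example are hypothetical.

```python
import math
from typing import NamedTuple, Sequence, Tuple


def hedges_g(m1: float, sd1: float, n1: int,
             m2: float, sd2: float, n2: int) -> Tuple[float, float]:
    """Hedges' g (AEE group minus comparator) and its approximate variance."""
    n = n1 + n2
    # Pooled within-group SD
    sd_pooled = math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n - 2))
    d = (m1 - m2) / sd_pooled            # Cohen's d
    j = 1 - 3 / (4 * n - 9)              # small-sample correction factor
    g = j * d
    var_g = n / (n1 * n2) + g ** 2 / (2 * n)  # large-sample approximation
    return g, var_g


class Pooled(NamedTuple):
    es: float
    ci_low: float
    ci_high: float
    p: float
    tau2: float


def random_effects(effects: Sequence[float], variances: Sequence[float]) -> Pooled:
    """DerSimonian-Laird random-effects meta-analysis with a 95% CI."""
    w = [1 / v for v in variances]                        # fixed-effect weights
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))  # Cochran's Q
    df = len(effects) - 1
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0       # between-study variance
    w_re = [1 / (v + tau2) for v in variances]            # random-effects weights
    es = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    se = math.sqrt(1 / sum(w_re))
    p = math.erfc(abs(es / se) / math.sqrt(2))            # two-sided p-value
    return Pooled(es, es - 1.96 * se, es + 1.96 * se, p, tau2)


if __name__ == "__main__":
    # Hypothetical post-test summaries: (mean, SD, n) for AEE, then comparator
    studies = [
        (75, 10, 30, 68, 11, 30),
        (80, 12, 25, 70, 12, 25),
        (62, 9, 40, 61, 10, 38),
    ]
    gs, vs = zip(*(hedges_g(*s) for s in studies))
    print(random_effects(gs, vs))
```

Because the random-effects weights add the between-study variance τ² to each study’s own variance, large heterogeneity widens the pooled CI, which is consistent with a knowledge ES of 0.70 whose CI still crosses zero.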

We first assessed heterogeneity qualitatively by examining the characteristics of included studies and the similarities and disparities between the types of participants, interventions and outcomes. We then used the I² statistic within the RevMan software to quantify how much the results varied across individual studies (ie, between-study inconsistency or heterogeneity). We interpreted the I² values as follows: 0%–40%: might not be important; 30%–60%: may represent moderate heterogeneity; 50%–90%: may represent substantial heterogeneity; and 75%–100%: considerable heterogeneity.29 We performed sensitivity analyses to assess whether excluding studies at high risk of bias or with a small sample size (n<20) would have affected statistical heterogeneity. Subgroup analyses were performed to examine whether study population and study comparators were potential effect modifiers.
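The I² statistic, the overlapping interpretation bands and the sensitivity analysis described above can be sketched as follows. I² is computed here from Cochran’s Q with inverse-variance weights, as I² = max(0, (Q − df)/Q) × 100; the `Study` record, its fields and the example values are hypothetical, and the sketch is not RevMan’s implementation.

```python
from dataclasses import dataclass
from typing import List, Sequence


def i_squared(effects: Sequence[float], variances: Sequence[float]) -> float:
    """Higgins' I^2 (%) derived from Cochran's Q with inverse-variance weights."""
    w = [1 / v for v in variances]
    mean = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)
    q = sum(wi * (yi - mean) ** 2 for wi, yi in zip(w, effects))
    df = len(effects) - 1
    return 0.0 if q <= 0 else max(0.0, (q - df) / q) * 100


# Cochrane Handbook bands as listed in the Methods; they deliberately overlap.
BANDS = [
    (0, 40, "might not be important"),
    (30, 60, "may represent moderate heterogeneity"),
    (50, 90, "may represent substantial heterogeneity"),
    (75, 100, "considerable heterogeneity"),
]


def interpret_i2(i2: float) -> List[str]:
    """Every band that contains the I^2 value (there may be two)."""
    return [label for low, high, label in BANDS if low <= i2 <= high]


@dataclass
class Study:
    es: float        # Hedges' g
    var: float       # variance of g
    n: int           # total sample size
    high_rob: bool   # high risk of bias per the review's rule


def sensitivity_i2(studies: Sequence[Study]) -> float:
    """I^2 after excluding studies at high risk of bias or with n < 20."""
    kept = [s for s in studies if not s.high_rob and s.n >= 20]
    if len(kept) < 2:
        raise ValueError("fewer than two studies remain; I^2 is undefined")
    return i_squared([s.es for s in kept], [s.var for s in kept])


if __name__ == "__main__":
    # Hypothetical studies, for illustration only
    studies = [
        Study(0.0, 0.05, 60, False),
        Study(1.5, 0.05, 40, True),
        Study(0.2, 0.05, 78, False),
        Study(0.9, 0.20, 15, False),
    ]
    all_i2 = i_squared([s.es for s in studies], [s.var for s in studies])
    print(f"All studies: I^2 = {all_i2:.0f}% ->", interpret_i2(all_i2))
    print(f"Sensitivity: I^2 = {sensitivity_i2(studies):.0f}%")
```

Returning every matching band, rather than a single label, keeps the deliberate overlap of the Cochrane thresholds visible instead of forcing an arbitrary cut-off.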

Since fewer than 10 studies were included in the meta-analysis for each outcome, we did not assess reporting biases using a funnel plot, as suggested in the Cochrane Handbook.30

Assessment of the quality of evidence

We worked independently and in duplicate (GF and M-AM-C) to assess the quality of evidence for each individual outcome. We used the Grading of Recommendations Assessment, Development and Evaluation (GRADE) web-based software, based on the data extracted with the data collection checklist.31 We considered five factors (risk of bias of included studies, indirectness of evidence, unexplained heterogeneity or inconsistency of results, imprecision of the results, probability of reporting bias) for downgrading the quality of the body of evidence for each outcome.31
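The downgrading step can be summarised with a simplified Python sketch: the certainty of a body of evidence drops one level for each serious concern and two levels for each very serious concern among the five factors listed above, and it cannot fall below ‘very low’. The sketch assumes the evidence starts at ‘high’ (as GRADE does for randomised trials) unless another starting level is given, ignores GRADE’s upgrading criteria, and does not reproduce the judgement recorded in the GRADE web-based software; the example judgements are hypothetical.

```python
from typing import Mapping

LEVELS = ["very low", "low", "moderate", "high"]
FACTORS = {"risk of bias", "indirectness", "inconsistency", "imprecision", "reporting bias"}


def grade_certainty(concerns: Mapping[str, int], start: str = "high") -> str:
    """Downgrade one level per serious concern, two per very serious concern.

    `concerns` maps a GRADE factor to 0 (not serious), 1 (serious) or 2 (very serious).
    """
    unknown = set(concerns) - FACTORS
    if unknown:
        raise ValueError(f"unknown GRADE factor(s): {sorted(unknown)}")
    if any(v not in (0, 1, 2) for v in concerns.values()):
        raise ValueError("each concern must be 0, 1 or 2")
    level = LEVELS.index(start) - sum(concerns.values())
    return LEVELS[max(level, 0)]  # certainty cannot go below 'very low'


if __name__ == "__main__":
    # Hypothetical judgements for one outcome
    print(grade_certainty({"risk of bias": 1, "inconsistency": 1}))  # low
```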

Patient and public involvement

Patients and the public were not involved in setting the research question, the outcome measures, the design or conduct of this systematic review. Patients and the public were not asked to advise on interpretation of results or to contribute to the writing or editing of this document.

Results

Study flow

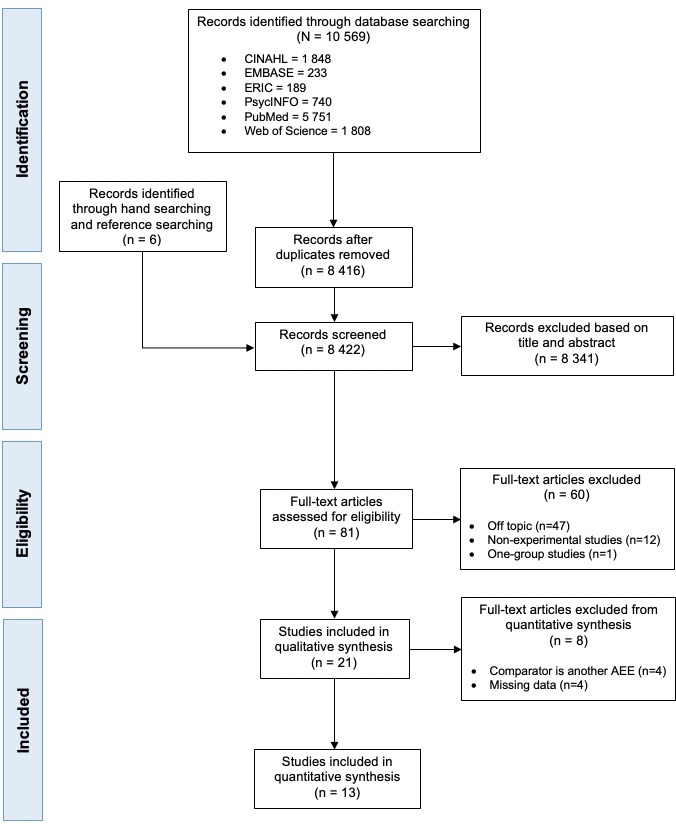

From a pool of 10 569 potentially relevant articles, we found 21 quantitative, controlled studies assessing an AEE with health professionals or students (see figure 1).

Figure 1.

Preferred Reporting Items for Systematic review and Meta-Analysis (PRISMA) study flow diagram. AEE, adaptive e-learning environment.

Of the 21 studies included in the qualitative synthesis, 4 compared two AEEs with design variations,32–35 and 4 had missing data.36–39 The four studies with missing data did not adequately report results for the outcomes of interest in this review (ie, knowledge, skills or clinical behaviour), namely post-test mean scores and SDs for both study groups. Thus, 13 studies were included in the meta-analysis and used to calculate an ES on learning outcomes.

Study characteristics

We summarised the key characteristics of included studies in table format (see table 1). In terms of study population, the 21 studies, published between 2003 and 2018, evaluated AEEs mostly in the medical field. Studies focused on medical students (n=8),38–44 medical residents (n=8),32–35 41 45–47 physicians in practice (n=4),36 37 41 48 nursing students (n=2),40 49 nurses in practice (n=2)48 50 and health sciences students (n=1).51 Three studies focused on multiple populations.40 41 48 The median sample size was 46 participants (IQR 123). In terms of study design, 15 out of 21 studies (71%) were randomised, 7 of which were randomised cross-over trials.33 34 41–43 45 47 The median number of training sessions was 2 (IQR 2.5 sessions), the median training time was 2.13 hours (IQR 2.88 hours) and the median training period was 14 days (IQR 45 days). In terms of comparators, three types of comparison can be distinguished. The first is an AEE versus another AEE with design variations (n=4),32–35 meaning that one of the AEEs assessed differed in its design, such as in its types of adaptivity (eg, feedback in one AEE is longer or more complex than in the other). The second is an AEE versus a NEE (n=11).38–40 42–46 48 50 51 The third is an AEE versus another type of educational intervention, either a paper-based educational intervention, including handouts, textbooks or images (n=3),37 41 47 or a traditional educational intervention, such as a group lecture (n=2).49 52 In one study, the comparator was not clearly reported.36 As stated before, only the second and third types of comparison were included in the meta-analysis, since our aim was to quantitatively synthesise the efficacy of AEEs versus other types of educational interventions.
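As a consistency check, the summary statistics for sample size can be recomputed from the per-study sample sizes listed in table 1. The review does not state which quartile method it used; Python’s `statistics.quantiles` with its default `"exclusive"` method happens to reproduce the reported IQR, which is an inference rather than the authors’ stated procedure.

```python
import statistics

# Sample sizes (n) as listed in table 1, one entry per included study
sample_sizes = {
    "Casebeer 2003": 181, "Cook 2008": 122, "Crowley 2010": 15,
    "de Ruijter 2018": 269, "Hayes-Roth 2010": 30, "Lee 2017": 1522,
    "Micheel 2017": 751, "Morente 2013": 73, "Munoz 2010": 40,
    "Romito 2016": 24, "Samulski 2017": 36, "Thai 2015": 87,
    "Van Es 2015": 43, "Van Es 2016": 46, "Wong 2015": 99,
    "Wong 2017": 178, "Woo 2006": 73, "Crowley 2007": 21,
    "El Saadawi 2008": 20, "El Saadawi 2010": 23, "Feyzi-Begnagh 2014": 31,
}

sizes = list(sample_sizes.values())
total = sum(sizes)                  # participants enrolled across all studies
median = statistics.median(sizes)
# Quartiles via the 'exclusive' method (the default of statistics.quantiles)
q1, _, q3 = statistics.quantiles(sizes, n=4, method="exclusive")

print(len(sizes), total, median, q3 - q1)  # 21 3684 46 123.0
```

The total of 3684 matches the number of participants reported in the Abstract.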

Table 1.

Characteristics of included studies

| First author, year, country | Participants* | Study design† | No. and duration of training sessions | Duration of intervention | Comparison(s)‡ | Type of outcome(s) of interest | Outcome measures |
|---|---|---|---|---|---|---|---|
| Comparison: AEEs versus other educational interventions | | | | | | | |
| Casebeer, 2003, USA | PP; n=181 | RCT; post-test-only, 2 groups | Four sessions; 1 hour each | NR | NR | Knowledge | 21-item multiple-choice questionnaire |
| | | | | | | Skills | |
| Cook, 2008, USA | R; n=122 | RXT; post-test-only, 4 groups | Four sessions; 30 min each | 126 days | NEE | Knowledge | 69-item case-based multiple-choice questionnaire |
| Crowley, 2010, USA | PP; n=15 | RCT; pretest–post-test, 2 groups | Four sessions; 4 hours each | 138 days | P | Skills | Virtual slide test to examine diagnostic accuracy |
| de Ruijter, 2018, the Netherlands | NP; n=269 | RCT; pretest–post-test, 2 groups | No fixed sessions | 180 days | NEE | Knowledge | 18-item true-false questionnaire (range 0–18) |
| | | | | | | Behaviour | 9-item self-reported questionnaire (range 0–9) |
| Hayes-Roth, 2010, USA | MS, NS; n=30 | RCT; pretest–post-test–retention-test, 3 groups | NR; mean training time 2.36 hours | NR | | Skills | 6-item written skill probe (range −6 to 18) |
| Lee, 2017, USA | MS; n=1522 | NRCT; pretest–post-test, 3 groups | Five sessions; NR | 42 days | NEE | Knowledge | Unclear |
| | | | | | | Skills | Multidimensional situation-based questions (Real Index; range 0%–100%) |
| | | | | | | Behaviour | Unclear |
| Micheel, 2017, USA | PP, NP; n=751 | NRCT; pretest–post-test–retention-test, 2 groups | NR | NR | NEE | Knowledge | 10-item true-false questionnaire (range 0–10) |
| Morente, 2013, Spain | NS; n=73 | RCT; pretest–post-test, 2 groups | One session; 4 hours | 1 day | T | Knowledge | 22-item multiple-choice questionnaire (range 0–22) |
| Munoz, 2010, Colombia | MS; n=40 | NRCT; pretest–post-test, 2 groups | NR; mean training time 5.97 hours | NR | NEE | Knowledge | 10-item multiple-choice questionnaire (range 0–10) |
| Romito, 2016, USA | R; n=24 | NRCT; pretest–post-test–retention-test, 2 groups | One session; 30 min | 1 day | NEE and T | Skills | 22-item video clip-based test |
| Samulski, 2017, USA | MS, R, PP; n=36 | RXT; pretest–post-test, 2 groups | Two sessions; 20 min to 14 hours | 1 month | P | Knowledge | 28-item multiple-choice questionnaire (range 0%–100%) |
| Thai, 2015, USA | HSC; n=87 | RCT; pretest–post-test–retention-test, 3 groups | One session; 45 min | 1 day | | Skills | 14-item case-based test (range 0%–100%) |
| Van Es, 2015, Australia | R; n=43 | RXT; post-test-only, 2 groups | Three sessions; NR | 50 days | P | Knowledge | 7-item to 21-item multiple-choice questionnaire (range 0%–100%) |
| Van Es, 2016, Australia | MS; n=46 | RXT; post-test-only, 2 groups | Three sessions; 2 hours each | 34 days | NEE | Knowledge | Multiple-choice questionnaire |
| Wong, 2015, Australia | MS; n=99 | RXT; post-test-only, 2 groups | Two sessions; 1.5 hours each | 14 days | NEE | Knowledge | 8-item multiple-choice and interactive questions (range 0%–100%) |
| Wong, 2017, USA | MS; n=178 | NRCT; pretest–post-test–retention-test, 3 groups | One session; NR | 35 days | | Skills | Test to examine diagnostic accuracy |
| Woo, 2006, USA | MS; n=73 | NRCT; pretest–post-test, 3 groups | One session; 2 hours | 1 day | | Knowledge | Short-response questionnaire |
| Comparison: adaptive e-learning vs adaptive e-learning (two AEEs with design variations) | | | | | | | |
| Crowley, 2007, USA | R; n=21 | RCT; pretest–post-test–retention-test, 2 groups | One session; 4.5 hours | 1 day | AEE | Knowledge | 51-item multiple-choice questionnaire (range 0%–100%) |
| El Saadawi, 2008, USA | R; n=20 | RXT; pretest–post-test, 2 groups | Two sessions; 2 hours each | 1 day | AEE | Skills | Virtual slide test to examine diagnostic accuracy |
| El Saadawi, 2010, USA | R; n=23 | RXT; pretest–post-test, 2 groups | Two sessions; 2.25 hours each | 2 days | AEE | Skills | Virtual slide test to examine diagnostic accuracy |
| Feyzi-Begnagh, 2014, USA | R; n=31 | RCT; pretest–post-test, 2 groups | Two sessions; 2 hours and 3 hours | 1 day | AEE | Knowledge | Unspecified test |

*Participants: MS, medical students; NS, nursing students; R, residents (physicians in postgraduate training); PP, physicians in practice; NP, nurses in practice; HSC, health sciences students.

†Study design: RCT, randomised controlled trial; RXT, randomised cross-over trial; NRCT, non-randomised controlled trial.

‡Comparison: AEE, adaptive e-learning environment; NEE, non-adaptive e-learning environment; NI, no-intervention control group; T, traditional (group lecture); P, paper (handout, textbook or latent image cases).

NR, not reported.

Finally, regarding the outcomes, knowledge was assessed in 14 out of 21 studies (66.7%),32 35 36 38 39 41–45 47–50 skills in 9 studies (42.9%)33 34 36 37 40 46 50–52 and clinical behaviour in 2 studies (9.5%).44 50 Outcome measures for knowledge were similar across studies: in 9 of the 14 studies measuring knowledge, investigators used multiple-choice questionnaires that the research team developed with input from content experts and tailored to the training content to ensure specificity. Knowledge was also assessed using true-false questions in two studies, and the type of questionnaire was not specified in three studies. Outcome measures for skills were also similar across the nine studies reporting this outcome, since all of them measured cognitive rather than procedural skills; indeed, all outcome measures for skills were related to clinical reasoning. In six studies, skills were measured through tests comprising a series of diagnostic tests (eg, electrocardiograms, X-rays, microscopy images) that learners had to interpret. In three studies, skills were measured through questions based on clinical situations in which learners had to specify how they would react. We could not assess the similarity of the outcome measures for clinical behaviour, because one of the two studies reporting this outcome provided no details.

Characteristics of AEEs

We summarised the key characteristics of the AEEs assessed in the 21 studies in table format (see table 2). In terms of clinical topics, the majority of AEEs focused on training medical students and residents in performing and/or interpreting diagnostic tests. Indeed, a large proportion of the AEEs assessed focused on dermopathology and cytopathology microscopy32–35 37 41 42 47 (n=8). Other topics were diagnostic imaging43 46 (n=2), behaviour change counselling40 50 (n=2), chronic disease management45 48 (n=2), pressure ulcer evaluation49 (n=1), childhood illness management38 (n=1), electrocardiography51 (n=1), fetal heart rate interpretation52 (n=1), haemodynamics39 (n=1), chlamydia screening36 (n=1) and atrial fibrillation management44 (n=1).

Table 2.

Characteristics of adaptive e-learning environments

| First author, year | Clinical topic(s) | Theoretical framework(s) | Platform | Adaptivity method | Adaptivity goals | Adaptivity timing | Adaptivity factors | Adaptivity types |
|---|---|---|---|---|---|---|---|---|
| Casebeer, 2003 | Chlamydia screening | Transtheoretical model of change, problem-based learning, situated learning theory | NR | Designed adaptivity | To increase learning effectiveness (knowledge, skills). | Throughout the training, after case-based and practice-based questions. | User answers to questions | |
| Cook, 2008 | Diabetes, hyperlipidaemia, asthma, depression | NR | NR | Designed adaptivity | To increase learning efficiency (knowledge gain divided by learning time). | After each case-based question in each module (17 to 21 times/module). | User knowledge | |
| Crowley, 2007 | Dermopathology, subepidermal vesicular dermatitis | Cognitive tutoring | SlideTutor | Algorithmic adaptivity | To increase learning gains, metacognitive gains and diagnostic performance. | At the beginning of each case. | User actions: results of problem-solving tasks; requests for help | |
| Crowley, 2010 | Dermopathology, melanoma | Cognitive tutoring | SlideTutor | Algorithmic adaptivity | To improve reporting performance and diagnostic accuracy. | At the beginning of each case. | User actions: results of problem-solving tasks; reporting tasks; requests for help | |
| de Ruijter, 2018 | Smoking cessation counselling | I-Change Model | Computer-tailored e-learning programme | Designed adaptivity | To modify behavioural predictors and behaviour. | At the beginning of the training. | Demographics, behavioural predictors, behaviour | |
| El Saadawi, 2008 | Dermopathology, melanoma | Cognitive tutoring | Report tutor | Algorithmic adaptivity | To teach how to correctly identify and document all relevant prognostic factors in the diagnostic report. | At the beginning of each case. | User actions, report features | |
| El Saadawi, 2010 | Dermopathology | Cognitive tutoring | SlideTutor | Algorithmic adaptivity | To facilitate transfer of performance gains to real world tasks that do not provide direct feedback on intermediate steps. | During intermediate problem-solving steps. | User actions: results of problem-solving tasks; reporting tasks; requests for help | |
| Feyzi-Begnagh, 2014 | Dermopathology, nodular and diffuse dermatitis | Cognitive tutoring, theories of self-regulated learning | SlideTutor | Algorithmic adaptivity | To improve metacognitive and learning gains during problem-solving. | During each case or immediately after each case. | User actions: results of problem-solving tasks; reporting tasks; requests for help | |
| Hayes-Roth, 2010 | Brief intervention training in alcohol abuse | Guided mastery | STAR workshop | NR | To improve attitudes and skills. | During clinical cases. | User scores, user-generated dialogue | |
| Lee, 2017 | Treatment of atrial fibrillation | NR | Learning assessment platform | Designed adaptivity | To increase learning effectiveness (knowledge, competence, confidence and practice). | After learning gaps identified in the first session. | Learning gaps in relation to objectives | |
| Micheel, 2017 | Oncology | Learning style frameworks | Learning-style tailored educational platform | Designed adaptivity | To increase learning effectiveness (knowledge). | After assessing the learning style. | Learning style | |
| Morente, 2013 | Pressure ulcer evaluation | NR | ePULab | Designed adaptivity | To increase learning effectiveness (knowledge, skills). | At each pressure ulcer evaluation. | User skills | |
| Munoz, 2010 | Management of childhood illness | Learning styles framework | SIAS-ITS | Designed adaptivity | To increase learning effectiveness and efficiency. | At the beginning of the training. | User knowledge, user learning style | |
| Romito, 2016 | Transoesophageal echocardiography | Perceptual learning | TOE PALM | Algorithmic adaptivity | To improve response accuracy and response time. | After each clinical case. | User response accuracy, user response time | |
| Samulski, 2017 | Cytopathology, pap test, squamous lesions, glandular lesions | NR | Smart Sparrow | Designed adaptivity | To improve learning effectiveness. | During intermediate problem-solving steps. | User knowledge | |
| Thai, 2015 | Electrocardiography | Perceptual learning theory, adaptive response-time-based algorithm | PALM | Algorithmic adaptivity | To improve perceptual classification learning effectiveness and efficiency. | After each user response. | User response accuracy, user response time | |
| Van Es, 2015 | Diagnostic cytopathology, gynaecology, fine needle aspiration, exfoliative fluid | NR | Smart Sparrow | Designed adaptivity | To improve learning effectiveness. | During intermediate problem-solving steps. | User responses | |
| Van Es, 2016 | Diagnostic cytopathology, gynaecology, fine needle aspiration, exfoliative fluid | NR | Smart Sparrow | Designed adaptivity | To improve learning effectiveness. | During intermediate problem-solving steps. | User responses | |
| Wong, 2015 | Diagnostic imaging, chest X-rays, computed tomography scans | Cognitive load theory | Smart Sparrow | Designed adaptivity | To improve learning effectiveness. | During intermediate problem-solving steps. | User responses | |
| Wong, 2017 | Fetal heart rate interpretation | Perceptual learning | PALM | Algorithmic adaptivity | To improve response accuracy and response time. | After each clinical case. | User response accuracy, user response time | |
| Woo, 2006 | Haemodynamics, baroreceptor reflex | NR | CIRCSIM tutor | Algorithmic adaptivity | To improve knowledge related to problem-solving tasks. | After each user response. | User knowledge, user responses | |

ePULab, electronic pressure ulcer lab; NR, not reported; PALM, perceptual adaptive learning module; SIAS-ITS, SIAS intelligent tutoring system; TOE PALM, transoesophageal echocardiography perceptual adaptive learning module.

The 21 AEEs examined were based on a wide variety of theoretical frameworks. The most frequently used framework was cognitive tutoring, adopted in five studies,32–35 37 which refers to the use of a cognitive model. Integrating a cognitive model in an AEE involves representing all the knowledge in the field of interest in a way that resembles the human mind, in order to understand and predict the cognitive processes of learners.53 The second most used framework was perceptual learning, adopted in three studies.46 51 52 Perceptual learning aims to improve learners’ ability to extract relevant information from their environment and to develop automaticity in doing so.46 Interestingly, two studies used models from behavioural science, the Transtheoretical Model36 and the I-Change Model,50 to tailor the AEE to the theoretical determinants of clinical behaviour change in nurses and physicians in practice. Theoretical frameworks relating to self-regulated learning,35 learning styles,38 48 guided mastery,40 cognitive load,43 problem-based learning36 and situated learning36 were also used.

Three main adaptive e-learning platforms were used in the studies examined: SlideTutor (n=4),33 37 54 55 Smart Sparrow (n=4)41–43 47 and the Perceptual Adaptive Learning Module (PALM, n=3).51 52 56 SlideTutor is an AEE with algorithmic adaptivity that presents cases for learners to solve under the supervision of the system. These cases incorporate dermatopathology virtual slides that learners must examine to formulate a diagnosis. SlideTutor uses an expert knowledge base of evidence-diagnosis relationships to build a dynamic solution graph representing the current state of the learning process and to determine the optimal instructional sequence.55 Smart Sparrow is an AEE with designed adaptivity that allows educators to define adaptivity factors, such as answers to questions, response time to a question and learner actions, and to specify how the system will adapt the instructional sequence or provide feedback in response. These custom learning paths can be personalised to varying degrees.42 PALM is an AEE with algorithmic adaptivity that aims to improve perceptual learning through adaptive response-time-based sequencing, which dynamically determines the spacing between learning items based on each learner’s accuracy and speed in interactive learning trials.51 The remaining studies used various custom adaptive e-learning platforms.
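To make the idea of response-time-based sequencing more concrete, the following Python sketch ranks learning items by a priority score that rises after errors and after slow correct responses, stays at zero for fast and accurate (fluent) responses, and prevents an item from reappearing too soon. It is loosely modelled on published descriptions of adaptive response-time-based sequencing; the formula, the hypothetical `ItemState` record and every parameter value (`a`, `b`, `r`, `w`, `delay`) are assumptions for illustration, not PALM’s actual implementation.

```python
import math
from dataclasses import dataclass


@dataclass
class ItemState:
    """Hypothetical per-learner record for one learning item."""
    name: str
    trials_since_seen: int = 10   # unseen items start as if shown long ago
    last_correct: bool = False    # unseen items are treated like errors
    last_rt: float = 10.0         # most recent response time (seconds)


def priority(item, a=0.1, b=1.1, r=3.0, w=20.0, delay=2):
    """Illustrative response-time-based priority score (parameters assumed)."""
    if item.trials_since_seen < delay:
        return float("-inf")  # enforced spacing: never repeat an item too soon
    error = 0.0 if item.last_correct else 1.0
    # Slow correct answers raise priority in proportion to log(RT / r);
    # answers faster than r seconds add nothing, so fluent items sink.
    rt_term = b * (1 - error) * math.log(max(item.last_rt, r) / r)
    # Priority grows with the number of trials since the item was last shown.
    spacing = a * (item.trials_since_seen - delay + 1)
    return spacing * (rt_term + error * w)


def next_item(items):
    """Present the eligible item with the highest priority."""
    return max(items, key=priority)


def record_response(items, shown, correct, rt):
    """Update every item's counters after one learning trial."""
    for item in items:
        item.trials_since_seen += 1
    shown.trials_since_seen = 0
    shown.last_correct = correct
    shown.last_rt = rt


# Usage: A was answered incorrectly, B correctly but slowly, C correctly
# and quickly, and D was shown only one trial ago.
items = [
    ItemState("A", trials_since_seen=5, last_correct=False, last_rt=4.0),
    ItemState("B", trials_since_seen=5, last_correct=True, last_rt=9.0),
    ItemState("C", trials_since_seen=5, last_correct=True, last_rt=1.5),
    ItemState("D", trials_since_seen=1, last_correct=False, last_rt=4.0),
]
print(next_item(items).name)
```

In this sketch the large error increment `w` means that a recent mistake outranks a slow but correct answer, while the spacing factor lets long-unseen items gradually climb back up the queue.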

We propose five subdomains, which emerged from the review, to characterise the adaptivity process of the AEEs reported in the 21 studies: (1) adaptivity method, (2) adaptivity goals, (3) adaptivity timing, (4) adaptivity factors and (5) adaptivity types.

First subdomain: adaptivity method

This subdomain relates to the method that dictates how the AEE adapts instruction to a learner. As previously described, there are two main methods of adaptivity: designed adaptivity and algorithmic adaptivity. Designed adaptivity relies on the expertise of an educator, who specifies how the technology will react in a particular situation following an ‘if THIS, then THAT’ approach. Algorithmic adaptivity relies on algorithms that determine, for instance, the extent of the learner’s knowledge and the optimal instructional sequence. In this review, 11 AEEs employed designed adaptivity36 38 41–44 46–50 and 9 AEEs employed algorithmic adaptivity.33 37 39 51 52 54–57 The adaptivity method was not specified in one study.40
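The ‘if THIS, then THAT’ logic of designed adaptivity can be sketched as an ordered list of educator-authored rules evaluated against the learner’s current state. The `LearnerState` fields, rule thresholds and action names below are hypothetical, and the sketch does not reproduce any particular platform. Algorithmic adaptivity would replace these hand-written rules with a model that infers the learner’s state and selects the next step.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class LearnerState:
    """Hypothetical snapshot of what the system knows about a learner."""
    score: float              # proportion correct on the last question set (0-1)
    response_time: float      # seconds spent on the last question
    help_requests: int = 0    # hints requested on the current case


@dataclass
class Rule:
    """One educator-authored 'if THIS, then THAT' rule."""
    description: str
    condition: Callable[[LearnerState], bool]
    action: str


# Illustrative rules; thresholds and action names are assumptions.
RULES: List[Rule] = [
    Rule("repeated help requests", lambda s: s.help_requests >= 3, "offer_hint_sequence"),
    Rule("low score", lambda s: s.score < 0.5, "show_remedial_module"),
    Rule("adequate score but slow", lambda s: s.response_time > 60, "show_worked_example"),
]


def designed_adaptation(state: LearnerState, rules: List[Rule] = RULES,
                        default: str = "advance_to_next_case") -> str:
    """Return the action of the first rule whose condition holds."""
    for rule in rules:
        if rule.condition(state):
            return rule.action
    return default


print(designed_adaptation(LearnerState(score=0.3, response_time=20)))
```

Because the rules are checked in order and the first match wins, an educator controls which situation takes precedence simply by reordering the list.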

Second subdomain: adaptivity goals

This subdomain relates to the purpose of the adaptivity process within the AEE. For most AEEs, the adaptivity process aims primarily to increase the efficacy and/or efficiency of knowledge acquisition and skills development relative to other training methods.32 35 36 38–45 47–49 51 For instance, several AEEs aimed to increase the diagnostic accuracy and reporting performance of medical students and residents.32–34 37 46 52 In one study, the goal of adaptivity was to modify behavioural predictors and behaviour in nurses.50 When two AEEs that differ in aspects of their technopedagogical design are compared with each other, the adaptivity process generally aims to improve the metacognitive and cognitive processes related to learning.32 33 35

Third subdomain: adaptivity timing

This subdomain relates to when the adaptivity occurs during the learning process with the AEE. In 19 of the 21 studies, adaptivity occurred throughout training with the AEE, usually after an answer to a question or during intermediate problem-solving steps. In the other two studies, adaptivity was implemented only at the beginning of training with the AEE, on the basis of survey responses.38 50

Fourth subdomain: adaptivity factors

This subdomain relates to the learner-related data (variables) on which the adaptivity process is based. The most frequently targeted variable was the learner’s score after an assessment or a question within the AEE (eg, knowledge/skills scores, response accuracy scores).38–43 45–47 49 51 52 Other frequently targeted variables included the learner’s actions while using the AEE (eg, results of problem-solving tasks, results of reporting tasks, requests for help)32–35 37 and the learner’s response time for a specific question or task.46 51 52
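The three families of adaptivity factors described above (scores, learner actions and response times) can all be derived from an interaction log. The sketch below assumes a hypothetical log format, an `Interaction` record with an `action` label, and simply summarises it into the variables an adaptivity rule or algorithm might consume; the field and factor names are illustrative.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Interaction:
    """One logged learner event (hypothetical format)."""
    item_id: str
    action: str                 # e.g. "answer" or "request_help"
    correct: bool = False       # only meaningful for answers
    response_time: float = 0.0  # seconds; only meaningful for answers


def adaptivity_factors(log: List[Interaction]) -> Dict[str, Optional[float]]:
    """Summarise a log into score-, action- and time-based factors."""
    answers = [e for e in log if e.action == "answer"]
    n = len(answers)
    return {
        # Score-based factor: proportion of correct answers.
        "accuracy": sum(e.correct for e in answers) / n if n else None,
        # Time-based factor: mean response time across answers.
        "mean_response_time": sum(e.response_time for e in answers) / n if n else None,
        # Action-based factor: how often the learner asked for help.
        "help_requests": sum(1 for e in log if e.action == "request_help"),
    }


log = [
    Interaction("q1", "answer", True, 4.0),
    Interaction("q2", "answer", False, 8.0),
    Interaction("q2", "request_help"),
]
print(adaptivity_factors(log))
```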

Fifth subdomain: adaptivity types

The final subdomain relates to the types of adaptivity mobilised in the AEE: content, navigation, multimedia, presentation and tools. In the context of this review, the adaptivity types are based on the work of Knutov et al.12 Overall, 17 of the 21 (81%) AEEs examined integrated more than one type of adaptivity. Content adaptivity was the most used adaptivity type; it was implemented in all but one of the AEEs reviewed (n=20). Content adaptivity adapts the textual information (curriculum content) to the learner’s profile through different mechanisms and to different degrees.12 Navigation adaptivity was the second most used adaptivity type (n=14). Navigation can be adapted in two ways: it can be enforced or suggested. When navigation is enforced, an optimal personalised learning path is determined for the learner by an expert educator or by the algorithms within the AEE. When it is suggested, several personalised learning paths are available, and each learner chooses their preferred path.12 Most reviewed studies included AEEs with enforced navigation, in which a single optimal personalised learning path was determined by an expert educator or by the algorithm. Multimedia adaptivity was the third most used adaptivity type (n=11). Analogous to content adaptivity for textual information, this type adapts the multimedia elements of the training, such as videos, pictures and models, to the learner’s profile. Presentation adaptivity was the fourth most used adaptivity type (n=9); it adapts the page layout to the digital device used or to the learner’s profile. Tools adaptivity was the least used adaptivity type (n=8). It provides a different set of features or learning strategies to different types of learners, such as different interfaces for problem-solving and knowledge representation.
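The difference between enforced and suggested navigation can be illustrated with a hypothetical prerequisite map and per-module mastery estimates. The module names, the mastery threshold and the ranking rule (weakest eligible module first) are assumptions made for this sketch; the point is only that enforced navigation returns a single next step, whereas suggested navigation returns several for the learner to choose from.

```python
from typing import Dict, List


def rank_modules(mastery: Dict[str, float], prerequisites: Dict[str, List[str]],
                 threshold: float = 0.8) -> List[str]:
    """Unmastered modules whose prerequisites are mastered, weakest first."""
    mastered = {m for m, p in mastery.items() if p >= threshold}
    eligible = [
        m for m in mastery
        if m not in mastered
        and all(pre in mastered for pre in prerequisites.get(m, []))
    ]
    return sorted(eligible, key=lambda m: mastery[m])


def navigation(mastery, prerequisites, mode="enforced", k=3):
    """Return the next learning step(s) under enforced or suggested navigation."""
    ranked = rank_modules(mastery, prerequisites)
    if mode == "enforced":
        return ranked[:1]   # one path is imposed on the learner
    if mode == "suggested":
        return ranked[:k]   # several paths; the learner chooses
    raise ValueError(f"unknown navigation mode: {mode!r}")


mastery = {"anatomy": 0.9, "physiology": 0.4, "pharmacology": 0.6, "clinical cases": 0.2}
prerequisites = {"pharmacology": ["anatomy"], "clinical cases": ["physiology"]}
print(navigation(mastery, prerequisites, "enforced"))
print(navigation(mastery, prerequisites, "suggested"))
```

Here "clinical cases" is withheld in both modes because its prerequisite has not yet been mastered, even though it has the lowest mastery estimate.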

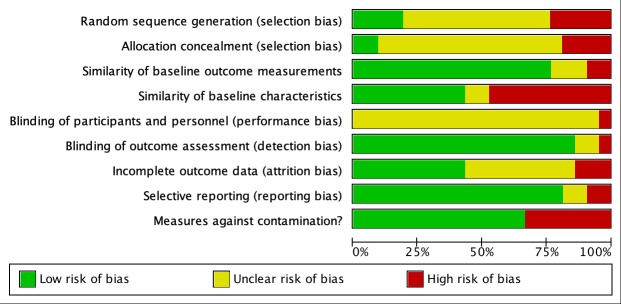

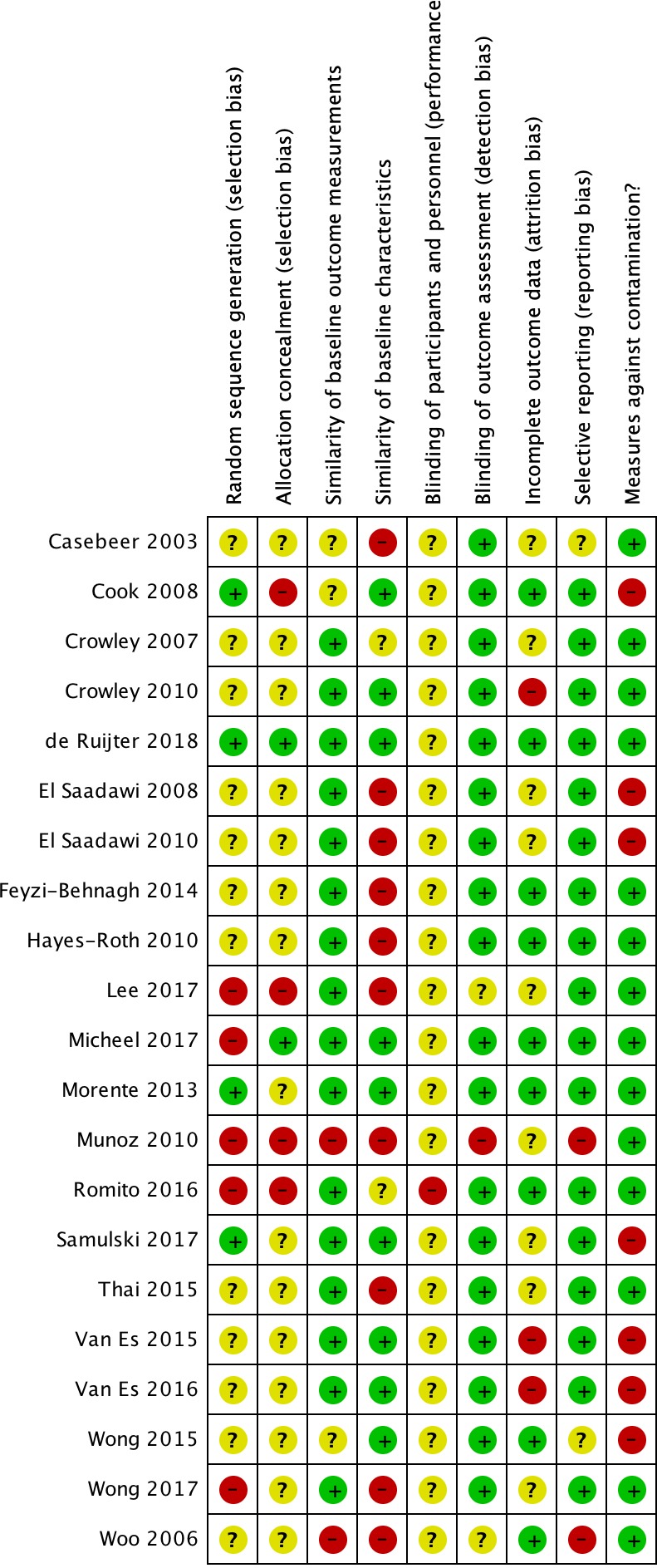

Risk of bias assessment

Risk of bias assessments for the included studies are presented in figures 2 and 3. In ≥75% of studies, the risk of bias related to similarity of baseline outcome measurements, blinding of outcome assessment and selective outcome reporting was low. Moreover, in ≥50% of studies, the risk of contamination bias was low. Regarding blinding of outcome assessment, review authors judged in most studies that the outcomes of interest and their measurement were unlikely to be influenced by a lack of blinding, since studies used objective measures (ie, an evaluative test of knowledge or skills). Regarding contamination bias, review authors rated studies with a cross-over design at high risk.

Figure 2.

Risk of bias summary: review authors' judgements about each risk of bias item for each included study.

Figure 3.

Risk of bias graph: review authors' judgements about each risk of bias item presented as percentages across all included studies.

However, in ≥50% of studies, the risk of bias related to random sequence generation, allocation concealment, similarity of baseline characteristics, blinding of participants and personnel, and incomplete outcome data was unclear or high. Regarding random sequence generation, many studies did not report the method of randomisation used. As per Cochrane recommendations, all eligible studies were included in the meta-analysis, regardless of their risk of bias. Indeed, since almost all studies were rated at unclear overall risk of bias, Cochrane recommends presenting an estimated intervention effect based on all available studies, together with a description of the risk of bias in individual domains.30

Quantitative results

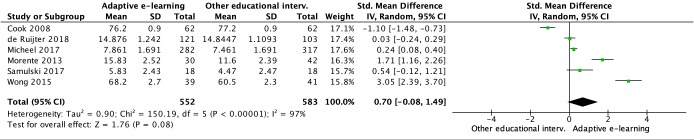

Efficacy of AEEs versus other educational interventions in improving knowledge

The pooled ES of AEEs compared with other educational interventions in improving knowledge (SMD 0.70; 95% CI −0.08 to 1.49; Z=1.76, p=0.08) suggests a moderate to large effect (see figure 4). However, this result is not statistically significant. Significant statistical heterogeneity was observed among studies (I²=97%, p<0.00001), and individual ESs ranged from −1.10 to 3.05. One study45 reported a negative ES, but the difference in knowledge scores between its groups was not statistically significant. Moreover, although participants using the AEE in that study achieved knowledge scores similar to those of the control group at the end of the study, time spent on instruction was 18% lower with the AEE than with the NEE, indicating improved learning efficiency.45 When that study45 is removed from the meta-analysis, the pooled ES becomes statistically significant (SMD 1.07; 95% CI 0.28 to 1.85; Z=2.67, p=0.008).
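The reported CIs, Z statistics and p values are linked through the normal approximation used in inverse-variance meta-analysis: the standard error can be recovered as the CI width divided by 2 × 1.96, Z is the SMD divided by that standard error, and the two-sided p value follows from the standard normal distribution. The short check below assumes symmetric 95% Wald-type intervals and applies this to the pooled results reported in this section and in the skills analysis.

```python
import math

Z_95 = 1.959963984540054  # two-sided 95% quantile of the standard normal


def z_and_p_from_ci(estimate, lower, upper, z_crit=Z_95):
    """Back-calculate SE, Z and a two-sided p value from a symmetric CI."""
    se = (upper - lower) / (2 * z_crit)
    z = estimate / se
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return se, z, p


for label, smd, lo, hi in [
    ("knowledge", 0.70, -0.08, 1.49),
    ("knowledge, study 45 removed", 1.07, 0.28, 1.85),
    ("skills", 1.19, 0.59, 1.79),
]:
    se, z, p = z_and_p_from_ci(smd, lo, hi)
    print(f"{label}: SE={se:.3f}, Z={z:.2f}, p={p:.4f}")
```

The recovered values agree with the reported ones to within rounding; small discrepancies (eg, Z=1.75 here vs 1.76 reported for knowledge) arise because the published CI limits are rounded to two decimals.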

Figure 4.

Forest plot representing the meta-analysis of the efficacy of adaptive e-learning versus other educational interventions in improving knowledge.

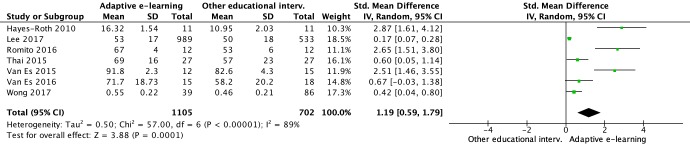

Efficacy of AEEs versus other educational interventions in improving skills

Considering ESs larger than 0.8 to be large,58 the pooled ES of AEEs compared with other educational interventions in improving skills (SMD 1.19; 95% CI 0.59 to 1.79; Z=3.88, p=0.0001) suggests a large, statistically significant effect (see figure 5). Statistical heterogeneity was lower than in the knowledge analysis but was still significant (I²=89%, p<0.00001). Individual ESs ranged from 0.17 to 2.87.

Figure 5.

Forest plot representing the meta-analysis of the efficacy of adaptive e-learning versus other educational interventions in improving skills.

For both knowledge and skills, we conducted subgroup analyses by population (health professionals vs students) and by comparator (adaptive e-learning vs non-adaptive e-learning, adaptive e-learning vs paper-based instruction, adaptive e-learning vs classroom-based instruction). No statistically significant differences in ESs were found between subgroups.
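The review does not detail how subgroup differences were tested. A common approach, sketched below under that assumption, treats each subgroup’s pooled estimate as a single study and computes a Cochran Q statistic between subgroups, compared against a χ² distribution with (number of subgroups − 1) degrees of freedom. The subgroup estimates in the usage example are hypothetical, not values from this review.

```python
import math


def chi2_sf(q, df):
    """Upper-tail probability of a chi-squared variable, via the series for
    the regularised lower incomplete gamma function (fine for moderate q)."""
    if q <= 0:
        return 1.0
    a, x = df / 2.0, q / 2.0
    term = total = 1.0 / a
    n = 1
    while term > 1e-15 * total:
        term *= x / (a + n)
        total += term
        n += 1
    lower = total * math.exp(a * math.log(x) - x - math.lgamma(a))
    return max(0.0, 1.0 - lower)


def subgroup_difference_test(estimates, ses):
    """Cochran's Q between subgroup pooled estimates (inverse-variance weights)."""
    weights = [1.0 / se ** 2 for se in ses]
    overall = sum(w * y for w, y in zip(weights, estimates)) / sum(weights)
    q = sum(w * (y - overall) ** 2 for w, y in zip(weights, estimates))
    df = len(estimates) - 1
    return q, df, chi2_sf(q, df)


# Hypothetical subgroup results, eg health professionals vs students.
q, df, p = subgroup_difference_test([0.5, 1.0], [0.2, 0.2])
print(f"Q={q:.3f}, df={df}, p={p:.3f}")
```

With only a handful of studies per subgroup, this test has low power, so a non-significant result does not rule out genuine differences between subgroups.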

Quality of the evidence

The quality of the evidence table produced with GRADE, as well as the justifications for each decision, is presented in online supplementary file 3 (GRADE quality of evidence levels: very low, low, moderate, high). For knowledge, the quality of evidence was deemed to be very low. More precisely, risk of bias was deemed serious, inconsistency serious, indirectness not serious and imprecision serious. For skills, the quality of evidence was deemed to be low. More precisely, risk of bias was deemed serious, inconsistency serious, indirectness not serious and imprecision serious.


Discussion

Principal findings

This is the first systematic review and meta-analysis to evaluate the efficacy of AEEs in health professionals and students. We identified 21 relevant studies published since 2003: 17 assessed an AEE against another educational intervention (large-group classroom instruction, NEE or paper-based learning), and 4 compared 2 AEEs with design variations head-to-head. Compared with other educational interventions, AEEs were associated with statistically significant improvements in learning outcomes in 12 of 17 studies. Pooled ESs were moderate to large for knowledge and large for skills, but only the latter was statistically significant. Statistical heterogeneity was high in all analyses, although this is consistent with other meta-analyses in medical education that also reported high heterogeneity across studies.8 59 60 Subgroup analyses identified no potential effect modifiers and did not help explain the source of the heterogeneity. The quality of evidence for all comparisons was low or very low. Therefore, while we believe the results support the potential of AEEs for the education of health professionals and students, we recommend interpreting the ESs with caution.

Comparison with other studies

To our knowledge, no previous systematic review and meta-analysis has specifically assessed the efficacy of AEEs in improving learning outcomes in health professionals and students, or in any other population. Interestingly, however, there has been strong research interest since the 1990s in AEEs with algorithmic adaptivity (also known as intelligent learning environments or intelligent tutoring systems) in elementary, high school and postsecondary education across multiple subjects.17 Consequently, several meta-analyses have examined AEEs in these settings.

Steenbergen-Hu and Cooper15 reported a mean ES of 0.35 for AEEs with algorithmic adaptivity on learning outcomes in college students when compared with all other types of educational interventions. The mean ES was 0.37 when the comparator was large-group classroom instruction, 0.35 when the comparator was an NEE and 0.47 when the comparator was textbooks or workbooks.15

Ma et al18 reported a mean ES of 0.42 for AEEs with algorithmic adaptivity on learning outcomes in elementary, high school and postsecondary students when compared with large-group classroom instruction. The mean ES was 0.57 when the comparator was an NEE, and 0.35 when the comparator was textbooks or workbooks. Interestingly, the mean ES was higher in studies that assessed an AEE in biology and physiology (0.59) and in humanities and social science (0.63) than in studies that assessed an AEE in mathematics (0.35) and physics (0.38).18

Kulik and Fletcher17 reported a mean ES of 0.65 for AEEs with algorithmic adaptivity on learning outcomes in elementary, high school and postsecondary students when compared with large-group classroom instruction. Education areas in this review were diverse (eg, mathematics, computer science, physics), but none were related to health sciences. Interestingly, the mean ES was 0.78 for studies with up to 80 participants, and 0.30 for studies with more than 250 participants. Moreover, the mean ES for studies conducted with elementary and high school students was 0.44, compared with 0.75 for studies conducted with postsecondary students.17

In light of the results of these meta-analyses, the ESs reported in our review may appear high. However, our review specifically examined the efficacy of AEEs in improving learning outcomes in health professionals and students. This matters because, in the meta-analyses of Steenbergen-Hu and Cooper,15 Ma et al18 and Kulik and Fletcher,17 AEEs appeared more effective in postsecondary students17 18 and for subjects related to biology, physiology and social science.18 Moreover, previous meta-analyses focused on the efficacy of AEEs in improving procedural and declarative knowledge and did not report on their efficacy in improving skills. This is important, since AEEs may be more effective at providing tailored guidance and coaching for developing skills related to complex clinical interventions than for learning factual knowledge, which often generates less cognitive load.61

Implications for practice and research

This review has important implications for the design and development of AEEs for health professionals and students. Table 3 presents eight practical considerations for educators and educational researchers designing and developing AEEs, derived from the results of this systematic review.

Table 3.

Practical considerations for the design and development of adaptive e-learning environments

| Practical considerations | Explanations |
|---|---|
| Developing the educational content | |
| Selecting a theoretical framework | |
| Selecting the adaptivity method | |
| Selecting the adaptivity goal(s) | |
| Selecting the adaptivity timing | |
| Selecting the adaptivity factor(s) | |
| Selecting the adaptivity type(s) | |
| Determining your technical resources and selecting the adaptive e-learning platform | |

This review also offers several key insights for future research. In terms of population, future research should focus on assessing AEEs with health professionals in practice, such as registered nurses and physicians, rather than students in these disciplines. This could provide key insights into how AEEs can affect clinical behaviour and, ultimately, patient outcomes. In addition, investigators should target larger sample sizes. In terms of interventions, researchers should report more clearly on adaptivity methods, goals, timing, factors and types. Moreover, researchers should provide additional details on the underlying algorithms, or the AEE architecture, that enable the adaptivity process, to ensure the replicability of findings. Regarding comparators, this review suggests a need for additional research using traditional comparators (ie, large-group classroom instruction) and more specific comparators (ie, AEEs with design variations). Regarding outcomes and outcome measures, researchers should use validated tools to measure knowledge, skills and clinical behaviour to facilitate knowledge synthesis. Moreover, the very low number of studies assessing the impact of AEEs on health professionals’ and students’ clinical behaviour demonstrates the need for research targeting higher-level outcomes. Finally, in terms of study design, researchers should favour designs that allow the impact of multiple educational design variations and adaptivity types to be assessed within a single study, such as factorial experiments.

Strengths and limitations

Strengths of this systematic review and meta-analysis include the prospective registration and publication of a protocol based on rigorous methods in accordance with Cochrane and PRISMA guidelines; the exhaustive search in all relevant databases; the independent screening of the titles, abstracts and full texts of studies; the assessment of each included study’s risk of bias using EPOC Cochrane guidelines; and the assessment of the quality of evidence for each individual outcome using the GRADE methodology.

Our review also has limitations to consider. First, outcome measures varied widely across studies. To address this issue, we conducted the meta-analysis using the SMD. Using the SMD allowed us to standardise the results of studies to a uniform scale before pooling them. Review authors judged that using the SMD was the best option for this review, as it is the current practice in the field of knowledge synthesis in medical education.6 59
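Because the review does not specify which SMD estimator was used, the sketch below assumes Hedges’ g, a common choice that divides each mean difference by the pooled within-group standard deviation and applies a small-sample correction. The variance formula is one standard approximation, and software packages differ slightly. The example data are hypothetical and show that the same results reported on two different scoring scales yield the same g.

```python
import math


def hedges_g(mean_t, sd_t, n_t, mean_c, sd_c, n_c):
    """Standardised mean difference (Hedges' g) and its standard error."""
    df = n_t + n_c - 2
    # Pooled within-group standard deviation.
    sd_pooled = math.sqrt(((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2) / df)
    d = (mean_t - mean_c) / sd_pooled          # Cohen's d
    j = 1 - 3 / (4 * df - 1)                   # small-sample correction factor
    var_d = (n_t + n_c) / (n_t * n_c) + d ** 2 / (2 * (n_t + n_c))
    return j * d, math.sqrt(j ** 2 * var_d)


# Hypothetical knowledge test scored out of 100 ...
g_100, se_100 = hedges_g(75, 10, 20, 70, 10, 20)
# ... and the same results expressed on a 10-point scale.
g_10, se_10 = hedges_g(7.5, 1.0, 20, 7.0, 1.0, 20)
print(round(g_100, 3), round(g_10, 3))
```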

Second, there was high inconsistency among study results, which we attribute mostly to differences in populations, AEE design, research methods and outcomes. In some cases, this resulted in widely differing estimates of effect. To partly address this issue, we used a random-effects model for the meta-analysis, which assumes that the effects estimated in the studies differ and follow a distribution.30 However, since a random-effects model gives relatively more weight to smaller studies than a fixed-effect model does, it could exacerbate the effects of any bias in these studies.30
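The review does not name its between-study variance estimator; the sketch below assumes the DerSimonian-Laird method, a widely used default for inverse-variance random-effects meta-analysis, and also computes the I² statistic reported in the results. The three hypothetical studies illustrate the point made above: adding the between-study variance τ² to every study’s variance flattens the weights, so the smallest study gains relative weight compared with a fixed-effect analysis.

```python
import math


def random_effects_dl(effects, ses, z_crit=1.96):
    """DerSimonian-Laird random-effects pooling with I^2 (illustrative)."""
    w_fixed = [1 / se ** 2 for se in ses]
    sum_w = sum(w_fixed)
    fixed = sum(w * y for w, y in zip(w_fixed, effects)) / sum_w
    # Cochran's Q and its degrees of freedom.
    q = sum(w * (y - fixed) ** 2 for w, y in zip(w_fixed, effects))
    df = len(effects) - 1
    # Method-of-moments estimate of the between-study variance tau^2.
    c = sum_w - sum(w ** 2 for w in w_fixed) / sum_w
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    # Random-effects weights add tau^2 to each within-study variance.
    w_random = [1 / (se ** 2 + tau2) for se in ses]
    pooled = sum(w * y for w, y in zip(w_random, effects)) / sum(w_random)
    se_pooled = math.sqrt(1 / sum(w_random))
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    return {
        "pooled": pooled,
        "ci": (pooled - z_crit * se_pooled, pooled + z_crit * se_pooled),
        "tau2": tau2,
        "i2": i2,
        "fixed_shares": [w / sum_w for w in w_fixed],
        "random_shares": [w / sum(w_random) for w in w_random],
    }


# Hypothetical SMDs and standard errors; the third study is the smallest.
result = random_effects_dl([0.2, 0.8, 1.5], [0.1, 0.2, 0.4])
print(f"tau2={result['tau2']:.3f}, I2={result['i2']:.0%}")
print("weight of smallest study, fixed vs random:",
      round(result["fixed_shares"][2], 3), round(result["random_shares"][2], 3))
```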

Finally, publication bias could not be assessed with a funnel plot, since fewer than 10 studies were included in the meta-analysis.

Conclusions

Adaptive e-learning has the potential to increase the effectiveness and efficiency of learning in health professionals and students. Through the different subdomains of the adaptivity process (ie, method, goals, timing, factors, types), AEEs can take into account the particularities of each learner and provide personalised instruction. This systematic review and meta-analysis underlines the potential of AEEs for improving knowledge and skills in health professionals and students in comparison with other educational interventions, such as NEEs and large-group classroom learning, across a range of topics. However, the evidence was of low or very low quality, and heterogeneity was high across populations, interventions, comparators and outcomes. Additional comparative studies assessing the efficacy of AEEs in health professionals and students are therefore needed to strengthen the quality of evidence.

Supplementary Material

Footnotes

Contributors: GF contributed to the conception and design of the review, to the acquisition and analysis of data and to the interpretation of results. Moreover, GF drafted the initial manuscript. SC contributed to the conception and design of the review, and to the interpretation of results. M-AM-C contributed to the conception and design of the review, to the acquisition of data and interpretation of results. TM contributed to the conception and design of the review, to the acquisition of data and to the interpretation of results. M-FD contributed to the conception and design of the review, to the acquisition of data and to the interpretation of results. GM-D contributed to the conception and design of the review, and to the interpretation of results. JC contributed to the interpretation of results. M-PG contributed to the interpretation of results. VD contributed to the interpretation of results. All review authors contributed to manuscript writing, critically revised the manuscript, gave final approval and agreed to be accountable for all aspects of work, ensuring integrity and accuracy.

Funding: GF was supported by the Vanier Canada Graduate Scholarship (Canadian Institutes of Health Research), a doctoral fellowship from Quebec’s Healthcare Research Fund, the AstraZeneca and Dr Kathryn J Hannah scholarships from the Canadian Nurses Foundation, a doctoral scholarship from the Montreal Heart Institute Foundation, a doctoral scholarship from Quebec’s Ministry of Higher Education, and multiple scholarships from the Faculty of Nursing at the University of Montreal. M-AM-C was supported by a doctoral fellowship from Quebec’s Healthcare Research Fund, a doctoral scholarship from the Montreal Heart Institute Foundation, a doctoral scholarship from Quebec’s Ministry of Higher Education, and multiple scholarships from the Faculty of Nursing at the University of Montreal. TM was supported by a postdoctoral fellowship from Quebec’s Healthcare Research Fund and a postdoctoral scholarship from the Montreal Heart Institute Foundation. M-FD was supported by a doctoral fellowship from Canada’s Social Sciences and Humanities Research Council, and a scholarship from the Center for Innovation in Nursing Education.

Competing interests: None declared.

Patient consent for publication: Not required.

Provenance and peer review: Not commissioned; externally peer reviewed.

Data availability statement: All data relevant to the study are included in the article or uploaded as supplementary information.

References

- 1. Clark R, Mayer R. E-learning and the science of instruction: proven guidelines for consumers and designers of multimedia learning. 4th edn. Hoboken, NJ: John Wiley & Sons, 2016.

- 2. Cheng B, Wang M, Mørch AI, et al. Research on e-learning in the workplace 2000–2012: a bibliometric analysis of the literature. Educ Res Rev 2014;11:56–72. doi:10.1016/j.edurev.2014.01.001

- 3. Fontaine G, Cossette S, Heppell S, et al. Evaluation of a web-based e-learning platform for brief motivational interviewing by nurses in cardiovascular care: a pilot study. J Med Internet Res 2016;18:e224. doi:10.2196/jmir.6298

- 4. George PP, Papachristou N, Belisario JM, et al. Online eLearning for undergraduates in health professions: a systematic review of the impact on knowledge, skills, attitudes and satisfaction. J Glob Health 2014;4:010406. doi:10.7189/jogh.04.010406

- 5. Lahti M, Hätönen H, Välimäki M. Impact of e-learning on nurses’ and student nurses’ knowledge, skills, and satisfaction: a systematic review and meta-analysis. Int J Nurs Stud 2014;51:136–49. doi:10.1016/j.ijnurstu.2012.12.017

- 6. Cook DA, Levinson AJ, Garside S, et al. Internet-based learning in the health professions. JAMA 2008;300:1181–96. doi:10.1001/jama.300.10.1181

- 7. Sinclair PM, Kable A, Levett-Jones T, et al. The effectiveness of Internet-based e-learning on clinician behaviour and patient outcomes: a systematic review. Int J Nurs Stud 2016;57:70–81. doi:10.1016/j.ijnurstu.2016.01.011

- 8. Voutilainen A, Saaranen T, Sormunen M. Conventional vs. e-learning in nursing education: a systematic review and meta-analysis. Nurse Educ Today 2017;50:97–103. doi:10.1016/j.nedt.2016.12.020

- 9. Ellaway RH, Pusic MV, Galbraith RM, et al. Developing the role of big data and analytics in health professional education. Med Teach 2014;36:216–22. doi:10.3109/0142159X.2014.874553

- 10. Desmarais MC, Baker RSJd. A review of recent advances in learner and skill modeling in intelligent learning environments. User Model User-adapt Interact 2012;22:9–38. doi:10.1007/s11257-011-9106-8

- 11. Knutov E, De Bra P, Pechenizkiy M. Generic adaptation framework: a process-oriented perspective. J Digit Inf 2011;12:1–22.

- 12. Knutov E, De Bra P, Pechenizkiy M. AH 12 years later: a comprehensive survey of adaptive hypermedia methods and techniques. New Rev Hypermedia Multimed 2009;15:5–38. doi:10.1080/13614560902801608

- 13. Akbulut Y, Cardak CS. Adaptive educational hypermedia accommodating learning styles: a content analysis of publications from 2000 to 2011. Comput Educ 2012;58:835–42. doi:10.1016/j.compedu.2011.10.008

- 14. Brusilovsky P, Peylo C. Adaptive and intelligent web-based educational systems. Int J Artif Intell Educ 2003;13:159–72.

- 15. Steenbergen-Hu S, Cooper H. A meta-analysis of the effectiveness of intelligent tutoring systems on college students’ academic learning. J Educ Psychol 2014;106:331–47. 10.1037/a0034752 [DOI] [Google Scholar]

- 16. Steenbergen-Hu S, Cooper H. A meta-analysis of the effectiveness of intelligent tutoring systems on K–12 students’ mathematical learning. J Educ Psychol 2013;105:970–87. 10.1037/a0032447 [DOI] [Google Scholar]

- 17. Kulik JA, Fletcher JD. Effectiveness of intelligent tutoring systems: a meta-analytic review. Review of Educational Research 2015;86:42–78. [Google Scholar]

- 18. Ma W, Adesope OO, Nesbit JC, et al. . Intelligent tutoring systems and learning outcomes: a meta-analysis. J Educ Psychol 2014;106:901–18. 10.1037/a0037123 [DOI] [Google Scholar]

- 19. Evens M, Michael J. One-on-one tutoring by humans and computers. Mahwah, NJ: Psychology Press, 2006. [Google Scholar]

- 20. Strengers Y, Moloney S, Maller C, et al. . Beyond behaviour change: practical applications of social practice theory in behaviour change programmes : Strengers Y, Maller C, Social practices, interventions and sustainability. New York, NY: Routledge, 2014: 63–77. [Google Scholar]

- 21. Nesbit JC, Adesope OO, Liu Q, et al. . How effective are intelligent tutoring systems in computer science education? IEEE 14th International Conference on advanced learning technologies (ICALT). Athens, Greece 2014.

- 22. Cochrane Effective Practice and Organisation of Care (EPOC) Epoc resources for review authors, 2017. Available: http://epoc.cochrane.org/resources/epoc-resources-review-authors

- 23. Moher D, Shamseer L, Clarke M, et al. . Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Syst Rev 2015;4 10.1186/2046-4053-4-1 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24. Fontaine G, Cossette S, Maheu-Cadotte M-A, et al. . Effectiveness of adaptive e-learning environments on knowledge, competence, and behavior in health professionals and students: protocol for a systematic review and meta-analysis. JMIR Res Protoc 2017;6:e128 10.2196/resprot.8085 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25. Moja L, Moschetti I, Cinquini M, et al. . Clinical evidence continuous medical education: a randomised educational trial of an open access e-learning program for transferring evidence-based information - ICEKUBE (Italian Clinical Evidence Knowledge Utilization Behaviour Evaluation) - study protocol. Implement Sci 2008;3 10.1186/1748-5908-3-37 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26. Miller GE. The assessment of clinical skills/competence/performance. Acad Med 1990;65:S63–7. 10.1097/00001888-199009000-00045 [DOI] [PubMed] [Google Scholar]

- 27. EPOC Cochrane Review Group. What study designs should be included in an EPOC review and what should they be called? 2014. Available: https://epoc.cochrane.org/sites/epoc.cochrane.org/files/public/uploads/EPOC%20Study%20Designs%20About.pdf (2017)

- 28. EPOC Cochrane Review Group. Data extraction and management: EPOC resources for review authors. Oslo, Norway: Norwegian Knowledge Centre for the Health Services, 2013.

- 29. Higgins JPT, Thompson SG, Deeks JJ, et al. Measuring inconsistency in meta-analyses. BMJ 2003;327:557–60. 10.1136/bmj.327.7414.557

- 30. Higgins JPT, Green S. Cochrane Handbook for Systematic Reviews of Interventions, version 5.1.0. The Cochrane Collaboration, 2011.

- 31. Guyatt GH, Oxman AD, Vist GE, et al. GRADE: an emerging consensus on rating quality of evidence and strength of recommendations. BMJ 2008;336:924–6. 10.1136/bmj.39489.470347.AD

- 32. Crowley RS, Legowski E, Medvedeva O, et al. Evaluation of an intelligent tutoring system in pathology: effects of external representation on performance gains, metacognition, and acceptance. J Am Med Inform Assoc 2007;14:182–90. 10.1197/jamia.M2241

- 33. El Saadawi GM, Azevedo R, Castine M, et al. Factors affecting feeling-of-knowing in a medical intelligent tutoring system: the role of immediate feedback as a metacognitive scaffold. Adv Health Sci Educ Theory Pract 2010;15:9–30. 10.1007/s10459-009-9162-6

- 34. El Saadawi GM, Tseytlin E, Legowski E, et al. A natural language intelligent tutoring system for training pathologists: implementation and evaluation. Adv Health Sci Educ Theory Pract 2008;13:709–22. 10.1007/s10459-007-9081-3

- 35. Feyzi-Behnagh R, Azevedo R, Legowski E, et al. Metacognitive scaffolds improve self-judgments of accuracy in a medical intelligent tutoring system. Instr Sci 2014;42:159–81. 10.1007/s11251-013-9275-4

- 36. Casebeer LL, Strasser SM, Spettell CM, et al. Designing tailored web-based instruction to improve practicing physicians' preventive practices. J Med Internet Res 2003;5:e20. 10.2196/jmir.5.3.e20

- 37. Aleven V, Kay J, Mostow J. Use of a medical ITS improves reporting performance among community pathologists. In: Intelligent tutoring systems. Pittsburgh, PA: Springer Berlin/Heidelberg, 2010.

- 38. Munoz DC, Ortiz A, Gonzalez C, et al. Effective e-learning for health professional and medical students: the experience with SIAS-Intelligent tutoring system. Stud Health Technol Inform 2010;156:89–102.

- 39. Woo CW, Evens MW, Freedman R, et al. An intelligent tutoring system that generates a natural language dialogue using dynamic multi-level planning. Artif Intell Med 2006;38:25–46. 10.1016/j.artmed.2005.10.004

- 40. Hayes-Roth B, Saker R, Amano K. Automating individualized coaching and authentic role-play practice for brief intervention training. Methods Inf Med 2010;49:406–11. 10.3414/ME9311

- 41. Samulski TD, Taylor LA, La T, et al. The utility of adaptive eLearning in cervical cytopathology education. Cancer Cytopathol 2017:1–7.

- 42. Van Es SL, Kumar RK, Pryor WM, et al. Cytopathology whole slide images and adaptive tutorials for senior medical students: a randomized crossover trial. Diagn Pathol 2016;11:1. 10.1186/s13000-016-0452-z

- 43. Wong V, Smith AJ, Hawkins NJ, et al. Adaptive Tutorials versus web-based resources in radiology: a mixed methods comparison of efficacy and student engagement. Acad Radiol 2015;22:1299–307. 10.1016/j.acra.2015.07.002

- 44. Lee BC, Ruiz-Cordell KD, Haimowitz SM, et al. Personalized, assessment-based, and tiered medical education curriculum integrating treatment guidelines for atrial fibrillation. Clin Cardiol 2017;40:455–60. 10.1002/clc.22676

- 45. Cook DA, Beckman TJ, Thomas KG, et al. Adapting web-based instruction to residents' knowledge improves learning efficiency: a randomized controlled trial. J Gen Intern Med 2008;23:985–90. 10.1007/s11606-008-0541-0

- 46. Romito BT, Krasne S, Kellman PJ, et al. The impact of a perceptual and adaptive learning module on transoesophageal echocardiography interpretation by anaesthesiology residents. Br J Anaesth 2016;117:477–81. 10.1093/bja/aew295

- 47. Van Es SL, Kumar RK, Pryor WM, et al. Cytopathology whole slide images and adaptive tutorials for postgraduate pathology trainees: a randomized crossover trial. Hum Pathol 2015;46:1297–305. 10.1016/j.humpath.2015.05.009

- 48. Micheel CM, Anderson IA, Lee P, et al. Internet-based assessment of oncology health care professional learning style and optimization of materials for web-based learning: controlled trial with concealed allocation. J Med Internet Res 2017;19:e265. 10.2196/jmir.7506

- 49. Morente L, Morales-Asencio JM, Veredas F. Effectiveness of an e-learning tool for education on pressure ulcer evaluation. International Conference on Health Informatics. Barcelona, Spain, 2013:155–60.

- 50. de Ruijter D, Candel M, Smit ES, et al. The effectiveness of a computer-tailored e-learning program for practice nurses to improve their adherence to smoking cessation counseling guidelines: randomized controlled trial. J Med Internet Res 2018;20:e193. 10.2196/jmir.9276

- 51. Adaptive perceptual learning in electrocardiography: the synergy of passive and active classification. 37th Annual Conference of the Cognitive Science Society. Pasadena, CA, 2015.

- 52. Wong MS, Krasne S. 859: use of a perceptual adaptive learning module results in improved accuracy and fluency of fetal heart rate categorization by medical students. Am J Obstet Gynecol 2017;216:S491–2. 10.1016/j.ajog.2016.11.768

- 53. Corbett A, Kauffman L, Maclaren B, et al. A Cognitive Tutor for genetics problem solving: learning gains and student modeling. J Educ Comput Res 2010;42:219–39. 10.2190/EC.42.2.e

- 54. Feyzi-Behnagh R, Azevedo R, Legowski E, et al. Metacognitive scaffolds improve self-judgments of accuracy in a medical intelligent tutoring system. Instr Sci 2014;42:159–81. 10.1007/s11251-013-9275-4

- 55. Cook DA, Beckman TJ, Thomas KG, et al. Adapting web-based instruction to residents' knowledge improves learning efficiency. J Gen Intern Med 2008;23:985–90. 10.1007/s11606-008-0541-0

- 56. El Saadawi GM, Tseytlin E, Legowski E, et al. A natural language intelligent tutoring system for training pathologists: implementation and evaluation. Adv Health Sci Educ Theory Pract 2008;13:709–22. 10.1007/s10459-007-9081-3

- 57. Cohen J. Statistical power analysis for the behavioral sciences. Hillsdale, NJ: L. Erlbaum Associates, 1988.

- 58. Cook DA, Hatala R, Brydges R, et al. Technology-enhanced simulation for health professions education: a systematic review and meta-analysis. JAMA 2011;306:978–88. 10.1001/jama.2011.1234

- 59. Liu Q, Peng W, Zhang F, et al. The effectiveness of blended learning in health professions: systematic review and meta-analysis. J Med Internet Res 2016;18:e2. 10.2196/jmir.4807

- 60. Paas F, Renkl A, Sweller J. Cognitive load theory and instructional design: recent developments. Educ Psychol 2003;38:1–4. 10.1207/S15326985EP3801_1

- 61. Sweller J. Cognitive load during problem solving: effects on learning. Cogn Sci 1988;12:257–85. 10.1207/s15516709cog1202_4


Supplementary Materials

bmjopen-2018-025252supp001.pdf (65.4KB, pdf)

bmjopen-2018-025252supp002.pdf (1.6MB, pdf)

bmjopen-2018-025252supp003.pdf (53.8KB, pdf)