Abstract

MultiBUGS is a new version of the general-purpose Bayesian modelling software BUGS that implements a generic algorithm for parallelising Markov chain Monte Carlo (MCMC) algorithms to speed up posterior inference of Bayesian models. The algorithm parallelises evaluation of the product-form likelihoods formed when a parameter has many children in the directed acyclic graph (DAG) representation; and parallelises sampling of conditionally-independent sets of parameters. A heuristic algorithm is used to decide which approach to use for each parameter and to apportion computation across computational cores. This enables MultiBUGS to automatically parallelise the broad range of statistical models that can be fitted using BUGS-language software, making the dramatic speed-ups of modern multi-core computing accessible to applied statisticians, without requiring any experience of parallel programming. We demonstrate the use of MultiBUGS on simulated data designed to mimic a hierarchical e-health linked-data study of methadone prescriptions including 425,112 observations and 20,426 random effects. Posterior inference for the e-health model takes several hours in existing software, but MultiBUGS can perform inference in only 28 minutes using 48 computational cores.

Keywords: BUGS, parallel computing, Markov chain Monte Carlo, Gibbs sampling, Bayesian analysis, hierarchical models, directed acyclic graph

1. Introduction

BUGS is a long-running project that makes easy-to-use Bayesian modelling software available to the statistics community. The software has evolved through three main versions since 1989: first ClassicBUGS (Spiegelhalter et al. 1996), then WinBUGS (Lunn et al. 2000), then the current open-source OpenBUGS (Lunn et al. 2009). The software is structured around the twin ideas of the declarative BUGS language (Thomas 2006), through which the user specifies the graphical model (Lauritzen et al. 1990) that defines the statistical model to be analysed; and Markov chain Monte Carlo (MCMC) simulation (Geman and Geman 1984; Gelfand and Smith 1990), which is used to estimate the posterior distribution. These ideas have also been widely adopted in other Bayesian software, notably JAGS (Plummer 2017) and NIMBLE (de Valpine et al. 2017), and related ideas are used in Stan (Carpenter et al. 2017).

Technological advances in recent years have led to massive increases in the amount of data that are generated and stored. This has posed problems for traditional Bayesian modelling, because fitting such models with a huge amount of data in existing standard software, such as OpenBUGS, is typically either impossible or extremely time-consuming. While most recent computers have multiple computational cores, which can be used to speed up computation, OpenBUGS has not previously made use of this facility. The aim of MultiBUGS is to make available to applied statistics practitioners the dramatic speed-ups of multi-core computation without requiring any knowledge of parallel programming, through an easy-to-use implementation of a generic, automatic algorithm for parallelising the MCMC algorithms used by BUGS-style software.

1.1. Approaches to MCMC parallelisation

The most straightforward approach for using multiple computational cores or multiple central processing units (CPUs) to perform MCMC simulation is to run each of multiple, independent MCMC chains on a separate CPU or core (e.g., Bradford and Thomas 1996; Rosenthal 2000). Since the chains are independent, there is no need for information to be passed between the chains: the algorithm is embarrassingly parallel. Running several MCMC chains is valuable for detecting problems of non-convergence of the algorithm using, for example, the Brooks-Gelman-Rubin diagnostic (Gelman and Rubin 1992; Brooks and Gelman 1998). However, the time taken to get past the burn-in period cannot be shortened using this approach.

A different approach is to use multiple CPUs or cores for a single MCMC chain, with the aim of shortening the time taken for the MCMC chain to converge and to mix. One way to do this is to identify tasks within standard MCMC algorithms that can be calculated in parallel, without altering the underlying Markov chain. A task that is often, in principle, straightforward to parallelise, and is fundamental in several MCMC algorithms, such as the Metropolis-Hastings algorithm, is evaluation of the likelihood (e.g., Whiley and Wilson 2004; Jewell et al. 2009; Bottolo et al. 2013). Another task that can be parallelised is sampling of conditionally-independent components, as suggested by, for example, Wilkinson (2006).

MultiBUGS implements all of the above strategies for parallelisation of MCMC. There are thus two levels of parallelisation: multiple MCMC chains are run in parallel, with the computation required by each chain also parallelised by identifying both complex parallelisable likelihoods and conditionally-independent components that can be sampled in parallel.

There are numerous other approaches to MCMC parallelisation. Several authors have proposed running parts of the model on separate cores and then combining results (Scott et al. 2016) using either somewhat ad hoc procedures or sequential Monte Carlo-inspired methods (Goudie et al. 2018). This approach has the advantage of being able to reuse already written MCMC software and, in this sense, is similar to the approach used in MultiBUGS. A separate body of work (Brockwell 2006; Angelino et al. 2014) proposes using a modified version of the Metropolis-Hastings algorithm which speculatively considers a possible sequence of MCMC steps and evaluates the likelihood at each proposal on a separate core. The time saving tends to scale logarithmically in the number of cores for this class of algorithms. A final group of approaches modifies the Metropolis-Hastings algorithm by proposing a sequence of candidate points in parallel (Calderhead 2014). This approach can reduce autocorrelations in the MCMC chain and so speed up MCMC convergence.

1.2. MultiBUGS software

MultiBUGS is available as free software, under the GNU General Public License version 3, and can be downloaded from https://www.MultiBUGS.org. MultiBUGS currently requires Microsoft Windows, and version 8.1 or newer of the Microsoft MPI (MS-MPI) parallel programming framework, available from https://msdn.microsoft.com/en-us/library/bb524831(v=vs.85).aspx. Note that the Windows firewall may require you to give MultiBUGS permission to communicate between cores. The source code for MultiBUGS can be downloaded from https://github.com/MultiBUGS/MultiBUGS. The data and model files to replicate all the results presented in this paper can be found within MultiBUGS, as we describe later in the paper, or can be downloaded from https://github.com/MultiBUGS/MultiBUGS-examples.

The paper is organised as follows: in Section 2 we introduce the class of models we consider and the parallelisation strategy adopted in MultiBUGS; implementation details are provided in Section 3; Section 4 summarises the basic process of fitting models in MultiBUGS; Section 5 demonstrates MultiBUGS for analysing a large hierarchical dataset; and we conclude with a discussion in Section 6.

2. Background and methods

2.1. Models and notation

MultiBUGS performs inference for Bayesian models that can be represented by a directed acyclic graph (DAG), with each component of the model associated with a node in the DAG. A DAG $G = (V_G, E_G)$ consists of a set of nodes or vertices $V_G$ joined by directed edges $E_G \subset V_G \times V_G$, represented by arrows. The parents $\mathrm{pa}_G(v) = \{u : (u, v) \in E_G\}$ of a node $v$ are the nodes with an edge pointing to node $v$. The children $\mathrm{ch}_G(v) = \{u : (v, u) \in E_G\}$ of a node $v$ are the nodes pointed to by edges emanating from node $v$. We omit $G$ subscripts here, and throughout the paper, wherever there is no ambiguity.

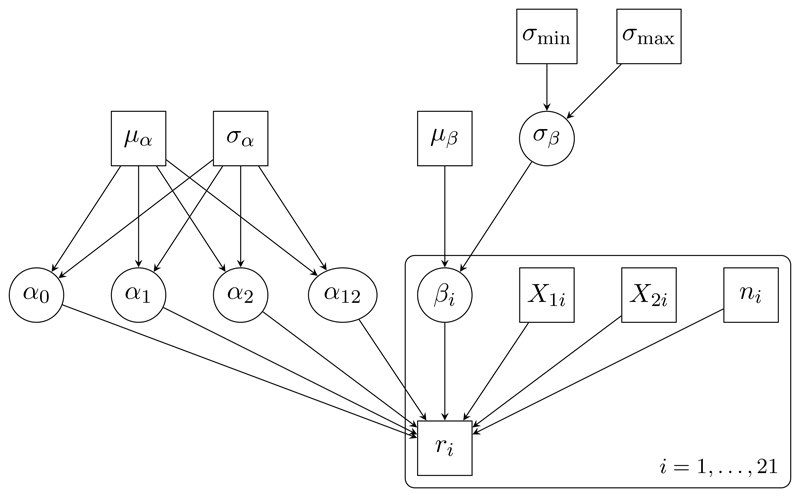

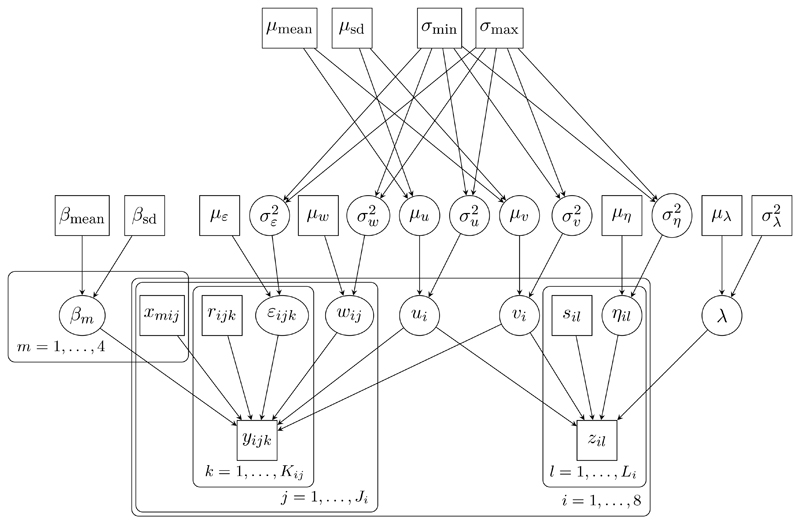

DAGs can be presented graphically (see Figures 1 and 3 below), with stochastic nodes shown in ovals, and constant and observed quantities in rectangles. Stochastic dependencies are represented by arrows. Repeated nodes are enclosed by a rounded rectangle (plate), with the range of repetition indicated by the label.

Figure 1.

DAG representation of the seeds model.

Figure 3.

DAG representation of the e-health model.

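To establish ideas, consider a simple random-effects logistic regression model (called “seeds”) for the number $r_i$ of seeds that germinated out of $n_i$ planted, in each of $i = 1, \ldots, N = 21$ experiments, with binary indicators of seed type $X_{1i}$ and root extract type $X_{2i}$ (Crowder 1978; Breslow and Clayton 1993):

$$
\begin{aligned}
r_i &\sim \mathrm{Binomial}(n_i, p_i), \\
\mathrm{logit}(p_i) &= \alpha_0 + \alpha_1 X_{1i} + \alpha_2 X_{2i} + \alpha_{12} X_{1i} X_{2i} + \beta_i, \\
\beta_i &\sim \mathrm{Normal}(\mu_\beta, \sigma_\beta^2).
\end{aligned}
$$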

We choose normal priors for the regression parameters $\alpha_0, \alpha_1, \alpha_2, \alpha_{12}$, with mean $\mu_\alpha = 0$ and standard deviation $\sigma_\alpha = 1000$. We fix $\mu_\beta = 0$, and choose a uniform prior on the range $\sigma_{\min} = 0$ to $\sigma_{\max} = 10$ for the standard deviation $\sigma_\beta$ of the random effects $\beta_i$. Figure 1 shows a DAG representation of the “seeds” model. The data are presented in Crowder (1978).

For ease of exposition of the parallelisation methods used by MultiBUGS, we assume throughout this paper that the set of nodes $V_G$ includes all stochastic parameters $S_G \subseteq V_G$ and constant quantities (including observations and hyperparameters) in the model, but excludes parameters that are entirely determined by other parameters. As a consequence, the DAG for the seeds example (Figure 1) includes as nodes the stochastic parameters $S_G = \{\alpha_0, \alpha_1, \alpha_2, \alpha_{12}, \beta_1, \ldots, \beta_{21}, \sigma_\beta\}$, the observations $\{r_i, X_{1i}, X_{2i}, n_i : i = 1, \ldots, 21\}$ and the constant hyperparameters $\{\mu_\alpha, \sigma_\alpha, \mu_\beta, \sigma_{\min}, \sigma_{\max}\}$, but not the parameters that are deterministic functions of other parameters (the germination probabilities $p_i$), which have been assimilated into the definition of the distribution of $r_i$ before forming the DAG. Arbitrary DAG models can nevertheless be considered by assimilating deterministic intermediary quantities, such as linear predictors in generalised linear models, into the definition of the conditional distribution of the appropriate descendant stochastic parameter, and by considering deterministic prediction separately from the main MCMC computation. For example, in the seeds example the random-effect precision $\tau_\beta = 1/\sigma_\beta^2$ is a deterministic function of the standard deviation $\sigma_\beta$, so it would not be part of the graph even if it were of interest: posterior inference for $\tau_\beta$ could instead be made either by updating its value in the usual (serial) manner after each MCMC iteration, or by post-processing the MCMC samples for $\sigma_\beta$.
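As an illustration of the post-processing option, the Python sketch below transforms stored draws of $\sigma_\beta$ into draws of $\tau_\beta$. The draws are made-up numbers and the function name is hypothetical; the point is only that posterior summaries of $\tau_\beta$ should be computed from the transformed draws, not by transforming a summary of $\sigma_\beta$.

```python
import statistics

def precision_draws(sd_draws):
    """Map each posterior draw of sigma_beta to a draw of tau_beta = 1 / sigma_beta**2."""
    return [1.0 / (sd * sd) for sd in sd_draws]

# Hypothetical stored draws of sigma_beta (illustrative values, not seeds-model output).
sigma_draws = [0.25, 0.30, 0.28, 0.35, 0.22]
tau_draws = precision_draws(sigma_draws)

# The posterior mean of tau_beta differs from 1 / (posterior mean of sigma_beta)**2,
# so summaries must be computed from the transformed draws.
print(statistics.mean(tau_draws), 1.0 / statistics.mean(sigma_draws) ** 2)
```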

In DAG models, the conditional independence assumptions represented by the DAG mean that the full joint distribution of all quantities $V$ has a simple factorisation in terms of the conditional distribution $p(v \mid \mathrm{pa}(v))$ of each node $v \in V$ given its parents $\mathrm{pa}(v)$:

$$
p(V) = \prod_{v \in V} p(v \mid \mathrm{pa}(v)).
$$

Posterior inference is performed in MultiBUGS by an MCMC algorithm, constructed by associating each node with a suitable updating algorithm, chosen automatically by the program according to the structure of the model. Most MCMC algorithms involve evaluation of the conditional distribution of the stochastic parameters $S \subseteq V$ (at particular values of its arguments). The conditional distribution $p(v \mid V_{-v})$ of a node $v \in S$, given the remaining nodes $V_{-v} = V \setminus \{v\}$, is

$$
p(v \mid V_{-v}) \propto p(v \mid \mathrm{pa}(v)) \prod_{u \in \mathrm{ch}(v)} p(u \mid \mathrm{pa}(u)) = p(v \mid \mathrm{pa}(v))\, L(v), \tag{1}
$$

where $p(v \mid \mathrm{pa}(v))$ is the prior factor and $L(v) = \prod_{u \in \mathrm{ch}(v)} p(u \mid \mathrm{pa}(u))$ is the likelihood factor.

2.2. Parallelisation methods in MultiBUGS

MultiBUGS performs in parallel both multiple chains and the computation required for a single MCMC chain. In this section, we describe how the computation for a single MCMC chain can be performed in parallel.

Parallelisation strategies

MCMC entails sampling, which often requires evaluation of the conditional distribution of the stochastic parameters S in the model. MultiBUGS parallelises these computations for a single MCMC chain via two distinct approaches.

First, when a parameter has many children, evaluation of the conditional distribution is computationally expensive, since Equation 1 is the product of many terms. However, the evaluation of the likelihood factor $L(v)$ can easily be split between $C$ cores by calculating a partial product involving every $C$th child on each core. With a partition $\{\mathrm{ch}^{(1)}(v), \ldots, \mathrm{ch}^{(C)}(v)\}$ of the set of children $\mathrm{ch}(v)$, we can evaluate $\prod_{u \in \mathrm{ch}^{(c)}(v)} p(u \mid \mathrm{pa}(u))$ on the $c$th core, $c = 1, \ldots, C$. The prior factor $p(v \mid \mathrm{pa}(v))$ and these partial products can be multiplied together to recover the complete conditional distribution.
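The following Python sketch checks numerically that splitting the likelihood factor this way leaves Equation 1 unchanged. It works on the log scale, where the product becomes a sum, assumes binomial children as in the seeds model, and simulates the $C$ cores inside one process; in MultiBUGS each core would compute only its own partial term and the partial results would be combined by message passing (Section 3). The data and function names are hypothetical.

```python
import math
import random

def binomial_logpmf(r, n, p):
    """log P(r | n, p) for a binomial child node."""
    return math.log(math.comb(n, r)) + r * math.log(p) + (n - r) * math.log1p(-p)

def partial_loglik(children, c, num_cores):
    """Core c (0-based) sums the log-terms of every num_cores-th child, starting at child c."""
    return sum(binomial_logpmf(r, n, p) for r, n, p in children[c::num_cores])

def log_conditional(log_prior, children, num_cores):
    """Equation 1 on the log scale: prior term plus the num_cores partial likelihood sums."""
    partials = [partial_loglik(children, c, num_cores) for c in range(num_cores)]
    return log_prior + sum(partials)

# Hypothetical children (r_i, n_i, p_i) of one parameter, 21 of them as in the seeds model.
rng = random.Random(1)
children = []
for _ in range(21):
    n = rng.randint(5, 80)
    p = rng.uniform(0.2, 0.8)
    r = sum(rng.random() < p for _ in range(n))
    children.append((r, n, p))

serial = log_conditional(-1.5, children, num_cores=1)
for C in (2, 4, 7):
    # Only the summation order changes, so results agree up to floating-point rounding.
    assert math.isclose(log_conditional(-1.5, children, C), serial)
```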

Second, when a model includes a large number of parameters, computation may be slow in aggregate, even if sampling of each individual parameter is fast. However, parameters can clearly be sampled in parallel if they are conditionally independent. Specifically, all parameters in a set $W \subseteq S$ can be sampled in parallel whenever the parameters in $W$ are mutually conditionally independent; i.e., all $w_1 \in W$ and $w_2 \in W$ ($w_1 \neq w_2$) are conditionally independent given $V \setminus W$. If $C$ cores are available and $|W|$ denotes the number of elements in the set $W$, then in a parallel scheme at most $\lceil |W|/C \rceil$ parameters need to be sampled on any one core (where $\lceil x \rceil$ denotes the ceiling function), rather than $|W|$ in the standard serial scheme; for example, for the 21 random effects of the seeds model and $C = 4$ cores, this is $\lceil 21/4 \rceil = 6$ rather than 21.

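To identify sets of conditionally-independent parameters, MultiBUGS first partitions the stochastic parameters $S$ into depth sets

$$
S_h = \{v \in S : d_G(v) = h\},
$$

defined as the set of stochastic nodes with topological depth $d_G(v) = h$, where the topological depth of a node $v \in V$ is defined recursively, starting from the nodes with no parents:

$$
d_G(v) = \begin{cases} 0 & \text{if } \mathrm{pa}_G(v) = \emptyset, \\ 1 + \max_{u \in \mathrm{pa}_G(v)} d_G(u) & \text{otherwise.} \end{cases}
$$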

Note that stochastic nodes $v \in S$ have topological depth $d_G(v) \geq 1$, since the constant hyperparameters of stochastic nodes are included in the DAG.
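A minimal Python sketch of the recursive depth definition, applied to the seeds DAG (with each $p_i$ assimilated into $r_i$, so that $r_i$ has parents $\alpha_0, \alpha_1, \alpha_2, \alpha_{12}, \beta_i, X_{1i}, X_{2i}, n_i$). The node names and functions are hypothetical; the sketch recovers the two depth sets used for the seeds example in this section.

```python
from functools import lru_cache

def topological_depths(parents):
    """d(v) = 0 if v has no parents, otherwise 1 + the largest depth among its parents."""
    @lru_cache(maxsize=None)
    def depth(v):
        return 0 if not parents[v] else 1 + max(depth(u) for u in parents[v])
    return {v: depth(v) for v in parents}

def depth_sets(depths, stochastic):
    """Partition the stochastic parameters S into depth sets S_h."""
    sets = {}
    for v in stochastic:
        sets.setdefault(depths[v], []).append(v)
    return sets

# Seeds DAG: constants have no parents; p_i is assimilated into r_i.
N = 21
alphas = ["alpha0", "alpha1", "alpha2", "alpha12"]
parents = {c: [] for c in ("mu_alpha", "sigma_alpha", "mu_beta", "sigma_min", "sigma_max")}
for a in alphas:
    parents[a] = ["mu_alpha", "sigma_alpha"]
parents["sigma_beta"] = ["sigma_min", "sigma_max"]
for i in range(1, N + 1):
    for c in (f"X1_{i}", f"X2_{i}", f"n_{i}"):
        parents[c] = []
    parents[f"beta{i}"] = ["mu_beta", "sigma_beta"]
    parents[f"r{i}"] = alphas + [f"beta{i}", f"X1_{i}", f"X2_{i}", f"n_{i}"]

stochastic = alphas + [f"beta{i}" for i in range(1, N + 1)] + ["sigma_beta"]
depths = topological_depths(parents)
sets = depth_sets(depths, stochastic)
print({h: len(vs) for h, vs in sorted(sets.items())})  # → {1: 5, 2: 21}
```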

Sets of conditionally-independent parameters within a depth set can be identified by noting that all parameters in a set $W \subseteq S_h$ are mutually conditionally independent, given the other nodes $V \setminus W$, if the parameters in $W$ have no child node in common. This follows from the d-separation criterion (Definition 1.2.3, Pearl 2009): all pairs of parameters $w_1 \in W$ and $w_2 \in W$ ($w_1 \neq w_2$) are d-separated by $V \setminus W$, because no ‘chain path’ can exist between $w_1$ and $w_2$, since these nodes have the same topological depth; all ‘fork paths’ are blocked by $V \setminus W$; and so are all ‘collider paths’, except those involving a common child of $w_1$ and $w_2$, which are excluded by the definition of $W$.
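One simple way to form such sets is a greedy first-fit pass over a depth set, sketched below in Python. This is an illustrative assumption rather than the procedure MultiBUGS uses (Appendix A describes that); it relies only on the property that members of $W$ must not share a child. Function and node names are hypothetical.

```python
def independent_sets(depth_set, children):
    """First-fit grouping of one depth set into sets W whose members share no child.

    `children` maps each parameter to the set of its children. The greedy first-fit
    rule is an illustrative assumption, not necessarily the rule MultiBUGS uses.
    """
    groups = []  # pairs (members of W, union of their children)
    for v in depth_set:
        for members, used in groups:
            if used.isdisjoint(children[v]):
                members.append(v)
                used.update(children[v])
                break
        else:  # v shares a child with every existing group: start a new one
            groups.append(([v], set(children[v])))
    return [members for members, _ in groups]

# Seeds model: each beta_i has the single child r_i, so all 21 form one set W.
betas = [f"beta{i}" for i in range(1, 22)]
children = {b: {f"r{i}"} for i, b in enumerate(betas, start=1)}
print(len(independent_sets(betas, children)))  # → 1

# a and b share the child y, so they cannot be sampled in parallel with each other.
toy = {"a": {"y"}, "b": {"y"}, "c": {"z"}}
print(independent_sets(["a", "b", "c"], toy))  # → [['a', 'c'], ['b']]
```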

Heuristic for determining parallelisation strategy

A heuristic criterion is used by MultiBUGS to decide which type of parallelism to exploit for each parameter in the model. The heuristic aims to parallelise the evaluation of conditional distributions of ‘fixed effect’-like parameters, and to parallelise the sampling of ‘random effect’-like parameters. The former tend to have a large number of children, whereas the latter tend to have a small number of children. Each depth set is considered in turn, starting with the ‘deepest’ set, with $h^* = \max_{v \in S} d_G(v)$. The computation of a parameter’s conditional distribution is parallelised if the parameter has more than double the mean number of children, or if all parameters in the graph have topological depth $h = 1$; otherwise, the sampling of conditionally-independent sets of parameters is parallelised whenever this is permitted. The special case for $h = 1$ ensures that evaluation of the conditional distribution of parameters is parallelised in ‘flat’ models, in which all parameters have identical topological depth. When a group of parameters is sampled in parallel, we would like the time taken to sample each one to be similar, so MultiBUGS assigns parameters to cores in order of the number of children that each parameter has.
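The decision rule can be sketched in Python as follows. The function name is hypothetical, and the sketch assumes that the mean number of children is taken over all stochastic parameters, which is the reading consistent with the seeds example below. It does not check whether parallel sampling is actually permitted; that requires the conditional-independence grouping described above.

```python
def choose_strategy(num_children, depths):
    """Label each stochastic parameter 'likelihood' (split its likelihood across cores)
    or 'sampling' (sample it in parallel with conditionally-independent parameters).

    Assumption: the mean number of children is taken over all stochastic parameters.
    """
    mean_children = sum(num_children.values()) / len(num_children)
    flat = all(d == 1 for d in depths.values())
    return {v: "likelihood" if flat or k > 2 * mean_children else "sampling"
            for v, k in num_children.items()}

# Child counts and depths of the seeds example.
fixed = ["alpha0", "alpha1", "alpha2", "alpha12", "sigma_beta"]
betas = [f"beta{i}" for i in range(1, 22)]
num_children = {**{v: 21 for v in fixed}, **{b: 1 for b in betas}}
depths = {**{v: 1 for v in fixed}, **{b: 2 for b in betas}}
strategy = choose_strategy(num_children, depths)
print(strategy["alpha0"], strategy["beta1"])  # → likelihood sampling
```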

MultiBUGS creates a $C$-column computation schedule table $T$, which specifies the parallelisation scheme: where different parameters appear in a row, the corresponding parameters are sampled in parallel; where a single parameter is repeated across a full row, the evaluation of the conditional distribution for that parameter is split into partial products across the $C$ cores. A single MCMC iteration consists of evaluating the updates specified by each row of the computation schedule in turn. The computation schedule includes blanks whenever a set $W$ of mutually conditionally-independent parameters does not divide equally across the $C$ cores, that is, when $|W| \bmod C \neq 0$, where mod denotes the modulo operator. The corresponding cores are idle when a blank occurs. Appendix A describes the algorithms used to create the computation schedule table $T$ in detail.

We illustrate the heuristic by describing the process of creating Table 1, the computation schedule for the seeds example introduced in Section 2, assuming $C = 4$ cores are available. The model includes 26 stochastic parameters $S = \{\alpha_0, \alpha_1, \alpha_2, \alpha_{12}, \beta_1, \ldots, \beta_{21}, \sigma_\beta\}$, with $|\mathrm{ch}(\alpha_0)| = |\mathrm{ch}(\alpha_1)| = |\mathrm{ch}(\alpha_2)| = |\mathrm{ch}(\alpha_{12})| = |\mathrm{ch}(\sigma_\beta)| = 21$ and $|\mathrm{ch}(\beta_1)| = \cdots = |\mathrm{ch}(\beta_{21})| = 1$, so the mean number of children is $(5 \times 21 + 21 \times 1)/26 = 126/26 \approx 4.85$. MultiBUGS first considers the parameters $\beta_1, \ldots, \beta_{21}$, since their topological depth $d(\beta_1) = \cdots = d(\beta_{21}) = 2 = \max_{v \in S} d(v)$. None of the likelihood evaluation for $\beta_1, \ldots, \beta_{21}$ is parallelised, because each of these parameters has only 1 child and $1 < 2 \times 126/26 \approx 9.69$. However, $\beta_1, \ldots, \beta_{21}$ are mutually conditionally independent, and so these parameters are distributed across the 4 cores as shown in the first 6 rows of Table 1. Since $21 \bmod 4 \neq 0$, cores 2, 3 and 4 will be idle while $\beta_{21}$ is sampled. Next, we consider $\alpha_0, \alpha_1, \alpha_2, \alpha_{12}$ and $\sigma_\beta$, since $d(\alpha_0) = \cdots = d(\alpha_{12}) = d(\sigma_\beta) = 1$. Since all of these parameters have 21 children and $21 > 9.69$, MultiBUGS spreads the likelihood evaluation of each of these parameters across cores, and they are assigned to the computation schedule in turn.

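Table 1. Computation schedule table $T$ for the seeds example, with 4 cores.

| Row | Core 1 | Core 2 | Core 3 | Core 4 |
|---|---|---|---|---|
| 1 | $\beta_1$ | $\beta_2$ | $\beta_3$ | $\beta_4$ |
| 2 | $\beta_5$ | $\beta_6$ | $\beta_7$ | $\beta_8$ |
| 3 | $\beta_9$ | $\beta_{10}$ | $\beta_{11}$ | $\beta_{12}$ |
| 4 | $\beta_{13}$ | $\beta_{14}$ | $\beta_{15}$ | $\beta_{16}$ |
| 5 | $\beta_{17}$ | $\beta_{18}$ | $\beta_{19}$ | $\beta_{20}$ |
| 6 | $\beta_{21}$ | | | |
| 7 | $\alpha_{12}$ | $\alpha_{12}$ | $\alpha_{12}$ | $\alpha_{12}$ |
| 8 | $\alpha_1$ | $\alpha_1$ | $\alpha_1$ | $\alpha_1$ |
| 9 | $\alpha_2$ | $\alpha_2$ | $\alpha_2$ | $\alpha_2$ |
| 10 | $\alpha_0$ | $\alpha_0$ | $\alpha_0$ | $\alpha_0$ |
| 11 | $\sigma_\beta$ | $\sigma_\beta$ | $\sigma_\beta$ | $\sigma_\beta$ |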
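Given the depth sets and the heuristic's decisions, filling in the schedule table is mechanical. The Python sketch below, with hypothetical names, deals each conditionally-independent set across the cores row by row, padding with blanks (`None`), and gives each likelihood-parallelised parameter a full row. The order of the blocks is supplied by hand here to match Table 1, whereas MultiBUGS derives it with the algorithms in Appendix A.

```python
def build_schedule(blocks, num_cores):
    """Build a C-column computation schedule table T from an ordered list of blocks.

    ('sampling', [w1, w2, ...]): a conditionally-independent set W, dealt across cores row by row
    ('likelihood', v): one parameter whose likelihood is split over all C cores
    None marks a blank, i.e. an idle core.
    """
    table = []
    for kind, content in blocks:
        if kind == "likelihood":
            table.append([content] * num_cores)
        else:
            # W occupies ceil(|W| / C) rows; the last row is padded when |W| mod C != 0.
            for start in range(0, len(content), num_cores):
                row = list(content[start:start + num_cores])
                table.append(row + [None] * (num_cores - len(row)))
    return table

betas = [f"beta{i}" for i in range(1, 22)]
blocks = [("sampling", betas)] + [("likelihood", v) for v in
                                  ("alpha12", "alpha1", "alpha2", "alpha0", "sigma_beta")]
T = build_schedule(blocks, num_cores=4)
for number, row in enumerate(T, start=1):
    print(number, row)
```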

Block samplers

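MultiBUGS is able to use block MCMC samplers when appropriate: that is, algorithms that sample a block of nodes jointly, rather than a single node at a time. Block samplers are particularly beneficial when parameters in the model are highly correlated a posteriori (see, e.g., Roberts and Sahu 1997). The conditional distribution for a block $B \subseteq S$ of nodes, given the rest of the nodes $V_{-B} = V \setminus B$, is

$$
p(B \mid V_{-B}) \propto \prod_{b \in B} p(b \mid \mathrm{pa}(b)) \prod_{u \in \mathrm{ch}(B)} p(u \mid \mathrm{pa}(u)),
$$

that is, the product of the prior factors of the members of the block and the likelihood factor of the block.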

Block samplers can be parallelised in a straightforward manner: if we consider a block $B$ as a single node, and define $\mathrm{ch}(B) = \bigcup_{b \in B} \mathrm{ch}(b)$, then the approach introduced above is immediately applicable, and both opportunities for parallelisation can be exploited for block updates. A mixture of single-node and block updaters can be used without complication.

In the seeds example it is possible to block together $\alpha_0, \alpha_1, \alpha_2, \alpha_{12}$. The block then has 21 children, and so our algorithm chooses to spread evaluation of their likelihood over multiple cores. The computation schedule remains identical to Table 1, but the block sampler waits until all the likelihoods corresponding to rows 7 to 10 of Table 1 are evaluated before determining each update for the $\{\alpha_0, \alpha_1, \alpha_2, \alpha_{12}\}$ block.

3. Implementation details

BUGS represents statistical models internally using a dynamic object-oriented data structure (Warford 2002) that is analogous to a DAG. The nodes of the graph are objects and the edges of the graph are pointers contained in these objects. Although the graph is specified in terms of the parents of each node, BUGS identifies the children of each node and stores them as a list embedded in each parameter node. Each node object has a value and a method to calculate its probability density function. For observations and fixed hyperparameters the value is fixed and is read in from a data file; for parameters the value is variable and is sampled within an MCMC algorithm. Each MCMC sampling algorithm is represented by a class (Warford 2002), and a new sampling object of an appropriate class is created for each parameter in the statistical model. Each sampling object contains a link to the node (or block of nodes) in the graphical model that represents the parameter (or block of parameters) being sampled. One complete MCMC update of the model involves a traversal of a list of all these sampling objects, with each object’s sampling method called in turn. Lunn et al. (2000) provide further background on the internal design of BUGS.
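To make this structure concrete, here is a small Python analogue with hypothetical class names: nodes hold parent pointers, children lists are derived from them, there is one sampling object per parameter, and one MCMC update traverses the sampler list. It illustrates the design only and is not BUGS source code; the random walk Metropolis sampler and the toy normal model are assumptions made for the example.

```python
import math
import random

def normal_logpdf(x, mean, sd):
    return -0.5 * ((x - mean) / sd) ** 2 - math.log(sd) - 0.5 * math.log(2.0 * math.pi)

class Node:
    """A graph node: a value, pointers to its parents, and a list of child pointers."""
    def __init__(self, name, value, log_density, parents=()):
        self.name, self.value = name, value
        self.parents = list(parents)
        self.children = []
        self._log_density = log_density
        for parent in self.parents:  # children lists are derived from the parent links
            parent.children.append(self)

    def log_density(self):
        return self._log_density(self)

class RandomWalkSampler:
    """One sampling object per parameter, holding a link to the node it updates."""
    def __init__(self, node, step, rng):
        self.node, self.step, self.rng = node, step, rng

    def log_conditional(self):
        # Prior factor plus the likelihood factor over the node's child list (Equation 1).
        return self.node.log_density() + sum(u.log_density() for u in self.node.children)

    def sample(self):
        current, current_lc = self.node.value, self.log_conditional()
        self.node.value = current + self.rng.gauss(0.0, self.step)
        if math.log(1.0 - self.rng.random()) >= self.log_conditional() - current_lc:
            self.node.value = current  # reject the proposal

def mcmc_update(samplers):
    """One complete MCMC update: call each sampling object's method in turn."""
    for sampler in samplers:
        sampler.sample()

# Hypothetical model: mu ~ Normal(0, 10^2), y_k ~ Normal(mu, 1).
mu = Node("mu", 0.0, lambda n: normal_logpdf(n.value, 0.0, 10.0))
ys = [Node(f"y{k}", y, lambda n: normal_logpdf(n.value, n.parents[0].value, 1.0), [mu])
      for k, y in enumerate([1.2, 0.8, 1.5, 1.1])]
samplers = [RandomWalkSampler(mu, step=0.5, rng=random.Random(42))]
draws = []
for _ in range(3000):
    mcmc_update(samplers)
    draws.append(mu.value)
```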

The MultiBUGS software consists of two distinct computer programs: a user interface and a computational engine. The computational engine is a small program assembled by linking together some modules of the OpenBUGS software plus a few additional modules that implement our parallelisation algorithm. Copies of the computational engine run on multiple cores and communicate with each other using version 2.0 of the message passing interface (MPI) standard (Pacheco 1997). The user interface program is a slight modification (and extension) of the OpenBUGS software. The user interface program compiles an executable “worker program” that contains the computational engine required for a particular statistical model. It also writes out a file containing a representation of the data structures that specify the statistical model. It then starts a number of copies of the computational engine on separate computer cores. These worker programs then read in the model representation file to rebuild the graphical model and start generating MCMC samples using our distributed algorithms. The worker programs communicate with the user interface program via an MPI intercommunicator object. The user interface is responsible for calculating summary statistics of interest.

Both sources of parallelism described in Section 2.2 require only simple modifications of the data structures and algorithms used in the BUGS software. Each core keeps a copy of the current state of the Markov chain, as well as two pseudo-random number generation (PRNG) streams (Wilkinson 2006): a “core-specific” stream, initialised with a different seed for each core; and a “common” stream, initialised using the same seed on all cores. Initially, each core loads the sampling algorithm, the computation schedule, and the complete DAG, which is then altered as follows so that the combined computation across all cores is exactly the computation required for the original, complete DAG.
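The two streams can be sketched with Python's `random.Random`; the class name and the seed-offset scheme are hypothetical. Seeding neighbouring integers is the simplest possible choice and is used here only for illustration; a generator designed for parallel streams (for example, NumPy's `SeedSequence.spawn`) is a more principled option.

```python
import random

class CoreStreams:
    """The two PRNG streams held by one core (class and seeding scheme are hypothetical)."""
    def __init__(self, core_id, common_seed, core_seed_base=1000):
        self.common = random.Random(common_seed)                      # same seed on all cores
        self.core_specific = random.Random(core_seed_base + core_id)  # different seed per core

cores = [CoreStreams(core_id=c, common_seed=2024) for c in range(4)]
common_draws = [core.common.random() for core in cores]           # identical on every core
specific_draws = [core.core_specific.random() for core in cores]  # differ between cores
print(len(set(common_draws)), len(set(specific_draws)))
```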

When the calculation of a parameter’s likelihood is parallelised across cores, the list of children associated with the parameter on each core is thinned (pruned) so that it contains only the children in the corresponding partition component of $\mathrm{ch}(v)$. The BUGS MCMC sampling algorithm implementations then require only minor changes so that the partial likelihoods are communicated between cores. For example, a random walk Metropolis algorithm (Metropolis et al. 1953) is performed as follows. First, on each core, the prior factor and a partial likelihood contribution to the conditional distribution are calculated for the current value of the parameter. Each core then samples a candidate value; these candidates will be identical across cores, since the “common” PRNG stream is used. The prior and partial likelihood contributions are then calculated for the candidate value, and the differences between the candidate and current partial log-likelihood contributions are combined across cores using the MPI function Allreduce. The usual Metropolis test can then be applied on each core in parallel using the “common” PRNG stream, after which the state of the Markov chain is identical across cores. Computation of the prior factor and the Metropolis test is intentionally duplicated on every core, because we found that the time taken to evaluate these quantities is usually shorter than the time taken to propagate their result across cores.
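The following Python sketch simulates this distributed random walk Metropolis step for a single parameter within one process. Allreduce is replaced by a stand-in that returns the same sum to every simulated core, and the normal model, data and function names are hypothetical. Because every core holds an identical copy of the common stream, all cores propose the same candidate and reach the same accept/reject decision, and the chain matches a single-core run up to floating-point rounding.

```python
import math
import random

def normal_logpdf(x, mean, sd):
    return -0.5 * ((x - mean) / sd) ** 2 - math.log(sd) - 0.5 * math.log(2.0 * math.pi)

def allreduce_sum(values):
    """Stand-in for MPI Allreduce with a sum: every core receives the same total."""
    total = sum(values)
    return [total] * len(values)

def distributed_rw_step(state, data, common_rngs, step=0.5):
    """One random-walk Metropolis update of theta, with its children split across cores.

    Hypothetical model: theta ~ Normal(0, 10^2) and each y ~ Normal(theta, 1).
    state[c] is core c's copy of theta; common_rngs[c] is core c's copy of the common stream.
    """
    num_cores = len(state)
    candidates, partial_diffs = [], []
    for c in range(num_cores):
        # Same stream state on every core, so every core proposes the same candidate.
        cand = state[c] + common_rngs[c].gauss(0.0, step)
        mine = data[c::num_cores]  # this core's partition component of ch(theta)
        partial_diffs.append(sum(normal_logpdf(y, cand, 1.0) - normal_logpdf(y, state[c], 1.0)
                                 for y in mine))
        candidates.append(cand)
    loglik_diff = allreduce_sum(partial_diffs)
    new_state = []
    for c in range(num_cores):
        # Prior factor and Metropolis test are deliberately duplicated on every core.
        log_ratio = (loglik_diff[c] + normal_logpdf(candidates[c], 0.0, 10.0)
                     - normal_logpdf(state[c], 0.0, 10.0))
        u = 1.0 - common_rngs[c].random()  # in (0, 1], so log(u) is finite
        new_state.append(candidates[c] if math.log(u) < log_ratio else state[c])
    return new_state

def run(num_cores, iterations=200, seed=7):
    data = [0.5 + 0.1 * (k % 7) for k in range(40)]
    rngs = [random.Random(seed) for _ in range(num_cores)]
    state = [0.0] * num_cores
    for _ in range(iterations):
        state = distributed_rw_step(state, data, rngs)
    return state
```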

When a set of parameters $W$ is sampled in parallel over the worker cores, the list of MCMC sampling objects is thinned on each core so that only the parameters specified by the corresponding column of the computation schedule are updated on each core. The existing MCMC sampling algorithm implementations used in OpenBUGS can then be used without modification with each “core-specific” PRNG stream. The MPI function Allgather is used to send newly sampled parameters to each core. Note that Allgather needs to be run only after each core has sampled all of its assigned components in $W$, rather than after each component in $W$ is sampled; in the seeds example, for instance, Allgather is used after row 6 of Table 1. This considerably reduces message-passing overheads when the number of elements in $W$ is large.
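A single-process Python sketch of this pattern for the random effects of the seeds example, using the first six rows of Table 1. The Gaussian draw is a placeholder for whatever sampler updates each $\beta_i$, and `allgather` is a stand-in for the MPI function; all names are hypothetical.

```python
import random

def allgather(pieces):
    """Stand-in for MPI Allgather: each core ends up with every core's newly sampled values."""
    merged = {}
    for piece in pieces:
        merged.update(piece)
    return [dict(merged) for _ in pieces]

def update_independent_set(states, rows, core_rngs):
    """Update one conditionally-independent set W laid out over `rows` of the schedule.

    Core c works through column c of every row with its core-specific stream; a single
    Allgather follows the whole set rather than each row. The Gaussian draw is a
    placeholder standing in for a real sampler.
    """
    pieces = []
    for c, rng in enumerate(core_rngs):
        piece = {}
        for row in rows:
            if row[c] is not None:  # None is a blank: core c is idle
                piece[row[c]] = rng.gauss(0.0, 1.0)
        pieces.append(piece)
    gathered = allgather(pieces)
    return [{**state, **new} for state, new in zip(states, gathered)]

betas = [f"beta{i}" for i in range(1, 22)]
rows = [betas[s:s + 4] + [None] * (4 - len(betas[s:s + 4])) for s in range(0, 21, 4)]
states = [{**{b: 0.0 for b in betas}, "sigma_beta": 1.0} for _ in range(4)]
core_rngs = [random.Random(100 + c) for c in range(4)]
new_states = update_independent_set(states, rows, core_rngs)
```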

Running multiple chains is handled via standard MPI methods. If we have, say, two chains and eight cores, we partition the cores into two sets of four cores and set up separate MPI collective communicators (Pacheco 1997) for each set of cores, for Allreduce and Allgather to use. Requests can be sent from the master to the workers using the intercommunicator, and results returned. We find it useful to designate a special “lead worker” for each chain that we simulate. Each of these lead workers sends back sampled values to the master, where summary statistics can be collected. Only sampled values corresponding to quantities that the user is monitoring need to be returned to the master, which can considerably reduce the amount of communication between the workers and the master.
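The bookkeeping for dividing cores between chains can be sketched as below (hypothetical names). In an MPI program, the per-chain communicators could be created by splitting the worker communicator with a colour equal to the chain index (for example, with mpi4py's `Comm.Split`); raising an error when the cores do not divide equally is an assumption of this sketch.

```python
def chain_layout(num_cores, num_chains):
    """Split worker ranks 0..num_cores-1 equally between chains; the first rank leads each chain."""
    if num_cores % num_chains != 0:
        raise ValueError("cores must divide equally across chains")
    per_chain = num_cores // num_chains
    layout = []
    for chain in range(num_chains):
        ranks = list(range(chain * per_chain, (chain + 1) * per_chain))
        layout.append({"chain": chain, "ranks": ranks, "lead_worker": ranks[0]})
    return layout

def color_for_rank(rank, num_cores, num_chains):
    """The colour a worker would pass to a communicator split: its chain index."""
    return rank // (num_cores // num_chains)

# Two chains on eight cores, as in the text.
for entry in chain_layout(8, 2):
    print(entry)
```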

4. Basic usage of MultiBUGS

The procedure for running a model in MultiBUGS is largely the same as in WinBUGS or OpenBUGS. MultiBUGS adopts the standard BUGS language for specifying models, the core of which is common also to WinBUGS, OpenBUGS, JAGS and NIMBLE. A detailed tutorial on the use of BUGS can be found in, for example, Lunn et al. (2013).

An analysis is specified in MultiBUGS using the Specification Tool, by checking the syntax of a model, loading the data, compiling, and setting up initial values.

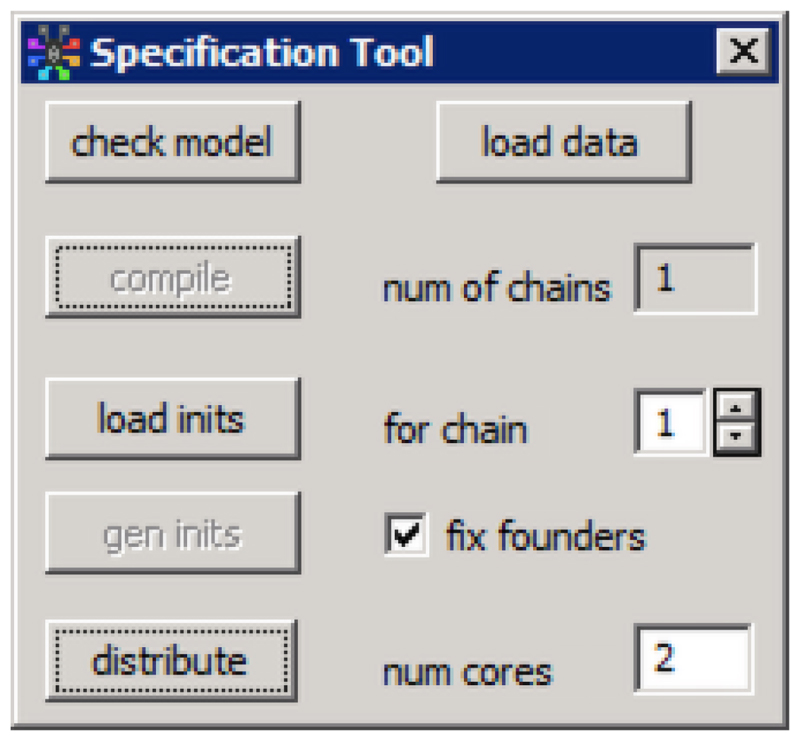

We then specify the total number of cores to distribute computation across by entering a number in the box labelled num cores (figure 2) and then clicking ( ). This should be set at a value less than or equal to the number of processing cores available on your computer (the default is 2). If multiple chains are run, the cores will be divided equally across chains. We recommend that users experiment with different numbers of cores, since the setting that leads to fastest computation depends both on the specific model and data being analysed and on the computing hardware being used. While increased parallelisation will often result in faster computation, in some cases communication overheads will balloon to the point where parallelisation gains are overturned. Furthermore, Amdahl’s bound (Amdahl 1967) on the speed-up that is theoretically obtainable with increased parallelisation may also be hit in some settings. Note that changing the number of cores will alter the exact samples obtained, since this affects the PRNG stream used to draw each sample (as described in Section 3).

). This should be set at a value less than or equal to the number of processing cores available on your computer (the default is 2). If multiple chains are run, the cores will be divided equally across chains. We recommend that users experiment with different numbers of cores, since the setting that leads to fastest computation depends both on the specific model and data being analysed and on the computing hardware being used. While increased parallelisation will often result in faster computation, in some cases communication overheads will balloon to the point where parallelisation gains are overturned. Furthermore, Amdahl’s bound (Amdahl 1967) on the speed-up that is theoretically obtainable with increased parallelisation may also be hit in some settings. Note that changing the number of cores will alter the exact samples obtained, since this affects the PRNG stream used to draw each sample (as described in Section 3).

Figure 2.

The Specification Tool in MultiBUGS, including the ‘distribute’ button, which is used to initialise the parallelisation.
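Amdahl’s bound can be made concrete with a few lines of Python. The 95% parallel fraction is a hypothetical value, not a measurement of any model in this paper, and the bound ignores communication overheads, so real speed-ups will be lower.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Amdahl's bound: best possible speed-up when only `parallel_fraction` of the
    serial run time can be spread over `cores` (communication costs are ignored)."""
    return 1.0 / ((1.0 - parallel_fraction) + parallel_fraction / cores)

# Hypothetical parallel fraction of 95%; as cores grow the bound approaches 1 / 0.05 = 20.
for cores in (2, 4, 8, 16, 48):
    print(cores, round(amdahl_speedup(0.95, cores), 2))
```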

Samples are drawn using the Update Tool. The Sample Monitor Tool is used exactly as in WinBUGS and OpenBUGS to monitor parameters; to assess MCMC convergence using, for example, the Brooks-Gelman-Rubin diagnostic (Gelman and Rubin 1992; Brooks and Gelman 1998); and to obtain results. Analyses can be automated in MultiBUGS using the same simple procedural scripting language that is available in OpenBUGS. The new command modelDistribute(C) can be used to specify that computation should be parallelised across C cores.

4.1. Seeds example

The model, data and initial conditions for the seeds example can be found within MultiBUGS. This is a simple model involving a small number of parameters and observations, so computation is already fast in OpenBUGS and is no faster in MultiBUGS (both take less than a second to do 1000 MCMC updates), because the benefit of parallelisation is cancelled out by communication overheads. However, for some more complicated models, MultiBUGS will be dramatically faster than OpenBUGS. We illustrate this with an example based on e-health data.

5. Illustration of usage with hierarchical e-health data

Our e-health example is based on a large linked database of methadone prescriptions given to opioid-dependent patients in Scotland, which was used to examine the influence of patient characteristics on the doses prescribed (Gao et al. 2016; Dimitropoulou et al. 2017). This example is typical of many databases of linked health information drawn from primary care records, hospital records, prescription data and disease/death registries. Each data source often has a hierarchical structure, arising from regions, institutions and repeated measurements within individuals. Here, since we are unable to share the original dataset, we analyse a synthetic dataset, simulated to match the key traits of the original dataset.

The model includes 20,426 random effects in total, and was fitted to 425,112 observations. It is possible to fit this model using standard MCMC simulation in OpenBUGS but, unsurprisingly, the model runs extremely slowly and it takes 32 hours to perform a sufficient number of iterations (15,000) to satisfy standard convergence assessment diagnostics. In such data sets it can be tempting to choose a much simpler and faster method of analysis, but this may not allow appropriately for the hierarchical structure or enable exploration of sources of variation.

Instead it is preferable to fit the desired hierarchical model using MCMC simulation, while speeding up computation as much as possible by exploiting parallel processing.

The model code, data and initial conditions can be found within MultiBUGS in ( ).


5.1. E-health data

The data have a hierarchical structure, with multiple prescriptions nested within patients within regions. For some of the outcome measurements (240,776 observations), person identifiers and person-level covariates are available. These outcome measurements $y_{ijk}$ represent the quantity of methadone prescribed on occasion $k$ for person $j$ in region $i$ ($i = 1, \ldots, 8$; $j = 1, \ldots, J_i$; $k = 1, \ldots, K_{ij}$), and are recorded in the file ehealth_data_id_available. Each of these measurements is associated with a binary covariate $r_{ijk}$ (called source.indexed) that indicates the source of the prescription on occasion $k$ for person $j$ in region $i$, with $r_{ijk} = 1$ indicating that the prescription was from a General Practitioner (family physician). No person identifiers or person-level covariates are available for the remaining outcome measurements (184,336 observations). We denote by $z_{il}$ the outcome measurement for the $l$th prescription without person identifiers in region $i$ ($i = 1, \ldots, 8$; $l = 1, \ldots, L_i$). These data are in the file ehealth_data_id_missing. A binary covariate $s_{il}$ (called source.nonindexed) indicates the source of the $l$th prescription without person identifiers in region $i$, with $s_{il} = 1$ indicating that the prescription was from a General Practitioner. The final data file, ehealth_data_n, contains several totals used in the BUGS code.

5.2. E-health model

We model the effect of the covariates with a regression model, with regression parameter $\beta_m$ corresponding to the $m$th covariate $x_{mij}$ ($m = 1, \ldots, 4$), while allowing for within-region correlation via region-level random effects $u_i$ and within-person correlation via person-level random effects $w_{ij}$; source effects $v_i$ are assumed random across regions.
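Written out in the notation above, the model implied by the BUGS code in this section is as follows. This is our reconstruction from that code; the additive-error form shown here is equivalent to the normal likelihoods used there.

$$
\begin{aligned}
y_{ijk} &= \sum_{m=1}^{4} \beta_m x_{mij} + u_i + v_i r_{ijk} + w_{ij} + \varepsilon_{ijk}, &\quad \varepsilon_{ijk} &\sim N(\mu_\varepsilon, \sigma_\varepsilon^2),\\
z_{il} &= \lambda + u_i + v_i s_{il} + \eta_{il}, &\quad \eta_{il} &\sim N(\mu_\eta, \sigma_\eta^2),\\
u_i &\sim N(\mu_u, \sigma_u^2), \qquad v_i \sim N(\mu_v, \sigma_v^2), &\quad w_{ij} &\sim N(\mu_w, \sigma_w^2).
\end{aligned}
$$

In the code, region.effect, source.effect and person.effect play the roles of $u_i$, $v_i$ and $w_{ij}$, and lambda, mu.region and mu.source those of $\lambda$, $\mu_u$ and $\mu_v$.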

The means $\mu_w$ and $\mu_\varepsilon$ are both fixed to 0.

The outcome measurements $z_{il}$ contribute only to estimation of the regional effects $u_i$ and source effects $v_i$.

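The error variance $\sigma_\eta^2$ represents a mixture of between-person and between-occasion variation. We fix the error mean $\mu_\eta = 0$. We assume uniform priors for $\sigma_u, \sigma_v, \sigma_w, \sigma_\varepsilon, \sigma_\eta$ on the range $\sigma_{\min} = 0$ to $\sigma_{\max} = 10$, and normal priors for $\beta_1, \ldots, \beta_4$, $\mu_u$, $\mu_v$ and $\lambda$ with means $\beta_{\text{mean}} = \mu_{\text{mean}} = \lambda_{\text{mean}} = 0$ and standard deviations $\beta_{\text{sd}} = \mu_{\text{sd}} = \lambda_{\text{sd}} = 100$. Figure 3 is a DAG representation of this model.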
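BUGS parameterises dnorm by its precision (inverse variance) rather than its standard deviation. That is why the normal priors with standard deviation 100 appear as dnorm(0, 0.0001) in the BUGS code for this model, and why each tau.* node is defined as 1/pow(sd.*, 2). The short Python check below redoes this conversion; the helper names are our own, for illustration only.

```python
import math


def sd_to_precision(sd: float) -> float:
    """Precision (1 / variance) that BUGS dnorm expects for a given standard deviation."""
    return 1.0 / sd ** 2


def precision_to_sd(tau: float) -> float:
    """Standard deviation implied by a BUGS dnorm precision."""
    return 1.0 / math.sqrt(tau)


# Vague normal priors on beta, mu and lambda: sd 100 <-> precision 0.0001
prior_tau = sd_to_precision(100.0)
print(prior_tau)  # 0.0001

# sd.* ~ dunif(0, 10) and tau.* <- 1/pow(sd.*, 2), so each tau.* is at least 1/10^2
min_tau = sd_to_precision(10.0)
print(min_tau)
```

Placing the uniform priors on the standard deviations, and deriving the precisions deterministically, keeps the prior specification on the same scale as the quantities reported in the results.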

The data have been suitably transformed so that fitting a linear model is appropriate. We do not consider alternative approaches to analysing the data set. The key parameters of interest are the regression parameters $\beta_1, \ldots, \beta_4$ and the standard deviations $\sigma_u$ and $\sigma_v$ for the region and source random effects.

This model can be specified in BUGS as follows:

```
model {
  # Outcomes with person-level data available
  for (i in 1:n.indexed) {
    outcome.y[i] ~ dnorm(mu.indexed[i], tau.epsilon)
    mu.indexed[i] <- beta[1] * x1[i] +
      beta[2] * x2[i] +
      beta[3] * x3[i] +
      beta[4] * x4[i] +
      region.effect[region.indexed[i]] +
      source.effect[region.indexed[i]] * source.indexed[i] +
      person.effect[person.indexed[i]]
  }

  # Outcomes without person-level data available
  for (i in 1:n.nonindexed) {
    outcome.z[i] ~ dnorm(mu.nonindexed[i], tau.eta)
    mu.nonindexed[i] <- lambda +
      region.effect[region.nonindexed[i]] +
      source.effect[region.nonindexed[i]] *
        source.nonindexed[i]
  }

  # Hierarchical priors
  for (i in 1:n.persons) {
    person.effect[i] ~ dnorm(0, tau.person)
  }
  for (i in 1:n.regions) {
    region.effect[i] ~ dnorm(mu.region, tau.region)
    source.effect[i] ~ dnorm(mu.source, tau.source)
  }
  lambda ~ dnorm(0, 0.0001)
  mu.region ~ dnorm(0, 0.0001)
  mu.source ~ dnorm(0, 0.0001)

  # Priors for regression parameters
  for (m in 1:4) {
    beta[m] ~ dnorm(0, 0.0001)
  }

  # Priors for variance parameters
  tau.eta <- 1/pow(sd.eta, 2)
  sd.eta ~ dunif(0, 10)
  tau.epsilon <- 1/pow(sd.epsilon, 2)
  sd.epsilon ~ dunif(0, 10)
  tau.person <- 1/pow(sd.person, 2)
  sd.person ~ dunif(0, 10)
  tau.source <- 1/pow(sd.source, 2)
  sd.source ~ dunif(0, 10)
  tau.region <- 1/pow(sd.region, 2)
  sd.region ~ dunif(0, 10)
}
```

5.3. E-health initial values

For chain 1, we used the following initial values:

```
list(lambda = 0, beta = c(0, 0, 0, 0), mu.source = 0, sd.epsilon = 0.5,
     sd.person = 0.5, sd.source = 0.5, sd.region = 0.5, sd.eta = 0.5)
```

and for chain 2 we used:

```
list(lambda = 0.5, beta = c(0.5, 0.5, 0.5, 0.5), mu.source = 0.5,
     sd.epsilon = 1, sd.person = 1, sd.source = 1, sd.region = 1,
     sd.eta = 1)
```

5.4. Parallelisation in MultiBUGS

After setting the number of cores, the computation schedule chosen by MultiBUGS can be viewed in ( ). MultiBUGS parallelises sampling of all the person-level random effects $w_{ij}$, except for the component corresponding to the person with the most observations (176 observations); MultiBUGS parallelises likelihood computation of this component instead. The likelihood computation of all the other parameters in the model is also parallelised, except for the mutually conditionally-independent sets $\{\mu_u, \mu_v\}$ and which are sampled in parallel in turn.


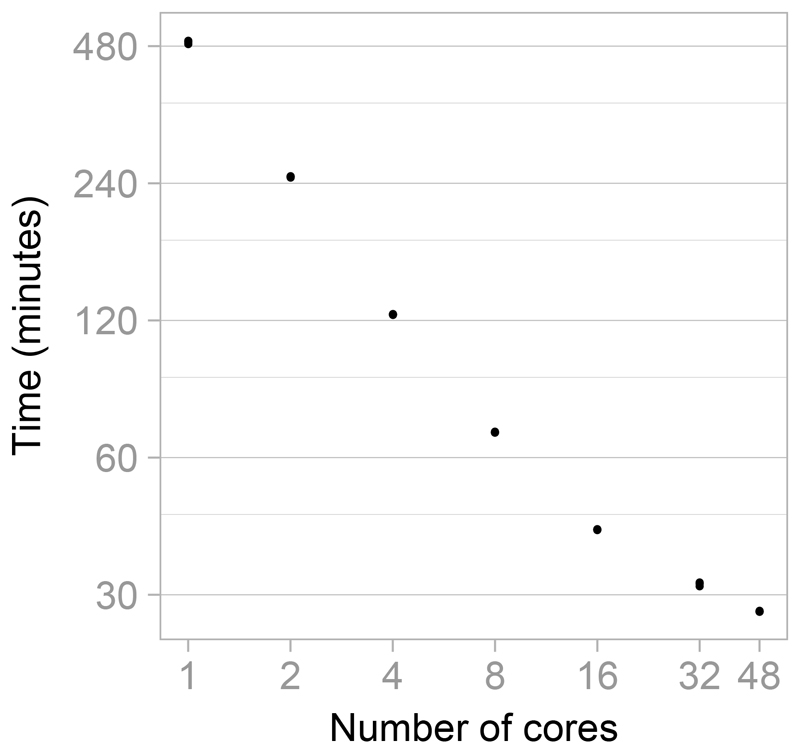
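Parallelising a product-form likelihood, as done here for the person with 176 observations, rests on the fact that the log of a product of child likelihood terms is a sum. Disjoint chunks of children can therefore be evaluated on different cores and only their partial sums combined. The Python sketch below illustrates that decomposition for a normal likelihood with a common mean playing the role of the parameter being updated. It is not MultiBUGS's implementation: the function names, the contiguous chunking scheme and the data are hypothetical, and a thread pool stands in for separate cores.

```python
import math
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence


def normal_logpdf(y: float, mean: float, sd: float) -> float:
    """Log density of N(mean, sd^2) evaluated at y."""
    z = (y - mean) / sd
    return -0.5 * z * z - math.log(sd) - 0.5 * math.log(2.0 * math.pi)


def chunk_loglik(ys: Sequence[float], mean: float, sd: float) -> float:
    """Sum of the log-likelihood terms contributed by one chunk of children."""
    return sum(normal_logpdf(y, mean, sd) for y in ys)


def split(ys: Sequence[float], n_chunks: int) -> List[Sequence[float]]:
    """Split the children into n_chunks contiguous, nearly equal-sized pieces."""
    size, extra = divmod(len(ys), n_chunks)
    chunks, start = [], 0
    for c in range(n_chunks):
        end = start + size + (1 if c < extra else 0)
        chunks.append(ys[start:end])
        start = end
    return chunks


def parallel_loglik(ys: Sequence[float], mean: float, sd: float, n_workers: int = 4) -> float:
    """Evaluate a product-form likelihood as a sum of per-worker partial sums."""
    chunks = split(ys, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(lambda c: chunk_loglik(c, mean, sd), chunks))
    # Only one number per worker has to be gathered and added up.
    return sum(partials)


# Hypothetical data standing in for the 176 observations of one person.
ys = [0.1 * ((7 * k) % 13) for k in range(176)]
serial = chunk_loglik(ys, 0.5, 1.0)
parallel = parallel_loglik(ys, 0.5, 1.0)
print(serial, parallel)
```

The two results agree up to floating-point rounding. Python threads will not actually speed up this CPU-bound loop because of the global interpreter lock; the point is only that each worker needs its own chunk of data and returns a single number, which is why the communication cost per update stays small when a parameter has many children.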

5.5. Run time comparisons across BUGS implementations

To demonstrate the speed-up possible in MultiBUGS across a range of numbers of cores, we ran two chains for 15,000 updates for the e-health example. This run length was chosen to mimic realistic statistical practice: after discarding the first 5,000 iterations as burn-in, visual inspection of chain-history plots and the Brooks-Gelman-Rubin diagnostic (Gelman and Rubin 1992; Brooks and Gelman 1998) indicated convergence. We ran the simulations (each replicated three times) on a sixty-four-core machine consisting of four sixteen-core 2.4 GHz AMD Opteron 6378 processors with 128 GB of shared RAM.

Figure 4 shows the run time against the number of cores on a log-log scale. Substantial time savings are achieved using MultiBUGS: on average, using one core took 8 hours 10 minutes, using two cores took 4 hours 8 minutes, and using forty-eight cores took only 28 minutes. In contrast, these simulations took 32 hours in standard single-core OpenBUGS 3.2.3, and 9 hours using JAGS 4.0.0 via R 3.3.1.
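These timings can be summarised as speed-ups (baseline run time divided by run time) and parallel efficiencies (speed-up divided by the number of cores). The Python snippet below simply redoes that arithmetic from the average run times reported above; the variable names are illustrative.

```python
# Average run times reported above, in minutes (15,000 iterations, two chains).
multibugs_minutes = {1: 8 * 60 + 10, 2: 4 * 60 + 8, 48: 28}
openbugs_minutes = 32 * 60
jags_minutes = 9 * 60


def speedup(baseline_minutes: float, minutes: float) -> float:
    """Ratio of a baseline run time to a faster run time."""
    return baseline_minutes / minutes


one_core = multibugs_minutes[1]
for cores, minutes in multibugs_minutes.items():
    s = speedup(one_core, minutes)
    # Parallel efficiency: speed-up per core used (1.0 would be perfect scaling).
    print(f"{cores:>2} cores: {minutes:3d} min, speed-up {s:5.2f}, efficiency {s / cores:.2f}")

print(f"48 cores vs single-core OpenBUGS: {speedup(openbugs_minutes, multibugs_minutes[48]):.1f}x")
print(f"48 cores vs JAGS: {speedup(jags_minutes, multibugs_minutes[48]):.1f}x")
```

Two cores give a speed-up of about 1.98 over one core (efficiency 0.99), while forty-eight cores give a speed-up of 17.5 (efficiency about 0.36). Relative to the other software, the forty-eight-core run is about 68.6 times faster than single-core OpenBUGS and about 19.3 times faster than JAGS.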

Figure 4.

Run time against number of cores for 15,000 iterations of the e-health example model, running two chains simultaneously. The run times of each of the three replicate runs are shown. Both time and number of cores are displayed on a log scale.

The scaling of performance with increasing number of cores is good up to sixteen cores, after which the gains diminish. This may be because inter-core communication is much faster within each sixteen-core processor than across processors, or because of the diminishing returns anticipated by Amdahl's law (Amdahl 1967). Running only one chain took approximately half the run time needed for two chains.
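Amdahl's law states that if a fraction $p$ of the work parallelises perfectly, the speed-up on $n$ cores is at most $S(n) = 1/\{(1-p) + p/n\}$. As a rough illustration only, assuming the whole forty-eight-core speed-up (490 / 28 = 17.5) were explained by Amdahl's law and ignoring the cross-processor communication effect, the implied parallel fraction can be solved for directly, since $p = (1 - 1/S)/(1 - 1/n)$. The function names below are our own.

```python
def amdahl_speedup(p: float, n: int) -> float:
    """Amdahl's law: ideal speed-up on n cores when a fraction p of the work is parallel."""
    return 1.0 / ((1.0 - p) + p / n)


def implied_parallel_fraction(speedup: float, n: int) -> float:
    """Invert Amdahl's law to get p from an observed speed-up on n cores."""
    return (1.0 - 1.0 / speedup) / (1.0 - 1.0 / n)


# One-core versus forty-eight-core MultiBUGS run times, in minutes.
observed = (8 * 60 + 10) / 28
p = implied_parallel_fraction(observed, 48)
print(f"implied parallel fraction p = {p:.3f}")

# Speed-ups this single-p model would predict for other core counts.
for n in (2, 16, 48, 64):
    print(n, round(amdahl_speedup(p, n), 1))
```

This gives $p \approx 0.963$. The same $p$ predicts a two-core speed-up of about 1.93, below the observed 1.98, so a single Amdahl fraction does not describe the whole curve; it is best read as one candidate explanation alongside the communication effect, not a fitted model of MultiBUGS performance.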

5.6. Results

The posterior summary table we obtained is as follows:

| Node | mean | median | sd | MC_error | val2.5pc | val97.5pc | start | sample | ESS |
|---|---|---|---|---|---|---|---|---|---|
| beta[1] | -0.07124 | -0.07137 | 0.01272 | 5.784E-4 | -0.09561 | -0.0461 | 5001 | 20000 | 483 |
| beta[2] | -0.2562 | -0.2563 | 0.02437 | 9.186E-4 | -0.3036 | -0.208 | 5001 | 20000 | 704 |
| beta[3] | 0.1308 | 0.1311 | 0.0114 | 5.7E-4 | 0.1085 | 0.1528 | 5001 | 20000 | 399 |
| beta[4] | 0.13 | 0.1305 | 0.0182 | 7.083E-4 | 0.09474 | 0.1651 | 5001 | 20000 | 660 |
| sd.region | 1.259 | 1.157 | 0.4606 | 0.005305 | 0.7024 | 2.445 | 5001 | 20000 | 7536 |
| sd.source | 0.3714 | 0.3417 | 0.1359 | 0.001611 | 0.2057 | 0.7153 | 5001 | 20000 | 7116 |
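The sample column (20,000) is the two chains' 10,000 post-burn-in iterations each (15,000 minus 5,000). The MC_error column is consistent, to the precision shown, with the usual Monte Carlo standard error of a posterior mean, the posterior sd divided by the square root of the effective sample size (ESS). The Python check below recomputes it from the sd and ESS columns; that MultiBUGS computes MC_error in exactly this way is our assumption, not something stated here.

```python
import math

# (sd, MC_error, ESS) copied from the posterior summary table above.
summary = {
    "beta[1]": (0.01272, 5.784e-4, 483),
    "beta[2]": (0.02437, 9.186e-4, 704),
    "beta[3]": (0.0114, 5.7e-4, 399),
    "beta[4]": (0.0182, 7.083e-4, 660),
    "sd.region": (0.4606, 0.005305, 7536),
    "sd.source": (0.1359, 0.001611, 7116),
}


def mc_error(sd: float, ess: float) -> float:
    """Monte Carlo standard error of a posterior mean: sd / sqrt(ESS)."""
    return sd / math.sqrt(ess)


for node, (sd, reported, ess) in summary.items():
    print(f"{node:10s} reported {reported:.3e}  sd/sqrt(ESS) {mc_error(sd, ess):.3e}")
```

Every row agrees to within about 0.2%, the remaining differences coming from the rounding of the displayed sd values. The much smaller ESS for the regression coefficients than for the two standard deviations reflects stronger autocorrelation in their chains.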

6. Discussion

MultiBUGS makes Bayesian inference using multi-core processing accessible for the first time to applied statisticians working with the broad class of statistical models available in BUGS language software. It adopts a pragmatic algorithm for parallelising MCMC sampling, which we have demonstrated speeds up inference in a hierarchical linear random-effects model involving a large number of random effects and observations. While a large literature has developed proposing methods for parallelising MCMC algorithms (see Section 1), a generic, easy-to-use implementation of these ideas has until now been lacking. Almost all users of BUGS language software will have a multi-core computer available, since desktop computers now typically have a moderate number of cores (up to ten), and laptops typically have two to four. Workstations with even larger numbers of cores are also becoming available: for example, Intel's Xeon Phi x200 processor contains between sixty-four and seventy-two cores.

The magnitude of speed-up provided by MultiBUGS depends on the model and data being analysed and on the computer hardware being used. Two levels of parallelisation can be used in MultiBUGS: independent MCMC chains can be run in parallel, and computation within a single MCMC chain can be parallelised. The first level of parallelisation will almost always be advantageous whenever sufficient cores are available, since no communication across cores is needed. The gain from the second level of parallelisation is problem specific: it will be largest for models involving parameters with a large number of likelihood terms and/or a large number of conditionally independent parameters. For example, MultiBUGS is able to parallelise inference for many standard regression-type models involving both fixed and random effects, especially with a large number of observations, since fixed-effect regression parameters will have a large number of children (the observations), and random effects will typically be conditionally independent. For models without these features, the overheads of the second level of parallelisation may outweigh the gains on some computing hardware, meaning only the first level of parallelisation is beneficial.

The mixing properties of the simulated MCMC chains are the same in OpenBUGS and MultiBUGS, because they use the same collection of underlying MCMC sampling algorithms. Models with severe MCMC mixing problems in OpenBUGS are thus not resolved in MultiBUGS. However, since MultiBUGS can speed up MCMC simulation, it may be practicable to circumvent milder mixing issues by simply increasing the run length.

Several extensions and developments are planned for MultiBUGS in the future. First, at present MultiBUGS requires the Microsoft Windows operating system. However, most large computational clusters use the Linux operating system, so a version of MultiBUGS running on Linux is under preparation. Second, MultiBUGS currently loads data and builds its internal graph representation of a model on a single core. This process will need to be rethought for extremely large datasets and graphical models.

Supplementary Material

Acknowledgements

This work was supported by the UK Medical Research Council [programme codes MC UU 00002/2 (RJBG), MC UU 12023/21 (RT), MC UU 00002/11 (DDA and AT)]. We are grateful to Chris Jewell, Sylvia Richardson and Christopher Jackson for helpful discussions of this work; to the Associate Editor and Reviewers for their insightful comments; and also to all contributors to the BUGS project upon which MultiBUGS is based.

Contributor Information

Robert J. B. Goudie, MRC Biostatistics Unit, University of Cambridge

Rebecca M. Turner, MRC Clinical Trials Unit, University College London

References

- Amdahl GM. Validity of the Single Processor Approach to Achieving Large Scale Computing Capabilities. Proceedings of the April 18–20, 1967, Spring Joint Computer Conference. Reston, VA: AFIPS Press; 1967. pp. 483–485.

- Angelino E, Kohler E, Waterland A, Seltzer M, Adams RP. Accelerating MCMC via Parallel Predictive Prefetching. Proceedings of the Thirtieth Annual Conference on Uncertainty in Artificial Intelligence (UAI-14). Corvallis, OR; 2014. pp. 22–31.

- Bottolo L, Chadeau-Hyam M, Hastie DI, Zeller T, Liquet B, Newcombe P, Yengo L, Wild PS, Schillert A, Ziegler A, Nielsen SF, et al. GUESSing Polygenic Associations with Multiple Phenotypes Using a GPU-Based Evolutionary Stochastic Search Algorithm. PLOS Genetics. 2013;9(8):e1003657. doi: 10.1371/journal.pgen.1003657.

- Bradford R, Thomas A. Markov Chain Monte Carlo Methods for Family Trees Using a Parallel Processor. Statistics and Computing. 1996;6(1):67–75.

- Breslow NE, Clayton DG. Approximate Inference in Generalized Linear Mixed Models. Journal of the American Statistical Association. 1993;88(421):9–25.

- Brockwell AE. Parallel Markov Chain Monte Carlo Simulation by Pre-Fetching. Journal of Computational and Graphical Statistics. 2006;15(1):246–261.

- Brooks SP, Gelman A. General Methods for Monitoring Convergence of Iterative Simulations. Journal of Computational and Graphical Statistics. 1998;7(4):434–455.

- Calderhead B. A General Construction for Parallelizing Metropolis-Hastings Algorithms. Proceedings of the National Academy of Sciences of the United States of America. 2014;111(49):17408–17413. doi: 10.1073/pnas.1408184111.

- Carpenter B, Gelman A, Hoffman MD, Lee D, Goodrich B, Betancourt M, Brubaker M, Guo J, Li P, Riddell A. Stan: A Probabilistic Programming Language. Journal of Statistical Software. 2017;76(1):1–32. doi: 10.18637/jss.v076.i01.

- Crowder MJ. Beta-Binomial Anova for Proportions. Journal of the Royal Statistical Society C. 1978;27(1):34–37.

- de Valpine P, Turek D, Paciorek CJ, Anderson-Bergman C, Lang DT, Bodik R. Programming with Models: Writing Statistical Algorithms for General Model Structures with NIMBLE. Journal of Computational and Graphical Statistics. 2017;26(2):403–413.

- Dimitropoulou P, Turner R, Kidd D, Nicholson E, Nangle C, McTaggart S, Bennie M, Bird S. Methadone Prescribing in Scotland: July 2009 to June 2013. 2017. Unpublished.

- Gao L, Dimitropoulou P, Robertson JR, McTaggart S, Bennie M, Bird SM. Risk-Factors for Methadone-Specific Deaths in Scotland's Methadone-Prescription Clients between 2009 and 2013. Drug and Alcohol Dependence. 2016;167:214–223. doi: 10.1016/j.drugalcdep.2016.08.627.

- Gelfand AE, Smith AFM. Sampling-Based Approaches to Calculating Marginal Densities. Journal of the American Statistical Association. 1990;85(410):398–409.

- Gelman A, Rubin DB. Inference from Iterative Simulation Using Multiple Sequences. Statistical Science. 1992;7(4):457–472.

- Geman S, Geman D. Stochastic Relaxation, Gibbs Distributions, and the Bayesian Restoration of Images. IEEE Transactions on Pattern Analysis and Machine Intelligence. 1984;PAMI-6(6):721–741. doi: 10.1109/tpami.1984.4767596.

- Goudie RJB, Presanis AM, Lunn D, De Angelis D, Wernisch L. Joining and Splitting Models with Markov Melding. Bayesian Analysis. 2018. doi: 10.1214/18-BA1104.

- Jewell CP, Kypraios T, Neal P, Roberts GO. Bayesian Analysis for Emerging Infectious Diseases. Bayesian Analysis. 2009;4(2):191–222.

- Lauritzen SL, Dawid AP, Larsen BN, Leimer HG. Independence Properties of Directed Markov Fields. Networks. 1990;20(5):491–505.

- Lunn D, Jackson C, Best N, Thomas A, Spiegelhalter D. The BUGS Book: A Practical Introduction to Bayesian Analysis. Boca Raton, FL: CRC Press; 2013.

- Lunn D, Spiegelhalter DJ, Thomas A, Best N. The BUGS Project: Evolution, Critique and Future Directions. Statistics in Medicine. 2009;28(25):3049–3067. doi: 10.1002/sim.3680.

- Lunn D, Thomas A, Best N, Spiegelhalter DJ. WinBUGS - A Bayesian Modelling Framework: Concepts, Structure and Extensibility. Statistics and Computing. 2000;10(4):325–337.

- Metropolis N, Rosenbluth AW, Rosenbluth MN, Teller AH, Teller E. Equation of State Calculations by Fast Computing Machines. The Journal of Chemical Physics. 1953;21(6):1087–1092.

- Pacheco P. Parallel Programming with MPI. San Francisco: Morgan Kaufmann; 1997.

- Pearl J. Causality: Models, Reasoning, and Inference. 2nd edition. New York: Cambridge University Press; 2009.

- Plummer M. JAGS Version 4.2.0 User Manual. 2017. URL http://mcmc-JAGS.sourceforge.net.

- Roberts GO, Sahu SK. Updating Schemes, Correlation Structure, Blocking and Parameterization for the Gibbs Sampler. Journal of the Royal Statistical Society B. 1997;59(2):291–317.

- Rosenthal JS. Parallel Computing and Monte Carlo Algorithms. Far East Journal of Theoretical Statistics. 2000;4:207–236.

- Scott SL, Blocker AW, Bonassi FV, Chipman HA, George EI, McCulloch RE. Bayes and Big Data: The Consensus Monte Carlo Algorithm. International Journal of Management Science and Engineering Management. 2016;11(2):78–88.

- Spiegelhalter D, Thomas A, Best N, Gilks W. BUGS 0.5: Bayesian Inference Using Gibbs Sampling – Manual (Version ii). Cambridge, UK; 1996.

- Thomas A. The BUGS Language. R News. 2006;6(1):17–21.

- Warford JS. Computing Fundamentals: The Theory and Practice of Software Design with BlackBox Component Builder. Braunschweig/Wiesbaden: Vieweg & Sohn; 2002.

- Whiley M, Wilson SP. Parallel Algorithms for Markov Chain Monte Carlo Methods in Latent Spatial Gaussian Models. Statistics and Computing. 2004;14(3):171–179.

- Wilkinson D. Parallel Bayesian Computation. In: Kontoghiorghes E, editor. Handbook of Parallel Computing and Statistics. Boca Raton, FL: Chapman and Hall/CRC; 2006. pp. 477–508.
