Abstract

Many vision-based applications rely on logistic regression for embedding classification within a probabilistic context, such as recognition in images and videos or identifying disease-specific image phenotypes from neuroimages. Logistic regression, however, often performs poorly when trained on data that is noisy, contains irrelevant features, or is imbalanced across classes, all of which are common in visual recognition tasks. To deal with those issues, researchers generally rely on ad-hoc regularization techniques or model only a subset of these issues. We instead propose a mathematically sound logistic regression model that selects a subset of (relevant) features and an (informative and balanced) set of samples during the training process. The model does so by applying cardinality constraints (via ℓ0-‘norm’ sparsity) on the features and samples. The ℓ0-‘norm’ defines sparsity in mathematical settings but in practice has mostly been approximated (e.g., via ℓ1 or its variations) for computational simplicity. We prove that a local minimum of the non-convex optimization problems induced by cardinality constraints can be computed by combining block coordinate descent with penalty decomposition. On synthetic, image recognition, and neuroimaging datasets, we furthermore show that the accuracy of the method is higher than that of alternative methods and classifiers commonly used in the literature.

Keywords: Sparsity, non-convex optimization, feature selection, sample selection, imbalanced classification, logistic regression

1. Introduction

Real-world applications involving natural images [1, 2], videos [3, 4], or neuroimages (medical images) [5–7] suffer from labeled training data being noisy, possibly containing several redundant features, and having skewed class distributions (i.e., imbalanced number of samples across classes). For example, the analysis of brain Magnetic Resonance Images (MRI) is prone to noise due to inaccuracies in acquisition, preprocessing, and diagnosis of subjects [7]. Furthermore, these studies extract features from several pre-defined regions of interest (ROIs) [8, 9], only some of which may be impacted by the disease. Finally, MRI-based studies often contain an imbalanced number of samples across classes due to the difficulties associated with recruiting subjects [10]. Noise, redundancy, and skewed class distributions often negatively impact the accuracy of classifiers, such as logistic regression [11], and thus they present significant challenges to the analysis of visual data.

Previous methods have viewed these issues as problems that have to be addressed a-priori, i.e., before beginning the training of the classifier [12–15]. For example, training approaches have accounted for skewed class distributions by weighting samples from different classes disproportionally to the number of samples from that class [16], and several publications [17–19] proposed dealing with noisy features and samples by only selecting a subset of them for training [20]. Given that a-priori solutions to these issues most likely will not result in the optimal classifier, as the issues are related in highly complex ways, recent work explicitly models a subset of those issues as part of the training process (e.g., [7, 9]).

To investigate explicitly modelling all three issues, we revisit Logistic Regression (LR) [21]. LR is a popular loss function for deep learning models [22] and a common classifier for many applications, such as in computer vision, computational neuroscience, and social sciences [11]. Contrary to other generalized linear models (e.g., [23]), LR computes the probability of a sample belonging to a class by learning a set of parameters that minimize the induced log-likelihood over the training samples. While support vector machines (SVMs) focus on a few samples (i.e., the support vectors), LR explicitly accounts for all training samples, which works well when the signal-to-noise-ratio in the training dataset is low [21, 24, 25]. To increase its robustness, we derive the first LR classification model that explicitly accounts for noisy samples, redundant features, and skewed class distributions.

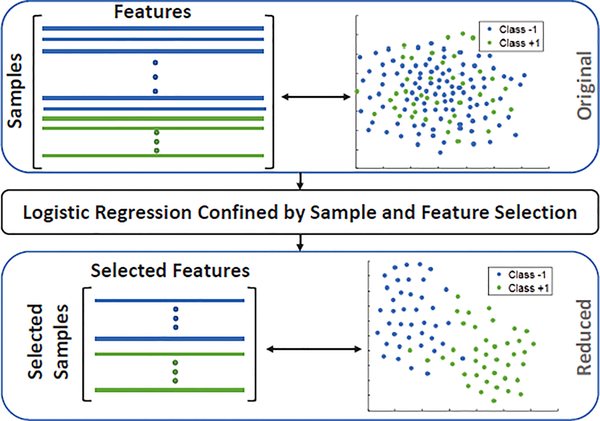

Specifically, we propose a joint sample and feature selection (SFS) method that selects the most distinctive set of features and informative samples (balanced across classes) while training the classifier. The method is tailored for classification of visual and neuroimaging data applications for recognition or diagnosis based on imbalanced and potentially noisy data (see Fig. 1). This is achieved by adding a variable (denoted by α) representing sample selection to a conventional logistic regression model in which the parameterization focuses on determining the optimal weighting of the features (denoted by β). Furthermore, the optimal setting of those two variables is confined by sparsity constraints based on the ℓ0-‘norm’ (a natural underpinning of this task) and its surrogates. The cardinality constraint of α (i.e., the ℓ0-‘norm’ of α) enforces the number of samples from both classes to be equal in the learning model. For selecting the most informative features, we experiment with two different regularizers, applied to the coefficients β. The first generalizes the ℓ0-‘norm’ and the second one the ℓ1-norm.

Fig. 1:

Our proposed logistic regression simultaneously selects samples and features to improve classification accuracy.

The proposed method is compared to alternative methods and classifiers commonly reported in the literature with respect to several different experimental settings. First, we apply the approaches to a synthetic dataset, which underlines the robustness of the method against noisy features and samples. We also train and test the method on benchmark image recognition datasets, i.e., MNIST digit recognition [26], COIL20 object recognition [27], and CMU PIE face verification [28]. These experiments vary concerning the imbalance across classes and provide accuracy scores reported by other publications. Finally, we test the utility of the method by applying it to neuroimaging data. Identifying informative features is an important task in the analysis of neuroimaging data, which can yield imaging biomarkers for different diseases. As the outcome of the experiments shows, the proposed method best deals with all these challenges compared to the alternative approaches that only explicitly model a subset of the issues during training.

1.1. Related Works

We first review the literature with respect to the three tasks related to our proposed algorithm and their relevance to computer vision and neuroimaging applications: imbalanced classification, feature selection, and sample selection. While prior work has mostly viewed these as problems that need to be solved independently [16, 29–31] or sequentially [9], we also review articles that jointly perform feature and sample selection.

Imbalanced Classification

Many real-world classification problems require training an algorithm on imbalanced data, i.e., distinguishing groups that differ in sample size. Conventional classifiers maximize classification accuracy irrespective of the distribution of samples across classes in the training data [15, 32]. This strategy results in the models emphasizing the class with more samples [15]. The classifiers then do not generalize well to unseen data as they tend to mislabel samples associated with the ‘smaller’ class. To deal with this issue, previous methods have used weighted loss functions [15, 33], under-sampled the larger class [32], or over-sampled the smaller class [34, 35]. Even with the recent focus on this topic [14, 15, 34], properly dealing with imbalanced data is still an open question, as under- and over-sampling are often preprocessing steps that do not fully account for the subsequent classifier, and loss-weighting approaches are sensitive to noise and outliers in the training data [15].

Feature Selection

Feature selection techniques select the most relevant and informative features among numerous measurements for the application. Examples are unsupervised statistical methods (e.g., Fisher score [36]), learning-based approaches (e.g., [37]), and supervised machine learning techniques (such as [38–40]). Among these, sparse methods have recently attracted attention due to their simplicity and high accuracy [5, 29, 38, 40–43].

Sparsity is mathematically defined by the ℓ0-’norm’, which counts the number of nonzero entries in the associated vector (e.g., [44, 45]). However, ℓ0-confined classification problems are generally NP-hard [46] and are usually computationally challenging to solve due to the discontinuity of the ℓ0-’norm’. Various surrogates for the ℓ0 regularizer have been proposed for relaxing the ℓ0 minimization problem. For example, ℓ1 regularizer is a popular relaxation technique as the corresponding solutions often generalize well in the presence of many irrelevant features [47]. While being convex, ℓ1-based regularization biases analysis as this rough approximation of sparsity is dominated by the elements of the associated vector with large magnitudes (the leading entries of the vector). A promising compromise between those two regularizers is the partial ℓ1-’norm’ [48]. This ‘norm’ reduces the bias risk by omitting the leading entries, while the computational complexity is still relatively low [48]. Various nonconvex surrogates are investigated in the literature, such as capped-ℓ1 penalty [49], SCAD [50], Log [51], and ℓp quasi-norm [52].

Sample Selection

Sample selection is commonly used for selecting informative samples for building learning models (such as in [9, 53]), as well as for identifying noisy and outlier samples, i.e., samples that dramatically violate the classification criteria and might skew decision boundaries of classifiers [7, 54, 55]. It has also been integrated into under-sampling approaches [16], where the aim is to address the class imbalance problem. All these techniques are run prior to classification, which increases the risk of introducing a sample selection bias into the analysis [56]. This bias describes the phenomenon of analyzing samples of data that do not adequately represent the population of interest, so that the findings do not generalize well.

Joint Selection of Samples and Features for Visual and Neuroimaging Data

Based on the intuition that feature and sample selection tasks are interrelated, a few publications [53, 57, 58] proposed to perform these two tasks jointly. Among these, Mohsenzadeh et al. [57] add one variable for feature selection and one for sample selection to the Bayesian inference model defining relevance vector machines (RVM). Similarly, the joint feature-sample selection (JFSS) technique proposed by [53] uses two independent parameter sets for these two tasks and optimizes a bi-convex objective function to obtain sparse solutions for both of them. An et al. [58] instead perform feature-sample selection by constructing a manifold across the samples, which come from the training and unlabeled test set. All three methods are used as a separate preprocessing step before classification. The last two methods were used for classification and identification of biomarkers for neurodegenerative diseases using MRI data.

1.2. Summary and Contributions

As discussed earlier, our model selects the most informative subset of samples and features for the classification task. Similar to methods like SVM [23], our model explicitly selects a set of samples that contribute to building the classification model as part of the learning process. Unlike those approaches, our method selects a set of balanced samples across the classes to reduce the risk of outliers and noisy samples corrupting the learning process. Specifically, sample selection is confined via the ℓ0-’norm’, which allows us to explicitly define the cost function to contain an equal number of training samples per group. Our method avoids the sample selection bias, as the samples are selected concurrent with training the classifier. In other words, the selected samples are the most informative ones for the classification task.

For feature selection, we introduce two novel regularization frameworks that are generalizations of the ℓ0-, ℓ1-, or ℓ2-norm. Inspired by relaxed LASSO [59], the first framework is based on a hybrid regularization scheme coupling the ℓ0-‘norm’ with the ℓ2-norm. Specifically, the scheme confines the search space via a hard constraint based on the ℓ0-‘norm’. Furthermore, it expands the cost function of LR with a soft constraint defined by the ℓ2-norm, which has been shown to result in classifiers that generalize well to unseen data [60]. The second framework regularizes feature selection by adding the partial ℓ1-norm [48] (a generalization of the ℓ1-norm) to the original cost function.

The main contributions of this paper are two-fold: (1) We propose a classification scheme that simultaneously selects the most informative features and samples from visual data while ensuring that the training of the classifier is always based on an equal number of samples per class (even if that is not the case for the training dataset); (2) We directly solve the original ℓ0-’norm’ minimization problem for selecting samples and features, and apply the method to classify natural and medical images.

2. Logistic Regression with Sample-Feature Selection (SFS)

We first define the minimization problem through a cost function that combines sample-feature selection within a log-logistic classifier [61]. After introducing these minimization problems in Section 2.1, we derive the optimization algorithm in Section 2.2 and its convergence properties in Section 2.3.

2.1. Modelling Classification Confined by Sample and Feature Selection

The classification problem is defined with respect to n training samples $x_i \in \mathbb{R}^p$ and their respective labels yi ∈ {−1, +1}, i = 1, · · ·, n (see footnote 1 for notation). Samples selected for training of the classifier are encoded via the vector of indicator variables $\alpha \in \{0, 1\}^n$. The indicator variable αi = 1 if the ith sample (xi, yi) is selected, and αi = 0 otherwise. Feature selection is encoded via the feature weight vector $\beta \in \mathbb{R}^p$. Now, let σ(t) := log(1 + exp(−t)) be the sigmoid function and $\beta_0 \in \mathbb{R}$ the bias term defining the linear log-odds function $\beta^\top x_i + \beta_0$, then the logistic regression loss function defining the classification model is defined as

$$l(\alpha, \beta, \beta_0) := \sum_{i=1}^{n} \alpha_i \, \sigma\big(y_i(\beta^\top x_i + \beta_0)\big).$$

Training of the classifier determines the parameters that minimize the above cost function according to the constraints specific to sample and feature selection. With respect to sample selection, only k− samples are selected from group ‘−1’ and k+ from group ‘+1’. We explicitly enforce this constraint by first introducing the set I(z) = {i : yi = z} of indices associated with samples with label ‘z’ and αI(z), which is α reduced to the indices of I(z). With respect to feature selection, we add the regularizer $\mathcal{R}(\beta)$ to the previous cost function, i.e.,

$$\mathcal{L}(\alpha, \beta, \beta_0) := \sum_{i=1}^{n} \alpha_i \, \sigma\big(y_i(\beta^\top x_i + \beta_0)\big) + \lambda \mathcal{R}(\beta),$$

with λ > 0 encoding the weight of the regularization over the logistic loss function. In addition, β is confined to a closed space $\mathcal{S} \subseteq \mathbb{R}^p$. The entire minimization problem is then defined as

$$\min_{\alpha, \beta, \beta_0} \ \mathcal{L}(\alpha, \beta, \beta_0) \quad \text{s.t.} \quad \alpha \in \{0, 1\}^n, \ \|\alpha_{I(-1)}\|_0 = k_-, \ \|\alpha_{I(+1)}\|_0 = k_+, \ \beta \in \mathcal{S}. \tag{1}$$

Note that we write instead of for simplicity. In practice, we choose k− = k+ to solve a balanced classification problem. Next, we explicitly define the regularizer and the search space for the hybrid and the partial regularization of features.
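To make the constrained objective concrete, the following minimal Python sketch evaluates the logistic loss restricted to the selected samples and checks the two cardinality constraints on α. The function names (`logistic_loss`, `selected_loss`, `is_feasible`) are illustrative and are not taken from the released code, and the loss is assumed here to be an unnormalized sum over the selected samples.

```python
import math


def logistic_loss(t):
    """sigma(t) = log(1 + exp(-t)), evaluated without overflow for large |t|."""
    if t >= 0:
        return math.log1p(math.exp(-t))
    return -t + math.log1p(math.exp(t))


def selected_loss(alpha, beta, beta0, X, y):
    """Sum of per-sample logistic losses over the samples with alpha_i = 1."""
    total = 0.0
    for a_i, x_i, y_i in zip(alpha, X, y):
        if a_i:
            log_odds = sum(b * v for b, v in zip(beta, x_i)) + beta0
            total += logistic_loss(y_i * log_odds)
    return total


def is_feasible(alpha, y, k_neg, k_pos):
    """Check alpha in {0,1}^n with exactly k_neg '-1' and k_pos '+1' samples selected."""
    if any(a not in (0, 1) for a in alpha):
        return False
    n_neg = sum(a for a, y_i in zip(alpha, y) if y_i == -1)
    n_pos = sum(a for a, y_i in zip(alpha, y) if y_i == +1)
    return n_neg == k_neg and n_pos == k_pos
```

Because α only takes values in {0, 1}, its ℓ0-‘norm’ on each class is simply the number of selected samples of that class, which is what `is_feasible` counts.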

2.1.1. Hybrid ℓ0-ℓ2 Regularization

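In the hybrid regularization scheme, the regularization term is defined by the ℓ2-norm, i.e., $\|\beta\|_2^2$, so that the loss function of the minimization problem is

$$\mathcal{L}_H(\alpha, \beta, \beta_0) := \sum_{i=1}^{n} \alpha_i \, \sigma\big(y_i(\beta^\top x_i + \beta_0)\big) + \lambda \|\beta\|_2^2. \tag{2}$$

In addition, the search space for the optimal β is confined to those that ‘select’ no more than τ ∈ [1, p] features, i.e., $\|\beta\|_0 \le \tau$. The minimization problem is thus defined as

$$\min_{\alpha, \beta, \beta_0} \ \mathcal{L}_H(\alpha, \beta, \beta_0) \quad \text{s.t.} \quad \alpha \in \{0, 1\}^n, \ \|\alpha_{I(-1)}\|_0 = k_-, \ \|\alpha_{I(+1)}\|_0 = k_+, \ \|\beta\|_0 \le \tau. \tag{3}$$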

Note, if λ = 0, then feature selection is solely confined by the ℓ0-‘norm’ [45, 62]. If instead τ = p, then the cardinality constraint is inactive and the regularizer becomes the commonly used ridge penalty (or ℓ2-norm) [63].

2.1.2. Partial ℓ1 Regularization

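Let |β|[j] be the jth largest component in |β| = (|β1|, · · ·, |βp|), then the partial ℓ1-norm of feature weight vector β is defined as

$$\sum_{j=r+1}^{p} |\beta|_{[j]},$$

where r encodes the number of features that the ‘norm’ is indifferent to. Using the ‘norm’ to define the regularizer of Eq. (1) results in the cost function

$$\mathcal{L}_P(\alpha, \beta, \beta_0) := \sum_{i=1}^{n} \alpha_i \, \sigma\big(y_i(\beta^\top x_i + \beta_0)\big) + \lambda \sum_{j=r+1}^{p} |\beta|_{[j]}. \tag{4}$$

Furthermore, we omit any constraints on the search space (i.e., $\beta \in \mathbb{R}^p$), so the corresponding minimization problem is

$$\min_{\alpha, \beta, \beta_0} \ \mathcal{L}_P(\alpha, \beta, \beta_0) \quad \text{s.t.} \quad \alpha \in \{0, 1\}^n, \ \|\alpha_{I(-1)}\|_0 = k_-, \ \|\alpha_{I(+1)}\|_0 = k_+. \tag{5}$$

Note that for r = 0 the partial ℓ1-norm equals $\|\beta\|_1$, so that the widely used ℓ1-norm is just a special case of the partial ℓ1-‘norm’.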
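As a small illustration of the definition above, the partial ℓ1-norm can be computed by sorting the magnitudes of β and skipping the r largest ones. This is a minimal sketch; the function name `partial_l1` is illustrative only.

```python
def partial_l1(beta, r):
    """Sum of the magnitudes of beta, excluding the r largest ones."""
    if not 0 <= r <= len(beta):
        raise ValueError("r must lie between 0 and len(beta)")
    magnitudes = sorted((abs(b) for b in beta), reverse=True)
    return sum(magnitudes[r:])
```

With r = 0 no entry is skipped and the function returns the ordinary ℓ1-norm; with r = len(beta) every entry is skipped and the penalty vanishes.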

2.2. Determining the Optimal Solution via Block Coordinate Descent (BCD)

We now present the joint Sample-Feature Selection classifier (SFS), an optimization algorithm based on Block Coordinate Descent (BCD) [64] for determining a local minimum of the previously derived minimization problems (see also Algorithm 1). We refer to the implementation specific to the ‘hybrid’ minimization problem of Eq. (3) as SFSH and to that of the ‘partial’ minimization problem of Eq. (5) as SFSP. For each minimization problem, BCD estimates its solution by alternating between finding the minimum with respect to α (with (β, β0) being fixed) and (β, β0) (with α being fixed) until the estimates computed by BCD fulfill the stopping criteria (see ‘6:’ in Algorithm 1). As we show next, the two resulting sub-problems are straightforward to solve, which was the primary motivation for choosing BCD.

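To derive the first sub-problem, let (β, β0) be fixed, so that Eq. (3) and Eq. (5) simplify to

$$\min_{\alpha} \ \sum_{i=1}^{n} \alpha_i \, \sigma\big(y_i(\beta^\top x_i + \beta_0)\big) \quad \text{s.t.} \quad \alpha \in \{0, 1\}^n, \ \|\alpha_{I(-1)}\|_0 = k_-, \ \|\alpha_{I(+1)}\|_0 = k_+. \tag{6}$$

To solve this minimization problem (see also Step 2 in Algorithm 1), we assume (without loss of generality) that the label of the first k− samples is ‘−1’ (i.e., {1, · · ·, k−} ⊆ I(−1)) and that the loss associated with each of these samples (i.e., $\sigma(y_i(\beta^\top x_i + \beta_0))$) is no larger than that of the other samples of that group. Furthermore, we assume (without loss of generality) that the label of the next k+ samples is ‘+1’ and that the loss associated with each of those samples is no larger than that of the other samples of that group. As we then show in Section 2.3, the closed-form solution of the minimization problem is

$$\alpha_i^* = \begin{cases} 1, & 1 \le i \le k_- + k_+, \\ 0, & \text{otherwise.} \end{cases}$$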

The second sub-problem inferred from BCD is solving Eq. (3) or Eq. (5) with respect to (β, β0) with α being fixed, which the following subsections do.
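The closed-form update of α in the first sub-problem amounts to keeping, within each class, the samples with the smallest current loss. A minimal sketch of this step is given below; the function name `update_alpha` and the tie-breaking by original sample order are assumptions made for illustration.

```python
def update_alpha(losses, y, k_neg, k_pos):
    """Select the k_neg '-1' and k_pos '+1' samples with the smallest loss.

    losses[i] is the current logistic loss of sample i and y[i] its label.
    Ties are broken by original sample order (an illustrative choice).
    """
    alpha = [0] * len(y)
    for label, k in ((-1, k_neg), (+1, k_pos)):
        indices = [i for i, y_i in enumerate(y) if y_i == label]
        indices.sort(key=lambda i: losses[i])
        for i in indices[:k]:
            alpha[i] = 1
    return alpha
```

Sorting replaces the "without loss of generality" re-indexing used in the text: after sorting each class by loss, the first k entries play the role of the leading samples.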

2.2.1. Hybrid ℓ0-ℓ2 Confined Feature Selection

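With respect to SFSH and α being fixed, the corresponding minimization problem (i.e., Eq. (3)) simplifies to the ℓ0 constrained optimization problem

$$\min_{\beta, \beta_0} \ \sum_{i=1}^{n} \alpha_i \, \sigma\big(y_i(\beta^\top x_i + \beta_0)\big) + \lambda \|\beta\|_2^2 \quad \text{s.t.} \quad \|\beta\|_0 \le \tau. \tag{7}$$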

One of the optimization algorithms for this type of problem is Penalty Decomposition (PD) [65]. PD adds to the model the non-sparse feature weight vector $\gamma \in \mathbb{R}^p$ and a penalty parameter ρ > 0, which accounts for the ‘allowed’ differences between the sparse and non-sparse solution. In other words, the cost function is now defined as

$$q_\rho(\gamma, \beta, \beta_0) := \sum_{i=1}^{n} \alpha_i \, \sigma\big(y_i(\gamma^\top x_i + \beta_0)\big) + \lambda \|\gamma\|_2^2 + \frac{\rho}{2} \|\gamma - \beta\|_2^2$$

and the corresponding minimization problem as

$$\min_{\gamma, \beta, \beta_0} \ q_\rho(\gamma, \beta, \beta_0) \quad \text{s.t.} \quad \|\beta\|_0 \le \tau. \tag{8}$$

Instead of setting parameter ρ = ∞ (for which γ = β), PD estimates the solution to Eq. (7) by first initializing ρ with a small value. It then increases ρ at the beginning of each iteration and estimates the solution to Eq. (8) by iterating between estimating the solution with respect to the ‘dense’ variables (i.e., γ and β0) and the ‘sparse’ variable β [62, 65]. Note, while [62, 65] focused their implementation of PD on minimizing the logistic cost function alone, the properties of PD are preserved with respect to the ℓ2-regularized cost function of Eq. (7), as it is smooth and convex, i.e., it fulfills the assumptions of the proofs in [62, 65]. Refer to Algorithm 2 in the Appendix for the implementation.
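The alternation described above can be sketched in a few lines of pure Python. This is a simplified illustration under stated assumptions, not the authors' Algorithm 2: the dense step uses plain gradient descent with a conservative step size, the ℓ2 term is placed on the dense vector γ, the coupling term is (ρ/2)‖γ − β‖², and all names and default values (`penalty_decomposition`, `rho_growth`, iteration counts) are hypothetical.

```python
import math


def dloss(t):
    """Derivative of log(1 + exp(-t)) with respect to t, evaluated stably."""
    if t >= 0:
        e = math.exp(-t)
        return -e / (1.0 + e)
    return -1.0 / (1.0 + math.exp(t))


def hard_threshold(gamma, tau):
    """Sparse step: keep the tau largest-magnitude entries of gamma, zero the rest."""
    keep = set(sorted(range(len(gamma)), key=lambda j: -abs(gamma[j]))[:tau])
    return [g if j in keep else 0.0 for j, g in enumerate(gamma)]


def penalty_decomposition(X, y, alpha, lam, tau, rho=0.1, rho_growth=2.0,
                          outer=20, inner=5, gd_steps=100):
    p = len(X[0])
    selected = [(x, y_i) for x, y_i, a in zip(X, y, alpha) if a]
    gamma, beta, beta0 = [0.0] * p, [0.0] * p, 0.0
    for _ in range(outer):
        # Upper bound on the gradient's Lipschitz constant gives a safe step size.
        lip = 0.25 * sum(sum(v * v for v in x) + 1.0 for x, _ in selected)
        lip += 2.0 * lam + rho
        for _ in range(inner):
            # Dense step: minimize over (gamma, beta0) with beta fixed.
            for _ in range(gd_steps):
                grad = [2.0 * lam * g + rho * (g - b) for g, b in zip(gamma, beta)]
                grad0 = 0.0
                for x, y_i in selected:
                    log_odds = sum(g * v for g, v in zip(gamma, x)) + beta0
                    d = y_i * dloss(y_i * log_odds)
                    grad = [gj + d * v for gj, v in zip(grad, x)]
                    grad0 += d
                gamma = [g - gj / lip for g, gj in zip(gamma, grad)]
                beta0 -= grad0 / lip
            # Sparse step: closest vector to gamma with at most tau nonzeros.
            beta = hard_threshold(gamma, tau)
        rho *= rho_growth  # tighten the coupling between gamma and beta
    return beta, beta0
```

The sparse step has a closed form because, with γ fixed, minimizing ‖γ − β‖² under ‖β‖₀ ≤ τ simply keeps the τ largest-magnitude entries of γ. For realistic problem sizes the dense step would normally be handed to a dedicated smooth solver rather than this fixed-step loop.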

Algorithm 1.

Sample-Feature Selection (SFS)

| Input: Regularization scheme X, hyperparameter λ (and r for partial regularization), number of desired features τ, number of desired samples k− and k+, and threshold ϵ. | ||

| Initialization: Let be arbitrarily chosen such that . Set t = 0 and f(0) = ∞. | ||

| 1: | repeat | ▹ Beginning of BCD |

| 2: | Update sample weights α(t+1): | |

| 2.1: | ||

| 2.2: ‘+1’ samples, s. t. | ||

| 2.3: | ||

| 2.4: ‘−1’ samples, s. t. | ||

| 2.5: | ||

| 2.6: | ||

| 3: | Update features weights and bias via Algorithm 2 (X = H) or Algorithm 3 (X = P) | |

| 4: | Update | |

| 5: | t ← t + 1 | |

| 6: | until | ▹ Stopping Criteria |

| Output: | ||
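The outer loop of Algorithm 1 can be sketched as follows. This is a minimal illustration under several assumptions: the feature update in Step 3 is replaced by a plain ridge-regularized gradient descent (a stand-in, not Algorithm 2 or Algorithm 3), the stopping rule is a small relative change of the objective, and all function names and defaults are hypothetical.

```python
import math


def loss(t):
    """Logistic loss log(1 + exp(-t)), evaluated stably."""
    return math.log1p(math.exp(-t)) if t >= 0 else -t + math.log1p(math.exp(t))


def dloss(t):
    """Derivative of the logistic loss with respect to t."""
    if t >= 0:
        e = math.exp(-t)
        return -e / (1.0 + e)
    return -1.0 / (1.0 + math.exp(t))


def margin(x, y_i, beta, beta0):
    return y_i * (sum(b * v for b, v in zip(beta, x)) + beta0)


def select_samples(losses, y, k_neg, k_pos):
    """Step 2: per class, keep the k samples with the smallest loss."""
    alpha = [0] * len(y)
    for label, k in ((-1, k_neg), (+1, k_pos)):
        idx = sorted((i for i, y_i in enumerate(y) if y_i == label),
                     key=lambda i: losses[i])
        for i in idx[:k]:
            alpha[i] = 1
    return alpha


def ridge_update(X, y, alpha, beta, beta0, lam, steps=200):
    """Stand-in for Step 3: gradient descent on selected loss + lam * ||beta||^2."""
    sel = [(x, y_i) for x, y_i, a in zip(X, y, alpha) if a]
    lip = 0.25 * sum(sum(v * v for v in x) + 1.0 for x, _ in sel) + 2.0 * lam
    for _ in range(steps):
        grad, grad0 = [2.0 * lam * b for b in beta], 0.0
        for x, y_i in sel:
            d = y_i * dloss(margin(x, y_i, beta, beta0))
            grad = [gj + d * v for gj, v in zip(grad, x)]
            grad0 += d
        beta = [b - gj / lip for b, gj in zip(beta, grad)]
        beta0 -= grad0 / lip
    return beta, beta0


def sfs_bcd(X, y, k_neg, k_pos, lam=0.1, eps=1e-6, max_iter=50):
    beta, beta0, f_old = [0.0] * len(X[0]), 0.0, math.inf
    alpha = [0] * len(y)
    for _ in range(max_iter):
        losses = [loss(margin(x, y_i, beta, beta0)) for x, y_i in zip(X, y)]
        alpha = select_samples(losses, y, k_neg, k_pos)
        beta, beta0 = ridge_update(X, y, alpha, beta, beta0, lam)
        f_new = sum(a * loss(margin(x, y_i, beta, beta0))
                    for a, x, y_i in zip(alpha, X, y))
        f_new += lam * sum(b * b for b in beta)
        # Assumed stopping rule: small relative change of the objective.
        if abs(f_old - f_new) <= eps * max(1.0, abs(f_new)):
            break
        f_old = f_new
    return alpha, beta, beta0
```

On a toy set with four ‘+1’ samples (one of them a mislabeled outlier) and two ‘−1’ samples, calling `sfs_bcd(X, y, k_neg=2, k_pos=2)` trains on two samples per class and leaves the outlier unselected, which is the behaviour the balanced cardinality constraints are meant to produce.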

2.2.2. Partial ℓ1-Regularized Feature Selection

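With respect to SFSP and α being fixed, the corresponding minimization problem (i.e., Eq. (5)) simplifies to an unconstrained partially regularized problem:

$$\min_{\beta, \beta_0} \ \sum_{i=1}^{n} \alpha_i \, \sigma\big(y_i(\beta^\top x_i + \beta_0)\big) + \lambda \sum_{j=r+1}^{p} |\beta|_{[j]}. \tag{9}$$

We estimate its solution via another instance of BCD (referred to as P-BCD). Now let $(\beta^{(b)}, \beta_0^{(b)})$ be the estimate of P-BCD at the bth iteration. In the next iteration, β = β(b) is fixed so that Eq. (9) simplifies to the univariate optimization problem

$$\min_{\beta_0} \ \sum_{i=1}^{n} \alpha_i \, \sigma\big(y_i((\beta^{(b)})^\top x_i + \beta_0)\big). \tag{10}$$

We estimate its solution via the simplex search approach [66]. Next, P-BCD fixes $\beta_0 = \beta_0^{(b+1)}$, simplifying Eq. (9) to

$$\min_{\beta} \ \sum_{i=1}^{n} \alpha_i \, \sigma\big(y_i(\beta^\top x_i + \beta_0^{(b+1)})\big) + \lambda \sum_{j=r+1}^{p} |\beta|_{[j]}. \tag{11}$$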

As pointed out in [48], a solution to the above problem can be estimated via the Nonmonotone Proximal Gradient (NPG) algorithm [67]. At each iteration, NPG approximates the Taylor expansion of the logistic loss term at its current estimate of β(b+1), replaces that term in Eq. (11) with its approximated Taylor expansion, and updates its estimate by solving the resulting minimization problem in closed form. After NPG converges, its estimate is a first-order stationary point of Eq. (11).

P-BCD iteratively keeps updating its estimates of (β, β0) until it reaches the stopping criterion, i.e., the absolute value of the estimated minimum of the cost function or the relative difference in that minimum across two iterations is smaller than a threshold ϵ.

The Appendix provides the pseudo-code of P-BCD (Algorithm 3) and additional remarks about the implementation.

2.3. Convergence Properties of SFS

This section introduces a lemma and two theorems showing that the accumulation point of the sequence generated by either implementation of Algorithm 1 is a local minimum of the corresponding instance of Eq. (1), i.e., Eq. (3) for the ‘hybrid’ implementation SFSH and Eq. (5) for the ‘partial’ implementation SFSP. Specifically, we first show that SFS (Algorithm 1) computes a local coordinate minimum, i.e., a minimum of Eq. (1) with α or (β, β0) being fixed. For the case that (β, β0) is fixed, Step 2 of SFS computes the closed-form solution to Eq. (1) according to Lemma 2.1. When α is fixed, Step 3 of SFS computes local minima of Eq. (1) with respect to (β, β0): Proposition A.1 (see Appendix) shows that this is the case for the hybrid regularization (Eq. (7)) and Theorem 2.2 for the partial ℓ1 regularization (Eq. (9)). A condition stemming from Theorem 2.2 is that the NPG algorithm converges to a local minimum, which is proven by Proposition A.2 (see Appendix). Given these findings, Theorem 2.3 then shows that this local coordinate minimum is also a local minimum of Eq. (1). The remainder of this section provides further details about these derivations.

Lemma 2.1

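Let $\xi \in \mathbb{R}^n$ be given and {I(+1), I(−1)} be the decomposition of the index set {1, · · ·, n} according to the two groups. Consider the following optimization problem

$$\min_{\alpha} \ \sum_{i=1}^{n} \xi_i \alpha_i \quad \text{s.t.} \quad \alpha \in \{0, 1\}^n, \ \|\alpha_{I(-1)}\|_0 = k_-, \ \|\alpha_{I(+1)}\|_0 = k_+, \tag{12}$$

where αI(z) denotes the subvector of α reduced to the index set I(z), z = ±1. Without loss of generality, we assume {1, · · ·, k−} ⊆ I(−1) and ξi ≤ ξj for any i, j ∈ I(−1) with i < j. Without loss of generality, we also assume {k− + 1, · · ·, k− + k+} ⊆ I(+1) and ξi ≤ ξj for any i, j ∈ I(+1) with i < j. Then, an optimal solution of Eq. (12) is

$$\alpha_i^* = \begin{cases} 1, & 1 \le i \le k_- + k_+, \\ 0, & \text{otherwise.} \end{cases}$$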

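Proof. Now, $\alpha^* \in \{0, 1\}^n$, $\|\alpha^*_{I(-1)}\|_0 = k_-$, and $\|\alpha^*_{I(+1)}\|_0 = k_+$, and the following is true for any feasible point α of Eq. (12),

$$\sum_{i=1}^{n} \xi_i \alpha_i = \sum_{i \in I(-1)} \xi_i \alpha_i + \sum_{i \in I(+1)} \xi_i \alpha_i \ \ge \ \sum_{i=1}^{k_-} \xi_i + \sum_{i=k_-+1}^{k_-+k_+} \xi_i = \sum_{i=1}^{n} \xi_i \alpha_i^*.$$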

Hence, α* is an optimal solution of Eq. (12). □
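The statement of the lemma can be checked numerically on a small instance by comparing the greedy selection with an exhaustive search over all feasible α. The sketch below is only a sanity check, not part of the proof; the function names and the random test instance are illustrative.

```python
import itertools
import random


def greedy_value(xi, y, k_neg, k_pos):
    """Objective of Eq. (12) when the k smallest xi of each class are selected."""
    total = 0.0
    for label, k in ((-1, k_neg), (+1, k_pos)):
        total += sum(sorted(x for x, y_i in zip(xi, y) if y_i == label)[:k])
    return total


def brute_force_value(xi, y, k_neg, k_pos):
    """Minimum of the objective over every feasible selection (small n only)."""
    neg = [i for i, y_i in enumerate(y) if y_i == -1]
    pos = [i for i, y_i in enumerate(y) if y_i == +1]
    return min(sum(xi[i] for i in s_neg + s_pos)
               for s_neg in itertools.combinations(neg, k_neg)
               for s_pos in itertools.combinations(pos, k_pos))


rng = random.Random(0)
y = [-1] * 5 + [+1] * 7
xi = [rng.uniform(0.0, 3.0) for _ in y]
gap = abs(greedy_value(xi, y, 3, 3) - brute_force_value(xi, y, 3, 3))
```

The value `gap` is zero up to floating-point rounding, since the two functions add the same selected entries, only in a different order.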

Setting $\xi_i = \sigma\big(y_i(\beta^\top x_i + \beta_0)\big)$, the previous lemma provides a closed-form solution to Eq. (1) with respect to α (with (β, β0) being fixed). Next, we show that SFSH and SFSP compute local minima of Eq. (1) with respect to (β, β0) (with α being fixed).

With respect to SFSH and fixed α, Eq. (1) turns into Eq. (7), where the solution is estimated by a version of the PD algorithm [62]. While the PD algorithm in [62] is only applied to a logistic cost function, its proofs of convergence are derived for cost functions that are smooth and convex. As the cost function of Eq. (7) fulfills these assumptions, we conclude (see Proposition A.1) that any accumulation point of the resulting sequence generated by our implementation of PD provides a local minimum to Eq. (7).

With respect to SFSP and fixed α, Eq. (1) turns into Eq. (9), where the solution is estimated via P-BCD. The following theorem shows that P-BCD derives a local minimum for that problem:

Theorem 2.2

Suppose that is an accumulation point of the sequence generated by P-BCD. For any b ≥ 1, assume that

and β(b) is a local minimum of the problem

Then is a local minimum of Eq. (9).

Proof. We first show is a block coordinate minimum, i.e.,

and then a local minimum of Eq. (9).

One can observe from Eq. (10) and Eq. (11) that

| (13) |

| (14) |

It follows that for all b ≥ 1

| (15) |

Hence, the sequence is non-increasing. Since is an accumulation point of the sequence , there exists a subsequence L such that . Observe that can be expressed as the difference of ℓ1-norm and the r-norm (defined as the sum of the r largest magnitudes of the entries in the associated vector, i.e., for any with ). It immediately follows that is a continuous function and hence . By this and the continuity of , we observe that is bounded, which together with the monotonicity of implies that is bounded below and hence exists. This observation, Eq. (15) and the continuity of yield

Using this, the continuity of , and taking limits on both sides of Eq. (13) and Eq. (14) as L∋b→∞, we have

| (16) |

| (17) |

i.e., is a block coordinate minimum

Now to show that is a local minimum of Eq. (9), let be a neighbourhood of and be arbitrarily chosen. Let , then when ϵ is sufficiently small, there exists some such that . To simplify presentation, we define ϕ(β) := λ∥βJ ∥1 and . For any , it follows from , and Eq. (17) that

Thus, is an optimal solution of , and its first-order optimality condition implies

where is the subdifferential of ϕ at . Hence, we have

| (18) |

According to Eq. (16), is an optimal solution of and its first-order optimality condition implies

| (19) |

Making use of Eq. (18), Eq. (19), the convexity of and ϕ, we obtain that for any

| (20) |

where the first inequality follows from (18) and the second one is due to the convexity of . Our choice of thus implies that is a local minimum of (9). □

Since the simplex search converges to the optimal solution of Eq. (10) according to [66, Theorem 4.1] (quoted also in Section S1 of the Supplement) and NPG converges to a local minimum of Eq. (11) (see Proposition A.2), it follows from Theorem 2.2 that any accumulation point of the resulting sequence generated by P-BCD is a local minimum to Eq. (9).

Now that we have shown that each iteration of Algorithm 1 computes local minima for Eq. (6) and Eq. (7) (with respect to SFSH) or Eq. (9) (with respect to SFSP), we prove that any accumulation point of the sequences generated by them is a local minimum of the corresponding instance of Eq. (1).

Theorem 2.3

Given any closed set and a function such that , we consider the following optimization problem

| (21) |

Suppose that is an accumulation point of the sequence generated by Algorithm 1, where

and is a local minimum of the problem

for t = 1, 2, …. Assuming that

(a) f1, · · ·, fn are continuous

(b) if α(i) = α(j) and i and j are sufficiently large

then is a local minimum of Eq. (21).

Proof. Since is an accumulation point of the sequence , there exists a subsequence such that

Define . As and , and since and are closed sets, and . Indeed, for any sufficiently large due to the fact that the elements of α(t) are discrete and is convergent. Consequently, when is sufficiently large, the BCD is simplified into iteratively solving the following optimization problem

| (22) |

For any sufficiently large , it follows from the Assumption (b) that , and hence is a local minimum of Eq. (22). Consider any feasible point that is sufficiently close to . It follows from Assumption (a) that the ordering of is the same with that of . By Lemma 2.1, we have

which immediately implies that

With this relation and the local optimality of on (22), then . The conclusion immediately follows from this inequality and the feasibility of . □

The theorem does not explicitly specify the regularization term but implicitly confines it to functions that fulfill Assumption (b). That is, the local minimum of is attainable and it only depends on α(t). Furthermore, the implementations of Eq. (3) and Eq. (5) fulfill both assumptions of the theorem. They fulfill Assumption (a) as the sigmoid function σ(·) of Eq. (3) and Eq. (5) is continuous. They also fulfill Assumption (b) as the PD algorithm (defined in Section 2.2.1) of SFSH and P-BCD algorithm (defined in Section 2.2.2) of SFSP involve no randomness or other uncertainties (given fixed initialization schemes) so that their outcomes only depend on α(t). In conclusion, the accumulation point of the sequence generated by SFSH is a local minimum of Eq. (3) and by SFSP of Eq. (5).

3. Experiments

We now compare the accuracy of the proposed and alternative methods with respect to ‘imbalanced’ datasets, i.e., each dataset consists of classes that are unequal in size. Applying 10-fold nested cross-validation to a synthetic dataset, three image recognition datasets (MNIST, COIL20, CMU PIE), and one neuroimaging dataset, we measure the robustness and accuracy of our proposed approaches SFSP, SFSH, and the ℓ0-only regularization approach SFSC (which is equivalent to SFSH with λ = 0 in Eq. (2)). The code is available at https://github.com/eadeli/sfs_l0.
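For readers unfamiliar with the protocol, nested cross-validation can be sketched as an outer loop that holds out a test fold and an inner loop that tunes hyperparameters on the remaining samples only. The sketch below only generates index splits; the number of inner folds, the random shuffling, and the absence of class stratification are assumptions, as the text does not specify them, and the function names are illustrative.

```python
import random


def kfold(indices, k, rng):
    """Shuffle the indices and deal them into k folds of near-equal size."""
    shuffled = list(indices)
    rng.shuffle(shuffled)
    return [shuffled[f::k] for f in range(k)]


def nested_cv(n, outer_k=10, inner_k=10, seed=0):
    """Yield (train, test, inner_splits) for each outer fold.

    inner_splits is a list of (fit, validation) index lists drawn from
    `train` only, so the outer test fold never influences model selection.
    """
    rng = random.Random(seed)
    outer = kfold(range(n), outer_k, rng)
    for f, test in enumerate(outer):
        train = [i for g, fold in enumerate(outer) if g != f for i in fold]
        inner = kfold(train, inner_k, rng)
        inner_splits = [
            ([i for w, fold in enumerate(inner) if w != v for i in fold], val)
            for v, val in enumerate(inner)
        ]
        yield train, test, inner_splits
```

In a typical use, hyperparameters such as λ, τ, or r would be chosen by averaging validation accuracy over `inner_splits`, after which the model is refit on `train` and scored once on `test`.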

The comparison also includes the joint feature-sample selection approach based on the ℓ1-norm [53]. Furthermore, it includes [62] (i.e., the logistic regression classifier confined only by cardinality-constraint feature selection and not sample selection), linear support vector machines (SVM), and a sparse feature selection technique [38] coupled with a linear SVM (denoted by FSSVM). For a fair comparison, we re-ran the last set of methods by weighing samples in the corresponding cost function according to the size of the associated class (denoted , SVM-W and FSSVM-W, respectively).

Specific to the three image recognition benchmarks, the comparison also lists accuracy scores previously published on these datasets, such as the deep learning or more traditional approaches on MNIST (i.e., ALOCC [1], LOF [2], DRAE [68]), COIL20 (i.e., Wang et al. [69], multi-layer neural networks), and CMU PIE (Laplacianface [70] and Wang et al. [69]).

3.1. Datasets

First, we measure the reliability of the methods with respect to increasing the number of redundant features and outlier samples on a synthetic dataset. We use the MNIST dataset [26] consisting of images of hand-written digits to quantitatively and qualitatively evaluate the performance of the methods with respect to the imbalance between classes during training. To show that our findings generalize to other imbalanced image recognition datasets, the experiments also include COIL20 [27] (testing object recognition) and CMU PIE [28] (testing face verification). Finally, we underline the utility of our approach by applying it to neuroimage datasets [71–73] consisting of control (CTRL) subjects, those with Alcohol use disorder (ALC), or HIV infection. Beyond reporting accuracy scores, we interpret the features selected by our method as imaging phenotype with the potential to improve the mechanistic understanding of ALC and HIV infection on the brain.

3.1.1. Synthetic Dataset

Similar to [74], the synthetic dataset consists of two groups with 100 samples assigned to the ‘positive class’ and 50 to the ‘negative class’. Each sample is described by 80 features. For the first group, these features are encoded by the feature matrix X+ := UQ+, where U is a random orthogonal matrix and all entries of the matrix Q+ are independently and identically sampled from a normal distribution. The feature matrix X− := TUQ− of the second group is defined with respect to matrix U, the random rotation matrix T, and the random matrix Q−, whose entries are again samples of that normal distribution. The first 100 columns of the feature matrix X are then defined by X+ and the remaining 50 columns by X−.

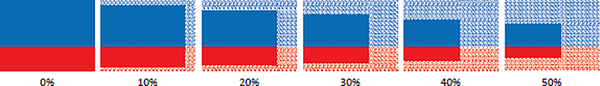

As also graphically displayed in Figure 2, we create datasets with noisy samples (outliers) by randomly selecting rows of the matrix X and then adding random noise to the ‘raw’ features of the corresponding entries. Furthermore, we create unreliable features by selecting columns of the matrix X and then adding random noise to the entries of those columns, following the same procedure as for corrupting rows with noise. This process results in six sets, which have 0%, 10%, 20%, 30%, 40%, and 50% of all columns and rows corrupted by noise, respectively.

Fig. 2:

Schematic illustration of synthetic dataset. Six different sets are created with 0% to 50% of their rows (i.e., samples) and columns (i.e., features) contaminated by noise.
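The corruption procedure can be illustrated with a minimal sketch. It assumes that rows are samples and columns are features (as in the caption of Fig. 2) and that the noise is zero-mean Gaussian with standard deviation `noise_std`; the function name `corrupt`, the noise scale, and the placeholder data are assumptions made for illustration only.

```python
import numpy as np

def corrupt(X, fraction, rng, noise_std=1.0):
    """Return a noisy copy of X plus the indices of the corrupted rows and columns.

    Assumptions (not taken from the paper): rows are samples, columns are
    features, and the noise is zero-mean Gaussian with std noise_std.
    """
    X_noisy = X.copy()
    n_rows, n_cols = X.shape
    # Pick the samples (rows) and features (columns) to corrupt, without replacement.
    rows = rng.choice(n_rows, size=int(round(fraction * n_rows)), replace=False)
    cols = rng.choice(n_cols, size=int(round(fraction * n_cols)), replace=False)
    # Add noise to every entry of the selected rows, then of the selected columns.
    X_noisy[rows, :] += rng.normal(0.0, noise_std, size=(rows.size, n_cols))
    X_noisy[:, cols] += rng.normal(0.0, noise_std, size=(n_rows, cols.size))
    return X_noisy, rows, cols

rng = np.random.default_rng(0)
X = rng.normal(size=(150, 80))            # placeholder data: 150 samples, 80 features
levels = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5]   # the six corruption levels
datasets = {lv: corrupt(X, lv, rng) for lv in levels}
```

Returning the corrupted indices alongside the data makes it possible to check later which of the noisy samples and features a method selected.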

3.1.2. Image Recognition Datasets

MNIST Digit Dataset [26]

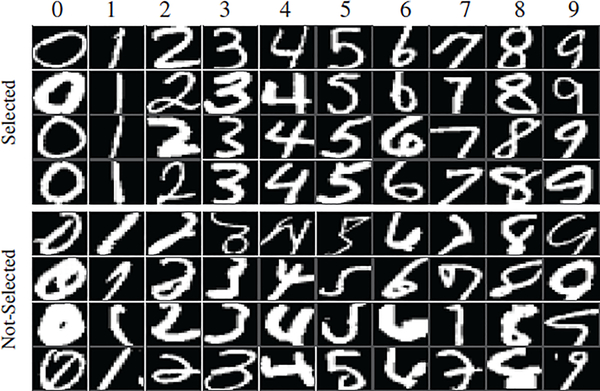

Modified National Institute of Standards and Technology (MNIST) is a database of handwritten digits, which includes 60,000 handwritten digits from ‘0’ to ‘9’ for training and 10,000 digits for testing (sample images can be found in Fig. 8). All images are of dimension 20 × 20; therefore, we directly use raw pixels as input features. Similar to prior work [1, 68], the target class in the training data consists of all images of one digit, and the images of the second class are randomly drawn from all other digits. The total number of images of the second class is 50% to 100% of the number of images of the first class. The same ratio is used to define the 10 testing sets, which contain none of the training data. This experiment is repeated for each of the ten digit categories.

Fig. 8:

Example Selected (top) and Not-Selected (bottom) samples in the MNIST experiment by our method (i.e., SFSH).
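The construction of one imbalanced MNIST training set can be sketched as follows. This is an illustration under stated assumptions rather than the exact protocol: the helper `make_imbalanced_split`, the seed, and the toy label list are hypothetical, and the second class is drawn uniformly at random from all other digits.

```python
import random

def make_imbalanced_split(labels, target_digit, ratio, seed=0):
    """Return (positive_idx, negative_idx) for one target digit.

    positive_idx: indices of all samples of the target digit (first class).
    negative_idx: a random draw from all other digits whose size is
                  ratio * len(positive_idx) (second class).
    """
    rng = random.Random(seed)
    positive_idx = [i for i, y in enumerate(labels) if y == target_digit]
    other_idx = [i for i, y in enumerate(labels) if y != target_digit]
    n_negative = min(len(other_idx), int(round(ratio * len(positive_idx))))
    negative_idx = rng.sample(other_idx, n_negative)
    return positive_idx, negative_idx

# Toy labels standing in for the MNIST training labels (100 samples per digit).
labels = [d for d in range(10) for _ in range(100)]
pos, neg = make_imbalanced_split(labels, target_digit=3, ratio=0.5)
```

Repeating the call for each of the ten digits and for ratios between 0.5 and 1.0 yields the family of training sets described above.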

COIL20 Object Dataset [27]

The Columbia University Image Library (COIL20) dataset is a benchmark object recognition and verification dataset containing 20 object categories. Each object is photographed 72 times by rotating a turntable 5 degrees at a time. Each photograph is represented by a 32 × 32 gray-scale image (see Fig. 3 for examples). On this dataset, we repeat the experiment proposed by [69]. Specifically, the training set consists of 14 images from each of 10 object categories (totaling 140 images). For each of the 10 object categories, the corresponding 14 images are labeled positive and the other images negative. The testing set consists of 58 images of each of the first 10 objects and 72 images of each of the last 10 objects. Using this set, the testing performance results of 10 separate runs (one for each of the 10 object categories, with the images of the other 9 objects simply labeled as negative) are averaged and reported.

Fig. 3:

Sample images from the COIL20 [27] dataset.

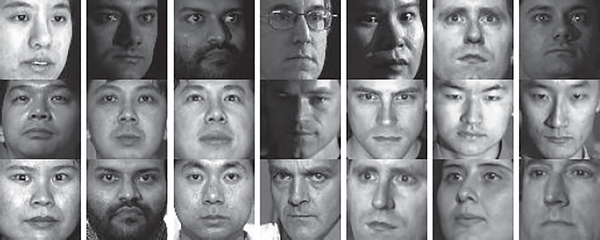

CMU PIE Face Dataset [28]

The Carnegie Mellon University Pose, Illumination, and Expression (CMU PIE) dataset contains more than 40,000 facial images of 68 people captured under 43 different illumination conditions, across 13 different poses, and with 4 different expressions. As in [69], we apply each method to the first 10 individuals confined to five different poses (see Fig. 4 for examples). Image pixels are used as features. Each individual has 170 images, of which 30 are used for training and the rest for testing (similar to [69]). We verify each individual separately with independent training sets and report the average performance metrics over the 10 individuals.

Fig. 4:

Sample images from the CMU PIE [28] dataset.

3.1.3. Neuroimage Datasets

As summarized in Table 1, the dataset consists of the morphometric measurements extracted from the magnetic resonance images (MRIs) of 265 healthy controls (CTRL), 245 ALC subjects without HIV infection, 65 HIV-infected individuals that do not meet the criteria for ALC (HIV), and 66 subjects with both ALC and HIV infection (HIV+ALC). Each MR image is preprocessed (see Section S2 of the Supplement) and a total of 299 features are extracted: mean curvature (MeanCurv), surface area (SurfArea), gray matter volume (GrayVol), and average thickness (ThickAvg) of 34 bilateral cortical ROIs; the volumes of 8 bilateral sub-cortical ROIs (i.e., thalamus, caudate, putamen, pallidum, hippocampus, amygdala, accumbens, cerebellar cortex); the volumes of 5 subregions of the corpus callosum (posterior, mid-posterior, central, mid-central and anterior); and the combined volume of all white matter hypointensities. Additionally, supratentorial volume (svol), the left and right lateral ventricles, and the third ventricle are measured. Each subject is then represented by svol and the z-scores of the remaining 298 morphometric measures.

TABLE 1:

Details of the medical image analysis datasets used in this study (‘svol’ = supratentorial volume).

| | Total | Sex: F | Sex: M | Age (years) | svol (×10⁶) |
|---|---|---|---|---|---|
| CTRL | 245 | 122 | 123 | 45.59 ± 17.17 | 1.27 ± 0.13 |
| ALC | 226 | 67 | 159 | 48.49 ± 10.04 | 1.27 ± 0.11 |
| HIV | 65 | 20 | 45 | 51.81 ± 8.44 | 1.27 ± 0.15 |
| ALC+HIV | 66 | 23 | 43 | 50.97 ± 8.12 | 1.23 ± 0.14 |

Confounding Factors

With respect to the CTRL group, age, sex, and svol significantly impact (p-value < 0.001) the morphometric measurements according to the paired t-test [75] between each demographic factor and feature. To omit their influence from the analysis, we capture the relationship between each feature and the confounding factors by parameterizing a generalized linear model (GLM) [76] on the CTRL cohort of each training run within the cross-validation. In other words, let vj encode the feature ‘j’, vage the age, vsex the sex, and vsvol the svol across all controls in the training set; we then determine the factors that provide the least-squares solution across all CTRLs

| (23) |

After parameterizing the GLM, the model is applied to the morphometric measurements of each test subject in order to compute the residual scores that are indifferent to the confounding factors. In other words, the final jth feature value (the residual measurements) of subject i is defined as

| (24) |

Note that the GLM is only trained on the data from the training folds, so that no testing data is involved in any preprocessing stage of the training phase and overly optimistic findings are avoided [77]. Furthermore, the neuroscience literature often confines parameterization of the GLM to the CTRL cohort (instead of the entire training dataset) to ensure consistency of findings across datasets and to ensure that abnormal patterns in the disease group do not negatively bias the analysis.
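The confound removal described above can be sketched in a few lines. The sketch assumes an ordinary least-squares fit with an intercept term and confounds ordered as age, sex, svol; the function names `fit_confound_model` and `residualize` and the simulated data are hypothetical and only illustrate the fit-on-training-controls, apply-to-everyone pattern.

```python
import numpy as np

def fit_confound_model(features_ctrl, confounds_ctrl):
    """Least-squares fit of each feature on the confounds, using training controls only.

    features_ctrl:  (n_ctrl, n_features) morphometric measurements
    confounds_ctrl: (n_ctrl, 3) columns for age, sex, svol
    Returns a (4, n_features) coefficient matrix (intercept first; the
    intercept is an assumption of this sketch).
    """
    design = np.column_stack([np.ones(len(confounds_ctrl)), confounds_ctrl])
    coef, *_ = np.linalg.lstsq(design, features_ctrl, rcond=None)
    return coef

def residualize(features, confounds, coef):
    """Subtract the confound-predicted value from every feature of every subject."""
    design = np.column_stack([np.ones(len(confounds)), confounds])
    return features - design @ coef

# Simulated example in which the features are fully explained by the confounds.
rng = np.random.default_rng(0)
true_coef = rng.normal(size=(3, 5))
conf_train_ctrl = rng.normal(size=(50, 3))
feat_train_ctrl = 2.0 + conf_train_ctrl @ true_coef
coef = fit_confound_model(feat_train_ctrl, conf_train_ctrl)

conf_test = rng.normal(size=(10, 3))
feat_test = 2.0 + conf_test @ true_coef
residuals = residualize(feat_test, conf_test, coef)   # close to zero in this toy case
```

Because the coefficients are estimated once per training run and then reused unchanged, no information from the test subjects enters the fit.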

3.2. Measuring Accuracy

On each dataset, the accuracy of each method is measured via 10-fold nested cross-validation, with the hyperparameters determined via 5-fold inner cross-validation. For SFSH and SFSP, the search space of the hyperparameter λ is {0, 10⁻⁴, 10⁻³, 10⁻², 10⁻¹, 10⁰, 10¹}. For those implementations and SFSC, the search space for the number of selected samples (i.e., k+ and k−) is defined by the ratio ρκ. To compute ρκ, we introduce k = k+ = k− and denote with Imin = min(|I(+1)|, |I(−1)|) the size of the smallest class in the experiment. Then, ρκ = k/Imin and the search space is {0.1, 0.2, …, 1}. Similarly, we define the search space for the number of selected features τ by the ratio ρτ = τ/p, where p is the total number of features used in the experiment. The search space for ρτ is [0.2, 0.8] with a step size of 0.1. The PD hyperparameters, such as the threshold for the stopping criteria, are set according to [40]. We also rely on the literature to set the search space for the hyperparameters of the alternative approaches. According to [38], the search space for the hyperparameter ‘γ’ of sparse feature selection used in FSSVM is {10⁻⁴, 10⁻³, 10⁻², 10⁻¹, 1}. This is also the search space for the hyperparameter λ of [53]. Finally, the search space for the hyperparameter C of SVM and SVM-W is {10⁻², 10⁻¹, 1, 10¹, 10²}.
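To make the search space concrete, the following sketch enumerates the grid for SFSH and converts the two ratios into counts. It assumes that ρκ is the ratio of k to the size of the smallest class and ρτ the ratio of τ to the number of features; the helper `to_counts`, the rounding rule, and the example class sizes are assumptions made for illustration.

```python
from itertools import product

lambdas = [0.0] + [10.0 ** e for e in range(-4, 2)]     # {0, 1e-4, ..., 1e1}
rho_kappa = [round(0.1 * i, 1) for i in range(1, 11)]   # {0.1, 0.2, ..., 1.0}
rho_tau = [round(0.1 * i, 1) for i in range(2, 9)]      # {0.2, 0.3, ..., 0.8}

def to_counts(rk, rt, n_pos, n_neg, p):
    """Map the two ratios to samples per class (k) and number of features (tau).

    Rounding to the nearest integer (at least 1) is an assumption of this sketch.
    """
    i_min = min(n_pos, n_neg)          # size of the smallest class
    k = max(1, round(rk * i_min))
    tau = max(1, round(rt * p))
    return k, tau

# Full grid for a hypothetical experiment with 100 positive and 50 negative
# samples and 80 features: one (lambda, k, tau) triple per combination.
grid = [(lam, *to_counts(rk, rt, n_pos=100, n_neg=50, p=80))
        for lam, rk, rt in product(lambdas, rho_kappa, rho_tau)]
```

Tying k to the smallest class means that the same number of samples is selected from both classes, so the selected training set is balanced regardless of the original class sizes.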

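We summarize the outcome of each approach through Balanced Accuracy (BAcc) and F1-score, both defined using the numbers of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN) of the classification approach. BAcc is the average of the true positive rate (TPR) and the true negative rate (TNR):

$$\mathrm{BAcc} = \frac{\mathrm{TPR} + \mathrm{TNR}}{2}, \qquad \mathrm{TPR} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FN}}, \qquad \mathrm{TNR} = \frac{\mathrm{TN}}{\mathrm{TN} + \mathrm{FP}}.$$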

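To facilitate comparison to previous publications, we report the average TPR, TNR, and BAcc over the 10 classes with respect to the COIL20 and CMU PIE datasets as in [69]. For the neuroimaging datasets, we report those measures with respect to CTRL vs. HIV, CTRL vs. ALC, and CTRL vs. ALC+HIV. As done previously [1, 2, 68], we report the F1-score (F1) on the MNIST dataset, which is computed with respect to Precision (Pre) and Recall (Rec):

$$\mathrm{F1} = \frac{2 \cdot \mathrm{Pre} \cdot \mathrm{Rec}}{\mathrm{Pre} + \mathrm{Rec}}, \qquad \mathrm{Pre} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FP}}, \qquad \mathrm{Rec} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FN}}.$$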
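Both summary measures follow directly from the four confusion counts, as the short sketch below shows using their standard definitions. The function names and the example counts are hypothetical.

```python
def rates(tp, tn, fp, fn):
    """True positive rate and true negative rate."""
    tpr = tp / (tp + fn)
    tnr = tn / (tn + fp)
    return tpr, tnr

def balanced_accuracy(tp, tn, fp, fn):
    """Average of TPR and TNR, insensitive to the class proportions."""
    tpr, tnr = rates(tp, tn, fp, fn)
    return 0.5 * (tpr + tnr)

def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

# Hypothetical confusion counts for an imbalanced test set
# (80 positive and 20 negative samples).
tp, fn, tn, fp = 72, 8, 15, 5
bacc = balanced_accuracy(tp, tn, fp, fn)
f1 = f1_score(tp, fp, fn)
plain_accuracy = (tp + tn) / (tp + tn + fp + fn)
```

In this toy case the plain accuracy is higher than BAcc because the majority class dominates it, which is why BAcc is the more informative summary under class imbalance.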

3.3. Results of Comparison

3.3.1. Results on the Synthetic Dataset

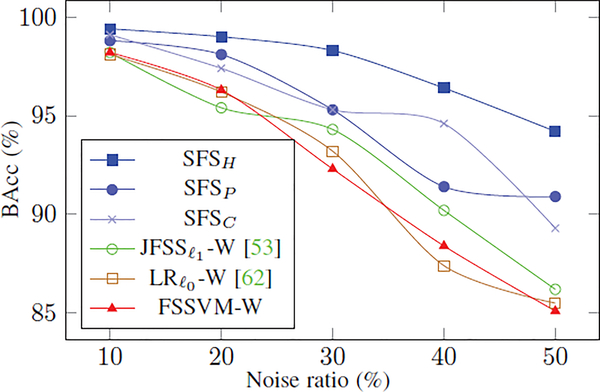

Fig. 5 summarizes the relationship between the BAcc scores of the implementations and the percentage of features and samples corrupted by noise. To improve readability, the figure only plots the results of the best implementations: our proposed approaches and three alternatives, namely the methods of [53] and [62] and FSSVM-W. All of those implementations achieve 100% testing accuracy on the noise-free dataset. However, when the noise increases, the accuracy scores of the three alternatives rapidly decline to around 85%. The scores of the proposed methods (SFSH, SFSP, SFSC) are less impacted by increasing noise, with the hybrid regularization approach recording the smallest drop in accuracy (i.e., about 6%). Unlike the alternative methods, the proposed implementations build the classification model by carefully selecting informative samples, which is one explanation for their higher accuracy.

Fig. 5:

Balanced accuracy on the synthetic datasets corrupted by different ratios of noise. With the gradual increase in noise (in both samples and features), the proposed techniques more robustly classify the data, compared to [53], [62], and FSSVM.

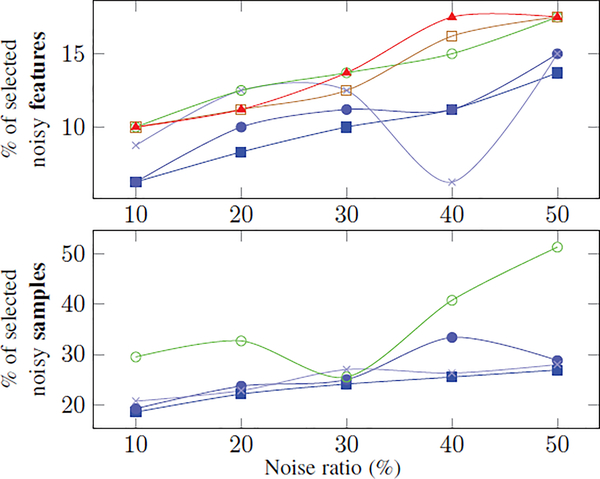

To analyze the impact of sample and feature selection on the accuracy of the methods, Fig. 6 plots the percentage of selected noisy samples and features as a function of the amount of noise added to the synthetic data. For each method and noise level, the percentage is defined by the ratio between the number of times noisy samples (or features) were selected during each training run and the total number of noisy samples (or features) across all training runs. Note that the alternative methods that do not select samples (such as FSSVM-W) are omitted from the corresponding plot. The plots confirm our observations from Fig. 5: the percentages increase with increasing noise, the proposed methods select fewer noisy features and samples than the alternative implementations, and SFSH selects the fewest. One exception is the large fluctuation in the percentage of selected noisy features reported for SFSC. For the 40% noise ratio, the percentage is even lower than for SFSH, which would explain the relatively high BAcc for SFSC at that noise level. This instability motivates the use of the hybrid regularization scheme in SFSH, as the addition of the ℓ2-norm improves the robustness of the classifier during testing. This finding also agrees with [60, 78] that the ℓ2-norm results in classifiers that generalize well to unseen testing data. Furthermore, it explains the higher BAcc scores of SFSH compared to the other proposed approaches SFSP and SFSC, as the percentages of selected features and samples are quite similar between all three implementations.

Fig. 6:

Percentage of the noisy features (top) and samples (bottom) that were selected during the 10-fold cross-validation by each method. The methods are marked according to the legend in Fig. 5. The bottom plot does not include the alternative methods that omit sample selection (such as FSSVM-W). In general, the proposed methods select fewer noisy features and samples than the alternatives.
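The percentage plotted in Fig. 6 can be computed as in the following sketch, which pools the counts over all training runs. The function `noisy_selection_rate` and the toy index sets are hypothetical; the sketch assumes that, for each run, the indices of the corrupted items are known.

```python
def noisy_selection_rate(selected_per_run, noisy_per_run):
    """Percentage of noisy items that were selected, pooled over all training runs.

    selected_per_run: list of sets, the indices selected in each run
    noisy_per_run:    list of sets, the indices corrupted by noise in each run
    """
    n_selected_noisy = sum(len(s & n) for s, n in zip(selected_per_run, noisy_per_run))
    n_noisy = sum(len(n) for n in noisy_per_run)
    return 100.0 * n_selected_noisy / n_noisy if n_noisy else 0.0

# Two toy runs: features 7, 8, and 9 are noisy; each run selects one of them.
selected = [{0, 1, 2, 7}, {0, 1, 3, 8}]
noisy = [{7, 8, 9}, {7, 8, 9}]
rate = noisy_selection_rate(selected, noisy)
```

The same function applies to samples and to features, since only the index sets change.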

3.3.2. Results on the Image Recognition Datasets

MNIST

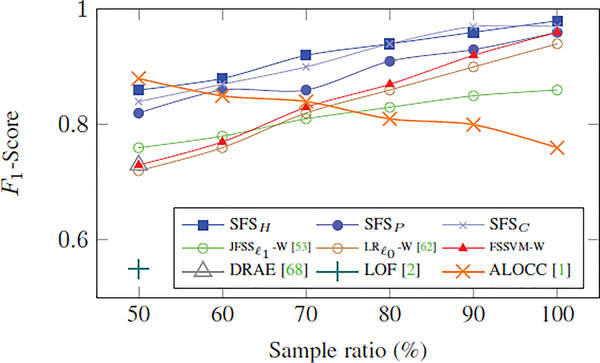

Fig. 7 shows the mean F1-scores of the classification tasks on this dataset for our proposed methods and other approaches with respect to different ratios of imbalance across the two classes (i.e., the ratio between the number of samples of the second class and the number of samples of the first class in the training set). As the results reveal, our proposed methods (SFSH, SFSP, and SFSC) achieve the highest F1-scores. With the exception of the deep learning approach ALOCC [1], the F1-scores of the approaches increase when the ratio between second and first class samples increases. Furthermore, our SFS implementations consistently outperform those published approaches.

Fig. 7:

Comparisons of F1-scores on MNIST dataset with respect to the ratio of samples across the two classes in the training set.

As mentioned, this experimental setting was inspired by [68], where it was used to test their method, DRAE, and the LOF approach [2] for outlier detection/removal. They published their F1-scores with respect to the 50% ratio, which were 0.73 for DRAE and 0.55 for LOF. In comparison, SFSH recorded an F1-score of 0.86. For this setting, only ALOCC reported a higher F1-score of 0.88 in [1]. When we re-ran their open-sourced code for the other experimental settings (i.e., 60% to 100%), the F1-score dropped to 0.76 for the 100% setting (balanced data set), which was much lower than the 0.98 reported for SFSH. The drop in the accuracy of [1] is mainly a result of one-class classifiers dealing with the imbalance between the two classes by focusing on the first class (target class) and regarding the second (smaller) class as ‘outliers’ or ‘novelties’. In contrast, our approach is fairly accurate regardless of the imbalance in the training data.

To evaluate the method in cases of severe imbalance between the two classes, we repeat the same experiment with the second (smaller) class containing only 5% or 10% of the number of samples of the first class. The F1-scores for these cases are 0.60 and 0.68, respectively. Note that our method is a two-class classifier. Therefore, in cases of severe imbalance, outlier or anomaly detection methods that model only the inlier class (such as [1, 2, 68]) are better choices.

To qualitatively evaluate the selection scheme by our method, Fig. 8 visualizes examples of digits that were either Selected or Not-Selected by SFSH in the 50% ratio setting. As is evident in the figure, the Not-Selected samples, although belonging to the same digit class, seem more deviant than the Selected ones.

COIL20

For each method, Table 2 lists the average TPR, TNR, and BAcc over all 10 object classes. The table also provides the most accurate scores published in [69], in which the proposed approach was compared to SVM, multi-layer perceptron (MLP) neural network, and a Gaussian mixture model (GMM). Of all implementations, our proposed SFSH obtains the highest balanced accuracy score in this highly imbalanced experimental setting.

TABLE 2:

Percentages of TPR, TNR, and BAcc of different methods for object verification on COIL20 dataset.

| | SFSH | SFSP | SFSC | SVM | MLP | GMM | [69] |
|---|---|---|---|---|---|---|---|
| TPR | 97.4 | 93.4 | 96.7 | 71.4 | 66.0 | 35.0 | 94.7 |
| TNR | 99.1 | 95.8 | 98.2 | 98.5 | 99.4 | 87.6 | 99.9 |
| BAcc | 98.2 | 94.6 | 97.5 | 85.0 | 82.7 | 87.6 | 97.3 |

CMU PIE

Table 3 lists the accuracy scores for the different methods on this dataset. The comparison now includes the highest accuracy scores published in [69] and the accuracy scores of the LPP approach published in [70], i.e., applying the Laplacianface approach on the face images followed by an SVM classifier. For baseline comparison, it also lists the scores of PCA followed by an SVM classifier. Among all of those implementations, the three accuracy scores of our proposed method SFSH are the highest. Furthermore, the TPR and TNR of our implementations are balanced, unlike those of traditional methods such as Laplacianface (LPP [70]) or PCA.

TABLE 3:

Percentages of TPR, TNR, and BAcc of different methods for face verification on CMU PIE dataset.

In summary, digit identification, object recognition, and face verification can be viewed as imbalanced classification tasks. The robustness of our method lies in jointly selecting samples and features to train a logistic regression model.

3.3.3. Results on the Neuroimage Dataset

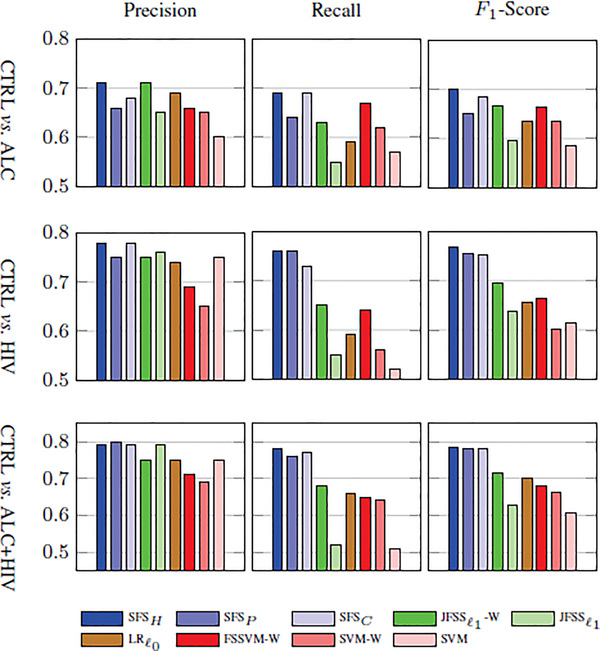

Table 4 lists the BAcc scores with respect to the three classification scenarios that involve the control group. Accuracy scores that are not significantly better than chance (i.e., p-value > 0.01 in Fisher’s exact test [79]) are marked by ‘†’. As in the previous experiments, the hybrid approach achieves the highest accuracy scores and the other two proposed methods outperform alternative implementations. The difference in accuracy between our proposed and alternative implementations is especially large for the highly imbalanced datasets (i.e., CTRL vs. HIV and CTRL vs. ALC+HIV). On the other hand, on the relatively balanced set (CTRL vs. ALC), the difference in accuracy between all methods is relatively small. Fig. 9 plots precision, recall, and F1-scores of all implementations, which confirm the findings of the table. Our method SFSH reports balanced precision and recall measures, specifically for the highly imbalanced case of CTRL vs. HIV. SFSH yields a precision and recall of (0.78, 0.76), while and SVM-W result in (0.75, 0.65) and (0.65, 0.56), respectively. This shows that the proposed method can deal with the class imbalance problem more robustly than the alternative methods, even those that are based on weighted loss functions.

TABLE 4:

The balanced accuracy (BAcc) of the proposed classifier and the baseline methods on the three neuroimaging datasets.

Fig. 9:

Comparisons of results (i.e., Precision, Recall and F1-score) between the proposed and the baseline methods.

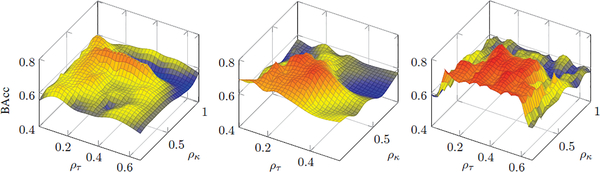

To gain deeper insight into SFSH, Fig. 10 shows the BAcc score as a function of selected samples (ρκ) and features (ρτ). To plot these diagrams, we conduct a fine grid-search across the two corresponding hyperparameters. Interestingly, the behavior of the method is specific to the classification task:

CTRL vs. ALC: Our method leads to the best results with the values of the two hyperparameters fixed to ρκ=0.2 and ρτ=0.7. Interestingly, on this set, ρκ in the narrow range of [0.65, 0.75] and ρτ in [0.1, 0.4] yield better BAcc scores compared to other values of the two hyperparameters. The relatively wide range of values for ρτ and ρκ leading to similar accuracy scores is probably due to the difficulty of accurately separating the two cohorts (i.e., the BAcc scores for all methods in Table 4 are relatively low).

CTRL vs. HIV: On this set, SFSH shows the best BAcc for (ρκ=0.25, ρτ=0.4). The two hyperparameters yield good BAcc scores in the following ranges: ρτ in [0.1, 0.35] and ρκ in [0.2, 0.6]. When the number of selected samples and features increases, the accuracy declines rapidly. These results imply that this dataset contains a relatively large number of unreliable (probably noisy) samples.

CTRL vs. ALC+HIV: The method achieves the highest BAcc score for (ρκ=0.30, ρτ=0.45). As far as ρτ is concerned, our method shows relatively good results in the range [0.2, 0.5] (red areas in Fig. 10). Interestingly, when fewer samples are selected, the classification accuracy is markedly higher. This can be easily seen in Fig. 10, as there are almost no red regions for ρκ > 0.7. These results suggest that adding training samples in a highly imbalanced setting does not always improve the accuracy of the classifier, as the training can be corrupted by noisy samples.

Fig. 10:

Balanced accuracy (BAcc) of the proposed method with hybrid regularization as functions of the ratio of selected samples (ρκ) and the ratio of selected features (ρτ).

The output of the experiments supports our modeling assumption that not all features are informative for classification (due to redundancy or not being impacted by the conditions) as our method performs better on a subset of the features. A similar argument can be made for the sample selection component. As feature and sample selection are done in tandem with parameterizing the classifier, our algorithm, unlike alternative implementations, adjusts its threshold for the number of selected features and samples to the given dataset and thus achieves the highest accuracy scores across the three experiments.

Biomedical Findings

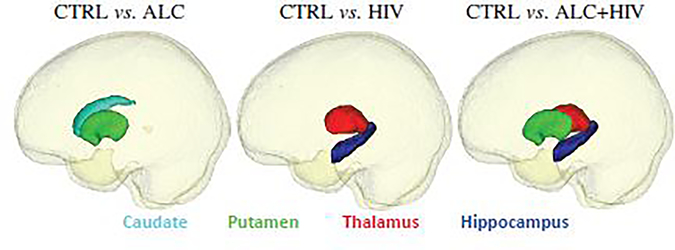

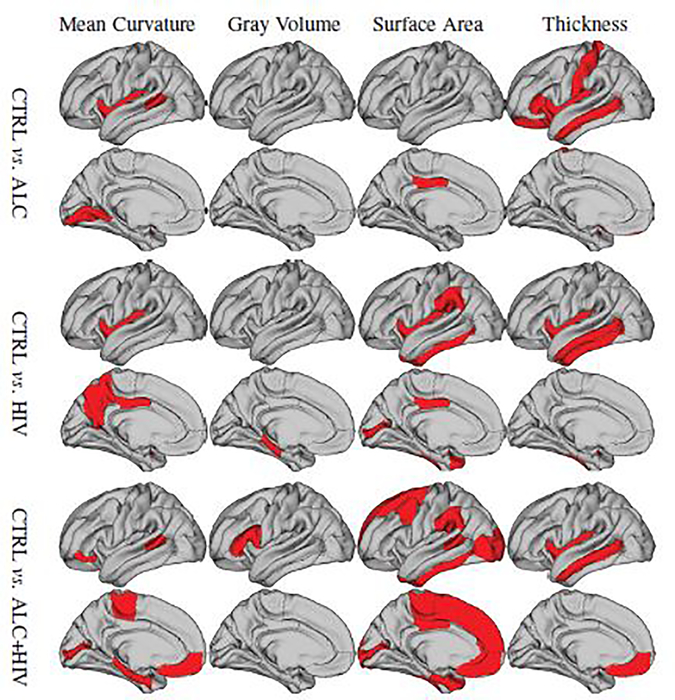

By analyzing the features selected by our method, we identify the most important features (analogous to brain ROIs) related to the disease. To this end, we plot the ROIs whose respective feature was selected at least 80% of the time in the 10-fold cross-validation setting. Fig. 11 visualizes the subcortical ROIs meeting that threshold and Fig. 12 shows the cortical ROIs that meet that threshold with respect to Mean Curvature, Gray Matter Volume, Surface Area, and Cortical Thickness. As the selected features are the best predictors of the class label, i.e., distinguishing controls from the disease cohort, they can be considered imaging biomarkers for each of these conditions.

Fig. 11:

Selected subcortical ROIs by our method (i.e., SFSH) for the three neuroimage datasets.

Fig. 12:

Selected ROIs by our method (i.e., SFSH) for the three neuroimage datasets.
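The 80% selection threshold used for Figs. 11 and 12 amounts to a simple frequency count over the cross-validation folds, sketched below. The function `stable_features` and the toy fold contents are hypothetical; the ROI names are placeholders and do not represent actual selections.

```python
from collections import Counter

def stable_features(selected_per_fold, threshold=0.8):
    """Return the features selected in at least `threshold` of the folds."""
    # Count each feature at most once per fold.
    counts = Counter(f for fold in selected_per_fold for f in set(fold))
    n_folds = len(selected_per_fold)
    return sorted(f for f, c in counts.items() if c >= threshold * n_folds)

# Toy 10-fold example: 'hippocampus' is selected in 8 folds, 'thalamus' in all
# 10 folds, and 'putamen' in only 2 folds.
folds = [["hippocampus", "thalamus"]] * 8 + [["thalamus", "putamen"]] * 2
frequent = stable_features(folds)
```

Reporting only features that pass such a threshold reduces the influence of selections that depend on a particular training split.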

The patterns identified by the proposed method comprise approximately 12% of the 298 features for the CTRL vs. ALC experiment, 16% for the CTRL vs. HIV experiment, and 23% for the CTRL vs. ALC+HIV experiment. In other words, the experiment associated with the highest accuracy score, i.e., CTRL vs. ALC+HIV, records the largest number of frequently selected ROIs. This phenomenon might document a compounding effect of ALC and HIV in the ALC+HIV cohort. This observation is furthermore supported by the fact that most of the features selected with respect to the other two experiments (i.e., CTRL vs. ALC and CTRL vs. HIV) are also selected for identifying the ALC+HIV cohort.

The selected features are also in agreement with the literature, which suggests that ALC and HIV-infection are associated with volume deficits in cortical and subcortical regions [80–84]. Furthermore, studies have reported on cortical thickness and surface area being more important than cortical gray volume for both ALC and HIV-infection [85, 86], which agrees with our findings. Subcortical regions, including hippocampus, thalamus and basal ganglia structures (including caudate and putamen), are reported in the literature to be affected by HIV and ALC [72, 87–89], which are also found important by our method.

4. Discussion and Conclusion

We proposed an algorithm for training logistic regression, where the parameterization of the classifier explicitly accounted for noise being present in the samples, the existence of irrelevant features, and class imbalance. We implemented the method based on cardinality constraints enforced by the ℓ0-’norm’ and surrogates. According to the proofs, our method converges to a local minimum of this non-convex optimization problem. The most accurate implementation, i.e., SFSH, coupled a soft constraint defined by the ℓ2-norm with a hard constraint enforced through the ℓ0-’norm’. This finding resonates with those reported for other hybrid regularization schemes, such as the elastic net [60], which couples ℓ2 with ℓ1. Also, note that our sample selection scheme discarded samples that deviated strongly with respect to the prediction loss (i.e., outliers). Therefore, the selected samples lie closer to the decision boundary if a linear boundary between the two classes is drawn (similar to support vectors in SVM). As a result, the selected samples formed a set well suited for building the classifier.

SFSH and the other implementations of our proposed methods also recorded higher balanced accuracy scores than previously published methods. We first tested the methods on synthetic data gradually adding noise to the features and samples. Compared to alternative implementations, our method was better in omitting noisy samples and features from the training process, which explained its higher testing accuracy. The next set of experiments on image recognition benchmark and neuroimaging datasets confirmed the higher accuracy scores on real data that were corrupted by noise, contained irrelevant features, and had skewed class distributions. The three experiments on the image-based recognition and verification tasks showed that jointly selecting relevant features and samples during training can improve the results and outperform the already published results, especially under such highly imbalanced settings. With respect to the neuroimaging data, our method identified brain regions to be impacted by ALC or HIV that had been reported on in the neuroscience literature. For most of the cortical regions, our approach selected Surface Area or Thickness as informative but rarely their volume. This is interesting as cortical volume can be estimated by multiplying surface area with cortical thickness. We, thus, experimentally showed that our model selects a compact set of relevant features.

In summary, we proposed a classifier that extends logistic regression so that it selects not only informative features but also informative samples for distinguishing the cohorts. We solved the resulting ℓ0-based non-convex optimization problem by coupling block coordinate descent (BCD) with the penalty decomposition (PD) approach. The higher accuracy scores on several experiments compared to other published works demonstrated the advantage of our proposed method. Furthermore, the method focused on regions impacted by ALC or HIV, highlighting its potential for identifying imaging phenotypes that improve the mechanistic understanding of psychiatric diseases and disorders. As a direction for future work, to apply our logistic model to other real-world applications, one can embed a version of the loss function (possibly with convex surrogates) into deep feature learning strategies.

Supplementary Material

Acknowledgments

This research was supported in part by NIH grants U01-AA017347, R37-AA010723, K05-AA017168, K23-AG032872, R01-MH113406, R01-HL127661, AA005965, and AA026762. The authors also would like to thank Drs. Zirui Zhou (Hong Kong Baptist University), Bin Dong (Peking University), Qingyu Zhao (Stanford University), and Mrs. Lisa M. Jack (SRI and Stanford) for proofreading and their inputs on the theoretical aspects of the paper.

Biography

Ehsan Adeli is a postdoctoral research fellow at Stanford University. He received his Ph.D. from Iran University of Science and Technology, after which he worked as a postdoctoral researcher at the University of North Carolina at Chapel Hill. He has previously worked as a visiting research scholar at the Robotics Institute, Carnegie Mellon University in Pittsburgh, PA. Dr. Adeli’s research interests include machine learning, computer vision, medical image analysis and computational neuroscience.

Xiaorui Li received her master’s degree in mathematics from Simon Fraser University in Canada, where she is currently working toward the Ph.D. degree on Operations Research. Her current interests include optimization theory, algorithms and applications in pattern recognition and machine learning.

Dongjin Kwon is a research scientist at SRI International and Stanford University. He received his Ph.D. degree in Electrical Engineering and Computer Science from Seoul National University, Korea. He was a postdoctoral researcher at University of Pennsylvania. Dr. Kwon’s research interests include medical image analysis, computer vision, machine learning and fingerprint recognition.

Yong Zhang received his Ph.D. from Simon Fraser University in 2014. He then took up a postdoctoral research position at Stanford University. He is currently a senior principal engineer in Vancouver Research Center, Huawei, Burnaby, BC, Canada. His major interests involve applying various optimization methods to solving problems arising in computer vision, machine learning, and medical image analysis.

Kilian M. Pohl received the PhD degree from the Computer Science and Artificial Intelligence Laboratory, Massachusetts Institute of Technology. He is currently the Program Director of Biomedical Computing at the Center for Health Sciences, SRI International. His research focuses on creating algorithms aimed at identifying biomedical phenotypes accelerating the mechanistic understanding, diagnosis, and treatment of neuropsychiatric conditions.

Footnotes

Notations: We use $\mathbb{R}$ to denote the set of all real numbers. The set of n-dimensional real vectors is denoted by $\mathbb{R}^n$, and $\{0, 1\}^n$ denotes the set of all n-dimensional binary vectors. Bold capital letters denote matrices (e.g., D), and bold small letters denote vectors (e.g., d, β). All non-bold letters denote scalar variables. di denotes the ith component of vector d. Vector norms are defined as: ℓ1-norm $\|d\|_1 = \sum_i |d_i|$, ℓ2-norm $\|d\|_2 = \sqrt{\sum_i d_i^2}$, and infinity-norm $\|d\|_\infty = \max_i |d_i|$. Also, the ℓ0-’norm’ (also known as cardinality) of d is defined as the number of nonzero entries in d. The inner product of two vectors $d_1$ and $d_2$ is defined as $\langle d_1, d_2 \rangle = d_1^\top d_2$. $\|D\|_F$ designates the Frobenius norm of D. |A| is defined as the number of elements in the set A.

Contributor Information

Ehsan Adeli, Stanford University, Stanford, CA, 94305.

Xiaorui Li, Department of Mathematics, Simon Fraser University, Burnaby, BC, Canada V5A 1S6.

Dongjin Kwon, Center for Health Sciences, SRI International, Menlo Park, CA, 94025; Department of Psychiatry and Behavioral Sciences, Stanford University, Stanford, CA, 94305.

Yong Zhang, Vancouver Research Center, Huawei, Burnaby, BC, Canada V5C 6S7.

Kilian M. Pohl, Center for Health Sciences, SRI International, Menlo Park, CA, 94025.

References

- [1].Sabokrou M, Khalooei M, Fathy M, and Adeli E, “Adversarially learned one-class classifier for novelty detection,” in CVPR, 2018. [Google Scholar]

- [2].Breunig MM, Kriegel H-P, Ng RT, and Sander J, “Lof: identifying density-based local outliers,” in ACM sigmod record, vol. 29, pp. 93–104, ACM, 2000. [Google Scholar]

- [3].Maalouf M and Trafalis TB, “Robust weighted kernel logistic regression in imbalanced and rare events data,” Computational Statistics & Data Analysis, vol. 55, no. 1, pp. 168–183, 2011. [Google Scholar]

- [4].Adeli-Mosabbeb E and Fathy M, “Non-negative matrix completion for action detection,” Image and Vision Computing, vol. 39, pp. 38–51, 2015. [Google Scholar]

- [5].Adeli-Mosabbeb E, Thung K-H, An L, Shi F, and Shen D, “Robust feature-sample linear discriminant analysis for brain disorders diagnosis,” in NIPS, 2015. [Google Scholar]

- [6].Coupé P, Yger P, Prima S, Hellier P, Kervrann C, and Barillot C, “An optimized blockwise nonlocal means denoising filter for 3-d magnetic resonance images,” IEEE transactions on medical imaging, vol. 27, no. 4, pp. 425–441, 2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [7].Adeli E, Thung K-H, An L, Wu G, Shi F, Wang T, and Shen D, “Semi-supervised discriminative classification robust to sample-outliers and feature-noises,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [8].Djamanakova A, Tang X, Li X, Faria AV, Ceritoglu C, Oishi K, Hillis AE, Albert MS, Lyketsos C, Miller MI, and Mori S, “Tools for multiple granularity analysis of brain MRI data for individualized image analysis,” NeuroImage, vol. 101, no. 0, pp. 168–176, 2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [9].Thung K-H, Wee C-Y, Yap P-T, and Shen D, “Neurodegenerative disease diagnosis using incomplete multi-modality data via matrix shrinkage and completion,” NeuroImage, vol. 91, pp. 386–400, 2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [10].Wan X, Liu J, Cheung WK, and Tong T, “Learning to improve medical decision making from imbalanced data without a priori cost,” BMC medical informatics and decision making, vol. 14, no. 1, p. 111, 2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [11].Hosmer DW Jr, Lemeshow S, and Sturdivant RX, Applied logistic regression, vol. 398. John Wiley & Sons, 2013.

- [12].Mwangi B, Tian TS, and Soares JC, “A review of feature reduction techniques in neuroimaging,” Neuroinformatics, vol. 12, no. 2, pp. 229–244, 2014.

- [13].Hodge V and Austin J, “A survey of outlier detection methodologies,” Artificial intelligence review, vol. 22, no. 2, pp. 85–126, 2004.

- [14].Kang Q, Chen X, Li S, and Zhou M, “A noise-filtered under-sampling scheme for imbalanced classification,” IEEE transactions on cybernetics, vol. 47, no. 12, pp. 4263–4274, 2017.

- [15].He H and Garcia EA, “Learning from imbalanced data,” IEEE Transactions on knowledge and data engineering, vol. 21, no. 9, pp. 1263–1284, 2009.

- [16].Ng V and Cardie C, “Combining sample selection and error-driven pruning for machine learning of coreference rules,” in Proceedings of the ACL conference on Empirical methods in natural language processing, pp. 55–62, Association for Computational Linguistics, 2002.

- [17].Zellner D, Keller F, and Zellner GE, “Variable selection in logistic regression models,” Communications in Statistics-Simulation and Computation, vol. 33, no. 3, pp. 787–805, 2004.

- [18].Bursac Z, Gauss CH, Williams DK, and Hosmer DW, “Purposeful selection of variables in logistic regression,” Source code for biology and medicine, vol. 3, no. 1, p. 17, 2008.

- [19].Austin PC and Steyerberg EW, “The number of subjects per variable required in linear regression analyses,” Journal of clinical epidemiology, vol. 68, no. 6, pp. 627–636, 2015.

- [20].Agresti A, “Building and applying logistic regression models,” Categorical Data Analysis, Second Edition, pp. 211–266, 2003.

- [21].Pregibon D, “Logistic regression diagnostics,” The Annals of Statistics, pp. 705–724, 1981.

- [22].Goodfellow I, Bengio Y, and Courville A, Deep learning, vol. 1. MIT Press, Cambridge, 2016.

- [23].Chapelle O, “Training a support vector machine in the primal,” Neural computation, vol. 19, no. 5, pp. 1155–1178, 2007.

- [24].Feng J, Xu H, Mannor S, and Yan S, “Robust logistic regression and classification,” in NIPS, pp. 253–261, 2014.

- [25].Tibshirani J and Manning CD, “Robust logistic regression using shift parameters,” in Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics, vol. 2, pp. 124–129, 2014.

- [26].LeCun Y, Bottou L, Bengio Y, and Haffner P, “Gradient-based learning applied to document recognition,” Proceedings of the IEEE, vol. 86, no. 11, pp. 2278–2324, 1998.

- [27].Nene SA, Nayar SK, and Murase H, “Columbia object image library (COIL-20) (technical report),” Columbia University, 1996.

- [28].Sim T, Baker S, and Bsat M, “The CMU pose, illumination, and expression (PIE) database,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 25, no. 12, pp. 1615–1618, 2003.

- [29].Bron E, Smits M, van Swieten J, Niessen W, and Klein S, “Feature selection based on SVM significance maps for classification of dementia,” in Machine Learning in Medical Imaging, vol. 8679, pp. 272–279, 2014.

- [30].Oh JH, Kim YB, Gurnani P, Rosenblatt K, and Gao J, “Biomarker selection for predicting Alzheimer disease using high-resolution MALDI-TOF data,” in IEEE International Conference on Bioinformatics and Bioengineering, pp. 464–471, October 2007.

- [31].Fischler MA and Bolles RC, “Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography,” Commun. ACM, vol. 24, no. 6, pp. 381–395, 1981.

- [32].Tang Y, Zhang Y-Q, Chawla NV, and Krasser S, “SVMs modeling for highly imbalanced classification,” IEEE Transactions on Systems, Man, and Cybernetics, Part B (Cybernetics), vol. 39, no. 1, pp. 281–288, 2009.

- [33].Hwang JP, Park S, and Kim E, “A new weighted approach to imbalanced data classification problem via support vector machine with quadratic cost function,” Expert Systems with Applications, vol. 38, no. 7, pp. 8580–8585, 2011.

- [34].Ertekin Ş, “Adaptive oversampling for imbalanced data classification,” in Information Sciences and Systems 2013, pp. 261–269, Springer, 2013.

- [35].Chawla NV, Bowyer KW, Hall LO, and Kegelmeyer WP, “SMOTE: synthetic minority over-sampling technique,” Journal of artificial intelligence research, vol. 16, pp. 321–357, 2002.

- [36].Gu Q, Li Z, and Han J, “Generalized Fisher score for feature selection,” in UAI, 2011.

- [37].Coates A, Lee H, and Ng A, “An analysis of single-layer networks in unsupervised feature learning,” in AI and Stat., Journal of Machine Learning Research, vol. 15, pp. 215–223, 2011.

- [38].Nie F, Huang H, Cai X, and Ding CH, “Efficient and robust feature selection via joint ℓ2,1-norms minimization,” in NIPS, pp. 1813–1821, 2010.

- [39].Peng H, Long F, and Ding C, “Feature selection based on mutual information criteria of max-dependency, max-relevance, and min-redundancy,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 27, no. 8, pp. 1226–1238, 2005.

- [40].Zhang Y, Kwon D, Esmaeili-Firidouni P, Pfefferbaum A, Sullivan EV, Javitz H, Valcour V, and Pohl KM, “Extracting patterns of morphometry distinguishing HIV associated neurodegeneration from mild cognitive impairment via group cardinality constrained classification,” Human brain mapping, vol. 37, no. 12, pp. 4523–4538, 2016.

- [41].Saeys Y, Inza I, and Larrañaga P, “A review of feature selection techniques in bioinformatics,” Bioinformatics, vol. 23, no. 19, pp. 2507–2517, 2007.

- [42].Adeli E, Wu G, Saghafi B, An L, Shi F, and Shen D, “Kernel-based joint feature selection and max-margin classification for early diagnosis of Parkinson’s disease,” Scientific reports, vol. 7, 2017.

- [43].Rosa MJ, Portugal L, Hahn T, Fallgatter AJ, Garrido MI, Shawe-Taylor J, and Mourao-Miranda J, “Sparse network-based models for patient classification using fMRI,” NeuroImage, vol. 105, pp. 493–506, 2015.

- [44].Blumensath T and Davies ME, “Iterative thresholding for sparse approximations,” Journal of Fourier Analysis and Applications, vol. 14, pp. 629–654, December 2008.

- [45].Tropp JA, “Greed is good: algorithmic results for sparse approximation,” IEEE Transactions on Information Theory, vol. 50, pp. 2231–2242, October 2004.

- [46].Natarajan BK, “Sparse approximate solutions to linear systems,” SIAM J on Computing, vol. 24, no. 2, pp. 227–234, 1995.

- [47].Tibshirani R, “Regression shrinkage and selection via the lasso: a retrospective,” Journal of the Royal Statistical Society: Series B (Statistical Methodology), vol. 73, no. 3, pp. 273–282, 2011.

- [48].Lu Z and Li X, “Sparse recovery via partial regularization: Models, theory and algorithms,” Mathematics of Operations Research, 2017.

- [49].Zhang T, “Analysis of multi-stage convex relaxation for sparse regularization,” J. Mach. Learn. Res, vol. 11, pp. 1081–1107, March 2010.

- [50].Fan J and Li R, “Variable selection via nonconcave penalized likelihood and its oracle properties,” J of the American Statistical Association, vol. 96, no. 456, pp. 1348–1360, 2001.

- [51].Candès EJ, Wakin MB, and Boyd SP, “Enhancing sparsity by reweighted ℓ1 minimization,” Journal of Fourier Analysis and Applications, vol. 14, pp. 877–905, December 2008.

- [52].Foucart S and Lai M-J, “Sparsest solutions of underdetermined linear systems via ℓq-minimization for 0 < q ≤ 1,” Applied and Computational Harmonic Analysis, vol. 26, no. 3, pp. 395–407, 2009.

- [53].Adeli E, Shi F, An L, Wee C-Y, Wu G, Wang T, and Shen D, “Joint feature-sample selection and robust diagnosis of Parkinson’s disease from MRI data,” NeuroImage, vol. 141, pp. 206–219, 2016.

- [54].Huber PJ and Ronchetti E, “Robust statistics, series in probability and mathematical statistics,” 1981.

- [55].Gislason PO, Benediktsson JA, and Sveinsson JR, “Random forests for land cover classification,” Pattern Recognition Letters, vol. 27, no. 4, pp. 294–300, 2006.

- [56].Liu A and Ziebart B, “Robust classification under sample selection bias,” in NIPS, pp. 37–45, 2014.

- [57].Mohsenzadeh Y, Sheikhzadeh H, Reza AM, Bathaee N, and Kalayeh MM, “The relevance sample-feature machine: A sparse bayesian learning approach to joint feature-sample selection,” IEEE Transactions on Cybernetics, vol. 43, no. 6, pp. 2241–2254, 2013.

- [58].An L, Adeli E, Liu M, Zhang J, and Shen D, “Semi-supervised hierarchical multimodal feature and sample selection for Alzheimer’s disease diagnosis,” in International Conference on Medical Image Computing and Computer-Assisted Intervention, pp. 79–87, Springer, 2016.

- [59].Meinshausen N, “Relaxed lasso,” Computational Statistics and Data Analysis, pp. 374–393, 2007.

- [60].Zou H and Hastie T, “Regularization and variable selection via the elastic net,” J. of Royal Statistical Society: Series B (Statistical Methodology), vol. 67, no. 2, pp. 301–320, 2005.

- [61].Berkson J, “Application of the logistic function to bio-assay,” J of the American Statistical Association, vol. 39, no. 227, pp. 357–365, 1944.

- [62].Zhang Y, Kwon D, and Pohl KM, “Computing group cardinality constraint solutions for logistic regression problems,” Medical image analysis, vol. 35, pp. 58–69, 2017.

- [63].Hoerl A and Kennard R, “Ridge regression: Biased estimation for nonorthogonal problems,” Technometrics, vol. 42, no. 1, pp. 80–86, 2000.

- [64].Ortega J and Rheinboldt W, Iterative Solution of Nonlinear Equations in Several Variables. Society for Industrial and Applied Math, 2000.

- [65].Lu Z and Zhang Y, “Sparse approximation via penalty decomposition methods,” SIAM J on Optimization, vol. 23, no. 4, pp. 2448–2478, 2013.

- [66].Lagarias JC, Reeds JA, Wright MH, and Wright PE, “Convergence properties of the Nelder–Mead simplex method in low dimensions,” SIAM Journal on Optimization, vol. 9, no. 1, pp. 112–147, 1998.

- [67].Wright SJ, Nowak RD, and Figueiredo MA, “Sparse reconstruction by separable approximation,” IEEE Transactions on Signal Processing, vol. 57, no. 7, pp. 2479–2493, 2009.

- [68].Xia Y, Cao X, Wen F, Hua G, and Sun J, “Learning discriminative reconstructions for unsupervised outlier removal,” in Proceedings of the IEEE International Conference on Computer Vision, pp. 1511–1519, 2015.

- [69].Wang J, You J, Li Q, and Xu Y, “Extract minimum positive and maximum negative features for imbalanced binary classification,” Pattern Recognition, vol. 45, no. 3, pp. 1136–1145, 2012.

- [70].He X, Yan S, Hu Y, Niyogi P, and Zhang H-J, “Face recognition using Laplacianfaces,” IEEE transactions on pattern analysis and machine intelligence, vol. 27, no. 3, pp. 328–340, 2005.

- [71].Pfefferbaum A, Rosenbloom MJ, Rohlfing T, Adalsteinsson E, Kemper CA, Deresinski S, and Sullivan EV, “Contribution of alcoholism to brain dysmorphology in HIV infection: effects on the ventricles and corpus callosum,” NeuroImage, vol. 33, no. 1, pp. 239–251, 2006.

- [72].Pfefferbaum A, Rosenbloom MJ, Sassoon SA, Kemper CA, Deresinski S, Rohlfing T, and Sullivan EV, “Regional brain structural dysmorphology in human immunodeficiency virus infection: effects of acquired immune deficiency syndrome, alcoholism, and age,” Biological psychiatry, vol. 72, no. 5, pp. 361–370, 2012.

- [73].Pfefferbaum A, Rogosa DA, Rosenbloom MJ, Chu W, Sassoon SA, Kemper CA, Deresinski S, Rohlfing T, Zahr NM, and Sullivan EV, “Accelerated aging of selective brain structures in human immunodeficiency virus infection: a controlled, longitudinal magnetic resonance imaging study,” Neurobiology of aging, vol. 35, no. 7, pp. 1755–1768, 2014.

- [74].Liu G, Lin Z, Yan S, Sun J, Yu Y, and Ma Y, “Robust recovery of subspace structures by low-rank representation,” IEEE Trans. on Pattern Analysis and Machine Intelligence, vol. 35, no. 1, pp. 171–184, 2013.

- [75].Zimmerman DW, “Teacher’s corner: A note on interpretation of the paired-samples t test,” Journal of Educational and Behavioral Statistics, vol. 22, no. 3, pp. 349–360, 1997.

- [76].Madsen H and Thyregod P, Introduction to general and generalized linear models. CRC Press, 2010.

- [77].Kriegeskorte N, Simmons WK, Bellgowan PS, and Baker CI, “Circular analysis in systems neuroscience: the dangers of double dipping,” Nature neuroscience, vol. 12, no. 5, pp. 535–540, 2009.

- [78].Ng AY, “Feature selection, L1 vs. L2 regularization, and rotational invariance,” in Proceedings of the twenty-first international conference on Machine learning, p. 78, ACM, 2004.

- [79].Fisher RA, “The logic of inductive inference,” Journal of the Royal Statistical Society, vol. 98, no. 1, pp. 39–82, 1935.