Abstract

Combining information from multiple sources is a fundamental operation performed by networks of neurons in the brain, whose general principles are still largely unknown. Experimental evidence suggests that combination of inputs in cortex relies on nonlinear summation. Such nonlinearities are thought to be fundamental to perform complex computations. However, these non-linearities are inconsistent with the balanced-state model, one of the most popular models of cortical dynamics, which predicts networks have a linear response. This linearity is obtained in the limit of very large recurrent coupling strength. We investigate the stationary response of networks of spiking neurons as a function of coupling strength. We show that, while a linear transfer function emerges at strong coupling, nonlinearities are prominent at finite coupling, both at response onset and close to saturation. We derive a general framework to classify nonlinear responses in these networks and discuss which of them can be captured by rate models. This framework could help to understand the diversity of non-linearities observed in cortical networks.

Author summary

Models of cortical networks are often studied in the strong coupling limit, where the so-called balanced state emerges. Across a wide range of parameters, balanced state models explain a number of ubiquitous properties of cortex, such as irregular neural firing. However, in the strong coupling limit, balanced state models show an unrealistic linear network transfer function. We examine, in networks of spiking neurons, how nonlinearities arise as network coupling strength is reduced to realistic levels. We use closed-form solutions that arise from mean-field analysis, and confirm the results with numerical simulations, to show that nonlinearities at response onset and saturation emerge as coupling strength is reduced. Critically, for realistic parameter values, both types of nonlinearities are observed at experimentally observed firing rates. Thus, cortical network models with moderate coupling strength can account for experimentally observed cortical response nonlinearities.

Introduction

The ability of the brain to perform complex functions relies on circuits combining inputs from several sources to make decisions and drive behavior. The principles governing how different inputs are combined in neuronal circuits have yet to be uncovered. One of the leading theoretical models for cortical dynamics and its dependence on inputs is the balanced network model [1, 2]. In this model, a ‘balanced state’ in which excitation and inhibition approximately cancel each other emerges dynamically, without fine tuning, in the strong coupling limit. This model captures in a parsimonious fashion multiple aspects of cortex dynamics. In particular, it leads to irregular firing [1–4], wide firing rate distributions [1–3, 5], and weak correlations [6] (but see [7–9]). These properties match experimental observations [6, 10–15] and seem to be universal features of strongly coupled units, as they emerge in strongly coupled networks of binary units [1, 2], current-based spiking neurons [4, 5], and conductance-based spiking neurons [16], although the underlying operating mechanism might differ [16]. Another feature of strongly coupled neural networks is the linearity of the relationship between input rate and mean population response, i.e. of the network static transfer function, which emerges even when the single neuron response is highly nonlinear [1, 2, 4, 16]. This property, however, is problematic for several reasons. First, it limits the possible computations implementable by such networks, as nonlinearities are fundamental to perform computations, and layers of networks with linear transfer functions can only perform linear computations. Second, it implies that different inputs should be summed linearly, a prediction that is contradicted by experimental evidence in cortex. In fact, multiple studies have found that neural responses to preferred stimuli are suppressed by contextual stimuli at high contrast and enhanced at low contrast (e.g. [17–20]). Third, linear combination of inputs fails to predict responses to natural images starting from those measured with elementary stimuli [21].

Different mechanisms have been proposed to explain how nonlinearities can be produced in networks of neurons. One possibility is that nonlinear network responses are generated by short-term plasticity [22]. However, the degree to which synapses are facilitated or depressed in vivo is not known. Moreover, it would be informative to understand whether nonlinear computations can be produced in networks with linear synapses. It has been pointed out that nonlinearities can be produced in rate models featuring a power-law transfer function [23, 24]. In particular, it has been shown that these models can produce saturated responses while preserving contrast invariant tuning [23], and that these features are reproducible in simulations of conductance-based neurons [23]. In the stabilized supralinear network model (or SSN [24]), power-law transfer functions have been used to explain a variety of nonlinearities observed in cortex [25]. While these papers have been successful in explaining experimental data, their relationship to more realistic networks of spiking neurons is not fully understood. More generally, a theory of nonlinearities in networks of spiking neurons, explaining under what conditions they can be generated and what the underlying mechanisms are, is missing.

In this paper, we investigate analytically and numerically the response of networks of current-based integrate-and-fire neurons as a function of coupling strength. We show that, while a linear transfer function is obtained in the strong coupling limit, nonlinearities at response onset and at saturation appear as the coupling is decreased. We systematically characterize how they are shaped by single neuron properties and network connectivity, and compare them to nonlinearities observed in rate models with power-law transfer function. A preliminary version of these results has been presented in abstract form in [26].

Materials and methods

Networks of spiking neurons

We study a randomly connected network of excitatory and inhibitory leaky integrate-and-fire neurons, using a theoretical framework that was developed in refs. [3, 4]. The network is composed of N leaky integrate-and-fire (LIF) neurons, out of which NE are excitatory (E) and the remaining NI = N − NE are inhibitory (I). The dynamics of the membrane potential of neuron i (i = 1, …, N) obeys

$$\tau_i \frac{dV_i}{dt} = -V_i + R I_i, \tag{1}$$

where τi, R and Ii are the membrane time constant, the membrane resistance and the input current of the neuron. The input current is generated by the sum of incoming spikes generated by pre-synaptic neurons, which could be within or outside the network (external inputs); this input is written as

$$R I_i(t) = \tau_i \sum_j J_{ij} \sum_k \delta\left(t - t_j^k - D\right), \tag{2}$$

where $t_j^k$ is the emission time of the k-th spike of presynaptic neuron j, which reaches neuron i after a synaptic delay D, and Jij is the synaptic efficacy from neuron j to neuron i. Every time the membrane potential Vi reaches the firing threshold θ, neuron i emits a spike, its membrane potential is set to a reset value Vr, and it stays at that value for a refractory period τrp; after this time the dynamics continues as before. Assuming that connectivity is sparse, that a neuron receives a large fixed number of presynaptic inputs from each presynaptic population, that each presynaptic spike causes a small change in membrane potential (Jij/θ ≪ 1), that temporal correlations in synaptic inputs can be neglected, that all neurons in a given population are described by the same single cell parameters, and that the network is in an asynchronous state in which all neurons fire at a constant firing rate, one can use the diffusion approximation to compute the firing rate of neurons in population A (A = E, I) [3, 27, 28]

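$$\nu_A = \left[\tau_{rp} + \tau_A \sqrt{\pi} \int_{u_{\min,A}}^{u_{\max,A}} e^{u^2}\left(1 + \mathrm{erf}(u)\right) du\right]^{-1}, \tag{3}$$

where umax,A and umin,A are the distances from threshold and reset of the mean input μA, measured in units of noise σA, i.e.

$$u_{\max,A} = \frac{\theta - \mu_A}{\sigma_A}, \qquad u_{\min,A} = \frac{V_r - \mu_A}{\sigma_A}, \tag{4}$$

where means and variances are given by

$$\mu_A = \tau_A\left[J_{AX} K_{AX} \nu_{AX} + J_{AE} K_{AE} \nu_E - J_{AI} K_{AI} \nu_I\right], \qquad \sigma_A^2 = \tau_A\left[J_{AX}^2 K_{AX} \nu_{AX} + J_{AE}^2 K_{AE} \nu_E + J_{AI}^2 K_{AI} \nu_I\right]. \tag{5}$$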

Here νAX are the average firing rates of neurons from outside the network providing inputs to population A, while KAB and JAB are the number of connections and the synaptic efficacy from population B (B = E, I and X for external) to population A. The right side of Eq (3) is sometimes referred to as the Ricciardi transfer function, or nonlinearity [27]. It relates the presynaptic input mean μ and noise σ of a neuron to its firing rate.
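The Ricciardi transfer function can be evaluated numerically in a few lines. The following is a minimal sketch, not the authors' code: it assumes Eq (3) has the standard form ν = [τrp + τ√π ∫ e^{u²}(1 + erf(u)) du]⁻¹ with limits (Vr − μ)/σ and (θ − μ)/σ, uses Simpson's rule, and switches to an asymptotic series for very negative u. The function names, the cutoffs at u = −10 and u = 26, and the number of integration steps are illustrative choices.

```python
import math

SQRT_PI = math.sqrt(math.pi)

def integrand(u):
    """exp(u^2) * (1 + erf(u)), kept finite for very negative u."""
    if u < -10.0:
        # Asymptotic series of exp(u^2) * erfc(-u) for u -> -infinity
        return -1.0 / (SQRT_PI * u) * (1.0 - 0.5 / u**2 + 0.75 / u**4)
    if u > 26.0:
        return math.inf  # exp(u^2) would overflow; the rate is then ~0
    return math.exp(u * u) * math.erfc(-u)

def lif_rate(mu, sigma, tau=0.02, tau_rp=0.002, theta=20.0, v_r=10.0, n=2000):
    """Firing rate in Hz; mu, sigma, theta, v_r in mV, tau and tau_rp in s."""
    u_min = (v_r - mu) / sigma
    u_max = (theta - mu) / sigma
    h = (u_max - u_min) / n  # n must be even for Simpson's rule
    total = integrand(u_min) + integrand(u_max)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * integrand(u_min + i * h)
    return 1.0 / (tau_rp + tau * SQRT_PI * total * h / 3.0)

# Rates for a mean input below, at, and above threshold (sigma = 5 mV)
rates = [lif_rate(mu, 5.0) for mu in (15.0, 20.0, 25.0)]
```

For inputs far above threshold and small noise, the result should approach the deterministic rate 1/(τrp + τ log((μ − Vr)/(μ − θ))), which gives a quick sanity check of the implementation.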

Note that the lack of temporal correlations in synaptic inputs implies both that synaptic time constants are negligible, and that neurons fire with approximately Poissonian statistics [29]. These temporal correlations have a quantitative but not qualitative effect on the single neuron transfer function [30–34]. We also point out that all calculations of finite K and J effects in this paper are done within the framework of the diffusion approximation, and therefore do not include deviations from the diffusion approximation. However, mean field results obtained with the diffusion approximation quantitatively match simulations of spiking neurons (e.g. see comparison with network simulations in the results section). We also show, in the Supporting Information (S2 Text), that the results obtained in this paper remain valid when the diffusion approximation is relaxed and the shot-noise structure of the synaptic input is taken into account.

Firing variability is quantified with the coefficient of variation (CV) of inter-spike intervals (ISI), given by [4]

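$$\mathrm{CV}^2 = 2\pi\,(\nu\tau)^2 \int_{u_{\min}}^{u_{\max}} e^{x^2}\,dx \int_{-\infty}^{x} e^{y^2}\left[1 + \mathrm{erf}(y)\right]^2 dy. \tag{6}$$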

The network activity is found self-consistently from Eqs (3)–(5). As in [4] we investigated two models: model A, in which all neurons have the same biophysical and input connectivity properties, and model B, in which the excitatory and the inhibitory populations have different properties.

Throughout the paper, we use the following parametrizations:

- In model A, we use JEE = JIE = J, JEX = JIX = gX J, JEI = JII = gJ, KEX = KEE = KIX = KIE = K and KEI = KII = γK, so that the equations for means and variances become (note that in this model the excitatory and inhibitory rates are equal, νE = νI = ν)

  $$\mu = \tau K J\left[\nu_X + (1 - g\gamma)\,\nu\right], \qquad \sigma^2 = \tau K J^2\left[g_X \nu_X + (1 + \gamma g^2)\,\nu\right], \tag{7}$$

  where, without loss of generality, we have absorbed a factor gX in the external drive νX.
- In model B, we use JEX = gEX JEE, JIX = gIX JIE, JEI = gE JEE, JII = gI JIE, KEX = KEE, KIX = KIE and KAI = γKAE. In this case, the equations for means and variances in both populations (A = E, I) read

  $$\mu_A = \tau_A K_{AE} J_{AE}\left[\nu_{AX} + \nu_E - g_A \gamma\,\nu_I\right], \qquad \sigma_A^2 = \tau_A K_{AE} J_{AE}^2\left[g_{AX} \nu_{AX} + \nu_E + g_A^2 \gamma\,\nu_I\right], \tag{8}$$

  where we have again absorbed gAX in the external drive νAX. Moreover, we take inputs of the form νAX = αA νX, where αE,I are fixed parameters while νX represents the intensity of the input.

Throughout the paper, we use θ = 20 mV, Vr = 10 mV, τE = τI = 20 ms, τrp = 2 ms, and γ = 0.25. Note that the equations for the firing rates are independent of the synaptic delays D and the total number of neurons N. We specify the values of these parameters at the end of the results section, where our analytical results are compared with numerical simulations.
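To make the self-consistency condition concrete, the sketch below solves the mean-field equations of model A by bisection. It is an illustration under stated assumptions rather than the authors' implementation: it assumes the model A input statistics μ = τKJ[νX + (1 − gγ)ν] and σ² = τKJ²[gX νX + (1 + γg²)ν] (derived from the parametrization above), the standard Ricciardi form of Eq (3), and an inhibition-dominated network with νX > 0. All function names, the integration scheme, and the tolerances are hypothetical choices.

```python
import math

# Single-neuron and network parameters quoted in the text (mV, seconds)
THETA, V_R, TAU, TAU_RP, GAMMA = 20.0, 10.0, 0.02, 0.002, 0.25
SQRT_PI = math.sqrt(math.pi)

def integrand(u):
    """exp(u^2) * (1 + erf(u)), kept finite for very negative u."""
    if u < -10.0:
        return -1.0 / (SQRT_PI * u) * (1.0 - 0.5 / u**2 + 0.75 / u**4)
    if u > 26.0:
        return math.inf  # exp(u^2) would overflow; the rate is then ~0
    return math.exp(u * u) * math.erfc(-u)

def lif_rate(mu, sigma, n=400):
    """Eq (3) evaluated with Simpson's rule (n even)."""
    u_min, u_max = (V_R - mu) / sigma, (THETA - mu) / sigma
    h = (u_max - u_min) / n
    total = integrand(u_min) + integrand(u_max)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * integrand(u_min + i * h)
    return 1.0 / (TAU_RP + TAU * SQRT_PI * total * h / 3.0)

def model_a_input(nu, nu_x, K, J, g, g_x):
    """Mean and noise of the input in model A (assumed form of Eq (7))."""
    mu = TAU * K * J * (nu_x + (1.0 - g * GAMMA) * nu)
    sigma = J * math.sqrt(TAU * K * (g_x * nu_x + (1.0 + GAMMA * g**2) * nu))
    return mu, sigma

def model_a_rate(nu_x, K=1000, J=0.2, g=5.0, g_x=1.0, tol=1e-6):
    """Self-consistent rate (Hz) by bisection on [0, 1/tau_rp]."""
    lo, hi = 0.0, 1.0 / TAU_RP
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        mu, sigma = model_a_input(mid, nu_x, K, J, g, g_x)
        if lif_rate(mu, sigma) > mid:
            lo = mid   # predicted rate above assumed rate: root is higher
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Network transfer function sampled at a few external rates (Hz)
transfer = [(nu_x, model_a_rate(nu_x)) for nu_x in (20.0, 40.0, 60.0)]
```

Bisection is used here because the predicted rate always lies below 1/τrp, so the residual changes sign on the interval; it returns one fixed point and does not by itself establish uniqueness.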

Rate models

In the last part of the results section, we analyze which of the nonlinearities observed in spiking networks emerge in rate models. We study this question using two specific rate models:

- Ricciardi rate model: It is defined here using the single neuron transfer function of Eq (3), assuming the noise amplitude is fixed. The f − μ curve of the A population is then defined as

  $$\nu_A = \left[\tau_{rp} + \tau_A \sqrt{\pi} \int_{(V_r - \mu_A)/\sigma_A}^{(\theta - \mu_A)/\sigma_A} e^{u^2}\left(1 + \mathrm{erf}(u)\right) du\right]^{-1}, \tag{9}$$

  where σA is a fixed parameter. Once the f − μ curve has been fixed, rates in the network are computed as above, i.e. a self-consistency condition is imposed which gives the mean input currents μA as a function of the external drive and recurrent interactions.
- Stabilized Supralinear Network (SSN) rate model: In the SSN model, the f − μ curve is given by a power-law

  $$\nu_A = k\left[\mu_A\right]_+^{n}. \tag{10}$$

  Rates are computed as for the Ricciardi rate model.
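As a rough illustration of how rates are obtained in a power-law rate model, the sketch below solves the self-consistency condition for a single population, assuming an f − μ curve of the form ν = k[μ]₊ⁿ and a model-A-like mean input μ = w[νX − (gγ − 1)ν]. The values of k, n and w are hypothetical and chosen only for illustration; they are not taken from the paper.

```python
def ssn_rate(nu_x, k=0.01, n=2.0, w=4.0, g=5.0, gamma=0.25, tol=1e-9):
    """Fixed point of nu = k * [mu]_+^n with mu = w * (nu_x - (g*gamma - 1) * nu)."""
    a = g * gamma - 1.0      # net recurrent inhibition, positive when g*gamma > 1
    lo, hi = 0.0, nu_x / a   # the mean input mu is non-negative only on this interval
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        mu = w * (nu_x - a * mid)
        if k * max(mu, 0.0) ** n > mid:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

low, doubled = ssn_rate(0.5), ssn_rate(1.0)
```

With these illustrative parameters, doubling a small input more than doubles the rate, i.e. the response is supralinear at onset. Because the power law is unbounded, this sketch has no refractory saturation.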

Results

Two nonlinear regions in network response at finite coupling

The main goal of this paper is to characterize systematically the network transfer function, which describes how the average E and I firing rates depend on external inputs to the network, and in particular how this transfer function depends on network parameters. This transfer function is well understood in two opposite limits (see Fig 1 for an illustration of these two limits):

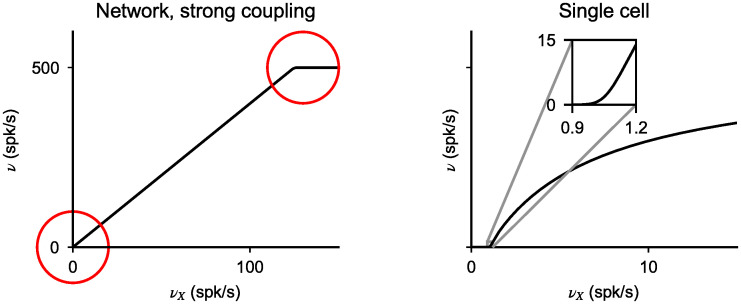

Fig 1. Network transfer function in the strong and weak coupling limits.

Network response in strongly coupled networks (left) features linear regions separated by abrupt transitions (red circles). In the weak coupling limit (right), the network transfer function becomes identical to the single cell transfer function. It is supralinear at low inputs, but then becomes sublinear at high inputs.

In the strong coupling limit, excitatory and inhibitory mean inputs need to ‘balance’ in both E and I neurons, for total inputs to remain finite, leading to a linear relationship between output and input rates [1, 2]. This linear relationship can only hold provided specific inequalities on network parameters are satisfied (see below). Furthermore, this linear relationship only holds in a limited range of inputs, since output firing rates must be bounded between zero and a maximal value imposed by the refractory period. Therefore, the network transfer function is piecewise linear, as shown in Fig 1A.

In the opposite weak coupling limit, the recurrent inputs become negligible compared to external inputs. Therefore, the network transfer function becomes in this limit identical to the single neuron transfer function, Eq (3). In the presence of fluctuations in external inputs, this transfer function is expected to be generically sigmoidal (see Fig 1B), with a supralinear region at low rates, in the so-called subthreshold, or fluctuation-driven regime, while it is expected to become sublinear at higher rates, because of the refractory period (e.g. [35]).

Here, and in the following (except when specified otherwise), we adopt a simple definition of coupling strength as the product between the number of projections per neuron K and the synaptic efficacy J. Other potential definitions include the slope of the neuronal f-μ curve, as it can change the sensitivity of neurons to their presynaptic inputs. We discuss this feature in more detail in the Supporting Information (see S3 Text).

Intuitively, we expect that varying coupling strength should lead to an interpolation between the two extremes shown in Fig 1. In particular, we expect two non-linear regions, one at low rates, one at high rates (shown as red circles in Fig 1A), whose size should decrease as coupling strength increases, separated by a linear region, whose size should increase as coupling strength increases. Furthermore, we would expect naively that the low rate region is supralinear, while the high rate region is sublinear. In the following, we show that these naive expectations are not necessarily true, since other behaviors are possible.

To get more insight into what controls response nonlinearities, we need to analyze the equations that give the average population firing rates as a function of network parameters, Eqs (3)–(5). Here, we start by considering model A, in which E and I neurons have identical parameters, leading to a single equation describing the firing rates of both populations. The solutions of this equation depend on the single neuron transfer function, Eq (3). In particular, Eq (3) tells us that the mean firing rate is given as a function of two variables, umax = (θ − μ)/σ and umin = (Vr − μ)/σ, that describe the distance of the mean inputs to neurons from threshold and reset, respectively, in units of input noise σ.

The high input/high rate regime

When the mean inputs are far above threshold (umax ≪ − 1), neural firing is dominated by deterministic drift in the neuron membrane potential, and noise has only a weak effect on firing. In this regime, we use the first order term in the expansion

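$$e^{u^2}\left(1 + \mathrm{erf}(u)\right) \simeq -\frac{1}{\sqrt{\pi}\,u}\left(1 - \frac{1}{2u^2} + \dots\right), \tag{11}$$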

valid for large and negative u, to obtain a simplified expression of Eq (3),

$$\frac{1}{\nu} = \tau_{rp} + \tau \log\left(\frac{\mu - V_r}{\mu - \theta}\right). \tag{12}$$

Eq (12) is the transfer function of a single neuron receiving deterministic input, as expected from the fact that the mean input is far above threshold in units of the input noise. Higher order terms in expansion (11) provide corrections due to membrane fluctuations. Eq (12) shows that the relation between ν and νX in this regime is nonlinear. With the additional assumption |(umax − umin)/umax| ≪ 1 (which is equivalent to θ − Vr ≪ μ − θ, a condition satisfied for μ sufficiently above threshold θ), we can expand Eq (12) and obtain a direct relation between ν and νX

$$\epsilon\,\nu = \left(1 - \tau_{rp}\,\nu\right)\left[\nu_X - \nu_{th} - (g\gamma - 1)\,\nu\right], \tag{13}$$

where ϵ = (θ − Vr)/(KJ) is the fraction of recurrent excitatory inputs that need to fire simultaneously to drive the membrane potential from reset to threshold, and νth = θ/(KJτ) is the external input rate needed to generate spikes in the absence of noise. Eq (13) shows explicitly that the nonlinearity in this regime is generated by the presence of the refractory period τrp, while the parameter ϵ controls the deviation from the linear prediction.
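The high-rate approximation can be solved in closed form, which makes the role of ϵ easy to explore. The sketch below assumes Eq (13) takes the form ϵν = (1 − τrp ν)[νX − νth − (gγ − 1)ν] with ϵ = (θ − Vr)/(KJ) and νth = θ/(KJτ); this form is consistent with the ϵ values quoted in the text for K = 1,000 and K = 100,000, but the function names and the choice of root are illustrative assumptions.

```python
import math

def epsilon(K, J, theta=20.0, v_r=10.0):
    """Fraction of the K recurrent inputs needed to go from reset to threshold."""
    return (theta - v_r) / (K * J)

def rate_eq13(nu_x, K, J=0.2, g=5.0, gamma=0.25, tau=0.02, tau_rp=0.002,
              theta=20.0, v_r=10.0):
    """Admissible root (0 <= nu <= 1/tau_rp) of the assumed quadratic form of Eq (13)."""
    eps = epsilon(K, J, theta, v_r)
    a = g * gamma - 1.0                  # assumes inhibition dominance, a > 0
    d = nu_x - theta / (K * J * tau)     # drive above the noiseless threshold nu_th
    if d <= 0.0:
        return 0.0
    b = a + tau_rp * d + eps
    disc = b * b - 4.0 * a * tau_rp * d  # non-negative whenever a > 0 and d > 0
    return 2.0 * d / (b + math.sqrt(disc))  # smaller root, written in a stable form

def balanced_rate(nu_x, K, J=0.2, g=5.0, gamma=0.25, tau=0.02, theta=20.0):
    """Linear strong-coupling prediction (epsilon -> 0, below saturation)."""
    return (nu_x - theta / (K * J * tau)) / (g * gamma - 1.0)

gap_small_K = 1.0 - rate_eq13(40.0, 1000) / balanced_rate(40.0, 1000)
gap_large_K = 1.0 - rate_eq13(40.0, 100000) / balanced_rate(40.0, 100000)
```

The relative gap between the finite-coupling rate and the linear prediction shrinks as K grows, which is the behavior described in the text for the saturation nonlinearity.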

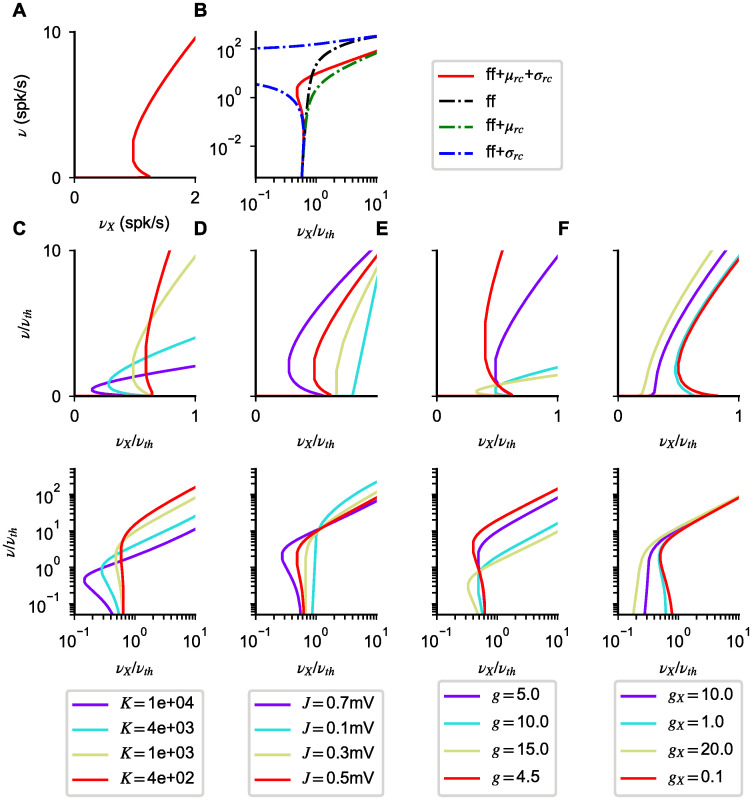

In Fig 2 first row, we compare the prediction of Eq (13) with the numerically computed solutions of Eqs (3)–(5) for various values of K. Numerical solutions of the mean-field equations show that our approximation gives a good description of the transfer function, capturing the nonlinearity observed close to saturation. As expected from Eq (13), the width of the non-linear region close to saturation expands as ϵ increases. For instance, for K = 1,000, we have ϵ = 0.05, and the deviations from linear behavior are already significant when the firing rate is less than half its maximal value. On the other extreme, for the unrealistically high value K = 100,000, ϵ = 0.0005 and the non-linear region becomes extremely small.

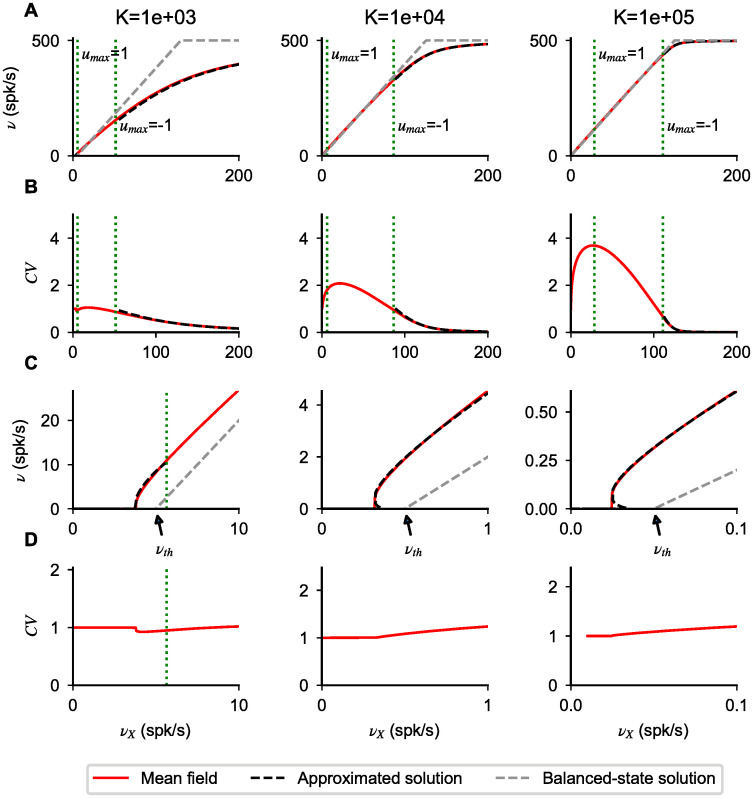

Fig 2. Types of nonlinearities in network response.

(A) Network transfer function obtained solving numerically Eqs (3)–(5) for different values of K (indicated on top of each column). (B) CV of interspike interval distribution obtained solving numerically Eq (6) (red lines). (C,D) Plots as in A, B but zoomed in the region of response onset. In all panels, dotted green lines correspond to the values at which umax = 1 and umax = − 1, i.e. they indicate the separation between the different operating regimes mentioned in the main text. Firing nonlinearities at saturation and response onset are captured by approximated forms (black dashed lines) obtained for umax ≪ − 1 (Eq (13)) and umax ≫ 1 (Eq (15)), respectively. For umax ∼ 0, the transfer function approaches the balanced-state solution (gray dashed lines, Eq (17)); in the region − 1 < umax < 1, the first order corrections are of order 1/√K and become negligible as K increases. In the suprathreshold regime (umax ≪ − 1), the CV approaches zero, i.e. firing becomes regular, with a decay with input strength captured by Eq (14) (dashed line). Parameters: J = 0.2 mV, g = 5.0, gX = 1.

Consistent with the idea that, for umax ≪ − 1, firing is driven by deterministic drift, the CV of the ISI is found to be smaller than one in the high input regime (see Fig 2 second row); with a value that becomes smaller and smaller as νX increases. Using Eq (11), we derive a simplified expression for the CV starting from Eq (6) given by

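$$\mathrm{CV}^2 \simeq \frac{(\nu\tau)^2}{2}\left(\frac{1}{u_{\max}^2} - \frac{1}{u_{\min}^2}\right). \tag{14}$$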

This expression captures the decay of the CV observed in Fig 2, and shows that, as the neural firing rate ν approaches its maximum value, the CV goes to zero as 1 − τrpν.

Low input/low rate regime

For membrane potential far below threshold (umax ≫ 1), firing is driven by large stochastic fluctuations, so that noise can no longer be neglected. In this regime, in the simplified framework of model A, Eq (3) is well approximated by [4]

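$$\nu \simeq \frac{u_{\max}}{\tau\sqrt{\pi}}\, e^{-u_{\max}^2}, \qquad u_{\max} = \frac{\theta - \mu}{\sigma}. \tag{15}$$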

The transfer function obtained solving Eq (15) is nonlinear and provides a good description of the response for small rates. Specifically, the response shows threshold-like nonlinearities, with threshold close to νth; we will characterize this in more detail in the following sections. Consistent with the fact that firing is produced by large fluctuations, the response is highly irregular (CV of order one or above) (see Fig 2 second and fourth row). Note that the CV depends non-monotonically on input rate, as shown in [36].
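The step from Eq (3) to the low-rate form can be sketched as follows. This is a heuristic derivation, assuming Eq (15) is the standard large-umax asymptotic form ν ≃ umax e^{−umax²}/(τ√π); it keeps only the leading term of the integral.

```latex
% For u_max >> 1 the integral in Eq (3) is dominated by its upper limit,
% where 1 + erf(u) is close to 2:
\int_{u_{\min}}^{u_{\max}} e^{u^2}\,\bigl[1+\mathrm{erf}(u)\bigr]\,du
  \;\simeq\; 2\int^{u_{\max}} e^{u^2}\,du
  \;\simeq\; \frac{e^{u_{\max}^2}}{u_{\max}} .
% This term is exponentially large, so \tau_{rp} can be neglected in Eq (3):
\nu \;\simeq\; \frac{u_{\max}}{\tau\sqrt{\pi}}\;e^{-u_{\max}^2},
\qquad u_{\max} = \frac{\theta-\mu}{\sigma} .
```

The exponential dependence on umax² is what produces the threshold-like onset: small changes in the mean input or in the noise amplitude translate into large relative changes in rate.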

Intermediate linear region

Up to now, we have shown that network response is expected to be nonlinear in the regions of rates corresponding to umax ≪ − 1 and umax ≫ 1; we will now show that in the region between these two regimes the response is expected to be approximately linear. For fixed values of νX and ν, we can write umax = ω with ω constant of order one. In this regime, neural firing is driven by input fluctuations if ω > 0, or a combination of deterministic drift and fluctuations if ω < 0. The transfer function can be found iteratively for every value of ν and νX as

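$$\nu = \frac{\nu_X - \nu_{th}}{g\gamma - 1} + \frac{\omega}{g\gamma - 1}\sqrt{\frac{g_X \nu_X + (1 + \gamma g^2)\,\nu}{\tau K}}. \tag{16}$$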

Eq (16) shows that, for ω = 0, the transfer function always matches the balanced-state solution [1, 4]

$$\nu = \frac{\nu_X - \nu_{th}}{g\gamma - 1}. \tag{17}$$

For ω of order one, deviations from this solution are expected to be of order 1/√K. To test this, we plot in Fig 2 first row the balanced-state solution (gray dashed lines): network responses are found to be close to this solution, with a distance that decreases as K increases. The balanced-state model [1] is characterized by a linear transfer function (given by Eq (17) with our notation). Consistent with the fact that this model has been derived in the strong coupling limit, we find that as K increases the network response converges to the balanced-state solution.
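The iterative solution at fixed ω can be sketched as a simple fixed-point iteration. The code assumes that imposing umax = ω in model A gives ν = (νX − νth)/(gγ − 1) + ω√([gX νX + (1 + γg²)ν]/(τK))/(gγ − 1), which follows from μ = θ − ωσ with the model A input statistics; the function name and the number of iterations are illustrative.

```python
import math

def rate_at_omega(nu_x, omega, K, J=0.2, g=5.0, g_x=1.0, gamma=0.25,
                  tau=0.02, theta=20.0, n_iter=200):
    """Rate at which u_max = omega, found by fixed-point iteration.

    Returns (nu, nu_balanced). Assumes g*gamma > 1 and |omega| of order one.
    """
    a = g * gamma - 1.0
    nu_bal = (nu_x - theta / (K * J * tau)) / a   # omega = 0: balanced-state solution
    nu = nu_bal
    for _ in range(n_iter):
        noise = math.sqrt((g_x * nu_x + (1.0 + gamma * g**2) * nu) / (tau * K))
        nu = nu_bal + omega * noise / a
    return nu, nu_bal

def relative_deviation(nu_x, omega, K):
    nu, nu_bal = rate_at_omega(nu_x, omega, K)
    return abs(nu - nu_bal) / nu_bal

dev_small_K = relative_deviation(30.0, 1.0, 1000)
dev_large_K = relative_deviation(30.0, 1.0, 100000)
```

For ω = 0 the iteration returns the balanced-state rate, and for ω of order one the relative deviation drops by roughly a factor of ten when K increases a hundredfold, in line with the 1/√K scaling.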

The above argument can be extended to the more general case of model B. Using the approximations derived above, we can deduce that nonlinearities in the network response will appear any time that one or both populations have mean inputs far below or far above threshold. Moreover, an approximately linear solution appears when both mean inputs are close to threshold, with a scaling close to the one predicted by the balanced-state model up to corrections of order 1/√K. In the following sections we systematically classify these nonlinearities as a function of the network parameters.

Saturation nonlinearities

In this section, we use a perturbative approach to characterize saturation nonlinearities, first in model A and then in model B. We find that a key role is played by linear solutions obtained in the strong coupling limit, i.e. balanced solutions, which serve as a starting point for the perturbative expansion, and by the coupling strength, which determines the amplitude of the deviation from linear response.

Model A

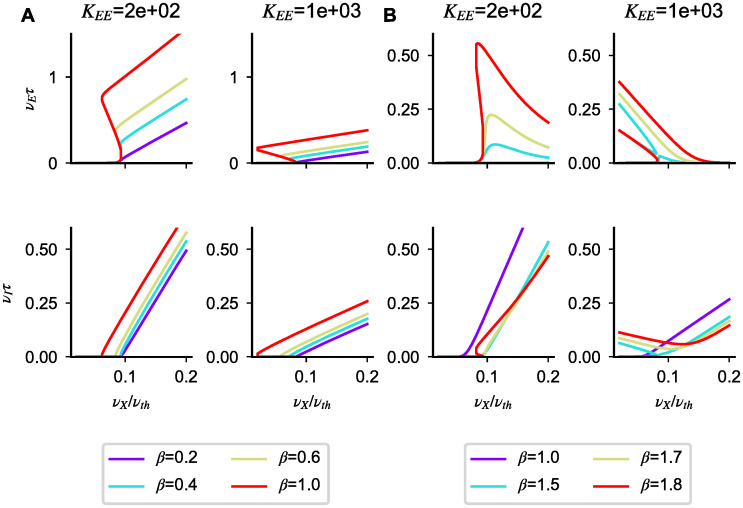

We start our analysis of saturation nonlinearities by computing numerically responses in the mean field theory, i.e. solving Eqs (3)–(5), for different parameter values. The network response, for mean input μ above threshold, depends on g, K, and J; it depends only weakly on gX since, in this regime, responses depend weakly on noise amplitude. Results of the numerical analysis are shown in Fig 3. Note that in this figure input firing rates are rescaled so that the balanced limit is the same regardless of the parameters. The transfer function shows a sublinear scaling as the network rate approaches saturation (Fig 3A–3C), but nonlinear effects are already visible at low rates, especially for small K; the CV decreases monotonically for sufficiently large inputs (Fig 3D–3F). To understand the generality of these results, we turn to the approximated expressions of Eqs (13) and (14).

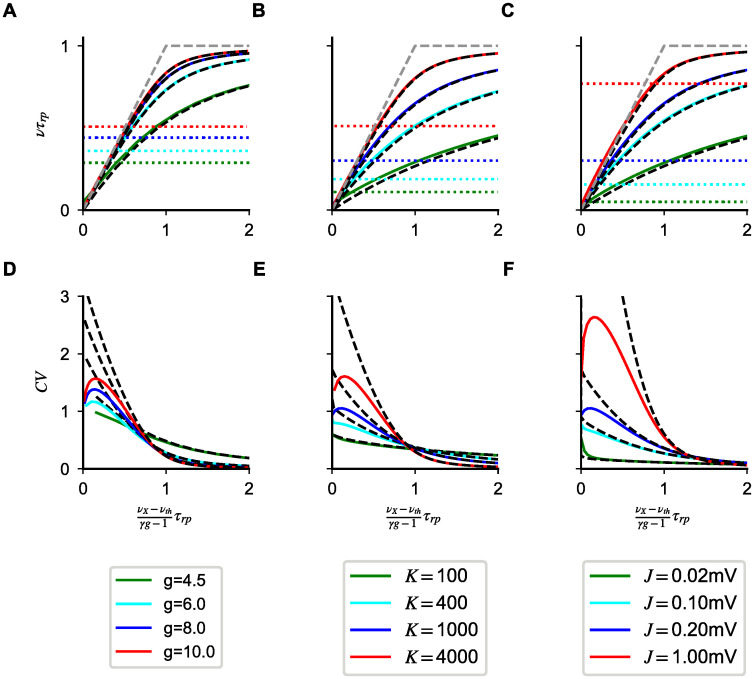

Fig 3. Saturation nonlinearities in Model A.

Transfer function (first row) and CV (second row) computed numerically from Eqs (3)–(5) and (6) (continuous lines) for different g (first column), K (second column) and J (third column). As in Fig 2, colored dotted lines in the first row represent values of the rates at which umax = − 1. Black dashed lines are solutions of the approximated Eqs (13) and (14). This validation of the approximated rate equation motivates the perturbative approach used in the main text and allows us to classify nonlinearities in a general way. Simulation parameters: J = 0.2 mV, K = 10³ in (A,D); g = 5.0, J = 0.1 mV in (B,E); g = 5.0, K = 10³ in (C,F); in all plots gX = 1, τ = 20 ms.

As shown in Fig 3A–3C, solutions of Eq (13) capture the observed nonlinearities across input strength and parameter values. Surprisingly, they provide a good approximation of the network transfer function also for |umax| ≈ 1, i.e. beyond the range of validity of Eq (13). These results suggest that Eq (13) can be used to understand nonlinearities in the whole range of activity levels above response onset.

To classify possible nonlinear responses, we solve Eq (13) using a perturbative expansion in ϵ,

$$\nu = \nu_0 + \epsilon\,\nu_1 + O(\epsilon^2). \tag{18}$$

The zeroth order solutions are found taking ϵ = 0 (strong coupling limit) in Eq (13); there are two such solutions:

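$$\nu_0 = \frac{\nu_X - \nu_{th}}{g\gamma - 1} \qquad \text{and} \qquad \nu_0 = \frac{1}{\tau_{rp}}. \tag{19}$$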

The first solution corresponds to the balanced-state solution of Eq (17) while the second solution corresponds to saturated activity, with firing at maximum rate.

Using the balanced-state solution in the ϵ expansion, we get

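$$\nu = \nu_0\left[1 - \frac{\epsilon}{(g\gamma - 1)\left(1 - \tau_{rp}\,\nu_0\right)}\right] + O(\epsilon^2), \qquad \nu_0 = \frac{\nu_X - \nu_{th}}{g\gamma - 1}. \tag{20}$$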

In the inhibition dominated regime (gγ > 1), which is thought to underlie cortical dynamics [4], the first order correction is always negative and its absolute value becomes larger as the rate increases; this shows that the output rate increases sublinearly with input rate, regardless of the choice of parameters. Eq (20) also shows that the deviations from linear response increase with ϵ. At finite coupling (ϵ > 0), the first order correction is linear if τrpν0 ≪ 1; nonlinear corrections become large when ϵ ≈ (γg − 1)(1 − τrp ν0).
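The accuracy of the first-order expansion can be checked against the exact admissible root of the quadratic. The sketch below assumes Eq (13) has the form ϵν = (1 − τrp ν)[d − aν], with d = νX − νth and a = gγ − 1, and that the first-order balanced branch is ν ≃ ν0[1 − ϵ/(a(1 − τrp ν0))] with ν0 = d/a; the parametrization by d and ϵ and the function names are illustrative.

```python
import math

def exact_root(d, eps, a=0.25, tau_rp=0.002):
    """Admissible root of eps*nu = (1 - tau_rp*nu) * (d - a*nu), for a > 0 and d > 0."""
    b = a + tau_rp * d + eps
    return 2.0 * d / (b + math.sqrt(b * b - 4.0 * a * tau_rp * d))

def first_order(d, eps, a=0.25, tau_rp=0.002):
    """Balanced solution plus its first-order correction in eps."""
    nu0 = d / a
    return nu0 * (1.0 - eps / (a * (1.0 - tau_rp * nu0)))

d = 35.0  # Hz, drive above threshold; the balanced rate is d / a = 140 Hz
err_big = abs(exact_root(d, 0.05) - first_order(d, 0.05))
err_small = abs(exact_root(d, 0.005) - first_order(d, 0.005))
```

Reducing ϵ tenfold reduces the error by much more than tenfold, as expected for a first-order truncation. Both the exact root and the expansion lie below the balanced rate, reflecting the sublinear approach to saturation.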

Using the saturation solution in the ϵ expansion, we get

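$$\nu = \frac{1}{\tau_{rp}}\left[1 - \frac{\epsilon}{\tau_{rp}\left(\nu_X - \nu_{th}\right) - (g\gamma - 1)}\right] + O(\epsilon^2). \tag{21}$$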

This equation shows that the rate approaches saturation as 1/νX for all connectivity parameters. Note that, in the inhibition dominated regime, corrections with τrp(νX − νth) < (γg − 1) produce rates above saturation, which are not realizable. It follows that the saturation solution appears only when the balanced-solution is larger than 1/τrp, i.e. the two solutions found at ϵ = 0 are mutually exclusive at finite coupling; this will not be true in model B.

As in the previous section, the approximate CV expression of Eq (14) captures the decay as activity approaches saturation, ensuring that the suppression of irregular firing is expected for all parameter values. However, unlike what happens for the rates, the approximated expression departs from the mean field value as soon as umax ≈ − 1 (see Fig 3 second row).

Model B

In this section, we characterize saturation nonlinearities in model B. Using the perturbative method introduced for model A, we show that the network transfer function, at a fixed external drive, has in some cases multiple solutions. In cases in which there is a unique rate value, depending on the connectivity matrix and coupling strength, the response can be a sub-linear, linear or supra-linear function of the input.

As discussed in the methods section, model B features one excitatory and one inhibitory population with rates νE,I and external drive νEX, νIX = αE,I νX. The transfer function of the network is obtained solving Eqs (3)–(5) which, in the limit in which the inputs to inhibitory neurons are much larger than threshold, is approximated by

| (22) |

where ϵ is the fraction of recurrent excitatory inputs that need to fire simultaneously to drive the membrane potential of an excitatory neuron from reset to threshold, β is a parameter measuring the ratio of total recurrent excitatory synaptic strength onto excitatory and inhibitory neurons, and νth,A (A = E, I) is the external firing rate needed to bring population A to firing threshold, in the absence of recurrent inputs and input fluctuations.

Admissible solutions of Eq (22), i.e. with rates in the range [0, 1/τrp], provide a good approximation of the network transfer function. The advantage of using Eq (22) is that it is polynomial in the rates and can be easily solved with an ϵ expansion of the form

| (23) |

Moreover, as discussed above, solutions describe, up to corrections of order 1/√K, the network response for |umax| ∼ 1.

We now investigate the structure of the network transfer function using the ϵ expansion. To simplify expressions, in what follows we omit contributions coming from νth,A. For ϵ = 0, there are two solutions of Eq (22) in which neither population is saturated. The first of these solutions, which will be called s1 or regular, has the first two terms of the expansion given by

| (24) |

This solution corresponds to the classic ‘balanced’ solution [1, 2]. In the strong coupling limit, regular solutions feature excitatory and inhibitory rates increasing linearly with input strength. For finite coupling, the network transfer function deviates from this linear scaling. In particular, the response of one population is supralinear (sublinear) if the first order correction is positive (negative). For instance, the excitatory rate is a supralinear function of inputs if

| (25) |

sublinear scaling appears if the above inequality is not satisfied. Analogous conditions hold for the inhibitory population. In agreement with this result, we find numerically that solutions of Eqs (3)–(5) which approach s1 solutions for ϵ = 0 transition from sublinear to supralinear as β increases (Fig 4A).

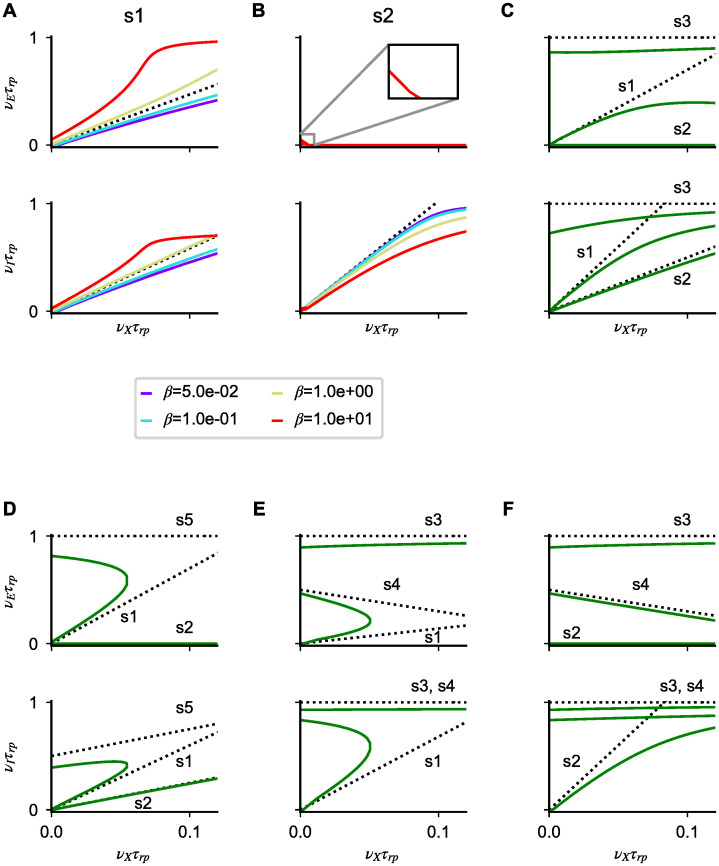

Fig 4. Saturation nonlinearities in model B.

(A-F) Numerical solutions of Eqs (3)–(5) for different parameters (colored lines) and linear approximations predicted by Eqs (24), (26) and (27) in the strong coupling limit (dotted lines). In each panel, the first (second) row shows the excitatory (inhibitory) firing rate as a function of νX. (A,B) Nonlinear solutions obtained at finite coupling starting from solution s1 and s2 for different β values. (C-F) All admissible cases of coexistence of multiple solutions at low νX; note that, as expected from our analysis, the number of solutions changes as νX increases. Simulation parameters are: (A) gI = 3.9, gE = 8, αI = 1, αE = 7; (B) gI = 3.9, gE = 8, αI = 10, αE = 7; (C) gI = 4, gE = 3, αI = 5, αE = 2; (D) gI = 8, gE = 6, αI = 5, αE = 2; (E) gI = 1, gE = 2, αI = 0.3, αE = 2; (F) gI = 1, gE = 2, αI = 3, αE = 2. In panels (A-F), gEX = gIX = 1; JEE = JIE = 0.2 mV and KEE = KIE = 10³; except in A and B where , .

The second unsaturated solution of Eq (22) obtained for ϵ = 0 will be called s2 or supersaturated and is given by

| (26) |

In the strong coupling limit, the inhibitory rate increases linearly with the external drive while the excitatory population remains silent due to overwhelming inhibition. Applying the same approach as the one used for s1 solutions, we find that s2 solutions at finite coupling admit only sublinear scaling (Fig 4B).
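The zeroth-order (strong coupling) form of the two unsaturated solutions can be obtained directly from the model B mean inputs, without the first-order terms. The sketch below is a simplified reading, not the paper's expressions: it assumes that at ϵ = 0, with νth,A omitted, the mean input αA νX + νE − gA γ νI vanishes in every active population and is negative in a silent one. It ignores saturated solutions and finite-coupling corrections, and the function name is hypothetical.

```python
def unsaturated_candidates(alpha_e, alpha_i, g_e, g_i, gamma=0.25):
    """Zeroth-order slopes (nu_E/nu_X, nu_I/nu_X) of admissible unsaturated solutions.

    Requires g_e != g_i. Returns a dict with keys among "s1" and "s2".
    """
    found = {}
    # s1 (regular): both mean inputs vanish, giving a 2x2 linear system
    slope_e = (alpha_e * g_i - alpha_i * g_e) / (g_e - g_i)
    slope_i = (alpha_e - alpha_i) / (gamma * (g_e - g_i))
    if slope_e > 0.0 and slope_i > 0.0:
        found["s1"] = (slope_e, slope_i)
    # s2 (supersaturated): E silent, I balances its external drive on its own
    slope_i_alone = alpha_i / (g_i * gamma)
    if alpha_e - g_e * gamma * slope_i_alone < 0.0:  # net input to E is inhibitory
        found["s2"] = (0.0, slope_i_alone)
    return found

# Parameter sets quoted in the caption of Fig 4 for panels A and B
panel_a = unsaturated_candidates(alpha_e=7.0, alpha_i=1.0, g_e=8.0, g_i=3.9)
panel_b = unsaturated_candidates(alpha_e=7.0, alpha_i=10.0, g_e=8.0, g_i=3.9)
```

With the parameters quoted for Fig 4A the sketch returns only a regular candidate, and with those of Fig 4B only a supersaturated one, which matches the solution types named in the caption for these panels.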

Up to now, we have assumed that only one of the two solution types appears for any given value of νX. In what follows we discuss under what conditions the assumption is valid and what happens if these conditions are not met. In this analysis, a key role is played by saturated solutions of Eq (22) which are of three types

| (27) |

In the strong coupling limit, in s3 solutions, both populations are saturated; in s4 and s5 solutions, only one population is saturated while the other changes linearly with inputs.

To analyze solution admissibility, for any given value of νX, we compute the rates predicted by the ϵ expansion up to first order and investigate for what parameters each solution is within the [0, 1/τrp] range. Conditions obtained for general values of νX are given in the Supporting Information (S1 Text). For simplicity, here we analyze results obtained for νX = 0; because of response continuity, these results are valid also in a range of sufficiently small external inputs. In this range of inputs, we find that there are seven possible scenarios: three with a single admissible solution (s1, s2 and s3), and four with three admissible solutions ({s1, s2, s3}, {s1, s2, s5}, {s1, s3, s4}, {s2, s3, s4}). When there are three solutions, we expect at least one of these solutions to be unstable; we will come back to this point during the analysis of network simulations and in the discussion.

We find that s1 solutions are the only admissible ones if

| (28) |

analogously, s2 solutions are the only ones that appear if

| (29) |

Note that the conditions in Eq (29) are analogous to those for supersaturating solutions in the SSN [24]. The first two inequalities ensure suppression of the excitatory rate with increasing input strength; reabsorbing JEA into αA, they correspond to the conditions ΩE < 0 and det J > 0 found in [24]. The last two inequalities prevent the existence of saturated solutions. Because of the presence of the refractory period in our model, the mathematical expressions differ from those of the SSN, the analogous condition in [24] being . Any time Eqs (28) or (29) are violated, multiple solutions appear. All the above-mentioned combinations of coexisting multiple solutions for νX ∼ 0 are shown explicitly in Fig 4C–4F. Note that the admissibility conditions derived in the SI depend on the value of νX. Therefore, as νX increases, the number and the identity of solutions are expected to change; examples of this phenomenon are given in Fig 4, where s1-s5 (Fig 4D) or s1-s4 (Fig 4E) solutions merge for νXτrp ∼ 0.05, leaving only one admissible solution for larger inputs. Using the relations derived in the SI, we also derive relations between solutions that must be satisfied for arbitrary values of νX. First, we find that solutions s3 and s5 are mutually exclusive and can never appear at the same time. Second, any time that solutions s1 and s2 are admissible at the same time, s4 solutions are not admissible. These two results imply that, for any value of νX, at most three saturation-generated solutions can coexist. Finally, for large νX, only s3 solutions are admissible, i.e. as the input intensity increases, both populations eventually saturate.

To summarize, we have shown that in model B at finite coupling, the network response can have one or multiple solutions. We have provided a general framework which, given the network parameters, predicts the expected number of solutions and their nonlinearities at finite coupling.

Response-onset nonlinearities

In this Section, we characterize nonlinearities generated at response onset, first in model A and then in model B. In both models we show that, at response onset, the firing rate can have one or multiple solutions while, at larger inputs, the response approaches the balanced solutions described in the previous section.

Model A

To understand the possible nonlinearities appearing at response onset in model A, we rely on Eq (15), whose solution provides a good approximation of the network response for umax ≫ 1. In this regime, we were not able to find a useful perturbative expansion and hence we use a different approach, analyzing how the response evolves as a function of input strength νX.

For small enough νX, effects of recurrent interactions in the network are negligible, feedforward inputs dominate over recurrent inputs, and the transfer function is given by

| (30) |

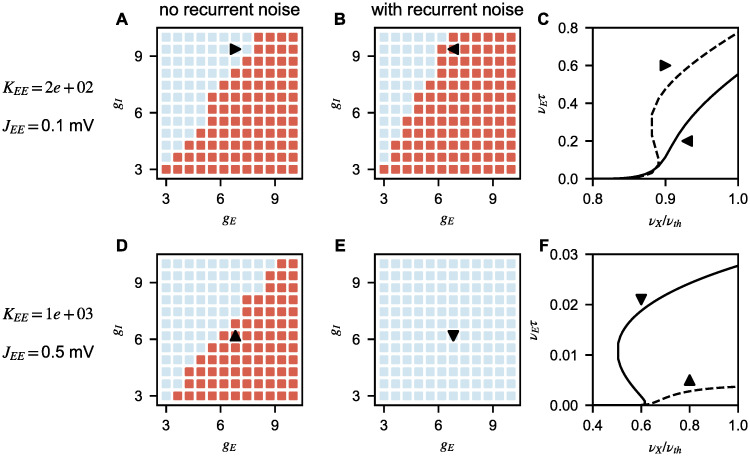

As shown in Fig 5A, Eq (30) captures the response for small rates: response rises exponentially as νX approaches νth.

Fig 5. Response-onset nonlinearities in model A.

(A) Network response computed from Eqs (3)–(5) (red). (B) As in panel A but in log-log scale to highlight the behavior at low rate. Response at low rates is determined only by feedforward inputs (black, Eq (30)); effects of recurrent noise (blue) and mean (green) become relevant at higher rates, with opposite effects on the number of solutions. (C-F) Effects of different parameters on the number of solutions in network response; responses are shown in linear (top) and log-log (bottom) scale. Simulation parameters, unless otherwise specified in legend, are K = 10^3, J = 0.5 mV, g = 5.0, gX = 1, τ = 20 ms.

As νX increases, the network rate increases and recurrent inputs become relevant. In particular, recurrent inputs impact the network response as soon as their contributions to the current mean or noise are of the same order as those of the feedforward input. Specifically, using Eq (15) we find that this happens as soon as ν approaches the smaller of (νX − νth)/(1 − gγ) and νXgX/(1 + g²γ). At this point, the transfer function is found by solving the implicit Eqs (3)–(5) (or their simplified form, Eq (15)). We find numerically (see Fig 5B–5D) that, up to the point at which the membrane potential is close to threshold (umax ∼ 1), there is either a single solution or three solutions to the equations for the population firing rate. When a unique solution is present, firing increases supralinearly with inputs. For larger input values, the transfer function is determined by the linear response of the balanced-state regime.

As pointed out in [37, 38], multiple solutions emerge because of the positive feedback induced by the variance of the fluctuations in recurrent synaptic inputs and can be understood as follows. Let us consider the fictitious dynamics given by [38]

| (31) |

The fixed points of the above equation give the network response at fixed input, i.e. the network transfer function; when multiple solutions are present, one solution must be unstable. This means there must be a solution for which the linearized dynamics

| (32) |

has λ > 0, with

| (33) |

Since the above equation is valid for umax ≫ 1, multiple solutions can be generated only if the derivative of umax with respect to ν is sufficiently large and negative. This derivative is given by

| (34) |

The above equation shows that, in an inhibition dominated network (gγ > 1), recurrent input mean and fluctuation have opposite effects; while the mean inputs provide negative feedback and hence cannot generate more than one solution, fluctuations provide positive feedback and therefore can potentially lead to multiple solutions.

This is verified in Fig 5A, where we compare the network response computed from Eqs (3)–(5) with and without either recurrent input mean (μrc = ν(1 − gγ)) or noise (); it shows that noise is the key factor in producing multiple solutions.
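The stability argument above can be illustrated numerically. The sketch below assumes fictitious dynamics of the generic form dν/dt = −ν + Φ(ν, νX), which may differ in detail from Eq (31), and replaces the transfer function of Eqs (3)–(5) with a toy sigmoid; the function names and all numerical values are hypothetical. Fixed points are located by bisection, and each is classified through the sign of λ = ∂Φ/∂ν − 1.

```python
import math


def phi(nu, nu_x):
    """Toy sigmoidal transfer function (hypothetical, rates in arbitrary units)."""
    return 1.0 / (1.0 + math.exp(-10.0 * (nu + nu_x - 0.5)))


def fixed_points(nu_x, n_grid=1000, eps=1e-6):
    """Find fixed points of d(nu)/dt = -nu + phi(nu, nu_x) on [0, 1].

    Returns a list of (rate, is_stable) pairs, where stability is decided by
    the sign of lambda = d(phi)/d(nu) - 1 at the fixed point.
    """
    def f(nu):
        return phi(nu, nu_x) - nu

    results = []
    for i in range(n_grid):
        lo, hi = i / n_grid, (i + 1) / n_grid
        if f(lo) * f(hi) >= 0.0:
            continue  # no sign change in this bracket
        for _ in range(60):  # bisection inside the bracket
            mid = 0.5 * (lo + hi)
            if f(lo) * f(mid) <= 0.0:
                hi = mid
            else:
                lo = mid
        nu_star = 0.5 * (lo + hi)
        slope = (phi(nu_star + eps, nu_x) - phi(nu_star - eps, nu_x)) / (2.0 * eps)
        results.append((nu_star, slope - 1.0 < 0.0))
    return results


solutions = fixed_points(nu_x=0.05)
for rate, stable in solutions:
    print(f"nu = {rate:.4f}  stable = {stable}")
```

For this toy transfer function three fixed points coexist, and the intermediate one has λ > 0, in line with the statement that one solution must be unstable when multiple solutions are present.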

We characterize the number of solutions numerically, since the nonlinearities make an analytic approach unfeasible. First we note that, out of the 5 model parameters in Eq (15) (τ, K, gX, J, and g), only 4 are relevant in determining the response structure, since τ fixes the overall scale of the rates. Results of the numerical investigation are shown in Fig 5B–5E. For all parameters, the low rate response rises exponentially up to the point at which recurrent inputs are no longer negligible. After this point, the network shows either supralinear increases or multiple solutions. Increasing J and K (Fig 5B–5C) decreases the response onset point, as expected from the definition of νth, and leads to multiple solutions. Changing g (Fig 5D) has little effect on response onset but affects the slope of the balanced-solution. Finally, increasing gX (Fig 5E) reduces the likelihood of having multiple solutions; this is due to the fact that recurrent input noise becomes negligible compared to the feedforward input noise.

Model B

In this section we analyze the behavior of model B at response onset. As discussed above, at low input rates, responses are determined by feedforward inputs, and the contribution of recurrent interactions is negligible. At larger input rates, when at least one of the two populations has umax of order one, recurrent interactions are relevant, the network response is approximately linear and approaches one of the solutions derived in the previous section (e.g., when only one solution is present, regular or supersaturated). The response region connecting these two regimes is expected to be nonlinear; characterizing possible nonlinearities is the goal of this section. Unlike the case of model A, however, the large number of parameters makes an extensive exploration of possible behaviors unfeasible. Therefore, we focus our investigation on the role of coupling strength, which in model A was found to have a major role in determining the type of response nonlinearity.

Examples of excitatory and inhibitory activity for regular and supersaturated solutions are shown in Fig 6. We find that, in the region of inputs connecting response onset to the balanced solution, rates can have either a unique solution or multiple solutions. When a unique solution is present, the response increases supralinearly in the regular case (Fig 6A) and has a supralinear increase which eventually becomes sublinear in the supersaturated case (Fig 6B). In model B, there are two independent ways in which coupling strength can be modified to affect these responses: uniform change (e.g. increase KEE with KEE = KIE) and relative change (e.g. fix KEE and change KIE).

Fig 6. Onset-nonlinearities in model B.

Responses obtained by numerically solving Eqs (3)–(5) for regular (A) and supersaturated (B) solutions at different coupling strengths and for different β = KEE JEE/KIE JIE. The size of the nonlinear region decreases as the coupling strength increases and as the inhibitory activation threshold decreases. In B, the lack of a purple curve in the first row means that the firing rates are very close to zero in the whole range of inputs. In the plots, response onset emerges around 0.1νth because of the value gEX = gIX = 10 used in simulations. As shown in Fig 5F, this choice enhances the amplitude of the nonlinear region by decreasing the likelihood of having multiple solutions. Other simulation parameters are:, JEE = JIE = 0.1 mV, gI = 3.9, gE = 8. In (A) αI = 1, αE = 7; in (B) αI = 10, αE = 7.

In regular solutions, a uniform increase in coupling strength reduces the region of nonlinear response, promoting a more sudden transition to linear scaling (Fig 6A, first vs second columns). The same modification in supersaturated solutions reduces the peak response of excitatory cells and increases the likelihood of having multiple solutions (Fig 6B, first vs second columns).

The main effect of changing the relative coupling is to move the relative onset point of the two populations, since it controls the ratio of νth,E and . For instance, increasing KEE at fixed KIE makes the excitatory population respond at lower input rates than the inhibitory population. For small modifications, this leads to a larger region of supralinear response (e.g. first column of Fig 6B, light blue vs yellow lines). For larger modifications, it produces multiple solutions, since the excitatory population is unstable on its own (e.g. first column of Fig 6B, red line). Finally, we note that similar effects could be produced by modifying the relative onset point of the two populations through changes in other parameters, such as JEE or JIE.

In model B, there are two independent sources of positive feedback which can generate multiple solutions: excitatory-to-excitatory connectivity [39] and noise. Which of these sources generates the observed multiple responses? We already know that, when network structure reduces model B to model A, i.e. when excitatory and inhibitory populations have the same properties, multiple solutions are generated by noise feedback. Are noise-generated multiple solutions limited to this specific case or are they a more general feature of model B? To answer this question, inspired by model A, we computed numerically the network response with and without recurrent noise, for different values of network parameters; results are shown in Fig 7. At all coupling values, results along the line gE = gI are analogous to model A, as expected. At strong coupling, for a significant fraction of parameter space, the presence of multiple solutions is generated by noise, as they disappear when noise is removed. Interestingly, at weak coupling, noise seems to stabilize activity in a finite fraction of the parameter space; this phenomenon could be due to the larger increase in inhibitory response produced by the positive feedback coming from recurrent noise of the inhibitory population.

Fig 7. Role of recurrent noise in generating multiple solutions at onset in model B.

Number of solutions for different combinations of gE and gI (red and blue correspond to single solution and multiple solutions, respectively) computed without (first column) and with (second column) recurrent noise; the third column shows transfer functions for specific combinations (indicated by triangles in the first two columns). Both for small (first row) and large (second row) K, noise influences the number of solutions. (A-C) For some parameters, noise reduces the size of the region with multiple solutions. (D-F) For others, noise increases the size of the region with multiple solutions. Parameters: JIE = JEE, gIX = gEX = 1, αI = αE = 1.

These results show that, in model B, response onset is nonlinear and that a full characterization of response nonlinearities requires recurrent noise to be included in the model. This represents a qualitative difference with respect to rate models, where recurrent noise is not taken into account. In the next section we show how the results obtained so far compare with rate models.

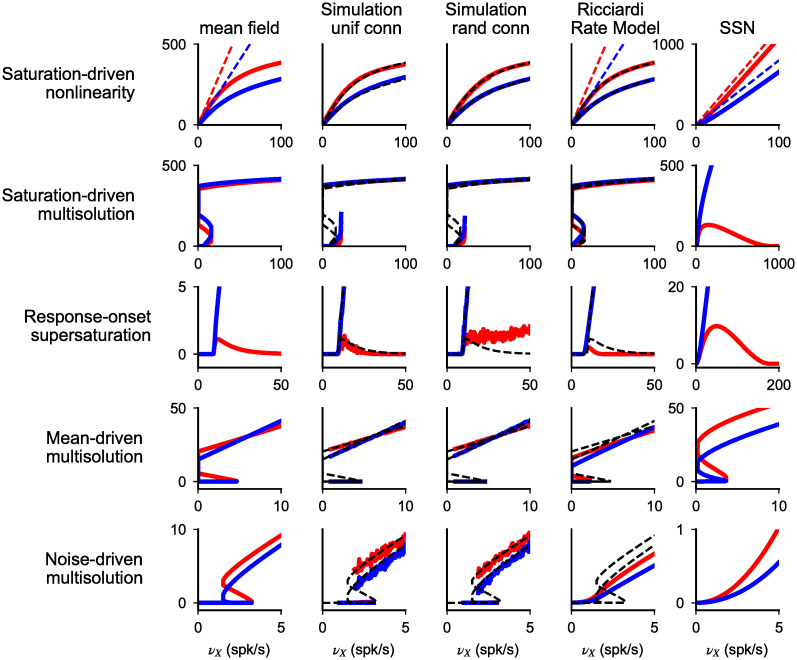

Comparison with network simulations and rate models

Results on response nonlinearities described up to this point have been obtained using a mean field analysis of networks of spiking neurons. In this section, we compare these results to two other approaches widely used in the study of neural networks: network simulations and rate models.

Network simulations

We perform numerical simulations in networks with either uniform or Erdős–Rényi connectivity. In the uniform case, all neurons receive exactly the same numbers of external, recurrent excitatory and recurrent inhibitory connections, i.e. there is a fixed in-degree for all types of connections. In the Erdős–Rényi (ER) case, the adjacency matrix specifying the existence of a synapse from any presynaptic to any (distinct) postsynaptic neuron is composed of i.i.d. Bernoulli variables, with connection probability given by the ratio between the mean number of connections and the number of neurons in the presynaptic population. This leads to fluctuations of in-degrees across neurons which generate, in the balanced limit, a wide distribution of firing rates [1–3, 5]. The mean-field theory we have used so far assumes a fixed in-degree. Simulations of networks with uniform connectivity are useful to check that MF results are accurate, in spite of all the involved approximations (see Materials and Methods); simulations of ER networks allow us to assess the robustness of our results to heterogeneities.
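The two connectivity rules can be made concrete with a short sketch. It uses only the Python standard library and is independent of the BRIAN2 code used for the actual simulations; the function names, the seed and the small value of K are hypothetical choices made so that the example runs quickly.

```python
import random


def fixed_in_degree(n_post, n_pre, k, rng, same_population=False):
    """Uniform case: every postsynaptic neuron receives exactly k inputs."""
    inputs = []
    for post in range(n_post):
        pool = [pre for pre in range(n_pre)
                if not (same_population and pre == post)]
        inputs.append(rng.sample(pool, k))
    return inputs


def erdos_renyi(n_post, n_pre, k_mean, rng, same_population=False):
    """ER case: each synapse exists independently with probability k_mean / n_pre."""
    p = k_mean / n_pre
    inputs = []
    for post in range(n_post):
        inputs.append([pre for pre in range(n_pre)
                       if not (same_population and pre == post)
                       and rng.random() < p])
    return inputs


rng = random.Random(0)  # fixed seed, for reproducibility only
k = 20                  # hypothetical mean in-degree, small for a quick run
n = 11 * k              # population size N = 11 K, as in the simulations

uniform = fixed_in_degree(n, n, k, rng, same_population=True)
er = erdos_renyi(n, n, k, rng, same_population=True)

uniform_degrees = [len(row) for row in uniform]
er_degrees = [len(row) for row in er]
print("uniform in-degrees:", min(uniform_degrees), "to", max(uniform_degrees))
print("ER in-degrees:     ", min(er_degrees), "to", max(er_degrees))
```

In the uniform case all in-degrees equal K, whereas in the ER case they fluctuate around K from neuron to neuron; this is the heterogeneity whose effect on the response is assessed in the simulations.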

Network simulations were performed with the simulator BRIAN2 [40] using networks of NE = 11KEE excitatory and NI = 11KEI inhibitory neurons, receiving excitatory inputs from ensembles of NEX,IX = 11KEX,IX independent Poisson units firing at rates νEX,IX, respectively. We used uniformly distributed delays of excitatory and inhibitory synapses. Delays were drawn randomly and independently at each existing synapse from uniform distributions in the range [0, 100]ms (E synapses) and [0, 1]ms (I synapses) [38]. These extreme values ensure no synchronized oscillations are present (see Discussion). For fixed network parameters, rates were computed starting from a given initial condition and gradually increasing the external drive. We explored different initial conditions to check for multiple solutions. Stationary responses obtained for different representative parameter sets are shown in Fig 8. For connectivity parameters leading to saturation nonlinearity, results of the network simulations closely match predictions of the mean field theory. In the case in which multiple solutions are expected from the mean field theory, either generated at saturation or at response-onset, simulations capture the upper and lower branches of the response; the absence of the middle branch suggests that it corresponds to unstable fixed points of the dynamics. Simulations obtained for parameters generating supersaturation in the mean field model are found to depend on the connectivity structure. For uniform connectivity, simulations follow the same trend as the mean field prediction, with the inhibitory rate increasing monotonically while the excitatory rate shows an increase with inputs at low intensity and suppression at larger inputs. We note that, although this mean response follows the trend of the mean field prediction, the network showed oscillatory activity in the region of maximum excitatory response despite the broad distribution of synaptic delays. 
In the case of random connectivity, on the other hand, we found that the suppression of excitatory response is not present. This lack of supersaturation at the population level is generated by a heterogeneity in the responses of excitatory cells, with 66% of cells which are silent while the remaining 33% show a weak increase in rate with the external drive. Despite this difference, a common feature of supersaturating solutions in networks of spiking neurons, observed both in the mean field theory and in simulations, is that the peak response of excitatory cells is small. The intuitive reason why firing rates of excitatory neurons are generically small in this scenario is that rates go to zero in the strong coupling limit.
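The protocol used to detect multiple solutions (changing the external drive gradually and trying different initial conditions) can be illustrated on a toy rate equation. The sketch below is not a spiking-network simulation: the sigmoidal transfer function, the dynamics dν/dt = −ν + Φ(ν, drive) and all numerical values are hypothetical and serve only to show how the upper and lower branches are recovered while the middle one is never reached.

```python
import math


def phi(nu, drive):
    """Toy sigmoidal transfer function (hypothetical, not the LIF one)."""
    return 1.0 / (1.0 + math.exp(-10.0 * (nu + drive - 0.5)))


def relax(nu, drive, dt=0.1, n_steps=2000):
    """Integrate d(nu)/dt = -nu + phi(nu, drive) with forward Euler."""
    for _ in range(n_steps):
        nu += dt * (-nu + phi(nu, drive))
    return nu


def sweep(drives, nu_init):
    """Follow the stationary rate while the drive is changed step by step."""
    rates = []
    nu = nu_init
    for drive in drives:
        nu = relax(nu, drive)  # start from the previous stationary state
        rates.append(nu)
    return rates


drives = [-0.4 + 0.02 * i for i in range(41)]  # increasing drive
up = sweep(drives, nu_init=0.0)                # start from low activity
down = sweep(drives[::-1], nu_init=1.0)[::-1]  # start from high activity

# Drives at which the two sweeps disagree reveal coexisting stable branches.
bistable = [d for d, a, b in zip(drives, up, down) if abs(a - b) > 0.1]
print(f"two branches for drive in [{bistable[0]:.2f}, {bistable[-1]:.2f}]")
```

The two sweeps coincide outside the region of multiple solutions and separate inside it, each following one stable branch; the unstable intermediate branch is never visited, which is the signature observed in the network simulations.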

Fig 8. Spiking-networks nonlinearities in network simulations and rate models.

Comparison of responses of excitatory (red) and inhibitory (blue) neurons computed with: mean field theory of spiking networks (colored lines in first column and black dashed lines in second to fourth columns); network simulation with uniform (second column) and random (third column) connectivity; rate models (Ricciardi model, fourth column, and the SSN, fifth column). Different rows correspond to different values of αE,I and gE,I. Predictions of the mean field theory match numerical simulations, with the only exception of the lack of supersaturation in networks with random connectivity. Spiking networks present nonlinearities at saturation and at response-onset. Saturation nonlinearities are generated by the refractory period and hence are captured only by the Ricciardi model and not by the SSN, which lacks this ingredient. These nonlinearities have effects also at low rate and constrain the parameter space over which the response is unique. Response-onset nonlinearities have similar structure in the three models but in spiking networks are smaller in size and feature multiple solutions generated by noise feedback. Network structure (from top): gE = 8, gI = 7, αE = 4, αI = 2; gE = 2.08, gI = 1.67, αE = αI = 1; gE = 4.5, gI = 2.9, αE = αI = 1; gE = 4.1, gI = 2.46, αE = 1, αI = 0.2; gE = 7, gI = 6, αE = 1, αI = 0.7. In all simulations, for spiking-networks mean field, simulations and Ricciardi model: JEE = JIE = J; KEX = KEE = KIE = K; gEX = gIX = 1 (except second row, where JEE = JIE 2.5/2.4 = J, and fourth row, where KEX = KEE = 2KIE = 2K); values are 400 for K and 0.2mV for J in all simulations except for noise driven bistability, where J = 0.5mV. In the Ricciardi model, σE = σI = σ; σ matches noise at threshold in spiking neurons and is (from the top): 25mV, 10mV, 3mV, 5mV, 7mV. In the SSN, k = 0.04, n = 2, WEE = WIE = 1, WAI = γgA except in second row, where WIE = 2.4/2.5 and WEI = γgI 2.4/2.5, and fourth row, where WEE = 2 and WEI = 2γgE.

These numerical results validate the analysis derived above as a good description of the response in spiking networks, with the only exception given by the lack of supersaturation in networks with random connectivity. In what follows we examine how rate models compare with the results obtained in spiking networks.

Rate models

Rate models are characterized by a fixed relation between input current μ and firing rate response (here referred to as f − μ curve); this is not the case in spiking networks, where the firing rate also depends on the noise level which, in turn, depends on the input rate. Here we focus on two specific models: a ‘Ricciardi’ model, in which the single unit transfer function is given by the Ricciardi nonlinearity at fixed noise value, and the SSN [24], where the single unit transfer function is a power law with an exponent that is larger than one; a mathematical definition of these models is given in the Methods section. The comparison between different models is performed by computing responses in networks of given input structure αE,I and of recurrent inhibition gE,I; this choice ensures the same balanced-solutions in all models and limits differences to the nonlinear response regions. In the Ricciardi model, parameters are as in the spiking network; the only exception is the noise level, which must be specified. We fix this to be the value observed in the spiking-network model at threshold. In the SSN, we fix parameters as in [24], i.e. we assume a power-law nonlinearity with exponent n = 2 and proportionality constant k = 0.04.
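To make the power-law f − μ curve concrete, the sketch below evaluates it with the values k = 0.04 and n = 2 quoted above and solves a deliberately simplified self-consistent equation, r = k[h − w r]₊ⁿ, for a single effective population with net recurrent inhibition. This one-population reduction, the symbols h and w and the function names are assumptions introduced here for illustration; they are not the two-population SSN defined in the Methods.

```python
def power_law(mu, k=0.04, n=2.0):
    """Rectified power-law f-mu curve, f = k * [mu]_+^n."""
    return k * max(mu, 0.0) ** n


def steady_state_rate(h, w, k=0.04, n=2.0):
    """Solve r = k * [h - w * r]_+^n for r by bisection.

    h is the feedforward input and w > 0 the net recurrent inhibition
    (both hypothetical, in arbitrary units). The function
    g(r) = r - k * [h - w * r]_+^n is increasing in r, with g(0) <= 0 and
    g(h / w) >= 0, so the root is unique and bracketed by [0, h / w].
    """
    lo, hi = 0.0, h / w
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if mid - power_law(h - w * mid, k, n) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


for h in (0.1, 1.0, 10.0, 100.0, 1000.0):
    r = steady_state_rate(h, w=1.0)
    print(f"h = {h:7.1f}  r = {r:10.4f}  r / h = {r / h:.4f}")
```

At small h the rate follows the feedforward power law, r ≈ k hⁿ, and grows supralinearly; at large h the recurrent term dominates and r approaches the linear scaling h/w.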

For networks producing saturation nonlinearities (Fig 8 first and second rows), the Ricciardi model recapitulates quantitatively the responses observed in spiking networks. As mentioned during our analysis of saturation nonlinearities, this is due to the fact that in spiking networks the response in the balanced regime and for larger rates depends weakly on the noise level. It follows that the agreement between spiking networks and the Ricciardi model is valid for all saturation nonlinearities discussed in models A and B. The SSN, on the other hand, shows substantial discrepancies. In particular, for network structures generating a sublinear response in spiking networks (Fig 8 first row), the SSN responds linearly; in the parameter conditions in which the spiking network shows multiple solutions at zero inputs and maximum firing at large inputs (Fig 8 second row), the SSN shows supersaturation. These discrepancies are expected, since the SSN does not include a refractory period, i.e. the ingredient needed to generate saturation nonlinearity. While these nonlinearities can be captured by adding a refractory period to the model, our results show that consequences of the refractory period appear at rates that are much lower than 1/τrp. First, for moderate coupling, the response is nonlinear for rates that are far from the maximum firing rate. Second, saturation nonlinearities limit the parameter space in which the network has a unique solution. In particular, the network structure used in Fig 8 second row is the one used for supersaturating solutions in [24, 25]; our results show that these parameters produce multiple solutions and saturation when the refractory period is taken into account (see Supporting Information for more details).

We next focus on response-onset nonlinearities. In spiking networks, rates at response onset can either increase supralinearly or go through a region with multiple solutions; for larger inputs, rates approach the balanced solutions. Examples of these transitions are shown in the last three rows of Fig 8. For network structures leading to supersaturating solutions in spiking networks, the same qualitative behavior is observed in rate models but quantitative differences are observed. First, because of feedback coming from recurrent noise, the supralinear region at response onset is steeper in spiking networks. Indeed, the response in the Ricciardi model, where the noise amplitude is fixed and there is no positive feedback coming from noise, is much less steep. A second major quantitative difference is that the peak excitatory rate is smaller in spiking networks and in the Ricciardi model than in the SSN. More generally, our numerical analysis shows that response-onset nonlinearities occur in a smaller range of firing rates in spiking networks and in the Ricciardi model than in the SSN. In the Supporting Information (S3 Text), we show that this difference comes from the different shape of the f − μ curve in these models. For network structures leading to multiple solutions at response-onset, we should distinguish two cases: mean-generated and noise-generated multiple solutions. For some parameters, positive feedback due to mean recurrent input generates multiple solutions; this mechanism is also present in the Ricciardi model and in the SSN (Fig 8, fourth row). On the other hand, when multiple solutions are generated by noise feedback in spiking networks, there are no multiple solutions in rate models (Fig 8, fifth row); this is expected because in rate models there is no positive feedback generated by recurrent noise.

Discussion

In this work, we have investigated responses in networks of spiking neurons at finite coupling. In this regime, which has recently been suggested to underlie cortical dynamics [24, 25, 41, 42], we have shown that two types of nonlinearities emerge: response-onset and saturation. The network response transitions between these two nonlinearities as feedforward input increases; for intermediate inputs, the response matches that of the balanced-state model up to corrections of order . Importantly, the influence of refractoriness emerges already at rates that are much lower than the single neuron maximum response, producing sublinear or supralinear response and affecting the number of solutions at low inputs. Therefore, both types of nonlinearities can be relevant at activity levels observed in the brain. Our results have been obtained using a mean-field analysis, but are also confirmed in numerical simulations of large networks. Finally, we have analyzed which of the features of the response of spiking networks can be recapitulated by rate models.

Nonlinear operations are thought to underlie contrast dependent input summations observed in cortex, e.g. in surround suppression [17, 18] and normalization [19, 20]. These phenomena, which involve summation of responses to two different stimuli (e.g. two stimuli in the receptive field or stimuli in center and surround), have been recently explained by the SSN model [25]. In the SSN, because of the assumed power-law single neuron f − μ curve, the activity level smoothly modulates the effective coupling between units in the network: at low inputs, cells are weakly coupled and driven by feedforward inputs; at larger inputs, recurrent interactions become dominant and determine the network response. In the absence of structured connectivity, the increase of excitatory rates with feedforward input is supralinear for small inputs and, depending on the parameter regime, becomes either linear or supersaturating for larger inputs [24]. When the model includes structured connectivity, it shows nonlinear summation of responses to two different stimuli consistent with what is observed in cortex [24, 25, 43], both in non-supersaturating and supersaturating regimes (Ken Miller, private communication). Our work shows that all the response nonlinearities observed in the SSN in the absence of structured connectivity are present in spiking networks. As in the SSN, these nonlinearities emerge at response-onset, when coupling between units becomes strong enough to dominate over feedforward inputs. However, unlike in the SSN, the f − μ curve in spiking networks is supralinear only for a small region of inputs, and rapidly becomes vanishingly small for smaller inputs, and linear and then sublinear for larger inputs. 
This feature limits the range of rates over which response-onset nonlinearities unfold, both in regular (Figs 2, 5, 6 and 8) and supersaturated solutions (Figs 6, 8 and Supporting Information S3 Text), and seems to question the applicability of response-onset nonlinearities to explain some summation properties observed in the cortex, since they emerge over rate changes up to tens of spk/s [19]. Note however that we cannot exclude having missed small regions in parameter space for which more robust response-onset nonlinearities could occur. Also, one cannot exclude that this issue might be alleviated by the use of more detailed spiking neuron models. Finally, the nonlinearities in spiking networks discussed here refer to cases in which the strength of a single stimulus is varied, while summation properties observed in the cortex arise in the SSN when structured connectivity is considered. This suggests that structured connectivity could yield nonlinear behaviors not captured by the analysis discussed here and which need further investigation.

Our analysis suggests a different explanation of nonlinear summation in cortex than the one proposed by the SSN model. We found that, for finite coupling, saturation nonlinearities generate an activity-dependent summation: at low intensity, inputs are summed linearly; summation becomes increasingly sublinear as the intensity increases. This nonlinearity emerges at relatively low rates and evolves gradually over activity levels which span a few hundred spk/s, a feature which compares favorably with some experimental results [19]. Moreover, unlike the SSN, this model does not require supralinear input summation at low activity level, a feature which has (to our knowledge) not been widely reported so far. Further experimental studies are needed to understand which mechanism underlies nonlinear input summation in cortex.

We have found that, in networks of spiking neurons, firing irregularity, i.e. the CV of the ISI, decreases monotonically in regular solutions for sufficiently large inputs. This effect is consistent with the stimulus-driven suppression of variability that has been reported in various experiments [44–48]. In our model, regardless of the specific parameters used, variability suppression emerges as the mean input approaches firing threshold, a robust prediction which is consistent with results from intracellular recordings [44, 45, 47, 49]. In addition, depending on the parameters, our model can show supra-Poissonian variability (e.g. Fig 2B, see also [36]), another phenomenon which has been widely reported experimentally [46]. It is important to point out that the suppression of variability observed experimentally [44–48] could potentially involve both ‘private’ and ‘shared’ variability components. Our model captures only the former component, as the network is assumed to operate in the asynchronous irregular state. Hence, our work differs qualitatively from other studies [43, 50], which focus on explaining the suppression of the shared component of variability. Additional experimental and theoretical investigations are needed to understand the degree to which private and shared variability contribute to the quenching of neuronal variability observed experimentally and its underlying mechanisms.
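For reference, the irregularity measure used here, the CV of the ISI, can be computed from a spike train as in the following sketch. The spike trains are synthetic (a perfectly regular train and a Poisson train at a hypothetical rate of 5 spk/s) and are not taken from the network simulations; the function name is likewise hypothetical.

```python
import math
import random


def cv_isi(spike_times):
    """Coefficient of variation of the interspike intervals of one neuron."""
    isis = [t1 - t0 for t0, t1 in zip(spike_times, spike_times[1:])]
    mean = sum(isis) / len(isis)
    variance = sum((x - mean) ** 2 for x in isis) / len(isis)
    return math.sqrt(variance) / mean


rng = random.Random(1)  # fixed seed, for reproducibility only
rate = 5.0              # hypothetical firing rate in spk/s

# Perfectly regular train: all intervals are equal, so the CV vanishes.
regular = [i / rate for i in range(1000)]

# Poisson train: exponential intervals, for which the CV is close to 1.
poisson, t = [], 0.0
for _ in range(20000):
    t += rng.expovariate(rate)
    poisson.append(t)

cv_regular = cv_isi(regular)
cv_poisson = cv_isi(poisson)
print(f"regular: CV = {cv_regular:.3f}, Poisson: CV = {cv_poisson:.3f}")
```

A CV near 1 corresponds to Poisson-like irregular firing, values above 1 to supra-Poissonian variability, and a decrease toward 0 to the suppression of variability discussed above.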

Our analysis revealed that multiple solutions can appear at response-onset, produced by noise-driven or mean-driven positive feedback, and at larger rate values, generated by single neuron refractoriness. In both cases, neural response can assume one of three possible values; combining these mechanisms, we find that, in spiking networks, there can be up to five coexisting solutions for every fixed value of the external drive. Even though the stability of these solutions has not been investigated here, network simulations suggest that, when three solutions coexist, up to two of them can be stable (the ones with lowest and highest rates), while the intermediate solution is unstable. In the case of 5 coexisting solutions, up to three of them can be stable (the ones with lowest, 3rd highest and highest rates) while intermediate solutions are again unstable. Note that 5 coexisting solutions can also be generated in rate models with refractoriness, as noted by Wilson and Cowan [51], while SSNs can support up to four fixed points at fixed external drive, with only two being stable [52].

Multiple studies have found that fixed points can also become unstable due to oscillatory instabilities, depending for instance on the distribution of synaptic delays [4, 53]. These instabilities can destroy bistability [4, 38] and, in some regimes, produce transitions between network states which lead to an increase in irregularity and to large ‘shared’ variability [38, 39]. Moreover, it has been shown that, for certain parameters, the asynchronous state is stable only for intermediate values of the external drive, while synchronous states exist both for low external drive [4, 38, 39] and high external drive [4, 54, 55]. This pattern bears similarities with experimental data obtained in primary visual cortex, both in monkeys [49] and mice [56]. In addition, synchronous oscillations at low inputs can generate an effective supralinear transfer function in a region where multiple (unstable) fixed points coexist, through time averaging of network activity. In this context, our classification of multiple solutions could be used as a starting point to systematically classify the possible oscillatory regions that can emerge in a network.

We developed a new approach to study network response at finite coupling strength, allowing us to classify all possible deviations from linear response. Although this framework has been derived for the case of one excitatory and one inhibitory population, it can be readily generalized to study models with multiple interacting populations. An important application concerns networks with one excitatory and multiple inhibitory populations, e.g. SOM, PV and VIP cells. The interaction between these neuron types has recently been implicated, among other phenomena, in the top-down modulation of activity during locomotion [57–61]. In particular, the observed change of modulation with context (dark vs visual stimuli) has been explained with a rate model with a supralinear f − μ curve, assuming a change in baseline activity (low vs high) [62]. As we have shown in the present work, other scenarios exist close to response onset in networks of spiking neurons, which might lead to alternative explanations of the observed phenomena. Understanding the role of nonlinearities in this and other phenomena will require additional theoretical and experimental work.

The nonlinearities studied in this contribution are generated solely by the interplay between single-neuron properties and network connectivity, using synapses with fixed efficacy. Synapses in the brain exhibit changes at multiple time scales, which could qualitatively affect the picture derived here. Further theoretical and experimental investigations are needed to understand how the interplay between these mechanisms shapes the response properties of networks in the brain.

To conclude, we have shown that a simple network of interacting excitatory and inhibitory spiking neurons shows a rich repertoire of nonlinearities. Most neural systems receive information from multiple inputs. This is true not only in sensory systems, from which most of the currently available data come, but also in associative areas, where information coming from different sensory systems is combined with an animal's cognitive state to make decisions and drive behavior. The general rules by which different inputs are combined for these and other computations are still the subject of debate; our work provides the basis to systematically study the biophysical constraints on these operations.

Acknowledgments

We thank K. Miller for discussions and for comments on the manuscript. This work used the computational resources of the NIH HPC Biowulf cluster (http://hpc.nih.gov) and the Duke Compute Cluster (https://rc.duke.edu/dcc/).

Data Availability

All relevant data are within the manuscript and its Supporting Information files.

Funding Statement

AS and MH were supported by the NIMH Intramural Research Program and by NIH BRAIN U01NS108683. NB was supported by NIH BRAIN U01NS108683. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. van Vreeswijk C, Sompolinsky H. Chaos in neuronal networks with balanced excitatory and inhibitory activity. Science. 1996;274(5293):1724–6. doi:10.1126/science.274.5293.1724

- 2. van Vreeswijk C, Sompolinsky H. Chaotic balanced state in a model of cortical circuits. Neural Computation. 1998;10(6):1321–71. doi:10.1162/089976698300017214

- 3. Amit DJ, Brunel N. Model of global spontaneous activity and local structured activity during delay periods in the cerebral cortex. Cerebral Cortex. 1997;7(3):237–252. doi:10.1093/cercor/7.3.237

- 4. Brunel N. Dynamics of sparsely connected networks of excitatory and inhibitory neurons. Journal of Computational Neuroscience. 2000;8:183–208. doi:10.1023/A:1008925309027

- 5. Roxin A, Brunel N, Hansel D, Mongillo G, van Vreeswijk C. On the distribution of firing rates in networks of cortical neurons. Journal of Neuroscience. 2011;31(45):16217–16226. doi:10.1523/JNEUROSCI.1677-11.2011

- 6. Renart A, de la Rocha J, Bartho P, Hollender L, Parga N, Reyes A, et al. The asynchronous state in cortical circuits. Science. 2010;327(5965):587–590. doi:10.1126/science.1179850

- 7. Rosenbaum R, Smith MA, Kohn A, Rubin JE, Doiron B. The spatial structure of correlated neuronal variability. Nature Neuroscience. 2016;20:107. doi:10.1038/nn.4433

- 8. Darshan R, van Vreeswijk C, Hansel D. Strength of correlations in strongly recurrent neuronal networks. Phys Rev X. 2018;8:031072.

- 9. Baker C, Ebsch C, Lampl I, Rosenbaum R. Correlated states in balanced neuronal networks. Phys Rev E. 2019;99:052414. doi:10.1103/PhysRevE.99.052414

- 10. Douglas RJ, Martin KA. A functional microcircuit for cat visual cortex. The Journal of Physiology. 1991;440:735–769. doi:10.1113/jphysiol.1991.sp018733

- 11. Softky W, Koch C. The highly irregular firing of cortical cells is inconsistent with temporal integration of random EPSPs. Journal of Neuroscience. 1993;13(1):334–350. doi:10.1523/JNEUROSCI.13-01-00334.1993

- 12. Bair W, Koch C, Newsome W, Britten K. Power spectrum analysis of bursting cells in area MT in the behaving monkey. Journal of Neuroscience. 1994;14(5):2870–2892. doi:10.1523/JNEUROSCI.14-05-02870.1994

- 13. Hromádka T, DeWeese MR, Zador AM. Sparse representation of sounds in the unanesthetized auditory cortex. PLOS Biology. 2008;6(1):1–14. doi:10.1371/journal.pbio.0060016

- 14. O’Connor DH, Peron SP, Huber D, Svoboda K. Neural activity in barrel cortex underlying vibrissa-based object localization in mice. Neuron. 2010;67(6):1048–1061. doi:10.1016/j.neuron.2010.08.026

- 15. Ecker AS, Berens P, Keliris GA, Bethge M, Logothetis NK, Tolias AS. Decorrelated neuronal firing in cortical microcircuits. Science. 2010;327(5965):584–587. doi:10.1126/science.1179867

- 16. Sanzeni A, Histed M, Brunel N. Dynamics of networks of conductance-based neurons in the strong coupling limit. Computational and Systems Neuroscience (Cosyne). 2018.

- 17. Sengpiel F, Sen A, Blakemore C. Characteristics of surround inhibition in cat area 17. Experimental Brain Research. 1997;116(2):216–228. doi:10.1007/PL00005751

- 18. Polat U, Mizobe K, Pettet MW, Kasamatsu T, Norcia AM. Collinear stimuli regulate visual responses depending on cell’s contrast threshold. Nature. 1998;391(6667):580–584. doi:10.1038/35372

- 19. Heuer HW, Britten KH. Contrast dependence of response normalization in area MT of the rhesus macaque. Journal of Neurophysiology. 2002;88(6):3398–3408. doi:10.1152/jn.00255.2002

- 20. Ohshiro T, Angelaki DE, DeAngelis GC. A normalization model of multisensory integration. Nature Neuroscience. 2011;14(6):775–782. doi:10.1038/nn.2815

- 21. Olshausen BA, Field DJ. How close are we to understanding V1? Neural Computation. 2005;17(8):1665–1699. doi:10.1162/0899766054026639

- 22. Mongillo G, Hansel D, van Vreeswijk C. Bistability and spatiotemporal irregularity in neuronal networks with nonlinear synaptic transmission. Phys Rev Lett. 2012;108:158101. doi:10.1103/PhysRevLett.108.158101

- 23. Persi E, Hansel D, Nowak L, Barone P, van Vreeswijk C. Power-law input-output transfer functions explain the contrast-response and tuning properties of neurons in visual cortex. PLOS Computational Biology. 2011;7(2):1–21. doi:10.1371/journal.pcbi.1001078

- 24. Ahmadian Y, Rubin DB, Miller KD. Analysis of the stabilized supralinear network. Neural Computation. 2013;25(8):1994–2037. doi:10.1162/NECO_a_00472

- 25. Rubin DB, VanHooser SD, Miller KD. The stabilized supralinear network: A unifying circuit motif underlying multi-input integration in sensory cortex. Neuron. 2015;85(2):402–417. doi:10.1016/j.neuron.2014.12.026

- 26. Sanzeni A, Histed M, Brunel N. Emergence of nonlinear computations in spiking neural networks with linear synapses. Neuroscience Meeting Planner. 2017.

- 27. Ricciardi LM. Diffusion processes and related topics in biology. Berlin; New York: Springer-Verlag Inc; 1977.

- 28. Amit DJ, Tsodyks MV. Quantitative study of attractor neural network retrieving at low spike rates: I. substrate—spikes, rates and neuronal gain. Network: Computation in Neural Systems. 1991;2(3):259–273. doi:10.1088/0954-898X_2_3_003

- 29. Lindner B. Superposition of many independent spike trains is generally not a Poisson process. Phys Rev E. 2006;73:022901. doi:10.1103/PhysRevE.73.022901

- 30. Brunel N, Sergi S. Firing frequency of leaky integrate-and-fire neurons with synaptic current dynamics. Journal of Theoretical Biology. 1998;195(1):87–95. doi:10.1006/jtbi.1998.0782

- 31. Fourcaud N, Brunel N. Dynamics of the firing probability of noisy integrate-and-fire neurons. Neural Computation. 2002;14(9):2057–2110. doi:10.1162/089976602320264015

- 32. Moreno R, de la Rocha J, Renart A, Parga N. Response of spiking neurons to correlated inputs. Phys Rev Lett. 2002;89:288101. doi:10.1103/PhysRevLett.89.288101

- 33. Moreno-Bote R, Parga N. Role of synaptic filtering on the firing response of simple model neurons. Phys Rev Lett. 2004;92:028102. doi:10.1103/PhysRevLett.92.028102

- 34. van Vreeswijk C, Farkhooi F. Fredholm theory for the mean first-passage time of integrate-and-fire oscillators with colored noise input. Phys Rev E. 2019;100:060402. doi:10.1103/PhysRevE.100.060402

- 35. Brunel N, Hakim V, Richardson MJ. Single neuron dynamics and computation. Current Opinion in Neurobiology. 2014;25:149–155. doi:10.1016/j.conb.2014.01.005

- 36. Barbieri F, Brunel N. Irregular persistent activity induced by synaptic excitatory feedback. Frontiers in Computational Neuroscience. 2007;1:5. doi:10.3389/neuro.10.005.2007

- 37. Renart A, Moreno-Bote R, Wang XJ, Parga N. Mean-driven and fluctuation-driven persistent activity in recurrent networks. Neural Computation. 2007;19:1–46. doi:10.1162/neco.2007.19.1.1

- 38. Tartaglia EM, Brunel N. Bistability and up/down state alternations in inhibition-dominated randomly connected networks of LIF neurons. Scientific Reports. 2017;7(1):11916. doi:10.1038/s41598-017-12033-y

- 39. Jercog D, Roxin A, Barthó P, Luczak A, Compte A, de la Rocha J. UP-DOWN cortical dynamics reflect state transitions in a bistable network. eLife. 2017;6:e22425. doi:10.7554/eLife.22425

- 40. Stimberg M, Brette R, Goodman DF. Brian 2, an intuitive and efficient neural simulator. eLife. 2019;8:e47314. doi:10.7554/eLife.47314

- 41. Ahmadian Y, Miller KD. What is the dynamical regime of cerebral cortex? arXiv. 2019.

- 42. Sanzeni A, Akitake B, Goldbach HC, Leedy CE, Brunel N, Histed MH. Inhibition stabilization is a widespread property of cortical networks. eLife. 2020;9:e54875. doi:10.7554/eLife.54875

- 43. Hennequin G, Ahmadian Y, Rubin DB, Lengyel M, Miller KD. The dynamical regime of sensory cortex: Stable dynamics around a single stimulus-tuned attractor account for patterns of noise variability. Neuron. 2018;98(4):846–860.e5. doi:10.1016/j.neuron.2018.04.017

- 44. Finn IM, Priebe NJ, Ferster D. The emergence of contrast-invariant orientation tuning in simple cells of cat visual cortex. Neuron. 2007;54(1):137–152. doi:10.1016/j.neuron.2007.02.029

- 45. Poulet JFA, Petersen CCH. Internal brain state regulates membrane potential synchrony in barrel cortex of behaving mice. Nature. 2008;454(7206):881–885. doi:10.1038/nature07150

- 46. Churchland MM, Yu BM, Cunningham JP, Sugrue LP, Cohen MR, Corrado GS, et al. Stimulus onset quenches neural variability: a widespread cortical phenomenon. Nature Neuroscience. 2010;13(3):369–378. doi:10.1038/nn.2501

- 47. Gentet LJ, Avermann M, Matyas F, Staiger JF, Petersen CCH. Membrane potential dynamics of GABAergic neurons in the barrel cortex of behaving mice. Neuron. 2010;65(3):422–435. doi:10.1016/j.neuron.2010.01.006

- 48. Chen M, Wei L, Liu Y. Motor preparation attenuates neural variability and beta-band LFP in parietal cortex. Scientific Reports. 2014;4:6809. doi:10.1038/srep06809

- 49. Tan AYY, Chen Y, Scholl B, Seidemann E, Priebe NJ. Sensory stimulation shifts visual cortex from synchronous to asynchronous states. Nature. 2014;509:226. doi:10.1038/nature13159

- 50. Rajan K, Abbott LF, Sompolinsky H. Stimulus-dependent suppression of chaos in recurrent neural networks. Phys Rev E. 2010;82(1):011903. doi:10.1103/PhysRevE.82.011903