Abstract

Predicting the electrical behavior of the heart, from the cellular scale to the tissue level, relies on the numerical approximation of coupled nonlinear dynamical systems. These systems describe the cardiac action potential, that is, the depolarization/repolarization cycle occurring at every heartbeat, which models the time evolution of the electrical potential across the cell membrane, together with a set of ionic variables. Multiple solutions of these systems, corresponding to different model inputs, are required to evaluate outputs of clinical interest, such as activation maps and action potential duration. More importantly, these models feature coherent structures that propagate over time, such as wavefronts. Such systems can hardly be reduced to lower-dimensional problems by conventional reduced order models (ROMs) such as the reduced basis method. This is primarily due to the low regularity of the solution manifold with respect to the problem parameters, and to the nonlinear nature of the input-output maps that we intend to reconstruct numerically. To overcome this difficulty, in this paper we propose a new, nonlinear approach relying on deep learning (DL) algorithms, such as deep feedforward neural networks and convolutional autoencoders, to obtain accurate and efficient ROMs whose dimensionality matches the number of system parameters. We show that the proposed DL-ROM framework can efficiently provide solutions to parametrized electrophysiology problems, thus enabling multi-scenario analysis in pathological cases. We investigate four challenging test cases in cardiac electrophysiology, demonstrating that DL-ROM outperforms classical projection-based ROMs.

Introduction

The electrical activation of the heart, which drives its contraction, is the result of two processes: at the microscopic scale, the generation of ionic currents through the cellular membrane producing a local action potential; and at the macroscopic scale, the propagation of the action potential from cell to cell in the form of a transmembrane potential [1–3]. This latter process can be described by means of partial differential equations (PDEs), suitably coupled with systems of ordinary differential equations (ODEs) modeling the ionic currents in the cells.

Solving this system using a high-fidelity, full order model (FOM) such as the finite element (FE) method is computationally demanding. Indeed, the propagation of the electrical signal is characterized by the fast dynamics of very steep fronts, thus requiring very fine space and time discretizations [3–5]; see also, e.g., [6, 7] for higher-order and/or more robust numerical methods, [8] for time and space adaptivity, and [9] regarding the use of GPU computing in this context. Using a FOM may quickly become unaffordable if such a coupled system must be solved for several values of parameters representing either functional or geometric data, such as material properties, initial and boundary conditions, or the shape of the domain. Multi-query analysis is relevant in a variety of situations: when analyzing multiple scenarios; when dealing with sensitivity analysis and uncertainty quantification (UQ) problems in order to account for inter-subject variability [10–13]; and for parameter estimation or data assimilation, in which some unknown (or inaccessible) quantities characterizing the mathematical model must be inferred from a set of measurements [14–18]. In all these cases, to achieve computational efficiency, multi-query analysis in cardiac electrophysiology must rely on suitable surrogate models; see, e.g., [19] for a recent review on the topic. Several options are available, such as (i) emulators, obtained, e.g., via polynomial chaos expansions or Gaussian process regression [20–22], which approximate the input-output map by fitting a set of training data; (ii) lower-fidelity models, which introduce suitable modeling simplifications, such as the Eikonal model in this context [23]; and (iii) reduced order models (ROMs), obtained by projecting the equations governing the FOM onto a low-dimensional space, thus reducing the state-space dimensionality.
Although typically more intrusive to implement, ROMs often yield more accurate approximations than data fitting and usually generate more significant computational gains than lower-fidelity models.

Conventional projection-based ROMs, built, e.g., through the reduced basis (RB) method [24], are inefficient when dealing with nonlinear, time-dependent, parametrized PDE-ODE systems such as the one arising in cardiac electrophysiology. The three major computational bottlenecks of such ROMs in cardiac electrophysiology are:

the linear superimposition of modes on which they are based makes the dimension of the ROM excessively large if an acceptable accuracy is to be guaranteed;

evaluating the ROM requires the solution of a dynamical system, which might be unstable unless the time step Δt is very small;

the ROM must also account for the dynamics of the gating variables, even when aiming at computing just the electrical potential. This fact entails an extremely intrusive and costly hyper-reduction stage to reduce the solution of the ODE system to a few, selected mesh nodes [25].

To overcome the limitations of projection-based ROMs, we propose a new, non-intrusive ROM technique based on deep learning (DL) algorithms, which we refer to as DL-ROM. Combining in a suitable way a convolutional autoencoder (AE) and a deep feedforward neural network (DFNN), the DL-ROM technique enables the construction of an efficient ROM, whose dimension is as close as possible to the number of parameters upon which the solution of the differential problem depends. A preliminary numerical assessment of our DL-ROM technique has already been presented in [26], albeit on simpler—yet challenging—test cases.

The proposed DL-ROM technique combines a data-driven approach with a physics-based one. Indeed, it exploits snapshots taken from a set of FOM solutions (for selected parameter values and time instants) and deep neural network architectures to learn, in a non-intrusive way, both (i) the nonlinear trial manifold where the ROM solution is sought, and (ii) the nonlinear reduced dynamics. In a linear ROM built, e.g., through proper orthogonal decomposition (POD), the former is nothing but a set of basis functions, while the latter corresponds to the projection onto the subspace spanned by these basis functions. Here, our goal is to show that DL-ROM can be effectively used to handle parametrized problems in cardiac electrophysiology, accounting for both physiological and pathological conditions, in order to provide fast and accurate solutions. The proposed DL-ROM is computationally efficient during the testing stage, that is, for any new scenario unseen during the training stage. This is particularly useful for evaluating patient-specific features, and thus for integrating computational methods into current clinical platforms.

DL techniques for parametrized PDEs have previously been proposed in other contexts. In [27–30] feedforward neural networks have been employed to model the reduced dynamics in a less intrusive way, that is, avoiding the costs entailed by projection-based ROMs, but still relying on a linear trial manifold built, e.g., through POD. In [31–33] the construction of ROMs for nonlinear, time-dependent problems has been replaced by the evaluation of regression models based on artificial neural networks (ANNs). In [34, 35] the reduced trial manifold where the approximation is sought has been modeled through ANNs, thus avoiding the linear superimposition of POD modes, either relying on a minimum residual formulation to derive the ROM [35] or without considering an explicit parameter dependence in the differential problem [34]. None of these works considers coupled problems. Moreover, DL techniques have very often been exploited to address problems that require only a moderate dimension of projection-based ROMs. We demonstrate that our DL-ROM provides accurate results by constructing ROMs of extremely low dimension in prototypical test cases. These tests exhibit all the relevant physical features that make the numerical approximation of parametrized problems in cardiac electrophysiology a challenging task.

Materials and methods

Cardiac electrophysiology

Muscle contraction and relaxation drive the pump function of the heart. In particular, tissue contraction is triggered by electrical signals self-generated in the heart and propagated through the myocardium thanks to the excitability of the cardiac cells, the cardiomyocytes [3, 36]. When suitably stimulated, cardiomyocytes produce a variation of the potential across the cellular membrane, called the transmembrane potential. Its evolution in time is usually referred to as the action potential, which involves a depolarization followed by a repolarization at every heartbeat. The action potential is generated by several ion channels (e.g., calcium, sodium, potassium) that open and close, and by the resulting ionic currents crossing the membrane. For instance, coupling the so-called monodomain model for the transmembrane potential u = u(x, t) with a phenomenological model for the ionic currents, involving a single gating variable w = w(x, t), in a domain Ω representing, e.g., a portion of the myocardium, results in the following nonlinear time-dependent system

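$$\begin{cases}
\dfrac{\partial u}{\partial t} - \operatorname{div}(D\nabla u) + I_{ion}(u,w) = I_{app} & \text{in } \Omega\times(0,T),\\[4pt]
\dfrac{\partial w}{\partial t} + g(u,w) = 0 & \text{in } \Omega\times(0,T),\\[4pt]
\nabla u\cdot n = 0 & \text{on } \partial\Omega\times(0,T),\\[4pt]
u(x,0) = 0,\quad w(x,0) = 0 & \text{in } \Omega.
\end{cases} \tag{1}$$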

Here t denotes a rescaled time (following [37], dimensional time and potential are given by $t_{[\mathrm{ms}]} = 12.9\,t$ and $u_{[\mathrm{mV}]} = 100\,u - 80$, so that the transmembrane potential ranges from the resting state of −80 mV to the excited state of +20 mV), n denotes the outward directed unit vector normal to the boundary ∂Ω of Ω, whereas Iapp is an applied current representing, e.g., the initial activation of the tissue. The nonlinear diffusion-reaction equation for u is two-way coupled with the ODE system, which must in principle be solved at every point x ∈ Ω; indeed, the reaction term Iion and the function g depend on both u and w. The most common choices for the two functions Iion and g to efficiently reproduce the action potential are, e.g., the FitzHugh-Nagumo [38, 39], the Aliev-Panfilov [37, 40] or the Mitchell-Schaeffer [41] models. The diffusivity tensor D usually depends on the fiber-sheet structure of the tissue, which affects both the directions and the velocities of conduction. In particular, by assuming an axisymmetric distribution of the fibers, the conductivity tensor takes the form

$$D(x) = \sigma_t\, I + (\sigma_l - \sigma_t)\, f_0(x) \otimes f_0(x), \tag{2}$$

where σl and σt are the conductivities along the fiber direction f0 and in the transversal direction, respectively.
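As a minimal sketch of (2), assuming the usual axisymmetric form D = σt I + (σl − σt) f0 ⊗ f0 with f0 normalized to a unit vector (the symbol f0 is the one used in Test 1 below), the snippet builds D and checks that conduction along the fibers sees σl while conduction across them sees σt. The function names are hypothetical.

```python
import math

def conductivity_tensor(sigma_l, sigma_t, f0):
    """Assumed axisymmetric form: D = sigma_t * I + (sigma_l - sigma_t) * f0 f0^T."""
    norm = math.sqrt(sum(c * c for c in f0))
    f = [c / norm for c in f0]  # normalize the fiber direction
    d = len(f)
    return [[(sigma_t if i == j else 0.0) + (sigma_l - sigma_t) * f[i] * f[j]
             for j in range(d)] for i in range(d)]

def directional_conductivity(D, v):
    """Rayleigh quotient v^T D v / v^T v: the conductivity seen along direction v."""
    Dv = [sum(D[i][j] * v[j] for j in range(len(v))) for i in range(len(v))]
    return sum(vi * dvi for vi, dvi in zip(v, Dv)) / sum(vi * vi for vi in v)

# Test 1 values: sigma_t = 12.9 * 0.1, sigma_l = 12.9 * 0.2, fibers along the x-axis.
D = conductivity_tensor(12.9 * 0.2, 12.9 * 0.1, (1.0, 0.0))
# Hypothetical oblique fibers at 45 degrees, with sigma_l = 2 and sigma_t = 1.
D45 = conductivity_tensor(2.0, 1.0, (1.0, 1.0))
```

For fibers aligned with the x-axis, D is diagonal; for oblique fibers it acquires off-diagonal terms, but its eigenvalues remain σl (along f0) and σt (across f0).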

When a simple phenomenological ionic model is considered, such as the FitzHugh-Nagumo or the Aliev-Panfilov (A-P) model, the ionic current takes the form of a cubic nonlinear function of u, and a single (dimensionless) gating variable plays the role of a recovery function, which allows one to model the refractoriness of cells. In this paper, we focus on the Aliev-Panfilov model, which consists of taking

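$$I_{ion}(u,w) = K u (u-a)(u-1) + u w, \qquad g(u,w) = \varepsilon(u,w)\big(w + K u (u-b-1)\big), \qquad \varepsilon(u,w) = \varepsilon_0 + \frac{c_1 w}{c_2 + u}. \tag{3}$$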

The parameters K, a, b, ε0, c1, c2 characterize the cell. Here a represents an oscillation threshold, whereas the weighting factor $\varepsilon(u,w) = \varepsilon_0 + c_1 w/(c_2 + u)$ was introduced in [37] to tune the restitution curve to experimental observations by adjusting the parameters c1 and c2; see, e.g., [1–3, 42] for a detailed review. In the remainder of the paper, we denote by $\mu \in \mathcal{P} \subset \mathbb{R}^{n_\mu}$ a parameter vector listing all the nμ input parameters characterizing physical (and, possibly, geometrical) properties we might be interested in varying; $\mathcal{P}$ is a subset of $\mathbb{R}^{n_\mu}$, denoting the parameter space. Relevant physical situations are those in which the input parameters affect the diffusivity matrix D (through the conduction velocities) and the applied current Iapp; previous analyses focused instead on the gating variable dynamics (through g) and the ionic current Iion; see [25].
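To illustrate the threshold behavior encoded in the Aliev-Panfilov model, the following sketch integrates a single cell (no diffusion, no applied current) with explicit Euler. It assumes the sign convention ∂w/∂t = −g(u, w) with g(u, w) = ε(u, w)(w + K u(u − b − 1)), ε(u, w) = ε0 + c1 w/(c2 + u), and the standard A-P unit conversions t[ms] = 12.9 t and u[mV] = 100u − 80. The parameter values are those of Test 1; the step size dt = 0.01 and all function names are illustrative choices, not taken from the paper.

```python
# Test 1 cell parameters; the sign convention dw/dt = -g(u, w) is an assumption.
PARAMS = dict(K=8.0, a=0.01, b=0.15, eps0=0.002, c1=0.2, c2=0.3)

def eps(u, w, p=PARAMS):
    """Weighting factor eps(u, w) = eps0 + c1 w / (c2 + u)."""
    return p["eps0"] + p["c1"] * w / (p["c2"] + u)

def i_ion(u, w, p=PARAMS):
    """Cubic ionic current K u (u - a)(u - 1) + u w."""
    return p["K"] * u * (u - p["a"]) * (u - 1.0) + u * w

def g(u, w, p=PARAMS):
    """Recovery term, chosen so that dw/dt = eps(u, w) * (-w - K u (u - b - 1))."""
    return eps(u, w, p) * (w + p["K"] * u * (u - p["b"] - 1.0))

def simulate_cell(u0, w0=0.0, dt=0.01, n_steps=1000, p=PARAMS):
    """Explicit Euler for du/dt = -I_ion(u, w), dw/dt = -g(u, w) (no diffusion, I_app = 0)."""
    u, w = u0, w0
    us = [u]
    for _ in range(n_steps):
        u, w = u - dt * i_ion(u, w, p), w - dt * g(u, w, p)
        us.append(u)
    return us

def to_ms(t):
    """Rescaled time -> milliseconds (assumed Aliev-Panfilov scaling)."""
    return 12.9 * t

def to_mV(u):
    """Rescaled potential -> millivolts (assumed Aliev-Panfilov scaling)."""
    return 100.0 * u - 80.0

supra = simulate_cell(0.2)    # initial value above the threshold a: full upstroke
sub = simulate_cell(0.005)    # initial value below the threshold a: decays back to rest
```

Starting above a, the cubic term drives u towards the excited state within about one rescaled time unit; starting below a, the same term pulls u back to rest, which is the excitability property exploited by the monodomain model.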

Projection-based ROMs

From an algebraic standpoint, the spatial discretization of system (1) through the Galerkin-finite element (FE) approximation [43] yields the following nonlinear dynamical system for u = u(t, μ), w = w(t, μ), representing our full order model (FOM):

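$$\begin{cases}
M \dfrac{d u(t;\mu)}{dt} + A(\mu)\, u(t;\mu) + I_{ion}\big(u(t;\mu), w(t;\mu)\big) = I_{app}(t;\mu), & t \in (0,T),\\[4pt]
\dfrac{d w(t;\mu)}{dt} + g\big(u(t;\mu), w(t;\mu)\big) = 0, & t \in (0,T),\\[4pt]
u(0) = 0,\quad w(0) = 0. &
\end{cases} \tag{4}$$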

Here $A(\mu) \in \mathbb{R}^{N\times N}$ is a matrix arising from the diffusion operator (thus including the conductivity tensor D(μ) = D(x; μ), which can vary within the myocardium due to fiber orientation and to pathological conditions, such as the possible presence of ischemic regions); $M \in \mathbb{R}^{N\times N}$ is the mass matrix; $I_{ion}(u,w),\ g(u,w) \in \mathbb{R}^N$ are vectors arising from the nonlinear terms; finally, $I_{app}(t;\mu) \in \mathbb{R}^N$ is a vector collecting the applied currents. The dimension N is related to the dimension of the FE space and, ultimately, depends on the size h > 0 of the computational mesh used to discretize the domain Ω. Note that the system of ODEs arises from the collocation of the ODE in (1) at the nodes used for the numerical integration. A detailed derivation of the FOM (4) is reported in the S1 Appendix.
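To make the algebraic structure of the FOM concrete, the sketch below assembles the P1 stiffness matrix A and the consistent mass matrix M on a uniform 1D mesh with constant conductivity, then performs one step of a first-order semi-implicit scheme in which diffusion is treated implicitly and the nonlinear terms explicitly. The 1D setting, the constant σ, the evaluation of the nonlinear terms directly at the nodes, the assumed Aliev-Panfilov forms and the function names are all simplifying assumptions; the scheme actually used in the paper is the one described in [25].

```python
import numpy as np

def p1_matrices(n_nodes, length, sigma=1.0):
    """Stiffness A and consistent mass M for P1 elements on a uniform 1D mesh."""
    h = length / (n_nodes - 1)
    A = np.zeros((n_nodes, n_nodes))
    M = np.zeros((n_nodes, n_nodes))
    k_loc = (sigma / h) * np.array([[1.0, -1.0], [-1.0, 1.0]])
    m_loc = (h / 6.0) * np.array([[2.0, 1.0], [1.0, 2.0]])
    for e in range(n_nodes - 1):
        idx = np.ix_([e, e + 1], [e, e + 1])
        A[idx] += k_loc
        M[idx] += m_loc
    return A, M

def ap_ion(u, w):
    """Nodal cubic ionic current, assumed Aliev-Panfilov form (Test 1 values)."""
    return 8.0 * u * (u - 0.01) * (u - 1.0) + u * w

def ap_g(u, w):
    """Nodal recovery term, assumed convention dw/dt = -g(u, w)."""
    return (0.002 + 0.2 * w / (0.3 + u)) * (w + 8.0 * u * (u - 1.15))

def semi_implicit_step(u, w, A, M, dt, i_ion, g, i_app):
    """Implicit diffusion, explicit reaction:
    (M/dt + A) u_new = M u / dt - I_ion(u, w) + I_app,   w_new = w - dt * g(u, w)."""
    rhs = M @ u / dt - i_ion(u, w) + i_app
    u_new = np.linalg.solve(M / dt + A, rhs)
    w_new = w - dt * g(u, w)
    return u_new, w_new

A, M = p1_matrices(11, 1.0)
zero = np.zeros(11)
u_rest, w_rest = semi_implicit_step(zero, zero, A, M, 0.01, ap_ion, ap_g, zero)
u_stim, _ = semi_implicit_step(zero, zero, A, M, 0.01, ap_ion, ap_g, np.ones(11))
```

The constant vector lies in the kernel of A (homogeneous Neumann conditions), the entries of M sum to the length of the domain, and the resting state is a fixed point when no current is applied.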

The intrinsic dimension of the solution manifold

$$\mathcal{S} = \left\{ u(t;\mu) \;\middle|\; t \in [0,T),\ \mu \in \mathcal{P} \right\} \subset \mathbb{R}^N, \tag{5}$$

obtained by solving (4) when (t; μ) varies in $[0,T)\times\mathcal{P}$, is usually much smaller than N and, under suitable conditions, is at most nμ + 1 ≪ N, where nμ is the number of parameters; in this respect, the time variable plays the role of an additional parameter. For this reason, ROMs attempt to approximate $\mathcal{S}$ by introducing a suitable trial manifold of lower dimension. The most popular approach is proper orthogonal decomposition (POD), which exploits a linear trial manifold built through the singular value decomposition of a matrix collecting a set of FOM snapshots,

$$S = \left[\, u(t^1;\mu_1) \mid \dots \mid u(t^{N_t};\mu_1) \mid \dots \mid u(t^1;\mu_{N_{train}}) \mid \dots \mid u(t^{N_t};\mu_{N_{train}}) \,\right] \in \mathbb{R}^{N\times N_s}, \qquad N_s = N_{train} N_t,$$

that is, a set of solutions obtained for Ntrain selected input parameters at (a subset, possibly, of) the time instants in which (0, T) is partitioned for the sake of time discretization. The most common choice is to set $t^k = (k-1)\Delta t$, k = 1, …, Nt, with $\Delta t = T/(N_t - 1)$.

When using a projection-based ROM, the approximation of u(t; μ) is sought as a linear superimposition of modes, in the form

$$u(t;\mu) \approx V\, u_n(t;\mu), \tag{6}$$

thus yielding a linear ROM, in which the columns of the matrix $V = [\zeta_1 \mid \dots \mid \zeta_n] \in \mathbb{R}^{N\times n}$ form an orthonormal basis of a space $V_n$, an n-dimensional subspace of $\mathbb{R}^N$. In the case of POD, $V_n$ provides the best rank-n approximation of S in the Frobenius norm; that is, ζ1, …, ζn are the first n (left) singular vectors of S, corresponding to the n largest singular values σ1, …, σn of S, such that the projection error is smaller than a desired tolerance εPOD. To meet this requirement, it suffices to choose n as the smallest integer such that

$$\frac{\sum_{i=1}^{n}\sigma_i^2}{\sum_{i=1}^{N_s}\sigma_i^2} \ge 1 - \varepsilon_{POD}^2,$$

i.e., the energy retained by the last Ns − n POD modes is equal to or smaller than $\varepsilon_{POD}^2$.

The approximation of w is given instead by its restriction $P^T w(t;\mu) \in \mathbb{R}^m$ to a (possibly small) subset of m degrees of freedom, where m ≪ n, at which the nonlinear term Iion is interpolated exploiting a problem-dependent basis, spanned by the columns of a matrix built according to a suitable hyper-reduction strategy; see, e.g., [25] for further details. Here $P \in \mathbb{R}^{N\times m}$ denotes a matrix formed by the columns of the N × N identity matrix corresponding to the m selected degrees of freedom.

A Galerkin-POD ROM for system (1) is then obtained by (i) first, substituting (Eq 6) into Eq 4 and projecting it onto Vn; then, (ii) solving the system of ODEs at m selected degrees of freedom, thus yielding the following nonlinear dynamical system for un = un(t, μ) and the selected components PT w = PT w(t; μ) of w:

| (7) |

This strategy is the essence of the reduced basis (RB) method for nonlinear time-dependent parametrized PDEs. However, using (7) as an approximation to (4) is known to suffer from several problems. First, an extensive hyper-reduction stage (exploiting, e.g., the discrete empirical interpolation method (DEIM)) must be performed in order to evaluate any μ- or u-dependent quantity appearing in (7) without relying on N-dimensional arrays. Moreover, whenever the solution of the differential problem features coherent structures that propagate over time, such as steep wavefronts, the dimension n of the projection-based ROM (7) can easily become very large because of the underlying linearity assumption, by which the solution is given by a linear superimposition of POD modes; this severely degrades the computational efficiency of the ROM. A possible way to overcome this bottleneck is to rely on local reduced bases, built through POD after the set of snapshots has been split into Nc > 1 clusters by suitable clustering (or unsupervised learning) algorithms [25].
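The local reduced basis idea can be sketched as clustering the snapshot columns and then computing one POD basis per cluster. Since the text does not prescribe a specific algorithm, the sketch assumes plain k-means (Lloyd iterations) with a deterministic farthest-point initialization and a fixed number of modes per cluster; in practice, the number of modes would be chosen per cluster by the energy criterion.

```python
import numpy as np

def kmeans_columns(S, n_clusters, n_iter=100):
    """Lloyd's k-means on the columns of S (snapshots), farthest-point initialization."""
    X = S.T                                   # one row per snapshot
    centers = [X[0]]
    for _ in range(1, n_clusters):
        # next center: the snapshot farthest from all current centers
        d2 = np.min([np.sum((X - c) ** 2, axis=1) for c in centers], axis=0)
        centers.append(X[int(np.argmax(d2))])
    centers = np.array(centers)
    for _ in range(n_iter):
        d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        new_centers = np.array([X[labels == c].mean(axis=0) if np.any(labels == c)
                                else centers[c] for c in range(n_clusters)])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, centers

def local_pod_bases(S, labels, n_clusters, n_modes):
    """One POD basis (first n_modes left singular vectors) per cluster of snapshots."""
    bases = []
    for c in range(n_clusters):
        U, _, _ = np.linalg.svd(S[:, labels == c], full_matrices=False)
        bases.append(U[:, :n_modes])
    return bases

# Two well-separated groups of 2D "snapshots" (toy data).
S = np.array([[0.0, 0.1, 0.2, 10.0, 10.1, 10.2],
              [0.0, 0.1, 0.0, 10.0, 10.0, 10.1]])
labels, centers = kmeans_columns(S, 2)
bases = local_pod_bases(S, labels, 2, n_modes=1)
```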

Deep learning-based reduced order modeling (DL-ROM)

To overcome the limitations of linear ROMs, we consider a new, nonlinear ROM technique based on deep learning models. First introduced in [26] and assessed on one-dimensional benchmark problems, the DL-ROM technique aims at learning both the nonlinear trial manifold (corresponding to the matrix V in the case of a linear ROM) in which we seek the solution to the parametrized system (1) and the nonlinear reduced dynamics (corresponding to the projection stage in a linear ROM). This method is not intrusive; it relies on DL algorithms trained on a set of FOM solutions obtained for different parameter values.

We denote by Ntrain and Ntest the number of training and testing parameter instances, respectively; the ROM dimension is again denoted by n ≪ N. In order to describe the system dynamics on a suitable reduced nonlinear trial manifold (a task which we refer to as reduced dynamics learning), the intrinsic coordinates of the ROM approximation are defined as

$$u_n(t;\mu) = \phi_n^{DF}(t,\mu;\theta_{DF}), \tag{8}$$

where $\phi_n^{DF}(\cdot,\cdot;\theta_{DF}): (0,T)\times\mathcal{P} \to \mathbb{R}^n$ is a DFNN, consisting of the repeated composition of a nonlinear activation function applied to a linear transformation of the input [44]. Here θDF denotes the vector of parameters of the DFNN, collecting all the corresponding weights and biases of each layer of the DFNN.

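Regarding instead the description of the reduced nonlinear trial manifold approximating the solution manifold (a task which we refer to as reduced trial manifold learning), we employ the decoder function of a convolutional autoencoder (AE) [45, 46]. An AE is a particular type of neural network aiming at learning the identity function

$$x \approx \tilde{x} = f_N^D\big(f_n^E(x)\big). \tag{9}$$

It is composed of two main parts:

the encoder function $f_n^E: \mathbb{R}^N \to \mathbb{R}^n$, with n ≪ N, mapping the high-dimensional input $x \in \mathbb{R}^N$ onto a low-dimensional code $f_n^E(x) \in \mathbb{R}^n$;

the decoder function $f_N^D: \mathbb{R}^n \to \mathbb{R}^N$, mapping the low-dimensional code to an approximation $\tilde{x} \in \mathbb{R}^N$ of the original high-dimensional input.

More precisely, the reduced nonlinear trial manifold takes the form

$$\tilde{\mathcal{S}}_n = \left\{ f_N^D\big(u_n(t;\mu);\theta_D\big) \;\middle|\; u_n(t;\mu) \in \mathbb{R}^n,\ t \in [0,T),\ \mu \in \mathcal{P} \right\} \subset \mathbb{R}^N, \tag{10}$$

where $f_N^D(\cdot;\theta_D): \mathbb{R}^n \to \mathbb{R}^N$ is the decoder function of a convolutional AE. This latter results from the composition of several layers (some of which are convolutional), depending upon a vector θD collecting all the corresponding weights and biases.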
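As a sanity check on the AE structure, one can restrict the encoder and decoder to linear maps with tied orthonormal weights, f^E(x) = Vᵀx and f^D(z) = Vz. The AE then reduces to the orthogonal projection onto span(V), i.e., to the linear trial manifold of (6); the nonlinear, convolutional decoder used by DL-ROM is what allows the trial manifold to bend beyond such a subspace. The linear maps below are a deliberately simplified stand-in, not the convolutional AE used in the paper.

```python
import numpy as np

def linear_encoder(V, x):
    return V.T @ x   # f_n^E: R^N -> R^n (linear stand-in)

def linear_decoder(V, z):
    return V @ z     # f_N^D: R^n -> R^N (linear stand-in)

rng = np.random.default_rng(1)
V, _ = np.linalg.qr(rng.normal(size=(20, 3)))      # N = 20, n = 3, orthonormal columns
x_on = V @ np.array([1.0, -0.5, 2.0])              # lies on the linear trial manifold
x_off = rng.normal(size=20)                        # generic vector
rec_on = linear_decoder(V, linear_encoder(V, x_on))
rec_off = linear_decoder(V, linear_encoder(V, x_off))
```

Vectors on the subspace are reproduced exactly, while a generic vector is replaced by its orthogonal projection, whose residual is orthogonal to every column of V.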

Hence, the approximation provided by the DL-ROM technique is defined as

$$\tilde{u}(t;\mu) = f_N^D\big(\phi_n^{DF}(t,\mu;\theta_{DF});\theta_D\big). \tag{11}$$

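The encoder function of the convolutional AE can then be exploited to map the FOM solution associated with (t, μ) onto a low-dimensional representation

$$\tilde{u}_n(t;\mu) = f_n^E\big(u(t;\mu);\theta_E\big), \tag{12}$$

where $f_n^E(\cdot;\theta_E): \mathbb{R}^N \to \mathbb{R}^n$ denotes the encoder function, depending upon a vector θE of parameters.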

Computing the DL-ROM approximation of u(t; μtest), for any possible t ∈ (0, T) and $\mu_{test} \in \mathcal{P}$, corresponds to the testing stage of a DFNN and of the decoder function of a convolutional AE; this does not require the evaluation of the encoder function. We remark that our DL-ROM strategy overcomes the three major computational bottlenecks implied by the use of projection-based ROMs, since:

the dimension of the DL-ROM can be kept extremely small;

the time resolution required by the DL-ROM can be chosen to be larger than the one required by the numerical solution of dynamical systems in cardiac electrophysiology;

the DL-ROM can be queried at any desired time instant, without requiring the solution of a dynamical system until that time;

the DL-ROM does not need to account for the dynamics of the gating variables, thus avoiding any hyper-reduction stage. This advantage, already visible when employing a single gating variable as in our case, might become even more significant when dealing with more realistic ionic models, in which dozens of additional variables in the system of ODEs must be accounted for [3].
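Since the testing-stage evaluation only composes the DFNN with the decoder, any time instant, or a whole batch of them, can be queried directly, without time stepping and without the encoder. In the sketch below, both networks are small dense stand-ins with random weights (in particular, the decoder is not convolutional), times are assumed normalized to [0, 1], and N = 4096, n = 3, nμ = 2 mirror Test 1; all names are hypothetical.

```python
import numpy as np

def mlp(params, x):
    """Dense network with tanh hidden layers and a linear output; x may be a batch."""
    for i, (W, b) in enumerate(params):
        x = x @ W + b
        if i < len(params) - 1:
            x = np.tanh(x)
    return x

def random_params(sizes, seed):
    rng = np.random.default_rng(seed)
    return [(rng.normal(scale=1.0 / np.sqrt(m), size=(m, k)), np.zeros(k))
            for m, k in zip(sizes[:-1], sizes[1:])]

theta_df = random_params([3, 16, 3], seed=0)     # phi_n^DF: (t, mu_1, mu_2) -> u_n, n = 3
theta_d = random_params([3, 64, 4096], seed=1)   # dense stand-in for the decoder f_N^D

def dl_rom_query(times, mu):
    """u_tilde(t; mu) = f_N^D(phi_n^DF(t, mu)) for every requested t; no encoder, no time stepping."""
    times = np.atleast_1d(np.asarray(times, dtype=float))
    inputs = np.column_stack([times, np.tile(mu, (len(times), 1))])
    return mlp(theta_d, mlp(theta_df, inputs))   # shape (len(times), N)

u_late = dl_rom_query(0.9, [6.25, 6.25])                          # a single late instant
u_batch = dl_rom_query(np.linspace(0.0, 1.0, 5), [6.25, 6.25])    # five instants at once
```

Evaluating a late instant costs exactly as much as evaluating an early one, which is what allows the DL-ROM to use a coarser time resolution than the FOM.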

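The training stage consists in solving the following optimization problem in the variable θ = (θE, θDF, θD), after the snapshot matrix S has been formed:

$$\min_{\theta} \mathcal{J}(\theta) = \min_{\theta} \frac{1}{N_s}\sum_{i=1}^{N_{train}}\sum_{k=1}^{N_t} \mathcal{L}(t^k,\mu_i;\theta), \tag{13}$$

where Ns = Ntrain Nt and

$$\mathcal{L}(t^k,\mu_i;\theta) = \frac{\omega_h}{2}\left\| u(t^k;\mu_i) - \tilde{u}(t^k;\mu_i;\theta_{DF},\theta_D)\right\|^2 + \frac{1-\omega_h}{2}\left\| \tilde{u}_n(t^k;\mu_i;\theta_E) - u_n(t^k;\mu_i;\theta_{DF})\right\|^2, \tag{14}$$

with ωh ∈ [0, 1]. The per-example loss function (14) combines the reconstruction error (that is, the error between the FOM solution and the DL-ROM approximation) and the error between the intrinsic coordinates and the output of the encoder.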
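A direct NumPy transcription of the per-example loss and of the averaged objective in (13). It assumes that (14) weights the squared reconstruction error by ωh/2 and the squared latent mismatch by (1 − ωh)/2 (the factors 1/2 are a common convention assumed here); during training, these quantities would be computed by the deep learning framework on mini-batches.

```python
import numpy as np

def per_example_loss(u, u_tilde, u_n_enc, u_n, omega_h):
    """omega_h/2 * ||u - u_tilde||^2 + (1 - omega_h)/2 * ||u_n_enc - u_n||^2."""
    u, u_tilde = np.asarray(u, float), np.asarray(u_tilde, float)
    u_n_enc, u_n = np.asarray(u_n_enc, float), np.asarray(u_n, float)
    reconstruction = 0.5 * omega_h * np.sum((u - u_tilde) ** 2)
    latent = 0.5 * (1.0 - omega_h) * np.sum((u_n_enc - u_n) ** 2)
    return reconstruction + latent

def objective(examples, omega_h):
    """Average of the per-example loss over the N_s = N_train * N_t snapshots."""
    return sum(per_example_loss(*ex, omega_h) for ex in examples) / len(examples)
```

Setting ωh = 1 recovers a pure reconstruction loss, whereas smaller values force the DFNN output to agree with the encoder, tying the reduced dynamics to the learned trial manifold.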

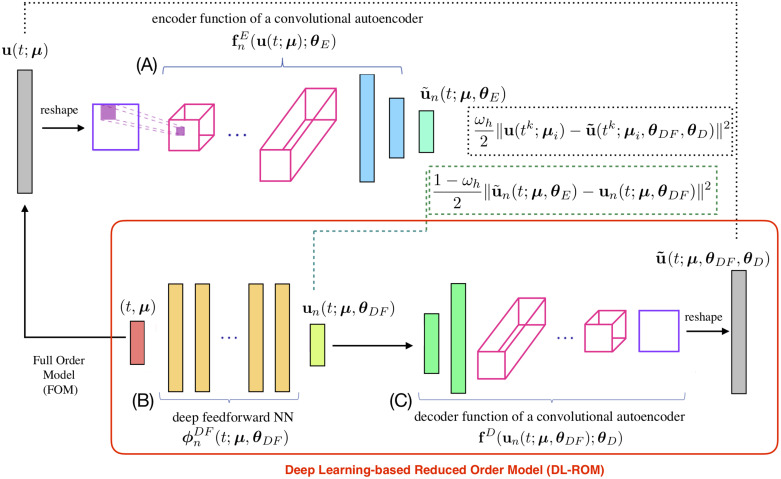

The architecture of DL-ROM is the one shown in Fig 1. The encoder function is used only during the training and validation steps; it is instead discarded during the testing phase. See [26] for further algorithmic details about the training and the testing algorithms required to build and evaluate a DL-ROM.

Fig 1. DL-ROM architecture.

DL-ROM architecture used during the training phase. In the red box, the DL-ROM to be queried for any new selected pair (t, μ) during the testing phase. The FOM solution u(t; μ) is provided as input to block (A), which outputs $\tilde{u}_n(t;\mu)$. The same parameter instance associated with the FOM, i.e., (t; μ), enters block (B), which provides un(t; μ) as output, and the error between the low-dimensional vectors (dashed green box) is accumulated. The intrinsic coordinates un(t; μ) are given as input to block (C), returning the ROM approximation $\tilde{u}(t;\mu)$. Then the reconstruction error (dashed black box) is computed.

We highlight that the DL-ROM technique does not require solving a (reduced) nonlinear dynamical system for the reduced degrees of freedom as in (7); rather, it evaluates a nonlinear map for any given pair (t, μtest), for each t ∈ (0, T). Numerical results are extremely accurate: the mean relative error is below 1% (see, e.g., Test 2), even if the biophysical dynamics underlying Eq (1) […]. Moreover, the map features an extremely low dimension, equal to nμ + 1 in the most favorable scenario. From a computational perspective, remarkable gains and simplifications can be obtained with respect to a linear ROM, since (i) no hyper-reduction is required to speed up the evaluation of any μ- or u-dependent quantity, and (ii) even more interestingly, there is no need to evaluate the dynamics of the recovery variable w if one is only interested in the electrical potential.

Results and discussion

We now assess the computational performance of the proposed DL-ROM strategy on four relevant test cases in cardiac electrophysiology. The numerical tests are chosen to highlight the performance of our DL-ROM method on challenging electrophysiology problems, namely pathological cases in portions of cardiac tissue and physiological scenarios on realistic left ventricle geometries.

The architecture used to perform all the numerical tests is the one reported in the S2 Appendix. To solve the optimization problem (13)-(14), we use the Adam algorithm [47] with an initial learning rate η = 10−4. Moreover, we perform cross-validation by splitting the data into training and validation sets with an 8:2 ratio, and we implement an early-stopping regularization technique to reduce overfitting [44].
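The 8:2 train/validation split and the early-stopping rule can be sketched in plain Python as follows. Applying the split to snapshot indices, the shuffling seed, and measuring patience on the validation loss are assumptions; Test 1 below uses a patience of 500 epochs.

```python
import random

def train_val_split(n_samples, train_frac=0.8, seed=0):
    """Shuffle sample indices and split them train_frac : (1 - train_frac)."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    n_train = int(round(train_frac * n_samples))
    return idx[:n_train], idx[n_train:]

def early_stopping_epoch(val_losses, patience):
    """Return (stop_epoch, best_epoch): training stops once the validation loss
    has not improved for `patience` consecutive epochs."""
    best, best_epoch = float("inf"), -1
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return epoch, best_epoch
    return len(val_losses) - 1, best_epoch

# Test 1: N_train * N_t = 49 * 1000 snapshots, split 8:2.
train_idx, val_idx = train_val_split(49 * 1000)
```

In TensorFlow/Keras, the same behavior is typically obtained with `tf.keras.optimizers.Adam(learning_rate=1e-4)`, the `validation_split` argument of `fit`, and `tf.keras.callbacks.EarlyStopping(monitor="val_loss", patience=500)`.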

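To evaluate the performance of the DL-ROM, we use the loss function (14) and an error indicator defined as

$$\epsilon_{rel} = \frac{1}{N_{test}}\sum_{i=1}^{N_{test}} \frac{\sqrt{\sum_{k=1}^{N_t}\left\| u_k(\mu_{test,i}) - \tilde{u}_k(\mu_{test,i})\right\|^2}}{\sqrt{\sum_{k=1}^{N_t}\left\| u_k(\mu_{test,i})\right\|^2}}, \tag{15}$$

where $u_k(\mu) = u(t^k;\mu)$ and $\tilde{u}_k(\mu) = \tilde{u}(t^k;\mu)$.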
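A NumPy sketch of the error indicator, assuming that (15) averages over the Ntest testing parameters the relative error, in the Euclidean norm, accumulated over all Nt time instants. The array layout (Ntest, Nt, N) and the function name are assumptions.

```python
import numpy as np

def relative_error_indicator(U_fom, U_rom):
    """U_fom, U_rom: arrays of shape (N_test, N_t, N); returns the mean relative error."""
    num = np.sqrt(np.sum((U_fom - U_rom) ** 2, axis=(1, 2)))
    den = np.sqrt(np.sum(U_fom ** 2, axis=(1, 2)))
    return float(np.mean(num / den))

U = np.ones((2, 3, 4))   # toy FOM data: 2 test parameters, 3 instants, 4 nodes
```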

The neural networks required by our DL-ROM technique have been implemented in the TensorFlow deep learning framework [48]. The training phase has been carried out on a workstation equipped with an Nvidia GeForce GTX 1070 8 GB GPU, while the testing phase has also been carried out on an HPC cluster.

Test 1: Two-dimensional slab with ischemic region

We consider the computation of the transmembrane potential in a square slab Ω = (0, 10 cm)² of cardiac tissue in the presence of an ischemic (non-conductive) region. The ischemic region may act as an anatomical driver of cardiac arrhythmias such as tachycardia and fibrillation. The system we want to solve is a slight modification of Eq (1), accounting for the presence of a non-conductive region that affects both the conductivity tensor and the ionic current term. The ischemic portion of the domain is modeled by replacing the conductivity tensor D(x), defined in (2), with σ(x, μ)D(x), where the function σ(x, μ) is given by

| (16) |

In this case, we consider nμ = 2 parameters, representing the coordinates of the center of the scar, which belong to the parameter space $\mathcal{P} = [3.5, 6.5]^2$ cm. Moreover, α = 7 cm², σ0 = 10−4, the transversal and longitudinal conductivities are σt = 12.9 ⋅ 0.1 cm²/ms and σl = 12.9 ⋅ 0.2 cm²/ms, respectively, and f0 = (1, 0)T, meaning that the tissue fibers are parallel to the x-axis. Similarly, the ionic current Iion(u, w) in (1) is replaced by σ(x, μ)Iion(u, w). The applied current takes the form

where C = 100 mA, β = 0.02 cm² and … ms, i.e., a Gaussian-shaped applied stimulus with support in a circle of radius approximately 3 cm. The parameters appearing in (3) are set to K = 8, a = 0.01, b = 0.15, ε0 = 0.002, c1 = 0.2, and c2 = 0.3; see [49]. The equations have been discretized in space through linear finite elements with N = 64 × 64 = 4096 grid points. For the time discretization and the treatment of the nonlinear terms, we use a one-step, semi-implicit, first-order scheme (see [25] for further details) with time step Δt = 0.1/12.9 over (0, T), with T = 400 ms.

For the training phase, we uniformly sample Nt = 1000 time instants over (0, T) and consider Ntrain = 49 training-parameter instances, with μtrain = (3.5 + 0.5i, 3.5 + 0.5j), i, j = 0, …, 6. The maximum number of epochs is set equal to Nepochs = 10000 and the batch size is Nb = 40; as early-stopping criterion, we stop the training if the loss function does not decrease over 500 epochs. For the testing phase, Ntest = 36 testing-parameter instances μtest = (3.75 + 0.5i, 3.75 + 0.5j), i, j = 0, …, 5, have been considered.
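The parameter grids and the temporal subsampling of Test 1 can be reproduced as follows. Retaining every fourth FOM time step, starting from the first, is an assumption about how the Nt = 1000 instants are picked out of the T/Δt = 4000 time steps (the 25% mentioned in the discussion of Fig 5).

```python
# Training grid: 7 x 7 points with spacing 0.5 cm on [3.5, 6.5]^2.
mu_train = [(3.5 + 0.5 * i, 3.5 + 0.5 * j) for i in range(7) for j in range(7)]
# Testing grid: the 6 x 6 cell midpoints of the training grid.
mu_test = [(3.75 + 0.5 * i, 3.75 + 0.5 * j) for i in range(6) for j in range(6)]

n_fom_steps = 4000            # T / dt time steps of the FOM
n_t = 1000                    # snapshots retained per training parameter
stride = n_fom_steps // n_t   # keep one FOM step out of `stride` (assumed uniform)
kept_steps = list(range(0, n_fom_steps, stride))
```

Every testing parameter thus lies at the center of a training cell, i.e., as far as possible from the training data within the grid.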

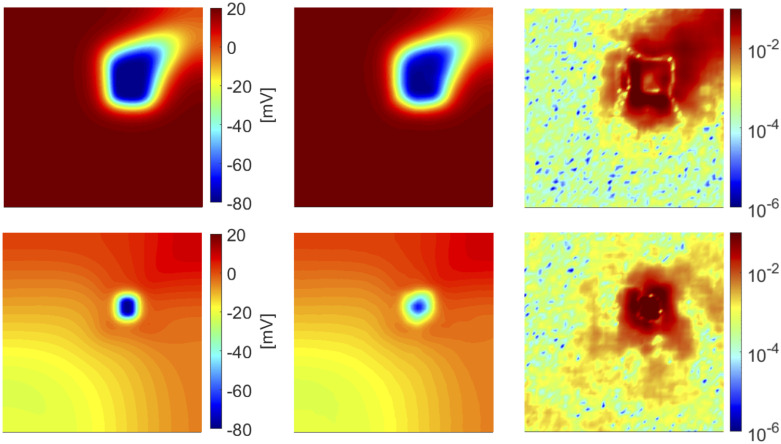

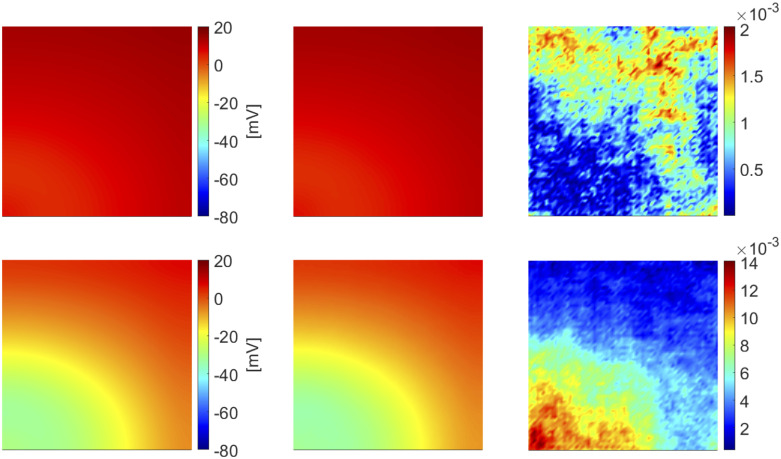

In Fig 2 we show the FOM and the DL-ROM solutions, the latter obtained with n = 3, for the testing-parameter instance μtest = (6.25, 6.25) cm at two time instants (t = … ms and t = 356 ms), together with the relative error ϵk = ϵk(μtest), for k = 1, …, Nt, defined as

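$$\epsilon_k(\mu_{test}) = \frac{\left| u_k(\mu_{test}) - \tilde{u}_k(\mu_{test}) \right|}{\sqrt{\dfrac{1}{N_t}\sum_{j=1}^{N_t}\left\| u_j(\mu_{test})\right\|^2}}. \tag{17}$$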

While (15) is a synthetic indicator, the quantity defined in (17) is a function of the spatial variable. In Fig 2 (top), the tissue is depolarized except for the scar and the region surrounding it, which is clearly characterized by slower conduction. In Fig 2 (bottom), the tissue is starting to repolarize and, even if the shape of the ischemic region is not sharply reproduced, the DL-ROM solution is able to capture the diseased (non-conductive) nature of this portion of tissue.
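A sketch of the pointwise error, assuming that (17) normalizes the absolute nodal error at time t^k by the root mean square, over time, of the Euclidean norm of the FOM solution, so that the result is a field over the N mesh nodes for each k. The function name and array layout are hypothetical.

```python
import numpy as np

def pointwise_error(U_fom, U_rom):
    """U_fom, U_rom: arrays of shape (N_t, N) for one testing parameter.
    Returns eps_k at every node, shape (N_t, N), with the assumed normalization."""
    scale = np.sqrt(np.mean(np.sum(U_fom ** 2, axis=1)))
    return np.abs(U_fom - U_rom) / scale

U_fom = np.ones((5, 4))                       # toy data: 5 instants, 4 nodes
eps = pointwise_error(U_fom, U_fom - 0.2)
```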

Fig 2. Test 1: Comparison between FOM and DL-ROM solutions for a testing-parameter instance.

FOM solution (left), DL-ROM solution with n = 3 (center) and relative error ϵk (right) for the testing-parameter instance μtest = (6.25, 6.25) cm at t = … ms (top) and t = 356 ms (bottom). The maximum of the relative error ϵk is 10−3, and it is associated with the diseased tissue.

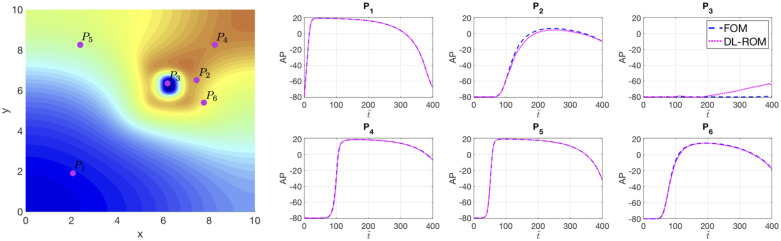

In Fig 3 we compare the FOM and DL-ROM APs at six points P1, …, P6. The DL-ROM is able to provide an accurate reconstruction of the AP at almost all points; the maximum error is associated with point P3, the closest one to the center of the scar, for t = … ms. However, even in this case, the DL-ROM technique is able to capture the difference, in terms of AP peak values, between the diseased and the healthy tissue.

Fig 3. Test 1: Comparison between the FOM and DL-ROM APs at six points P1, …, P6.

Left: FOM solution evaluated for μtest = (6.25, 6.25) cm at t = … ms, together with the points P1, …, P6. Right: APs evaluated for μtest = (6.25, 6.25) cm at points P1, …, P6. The DL-ROM, with n = 3, is able to sharply reconstruct the AP at almost all points, and the main features are also captured at the points close to the scar.

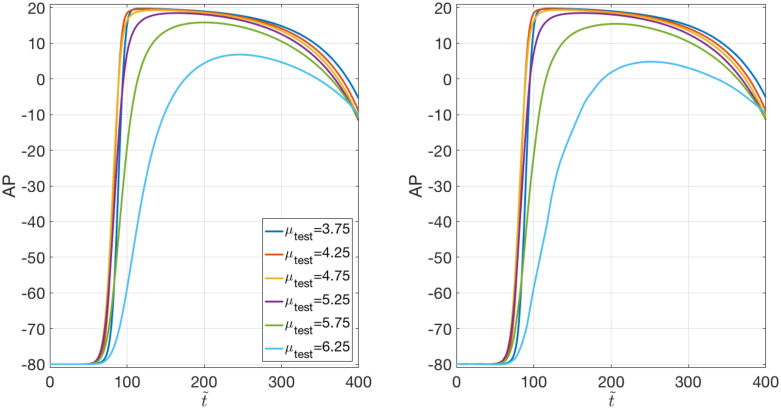

The AP variability across the parameter space characterizing both the FOM and the DL-ROM solutions is shown in Fig 4. Still with a DL-ROM dimension n = 3, we report the APs for $\mu_{test} = (\bar{\mu}, \bar{\mu})$ cm, with $\bar{\mu}$ = 3.75, 4.25, 4.75, 5.25, 5.75, 6.25, evaluated at P = (7.46, 6.51) cm. The DL-ROM is able to capture such variability over $\mathcal{P}$; moreover, the larger $\bar{\mu}$, the smaller the distance between the point P and the scar, and their proximity affects the shape and the values of the AP. In particular, for $\bar{\mu}$ = 6.25, the point P falls into the grey zone.

Fig 4. Test 1: Variability of the FOM and DL-ROM solutions over the parameter space.

FOM (right) and DL-ROM (left) AP variability over $\mathcal{P}$ at P = (7.46, 6.51) cm. The DL-ROM sharply reconstructs the FOM variability over $\mathcal{P}$.

By using the DL-ROM technique and setting the dimension of the nonlinear trial manifold equal to the dimension of the solution manifold, i.e., n = 3, we obtain an error indicator (15) of ϵrel = 2.01 ⋅ 10−2. In order to assess the computational efficiency of DL-ROM, we compare it with the POD-Galerkin ROM relying on Nc local reduced bases; in Table 1 we report the maximum and minimum number of basis functions, among all the clusters, required by the POD-Galerkin ROM [24, 25] to achieve the same accuracy.

Table 1. Test 1: Dimensions of the POD-Galerkin ROM linear trial manifolds by varying the number of clusters.

| | Nc = 1 | Nc = 2 | Nc = 4 | Nc = 6 |
|---|---|---|---|---|
| max | 250 | 219 | 200 | 193 |
| min | 250 | 107 | 35 | 26 |

Maximum and minimum dimensions of the local reduced bases (that is, linear trial manifolds) built by the POD-Galerkin ROM for different numbers Nc of clusters.

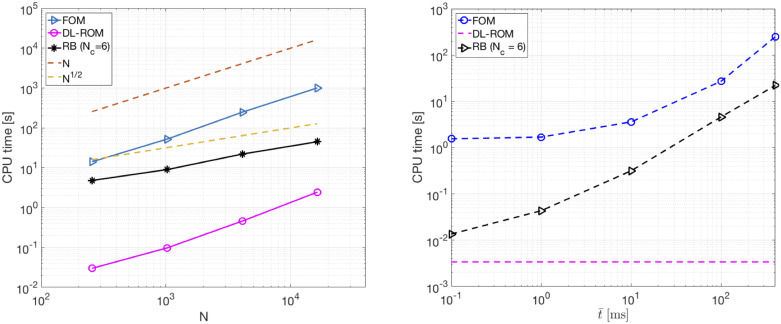

In Fig 5 (left) we compare the CPU time required to solve the FOM (through linear finite elements) over the time interval (0, T) with the one needed, at testing time, by the DL-ROM with n = 3 and by the POD-Galerkin ROM with Nc = 6 local reduced bases, for varying FOM dimension N. Here, for both the DL-ROM and the POD-Galerkin ROM, testing time refers to the time needed to query the ROM over the whole interval (0, T) for a given testing-parameter instance, using for each technique the appropriate time resolution. Since the DL-ROM solution can be queried at a given time without solving a dynamical system to recover the previous time instants, the DL-ROM can employ larger time steps than those required by the FOM and POD-Galerkin ROM dynamical systems in the cases at hand. This also has a positive impact on the data used during the training phase. Indeed, to build the snapshot matrix, we uniformly sample Nt time instants of the FOM solution over T/Δt = 4000 time steps; for each training-parameter instance, only 25% of the 4000 snapshots (i.e., Nt = 1000) are retained from the FOM solution in the DL-ROM case, against all 4000 snapshots in the POD-Galerkin ROM case. The speed-up obtained for each value of N is reported in Table 2. Both the DL-ROM and the POD-Galerkin ROM reduce the computational cost of computing the FOM solution for a testing-parameter instance. However, for a desired level of accuracy, the CPU times required by the POD-Galerkin ROM during the testing phase are remarkably higher than those required by a DL-ROM with n = 3.

Fig 5. Test 1: FOM, DL-ROM and POD-Galerkin ROM CPU computational times.

Left: CPU time required to solve the FOM, by the DL-ROM at testing time with n = 3, and by the POD-Galerkin ROM at testing time with Nc = 6, vs. N. The DL-ROM provides the smallest testing computational time for each N considered. Right: FOM, POD-Galerkin ROM and DL-ROM CPU computational times to compute … vs. …, averaged over the testing set. Since the DL-ROM can be queried at any time instant, it is extremely efficient in computing … with respect to both the FOM and the POD-Galerkin ROM.

Table 2. Test 1: DL-ROM and POD-Galerkin ROM vs. FOM speed-up.

| | N = 256 | N = 1024 | N = 4096 | N = 16384 |
|---|---|---|---|---|
| FOM vs. DL-ROM | 472 | 536 | 539 | 412 |
| FOM vs. POD-Galerkin ROM | 3 | 6 | 12 | 22 |

DL-ROM and POD-Galerkin ROM vs. FOM speed-up by varying N. The DL-ROM speed-up is remarkably higher than the one obtained by using the POD-Galerkin ROM.

Both the DL-ROM and the POD-Galerkin ROM depend on the FOM dimension N. In the case of the DL-ROM, the dependence on N at testing time, for a fixed value of Δt, is due to the decoder function. Indeed, learning the reduced dynamics (and hence the dimension of the nonlinear trial manifold) does not depend on the FOM dimension. On the other hand, for the POD-Galerkin ROM the FOM dimension also affects the dimension of the local linear trial manifolds: in general, as N increases, the dimension of each local linear subspace also increases. Referring to Fig 5 (left) and Table 2, the CPU time required by the DL-ROM at testing time scales linearly with N, whereas the one required by the POD-Galerkin ROM grows sublinearly with N. In particular, even for the largest FOM dimension considered (N = 16384 for this test case), our DL-ROM is 19 times faster than the POD-Galerkin ROM. We could not run simulations for N > 16384 because of the limited computing resources at our disposal.

Although the trend in Fig 5 (left) appears unfavorable for the DL-ROM technique, in practice the CPU time of the DL-ROM remains smaller than that of the POD-Galerkin ROM unless N is very large. Only for very large values of N would the POD-Galerkin ROM outperform the DL-ROM strategy. Indeed, a linear fitting of the DL-ROM and the POD-Galerkin ROM CPU times in Fig 5 (left) indicates that, for N = 65536 and N = 262144, the DL-ROM could be almost 10 and 5 times faster, respectively, than the POD-Galerkin ROM. (For this test case, N = 65536 and N = 262144 represent FOM dimensions corresponding to the mesh sizes h needed to solve, by means of linear finite elements, the problem on a 3D slab geometry for both physiological and pathological electrophysiology when a ten Tusscher-Panfilov ionic model [50] is used. This model would indeed require smaller values of h than the Aliev-Panfilov model, due to the shape of the AP; see, e.g., [51, 52] for further details.)

Note that the results of this section have been obtained by running the DL-ROM on a single CPU, an architecture which is not favorable to neural networks: all tests are performed on a node (20 Intel® Xeon® E5-2640 v4 2.4GHz cores) of our in-house HPC cluster, using 5 cores. Further improvements are expected when evaluating our DL-ROM on a GPU for a given testing-parameter instance.
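As a rough check of the scaling argument, the speed-ups in Table 2 can be post-processed directly:

- both rows are relative to the same FOM time, so their ratio is the DL-ROM vs. POD-Galerkin ROM speed-up;
- the slope of log(speed-up) against log N indicates how the cost of each ROM grows relative to the FOM.

This minimal NumPy sketch uses only the tabulated values. Reading the slopes as absolute CPU-time scaling would additionally require an assumption on how the FOM time itself grows with N.

```python
import numpy as np

# Speed-ups with respect to the FOM, copied from Table 2.
N = np.array([256, 1024, 4096, 16384])
speedup_dl = np.array([472, 536, 539, 412])
speedup_pod = np.array([3, 6, 12, 22])

# Both speed-ups share the FOM time, so their ratio is the DL-ROM vs. POD-Galerkin speed-up.
dl_vs_pod = speedup_dl / speedup_pod

# Slope of log(speed-up) vs. log(N): a slope near 0 means the ROM cost grows like the
# FOM cost; a positive slope means the ROM cost grows more slowly than the FOM cost.
slope_dl = np.polyfit(np.log(N), np.log(speedup_dl), 1)[0]
slope_pod = np.polyfit(np.log(N), np.log(speedup_pod), 1)[0]

for n, r in zip(N, dl_vs_pod):
    print(f"N = {n:6d}: DL-ROM is {r:6.1f}x faster than the POD-Galerkin ROM")
print(f"log-log slope of the speed-up: DL-ROM {slope_dl:.2f}, POD-Galerkin {slope_pod:.2f}")
```

The last ratio, 412/22 ≈ 18.7, is the factor 19 quoted above. The DL-ROM slope is close to zero, while the POD-Galerkin slope is close to 0.5. In other words, the POD-Galerkin ROM cost grows more slowly with N than the FOM and DL-ROM costs do.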

We highlight that, since the DL-ROM solution can be evaluated at any desired time instance without solving any dynamical system, the computational time entailed by the DL-ROM at testing time is drastically reduced compared to the one required by the FOM or the POD-Galerkin ROM to compute the solution at a particular time instance. In Fig 5 (right) we show the DL-ROM, FOM and POD-Galerkin ROM CPU times needed to compute the approximated solution at a given time instance. We consider four time instances, the last three being 10, 100 and 400 ms, average over the testing set, and set N = 4096. We perform the training phase of the POD-Galerkin ROM over the original time interval (0, T) and report the results for Nc = 6, the number of clusters yielding the smallest computational time. The DL-ROM CPU time does not depend on the selected time instance. At one of the time instances considered, the DL-ROM speed-ups are equal to 7.3 × 10⁴ and 6.5 × 10³ with respect to the FOM and the POD-Galerkin ROM (with Nc = 6) computational times, respectively.

Regarding the training (offline) times, for a FOM dimension N = 4096, training the DL-ROM neural network on a GTX 1070 8GB GPU requires about 21 hours, whereas training the POD-Galerkin ROM (with Nc = 6 local bases) on 5 cores of a node of the HPC cluster at our disposal requires about 3 hours. In both cases, the time needed to assemble the snapshot matrix S is not included. The 7 times higher training time of the DL-ROM is justified by the efficiency gained at testing time: a query to the DL-ROM online requires 0.08 seconds on a GPU, implying a speed-up of about 275 times compared to the POD-Galerkin ROM.

Test 2: Two-dimensional slab with figure of eight re-entry

The most recognized cellular mechanism sustaining atrial tachycardia is re-entry [53]. The particular kind of re-entry we deal with in this test case is called figure of eight re-entry, and can be obtained by solving Eq (1). To induce the re-entry, we apply a classical S1-S2 protocol [3, 54]. In particular, we consider a square slab of cardiac tissue Ω = (0, 2 cm)² and apply an initial stimulus (S1) at the bottom edge of the domain, i.e.

| (18) |

where Ω1 = {x ∈ Ω: y ≤ 0.1}, ms and ms. A second stimulus (S2) of the form

| (19) |

with Ω2(μ) = {x ∈ Ω: (x − 1)² + (y − μ)² ≤ (0.2)²}, ms and ms, is then applied. Here the parameter μ is the y-coordinate of the center of the second, circular stimulus. We analyze two configurations:

- (i) a first case in which both re-entry and non re-entry cases are generated, by considering cm;
- (ii) a second case in which only re-entrant dynamics are taken into account, and cm.

These choices have been made to obtain a re-entry that is elicited and sustained until T = 175 ms. Moreover, we restrict ourselves to the time interval [95, 175] ms. We discard the time window [0, 95) ms, in which the re-entry has not arisen yet and which is common to all μ instances. The time step is Δt = 0.2/12.9. We consider N = 256 × 256 = 65536 grid points, implying a mesh size h = 0.0784 mm; this mesh size is known to correctly resolve the thin transition front developing during depolarization of the tissue, see [51, 52]. The fibers are parallel to the x-axis, and the conductivities in the longitudinal and transversal directions to the fibers are σl = 2 × 10⁻³ cm²/ms and σt = 3.1 × 10⁻⁴ cm²/ms, respectively. The parameters appearing in (3) are set to K = 8, a = 0.1, b = 0.1, ε0 = 0.01, c1 = 0.14, and c2 = 0.3, see [55].
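The stimulus regions of the S1-S2 protocol on the 256 × 256 grid can be built with a minimal NumPy sketch, assuming a uniform vertex grid on Ω = (0, 2 cm)² with lengths in cm. The masks follow the definitions of Ω1 and Ω2(μ) given above. By contrast, `applied_current` is a hypothetical box-in-time stimulus: its amplitude and activation window must be supplied by the user, since they are not reproduced here. The mesh size it returns, h = 2/255 cm ≈ 0.0784 mm, agrees with the value quoted above.

```python
import numpy as np


def slab_grid(n_side: int = 256, length: float = 2.0):
    """Uniform n_side x n_side vertex grid on (0, length)^2, lengths in cm.

    Returns X, Y (shape n_side x n_side, rows indexed by y) and the mesh size h.
    """
    s = np.linspace(0.0, length, n_side)
    X, Y = np.meshgrid(s, s, indexing="xy")
    return X, Y, s[1] - s[0]


def s1_region(X, Y):
    """Omega_1 = {x in Omega : y <= 0.1}: a strip along the bottom edge."""
    return Y <= 0.1


def s2_region(X, Y, mu: float, radius: float = 0.2):
    """Omega_2(mu) = {x in Omega : (x - 1)^2 + (y - mu)^2 <= 0.2^2}: a disc centred at (1, mu)."""
    return (X - 1.0) ** 2 + (Y - mu) ** 2 <= radius ** 2


def applied_current(mask, t: float, amplitude: float, t_on: float, t_off: float):
    """Hypothetical box-shaped stimulus: `amplitude` on `mask` for t_on <= t <= t_off, else 0."""
    active = t_on <= t <= t_off
    return amplitude * mask.astype(float) * float(active)


X, Y, h = slab_grid()
print(f"h = {10 * h:.4f} mm")  # 2 cm split into 255 intervals
s2 = s2_region(X, Y, mu=0.9625)
print(s1_region(X, Y).sum(), s2.sum())
```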

The snapshot matrix is built by solving problem (1), completed with the applied currents (18) and (19), by means of a semi-implicit scheme, over Nt = 400 time instances. Moreover, we consider Ntrain = 13 training-parameter instances uniformly distributed in the parameter space and Ntest = 12 testing-parameter instances, each of them corresponding to the midpoint of two consecutive training-parameter instances. The maximum number of epochs is set equal to Nepochs = 6000. The batch size is set to Nb = 3, due to the high GPU memory occupation of each sample. Regarding the early-stopping criterion, we stop the training if the loss does not decrease over 1000 consecutive epochs.
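The stopping rule above is the usual patience-based early stopping: train for at most Nepochs epochs, and stop once the monitored loss has not decreased for a given number of consecutive epochs. The following framework-agnostic sketch is not the training loop of the DL-ROM code. It assumes a hypothetical user-supplied callable `run_epoch` that trains for one epoch and returns the monitored loss. The text does not specify here whether this is the training or the validation loss; either can be passed.

```python
import math


def train_with_early_stopping(run_epoch, max_epochs: int = 6000, patience: int = 1000):
    """Stop when the monitored loss has not decreased for `patience` consecutive
    epochs, or after `max_epochs` epochs, whichever comes first.

    run_epoch(epoch) is a hypothetical callable that trains for one epoch and
    returns the loss to monitor. Returns (best_loss, best_epoch, epochs_run).
    """
    best_loss, best_epoch = math.inf, -1
    epochs_run = 0
    for epoch in range(max_epochs):
        loss = run_epoch(epoch)
        epochs_run = epoch + 1
        if loss < best_loss:
            # New best value: reset the patience window.
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            # `patience` epochs have passed without any improvement.
            break
    return best_loss, best_epoch, epochs_run


# Toy usage: a loss that stops improving after epoch 10.
print(train_with_early_stopping(lambda e: max(10 - e, 0), max_epochs=6000, patience=5))
```

With the settings of this test case, the call would be `train_with_early_stopping(run_epoch, max_epochs=6000, patience=1000)`. Tests 3 and 4 would instead use (30000, 4000) and (10000, 500).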

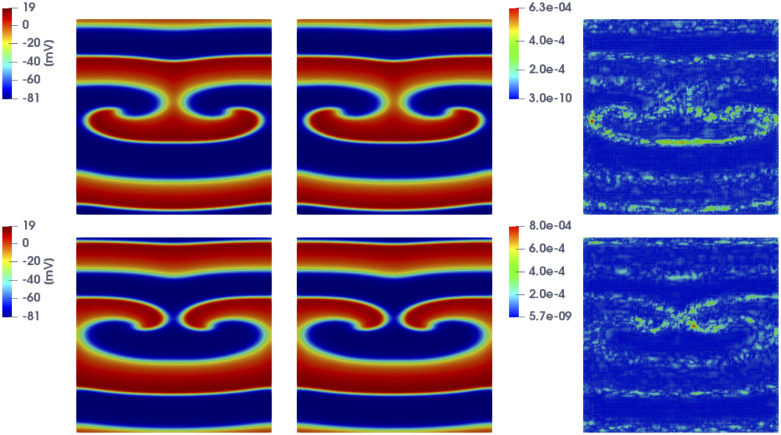

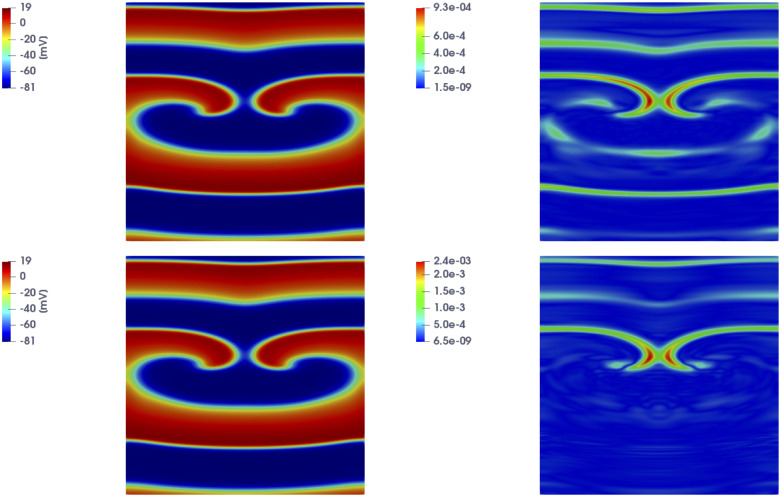

In Fig 6 we show the FOM solution and the DL-ROM one obtained by setting the reduced dimension to n = 5, for the testing-parameter instance μtest = 0.9625 cm, at ms and ms, together with the relative error computed according to (17).

Fig 6. Test 2: Comparison between FOM and DL-ROM solutions for a testing-parameter instance.

FOM solution (left), DL-ROM one (center) with n = 5, and relative error ϵk (right) at ms (top) and ms (bottom), for the testing-parameter instance μtest = 0.9625 cm. The relative error ϵk is below 0.1% at both time instants.

We introduce the relative error , for k = 1, …, Nt, given by

| (20) |

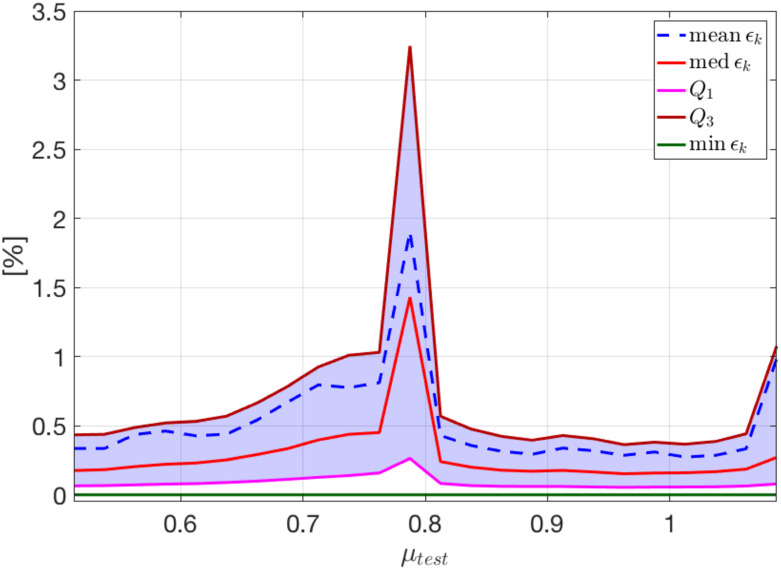

The trend of (20) over time, for the selected testing-parameter instance μtest = 0.9625 cm, is depicted in Fig 7; we highlight that the mean error is always smaller than 0.3%. In particular, in Fig 7 we show the mean, the median, and the first and third quartiles (all computed with respect to the spatial coordinates) of the relative error, as well as its minimum. The interquartile range (IQR) shows that the distribution of the error is almost uniform over time. It also shows that the maximum error is associated with the first time instant, which is the time instant at which the solution varies the most over the parameter space.
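The statistics plotted in Figs 7 and 9 (mean, median, quartiles, IQR, extrema over the spatial coordinates) can be computed from a pointwise error field as sketched below. The error definitions (17) and (20) are not reproduced in this section. `pointwise_relative_error` is therefore a hypothetical stand-in that normalizes by the largest FOM value at each time instance; replace it with the actual definition. The rows of the error array can index time instances (as in Fig 7) or testing-parameter instances at a fixed time (as in Fig 9).

```python
import numpy as np


def pointwise_relative_error(u_fom, u_rom):
    """Hypothetical pointwise relative error, NOT necessarily definition (17)/(20):
    |u_fom - u_rom| divided by max |u_fom| over space, row by row.

    u_fom, u_rom: arrays of shape (n_rows, N), one row per time (or parameter) instance.
    """
    scale = np.max(np.abs(u_fom), axis=1, keepdims=True)
    return np.abs(u_fom - u_rom) / np.maximum(scale, np.finfo(float).tiny)


def spatial_error_statistics(err):
    """Summary over the spatial coordinates (axis 1) for each row of `err`."""
    q1, median, q3 = np.percentile(err, [25, 50, 75], axis=1)
    return {
        "mean": err.mean(axis=1),
        "median": median,
        "q1": q1,
        "q3": q3,
        "iqr": q3 - q1,
        "min": err.min(axis=1),
        "max": err.max(axis=1),
    }
```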

Fig 7. Test 2: Trend of the relative error over time.

Relative error vs. time with n = 5 for the testing-parameter instance μtest = 0.9625 cm (the red band indicates the IQR). The error distribution is almost uniform over time.

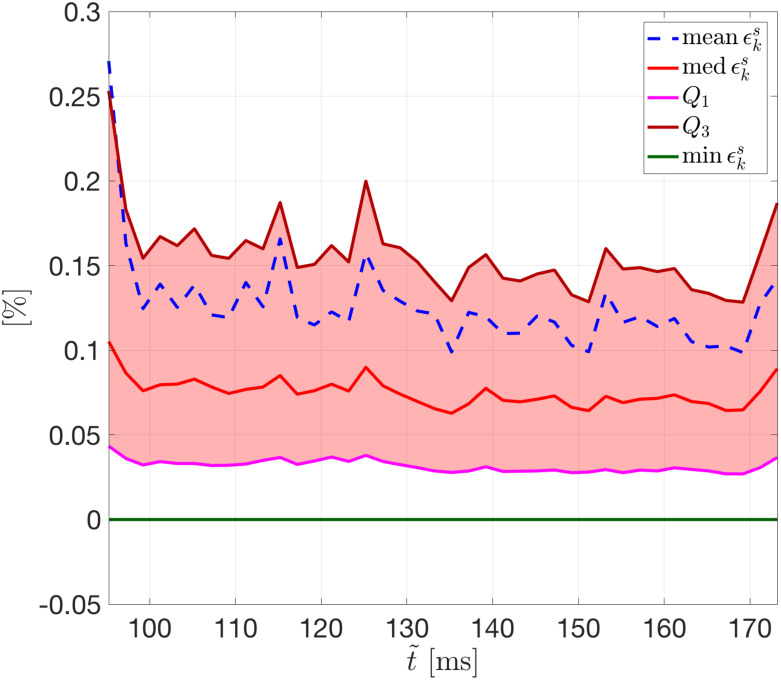

In Fig 8 we show the FOM and the DL-ROM solutions, the latter obtained by setting n = 5, for the last time instance, i.e. at ms, for μtest = 0.6125 cm and μtest = 0.9125 cm. This points out the variability of the solution over the parameter space cm and the ability of the DL-ROM to capture it. In particular, in Fig 8 we compare the FOM and the DL-ROM solutions for two testing-parameter instances, corresponding to:

- (i) a case in which the re-entry does not arise (top), since S2 is too far from the front elicited by S1, i.e. the tissue around S2 is no longer in the refractory period and is able to activate again;
- (ii) a case in which the re-entry is elicited (bottom): the electrical signal follows an alternative circuit, looping back upon itself and developing a self-perpetuating, rapid and abnormal activation.

Fig 8. Test 2: Comparison between FOM and DL-ROM solutions for different testing-parameter instances.

FOM solution (left), DL-ROM one (center) with n = 5, and relative error ϵk (right) at ms, for the testing-parameter instance μtest = 0.6125 cm (top) and μtest = 0.9125 cm (bottom). In both cases the relative error ϵk is below 1%.

In Fig 9 we show the trend of the relative error (20) over the parameter space at the time instance t = 147 ms. We report the mean, the median, the first and third quartiles, as well as the minimum (all computed with respect to the spatial coordinates). We highlight that the error is always smaller than 1%, except for its maximum, which is associated with the value of μtest corresponding to the transition between re-entry and non re-entry dynamics.

Fig 9. Test 2: Trend of the relative error over the parameter space.

Relative error vs. μtest with n = 5 at the time instance t = 147 ms (the violet band indicates the IQR). The maximum error is associated with μtest = 0.7875 cm, the testing-parameter instance between μtrain = 0.775 cm (the last value for which re-entry does not arise) and μtrain = 0.8 cm (the first value for which re-entry is elicited).

Let us now focus on the case in which only re-entrant dynamics are generated, and cm, in order to compare the FOM, the POD-Galerkin ROM and the DL-ROM approximations. In Fig 10 we show the solutions obtained through the POD-Galerkin ROM with Nc = 2 (top) and Nc = 4 (bottom) local reduced bases, along with the relative error defined in (17), for the testing-parameter instance μtest = 0.9625 cm at ms. In both cases, we have considered the setting yielding the most efficient POD-Galerkin ROM approximation, which requires about 30 (40, respectively) seconds to be evaluated. By comparing Figs 10 and 6 (bottom), we observe that the DL-ROM outperforms the POD-Galerkin ROM in terms of accuracy.

Fig 10. Test 2: POD-Galerkin ROM solutions for different testing-parameter instances.

POD-Galerkin ROM solution (left) and relative error ϵk (right) for Nc = 2 (top) and Nc = 4 (bottom) at ms, for μtest = 0.9625 cm.

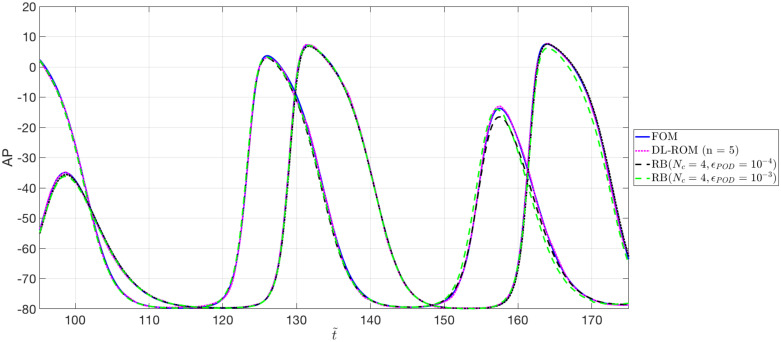

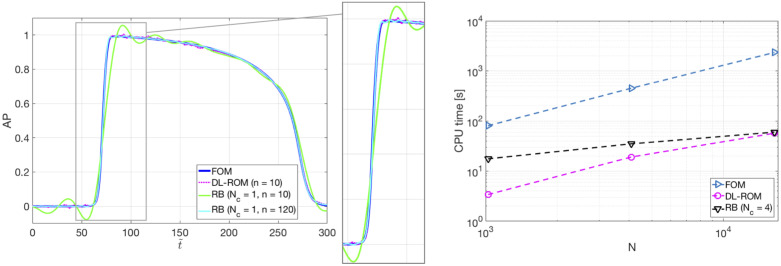

In Fig 11 we show the action potentials obtained through the FOM, the DL-ROM and the POD-Galerkin ROM (with Nc = 4 local reduced bases), for the testing-parameter instance μtest = 0.9625 cm, evaluated at P1 = (0.64, 1.11) cm and P2 = (0.69, 1.03) cm. These two points are close to the left core of the figure of eight re-entry. There, due to the meandering of the cores, the action potential duration is shorter and the AP peak values are lower than in a healthy case. The AP dynamics at those points are accurately captured by the DL-ROM. The POD-Galerkin ROM, instead, yields slightly less accurate results and requires larger testing times.

Fig 11. Test 2: FOM, DL-ROM and POD-Galerkin ROM APs at P1 and P2.

AP obtained through the FOM, the DL-ROM and the POD-Galerkin ROM with Nc = 4, for the testing-parameter instance μtest = 0.9625 cm, at P1 = (0.64, 1.11) cm and P2 = (0.69, 1.03) cm. The POD-Galerkin ROM approximations are obtained by imposing a POD tolerance εPOD = 10⁻⁴ and 10⁻³, resulting in values of the error indicator (15) equal to 5.5 × 10⁻³ and 7.6 × 10⁻³, respectively.

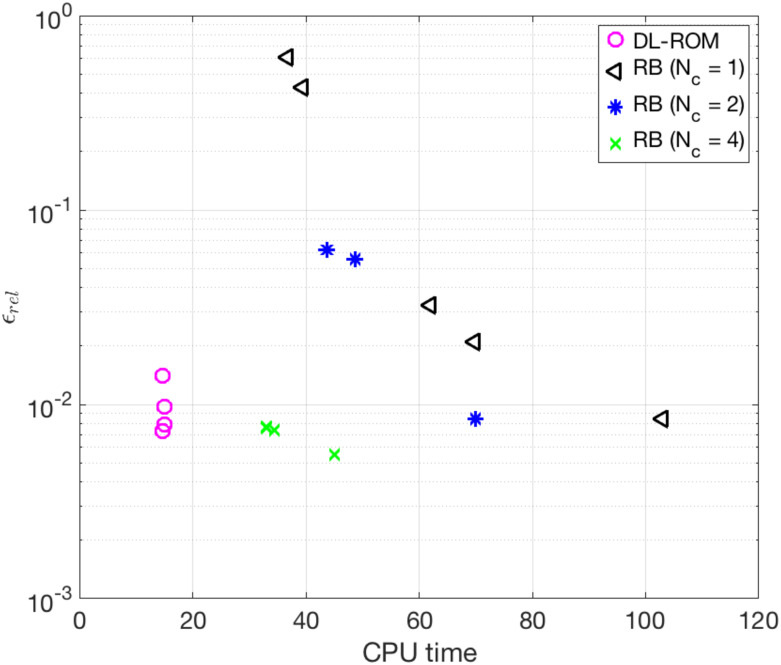

We now compare the computational times required by the FOM, the POD-Galerkin ROM (for different values of Nc) and the DL-ROM. For all of them we keep the same degree of accuracy achieved by the DL-ROM, i.e. ϵrel = 7.87 × 10⁻³, and we run the code on the hardware each implementation is optimized for: a CPU for the FOM and the POD-Galerkin ROM, and a GPU for the DL-ROM. Indeed, at each layer of a neural network thousands of identical computations must be performed. The most suitable hardware architectures for this kind of operations are GPUs, because (i) they have more computational units (cores) and (ii) they have a higher memory bandwidth. Moreover, in applications requiring image processing, such as CNNs, graphics-specific capabilities can be further exploited to speed up calculations.

In Table 3 we report the CPU time needed to compute the FOM solution and the POD-Galerkin ROM approximation (online, at testing phase), both on a full 64 GB node (20 Intel® Xeon® E5-2640 v4 2.4GHz cores). We also report the GPU time required by the DL-ROM, with dimension n = 5, to compute 875 time instances at testing time on an Nvidia GeForce GTX 1070 8 GB GPU; this is the same number of time instants considered in the solution of the dynamical systems associated with the FOM and the POD-Galerkin ROM. For the sake of completeness, we also report the computational time required by the DL-ROM when employing a single CPU node.

It is evident that a POD-Galerkin ROM built on a global reduced basis (Nc = 1) is not amenable to a complex and challenging pathological cardiac electrophysiology problem like the figure of eight re-entry. Using a nonlinear approach, in which the solution manifold is approximated through a piecewise linear trial manifold (as in the case of Nc = 2 or Nc = 4 local reduced bases), reduces the online computational time. However, the DL-ROM still provides a more efficient ROM than the POD-Galerkin ROM with Nc = 2 (or Nc = 4) local reduced bases: it is almost 5 (or 2) times faster on the CPU, and 39 (or 19) times faster on the GPU.

Table 3. Test 2: FOM, POD-Galerkin ROM and DL-ROM computational times.

| | Time [s] | FOM/ROM dimensions |
|---|---|---|
| FOM (CPU) | 382 | N = 65536 |
| DL-ROM (CPU/GPU) | 15/1.2 | n = 5 |
| POD-Galerkin ROM Nc = 1 (CPU) | 103 | n = 1538 |
| POD-Galerkin ROM Nc = 2 (CPU) | 70 | n = 1158, 751 |
| POD-Galerkin ROM Nc = 4 (CPU) | 33 | n = 435, 365, 298, 45 |

POD-Galerkin ROM and DL-ROM computational times along with FOM and reduced trial manifold(s) dimensions. DL-ROM provides a more efficient ROM with respect to the POD-Galerkin ROMs.

In Fig 12 we show the trend of the error indicator (15) over the testing set versus the CPU time, for both the DL-ROM and the POD-Galerkin ROM at testing phase. Slight improvements in DL-ROM accuracy are obtained for a small increase of the DL-ROM dimension n, consistently with our previous findings reported in [26]. Indeed, also in this case the DL-ROM is able to accurately represent the solution manifold by a reduced nonlinear trial manifold of dimension nμ + 1 = 2. For the case at hand, we report the results for n = 5, which provides slightly smaller values of the error indicator (15) than n = 2. This value is very close to the intrinsic dimension nμ + 1 = 2 of the problem, and much smaller than the POD-Galerkin ROM dimension.

Regarding instead the POD-Galerkin ROM, in Fig 12 we report the results obtained for different tolerances εPOD = 10⁻⁴, 5 ⋅ 10⁻⁴, 10⁻³, 5 ⋅ 10⁻³, 10⁻². For Nc = 2 and Nc = 4 we only report the results related to the smallest POD tolerances. Only these allow us to meet the prescribed accuracy on the approximation of the gating variable, whose error would otherwise dramatically affect the overall accuracy of the POD-Galerkin ROM. Moreover, we do not consider more than Nc = 4 local reduced bases, in order not to generate local linear subspaces that are too small to accurately approximate the variability of the solution over the parameter space. Indeed, when a larger number of clusters is considered, the dimension of some linear subspaces becomes so small that the error starts to increase compared to the one obtained with fewer clusters. As shown in Fig 12, the proposed DL-ROM outperforms the POD-Galerkin ROM in terms of both efficiency and accuracy.

Fig 12. Test 2: Trend of the error indicator versus the CPU testing computational time.

Error indicator ϵrel vs. CPU testing computational time for different values of Nc and εPOD. The DL-ROM outperforms the POD-Galerkin ROM in terms of both efficiency and accuracy.

Regarding the training (offline) times, for the FOM dimension N = 65536 of this test case, training the DL-ROM neural network on a GTX 1070 8GB GPU requires about 64 hours. Training the POD-Galerkin ROM (with Nc = 4 local bases) on a full node (20 Intel® Xeon® E5-2640 v4 2.4GHz cores) of an HPC cluster requires about 4 hours. In both cases, the time needed to assemble the snapshot matrix S is not included. The DL-ROM training time is related to the batch size chosen during hyper-parameter tuning, i.e. Nb = 3. We highlight that, by choosing a slightly larger value of Nb, it is possible to decrease the GPU training time at the price of a lower accuracy. The fact that the DL-ROM training time is 16 times higher than the POD-Galerkin ROM one is again justified by the efficiency gained at testing time: a query to the DL-ROM online requires 1.2 seconds on a GPU, implying a speed-up of about 28 times compared to the POD-Galerkin ROM.

Test 3: Three-dimensional ventricle geometry

We consider the solution of the coupled system (1) in a three-dimensional left ventricle (LV) geometry, obtained from the 3D Human Heart Model provided by Zygote [56]. Here, we consider a single (nμ = 1) parameter, given by the longitudinal conductivity in the fiber direction. The conductivity tensor takes the form

| (21) |

where σt = 12.9 ⋅ 0.02 mm²/ms, and f0 is determined at each mesh point through a rule-based approach, by solving a suitable Laplace problem [57]. The resulting fiber field is reported in S2 File. The applied current is defined as
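Equation (21) is not reproduced above, so the following is only an assumption consistent with the description. Suppose it has the standard transversely isotropic form D(μ) = σt I + (μ − σt) f0 ⊗ f0, with μ the longitudinal conductivity and f0 the unit fiber direction. The tensor can then be assembled node by node as in this NumPy sketch; the function names are illustrative.

```python
import numpy as np

SIGMA_T = 12.9 * 0.02  # transversal conductivity [mm^2/ms], value from the text


def conductivity_tensor(f0, mu, sigma_t=SIGMA_T):
    """Assumed transversely isotropic form D = sigma_t I + (mu - sigma_t) f0 f0^T,
    where mu is the longitudinal conductivity along the fibre direction f0."""
    f0 = np.asarray(f0, dtype=float)
    f0 = f0 / np.linalg.norm(f0)  # rule-based fibres should already be unit vectors
    return sigma_t * np.eye(f0.size) + (mu - sigma_t) * np.outer(f0, f0)


def conductivity_tensors(fibres, mu, sigma_t=SIGMA_T):
    """Vectorised version for an (N_nodes, 3) array of fibre directions: returns (N_nodes, 3, 3)."""
    F = np.asarray(fibres, dtype=float)
    F = F / np.linalg.norm(F, axis=1, keepdims=True)
    return sigma_t * np.eye(F.shape[1]) + (mu - sigma_t) * np.einsum("ni,nj->nij", F, F)
```

By construction, D f0 = μ f0 and D v = σt v for any v orthogonal to f0. The parameter μ therefore only changes the conduction speed along the fibers, which is the effect visible in Fig 13.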

where ms, C = 1000 mA, α = 50, β = 50 mm², mm.

In order to build the snapshot matrix S, we solve problem (1), completed with the conductivity tensor (21). In space we use linear finite elements on a mesh made of N = 16365 vertices. In time we use a semi-implicit scheme over a uniform partition of (0, T), with T = 300 ms and time step Δt = 0.1/12.9. We uniformly sample Nt = 1000 time instances in (0, T) and zero-pad [44] the snapshot matrix so that each column can be reshaped into a 2D square matrix. The parameter space is provided by mm²/ms. Here we consider Ntrain = 25 training-parameter instances and Ntest = 24 testing-parameter instances, computed as in Test 2. In this case, the maximum number of epochs is set to Nepochs = 30000 and the batch size to Nb = 40. The training is stopped if the loss does not decrease over 4000 epochs.
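The zero-padding step can be sketched as follows. With N = 16365 vertices, the smallest square that fits is 128 × 128 = 16384, so each snapshot receives 19 trailing zeros. The sketch assumes that the padded vector is filled in vertex order and reshaped row by row. Mesh vertices have no natural 2D layout, so this ordering is an illustrative choice, and the function names are hypothetical.

```python
import math

import numpy as np


def pad_to_square(S):
    """Zero-pad each column of S (shape N x Ns) to the next perfect square and
    reshape every column into a side x side array; returns (images, side)."""
    N, Ns = S.shape
    side = math.isqrt(N - 1) + 1  # smallest integer with side**2 >= N (requires N >= 1)
    padded = np.zeros((side * side, Ns), dtype=S.dtype)
    padded[:N, :] = S  # the trailing side**2 - N entries stay zero
    # One image per snapshot, filled row by row: shape (Ns, side, side).
    return padded.T.reshape(Ns, side, side), side


def unpad_from_square(images, N):
    """Inverse map: flatten each image and drop the padding, giving an (N, Ns) array."""
    Ns = images.shape[0]
    return images.reshape(Ns, -1)[:, :N].T


imgs, side = pad_to_square(np.ones((16365, 2)))
print(side, imgs.shape)
```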

In Fig 13 we report the FOM solution for two testing-parameter instances, i.e. μ = 12.9 ⋅ 0.0739 mm²/ms and μ = 12.9 ⋅ 0.1991 mm²/ms, at ms, to show the variability of the FOM solution over the parameter space. As expected, front propagation is faster for larger values of the parameter μ.

Fig 13. Test 3: FOM solutions for different testing-parameter instances.

FOM solutions for μ = 12.9 ⋅ 0.0739 mm²/ms (left) and μ = 12.9 ⋅ 0.1991 mm²/ms (right) at ms. By increasing the value of μ the wavefront propagates faster.

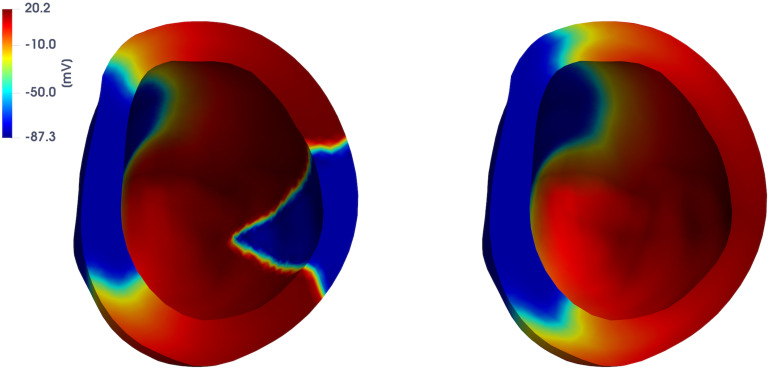

In Figs 14 and 15 we report the FOM and DL-ROM solutions, the latter with n = 10, at ms and ms, for two testing-parameter instances, μtest = 12.9 ⋅ 0.1435 mm²/ms and μtest = 12.9 ⋅ 0.3243 mm²/ms. The DL-ROM approximation is practically indistinguishable from the FOM solution.

Fig 14. Test 3: Comparison between FOM and DL-ROM solutions for a testing-parameter instance at different time instances.

FOM solution (left) and DL-ROM one (right), with n = 10, at ms (top) and ms (bottom), for the testing-parameter instance μtest = 12.9 ⋅ 0.1435 mm²/ms.

Fig 15. Test 3: Comparison between FOM and DL-ROM solutions for a testing-parameter instance at different time instances.

FOM solution (left) and DL-ROM one (right), with n = 10, at ms (top) and ms (bottom), for the testing-parameter instance μtest = 12.9 ⋅ 0.3243 mm²/ms.

Also for this test case, it is possible to build a reduced nonlinear trial manifold of dimension very close to the intrinsic one (nμ + 1 = 2), provided that the maximum number of epochs Nepochs is increased. The choice n = 10 is the best trade-off between accuracy and efficiency of the DL-ROM approximation in this case.

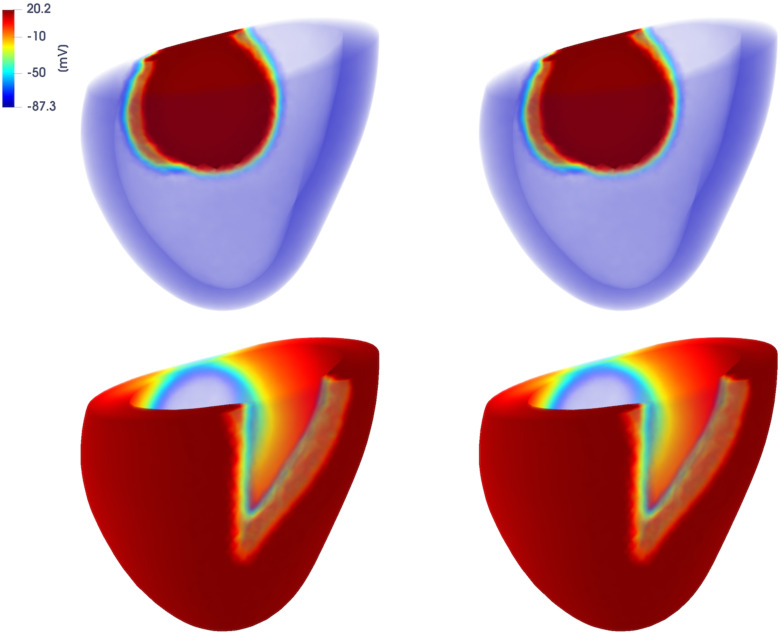

In Fig 16 (left) we report the APs obtained by the FOM and the DL-ROM, the latter with n = 10, computed at point P = [36.56; 1329.59; 28.82] mm for the testing-parameter instance μtest = 12.9 · 0.31 mm²/ms. For the sake of comparison, we also report the POD-Galerkin ROM approximation, with Nc = 1, of dimension n = 10 and n = 120. Clearly, with dimension n = 10 the DL-ROM approximation is far more accurate than the POD-Galerkin ROM approximation. To reach the same accuracy achieved by the DL-ROM with n = 10 (about ϵrel = 5.9 × 10⁻³, measured through the error indicator (15)), n = 120 POD modes would be required.

In Fig 16 (right) we highlight instead the improvements in efficiency enabled by the DL-ROM technique, by varying the FOM dimension N. Each technique uses the time resolution it requires, and we report three CPU times:

- the time required to solve the FOM for a testing-parameter instance;
- the time required by the DL-ROM (of dimension n = 10) at testing time;
- the time required by the POD-Galerkin ROM with Nc = 4 (n = 68, 81, 82, 45) at testing time.

These tests run on a 6-core platform, a MacBook Pro with a 6-core Intel Core i7 and 16 GB RAM. The FOM solution with N = 16365 degrees of freedom requires about 40 minutes to be computed, against 57 seconds required by the DL-ROM approximation, thus implying a speed-up of about 41.

Fig 16. Test 3: FOM, DL-ROM and POD-Galerkin ROM APs for a testing-parameter instance.

FOM and DL-ROM CPU computational times. Left: FOM, DL-ROM and POD-Galerkin ROM APs for the testing-parameter instance μtest = 12.9 ⋅ 0.31 mm²/ms. For the same n, the DL-ROM is able to provide more accurate results than the POD-Galerkin ROM. Right: CPU time required to solve the FOM, by DL-ROM with n = 10 and by the POD-Galerkin ROM with Nc = 6 at testing time vs N. The DL-ROM is able to provide a speed-up equal to 41.

Regarding the training (offline) times for this test case, featuring a FOM dimension N = 16365, training the DL-ROM neural network on a GTX 1070 8GB GPU requires about 160 hours. Training the POD-Galerkin ROM (with Nc = 4 local bases) on a full node (20 Intel® Xeon® E5-2640 v4 2.4GHz cores) of an HPC cluster requires about 28 hours. In both cases, the time needed to assemble the snapshot matrix S is not included. We also report the GPU testing computational time of the DL-ROM, which is equal to 0.35 seconds. This yields a speed-up of 172 with respect to the POD-Galerkin ROM testing time with Nc = 4. The efficiency gained at testing time justifies the higher training time of the DL-ROM.
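The claim that the higher DL-ROM training cost is justified by its testing efficiency can be made quantitative with a simple break-even count: the number of online queries after which the extra offline hours are recovered. The sketch below uses the figures quoted for Tests 1 to 3 and rests on three assumptions:

- where only a speed-up is quoted (Tests 1 and 3), the POD-Galerkin query time is inferred by multiplying the DL-ROM GPU query time by that speed-up;
- wall-clock times on different hardware (GPU training vs. CPU-node training) are compared directly, as the text does;
- snapshot generation is ignored, since both ROMs share it.

```python
import math


def break_even_queries(train_dl_h, train_pod_h, online_dl_s, online_pod_s):
    """Smallest number of online queries after which the DL-ROM's extra offline
    (training) time is recovered by its cheaper queries. Snapshot generation,
    common to both ROMs, is excluded as in the text."""
    extra_offline_s = (train_dl_h - train_pod_h) * 3600.0
    saving_per_query_s = online_pod_s - online_dl_s
    if saving_per_query_s <= 0:
        return math.inf  # the DL-ROM never pays off
    return math.ceil(extra_offline_s / saving_per_query_s)


# (DL-ROM training [h], POD-Galerkin training [h], DL-ROM GPU query [s], POD-Galerkin query [s])
cases = {
    "Test 1": (21, 3, 0.08, 0.08 * 275),     # POD query time inferred from the 275x speed-up
    "Test 2": (64, 4, 1.2, 33.0),            # Table 3, Nc = 4
    "Test 3": (160, 28, 0.35, 0.35 * 172),   # POD query time inferred from the 172x speed-up
}
for name, args in cases.items():
    print(name, break_even_queries(*args))
```

Under these assumptions the break-even points are about 3.0 × 10³, 6.8 × 10³ and 7.9 × 10³ queries for Tests 1, 2 and 3, respectively. This is the order of magnitude of queries that a multi-scenario analysis could plausibly require.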

Test 4: Two-dimensional slab with varying restitution properties

To take into account a case in which the parameters also affect the ionic model, we finally focus on the solution of problem (1) in a square slab of cardiac tissue Ω = (0, 10 cm)². We consider nμ = 3 parameters, possibly reflecting intra- and inter-subject variability. More precisely, the conductivity tensor now takes the form

where μ1 and μ2 are the electric conductivities in the longitudinal and transversal directions to the fibers f0 = (1, 0)ᵀ, respectively, and μ3 regulates the action potential duration (APD) by defining

The parameters belong to the parameter space and the applied current is defined as in Test 1.

For the training phase, we uniformly sample Nt = 1000 time instances in the interval (0, T) and consider Ntrain = 5 × 5 × 5 = 125 training-parameter instances, i.e. μtrain = (12.9 ⋅ (0.06 + 0.035 i), 12.9 ⋅ (0.03 + 0.0175 j), 0.15 + 0.025 s) with i, j, s = 0, …, 4. For the testing phase, Ntest = 16 testing-parameter instances have been considered, each of them given by μtest = (12.9 ⋅ (0.0775 + 0.035 i), 12.9 ⋅ (0.0387 + 0.0175 j), 0.1625 + 0.025 s) with i, j, s = 0, …, 3. The maximum number of epochs is Nepochs = 10000 and the batch size is Nb = 40. Regarding the early-stopping criterion, we stop the training if the loss function does not decrease over 500 epochs.
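The training grid and the midpoint rule for the testing instances can be generated directly from the formulas above, as in this NumPy sketch. The function names are illustrative. The midpoints of the second parameter are 12.9 ⋅ (0.03875 + 0.0175 j), quoted in the text as 0.0387 after rounding. The full tensor grid of midpoints contains 4³ = 64 points. The Ntest = 16 instances used in the text are therefore presumably a subset of it, and the sketch does not attempt to reproduce that selection.

```python
import itertools

import numpy as np


def train_grid_test4():
    """Training parameters (mu1, mu2, mu3) from the formula in the text, i, j, s = 0, ..., 4."""
    return np.array([
        (12.9 * (0.06 + 0.035 * i), 12.9 * (0.03 + 0.0175 * j), 0.15 + 0.025 * s)
        for i, j, s in itertools.product(range(5), repeat=3)
    ])


def midpoints(values):
    """Midpoints between consecutive sorted values (the rule used for testing instances)."""
    v = np.sort(np.asarray(values, dtype=float))
    return 0.5 * (v[:-1] + v[1:])


def midpoint_grid_test4():
    """Tensor grid of per-parameter midpoints: 4 x 4 x 4 = 64 candidate testing instances."""
    axes = [midpoints(np.unique(col)) for col in train_grid_test4().T]
    return np.array(list(itertools.product(*axes)))


print(train_grid_test4().shape, midpoint_grid_test4().shape)
```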

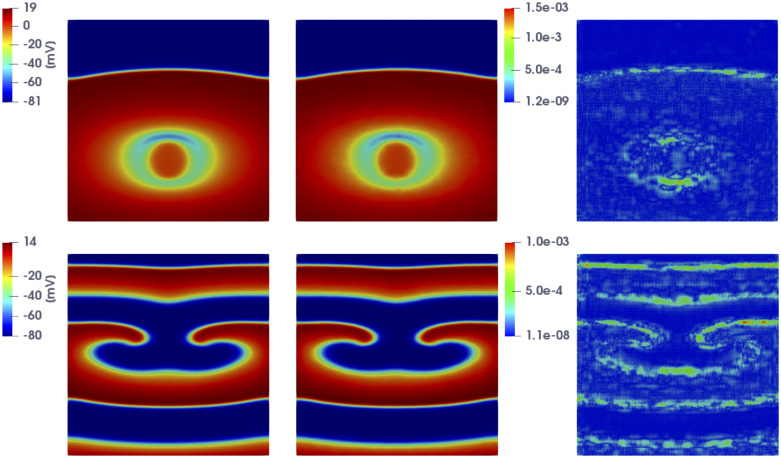

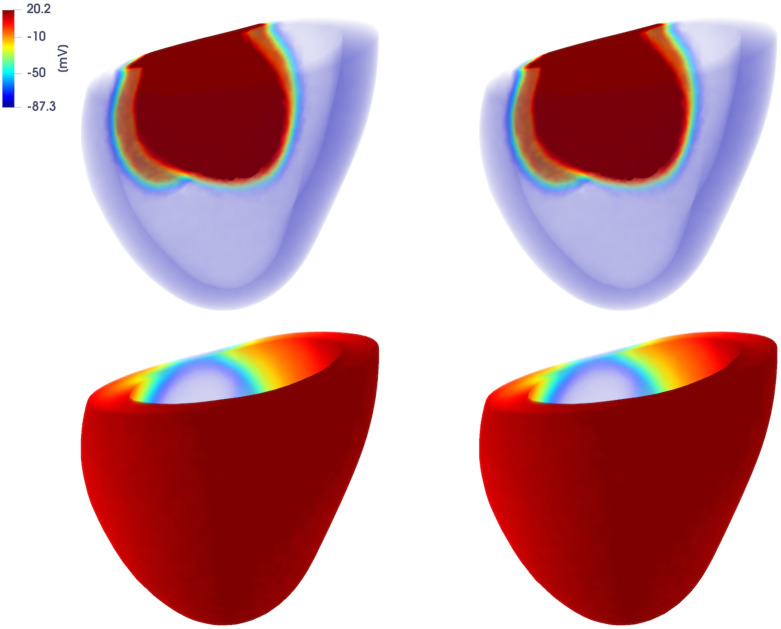

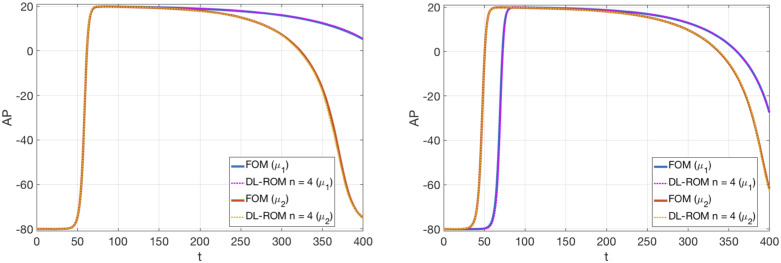

In Fig 17 we show the FOM solution and the DL-ROM approximation, the latter with n = 4, at ms, together with the relative error defined as in (17). We consider the testing-parameter instances μtest = (12.9 · 0.1125 cm²/ms, 12.9 · 0.0563 cm²/ms, 0.1875) and μtest = (12.9 · 0.1475 cm²/ms, 12.9 · 0.0737 cm²/ms, 0.2375). The DL-ROM technique is able to capture the strong variability of the solution over the parameter space: in Fig 17 (top) the tissue is almost completely depolarized, whereas in Fig 17 (bottom) repolarization has already started. The error indicator, computed as in (15) over the Ntest = 16 testing-parameter instances, is equal to 5.4 × 10⁻³.

Fig 17. Test 4: Comparison between FOM and DL-ROM solutions for different testing-parameter instances.

FOM solution (left), DL-ROM solution with n = 4 (center) and relative error ϵk (right) for the testing-parameter instances μtest = (12.9 · 0.1125 cm²/ms, 12.9 · 0.0563 cm²/ms, 0.1875) (top) and μtest = (12.9 · 0.1475 cm²/ms, 12.9 · 0.0737 cm²/ms, 0.2375) (bottom) at ms. The maximum of the relative error ϵk is about 10⁻³.

In Fig 18 we compare the FOM and the DL-ROM APs at x = (9.524, 4.762) cm, by considering the effect of the different parameters separately. More precisely, in Fig 18 (left) we let μ3 vary, i.e. we take and . In Fig 18 (right) instead we only vary μ1 and μ2, i.e. we take and . In both cases, the DL-ROM correctly reproduces the APD variability and the different depolarization patterns.
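The APD variability discussed above can be quantified from an AP trace, although the text does not state how APD is measured. The sketch below assumes a common threshold-crossing definition: the time between the upstroke and the subsequent repolarization crossing of a fixed threshold, with linear interpolation between samples. On a potential normalized to [0, 1], a threshold of 0.1 gives an APD90-type measure. Applying it to the FOM and DL-ROM traces at x = (9.524, 4.762) cm would give a scalar comparison of the APD reproduced by the two models.

```python
import numpy as np


def action_potential_duration(t, v, threshold: float = 0.1) -> float:
    """Threshold-based APD: time between the first upward crossing of `threshold`
    and the next downward crossing, with linear interpolation between samples.

    Returns nan if the trace never depolarises or never repolarises within t.
    """
    t = np.asarray(t, dtype=float)
    v = np.asarray(v, dtype=float)
    above = v >= threshold
    ups = np.flatnonzero(~above[:-1] & above[1:])    # k such that v[k] < thr <= v[k+1]
    downs = np.flatnonzero(above[:-1] & ~above[1:])  # k such that v[k] >= thr > v[k+1]
    if ups.size == 0:
        return float("nan")
    i = ups[0]
    downs = downs[downs > i]
    if downs.size == 0:
        return float("nan")
    j = downs[0]

    def crossing_time(k: int) -> float:
        # Linear interpolation between (t[k], v[k]) and (t[k+1], v[k+1]); v[k] != v[k+1] here.
        return t[k] + (threshold - v[k]) * (t[k + 1] - t[k]) / (v[k + 1] - v[k])

    return float(crossing_time(j) - crossing_time(i))
```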

Fig 18. Test 4: Comparison between FOM and DL-ROM solutions for different testing-parameter instances.

APs obtained through the FOM and the DL-ROM with n = 4. Left: and ; right: and . The DL-ROM approximation accurately reproduces the APD variability and the different depolarization patterns.

Finally, we analyze the effect of introducing an additional parameter on the training time for a prescribed level of accuracy, keeping the architecture of the network fixed. To this end, we report in Table 4 the training and testing computational times of the DL-ROM, on a GTX 1070 8 GB GPU, by considering either nμ = 2 or 3 parameters:

- nμ = 2, Ntrain = 5 × 5 = 25, Nt = 1000, with
- nμ = 3, Ntrain = 5 × 5 × 5 = 125, Nt = 1000, with

Table 4. Test 4: DL-ROM training and testing computational times.

| nμ | Ntrain | Training time | Epochs | Testing time |
|---|---|---|---|---|
| 2 | 25 | 15 h | 6981 | 0.08 s |
| 3 | 125 | 5 h | 449 | 0.08 s |

Number of parameters, of training-parameter instances and of epochs, together with training and testing computational times in the two configurations.

The training time refers to the overall training and validation time. The testing time refers to the time needed by the DL-ROM to compute Nt time instances of the solution for a given testing-parameter instance. In this case, considering Ntrain = 125 training-parameter instances reduces the training computational time by a factor of 3. Even though more parameters are considered, a larger training set is provided, and the prescribed accuracy is reached in far fewer epochs (449 instead of 6981). However, drawing general conclusions about the training complexity and costs, as a function of the number of parameters and of the training set dimension, is far from trivial and still represents an open issue in this framework.
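A back-of-the-envelope reading of Table 4 helps interpret the factor of 3. Dividing each training time by the number of epochs gives about 7.7 s per epoch for nμ = 2 and about 40 s per epoch for nμ = 3, i.e. a per-epoch cost ratio of about 5.2. This is close to the fivefold larger training set, so the overall saving comes from needing roughly 15.5 times fewer epochs. Attributing the per-epoch cost mainly to passes over the training data is our assumption, not a statement from the text.

```python
# Figures from Table 4: n_mu -> (training time [h], epochs run, training-set size Ntrain)
table4 = {2: (15.0, 6981, 25), 3: (5.0, 449, 125)}

# Average wall-clock seconds per epoch in each configuration.
sec_per_epoch = {n_mu: hours * 3600.0 / epochs for n_mu, (hours, epochs, _) in table4.items()}

total_time_ratio = table4[2][0] / table4[3][0]      # overall training-time reduction
epoch_ratio = table4[2][1] / table4[3][1]           # how many times fewer epochs were needed
per_epoch_cost_ratio = sec_per_epoch[3] / sec_per_epoch[2]
data_ratio = table4[3][2] / table4[2][2]            # five times more training samples

print(f"seconds per epoch: {sec_per_epoch}")
print(f"training-time ratio {total_time_ratio:.1f}, epoch ratio {epoch_ratio:.1f}")
print(f"per-epoch cost ratio {per_epoch_cost_ratio:.2f} vs. training-set ratio {data_ratio:.1f}")
```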

Conclusion

In this work we have proposed a new efficient reduced order model obtained using deep learning algorithms to boost the solution of parametrized problems in cardiac electrophysiology. Numerical results show that the resulting DL-ROM technique, formerly introduced in [26], allows one to accurately capture complex wave propagation processes, both in physiological and pathological scenarios.

The proposed DL-ROM technique provides ROMs that are orders of magnitude more efficient than the ones provided by common linear (projection-based) ROMs, built for instance through a POD-Galerkin reduced basis method, for a prescribed level of accuracy. Through the use of DL-ROM, it is possible to overcome the main computational bottlenecks shown by POD-Galerkin ROMs when addressing parametrized problems in cardiac electrophysiology. The most critical points are related to:

- (i) the linear superposition of modes on which linear ROMs are based;
- (ii) the need to account for the gating variables when solving the reduced dynamics, even when they are not required;
- (iii) the necessity to use (very often expensive) hyper-reduction techniques to deal with terms that depend nonlinearly on either the transmembrane potential or the input parameters.

These points are all addressed by the DL-ROM technique, which finally yields more efficient and accurate approximations than POD-Galerkin ROMs. Moreover, coarser time resolutions can be employed when using a DL-ROM, compared to those required by the numerical solution of a dynamical system through a FOM or a POD-Galerkin ROM. Indeed, the DL-ROM approximation can be queried at any desired time without solving a dynamical system up to that time, thus drastically decreasing the computational time required to compute the approximated solution at any given time.

Outputs of clinical interest, such as activation maps and action potentials, can be evaluated more efficiently by the DL-ROM technique than by a FOM built through the finite element method, while maintaining a high level of accuracy. This work is a proof of concept of the ability of the DL-ROM technique to investigate intra- and inter-subject variability. It is a step towards performing multi-scenario analyses in real time and, ultimately, supporting decisions in clinical practice. In this respect, the use of DL-ROM techniques can foster the assimilation of clinical data into physics-driven computational models.

So far, the training time required by the DL-ROM technique appears to be the major computational bottleneck, even if it is completely compensated by the great computational efficiency provided at testing time. Enhancing efficiency also during the training phase represents the focus of our ongoing research activity, and will be the object of a forthcoming publication.

Supporting information

We provide the complete derivation of the spatial (and temporal) discretization of system (1).

(PDF)

Here we report the configuration of the DL-ROM neural network used for our numerical tests.

(PDF)

In the notes we report the feature maps of the DL-ROM neural network.

(PDF)

Here we report the resulting fibers distribution used for Test 3.

(PDF)

Here we show the ability of the DL-ROM approximation to replace the FOM solution when evaluating outputs of interest.

(PDF)

(TXT)

(EPS)

(EPS)

(EPS)

Acknowledgments

The authors acknowledge Dr. S. Pagani (MOX, Politecnico di Milano) for the kind help in the FOM software development, and Dr. M. Fedele for providing us the computational mesh of the Zygote Solid 3D heart model.

Data Availability

The code used in this work is publicly available on GitHub (https://github.com/stefaniafresca/DL-ROM). The training and testing datasets are available on Zenodo (doi.org/10.5281/zenodo.4022140).

Funding Statement

A. Q. acknowledges funding from the ERC Advanced Grant iHEART (ERC2016AdG, project ID: 740132; H2020-EU.1.1.; cordis.europa.eu/project/id/740132). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Quarteroni A, Manzoni A, Vergara C. The cardiovascular system: Mathematical modeling, numerical algorithms, clinical applications. Acta Numerica. 2017;26:365–590. 10.1017/S0962492917000046

- 2. Quarteroni A, Dedè L, Manzoni A, Vergara C. Mathematical modelling of the human cardiovascular system: Data, numerical approximation, clinical applications. Cambridge Monographs on Applied and Computational Mathematics. Cambridge University Press; 2019.

- 3. Colli Franzone P, Pavarino LF, Scacchi S. Mathematical cardiac electrophysiology. Vol. 13 of MS&A. Springer; 2014.

- 4. Sundnes J, Lines GT, Cai X, Nielsen BF, Mardal KA, Tveito A. Computing the electrical activity in the heart. Vol. 1. Springer Science & Business Media; 2007.

- 5. Colli Franzone P, Pavarino LF. A parallel solver for reaction–diffusion systems in computational electrocardiology. Mathematical Models and Methods in Applied Sciences. 2004;14(06):883–911. 10.1142/S0218202504003489

- 6. Sundnes J, Artebrant R, Skavhaug O, Tveito A. A Second-Order Algorithm for Solving Dynamic Cell Membrane Equations. IEEE Transactions on Biomedical Engineering. 2009;56(10):2546–2548. 10.1109/TBME.2009.2014739

- 7. Cervi J, Spiteri RJ. A comparison of fourth-order operator splitting methods for cardiac simulations. Applied Numerical Mathematics. 2019;145:227–235. 10.1016/j.apnum.2019.06.002

- 8. Bendahmane M, Bürger R, Ruiz-Baier R. A multiresolution space-time adaptive scheme for the bidomain model in electrocardiology. Numerical Methods for Partial Differential Equations. 2010;26(6):1377–1404. 10.1002/num.20495

- 9. Sachetto Oliveira R, Martins Rocha B, Burgarelli D, Meira W Jr, Constantinides C, Weber Dos Santos R. Performance evaluation of GPU parallelization, space-time adaptive algorithms, and their combination for simulating cardiac electrophysiology. International Journal for Numerical Methods in Biomedical Engineering. 2018;34(2):e2913. 10.1002/cnm.2913

- 10. Mirams GR, Pathmanathan P, Gray RA, Challenor P, Clayton RH. Uncertainty and variability in computational and mathematical models of cardiac physiology. The Journal of Physiology. 2016;594(23):6833–6847. 10.1113/JP271671

- 11. Johnstone RH, Chang ETY, Bardenet R, de Boer TP, Gavaghan DJ, Pathmanathan P, et al. Uncertainty and variability in models of the cardiac action potential: Can we build trustworthy models? Journal of Molecular and Cellular Cardiology. 2016;96:49–62. 10.1016/j.yjmcc.2015.11.018

- 12. Hurtado DE, Castro S, Madrid P. Uncertainty quantification of two models of cardiac electromechanics. International Journal for Numerical Methods in Biomedical Engineering. 2017;33(12):e2894. 10.1002/cnm.2894

- 13. Clayton RH, Aboelkassem Y, Cantwell CD, Corrado C, Delhaas T, Huberts W, et al. An audit of uncertainty in multi-scale cardiac electrophysiology models. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences. 2020;378(2173):20190335. 10.1098/rsta.2019.0335

- 14. Dhamala J, Arevalo HJ, Sapp J, Horácek BM, Wu KC, Trayanova NA, et al. Quantifying the uncertainty in model parameters using Gaussian process-based Markov chain Monte Carlo in cardiac electrophysiology. Medical Image Analysis. 2018;48:43–57. 10.1016/j.media.2018.05.007

- 15. Quaglino A, Pezzuto S, Koutsourelakis PS, Auricchio A, Krause R. Fast uncertainty quantification of activation sequences in patient-specific cardiac electrophysiology meeting clinical time constraints. International Journal for Numerical Methods in Biomedical Engineering. 2018;34(7):e2985. 10.1002/cnm.2985

- 16. Johnston BM, Coveney S, Chang ET, Johnston PR, Clayton RH. Quantifying the effect of uncertainty in input parameters in a simplified bidomain model of partial thickness ischaemia. Medical & Biological Engineering & Computing. 2018;56(5):761–780. 10.1007/s11517-017-1714-y

- 17. Pathmanathan P, Cordeiro JM, Gray RA. Comprehensive uncertainty quantification and sensitivity analysis for cardiac action potential models. Frontiers in Physiology. 2019;10. 10.3389/fphys.2019.00721

- 18. Levrero-Florencio F, Margara F, Zacur E, Bueno-Orovio A, Wang Z, Santiago A, et al. Sensitivity analysis of a strongly-coupled human-based electromechanical cardiac model: Effect of mechanical parameters on physiologically relevant biomarkers. Computer Methods in Applied Mechanics and Engineering. 2020;361:112762. 10.1016/j.cma.2019.112762

- 19. Niederer SA, Aboelkassem Y, Cantwell CD, Corrado C, Coveney S, Cherry EM, et al. Creation and application of virtual patient cohorts of heart models. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences. 2020;378(2173):20190558. 10.1098/rsta.2019.0558

- 20. Coveney S, Corrado C, Roney CH, O’Hare D, Williams SE, O’Neill MD, et al. Gaussian process manifold interpolation for probabilistic atrial activation maps and uncertain conduction velocity. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences. 2020;378(2173):20190345. 10.1098/rsta.2019.0345

- 21. Longobardi S, Lewalle A, Coveney S, Sjaastad I, Espe EKS, Louch WE, et al. Predicting left ventricular contractile function via Gaussian process emulation in aortic-banded rats. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences. 2020;378(2173):20190334. 10.1098/rsta.2019.0334

- 22. Lei CL, Ghosh S, Whittaker DG, Aboelkassem Y, Beattie KA, Cantwell CD, et al. Considering discrepancy when calibrating a mechanistic electrophysiology model. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences. 2020;378(2173):20190349. 10.1098/rsta.2019.0349

- 23. Neic A, Campos FO, Prassl AJ, Niederer SA, Bishop MJ, Vigmond EJ, et al. Efficient computation of electrograms and ECGs in human whole heart simulations using a reaction-eikonal model. Journal of Computational Physics. 2017;346:191–211. 10.1016/j.jcp.2017.06.020

- 24. Quarteroni A, Manzoni A, Negri F. Reduced basis methods for partial differential equations: An introduction. Vol. 92. Springer; 2016.

- 25. Pagani S, Manzoni A, Quarteroni A. Numerical approximation of parametrized problems in cardiac electrophysiology by a local reduced basis method. Computer Methods in Applied Mechanics and Engineering. 2018;340:530–558. 10.1016/j.cma.2018.06.003

- 26. Fresca S, Dedè L, Manzoni A. A comprehensive deep learning-based approach to reduced order modeling of nonlinear time-dependent parametrized PDEs. arXiv preprint arXiv:2001.04001. 2020.

- 27. Guo M, Hesthaven JS. Reduced order modeling for nonlinear structural analysis using Gaussian process regression. Computer Methods in Applied Mechanics and Engineering. 2018;341:807–826. 10.1016/j.cma.2018.07.017

- 28. Guo M, Hesthaven JS. Data-driven reduced order modeling for time-dependent problems. Computer Methods in Applied Mechanics and Engineering. 2019;345:75–99. 10.1016/j.cma.2018.10.029

- 29. Hesthaven J, Ubbiali S. Non-intrusive reduced order modeling of nonlinear problems using neural networks. Journal of Computational Physics. 2018;363:55–78. 10.1016/j.jcp.2018.02.037

- 30. San O, Maulik R. Neural network closures for nonlinear model order reduction. Advances in Computational Mathematics. 2018;44(6):1717–1750. 10.1007/s10444-018-9590-z

- 31. Kani JN, Elsheikh AH. DR-RNN: A deep residual recurrent neural network for model reduction. arXiv preprint arXiv:1709.00939. 2017.

- 32. Mohan A, Gaitonde DV. A deep learning based approach to reduced order modeling for turbulent flow control using LSTM neural networks. arXiv preprint arXiv:18040926. 2018.

- 33. Wan Z, Vlachas P, Koumoutsakos P, Sapsis T. Data-assisted reduced-order modeling of extreme events in complex dynamical systems. PLoS ONE. 2018;13. 10.1371/journal.pone.0197704

- 34. González FJ, Balajewicz M. Deep convolutional recurrent autoencoders for learning low-dimensional feature dynamics of fluid systems. arXiv preprint arXiv:1808.01346. 2018.

- 35. Lee K, Carlberg K. Model reduction of dynamical systems on nonlinear manifolds using deep convolutional autoencoders. arXiv preprint arXiv:1812.08373. 2018.

- 36. Klabunde R. Cardiovascular Physiology Concepts. Wolters Kluwer Health/Lippincott Williams & Wilkins; 2011. Available from: https://books.google.it/books?id=27ExgvGnOagC.

- 37. Aliev RR, Panfilov AV. A simple two-variable model of cardiac excitation. Chaos, Solitons & Fractals. 1996;7(3):293–301. 10.1016/0960-0779(95)00089-5

- 38. FitzHugh R. Impulses and physiological states in theoretical models of nerve membrane. Biophysical Journal. 1961;1(6):445–466. 10.1016/s0006-3495(61)86902-6

- 39. Nagumo J, Arimoto S, Yoshizawa S. An active pulse transmission line simulating nerve axon. Proceedings of the IRE. 1962;50(10):2061–2070. 10.1109/JRPROC.1962.288235

- 40. Nash MP, Panfilov AV. Electromechanical model of excitable tissue to study reentrant cardiac arrhythmias. Progress in Biophysics and Molecular Biology. 2004;85:501–522. 10.1016/j.pbiomolbio.2004.01.016

- 41. Mitchell CC, Schaeffer DG. A two-current model for the dynamics of cardiac membrane. Bulletin of Mathematical Biology. 2003;65(5):767–793. 10.1016/S0092-8240(03)00041-7

- 42. Clayton RH, Bernus O, Cherry EM, Dierckx H, Fenton FH, Mirabella L, et al. Models of cardiac tissue electrophysiology: Progress, challenges and open questions. Progress in Biophysics and Molecular Biology. 2011;104(1):22–48. 10.1016/j.pbiomolbio.2010.05.008

- 43. Quarteroni A, Valli A. Numerical approximation of partial differential equations. Vol. 23. Springer; 1994.

- 44. Goodfellow I, Bengio Y, Courville A. Deep Learning. MIT Press; 2016. Available from: http://www.deeplearningbook.org.

- 45. LeCun Y, Bottou L, Bengio Y, Haffner P. Gradient-based learning applied to document recognition. Proceedings of the IEEE. 1998;86(11):2278–2324.

- 46. Hinton GE, Zemel RS. Autoencoders, minimum description length, and Helmholtz free energy. Proceedings of the 6th International Conference on Neural Information Processing Systems (NIPS’1993). 1994.

- 47. Kingma DP, Ba J. Adam: A method for stochastic optimization. International Conference on Learning Representations (ICLR); 2015.

- 48. Abadi M, Barham P, Chen J, Chen Z, Davis A, Dean J, et al. TensorFlow: A system for large-scale machine learning; 2016. Available from: https://www.usenix.org/system/files/conference/osdi16/osdi16-abadi.pdf.

- 49. Göktepe S, Wong J, Kuhl E. Atrial and ventricular fibrillation: Computational simulation of spiral waves in cardiac tissue. Archive of Applied Mechanics. 2010;80:569–580. 10.1007/s00419-009-0384-0

- 50. ten Tusscher KHWJ, Panfilov AV. Alternans and spiral breakup in a human ventricular tissue model. American Journal of Physiology-Heart and Circulatory Physiology. 2006;291(3):H1088–H1100. 10.1152/ajpheart.00109.2006

- 51. Trayanova NA. Whole-heart modeling applications to cardiac electrophysiology and electromechanics. Circulation Research. 2011;108:113–128. 10.1161/CIRCRESAHA.110.223610

- 52. Plank G, Zhou L, Greenstein J, Cortassa S, Winslow R, O’Rourke B, et al. From mitochondrial ion channels to arrhythmias in the heart: Computational techniques to bridge the spatio-temporal scales. Philosophical Transactions Series A, Mathematical, Physical, and Engineering Sciences. 2008;366:3381–3409. 10.1098/rsta.2008.0112

- 53. Nattel S. New ideas about atrial fibrillation 50 years on. Nature. 2002;415:219–226. 10.1038/415219a

- 54. Nagaiah C, Kunisch K, Plank G. Optimal control approach to termination of re-entry waves in cardiac electrophysiology. Journal of Mathematical Biology. 2013;67(2):359–388. 10.1007/s00285-012-0557-2

- 55. ten Tusscher K. Spiral wave dynamics and ventricular arrhythmias. PhD Thesis. 2004.

- 56. Zygote solid 3D heart generation II development report. Zygote Media Group Inc.; 2014.

- 57. Rossi S, Lassila T, Ruiz Baier R, Sequeira A, Quarteroni A. Thermodynamically consistent orthotropic activation model capturing ventricular systolic wall thickening in cardiac electromechanics. European Journal of Mechanics - A/Solids. 2014;48:129–142. 10.1016/j.euromechsol.2013.10.009

Associated Data

Data Availability Statement