Abstract

Introduction

Methodological studies (ie, studies that evaluate the design, conduct, analysis or reporting of other studies in health research) address various facets of health research including, for instance, data collection techniques, differences in approaches to analyses, reporting quality, adherence to guidelines or publication bias. As a result, methodological studies can help to identify knowledge gaps in the methodology of health research and strategies for improvement in research practices. Differences in methodological study names and a lack of reporting guidance contribute to lack of comparability across studies and difficulties in identifying relevant previous methodological studies. This paper outlines the methods we will use to develop an evidence-based tool—the MethodologIcal STudy reportIng Checklist—to harmonise naming conventions and improve the reporting of methodological studies.

Methods and analysis

We will search for methodological studies in the Cumulative Index to Nursing and Allied Health Literature, Cochrane Library, Embase, MEDLINE and Web of Science. We will also check reference lists and contact experts in the field. We will extract and summarise data on the study names, design and reporting features of the included methodological studies. Consensus on study terms and recommended reporting items will be achieved via video conference meetings with a panel of experts including researchers who have published methodological studies.

Ethics and dissemination

The consensus study has been exempted from ethics review by the Hamilton Integrated Research Ethics Board. The results of the review and the reporting guideline will be disseminated through stakeholder meetings, conferences, peer-reviewed publications and requests to journal editors (to endorse the guideline or make it a requirement for authors), and on the Enhancing the QUAlity and Transparency Of health Research (EQUATOR) Network and reporting guideline websites.

Registration

We have registered the development of the reporting guideline with the EQUATOR Network and publicly posted this project on the Open Science Framework (www.osf.io/9hgbq).

Keywords: statistics & research methods, epidemiology, education & training (see medical education & training)

Strengths and limitations of this study.

To the best of our knowledge, this is the first study to design an evidence-based tool to support the complete and transparent reporting of methodological studies in health research.

This project will help to highlight the current reporting practices of authors of methodological studies to outline a list of key reporting items.

The stakeholders recruited for the consensus study will represent a diverse group of expert health research methodologists including biostatisticians, clinical researchers, journal editors, healthcare providers and reporting guideline developers.

Our study does not incorporate a blinded consensus process and this may impact the flow of discussions during the conference meetings.

Introduction

Concerns with the quality and quantity of research have sparked interest in the rapidly evolving field which has been called meta-epidemiology, meta-research or research-on-research.1–3 This field of research addresses the entire research process, from question development to design, conduct and reporting issues, and most often uses research-related reports (eg, protocols, published manuscripts, registry entries, conference abstracts) as the unit of analysis. These studies may seek to ‘(1) describe the distribution of research evidence for a specific question; (2) examine heterogeneity and associated risk factors; and (3) control bias across studies and summarise research evidence as appropriate’.4 For the purpose of this project, we will refer to these research outputs as ‘methodological studies’, that is, studies that evaluate the design, conduct, analysis (eg, including bias, statistical plan and methods) or reporting of other studies in health research. This definition does not include statistical methodological studies (eg, studies testing new algorithms or analytical methods, simulation studies) and experimental studies in which the unit of analysis is not a research report. Methodological studies are important because they can identify gaps, biases and inefficiencies in research practices, and propose improvements and solutions.

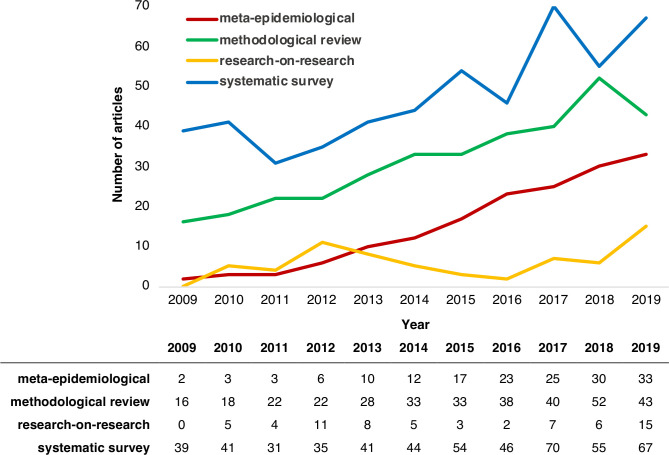

A PubMed search performed in April 2020 for terms often used to describe methodological studies suggests that the rate of publication of methodological studies has increased over time, as illustrated in figure 1.

Figure 1.

Trends in methodological studies indexed in PubMed from 2009 to 2019.

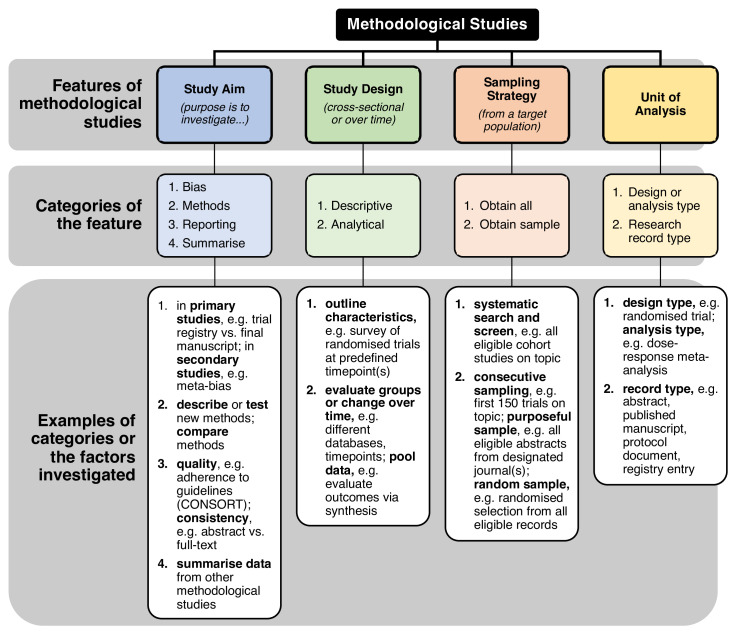

In the past 20 years, methodological studies have influenced the conduct of health research by informing many popular practices such as double data extraction in systematic reviews5; optimal approaches to conducting subgroup analyses6; and reporting of randomised trials, observational studies, pilot studies and systematic reviews7–10 to name a few. Methodological studies have played an important role in ensuring that health research is reliable, valid, transparent and replicable. These types of studies may investigate: bias in research,11 12 quality or completeness of reporting,13 14 consistency of reporting,15 methods used,16 factors associated with reporting practices17; and may provide summaries of other methodological studies18 and other issues. Methodological studies may also be used to evaluate the uptake of methods over time to investigate whether (and where) practices are improving and allow researchers to make comparisons across different medical areas.19 20 These studies can also highlight methodological strengths and shortcomings such as sample size calculations in randomised controlled trials,21 22 quality of clinical prediction models,23 and spin and over-interpretation of study findings.24–26 As such, methodological studies promote robust, evidence-based science and help to discard inefficient research practices.27 A draft conceptual framework of the various categories of methodological studies that we have observed is outlined in figure 2. Broadly, some categories of methodological studies include those investigating: bias and spin, methodological approaches to study design or reporting issues.

Figure 2.

Draft conceptual framework of categories of methodological studies. CONSORT, CONsolidated Standards Of Reporting Trials.

Despite the importance of methodological studies, there is no guidance for their reporting. Murad and Wang have suggested a modification to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA), a widely used reporting tool that is sometimes used for methodological studies because these studies often use methods that are also used in systematic reviews.28 Although a modification of PRISMA may work well for the data collection components of some methodological studies, it would fail to appropriately address the many different types of research questions that methodological studies attempt to answer. For example, if researchers were interested in changes in reporting quality of trials since the publication of the CONsolidated Standards Of Reporting Trials guidelines, they could use an interrupted time-series design. Also, methodological studies that include a random sample of research reports,29 or those structured as before–after designs19 would be a poor fit for the modified PRISMA tool, which is best suited for studies designed in the style of systematic reviews. Likewise, studies in which the unit of analysis is not the ‘study’ require more specific guidance (eg, when investigating multiple subgroup analyses or multiple outcomes within the same study).30 Thus, guidelines for transparent reporting of methodological studies are needed, and this need is widely acknowledged in the scientific community.31 32

Our work will address two main concerns:

There are no globally accepted names for methodological studies, making them difficult to identify. Methodological studies have been called ‘methodological review’, ‘systematic review’, ‘systematic survey’, ‘literature review’, ‘meta-epidemiological study’ and many other names. The diversity in names compromises training and educational activities,33 and it makes it difficult for end-users (eg, clinical researchers, guideline developers) to search for, identify and use these studies.34 35

The reporting of methodological studies is inconsistent, which may relate to differences in objectives, and to differences in transparency and completeness. That is, some studies may be better reported than others. While the most appropriate approach to reporting will depend on the research question, explicit, user-friendly and consensus-based guidance is needed to ensure that methodological studies are reported transparently and comprehensively.36

Aims

The aims of this study protocol are to outline the procedures to define and harmonise the names describing methodological studies, and to develop reporting guidelines for methodological studies in human health research.

Methods and analysis

Study design

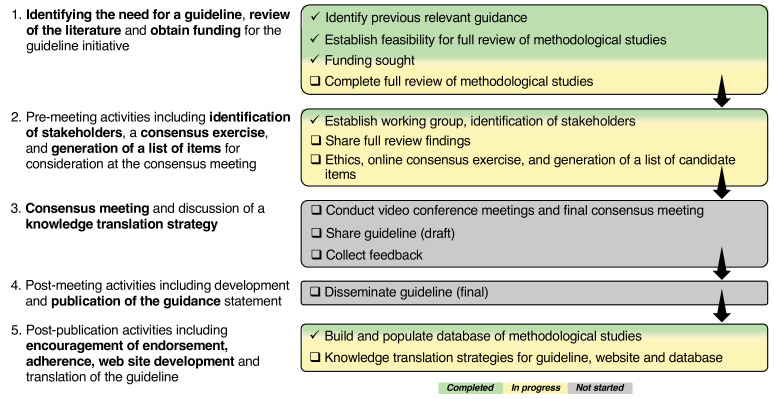

We have adopted the strategy for the development of reporting guidelines proposed by Moher et al.37 A visual overview of this approach, highlighting key components of the process, is presented in figure 3. The three parts of the project which will be addressed using the above strategy are outlined in detail below (see online supplemental file for an outline of the data flow informing subsequent parts of the project).

Figure 3.

Project overview for the development of reporting guidelines for methodological studies in health research.

Part 1: methodological review

The objectives of this part are to: (a) identify names used to describe methodological studies, (b) identify the various designs, analysis and reporting features of methodological studies, (c) find any previous reporting guidance and (d) identify methodological study experts.

Search strategy

We developed a search strategy informed by our pilot work38 targeting health-related sciences and biomedicine databases: Cumulative Index to Nursing and Allied Health Literature, Cochrane Library, Excerpta Medica (Embase), MEDLINE and Web of Science. There will be no limits by publication year, type or language. We will perform searches for authors known to publish in this field, check reference lists of relevant studies, check existing methodological study repositories (Studies Within a Trial and Studies Within a Review), preprints (bioRxiv and medRxiv), set up Google Alerts for keywords (eg, meta-epidemiology, research-on-research) and contact experts (eg, via email, meetings, following relevant journals, subscribing to methods email newsletters including the Methods in Research on Research and the National Institute for Health and Care Excellence groups, and following researchers on social media platforms such as ResearchGate and Twitter) to identify additional methodological studies. We will also check the Enhancing the QUAlity and Transparency Of health Research (EQUATOR) library to identify any published or under development reporting guidance. These approaches are informed by previous work and published literature.35 38 Two health sciences librarians at the Health Sciences Library (McMaster University) were consulted and reviewed the final search strategy (see online supplemental file) in line with the Peer Review of Electronic Search Strategies framework.39

Eligible studies

Studies that investigate methods—design, conduct, analysis or reporting—in other studies of health research in humans will be eligible. The ‘other studies’ (or research reports) refers to the unit of analysis of the methodological studies (eg, abstracts, cohort studies, randomised trials, registry records, study protocols, systematic reviews). Only published protocols and final reports of studies that investigate methods will be eligible. We will exclude simulation studies, studies testing new statistical methods (ie, there is no specific unit of analysis) and experimental studies of methods (ie, the unit of analysis is not a research report). These sorts of studies either already have reporting guidelines or can be reported in a commentary-style format.

Screening

A team of reviewers led by DOL will screen titles and abstracts independently, in duplicate in Rayyan,40 and full texts in standardised forms in DistillerSR.41 Both are online collaborative platforms for screening and reviewing literature. We will measure agreement on screening and study inclusion using Cohen’s kappa statistic.42 43 Any discrepancies between reviewers will be resolved through discussion.
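The agreement calculation described above can be illustrated with a minimal sketch, assuming two reviewers who each record an include/exclude decision for the same set of records. The function name `cohens_kappa` and the example decisions are hypothetical and are not taken from the protocol; the formula is the standard one for Cohen's kappa, (observed agreement − chance agreement) / (1 − chance agreement).

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters' categorical decisions on the same records."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must rate the same non-empty set of records.")
    n = len(rater_a)
    # Observed agreement: proportion of records with identical decisions.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal proportions, summed over categories.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    categories = set(counts_a) | set(counts_b)
    expected = sum(counts_a[c] * counts_b[c] for c in categories) / (n * n)
    if expected == 1.0:
        # Both raters used a single identical category; kappa is undefined,
        # so this sketch reports perfect agreement by convention.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical title-and-abstract screening decisions for 10 records.
reviewer_1 = ["include", "exclude", "exclude", "include", "exclude",
              "exclude", "include", "exclude", "exclude", "exclude"]
reviewer_2 = ["include", "exclude", "exclude", "exclude", "exclude",
              "exclude", "include", "exclude", "include", "exclude"]

kappa = cohens_kappa(reviewer_1, reviewer_2)
print(f"Cohen's kappa: {kappa:.2f}")  # prints "Cohen's kappa: 0.52"
```

In this example the reviewers agree on 8 of 10 records (0.80), but agreement expected by chance is 0.58, so kappa is considerably lower than the raw agreement.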

Data extraction

In order to document the current reporting practices, we will extract data from included studies independently, in duplicate based on a standardised data collection form. Key data extraction fields for documenting methodological study features and reporting practices (eg, study design name, databases searched, any guideline use) are outlined in table 1. All data will be compiled in DistillerSR. Any discrepancies between reviewers will be resolved through discussion.

Table 1.

Overview of data extraction fields for the review

| Section | Data to be collected |
| --- | --- |
| Bibliometrics | |
| Methods | |
| Results | |
| Discussion | |
| Other | |

All reviewers will undergo calibration exercises and pilot the screening and data collection forms (25 studies per reviewer). We will incorporate an emergent design in the data collection stage of the review, which is characterised by a flexibility in the methodology, allowing researchers to remain open to modifications.44 Should any new information that is of interest arise during the full-text screen or data extraction, we will update the data collection form and collect this information for all studies retrospectively and going forward. Any modifications to the present protocol will be reported in the final published review. This iterative approach will allow for the capture of information as new methodological study design features come to light during the full-text screening and data extraction phases. Based on this approach, data extraction will be updated accordingly for previously reviewed studies as needed. For example, we expect to see overlaps in methodological study names, some of which might be attributed to collaborating research groups. There also appear to be similarities in methodological study reporting styles that are borrowed from systematic review4 or survey study designs, which have both been extensively developed and are omnipresent in health research literature. However, if the current data collection fields, listed in table 1, are insufficient to capture the nuances of the varieties of methodological studies, we will revise our data collection forms accordingly and collect the data for all studies.

Generation of a list of candidate items

The generation of a list of candidate items will be informed by two sources. First, a list of reporting items will be compiled based on what has been reported by authors of the included studies in the methodological review (eg, flow diagram, search strategy). We will also note the use of any reporting guidance as mentioned by authors (eg, PRISMA, STrengthening the Reporting of OBservational studies in Epidemiology (STROBE)). Each item will be ranked from most frequently reported to those less frequently reported. Second, this list will be presented to expert user stakeholders alongside the proportion of methodological studies that report on each item. Stakeholders will be asked to propose additional relevant items to finalise the list of candidate reporting items for part 2.
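The ranking step can be sketched as follows, assuming each included study has been reduced to the set of reporting items it contains. The function name `rank_reporting_items` and the item labels are hypothetical placeholders for illustration; the actual extraction fields are those of the data collection form.

```python
from collections import Counter


def rank_reporting_items(studies):
    """Rank reporting items from most to least frequently reported.

    `studies` is a list in which each element is the collection of item
    names reported by one included study. Returns a list of
    (item, count, proportion) tuples, ties broken alphabetically.
    """
    total = len(studies)
    if total == 0:
        return []
    # Count each item at most once per study.
    counts = Counter(item for reported in studies for item in set(reported))
    ranked = sorted(counts.items(), key=lambda pair: (-pair[1], pair[0]))
    return [(item, count, count / total) for item, count in ranked]


# Hypothetical extraction results for four included studies.
extracted = [
    {"search strategy", "flow diagram"},
    {"search strategy"},
    {"search strategy", "guideline use"},
    {"flow diagram", "search strategy"},
]

for item, count, proportion in rank_reporting_items(extracted):
    print(f"{item}: {count}/{len(extracted)} ({proportion:.0%})")
```

The proportion column corresponds to the share of methodological studies reporting each item, which is the figure to be shown to stakeholders alongside the list.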

Data analysis

We will present the flow of articles retrieved and screened in a study flow diagram, and summarise data in tables with explanatory text. We will provide descriptive statistics, that is, counts (percentage) for categorical data, and means (SD) or medians (IQR) for continuous data. In addition to study names, we will synthesise and tabulate verbatim quotations for the study objectives, outcomes, and intended use of findings to provide context and clarification for methodological study rationales.45 We will qualitatively group studies into categories based on similarities in reporting features. All statistical analyses will be done in Stata V.15.1.46 We will identify additional potential stakeholders from the list of authors of included studies.
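The descriptive summaries named above can be illustrated with a minimal sketch. The protocol specifies Stata for the analyses; Python's standard library is used here only for illustration, and the function names and example data are hypothetical. Quartile definitions differ between software packages, so the "inclusive" method chosen below is an assumption and may not match Stata's output exactly.

```python
from collections import Counter
from statistics import mean, median, quantiles, stdev


def summarise_categorical(values):
    """Return {category: (count, percentage)} for a categorical variable."""
    total = len(values)
    return {category: (count, 100 * count / total)
            for category, count in Counter(values).items()}


def summarise_continuous(values):
    """Return mean, SD, median and quartiles (for the IQR) of a continuous variable."""
    # "inclusive" treats the data as the whole population of interest;
    # other quartile conventions would give slightly different cut points.
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    return {
        "mean": mean(values),
        "sd": stdev(values),  # sample SD; needs at least two values
        "median": median(values),
        "q1": q1,
        "q3": q3,
    }


# Hypothetical data: study names used, and number of reports analysed per study.
study_names = ["systematic review", "methodological review",
               "systematic review", "meta-epidemiological study"]
reports_per_study = [10, 20, 30, 40, 50]

for name, (count, pct) in summarise_categorical(study_names).items():
    print(f"{name}: {count} ({pct:.1f}%)")

summary = summarise_continuous(reports_per_study)
print(f"mean (SD): {summary['mean']:.1f} ({summary['sd']:.1f})")
print(f"median (IQR): {summary['median']} ({summary['q1']} to {summary['q3']})")
```

Means with SD suit roughly symmetric data, whereas medians with IQR are the usual choice for skewed counts such as the number of reports per study.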

Part 2: consensus study

This part of the project will consist of consultation with expert user stakeholders in a consensus study. The objectives are to define methodological studies, and outline the recommended study name(s) and best reporting practices. The project steering group (DOL, GHG, LM, LT), which includes members with expertise in health research methods, will oversee the consensus study and development of the reporting guideline.

Identification of stakeholders

The steering group will be responsible for identifying expert user stakeholders based on expertise with methodological studies and expertise with reporting guideline development.47 Additional stakeholders will be identified from the list of authors (either corresponding or senior, with academic faculty status) of methodological studies from the review. In our selection of stakeholders, we will seek individuals who will be committed to participating and providing feedback for the reporting guideline. We define expert user stakeholders as researchers involved in the design, conduct, analysis, interpretation or dissemination of methodological studies. Approximately 20–30 stakeholders will be selected (including the protocol authors) as participants in the consensus exercises. We will track response rates to invitations to participate in the consensus study. We will collect participant demographics (eg, country, primary job title, academic rank, and methodological study publication history) to provide insight into the representation in this field of research based on sociocultural factors.

Measuring agreement and achieving consensus

The above definition of methodological studies (ie, studies that evaluate the design, conduct, analysis or reporting of other studies in health research) will be used during the online consensus exercises and video conference meetings. Participants will discuss the following: (a) names for methodological studies, (b) categories of methodological studies and (c) reporting requirements. These three components, outlined in table 2, will be completed electronically through a McMaster Ethics Compliant service, LimeSurvey (https://reo.mcmaster.ca/limesurvey) for online surveys.48

Table 2.

Overview of consensus study activities and expected outputs

| Stage | Description of activities to be completed | Expected outputs |
| --- | --- | --- |
| Online consensus exercise: categories of methodological studies* | | |
| Online consensus exercise: name(s) for methodological studies* | | |
| Online consensus exercise: reporting items* | | |
| First video conference meeting (two calls†, 2 hours each) | | |
| Second video conference meeting (two calls†, 2 hours each) | | |
| Final video conference meeting (4 hours) | | |

*During the online exercises, participants can suggest additional categories, names or items that they wish to discuss during the video conferences.

†Two calls will be scheduled to accommodate stakeholders in Eastern and Western time zones.

All video conferences will be facilitated by two investigators (DOL and LM). Stakeholders will be consulted for the development of drafts, elaborations and explanations for specific items. All steering committee members and stakeholders will be required to participate and vote during the consensus meetings. Disagreements will be resolved through discussion, and if no consensus can be reached, the steering committee will convey the recommendations for the stakeholder group to approve. Zoom, or comparable video conferencing software, will be used to allow for the collection of recordings.49

Data analysis

Findings from the consensus exercise will be summarised descriptively in tables that include counts (percentage) for categorical data, and means (SD) or medians (IQR) for continuous data. We will measure the levels of agreement (ie, percentage increase in agreement for successive rounds, number of comments made for each successive round and rounds with emergence of new themes) and instability (ie, spread and SD of ranked responses for each item) for each round.50 After the online exercises, one investigator (DOL) will qualitatively synthesise and code the suggestions for the methodological study names, categories and reporting items into common themes in Dedoose, a qualitative research software.51 The steering committee will synthesise data from the participant discussions to revise each subsequent draft.
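The agreement and instability measures can be sketched as follows. This is only an illustration under stated assumptions: the protocol does not specify the rating scale or the agreement threshold, so the 1 to 9 scale and the cut-off of 7 used here are hypothetical, as are the function names. The change in agreement is expressed in percentage points between successive rounds, and instability as the range (spread) and SD of responses within a round.

```python
from statistics import stdev


def percent_agreement(ratings, threshold=7):
    """Percentage of participants rating an item at or above the threshold.

    The 1-9 scale and threshold of 7 are assumptions for illustration only.
    """
    return 100 * sum(r >= threshold for r in ratings) / len(ratings)


def round_summary(ratings, threshold=7):
    """Agreement and instability (spread and SD) for one item in one round."""
    return {
        "agreement_pct": percent_agreement(ratings, threshold),
        "spread": max(ratings) - min(ratings),
        "sd": stdev(ratings) if len(ratings) > 1 else 0.0,
    }


def change_in_agreement(rounds, threshold=7):
    """Percentage-point change in agreement between each pair of successive rounds."""
    pcts = [percent_agreement(r, threshold) for r in rounds]
    return [later - earlier for earlier, later in zip(pcts, pcts[1:])]


# Hypothetical ratings of one candidate reporting item by eight participants.
round_1 = [3, 5, 7, 8, 9, 4, 6, 7]
round_2 = [6, 7, 7, 8, 9, 7, 7, 8]

for label, ratings in (("Round 1", round_1), ("Round 2", round_2)):
    s = round_summary(ratings)
    print(f"{label}: agreement {s['agreement_pct']:.1f}%, "
          f"spread {s['spread']}, SD {s['sd']:.2f}")

print("Change in agreement:", change_in_agreement([round_1, round_2]))
```

A rising agreement percentage together with a shrinking spread and SD across rounds would suggest that responses are converging.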

Part 3: reporting guideline

The objectives of this part are to develop, refine, publish and disseminate the reporting guideline for methodological studies. We have registered the development of the reporting guideline, the MethodologIcal STudy reportIng Checklist, with the EQUATOR Network.52 The registered name and acronym may be updated after deliberations during the consensus study. We will also consider which reporting items are appropriate for different categories of methodological studies. This will include discussions about whether a decision tree may be useful to direct users to other existing reporting guidelines should they be more appropriate for specific categories of methodological studies (eg, STROBE for methodological studies designed as cohort studies). Quantitative and qualitative findings from the consensus study will be incorporated into the final guideline document, which will include: (a) the recommended methodological study name(s) and categories, (b) a recommended checklist of agreed-on reporting items, (c) a user guide and elaboration (eg, for each item, an explanation of why it is important, its rationale and an example of how it can be presented in a methodological study), and (d) a consensus statement. The draft document will be returned to the steering group and stakeholders to collect additional feedback. The checklist will be tested with end-users for face validity and clarity, and for additional fine-tuning as needed prior to publication. We will distribute the finalised checklist to a group of authors of methodological studies identified from the review (part 1) to assess its usefulness and whether the checklist appropriately captures items relevant to the reporting of methodological studies.53

Patient and public involvement

Although patients and the general public are not directly involved in this project, the findings of this research will be relevant to a broad range of knowledge users including methodological study authors, health researchers, methodologists, statisticians and journal editors. We will seek recommendations from investigators for members of the general public and patients who could be recruited for this project.

Ethics and dissemination

This research has received an exemption (October 2019) from the Hamilton Integrated Research Ethics Board for the consensus study. Ethics committee approval and consent to participate is not required for any other component of this project since only previously published data will be used.

Data deposition and curation

All participant records and data will be stored in MacDrive, a secure cloud storage drive that is privately hosted and based in-house at McMaster University.54 Only two researchers (DOL and LM) will have direct access to study-related documents and source data. Qualitative data will be promptly coded and transcribed, and all audio files will be encrypted. As part of our knowledge translation (KT) strategy and a consequence of the difficulties we faced in retrieving methodological studies from literature databases during our pilot work, we have developed an open-access database of methodological studies (www.methodsresearch.ca). We will catalogue all included studies from the pilot and full reviews on this website such that end-users can easily retrieve these studies. We have also set up a submission portal for researchers to submit their studies to be catalogued in this database. Parallel research by our colleagues will use this database as well as explore the automation of retrieving and indexing methodological studies in a dedicated space.55 Lastly, we will set up a complementary website to serve as the primary repository for the published reporting guideline document.

Dissemination

We will publish all manuscripts arising from this research and present the findings at conferences. The inclusion of knowledge users and representatives from methodology journals and guideline groups on our core study team will aid the wide dissemination of the reporting guideline. We continue to contact journal editors for their endorsement, and encourage researchers to reach out to us about this work, as we have done previously.34 We will also encourage user feedback to inform future updates of the guideline as needed. These approaches are informed by our collective experience in developing and disseminating health research guidelines.7 56–60

Discussion

Our work is contributing to reducing research waste by: (1) making methodological studies transparent through streamlining their reporting; (2) permitting researchers to appraise methodological studies based on adherence to proposed guidelines; (3) allowing end-users of methodological studies to be able to locate inaccessible research in a dedicated database and promoting its continued development; and in doing so (4) allowing end-users of methodological studies to better evaluate and identify issues with study design and reporting that influence patient health, enabling them to apply methodological study evidence to their own research practices. Many methodological studies are done to improve the design, conduct, analysis and reporting of primary and secondary research. We anticipate that, in reviewing this body of evidence on research methods, we will further highlight the importance of studies that aim to improve the design of health research.61

Strengths and limitations

We acknowledge that there are inherent challenges in the search and retrieval of studies that lack consistent names or dedicated indexing in common health research databases. As such, it is plausible that certain methodological studies that use terms not previously identified in the pilot or from our systematic database searches may be missed. To mitigate this limitation, we have contacted, and will continue to contact, experts in the field to identify additional studies, and we will screen the reference lists and citing articles of relevant studies. We have consulted extensively with librarians at the McMaster Health Sciences Library on optimal approaches to capture the maximum number of studies.

The uncertainty in the number of methodological studies that are currently available and published in the literature can present additional logistic and timing constraints to the review component and overall progress of this work. However, given the landscape of methodological studies, we believe it is essential to apply a comprehensive search. To help with the organisation of screening and data extraction, we will use robust systematic review management software (DistillerSR).41 Further, we have designed all screening and data extraction prompts to ensure consistency and replicability of our work.

Lastly, our study does not incorporate a blinded consensus process and this may impact the flow of discussions during the video conference meetings. We will aim to regulate discussions such that dominant speakers do not steer the discussion and ensure that all participants have a chance to speak. Additionally, we will share summaries of the discussion and decisions after the meetings. This will allow for participants to privately provide any additional written feedback to the steering group that may not have been addressed.

A key strength of this research is the diversity of our study team. We have brought together an international, multidisciplinary team with expertise in consensus activities and guideline development, and research methodology and synthesis. This gives us an advantage in the breadth of feedback and fruitful discussions to be had with a wide array of users of the forthcoming guideline. Given the rise in the conduct of methodological studies, a general call for guidelines in the scientific community, and the number of teams that have reached out to us with interest in participating in this work, we are confident that the guideline will be used. However, we fully acknowledge the factors associated with implementation and use of guidelines, notably journal endorsement of the guidelines, the passage of time and other study level characteristics.20 62–66 Therefore, our stakeholders include editors from key journals that publish methodological studies such as the Journal of Clinical Epidemiology, BMC Medical Research Methodology, BMC Systematic Reviews, The Campbell Collaboration, and Cochrane. Stakeholders also include representatives from academic programmes building capacity, at the master’s and doctoral level, in conducting methodological research. To encourage better uptake, it has been suggested that researchers should work collaboratively with journals in the prospective design, knowledge translation and evaluation of reporting guidelines,67 as well as following up on user feedback and incorporating a system to revise the reporting guidelines when necessary.68 These strategies have been incorporated in our KT plan.

Conclusions

This research will improve the transparency of reporting of methodological studies, and help streamline their indexing and retrieval in literature databases. This work stands to make a substantial impact by informing research reporting standards for studies that investigate the design, conduct, analysis or reporting of other health studies, thereby improving the transparency, reliability and replicability of health research, and ultimately benefiting patients and decision makers. Future efforts will focus on field-testing the published checklist with authors of methodological studies, gathering feedback from end-users, and optimising and adapting the checklist for different typologies of methodological studies as needed.

Acknowledgments

We would like to thank Denise Smith and Jack Young at the Health Sciences Library at McMaster University for their critical review of the search strategy. We would like to thank Dr Susan Jack at the School of Nursing at McMaster University for sharing her expertise and recommendations on qualitative and mixed-methods research design.

Footnotes

Contributors: DOL and LM conceived the idea. DOL, GHG, LM and LT contributed to the design of the study. DOL wrote the first draft of the manuscript. AKN, A-WC, BDT, DBA, DM, DP, EM-W, GHG, GSC, JCJ, LM, LP, LT, MB, PT, RB-P, SS, TY, VAW and ZS contributed to the refinement of the study methods and critical revision of the manuscript. All authors read and approved the final version of the manuscript.

Funding: This research received no specific grant from any funding agency in the public, commercial or not-for-profit sectors. This project is being carried out as part of a doctoral dissertation by DOL. DOL is supported by an Ontario Graduate Scholarship and the Queen Elizabeth II Graduate Scholarship in Science & Technology, and the Ontario Drug Policy Research Network Student Training Program.

Disclaimer: The funders had no influence over the design, data collection, analysis, interpretation, preparation of or decision to publish the manuscript.

Competing interests: ‘Yes, there are competing interests for one or more authors and I have provided a Competing Interests statement in my manuscript and in the box below’.

Patient consent for publication: Not required.

Provenance and peer review: Not commissioned; externally peer reviewed.

Supplemental material: This content has been supplied by the author(s). It has not been vetted by BMJ Publishing Group Limited (BMJ) and may not have been peer-reviewed. Any opinions or recommendations discussed are solely those of the author(s) and are not endorsed by BMJ. BMJ disclaims all liability and responsibility arising from any reliance placed on the content. Where the content includes any translated material, BMJ does not warrant the accuracy and reliability of the translations (including but not limited to local regulations, clinical guidelines, terminology, drug names and drug dosages), and is not responsible for any error and/or omissions arising from translation and adaptation or otherwise.

References

- 1. Chalmers I, Glasziou P. Avoidable waste in the production and reporting of research evidence. Lancet 2009;374:86–9. doi:10.1016/S0140-6736(09)60329-9

- 2. Chan A-W, Song F, Vickers A, et al. Increasing value and reducing waste: addressing inaccessible research. Lancet 2014;383:257–66. doi:10.1016/S0140-6736(13)62296-5

- 3. Ioannidis JPA, Greenland S, Hlatky MA, et al. Increasing value and reducing waste in research design, conduct, and analysis. Lancet 2014;383:166–75. doi:10.1016/S0140-6736(13)62227-8

- 4. Bae J-M. Meta-epidemiology. Epidemiol Health 2014;36:e2014019. doi:10.4178/epih/e2014019

- 5. Buscemi N, Hartling L, Vandermeer B, et al. Single data extraction generated more errors than double data extraction in systematic reviews. J Clin Epidemiol 2006;59:697–703. doi:10.1016/j.jclinepi.2005.11.010

- 6. Sun X, Briel M, Busse JW, et al. Credibility of claims of subgroup effects in randomised controlled trials: systematic review. BMJ 2012;344:e1553. doi:10.1136/bmj.e1553

- 7. Moher D, Liberati A, Tetzlaff J, et al. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. J Clin Epidemiol 2009;62:1006–12. doi:10.1016/j.jclinepi.2009.06.005

- 8. Schulz KF, Altman DG, Moher D, et al. CONSORT 2010 statement: updated guidelines for reporting parallel group randomised trials. BMJ 2010;340:c332.

- 9. von Elm E, Altman DG, Egger M, et al. The strengthening the reporting of observational studies in epidemiology (STROBE) statement: guidelines for reporting observational studies. J Clin Epidemiol 2008;61:344–9. doi:10.1016/j.jclinepi.2007.11.008

- 10. Thabane L, Hopewell S, Lancaster GA, et al. Methods and processes for development of a CONSORT extension for reporting pilot randomized controlled trials. Pilot Feasibility Stud 2016;2:25. doi:10.1186/s40814-016-0065-z

- 11. Babic A, Vuka I, Saric F, et al. Overall bias methods and their use in sensitivity analysis of Cochrane reviews were not consistent. J Clin Epidemiol 2020;119:57–64. doi:10.1016/j.jclinepi.2019.11.008

- 12. Ritchie A, Seubert L, Clifford R, et al. Do randomised controlled trials relevant to pharmacy meet best practice standards for quality conduct and reporting? A systematic review. Int J Pharm Pract 2020;28:220–32. doi:10.1111/ijpp.12578

- 13. Croitoru DO, Huang Y, Kurdina A, et al. Quality of reporting in systematic reviews published in dermatology journals. Br J Dermatol 2020;182:1469–76. doi:10.1111/bjd.18528

- 14. Khan MS, Shaikh A, Ochani RK, et al. Assessing the quality of abstracts in randomized controlled trials published in high impact cardiovascular journals. Circ Cardiovasc Qual Outcomes 2019;12:e005260. doi:10.1161/CIRCOUTCOMES.118.005260

- 15. Rosmarakis ES, Soteriades ES, Vergidis PI, et al. From conference abstract to full paper: differences between data presented in conferences and journals. FASEB J 2005;19:673–80. doi:10.1096/fj.04-3140lfe

- 16. Mueller M, D'Addario M, Egger M, et al. Methods to systematically review and meta-analyse observational studies: a systematic scoping review of recommendations. BMC Med Res Methodol 2018;18:44. doi:10.1186/s12874-018-0495-9

- 17. Farrokhyar F, Chu R, Whitlock R, et al. A systematic review of the quality of publications reporting coronary artery bypass grafting trials. Can J Surg 2007;50:266–77.

- 18. Li G, Abbade LPF, Nwosu I, et al. A scoping review of comparisons between abstracts and full reports in primary biomedical research. BMC Med Res Methodol 2017;17:181. doi:10.1186/s12874-017-0459-5

- 19. Kuriyama A, Takahashi N, Nakayama T. Reporting of critical care trial abstracts: a comparison before and after the announcement of CONSORT guideline for abstracts. Trials 2017;18:32. doi:10.1186/s13063-017-1786-x

- 20. Mbuagbaw L, Thabane M, Vanniyasingam T, et al. Improvement in the quality of abstracts in major clinical journals since CONSORT extension for abstracts: a systematic review. Contemp Clin Trials 2014;38:245–50. doi:10.1016/j.cct.2014.05.012

- 21. Armijo-Olivo S, Fuentes J, da Costa BR, et al. Blinding in physical therapy trials and its association with treatment effects: a meta-epidemiological study. Am J Phys Med Rehabil 2017;96:34–44. doi:10.1097/PHM.0000000000000521

- 22. Kovic B, Zoratti MJ, Michalopoulos S, et al. Deficiencies in addressing effect modification in network meta-analyses: a meta-epidemiological survey. J Clin Epidemiol 2017;88:47–56. doi:10.1016/j.jclinepi.2017.06.004

- 23. Deliu N, Cottone F, Collins GS, et al. Evaluating methodological quality of prognostic models including patient-reported health outcomes iN oncologY (EPIPHANY): a systematic review protocol. BMJ Open 2018;8:e025054. doi:10.1136/bmjopen-2018-025054

- 24. Kosa SD, Mbuagbaw L, Borg Debono V, et al. Agreement in reporting between trial publications and current clinical trial registry in high impact journals: a methodological review. Contemp Clin Trials 2018;65:144–50. doi:10.1016/j.cct.2017.12.011

- 25. Schandelmaier S, Chang Y, Devasenapathy N, et al. A systematic survey identified 36 criteria for assessing effect modification claims in randomized trials or meta-analyses. J Clin Epidemiol 2019;113:159–67. doi:10.1016/j.jclinepi.2019.05.014

- 26. Zhang Y, Flórez ID, Colunga Lozano LE, et al. A systematic survey on reporting and methods for handling missing participant data for continuous outcomes in randomized controlled trials. J Clin Epidemiol 2017;88:57–66. doi:10.1016/j.jclinepi.2017.05.017

- 27. Ioannidis JPA. Meta-research: why research on research matters. PLoS Biol 2018;16:e2005468. doi:10.1371/journal.pbio.2005468

- 28. Murad MH, Wang Z. Guidelines for reporting meta-epidemiological methodology research. Evid Based Med 2017;22:139–42. doi:10.1136/ebmed-2017-110713

- 29. El Dib R, Tikkinen KAO, Akl EA, et al. Systematic survey of randomized trials evaluating the impact of alternative diagnostic strategies on patient-important outcomes. J Clin Epidemiol 2017;84:61–9. doi:10.1016/j.jclinepi.2016.12.009

- 30. Tanniou J, van der Tweel I, Teerenstra S, et al. Subgroup analyses in confirmatory clinical trials: time to be specific about their purposes. BMC Med Res Methodol 2016;16:20. doi:10.1186/s12874-016-0122-6

- 31. Puljak L. Methodological studies evaluating evidence are not systematic reviews. J Clin Epidemiol 2019;110:98–9. doi:10.1016/j.jclinepi.2019.02.002

- 32. Granholm A, Anthon CT, Perner A, et al. Transparent and systematic reporting of meta-epidemiological studies. J Clin Epidemiol 2019;112:93–5. doi:10.1016/j.jclinepi.2019.04.014

- 33. Rice DB, Moher D. Curtailing the Use of Preregistration: A Misused Term. Perspect Psychol Sci 2019;14:1105–8. doi:10.1177/1745691619858427

- 34. Lawson DO, Thabane L, Mbuagbaw L. A call for consensus guidelines on classification and reporting of methodological studies. J Clin Epidemiol 2020;121:110–2. doi:10.1016/j.jclinepi.2020.01.015

- 35. Penning de Vries BBL, van Smeden M, Rosendaal FR, et al. Title, abstract, and keyword searching resulted in poor recovery of articles in systematic reviews of epidemiologic practice. J Clin Epidemiol 2020;121:55–61. doi:10.1016/j.jclinepi.2020.01.009

- 36. Puljak L. Reporting checklist for methodological, that is, research on research studies is urgently needed. J Clin Epidemiol 2019;112:93. doi:10.1016/j.jclinepi.2019.04.016

- 37. Moher D, Schulz KF, Simera I, et al. Guidance for developers of health research reporting guidelines. PLoS Med 2010;7:e1000217. doi:10.1371/journal.pmed.1000217

- 38. Lawson DO, Leenus A, Mbuagbaw L. Mapping the nomenclature, methodology, and reporting of studies that review methods: a pilot methodological review. Pilot Feasibility Stud 2020;6:1–11. doi:10.1186/s40814-019-0544-0

- 39. McGowan J, Sampson M, Salzwedel DM, et al. PRESS Peer Review of Electronic Search Strategies: 2015 Guideline Statement. J Clin Epidemiol 2016;75:40–6. doi:10.1016/j.jclinepi.2016.01.021

- 40. Ouzzani M, Hammady H, Fedorowicz Z, et al. Rayyan: a web and mobile app for systematic reviews. Syst Rev 2016;5:210. doi:10.1186/s13643-016-0384-4

- 41. DistillerSR. Ottawa, Canada: Evidence Partners, 2019.

- 42. Viera AJ, Garrett JM. Understanding interobserver agreement: the kappa statistic. Fam Med 2005;37:360–3.

- 43. Cohen J. A coefficient of agreement for nominal scales. Educ Psychol Meas 1960;20:37–46. doi:10.1177/001316446002000104

- 44. Hesse-Biber SN, Leavy P. Handbook of emergent methods. New York: Guilford Press, 2008.

- 45. Corden A, Sainsbury R. Using verbatim quotations in reporting qualitative social research: researchers' views. York: University of York, Social Policy Research Unit, 2006.

- 46. Stata Statistical Software: Release 15.1. College Station, TX: StataCorp LLC, 2018.

- 47. Jones J, Hunter D. Consensus methods for medical and health services research. BMJ 1995;311:376–80. doi:10.1136/bmj.311.7001.376

- 48. LimeSurvey: an open source survey tool. Hamburg, Germany: LimeSurvey GmbH, 2019.

- 49. Zoom. San Jose, United States: Zoom Video Communications, Inc, 2020.

- 50. Holey EA, Feeley JL, Dixon J, et al. An exploration of the use of simple statistics to measure consensus and stability in Delphi studies. BMC Med Res Methodol 2007;7:52. doi:10.1186/1471-2288-7-52

- 51. Dedoose: web application for managing, analyzing, and presenting qualitative and mixed method research data. Los Angeles, CA: SocioCultural Research Consultants, LLC, 2016.

- 52. MISTIC - MethodologIcal STudy reportIng Checklist: guidelines for reporting methodological studies in health research. Available: http://www.equator-network.org/library/reporting-guidelines-under-development/reporting-guidelines-under-development-for-other-study-designs/#MISTIC

- 53. Streiner DL, Norman GR, Cairney J. Health measurement scales: a practical guide to their development and use. 5th edn. Oxford: Oxford University Press, 2015.

- 54. MacDrive. Hamilton, ON: McMaster University, 2019.

- 55. SNSF - P3 Research Database: Project 190566. Available: http://p3.snf.ch/Project-190566

- 56. Collins GS, Reitsma JB, Altman DG, et al. Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): the TRIPOD statement. Ann Intern Med 2015;162:55–63. doi:10.7326/M14-0697

- 57. Eldridge SM, Chan CL, Campbell MJ, et al. CONSORT 2010 statement: extension to randomised pilot and feasibility trials. Pilot Feasibility Stud 2016;2:64.

- 58. Guyatt GH, Oxman AD, Vist GE, et al. GRADE: an emerging consensus on rating quality of evidence and strength of recommendations. BMJ 2008;336:924–6. doi:10.1136/bmj.39489.470347.AD

- 59. Welch V, Petticrew M, Tugwell P, et al. PRISMA-Equity 2012 extension: reporting guidelines for systematic reviews with a focus on health equity. PLoS Med 2012;9:e1001333. doi:10.1371/journal.pmed.1001333

- 60. Wolff RF, Moons KGM, Riley RD, et al. PROBAST: a tool to assess the risk of bias and applicability of prediction model studies. Ann Intern Med 2019;170:51–8. doi:10.7326/M18-1376

- 61. Hardwicke TE, Serghiou S, Janiaud P, et al. Calibrating the scientific ecosystem through meta-research. Annu Rev Stat Appl 2020;7:11–37. doi:10.1146/annurev-statistics-031219-041104

- 62. Bastuji-Garin S, Sbidian E, Gaudy-Marqueste C, et al. Impact of STROBE statement publication on quality of observational study reporting: interrupted time series versus before-after analysis. PLoS One 2013;8:e64733. doi:10.1371/journal.pone.0064733

- 63. Jin Y, Sanger N, Shams I, et al. Does the medical literature remain inadequately described despite having reporting guidelines for 21 years? - A systematic review of reviews: an update. J Multidiscip Healthc 2018;11:495–510. doi:10.2147/JMDH.S155103

- 64. Mills EJ, Wu P, Gagnier J, et al. The quality of randomized trial reporting in leading medical journals since the revised CONSORT statement. Contemp Clin Trials 2005;26:480–7. doi:10.1016/j.cct.2005.02.008

- 65. Moher D, Jones A, Lepage L, et al. Use of the CONSORT statement and quality of reports of randomized trials: a comparative before-and-after evaluation. JAMA 2001;285:1992–5. doi:10.1001/jama.285.15.1992

- 66. Samaan Z, Mbuagbaw L, Kosa D, et al. A systematic scoping review of adherence to reporting guidelines in health care literature. J Multidiscip Healthc 2013;6:169–88. doi:10.2147/JMDH.S43952

- 67. Stevens A, Shamseer L, Weinstein E, et al. Relation of completeness of reporting of health research to journals' endorsement of reporting guidelines: systematic review. BMJ 2014;348:g3804. doi:10.1136/bmj.g3804

- 68. Page MJ, Moher D. Evaluations of the uptake and impact of the preferred reporting items for systematic reviews and meta-analyses (PRISMA) statement and extensions: a scoping review. Syst Rev 2017;6:263. doi:10.1186/s13643-017-0663-8

- 69. Ayre C, Scally AJ. Critical values for Lawshe’s content validity ratio: revisiting the original methods of calculation. Meas Eval Couns Dev 2014;47:79–86.

- 70. Wilson FR, Pan W, Schumsky DA. Recalculation of the Critical Values for Lawshe’s Content Validity Ratio. Meas Eval Couns Dev 2012;45:197–210. doi:10.1177/0748175612440286

Supplementary Materials

bmjopen-2020-040478supp001.pdf (530.1KB, pdf)