Abstract

The aim of this paper is to develop a low-rank linear regression model (L2RM) to correlate a high-dimensional response matrix with a high-dimensional vector of covariates when the coefficient matrices have low-rank structures. We propose a fast and efficient screening procedure based on the spectral norm of each coefficient matrix in order to deal with the case when the number of covariates is extremely large. We develop an efficient estimation procedure based on trace norm regularization, which explicitly imposes the low-rank structure of the coefficient matrices. When both the dimension of the response matrix and that of the covariate vector diverge at an exponential order of the sample size, we investigate the sure independence screening property under some mild conditions. We also systematically investigate the theoretical properties of our estimation procedure, including estimation consistency, rank consistency and a non-asymptotic error bound, under some mild conditions. We further establish a theoretical guarantee for the overall solution of our two-step screening and estimation procedure. We examine the finite-sample performance of our screening and estimation methods using simulations and a large-scale imaging genetic dataset collected by the Philadelphia Neurodevelopmental Cohort (PNC) study.

Keywords: Imaging Genetics, Low Rank, Matrix Linear Regression, Spectral norm, Trace norm

1. Introduction

Multivariate regression modeling with a matrix response and a multivariate covariate is an important statistical tool in modern high-dimensional inference, with wide applications in large-scale studies such as imaging genetics. Specifically, in imaging genetics, matrix responses (Y) as phenotypic variables often represent the weighted (or binary) adjacency matrix of a finite graph characterizing structural (or functional) connectivity patterns, whereas covariates (x) include genetic markers (e.g., single nucleotide polymorphisms (SNPs)), age, and gender, among others. The joint analysis of imaging and genetic data may ultimately lead to the discovery of genes for many neuropsychiatric and neurological disorders, such as schizophrenia (Scharinger et al., 2010; Peper et al., 2007; Chiang et al., 2011; Thompson et al., 2013; Medland et al., 2014). This motivates us to systematically investigate a statistical model with a matrix response Y and a multivariate covariate x.

Let {(xi, Yi) : 1 ≤ i ≤ n} denote independent and identically distributed (i.i.d.) observations, where $x_i = (x_{i1}, \ldots, x_{is})^T$ is an s × 1 vector of scalar covariates (e.g., clinical variables and genetic variants) and Yi is a p × q response matrix. Without loss of generality, we assume that xil has mean 0 and variance 1 for every 1 ≤ l ≤ s, and that Yi has mean 0. Throughout the paper, we consider an L2RM, which is given by

$$Y_i = \sum_{l=1}^{s} x_{il} * B_l + E_i, \qquad i = 1, \ldots, n, \tag{1}$$

where Bl is a p × q coefficient matrix characterizing the effect of the lth covariate on Yi and Ei is a p × q matrix of random errors with mean 0. The symbol “∗” denotes scalar multiplication. Model (1) differs significantly from existing matrix regression models, which were developed for matrix covariates and univariate responses (Leng and Tang, 2012; Zhao and Leng, 2014; Zhou and Li, 2014). Our goal is to discover a small set of important covariates in x that strongly influence Y.

We focus on the most challenging setting, in which both the dimension of Y (i.e., pq) and that of x (i.e., s) can diverge with the sample size. Such a setting is general enough to cover high-dimensional univariate and multivariate linear regression models in the literature (Negahban et al., 2012; Fan and Lv, 2010; Buhlmann and van de Geer, 2011; Tibshirani, 1997; Yuan et al., 2007; Candes and Tao, 2007; Breiman and Friedman, 1997; Cook et al., 2013; Park et al., 2017). In the literature, there are two major categories of statistical methods for jointly analyzing a high-dimensional matrix Y and a high-dimensional vector x.

The first category is a set of mass univariate methods. Specifically, it fits a marginal linear regression to correlate each element of Yi with each element of xi, leading to a total of pqs massive univariate analyses and an expanded search space with pqs elements. It is also called voxel-wise genome-wide association analysis (VGWAS) in the imaging genetics literature (Hibar et al., 2011; Shen et al., 2010; Huang et al., 2015; Zhang et al., 2014; Medland et al., 2014; Zhang et al., 2014; Thompson et al., 2014; Liu and Calhoun, 2014). For instance, Stein et al. (2010) used 300 high-performance CPU nodes for approximately 27 hours to carry out a VGWAS analysis of an imaging genetic dataset with only 448,293 SNPs and 31,622 imaging measures for 740 subjects. Such computational challenges are becoming more severe as the field rapidly advances toward the most challenging setting with large pq and s. More seriously, for model (1), the mass univariate method can miss some important components of x that strongly influence Y, because each marginal analysis ignores the joint effects of the other components of x.

The second category is to fit a model accommodating all (or part of) the covariates and responses (Vounou et al., 2010, 2012; Zhu et al., 2014; Wang et al., 2012a,b; Peng et al., 2010). These methods use regularization methods, such as Lasso or group Lasso, to select a set of covariate-response pairs. However, when the product pqs is extremely large, it is very difficult to allocate computer memory for an array of size pqs that accommodates all coefficient matrices Bl's, rendering all these regularization methods intractable. Therefore, almost all existing methods in this category have to use some dimension reduction techniques (e.g., screening methods) to reduce both the number of responses and that of covariates. Subsequently, these methods fit a multivariate linear regression model with the selected elements of Y as new responses and those of x as new covariates. However, this approach can be unsatisfactory, since it does not incorporate the structural information of the matrix response.

The aim of this paper is to develop a low-rank linear regression model (L2RM) as a novel extension of both VGWAS and regularization methods. Specifically, instead of repeatedly fitting a univariate model to each covariate-response pair, we treat all elements in Yi as a high-dimensional matrix response and focus on the coefficient matrix of each covariate, which is approximately low-rank (Candès and Recht, 2009). There is a literature on the development of matrix variate regression (Ding and Cook, 2014; Fosdick and Hoff, 2015; Zhou and Li, 2014), but these papers focus on the case when covariates have a matrix structure. In contrast, there is a large literature on the development of various function-on-scalar regression models that emphasize the inherent functional structure of responses. See Chapter 13 of Ramsay and Silverman (2005) for a comprehensive review on this topic. Variable selection methods have been developed for some function-on-scalar regression models (Wang et al., 2007; Chen et al., 2016), but these methods focus on one-dimensional functional responses rather than two-dimensional matrix responses. Recently, some studies have considered regression with matrix or tensor responses (Ding and Cook, 2018; Li and Zhang, 2017; Raskutti and Yuan, 2018; Rabusseau and Kadri, 2016), but they only consider the case when the dimension of the covariates is fixed or slowly diverging with the sample size.

In this paper, we aim to efficiently correlate matrix responses with a high-dimensional vector of covariates. The four major methodological contributions of this paper are as follows.

We introduce a low-rank linear regression model to fit high-dimensional matrix responses with a high dimensional vector of covariates, while explicitly accounting for the low-rank structure of coefficient matrices.

We introduce a novel rank-one screening procedure based on the spectral norm of the estimated coefficient matrix to eliminate most “noisy” scalar covariates and show that our screening procedure enjoys the sure independence screening property (Fan and Lv, 2008) with vanishing false selection rate. The use of such spectral norm is critical for dealing with a large number of noisy covariates.

When the number of covariates is relatively small, we propose a low-rank estimation procedure based on trace norm regularization, which explicitly characterizes the low-rank structure of coefficient matrices. An efficient algorithm for solving the optimization problem is developed. We systematically investigate the theoretical properties of our estimation procedure, including estimation and rank consistency when both p and q are fixed and a non-asymptotic error bound when both p and q are allowed to diverge.

We investigate, both numerically and theoretically, how incorrect screening results can affect the estimation of the low-rank regression model. We establish a theoretical guarantee for the overall solution, while accounting for the randomness of the first-step screening procedure.

The rest of this paper is organized as follows. In Section 2, we introduce a rank-one screening procedure to deal with a high dimensional vector of covariates and describe our estimation procedure when the number of covariates is relatively small. Section 3 investigates the theoretical properties of our method. Simulations are conducted in Section 4 to evaluate the finite-sample performance of the proposed two-step screening and estimation procedure. Section 5 illustrates an application of L2RM in the joint analysis of imaging and genetic data from the Philadelphia Neurodevelopmental Cohort (PNC) study discussed above. We finally conclude with some discussions in Section 6.

2. Methodology

Throughout the paper, we focus on addressing three fundamental issues for L2RM as follows:

(I) The first one is to eliminate most ‘noisy’ covariates xil when both the number of candidate covariates and the number of elements in the response matrix are much larger than n, that is, min(s, pq) ≫ n.

(II) The second one is to estimate the coefficient matrix Bl when Bl does have a low-rank structure.

(III) The third one is to investigate some theoretical properties of the screening and estimation methods.

2.1. Rank-one Screening Method

We consider the case in which both pq and s diverge at an exponential order of n, and we also denote s by sn. To address (I), it is common to assume that most scalar covariates have no effect on the matrix responses, that is, Bl0 = 0 for most 1 ≤ l ≤ sn, where Bl0 is the true value of Bl. In this case, we define the true model and its size as

$$\mathcal{M}_* = \{1 \le l \le s_n : B_{l0} \neq 0\}, \qquad s_* = |\mathcal{M}_*|. \tag{2}$$

Our aim is to estimate the set $\mathcal{M}_*$ and the coefficient matrices Bl. Simultaneously estimating $\mathcal{M}_*$ and Bl is difficult, since it is computationally infeasible to fit a model when both sn and pq are very large. For example, in the PNC data, we have pq = 69² = 4,761 and sn ≈ 5 × 10⁶. Therefore, it may be imperative to employ a screening technique to reduce the model size. However, developing a screening technique for model (1) can be more challenging than for univariate responses, which are the focus of many existing screening methods (Fan and Lv, 2008; Fan and Song, 2010).
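To make the memory argument concrete, the following back-of-the-envelope calculation uses the PNC dimensions quoted above (pq = 69² and sn ≈ 5 × 10⁶). It assumes, for illustration only, that every coefficient is stored as an 8-byte double-precision number and that all sn coefficient matrices are held in memory at once.

```python
# Rough memory cost of holding every p x q coefficient matrix B_l at once,
# using the PNC dimensions quoted in the text (pq = 69^2, s_n ~ 5 x 10^6).
p = q = 69
s_n = 5 * 10**6
BYTES_PER_DOUBLE = 8  # assumption: float64 storage

n_coefficients = p * q * s_n                 # total number of entries in all B_l
gigabytes = n_coefficients * BYTES_PER_DOUBLE / 1e9

print(f"pq = {p * q:,}")                     # pq = 4,761
print(f"pq * s_n = {n_coefficients:,}")      # pq * s_n = 23,805,000,000
print(f"one copy of all B_l needs about {gigabytes:.0f} GB")  # about 190 GB
```

Iterative solvers typically keep several arrays of this size (current iterate, previous iterate, gradient), so the practical footprint of a joint fit would be a multiple of this figure.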

Similar to Fan and Lv (2008) and Fan and Song (2010), it is assumed that all covariates have been standardized so that

$$E(x_{il}) = 0 \quad \text{and} \quad E(x_{il}^2) = 1, \qquad l = 1, \ldots, s_n.$$

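We also assume that every element of Yi = (Yi,jk) has been standardized, that is,

$$E(Y_{i,jk}) = 0 \quad \text{and} \quad E(Y_{i,jk}^2) = 1, \qquad 1 \le j \le p, \; 1 \le k \le q.$$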

We propose to screen covariates based on the estimated marginal ordinary least squares (OLS) coefficient matrices

$$\hat{B}_l^M = \Big(\sum_{i=1}^{n} x_{il}^2\Big)^{-1} \sum_{i=1}^{n} x_{il} * Y_i, \qquad l = 1, \ldots, s_n.$$

Although the interpretations and implications of the marginal models differ from those of the joint model, the nonsparse information about the joint model can be passed along to the marginal model under a mild condition. Hence the marginal model is suitable for the purpose of variable screening (Fan and Song, 2010). Specifically, we calculate the spectral norm (operator norm, or largest singular value) of $\hat{B}_l^M$, denoted by $\|\hat{B}_l^M\|_{op}$, and define a submodel as

$$\hat{\mathcal{M}}_{\gamma_n} = \{1 \le l \le s_n : \|\hat{B}_l^M\|_{op} \ge \gamma_n\}, \tag{3}$$

where γn is a prefixed threshold.

The key advantage of using the spectral norm $\|\hat{B}_l^M\|_{op}$ of the marginal OLS estimate is that it explicitly accounts for the low-rank structure of the Bl0's, while being robust to noise and sensitive to various signal patterns (e.g., sparse strong signals and low-rank weak signals) in the coefficient matrices. In our screening step, we use the marginal OLS estimates of the coefficient matrices, which can be regarded as the true coefficient matrices corrupted with noise. One may instead use other summary statistics of $\hat{B}_l^M$ based on its component-wise information, such as the sum of the absolute values of all its elements, or the global Wald-type statistic used in Huang et al. (2015). It is well known that such summary statistics are sensitive to noise and suffer from the curse of dimensionality. Our simulation studies further confirm that our rank-one screening based on the spectral norm is more robust to noise and more sensitive to small signal regions. Another advantage of the spectral norm is its computational efficiency. In contrast, one could compute other regularized estimates (e.g., Lasso or fused Lasso) for screening, but doing so is computationally infeasible for L2RM when sn is much larger than the sample size.
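The rank-one screening rule can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes the responses are stored as an (n, p, q) array, computes each marginal OLS matrix as Σi xil Yi / Σi xil², and relies on NumPy's matrix 2-norm, which equals the largest singular value. The function names, toy data, sizes and threshold γ = 1.0 are hypothetical choices for illustration.

```python
import numpy as np


def marginal_ols(X, Y):
    """Marginal OLS coefficient matrices, one p x q matrix per covariate.

    X: (n, s) covariate matrix; Y: (n, p, q) stack of response matrices.
    Returns an (s, p, q) array whose l-th slice is sum_i x_il * Y_i / sum_i x_il^2.
    """
    numerator = np.einsum("il,ijk->ljk", X, Y)
    denominator = np.sum(X ** 2, axis=0)
    return numerator / denominator[:, None, None]


def rank_one_screen(X, Y, gamma):
    """Keep covariates whose marginal estimate has spectral norm >= gamma."""
    B_hat = marginal_ols(X, Y)
    # ord=2 over the last two axes gives the largest singular value of each slice.
    spec_norms = np.linalg.norm(B_hat, ord=2, axis=(1, 2))
    return np.flatnonzero(spec_norms >= gamma), spec_norms


# Toy example (hypothetical sizes): 2 active rank-one covariates out of 50.
rng = np.random.default_rng(0)
n, s, p, q = 200, 50, 8, 8
X = rng.standard_normal((n, s))
B_true = np.zeros((s, p, q))
B_true[0] = 2.0 * np.outer(np.ones(p), np.ones(q)) / np.sqrt(p * q)  # spectral norm 2
B_true[1] = 2.0 * np.outer(np.eye(p)[0], np.eye(q)[1])               # spectral norm 2
Y = np.einsum("il,ljk->ijk", X, B_true) + rng.standard_normal((n, p, q))

selected, norms = rank_one_screen(X, Y, gamma=1.0)
print(selected)
```

Only one p × q matrix per covariate and one largest-singular-value computation are needed, which is what keeps the step cheap when sn is huge; in practice the covariates would be processed in chunks rather than as one (n, s) array.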

A difficult issue in (3) is how to properly select γn. As shown in Section 3.1, when γn is chosen properly, our screening procedure enjoys the sure independence screening property (Fan and Lv, 2008). However, it is difficult to determine γn precisely in practice, since it involves two unknown positive constants, C1 and α, as shown in Theorem 1, which cannot be easily determined for a finite sample. We propose to use random decoupling to select γn, similar to the approach used in Barut et al. (2016). Let $\{\tilde{x}_i, i = 1, \ldots, n\}$ be a random permutation of the original covariates $\{x_i, i = 1, \ldots, n\}$. We apply our screening procedure to the randomly decoupled data $\{(\tilde{x}_i, Y_i), i = 1, \ldots, n\}$. Since random decoupling destroys the original association between xi and Yi, screening based on the decoupled data mimics the null model, i.e., the model with no association. We obtain the estimated marginal coefficient matrix $\tilde{B}_l^M$, which is a statistical estimate of the zero matrix, and the corresponding operator norm $\|\tilde{B}_l^M\|_{op}$ for all 1 ≤ l ≤ sn. Define $\nu_n = \max_{1 \le l \le s_n} \|\tilde{B}_l^M\|_{op}$, which is the minimum thresholding parameter that yields no false positives. Since νn depends on the realization of the permutation, we set the threshold value γn to the median of the values $\{\nu_n^{(k)}, 1 \le k \le K\}$ from K different random permutations, where $\nu_n^{(k)}$ is the threshold value for the kth permutation. We set K = 10 in this paper.
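A minimal sketch of the random-decoupling rule for γn follows. It assumes that the covariate vectors are the part being permuted (permuting the responses instead yields the same null behaviour) and that the data are stored as NumPy arrays; the function names and toy data are hypothetical, and K = 10 mirrors the choice in the text.

```python
import numpy as np


def marginal_spectral_norms(X, Y):
    """Spectral norm of each marginal OLS coefficient matrix (one per column of X)."""
    B_hat = np.einsum("il,ijk->ljk", X, Y) / np.sum(X ** 2, axis=0)[:, None, None]
    return np.linalg.norm(B_hat, ord=2, axis=(1, 2))


def decoupled_threshold(X, Y, K=10, seed=0):
    """Median over K permutations of nu_n = max_l ||B_tilde_l||_op.

    Permuting the rows of X breaks the pairing between x_i and Y_i,
    so every marginal estimate computed afterwards targets the zero matrix.
    """
    rng = np.random.default_rng(seed)
    nu = np.empty(K)
    for k in range(K):
        perm = rng.permutation(X.shape[0])
        nu[k] = marginal_spectral_norms(X[perm], Y).max()
    return float(np.median(nu)), nu


rng = np.random.default_rng(1)
X = rng.standard_normal((150, 40))
Y = rng.standard_normal((150, 6, 5))   # pure noise: no covariate is active
gamma_n, nu_values = decoupled_threshold(X, Y, K=10)
print(gamma_n, nu_values)
```

Taking the median rather than the maximum over permutations makes γn less sensitive to a single unusually large null draw.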

2.2. Estimation Method

To address (II), we consider the estimation of B when the true coefficient matrices Bl0's have a low-rank structure. The following refined estimation step can be applied after the screening step when the number of covariates is relatively small. For simplicity, we denote the set selected by the screening step by $\hat{\mathcal{M}}$. Suppose $\hat{\mathcal{M}} = \{l_1, \ldots, l_{\hat{s}}\}$, where $\hat{s} = |\hat{\mathcal{M}}|$. Define $B = (B_{l_1}, \ldots, B_{l_{\hat{s}}})$, which is a $p \times \hat{s}q$ matrix.

Recently, trace norm regularization ∥Bl∥* = ∑k σk(Bl) has been widely used to recover the low-rank structure of Bl due to its computational efficiency, where σk(Bl) is the kth singular value of Bl. For instance, the trace norm has been used for matrix completion (Candès and Recht, 2009), for matrix regression models with matrix covariates and univariate responses (Zhou and Li, 2014), and for multivariate linear regression with vector responses and scalar covariates (Yuan et al., 2007). Similarly, we propose to calculate the regularized least squares estimator of B by minimizing

$$Q(B) = \frac{1}{2n} \sum_{i=1}^{n} \Big\| Y_i - \sum_{d=1}^{\hat{s}} x_{il_d} * B_{l_d} \Big\|_F^2 + \lambda \sum_{d=1}^{\hat{s}} \|B_{l_d}\|_*, \tag{4}$$

where ∥ · ∥F is the Frobenius norm of a matrix and λ is a tuning parameter. The low-rank structure can be regarded as a special spatial structure, since a low-rank matrix is a sum of a few rank-one components, much like a truncated functional principal component expansion. We use five-fold cross-validation to select the tuning parameter λ. Ideally, we could choose different tuning parameters for different Bl, but doing so would dramatically increase the computational complexity.

We apply the Nesterov gradient method to solve problem (4), even though Q(B) is non-smooth (Nesterov, 2004; Beck and Teboulle, 2009). The Nesterov gradient method uses the first-order gradient of the objective function to obtain the next iterate from the current search point. Unlike the standard gradient descent algorithm, the Nesterov gradient algorithm generates the next search point by extrapolating from the two previous iterates, which can dramatically improve the convergence rate. Before introducing the Nesterov gradient algorithm, we first state a singular value thresholding formula for the trace norm (Cai et al., 2010).

Proposition 1. For a matrix A with singular values $\{a_k\}_{1 \le k \le r}$, the solution to

$$\min_{B} \; \frac{1}{2}\|B - A\|_F^2 + \lambda \|B\|_* \tag{5}$$

shares the same singular vectors as A, and its singular values are $b_k = (a_k - \lambda)_+$ for k = 1, …, r.
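Proposition 1 translates directly into code. The sketch below (NumPy assumed; the function names are hypothetical) keeps the singular vectors of A and soft-thresholds its singular values; the helper `objective` evaluates the criterion in (5) so that the minimizing property can be checked numerically.

```python
import numpy as np


def singular_value_threshold(A, lam):
    """Solve min_B 0.5 * ||B - A||_F^2 + lam * ||B||_* (Proposition 1).

    Keeps the singular vectors of A and soft-thresholds its singular values.
    """
    U, a, Vt = np.linalg.svd(A, full_matrices=False)
    b = np.maximum(a - lam, 0.0)          # b_k = (a_k - lam)_+
    return (U * b) @ Vt                   # U diag(b) V^T


def objective(B, A, lam):
    """Criterion (5): half squared Frobenius distance plus lam times the trace norm."""
    return 0.5 * np.sum((B - A) ** 2) + lam * np.linalg.svd(B, compute_uv=False).sum()


A = np.diag([3.0, 1.0])
print(singular_value_threshold(A, 2.0))  # singular values (3, 1) become (1, 0)
```

Because criterion (5) is strongly convex, any perturbation of the thresholded matrix strictly increases the objective, which is an easy numerical sanity check.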

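We present the Nesterov gradient algorithm for problem (4) as follows. Denote $R(B) = \frac{1}{2n}\sum_{i=1}^{n} \|Y_i - \sum_{d=1}^{\hat{s}} x_{il_d} * B_{l_d}\|_F^2$ and $J(B) = \lambda \sum_{d=1}^{\hat{s}} \|B_{l_d}\|_*$, so that Q(B) = R(B) + J(B). We also define

$$g(B \mid S^{(t)}, \delta) = R(S^{(t)}) + \langle \nabla R(S^{(t)}), B - S^{(t)} \rangle + \frac{1}{2\delta}\|B - S^{(t)}\|_F^2 + J(B) = \frac{1}{2\delta}\big\|B - \{S^{(t)} - \delta \nabla R(S^{(t)})\}\big\|_F^2 + J(B) + c^{(t)},$$

where ∇R(S(t)) denotes the first-order gradient of R(S(t)) with respect to S(t), S(t) is an extrapolation from B(t) and B(t–1) that will be defined below, c(t) denotes all terms that do not depend on B, and δ > 0 is a suitable step size. Given the current search point S(t), the next iterate B(t+1) is the minimizer of g(B∣S(t), δ), and the next search point S(t+1) is generated by linearly extrapolating the two most recent iterates. A key advantage of the Nesterov gradient method here is that each iteration has an explicit solution. Specifically, let $B_{l_d}$, $S^{(t)}_{l_d}$, and $\nabla R(S^{(t)})_{l_d}$ be the (dq – q + 1)th to the dqth columns of the matrices B, S(t), and ∇R(S(t)), respectively. Minimizing g(B∣S(t), δ) is equivalent to solving $\hat{s}$ sub-problems, the dth of which minimizes

$$\frac{1}{2\delta}\big\|B_{l_d} - \{S^{(t)}_{l_d} - \delta \nabla R(S^{(t)})_{l_d}\}\big\|_F^2 + \lambda \|B_{l_d}\|_*$$

for d = 1, …, $\hat{s}$, and each sub-problem can be solved exactly by the singular value thresholding formula in Proposition 1 with threshold δλ.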

Define $X = (x_{il_d})$, an $n \times \hat{s}$ matrix whose (i, d)th element is $x_{il_d}$, and let λmax(·) denote the largest eigenvalue of a matrix. Our algorithm can be stated as follows:

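Initialize B(0) = B(1), α(0) = 0, α(1) = 1, t = 1, and δ = n/λmax(XᵀX).

- Repeat

  (a) compute the search point $S^{(t)} = B^{(t)} + \{(\alpha^{(t-1)} - 1)/\alpha^{(t)}\}(B^{(t)} - B^{(t-1)})$;
  (b) compute $A_{temp} = S^{(t)} - \delta \nabla R(S^{(t)})$;
  (c) for d = 1, …, $\hat{s}$, compute the singular value decomposition $(A_{temp})_{l_d} = U_d \,\mathrm{diag}(a_d)\, V_d^T$ and set $(B_{temp})_{l_d} = U_d \,\mathrm{diag}\{(a_d - \delta\lambda)_+\}\, V_d^T$, where the thresholding is applied elementwise;
  (d) set $B^{(t+1)} = B_{temp}$, $\alpha^{(t+1)} = \{1 + (1 + 4(\alpha^{(t)})^2)^{1/2}\}/2$, and t = t + 1;

  until the objective function Q(B(t)) converges.

For the above matrices Atemp and Btemp, (Atemp)ld and (Btemp)ld denote the (dq – q + 1)th to the dqth columns of the corresponding matrices, respectively.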

A sufficient condition for the convergence of {B(t)}t≥1 is that the step size δ is smaller than or equal to 1/L(R), where L(R) is the smallest Lipschitz constant of the gradient ∇R(B) (Beck and Teboulle, 2009; Facchinei and Pang, 2003). For the objective in (4), L(R) = λmax(XᵀX)/n.
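The iteration above can be sketched as an accelerated proximal gradient (FISTA-type) loop in NumPy. This sketch assumes the objective Q(B) = (1/2n) Σi ∥Yi − Σd xild Bld∥F² + λ Σd ∥Bld∥*, so that L(R) = λmax(XᵀX)/n; it stores the $\hat{s}$ blocks Bld as an (ŝ, p, q) array rather than a p × ŝq matrix (equivalent, but easier to index), and uses a simple relative-change stopping rule. The function names, tolerance and toy data are illustrative assumptions, not the authors' code.

```python
import numpy as np


def svt(A, thresh):
    """Proposition 1: soft-threshold the singular values of A by thresh."""
    U, a, Vt = np.linalg.svd(A, full_matrices=False)
    return (U * np.maximum(a - thresh, 0.0)) @ Vt


def l2rm_fista(X, Y, lam, max_iter=500, tol=1e-8):
    """Accelerated proximal gradient sketch for
        Q(B) = 1/(2n) sum_i ||Y_i - sum_d x_id B_d||_F^2 + lam * sum_d ||B_d||_*.

    X: (n, s_hat) selected covariates; Y: (n, p, q) responses.
    Returns B as an (s_hat, p, q) array (block d is B_{l_d}).
    """
    n, s_hat = X.shape
    _, p, q = Y.shape
    lipschitz = np.linalg.eigvalsh(X.T @ X).max() / n  # L(R) for this scaling
    delta = 1.0 / lipschitz                              # step size delta = 1 / L(R)

    def residual(B):
        return Y - np.einsum("id,djk->ijk", X, B)

    def grad_R(B):
        return -np.einsum("id,ijk->djk", X, residual(B)) / n

    def Q(B):
        trace_norms = sum(np.linalg.svd(B[d], compute_uv=False).sum() for d in range(s_hat))
        return 0.5 * np.sum(residual(B) ** 2) / n + lam * trace_norms

    B_prev = np.zeros((s_hat, p, q))   # B^(0) = B^(1) = 0
    B_curr = B_prev.copy()
    a_prev, a_curr = 0.0, 1.0          # alpha^(0) = 0, alpha^(1) = 1
    obj_old = Q(B_curr)
    for _ in range(max_iter):
        # Search point: extrapolate from the two most recent iterates.
        S = B_curr + ((a_prev - 1.0) / a_curr) * (B_curr - B_prev)
        A_temp = S - delta * grad_R(S)
        # Block-wise singular value thresholding with threshold delta * lam.
        B_next = np.stack([svt(A_temp[d], delta * lam) for d in range(s_hat)])
        a_prev, a_curr = a_curr, (1.0 + np.sqrt(1.0 + 4.0 * a_curr ** 2)) / 2.0
        B_prev, B_curr = B_curr, B_next
        obj_new = Q(B_curr)
        if abs(obj_old - obj_new) <= tol * max(1.0, abs(obj_old)):
            break
        obj_old = obj_new
    return B_curr


# Toy check (hypothetical data): two rank-one blocks and one zero block.
rng = np.random.default_rng(1)
n, p, q = 200, 6, 6
X = rng.standard_normal((n, 3))
e = np.ones(p) / np.sqrt(p)
B_true = np.zeros((3, p, q))
B_true[0] = 3.0 * np.outer(e, e)
B_true[1] = 2.0 * np.outer(np.eye(p)[0], np.eye(q)[1])
Y = np.einsum("id,djk->ijk", X, B_true) + 0.1 * rng.standard_normal((n, p, q))
B_hat = l2rm_fista(X, Y, lam=0.01)
rel_err = np.linalg.norm(B_hat - B_true) / np.linalg.norm(B_true)
print(rel_err)
```

Using the fixed step δ = 1/L(R) avoids a line search; the price is that L(R) must be computed once from XᵀX, which is cheap because $\hat{s}$ is small after screening.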

Remarks: For model (1), it is assumed throughout the paper that xil has mean 0 and variance 1 for every 1 ≤ l ≤ s, and that Yi has mean 0. If these assumptions do not hold in practice, a simple solution is to carry out a standardization step that standardizes the covariates and centers the responses. We use this approach in the simulations and the real data analysis. An alternative approach is to introduce an intercept matrix B0 in model (1). Our screening procedure is invariant to such a standardization step if we calculate the (j, k)th element of the marginal estimate of Bl as the sample correlation between xil and Yi,jk. In the Supplementary Material, we present a modified algorithm for our estimation procedure and use simulations to evaluate the effects of the standardization step on estimating Bl. In our experience, scaling the covariates is necessary to ensure that all covariates are on the same scale, whereas centering the covariates and responses is not critical.
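The invariance claim in the remark above can be checked numerically. The sketch below (NumPy assumed; the function name and toy data are hypothetical) computes each entry of the marginal estimate as a sample correlation and verifies that positive rescaling and shifting of the covariates and responses leave every entry, and hence every spectral norm used for screening, unchanged.

```python
import numpy as np


def marginal_correlation(X, Y):
    """(l, j, k) entry = sample correlation between x_il and Y_i,jk."""
    Xs = (X - X.mean(axis=0)) / X.std(axis=0)
    Ys = (Y - Y.mean(axis=0)) / Y.std(axis=0)
    return np.einsum("il,ijk->ljk", Xs, Ys) / X.shape[0]


rng = np.random.default_rng(2)
X = rng.standard_normal((100, 5))
Y = rng.standard_normal((100, 4, 3)) + 0.5 * X[:, :1, None]  # covariate 0 is active
R = marginal_correlation(X, Y)
# Rescaling and shifting covariates or responses leaves every entry unchanged.
R_shifted = marginal_correlation(3.0 * X + 7.0, 2.0 * Y - 1.0)
print(np.allclose(R, R_shifted))   # True
```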

3. Theoretical Properties

To address (III), we systematically investigate several key theoretical properties of the screening procedure and the regularized estimation procedure, as well as a theoretical guarantee for our two-step estimator. First, we investigate the sure independence screening property of the rank-one screening procedure when s (also denoted by sn) diverges at an exponential rate of the sample size. Second, we investigate the estimation and rank consistency of our regularized estimator when both p and q are fixed. Third, we derive the non-asymptotic error bound for our estimator when both p and q are diverging. Finally, we establish an overall theoretical guarantee for our two-step estimator. We state the following theorems, whose detailed proofs can be found in Appendix B.

3.1. Sure Screening Property

The following assumptions facilitate the technical details; they may not be the weakest possible conditions, but they help to simplify the proofs.

(A0) The covariates xi are i.i.d. from a distribution with mean 0 and covariance matrix ∑x. Define . The vectorized error matrices vec(Ei) are i.i.d. from a distribution with mean 0 and covariance matrix ∑e, where vec(·) denotes the vectorization of a matrix. Moreover, xi and Ei = (Ei,jk) are independent.

(A1) There exist some constants C1 > 0, b > 0, and 0 < κ < 1/2 such that

where is a p × q matrix with the (j, k)th element being , and .

(A2) There exist positive constants C2 and C3 such that

for every 1 ≤ l ≤ sn, 1 ≤ j ≤ p and 1 ≤ k ≤ q.

(A3) There exists a constant C4 > 0 such that $\log(s_n) = C_4 n^{\xi}$ for $\xi \in (0, 1 - 2\kappa)$.

(A4) There exist constants C5 > 0 and τ > 0 such that $\lambda_{\max}(\Sigma_x) \le C_5 n^{\tau}$.

(A5) We assume $\log(pq) = o(n^{1-2\kappa})$.

Remarks: Assumptions (A0)-(A5) are used to establish the theory of our screening procedure when sn diverges to infinity. Assumption (A1) is analogous to Condition 3 in Fan and Lv (2008) and equation (4) in Fan and Song (2010), in which κ controls the rate of probability error in recovering the true sparse model. Assumption (A2) is analogous to Condition (D) in Fan and Song (2010) and Condition (E) in Fan et al. (2011); it requires that xil and Ei,jk are sub-Gaussian, which ensures that their tail probabilities are exponentially light. Assumption (A3) allows the dimension sn to diverge at an exponential rate of the sample size n, which is analogous to Condition 1 in Fan and Lv (2008). Assumption (A4) is analogous to Condition 4 in Fan and Lv (2008) and rules out strong collinearity. Assumption (A5) allows the product pq of the row and column dimensions of the response matrix to diverge at an exponential rate of the sample size n.

The following theorems show the sure screening property of the screening procedure. We allow p and q to be either fixed or diverging with sample size n.

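Theorem 1. Under Assumptions (A0)-(A3) and (A5), let $\gamma_n = \alpha C_1 (pq)^{1/2} n^{-\kappa}$ with 0 < α < 1; then the submodel selected in (3) contains the true model in (2) with probability tending to 1 as n → ∞.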

Theorem 1 shows that, if γn is chosen properly, our rank-one screening procedure will not miss any important variables with overwhelming probability. Since the screening procedure trivially includes all the important covariates when γn is small, it is necessary to control the size of the selected submodel when $\gamma_n = \alpha C_1 (pq)^{1/2} n^{-\kappa}$.

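Theorem 2. Under Assumptions (A0)-(A5), with $\gamma_n = \alpha C_1 (pq)^{1/2} n^{-\kappa}$ and 0 < α < 1, the size of the submodel selected in (3) is of order $O(n^{2\kappa+\tau})$ with probability tending to 1 as n → ∞.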

Theorem 2 indicates that the selected model size with the sure screening property is only at a polynomial order of n, even though the original model size is at an exponential order of n. Therefore, the false selection rate of our screening procedure vanishes as n → ∞.

3.2. Theory for Estimation Procedure

From this subsection on, we denote the submodel in (3) selected by the screening step by $\hat{\mathcal{M}}$ for notational simplicity. We first provide some theoretical results for our estimation procedure. We assume that the screening step exactly selects all the important variables, i.e., $\hat{\mathcal{M}}$ equals the true model in (2), and that its size is fixed. The results are also applicable when the original s is fixed, in which case only our estimation procedure needs to be applied.

We need more notation before introducing further assumptions. Suppose the rank of Bl0 is rl. For every l = 1, …, sn, we denote by $B_{l0} = U_{l0} D_{l0} V_{l0}^T$ the singular value decomposition of Bl0 and use $U_{l0\perp}$ and $V_{l0\perp}$ to denote the orthogonal complements of Ul0 and Vl0, respectively. Define as the covariance matrix for , where . We further define , and for , where ⊗ denotes the Kronecker product. Let and . We define for such that . The matrix Λl has an interesting interpretation; for instance, it can be shown to be the Lagrange multiplier of an optimization problem. We give more interpretation of Λl in Appendix C.

We then state additional assumptions that are needed to establish the theory of our estimation procedure when both p and q are assumed to be fixed.

The following assumptions (A6)-(A8) are needed.

(A6) The is nonsingular.

(A7) The holds.

(A8) For every , we assume ∥Λl∥op < 1.

Remarks: Assumption (A6) is a regularity condition in the low dimensional context, which rules out the scenario when one covariate is exactly a linear combination of other covariates. Assumption (A7) is used for rank consistency. Assumption (A8) can be regarded as the irrepresentable condition of Zhao and Yu (2006) in the rank consistency context. A similar condition can be found in Bach (2008).

Let $\hat{B}_l$ denote the regularized low-rank estimator of Bl obtained from (4). When both p and q are fixed, we have the following consistency results for different convergence rates of the tuning parameter.

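Theorem 3. (Estimation Consistency) Under Assumptions (A0) and (A6), we have

(i) if $n^{1/2}\lambda \to \infty$ and λ → 0, then $\|\hat{B}_l - B_{l0}\|_F = O_p(\lambda)$ for every important covariate l;

(ii) if $n^{1/2}\lambda \to \rho \in [0, \infty)$ as n → ∞, then $\|\hat{B}_l - B_{l0}\|_F = O_p(n^{-1/2})$ for every important covariate l.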

Theorem 3 reveals an interesting phase-transition phenomenon. When λ is relatively small or moderate, the convergence rate of the regularized estimator $\hat{B}_l$ is of order n−1/2, whereas when λ is large, the convergence rate of $\hat{B}_l$ is approximately of order λ. Although we have established the consistency of $\hat{B}_l$ as λ → 0, the next question is whether the rank of $\hat{B}_l$ is consistent under the same set of conditions. It turns out that such rank consistency holds only for relatively large λ, which converges to zero more slowly than n−1/2.

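Theorem 4. (Rank Consistency) Under Assumptions (A0) and (A6)-(A8), if λ → 0 and $n^{1/2}\lambda \to \infty$, then the rank of the regularized estimator of Bl equals rl with probability tending to 1 for every important covariate l.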

Theorem 4 establishes the rank consistency of our regularized estimates. Theorems 3 and 4 reveal that estimation consistency and rank consistency hold simultaneously only when λ → 0 and $n^{1/2}\lambda \to \infty$. This phenomenon is similar to that for the Lasso estimator: although the Lasso estimator can achieve model selection consistency, its convergence rate cannot achieve n−1/2 when selection consistency holds (Zou, 2006).

We then consider the case when p and q are assumed to be diverging. The following assumptions (A9)-(A12) are needed.

(A9) There exist positive constants CL and CM such that .

(A10) We assume that are i.i.d multivariate normal with mean 0 and covariance matrix .

(A11) The vectorized error matrices vec(Ei) are i.i.d N(0, ∑e), where .

(A12) We assume max(p, q) → ∞ and max(p, q) = o(n) as n → ∞.

Remarks: Assumptions (A9)-(A12) are needed for our estimation procedure when both p and q diverge with the sample size n. Assumption (A9) assumes the largest eigenvalue of is bounded and the smallest eigenvalue of is greater than 0. Assumption (A10) assumes that the covariates xil's are Gaussian. Assumption (A11) assumes that the largest eigenvalue of ∑e is bounded. Assumption (A12) allows p and q to diverge more slowly than n, while still allowing pq > n.

We then show the following non-asymptotic bound for our estimation procedure when both p and q are diverging.

Theorem 5. (Nonasymptotic bound when both p and q diverge) Under Assumptions (A9)-(A12), when , there exist some positive constants c1, c2 and c3 such that with probability at least 1 – c1 exp{−c2(p + q)} – c3 exp(−n), we have

for some constant C > 0.

Theorem 5 implies that when and are fixed and $\lambda \asymp n^{-1/2}(p^{1/2} + q^{1/2})$, the estimator is consistent with probability tending to 1. The convergence rate of the estimator in Theorem 5 coincides with that in Corollary 5 of Negahban et al. (2009), who studied the low-rank matrix learning problem using trace norm regularization. Although they consider a different model, they also require the dimension of the matrix to satisfy max(p, q) = o(n). This differs significantly from the L1-regularized problem, where the dimension may diverge at an exponential order of the sample size. The result in this theorem can also be regarded as a special case of the result in Raskutti and Yuan (2018), who derived non-asymptotic error bounds for a class of tensor regression models with sparse or low-rank penalties.

3.3. Theory for Two-step Estimator

In this section, we give a unified theory for our two-step estimator. In particular, we derive the non-asymptotic bound for our final estimate. To begin with, we first introduce some notations. For simplicity, we will use to denote , which is the set selected from the first step. Define and the true value of as . Define as the solution of the regularized trace norm penalization problem given by

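$$\hat{B}_{\hat{\mathcal{M}}} = \operatorname*{argmin}_{B_l,\, l \in \hat{\mathcal{M}}} \Big\{ \frac{1}{2n} \sum_{i=1}^{n} \Big\| Y_i - \sum_{l \in \hat{\mathcal{M}}} x_{il} * B_l \Big\|_F^2 + \lambda \sum_{l \in \hat{\mathcal{M}}} \|B_l\|_* \Big\}. \tag{6}$$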

We need the following assumptions.

(A13) Assume 2κ + τ < 1. Define for any $m = O(n^{2\kappa+\tau})$ and . We further assume ιL > 0.

(A14) Assume $\max(p, q)/\log(n) \to \infty$ and $\max(p, q) = o(n^{1-2\tau})$ as n → ∞, with τ < 1/2.

Theorem 6. (Nonasymptotic bound for two-step estimator) Under Assumptions (A0)-(A5), (A10), (A11), (A13), and (A14), when $\lambda \ge 4C_5 n^{\tau-1/2}(p^{1/2} + q^{1/2})$, there exist some positive constants c1, c2, c3, c4, c5 such that, with probability at least $1 - c_1 n^{2\kappa+\tau}\exp\{-c_2(p+q)\} - c_3 n^{2\kappa+\tau}\exp(-n) - c_4\exp(-c_5 n^{1-2\kappa})$, we have

for some constant C > 0.

Theorem 6 implies that when and are fixed and ιL is fixed, the estimator is consistent with probability tending to 1 when $\lambda \asymp n^{\tau-1/2}(p^{1/2} + q^{1/2})$. Theorem 6 gives an overall theoretical guarantee for our two-step estimator that accounts for the randomness of the selection in the first step. A key fact used in the proof of Theorem 6 is that our first-step screening procedure enjoys the sure independence screening property. Hence, we only need to derive the non-asymptotic bound for the cases in which we exactly select or over-select the important variables, as these hold with overwhelming probability.

4. Simulations

We conduct simulations to examine the finite sample performance of the proposed estimation and screening procedures. For the sake of space, we include additional simulation results in the Supplementary Material.

4.1. Regularized Low-rank Estimate

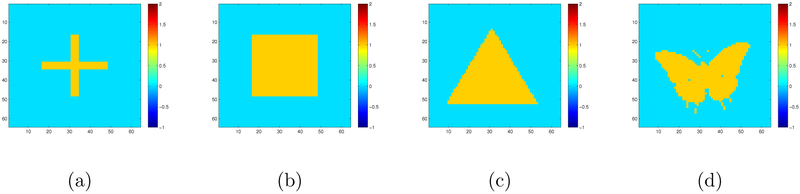

In the first simulation, we simulate 64 × 64 matrix responses according to model (1) with s = 4 covariates. We set the four true coefficient matrices to be a cross shape (B10), a square shape (B20), a triangle shape (B30), and a butterfly shape (B40). The images of Bl0 are shown in Figure 1, and each of them consists of a yellow region of interest (ROI) containing ones and a blue ROI containing zeros.

Figure 1:

Simulation I setting: the four 64 × 64 true coefficient matrices: the cross shape for B10 in panel (a), the square shape for B20 in panel (b), the triangle shape for B30 in panel (c), and the butterfly shape for B40 in panel (d). The regression coefficient at each pixel is either 0 (blue) or 1 (yellow).

We independently generate all scalar covariates xi from N(0, ∑x), where ∑x = (σx,ll′) is a covariance matrix with an autoregressive structure such that $\sigma_{x,ll'} = \rho_1^{|l-l'|}$ for 1 ≤ l, l′ ≤ s with ρ1 = 0.5. We independently generate vec(Ei) from N(0, ∑e). Specifically, we set the variances of all elements in Ei to be σ² and the correlation between Ei,jk and Ei,j′k′ to be $\rho_2^{|j-j'|+|k-k'|}$ for 1 ≤ j, k, j′, k′ ≤ 64 with ρ2 = 0.5. We consider three different sample sizes, n = 100, 200, and 500, and set σ² to be 1 and 25.
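A sketch of the data-generating step, assuming σx,ll′ = ρ1^{|l−l′|} for the covariates and a common error variance σ² with error correlation ρ2^{|j−j′|+|k−k′|}. Because that correlation is separable (an AR(1) matrix over rows times an AR(1) matrix over columns), each error matrix can be drawn as σ Lr Z Lcᵀ from small Cholesky factors instead of factoring the 4096 × 4096 matrix ∑e. The coefficient images Bl0 of Figure 1 are not reproduced here, and the function names are hypothetical.

```python
import numpy as np


def ar1_cov(dim, rho):
    """AR(1) matrix with (a, b) entry rho^|a - b|."""
    idx = np.arange(dim)
    return rho ** np.abs(idx[:, None] - idx[None, :])


def simulate_errors(n, p, q, sigma2, rho2, rng):
    """Errors with variance sigma2 and corr(E_jk, E_j'k') = rho2^(|j-j'| + |k-k'|).

    The separable correlation factors into row and column AR(1) matrices,
    so E = sigma * L_r Z L_c^T with i.i.d. standard normal Z has that covariance.
    """
    L_r = np.linalg.cholesky(ar1_cov(p, rho2))
    L_c = np.linalg.cholesky(ar1_cov(q, rho2))
    Z = rng.standard_normal((n, p, q))
    return np.sqrt(sigma2) * (L_r @ Z @ L_c.T)


rng = np.random.default_rng(3)
n, s, p, q = 100, 4, 64, 64
Sigma_x = ar1_cov(s, 0.5)                              # rho_1 = 0.5
X = rng.multivariate_normal(np.zeros(s), Sigma_x, size=n)
E = simulate_errors(n, p, q, sigma2=1.0, rho2=0.5, rng=rng)

# Sanity check on a small grid: corr(E_00, E_11) should be near 0.5^2 = 0.25.
E_small = simulate_errors(20000, 3, 3, sigma2=1.0, rho2=0.5, rng=rng)
print(np.corrcoef(E_small[:, 0, 0], E_small[:, 1, 1])[0, 1])
```

The responses would then be formed as Yi = Σl xil Bl0 + Ei once the Bl0 images are available.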

We use 100 replications to evaluate the finite sample performance of our regularized low-rank (RLR) estimates $\hat{B}_l$. To evaluate the estimation accuracy, we compute the mean squared error of $\hat{B}_l$, denoted by MSE($\hat{B}_l$), for all 1 ≤ l ≤ 4. We also calculate the prediction error (PE) using ntest = 500 independently generated test observations.
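The formulas behind MSE and PE are not spelled out here. The sketch below assumes that MSE is the squared Frobenius error of a coefficient estimate and that PE is the mean squared prediction error per response entry on the test set; these definitions are assumptions, chosen because they are consistent with the PE values close to σ² in Table 1. Function names are hypothetical.

```python
import numpy as np


def mse(B_hat, B_true):
    """Assumed definition: squared Frobenius error ||B_hat - B_true||_F^2."""
    return float(np.sum((B_hat - B_true) ** 2))


def prediction_error(X_test, Y_test, B_hat):
    """Assumed definition: average squared error per response entry on test data.

    X_test: (n_test, s); Y_test: (n_test, p, q); B_hat: (s, p, q).
    """
    Y_pred = np.einsum("il,ljk->ijk", X_test, B_hat)
    return float(np.mean((Y_test - Y_pred) ** 2))


B_true = np.zeros((2, 2))
print(mse(B_true + 0.1, B_true))   # 4 entries * 0.01 = 0.04, up to rounding
```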

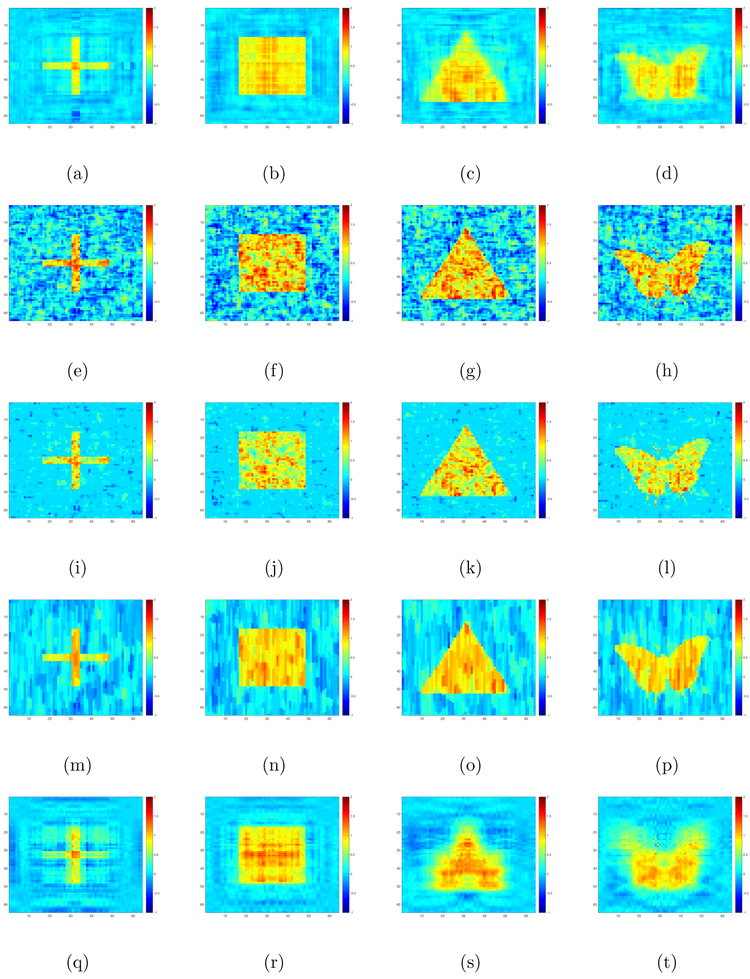

We compare our method with OLS, Lasso, fused Lasso and the tensor envelope method (Li and Zhang, 2017). For a fair comparison, we also use five-fold cross-validation to select the regularization parameters of Lasso and fused Lasso and the envelope dimension of the tensor envelope method. The results are shown in Table 1. We also plot in Figure 2 the RLR, OLS, Lasso, fused Lasso and tensor envelope estimates of {Bl, 1 ≤ l ≤ 4} obtained from a randomly selected data set with n = 500.

Table 1:

Simulation I results: the means of PEs and MSEs for the regularized low-rank (RLR), OLS, Lasso, fused Lasso (Fused) and tensor envelope (Envelope) estimates, with their associated standard errors in parentheses. For each case, 100 simulated data sets are used.

| (n, σ²) | Method | MSE(B1) | MSE(B2) | MSE(B3) | MSE(B4) | PE |
|---|---|---|---|---|---|---|
| (100, 1) | RLR | 11.67(0.21) | 9.96(0.22) | 43.21(0.43) | 44.88(0.52) | 1.03(0.0002) |
| | OLS | 58.08(0.83) | 72.38(1.08) | 71.66(1.01) | 58.00(0.92) | 1.05(0.0004) |
| | Lasso | 42.96(0.79) | 53.79(1.08) | 53.21(0.98) | 44.87(0.75) | 1.04(0.0004) |
| | Fused | 11.85(0.20) | 11.25(0.22) | 13.61(0.23) | 17.87(0.26) | 1.02(0.0002) |
| | Envelope | 21.20(0.34) | 24.95(0.36) | 51.14(0.49) | 55.62(0.67) | 1.04(0.0002) |
| (200, 1) | RLR | 7.27(0.09) | 6.73(0.10) | 23.77(0.20) | 23.08(0.23) | 1.02(0.0001) |
| | OLS | 28.61(0.30) | 34.88(0.36) | 34.85(0.38) | 28.11(0.32) | 1.03(0.0001) |
| | Lasso | 19.29(0.38) | 23.86(0.43) | 23.73(0.41) | 20.30(0.29) | 1.02(0.0002) |
| | Fused | 5.93(0.09) | 5.62(0.08) | 6.59(0.10) | 8.63(0.10) | 1.01(0.0001) |
| | Envelope | 11.21(0.16) | 13.13(0.14) | 38.40(0.30) | 43.33(0.35) | 1.03(0.0001) |
| (500, 1) | RLR | 3.46(0.03) | 3.53(0.03) | 10.54(0.06) | 9.75(0.06) | 1.006(0.00003) |
| | OLS | 11.01(0.08) | 13.87(0.10) | 13.88(0.09) | 11.04(0.07) | 1.009(0.00003) |
| | Lasso | 5.93(0.17) | 7.89(0.17) | 7.78(0.18) | 6.70(0.13) | 1.007(0.00009) |
| | Fused | 2.36(0.03) | 2.37(0.02) | 2.73(0.03) | 3.29(0.03) | 1.004(0.00002) |
| | Envelope | 5.44(0.07) | 6.60(0.07) | 31.72(0.21) | 38.29(0.30) | 1.02(0.0001) |
| (100, 25) | RLR | 121.61(1.69) | 119.58(2.37) | 227.58(2.01) | 263.90(2.77) | 25.37(0.0027) |
| | OLS | 1451.95(20.64) | 1809.40(27.01) | 1791.53(25.36) | 1450.10(23.10) | 26.27(0.0099) |
| | Lasso | 1360.23(19.03) | 1683.95(24.74) | 1669.88(23.97) | 1367.71(21.34) | 26.22(0.0093) |
| | Fused | 238.87(3.04) | 254.78(4.36) | 290.50(4.47) | 283.07(3.89) | 25.42(0.0031) |
| | Envelope | 175.09(1.57) | 139.95(2.71) | 259.48(2.18) | 286.98(1.81) | 25.39(0.0023) |
| (200, 25) | RLR | 79.44(1.01) | 71.27(1.25) | 171.12(1.21) | 201.43(1.63) | 25.26(0.0013) |
| | OLS | 715.28(7.49) | 872.02(8.93) | 871.33(9.41) | 702.70(8.00) | 25.66(0.0037) |
| | Lasso | 657.54(7.19) | 798.43(8.58) | 798.01(9.04) | 652.91(7.14) | 25.62(0.0035) |
| | Fused | 156.75(2.00) | 162.27(1.95) | 174.79(2.34) | 175.61(2.20) | 25.27(0.0016) |
| | Envelope | 151.68(1.55) | 105.18(1.61) | 202.96(2.78) | 230.24(2.63) | 25.29(0.0016) |
| (500, 25) | RLR | 42.17(0.50) | 39.70(0.59) | 110.16(0.79) | 125.08(0.75) | 25.10(0.0005) |
| | OLS | 275.31(2.05) | 346.69(2.54) | 346.93(2.36) | 276.05(1.83) | 25.24(0.0008) |
| | Lasso | 238.43(2.33) | 299.22(2.99) | 298.81(2.90) | 243.44(1.95) | 25.22(0.0011) |
| | Fused | 80.31(0.84) | 89.14(0.79) | 93.22(0.90) | 89.8(0.83) | 25.10(0.0006) |
| | Envelope | 95.49(0.99) | 75.41(0.99) | 142.24(1.51) | 171.34(1.31) | 25.14(0.0008) |

Figure 2:

Simulation I results: the RLR (panels (a)-(d)), OLS (panels (e)-(h)), Lasso (panels (i)-(l)), fused Lasso (panels (m)-(p)) and Envelope (panels (q)-(t)) estimates of the coefficient matrices from a randomly selected training dataset with n = 500, ρ1 = 0.5, ρ2 = 0.5 and σ² = 25: $\hat B_1$ (first column), $\hat B_2$ (second column), $\hat B_3$ (third column), and $\hat B_4$ (fourth column).

Inspecting Figure 2 and Table 1 reveals the following findings. First, our method outperforms OLS and the envelope method in all settings. Second, when the true coefficient matrices are of low rank (cross and square), our method clearly outperforms Lasso. Third, for these low-rank shapes, our method outperforms fused Lasso when either the sample size is small or the noise variance is large, whereas fused Lasso outperforms our method in the remaining cases. Fourth, when the true coefficient matrices are not of low rank (triangle and butterfly), fused Lasso performs best in most cases, whereas our method outperforms Lasso when either the noise level is high or the sample size is small.

These findings are not surprising. First, since all the true coefficient matrices in this simulation are sparse and piecewise constant, the fused Lasso method is expected to perform well. Second, Lasso works reasonably well because it still imposes a sparse structure. Third, since our method is designed for low-rank coefficient matrices, it performs well for the low-rank cross and square shapes but relatively worse for the triangle and butterfly shapes.
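To illustrate why a trace-norm penalty favors low-rank coefficient matrices, here is a minimal proximal-gradient sketch. It assumes the objective $\frac{1}{2n}\sum_i \|Y_i - \sum_l x_{il}B_l\|_F^2 + \lambda\sum_l\|B_l\|_*$. The paper's exact objective, tuning and solver may differ, and `svt` and `rlr_fit` are hypothetical names. The proximal map of the trace norm is singular value thresholding, which sets small singular values exactly to zero. This produces exactly low-rank estimates and helps explain why slowly decaying spectra, such as those of the triangle and butterfly shapes, are estimated less well.

```python
import numpy as np

def svt(M, tau):
    """Singular value thresholding: the proximal map of tau * ||.||_* at M."""
    U, d, Vt = np.linalg.svd(M, full_matrices=False)
    return (U * np.maximum(d - tau, 0.0)) @ Vt

def rlr_fit(X, Y, lam, n_iter=500):
    """Proximal gradient (ISTA) for the assumed objective
        (1 / (2n)) * sum_i ||Y_i - sum_l x_il B_l||_F^2 + lam * sum_l ||B_l||_*.
    X: n x s covariates, Y: n x p x q responses. Returns an s x p x q array.
    """
    n, s = X.shape
    B = np.zeros((s,) + Y.shape[1:])
    # Step size 1/L, where L = lambda_max(X^T X) / n is the gradient's Lipschitz constant.
    step = n / np.linalg.eigvalsh(X.T @ X).max()
    for _ in range(n_iter):
        resid = Y - np.einsum("il,ljk->ijk", X, B)
        grad = -np.einsum("il,ijk->ljk", X, resid) / n
        # The penalty is separable over l, so the prox is applied to each B_l.
        B = np.stack([svt(B[l] - step * grad[l], step * lam) for l in range(s)])
    return B
```

In practice λ would be chosen by cross-validation, as in the simulations. Plain ISTA is used here for readability; an accelerated (FISTA-type) variant typically converges in fewer iterations.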

We then conduct a second simulation study in which the coefficient matrices have only a low-rank structure and no sparse structure. Specifically, we simulate 64 × 64 matrix responses according to model (1) with s = 2 covariates. We set the two true coefficient matrices as $B_{10} = \sum_{j=1}^{10}\lambda_{1,j}u_{1,j}v_{1,j}^T$ and $B_{20} = \sum_{j=1}^{5}\lambda_{2,j}u_{2,j}v_{2,j}^T$, where λ1 = (λ1,1, …, λ1,10)T = (2, 1.8, 1.6, 1.4, 1.2, 1, 0.8, 0.6, 0.4, 0.2)T, λ2 = (λ2,1, λ2,2, λ2,3, λ2,4, λ2,5)T = (2, 1.6, 1.2, 0.8, 0.4)T, and u1,j, u2,j, v1,j, and v2,j are column vectors of dimension 64. U1 = (u1,1, …, u1,10) and V1 = (v1,1, …, v1,10) are each generated by orthogonalizing a 64 × 10 matrix whose elements are i.i.d. standard normal, and U2 = (u2,1, …, u2,5) and V2 = (v2,1, …, v2,5) are each generated by orthogonalizing a 64 × 5 matrix whose elements are i.i.d. standard normal. All other settings are the same as in the first simulation. Table 2 summarizes the results: our method outperforms all the competing methods when the true coefficient matrices have a low-rank but not a sparse structure.
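The low-rank, non-sparse coefficient matrices of the second simulation can be generated as follows. This is a minimal NumPy sketch: it uses a QR decomposition for the orthogonalization step (the text does not say which method was used), and `low_rank_coefficient` is a hypothetical name. Because U and V have orthonormal columns, the singular values of the resulting matrix are exactly the prescribed λ's.

```python
import numpy as np

def low_rank_coefficient(lams, p, q, rng):
    """B = sum_j lams[j] * u_j v_j^T, where U = (u_1, ..., u_r) and
    V = (v_1, ..., v_r) come from orthogonalizing (via QR) p x r and q x r
    matrices with i.i.d. N(0, 1) entries."""
    r = len(lams)
    U, _ = np.linalg.qr(rng.standard_normal((p, r)))
    V, _ = np.linalg.qr(rng.standard_normal((q, r)))
    return U @ np.diag(lams) @ V.T

rng = np.random.default_rng(7)
lam1 = np.linspace(2.0, 0.2, 10)   # 2, 1.8, ..., 0.2
lam2 = np.linspace(2.0, 0.4, 5)    # 2, 1.6, 1.2, 0.8, 0.4
B10 = low_rank_coefficient(lam1, 64, 64, rng)   # rank 10
B20 = low_rank_coefficient(lam2, 64, 64, rng)   # rank 5
```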

Table 2:

Simulation II results: the means of PEs and MSEs for the regularized low-rank (RLR), OLS, Lasso, fused Lasso (Fused) and tensor envelope (Envelope) estimates, with their associated standard errors in parentheses. For each case, 100 simulated data sets are used.

| (n, σ²) | Method | MSE(B1) | MSE(B2) | PE |
|---|---|---|---|---|
| (100, 1) | RLR | 21.86(0.33) | 13.91(0.20) | 1.02(0.0001) |
| | OLS | 41.85(0.16) | 56.82(0.82) | 1.03(0.0003) |
| | Lasso | 55.78(0.88) | 54.40(0.73) | 1.03(0.0002) |
| | Fused | 57.39(0.94) | 56.61(0.77) | 1.03(0.0002) |
| | Envelope | 41.46(0.15) | 33.23(0.54) | 1.02(0.0002) |
| (200, 1) | RLR | 10.84(0.12) | 6.77(0.07) | 1.011(0.00005) |
| | OLS | 20.75(0.07) | 27.80(0.30) | 1.018(0.0001) |
| | Lasso | 27.90(0.31) | 27.03(0.29) | 1.018(0.0001) |
| | Fused | 27.69(0.30) | 27.29(0.29) | 1.018(0.0001) |
| | Envelope | 20.68(0.07) | 18.33(0.22) | 1.014(0.00008) |
| (500, 1) | RLR | 4.19(0.05) | 2.73(0.02) | 1.004(0.00002) |
| | OLS | 8.22(0.03) | 10.94(0.08) | 1.006(0.00003) |
| | Lasso | 12.49(0.18) | 12.18(0.17) | 1.007(0.00006) |
| | Fused | 10.99(0.08) | 10.91(0.09) | 1.006(0.00003) |
| | Envelope | 8.26(0.03) | 9.37(0.09) | 1.006(0.00003) |
| (100, 25) | RLR | 391.95(5.50) | 254.67(3.54) | 25.37(0.0024) |
| | OLS | 1044(4.10) | 1447(23.94) | 25.77(0.0060) |
| | Lasso | 1378.32(22.99) | 1360.95(18.52) | 25.75(0.0059) |
| | Fused | 1232.31(17.74) | 1042.72(12.13) | 25.68(0.0055) |
| | Envelope | 1033.69(3.66) | 626.57(7.71) | 25.46(0.0027) |
| (200, 25) | RLR | 219.13(2.14) | 136.41(1.33) | 25.26(0.0011) |
| | OLS | 518.63(1.83) | 694.98(7.47) | 25.45(0.0025) |
| | Lasso | 657.39(7.19) | 644.78(7.20) | 25.44(0.0025) |
| | Fused | 637.01(6.45) | 589.9(5.68) | 25.43(0.0022) |
| | Envelope | 516.52(1.81) | 395.64(3.67) | 25.33(0.0015) |
| (500, 25) | RLR | 101.8(0.91) | 64.19(0.57) | 25.09(0.0005) |
| | OLS | 206.57(0.73) | 275.3(2.05) | 25.16(0.0008) |
| | Lasso | 259.2(1.95) | 254.26(2.17) | 25.16(0.0008) |
| | Fused | 265.44(1.89) | 255.88(1.99) | 25.16(0.0008) |
| | Envelope | 206.41(0.73) | 226.94(1.54) | 25.14(0.0006) |

4.2. Rank-one Screening using SNP Covariates

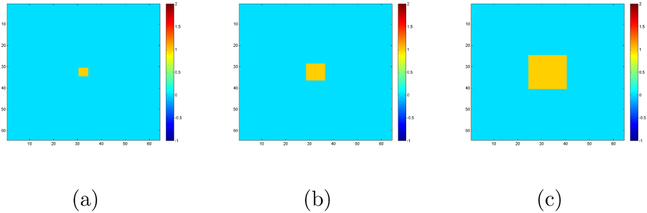

We generate 64 × 64 matrix responses according to model (1). We use the same method as in Section 4.1 to generate Ei with ρ2 = 0.5 and σ² = 1 or 25. We generate genetic covariates by mimicking the SNP data used in Section 5. Specifically, we use linkage disequilibrium (LD) blocks defined by the default method (Gabriel et al., 2002) of Haploview (Barrett et al., 2005) and PLINK (Purcell et al., 2007) to form SNP-sets. To calculate LD blocks, n subjects are simulated by randomly combining haplotypes of HapMap CEU subjects, and we use PLINK to determine the LD blocks based on these subjects. We randomly select sn/10 blocks and combine haplotypes of HapMap CEU subjects in each block to form genotype variables for these subjects. We randomly select 10 SNPs in each block, so that each subject has sn SNPs. We set sn = 2,000 and 5,000 and choose the first 20 SNPs as the significant SNPs; that is, we set the first 20 true coefficient matrices to the same nonzero matrix, B1,0 = … = B20,0 = Btrue, and the remaining coefficient matrices to zero. We consider three types of coefficient matrices Btrue with significant regions of different sizes, (ps, qs) = (4, 4), (8, 8), and (16, 16), where ps and qs denote the dimensions of the true significant region of interest. Figure 3 presents the true images Btrue; each contains a yellow ROI containing ones and a blue ROI containing zeros.

In this subsection, we evaluate the effect of different choices of γn on the finite-sample performance of the screening procedure; the proposed random decoupling is investigated in the next subsection. Specifically, by sorting the rank-one screening statistics (the spectral norms of the marginal coefficient matrix estimates) in descending order, we define $\hat{\mathcal I}_k$ as the index set of the k largest statistics:

$$\hat{\mathcal I}_k = \{1 \le l \le s_n : \text{the } l\text{-th screening statistic is among the } k \text{ largest}\}. \qquad (7)$$

We apply our screening procedure to each simulated data set and report the average true nonzero coverage proportion as k varies from 1 to 200. In this case, $\mathcal I = \{1, \ldots, 20\}$ is the set of all true nonzero indices, and $\hat{\mathcal I}_k$ is the index set selected by our screening method. The true nonzero coverage proportion is defined as $|\hat{\mathcal I}_k \cap \mathcal I| / |\mathcal I|$. We consider three sample sizes, n = 100, 200, and 500, and run 100 Monte Carlo replications for each scenario.
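The ranking statistics compared in Figures 4 and 5 can be sketched as follows. This is an illustrative sketch under stated assumptions: the marginal coefficient matrix for covariate l is taken to be the univariate least-squares fit $\tilde B_l = \sum_i x_{il}Y_i / \sum_i x_{il}^2$; the paper's exact marginal estimator, its random-decoupling threshold γn, and the global Wald test are not reproduced; and all function names are hypothetical. Rank-one screening ranks covariates by the spectral norm (largest singular value) of $\tilde B_l$, while the alternatives use the Frobenius and entrywise L1 norms.

```python
import numpy as np

def marginal_coefficients(X, Y):
    """Assumed marginal estimate for covariate l: sum_i x_il Y_i / sum_i x_il^2.
    X: n x s_n, Y: n x p x q. Returns an s_n x p x q array."""
    num = np.einsum("il,ijk->ljk", X, Y)
    return num / np.sum(X ** 2, axis=0)[:, None, None]

def screening_statistics(Bm):
    """Norms of each marginal matrix used to rank the covariates."""
    return {
        "rank_one": np.linalg.norm(Bm, ord=2, axis=(1, 2)),  # spectral norm
        "frobenius": np.linalg.norm(Bm, axis=(1, 2)),         # Frobenius norm
        "l1": np.abs(Bm).sum(axis=(1, 2)),                    # entrywise L1 norm
    }

def top_k(stat, k):
    """0-based indices of the k largest statistics."""
    return set(np.argsort(-stat)[:k].tolist())

def coverage(selected, true_set):
    """True nonzero coverage proportion |selected ∩ true| / |true|."""
    true_set = set(true_set)
    return len(set(selected) & true_set) / len(true_set)
```

With s_n in the millions, as in Section 5, the marginal matrices would be computed in chunks of covariates rather than held in memory at once.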

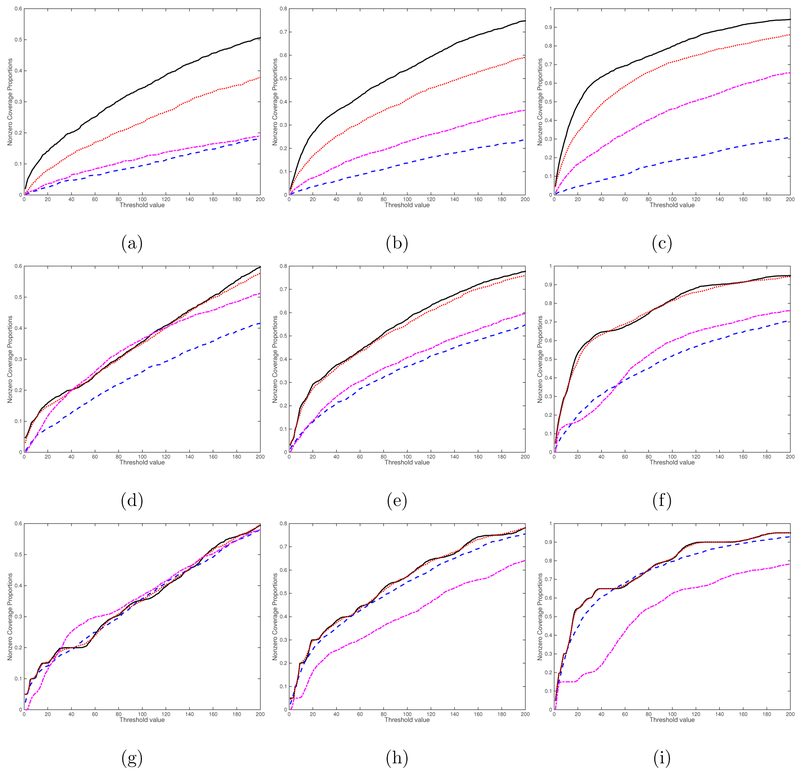

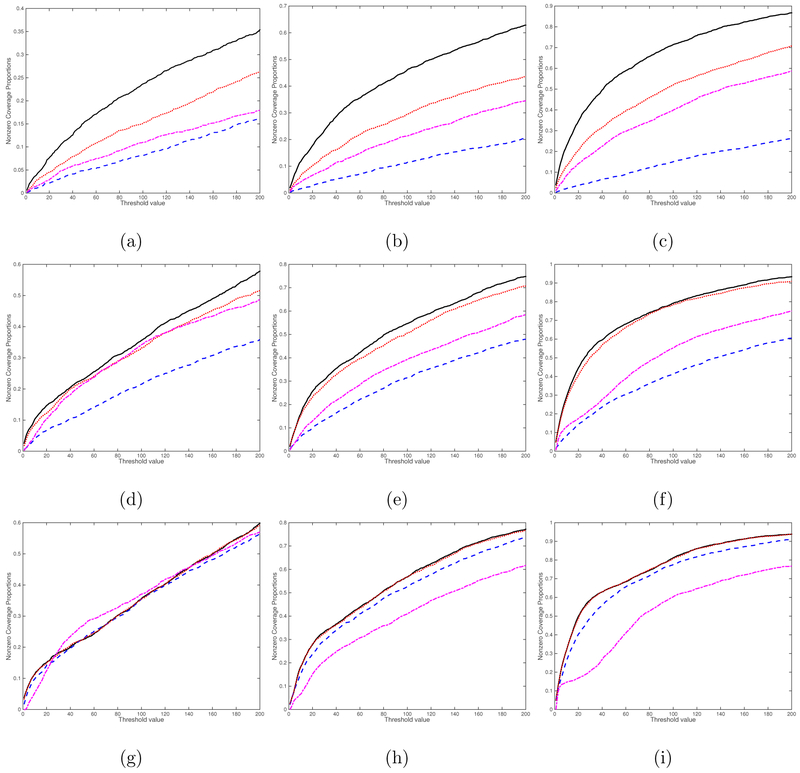

We consider four screening methods: rank-one screening, L1 entrywise norm screening, Frobenius norm screening, and the global Wald test screening proposed in Huang et al. (2015). The curves of the average true nonzero coverage proportion (in percent) against k are presented for the case (σ², sn) = (1, 2000) in Figure 4 and for the case (σ², sn) = (25, 2000) in Figure 5. Inspecting Figures 4 and 5 reveals that rank-one screening clearly outperforms the other three methods, followed by Frobenius norm screening. As expected, increasing the sample size n and/or k increases the true nonzero coverage proportion of all four methods. Additional simulation results for the cases (σ², sn) = (1, 5000) and (σ², sn) = (25, 5000) are given in Figures S1 and S2 of the Supplementary Material, with similar findings. Overall, the rank-one screening method is more robust to the noise level and to the size of the signal region.

Figure 4:

Screening simulation results for the case (σ², sn) = (1, 2000): the curves of the average true nonzero coverage proportion (in percent). The black solid, blue dashed, red dotted, and purple dashed-dotted lines correspond to the rank-one screening, the L1 entrywise norm screening, the Frobenius norm screening, and the global Wald test screening, respectively. Panels (a)-(i) correspond to (n, ps, qs) = (100, 4, 4), (200, 4, 4), (500, 4, 4), (100, 8, 8), (200, 8, 8), (500, 8, 8), (100, 16, 16), (200, 16, 16), and (500, 16, 16), respectively.

Figure 5:

Screening simulation results for the case (σ², sn) = (25, 2000): the curves of the average true nonzero coverage proportion (in percent). The black solid, blue dashed, red dotted, and purple dashed-dotted lines correspond to the rank-one screening, the L1 entrywise norm screening, the Frobenius norm screening, and the global Wald test screening, respectively. Panels (a)-(i) correspond to (n, ps, qs) = (100, 4, 4), (200, 4, 4), (500, 4, 4), (100, 8, 8), (200, 8, 8), (500, 8, 8), (100, 16, 16), (200, 16, 16), and (500, 16, 16), respectively.

4.3. Simulation study for two-step procedure

In this subsection, we perform a simulation study to evaluate our two-step screening and estimation procedure. We simulate 64 × 64 matrix responses according to model (1) with sn covariates. We set the first four true coefficient matrices to be a cross shape (B10), a square shape (B20), a triangle shape (B30), and a butterfly shape (B40) shown in Figure 1. For the remaining coefficient matrices {Bl0, 5 ≤ l ≤ sn}, we set them as zero matrices. We consider sn = 2000 and 5000.

We independently generate the covariate vectors xi from N(0, ∑x), where ∑x = (σx,ll′) is a covariance matrix with an autoregressive structure such that $\sigma_{x,ll'} = \rho_1^{|l-l'|}$ holds for 1 ≤ l, l′ ≤ sn with ρ1 = 0.5. We independently generate vec(Ei) from N(0, ∑e). Specifically, we set the variances of all elements in Ei to σ² and the correlation between Ei,jk and Ei,j′k′ to $\rho_2^{|j-j'|+|k-k'|}$ for 1 ≤ j, k, j′, k′ ≤ 64 with ρ2 = 0.5. We consider two sample sizes, n = 100 and 200, and set σ² to 1 and 25.

First, we evaluate the finite-sample performance of the random decoupling. We perform our screening procedure based on the random decoupling and then apply our regularized low-rank estimation procedure. Table 3 reports the MSEs of $\hat B_l$ (l = 1, 2, 3, 4), the selected model size, and the prediction error based on 100 replications. Table 4 reports the proportion of times that we select exactly the true model {1, 2, 3, 4}, the proportion of times that we over-select some variables but include all the true ones, and the proportion of times that we miss some of the important covariates. The proposed random decoupling works well in choosing γn, since the covariate set selected with γn includes all the true covariates with high probability in all scenarios.
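The three scenarios of Table 4 and the model size of Table 3 can be made precise with a small helper. This is an illustrative sketch with hypothetical names; it assumes the true model is the set of the four nonzero coefficient matrices, written with 0-based indices {0, 1, 2, 3}.

```python
from collections import Counter

def classify_selection(selected, true_set):
    """'Exact' if the selected set equals the true set, 'Over' if it strictly
    contains it, and 'Miss' if at least one true index is not selected."""
    selected, true_set = set(selected), set(true_set)
    if not true_set <= selected:
        return "Miss"
    return "Exact" if selected == true_set else "Over"

def summarize(selections, true_set):
    """Proportion of replications in each scenario and the mean model size."""
    counts = Counter(classify_selection(s, true_set) for s in selections)
    n = len(selections)
    props = {k: counts.get(k, 0) / n for k in ("Exact", "Over", "Miss")}
    mean_size = sum(len(set(s)) for s in selections) / n
    return props, mean_size
```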

Table 3:

The means of prediction errors (PEs) and MSEs for our two-step procedure, and the average selected model size for our screening procedure, with their associated standard errors in parentheses. For each case, 100 simulated data sets are used.

| (n, sn, σ²) | MSE(B1) | MSE(B2) | MSE(B3) | MSE(B4) | PE | Model Size |
|---|---|---|---|---|---|---|
| (100, 2000, 1) | 15.87(3.22) | 10.73(0.67) | 43.52(0.46) | 47.07(0.58) | 1.05(0.001) | 5.24(0.11) |
| (200, 2000, 1) | 5.92(0.10) | 5.10(0.11) | 27.61(0.27) | 28.23(0.31) | 1.03(0.0003) | 5.87(0.11) |
| (100, 5000, 1) | 32.28(6.57) | 12.60(1.08) | 44.28(0.52) | 47.14(0.65) | 1.07(0.002) | 5.03(0.10) |
| (200, 5000, 1) | 5.92(0.09) | 4.94(0.09) | 28.03(0.29) | 28.35(0.29) | 1.03(0.0005) | 5.83(0.13) |
| (100, 2000, 25) | 126.17(1.84) | 119.15(2.56) | 227.86(1.96) | 279.22(3.17) | 25.99(0.027) | 5.15(0.11) |
| (200, 2000, 25) | 84.42(1.25) | 73.69(1.41) | 177.90(1.66) | 214.67(2.15) | 25.54(0.012) | 5.82(0.11) |
| (100, 5000, 25) | 136.27(3.45) | 118.73(2.63) | 228.65(2.19) | 278.43(3.42) | 26.04(0.024) | 4.96(0.11) |
| (200, 5000, 25) | 82.69(1.12) | 73.53(1.37) | 177.44(1.57) | 211.25(2.12) | 25.56(0.012) | 5.75(0.13) |

Table 4:

The means of PEs and MSEs for our two-step procedure in three scenarios: exact selection (“Exact”), over-selection (“Over”) and missing variables (“Miss”). The proportion of times each scenario occurs among the 100 simulated data sets is also reported. “NA” denotes values that are not applicable.

| (n, sn, σ²) | Scenario | MSE(B1) | MSE(B2) | MSE(B3) | MSE(B4) | PE | Proportion |
|---|---|---|---|---|---|---|---|
| (100, 2000, 1) | Exact | 11.61(0.18) | 9.18(0.16) | 43.22(0.38) | 45.55(0.47) | 1.06(0.001) | 0.27 |
| | Over | 11.17(0.17) | 10.11(0.20) | 43.6(0.46) | 47.31(0.56) | 1.05(0.001) | 0.71 |
| | Miss | 240(0) | 53.49(1.68) | 44.58(1.61) | 59.11(1.18) | 1.1(0.001) | 0.02 |
| (200, 2000, 1) | Exact | 7.09(0.08) | 6.6(0.12) | 23.97(0.16) | 23.11(0.09) | 1.03(0.0005) | 0.04 |
| | Over | 5.87(0.09) | 5.03(0.11) | 27.76(0.26) | 28.44(0.30) | 1.03(0.0003) | 0.96 |
| | Miss | NA | NA | NA | NA | NA | 0 |
| (100, 5000, 1) | Exact | 11.69(0.17) | 9.74(0.19) | 44.14(0.65) | 45.79(0.41) | 1.07(0.001) | 0.27 |
| | Over | 11.76(0.20) | 9.75(0.19) | 44.24(0.43) | 46.60(0.54) | 1.06(0.001) | 0.64 |
| | Miss | 240(0) | 41.48(1.93) | 44.96(0.65) | 55.10(1.23) | 1.12(0.001) | 0.09 |
| (200, 5000, 1) | Exact | 7.52(0.09) | 6.69(0.11) | 24.91(0.22) | 24.24(0.08) | 1.04(0.001) | 0.05 |
| | Over | 5.84(0.08) | 4.84(0.08) | 28.19(0.28) | 28.57(0.28) | 1.03(0.0005) | 0.95 |
| | Miss | NA | NA | NA | NA | NA | 0 |
| (100, 2000, 25) | Exact | 121.75(1.27) | 114.12(2.19) | 229.79(1.95) | 270.16(3.47) | 26.18(0.024) | 0.29 |
| | Over | 126.37(1.49) | 121.47(2.69) | 227.21(1.98) | 283.29(3.00) | 25.91(0.023) | 0.70 |
| | Miss | 240(NA) | 102.16(NA) | 217.49(NA) | 256.52(NA) | 26.45(NA) | 0.01 |
| (200, 2000, 25) | Exact | 79.26(0.80) | 64.22(0.61) | 177.62(0.86) | 207.41(1.18) | 25.54(0.017) | 0.07 |
| | Over | 84.81(1.27) | 74.4(1.43) | 177.92(1.71) | 215.21(2.20) | 25.54(0.012) | 0.93 |
| | Miss | NA | NA | NA | NA | NA | 0 |
| (100, 5000, 25) | Exact | 122.88(1.74) | 114.5(2.49) | 217.93(1.87) | 260.99(2.64) | 26.20(0.019) | 0.34 |
| | Over | 129.81(1.53) | 122.59(2.63) | 233.46(2.17) | 286.15(3.34) | 25.92(0.020) | 0.58 |
| | Miss | 240(0) | 108.61(3.01) | 239.31(2.06) | 296.51(4.25) | 26.23(0.022) | 0.08 |
| (200, 5000, 25) | Exact | 85.2(0.87) | 72.92(1.48) | 174.83(1.20) | 206.34(2.32) | 25.66(0.014) | 0.06 |
| | Over | 82.53(1.14) | 73.57(1.37) | 177.61(1.60) | 211.56(2.12) | 25.55(0.012) | 0.94 |
| | Miss | NA | NA | NA | NA | NA | 0 |

Second, we consider over-selecting and/or missing some covariates. For each of the three scenarios above, Table 4 reports the MSEs of $\hat B_l$ (l = 1, 2, 3, 4) and the prediction error. When the screening procedure over-selects irrelevant variables, the MSEs of the true nonzero coefficient matrices and the prediction error of the fitted model are similar to those obtained from the model with the correct set of covariates. In contrast, if the screening procedure misses some important variables, the estimates of the corresponding coefficient matrices fail completely, since these matrices are estimated as zero. Nevertheless, the MSEs of the important variables that have been selected are generally similar to those obtained from the model with the correct set of covariates, while the prediction error increases because of the missing important variables.

5. The Philadelphia Neurodevelopmental Cohort

5.1. Data Description and Preprocessing Pipeline

To motivate the proposed methodology, we consider a large database with imaging, genetic, and clinical data collected by the Philadelphia Neurodevelopmental Cohort (PNC) study. This study was a collaboration between the Center for Applied Genomics (CAG) at Children’s Hospital of Philadelphia (CHOP) and the Brain Behavior Laboratory at the University of Pennsylvania (Penn). The PNC cohort consists of youths aged 8-21 years in the CHOP network who volunteered to participate in genomic studies of complex pediatric disorders. All participants underwent a clinical assessment and a neuroscience-based computerized neurocognitive battery (CNB), and a subsample underwent neuroimaging. We consider 814 subjects (429 females and 385 males) aged 8-21 years, with a mean age of 14.36 years and a standard deviation of 3.48 years. Each subject has a resting-state functional magnetic resonance imaging (rs-fMRI) connectivity matrix, represented as a 69 × 69 matrix, and a large genetic data set with around 5,400,000 genotyped and imputed single-nucleotide polymorphisms (SNPs) on all 22 chromosomes. Other clinical variables of interest include age and gender, among others. Our primary question of interest is to identify novel genetic effects on local changes in rs-fMRI connectivity.

We preprocess the resting-state fMRI data using the C-PAC pipeline. First, we register the fMRI data to the standard MNI 2 mm resolution template and perform segmentation using the C-PAC default setting. Next, we perform motion correction using the Friston 24-parameter method. We also perform nuisance signal correction by regressing out the following variables: the top 5 principal components in the noise regions of interest (ROIs), cerebrospinal fluid (CSF), motion parameters, and linear trends in the time series. Finally, we extract the ROI time series by averaging the voxel-wise time series within each ROI. We use the HarvardOxford Cortical Atlas (48 regions) and the HarvardOxford Subcortical Atlas (21 regions), both available in FSL. In total, we extract time series for each of the 69 regions, and each time series has 120 observations after deleting the first and last 3 scans.
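The ROI extraction step can be sketched as follows. This is a minimal sketch, not the C-PAC implementation. It assumes the preprocessed data are already a time × voxel array with one integer atlas label per voxel (69 ROI labels plus 0 for background), and it assumes Pearson correlation as the connectivity measure, which the text does not specify. Function names are hypothetical.

```python
import numpy as np

def roi_time_series(bold, labels, n_rois, trim=3):
    """Average the voxel-wise series within each ROI, then drop the first
    and last `trim` scans.

    bold: T x V array of preprocessed BOLD signals (hypothetical layout).
    labels: length-V integer array with ROI ids 1..n_rois (0 = outside atlas).
    Returns a (T - 2 * trim) x n_rois array.
    """
    ts = np.column_stack(
        [bold[:, labels == r].mean(axis=1) for r in range(1, n_rois + 1)]
    )
    return ts[trim:ts.shape[0] - trim]

def connectivity_matrix(ts):
    """n_rois x n_rois Pearson correlation matrix (assumed connectivity measure)."""
    return np.corrcoef(ts, rowvar=False)
```

For example, a run of 126 scans yields 120 observations per ROI after trimming, and the resulting 69 × 69 matrix would serve as one subject's response Yi.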

5.2. Analysis and Results

We first fit model (1) with the rs-fMRI connectivity matrices from the 814 subjects as 69 × 69 matrix responses and age and gender as clinical covariates. We also include the first 5 principal component scores of the SNP data as covariates to correct for population stratification. Specifically, we calculate the ordinary least squares estimates of the coefficient matrices and then compute the corresponding residual matrices of the brain connectivity responses after adjusting for the effects of the clinical covariates and the SNP principal component scores.
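This adjustment step amounts to one multivariate least-squares fit applied to every entry of the 69 × 69 response at once. A minimal sketch, assuming an intercept is included (the text does not say) and that the covariate matrix `Z` stacks age, gender and the five SNP principal component scores; `residualize` is a hypothetical name.

```python
import numpy as np

def residualize(Z, Y):
    """Residual matrices of Y_i after OLS on covariates z_i plus an intercept.
    Z: n x d covariates, Y: n x p x q matrix responses."""
    n = Z.shape[0]
    design = np.column_stack([np.ones(n), Z])
    Y_flat = Y.reshape(n, -1)                      # one column per matrix entry
    coef, *_ = np.linalg.lstsq(design, Y_flat, rcond=None)
    return (Y_flat - design @ coef).reshape(Y.shape)
```

By the normal equations, the residuals are orthogonal to every adjustment covariate, so they can then be used as responses in the screening step.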

Second, we apply the rank-one screening procedure, using the residual matrices as responses, to select the SNPs most strongly associated with them from the whole set of 5,354,265 SNPs. We use the random decoupling method described in Section 2.1 to choose the threshold γn and select all indices whose screening statistic is larger than γn. Seven SNPs are selected; their names are listed in Table 5. Among these seven SNPs, the first three, on Chromosome 5, have identical genotypes for all subjects, and the next four, on Chromosome 10, also have identical genotypes for all subjects.

Table 5:

PNC data analysis results: the top 7 SNPs selected by our screening procedure.

| Ranking | Chromosome | SNP |
|---|---|---|
| 1 | 5 | rs72775042 |
| 2 | 5 | rs6881067 |
| 3 | 5 | rs72775059 |
| 4 | 10 | rs200328746 |
| 5 | 10 | rs75860012 |
| 6 | 10 | rs200248696 |
| 7 | 10 | rs78309702 |

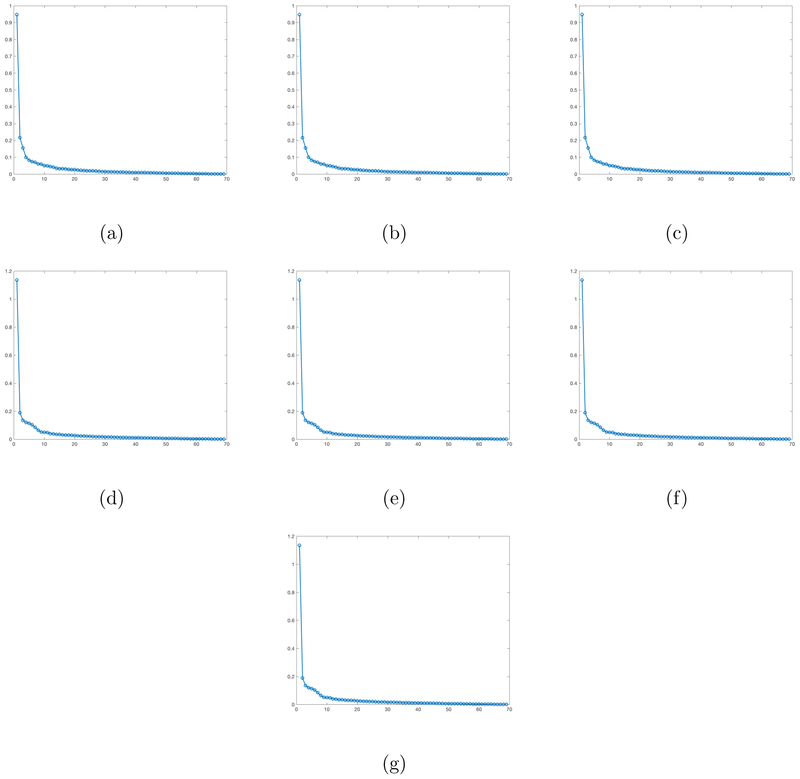

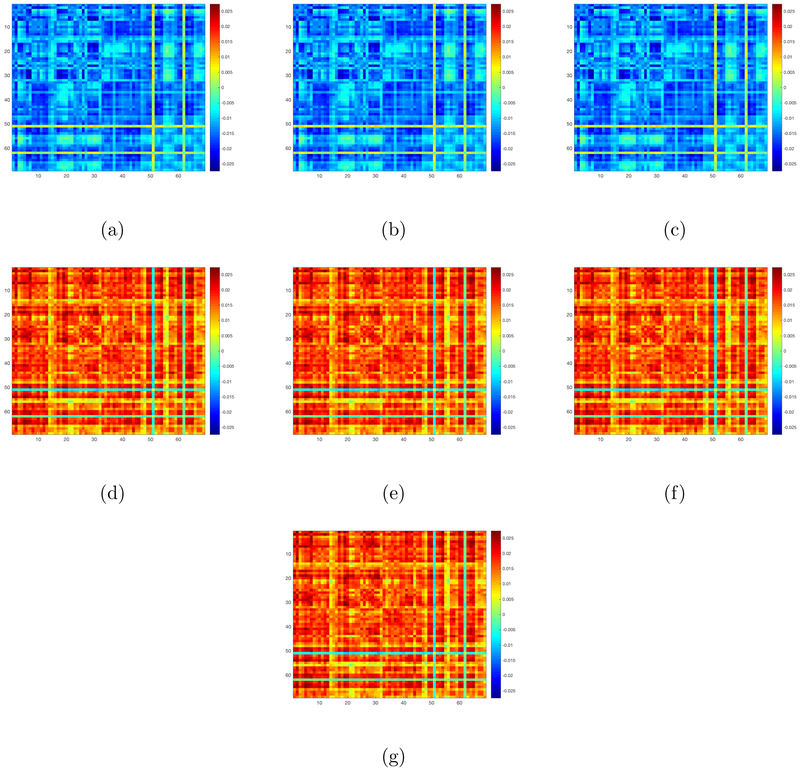

Finally, we examine the effects of these selected SNPs on the matrix response. We first fit OLS with these 7 SNPs. Since the first three SNPs have identical genotypes, as do the next four, we regress the matrix response on the first and the fourth selected SNPs, which yields two coefficient matrix estimates; the OLS estimates for the first three SNPs and for the last four SNPs are then defined from these two estimates, respectively. We then calculate the singular values of these 7 OLS estimates and plot them in decreasing order in Figure 6. Figure 6 shows that these estimated coefficient matrices have a clear low-rank pattern, since the first few singular values dominate the remaining ones. This motivates us to apply our RLR estimation procedure to estimate the coefficient matrices of these 7 SNP covariates. Figure 7(a)-(g) presents the resulting coefficient matrix estimates. The coefficient matrices of the first three selected SNPs are identical, as are those of the next four. The estimated ranks of these seven coefficient matrices are 11, 11, 11, 8, 8, 8, and 8, respectively.
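Handling SNPs with identical genotypes can be sketched as follows: group identical genotype columns, then fit OLS on one representative per group. This is an illustrative sketch; centering the genotypes and the function names are assumptions, and how the paper assigns the representative fit to the individual SNPs within a group is not reproduced.

```python
import numpy as np

def group_identical_columns(G):
    """Group the columns of an n x m genotype matrix that are exactly identical.
    Returns a list of index lists in order of first appearance."""
    groups = {}
    for j in range(G.shape[1]):
        groups.setdefault(G[:, j].tobytes(), []).append(j)
    return list(groups.values())

def ols_on_representatives(G, Y):
    """OLS of the matrix responses on one representative SNP per group.
    Returns the groups and an r x p x q array of coefficient matrices."""
    groups = group_identical_columns(G)
    Xr = G[:, [g[0] for g in groups]].astype(float)
    Xr -= Xr.mean(axis=0)                      # centering is an assumption
    n = Y.shape[0]
    coef, *_ = np.linalg.lstsq(Xr, Y.reshape(n, -1), rcond=None)
    return groups, coef.reshape((len(groups),) + Y.shape[1:])
```

For the seven SNPs in Table 5, this grouping would give two groups, columns {1, 2, 3} and {4, 5, 6, 7} in the table's ranking, and hence two fitted coefficient matrices.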

Figure 6:

PNC data: Panels (a)-(g) show the singular values of the OLS estimates for the 7 SNPs selected by our screening step, sorted from largest to smallest.

Figure 7:

PNC data: Panels (a)-(g) show our RLR estimates for the 7 SNPs selected by our screening step.

6. Discussion

Motivated by the analysis of imaging genetic data, we have proposed a low-rank linear regression model to correlate high-dimensional matrix responses with a high-dimensional vector of covariates when the coefficient matrices are approximately low-rank. We have developed a fast and efficient rank-one screening procedure, which enjoys the sure independence screening property as well as a vanishing false selection rate, to reduce the covariate space. We have developed a regularized estimate of the coefficient matrices based on trace norm regularization, which explicitly incorporates their low-rank structure, and established its estimation consistency. We have further established a theoretical guarantee for the overall solution obtained from our two-step screening and estimation procedure. We have demonstrated the efficiency of our methods using simulations and an analysis of the PNC dataset.

Supplementary Material

Figure 3:

Screening setting: Panels (a)-(c) show the true coefficient images Btrue with regions of interest of different sizes: effective regions of interest (yellow ROI) and non-effective regions of interest (blue ROI).

Acknowledgement

The authors would like to thank the Editor, Associate Editor and two reviewers for their constructive comments, which have substantially improved the paper.

Dr. Kong’s work was partially supported by the National Science Foundation, the National Institutes of Health, and the Natural Sciences and Engineering Research Council of Canada. Dr. Baiguo An’s work was partially supported by NSF of China (No. 11601349). Dr. Zhu’s work was partially supported by NIH grants R01MH086633 and R01MH116527, and NSF grants SES-1357666 and DMS-1407655. The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH or any other funding agency.

The PNC data were obtained through dbGaP (phs000607.v1.p1). Support for the collection of the data sets was provided by grant RC2MH089983 awarded to Raquel Gur and RC2MH089924 awarded to Hakon Hakonarson. All subjects were recruited through the Center for Applied Genomics at The Children’s Hospital in Philadelphia.

A. Auxiliary Lemmas

In this section, we include the auxiliary lemmas needed for the theorems and their proofs.

Lemma 1. (Bernstein’s inequality) Let Z1, …, Zn be independent random variables with zero mean such that $E|Z_i|^m \le m!\,M^{m-2}v_i/2$ for every m ≥ 2 (and all i) and some positive constants M and vi. Then $P(|Z_1 + \cdots + Z_n| > x) \le 2\exp[-x^2/\{2(v + Mx)\}]$ for $v \ge v_1 + \cdots + v_n$.

This lemma is Lemma 2.2.11 of van der Vaart and Wellner (2000), and we omit the proof.

Lemma 2. Under Assumptions A0-A2, for arbitrary t > 0 and every l, l′, j, k, we have that

and

Proof of Lemma 2: By Assumptions A1 and A2, we have

For every m ≥ 2, one has

It follows from Lemma 1 that we have

Similarly, we obtain

For every m ≥ 2, we have . Therefore, it follows from Lemma 1 that we have

This completes the proof of Lemma 2.

The next lemma is about the subdifferential and directional derivatives of the trace norm. For more details about this lemma and its proof, please refer to Recht et al. (2010) and Borwein and Lewis (2010).

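Lemma 3. For an arbitrary matrix $W \in \mathbb{R}^{p\times q}$ of rank m, its singular value decomposition is denoted by $W = UDV^T$, where $U \in \mathbb{R}^{p\times m}$ and $V \in \mathbb{R}^{q\times m}$ have orthonormal columns, D = diag(d1, …, dm), and d1 ≥ … ≥ dm > 0 are the singular values of W. Then the trace norm of W is $\|W\|_* = \sum_{k=1}^m d_k$ and its subdifferential is equal to

$$\partial\|W\|_* = \{UV^T + U_\perp N V_\perp^T : \|N\|_{op} \le 1\}.$$

The directional derivative at W in the direction Δ is

$$\lim_{t \downarrow 0}\frac{\|W + t\Delta\|_* - \|W\|_*}{t} = \mathrm{tr}(U^T \Delta V) + \|U_\perp^T \Delta V_\perp\|_*,$$

where U⊥ and V⊥ are the orthonormal complements of U and V.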
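The directional-derivative formula of Lemma 3, $\lim_{t\downarrow 0}\{\|W+t\Delta\|_*-\|W\|_*\}/t = \mathrm{tr}(U^T\Delta V) + \|U_\perp^T\Delta V_\perp\|_*$, can be checked numerically with a finite difference. This is a small illustrative NumPy sketch; the dimensions, singular values and helper name are arbitrary choices.

```python
import numpy as np

def nuclear_norm(M):
    """Trace (nuclear) norm: the sum of singular values."""
    return np.linalg.svd(M, compute_uv=False).sum()

rng = np.random.default_rng(1)
p, q, m = 6, 5, 2
# Orthonormal bases split into the column/row spaces of W and their complements.
Qp, _ = np.linalg.qr(rng.standard_normal((p, p)))
Qq, _ = np.linalg.qr(rng.standard_normal((q, q)))
U, U_perp = Qp[:, :m], Qp[:, m:]
V, V_perp = Qq[:, :m], Qq[:, m:]
W = U @ np.diag([3.0, 2.0]) @ V.T          # rank-m matrix with SVD U D V^T
Delta = rng.standard_normal((p, q))

t = 1e-7
finite_diff = (nuclear_norm(W + t * Delta) - nuclear_norm(W)) / t
formula = np.trace(U.T @ Delta @ V) + nuclear_norm(U_perp.T @ Delta @ V_perp)
print(finite_diff, formula)  # the two values agree to several decimal places
```

The second term is what makes the trace norm non-differentiable at low-rank W: perturbations outside the row and column spaces of W contribute through a norm rather than linearly.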

The following lemma is a standard result known as the Gaussian comparison inequality (Anderson, 1955).

Lemma 4. Let X and Y be zero-mean Gaussian random vectors with covariance matrices ∑X and ∑Y, respectively. If ∑X − ∑Y is positive semi-definite, then for any convex set $\mathcal C$ that is symmetric about the origin, $P(X \in \mathcal C) \le P(Y \in \mathcal C)$.

B. Proof of Theorems

Proof of Theorem 1: Recall that . For every 1 ≤ j ≤ p, 1 ≤ k ≤ q and 1 ≤ l ≤ sn, we have

It follows from Assumptions (A0) (A1) (A2) and Lemma 2 that for any t > 0, we have

For every , we have

Let c1 = 2pq(s0 + 1),

We have , where c0 = min{c2, c3}. By Assumption (A5), one has

This completes the proof of Theorem 1.

Proof of Theorem 2: The proof consists of two steps. In Step 1, we will show that , where . It follows from the definition of that we have

Moreover, we have

By Assumptions (A3) and (A5), one has for some constants c4 > 0 and c5 > 0. Therefore, we have by Assumption (A1).

In Step 2, we will show that . Define . As , we have . By the definition of , we have

Define B0,jk = (B10,jk, …, Bs0,jk)T; then we can write . Multiplying both sides by xi and taking expectations yields ∑xB0,jk = E(xiYi,jk). Therefore, we have

By Assumption A4, we have , which implies that .

Combining the results of above two steps leads to

This completes the proof of Theorem 2.

Theorems 3, 4 and 5 are theoretical results for our estimation procedure, and we assume and is fixed.

Proof of Theorem 3: Without loss of generality, for the proof of Theorem 3, we assume with s fixed for notational simplicity. We first prove Theorem 3 (i). We define

where Δl = λ−1(Bl – Bl0) for l = 1, …, s. Therefore, we have

where for l = 1, …, s.

When λ → 0 and $n^{1/2}\lambda \to \infty$, we have

where is the (l, l′)-th element of for 1 ≤ l, l′ ≤ s. By the Central Limit Theorem, converges in distribution to a normally distributed matrix Dl with mean 0 and $\mathrm{var}\{\mathrm{vec}(D_l)\} = m_{ll}\Sigma_e$ for every 1 ≤ l ≤ s. Hence

For every l = 1, …, s, recall that the singular value decomposition of Bl0 is , and , and denote orthogonal complements of Ul0 and Vl0, respectively. By Lemma 3, we have

Consequently, L(Δ1, …, Δs) →p L0(Δ1, …, Δs) for each Δl ∈ Gl, l = 1, …, s, with the Gl’s compact sets in , where

One can see that L0(Δ1, …, Δs) is convex and has a unique minimizer (Δ10, …, Δs0). As L(Δ1, …, Δs) is also convex, by Knight and Fu (2000) we have . This implies that , l = 1, …, s.

We second prove Theorem 3 (ii). We define

where $\Psi_l = n^{1/2}(B_l - B_{l0})$ for l = 1, …, s. Let , then we have that , l = 1, …, s. Under Assumption (A6) and $n^{1/2}\lambda \to \rho$, we have f(Ψ1, …, Ψs) → f0(Ψ1, …, Ψs) and

where Dl is a random matrix and vec(Dl) is normally distributed. One can see that f0(Ψ1, …, Ψs) is convex and has a unique minimizer (Ψ10, …, Ψs0) with Ψl0 = Op(1) for l = 1, …, s. Consequently, by Knight and Fu (2000), we have for l = 1, …, s, which indicates that for l = 1, …, s. This completes the proof of Theorem 3.

Proof of Theorem 4: Without loss of generality, for the proof of Theorem 4, we assume with s fixed for notational simplicity. It follows from Theorem 3(i) that holds for every 1 ≤ l ≤ s. Since the rank function is lower semi-continuous, . We then prove that for every 1 ≤ l ≤ s with probability tending to one.

Denote the singular value decomposition of as , where and . Let be the submatrix of without the first rl columns, and let be the submatrix of without the first rl columns, where rl is the rank of Bl0. Denote the rank of by . We prove the theorem in two steps.

Step 1. In this step, we will show if

then . We will prove the statement by contradiction.

Let be the submatrix of corresponding to the first columns, and be the submatrix of corresponding to the first columns. If , we can write , as , and respectively, where , , , and . By the definition of , we have

Hence, by Lemma 3, we have

with , and ∥Nl∥op ≤ 1. Furthermore, we have

From the above formula, it follows that we have as long as . Consequently,

if , we have .

Step 2. In this step, we will prove that with probability tending to 1, one has

We have

Since is a consistent estimator of Bl0, we have and . Consequently, we have

As ∥Λl∥op < 1, we have . with probability 1. This completes the proof of Theorem 4.

Proof of Theorem 5. Without loss of generality, for the proof of Theorem 5, we assume with s fixed for notational simplicity. To prove Theorem 5, we first introduce some notation and definitions used in Negahban et al. (2012). Given a pair of subspaces , a norm-based regularizer J is decomposable with respect to () if

where is the orthogonal complement of the space defined as .

We define the projection operator

Similarly, we can define the projections ΠM⊥, and .

We then introduce the definition of the subspace compatibility constant. For the subspace M, the subspace compatibility constant with respect to the pair (J, ∥ · ∥) is given by

We next recall the definition of restricted strong convexity. For a loss function L(θ), define δL(Δ, θ) = L(θ + Δ) − L(θ) − ⟨∇L(θ), Δ⟩, where . The loss function satisfies a restricted strong convexity condition with curvature κL > 0 and tolerance function τL if

where .
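For readers without Negahban et al. (2012) at hand, the definitions above and the conclusion of their Theorem 1 take the following standard form. This is our transcription, with their regularizer written as J, their loss as L and their error norm as ∥·∥; the notation may differ slightly from the paper's own displays.

```latex
% Decomposability of J with respect to (M, \bar{M}^\perp), where M \subseteq \bar{M}:
J(\theta + \gamma) = J(\theta) + J(\gamma)
  \quad \text{for all } \theta \in \mathcal{M},\ \gamma \in \bar{\mathcal{M}}^{\perp}.

% Subspace compatibility constant:
\Psi(\mathcal{M}) = \sup_{u \in \mathcal{M} \setminus \{0\}} \frac{J(u)}{\|u\|}.

% Restricted strong convexity over the cone \mathbb{C}:
\delta\mathcal{L}(\Delta, \theta^*) \ge \kappa_{\mathcal{L}} \|\Delta\|^2 - \tau_{\mathcal{L}}^2(\theta^*)
  \quad \text{for all } \Delta \in \mathbb{C},
\qquad
\mathbb{C} = \{\Delta : J(\Delta_{\bar{\mathcal{M}}^{\perp}})
  \le 3 J(\Delta_{\bar{\mathcal{M}}}) + 4 J(\theta^*_{\mathcal{M}^{\perp}})\}.

% Theorem 1 of Negahban et al. (2012): if \lambda \ge 2 J^*(\nabla\mathcal{L}(\theta^*)), then
\|\hat\theta_\lambda - \theta^*\|^2
  \le 9 \frac{\lambda^2}{\kappa_{\mathcal{L}}^2} \Psi^2(\bar{\mathcal{M}})
  + \frac{\lambda}{\kappa_{\mathcal{L}}}
    \bigl\{ 2 \tau_{\mathcal{L}}^2(\theta^*) + 4 J(\theta^*_{\mathcal{M}^{\perp}}) \bigr\}.
```

When τL = 0 and the true parameter lies in M, as appears to be the case in the proofs of Theorems 5 and 6, the last bound reduces to 9λ²Ψ²(M̄)/κL².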

We now prove Theorem 5 using Theorem 1 of Negahban et al. (2012), whose conditions we first verify in our context.

Recall that , and rl = rank(Bl0). Let us consider the class of matrices that have rank rl ≤ min{p, q} and define .

Let and denote its row space and column space, respectively. Let Ul and Vl be a given pair of rl-dimensional subspaces and . Define U = [U1, …, Us] and V = [V1, …, Vs]. For a given pair (U, V), we define the subspaces M(U, V), and of by

and

where is the orthogonal complement of the space . For simplicity, we will use M, and to denote M(U,V), and respectively in the following proof.

Define J(B) = ∥B1∥* + … + ∥Bs∥*, the sum of the trace norms of the coefficient matrices; clearly J is a norm. The norm J is decomposable with respect to the subspace pair (M, ), where . Therefore, the regularizer J satisfies Condition (G1) in Negahban et al. (2012).
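The decomposability claim can be checked numerically. The sketch below assumes, consistent with the dual-norm identity (8) proved later, that J(B) is the sum of the trace norms of the s coefficient matrices; the helper names `trace_norm`, `J` and `random_pair`, and all dimensions and ranks, are hypothetical. For each l it builds Θl with column and row spaces inside Ul and Vl, and Γl with column and row spaces inside their orthogonal complements, then confirms that J(Θ + Γ) = J(Θ) + J(Γ).

```python
import numpy as np

def trace_norm(M):
    # Trace (nuclear) norm: sum of singular values.
    return np.linalg.svd(M, compute_uv=False).sum()

def J(blocks):
    # Hypothetical regularizer J(B) = sum_l ||B_l||_* over the s coefficient matrices.
    return sum(trace_norm(B) for B in blocks)

def random_pair(p, q, r, rng):
    # Orthonormal bases: first r columns span U_l (resp. V_l), the rest span complements.
    U_full, _ = np.linalg.qr(rng.standard_normal((p, p)))
    V_full, _ = np.linalg.qr(rng.standard_normal((q, q)))
    U, U_perp = U_full[:, :r], U_full[:, r:]
    V, V_perp = V_full[:, :r], V_full[:, r:]
    # Theta lies in M(U, V): column space in U_l, row space in V_l.
    Theta = U @ rng.standard_normal((r, r)) @ V.T
    # Gamma lies in the complement: column space in U_perp, row space in V_perp.
    Gamma = U_perp @ rng.standard_normal((p - r, q - r)) @ V_perp.T
    return Theta, Gamma

rng = np.random.default_rng(0)
p, q, ranks = 6, 5, [1, 2, 0]  # illustrative sizes and ranks r_l
pairs = [random_pair(p, q, r, rng) for r in ranks]

lhs = J([theta + gamma for theta, gamma in pairs])
rhs = J([theta for theta, _ in pairs]) + J([gamma for _, gamma in pairs])
print(abs(lhs - rhs) < 1e-8)
```

Equality holds because Θl + Γl has the singular values of Θl and of Γl side by side; for a generic Γl not orthogonal to (Ul, Vl), only the triangle inequality J(Θ + Γ) ≤ J(Θ) + J(Γ) would remain.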

Under condition (A9), the loss function R is convex and differentiable and satisfies restricted strong convexity with curvature κL = CL and tolerance τL = 0; therefore, Condition (G2) in Negahban et al. (2012) holds.
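To see why a least-squares loss has curvature with zero tolerance, consider the sketch below. It assumes, for illustration only, a loss of the form R(B) = (2n)⁻¹ Σi ∥Yi − Σl xil Bl∥F²; this is our assumption rather than a transcription of the paper's definition of R, and condition (A9) is not reproduced, so the smallest eigenvalue of XᵀX/n simply stands in for the curvature. For such a loss the remainder δR(Δ, B) does not depend on B and is bounded below by a multiple of ∥Δ∥F², which is restricted strong convexity with τL = 0.

```python
import numpy as np

def loss(B, X, Y):
    # Hypothetical least-squares loss R(B) = (1/2n) sum_i ||Y_i - sum_l x_il B_l||_F^2.
    # B: (s, p, q) stack of coefficient matrices, X: (n, s), Y: (n, p, q).
    fitted = np.einsum("il,lpq->ipq", X, B)
    return 0.5 * np.sum((Y - fitted) ** 2) / X.shape[0]

def grad(B, X, Y):
    # Gradient of the loss above with respect to each B_l.
    resid = Y - np.einsum("il,lpq->ipq", X, B)
    return -np.einsum("il,ipq->lpq", X, resid) / X.shape[0]

def remainder(B, Delta, X, Y):
    # First-order Taylor remainder: R(B + Delta) - R(B) - <grad R(B), Delta>.
    return loss(B + Delta, X, Y) - loss(B, X, Y) - np.sum(grad(B, X, Y) * Delta)

rng = np.random.default_rng(1)
n, s, p, q = 50, 3, 4, 3  # illustrative sizes
X = rng.standard_normal((n, s))
Y = rng.standard_normal((n, p, q))
Delta = rng.standard_normal((s, p, q))

delta_R = remainder(rng.standard_normal((s, p, q)), Delta, X, Y)
delta_R_other = remainder(rng.standard_normal((s, p, q)), Delta, X, Y)  # another base point

# Curvature lower bound from the smallest eigenvalue of X^T X / n.
kappa = 0.5 * np.linalg.eigvalsh(X.T @ X / n).min()
print(np.isclose(delta_R, delta_R_other), delta_R >= kappa * np.sum(Delta ** 2))
```

When s > n, XᵀX/n is singular and this global bound degenerates to zero, which is why Negahban et al. (2012) only ask for curvature over a restricted set of directions.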

Having verified these conditions, we next compute and . It is easy to see . For , one has

Therefore, by Theorem 1 in Negahban et al. (2012), when λ ≥ 2J*(∇R(B0)), one has for some constant C > 0.
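The compatibility constant in this step presumably rests on an elementary inequality: a matrix of rank r satisfies ∥B∥* ≤ √r ∥B∥F, and applying the Cauchy-Schwarz inequality across blocks gives Σl ∥Bl∥* ≤ (Σl rl)^{1/2} (Σl ∥Bl∥F²)^{1/2}. The sketch below checks this with randomly generated low-rank blocks; the ranks and sizes are arbitrary, and J is again assumed to be the sum of trace norms.

```python
import numpy as np

def low_rank(p, q, r, rng):
    # Random p x q matrix of rank r (r = 0 gives the zero matrix).
    return rng.standard_normal((p, r)) @ rng.standard_normal((r, q))

rng = np.random.default_rng(2)
p, q, ranks = 8, 6, [2, 0, 3, 1]  # illustrative sizes and ranks r_l
blocks = [low_rank(p, q, r, rng) for r in ranks]

# J(B) = sum of trace norms of the blocks.
J_val = sum(np.linalg.norm(B, "nuc") for B in blocks)
# Frobenius norm of the whole stack (B_1, ..., B_s).
frob = np.sqrt(sum(np.linalg.norm(B, "fro") ** 2 for B in blocks))
# Compatibility-type bound sqrt(sum_l r_l) * ||B||_F.
bound = np.sqrt(sum(ranks)) * frob
print(J_val <= bound)
```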

The term J*(∇R(B0)) is random, so our next step is to derive its order.

Let J*(·) denote the dual norm of J(·). For any matrix , we first prove that

$$J^*(A) = \max_{1 \le l \le s} \|A_l\|_{\mathrm{op}}. \qquad (8)$$

To prove (8), we first show that $J^*(A) \ge \max_{1 \le l \le s} \|A_l\|_{\mathrm{op}}$. Let with for any k ≠ l and . One has

It is easy to see that $J^*(A) \ge \|A_l\|_{\mathrm{op}}$ holds for every $1 \le l \le s$. Consequently, $J^*(A) \ge \max_{1 \le l \le s} \|A_l\|_{\mathrm{op}}$.

Our next step is to show that $J^*(A) \le \max_{1 \le l \le s} \|A_l\|_{\mathrm{op}}$. Define the singular value decomposition of . One has

where θlk is the kth diagonal element of the diagonal matrix ϴl, is the (k, k)th element of the matrix , and (Ul)(k) and (Vl)(k) are the kth columns of the matrices Ul and Vl, respectively.

Combining the two inequalities, we conclude that $J^*(A) = \max_{1 \le l \le s} \|A_l\|_{\mathrm{op}}$.
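Identity (8) can be verified numerically. In the sketch below (hypothetical helper names, arbitrary sizes), the rank-one matrix built from the top singular vectors of the block with the largest operator norm has J(B) = 1 and attains ⟨A, B⟩ = maxl ∥Al∥op, mirroring the first half of the proof; random B never exceed that value after normalization by J(B), mirroring the second half.

```python
import numpy as np

def op_norm(M):
    # Operator (spectral) norm: largest singular value.
    return np.linalg.norm(M, 2)

def J(blocks):
    # Sum of trace norms over the blocks.
    return sum(np.linalg.norm(B, "nuc") for B in blocks)

def inner(A, B):
    # Trace inner product <A, B> = sum_l tr(A_l^T B_l).
    return sum(np.sum(a * b) for a, b in zip(A, B))

rng = np.random.default_rng(3)
s, p, q = 4, 5, 3
A = [rng.standard_normal((p, q)) for _ in range(s)]
dual_claim = max(op_norm(a) for a in A)

# Maximizer: rank-one matrix in the block with the largest operator norm.
l_star = int(np.argmax([op_norm(a) for a in A]))
U, S, Vt = np.linalg.svd(A[l_star])
B_star = [np.zeros((p, q)) for _ in range(s)]
B_star[l_star] = np.outer(U[:, 0], Vt[0])
attained = inner(A, B_star) / J(B_star)

# Upper bound: no normalized random B does better.
ratios = []
for _ in range(1000):
    B = [rng.standard_normal((p, q)) for _ in range(s)]
    ratios.append(inner(A, B) / J(B))
print(np.isclose(attained, dual_claim), max(ratios) <= dual_claim)
```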

Next we compute J*(∇R(B0)), where with . Recall that the operator norm is the dual norm of the trace norm. We first need to bound ∥Dl∥op.

From the definition of J*(·), one has

To bound ∥Dl∥op, we use a technique similar to that of Raskutti and Yuan (2018). Let Wi be a p × q random matrix with i.i.d. standard normal entries. Under condition (A11) and by Lemma 4, conditioning on xil, we get

since .

As , conditioning on Wi, the entries of the matrix are i.i.d. , where $X_l = (x_{1l}, \ldots, x_{nl})^{\mathrm{T}}$. Since is a χ² random variable with n degrees of freedom, where , one has

by standard χ² tail bounds. Combining this with standard random matrix theory, we know that with probability at least where and are some positive constants. Therefore, under conditions (A10) and (A12), there exist positive constants c1, c2 and c3 such that $\max_{1 \le l \le s} \|D_l\|_{\mathrm{op}} \le 4 C_U n^{-1/2} (\max_{1 \le l \le s} \sigma_l)(p^{1/2} + q^{1/2})$ holds with probability at least $1 - c_1 \exp\{-c_2(p + q)\} - c_3 \exp(-n)$. Thus, when , $\lambda \ge J^*(\nabla R(B_0))$ with probability at least $1 - c_1 \exp\{-c_2(p + q)\} - c_3 \exp(-n)$.
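The χ² tail bound and the random matrix fact are not stated explicitly above. Two commonly used forms, which we assume are close to what is meant, are P(χ²n ≥ n + 2√(nx) + 2x) ≤ e^{−x} (Laurent and Massart) and, for a p × q matrix W with i.i.d. N(0, 1) entries, P(∥W∥op ≥ √p + √q + t) ≤ e^{−t²/2}. The Monte Carlo sketch below (arbitrary n, p, q) compares empirical exceedance frequencies with both bounds; it only illustrates where the (p^{1/2} + q^{1/2}) scaling comes from and is not a proof.

```python
import numpy as np

rng = np.random.default_rng(4)
p, q, n, reps = 30, 20, 40, 2000  # illustrative sizes

# Operator norm of a p x q matrix with i.i.d. N(0, 1) entries.
op_norms = np.array([np.linalg.norm(rng.standard_normal((p, q)), 2) for _ in range(reps)])
t = 1.0
freq_op = np.mean(op_norms >= np.sqrt(p) + np.sqrt(q) + t)
bound_op = np.exp(-t ** 2 / 2)

# Chi-square with n degrees of freedom against the Laurent-Massart upper tail.
chi2 = rng.chisquare(n, size=reps)
x = 2.0
freq_chi = np.mean(chi2 >= n + 2 * np.sqrt(n * x) + 2 * x)
bound_chi = np.exp(-x)

print(freq_op, bound_op)
print(freq_chi, bound_chi)
```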

Therefore, with probability at least $1 - c_1 \exp\{-c_2(p + q)\} - c_3 \exp(-n)$, one has for some positive constant C. This completes the proof of Theorem 5.

Proof of Theorem 6:

To prove the theorem, we work on the event , which holds with probability tending to one, and derive the non-asymptotic error bound on this event. Recall that rl = rank(Bl0); thus rl = 0 for . Let us consider the class of matrices that have rank rl ≤ min{p, q} and define . Let and denote its row space and column space, respectively. Let Ul and Vl be a given pair of rl-dimensional subspaces and , respectively. Define and . For a given pair (U, V), we define the subspaces , and of as follows:

where is the orthogonal complement of the space . For simplicity, we will use , and to denote , and , respectively.

For the norm , it is easy to see that the norm J is decomposable with respect to the subspace pair , where . Therefore, the regularizer J satisfies Condition (G1) in Negahban et al. (2012).

We need to calculate and . It is easy to see . For , since rl = 0 holds for , one has

For any , we define as

We will derive a lower bound on F(Δ). In particular, we have

where the first inequality follows from condition (A13) and the second inequality follows from Lemma 3 in Negahban et al. (2012) by applying to the pair .

By the Cauchy-Schwarz inequality applied to the regularizer J and its dual J*, we have . Since holds by assumption, one has , where the second inequality holds due to the triangle inequality. Therefore, we have

By subspace compatibility, we have . As the projection is non-expansive and , one has , and thus . Substituting this into the previous inequality and noting that , we obtain . The right-hand side is a quadratic function of Δ; as long as , one has F(Δ) > 0. By Lemma 4 in Negahban et al. (2012), we have for some positive constant C.

Next we compute , where with . By an argument similar to that in the proof of Theorem 5, one has . To bound ∥Dl∥op, by the same argument as in the proof of Theorem 5, one has with probability at least , where and are some positive constants. Therefore, one has with probability at least . By condition (A4), one has . By the proof of Theorem 2, one has with probability at least for some positive constants and . Thus, when $\lambda \ge 4 C_5 n^{\tau - 1/2}(p^{1/2} + q^{1/2})$, one has with probability at least for some positive constants c1, c2, c3, and .

By the proof of Theorem 1, the event holds with probability tending to one. In particular, for some positive constants and . Therefore, there exist positive constants c1, c2, c3, c4 and c5 such that, with probability at least $1 - c_1 n^{2\kappa + \tau} \exp\{-c_2(p + q)\} - c_3 n^{2\kappa + \tau} \exp(-n) - c_4 \exp(-c_5 n^{1 - 2\kappa})$, one has for some positive constant C. When Assumptions (A5) and (A14) hold, with probability tending to one, one has . This completes the proof of Theorem 6.

C. Interpretations of Λl

In this section, we give a detailed interpretation of the definition of Λl. Without loss of generality, we assume with s fixed for notational simplicity. We first give a necessary condition for the rank consistency presented in Theorem 4. By Proposition 18 of Bach (2008), for any 1 ≤ l ≤ s, we have if . Since and Δl0 is a nonrandom quantity, we have . Recall that {Δl0 : 1 ≤ l ≤ s} is the minimizer of l0(Δ1, …, Δs), and thus {Δl0 : 1 ≤ l ≤ s} is the solution of the optimization problem

(9)

Using the method of Lagrange multipliers, consider the minimizer of

where {Λl, l = 1, …, s} are Lagrange multipliers. Thus, for l = 1, …, s, {Δl0 : 1 ≤ l ≤ s} satisfies

Recall that , , and for l = 1, …, s, where ⊗ denotes the Kronecker product. Let , K = diag{K1, …, Ks}, for l = 1, …, s such that

Then the Lagrange equation can be written as

It is easy to show that

From the above calculation, we can see that vec(Λ) = (vec(Λ1)T, …, vec(Λs)T)T is actually the Lagrange multiplier for the optimization problem (9).
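The vectorized Lagrange equations rely on the identity vec(AXB) = (Bᵀ ⊗ A) vec(X), where vec(·) stacks columns. The matrices Kl in the text cannot be reconstructed here, so the sketch below only checks the identity itself with arbitrary A, X and B. Note that NumPy's default reshape is row-major, so column stacking requires `order="F"`.

```python
import numpy as np

def vec(M):
    # Column-stacking vectorization, matching the usual vec(.) convention.
    return M.reshape(-1, order="F")

rng = np.random.default_rng(5)
A = rng.standard_normal((3, 4))
X = rng.standard_normal((4, 5))
B = rng.standard_normal((5, 2))

lhs = vec(A @ X @ B)                 # vec of the 3 x 2 product
rhs = np.kron(B.T, A) @ vec(X)       # (B^T kron A) is 6 x 20, vec(X) has length 20
print(np.allclose(lhs, rhs))
```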


Supplementary Material

The Supplementary Material, available online, includes a modified algorithm for our regularized low-rank estimation procedure when the response and covariates are not centered, as well as additional simulation results.

Contributor Information

Dehan Kong, Department of Statistical Sciences, University of Toronto.

Baiguo An, School of Statistics, Capital University of Economics and Business.

Jingwen Zhang, Department of Biostatistics, University of North Carolina at Chapel Hill.

Hongtu Zhu, Department of Biostatistics, University of North Carolina at Chapel Hill.

References

- Anderson TW (1955), “The integral of a symmetric unimodal function over a symmetric convex set and some probability inequalities,” Proceedings of the American Mathematical Society, 6, 170–176.

- Bach FR (2008), “Consistency of trace norm minimization,” Journal of Machine Learning Research, 9, 1019–1048.

- Barrett JC, Fry B, Maller J, and Daly MJ (2005), “Haploview: analysis and visualization of LD and haplotype maps,” Bioinformatics, 21, 263–265.

- Barut E, Fan J, and Verhasselt A (2016), “Conditional sure independence screening,” Journal of the American Statistical Association, 111, 1266–1277.

- Beck A and Teboulle M (2009), “A fast iterative shrinkage-thresholding algorithm for linear inverse problems,” SIAM Journal on Imaging Sciences, 2, 183–202.

- Borwein JM and Lewis AS (2010), Convex analysis and nonlinear optimization: theory and examples, Springer Science & Business Media.

- Breiman L and Friedman J (1997), “Predicting multivariate responses in multiple linear regression,” Journal of the Royal Statistical Society: Series B (Methodological), 59, 3–54.

- Bühlmann P and van de Geer S (2011), Statistics for High-Dimensional Data: Methods, Theory and Applications, New York, NY: Springer.

- Cai J-F, Candès EJ, and Shen Z (2010), “A singular value thresholding algorithm for matrix completion,” SIAM Journal on Optimization, 20, 1956–1982.

- Candès E and Tao T (2007), “The Dantzig selector: Statistical estimation when p is much larger than n,” The Annals of Statistics, 35, 2313–2351.

- Candès EJ and Recht B (2009), “Exact matrix completion via convex optimization,” Foundations of Computational Mathematics, 9, 717–772.

- Chen Y, Goldsmith J, and Ogden T (2016), “Variable Selection in Function-on-Scalar Regression,” Stat, 5, 88–101.

- Chiang MC, Barysheva M, Toga AW, Medland SE, Hansell NK, James MR, McMahon KL, de Zubicaray GI, Martin NG, Wright MJ, and Thompson PM (2011), “BDNF gene effects on brain circuitry replicated in 455 twins,” NeuroImage, 55, 448–454.

- Cook R, Helland I, and Su Z (2013), “Envelopes and partial least squares regression,” Journal of the Royal Statistical Society: Series B (Statistical Methodology), 75, 851–877.

- Ding S and Cook D (2018), “Matrix variate regressions and envelope models,” Journal of the Royal Statistical Society: Series B (Statistical Methodology), 80, 387–408.

- Ding S and Cook RD (2014), “Dimension folding PCA and PFC for matrix-valued predictors,” Statistica Sinica, 24, 463–492.

- Facchinei F and Pang J-S (2003), Finite-dimensional variational inequalities and complementarity problems Vol. I, Springer Series in Operations Research, Springer-Verlag, New York.

- Fan J, Feng Y, and Song R (2011), “Nonparametric independence screening in sparse ultra-high-dimensional additive models,” Journal of the American Statistical Association, 106, 544–557.

- Fan J and Lv J (2008), “Sure independence screening for ultrahigh dimensional feature space,” Journal of the Royal Statistical Society: Series B (Statistical Methodology), 70, 849–911.

- Fan J and Lv J (2010), “A selective overview of variable selection in high dimensional feature space,” Statistica Sinica, 20, 101–148.

- Fan J and Song R (2010), “Sure independence screening in generalized linear models with NP-dimensionality,” The Annals of Statistics, 38, 3567–3604.

- Fosdick BK and Hoff PD (2015), “Testing and modeling dependencies between a network and nodal attributes,” Journal of the American Statistical Association, 110, 1047–1056.

- Gabriel SB, Schaffner SF, Nguyen H, Moore JM, Roy J, Blumenstiel B, Higgins J, DeFelice M, Lochner A, Faggart M, et al. (2002), “The structure of haplotype blocks in the human genome,” Science, 296, 2225–2229.

- Hibar D, Stein JL, Kohannim O, Jahanshad N, Saykin AJ, Shen L, Kim S, Pankratz N, Foroud T, Huentelman MJ, Potkin SG, Jack C, Weiner MW, Toga AW, Thompson P, and ADNI (2011), “Voxelwise gene-wide association study (vGeneWAS): multivariate gene-based association testing in 731 elderly subjects,” NeuroImage, 56, 1875–1891.

- Huang M, Nichols T, Huang C, Yang Y, Lu Z, Feng Q, Knickmeyer RC, Zhu H, and ADNI (2015), “FVGWAS: Fast Voxelwise Genome Wide Association Analysis of Large-scale Imaging Genetic Data,” NeuroImage, 118, 613–627.

- Knight K and Fu W (2000), “Asymptotics for lasso-type estimators,” The Annals of Statistics, 28, 1356–1378.

- Leng C and Tang CY (2012), “Sparse matrix graphical models,” Journal of the American Statistical Association, 107, 1187–1200.

- Li L and Zhang X (2017), “Parsimonious tensor response regression,” Journal of the American Statistical Association, 112, 1131–1146.

- Liu J and Calhoun VD (2014), “A review of multivariate analyses in imaging genetics,” Frontiers in Neuroinformatics, 8, 29.