Abstract

Background

Use of patient portals has been associated with positive outcomes in patient engagement and satisfaction. Portal studies have also linked portal use, the nature of users’ interactions with portals, and the content of the data users generate to meaningful cost and quality outcomes. Incentive programs in the United States have encouraged uptake of health information technology, including patient portals, by setting standards for meaningful use of such technology. However, despite widespread interest in patient portal use and adoption, studies on patient portals differ in the metrics actually used to operationalize and track utilization, leading to unsystematic and incommensurable characterizations of use. No known review has systematically assessed the measurements used to investigate patient portal utilization.

Objective

The objective of this study was to apply systematic review criteria to identify and compare methods for quantifying and reporting patient portal use.

Methods

Original studies with quantifiable metrics of portal use published in English between 2014 and the search date of October 17, 2018, were obtained from PubMed using the Medical Subject Heading term “Patient Portals” and related keyword searches. The first search round included full text review of all results to confirm a priori data charting elements of interest and suggest additional categories inductively; this round was supplemented by the retrieval of works cited in systematic reviews (based on title screening of all citations). An additional search round included broader keywords identified during the full-text review of the first round. Second round results were screened at abstract level for inclusion and confirmed by at least two raters. Included studies were analyzed for metrics related to basic use/adoption, frequency of use, duration metrics, intensity of use, and stratification of users into “super user” or high utilizers. Additional categories related to provider (including care team/administrative) use of the portal were identified inductively. Additional analyses included metrics aligned with meaningful use stage 2 (MU-2) categories employed by the US Centers for Medicare and Medicaid Services and the association between the number of portal metrics examined and the number of citations and the journal impact factor.

Results

Of 315 distinct search results, 87 met the inclusion criteria. Counting the five a priori metrics plus provider use, most studies included either three (26 studies, 30%) or four (23 studies, 26%) metrics. Nine studies (10%) reported only the patient use/adoption metric, and only one study (1%) reported all six metrics. Of the US-based studies (n=76), 18 (24%) were explicitly motivated by MU-2 compliance; 40 studies (53%) at least mentioned these incentives, but only 6 studies (8%) presented metrics from which compliance rates could be inferred. Finally, the number of metrics examined was not associated with either the number of citations or the publishing journal’s impact factor.

Conclusions

Portal utilization measures in the research literature can fall below established standards for “meaningful use,” or they can substantively exceed those standards in the type and number of utilization properties measured. Understanding how patient portal use has been defined and operationalized may encourage more consistent, well-defined, and perhaps more meaningful standards for utilization, informing future portal development.

Keywords: patient portals, meaningful use, American Recovery and Reinvestment Act, Health Information Technology for Economic and Clinical Health Act, portal utilization, patient-generated health data, portal, systematic review

Introduction

A patient portal is a secure online website, managed by a health care organization, that provides patients access to their personal health information [1-3]. Portals were developed to give patients a platform through which to claim ownership of their health care. For patients who adopt portals, portal use has been shown to positively affect health outcomes [1]. Although portals were introduced in the late 1990s to augment patient engagement [2], widespread adoption did not occur until 2006 [2,4]. As of 2018, a reported 90% of health care organizations offered patients portal access, with the remaining 10% reporting plans to adopt this tool [5].

Numerous studies have investigated the relationship between patient portal utilization and health outcomes, specifically indicating a link between increased portal use and increased patient engagement [6-9]. Notably, engaged individuals participate more actively in the management of their health care [10] and report greater patient satisfaction [11], a finding of growing importance for patients with chronic diseases [12]. Patient portal utilization has been linked to “significant decreases in office visits…, changes in medication regimen, and better adherence to treatment” [13], along with improved chronic disease management and disease awareness [8,9]. Interestingly, even the content of patient messages was recently found to be associated with estimated readmission rates in patients with ischemic heart disease [14]. In these ways, patient portals have been cited as essential components of the solution to the cost and quality health care crisis in the United States [2].

A driving force behind the adoption and continued development of patient portals is the set of meaningful use (MU) criteria [13,15,16]. The American Recovery and Reinvestment Act of 2009 [2,16] included $30 billion [17] to implement the MU incentive program, funding government reimbursements for patient-centered health care [13] with the goal of using electronic exchange of health information to improve quality of care [2]. Specific program guidelines, including an emphasis on increasing patient-controlled data and financial incentives to interact with patients through a patient portal [1,18], resulted in increased portal utilization [1]. Interactive, MU-mandated features of patient portals currently include (1) a clinical summary following each patient visit, (2) support for secure messaging between the patient and health care provider, and (3) the ability to view, download, and transmit patient data [2].

Coupled with advances in technology and a continued shift toward patient-centered care, features beyond those described by MU criteria, including online appointment scheduling and bill payment, have been implemented and continue to shape portal evolution. Reflecting the perceived benefits of this technology, projections suggest that the rate at which patients wish to use patient portals far exceeds the rate at which their health care providers offer this technology, with an estimated 75% of individuals expected to access their personal health records via patient portals by 2020 [19].

Despite widespread portal interest and adoption, as well as comprehensive reviews on patient engagement with portals [2], to our knowledge no review has systematically assessed the measurements used to investigate patient portal utilization. Currently, measurement of patient portal use varies widely, and inconsistent conceptual definitions are a recurring limitation to robust analysis [20]. Understanding how patient portal use has been defined and operationalized, both previously and currently, may encourage consistent and well-defined measures of patient portal utilization. Further, standardized patient portal measurements would provide a basis from which to systematically analyze how to continue developing patient portals best suited to consumer needs.

Methods

Study Eligibility Criteria

This systematic review includes original studies with quantifiable metrics of portal use, broadly construed. Studies reporting only subjective assessments of usability, design requirements, or other qualitative analyses were excluded as nontopical. Systematic reviews were also excluded, although their bibliographies were used for reference crawling. The criteria used to determine eligibility of studies employing self-reported use and prospective studies emerged inductively through interrater review and discussion (between TM, LLB, and JMK) based on preliminary results. Self-reporting measures were excluded unless they reported direct portal usage data that were quantifiable and similar to actual portal use tracking (eg, frequency of logins, duration of sessions, number of functions used, etc). Prospective trial designs were omitted if they artificially influenced portal use but were included if the portal use metric could be reasonably abstracted from its experimental context (eg, as either a quantified outcome or an uncontrolled baseline measure).

Studies available in English, published between 2014 and the end search date of October 17, 2018, were eligible for inclusion. The year 2014 was selected due to the full rollout of Centers for Medicare & Medicaid Services (CMS) meaningful use (MU) stage 2 (MU-2) requirements and the emergence of “Patient Portals” as a Medical Subject Headings (MeSH) term (automatically including cognate terms “Patient Internet Portal,” “Patient Portal,” “Patient Web Portal,” and “Patient Web Portals,” and subsumed under “Health Records, Personal,” with previous indexing via the less focused term, “Electronic Health Records,” from 2010-2016). Although US MU-2 regulations were of particular interest, we did not exclude studies from other countries, so that potentially informative use metrics employed outside the United States could also contribute to the results. Studies from outside the United States were not included in the analysis focused on MU.

Identification and Selection of Studies

The search proceeded in two major rounds. In round 1, authors JMK and LLB identified studies in PubMed by applying the MeSH term “Patient Portals” with no other limiters. All round 1 results were reviewed in full text (in English, when available) rather than screened by title and abstract alone. Full texts were read to better orient the raters to the patient portal utilization literature, suggest secondary sources and search terms, confirm a priori data charting categories of interest (use/adoption, frequency, duration, intensity, and super user), and suggest additional charting elements inductively. Within the round 1 results, secondary searching was performed on all article bibliographies, and relevant titles were retrieved for review. Results published before 2014 were excluded because of the emerging relevance and centrality of CMS MU.

After reviewing the literature (241 full-text articles from round 1, including 148 articles from PubMed and 93 articles from the bibliographic search), we used the themes that emerged as commonly studied metrics as the basis of our coding for the complete two-part search process. These themes confirmed our a priori themes of interest and served as limiters in the broader keyword-based round 2 search, complementing the more restrictive MeSH-based round 1 search. Round 2 searching (by TM) applied the following terms at title/abstract and keyword levels: “patient portal” AND (frequency OR use OR duration OR intensity). Duplicate results were removed automatically in Stata by matching on title, author, and year, followed by a manual check to remove additional duplicates that were missed because of differences in formatting, punctuation, and similar variations.
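The automatic duplicate-removal step was done in Stata; the Python sketch below only illustrates the same matching idea under stated assumptions. Records are hypothetical dictionaries with `title`, `first_author`, and `year` fields, and the normalization rules (lowercasing, stripping accents and punctuation) are our assumption of a reasonable match key, not the authors’ Stata code. Any variation the normalization does not anticipate still slips through, which is why a manual pass remains necessary.

```python
import re
import unicodedata


def normalize(text: str) -> str:
    """Lowercase, strip accents, and replace punctuation with spaces (assumed rules)."""
    text = unicodedata.normalize("NFKD", text)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    text = re.sub(r"[^a-z0-9 ]+", " ", text.lower())
    return " ".join(text.split())


def dedupe(records):
    """Keep the first record for each (normalized title, first author, year) key."""
    seen = set()
    unique = []
    for rec in records:
        key = (normalize(rec["title"]), normalize(rec["first_author"]), rec["year"])
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique


# Hypothetical search results: the first two differ only in punctuation and case.
records = [
    {"title": "Patient Portal Use: A Study.", "first_author": "Smith", "year": 2016},
    {"title": "patient portal use - a study", "first_author": "Smith", "year": 2016},
    {"title": "Portal Messaging and Outcomes", "first_author": "Lee", "year": 2017},
]
print(len(dedupe(records)))  # 2
```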

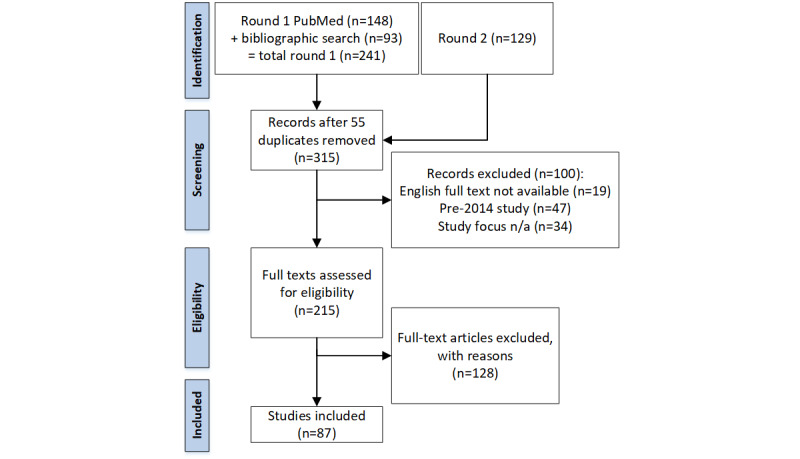

The full results were screened at the abstract level to exclude non–English articles, those without full text, sources older than 2014, and articles lacking quantified portal metrics. Two raters (LLB and JMK) assessed inclusion at the abstract level, followed by full text review; any inclusion or data charting discrepancies were resolved in rater meetings (by LLB, JMK, and TM). Figure 1 summarizes the screening and inclusion process.

Figure 1.

Study selection and inclusion process reported per guidelines of the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) [21]. n/a: Not applicable.

Data Collection and Study Appraisal

For coding purposes, use/adoption, frequency, duration, intensity, and super user (or similar user stratification) were considered a priori themes from which to extract definitions; provider use emerged as a theme inductively. Super user, in this context, is synonymous with high utilizer and should not be confused with the information technology standard definition implying a user with elevated privileges. All metrics were coded as binary, indicating the presence of a measure for and/or definition of each respective metric. These data were coded and recorded in a spreadsheet containing the article citation information and columns for themes of interest for both portal use metric definitions and MU criteria. Extractors’ working definitions of metric types are summarized in Table 1.

Table 1.

Study inclusion metric definitions.

| Metric | Definition used for data charting |

| Provider use | |

| Patient use/adoption | |

| Frequency | |

| Duration | |

| Intensity | |

| Super user | |
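Because every metric was coded as present or absent, the charting spreadsheet is effectively a study-by-metric 0/1 matrix, and the summary counts reported in the Results (per-metric totals, metrics per study, and distinct metric combinations) follow from simple sums. A minimal Python sketch, with hypothetical study names and column keys standing in for the actual spreadsheet:

```python
from collections import Counter

# Column order follows Table 2; the key names are illustrative.
METRICS = ["provider_use", "patient_use", "frequency", "duration", "intensity", "super_user"]

# Hypothetical charting rows: a metric appears with value 1 only if the study
# reports a measure for, or definition of, that metric (absent means 0).
coded = {
    "Study A": {"patient_use": 1, "frequency": 1, "intensity": 1},
    "Study B": {"patient_use": 1},
    "Study C": {"provider_use": 1, "patient_use": 1, "frequency": 1, "duration": 1},
}


def metric_totals(coded):
    """Number of studies reporting each metric (like the Total row of Table 2)."""
    return {m: sum(row.get(m, 0) for row in coded.values()) for m in METRICS}


def metrics_per_study(coded):
    """Number of metrics reported by each study (the quantity tabulated in Table 3)."""
    return {study: sum(row.get(m, 0) for m in METRICS) for study, row in coded.items()}


def combination_counts(coded):
    """How often each distinct combination of metrics occurs across studies."""
    return Counter(tuple(m for m in METRICS if row.get(m, 0)) for row in coded.values())


print(metric_totals(coded))
print(metrics_per_study(coded))
print(len(combination_counts(coded)))  # number of distinct combinations
```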

For included articles from the United States, a set of CMS MU themes emerged as relevant and were added upon agreement by all raters. An “MU motive” coding tag indicated either explicit desire for MU compliance in the article or at least mention of MU (eg, in the introduction or discussion). “MU consistent” tags rated apparent compatibility between the metric used and basic MU-2 requirements (regardless of motive), and included (1) “access”—coded if the percentage of patients accessing the portal information could be derived; (2) “send”—coded if patient-initiated messaging was tracked; (3) “view-download-transmit” (VDT)—coded if the percentage of patients who could VDT (specifically within the 4-day window following an office visit or 36 hours after discharge from hospital) could be determined from the metrics reported; and (4) MU-2—coded as shorthand when all three conditions, which together entail the full MU-2 compliance requirements, were met.
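The “MU consistent” rules above amount to three yes/no checks plus a conjunction, and the VDT check depends on a time window anchored at the visit or discharge. The Python sketch below is illustrative only: the study fields (`reports_pct_accessing`, `tracks_patient_messages`, `reports_pct_vdt_in_window`) are hypothetical names, and the 4-day window is treated as 4 calendar days, a simplifying assumption rather than a restatement of the CMS rule.

```python
from datetime import datetime, timedelta

# Windows taken from the coding rules; "4-day" is assumed to mean 4 calendar days.
VISIT_WINDOW = timedelta(days=4)
DISCHARGE_WINDOW = timedelta(hours=36)


def vdt_within_window(encounter_end: datetime, vdt_time: datetime, inpatient: bool) -> bool:
    """True if a view/download/transmit event falls within the window after the encounter."""
    window = DISCHARGE_WINDOW if inpatient else VISIT_WINDOW
    return encounter_end <= vdt_time <= encounter_end + window


def mu_tags(study: dict) -> dict:
    """Derive the "MU consistent" tags from what a study reports (hypothetical field names)."""
    tags = {
        "access": bool(study.get("reports_pct_accessing")),
        "send": bool(study.get("tracks_patient_messages")),
        "vdt": bool(study.get("reports_pct_vdt_in_window")),
    }
    # MU-2 is shorthand for meeting all three conditions at once.
    tags["mu2"] = tags["access"] and tags["send"] and tags["vdt"]
    return tags


visit = datetime(2018, 3, 1, 10, 0)
print(vdt_within_window(visit, datetime(2018, 3, 4, 9, 0), inpatient=False))  # True
print(vdt_within_window(visit, datetime(2018, 3, 3, 0, 0), inpatient=True))   # False
print(mu_tags({"reports_pct_accessing": True, "tracks_patient_messages": True}))
```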

Finally, in lieu of assessing the methodological quality of these wide-ranging patient portal studies, we assessed quality using two proxy criteria: the 2019 impact factor of the journal in which each article was published (except in one instance, where the 2018 impact factor was used) and the citation count of each article. For the latter, we extracted the total number of citations (as of September 22, 2020) and calculated the mean number of citations per year that each article received, based on the time elapsed since its online publication date. Regression analyses were conducted to determine whether the number of use metrics predicted the mean number of citations per year or the impact factor of the journal in which the article was published.
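The paper does not spell out how elapsed time or the regression model was specified, so the following Python sketch shows one plausible version: elapsed years computed as days divided by 365.25 up to the September 22, 2020 extraction date, and a simple least-squares fit of a quality proxy on the number of metrics. The example articles are hypothetical, and significance testing (p-values) is omitted.

```python
from datetime import date

EXTRACTION_DATE = date(2020, 9, 22)  # date the citation counts were extracted


def citations_per_year(total_citations: int, online_pub: date) -> float:
    """Mean citations per year since online publication (elapsed days / 365.25)."""
    years = (EXTRACTION_DATE - online_pub).days / 365.25
    return total_citations / years


def ols(x, y):
    """Least-squares fit of y = intercept + slope * x; returns (intercept, slope, r_squared)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return intercept, slope, 1 - ss_res / ss_tot


# Hypothetical articles: (number of metrics, total citations, online publication date)
articles = [
    (1, 12, date(2016, 5, 1)),
    (3, 40, date(2015, 2, 10)),
    (4, 25, date(2017, 8, 20)),
    (2, 30, date(2014, 11, 3)),
    (5, 18, date(2018, 1, 15)),
]
x = [n_metrics for n_metrics, _, _ in articles]
y = [citations_per_year(c, d) for _, c, d in articles]
print(ols(x, y))
```

The same `ols` call applies to the impact factor analysis by substituting each article’s journal impact factor for `y`.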

Results

A total of 315 search results remained after the removal of duplicates. All 315 articles were examined for defined patient portal metrics, with records excluded (n=100) for the following reasons: lack of the full-text English-language article or a suitably detailed abstract (non–English-language [n=18] or no text available [n=1]), study publication date prior to 2014 (n=47), and/or nonapplicable study focus (n=34). The remaining 215 studies were analyzed; of these, 128 were excluded, leaving 87 studies for inclusion in the analysis (see Figure 1). Notably, the abstracts (or translations thereof) of 18 non–English-language exclusions also met other exclusion criteria (eg, qualitative only/no metrics defined, portal development or usability studies, or literature reviews of unrelated portal topics).
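The screening counts reported above reconcile as follows. All numbers come directly from this paragraph; the four exclusion reasons sum exactly to the 100 screening exclusions, so each record is counted under one reason here. The short Python check simply makes the arithmetic explicit.

```python
after_deduplication = 315
screening_exclusions = {
    "non-English-language": 18,
    "no text available": 1,
    "published before 2014": 47,
    "nonapplicable study focus": 34,
}
full_text_exclusions = 128

excluded_at_screening = sum(screening_exclusions.values())
analyzed = after_deduplication - excluded_at_screening
included = analyzed - full_text_exclusions
print(excluded_at_screening, analyzed, included)  # 100 215 87
```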

Patient use was the most commonly studied patient portal metric, analyzed in 90% (78/87) of studies. Super user designations were found in only 24% (21/87) of studies, making this the least commonly studied metric. Table 2 indicates which metrics each study included, with totals for each metric [6-10,18,22-102]. There were 32 different combinations of study metrics (Table 3); the two most common were patient use/adoption, frequency, and intensity (n=9) and patient use/adoption alone (n=9). The majority of studies (53/87, 61%) analyzed three or fewer metrics, and the average number of metrics reported was 3.11. The definitions of these 271 metrics are summarized by study in Multimedia Appendix 1.

Table 2.

Frequencies of metric inclusion in analyzed studies (N=87).

|  | Metrica |  |  |  |  |  |

| Study | Provider use | Patient use/adoption | Frequency | Duration | Intensity | Super user |

| Ackerman et al, 2017 [60] | ✓b | ✓ | ✓ | ✓ | ✓ |  |

| Ahmedani et al, 2016 [92] |  | ✓ | ✓ |  |  |  |

| Aljabri et al, 2018 [69] |  | ✓ |  |  | ✓ |  |

| Alpert et al, 2017 [98] | ✓ |  | ✓ | ✓ | ✓ | ✓ |

| Arcury et al, 2017 [47] |  | ✓ | ✓ |  | ✓ |  |

| Bajracharya et al, 2016 [89] |  | ✓ | ✓ |  | ✓ |  |

| Baldwin et al, 2017 [83] |  | ✓ |  |  |  |  |

| Bell et al, 2018 [50] |  | ✓ | ✓ |  | ✓ |  |

| Boogerd et al, 2017 [31] | ✓ | ✓c | ✓ |  |  | ✓ |

| Bose-Brill et al, 2018 [67] | ✓ |  | ✓ |  | ✓ | ✓ |

| Bower et al, 2017 [93] |  | ✓ |  |  |  |  |

| Chung et al, 2017 [70] | ✓ | ✓c | ✓ |  | ✓ | ✓ |

| Crotty et al, 2014 [58] | ✓ | ✓ | ✓ |  | ✓ |  |

| Crotty et al, 2015 [100] | ✓ | ✓ | ✓ | ✓ | ||

| Dalal et al, 2016 [101] | ✓ | ✓c | ✓ |  | ✓ |  |

| Davis et al, 2015 [96] | ✓ | ✓ | ||||

| Devkota et al, 2016 [51] | ✓ | ✓ | ||||

| Dexter et al, 2016 [77] | ✓ | |||||

| Emani et al, 2016 [30] | ✓ | ✓ | ✓ | ✓ | ||

| Fiks et al, 2015 [68] | ✓ | ✓ | ||||

| Fiks et al, 2016 [53] | ✓ | ✓ | ||||

| Forster et al, 2015 [22] | ✓ | ✓ | ✓ | ✓ | ||

| Garrido et al, 2014 [39] | ✓ | ✓ | ✓ | ✓ | ✓ | |

| Garrido et al, 2015 [23] | ✓ | |||||

| Gheorghiu and Hagen, 2017 [24] | ✓ | ✓ | ||||

| Gordon and Hornbrook, 2016 [62] | ✓ | ✓ | ||||

| Graetz et al, 2016 [86] | ✓ | ✓ | ||||

| Griffin et al, 2016 [18] | ✓ | ✓ | ✓ | ✓ | ||

| Groen et al, 2017 [49] | ✓ | ✓ | ✓ | |||

| Haun et al, 2014 [73] | ✓ | ✓ | ✓ | |||

| Henry et al, 2016 [46] | ✓ | |||||

| Jhamb et al, 2015 [44] | ✓ | ✓ | ✓ | |||

| Jones et al, 2015 [71] | ✓ | ✓ | ✓ | ✓ | ✓ | |

| Kamo et al, 2017 [56] | ✓ | ✓ | ✓ | ✓ | ||

| Kelly et al, 2017 [72] | ✓ | ✓ | ✓ | ✓ | ||

| King et al, 2017 [34] | ✓ | ✓ | ✓ | ✓ | ✓ | |

| Kipping et al, 2016 [25] | ✓ | ✓ | ||||

| Krasowski et al, 2017 [36] | ✓ | ✓ | ✓ | |||

| Krist et al, 2014 [61] | ✓ | ✓ | ✓ | |||

| Laccetti et al, 2016 [41] | ✓ | ✓ | ✓ | ✓ | ✓ | |

| Lau et al, 2014 [29] | ✓ | |||||

| Lyles et al, 2016 [9] | ✓c | ✓ | ✓ | ✓ | ||

| Mafi et al, 2016 [45] | ✓ | ✓ | ✓ | |||

| Manard et al, 2016 [35] | ✓ | ✓ | ||||

| Masterman et al, 2017 [99] | ✓ | ✓ | ||||

| Masterson Creber et al, 2016 [7] | ✓ | ✓ | ✓ | |||

| Mickles and Mielenz, 2014 [78] | ✓ | ✓ | ✓ | ✓ | ✓ | |

| Miles et al, 2016 [82] | ✓c | |||||

| Mook et al, 2018 [74] | ✓ | |||||

| Neuner et al, 2015 [42] | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |

| North et al, 2014 [102] | ✓c | ✓ | ✓ | ✓ | ||

| Oest et al, 2018 [80] | ✓ | ✓ | ||||

| Payne et al, 2016 [84] | ✓ | ✓ | ||||

| Pearl, 2014 [38] | ✓ | ✓ | ✓ | ✓ | ||

| Pecina et al, 2017 [54] | ✓ | ✓c | ✓ | |||

| Peremislov, 2017 [91] | ✓ | ✓c | ✓ | ✓ | ||

| Perzynski et al, 2017 [63] | ✓ | ✓ | ✓ | |||

| Petullo et al, 2016 [75] | ✓ | ✓ | ✓ | |||

| Phelps et al, 2014 [52] | ✓ | ✓ | ✓ | ✓ | ||

| Pillemer et al, 2016 [37] | ✓ | ✓ | ✓ | |||

| Price-Haywood and Luo, 2017 [6] | ✓ | |||||

| Quinn et al, 2018 [97] | ✓c | ✓ | ✓ | |||

| Redelmeier and Kraus, 2018 [26] | ✓ | ✓ | ✓ | |||

| Reed et al, 2015 [57] | ✓ | ✓ | ✓ | |||

| Reicher and Reicher, 2016 [64] | ✓ | ✓ | ✓ | |||

| Riippa et al, 2014 [27] | ✓ | ✓ | ✓ | ✓ | ||

| Robinson et al, 2017 [81] | ✓ | ✓ | ✓ | ✓ | ||

| Ronda et al, 2014 [48] | ✓ | ✓ | ||||

| Runaas et al, 2017 [28] | ✓ |  | ✓ | ✓ |  |  |

| Sarkar et al, 2014 [8] | ✓c | ✓ | ✓ | |||

| Shaw et al, 2017 [65] | ✓ | ✓ | ✓ | ✓ | ||

| Shenson et al, 2016 [76] | ✓ | ✓ | ✓ | |||

| Shimada et al, 2016 [95] | ✓c | ✓ | ✓ | ✓ | ||

| Smith et al, 2015 [85] | ✓ | ✓ | ✓ | ✓ | ||

| Sorondo et al, 2017 [43] | ✓ | ✓ | ✓ | |||

| Steitz et al, 2017 [87] | ✓ | ✓ | ✓ | ✓ | ||

| Thompson et al, 2016 [32] | ✓ | ✓ | ✓ | |||

| Toscos et al, 2016 [40] | ✓ | ✓ | ✓ | |||

| Tulu et al, 2016 [10] | ✓ | ✓ | ✓ | |||

| Vydra et al, 2015 [90] | ✓ | ✓ | ✓ | ✓ | ✓ | |

| Wallace et al, 2016 [88] | ✓ | ✓ | ✓ | ✓ | ||

| Weisner et al, 2016 [66] | ✓ | ✓ | ||||

| Williamson et al, 2017 [55] | ✓ | ✓ | ✓ | ✓ | ||

| Wolcott et al, 2017 [59] | ✓ | ✓c | ✓ | ✓ | ✓ | |

| Wolff et al, 2016 [94] | ✓ | ✓ | ||||

| Woods et al, 2017 [33] | ✓ | ✓ | ✓ | |||

| Zhong et al, 2018 [79] | ✓ | ✓ | ||||

| Total | 30 (34%) | 78 (90%) | 56 (64%) | 27 (31%) | 59 (68%) | 21 (24%) |

aSee Multimedia Appendix 1 for full definition of each metric from each article.

bIndicates presence of the metric.

cSpecial contexts shaped the form of the metric in ways atypical of direct use analysis (eg, due to experimental controls).

Table 3.

Number of metrics and metric combinations analyzed in 87 studies.

| Number of metrics analyzed | Studies analyzing stated metrics, n (%) | Metric combinations |

| 1 | 9 (10) | |

| 2 | 18 (21) | |

| 3 | 26 (30) | |

| 4 | 23 (26) | |

| 5 | 10 (11) | |

| 6 | 1 (1) | |
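The average of 3.11 metrics per study, the total of 271 coded metrics, and the 61% share of studies analyzing three or fewer metrics all follow directly from the distribution in Table 3, as this short Python check shows:

```python
# Distribution from Table 3: number of metrics analyzed -> number of studies.
distribution = {1: 9, 2: 18, 3: 26, 4: 23, 5: 10, 6: 1}

n_studies = sum(distribution.values())
n_metrics = sum(k * v for k, v in distribution.items())
share_three_or_fewer = sum(v for k, v in distribution.items() if k <= 3) / n_studies

print(n_studies, n_metrics, round(n_metrics / n_studies, 2), round(100 * share_three_or_fewer))
# 87 271 3.11 61
```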

MU serves as a driving criterion for patient portal adoption and utilization, as reflected by the 87% (66/76) of US publications that included MU criteria, irrespective of an explicit motive, and the 24% (18/76) of US studies that explicitly cited MU criteria as a driving force behind their publication. However, 33% (29/87) of all manuscripts did not include any MU motive, either because they were non-US studies (n=11) or, among the 76 US sources, because they did not state an MU motivation (n=17) or stated that the data and analysis did not fit the MU criteria because the study predated their release (n=1). These studies indicate that investigation of portal utilization metrics extends beyond MU motives and metrics. Lau and colleagues [29] noted that although exact login time stamps were available, frequency, duration, and intensity metrics went unanalyzed in favor of a simplified metric. Similarly, Emani et al [30] mentioned MU stage 1 requirements, yet purposefully extended the CMS 3-day window for patients to access their postvisit summary to 5 days. Boogerd and colleagues [31] analyzed portal implementation through portal inaccessibility, measuring login difficulties and downtime, and stratified implementation efficacy by self-reported parenting stress, an efficacy measure not widely seen in the published literature. Along similar lines, Thompson et al [32] reported the number of patient and proxy portal password changes, and Woods et al [33] reported the number of unsuccessful or incomplete logins per study subject. Thus, several studies examined meaningful metrics of portal use and usability that exceed, or at least were not anticipated by, MU-2 requirements.

The patient use/adoption metric was the most frequently studied of the analyzed variables, included in 90% (78/87) of studies (Table 2). By comparison, provider use was analyzed in only 34% (30/87) of studies and was rarely studied without also investigating patient use/adoption (5/87, 6%). Distinguishing patient use from provider use is important; however, some studies combine these distinct data points and analyze the summed use [34]. Similarly, other studies group patients not registered for the portal together with registered patients who have not sent messages, further illustrating the variability in reported metrics [35]. As with the other analyzed metrics, provider use definitions varied: while Krasowski et al [36] and Pillemer et al [37] tabulated provider use through “manual release of test results ahead of automatic release,” others calculated this metric through provider responses to patient messages [38,39]. The combined analysis of patient and provider use continues in more recent literature: Margolius et al [103] found that having a wealthier or larger patient population and working more days per week were associated with primary care physicians receiving more messages from patients, which the authors stratified by message type. Provider use has been shown to lead to patient use [20]; however, while patients can be led toward engagement with a system required by their physicians, they can also be led away from a system their providers do not use [104] (eg, if patients message their providers but do not receive a response). As mentioned previously, patient portal utilization has been employed as a proxy for patient engagement, with increased portal use associated with better patient outcomes [105].

Notably, 59 studies (68%) included intensity as an analyzed metric (Table 2), signaling the perceived importance of the depth of patients’ engagement with the portal. The definitions used by these studies varied: Emani and colleagues [30] distinguished between portal sessions and portal message use; Toscos et al [40] defined intensity as the proportion of users engaging the daily health diary function; and Laccetti et al [41] investigated “staff MyChart actions performed per patient-initiated message.”
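Intensity definitions differ mainly in their numerator and denominator. As a hedged illustration, the Python sketch below computes two of the intensity styles just described from a hypothetical event log: a ratio of staff actions to patient-initiated messages (in the spirit of Laccetti et al) and the share of patients using a particular feature (in the spirit of Toscos et al). The event fields and action labels are invented for illustration and do not correspond to any portal vendor’s schema.

```python
# Hypothetical portal event log: (patient_id, actor, action).
events = [
    ("p1", "patient", "send_message"),
    ("p1", "staff", "reply"),
    ("p1", "staff", "route_message"),
    ("p1", "patient", "health_diary"),
    ("p2", "patient", "send_message"),
    ("p2", "staff", "reply"),
    ("p3", "patient", "view_results"),
]


def staff_actions_per_patient_message(events):
    """Staff actions divided by patient-initiated messages (0.0 if there are no messages)."""
    patient_msgs = sum(1 for _, actor, action in events
                       if actor == "patient" and action == "send_message")
    staff_actions = sum(1 for _, actor, _ in events if actor == "staff")
    return staff_actions / patient_msgs if patient_msgs else 0.0


def share_using_feature(events, feature):
    """Share of patients with any patient-initiated event who also used `feature`.

    Using "patients with any activity" as the denominator is an assumption;
    a study could instead divide by all registered patients.
    """
    users = {pid for pid, actor, _ in events if actor == "patient"}
    feature_users = {pid for pid, actor, action in events
                     if actor == "patient" and action == feature}
    return len(feature_users) / len(users) if users else 0.0


print(staff_actions_per_patient_message(events))  # 1.5
print(share_using_feature(events, "health_diary"))
```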

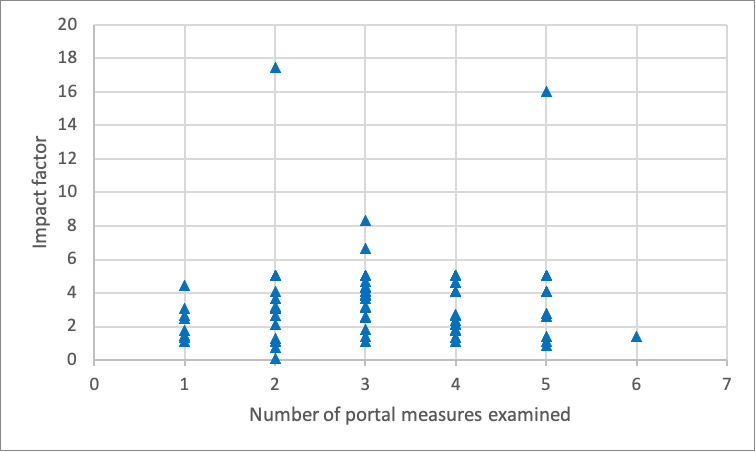

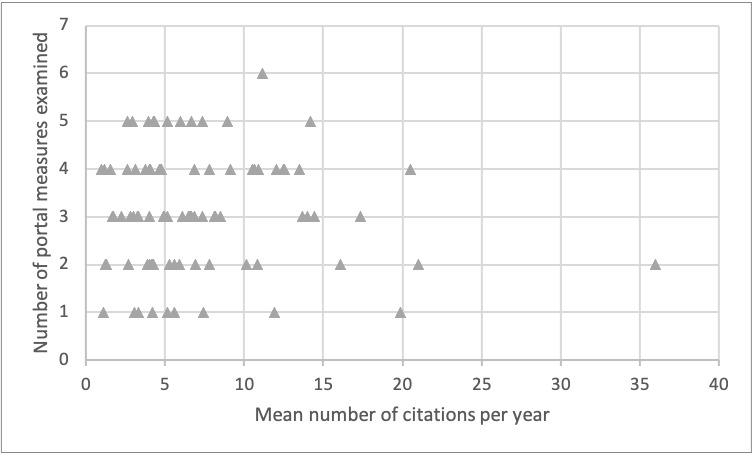

Neither of the quality variables (ie, journal impact factor and citation count) was statistically significantly associated with the number of patient portal metrics examined (Table 3). The relationships between the number of patient portal metrics examined and the journal impact factor and the mean number of citations per year are depicted in Figures 2 and 3, respectively.

Figure 2.

Relationship between the number of portal measures examined in an article and the impact factor of the publishing journal.

Figure 3.

Relationship between the number of portal measures examined in an article and the mean number of citations per year (via Google Scholar).

Table 4 summarizes the US studies consistent with each MU metric. Studies conducted outside the United States were excluded from the MU analysis because portal guidelines vary by country. Combining the three MU metrics (ie, access, send, and VDT), 10 (13%) of the 76 studies conducted in the United States met none of the MU metrics, meaning that 66 studies (87%) analyzed MU criteria in some capacity, irrespective of an explicit motive. However, only 18 (24%) of the 76 US studies explicitly cited MU criteria as a driving force behind their study.

Table 4.

Meaningful use (MU) definitions in US studies (n=76).

| Measure | Definition | Studies with requisite measure, n (%)a | Study citations |

| Explicit MU motive | | 18 (24) | [7,18,30,32,37,42,45,55-65] |

| Mention of MU | | 40 (53) | [6,8-10,33,36,38-40,43,44,47,50,53,54,66-90] |

| Access | | 45 (59) | [6,9,18,30,32,33,36-38,40,42-45,50,51,53-56,58,60-66,69,71,72,80-82,85,87-96] |

| Send | | 45 (59) | [8-10,18,30,32,35,39,40,42-44,50,51,54-60,62,63,65-67,70-73,75-78,85-88,91,94-99] |

| View-download-transmit (VDT) | | 9 (12) | [36,42,56,60,61,65,72,81,96] |

| MU-2 | | 6 (8) | [42,56,60,65,72,96] |

aPercentages exceed 100% total because studies could meet more than one criterion, and MU-2 represents studies that met all three conditions (access, send, and VDT) that together entail the full requirements of Centers for Medicare & Medicaid Services MU stage 2.
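Because MU-2 is defined as meeting access, send, and VDT together, the MU-2 row of Table 4 should equal the intersection of the other three citation lists. The Python check below expands the citation ranges exactly as printed in Table 4 and confirms that the intersection is the six studies listed in the MU-2 row; the `expand` helper is illustrative code, not part of the original analysis.

```python
def expand(spec: str) -> set:
    """Expand a citation list such as "36-38,40" into {36, 37, 38, 40}."""
    out = set()
    for part in spec.split(","):
        if "-" in part:
            lo, hi = map(int, part.split("-"))
            out.update(range(lo, hi + 1))
        else:
            out.add(int(part))
    return out


access = expand("6,9,18,30,32,33,36-38,40,42-45,50,51,53-56,58,60-66,69,71,72,80-82,85,87-96")
send = expand("8-10,18,30,32,35,39,40,42-44,50,51,54-60,62,63,65-67,70-73,75-78,85-88,91,94-99")
vdt = expand("36,42,56,60,61,65,72,81,96")

mu2 = access & send & vdt
print(len(access), len(send), len(vdt), sorted(mu2))
# 45 45 9 [42, 56, 60, 65, 72, 96]
```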

Further data analysis revealed that a larger percentage of manuscripts investigated three (30%) or four (26%) metrics than two metrics (21%), reflecting the perceived complexity of the relationships among variables influencing portal utilization. Only one study, by Neuner and colleagues [42], investigated all six metrics charted in this review, examining enrollment and use against MU guidelines as well as satisfaction; this highlights how rarely all available metrics are analyzed exhaustively. While “use” in that manuscript was defined as patients accessing their patient portal, other studies have defined use as the number of enrollees [32], access plus protocol-specific assessments and secure messaging [43], the percentage of patients with at least one login [44], and the number of patients who viewed physician notes within 30 days of their visit [45]. Henry and colleagues [46] defined use as registration versus nonregistration, whereas Arcury et al [47] and Graetz et al [86] analyzed use as a patient-reported binary metric, further highlighting the variability in this metric’s composition. The many studies that stratify use by at least one login may be capturing the login required to create the account rather than portal utilization as a proxy for health care engagement [6,29,48-50]. Some of these studies created specific user classifications, such as “nonusers,” “readers,” and “readers and writers” [51], potentially to refine a binary definition of use. As an example of the complexity of patient portal data, and to ensure the accuracy of the study population, Phelps et al [52] stratified users by the absence of any portal login in the past 6 months despite at least two lab uploads, ensuring that the population studied was alive and had reason to access the portal. Others classified use by the completion of at least one survey during the study period [53], the total number of patients on the mobile app [38], the number of patients initiating the online refill function [8], or contact with the care messenger via the portal [54], again highlighting the variability in the definition of this fundamental metric.
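The contrast between a one-login definition of use and a graded classification can be made concrete. The Python sketch below is hypothetical: the per-patient counts and thresholds are assumptions, and the three class labels borrow the “nonusers,” “readers,” and “readers and writers” terminology cited above without reproducing any study’s exact rules. A patient whose only login created the account counts as a user under the binary rule but as a nonuser under the graded one.

```python
def is_user_one_login(logins: int) -> bool:
    """Binary definition of use: at least one login (which may just be account creation)."""
    return logins >= 1


def classify_user(messages_read: int, messages_sent: int) -> str:
    """Graded classes; sending a message is assumed here to imply reading as well."""
    if messages_sent > 0:
        return "reader and writer"
    if messages_read > 0:
        return "reader"
    return "nonuser"


# Hypothetical patients: (logins, messages read, messages sent)
patients = {"a": (1, 0, 0), "b": (6, 4, 0), "c": (12, 9, 3)}
for pid, (logins, read, sent) in patients.items():
    print(pid, is_user_one_login(logins), classify_user(read, sent))
```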

In the absence of standardized definitions, MU terminology is applied inconsistently throughout the published literature [2,32,43,106]. At the most fundamental level, a lack of distinction between the personal health record (PHR), whose ownership and management lie with the patient, and the patient portal, whose ownership and management lie with the health care organization, was evident in publications that investigated patient portals but included PHR information in the statistical analysis [13,104,107]. Devkota and colleagues [51] attributed mixed findings on the relationship between frequency of portal utilization and health outcomes to differences in how studies analyze portal interaction: some studies focused on message counts [31,41,70], whereas others focused on the intensity of interaction with providers through portal messaging, stratifying patients by no use, read-only, and read-and-write [18,43]. Jones et al [71] included consistency as part of their frequency measurement, an inclusion not found in other manuscripts. Baldwin and colleagues [83] explicitly stated that “registration rates and ID verifications do not account for the people who register but do not actively use the portal,” citing difficulties in their “use” analysis from patients who “report login issues and difficulty navigating portals.”

Discussion

Principal Findings

Portal analyses have extended beyond MU-2 criteria in an effort to best meet provider and patient needs. Fast Pass—an “automated rescheduling system” requiring opt-in through a patient portal—not only indirectly measured patient use/adoption through logins to enter the program but showed that automated rescheduling prompts reduced no-show appointments by 38% [108]. Patient portal utilization extending beyond MU criteria has been critical during the COVID-19 pandemic, with Patel et al [109] describing how pediatric patient portals pivoted from traditional, in-person enrollment methods and Judson et al [110] detailing the creation of a COVID-19 self-triage and assessment tool for primary care patients. The widespread patient portal adoption in the United States provides the necessary foundation for patients to access telemedicine visits while simultaneously creating a digital divide—a topic vastly cited since the emergence of patient portals [111-113]. Graetz et al [86] asserted that the digital divide particularly impacts disadvantaged groups; they observed that the use of a personal computer and internet access “explained 52% of the association between race and secure message use and 60% of the association between income and use,” and suggested that providing portal access across multiple platforms, including telephones, could reduce message use disparities. Further, initiation of portal use has been found to be “lower for racial and ethnic minorities, persons of lower socioeconomic status, and those without neighborhood broadband internet access,” leading to a digital divide in portal utilization [63]. A mismatch between “MU-based metrics of patient engagement and the priorities and needs of safety net populations” has also been cited [60], mirroring the recent combination of patient and provider use by Margolius et al [103] that found an increased quantity of patient-driven messages received by clinicians with a more robust or wealthier patient base. 
We recognize that portal utilization varies widely across institutions: some use patient portals for appointment scheduling, uploading demographic data, completing assessments before clinical visits, and even downloading parking passes for on-site visits, whereas others rely on portals mainly for secure messaging and/or laboratory results. Although our investigation focused primarily on definitional differences in use across institutions, future investigations should explore portal-specific patient education and training, as well as differences in portal functionality.

Because the intended target and purpose of portal interventions vary widely, the patient portal metrics used will depend on the functionalities being tested in each intervention. This precludes specific, overarching recommendations for portal analysis; however, systematic analysis of portal functions using clear definitions provides a foundation from which future studies can more readily compare portal use. Defining "use" more substantively, by removing the baseline of a single login (which could be the login used to create the portal account itself, without any meaningful interaction with portal functions), could further support the generation of meaningful utilization data. We therefore recommend that future studies clearly and specifically define the portal metrics used, to allow comparisons across studies, and avoid a binary measure of patient use/adoption that counts just one login, because creating a portal account often requires an initial login that does not necessarily amount to any MU. Relatedly, Gheorghiu and Hagens [106] criticized analyzing only the aggregate number of portal accesses because this cannot distinguish a large number of infrequent users from a small number of frequent users. Further, we recommend that all future patient portal studies report the following population characteristics: the total organization population that could have access to the portal; the number (and percentage of the total) of patients who currently have a patient portal account, regardless of use; and the number of patients who have used their account within the past year, with use defined as at least two logins. This information will allow meaningful comparisons across studies without being overly cumbersome to obtain.
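
The three recommended population characteristics can be computed directly from account and login records. The sketch below is a minimal illustration, not a method used by any of the reviewed studies; the `Patient` record, its field names, and the 365-day window ending on an `as_of` date are assumptions, and "use" is taken to mean at least two logins within that window.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import Dict, List


@dataclass
class Patient:
    # Hypothetical per-patient record; field names are illustrative only.
    patient_id: str
    has_account: bool
    login_dates: List[date] = field(default_factory=list)


def portal_population_summary(
    patients: List[Patient], as_of: date, min_logins: int = 2, window_days: int = 365
) -> Dict[str, float]:
    """Report the population characteristics recommended for portal studies.

    A patient counts as having used the portal only with at least `min_logins`
    logins in the `window_days` up to `as_of`, so the single login that account
    creation often requires does not count as use on its own.
    """
    window_start = as_of - timedelta(days=window_days)
    total = len(patients)
    with_account = [p for p in patients if p.has_account]
    recent_users = [
        p
        for p in with_account
        if sum(window_start <= d <= as_of for d in p.login_dates) >= min_logins
    ]
    return {
        "total_population": total,
        "with_account": len(with_account),
        "pct_with_account": 100 * len(with_account) / total if total else 0.0,
        "used_past_year": len(recent_users),
    }
```

Keeping the login threshold and the window as explicit parameters makes the operational definition of use visible, so another study can reproduce it or deliberately vary it.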

The diversity of metrics found in this review may also help inform patient portal operations and dashboards about what may be worth tracking for research purposes. Additionally, the categories that emerged during this review (ie, patient and/or provider use/adoption, duration, frequency, intensity, and super user) could be used going forward to classify the variables of interest in future patient portal studies. A few articles noted in their limitations that a metric the authors considered valuable could not be tracked because the data were unavailable. These included frequency data for early adopters [84], intensity in the form of portal components accessed [35], and patient access to radiology images, which the authors considered important independent of MU-2 compliance but which, being unrelated to compliance, was not available [64]. One cannot study, or improve, what one does not track or offer to patients.
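
One way to apply these categories when coding study variables is to record each metric together with the actor whose behavior it measures, its category, and an explicit operational definition. The sketch below is a hypothetical data structure; the class names and the example metrics and definitions (including the top-10% super user threshold) are illustrative assumptions rather than metrics drawn from specific reviewed studies.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Actor(Enum):
    PATIENT = "patient"
    PROVIDER = "provider"  # includes care team/administrative users


class MetricCategory(Enum):
    USE_ADOPTION = "use/adoption"
    FREQUENCY = "frequency"
    DURATION = "duration"
    INTENSITY = "intensity"
    SUPER_USER = "super user"


@dataclass(frozen=True)
class PortalMetric:
    name: str                 # label used in the study
    actor: Actor              # whose portal behavior is measured
    category: MetricCategory  # review category the metric falls under
    definition: str           # explicit operational definition


# Hypothetical coded variables from an imagined study.
metrics = [
    PortalMetric("activated account", Actor.PATIENT, MetricCategory.USE_ADOPTION,
                 "account activated during the study period"),
    PortalMetric("sessions per month", Actor.PATIENT, MetricCategory.FREQUENCY,
                 "sessions (login to logout or 20-minute timeout) divided by months observed"),
    PortalMetric("minutes per session", Actor.PATIENT, MetricCategory.DURATION,
                 "mean session length in minutes"),
    PortalMetric("components accessed", Actor.PATIENT, MetricCategory.INTENSITY,
                 "number of distinct portal components used"),
    PortalMetric("top-decile messagers", Actor.PATIENT, MetricCategory.SUPER_USER,
                 "patients in the top 10% by messages sent (illustrative threshold)"),
    PortalMetric("provider-initiated messages", Actor.PROVIDER, MetricCategory.FREQUENCY,
                 "messages sent by clinicians per month"),
]


def by_category(metrics: List[PortalMetric], category: MetricCategory) -> List[str]:
    """Names of the metrics coded under one category, in input order."""
    return [m.name for m in metrics if m.category is category]
```

Storing the definition alongside the category means two studies that both report "frequency" can be checked for whether they actually measured the same thing.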

The stratification of patient use/adoption is another important area for future analysis. For instance, Zhong et al [79] cited the lack of quantification of active use (eg, per-user frequency or by-function intensity) as a study limitation. Some studies analyzed portal utilization through more "active" measures using a variety of multicomponent or stratified criteria. Devkota et al [51] grouped patients into "nonusers," who either did not activate their account or activated it but did not write a message; "readers," who accessed but did not reply to emails; and "readers and writers," who read and subsequently wrote emails. Oest et al [80] delineated commonly accessed portal features, including outpatient laboratory and radiology results. Miles et al [82] stratified use by the types of available reports accessed; that is, the percentage of all portal-registered patients who viewed their radiology results was compared with views of other reports. Manard et al [35] distinguished active use (patients who wrote messages) from no active use (patients who did not register, or who registered but only read messages).
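
A stratification such as that of Devkota et al can be made reproducible by writing it as an explicit classification rule. As worded, the "nonuser" group (activated but did not write a message) overlaps the "reader" group, so the hypothetical sketch below assumes a precedence: any written message places a patient among readers and writers, any read message without writing places them among readers, and everyone else is a nonuser. The function name, its inputs, and this precedence are illustrative assumptions, not Devkota et al's published procedure.

```python
from collections import Counter
from enum import Enum


class MessagingGroup(Enum):
    NONUSER = "nonuser"
    READER = "reader"
    READER_WRITER = "reader and writer"


def classify_messaging(activated: bool, messages_read: int, messages_written: int) -> MessagingGroup:
    """Assign one patient to a Devkota-style messaging group (assumed precedence)."""
    if not activated:
        return MessagingGroup.NONUSER
    if messages_written > 0:
        # Assumption: writing a message implies the patient also read messages.
        return MessagingGroup.READER_WRITER
    if messages_read > 0:
        return MessagingGroup.READER
    # Activated but neither read nor wrote any message.
    return MessagingGroup.NONUSER


# Hypothetical cohort: (activated, messages_read, messages_written) per patient.
cohort = [(False, 0, 0), (True, 0, 0), (True, 3, 0), (True, 5, 2)]
group_sizes = Counter(classify_messaging(*row) for row in cohort)
```

Whatever precedence a study chooses, stating it as a rule like this removes the ambiguity about where borderline patients fall.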

Examples of methods used to stratify patient use/adoption in more "passive" terms include grouping patients by login versus no login (eg, Ronda et al [48] and Price-Haywood and Luo [6]) and defining use as registration for notifications versus no registration at all (Henry et al [46]). Jones et al [71] defined an "active user" as one who had at least two portal sessions over the study period, with a session defined as lasting from login to logout or until the 20-minute timeout occurred. Similarly, Petullo et al [75] distinguished account activation, labeled "active," from sending messages, labeled "users." Both Jones et al [71] and Petullo et al [75] thus used the term "active" without requiring any portal engagement beyond login. Further, Masterson Creber et al [7] used the "Patient Activation Measure" to gauge patient engagement, highlighting the need to define more concretely the parameters of "active" versus "passive" portal use (and to disambiguate "active" in the sense of user activity from "active" in the sense of activated or registered accounts of otherwise passive users). Future measures should clearly distinguish between active and passive use.
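
Jones et al's session-based definition of an active user depends on how sessions are cut from raw log data. The following is a minimal sketch under stated assumptions: the event log format (a timestamp plus an event kind such as "login", "view", or "logout") is hypothetical, and the 20-minute timeout is treated as an inactivity gap between consecutive events. A session closes at an explicit logout or when that gap is exceeded, and an active user has at least two sessions.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

Event = Tuple[datetime, str]  # (timestamp, kind); kind is "login", "logout", or another action

TIMEOUT = timedelta(minutes=20)  # assumed to be an inactivity timeout


def split_sessions(events: List[Event], timeout: timedelta = TIMEOUT) -> List[List[Event]]:
    """Split one patient's event log into sessions.

    A session ends at an explicit logout or when more than `timeout`
    passes between consecutive events.
    """
    sessions: List[List[Event]] = []
    current: List[Event] = []
    last_ts = None
    for ts, kind in sorted(events, key=lambda e: e[0]):  # stable sort keeps tie order
        if current and ts - last_ts > timeout:
            sessions.append(current)  # previous session timed out
            current = []
        current.append((ts, kind))
        last_ts = ts
        if kind == "logout":
            sessions.append(current)  # explicit logout closes the session
            current = []
    if current:
        sessions.append(current)  # trailing session without a logout
    return sessions


def is_active_user(events: List[Event], min_sessions: int = 2) -> bool:
    """Active user: at least `min_sessions` sessions over the study period."""
    return len(split_sessions(events)) >= min_sessions
```

Treating the timeout as an inactivity gap is only one reading of "until the 20-minute timeout occurred"; a study applying this definition should state which reading it uses, since a fixed-length timeout could yield different session counts.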

Provider portal use appears to drive patient use: provider messaging levels and types predict subsequent patient communication behavior [56], and provider responses to other patients' messages were associated with a statistically significant increase in messages initiated by their patients [59]. A total of 30 studies in our systematic review specifically analyzed provider use, and nearly half of those analyzed provider-initiated messages. This further underscores the notion that physician use predicts patient use of portal functions, as well as the intersection of physician portal use with institutional support for portal utilization. Because organizational policies can mandate physician portal use (and nonuse), future studies should examine metrics for provider use more distinctly from patient use. As mentioned previously, provider use was analyzed separately from patient use in only 6% of studies, leaving an untapped lens through which to investigate the driving forces of patient portal utilization. Mafi et al [45] found that individualized physician reminders alerting patients to completed visit notes drove patient portal utilization and engagement. However, provider and patient utilization are also intrinsically linked: Laccetti et al's [41] analysis of portal use by clinical staff hinged on the clinical actions performed in response to received patient messages, and Crotty et al's [100] analysis of the characteristics of unread messages depended on both message sending by the provider and the absence of message reading by the patient. A recent study by Huerta et al [105] provided guidance on patient portal log file analysis and developed a taxonomy of computed analytic metrics. Patients who use their portal for longer periods are more likely to prefer communicating through it, further highlighting the importance of analyzing both patient and provider utilization [114].

Conclusion

Our investigation supports the claim that not all health care systems study patient portal utilization systematically; thus, health care system support of different communication modalities is essential. Currently, published analyses are largely limited to patient portal utilization as defined by MU criteria. More in-depth studies, such as the log file analyses conducted by Huerta et al [105] and, more recently, Di Tosto et al [115], which included a blueprint of individual patients' portal actions, would use patient portal data more completely than the literature currently does routinely. A systematic approach to measuring portal use is necessary to draw comparisons more readily across existing and future studies. Investigating both provider and patient use/adoption will provide insight for building a platform that is most beneficial for all users. One important limitation is that our review was limited to one database, although the main outlets for patient portal studies were included. Further, this is the largest known review examining patient portal research and the only review focusing on associated MU compliance assessment. Future investigations should analyze patient portal components more holistically, in combination with the utilization of health services, to uncover currently unseen relationships between portal use and patient health outcomes and to explore use that is, in the given context, truly meaningful.

Abbreviations

- CMS: Centers for Medicare & Medicaid Services
- MeSH: Medical Subject Heading
- MU: meaningful use
- MU-2: Centers for Medicare & Medicaid Services meaningful use stage 2
- PHR: patient health record
- VDT: view-download-transmit

Appendix

Detailed summary of included studies.

Footnotes

Authors' Contributions: TM conceptualized the research aim and supervised the project; TM and JMK designed the search strategy; LLB, JMK, and TM conducted literature searches and performed the data charting analysis; LLB and TM drafted the manuscript; TM, LLB, SLJ, and AK interpreted the study results; and all authors reviewed, revised, and approved the manuscript.

Conflicts of Interest: None declared.

References

- 1. Turner K, Hong Y, Yadav S, Huo J, Mainous A. Patient portal utilization: Before and after stage 2 electronic health record meaningful use. J Am Med Inform Assoc. 2019;26(10):960–967. doi: 10.1093/jamia/ocz030.

- 2. Irizarry T, DeVito DA, Curran CR. Patient Portals and Patient Engagement: A State of the Science Review. J Med Internet Res. 2015 Jun;17(6):e148. doi: 10.2196/jmir.4255. http://www.jmir.org/2015/6/e148/

- 3. Lockwood MB, Dunn-Lopez K, Pauls H, Burke L, Shah SD, Saunders MA. If you build it, they may not come: modifiable barriers to patient portal use among pre- and post-kidney transplant patients. JAMIA Open. 2018 Oct;1(2):255–264. doi: 10.1093/jamiaopen/ooy024. http://europepmc.org/abstract/MED/31984337.

- 4. Weitzman ER, Kaci L, Mandl KD. Acceptability of a personally controlled health record in a community-based setting: implications for policy and design. J Med Internet Res. 2009 Apr 29;11(2):e14. doi: 10.2196/jmir.1187. https://www.jmir.org/2009/2/e14/

- 5. Heath S. Patient Portal Adoption Tops 90%, But Strong Patient Use Is Needed. Patient Engagement HIT. [2020-04-29]. https://patientengagementhit.com/news/patient-portal-adoption-tops-90-but-strong-patient-use-is-needed.

- 6. Price-Haywood EG, Luo Q. Primary Care Practice Reengineering and Associations With Patient Portal Use, Service Utilization, and Disease Control Among Patients With Hypertension and/or Diabetes. Ochsner J. 2017;17(1):103–111. http://europepmc.org/abstract/MED/28331456.

- 7. Masterson Creber R, Prey J, Ryan B, Alarcon I, Qian M, Bakken S, Feiner S, Hripcsak G, Polubriaginof F, Restaino S, Schnall R, Strong P, Vawdrey D. Engaging hospitalized patients in clinical care: Study protocol for a pragmatic randomized controlled trial. Contemp Clin Trials. 2016 Mar;47:165–71. doi: 10.1016/j.cct.2016.01.005. http://europepmc.org/abstract/MED/26795675.

- 8. Sarkar U, Lyles CR, Parker MM, Allen J, Nguyen R, Moffet HH, Schillinger D, Karter AJ. Use of the refill function through an online patient portal is associated with improved adherence to statins in an integrated health system. Med Care. 2014 Mar;52(3):194–201. doi: 10.1097/MLR.0000000000000069. http://europepmc.org/abstract/MED/24374412.

- 9. Lyles CR, Sarkar U, Schillinger D, Ralston JD, Allen JY, Nguyen R, Karter AJ. Refilling medications through an online patient portal: consistent improvements in adherence across racial/ethnic groups. J Am Med Inform Assoc. 2016 Apr;23(e1):e28–33. doi: 10.1093/jamia/ocv126. http://europepmc.org/abstract/MED/26335983.

- 10. Tulu B, Trudel J, Strong DM, Johnson SA, Sundaresan D, Garber L. Patient Portals: An Underused Resource for Improving Patient Engagement. Chest. 2016 Jan;149(1):272–7. doi: 10.1378/chest.14-2559.

- 11. Dendere R, Slade C, Burton-Jones A, Sullivan C, Staib A, Janda M. Patient Portals Facilitating Engagement With Inpatient Electronic Medical Records: A Systematic Review. J Med Internet Res. 2019 Apr 11;21(4):e12779. doi: 10.2196/12779. https://www.jmir.org/2019/4/e12779/

- 12. McAlearney AS, Sieck CJ, Hefner JL, Aldrich AM, Walker DM, Rizer MK, Moffatt-Bruce SD, Huerta TR. High Touch and High Tech (HT2) Proposal: Transforming Patient Engagement Throughout the Continuum of Care by Engaging Patients with Portal Technology at the Bedside. JMIR Res Protoc. 2016 Nov 29;5(4):e221. doi: 10.2196/resprot.6355. https://www.researchprotocols.org/2016/4/e221/

- 13. Kruse CS, Bolton K, Freriks G. The effect of patient portals on quality outcomes and its implications to meaningful use: a systematic review. J Med Internet Res. 2015 Feb 10;17(2):e44. doi: 10.2196/jmir.3171. https://www.jmir.org/2015/2/e44/

- 14. Sulieman L, Yin Z, Malin BA. Why Patient Portal Messages Indicate Risk of Readmission for Patients with Ischemic Heart Disease. AMIA Annu Symp Proc. 2019;2019:828–837. http://europepmc.org/abstract/MED/32308879.

- 15. Irizarry T, Shoemake J, Nilsen ML, Czaja S, Beach S, DeVito Dabbs A. Patient Portals as a Tool for Health Care Engagement: A Mixed-Method Study of Older Adults With Varying Levels of Health Literacy and Prior Patient Portal Use. J Med Internet Res. 2017 Mar 30;19(3):e99. doi: 10.2196/jmir.7099. https://www.jmir.org/2017/3/e99/

- 16. Wright A, Feblowitz J, Samal L, McCoy AB, Sittig DF. The Medicare Electronic Health Record Incentive Program: provider performance on core and menu measures. Health Serv Res. 2014 Feb;49(1 Pt 2):325–46. doi: 10.1111/1475-6773.12134. http://europepmc.org/abstract/MED/24359554.

- 17. Levine DM, Healey MJ, Wright A, Bates DW, Linder JA, Samal L. Changes in the quality of care during progress from stage 1 to stage 2 of Meaningful Use. J Am Med Inform Assoc. 2017 Mar 01;24(2):394–397. doi: 10.1093/jamia/ocw127. http://europepmc.org/abstract/MED/27567000.

- 18. Griffin A, Skinner A, Thornhill J, Weinberger M. Patient Portals: Who uses them? What features do they use? And do they reduce hospital readmissions? Appl Clin Inform. 2016;7(2):489–501. doi: 10.4338/ACI-2016-01-RA-0003. http://europepmc.org/abstract/MED/27437056.

- 19. Ford EW, Hesse BW, Huerta TR. Personal Health Record Use in the United States: Forecasting Future Adoption Levels. J Med Internet Res. 2016 Mar 30;18(3):e73. doi: 10.2196/jmir.4973. https://www.jmir.org/2016/3/e73/

- 20. Powell KR. Patient-Perceived Facilitators of and Barriers to Electronic Portal Use: A Systematic Review. Comput Inform Nurs. 2017 Nov;35(11):565–573. doi: 10.1097/CIN.0000000000000377.

- 21. Moher D, Liberati A, Tetzlaff J, Altman DG, PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med. 2009 Jul 21;6(7):e1000097. doi: 10.1371/journal.pmed.1000097. https://dx.plos.org/10.1371/journal.pmed.1000097.

- 22. Forster M, Dennison K, Callen J, Andrew A, Westbrook JI. Maternity patients' access to their electronic medical records: use and perspectives of a patient portal. Health Inf Manag. 2015;44(1):4–11. doi: 10.1177/183335831504400101.

- 23. Garrido T, Kanter M, Meng D, Turley M, Wang J, Sue V, Scott L. Race/ethnicity, personal health record access, and quality of care. Am J Manag Care. 2015 Feb 01;21(2):e103–13. https://www.ajmc.com/pubMed.php?pii=85966.

- 24. Gheorghiu B, Hagens S. Use and maturity of electronic patient portals. Stud Health Technol Inform. 2017;234:136–141. doi: 10.3233/978-1-61499-742-9-136.

- 25. Kipping S, Stuckey MI, Hernandez A, Nguyen T, Riahi S. A Web-Based Patient Portal for Mental Health Care: Benefits Evaluation. J Med Internet Res. 2016 Nov 16;18(11):e294. doi: 10.2196/jmir.6483. https://www.jmir.org/2016/11/e294/

- 26. Redelmeier DA, Kraus NC. Patterns in Patient Access and Utilization of Online Medical Records: Analysis of MyChart. J Med Internet Res. 2018 Feb 06;20(2):e43. doi: 10.2196/jmir.8372. https://www.jmir.org/2018/2/e43/

- 27. Riippa I, Linna M, Rönkkö I, Kröger V. Use of an electronic patient portal among the chronically ill: an observational study. J Med Internet Res. 2014 Dec 08;16(12):e275. doi: 10.2196/jmir.3722. https://www.jmir.org/2014/12/e275/

- 28. Runaas L, Hanauer D, Maher M, Bischoff E, Fauer A, Hoang T, Munaco A, Sankaran R, Gupta R, Seyedsalehi S, Cohn A, An L, Tewari M, Choi SW. BMT Roadmap: A User-Centered Design Health Information Technology Tool to Promote Patient-Centered Care in Pediatric Hematopoietic Cell Transplantation. Biol Blood Marrow Transplant. 2017 May;23(5):813–819. doi: 10.1016/j.bbmt.2017.01.080. https://linkinghub.elsevier.com/retrieve/pii/S1083-8791(17)30227-6.

- 29. Lau M, Campbell H, Tang T, Thompson DJS, Elliott T. Impact of patient use of an online patient portal on diabetes outcomes. Can J Diabetes. 2014 Feb;38(1):17–21. doi: 10.1016/j.jcjd.2013.10.005.

- 30. Emani S, Healey M, Ting DY, Lipsitz SR, Ramelson H, Suric V, Bates DW. Awareness and Use of the After-Visit Summary Through a Patient Portal: Evaluation of Patient Characteristics and an Application of the Theory of Planned Behavior. J Med Internet Res. 2016 Apr 13;18(4):e77. doi: 10.2196/jmir.5207. https://www.jmir.org/2016/4/e77/

- 31. Boogerd E, Maas-Van Schaaijk NM, Sas TC, Clement-de Boers A, Smallenbroek M, Nuboer R, Noordam C, Verhaak CM. Sugarsquare, a Web-Based Patient Portal for Parents of a Child With Type 1 Diabetes: Multicenter Randomized Controlled Feasibility Trial. J Med Internet Res. 2017 Aug 22;19(8):e287. doi: 10.2196/jmir.6639. https://www.jmir.org/2017/8/e287/

- 32. Thompson LA, Martinko T, Budd P, Mercado R, Schentrup AM. Meaningful Use of a Confidential Adolescent Patient Portal. J Adolesc Health. 2016 Feb;58(2):134–40. doi: 10.1016/j.jadohealth.2015.10.015.

- 33. Woods SS, Forsberg CW, Schwartz EC, Nazi KM, Hibbard JH, Houston TK, Gerrity M. The Association of Patient Factors, Digital Access, and Online Behavior on Sustained Patient Portal Use: A Prospective Cohort of Enrolled Users. J Med Internet Res. 2017 Oct 17;19(10):e345. doi: 10.2196/jmir.7895. https://www.jmir.org/2017/10/e345/

- 34. King G, Maxwell J, Karmali A, Hagens S, Pinto M, Williams L, Adamson K. Connecting Families to Their Health Record and Care Team: The Use, Utility, and Impact of a Client/Family Health Portal at a Children's Rehabilitation Hospital. J Med Internet Res. 2017 Apr 06;19(4):e97. doi: 10.2196/jmir.6811. https://www.jmir.org/2017/4/e97/

- 35. Manard W, Scherrer JF, Salas J, Schneider FD. Patient Portal Use and Blood Pressure Control in Newly Diagnosed Hypertension. J Am Board Fam Med. 2016;29(4):452–9. doi: 10.3122/jabfm.2016.04.160008. http://www.jabfm.org/cgi/pmidlookup?view=long&pmid=27390376.

- 36. Krasowski MD, Grieme CV, Cassady B, Dreyer NR, Wanat KA, Hightower M, Nepple KG. Variation in Results Release and Patient Portal Access to Diagnostic Test Results at an Academic Medical Center. J Pathol Inform. 2017;8:45. doi: 10.4103/jpi.jpi_53_17. http://www.jpathinformatics.org/article.asp?issn=2153-3539;year=2017;volume=8;issue=1;spage=45;epage=45;aulast=Krasowski.

- 37. Pillemer F, Price RA, Paone S, Martich GD, Albert S, Haidari L, Updike G, Rudin R, Liu D, Mehrotra A. Direct Release of Test Results to Patients Increases Patient Engagement and Utilization of Care. PLoS One. 2016;11(6):e0154743. doi: 10.1371/journal.pone.0154743. https://dx.plos.org/10.1371/journal.pone.0154743.

- 38. Pearl R. Kaiser Permanente Northern California: current experiences with internet, mobile, and video technologies. Health Aff (Millwood) 2014 Feb;33(2):251–7. doi: 10.1377/hlthaff.2013.1005.

- 39. Garrido T, Meng D, Wang JJ, Palen TE, Kanter MH. Secure e-mailing between physicians and patients: transformational change in ambulatory care. J Ambul Care Manage. 2014;37(3):211–8. doi: 10.1097/JAC.0000000000000043. http://europepmc.org/abstract/MED/24887522.

- 40. Toscos T, Daley C, Heral L, Doshi R, Chen Y, Eckert GJ, Plant RL, Mirro MJ. Impact of electronic personal health record use on engagement and intermediate health outcomes among cardiac patients: a quasi-experimental study. J Am Med Inform Assoc. 2016 Jan;23(1):119–28. doi: 10.1093/jamia/ocv164. http://europepmc.org/abstract/MED/26912538.

- 41. Laccetti AL, Chen B, Cai J, Gates S, Xie Y, Lee SJC, Gerber DE. Increase in Cancer Center Staff Effort Related to Electronic Patient Portal Use. J Oncol Pract. 2016 Dec;12(12):e981–e990. doi: 10.1200/JOP.2016.011817. http://europepmc.org/abstract/MED/27601511.

- 42. Neuner J, Fedders M, Caravella M, Bradford L, Schapira M. Meaningful use and the patient portal: patient enrollment, use, and satisfaction with patient portals at a later-adopting center. Am J Med Qual. 2015;30(2):105–13. doi: 10.1177/1062860614523488. http://europepmc.org/abstract/MED/24563085.

- 43. Sorondo B, Allen A, Fathima S, Bayleran J, Sabbagh I. Patient Portal as a Tool for Enhancing Patient Experience and Improving Quality of Care in Primary Care Practices. EGEMS (Wash DC) 2016;4(1):1262. doi: 10.13063/2327-9214.1262. http://europepmc.org/abstract/MED/28203611.

- 44. Jhamb M, Cavanaugh KL, Bian A, Chen G, Ikizler TA, Unruh ML, Abdel-Kader K. Disparities in Electronic Health Record Patient Portal Use in Nephrology Clinics. Clin J Am Soc Nephrol. 2015 Nov 06;10(11):2013–22. doi: 10.2215/CJN.01640215. https://cjasn.asnjournals.org/cgi/pmidlookup?view=long&pmid=26493242.

- 45. Mafi JN, Mejilla R, Feldman H, Ngo L, Delbanco T, Darer J, Wee C, Walker J. Patients learning to read their doctors' notes: the importance of reminders. J Am Med Inform Assoc. 2016 Sep;23(5):951–5. doi: 10.1093/jamia/ocv167. http://europepmc.org/abstract/MED/26911830.

- 46. Henry SL, Shen E, Ahuja A, Gould MK, Kanter MH. The Online Personal Action Plan: A Tool to Transform Patient-Enabled Preventive and Chronic Care. Am J Prev Med. 2016 Jul;51(1):71–7. doi: 10.1016/j.amepre.2015.11.014.

- 47. Arcury TA, Quandt SA, Sandberg JC, Miller DP, Latulipe C, Leng X, Talton JW, Melius KP, Smith A, Bertoni AG. Patient Portal Utilization Among Ethnically Diverse Low Income Older Adults: Observational Study. JMIR Med Inform. 2017 Nov 14;5(4):e47. doi: 10.2196/medinform.8026. https://medinform.jmir.org/2017/4/e47/

- 48. Ronda MCM, Dijkhorst-Oei L, Rutten GEHM. Reasons and barriers for using a patient portal: survey among patients with diabetes mellitus. J Med Internet Res. 2014 Nov 25;16(11):e263. doi: 10.2196/jmir.3457. https://www.jmir.org/2014/11/e263/

- 49. Groen WG, Kuijpers W, Oldenburg HS, Wouters MW, Aaronson NK, van Harten WH. Supporting Lung Cancer Patients With an Interactive Patient Portal: Feasibility Study. JMIR Cancer. 2017 Aug 08;3(2):e10. doi: 10.2196/cancer.7443. https://cancer.jmir.org/2017/2/e10/

- 50. Bell K, Warnick E, Nicholson K, Ulcoq S, Kim SJ, Schroeder GD, Vaccaro A. Patient Adoption and Utilization of a Web-Based and Mobile-Based Portal for Collecting Outcomes After Elective Orthopedic Surgery. Am J Med Qual. 2018;33(6):649–656. doi: 10.1177/1062860618765083.

- 51. Devkota B, Salas J, Sayavong S, Scherrer JF. Use of an Online Patient Portal and Glucose Control in Primary Care Patients with Diabetes. Popul Health Manag. 2016 Apr;19(2):125–31. doi: 10.1089/pop.2015.0034.

- 52. Phelps RG, Taylor J, Simpson K, Samuel J, Turner AN. Patients' continuing use of an online health record: a quantitative evaluation of 14,000 patient years of access data. J Med Internet Res. 2014 Oct 24;16(10):e241. doi: 10.2196/jmir.3371. https://www.jmir.org/2014/10/e241/

- 53. Fiks AG, DuRivage N, Mayne SL, Finch S, Ross ME, Giacomini K, Suh A, McCarn B, Brandt E, Karavite D, Staton EW, Shone LP, McGoldrick V, Noonan K, Miller D, Lehmann CU, Pace WD, Grundmeier RW. Adoption of a Portal for the Primary Care Management of Pediatric Asthma: A Mixed-Methods Implementation Study. J Med Internet Res. 2016 Jun 29;18(6):e172. doi: 10.2196/jmir.5610. https://www.jmir.org/2016/6/e172/

- 54. Pecina J, North F, Williams MD, Angstman KB. Use of an on-line patient portal in a depression collaborative care management program. J Affect Disord. 2017 Jan 15;208:1–5. doi: 10.1016/j.jad.2016.08.034.

- 55. Williamson RS, Cherven BO, Gilleland Marchak J, Edwards P, Palgon M, Escoffery C, Meacham LR, Mertens AC. Meaningful Use of an Electronic Personal Health Record (ePHR) among Pediatric Cancer Survivors. Appl Clin Inform. 2017 Mar 15;8(1):250–264. doi: 10.4338/ACI-2016-11-RA-0189. http://europepmc.org/abstract/MED/28293684.

- 56. Kamo N, Bender AJ, Kalmady K, Blackmore CC. Meaningful use of the electronic patient portal - Virginia Mason's journey to create the perfect online patient experience. Healthc (Amst) 2017 Dec;5(4):221–226. doi: 10.1016/j.hjdsi.2016.09.003.

- 57. Reed M, Graetz I, Gordon N, Fung V. Patient-initiated e-mails to providers: associations with out-of-pocket visit costs, and impact on care-seeking and health. Am J Manag Care. 2015 Dec 01;21(12):e632–9. https://www.ajmc.com/pubMed.php?pii=86469.

- 58. Crotty BH, Tamrat Y, Mostaghimi A, Safran C, Landon BE. Patient-to-physician messaging: volume nearly tripled as more patients joined system, but per capita rate plateaued. Health Aff (Millwood) 2014 Oct;33(10):1817–22. doi: 10.1377/hlthaff.2013.1145. http://europepmc.org/abstract/MED/25288428.

- 59. Wolcott V, Agarwal R, Nelson DA. Is Provider Secure Messaging Associated With Patient Messaging Behavior? Evidence From the US Army. J Med Internet Res. 2017 Apr 06;19(4):e103. doi: 10.2196/jmir.6804. https://www.jmir.org/2017/4/e103/

- 60. Ackerman SL, Sarkar U, Tieu L, Handley MA, Schillinger D, Hahn K, Hoskote M, Gourley G, Lyles C. Meaningful use in the safety net: a rapid ethnography of patient portal implementation at five community health centers in California. J Am Med Inform Assoc. 2017 Sep 01;24(5):903–912. doi: 10.1093/jamia/ocx015. http://europepmc.org/abstract/MED/28340229.

- 61.Krist AH, Woolf SH, Bello GA, Sabo RT, Longo DR, Kashiri P, Etz RS, Loomis J, Rothemich SF, Peele JE, Cohn J. Engaging primary care patients to use a patient-centered personal health record. Ann Fam Med. 2014;12(5):418–26. doi: 10.1370/afm.1691. http://www.annfammed.org/cgi/pmidlookup?view=long&pmid=25354405. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Gordon NP, Hornbrook MC. Differences in Access to and Preferences for Using Patient Portals and Other eHealth Technologies Based on Race, Ethnicity, and Age: A Database and Survey Study of Seniors in a Large Health Plan. J Med Internet Res. 2016 Mar 04;18(3):e50. doi: 10.2196/jmir.5105. https://www.jmir.org/2016/3/e50/ [DOI] [PMC free article] [PubMed] [Google Scholar]

- 63.Perzynski AT, Roach MJ, Shick S, Callahan B, Gunzler D, Cebul R, Kaelber DC, Huml A, Thornton JD, Einstadter D. Patient portals and broadband internet inequality. J Am Med Inform Assoc. 2017 Sep 01;24(5):927–932. doi: 10.1093/jamia/ocx020. http://europepmc.org/abstract/MED/28371853. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 64.Reicher JJ, Reicher MA. Implementation of Certified EHR, Patient Portal, and "Direct" Messaging Technology in a Radiology Environment Enhances Communication of Radiology Results to Both Referring Physicians and Patients. J Digit Imaging. 2016 Jun;29(3):337–40. doi: 10.1007/s10278-015-9845-x. http://europepmc.org/abstract/MED/26588906. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65.Shaw CL, Casterline GL, Taylor D, Fogle M, Granger B. Increasing Health Portal Utilization in Cardiac Ambulatory Patients: A Pilot Project. Comput Inform Nurs. 2017 Oct;35(10):512–519. doi: 10.1097/CIN.0000000000000361. [DOI] [PubMed] [Google Scholar]

- 66.Weisner CM, Chi FW, Lu Y, Ross TB, Wood SB, Hinman A, Pating D, Satre D, Sterling SA. Examination of the Effects of an Intervention Aiming to Link Patients Receiving Addiction Treatment With Health Care: The LINKAGE Clinical Trial. JAMA Psychiatry. 2016 Aug 01;73(8):804–14. doi: 10.1001/jamapsychiatry.2016.0970. http://europepmc.org/abstract/MED/27332703. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 67.Bose-Brill S, Feeney M, Prater L, Miles L, Corbett A, Koesters S. Validation of a Novel Electronic Health Record Patient Portal Advance Care Planning Delivery System. J Med Internet Res. 2018 Jun 26;20(6):e208. doi: 10.2196/jmir.9203. https://www.jmir.org/2018/6/e208/ [DOI] [PMC free article] [PubMed] [Google Scholar]

- 68.Fiks AG, Mayne SL, Karavite DJ, Suh A, O'Hara R, Localio AR, Ross M, Grundmeier RW. Parent-reported outcomes of a shared decision-making portal in asthma: a practice-based RCT. Pediatrics. 2015 Apr;135(4):e965–73. doi: 10.1542/peds.2014-3167. http://europepmc.org/abstract/MED/25755233. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 69.Aljabri D, Dumitrascu A, Burton MC, White L, Khan M, Xirasagar S, Horner R, Naessens J. Patient portal adoption and use by hospitalized cancer patients: a retrospective study of its impact on adverse events, utilization, and patient satisfaction. BMC Med Inform Decis Mak. 2018 Jul 27;18(1):70. doi: 10.1186/s12911-018-0644-4. https://bmcmedinformdecismak.biomedcentral.com/articles/10.1186/s12911-018-0644-4.

- 70.Chung S, Panattoni L, Chi J, Palaniappan L. Can Secure Patient-Provider Messaging Improve Diabetes Care? Diabetes Care. 2017 Oct;40(10):1342–1348. doi: 10.2337/dc17-0140.

- 71.Jones JB, Weiner JP, Shah NR, Stewart WF. The wired patient: patterns of electronic patient portal use among patients with cardiac disease or diabetes. J Med Internet Res. 2015 Feb 20;17(2):e42. doi: 10.2196/jmir.3157. https://www.jmir.org/2015/2/e42/

- 72.Kelly MM, Hoonakker PLT, Dean SM. Using an inpatient portal to engage families in pediatric hospital care. J Am Med Inform Assoc. 2017 Jan;24(1):153–161. doi: 10.1093/jamia/ocw070. http://europepmc.org/abstract/MED/27301746.

- 73.Haun JN, Lind JD, Shimada SL, Martin TL, Gosline RM, Antinori N, Stewart M, Simon SR. Evaluating user experiences of the secure messaging tool on the Veterans Affairs' patient portal system. J Med Internet Res. 2014 Mar 06;16(3):e75. doi: 10.2196/jmir.2976. https://www.jmir.org/2014/3/e75/

- 74.Mook PJ, Trickey AW, Krakowski KE, Majors S, Theiss MA, Fant C, Friesen MA. Exploration of Portal Activation by Patients in a Healthcare System. Comput Inform Nurs. 2018 Jan;36(1):18–26. doi: 10.1097/CIN.0000000000000392.

- 75.Petullo B, Noble B, Dungan KM. Effect of Electronic Messaging on Glucose Control and Hospital Admissions Among Patients with Diabetes. Diabetes Technol Ther. 2016 Sep;18(9):555–60. doi: 10.1089/dia.2016.0105. http://europepmc.org/abstract/MED/27398824.

- 76.Shenson JA, Cronin RM, Davis SE, Chen Q, Jackson GP. Rapid growth in surgeons' use of secure messaging in a patient portal. Surg Endosc. 2016 Apr;30(4):1432–40. doi: 10.1007/s00464-015-4347-y. http://europepmc.org/abstract/MED/26123340.

- 77.Dexter EN, Fields S, Rdesinski RE, Sachdeva B, Yamashita D, Marino M. Patient-Provider Communication: Does Electronic Messaging Reduce Incoming Telephone Calls? J Am Board Fam Med. 2016;29(5):613–9. doi: 10.3122/jabfm.2016.05.150371. http://www.jabfm.org/cgi/pmidlookup?view=long&pmid=27613794.

- 78.Mikles SP, Mielenz TJ. Characteristics of electronic patient-provider messaging system utilisation in an urban health care organisation. J Innov Health Inform. 2014 Dec 18;22(1):214–21. doi: 10.14236/jhi.v22i1.75.

- 79.Zhong X, Liang M, Sanchez R, Yu M, Budd PR, Sprague JL, Dewar MA. On the effect of electronic patient portal on primary care utilization and appointment adherence. BMC Med Inform Decis Mak. 2018 Oct 16;18(1):84. doi: 10.1186/s12911-018-0669-8. https://bmcmedinformdecismak.biomedcentral.com/articles/10.1186/s12911-018-0669-8.

- 80.Oest SER, Hightower M, Krasowski MD. Activation and Utilization of an Electronic Health Record Patient Portal at an Academic Medical Center-Impact of Patient Demographics and Geographic Location. Acad Pathol. 2018;5:2374289518797573. doi: 10.1177/2374289518797573. https://journals.sagepub.com/doi/10.1177/2374289518797573?url_ver=Z39.88-2003&rfr_id=ori:rid:crossref.org&rfr_dat=cr_pub%3dpubmed.

- 81.Robinson JR, Davis SE, Cronin RM, Jackson GP. Use of a Patient Portal During Hospital Admissions to Surgical Services. AMIA Annu Symp Proc. 2016;2016:1967–1976. http://europepmc.org/abstract/MED/28269956.

- 82.Miles RC, Hippe DS, Elmore JG, Wang CL, Payne TH, Lee CI. Patient Access to Online Radiology Reports: Frequency and Sociodemographic Characteristics Associated with Use. Acad Radiol. 2016 Sep;23(9):1162–9. doi: 10.1016/j.acra.2016.05.005.

- 83.Baldwin JL, Singh H, Sittig DF, Giardina TD. Patient portals and health apps: Pitfalls, promises, and what one might learn from the other. Healthc (Amst). 2017 Sep;5(3):81–85. doi: 10.1016/j.hjdsi.2016.08.004. https://linkinghub.elsevier.com/retrieve/pii/S2213-0764(16)30012-4.

- 84.Payne TH, Beahan S, Fellner J, Martin D, Elmore JG. Health Records All Access Pass. Patient Portals That Allow Viewing of Clinical Notes and Hospital Discharge Summaries: The University of Washington Opennotes Implementation Experience. J AHIMA. 2016 Aug;87(8):36–9.

- 85.Smith SG, O'Conor R, Aitken W, Curtis LM, Wolf MS, Goel MS. Disparities in registration and use of an online patient portal among older adults: findings from the LitCog cohort. J Am Med Inform Assoc. 2015 Jul;22(4):888–95. doi: 10.1093/jamia/ocv025. http://europepmc.org/abstract/MED/25914099.

- 86.Graetz I, Gordon N, Fung V, Hamity C, Reed ME. The Digital Divide and Patient Portals: Internet Access Explained Differences in Patient Portal Use for Secure Messaging by Age, Race, and Income. Med Care. 2016 Aug;54(8):772–9. doi: 10.1097/MLR.0000000000000560.

- 87.Steitz B, Cronin R, Davis S, Yan E, Jackson G. Long-term Patterns of Patient Portal Use for Pediatric Patients at an Academic Medical Center. Appl Clin Inform. 2017 Aug 02;8(3):779–793. doi: 10.4338/ACI-2017-01-RA-0005. http://europepmc.org/abstract/MED/28765865.

- 88.Wallace LS, Angier H, Huguet N, Gaudino JA, Krist A, Dearing M, Killerby M, Marino M, DeVoe JE. Patterns of Electronic Portal Use among Vulnerable Patients in a Nationwide Practice-based Research Network: From the OCHIN Practice-based Research Network (PBRN). J Am Board Fam Med. 2016;29(5):592–603. doi: 10.3122/jabfm.2016.05.160046. http://www.jabfm.org/cgi/pmidlookup?view=long&pmid=27613792.

- 89.Bajracharya AS, Crotty BH, Kowaloff HB, Safran C, Slack WV. Improving health care proxy documentation using a web-based interview through a patient portal. J Am Med Inform Assoc. 2016 May;23(3):580–7. doi: 10.1093/jamia/ocv133. http://europepmc.org/abstract/MED/26568608.

- 90.Vydra TP, Cuaresma E, Kretovics M, Bose-Brill S. Diffusion and Use of Tethered Personal Health Records in Primary Care. Perspect Health Inf Manag. 2015;12:1c. http://europepmc.org/abstract/MED/26755897.

- 91.Peremislov D. Patient Use of the Electronic Communication Portal in Management of Type 2 Diabetes. Comput Inform Nurs. 2017 Sep;35(9):473–482. doi: 10.1097/CIN.0000000000000348.

- 92.Ahmedani BK, Belville-Robertson T, Hirsch A, Jurayj A. An Online Mental Health and Wellness Intervention Supplementing Standard Care of Depression and Anxiety. Arch Psychiatr Nurs. 2016 Dec;30(6):666–670. doi: 10.1016/j.apnu.2016.03.003.

- 93.Bower JK, Bollinger CE, Foraker RE, Hood DB, Shoben AB, Lai AM. Active Use of Electronic Health Records (EHRs) and Personal Health Records (PHRs) for Epidemiologic Research: Sample Representativeness and Nonresponse Bias in a Study of Women During Pregnancy. EGEMS (Wash DC). 2017;5(1):1263. doi: 10.13063/2327-9214.1263. http://europepmc.org/abstract/MED/28303255.

- 94.Wolff JL, Berger A, Clarke D, Green JA, Stametz R, Yule C, Darer JD. Patients, care partners, and shared access to the patient portal: online practices at an integrated health system. J Am Med Inform Assoc. 2016 Nov;23(6):1150–1158. doi: 10.1093/jamia/ocw025.

- 95.Shimada SL, Allison JJ, Rosen AK, Feng H, Houston TK. Sustained Use of Patient Portal Features and Improvements in Diabetes Physiological Measures. J Med Internet Res. 2016 Jul 01;18(7):e179. doi: 10.2196/jmir.5663. https://www.jmir.org/2016/7/e179/

- 96.Davis SE, Osborn CY, Kripalani S, Goggins KM, Jackson GP. Health Literacy, Education Levels, and Patient Portal Usage During Hospitalizations. AMIA Annu Symp Proc. 2015;2015:1871–80. http://europepmc.org/abstract/MED/26958286.

- 97.Quinn CC, Butler EC, Swasey KK, Shardell MD, Terrin MD, Barr EA, Gruber-Baldini AL. Mobile Diabetes Intervention Study of Patient Engagement and Impact on Blood Glucose: Mixed Methods Analysis. JMIR Mhealth Uhealth. 2018 Feb 02;6(2):e31. doi: 10.2196/mhealth.9265. https://mhealth.jmir.org/2018/2/e31/

- 98.Alpert JM, Dyer KE, Lafata JE. Patient-centered communication in digital medical encounters. Patient Educ Couns. 2017 Oct;100(10):1852–1858. doi: 10.1016/j.pec.2017.04.019. http://europepmc.org/abstract/MED/28522229.

- 99.Masterman M, Cronin RM, Davis SE, Shenson JA, Jackson GP. Adoption of Secure Messaging in a Patient Portal across Pediatric Specialties. AMIA Annu Symp Proc. 2016;2016:1930–1939. http://europepmc.org/abstract/MED/28269952.

- 100.Crotty B, Mostaghimi A, O'Brien J, Bajracharya A, Safran C, Landon B. Prevalence and Risk Profile Of Unread Messages To Patients In A Patient Web Portal. Appl Clin Inform. 2015;6(2):375–82. doi: 10.4338/ACI-2015-01-CR-0006. http://europepmc.org/abstract/MED/26171082.

- 101.Dalal AK, Dykes PC, Collins S, Lehmann LS, Ohashi K, Rozenblum R, Stade D, McNally K, Morrison CRC, Ravindran S, Mlaver E, Hanna J, Chang F, Kandala R, Getty G, Bates DW. A web-based, patient-centered toolkit to engage patients and caregivers in the acute care setting: a preliminary evaluation. J Am Med Inform Assoc. 2016 Jan;23(1):80–7. doi: 10.1093/jamia/ocv093. http://europepmc.org/abstract/MED/26239859.

- 102.North F, Crane SJ, Chaudhry R, Ebbert JO, Ytterberg K, Tulledge-Scheitel SM, Stroebel RJ. Impact of patient portal secure messages and electronic visits on adult primary care office visits. Telemed J E Health. 2014 Mar;20(3):192–8. doi: 10.1089/tmj.2013.0097. http://europepmc.org/abstract/MED/24350803.

- 103.Margolius D, Siff J, Teng K, Einstadter D, Gunzler D, Bolen S. Primary Care Physician Factors Associated with Inbox Message Volume. J Am Board Fam Med. 2020;33(3):460–462. doi: 10.3122/jabfm.2020.03.190360. http://www.jabfm.org/cgi/pmidlookup?view=long&pmid=32430380.

- 104.Hulter P, Pluut B, Leenen-Brinkhuis C, de Mul M, Ahaus K, Weggelaar-Jansen AM. Adopting Patient Portals in Hospitals: Qualitative Study. J Med Internet Res. 2020 May 19;22(5):e16921. doi: 10.2196/16921. https://www.jmir.org/2020/5/e16921/

- 105.Huerta T, Fareed N, Hefner JL, Sieck CJ, Swoboda C, Taylor R, McAlearney AS. Patient Engagement as Measured by Inpatient Portal Use: Methodology for Log File Analysis. J Med Internet Res. 2019 Mar 25;21(3):e10957. doi: 10.2196/10957. https://www.jmir.org/2019/3/e10957/

- 106.Gheorghiu B, Hagens S. Measuring interoperable EHR adoption and maturity: a Canadian example. BMC Med Inform Decis Mak. 2016 Jan 25;16:8. doi: 10.1186/s12911-016-0247-x. https://bmcmedinformdecismak.biomedcentral.com/articles/10.1186/s12911-016-0247-x.