Abstract

Background

The 3D point cloud is the most direct and effective data form for studying plant structure and morphology. In point cloud studies, the point cloud segmentation of individual plants to organs directly determines the accuracy of organ-level phenotype estimation and the reliability of the 3D plant reconstruction. However, highly accurate, automatic, and robust point cloud segmentation approaches for plants are unavailable. Thus, the high-throughput segmentation of many shoots is challenging. Although deep learning can feasibly solve this issue, software tools for 3D point cloud annotation to construct the training dataset are lacking.

Results

We propose a top-down point cloud segmentation algorithm for maize shoots that uses optimal transportation distances. We apply our point cloud annotation toolkit for maize shoots, Label3DMaize, to achieve semi-automatic point cloud segmentation and annotation of maize shoots at different growth stages through a series of operations, including stem segmentation, coarse segmentation, fine segmentation, and sample-based segmentation. The toolkit takes ∼4–10 minutes to segment a maize shoot and consumes 10–20% of the total time if only coarse segmentation is required. Fine segmentation is more detailed than coarse segmentation, especially at the organ connection regions. The accuracy of coarse segmentation can reach 97.2% of that of fine segmentation.

Conclusion

Label3DMaize integrates point cloud segmentation algorithms and manual interactive operations, realizing semi-automatic point cloud segmentation of maize shoots at different growth stages. The toolkit provides a practical data annotation tool for further online segmentation research based on deep learning and is expected to promote automatic point cloud processing of various plants.

Keywords: Label3DMaize, 3D point cloud, segmentation, maize shoot, data annotation

Introduction

Plant structure and morphology are important features for expressing growth and development. Many studies underscore the significance of integrating the 3D morphological characteristics of plants when conducting genetic mapping, adaptability evaluation, and crop yield analysis [1, 2]. Using 3D data acquisition technology to obtain a 3D point cloud is the most effective way to perceive plant structure and morphology digitally. However, 3D point clouds are initially obtained in an unordered, unstructured manner and with little semantic information. Therefore, it is critical to use computer graphics technologies and plant morphology knowledge to convert the unstructured 3D point clouds into well-organized and structured data that contain rich morphological features with semantic information. Consequently, plant morphology research based on measured point clouds forms a critical component of 3D plant phenomics [3–6], 3D plant reconstruction [2, 7], and functional-structural plant models [8, 9].

The development of 3D data acquisition technology [10] has significantly enriched approaches for fine-scale 3D data acquisition of individual plants, including 3D scanning [11, 12], LiDAR [13], depth camera [14], time-of-flight reconstruction [15], and multi-view stereo (MVS) reconstruction [16, 17]. Owing to the low cost of sensors and better quality of reconstructed point clouds, MVS reconstruction has been widely adopted in many applications. Recently, multi-view image acquisition platforms that can realize semi-automatic and high-throughput 3D data acquisition for individual plants have been developed [18–21] and enable 3D data acquisition for the phenotypic analysis of large-scale breeding materials [22, 23]. However, how to efficiently and automatically process the acquired big data of 3D point clouds is a bottleneck in 3D plant phenotyping.

The key technologies for 3D point cloud data processing include data registration, extraction of the region of interest, denoising, segmentation, feature extraction, and mesh generation. Among these tasks, point cloud segmentation is particularly challenging, and its automation and accuracy significantly affect the subsequent results of phenotype extraction and 3D reconstruction. Point cloud segmentation can be classified as population-shoot or shoot-organ segmentation. Population-shoot segmentation of maize can be automated for populations at low density [24] or at early growth stages [25, 26], where there is little overlap, on the basis of the spatial distance between shoots. However, it is difficult to automatically segment high-density populations or populations with many overlapping organs at late growth stages. Comparatively, more attention has been paid to shoot-organ segmentation. Color-based [27] and point clustering [28–30] approaches have been widely used, although they require high-quality input point clouds and restricted connections between organs. For instance, Elnashef et al. [16] used the local geometric features of the organs to segment maize leaves and stems at the 6-leaf stage. Paulus et al. [31, 32] segmented grape shoot organs by integrating fast point feature histograms, support vector machine (SVM), and region growing approaches. However, these methods can only segment plant shoots with clear connection characteristics between stems and leaves [11] and have difficulty handling cases in which leaves wrap the stem. For time-series 3D point clouds, a leaf multi-labeling segmentation method was used for organ segmentation and plant growth monitoring [33]. Plant organs can also be segmented through skeleton extraction and hierarchical clustering [34, 35], but these methods need interactive manual correction for complex plants to guarantee segmentation accuracy. Jin et al. [36] proposed a median normalized vector growth algorithm that can segment the stems and leaves of maize shoots. On this basis, an annotation dataset of maize shoots was constructed, and a deep learning method was introduced to improve the level of automation of the segmentation [37]. However, parameters still have to be adjusted interactively for different shoot architectures, and the results cannot meet the needs of highly realistic 3D reconstruction.

Owing to the complexity of plant morphology and structure, almost all 3D point cloud segmentation methods for plants need certain manual interactions, which is inconvenient for huge amounts of point cloud data processing, and substantially decreases the efficiency. Therefore, it is necessary to improve the automation of segmentation and increase the throughput of 3D point cloud data processing for plants. Deep learning approaches can effectively solve this problem [21, 38, 39], among which the construction of high-quality training datasets is a prerequisite. For example, LabelMe [40] can realize high-quality data annotation for image segmentation. However, 3D point cloud tools for data annotation are rare, especially for plants. Besides, current datasets used for point cloud segmentation are oriented to general segmentation tasks [41–44]. The existing datasets for 3D plant segmentation contain only small amounts of data [21, 45, 46], which cannot meet the data requirements for high-quality deep learning models.

Because point cloud annotation of plants is labor-intensive and time-consuming, the lack of annotated data is the main obstacle to applying deep learning approaches to plant point cloud segmentation. Hence, improving the efficiency of high-quality data annotation and developing supporting software tools are the key to automatic point cloud segmentation of plants by deep learning. To meet this data annotation demand, the present study uses maize as an example and proposes a top-down point cloud segmentation algorithm. In addition, the toolkit Label3DMaize for point cloud annotation of maize shoots is developed, which could provide technical support for automatic and high-throughput processing of plant point clouds. The toolkit integrates clustering approaches and interactive operations supported by maize structural knowledge. Optimal transportation-based coarse segmentation is satisfactory for basic segmentation tasks, and fine segmentation offers users a way to calibrate the segmentation details. This plant-oriented tool can be used to segment point cloud data of maize at various growth stages and provides a practical data-labeling tool for segmentation research based on deep learning.

Materials and Methods

Field experiment and data acquisition

Three maize cultivars, MC670, Xianyu 335 (XY335), and NK815, were planted on 20 May 2019 at the Tongzhou experimental field of Beijing Academy of Agriculture and Forestry Sciences (116 70.863 E, 39 70.610 N). The planting density of all the plots was 6 plants/m² with a row spacing of 60 cm. Morphologically representative shoots of each cultivar at the sixth leaf (V6), ninth leaf (V9), 13th leaf (V13), and blister (R2) stages [47] were selected and transplanted into pots. Multi-view images were then acquired using the MVS-Pheno platform [18], after which 3D point clouds of the shoots were reconstructed. For validation, 12 shoot point clouds at 4 growth stages (V3, V6, V9, and V12) were acquired using a 3D scanner (FreeScan X3, Tianyuan Inc., Beijing, China) to test the segmentation performance on a different data source.

Overview of the segmentation pipeline

The point cloud of a maize shoot can be segmented into 5 types of instances: stem, leaf, tassel, ear, and pot. The stem, tassel, and pot of a shoot are each regarded as a single instance. For a transplanted shoot at stage R2 that contains $n_1$ ears and $n_2$ leaves, the point cloud can thus be segmented into $N = 3 + n_1 + n_2$ instances. $P$ represents the point cloud to be segmented, and $P_i$ represents the $i$th point cloud instance. In particular, $P_{\mathrm{stem}}$ and $P_{\mathrm{pot}}$ refer to the stem and pot (if it exists) instances, respectively. Before the segmentation begins, $P$ contains all the points of the shoot, and all $P_i$ are empty. As the segmentation progresses, the points in $P$ are gradually assigned to the $P_i$. The segmentation is complete when $P$ is empty.

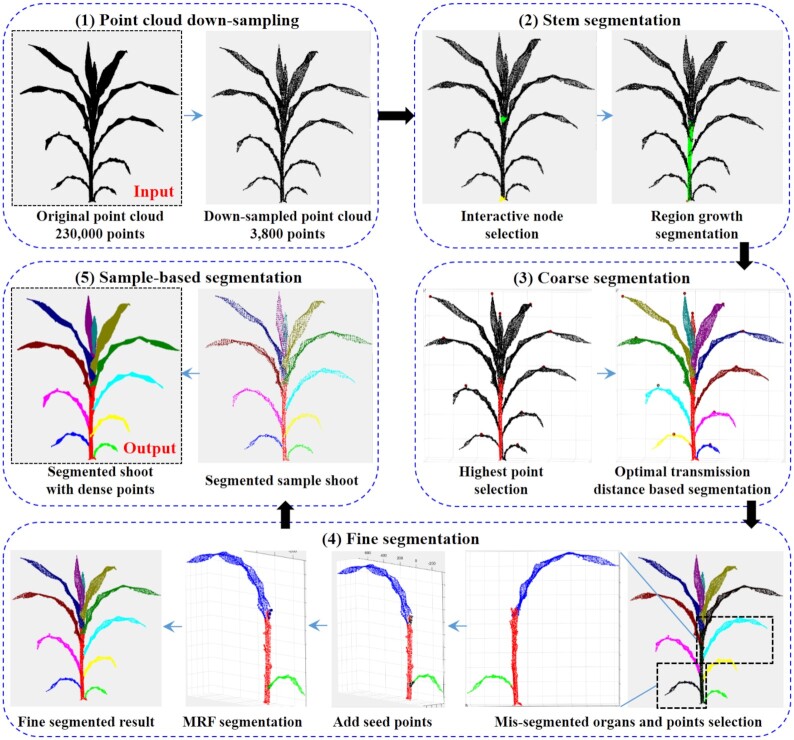

The segmentation pipeline includes 5 parts (Fig. 1): point cloud down-sampling, stem segmentation, coarse segmentation, fine segmentation, and sample-based segmentation. (1) Point cloud down-sampling: the original input point cloud is down-sampled to maintain the shoot morphological features, which improves the segmentation efficiency and quickens the entire segmentation process. (2) Stem segmentation: the top and bottom points of the stem are interactively selected, and the corresponding radius parameters are interactively adjusted. Subsequently, the median region growth is applied to segment the stem points from the shoot automatically. (3) Coarse segmentation: the highest points of each organ instance, except the stem, are obtained via manual interaction, after which all organ instances are segmented automatically on the basis of the optimal transportation distances. (4) Fine segmentation: the unsatisfactory segmentation point regions are selected interactively, and the seed points of organ instances are selected. Organs are then segmented by Markov random fields (MRF). (5) Sample-based segmentation: maize shoots with high-resolution point clouds are segmented on the basis of the fine segmentation result of low-resolution point clouds.

Figure 1:

Workflow of the segmentation.

Stem segmentation

Two seed points, $p_b$ and $p_t$, at the bottom and top of each stem were selected interactively. Then, a median-based region growing algorithm [36] was applied to segment the stem points. This segmentation procedure updates the seed point iteratively along the direction from $p_b$ to $p_t$. Points around the seed points were classified as stem points. Supposing the algorithm is currently at the $k$th iteration and the seed point is $p_k$, the segmentation proceeded as follows:

Step 1: Points lying within a sphere were classified as stem points, where $p_k$ is the center of the sphere and its radius $r$ is a user-specified parameter.

Step 2: The growth direction $v_k$ was determined according to:

$$v_k = \alpha\, v_m + \beta\, v_d,$$

$$v_m = \frac{p_k - \operatorname{median}\{P_{\mathrm{stem}}\}}{\lVert p_k - \operatorname{median}\{P_{\mathrm{stem}}\} \rVert},$$

$$v_d = \frac{p_t - p_b}{\lVert p_t - p_b \rVert}.$$

In these formulas, $\lVert \cdot \rVert$ is the L2 norm and median{} represents the median operation. $\alpha$ and $\beta$ are weight parameters set by users, and $v_m$ is the normalized vector from the median of the already segmented stem points $P_{\mathrm{stem}}$ to the seed point $p_k$. Meanwhile, $v_d$ is the normalized vector from $p_b$ to $p_t$, which corrects the growth direction to coincide with the stem. In practice, $\alpha$ and $\beta$ are set so that the stem points can be correctly segmented under different $r$ values during the entire growing process.

Step 3: A new seed point $p_{k+1}$ for the next iteration was obtained by advancing the current seed point $p_k$ along the growth direction $v_k$.

Step 4: Termination check. Let $L$ denote the line segment from $p_b$ to $p_t$, and project $p_{k+1}$ onto $L$. If the projection point does not lie on $L$, the region growth has passed beyond the stem region and the iteration stops. Otherwise, the $(k+1)$th iteration starts from Step 1.
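Steps 1 to 4 can be illustrated with a minimal Python sketch. It is not the toolkit's implementation, and several details are assumptions made only for illustration: the combined direction is normalized, the seed advances by one radius `r` per iteration, and the default weights `alpha=0.3` and `beta=0.7` are placeholders rather than the values used in practice. The function name `grow_stem` and its helpers are hypothetical.

```python
import math
from statistics import median

def _sub(a, b): return tuple(x - y for x, y in zip(a, b))
def _add(a, b): return tuple(x + y for x, y in zip(a, b))
def _scale(a, s): return tuple(x * s for x in a)
def _dot(a, b): return sum(x * y for x, y in zip(a, b))

def _unit(a):
    n = math.sqrt(_dot(a, a))
    return tuple(x / n for x in a) if n > 0 else a

def grow_stem(points, p_b, p_t, r, alpha=0.3, beta=0.7, max_iter=1000):
    """Return the sorted indices of the points classified as stem."""
    axis = _sub(p_t, p_b)
    axis_len = math.sqrt(_dot(axis, axis))
    v_d = _unit(axis)                      # bottom-to-top direction
    stem, seed = set(), p_b
    for _ in range(max_iter):
        # Step 1: points inside the sphere around the seed join the stem.
        for i, p in enumerate(points):
            if math.dist(p, seed) <= r:
                stem.add(i)
        # Step 2: blend the median-based direction with the stem direction.
        v_m = (0.0, 0.0, 0.0)
        if stem:
            med = tuple(median(points[i][c] for i in stem) for c in range(3))
            v_m = _unit(_sub(seed, med))
        v_k = _unit(_add(_scale(v_m, alpha), _scale(v_d, beta)))
        # Step 3 (assumed step length r): move the seed along v_k.
        seed = _add(seed, _scale(v_k, r))
        # Step 4: stop once the projection leaves the segment p_b -> p_t.
        t = _dot(_sub(seed, p_b), v_d)
        if t < 0 or t > axis_len:
            break
    return sorted(stem)

# Toy shoot: a straight stem along z plus two leaf points away from it.
stem_pts = [(0.0, 0.0, 0.5 * i) for i in range(21)]
leaf_pts = [(5.0, 0.0, 5.0), (4.0, 1.0, 6.0)]
cloud = stem_pts + leaf_pts
stem_idx = grow_stem(cloud, p_b=(0.0, 0.0, 0.0), p_t=(0.0, 0.0, 10.5), r=1.0)
print(len(stem_idx), "stem points found")
```

Choosing `beta > alpha` in this sketch guarantees that every step makes progress along the stem direction, so the loop always reaches the termination check of Step 4.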

Because the maize stem gradually thins from bottom to top, a uniform radius $r$ may cause over-segmentation, i.e., points of other organs being classified into the stem. The region growing algorithm also over-segments points in regions where the stem bends. Therefore, a simple median operation was adopted to eliminate the over-segmented points. First, the already segmented stem points were evenly divided into $M$ segments along the direction of $v_d$, and the central axis of each segment was fitted using least squares. The average distance from the points to the central axis was then calculated. If the distance from a point to the central axis was less than the average distance, the point was retained as a stem point; otherwise, it was moved from the stem back to the unsegmented point set. Users can perform the median operation several times in the toolkit to reduce over-segmentation. Although multiple median operations cause under-segmentation of the stem point cloud, this is resolved in the subsequent organ segmentation processes. $P_{\mathrm{stem}}$ represents the segmented stem points, and these points are removed from $P$. Subsequent organ segmentation is performed on the remaining point cloud. Stem point cloud segmentation is illustrated in Fig. 2.
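The median operation can be sketched as follows. This is a simplified illustration under stated assumptions, not the toolkit's code: the stem is assumed to be roughly aligned with the z-axis, and the least-squares axis fit of each segment is replaced by a vertical line through the per-segment median of x and y. Points are kept when their distance is less than or equal to the segment average (rather than strictly less) so that a perfectly axial segment is not emptied. The name `median_cleanup` is hypothetical.

```python
import math
from statistics import mean, median

def median_cleanup(stem_points, n_segments=5):
    """Split stem points into kept stem points and points released back
    to the unsegmented set, one pass of the median operation."""
    zs = [p[2] for p in stem_points]
    z_min = min(zs)
    span = (max(zs) - z_min) or 1.0
    segments = [[] for _ in range(n_segments)]
    for p in stem_points:
        k = min(int((p[2] - z_min) / span * n_segments), n_segments - 1)
        segments[k].append(p)
    kept, released = [], []
    for seg in segments:
        if not seg:
            continue
        # Stand-in for the fitted axis: vertical line through the median (x, y).
        cx = median(p[0] for p in seg)
        cy = median(p[1] for p in seg)
        dists = [math.hypot(p[0] - cx, p[1] - cy) for p in seg]
        avg = mean(dists)
        for p, d in zip(seg, dists):
            (kept if d <= avg else released).append(p)
    return kept, released

# Ten axial stem points plus one over-segmented leaf point.
pts = [(0.0, 0.0, float(z)) for z in range(10)] + [(3.0, 0.0, 4.5)]
kept, released = median_cleanup(pts, n_segments=2)
print(len(kept), released)
```

Calling the function again on `kept` corresponds to applying the median operation several times, which trims the stem further at the cost of under-segmentation.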

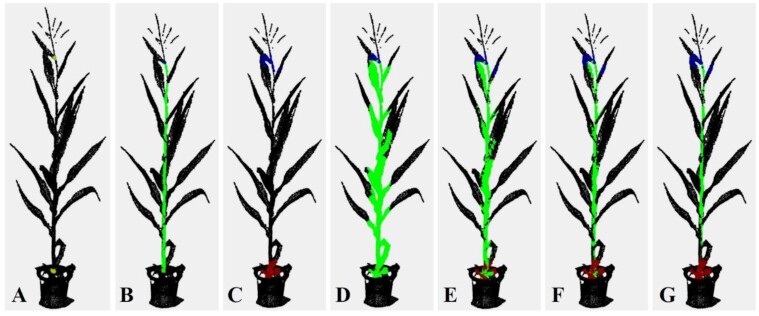

Figure 2:

Stem point cloud segmentation. (A) Seed points at the bottom and top of the stem are interactively selected, and an appropriate segmentation radius is set. (B) Stem segmentation result based on (A). (C) A big radius is set. (D) Segmentation result based on (C). (E–G) Stem segmentation results with 1, 2, and 3 median operations based on (D).

Shoot alignment

The shoot points were transformed into a regular coordinate system so that the position of each point in the cloud could be accessed conveniently. The midpoint of the already segmented stem point cloud was taken as the origin O of the new shoot coordinate system, and the Z-axis of the new coordinate system was the central axis estimated from the stem point cloud by the least-squares method. Then, the shoot point cloud was projected onto the plane that has the Z-axis as its normal vector. The first and second principal component vectors of the projected points were determined by principal component analysis and assigned as the X- and Y-axes of the new shoot coordinate system, respectively. Subsequently, the original point cloud coordinates were transformed into the new shoot coordinate system, in which the z-value of a point gives its height in the shoot: points with greater z-values are higher.
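The alignment can be sketched in a few lines of Python. The sketch assumes that the origin and the stem axis have already been estimated and are passed in as `origin` and `z_axis`; the least-squares axis fit itself is not shown. The principal direction of the projected points is obtained from the closed-form angle of a 2 × 2 covariance matrix. The function name `align_shoot` is hypothetical.

```python
import math

def _sub(a, b): return tuple(x - y for x, y in zip(a, b))
def _dot(a, b): return sum(x * y for x, y in zip(a, b))

def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def _unit(a):
    n = math.sqrt(_dot(a, a))
    return tuple(x / n for x in a)

def align_shoot(points, origin, z_axis):
    """Express points in a frame whose Z-axis is the stem axis and whose
    X-axis is the first principal direction of the projected points."""
    z = _unit(z_axis)
    # Any orthonormal basis (e1, e2) of the plane normal to z.
    helper = (1.0, 0.0, 0.0) if abs(z[0]) < 0.9 else (0.0, 1.0, 0.0)
    e1 = _unit(_sub(helper, tuple(_dot(helper, z) * c for c in z)))
    e2 = _cross(z, e1)
    rel = [_sub(p, origin) for p in points]
    u = [_dot(r, e1) for r in rel]
    v = [_dot(r, e2) for r in rel]
    mu, mv = sum(u) / len(u), sum(v) / len(v)
    cuu = sum((a - mu) ** 2 for a in u)
    cvv = sum((b - mv) ** 2 for b in v)
    cuv = sum((a - mu) * (b - mv) for a, b in zip(u, v))
    # Major axis angle of the 2 x 2 covariance matrix [[cuu, cuv], [cuv, cvv]].
    theta = 0.5 * math.atan2(2.0 * cuv, cuu - cvv)
    x = tuple(math.cos(theta) * a + math.sin(theta) * b for a, b in zip(e1, e2))
    y = _cross(z, x)
    return [(_dot(r, x), _dot(r, y), _dot(r, z)) for r in rel]

pts = [(0.0, -2.0, 0.0), (0.0, 2.0, 1.0), (0.1, 0.0, 2.0), (-0.1, 0.0, 3.0)]
aligned = align_shoot(pts, origin=(0.0, 0.0, 0.0), z_axis=(0.0, 0.0, 2.0))
```

In this toy input the points spread mainly along the original y direction, so that direction becomes the new X-axis (up to sign), while the heights are unchanged.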

Coarse segmentation of organs

A top-down point cloud segmentation algorithm for maize organs from a shoot was applied. The highest point of each organ was taken as the seed point of the organ (Fig. 3A). The other shoot points after stem segmentation were classified into corresponding organ instances from the top down by the optimal transportation distances (Fig. 3B).

Figure 3:

Illustration of coarse segmentation. (A) Highest point determination of each organ. (B) Visualization of segmented shoot from different angles of view.

Organ seed points determination

After stem segmentation, the point cloud of a maize shoot was spatially divided into several relatively discrete point clouds (excluding the stem). However, the actual number of organs is always larger than the number of discrete point clouds, owing to spatial connections between organs, especially near the upper leaves. Thus, a seed point has to be determined for each organ before the next segmentation step. The highest point of each organ was regarded as the seed point (Fig. 3A). If a pot was included in the point cloud, all points with a z-value less than that of the lowest point of the stem were directly classified as pot points. Usually, the highest point of a new leaf appears at the tip region, whereas the middle and lower fully unfolded leaves are mostly curved, so their highest point lies in the middle of the leaf; the highest points of a tassel or ear are at the top. Therefore, it was assumed that the distance between the highest points of any 2 organs was >5 cm. On this basis, the highest point of each organ was determined by searching for the point with the maximum z-value within the point cloud of the organ.

Owing to the complicated spatial distribution of points at the organ connection areas, automatic estimation of the highest points of instances may not be accurate. Label3DMaize provides a manual interaction module to determine the highest seed point of each organ. This operation can also assign a serial number to each organ for further output. Because the number of maize organs is relatively small, this interactive correction operation is convenient and acceptable. The derived seed points of each organ are placed into the corresponding instance point cloud $P_i$. At this time, each leaf, tassel, and ear instance point cloud contains only its highest point, whereas the pot and stem instances contain multiple points.

Coarse segmentation based on optimal transportation distances

After the seed points of all the instances were obtained, the remaining points in $P$ were traversed one by one to determine the instance to which each belongs. For each point $p \in P$, the distance between the point and each point cloud instance was evaluated, and the point was classified into the nearest instance. The points were evaluated from top to bottom; i.e., the points with larger z-coordinates were evaluated first. The process was as follows:

Step 1: The points in the point set $P$ were sorted in descending order of their z-values.

Step 2: For point $p \in P$, the organ instance to which it belongs was determined. The distance $d_i(p)$ from point $p$ to the $i$th instance was defined as

$$d_i(p) = d_{OT}(p, q_i^{*}), \qquad q_i^{*} = \arg\min_{q \in P_i} d_{OT}(p, q),$$

where $d_{OT}(\cdot, \cdot)$ is the optimal transportation distance between any 2 points calculated based on the Sinkhorn algorithm [48], and $q_i^{*}$, in the $i$th instance, is the nearest neighbor of point $p$ under the optimal transportation distance. Point $p$ is then assigned to the organ instance with the lowest $d_i(p)$.

Step 3: Move point $p$ from $P$ into the corresponding $P_i$. Continue with the next point in $P$ and repeat Step 2 until $P$ is empty.
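The top-down traversal of Steps 1 to 3 can be sketched as follows. The point-to-point distance is left as a pluggable function `dist`; the demo uses the Euclidean distance (`math.dist`) as a stand-in, because the exact form of the optimal transportation distance is not reproduced here. The function name `coarse_segment` and the instance labels are hypothetical.

```python
import math

def coarse_segment(unassigned, instances, dist=math.dist):
    """Assign every unassigned point to its nearest instance, top-down.

    instances maps a label to a non-empty list of points (the seed point
    of an organ, or the full point set for stem and pot). The dictionary
    is updated in place and returned.
    """
    # Step 1: visit points from the highest z-value to the lowest.
    for p in sorted(unassigned, key=lambda q: q[2], reverse=True):
        # Step 2: distance to an instance = distance to its nearest member.
        best = min(instances,
                   key=lambda lab: min(dist(p, q) for q in instances[lab]))
        # Step 3: move the point into that instance.
        instances[best].append(p)
    return instances

# Two leaves, each seeded with its highest point.
instances = {"leaf_1": [(-1.0, 0.0, 10.0)], "leaf_2": [(1.0, 0.0, 10.0)]}
rest = [(-0.8, 0.0, 8.0), (1.0, 0.0, 9.0), (-1.0, 0.0, 9.0), (0.9, 0.0, 8.0)]
result = coarse_segment(rest, instances)
print({label: len(pts) for label, pts in result.items()})
```

Because points are visited from the top down, each organ grows from its highest point toward the stem, and lower points are compared with the points already assigned above them.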

The optimal transportation distance $d_{OT}$ used in Step 2 is described in detail as follows. The optimal transportation strategy from point cloud $Q$ to its identical copy $Q'$ is the one that transports all the mass of any point $q \in Q$ to the same point $q' \in Q'$. The Sinkhorn algorithm [48] was used here to calculate the optimal transportation distances. It allocates the mass of any point $p \in Q$ to all points in $Q'$. A point that receives more allocated mass is closer to $p$ than the other points under the optimal transportation strategy. Suppose that point cloud $Q$ contains $N_Q$ points, and $Q'$ represents the same point set as $Q$. $q_u$ is the $u$th point in $Q$, and $a_u$ indicates the mass of point $q_u$. Similarly, $q'_v$ is the $v$th point in $Q'$, and $b_v$ indicates the mass of point $q'_v$. $m_{uv}$ represents the mass transported from $q_u$ to $q'_v$. Then the optimal transportation energy from point cloud $Q$ to point cloud $Q'$ can be described as:

$$\min_{M} \; \sum_{u=1}^{N_Q} \sum_{v=1}^{N_Q} m_{uv} \, \lVert q_u - q'_v \rVert + \frac{1}{\varepsilon} \sum_{u=1}^{N_Q} \sum_{v=1}^{N_Q} m_{uv} \log m_{uv},$$

$$\text{s.t.} \quad \sum_{v=1}^{N_Q} m_{uv} = a_u, \qquad \sum_{u=1}^{N_Q} m_{uv} = b_v, \qquad m_{uv} \ge 0.$$

In this equation, $\varepsilon$ is the adjustment parameter, which was set to 5 in this article, and $\lVert \cdot \rVert$ is the L2 norm. The above equation can be solved by the Sinkhorn matrix scaling algorithm [49], which yields the optimal transportation from $Q$ to $Q'$; i.e., an $N_Q \times N_Q$ optimal transportation matrix $M$ is obtained. The element $m_{uv}$ in row $u$ and column $v$ of the matrix is the mass transported from the $u$th to the $v$th point. A larger $m_{uv}$ indicates that the 2 points are closer. After the optimal transportation solution is obtained, the optimal transportation distance $d_{OT}$ from the $u$th to the $v$th point in the point cloud is defined from $m_{uv}$, with a larger $m_{uv}$ corresponding to a smaller distance. The pseudocode for calculating the optimal transportation matrix $M$ is shown in Table 1.

Table 1:

Pseudocode for calculating optimal transportation matrix

| Algorithm 1. Computation of optimal transportation matrix M, using MATLAB syntax. |
|---|
| Input: parameter ε; point cloud matrix Q of size N_Q × 3, where N_Q is the number of points in the point cloud |
| `n = N_Q;` |
| `H = pdist2(Q, Q); H = H./max(H(:));  % n × n pairwise distances, scaled by their maximum` |
| `K = exp(-ε*H);  % n × n` |
| `U = K.*H;  % n × n` |
| `a = ones(n,1)/n;  % n × 1` |
| `h = a;  % n × 1` |
| `J = diag(1./a)*K;  % n × n` |
| `while h changes or any other relevant stopping criterion do` |
| `h = 1./(J*(a./(h'*K)'));` |
| `end while` |
| `z = a./((h'*K)');  % n × 1` |
| `M = diag(h(:,1)) * K * diag(z(:,1));  % n × n optimal transportation matrix` |
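For readers who prefer a directly runnable form, Algorithm 1 can be transcribed into plain Python as a sketch. The transcription follows the table line by line, except that the unused matrix `U` is omitted and the stopping rule is made explicit; the tolerance `tol` and the iteration cap `max_iter` are assumptions, and the function name `sinkhorn_matrix` is hypothetical. Nested lists are used instead of a matrix library, which is only practical for small point sets.

```python
import math

def sinkhorn_matrix(Q, eps=5.0, max_iter=1000, tol=1e-12):
    """Return the n x n transportation matrix M of Algorithm 1."""
    n = len(Q)
    H = [[math.dist(p, q) for q in Q] for p in Q]
    h_max = max(max(row) for row in H) or 1.0        # H = H./max(H(:))
    K = [[math.exp(-eps * d / h_max) for d in row] for row in H]
    a = [1.0 / n] * n                                # uniform masses
    h = a[:]

    def col_scaling(h_vec):
        # z = a ./ (h' * K)'
        return [a[v] / sum(K[u][v] * h_vec[u] for u in range(n))
                for v in range(n)]

    for _ in range(max_iter):
        z = col_scaling(h)
        # h = 1 ./ (J * z) with J = diag(1./a) * K
        new_h = [a[u] / sum(K[u][v] * z[v] for v in range(n))
                 for u in range(n)]
        converged = max(abs(x - y) for x, y in zip(new_h, h)) < tol
        h = new_h
        if converged:
            break
    z = col_scaling(h)
    # M = diag(h) * K * diag(z)
    return [[h[u] * K[u][v] * z[v] for v in range(n)] for u in range(n)]

# Two nearby points and one distant point.
Q = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (1.0, 0.0, 0.0)]
M = sinkhorn_matrix(Q)
print(M[0][1] > M[0][2])  # more mass goes to the nearer point
```

Each row and column of `M` sums to the mass `1/n` of the corresponding point, and more mass is transported between nearby points than between distant ones, which is the property the segmentation relies on.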

In the optimal transportation energy equation, when parameter  increases, the transportation strategy gets closer to the classical optimal transportation, and the segmentation result using optimal transportation distance

increases, the transportation strategy gets closer to the classical optimal transportation, and the segmentation result using optimal transportation distance  is also closer to that using Euclidean distance. The same results can be derived using the 2 distances when the ε is >100. When ε is smaller, the solution becomes smoother, and the nearest neighbor calculated under the

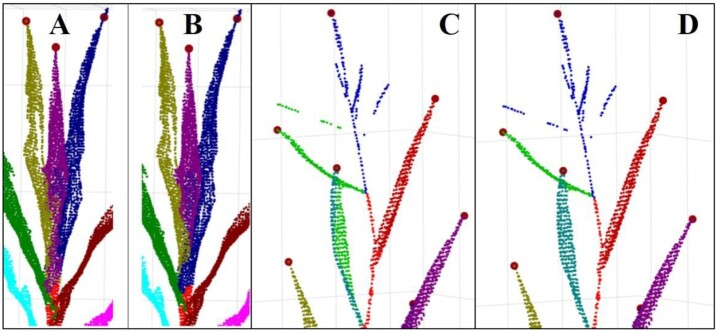

is also closer to that using Euclidean distance. The same results can be derived using the 2 distances when the ε is >100. When ε is smaller, the solution becomes smoother, and the nearest neighbor calculated under the  distance tends to the region with higher point density. Compared with the Euclidean distance, using the optimal transportation distance to estimate the distance between points can better deal with the challenge of big leaves wrapping on leaflets than using the Euclidean distance (Fig. 4A and B). When the adhesion area of the 2 organs is not significantly large, the segmentation result using the optimal transportation distance is better than that of the Euclidean distance (Fig. 4C and D).

distance tends to the region with higher point density. Compared with the Euclidean distance, using the optimal transportation distance to estimate the distance between points can better deal with the challenge of big leaves wrapping on leaflets than using the Euclidean distance (Fig. 4A and B). When the adhesion area of the 2 organs is not significantly large, the segmentation result using the optimal transportation distance is better than that of the Euclidean distance (Fig. 4C and D).

Figure 4:

Organ segmentation comparison using optimal transportation distance and Euclidean distance. Point cloud segmentation result for big leaf wrapping small leaf base case using Euclidean distance (A) and optimal transportation distance (B). Point cloud segmentation result for close or slight organ adhesion case using Euclidean distance (C) and optimal transportation distance (D).

Fine segmentation of organs

Coarse segmentation can provide preliminary results, but false segmentation is frequently observed in the intersecting regions of organs. To obtain more precise segmentation results, this study developed a fine segmentation module for organs in Label3DMaize, which included the following processes:

Step 1: $n$ ($n > 1$) organ instances to be finely segmented were selected, and $P_i$ represents the $i$th instance.

Step 2: The region of interest, represented by $R$, was selected from the above instance point clouds.

Step 3: The seed points for the $i$th instance $P_i$ were selected from region $R$. The selected points were removed from $R$ and stored in $P_i$.

Step 4: The points in $R$ were re-segmented using MRF.

The re-segmentation in Step 4 uses a Markov random field (MRF), as explained in the following. The fine segmentation of the region of interest mentioned above is a multi-classification problem. It allocates the points of the region, $Q$, into $n$ organ instances $S_1, \dots, S_n$, i.e., it searches for the right organ tag for each point $p \in Q$. Hence a mapping function $f$ is defined for any point $p$. When a point $p$ is mapped to the $i$th instance, $f_p = i$, the energy function is defined as:

$$E(f) = \sum_{p \in Q} D_p(f_p) + \sum_{p \in Q} \sum_{q \in N_p} V_{p,q}(f_p, f_q)$$

In this function, $N_p$ is the k-neighborhood of $p$. The data item $D_p(f_p)$ measures the loss of classifying $p$ to the $n$ instances $S_1, \dots, S_n$. $d(p, S_i)$ represents the distance from point $p$ to instance $S_i$, which is the distance from $p$ to the nearest point in $S_i$. $\lambda$ is a weight parameter that controls the proportion of the distance term in the energy function. The smooth item $V_{p,q}(f_p, f_q)$ quantifies the corresponding loss when assigning the tags $f_p$ and $f_q$ to points $p$ and $q$, respectively. This smooth term encourages spatial consistency; i.e., the probability that adjacent points belong to the same class is higher. The smooth term is composed of the product of a distance term (the left factor) and an angle term (the right factor). In the distance term, $\lVert p - q \rVert$ is the Euclidean distance of the 2 points and $d_{\max}$ is the maximum Euclidean distance between all points and their neighborhood points, regulating the distance term in the range of (0, 1]. $\mathbf{n}_p$ and $\mathbf{n}_q$ are the normal vectors of points $p$ and $q$, respectively, and $\theta_{p,q}$ is the angle between the 2 normals. $w_d$ and $w_a$ are the weight parameters for the distance and angle term, respectively, both with a default value of 1.0. The minimum solution of the energy function is solved by $\alpha$-expansion MRF [50].
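The labeling step can be illustrated with a minimal Python sketch. It is not the toolkit's MATLAB implementation: the data cost follows the nearest-point distance described above, but the smoothness cost is simplified to a constant penalty for each neighbor carrying a different label (the distance and angle weighting is omitted), and the energy is lowered with iterated conditional modes rather than α-expansion. The function names, the parameters `lam`, `smooth`, and `sweeps`, and the toy coordinates are all assumptions made for illustration.

```python
import math


def nearest_distance(p, instance_points):
    """Distance from point p to the nearest point of one organ instance."""
    return min(math.dist(p, s) for s in instance_points)


def k_neighbors(points, idx, k):
    """Indices of the k region points closest to points[idx] (brute force)."""
    others = [j for j in range(len(points)) if j != idx]
    others.sort(key=lambda j: math.dist(points[idx], points[j]))
    return others[:k]


def relabel_region(region, instances, k=2, lam=1.0, smooth=0.5, sweeps=5):
    """Assign every region point to an instance by greedy energy descent.

    data cost   : lam * distance to the nearest point of the instance
    smooth cost : `smooth` per neighbor with a different label
                  (a plain Potts penalty, an assumption of this sketch)
    """
    n = len(instances)
    data = [[lam * nearest_distance(p, inst) for inst in instances]
            for p in region]
    nbrs = [k_neighbors(region, i, k) for i in range(len(region))]
    # Start from the label with the lowest data cost for each point.
    labels = [min(range(n), key=lambda c: row[c]) for row in data]
    for _ in range(sweeps):
        changed = False
        for i in range(len(region)):
            def cost(c):
                return data[i][c] + smooth * sum(labels[j] != c for j in nbrs[i])
            best = min(range(n), key=cost)
            if best != labels[i]:
                labels[i] = best
                changed = True
        if not changed:
            break
    return labels


# Toy example: a vertical "stem", a "leaf" to its right, and 4 points between.
stem = [(0.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0.0, 0.0, 2.0)]
leaf = [(2.0, 0.0, 1.0), (3.0, 0.0, 1.5)]
region = [(0.3, 0.0, 1.0), (0.6, 0.0, 1.0), (1.5, 0.0, 1.0), (1.8, 0.0, 1.0)]
labels = relabel_region(region, [stem, leaf])  # 0 = stem, 1 = leaf
```

In this toy case the two points nearest the stem keep the stem label and the two nearest the leaf keep the leaf label. Raising `smooth` relative to `lam` makes neighboring points more likely to share a label, which mirrors the role of the smooth term.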

In addition, users could assign an organ label directly to the points of the region of interest after the aforementioned Step 2, which offers a more direct way to perform fine segmentation.

Sample-based segmentation

It is suggested that the number of points per shoot should be <15,000 to ensure data processing efficiency. Therefore, Label3DMaize provides point cloud simplification and sample-based segmentation modules. Voxel-based simplification is adopted in the toolkit. Sample-based segmentation refers to the automatic segmentation of a dense point cloud via the segmentation result of the corresponding simplified point cloud. Specifically, suppose that point cloud A is the simplification of dense point cloud B, and A has already been segmented while B is to be segmented. The k-nearest neighbors in A of any point $p \in B$ are calculated, followed by counting how many points of these k-nearest neighbors belong to each instance. The instance with the maximum neighbor points is determined as the instance of point p.
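A minimal Python sketch of the two modules follows. It assumes that voxel simplification keeps the centroid of each occupied voxel, which the text does not specify, and it uses a brute-force neighbor search for brevity. The names `voxel_simplify` and `transfer_labels`, the voxel size, and k = 3 are illustrative choices, not toolkit settings.

```python
import math
from collections import Counter


def voxel_simplify(points, voxel):
    """Keep one point (the centroid) per occupied voxel of edge length `voxel`."""
    cells = {}
    for x, y, z in points:
        key = (math.floor(x / voxel), math.floor(y / voxel), math.floor(z / voxel))
        cells.setdefault(key, []).append((x, y, z))
    return [tuple(sum(axis) / len(pts) for axis in zip(*pts))
            for pts in cells.values()]


def transfer_labels(sparse_points, sparse_labels, dense_points, k=3):
    """Label each dense point by majority vote among its k nearest sparse points."""
    dense_labels = []
    for p in dense_points:
        nearest = sorted(range(len(sparse_points)),
                         key=lambda i: math.dist(p, sparse_points[i]))[:k]
        votes = Counter(sparse_labels[i] for i in nearest)
        dense_labels.append(votes.most_common(1)[0][0])
    return dense_labels


# Dense cloud B: three points near x = 0 and three near x = 4.
dense = [(0.1, 0.1, 0.1), (0.3, 0.2, 0.4), (0.2, 0.1, 1.3),
         (4.1, 0.1, 0.2), (4.4, 0.3, 0.6), (4.2, 0.2, 1.5)]
# Simplified cloud A, then a stand-in for the interactive segmentation of A.
sparse = voxel_simplify(dense, voxel=1.0)
sparse_labels = [0 if x < 2.0 else 1 for x, _, _ in sparse]
# Propagate the labels of A back to every point of B.
dense_labels = transfer_labels(sparse, sparse_labels, dense, k=3)
```

For real point clouds, a spatial index such as a k-d tree would replace the brute-force search, since the dense clouds discussed here contain tens of thousands of points.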

Results

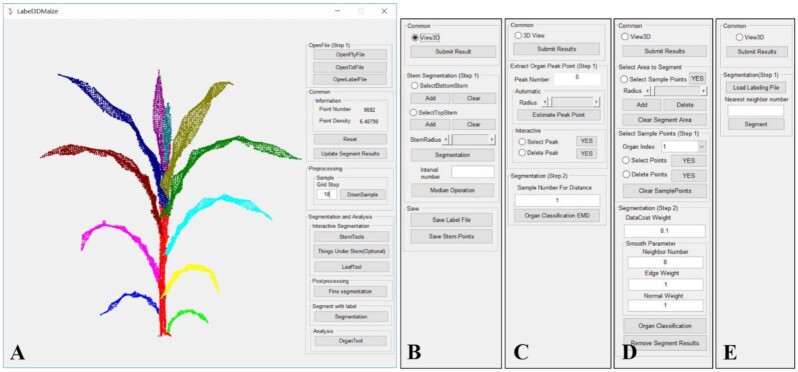

Interface and operations of Label3DMaize

The Label3DMaize toolkit was developed using MATLAB. The interface is composed of the main interface and multiple sub-interfaces, including stem segmentation, coarse segmentation, fine segmentation, and sample-based segmentation (Fig. 5). Each sub-interface pops up after the corresponding button on the main interface is triggered. The main interface and each sub-interface are composed of an embedded dialog and an interactive visual window (only the embedded dialog in each sub-interface is shown in Fig. 5). The interactive visual window enables the user to rotate, zoom, translate, select points of interest in the view, and improve the segmentation effect visually and interactively. The input of the toolkit includes point cloud files in text format, such as txt or ply. According to the operational process shown in Fig. 5, segmentation results can be refined step by step by inputting parameters and manually selecting points. The output of the toolkit is a text file with annotation information; i.e., each 3D coordinate point in the text has a classification identification number, and the points with the same identification number belong to the same instance. These format files are applicable for 3D deep learning of maize shoots. The executable program of Label3DMaize can be found in the attachment.
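As an illustration of how such an annotated text file might be consumed downstream, the following Python sketch groups points by their identification number. It assumes one point per line with whitespace-separated `x y z id` fields; the actual column order and delimiter written by Label3DMaize are not stated here, so the parser and the sample lines are hypothetical.

```python
from collections import defaultdict


def group_by_instance(lines):
    """Group annotated points by their instance identification number."""
    instances = defaultdict(list)
    for line in lines:
        fields = line.split()
        if len(fields) < 4:
            continue  # skip blank or malformed lines
        x, y, z = (float(v) for v in fields[:3])
        instances[int(fields[3])].append((x, y, z))
    return dict(instances)


# Hypothetical file content: three instances with ids 0, 1, and 2.
sample = """\
0.10 0.02 0.00 0
0.11 0.01 0.35 0
0.42 0.05 0.60 1
0.55 0.07 0.66 1
0.30 -0.20 0.90 2
""".splitlines()

organs = group_by_instance(sample)
for organ_id, pts in sorted(organs.items()):
    print(organ_id, len(pts))
```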

Figure 5:

Interfaces of Label3DMaize. (A) The main interface of the toolkit, composed of a visualization window and an embedded dialog. (B–E) Dialog of stem segmentation, coarse segmentation, fine segmentation, and sample-based segmentation. The visualization window is not shown in these sub-interfaces.

Visualization and accuracy evaluation

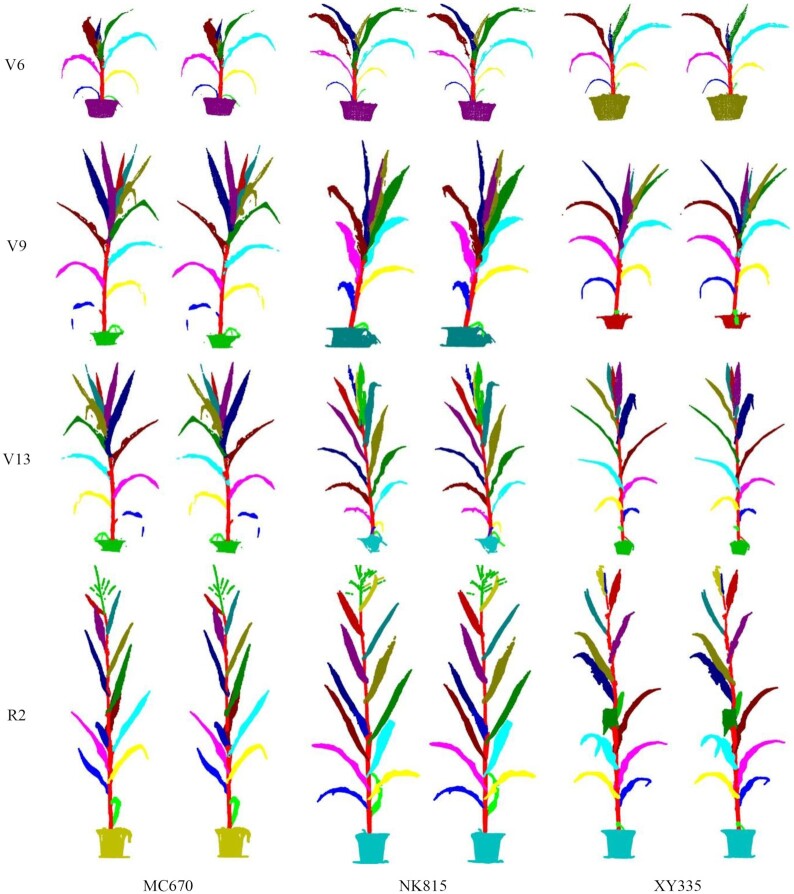

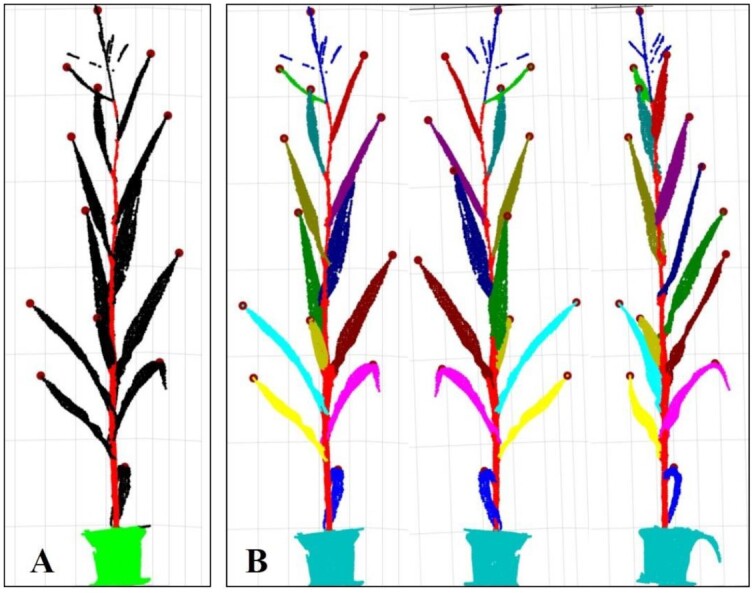

To evaluate the accuracy of coarse and fine segmentation, the point clouds of 3 varieties at 4 different growth stages of maize shoots were segmented using Label3DMaize. Figure 6 shows the visualization results. Visually, no significant differences were observed between the coarse and fine segmentation. Yet, fine segmentation improved the segmentation of details, especially near the connection regions of organs.

Figure 6:

Visualization of maize shoot segmentation results of 3 cultivars at 4 growth stages. In each pair of images, the left and right are coarse and corresponding fine segmentation results, respectively.

Numerical accuracy results are also provided to quantitatively evaluate the difference between coarse and fine segmentation (Table 2). The precision, recall, and F1-score of each organ were estimated with fine segmentation as the ground truth. The averaged precision and recall of all shoot organs were taken as the precision and recall of the shoot. Macro-F1 and micro-F1 were calculated using the precision and recall of the shoot and the averaged values of the organs, respectively. Table 2 shows that although the results of coarse and fine segmentation differed, the overall difference was small.

Table 2:

Accuracy evaluation of coarse segmentation vs fine segmentation

| | Overall accuracy (%) | Precision (%) | Recall (%) | Micro-F1 (%) | Macro-F1 (%) |
|---|---|---|---|---|---|
| Mean (range) | 97.2 (89.8–99.4) | 96.7 (92.0–99.2) | 95.6 (84.1–99.1) | 96.1 (87.9–99.2) | 95.6 (85.3–99.1) |
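The per-organ scores behind such a table can be sketched in Python as follows, with the fine segmentation labels as the reference and the coarse segmentation labels as the prediction. The function names and the toy label lists are assumptions. Two F1 summaries are computed, one from the organ-averaged precision and recall and one as the mean of the per-organ F1-scores; the sketch deliberately does not decide which of them corresponds to the micro-F1 or macro-F1 column.

```python
def organ_scores(reference, predicted):
    """Per-organ (precision, recall, F1), with `reference` as ground truth."""
    pairs = list(zip(reference, predicted))
    scores = {}
    for organ in sorted(set(reference) | set(predicted)):
        tp = sum(r == organ and p == organ for r, p in pairs)
        fp = sum(r != organ and p == organ for r, p in pairs)
        fn = sum(r == organ and p != organ for r, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        scores[organ] = (precision, recall, f1)
    return scores


def shoot_summary(reference, predicted):
    """Shoot-level summary built from the per-organ scores."""
    scores = organ_scores(reference, predicted)
    n = len(scores)
    precision = sum(s[0] for s in scores.values()) / n
    recall = sum(s[1] for s in scores.values()) / n
    matches = sum(r == p for r, p in zip(reference, predicted))
    return {
        "overall_accuracy": matches / len(reference),
        "precision": precision,
        "recall": recall,
        "f1_of_mean_pr": (2 * precision * recall / (precision + recall)
                          if precision + recall else 0.0),
        "mean_organ_f1": sum(s[2] for s in scores.values()) / n,
    }


# Toy labels for 8 points and 3 organs (0, 1, 2); two points disagree.
fine = [0, 0, 0, 1, 1, 2, 2, 2]
coarse = [0, 0, 1, 1, 1, 2, 2, 0]
summary = shoot_summary(fine, coarse)
```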

Segmentation efficiency

The efficiency of plant point cloud segmentation is an essential indicator for the practicality of training data annotation tools for deep learning. Table 3 shows the time consumed in the different steps for maize shoot segmentation at 4 growth stages using Label3DMaize on a workstation (Intel Core i7 processor, 3.2 GHz CPU, 32 GB of memory, Windows 10 operating system), including the interactive manual operations and segmentation computations. Point cloud segmentation takes ∼4–10 minutes per shoot, of which coarse segmentation takes ∼10–20%. Over the whole segmentation process, the time cost of manual interaction is much higher than that of automated computation. The segmentation time increases with the number of leaves.

Table 3:

Segmentation time of different steps on maize shoots at 4 growth stages using Label3DMaize

| Growth period | No. points (input) | No. points (after simplification) | t1† | t2 | t3† | t4 | t5† | t6† | t7 | t8 | t9† | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| V6 | 45,833 | 13,196 | 10 | 0.2 | 16 | 4 | 30.2 | 60 | 0.05 | 0.5 | 100 | 190.75 |
| V9 | 62,523 | 13,953 | 10 | 0.2 | 21 | 4 | 35.2 | 140 | 0.05 | 0.6 | 100 | 275.85 |
| V13 | 70,873 | 12,102 | 14 | 0.2 | 32 | 5 | 51.2 | 260 | 0.05 | 0.6 | 100 | 411.85 |
| R2 | 71,909 | 13,224 | 14 | 0.2 | 35 | 5 | 54.2 | 268 | 0.05 | 0.6 | 100 | 422.85 |

Point counts are per maize shoot; all time costs are in seconds. t1: Time for stem point selection and radius setting. t2: Time for segmentation computation of stem points. t3: Time for seed points selection of organ instances. t4: Time for organ segment computation. t5: Time for coarse segmentation, where t5 = t1 + t2 + t3 + t4. t6: Time for fine segmentation operations. t7: Time for fine segmentation computation. t8: Time for sample-based segmentation. t9: Time for other operations, e.g., the alternation between main and sub-interfaces. Identifiers marked with † indicate the time cost for manual interactions; unmarked identifiers indicate automated computation.
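The arithmetic behind Table 3 can be checked with a few lines of Python. The step times are copied from the table; the variable and function names are illustrative only.

```python
# Step times in seconds from Table 3, in the order t1, t2, t3, t4, t6, t7, t8, t9.
times = {
    "V6": (10, 0.2, 16, 4, 60, 0.05, 0.5, 100),
    "V9": (10, 0.2, 21, 4, 140, 0.05, 0.6, 100),
    "V13": (14, 0.2, 32, 5, 260, 0.05, 0.6, 100),
    "R2": (14, 0.2, 35, 5, 268, 0.05, 0.6, 100),
}


def summarize(steps):
    """Return (coarse time t5, total time, coarse share of the total)."""
    t1, t2, t3, t4, t6, t7, t8, t9 = steps
    coarse = t1 + t2 + t3 + t4          # t5 = t1 + t2 + t3 + t4
    total = coarse + t6 + t7 + t8 + t9  # Total = t5 + t6 + t7 + t8 + t9
    return coarse, total, coarse / total


for stage, steps in times.items():
    coarse, total, share = summarize(steps)
    print(f"{stage}: coarse {coarse:.1f} s, total {total:.2f} s "
          f"({total / 60:.1f} min), coarse share {share:.1%}")
```

For these 4 shoots the coarse share falls between about 12% and 16%, inside the 10–20% range quoted in the text.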

This study also analyzed the detailed time costs. (i) The time cost of stem segmentation. In the early growth stages of a maize shoot, the stem is relatively upright, so users only need to select the bottom and upper points of the stem and specify a suitable radius. However, in the late growth stages, the maize shoot height increases and the stem becomes thinner from bottom to top. Meanwhile, the upper part is curved, so interactive median segmentation is needed, which increases the segmentation time. (ii) The time cost of coarse segmentation. The major interactive operation of coarse segmentation is that the user selects or adjusts the highest organ points. As the maize shoot grows, the number of organs gradually increases, so the time costs for the interactive operation of picking points also increase. Meanwhile, the growth of shoot organs significantly increases the occlusion among organs. Thus, the appropriate angles of view for users have to be found to determine the highest organ points, which is time-consuming. (iii) The time cost of fine segmentation. An increase in the number of organs causes false segmentation of more organs at the connection regions. Therefore, the fine segmentation of maize shoots with more organs would take more time. Besides, the segmentation efficiency is related to the shoot architecture; the spatial distances between adjacent organs are much larger in flattened shoots than those of relatively compact ones, which increases the segmentation efficiency of flattened shoots.

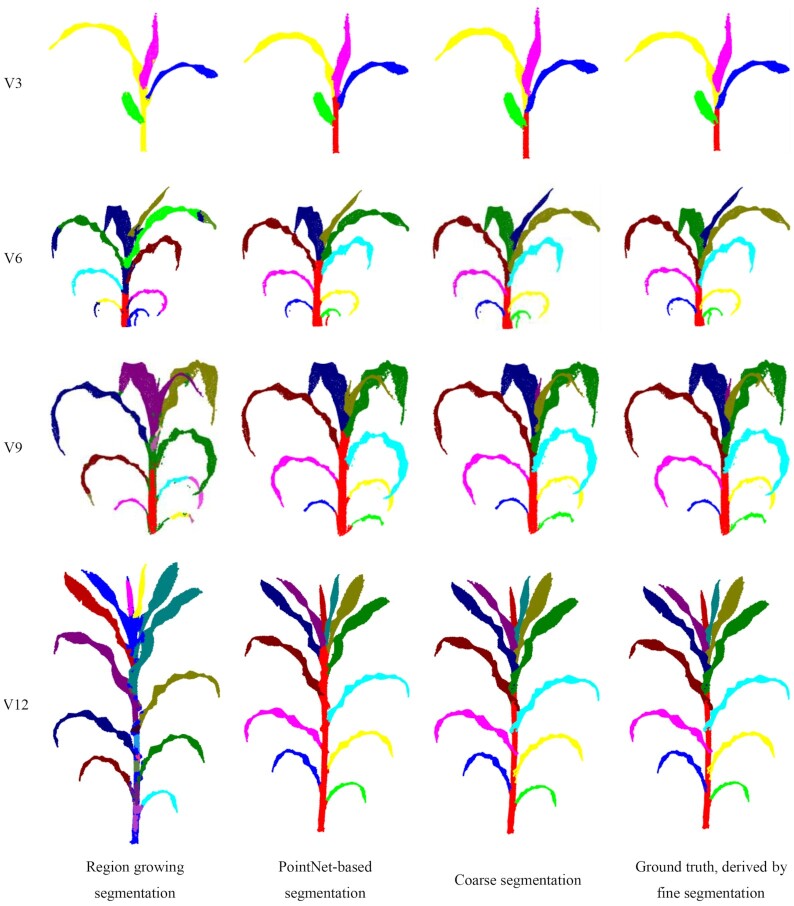

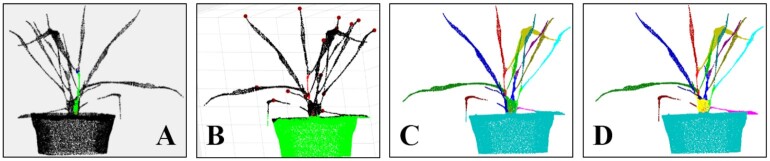

Comparison with other methods

A method comparison was conducted to evaluate the performance of the coarse segmentation algorithm. The point cloud data used here consisted of 12 shoots obtained from the 3D scanner (mentioned in the data acquisition section). Region growing in the Point Cloud Library (PCL) [51] and PointNet-based segmentation [52] were used as the state-of-the-art methods for comparison. For region growing, the best segmentation result for each shoot was obtained through an exhaustive parameter search. For PointNet-based segmentation, a training dataset containing 1,000 labeled maize shoots was built using Label3DMaize. The PointNet model was then trained, and the segmentation model was derived. The segmentation accuracy is reported in Table 4, and representative results of each growth stage are shown in Fig. 7. The fine segmentation results derived using Label3DMaize were regarded as the well-segmented reference for comparison. The results showed that Label3DMaize can handle point clouds acquired using a 3D scanner as well as MVS-reconstructed point clouds. Region growing is oriented toward general segmentation problems, and its results on maize point clouds differ markedly from those of the other 2 methods; it performs worse than both PointNet and coarse segmentation (Table 4). The coarse segmentation presented in this article is more accurate than PointNet. Although the PointNet model segments automatically, whereas the coarse segmentation in this article requires interaction, it struggles with many details. For instance, it has difficulty accurately extracting the points at the stem and leaf boundary and separating a big leaf that wraps a small leaf at the shoot top, and it consistently misses newly emerged leaves.

Table 4:

Accuracy comparison of region growing, PointNet, and coarse segmentation

| Method | Overall accuracy (%) | Precision (%) | Recall (%) | Micro-F1 (%) | Macro-F1 (%) |
|---|---|---|---|---|---|
| Region growing | 79.1 | 74.7 | 75.3 | 76.8 | 80.5 |
| PointNet | 92.6 | 92.6 | 92.6 | 91.7 | 90.7 |
| Coarse segmentation | 99.2 | 99.0 | 99.1 | 99.0 | 99.0 |

Figure 7:

Visualization of segmentation results using region growing, PointNet, coarse segmentation, and fine segmentation.
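For readers unfamiliar with region growing, the following Python sketch shows a simplified, distance-only variant: a region keeps absorbing points that lie within `radius` of a point already in it. This is not PCL's implementation, which additionally tests surface normals and curvature; the function name, the radius values, and the toy points are assumptions. The example hints at why the result depends strongly on the parameter choice.

```python
import math
from collections import deque


def region_growing(points, radius):
    """Label points by growing regions through neighbors closer than `radius`."""
    labels = [-1] * len(points)  # -1 marks a point not yet assigned
    current = 0
    for seed in range(len(points)):
        if labels[seed] != -1:
            continue
        labels[seed] = current
        queue = deque([seed])
        while queue:
            i = queue.popleft()
            for j in range(len(points)):
                if labels[j] == -1 and math.dist(points[i], points[j]) <= radius:
                    labels[j] = current
                    queue.append(j)
        current += 1
    return labels


# Two small groups of points separated by a gap of about 3 units.
points = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.5), (0.0, 0.0, 1.0),
          (3.0, 0.0, 0.0), (3.0, 0.0, 0.4)]
tight = region_growing(points, radius=0.6)  # the two groups stay separate
loose = region_growing(points, radius=5.0)  # everything merges into one region
```

With a purely geometric criterion like this one, touching organs merge as soon as the threshold exceeds the gap between them, which is consistent with the need to tune parameters per shoot.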

Performance on other plants

This study also evaluated the performance of Label3DMaize in segmenting other plants with only 1 main stem, including tomato, cucumber, and wheat.

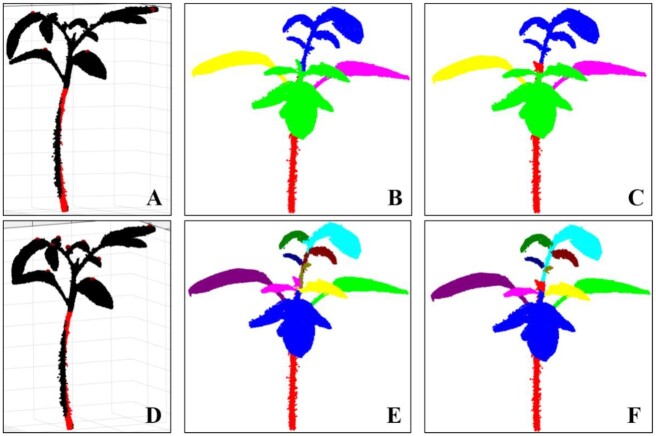

Two types of segmentation have been conducted on tomato in the literature [11]; the first (Type I) treats a big leaf with several small leaves as a cluster leaf, while the second (Type II) treats each big or small leaf as independent. This study aimed to realize both types using Label3DMaize. The Type I segmentation result (Fig. 8B) was derived by selecting the highest point of each leaf cluster (Fig. 8A) in the coarse segmentation procedure, and details were enhanced by fine segmentation (Fig. 8C). For Type II segmentation, the highest points of all the leaves have to be specified (Fig. 8D). Coarse and fine segmentation results could then be derived (Fig. 8E and F). The segmentation method used by Ziamtsov and Navlakha [11] is based on a machine learning model; thus, it can only segment the plant types it was trained on. In contrast, Label3DMaize has better generality.

Figure 8:

Performance evaluation of Label3DMaize on tomato for 2 types of segmentation. Type I: leaf cluster segmentation (A–C). Type II: individual leaf segmentation (D–F). A and D illustrate the highest point selection in the 2 forms of coarse segmentation. B and E show the coarse segmentation results. C and F are fine segmentation results.

Cucumber was selected as a representative plant to test the segmentation performance of Label3DMaize on plants with a soft stem. Unlike maize, cucumber has a larger stem curvature and has leaf petioles. Thus, the interactive end point selection for stem segmentation of cucumber differs from that in maize. Selection of the highest point of the cucumber stem is similar to that in maize. When selecting the other stem end point, the user finds the lowest point that coincides with the straight-line direction from the stem top to bottom (Fig. 9A). Although the unselected stem part will be missing, it can be completed in the subsequent coarse segmentation (Fig. 9B). Coarse segmentation and direct fine segmentation tend to segment each leaf and its corresponding petiole into an individual organ (Fig. 9C). Separated petioles and leaves can be obtained by fine segmentation, which segments all the petioles and the single stem as a whole (Fig. 9D).

Figure 9:

Visualization of cucumber point cloud segmentation. (A) Illustration of the lowest and highest selection points in stem segmentation. (B) Coarse segmentation result. (C) Fine segmentation. Each leaf and corresponding petiole are classified as an instance. (D) Fine segmentation. All the petioles and the main stem are classified as an instance.

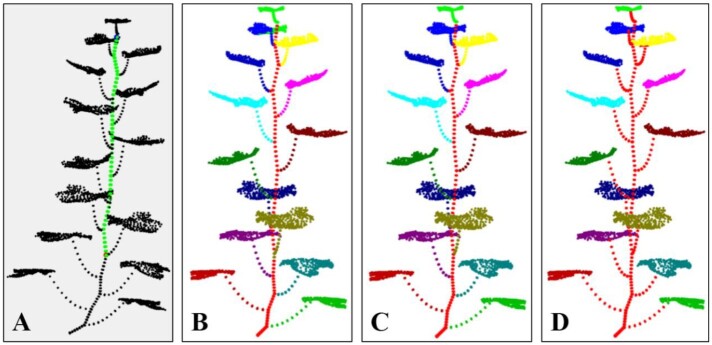

A point cloud of wheat shoot at the early growth stage was acquired using the MVS-Pheno platform. Because the wheat shoot is small with a thin stem, the tiller points are fused together near the shoot base. However, the tiller tops could be identified, which enables segmentation of the wheat shoot by Label3DMaize. For plants with tillers, only 1 stem is selected in the stem segmentation procedure (Fig. 10A). When selecting the organ's highest points in coarse segmentation, not only the highest point of each leaf but also the highest point of each tiller has to be selected (Fig. 10B). Coarse segmentation can ensure a better effect of leaf segmentation (Fig. 10C). However, tillers and stem are prone to undersegmentation, which needs to be adjusted by fine segmentation (Fig. 10D).

Figure 10:

Visualization of wheat shoot segmentation using Label3DMaize. (A) Stem point selection. (B) Selection of highest points in leaves and tillers. (C) Coarse segmentation. (D) Fine segmentation.

Discussion

Shoot-organ point cloud segmentation

In representative shoot-organ segmentation approaches [36], leaf overlap challenges shoot segmentation, especially for upper leaves in compact shoot architecture. Once the segmentation is complete, it is difficult to correct the false segmentation points. Although commercial software, such as Geomagic Studio, can solve this problem, it is complicated and time-consuming. In contrast, the Label3DMaize toolkit integrates a top-down segmentation algorithm and interactive operations according to the morphological structure of maize shoots, which can realize semi-automatic fine point cloud segmentation. The top-down coarse segmentation ensures topological accuracy, and the interactive operations improve the segmentation accuracy and details. Although coarse segmentation can meet the basic demand for phenotype extraction, it is not satisfactory for high-precision phenotypic analysis and 3D reconstruction based on point clouds. In contrast, fine segmentation is more satisfactory for the latter demands. The toolkit can solve the point cloud segmentation problem of compact architecture or organ-overlapping shoots. Although skeleton extraction methods [34, 35] also provide an interactive way to improve the segmentation accuracy, they offer skeleton interaction, which hardly improves the segmentation point details.

Because 3D point cloud annotation tools for plants are lacking, researchers segment plants through multi-view image labeling, deep learning–based image segmentation, MVS reconstruction, and a voting strategy [53]. However, organs heavily occlude one another from different view angles; thus, it is hard to segment plants with multiple organs through image labeling and MVS reconstruction. Jin et al. [37] transformed point cloud data into a voxel format, constructed a training set containing 3,000 maize shoots via data enhancement, and proposed a voxel-based convolutional neural network to segment the stem and leaf point clouds of maize shoots. Label3DMaize enables researchers to directly handle 3D point cloud segmentation and data annotation without transforming point cloud data into the voxel form. Meanwhile, using the acquired data directly, rather than data enhancement, improves the diversity of the training set and can thus improve the robustness of the learned model. In addition, Label3DMaize can separate the tassel and ear as well as the stem and leaf, facilitating phenotype extraction of the tassel (such as the number of tassel branches or the compactness of the tassel) and ear (such as the ear height).

Practicability of Label3DMaize

In our recent works, the MVS-Pheno platform [18] was used to obtain high-throughput 3D point cloud data of maize shoots at different ecological sites for various genotypes and growth stages. However, the underlying knowledge about genotypes and the differences in cultivation management have not been fully explored, indicating that high-throughput phenotypic acquisition is far from practical application. Therefore, it is urgent to establish automatic and online data analysis approaches [54]. However, owing to the complexity of plant morphological structure, it is difficult to realize automatic 3D segmentation from the plant morphological characteristics and regional growth method only. Deep learning is a feasible way to realize automatic segmentation by mining deep features of plant morphology. The greatest challenge in 3D point cloud segmentation by deep learning is the lack of high-precision and efficient data annotation tools. Most of the existing 3D data annotation methods are for voxel data [37, 55], not 3D point clouds. Thus, Label3DMaize provides a practical tool for 3D point cloud data annotation for maize and could be a reference for other plants. It has been demonstrated that the toolkit can segment or label other plants, such as tomato, cucumber, and wheat. Coarse segmentation, i.e., the top-down point cloud segmentation algorithm using optimal transportation distance, suits plants with a single stem. Meanwhile, if a plant has too many organs, selecting all the highest points of each organ is rather complicated. Above all, interactive operations in fine segmentation enable extension of the toolkit to other specific plants. Specifically, Label3DMaize does not depend on data generated through MVS-Pheno. Any point cloud of maize shoot can be the toolkit input, including data acquired using 3D scanners (Fig. 7), or reconstructed from multi-view images acquired by handheld cameras.

Unlike RGB image data annotation [40], data enhancement does not significantly improve the model robustness of 3D point cloud segmentation models. Thus high-quality data annotation is important. It takes 4–10 minutes to label a maize shoot point cloud by Label3DMaize, and this labeling efficiency can meet the needs of constructing a training dataset for deep learning. The fine segmentation module in Label3DMaize ensures accurate segmentation of detailed features at the organ connections and is thus satisfactory for organ-level 3D reconstruction. Of note, coarse segmentation results can be used as the annotation data if high precision of the annotation is not required, thus saving a lot of time.

Label3DMaize is designed for individual shoots and does not support segmentation of multiple maize shoots. Thus, point clouds containing multiple shoots have to be preprocessed into individual shoot point clouds first, either through the spatial connectivity of the points or by interactive separation in third-party software (such as CloudCompare or Geomagic Studio). This shoot separation step is easy for scenes in which organs of different shoots do not cross. Thus, point cloud data acquisition is important for subsequent segmentation. Point clouds with little noise are required when using Label3DMaize. For shoots with much random noise [35], the point cloud should be denoised first and then used as the toolkit input for segmentation. Compared with image annotation, the data annotation efficiency of Label3DMaize is still lower, and fine segmentation requires more manual interaction, which places higher demands on user experience and concentration. Thus, the algorithm of Label3DMaize needs improvement to raise the automation level of point cloud segmentation.

Future work

At present, a large amount of 3D point cloud data of maize shoots has been obtained using MVS-Pheno. In our future study, representative data will be selected and annotated by Label3DMaize, and a 3D maize shoot annotation dataset will then be constructed. A deep learning–based point cloud segmentation model will then be developed to realize the automatic segmentation of maize shoots. In addition, the segmented maize organ data could be used to build a 3D shape model of maize. All the above technologies and data will in turn simplify the segmentation and labeling processes of the toolkit. Subsequently, algorithms for online phenotypic extraction and 3D reconstruction of maize shoots will be studied using the well-segmented point clouds. The segmentation algorithm and this toolkit will be extended to other crops according to their morphological characteristics, which will promote the automatic 3D point cloud segmentation of plants.

Availability of Supporting Source Code and Requirements

Project name: Label3DMaize Toolkit

Project home page: https://github.com/syau-miao/Label3DMaize.git

Source code and executable program: [57]

Operating systems: Windows

Programming languages: MATLAB

License: GNU General Public License (GPL)

biotools ID: label3dmaize

Data Availability

The data underlying this article and snapshots of our code are available in the GigaDB repository [56].

Additional Files

Supplementary Program. Executable program of Label3DMaize, which requires that MATLAB runtime (Version 9.2 or above) be installed.

Supplementary Data S1: The point clouds of maize shoots described in Fig. 6, including the point clouds acquired using MVS-Pheno, coarse segmentation results, fine segmentation results, and sample-based segmentation results.

Supplementary Data S2: Point cloud data described in Fig. 7. These point clouds are acquired using a 3D scanner.

Supplementary Data S3: Segmentation results on other plants, including tomato data described in Fig. 8, cucumber data described in Fig. 9, and wheat data described in Fig. 10.

Abbreviations

CPU: central processing unit; MRF: Markov random fields; MVS: multi-view stereo; PCL: Point Cloud Library; R2: blister stage; SVM: support vector machine; V6: sixth leaf stage; V9: ninth leaf stage; V13: 13th leaf stage.

Competing Interests

The authors declare that they have no competing interests.

Funding

This work was partially supported by Construction of Collaborative Innovation Center of Beijing Academy of Agricultural and Forestry Sciences (No. KJCX201917), Science and Technology Innovation Special Construction Funded Program of Beijing Academy of Agriculture and Forestry Sciences (No. KJCX20210413), the National Natural Science Foundation of China (No. 31871519, No. 32071891), Reform and Development Project of Beijing Academy of Agricultural and Forestry Sciences, China Agriculture Research System (No. CARS-02).

Authors' Contributions

T.M. performed the major part of methodology in Label3DMaize and developed the toolkit. W.W. improved the methodology. W.W. wrote and revised the manuscript. S.W. and C.Z. acquired the point cloud data and performed methodology comparison. Y.L. evaluated the performance of the toolkit and conducted PointNet–based segmentation for comparison. W.W. and X.G. applied for funding support. X.G. proposed the demand and designed this study, and participated in writing the manuscript. All authors read and approved the final manuscript.

Supplementary Material

Chris Armit -- 12/18/2020 Reviewed

Dong Chen -- 12/30/2020 Reviewed

Xiaopeng Zhang -- 12/31/2020 Reviewed

Luis Diaz-Garcia -- 1/4/2021 Reviewed

Luis Diaz-Garcia -- 3/24/2021 Reviewed

Acknowledgements

We would like to thank Tianjun Xu, Maize Research Center of Beijing Academy of Agricultural and Forestry Sciences, for providing experimental materials.

Contributor Information

Teng Miao, College of Information and Electrical Engineering, Shenyang Agricultural University, Dongling Road, Shenhe District, Liaoning Province, Shenyang 110161, China.

Weiliang Wen, Beijing Research Center for Information Technology in Agriculture, 11#Shuguang Huayuan Middle Road, Haidian District, Beijing 100097, China; National Engineering Research Center for Information Technology in Agriculture, 11#Shuguang Huayuan Middle Road, Haidian District, Beijing 100097, China; Beijing Key Lab of Digital Plant, 11#Shuguang Huayuan Middle Road, Haidian District, Beijing 100097, China.

Yinglun Li, National Engineering Research Center for Information Technology in Agriculture, 11#Shuguang Huayuan Middle Road, Haidian District, Beijing 100097, China; Beijing Key Lab of Digital Plant, 11#Shuguang Huayuan Middle Road, Haidian District, Beijing 100097, China.

Sheng Wu, Beijing Research Center for Information Technology in Agriculture, 11#Shuguang Huayuan Middle Road, Haidian District, Beijing 100097, China; National Engineering Research Center for Information Technology in Agriculture, 11#Shuguang Huayuan Middle Road, Haidian District, Beijing 100097, China; Beijing Key Lab of Digital Plant, 11#Shuguang Huayuan Middle Road, Haidian District, Beijing 100097, China.

Chao Zhu, College of Information and Electrical Engineering, Shenyang Agricultural University, Dongling Road, Shenhe District, Liaoning Province, Shenyang 110161, China.

Xinyu Guo, Beijing Research Center for Information Technology in Agriculture, 11#Shuguang Huayuan Middle Road, Haidian District, Beijing 100097, China; National Engineering Research Center for Information Technology in Agriculture, 11#Shuguang Huayuan Middle Road, Haidian District, Beijing 100097, China; Beijing Key Lab of Digital Plant, 11#Shuguang Huayuan Middle Road, Haidian District, Beijing 100097, China.

References

- 1. Bucksch A, Atta-Boateng A, Azihou AF, et al. Morphological plant modeling: unleashing geometric and topological potential within the plant sciences. Front Plant Sci. 2017;8:16. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2. Gibbs JA, Pound M, French AP, et al. Approaches to three-dimensional reconstruction of plant shoot topology and geometry. Funct Plant Biol. 2017;44(1):62–75. [DOI] [PubMed] [Google Scholar]

- 3. Lin Y. LiDAR: An important tool for next-generation phenotyping technology of high potential for plant phenomics?. Comput Electron Agric. 2015;119:61–73. [Google Scholar]

- 4. Zhao C, Zhang Y, Du J, et al. Crop phenomics: current status and perspectives. Front Plant Sci. 2019;10:714. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5. Perez-Sanz F, Navarro PJ, Egea-Cortines M. Plant phenomics: an overview of image acquisition technologies and image data analysis algorithms. Gigascience. 2017;6(11):18. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6. Pieruschka R, Schurr U. Plant phenotyping: past, present, and future. Plant Phenomics. 2019;2019, doi: 10.1155/2019/7507131. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7. Rahman A, Mo C, Cho B-K. 3-D image reconstruction techniques for plant and animal morphological analysis-a review. J Biosystems Eng. 2017;42(4):339–49. [Google Scholar]

- 8. Vos J, Evers JB, Buck-Sorlin GH, et al. Functional-structural plant modelling: a new versatile tool in crop science. J Exp Bot. 2010;61(8):2101–15. [DOI] [PubMed] [Google Scholar]

- 9. Louarn G, Song Y. Two decades of functional–structural plant modelling: now addressing fundamental questions in systems biology and predictive ecology. Ann Bot. 2020;126(4):501–9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10. Jin SC, Sun XL, Wu FF, et al. Lidar sheds new light on plant phenomics for plant breeding and management: Recent advances and future prospects. ISPRS J Photogramm Remote Sens. 2021;171:202–23. [Google Scholar]

- 11. Ziamtsov I, Navlakha S. Machine learning approaches to improve three basic plant phenotyping tasks using three-dimensional point clouds. Plant Physiol. 2019;181(4):1425–40. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12. Rist F, Herzog K, Mack J, et al. High-precision phenotyping of grape bunch architecture using fast 3D sensor and automation. Sensors. 2018;18(3):763. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13. Thapa S, Zhu F, Walia H, et al. A novel LiDAR-based instrument for high-throughput, 3D measurement of morphological traits in maize and Sorghum. Sensors. 2018;18(4):1187. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14. Hu Y, Wang L, Xiang L, et al. Automatic non-destructive growth measurement of leafy vegetables based on Kinect. Sensors. 2018;18(3):806. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15. Chaivivatrakul S, Tang L, Dailey MN, et al. Automatic morphological trait characterization for corn plants via 3D holographic reconstruction. Comput Electron Agric. 2014;109:109–23. [Google Scholar]

- 16. Elnashef B, Filin S, Lati RN. Tensor-based classification and segmentation of three-dimensional point clouds for organ-level plant phenotyping and growth analysis. Comput Electron Agric. 2019;156:51–61. [Google Scholar]

- 17. Duan T, Chapman SC, Holland E, et al. Dynamic quantification of canopy structure to characterize early plant vigour in wheat genotypes. J Exp Bot. 2016;67(15):4523–34. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18. Wu S, Wen W, Wang Y, et al. MVS-Pheno: a portable and low-cost phenotyping platform for maize shoots using multiview stereo 3D reconstruction. Plant Phenomics. 2020;2020, doi: 10.34133/2020/1848437. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19. Nguyen CV, Fripp J, Lovell DR, et al. 3D scanning system for automatic high-resolution plant phenotyping. 2016 International Conference on Digital Image Computing: Techniques and Applications (DICTA). IEEE; 2016, doi: 10.1109/DICTA.2016.7796984. [DOI] [Google Scholar]

- 20. Cao W, Zhou J, Yuan Y, et al. Quantifying variation in soybean due to flood using a low-cost 3D imaging system. Sensors. 2019;19(12):2682. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21. Bernotas G, Scorza LCT, Hansen MF, et al. A photometric stereo-based 3D imaging system using computer vision and deep learning for tracking plant growth. GigaScience. 2019;8(5), doi: 10.1093/gigascience/giz056. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22. Zhang XH, Huang CL, Wu D, et al. High-throughput phenotyping and QTL mapping reveals the genetic architecture of maize plant growth. Plant Physiol. 2017;173(3):1554–64. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23. Cabrera-Bosquet L, Fournier C, Brichet N, et al. High-throughput estimation of incident light, light interception and radiation-use efficiency of thousands of plants in a phenotyping platform. New Phytol. 2016;212(1):269–81.

- 24. Jin S, Su Y, Song S, et al. Non-destructive estimation of field maize biomass using terrestrial lidar: an evaluation from plot level to individual leaf level. Plant Methods. 2020;16(1), doi: 10.1186/s13007-020-00613-5.

- 25. Zermas D, Morellas V, Mulla D, et al. 3D model processing for high throughput phenotype extraction – the case of corn. Comput Electron Agric. 2019;172:105047.

- 26. Jin SC, Su YJ, Gao S, et al. Deep learning: individual maize segmentation from terrestrial lidar data using faster R-CNN and regional growth algorithms. Front Plant Sci. 2018;9, doi: 10.3389/fpls.2018.00866.

- 27. Zhan Q, Liang Y, Xiao Y. Color-based segmentation of point clouds. Laser Scanning. 2009;38:248–52.

- 28. Itakura K, Hosoi F. Automatic leaf segmentation for estimating leaf area and leaf inclination angle in 3D plant images. Sensors. 2018;18(10):3576.

- 29. Sun SP, Li CY, Chee PW, et al. Three-dimensional photogrammetric mapping of cotton bolls in situ based on point cloud segmentation and clustering. ISPRS J Photogramm Remote Sens. 2020;160:195–207.

- 30. Li D, Shi G, Kong W, et al. A leaf segmentation and phenotypic feature extraction framework for multiview stereo plant point clouds. IEEE J Sel Top Appl Earth Obs Remote Sens. 2020;13:2321–36.

- 31. Paulus S, Dupuis J, Mahlein AK, et al. Surface feature based classification of plant organs from 3D laserscanned point clouds for plant phenotyping. BMC Bioinformatics. 2013;14(1):12.

- 32. Wahabzada M, Paulus S, Kersting K, et al. Automated interpretation of 3D laserscanned point clouds for plant organ segmentation. BMC Bioinformatics. 2015;16(1):11.

- 33. Li YY, Fan XC, Mitra NJ, et al. Analyzing growing plants from 4D point cloud data. ACM Trans Graph. 2013;32(6), doi: 10.1145/2508363.2508368.

- 34. Xiang LR, Bao Y, Tang L, et al. Automated morphological traits extraction for sorghum plants via 3D point cloud data analysis. Comput Electron Agric. 2019;162:951–61.

- 35. Wu S, Wen W, Xiao B, et al. An accurate skeleton extraction approach from 3D point clouds of maize plants. Front Plant Sci. 2019;10:248.

- 36. Jin SC, Su YJ, Wu FF, et al. Stem-leaf segmentation and phenotypic trait extraction of individual maize using terrestrial LiDAR data. IEEE Trans Geosci Remote Sens. 2019;57(3):1336–46.

- 37. Jin S, Su Y, Gao S, et al. Separating the structural components of maize for field phenotyping using terrestrial LiDAR data and deep convolutional neural networks. IEEE Trans Geosci Remote Sens. 2019;58(4):2644–58.

- 38. Griffiths D, Boehm J. A review on deep learning techniques for 3D sensed data classification. Remote Sens. 2019;11(12):1499.

- 39. Engelmann F, Bokeloh M, Fathi A, et al. 3D-MPA: multi-proposal aggregation for 3D semantic instance segmentation. In: 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 2020:9028–37.

- 40. Russell BC, Torralba A, Murphy KP, et al. LabelMe: a database and web-based tool for image annotation. Int J Comput Vision. 2008;77(1-3):157–73.

- 41. Chang AX, Funkhouser T, Guibas L, et al. ShapeNet: an information-rich 3D model repository. arXiv:1512.03012. 2015.

- 42. Hackel T, Savinov N, Ladicky L, et al. Semantic3D.net: a new large-scale point cloud classification benchmark. ISPRS Ann Photogramm Remote Sens Spatial Inf Sci. 2017, doi: 10.5194/isprs-annals-IV-1-W1-91-2017.

- 43. Behley J, Garbade M, Milioto A, et al. SemanticKITTI: a dataset for semantic scene understanding of LiDAR sequences. In: 2019 IEEE/CVF International Conference on Computer Vision (ICCV). 2019:9296–306.

- 44. Ku T, Veltkamp RC, Boom B, et al. SHREC 2020: 3D point cloud semantic segmentation for street scenes. Comput Graph. 2020;93:13–24.

- 45. Dutagaci H, Rasti P, Galopin G, et al. ROSE-X: an annotated data set for evaluation of 3D plant organ segmentation methods. Plant Methods. 2020;16(1):14.

- 46. Gené-Mola J, Sanz-Cortiella R, Rosell-Polo JR, et al. Fuji-SfM dataset: a collection of annotated images and point clouds for Fuji apple detection and location using structure-from-motion photogrammetry. Data Brief. 2020;30:105591.

- 47. Abendroth LJ, Elmore RW, Boyer MJ, et al. Corn Growth and Development. Report No. PMR 1009. Ames, IA: Iowa State University Extension; 2011.

- 48. Cuturi M. Sinkhorn distances: lightspeed computation of optimal transportation distances. Adv Neural Inf Processing Syst. 2013;26:2292–300.

- 49. Sinkhorn R. Diagonal equivalence to matrices with prescribed row and column sums. Am Math Monthly. 1967;74(4):402–5.

- 50. Boykov Y, Kolmogorov V. An experimental comparison of min-cut/max-flow algorithms for energy minimization in vision. IEEE Trans Pattern Anal Mach Intell. 2004;26(9):1124–37.

- 51. Rusu RB, Cousins S. 3D is here: Point Cloud Library (PCL). In: IEEE International Conference on Robotics and Automation. 2011, doi: 10.1109/ICRA.2011.5980567.

- 52. Qi CR, Su H, Mo K, et al. PointNet: deep learning on point sets for 3D classification and segmentation. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2017:77–85.

- 53. Shi WN, van de Zedde R, Jiang HY, et al. Plant-part segmentation using deep learning and multi-view vision. Biosystems Eng. 2019;187:81–95.

- 54. Artzet S, Chen T-W, Chopard J, et al. Phenomenal: an automatic open source library for 3D shoot architecture reconstruction and analysis for image-based plant phenotyping. bioRxiv. 2019:805739.

- 55. Liu Z, Tang H, Lin Y, et al. Point-voxel CNN for efficient 3D deep learning. In: 33rd Conference on Neural Information Processing Systems. Vancouver, BC, Canada: Curran Associates; 2019:965–75.

- 56. Miao T, Wen W, Li Y, et al. Supporting data for “Label3DMaize: toolkit for 3D point cloud data annotation of maize shoots.” GigaScience Database. 2021. doi: 10.5524/100884.

- 57. Label3DMaize. https://github.com/syau-miao/Label3DMaize.git. Accessed 8 April 2021.

Associated Data


Data Citations

- Miao T, Wen W, Li Y, et al. Supporting data for “Label3DMaize: toolkit for 3D point cloud data annotation of maize shoots.” GigaScience Database. 2021. doi: 10.5524/100884.

Supplementary Materials

Chris Armit -- 12/18/2020 Reviewed

Dong Chen -- 12/30/2020 Reviewed

Xiaopeng Zhang -- 12/31/2020 Reviewed

Luis Diaz-Garcia -- 1/4/2021 Reviewed

Luis Diaz-Garcia -- 3/24/2021 Reviewed

Data Availability Statement

The data underlying this article and snapshots of our code are available in the GigaDB repository [56].