Summary

Thanks to the increasing availability of genomics and other biomedical data, many machine learning algorithms have been proposed for a wide range of therapeutic discovery and development tasks. In this survey, we review the literature on machine learning applications for genomics through the lens of therapeutic development. We investigate the interplay among genomics, compounds, proteins, electronic health records, cellular images, and clinical texts. We identify 22 applications of machine learning in genomics that span the whole therapeutics pipeline, from discovering novel targets, personalizing medicine, and developing gene-editing tools to facilitating clinical trials and post-market studies. We also pinpoint seven key challenges in this field with potential for expansion and impact. This survey examines recent research at the intersection of machine learning, genomics, and therapeutic development.

Keywords: machine learning, therapeutics discovery and development, genomics

The bigger picture

The genome contains the instructions that shape the function and structure of organisms. Recent high-throughput techniques have made it possible to generate massive amounts of genomics data. However, there are numerous roadblocks on the way to turning genomic data into tangible therapeutics. We observe that genomics data alone are insufficient for therapeutic development; we need to investigate how genomics data interact with other types of data such as compounds, proteins, electronic health records, images, and texts. Machine learning techniques can identify patterns and extract insights from these complex data. In this review, we survey a wide range of genomics applications of machine learning that can enable faster and more efficacious therapeutic development. Challenges remain, including technical problems, such as learning across different contexts under low-resource constraints, and practical issues, such as mistrust of models, privacy, and fairness.


Introduction

Genomics studies the function, structure, evolution, mapping, and editing of genomes.1 It allows us to understand biological phenomena, such as the roles that the genome plays in diseases. A deep understanding of genomics has led to a vast array of successful therapeutics that treat a wide range of diseases, both complex and rare.2,3 It also allows us to prescribe more precise treatments4 or pursue more effective therapeutic strategies such as genome editing.5

Recent advances in high-throughput technologies have led to an outpouring of large-scale genomics data.6,7 However, the bottlenecks along the path from genomics data to tangible therapeutics are numerous. For instance, diseases are driven by multifaceted mechanisms, so pinpointing the right disease target requires knowledge of the entire suite of biological processes, including gene regulation by non-coding regions,8 DNA methylation status,9 and RNA splicing;10 personalized treatment requires accurate characterization of disease subtypes and of the compound's sensitivity to various genomics profiles;4 gene-editing tools require an understanding of the interplay between the guide RNA and the whole genome to avoid off-target effects;11 and monitoring therapeutic efficacy and safety after approval requires mining gene-drug-disease relations in electronic health records (EHRs) and the literature.12 We argue that genomics data alone are insufficient to ensure clinical implementation; this requires integrating a diverse set of data types, from compounds, proteins, cellular images, and EHRs to the scientific literature. The heterogeneity and scale of these data enable the application of sophisticated computational methods such as machine learning (ML).

Over the years, ML has profoundly impacted many application domains, such as computer vision,13 natural language processing,14 and complex systems.15 ML has shifted computational modeling from expert-curated features to automated feature construction. It can learn useful and novel patterns from data, often ones not found by experts, to improve prediction performance on various tasks. This ability is much needed in genomics and therapeutics, as our understanding of human biology is vastly incomplete. Uncovering these patterns can also lead to the discovery of novel biological insights. In addition, therapeutic discovery often relies on large-scale, resource-intensive experiments whose cost limits their scope, so many potent candidates are missed. Accurate ML predictions can drastically scale up and prioritize these experiments, helping to identify or generate novel therapeutic candidates.

Interest in ML for genomics through the lens of therapeutic development has also grown for two reasons. First, for pharmaceutical and biomedical researchers, ML models have passed proof-of-concept stages, often yielding astounding performance on previously infeasible tasks.16,17 Second, for ML scientists, large, complex data and difficult, impactful problems present exciting opportunities for innovation.

This survey summarizes recent ML applications related to genomics in therapeutic development and describes the associated challenges and opportunities. We broadly define genomics as the functional aspects of genes, including which genes are present, how genes are expressed in different contexts, how genes relate to one another, and so forth. Several reviews of ML for genomics have been published.18, 19, 20 Most of these previous works focused on genomics for biological applications, whereas we study it in the context of bringing genomics discoveries to therapeutic implementation. We identify 22 “ML for therapeutics” tasks with genomics data spanning the entire therapeutic pipeline, a scope not covered in previous surveys. Moreover, most previous reviews focused on DNA sequences, whereas we go beyond DNA sequences and study a wide range of interactions among DNA sequences, compounds, proteins, multi-omics, and EHR data.

In this survey, we organize ML applications into four stages of the therapeutic pipeline: (1) target discovery: basic biomedical research to discover novel disease targets that enable therapeutics; (2) therapeutic discovery: large-scale screening designed to identify potent and safe therapeutics; (3) clinical study: evaluating the efficacy and safety of therapeutics in vitro, in vivo, and through clinical trials; and (4) post-market study: monitoring the safety and efficacy of marketed therapeutics and identifying novel indications. We also formulate these tasks and data modalities in ML terms, which can help ML researchers with limited domain background understand them. In summary, this survey presents a unique perspective on the intersection of ML, genomics, and therapeutic development.

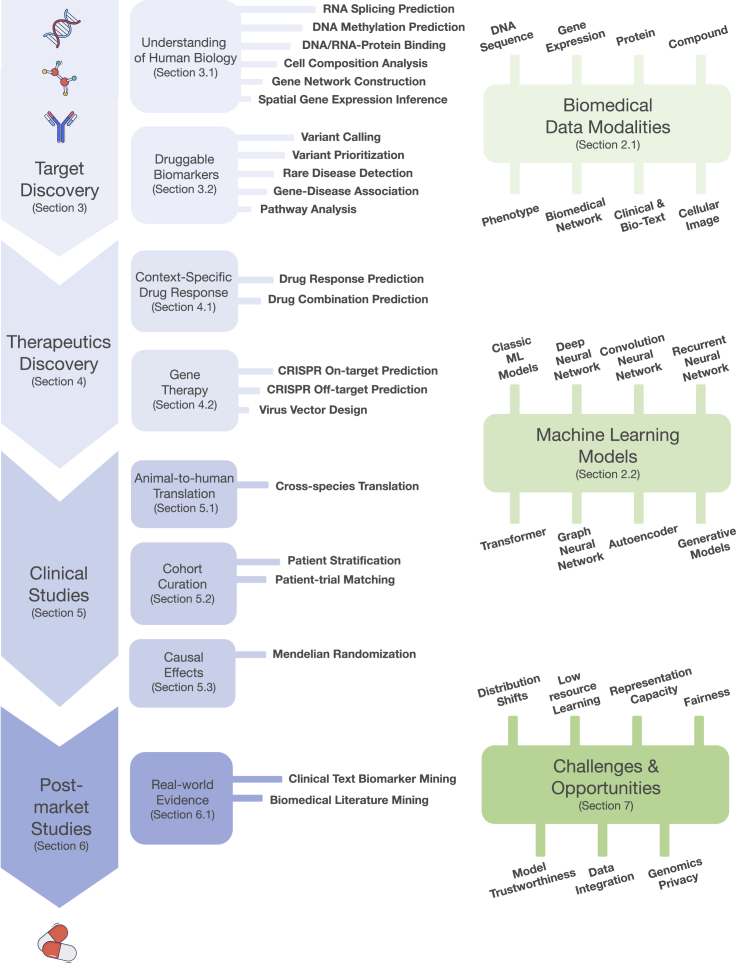

The survey is organized as follows (Figure 1). In the next section, we provide a brief primer on genomics-related data and review popular ML models for each data type. In the subsequent three sections, we discuss ML applications in genomics across the therapeutics development pipeline. Each section describes a phase of the therapeutics pipeline and covers several ML applications along with their models and formulations. In the penultimate section, we identify seven open challenges that present numerous opportunities for both ML model development and novel applications. We provide a GitHub repository (https://github.com/kexinhuang12345/ml-genomics-resources) that curates the resources discussed in this survey.

Figure 1.

Organization and coverage of this survey

Our survey covers a wide range of important ML applications in genomics across the therapeutics pipelines. In addition, we provide a primer on biomedical data modalities and machine learning models. Finally, we identify seven challenges filled with opportunities.

A primer on genomics data and machine learning models

With advances in high-throughput technologies and data management systems, we now have vast and heterogeneous datasets in biomedicine. This section introduces the basic genomics-related data types and their ML representations and provides a primer on popular ML methods applied to these data. First, we discuss data representations in genomics-related tasks. In Table 1, we provide pointers to high-quality datasets that cover the discussed data representations.

Table 1.

High-quality machine learning datasets references and pointers for genomics therapeutics tasks

Genomics-related biomedical data

DNAs

The human genome can be thought of as the instructions for building functional individuals. DNA sequences encode these instructions. Just as a computer program is ultimately built from 0/1 bits, DNA sequences are built from basic units called nucleotides (A, C, G, and T). Given a list of nucleotides, a cell can build a diverse range of functional entities (programs). The human genome has approximately 3 billion base pairs, and more than 99.9% of them are identical between individuals. If a subset of the population carries a different nucleotide at a genome position than the majority, this position is called a variant. Such a single-nucleotide variant is often called a single-nucleotide polymorphism (SNP). While most variants are not harmful (they are said to be functionally neutral), many are potential drivers of phenotypes, including diseases.

Machine learning representations

A DNA sequence is a list of nucleotide tokens (A, C, G, T) of length $N$. It is typically represented in one of three ways: (1) a string $\mathbf{x} \in \{\mathrm{A}, \mathrm{C}, \mathrm{G}, \mathrm{T}\}^N$; (2) a two-dimensional matrix $\mathbf{X} \in \{0, 1\}^{4 \times N}$, where the $i$th column is a one-hot vector of length 4 encoding the $i$th nucleotide, with A, C, T, and G encoded as [1,0,0,0], [0,1,0,0], [0,0,1,0], and [0,0,0,1], respectively; or (3) a binary vector $\mathbf{v} \in \{0, 1\}^N$, where $v_i = 0$ means position $i$ is not a variant and $v_i = 1$ means it is a variant. An example is depicted in Figure 2A.
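The three DNA representations above can be sketched in Python with NumPy. This is a minimal illustration, not a reference implementation: the helper names `one_hot_dna` and `variant_vector` are hypothetical, and marking variants by comparison against a single reference sequence is an assumption that stands in for "differs from the majority of the population."

```python
import numpy as np

# Row order follows the convention in the text: A, C, T, G.
NUCLEOTIDE_INDEX = {"A": 0, "C": 1, "T": 2, "G": 3}


def one_hot_dna(seq: str) -> np.ndarray:
    """Return a 4 x N one-hot matrix whose ith column encodes the ith nucleotide."""
    x = np.zeros((4, len(seq)), dtype=np.int8)
    for i, base in enumerate(seq.upper()):
        x[NUCLEOTIDE_INDEX[base], i] = 1
    return x


def variant_vector(seq: str, reference: str) -> np.ndarray:
    """Return a length-N binary vector: 1 where seq differs from the reference, 0 otherwise."""
    if len(seq) != len(reference):
        raise ValueError("sequence and reference must have the same length")
    return np.array([int(a != b) for a, b in zip(seq, reference)], dtype=np.int8)


seq = "ACGT"                        # (1) the string representation
X = one_hot_dna(seq)                # (2) the 4 x N one-hot matrix
v = variant_vector("ACGA", "ACGT")  # (3) hypothetical sample vs. reference; last position differs
print(X)
print(v)
```

Each column of `X` sums to 1, and `v` is `[0 0 0 1]` because only the last position differs from the assumed reference.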

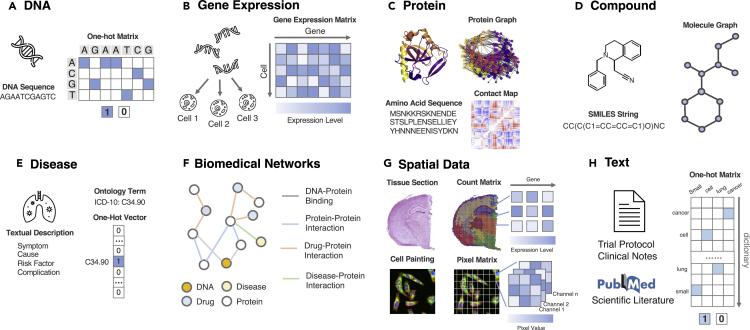

Figure 2.

Therapeutics data modalities and their machine learning representation

Detailed descriptions of each modality can be found in “genomics-related biomedical data.”

(A) DNA sequences can be represented as a matrix where each position is a one-hot vector corresponding to A, C, G, T.

(B) Gene expression is represented as a real-valued matrix, where each entry is the expression level of a gene in a context such as a cell.

(C) Proteins can be represented in amino acid strings, a protein graph, and a contact map where each entry is the connection between two amino acids.

(D) Compounds can be represented as a molecular graph or a string of chemical tokens, which are a depth-first traversal of the graph.

(E) Diseases are usually described by textual descriptions and also symbols in the disease ontology.

(F) Networks connect various biomedical entities with diverse relations. They can be represented as a heterogeneous graph.

(G) Spatial data are usually depicted as a 3D array, where two dimensions describe the physical position of the entity and the third dimension corresponds to colors (in cell painting) or genes (in spatial transcriptomics).

(H) Texts are typically represented as a one-hot matrix where each token corresponds to its index in a static dictionary.

The protein image is adapted from Gaudelet et al.;21 the spatial transcriptomics image is adapted from 10x Genomics; the cell painting image is from Charles River Laboratories.

Gene expression/transcripts

In a cell, the DNA sequence of each gene is transcribed into messenger RNA (mRNA) transcripts. While most cells share the same genome, individual genes are expressed at very different levels across cells and tissue types and under different interventions and environments. These expression levels can be measured by counting mRNA transcripts. Given a disease, we can compare gene expression in people with the disease with that of people in healthy cohorts (without the disease of interest) and associate various genes with the underlying biological processes of the disease. With the advance of single-cell RNA sequencing (scRNA-seq) technology, we can now obtain gene expression for the different types of cells that make up a tissue. The availability of transcripts from tens of thousands of cells creates new opportunities for understanding interactions among the behaviors of different cell types in a cell population.

Machine learning representations

Gene expressions/transcripts are counts of mRNA. For a scRNA-seq experiment with $M$ cells/individuals and $N$ genes, we obtain a gene expression matrix $\mathbf{X} \in \mathbb{R}^{M \times N}$, where each entry $X_{ij}$ corresponds to the transcript count of gene $j$ for cell/individual $i$. An example is depicted in Figure 2B.
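The disease-versus-healthy comparison described earlier can be sketched on a small $M \times N$ count matrix. The example below is illustrative only: library-size normalization and a pseudocount log2 fold change are common conventions chosen here as assumptions, the counts are made up, and the helper names are hypothetical. A real differential-expression analysis would also apply a statistical test.

```python
import numpy as np


def normalize_counts(counts: np.ndarray, scale: float = 1e4) -> np.ndarray:
    """Rescale each row (cell/individual) so its counts sum to `scale`."""
    totals = counts.sum(axis=1, keepdims=True).astype(float)
    totals[totals == 0] = 1.0  # leave empty rows at zero instead of dividing by zero
    return counts / totals * scale


def log2_fold_change(norm: np.ndarray, is_disease: np.ndarray, pseudocount: float = 1.0) -> np.ndarray:
    """Per-gene log2 ratio of mean normalized expression, disease rows vs. healthy rows."""
    mean_disease = norm[is_disease].mean(axis=0)
    mean_healthy = norm[~is_disease].mean(axis=0)
    return np.log2((mean_disease + pseudocount) / (mean_healthy + pseudocount))


# Hypothetical M = 4 individuals (rows) x N = 3 genes (columns) count matrix.
counts = np.array([
    [10, 0, 90],
    [20, 0, 80],
    [10, 50, 40],
    [0, 60, 40],
])
is_disease = np.array([False, False, True, True])

norm = normalize_counts(counts, scale=100)
lfc = log2_fold_change(norm, is_disease)
print(np.round(lfc, 2))
```

In this toy matrix, gene 2 (the second column) is strongly up in the disease rows and receives a positive fold change, while genes 1 and 3 are lower in disease.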

Proteins

Most of the genes encoded in the DNA provide instructions for building a diverse set of proteins, which perform a vast array of functions. For example, transcription factors are proteins that bind to DNA sequences and regulate gene expression under different conditions. A protein is a macromolecule made up of a sequence of amino acids, or residues, drawn from 20 standard types; each amino acid is a simple compound. Governed by this sequence, the protein naturally folds into a three-dimensional (3D) structure, which determines its function. As the functional units of the cell, proteins constitute a large class of therapeutic targets. Many drugs are designed to inhibit or promote the activity of proteins in disease pathways. Proteins such as antibodies and peptides can also be used as therapeutics.

Machine learning representations

Proteins can take diverse forms. A protein with $N$ amino acids can be represented in the following formats: (1) a string of $N$ amino acid tokens; (2) a contact map matrix $\mathbf{C} \in \mathbb{R}^{N \times N}$, where $C_{ij}$ is the physical distance between the $i$th and $j$th amino acids; (3) a protein graph $G$ whose nodes correspond to amino acids and are connected according to rules such as a physical distance threshold or $k$-nearest neighbors; or (4) a protein 3D grid, a discretized 3D tensor in which each grid point records the amino acids at that location in 3D space. An example is depicted in Figure 2C.
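The contact map and the two graph-construction rules (distance threshold and $k$-nearest neighbors) can be sketched from residue coordinates. This is a minimal sketch under stated assumptions: the coordinates are hypothetical C-alpha positions in angstroms, the 8 Å cutoff is a commonly used choice rather than one prescribed here, and the helper names are illustrative.

```python
import numpy as np


def distance_map(coords: np.ndarray) -> np.ndarray:
    """N x N matrix of Euclidean distances between residue coordinates (N x 3)."""
    diff = coords[:, None, :] - coords[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))


def threshold_graph(coords: np.ndarray, cutoff: float = 8.0) -> np.ndarray:
    """Adjacency matrix connecting residues closer than `cutoff` (no self-loops)."""
    adj = (distance_map(coords) < cutoff).astype(np.int8)
    np.fill_diagonal(adj, 0)
    return adj


def knn_graph(coords: np.ndarray, k: int = 2) -> np.ndarray:
    """Symmetric adjacency matrix linking each residue to its k nearest residues."""
    d = distance_map(coords)
    np.fill_diagonal(d, np.inf)  # exclude each residue from its own neighbor list
    adj = np.zeros(d.shape, dtype=np.int8)
    for i, neighbors in enumerate(np.argsort(d, axis=1)[:, :k]):
        adj[i, neighbors] = 1
    return np.maximum(adj, adj.T)  # symmetrize: an edge exists if either end selected it


# Hypothetical C-alpha coordinates of four residues placed on a straight line.
coords = np.array([[0.0, 0, 0], [3.8, 0, 0], [7.6, 0, 0], [11.4, 0, 0]])
C = distance_map(coords)              # contact/distance map
G_threshold = threshold_graph(coords)
G_knn = knn_graph(coords, k=1)
```

With the 8 Å cutoff, residues 1 and 4 (11.4 Å apart) are not connected, while every other pair is.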

Compounds

Compounds are molecules that are composed of atoms connected by chemical bonds. They can interact with proteins and drive important biological processes. In their natural form, compounds have a 3D structure. Small-molecule compounds are the major class of therapeutics.

Machine learning representations

A compound is usually represented as (1) a SMILES string, which lists the atoms and bonds in a depth-first traversal order of the molecular graph, or (2) a molecular graph G in which each node is an atom and each edge is a bond. An example is illustrated in Figure 2D.
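To make the link between the molecular graph and its SMILES string concrete, here is a toy depth-first writer. It is a deliberately simplified sketch, assuming an acyclic heavy-atom graph with only single bonds and implicit hydrogens; it ignores rings, bond orders, aromaticity, charges, and canonicalization, which real cheminformatics toolkits such as RDKit handle. The function name `dfs_smiles` is hypothetical.

```python
from typing import Dict, List, Optional


def dfs_smiles(atoms: List[str], bonds: Dict[int, List[int]],
               node: int = 0, parent: Optional[int] = None) -> str:
    """Write an acyclic, single-bonded molecular graph as a SMILES-like string via depth-first search."""
    children = [n for n in sorted(bonds[node]) if n != parent]
    out = atoms[node]
    # Every child except the last becomes a parenthesized branch.
    for child in children[:-1]:
        out += "(" + dfs_smiles(atoms, bonds, child, node) + ")"
    # The last child continues the main chain.
    if children:
        out += dfs_smiles(atoms, bonds, children[-1], node)
    return out


# Heavy-atom graph of isopropanol (hydrogens implicit): C0-C1, C1-C2, C1-O3.
atoms = ["C", "C", "C", "O"]
bonds = {0: [1], 1: [0, 2, 3], 2: [1], 3: [1]}
smiles = dfs_smiles(atoms, bonds)
print(smiles)  # CC(C)O
```

Starting the traversal at a different atom yields a different but equivalent string, which is why canonical SMILES algorithms fix the traversal order.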

Diseases

A disease is an abnormal condition that affects the function and/or modifies the structure of an organism. It arises from factors such as genotype, environment, and economic status, through intricate mechanisms driven by biological processes. Diseases are observable and can be described by their symptoms.

Machine learning representations

Diseases are represented by (1) symbols in the disease ontology such as ICD-10 codes or (2) text description of the specific disease. An example is depicted in Figure 2E.

Biomedical networks

Biological processes are not driven by individual units but by numerous interactions among various types of entities, as in cell-signaling pathways, protein-protein interactions, and gene regulation. These interactions can be characterized by biomedical networks, which provide a systems view of biological phenomena. In the context of diseases, a network can also contain interactions among phenotypes or disease mechanisms; such networks are also referred to as disease maps.

Machine learning representations

Biomedical networks are represented as graphs, where each node is a biomedical entity and an edge corresponds to relations among them. An example is depicted in Figure 2F.
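A heterogeneous biomedical network is often stored as (head, relation, tail) triples, which can be converted into one adjacency matrix per relation type. The sketch below assumes this triple format; the entity and relation names are hypothetical placeholders, not drawn from any specific database.

```python
import numpy as np

# Hypothetical typed edges of a small drug-gene-disease network.
triples = [
    ("drugA", "targets", "geneX"),
    ("geneX", "associated_with", "disease1"),
    ("geneY", "interacts_with", "geneX"),
]


def build_relation_adjacency(triples):
    """Index all entities and build one directed adjacency matrix per relation type."""
    entities = sorted({h for h, _, _ in triples} | {t for _, _, t in triples})
    index = {e: i for i, e in enumerate(entities)}
    relations = sorted({r for _, r, _ in triples})
    n = len(entities)
    adj = {r: np.zeros((n, n), dtype=np.int8) for r in relations}
    for h, r, t in triples:
        adj[r][index[h], index[t]] = 1
    return entities, adj


entities, adj = build_relation_adjacency(triples)
```

Keeping relations in separate matrices preserves edge types, which relation-aware graph models can exploit.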

Spatial data

With advances in microscopy and fluorescent probes, we can visualize cell dynamics through cellular images. Imaging cells under various conditions, such as drug treatment, allows us to identify the effects of those conditions at the cellular level. Furthermore, spatial genomic sequencing techniques now allow us to visualize and understand gene expression for cellular processes in the tissue environment.

Machine learning representations

A cellular image or spatial transcriptomics sample can be represented as a matrix of size $M \times N$, where $M$ and $N$ are the width and height of the data (the number of pixels or measurement positions along each dimension), and each entry corresponds to a pixel of the image or, for spatial transcriptomics, a transcript count. Additional channels (each a separate $M \times N$ matrix) encode information such as colors or, for spatial transcriptomics, different genes. After aggregation, the spatial data can be represented as a tensor of size $M \times N \times H$, where $H$ is the number of channels. An example is illustrated in Figure 2G.
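The channel aggregation can be sketched with NumPy by stacking per-channel $M \times N$ matrices into an $M \times N \times H$ tensor. The dimensions and the Poisson-distributed values below are assumptions used only to produce count-like placeholder data.

```python
import numpy as np

M, N, H = 4, 5, 3  # hypothetical width, height, and number of channels
rng = np.random.default_rng(0)

# One M x N matrix per channel, e.g. one stain color (cell painting) or one gene (spatial transcriptomics).
channels = [rng.poisson(lam=3.0, size=(M, N)) for _ in range(H)]

# Stack the channels along a new last axis to obtain an M x N x H tensor.
tensor = np.stack(channels, axis=-1)

# Example per-channel summary: total signal (or total transcript count) per channel.
per_channel_total = tensor.sum(axis=(0, 1))
```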

Texts

One common categorization of data is structured versus unstructured. Structured data follow a rigid form and are easily searchable, whereas unstructured data, such as free text, have no predefined format. Although unstructured data are more difficult to process, they often contain crucial information that does not exist in structured data. A first important example of text in therapeutics development is clinical trial protocols, whose texts describe the inclusion and exclusion criteria for trial participation, often as a function of genomic markers. For example, in a trial studying gefitinib for EGFR-mutant non-small cell lung cancer, one eligibility criterion would be “An EGFR sensitizing mutation must be detected in tumor tissue.”22 A second type of clinical text is the clinical notes documented in EHRs. While most EHR data are structured, the unstructured clinical notes contain valuable information that supports applications such as post-market research on treatments.

Machine learning representations

Clinical texts are similar to texts in common natural language processing tasks. The standard way to represent them is a matrix $\mathbf{X} \in \{0, 1\}^{M \times N}$, where $M$ is the vocabulary size and $N$ is the number of tokens in the text. Each column is the one-hot encoding of the corresponding token. An example is depicted in Figure 2H.
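The $M \times N$ one-hot text matrix can be built from a fixed vocabulary, here using the eligibility criterion quoted above. This is a toy sketch: the lowercase whitespace tokenization, the tiny vocabulary, and the `<unk>` entry for out-of-vocabulary words are assumptions; practical systems use learned subword tokenizers.

```python
import numpy as np


def one_hot_text(tokens, vocab):
    """Return an M x N matrix (M = vocabulary size, N = number of tokens); column j one-hot encodes token j."""
    index = {w: i for i, w in enumerate(vocab)}
    x = np.zeros((len(vocab), len(tokens)), dtype=np.int8)
    for j, tok in enumerate(tokens):
        x[index.get(tok, index["<unk>"]), j] = 1  # unknown words map to the <unk> row
    return x


vocab = ["<unk>", "an", "egfr", "mutation", "must", "be", "detected"]
tokens = "an egfr sensitizing mutation must be detected".split()
X = one_hot_text(tokens, vocab)
```

Here "sensitizing" is not in the toy vocabulary, so its column activates the `<unk>` row.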

Machine learning methods for biomedical data

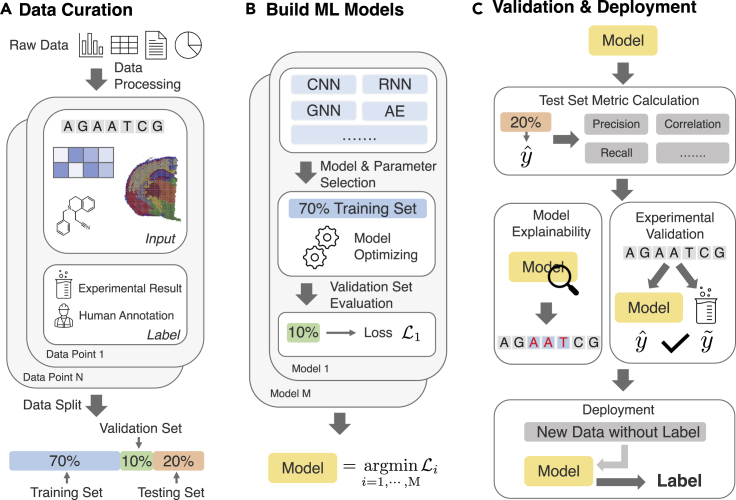

ML models learn patterns from data and leverage these patterns to make accurate predictions. Numerous ML models have been proposed to tackle different challenges. This section briefly introduces the main mechanisms of popular ML models used to analyze genomic data. Figure 3 describes a typical ML for genomics data workflow. We also provide a list of public benchmarks or competitions that compare various discussed ML methods in Table 2.

Figure 3.

Machine learning for genomics workflow

(A) The first step is to curate a machine learning dataset. Raw data are extracted from databases of various sources and processed into data points. Each data point consists of an input (one or more biomedical entities) and a label from annotation or experimental results. These data points constitute a dataset, which is split into three sets. The training set is used by the ML model to learn useful and generalizable patterns. The validation set is used for model selection and parameter tuning. The test set is used to evaluate the final model. The split can be constructed to reflect real-world challenges, for example, by placing entities not seen during training in the test set.

(B) Various ML models can be trained on the training set and tuned based on a quantitative metric on the validation set, such as a loss that measures how well the model predicts the output given the input. Lastly, we select the model with the lowest validation loss.

(C) The selected model then makes predictions on the test set, where various evaluation metrics measure how well the model performs on new, unseen data points. Models can also be probed with explainability methods to identify biological insights they have captured. Experimental validation is also common, to confirm that the model's predictions agree with wet-lab experimental results. Finally, the model can be deployed to make predictions on new, unlabeled data; these predictions then serve as proxies for labels in downstream tasks of interest.
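The split, select, and evaluate steps of this workflow can be sketched end to end. The example is an assumption-laden illustration: the data are synthetic, ridge regression stands in for "various ML models," its regularization strength is the only hyperparameter tuned, and the 70/15/15 random split is one common choice rather than a recommendation.

```python
import numpy as np

rng = np.random.default_rng(42)
n, d = 300, 10
X = rng.normal(size=(n, d))                       # synthetic input features
true_w = rng.normal(size=d)
y = X @ true_w + rng.normal(scale=0.5, size=n)    # synthetic continuous labels

# (A) Random 70/15/15 split of data-point indices into train/validation/test.
perm = rng.permutation(n)
train, val, test = perm[:210], perm[210:255], perm[255:]


def fit_ridge(X, y, lam):
    """Closed-form ridge regression weights for regularization strength lam."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)


def mse(X, y, w):
    return float(np.mean((X @ w - y) ** 2))


# (B) Train one model per candidate hyperparameter and keep the lowest validation loss.
candidates = [0.01, 0.1, 1.0, 10.0, 100.0]
val_losses = {lam: mse(X[val], y[val], fit_ridge(X[train], y[train], lam)) for lam in candidates}
best_lam = min(val_losses, key=val_losses.get)

# (C) Evaluate the selected model once on the held-out test set.
best_w = fit_ridge(X[train], y[train], best_lam)
test_loss = mse(X[test], y[test], best_w)
```

A split reflecting real-world challenges would replace the random permutation with, for example, a grouping of indices by compound scaffold or cell type.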

Table 2.

Public benchmarks and competitions of machine learning for therapeutics with genomics data

| Name | Focus | Link |
|---|---|---|
| MoleculeNet | molecule learning | http://moleculenet.ai/ |
| Therapeutics Data Commons | general therapeutics | https://tdcommons.ai/benchmark/overview/ |
| DREAM | general biomedicine | https://dreamchallenges.org/ |
| SBV-IMPROVER | human-mouse translation | https://www.intervals.science/resources/sbv-improver/stc |
| TAPE | protein engineering | https://github.com/songlab-cal/tape |
| CASP | protein structure | https://predictioncenter.org/ |
| GuacaMol | molecule generation | https://www.benevolent.com/guacamol |
| Open Problems | single-cell analysis | https://openproblems.bio/ |
| RxRx | cell painting | https://www.rxrx.ai/ |
| Kaggle-MoA | mechanism of action | https://www.kaggle.com/c/lish-moa |
| Kaggle-HPA | single-cell classification | https://www.kaggle.com/c/hpa-single-cell-image-classification |

Preliminary

A typical ML workflow for genomics is as follows. Given a set of data points, each consisting of input features and a ground-truth biological label, an ML model aims to learn a mapping from inputs to labels based on the observed data points, which are also called training data. This setting of learning from known labels is called supervised learning. The number of training data points is called the sample size. ML models are data-hungry and usually need a large sample size to perform well.

The input features can be DNA sequences, compound graphs, or clinical texts, depending on the task at hand. The ground-truth label is usually obtained via biological experiments and defines the target that an ML model aims to predict. Thus, if the ground-truth labels contain errors (e.g., human labeling errors or wet-lab experimental errors), the ML model could optimize over the wrong signals, highlighting the necessity of high-quality data curation and control. The inputs can also have quality issues, such as shifts in cell images, batch effects in gene expression, and measurement errors. Ground-truth labels take various forms. If the labels are continuous (e.g., binding scores), the learning problem is a regression problem; if the labels are discrete (e.g., the occurrence of an interaction), it is a classification problem. Models focusing on predicting the labels of the data are called discriminative models. Besides making predictions, ML models can also generate new data points by modeling the statistical distribution of data samples; such models are called generative models.

When labels are not available, an ML model can still identify the underlying patterns within unlabeled data points. This problem setting is called unsupervised learning, in which models discover patterns or clusters (e.g., cell types) by modeling the relations among data points. Self-supervised learning applies supervised learning methods to unlabeled data by deriving labels from the data themselves (e.g., masking out a motif and using the surrounding context to predict it).14,23
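The masking idea behind self-supervised learning can be sketched as a function that turns one unlabeled DNA sequence into an (input, label) training pair. This is a minimal sketch: the span length, the random start position, and the use of "N" (the IUPAC code for an unspecified nucleotide) as the mask symbol are assumptions, and `make_masked_example` is a hypothetical helper.

```python
import random


def make_masked_example(seq: str, span: int = 3, mask_token: str = "N", seed: int = 0):
    """Hide a random span of `seq`; the hidden span becomes the training label."""
    rng = random.Random(seed)
    start = rng.randrange(0, len(seq) - span + 1)
    masked = seq[:start] + mask_token * span + seq[start + span:]
    target = seq[start:start + span]
    return masked, target, start


seq = "ACGTACGGTCA"
masked, target, start = make_masked_example(seq, span=3)
# A model would receive `masked` as input and be trained to predict `target`.
```

No human annotation is needed: the label is simply the part of the sequence that was hidden.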

In many biological settings, ground-truth labels are scarce, in which case few-shot learning can be considered. Few-shot learning assumes that only a few labeled data points are available, alongside many unlabeled ones. Another strategy is meta-learning, which aims to learn from a set of related tasks so as to learn quickly and accurately on an unseen task.

If a model integrates multiple data modalities (e.g., DNA sequence plus compound structure), it is called multi-modal learning. When a model predicts multiple labels (e.g., multiple target endpoints), it is called multi-task learning.

In biomedical ML problems, high-quality data curation is a key step. Biomedical data are usually generated from wet-lab experiments and are thus prone to numerous experimental glitches. A dataset often combines results from different biotechnology platforms, batches, time points, and conditions. Thus, accurate and careful pre-processing and data fusion are tremendously important; otherwise, the ML model may learn from biased or erroneous data. Numerous data-processing protocols have been formulated, such as batch-effect correction,24 imputation,25 and dataset integration.26 Efforts to curate ML-ready therapeutics datasets have also been initiated.27

In this survey, we argue that integrating genomics data with other biomedical entity types is the key to transforming data into therapeutic products. This integration also extends to combining different contexts, such as the cellular, tissue, and organism levels, across temporal scales. Data integration in ML is a well-studied subject, with three main categories.28 The first is at the dataset level, where datasets are fused and aligned to form a combined dataset that is then fed into the ML model. The second is in the latent space: a separate ML model encodes each data type or dataset, and the resulting representations are combined in the ML latent space. The third is integration in the output space, where model predictions are aggregated through ensembles. For each task covered in this survey, we specify the data-integration strategy.
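The three integration categories differ only in where the modalities meet, which can be sketched with two aligned feature matrices. In this illustration the feature sizes are hypothetical, and random weights stand in for trained model parameters, so the predictions are meaningless; only the data flow is the point. The three strategies are often called early, intermediate, and late fusion.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 5
dna_feats = rng.normal(size=(n, 8))   # hypothetical per-sample DNA-derived features
expr_feats = rng.normal(size=(n, 6))  # hypothetical per-sample expression features


def sigmoid(a: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-a))


# 1) Dataset-level integration: align samples, concatenate features, then apply one model.
combined = np.concatenate([dna_feats, expr_feats], axis=1)   # (n, 14)
p_dataset = sigmoid(combined @ rng.normal(size=14))

# 2) Latent-space integration: one encoder per modality, embeddings combined afterwards.
z_dna = np.tanh(dna_feats @ rng.normal(size=(8, 4)))         # (n, 4)
z_expr = np.tanh(expr_feats @ rng.normal(size=(6, 4)))       # (n, 4)
z_joint = np.concatenate([z_dna, z_expr], axis=1)            # (n, 8)
p_latent = sigmoid(z_joint @ rng.normal(size=8))

# 3) Output-space integration: separate per-modality models, predictions averaged as an ensemble.
p_dna = sigmoid(dna_feats @ rng.normal(size=8))
p_expr = sigmoid(expr_feats @ rng.normal(size=6))
p_output = (p_dna + p_expr) / 2
```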

Classic ML models

Traditional ML usually requires transforming the input into tabular real-valued data, where each data point corresponds to a feature vector. In our context, these are pre-defined features such as SNP vectors, polygenic risk scores, and chemical fingerprints. These tabular data can then be fed into a wide range of supervised models, such as linear/logistic regression, decision trees, random forests, support vector machines, and naive Bayes.29 They work well when the features are well defined. A multi-layer perceptron30 (MLP) consists of at least three layers of neurons, where the output of each layer passes through a non-linear activation function, allowing the model to capture non-linear patterns. When the number of layers is large, it is called a deep neural network (DNN). Classic ML models are very simple to implement and highly scalable, and they can serve as strong baselines. However, they only accept real-valued vectors as inputs and do not fit diverse biomedical entity types such as sequences and graphs. Also, these vectors are usually features engineered by humans, which further limits their predictive power. Examples are shown in Figures 4A and 4B.

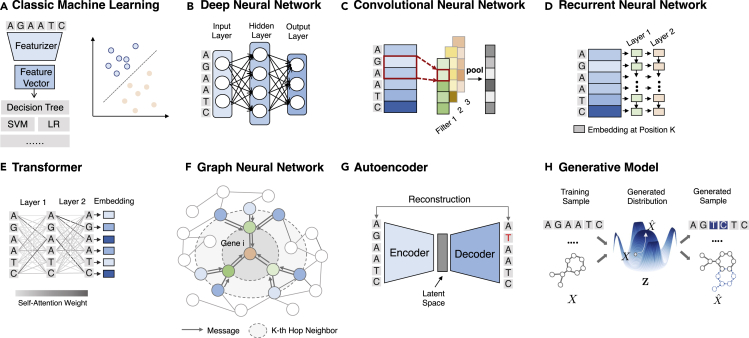

Figure 4.

Illustrations of machine learning models

Details about each model can be found in “machine learning methods for biomedical data.”

(A) Classic machine learning models featurize raw data and apply various models (mostly linear) to classify (e.g., binary output) or regress (e.g., real value output).

(B) Deep neural networks map input features to embeddings through a stack of non-linear weight multiplication layers.

(C) Convolutional neural networks apply many local filters to extract local patterns and aggregate local signals through pooling.

(D) Recurrent neural networks generate embeddings for each token in the sequence based on the previous tokens.

(E) Transformers apply a stack of self-attention layers that assign a weight for each pair of input tokens.

(F) Graph neural networks aggregate information from the local neighborhood to update the node embedding.

(G) Autoencoders reconstruct the input from an encoded compact latent space.

(H) Generative models generate novel biomedical entities with more desirable properties.

Suitable biomedical data

Any real-valued feature vectors built upon biomedical entities such as SNP profile and chemical fingerprints.
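As an example of a classic model on tabular features, the sketch below fits logistic regression by batch gradient descent on binary SNP vectors. The data are synthetic, and the assumption that the label depends on two "causal" SNPs (columns 0 and 3) is built in so that the learned weights can be checked; the learning rate and epoch count are illustrative.

```python
import numpy as np

rng = np.random.default_rng(1)
n, p = 400, 20
X = rng.integers(0, 2, size=(n, p)).astype(float)  # hypothetical binary SNP vectors
logits = 3.0 * X[:, 0] - 3.0 * X[:, 3]              # assumed ground truth: two causal SNPs
y = (rng.random(n) < 1.0 / (1.0 + np.exp(-logits))).astype(float)


def train_logistic(X, y, lr=0.5, epochs=500):
    """Logistic regression fitted by full-batch gradient descent on the mean log-loss."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        prob = 1.0 / (1.0 + np.exp(-(X @ w + b)))
        grad = prob - y                 # gradient of the log-loss w.r.t. the logits
        w -= lr * X.T @ grad / len(y)
        b -= lr * grad.mean()
    return w, b


w, b = train_logistic(X, y)
```

The largest positive weight lands on SNP 0 and the most negative on SNP 3, illustrating why such models work well when informative features are already defined.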

Convolutional neural network

Convolutional neural networks (CNNs) are a class of DNNs widely applied to image classification, natural language processing, and signal processing such as speech recognition.31 A CNN has a series of convolution filters, which allow it to identify local patterns in the data (e.g., edges and shapes in images). Such networks can automatically extract hierarchical patterns from data, and the learned filter weights reveal the patterns they detect. CNNs are powerful at picking up local patterns, which makes them ideal for biomedical tasks in which local structures are important to the outcome, such as runs of consecutive nucleotides (conserved motifs) in DNA sequences and substructures in compound string representations. However, they are restricted to grid-structured data and do not work for non-Euclidean objects such as gene regulatory networks or 3D biochemical structures. Also, CNNs are translation invariant, which is a double-edged sword. On the one hand, they can predict robustly even if the input is perturbed by translation. On the other hand, they might not be ideal for biomedical data in which order or spatial location is crucial for the outcome, such as time-series gene expression trajectories. An example is depicted in Figure 4C.

Suitable biomedical data

Short DNA sequence, compound SMILES strings, gene expression profile, and cellular images.
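A single convolution filter scanning a one-hot DNA sequence, followed by global max pooling, shows how a CNN detects a motif regardless of its position. This is a hand-built sketch: the filter weights are set by hand to match the hypothetical motif TATA (a trained CNN would learn many such filters), and there is no bias term or training loop.

```python
import numpy as np

NUCLEOTIDE_INDEX = {"A": 0, "C": 1, "T": 2, "G": 3}  # same A/C/T/G order as the text


def one_hot_dna(seq):
    x = np.zeros((4, len(seq)))
    for i, base in enumerate(seq):
        x[NUCLEOTIDE_INDEX[base], i] = 1.0
    return x


def conv1d_scan(x, filt):
    """Valid 1D cross-correlation of a 4 x L input with a 4 x k filter (one score per window)."""
    k = filt.shape[1]
    return np.array([np.sum(x[:, i:i + k] * filt) for i in range(x.shape[1] - k + 1)])


# Hand-set filter whose weights exactly match the motif TATA.
motif_filter = one_hot_dna("TATA")

scores = conv1d_scan(one_hot_dna("GCGTATAAGC"), motif_filter)
activations = np.maximum(scores, 0.0)  # ReLU
pooled = activations.max()             # global max pooling: strongest match anywhere
position = int(np.argmax(scores))      # where the motif was found

# Shifting the motif changes `position` but not the pooled value.
shifted_pooled = conv1d_scan(one_hot_dna("TATAGCGAGC"), motif_filter).max()
```

In this sketch the convolution itself shifts its output along with the input, and it is the max pooling step that makes the final value insensitive to where the motif occurs.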

Recurrent neural network

A recurrent neural network (RNN) is designed to model sequential data, such as time series, event sequences, and natural language text.32 The RNN is applied sequentially along a sequence: the input at each step includes the current observation and the previous hidden state. RNNs naturally model variable-length sequences. Two widely used variants of RNNs are long short-term memory (LSTM)33 and gated recurrent units.34 RNNs are natural candidates for sequential data such as DNA/protein sequences and text, where the next token depends on previous tokens. However, they often suffer from the vanishing gradient problem, which prevents them from modeling long-range dependencies. Thus, they are not ideal for long DNA sequences. An example is depicted in Figure 4D.

Suitable biomedical data

DNA sequence, protein sequence, and texts.
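The recurrence described above, where each step combines the current token with the previous hidden state, can be sketched as a vanilla (Elman-style) RNN forward pass over nucleotide tokens. The weights are random stand-ins for trained parameters, and the hidden size is an arbitrary assumption; LSTMs and GRUs add gating on top of this basic update.

```python
import numpy as np

rng = np.random.default_rng(0)
HIDDEN, VOCAB = 8, 4  # hidden-state size; vocabulary = the 4 nucleotides
W_x = rng.normal(scale=0.5, size=(HIDDEN, VOCAB))   # input-to-hidden weights
W_h = rng.normal(scale=0.5, size=(HIDDEN, HIDDEN))  # hidden-to-hidden (recurrent) weights
b = np.zeros(HIDDEN)


def rnn_forward(tokens):
    """Return one hidden state per token: h_t = tanh(W_x x_t + W_h h_{t-1} + b)."""
    h = np.zeros(HIDDEN)
    states = []
    for t in tokens:
        x = np.zeros(VOCAB)
        x[t] = 1.0  # one-hot encode the current token
        h = np.tanh(W_x @ x + W_h @ h + b)
        states.append(h)
    return np.stack(states)


states_long = rnn_forward([0, 1, 3, 2, 0])  # A, C, G, T, A under the A/C/T/G index order
states_short = rnn_forward([0, 1])          # same weights, a different sequence length
```

Because the same weights are reused at every step, the model handles both lengths, and the first hidden states of the two sequences coincide since they share a prefix.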

Transformer

Transformers35 are a recent class of neural networks that leverage self-attention: assigning an interaction score to every pair of input features (e.g., a pair of DNA nucleotides). By stacking self-attention layers, the model can capture more expressive and complex interactions. Transformers have shown superior performance on sequence data, such as natural language. They have also been successfully adapted to achieve state-of-the-art performance on proteins36 and compounds.37 Transformers are powerful, but they scale poorly because the cost of self-attention grows quadratically with sequence length. Despite several recent advances that increase the maximum input size to the order of tens of thousands of tokens,38 this limitation still prevents their use on extremely long sequences such as genome sequences, which usually require partitioning and aggregation strategies. An example is depicted in Figure 4E.

Suitable biomedical data

DNA sequence, protein sequence, texts, and image.
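Single-head scaled dot-product self-attention makes the pairwise scoring, and its $N \times N$ cost, explicit. This is a minimal sketch: the embeddings and projection matrices are random placeholders, and multi-head attention, positional encodings, masking, and the feed-forward sublayers of a full transformer are omitted.

```python
import numpy as np


def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention over N token embeddings (rows of X)."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    scores = Q @ K.T / np.sqrt(K.shape[1])        # (N, N): one score per pair of tokens
    scores -= scores.max(axis=1, keepdims=True)   # subtract row max for numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)  # softmax over each row
    return weights @ V, weights


rng = np.random.default_rng(0)
N, d = 6, 4  # e.g. 6 nucleotide tokens with 4-dimensional embeddings
X = rng.normal(size=(N, d))
W_q, W_k, W_v = (rng.normal(size=(d, d)) for _ in range(3))
out, attn = self_attention(X, W_q, W_k, W_v)
```

The `attn` matrix holds $N^2$ entries, which is why memory and compute grow quadratically with sequence length.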

Graph neural networks

Graphs are universal representations of complex relations in many real-world objects. In biomedicine, graphs can represent knowledge graphs, gene expression similarity networks, molecules, protein-protein interaction networks, and medical ontologies. However, graphs do not follow rigid data structures as sequences and images do. Graph neural networks (GNNs) are a class of models that convert graph structures into embedding vectors (i.e., node representation or graph representation vectors).39 In particular, GNNs generalize the concept of convolution to graphs by iteratively passing and aggregating messages from neighboring nodes. The resulting embedding vectors capture both node attributes and network structure. GNNs are a powerful tool for modeling any graph-structured biomedical data. However, adapting GNNs to the biomedical domain requires special attention. For example, GNNs heavily rely on the assumption of homophily, that is, that similar nodes are connected. Biomedical networks, however, have been shown to exhibit more complicated behavior, such as skip similarity.40 In addition, local message-passing schemes oversimplify biochemical graph structures. Domain-motivated GNN designs that integrate biophysical principles are highly desirable.41 An example is depicted in Figure 4F.

Suitable biomedical data

Biomedical networks, compound/protein graphs, and similarity networks.

Autoencoders

Autoencoders (AEs) are an unsupervised deep learning method. An AE maps the input data into a latent embedding (encoder) and then reconstructs the input from that embedding (decoder).42 Because the input must be reconstructed from a low-dimensional latent space, the latent representation is forced to focus on essential properties of the data. Both the encoder and the decoder are neural networks, and an AE can be considered a non-linear analog of principal component analysis. The latent representation captures patterns in the input data and can thus be used for unsupervised learning tasks such as clustering. Among its variants, denoising autoencoders take partially corrupted inputs and are trained to recover the original, undistorted inputs.43 Variational autoencoders (VAEs) model the latent space probabilistically; as the resulting probability distributions are complex and usually intractable, VAEs use variational inference to approximate them.44 AEs are widely used to map gene expression to latent states without any labels, and these latent embeddings are useful for downstream single-cell analyses. One disadvantage of AEs is that they are fitted to the training data, whereas in single-cell analysis the test data can come from settings that differ from the training data. It is thus challenging to obtain accurate latent embeddings with AEs on such novel test data. An example is depicted in Figure 4G.
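A minimal NumPy sketch of the encoder/decoder idea follows. To stay short it uses a linear autoencoder (no activation functions), which learns the same subspace as principal component analysis; inserting non-linear activations between layers gives the non-linear analog described above. The synthetic 200 × 10 "expression" matrix driven by two latent factors, the learning rate, and the number of steps are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical expression matrix: 200 cells x 10 genes driven by 2 latent factors plus noise.
n, d, k = 200, 10, 2
X = rng.normal(size=(n, k)) @ rng.normal(size=(k, d)) + 0.05 * rng.normal(size=(n, d))
X = (X - X.mean(axis=0)) / X.std()  # center genes, scale to unit overall variance

W_enc = 0.1 * rng.normal(size=(d, k))  # encoder: d genes -> k latent dimensions
W_dec = 0.1 * rng.normal(size=(k, d))  # decoder: k latent dimensions -> d genes
lr, losses = 0.01, []
for step in range(3000):
    Z = X @ W_enc              # latent embedding
    R = Z @ W_dec - X          # reconstruction error
    losses.append((R ** 2).sum() / n)
    # Gradients of the mean squared reconstruction error w.r.t. both weight matrices.
    grad_dec = 2 * Z.T @ R / n
    grad_enc = 2 * X.T @ (R @ W_dec.T) / n
    W_dec -= lr * grad_dec
    W_enc -= lr * grad_enc

embedding = X @ W_enc  # (200, 2) latent states, e.g., for clustering cells
```

No labels are used anywhere: the only training signal is how well the decoder reconstructs the input from the two-dimensional embedding.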

Suitable biomedical data

Unlabeled data.

Generative models

In contrast to models that make predictions, generative models aim to learn the statistical distribution that characterizes the underlying dataset (e.g., a set of DNA sequences for a disease) and its generation process.45 The learned distribution supports various downstream tasks. For example, one can sample from it to generate optimized data points, such as novel images, compounds, or RNA sequences. One popular model is the generative adversarial network (GAN),46 which consists of two submodels: a generator that maps random latent vectors to samples resembling the training data, and a discriminator that determines whether a sample is real or generated. The two submodels are trained alternately so that the resulting generator produces samples realistic enough to fool the discriminator. An example is depicted in Figure 4H.
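For reference, the adversarial training described above is usually written as the minimax objective of the original GAN formulation, where $p_{\text{data}}$ is the data distribution, $p_z$ is a simple noise prior (e.g., a standard Gaussian), $G$ is the generator, and $D$ outputs the probability that its input is real. This is the standard textbook form, not a detail specific to the works surveyed here.

```latex
\min_{G}\,\max_{D}\; V(D,G)
  = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator maximizes $V$ by pushing $D(x)$ toward 1 on real samples and $D(G(z))$ toward 0 on generated ones, while the generator minimizes $V$ by making $D(G(z))$ approach 1.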

Suitable biomedical data

Data in which new variants can have more desirable properties (e.g., molecule generation for drug discovery).47,48 Depending on the data modality, different encoders can be chosen for the generative models.

Machine learning for genomics in target discovery

A therapeutic target is a molecule (e.g., a protein) that plays a role in a disease's biological process. The molecule could be targeted by a drug to produce a therapeutic effect such as inhibition, thereby blocking the disease process. Much of target discovery relies on fundamental biological research that builds a full picture of human biology and, based on this knowledge, identifies target biomarkers. In this section, we review ML for genomics tasks in target discovery. First, we review six tasks that use ML to facilitate understanding of human biology; second, we describe five tasks that use ML to identify druggable biomarkers more accurately and more quickly.

Facilitating understanding of human biology

Often, the first step in developing any therapeutic agent is to generate a disease hypothesis and understand the disease mechanisms. Because diseases are complicated and driven by many factors, this requires some understanding of basic human biology. ML applied to genomics can facilitate basic biomedical research and help us understand disease mechanisms. A wide range of relevant tasks have been tackled by ML, from predicting splicing patterns49,50 and DNA methylation status51 to decoding the regulatory roles of genes.52,53 Most previous reviews have focused on this theme alone, and it spans many tasks. In this review, we chose six important tasks based on the following criteria: (1) the task is closely tied to understanding disease mechanisms and discovering targets, as this survey focuses on therapeutics development; (2) the task is popular (i.e., sufficient literature exists), and ML has been successfully applied to it; (3) the overall selection is diverse, covering different data modalities, ML task formulations, and ML representations (e.g., graphs, images, vectors). For a full review of ML for biological understanding, we refer readers to Angermueller et al.54

DNA-protein and RNA-protein binding prediction

DNA-binding proteins bind to specific DNA sequences (binding sites/motifs) to influence the rate of transcription into RNA, chromatin accessibility, and so forth. These motifs regulate gene expression and, if mutated, can potentially contribute to diseases. Similarly, RNA-binding proteins bind to RNA strands to influence RNA processing, such as splicing and folding. Thus, it is important to identify the DNA and RNA motifs recognized by these binding proteins.

Traditional approaches are based on position weight matrices (PWMs), but they require prior knowledge of the motif length and typically ignore interactions among binding-site loci. ML models trained directly on sequences to predict binding scores circumvent these challenges. CNNs are a good match for this task because a CNN filter works much like a PWM: it convolves over short sequence snippets and assigns higher weights to important motifs. Binding-site motifs can also be examined by visualizing the CNN filter weights. Based on this key observation, various methods have been proposed. For example, Alipanahi et al.55 train a CNN on large-scale DNA/RNA sequences of varying lengths to predict binding scores. While the DNA sequence alone provides strong signals, other channels of information can further aid binding prediction. For example, Kircher et al.56 show that including evolutionary features for identifying chromatin protein/histone mark binding further improves performance. Similarly, Zhou and Troyanskaya53 show that integrating another CNN model on additional epigenomic profile information further improves performance. Extending CNN-based models, a large body of work has been proposed to predict DNA- and RNA-protein binding.57,58, 59, 60 While CNN models are highly predictive, their interpretability is limited in resolution, as the CNN filter has a window size of around 100–200 base pairs. Base-resolution models are better suited for identifying granular information such as transcription factor (TF) cooperativity. Recently, Avsec et al.61 have shown the benefits of a base-resolution CNN model in TF binding prediction.
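The PWM/filter analogy can be made concrete with a short NumPy sketch: a weight matrix with one row per motif position and one column per base is slid along a one-hot-encoded sequence, producing one score per window. A PWM is scored this way, and a single 1-D convolutional filter (ignoring bias and activation) computes the same quantity; in a CNN the weights are learned from binding data rather than set by hand. The +1/-1 weights for the motif "TATA" are hypothetical.

```python
import numpy as np

def one_hot(seq):
    """Encode a DNA string as an (L, 4) matrix with columns A, C, G, T."""
    return np.eye(4)[["ACGT".index(base) for base in seq]]

def scan(seq, weights):
    """Slide a (w, 4) weight matrix along the sequence; return one score per window.

    This is how a PWM is scored, and also what one 1-D convolutional filter
    (without bias or activation) computes on one-hot input.
    """
    x = one_hot(seq)
    w = weights.shape[0]
    return np.array([(x[i:i + w] * weights).sum() for i in range(len(seq) - w + 1)])

# Hypothetical weights favoring "TATA": +1 for the preferred base, -1 for the others.
weights = 2 * one_hot("TATA") - 1
scores = scan("GCGTATAGCC", weights)
best = int(scores.argmax())  # 3, the start position of "TATA"
```

The highest-scoring window marks the putative binding site, which is the same reasoning used when filter activations are inspected to recover motifs.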

Machine learning formulation

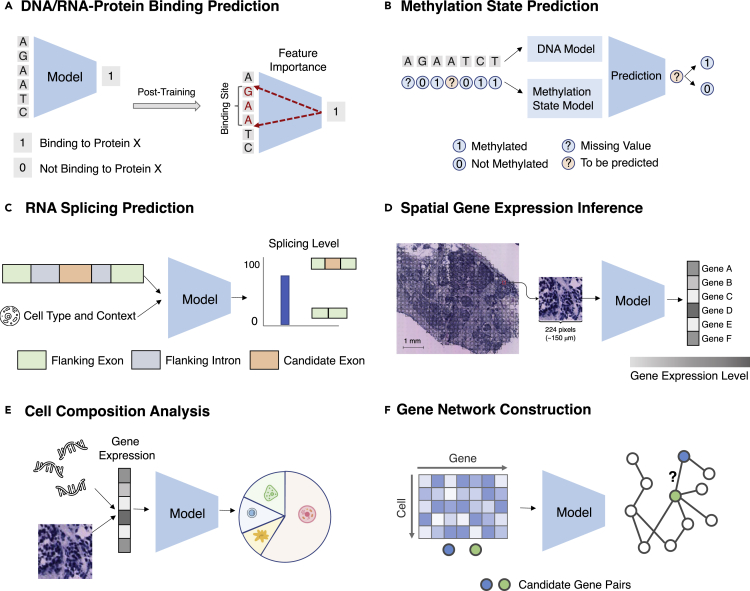

Given a set of DNA/RNA sequences, predict their binding scores. After training, use feature importance attribution methods to identify the motifs. An illustration of the task is presented in Figure 5A.

Figure 5.

Task illustrations for the theme “facilitating understanding of human biology”

(A) A model predicts whether a DNA/RNA sequence can bind to a protein. After training, one can identify binding sites based on feature importance (see “DNA-protein and RNA-protein binding prediction”).

(B) A model predicts missing DNA methylation state based on its neighboring states and DNA sequence (see “methylation state prediction”).

(C) A model predicts the splicing level given the RNA sequence and the context (see “RNA splicing prediction”).

(D) A model predicts spatial transcriptomics from tissue image (see “spatial gene expression inference”).

(E) A model predicts the cell-type compositions from the gene expression (see “cell-composition analysis”).

(F) A model constructs a gene regulatory network from gene expressions (see “gene network construction”).

Panel (C) is adapted from Xiong et al.,50 and the spatial transcriptomics image in panel (D) is from Bergenstråhle et al.62

Methylation state prediction

DNA methylation adds methyl groups to individual A or C bases in the DNA to modify gene activity without changing the sequence. It mediates biological processes such as cancer progression and cell differentiation.63 Thus, it is important to know the methylation status of DNA sequences in various cells. However, because single-cell methylation profiling has low coverage, the methylation status at most DNA positions is missing and must be accurately imputed.

Classical methods can predict only population-level status from features, not cell-level status, because cell-level prediction requires granular and complex modeling of long sequences of methylation states.64,65 Sequential ML models such as RNNs and CNNs are ideal choices because they can capture the non-linear sequential dependencies hidden in the methylation sequence. For example, given a set of cells with the available sequenced methylation status at each DNA position and the DNA sequence, Angermueller et al.51 accurately infer the unmeasured methylation statuses at the single-cell level. More specifically, they impute methylation states by applying a bidirectional RNN to the sequence of cells' neighboring available methylation states and a CNN to the DNA sequence. The combined embedding takes into account information from both the DNA and the methylation status, across cells and within cells. Alternative architectures have also been proposed, such as Bayesian clustering66 or a variational AE.67 Notably, this approach can also be extended to RNA methylation state prediction: Zou et al.68 apply a CNN to the neighboring methylation status and a word2vec model to the RNA subsequence to predict RNA methylation status. The main challenge in DNA methylation prediction is the number of CpG sites, which can run into the many millions. Current ML models are limited in their ability to accommodate such long-range information, due to issues such as the vanishing gradient problem in RNNs and the poor scalability of transformers.
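To illustrate the input side of this formulation, the sketch below gathers, for a target CpG in one cell, the methylation states and genomic distances of its nearest observed neighbors on each side; a sequential model would then consume such vectors. The choice of `k`, the -1 padding convention, and the toy coordinates are assumptions for illustration, not the exact featurization used in the cited works.

```python
import numpy as np

def neighbor_features(positions, states, target, k=2):
    """Collect the k nearest observed CpG states on each side of a target CpG.

    positions: sorted genomic coordinates of observed CpGs in one cell.
    states: 0/1 methylation calls at those positions.
    Returns (states, distances) for k upstream then k downstream neighbors,
    padded with -1 when fewer than k neighbors exist on a side.
    """
    positions, states = np.asarray(positions), np.asarray(states)
    left = np.searchsorted(positions, target, side="left")    # first index >= target
    right = np.searchsorted(positions, target, side="right")  # first index > target
    up = list(range(max(0, left - k), left))                  # upstream, nearest last
    down = list(range(right, min(len(positions), right + k)))  # downstream, nearest first
    feats_s, feats_d = [], []
    for idx_list, pad_front in ((up, True), (down, False)):
        s = [int(states[i]) for i in idx_list]
        dist = [int(abs(positions[i] - target)) for i in idx_list]
        pad = [-1] * (k - len(idx_list))
        # Pad on the far side so the nearest neighbors always sit next to the target.
        feats_s += (pad + s) if pad_front else (s + pad)
        feats_d += (pad + dist) if pad_front else (dist + pad)
    return np.array(feats_s), np.array(feats_d)

# Hypothetical observed CpGs in one cell; the state at position 200 is missing.
obs_pos = [100, 130, 160, 220, 300]
obs_state = [1, 0, 1, 1, 0]
s, dist = neighbor_features(obs_pos, obs_state, target=200)
```

Here `s` is `[0, 1, 1, 0]` and `dist` is `[70, 40, 20, 100]`; stacking such vectors across cells gives the kind of neighborhood input the recurrent module works on.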

Machine learning formulation

For a DNA/RNA position with missing methylation status, given its available neighboring methylation states and the DNA/RNA sequence, predict the methylation status on the position of interest. The task is illustrated in Figure 5B.

RNA splicing prediction

RNA splicing removes the non-coding regions (introns) of a transcript and joins the coding regions (exons) so that they can be translated into protein. A single gene can serve various functions when it is spliced in different ways under different conditions. López-Bigas et al.69 estimate that as many as 60% of pathogenic variants responsible for genetic diseases may influence splicing. Gefman et al.70 identify around 2% of synonymous variants and 0.6% of intronic variants as likely pathogenic due to alternative splicing defects. Thus, it is important to be able to identify the genetic variants that cause alternative splicing. Traditional wet-lab measurements of splicing levels are difficult to scale.

Xiong et al.50 pioneer the use of ML in splicing prediction. They model the problem as predicting the splicing level of an exon, measured by the transcript counts of this exon, given its neighboring RNA sequence and the cell type. Their model uses Bayesian neural network ensembles on top of curated RNA features and has demonstrated its accuracy by identifying known mutations and discovering new ones. Notably, this model is trained on large-scale data across diverse disease areas and tissue types. Thus, without experimental data, it can predict the effect of a new, unseen mutation within hundreds of nucleotides on the splicing of an intron. This ability to generalize to new contexts is crucial but particularly difficult for ML, given its natural tendency to overfit to spurious correlations. Beyond predicting the splicing level given a triplet of exons under various conditions, recent models have been developed to annotate the branchpoint nucleotide of RNA splicing. Paggi and Bejerano71 feed an RNA sequence into an RNN that predicts, for each nucleotide, the likelihood of being a branchpoint. Jagadeesh et al.72 further improve performance by integrating features from the splicing literature and generate a highly accurate splicing-pathogenicity score.

Machine learning formulation

Given an RNA sequence and, if available, its cell type, predict for each nucleotide the probability of being a splicing branchpoint, as well as the splicing level. The task is illustrated in Figure 5C.

Spatial gene expression inference

Gene expression varies across the spatial organization of tissue, and this heterogeneity contains important insights into biological effects. Regular sequencing, whether of single cells or bulk tissue, does not capture this information. Recent advances in spatial transcriptomics characterize gene expression profiles in their spatial tissue context.73 However, integrating the sequencing output with the tissue context provided by histopathology images takes resources and time, so automatic annotation could save substantial resources. He et al.62 introduce ML to this problem by formulating it as gene expression prediction from histopathology images. Because a histopathology slide is an image, a CNN is a natural choice of model. They develop a deep CNN that predicts gene expression from the histopathology of patients with breast cancer at a resolution of 100 μm, and show that the model generalizes to other breast cancer datasets without retraining. The inferred spatial gene expression levels enable many downstream tasks. For example, Levy-Jurgenson et al.74 construct a pipeline that characterizes tumor heterogeneity on top of the CNN gene expression inference step. Bergenstråhle et al.75 model the spatial transcriptomics and histology image jointly through a latent state and infer high-resolution, denoised gene expression by posterior estimation. Despite these promises, a crucial challenge of this task is the need for a large number of ground-truth annotations, which are expensive to acquire for a novel set of histopathology slides or genes. This challenge highlights the need for models that learn from few examples, using techniques such as meta-learning or transfer learning.
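A typical first step in this image-to-expression formulation is to cut a fixed-size tile around each spot so that the CNN sees the tissue under that spot. The NumPy sketch below does only that cropping; the tile size, zero-padding at the slide border, and the toy image are assumptions, and the CNN that would map each tile to a per-gene expression vector is omitted.

```python
import numpy as np

def crop_tiles(image, spots, size):
    """Cut a square tile centered on each spot; each tile becomes one CNN input.

    image: (H, W, C) histopathology image array.
    spots: iterable of (row, col) pixel centers of spatial transcriptomics spots.
    size: odd tile side length in pixels (a hypothetical choice; in practice
          it would be derived from the spot diameter and image resolution).
    Spots near the border are handled by zero-padding the image.
    """
    half = size // 2
    padded = np.pad(image, ((half, half), (half, half), (0, 0)))  # zeros around the slide
    # In padded coordinates the spot center is (r + half, c + half), so the tile starts at (r, c).
    return np.stack([padded[r:r + size, c:c + size] for r, c in spots])

# Toy single-channel "slide" and two hypothetical spot centers.
image = np.arange(25, dtype=float).reshape(5, 5, 1)
tiles = crop_tiles(image, [(0, 0), (2, 2)], size=3)
```

Each tile is then paired with the measured expression vector of its spot, giving the (image, expression) training pairs for the regression model.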

Machine learning formulation

Given the histopathology image of the tissue, predict the gene expression for every gene at each spatial transcriptomics spot. The task is illustrated in Figure 5D.

Cell-composition analysis

Different cell types can drive changes in gene expression that are unrelated to the interventions. Analyzing the average gene expression of a batch of mixed cells with distinct cell types could lead to bias and false results.76 Thus, for tissue-based RNA-seq data, it is important to separate (deconvolve) the contribution of cell-type composition from the real signal.

ML models can help estimate the cell-type proportions and the gene expression. The rationale is to obtain, from single-cell profiles, parameters of gene expression (a signature matrix) that characterize each cell type. The signature matrix should contain genes that are stably expressed across conditions. These parameters are then applied to bulk RNA-seq data to infer the cell composition of a set of query gene expression profiles. Various methods, including linear regression77 and support vector machines,78 are used to predict a cell-composition vector that, when multiplied by the signature matrix, approximates the observed gene expression. In these works the signature matrix is predefined, which may not be optimal; a learnable signature matrix could improve accuracy. Pioneering this direction, Menden et al.79 apply DNNs to predict the cell-composition profile directly from gene expression, where the hidden neurons can be considered the learned signature matrix. Cell deconvolution is also crucial for spatial transcriptomics, where each spot could contain 2 to 20 cells from a mixture of dozens of possible cell types. Andersson et al.80 model various cell-type-specific parameters using a customized probabilistic model. As spots in a slide have spatial dependencies, modeling them as a graph can further improve performance. Notably, Su and Song81 initiate the use of graph convolutional networks to leverage information from similar spots in spatial transcriptomics. There are two major challenges for this task. The first is the quality of the gold-standard annotations, as cell-proportion estimates are usually noisy; this calls for ML methods that can model label noise.82 The second is that the proportions depend strongly on phenotypes such as age, gender, and disease status. Incorporating this information into ML models could also make deconvolution more accurate.
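The signature-matrix formulation can be sketched in a few lines of NumPy: treat the bulk profile as a weighted sum of cell-type signatures, solve for the weights by ordinary least squares, clip negative weights to zero, and rescale them to sum to 1. This is a deliberately simple stand-in for the regression-based methods cited above, which use constrained or more robust fitting; the 5-gene × 3-cell-type signature matrix and the true proportions are hypothetical.

```python
import numpy as np

def deconvolve(signature, bulk):
    """Estimate cell-type proportions p such that bulk ≈ signature @ p.

    signature: (genes, cell_types) mean expression of each cell type.
    bulk: (genes,) expression of one mixed sample.
    """
    coef, *_ = np.linalg.lstsq(signature, bulk, rcond=None)  # unconstrained least squares
    coef = np.clip(coef, 0.0, None)                           # proportions cannot be negative
    return coef / coef.sum()                                  # rescale to sum to 1

# Hypothetical signature matrix: 5 marker genes x 3 cell types.
S = np.array([[10.0, 0.5, 0.2],
              [8.0, 1.0, 0.1],
              [0.3, 9.0, 0.5],
              [0.2, 7.0, 1.0],
              [0.1, 0.4, 12.0]])
true_p = np.array([0.6, 0.3, 0.1])
bulk = S @ true_p           # noise-free mixture for illustration
p_hat = deconvolve(S, bulk)
```

With noise-free input the true proportions are recovered exactly; with real data, noise and genes that are not stably expressed make the choice of signature genes and fitting method matter much more.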

Machine learning formulation

Given the gene expressions of a set of cells (in bulk RNA-seq or a spot in spatial transcriptomics), infer proportion estimates of each cell type for this set. The task is illustrated in Figure 5E.

Gene network construction

The expression levels of a gene are regulated via TFs produced by other genes. Aggregating these TF-gene relations results in the gene regulatory network. Accurate characterization of this network is crucial because it describes how a cell functions. However, it is difficult to quantify gene networks on a large scale through experiments alone.

Computational approaches have been proposed to construct gene networks from gene expression data. Most of them learn, for each gene, a mapping from the expression of TFs to the expression of that gene. If the mapping is accurate and relies strongly on a given TF, that TF likely regulates the gene. Various mapping methods using classic ML have been proposed, such as linear regression,83 random forest,84 and gradient boosting.85 However, the sparsity of gene networks constructed through these methods cannot be controlled, and the methods are sensitive to parameter changes, so the resulting networks are noisy. Recently, Shrivastava et al.86 introduced a specialized unrolled algorithm to control the sparsity of the learned network. They also leveraged supervision obtained through synthetic data simulators to further improve robustness. Despite these promises, gene network construction is difficult due to the sparsity, heterogeneity, and noise of gene expression data, particularly the diverse datasets from the integration of scRNA-seq experiments. Clinical validation of the predicted gene associations also poses challenges, since it is difficult to screen such a large set of predictions.
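The regression view can be sketched for one target gene: regress its expression on the candidate TFs and rank the TFs by the magnitude of their standardized coefficients; repeating this for every gene and keeping the top-scoring pairs yields a network. This NumPy sketch uses ordinary least squares, in the spirit of the linear-regression approaches above (tree-based methods would rank TFs by feature importance instead), and the simulated data, in which gene 3 is driven by TFs 0 and 2, are hypothetical.

```python
import numpy as np

def rank_regulators(expr, tf_idx, target):
    """Score candidate TF -> target edges by regressing the target on the TFs.

    expr: (samples, genes) expression matrix.
    tf_idx: column indices of candidate transcription factors.
    target: column index of the target gene (never used as its own regressor).
    Returns (tf, score) pairs sorted by |standardized coefficient|, largest first.
    """
    tfs = [t for t in tf_idx if t != target]
    X = expr[:, tfs]
    X = (X - X.mean(axis=0)) / X.std(axis=0)   # standardize so coefficients are comparable
    y = expr[:, target] - expr[:, target].mean()
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    order = np.argsort(-np.abs(coef))
    return [(tfs[i], float(abs(coef[i]))) for i in order]

# Hypothetical data: gene 3 is activated by TF 0 and repressed by TF 2; TF 1 is unrelated.
rng = np.random.default_rng(1)
expr = rng.normal(size=(300, 4))
expr[:, 3] = 2.0 * expr[:, 0] - 1.0 * expr[:, 2] + 0.1 * rng.normal(size=300)
ranking = rank_regulators(expr, tf_idx=[0, 1, 2], target=3)
```

Because an unpenalized regression assigns every TF some nonzero weight, the edges kept depend on an arbitrary cutoff, which is one source of the sparsity and noise problems noted above.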

Machine learning formulation

Given a set of gene expression profiles of a gene set, identify the gene regulatory network by predicting all pairs of interacting genes. The task is illustrated in Figure 5F.

Identifying druggable biomarkers

Diseases are driven by complicated biological processes in which each step may be associated with a biomarker. By identifying these biomarkers, we can design therapeutics to break the disease pathway and cure the disease. Machine learning can help identify these biomarkers by mining through large-scale biomedical data to predict genotype-phenotype associations accurately. Probing the trained models can uncover potential biomarkers and identify patterns related to the disease mechanisms. Next, we present several important tasks related to biomarker identification.

Variant calling

Variant calling is the very first step before relating genotypes to diseases. It determines which genetic variants are present in an individual's genome from sequencing data. The majority of variants are biallelic, meaning that each locus has only one possible alternative nucleotide compared with the reference, while a small fraction are multi-allelic, meaning that a locus can have more than one alternative form. As each autosomal locus has two copies, one from the mother and one from the father, the genotype is determined by the pair of nucleotides at that locus (e.g., for a biallelic variant, suppose B is the reference nucleotide and b is the alternative; three genotypes are possible: homozygous reference [BB], heterozygous [Bb], and homozygous alternate [bb]). Raw sequencing outputs are usually billions of short reads, which are aligned to a reference genome, so each locus is covered by a set of short reads. Since sequencing technologies make errors, the challenge is to accurately predict the variant status of each locus from its set of reads. Manually processing such a large number of reads to identify each variant is infeasible, so efficient computational approaches are needed.
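To see why calling a genotype from noisy reads is a classification problem, consider the simplified probabilistic sketch below, which scores BB, Bb, and bb at one biallelic locus from the counts of reference and alternate reads. The independent-read binomial model, the single error rate, and the prior values are assumptions for illustration; this is neither GATK's model nor DeepVariant's, which learns the decision from pileup images instead of hand-specified likelihoods.

```python
import math

def genotype_posteriors(ref_count, alt_count, error=0.01, prior=(0.998, 0.001, 0.001)):
    """Posterior probabilities of BB, Bb, bb at one biallelic locus.

    Simplified model (an assumption): reads are independent, and each read shows
    the alternate base with probability `error` under BB, 0.5 under Bb, and
    1 - `error` under bb. The default `prior` values are hypothetical.
    """
    alt_prob = {"BB": error, "Bb": 0.5, "bb": 1 - error}
    n = ref_count + alt_count
    post = {}
    for (genotype, p), pr in zip(alt_prob.items(), prior):
        # Binomial likelihood of alt_count alternate reads out of n, times the prior.
        lik = math.comb(n, alt_count) * p ** alt_count * (1 - p) ** ref_count
        post[genotype] = lik * pr
    total = sum(post.values())
    return {g: v / total for g, v in post.items()}

balanced = genotype_posteriors(ref_count=14, alt_count=16)  # Bb has the highest posterior
```

A roughly even split of reads favors the heterozygous call, whereas an all-reference pileup favors BB; sequencing errors, low coverage, and alignment artifacts are what make real loci harder than this idealized case.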

A statistical framework called the Genome Analysis Toolkit (GATK),87 which combines logistic regression, hidden Markov models, and Gaussian mixture models, is commonly used for variant calling. While previous works operate on sequencing statistics, DeepVariant88 treats the sequencing alignments as images. These images are raw data and contain more information than engineered sequencing features. DeepVariant then applies a CNN to extract useful signals and has been shown to outperform previous modeling efforts. It also works for multi-allelic variant calling. In addition to predicting zygosity, Luo et al.89 use multi-task CNNs to predict the variant type, alternative allele, and indel length. Many other deep learning-based methods have been proposed to tackle more specific challenges, such as long sequencing reads, using LSTMs.90 Benchmarking efforts have also been conducted.91 Although most methods achieve greater than 99% accuracy, thousands of variants are still called incorrectly because the genome is extremely long. Adapting ML models to focus on hard-to-call loci is a promising direction. Moreover, variability persists across different sequencing technologies. Another challenge is the phasing problem, which determines whether two mutations in a gene are on the same copy of a chromosome (the same haplotype) or on opposite copies.92 Recently, Zhao et al.93 have extended variant prediction to RNA-seq gene expression data, with improved accuracy over DNA-based data. This suggests another promising avenue for future research, or a complementary approach to DNA-based methods for reducing misclassification.94

Machine learning formulation

Given the aligned sequencing data for each locus, either (1) a read pileup image, i.e., an M × N matrix where M is the number of reads and N is the read length, or (2) the raw reads as a set of sequence strings, classify the multi-class variant status. The task is illustrated in Figure 6A.

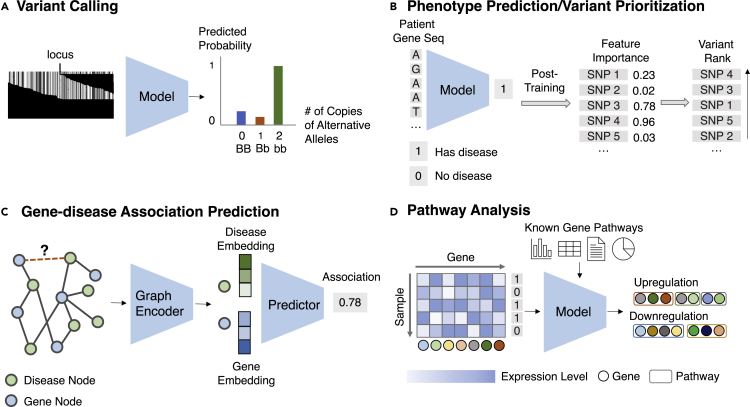

Figure 6.

Task illustrations for the theme “identifying druggable biomarkers”

(A) A model predicts the zygosity given a read pileup image (see “variant calling”).

(B) A model predicts whether the patient is at risk for the disease given the genomic sequence. After training, feature importance attribution methods assign importance for each variant, which is then ranked and prioritized (see “variant pathogenicity prioritization/phenotype prediction”).

(C) A graph encoder obtains embeddings for each disease and gene node, and they are fed into a predictor to predict their association (see “gene-disease association prediction”).

(D) A model identifies a set of gene pathways from the gene expression profiles and the known gene pathways (see “pathway analysis and prediction”).

Variant pathogenicity prioritization/phenotype prediction

There are many genomic variants in the human genome, at least 1 million per person. While many influence complex traits and are relatively harmless, some are associated with diseases. Complex diseases are associated with multiple variants in both coding and non-coding regions of the genome. Thus, prioritization of pathogenic variants from the entire variant set can potentially lead to disease targets.

There are mainly two computational approaches. The first predicts the pathogenicity of a single variant from a set of features. These features are usually curated from biochemical knowledge, such as amino acid identities. Kircher et al.56 build on these features using a linear support vector machine, and Quang et al.95 use deep neural networks to classify whether a variant is pathogenic; the DNN shows improved performance on classification metrics. After training, the model can generate a list of variants ranked by predicted pathogenicity likelihood, and the top ones are prioritized. Note that this line of work treats each variant as an input data point and assumes some knowledge of variant pathogenicity, which is not available in many scenarios, especially for new diseases.
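The per-variant approach reduces to training a classifier and sorting variants by its predicted probability. The NumPy sketch below uses logistic regression fit by gradient descent as a simple stand-in for the SVM and DNN models cited above; the two-feature synthetic training set and the four candidate variants are hypothetical.

```python
import numpy as np

def train_logistic(X, y, lr=0.1, steps=2000):
    """Fit logistic regression by batch gradient descent on the mean log-loss."""
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(steps):
        p = 1 / (1 + np.exp(-(X @ w + b)))   # predicted pathogenicity probability
        w -= lr * X.T @ (p - y) / len(y)
        b -= lr * (p - y).mean()
    return w, b

# Hypothetical per-variant features (e.g., a conservation score and a biochemical-change score).
rng = np.random.default_rng(0)
X_train = np.vstack([rng.normal(1.5, 1, size=(100, 2)),    # labeled pathogenic
                     rng.normal(-1.5, 1, size=(100, 2))])  # labeled benign
y_train = np.r_[np.ones(100), np.zeros(100)]
w, b = train_logistic(X_train, y_train)

# Score unlabeled candidate variants and prioritize the highest-scoring ones.
candidates = np.array([[2.5, 2.5], [-2.0, -1.0], [0.5, 0.2], [1.0, 1.0]])
scores = 1 / (1 + np.exp(-(candidates @ w + b)))
ranked = np.argsort(-scores)  # candidate indices, most likely pathogenic first
```

The ranking step is independent of the classifier: any model that outputs a pathogenicity probability can be dropped in, which is how the SVM-based and DNN-based approaches are used for prioritization.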

Another line of work uses each genome profile as a data point and a computational model to predict disease risk from this profile. If the model is accurate, one can identify the variants that contribute to predicting the disease phenotype. Predicting directly from the whole-genome sequence is challenging for two reasons. First, the whole genome is high-dimensional while the cohort size for each disease is relatively limited, which presents the “curse of dimensionality” in ML. Second, most SNPs in the input genome are irrelevant to the disease, making it difficult to separate the relevant signals from the noise. Kooperberg et al.96 use a sparse regression model to predict the risk of Crohn's disease from patients' genomic data in coding regions. Paré et al.97 use gradient boosted regression to approximate polygenic risk scores for complex traits such as diabetes, height, and body mass index. Isgut et al.98 use logistic regression on polygenic risk scores to improve myocardial infarction risk prediction. Zhou et al.99 apply DNNs to epigenomic features of both coding and non-coding regions to predict gene expression in more than 200 tissue and cell types and then identify disease-causing SNPs. Building upon DeepSEA, Zhou and colleagues53,100 apply CNNs to epigenomic profiles, which are modifications of the DNA sequence such as DNA methylation or chromatin accessibility, to predict autism and identify experimentally validated non-coding variant mutations. ML models usually output multiple candidate biomarkers, each associated with a value estimating its likelihood of being pathogenic. The standard procedure includes a post-training ranking step to retrieve the top-K biomarkers based on pathogenic likelihood. However, in many cases, the model ends up with many potential candidates, limiting its utility.
To circumvent this issue, the model can be sparsified with techniques such as L1 penalization of the model output and model pruning.101 In addition, model uncertainty scores can better inform the prediction by filtering out low-certainty predictions.102
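One form of L1 sparsification can be sketched with an L1-penalized linear model fit by proximal gradient descent (ISTA): the soft-thresholding step drives most coefficients exactly to zero, so only a short list of variants remains. Penalizing coefficients (rather than outputs), the penalty strength, and the simulated genotype dosages with three causal SNPs are all assumptions of this NumPy sketch.

```python
import numpy as np

def lasso_ista(X, y, lam, steps=1000):
    """L1-penalized least squares via ISTA (proximal gradient descent).

    Minimizes (1 / 2n) * ||y - X w||^2 + lam * ||w||_1. The soft-thresholding
    step sets small coefficients exactly to zero, which sparsifies the model.
    """
    n = X.shape[0]
    step = n / np.linalg.norm(X, 2) ** 2     # 1 / Lipschitz constant of the smooth term
    w = np.zeros(X.shape[1])
    for _ in range(steps):
        grad = X.T @ (X @ w - y) / n
        z = w - step * grad
        w = np.sign(z) * np.maximum(np.abs(z) - step * lam, 0.0)  # soft-thresholding
    return w

# Hypothetical cohort: 200 individuals x 50 SNPs coded as 0/1/2 allele dosages; SNPs 4, 17, 30 are causal.
rng = np.random.default_rng(0)
X = rng.integers(0, 3, size=(200, 50)).astype(float)
X -= X.mean(axis=0)
y = X[:, [4, 17, 30]] @ np.array([0.8, -0.6, 0.5]) + 0.1 * rng.normal(size=200)
y -= y.mean()
w = lasso_ista(X, y, lam=0.05)
selected = np.flatnonzero(w)  # indices of SNPs kept by the sparse model
```

Raising `lam` shortens the candidate list further at the cost of more shrinkage on the retained effects, which is the trade-off behind using sparsity to make the output list actionable.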

Machine learning formulation

Given features about a variant, predict its corresponding disease risk and then rank all variants based on the disease risk. Alternatively, given the DNA sequence or other related genomics features, predict the likelihood of disease risk for this sequence and retrieve the variant in the sequence that contributes highly to the risk prediction. The task is illustrated in Figure 6B.

Rare disease detection

In the United States, a rare disease is defined as one that affects fewer than 200,000 people, with other countries similarly defining a rare disease based on low prevalence. There are around 7,000 rare diseases, which collectively affect 350 million people worldwide.103 Due to limited financial incentives, unknown disease mechanisms, and potential difficulties in recruiting sufficient patients for clinical trials, more than 90% of rare diseases lack effective treatments. Also, initial misdiagnosis is common. On average, it takes more than 7 years and eight physicians for a patient to be correctly diagnosed. Importantly, it is likely that targets identified for rare diseases may also be useful for therapeutic intervention of similar more common diseases.

ML models are good at identifying patterns in complex patient data. Rare disease detection can be formulated as a classification task, similar to phenotype prediction: the goal is to identify whether a patient has a rare disease from the patient's genomic sequence and other information such as EHRs. If sufficient data from patients with a rare disease and suitable controls exist, ML models can be applied to detect rare diseases. Part of the genetic complexity of rare diseases is their missing heritability, which could be harbored in regulatory regions rather than coding regions. Leveraging this knowledge, Yin et al.104 propose a two-step CNN approach in which one CNN first predicts the promoter regions likely associated with amyotrophic lateral sclerosis, and a second CNN then detects whether the patient has the disease based on genotypes in the selected genomic regions.

However, rare diseases pose special challenges to ML compared with classical phenotype prediction because they have an extremely low prevalence in the data, while most data points belong to the control set. This data imbalance makes it difficult for ML models to pick up signals and make accurate predictions, so special model designs are required. Standard remedies for data imbalance include oversampling the rare cases or downsampling the majority class. A more sophisticated and powerful strategy is to generate synthetic but realistic rare cases. Popular approaches combine minority points to forge new points.105, 106, 107 Generating synthetic data by modeling the minority data distribution with generative models could yield more realistic samples. Cui et al.108 pioneer a generative adversarial network (GAN) model that generates synthetic but realistic rare disease patient embeddings to alleviate the class imbalance problem, and show a significant performance increase in rare disease detection. Besides generating realistic data, low-resource learning techniques can also be applied to rare disease cases. For example, Taroni et al.109 use a transfer learning framework to adapt knowledge from large-scale genomic data spanning a diverse set of diseases to a smaller set of rare disease genomic data. Specifically, they leverage biological principles by constructing latent variables shared across a wide range of diseases. These variables correspond to genetic pathways. As these variables are fundamental biological units, they can be naturally adopted even for smaller datasets such as rare disease cohorts.
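The idea of combining minority points to forge new ones can be sketched as below, following the interpolation scheme of SMOTE: each synthetic point lies on the segment between a minority sample and one of its nearest minority neighbors. This NumPy version is illustrative only (the cited methods may differ in detail), and the 8 × 5 array of rare-disease patient embeddings is hypothetical.

```python
import numpy as np

def interpolate_minority(X_min, n_new, k=3, seed=0):
    """Create synthetic minority samples by interpolating between near neighbors.

    For each new point: pick a random minority sample, pick one of its k nearest
    minority neighbors, and place the new point at a random position on the
    segment between them. Requires k < len(X_min).
    """
    rng = np.random.default_rng(seed)
    dists = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=-1)
    np.fill_diagonal(dists, np.inf)              # a point is not its own neighbor
    neighbors = np.argsort(dists, axis=1)[:, :k]
    new = np.empty((n_new, X_min.shape[1]))
    for i in range(n_new):
        a = rng.integers(len(X_min))             # random minority sample
        b = neighbors[a, rng.integers(k)]        # one of its k nearest neighbors
        gap = rng.random()                       # position along the segment, in [0, 1)
        new[i] = X_min[a] + gap * (X_min[b] - X_min[a])
    return new

# Hypothetical rare-disease patient embeddings (8 patients, 5 dimensions).
X_min = np.random.default_rng(1).normal(size=(8, 5))
synthetic = interpolate_minority(X_min, n_new=20)
```

Because every synthetic point is a convex combination of two real patients, it stays inside the region the minority class already occupies; generative models such as GANs aim to go further by learning the minority distribution itself.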

Machine learning formulation

Given the gene expression data and other auxiliary data of a patient, predict whether this patient has a rare disease. Also, identify genetic variants for this rare disease. The task is illustrated in Figure 6B, which is the same as phenotype prediction.

Gene-disease association prediction

Although numerous genes are now mapped to diseases, our knowledge of gene-disease associations is vastly incomplete. At the same time, we know that many genes are similar to each other, as are many diseases. To leverage these similarities, we can impute unknown associations from known ones using similarity rules that govern gene-disease networks. One notable rule is the “guilt by association” principle.110 For example, disease X and gene a are more likely to be associated if gene b, which is associated with disease X, has a functional role similar to that of gene a. In contrast to variant prioritization, which focuses on one specific disease, gene-disease association prediction aims to predict associations for any disease-gene pair.
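The guilt-by-association rule in the example above can be written as a matrix product: a gene's score for a disease is the similarity-weighted average of that disease's known genes. The NumPy sketch below uses hypothetical genes a to d, diseases X and Y, and similarity values chosen to mirror the gene a / gene b example; real pipelines would derive the similarity from data such as protein interactions or ontology annotations.

```python
import numpy as np

genes = ["a", "b", "c", "d"]
# Hypothetical known associations (rows: genes, columns: diseases X, Y).
# Gene b is linked to disease X; gene c is linked to disease Y.
A = np.array([[0, 0],
              [1, 0],
              [0, 1],
              [0, 0]], dtype=float)
# Hypothetical functional similarity between genes (symmetric, zero diagonal);
# genes a and b are very similar.
S = np.array([[0.0, 0.9, 0.1, 0.0],
              [0.9, 0.0, 0.2, 0.1],
              [0.1, 0.2, 0.0, 0.3],
              [0.0, 0.1, 0.3, 0.0]])

# Guilt by association: similarity-weighted sum over each disease's known genes,
# normalized by the gene's total similarity.
scores = (S @ A) / S.sum(axis=1, keepdims=True)
scores[A == 1] = np.nan  # known pairs need no prediction
top_gene_for_X = genes[int(np.nanargmax(scores[:, 0]))]  # "a", through its similarity to b
```

Gene a receives a score of 0.9 for disease X, the highest among unknown pairs, because nearly all of its similarity mass points to gene b.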

Many graph-theoretic approaches, such as diffusion,111 have been applied to gene-disease association prediction. However, they require strong assumptions about the data. Learnable methods have also been heavily investigated. Studies have shown that integrating similarity across multiple data types can help gene-disease prediction.112 Notably, Luo et al.113 fuse information from protein-protein interactions and gene ontology through a multi-modal deep belief network. Cáceres and Paccanaro114 use phenotype data to transfer knowledge from phenotypically similar diseases using a network diffusion method, where phenotypic similarity is defined by distance on the disease ontology tree. Because gene-disease relations can be viewed as a graph, GNNs are a natural modeling choice, with the task formulated as link prediction. However, GNNs rely heavily on homophily (the tendency, common in social networks, of linked nodes to be similar), whereas biomedical interaction networks exhibit more complicated connectivity. Notably, Huang et al.40 observe skip similarity in biomedical graphs and propose a novel GNN to improve gene-disease association prediction. Because some diseases, such as rare diseases, are poorly annotated compared with common diseases, predicting associations for molecularly uncharacterized diseases (those with no known biological function or associated genes) is difficult but crucial. This requires ML models that generalize to underrepresented data groups, a setting often formulated under the long-tail prediction regime.115
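
Network diffusion can take several forms; random walk with restart is one common choice and is used here purely as an illustration, not as the specific method of the cited studies. Starting from known disease genes (seeds), a walker repeatedly moves to a random neighbor or jumps back to a seed with probability `restart`, and the stationary visiting probabilities rank candidate genes. The function name, toy network, and restart value of 0.3 are assumptions.

```python
import numpy as np

def random_walk_with_restart(adj, seeds, restart=0.3, tol=1e-10, max_iter=1000):
    """Propagate disease-gene evidence over a gene network by random walk with restart.

    adj:   (n, n) symmetric non-negative adjacency matrix of a gene network.
    seeds: indices of genes already associated with the disease.
    Returns the stationary visiting probability of each gene.
    """
    col_sums = adj.sum(axis=0)
    W = adj / np.where(col_sums == 0, 1, col_sums)  # column-stochastic transition matrix
    p0 = np.zeros(adj.shape[0])
    p0[seeds] = 1.0 / len(seeds)                    # restart distribution over seed genes
    p = p0.copy()
    for _ in range(max_iter):
        p_next = (1 - restart) * W @ p + restart * p0
        if np.abs(p_next - p).sum() < tol:          # L1 change small enough: converged
            return p_next
        p = p_next
    return p

# Toy gene network shaped as a path 0-1-2-3; gene 0 is a known disease gene.
path = np.array([[0, 1, 0, 0],
                 [1, 0, 1, 0],
                 [0, 1, 0, 1],
                 [0, 0, 1, 0]], dtype=float)
p = random_walk_with_restart(path, seeds=[0])  # scores fall off with distance from gene 0
```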

Machine learning formulation

Given the known gene-disease association network and auxiliary information, predict the association likelihood for every unknown gene-disease pair. The task is illustrated in Figure 6C.

Pathway analysis and prediction

Many diseases are driven by a set of genes forming disease pathways. Pathway analysis identifies these gene sets from transcriptomics data and leads to a more complete understanding of disease mechanisms. Many statistical approaches have been proposed. For example, Gene Set Enrichment Analysis116 leverages known pathways and computes statistics on omics data to test whether any pathway is activated. However, it treats each pathway as a set and ignores the relations among its genes. Topology-based pathway analyses117 that take the gene relational graph structure into account have also been proposed. Many pathway analyses suffer from noise and yield unstable pathway activation and inhibition patterns across samples and experiments. Ozerov et al.118 introduce a clustered gene importance factor to reduce noise and improve robustness. Current pathway analysis relies heavily on network-based methods.119 Another approach is to understand potential disease mechanisms by probing explainable ML models that predict genotype-to-disease associations. Explainable artificial intelligence (AI) models identify small gene sets or gene subgraphs that contribute most to the prediction. However, this requires modeling the underlying biological processes. Many efforts have been made to simulate cell-signaling pathways and the corresponding hierarchical biological processes in silico. Karr et al.120 devised the first whole-cell approach to predict cell growth from genotype using a set of differential equations. Recently, an ML model called the visible neural network121 simulates the hierarchical biological processes (gene ontology) in a eukaryotic cell as a feedforward neural network in which each neuron corresponds to a biological subsystem. This model is trained end-to-end from genotype to cell-fitness phenotype with good accuracy. After training, a post hoc interpretability method that assigns a score to each subsystem generates a likely mechanism for the cell's fitness. This method has recently been extended to genomics data related to prostate cancer phenotypes to generate disease pathways.122
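
The core idea of the visible neural network, wiring neurons according to an ontology so that each hidden unit corresponds to a subsystem, can be illustrated with masked dense layers. This is a simplified, untrained sketch under stated assumptions: a hypothetical four-gene, two-subsystem ontology, one tanh neuron per subsystem, and random weights; it is not the published model. Because connections are masked by gene annotations, a subsystem's activation can only respond to its own genes, which is what makes per-subsystem scores interpretable.

```python
import numpy as np

def masked_layer(x, W, mask, b):
    """Dense layer with tanh whose weights are zeroed wherever mask == 0."""
    return np.tanh(x @ (W * mask) + b)

# Hypothetical toy ontology: 4 genes -> 2 subsystems -> 1 root process.
GENE_TO_SUB = np.array([[1, 0],    # gene 0 is annotated to subsystem 0
                        [1, 0],    # gene 1 is annotated to subsystem 0
                        [0, 1],    # gene 2 is annotated to subsystem 1
                        [0, 1]])   # gene 3 is annotated to subsystem 1
SUB_TO_ROOT = np.array([[1], [1]])  # both subsystems are children of the root

rng = np.random.default_rng(0)
W1, b1 = rng.normal(size=(4, 2)), np.zeros(2)
W2, b2 = rng.normal(size=(2, 1)), np.zeros(1)

def vnn_forward(genotype):
    """Return (subsystem activations, root output) for a binary genotype vector."""
    sub = masked_layer(genotype, W1, GENE_TO_SUB, b1)  # one neuron per subsystem
    root = masked_layer(sub, W2, SUB_TO_ROOT, b2)      # root output stands in for fitness
    return sub, root

# Disrupting gene 0 can only move subsystem 0; subsystem 1 stays at tanh(0) = 0.
sub, root = vnn_forward(np.array([1.0, 0.0, 0.0, 0.0]))
```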

Machine learning formulation

Given the gene expression data for a phenotype and known gene relations, identify a set of genes corresponding to disease pathways. The task is illustrated in Figure 6D.
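
As a simple statistical baseline for this formulation, the running-sum enrichment score used in GSEA can rank known pathways by how strongly their genes concentrate at the top or bottom of a phenotype-ranked gene list. The sketch below follows the weighted Kolmogorov-Smirnov-like statistic (weight exponent `p`); it omits the permutation test GSEA uses to assess significance, and the function name and gene statistics are made-up toy values.

```python
import numpy as np

def enrichment_score(scores, gene_set, p=1.0):
    """GSEA-style running-sum enrichment score of one gene set.

    scores:   dict gene -> ranking statistic (e.g., correlation with phenotype).
    gene_set: set of gene names forming a known pathway (must not cover all genes).
    p:        weight exponent; p = 0 gives the unweighted Kolmogorov-Smirnov form.
    """
    ranked = sorted(scores, key=scores.get, reverse=True)  # most positive first
    in_set = np.array([g in gene_set for g in ranked])
    weights = np.abs(np.array([scores[g] for g in ranked])) ** p
    n_miss = (~in_set).sum()
    hit = np.where(in_set, weights, 0.0) / weights[in_set].sum()  # step up at pathway genes
    miss = np.where(in_set, 0.0, 1.0 / n_miss)                     # step down elsewhere
    running = np.cumsum(hit - miss)
    return running[np.argmax(np.abs(running))]  # signed maximum deviation from zero

stats = {"A": 2.0, "B": 1.5, "C": 0.5, "D": -0.5, "E": -1.0, "F": -2.0}
es_top = enrichment_score(stats, {"A", "B"})     # pathway genes at the top: positive ES
es_bottom = enrichment_score(stats, {"E", "F"})  # pathway genes at the bottom: negative ES
```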

Machine learning for genomics in therapeutics discovery

After a drug target is identified, a campaign is initiated to design potent therapeutic agents that modulate the target and block the disease pathway. These therapeutics can be small molecules, antibodies, or gene therapies, among others. Discovery consists of numerous phases and subtasks to ensure the efficacy and safety of the therapeutics, and genomics data also play a role in this process. In this section, we review ML for genomics in therapeutics discovery under two main themes. We first investigate how small-molecule drug efficacy varies across cellular genomic contexts. We then review how ML can enable the design of various gene therapies.

Improving context-specific drug response

Precision medicine aims to develop treatment strategies based on a patient's genetic profile. This contrasts with the traditional “one-size-fits-all” approach, which assigns the same treatments to all patients with the same disease. Personalized approaches have been among the most sought-after endeavors in the field owing to their numerous advantages, such as improving outcomes and reducing side effects,4 especially in oncology, where several biomarkers could lead to drastically different treatment plans.3 Despite this promise, understanding the relations among treatments, diseases, high-dimensional genomic profiles, and the various outcomes requires large-scale experiments of combinatorial complexity.123 ML provides valuable tools to facilitate this process.

Drug response prediction

The same small-molecule drug can elicit different response levels depending on the genomic profile. For example, an anticancer drug can produce different responses in different tumors. Thus, it is crucial to generate accurate response profiles for drug-genomic profile pairs. However, experimentally testing every combination of available drugs and cell-line genomic profiles is prohibitively expensive.

An ML model can be used to predict a drug's response across a diverse set of cell lines in silico. An accurate ML model can greatly narrow the drug screening space and reduce experimental costs and resources. Various models have been proposed to improve accuracy, such as matrix factorization,124 VAEs,125 ensemble learning,126 similarity network models,127 and feature selection.128 While these are promising, one challenge is that current public databases contain a limited number of tested drugs and genomic profiles and focus on a small set of tissues or approved drug classes. It is often difficult for a model to generalize to new contexts, such as novel cell types and structurally diverse drugs, from limited samples. For realistic adoption, ML models that can generalize to new domains given only a few labeled data points are thus highly desirable. This problem fits well with the few-shot meta-learning regime. Recently, Ma et al.129 tackled the few-shot drug response prediction problem. They applied model-agnostic meta-learning to learn from screening data on a set of tissues and generalize to new contexts such as new tissue types and pre-clinical studies in mice.130 Beyond accurate prediction, domain scientists are more likely to adopt a model if they can understand how it makes its drug response prediction and which drug response mechanism it leverages. Motivated by this, Kuenzi et al.131 were the first to apply visible neural networks121 to drug response prediction, generating potential mechanisms and validating them through experiments using CRISPR, in vitro screening, and patient-derived tissue cultures.
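
Matrix factorization, one of the model families listed above, treats screening results as a partially observed drug-by-cell-line matrix and fills in the missing entries with a low-rank model. The sketch below fits the factors by plain gradient descent on observed entries with L2 regularization; the function name, hyperparameters, and toy data are assumptions rather than the setup of the cited work.

```python
import numpy as np

def factorize_response(R, rank=2, lr=0.01, reg=0.01, epochs=5000, seed=0):
    """Fill in a drug x cell-line response matrix by low-rank factorization.

    R: (n_drugs, n_cells) array of measured responses, np.nan where untested.
    Returns the dense reconstruction U @ V.T, which predicts untested pairs.
    """
    rng = np.random.default_rng(seed)
    observed = ~np.isnan(R)
    target = np.where(observed, R, 0.0)
    U = 0.1 * rng.normal(size=(R.shape[0], rank))  # drug latent factors
    V = 0.1 * rng.normal(size=(R.shape[1], rank))  # cell-line latent factors
    for _ in range(epochs):
        err = np.where(observed, U @ V.T - target, 0.0)  # residual on observed entries only
        grad_U = err @ V + reg * U
        grad_V = err.T @ U + reg * V
        U -= lr * grad_U
        V -= lr * grad_V
    return U @ V.T

# Toy screen: response = drug potency x cell-line sensitivity, one pair untested.
a = np.array([1.0, 2.0, 3.0])        # hypothetical drug potencies
b = np.array([1.0, 0.5, 2.0, 1.5])   # hypothetical cell-line sensitivities
R = np.outer(a, b)
R[2, 3] = np.nan                     # pretend drug 2 was never screened on cell line 3
pred = factorize_response(R, rank=1)
```

The rank controls how many latent drug and cell-line factors are learned; the toy matrix is exactly rank 1, so `rank=1` suffices here, whereas real screening data would call for tuning it on held-out measurements.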

Machine learning formulation

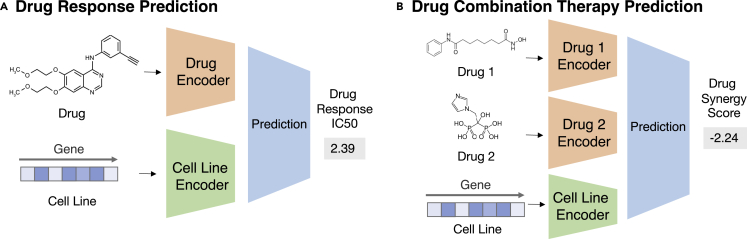

Given a drug's molecular structure and the gene expression profile of a cell line, predict the drug response in this context. The task is illustrated in Figure 7A.

Figure 7.

Task illustrations for the theme “improving context-specific drug response”

(A) A drug encoder and a cell-line encoder produce embeddings for drug and cell line, respectively, which are then fed into a predictor to estimate drug response (see “drug response prediction”).

(B) Drug encoders first map two drugs into embeddings, and a cell-line encoder maps a cell line into an embedding. The three embeddings are then fed into a predictor for drug synergy scores (see “drug combination therapy prediction”).
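
The two-encoder architecture in Figure 7A can be sketched as follows. This is an untrained NumPy forward pass under stated assumptions: drugs are represented as binary molecular fingerprints, cell lines as expression vectors, and both encoders and the predictor are small ReLU multilayer perceptrons; all names and sizes are hypothetical. In practice the weights would be trained by regressing measured responses.

```python
import numpy as np

rng = np.random.default_rng(0)

def mlp(sizes):
    """Return randomly initialized (W, b) pairs for an MLP with the given layer sizes."""
    return [(rng.normal(scale=0.1, size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def forward(params, x):
    """Apply the MLP: ReLU on hidden layers, identity on the last layer."""
    for i, (W, b) in enumerate(params):
        x = x @ W + b
        if i < len(params) - 1:
            x = np.maximum(x, 0.0)
    return x

N_BITS, N_GENES, EMB = 1024, 1000, 64     # hypothetical feature sizes
drug_encoder = mlp([N_BITS, 256, EMB])    # molecular fingerprint -> drug embedding
cell_encoder = mlp([N_GENES, 256, EMB])   # expression profile -> cell-line embedding
predictor = mlp([2 * EMB, 64, 1])         # joint embedding -> scalar response

def predict_response(fingerprint, expression):
    """Predict one response value (e.g., IC50) per (drug, cell line) row."""
    z = np.concatenate([forward(drug_encoder, fingerprint),
                        forward(cell_encoder, expression)], axis=-1)
    return forward(predictor, z)[..., 0]

# Usage on a random batch of 8 hypothetical drug-cell line pairs.
fps = rng.integers(0, 2, size=(8, N_BITS)).astype(float)
expr = rng.normal(size=(8, N_GENES))
responses = predict_response(fps, expr)   # shape (8,)
```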

Drug combination therapy prediction

Drug combination therapy, also called drug cocktails, can expand the use of existing drugs, improve outcomes, and reduce side effects. For example, drug cocktails can modulate multiple targets to provide a novel mechanism of action in cancer treatment. Also, by reducing the dosage of each drug, it may be possible to reduce adverse effects. However, experimentally screening the entire space of possible drug combinations across various cell lines is not feasible.

ML models that can predict synergistic responses given a drug pair and a cell line's genomic profile can prove valuable. Classical ML methods such as naive Bayes132 and random forests133 have shown initial success on independent external data. Deep learning methods such as DNNs134 and deep belief networks135 have shown improved performance. Integrating multi-omics cell-line data, such as microRNA expression and proteomic features, has further improved performance.136 As with drug response prediction, one important challenge is to transfer across tissue types and drug classes. Kim et al.137 pioneer this direction by using transfer learning to adapt models trained on data-rich tissues, such as brain and breast tissues, to understudied tissues such as bone and prostate tissues.
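
The source does not fix a particular synergy score. As one common reference model (an assumption here; Loewe additivity and highest single agent are alternatives), Bliss independence predicts the combined fractional inhibition of two independently acting drugs as E_A + E_B - E_A * E_B, and the synergy score is the observed effect minus this expectation. The function name below is hypothetical.

```python
def bliss_excess(e_a, e_b, e_ab):
    """Bliss excess: observed combined effect minus the Bliss-independence expectation.

    Effects are fractional inhibitions in [0, 1]. Positive values suggest synergy,
    negative values antagonism.
    """
    expected = e_a + e_b - e_a * e_b  # both drugs acting independently
    return e_ab - expected

score = bliss_excess(0.3, 0.4, 0.75)
```

For instance, with single-drug inhibitions of 0.3 and 0.4 the Bliss expectation is 0.58, so an observed combined inhibition of 0.75 gives an excess of about 0.17, a value a synergy model could be trained to regress.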

Machine learning formulation

Given a combination of drug compound structures and a cell line's genomics profile, predict the combination response. The task is illustrated in Figure 7B.
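
The three-embedding design in Figure 7B can be sketched similarly. One design choice assumed here (not stated in the source) is a shared drug encoder combined with order-invariant pair features (sum and element-wise product), so that swapping the two drugs cannot change the predicted synergy. Weights are random and untrained, and all names and sizes are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
N_BITS, N_GENES, EMB = 1024, 1000, 32               # hypothetical feature sizes

W_drug = rng.normal(scale=0.05, size=(N_BITS, EMB))   # shared drug encoder
W_cell = rng.normal(scale=0.05, size=(N_GENES, EMB))  # cell-line encoder
W_pred = rng.normal(scale=0.05, size=(3 * EMB, 1))    # synergy predictor

def encode_drug(fp):
    """Map a batch of binary fingerprints to drug embeddings."""
    return np.tanh(fp @ W_drug)

def predict_synergy(fp_a, fp_b, expression):
    """Score each drug pair in its cell line; symmetric in (fp_a, fp_b)."""
    za, zb = encode_drug(fp_a), encode_drug(fp_b)
    zc = np.tanh(expression @ W_cell)
    # Sum and element-wise product are both order-invariant pair features.
    z = np.concatenate([za + zb, za * zb, zc], axis=-1)
    return (z @ W_pred)[..., 0]

fa = rng.integers(0, 2, size=(5, N_BITS)).astype(float)
fb = rng.integers(0, 2, size=(5, N_BITS)).astype(float)
expr = rng.normal(size=(5, N_GENES))
synergy = predict_synergy(fa, fb, expr)   # one score per (pair, cell line) row
```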

Improving efficacy and delivery of gene therapy

Gene therapy is an emerging therapeutics class that delivers nucleic acid instructions into patients’ cells to prevent or cure disease. These instructions include (1) replacing disease-causing genes with healthy ones, (2) turning off genes that cause diseases, and (3) inserting genes to produce disease-fighting proteins. Special vehicles called vectors are used to deliver these instructions (cargoes) into cells and induce sufficient therapeutic effects. Many choices exist, such as naked DNA, viruses, and nanoparticles. Viral vectors have become popular owing to their natural ability to enter cells directly and replicate their genetic material. Despite the promise, numerous challenges remain in reaching the expected effect, such as the host immune response, viral vector toxicity, and off-target effects. In recent years, ML tools have been shown to help tackle many of these challenges.

CRISPR on-target outcome prediction

CRISPR/Cas9 is a biotechnology that can edit genes at precise locations. It allows the correction of genetic defects to treat disease and provides a tool for altering the genome and studying gene function. The CRISPR/Cas9 system has two important players. The Cas9 protein is an enzyme that cuts through DNA, and the CRISPR sequence guides the cut location. The guide RNA (gRNA) sequence in the CRISPR sequence determines the specificity for the target DNA sequence. While existing CRISPR applications mostly make edits through small deletions, repair is also under active research: after cutting, a DNA template is provided to fill in the missing part of the gene. In theory, CRISPR can correctly edit the target DNA sequence and even restore a normal copy, but in reality the outcome varies significantly across gRNAs.138 The outcome has been shown to depend on factors such as gRNA secondary structure and chromatin accessibility.139 Outcome properties of interest include insertion/deletion (indel) length, indel diversity, and the fraction of insertions and frameshifts. Thus, it is crucial to design a gRNA sequence such that the CRISPR/Cas system achieves its effect on the designated target (also called on-target).
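
The outcome properties listed above can be computed from a measured repair-outcome profile. This sketch assumes a simplified profile that maps each signed indel length (+n for an n-bp insertion, -n for an n-bp deletion) to a read count; real profiles distinguish distinct sequences of the same length. Indel diversity is measured here as Shannon entropy, one possible choice among several, and the function name is hypothetical. An indel causes a frameshift when its length is not a multiple of 3.

```python
import math

def outcome_summary(indel_counts):
    """Summarize a CRISPR repair-outcome profile.

    indel_counts: dict mapping signed indel length (+n insertion, -n deletion)
    to the number of edited reads with that outcome.
    """
    total = sum(indel_counts.values())
    freqs = {k: v / total for k, v in indel_counts.items()}
    frameshift = sum(f for k, f in freqs.items() if k % 3 != 0)  # length not a multiple of 3
    insertion = sum(f for k, f in freqs.items() if k > 0)
    mean_len = sum(abs(k) * f for k, f in freqs.items())
    # Shannon entropy (bits) of the outcome distribution as a diversity measure.
    diversity = -sum(f * math.log2(f) for f in freqs.values() if f > 0)
    return {"frameshift_fraction": frameshift, "insertion_fraction": insertion,
            "mean_indel_length": mean_len, "diversity_bits": diversity}

# Toy profile: 50 reads with a 1-bp insertion, 25 with a 1-bp and 25 with a 3-bp deletion.
summary = outcome_summary({+1: 50, -1: 25, -3: 25})
```

For this toy profile, the frameshift fraction is 0.75 (the 3-bp deletion preserves the reading frame), the insertion fraction is 0.5, and the diversity is 1.5 bits; an on-target outcome model would be trained to predict such quantities from the gRNA.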

ML methods that accurately predict the on-target outcome given the gRNA would facilitate gRNA design. Many classic ML methods have been investigated for predicting various repair outcomes from the gRNA sequence, such as linear models,140,141 support vector machines,142 and random forests.143 However, they do not capture high-order non-linearity in gRNA features. Deep learning models that apply CNNs to learn gRNA features automatically show further improved performance.144,145 Despite the promise, numerous challenges remain. For example, ML models are data hungry, yet only limited data from CRISPR knockout experiments are available, which limits the models' generalizability to new contexts such as new tissues. Moreover, current models can only predict outcomes and cannot explain the mechanism by which a gRNA sequence leads to a given CRISPR outcome. For a high-stakes biotechnology like this, explainability is crucial, as an unexplained adverse effect of an ML-designed gRNA sequence could be detrimental.
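
The CNN approach can be sketched as follows: one-hot encode the gRNA, slide motif filters along it, keep each filter's strongest activation, and combine these into a score. This untrained NumPy sketch uses random filters, a hypothetical filter width of 5, hypothetical function names, and an arbitrary 20-nt example sequence; U is mapped to T so that RNA and DNA spellings encode identically. A trained model would fit the filters and output weights to measured on-target outcomes and could add auxiliary inputs such as chromatin accessibility.

```python
import numpy as np

BASES = "ACGT"

def one_hot(seq):
    """Encode a gRNA/DNA sequence as a (len, 4) one-hot matrix in A, C, G, T order."""
    x = np.zeros((len(seq), 4))
    for i, base in enumerate(seq.upper().replace("U", "T")):
        x[i, BASES.index(base)] = 1.0   # raises ValueError for ambiguous bases such as N
    return x

def conv1d_valid(x, filters):
    """Slide (k, 4, n_filters) filters over a (len, 4) input -> (len - k + 1, n_filters)."""
    k = filters.shape[0]
    windows = np.stack([x[i:i + k] for i in range(len(x) - k + 1)])  # (len-k+1, k, 4)
    return np.einsum("wkc,kcf->wf", windows, filters)

rng = np.random.default_rng(0)
FILTERS = rng.normal(scale=0.1, size=(5, 4, 8))  # 8 hypothetical motif detectors of width 5
W_OUT = rng.normal(scale=0.1, size=8)

def cnn_score(grna):
    """Untrained CNN forward pass: conv -> ReLU -> global max pool -> linear score."""
    h = np.maximum(conv1d_valid(one_hot(grna), FILTERS), 0.0)
    return float(h.max(axis=0) @ W_OUT)

score = cnn_score("GACGCATAAAGATGAGACGC")  # arbitrary 20-nt example sequence
```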

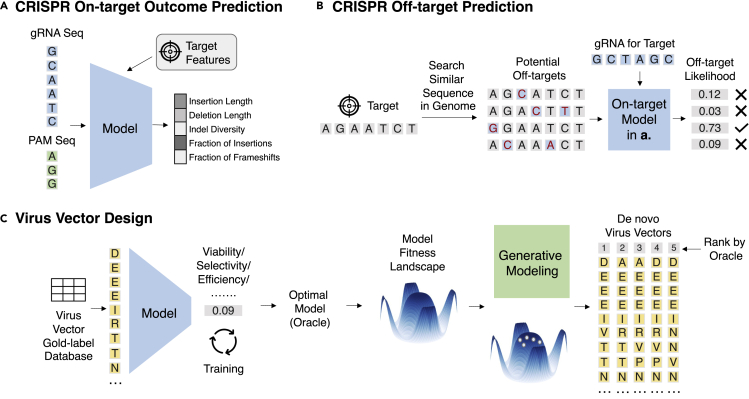

Machine learning formulation

With a fixed target, given the gRNA sequence and other auxiliary information such as target gene expression and epigenetic profile, predict its on-target repair outcome. The task is illustrated in Figure 8A.

Figure 8.

Task illustrations for the theme “improving efficacy and delivery of gene therapy”

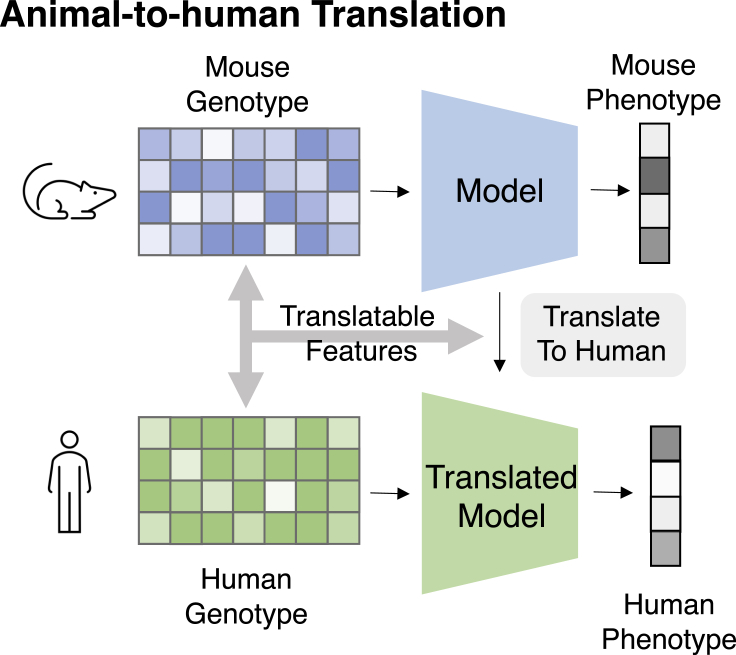

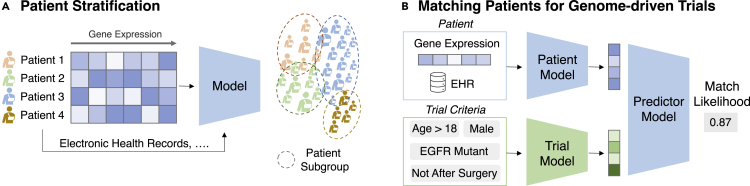

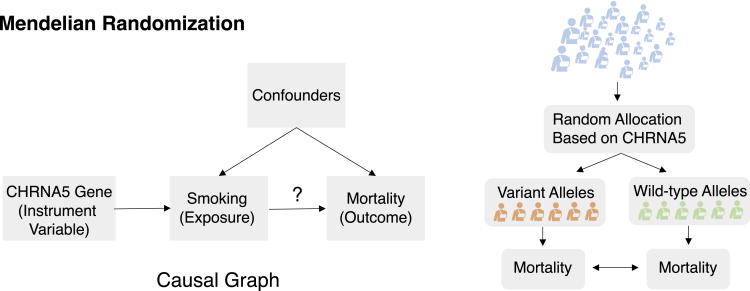

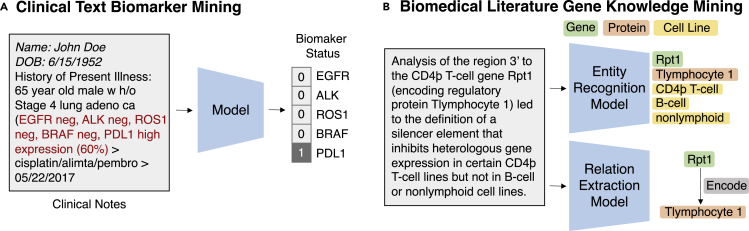

(A) A model predicts various gene-editing outcomes given the gRNA sequence and the target DNA features (see “CRISPR on-target outcome prediction”).