SUMMARY

Engineering gene and protein sequences with defined functional properties is a major goal of synthetic biology. Deep neural network models, together with gradient ascent-style optimization, show promise for sequence design. The generated sequences can however get stuck in local minima and often have low diversity. Here, we develop deep exploration networks (DENs), a class of activation-maximizing generative models, which minimize the cost of a neural network fitness predictor by gradient descent. By penalizing any two generated patterns on the basis of a similarity metric, DENs explicitly maximize sequence diversity. To avoid drifting into low-confidence regions of the predictor, we incorporate variational autoencoders to maintain the likelihood ratio of generated sequences. Using DENs, we engineered polyadenylation signals with more than 10-fold higher selection odds than the best gradient ascent-generated patterns, identified splice regulatory sequences predicted to result in highly differential splicing between cell lines, and improved on state-of-the-art results for protein design tasks.

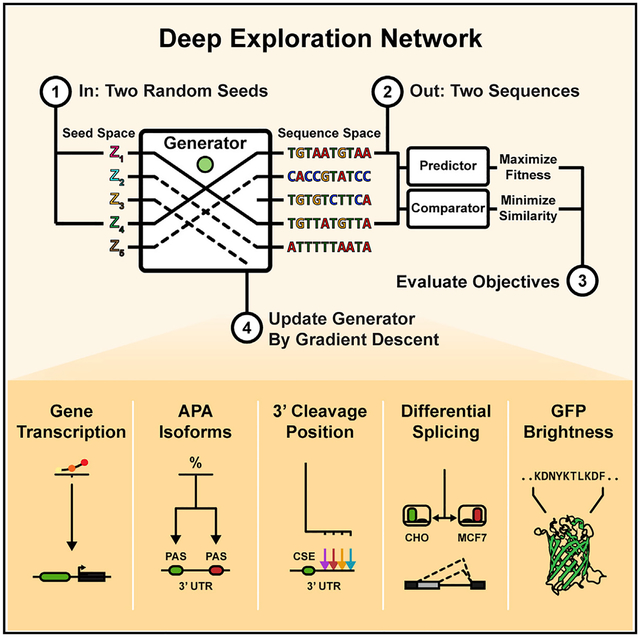

Graphical Abstract

In Brief

A generative neural network jointly optimizes fitness and diversity in order to design maximally strong polyadenylation signals, differentially used splice sites among organisms, functional GFP variants, and transcriptionally active gene enhancer regions.

INTRODUCTION

Designing DNA sequences for a target cellular function is a difficult task, as the cis-regulatory information encoded in any stretch of DNA can be very complex and affect numerous mechanisms, including transcriptional and translational efficiency, chromatin accessibility, splicing, 3′ end processing, and more. Similarly, protein design is challenging due to the non-linear, long-ranging dependencies of interacting residues. Yet, sequence-level design of genetic components and proteins has been making rapid progress in the past few years. Part of this advancement can be attributed to the collection of large biological datasets and improved bioinformatics modeling. In particular, deep learning has emerged as state of the art in predictive modeling for many sequence-function problems (Alipanahi et al., 2015; Zhou and Troyanskaya, 2015; Quang and Xie, 2019; Avsec et al., 2019; Kelley et al., 2016, 2018; Greenside et al., 2018; Jaganathan et al., 2019; Cuperus et al., 2017; Eraslan et al., 2019). These models are now beginning to be combined with design methods and high-throughput assays to forward-engineer DNA and protein sequences (Rocklin et al., 2017; Biswas et al., 2018; Sample et al., 2019; Bogard et al., 2019). The ability to code regulatory DNA and protein function could prove useful for a wide range of applications. For example, controlling cell-type-specific transcriptional, translational, and isoform activity would enable engineering of highly specific delivery vectors and gene circuits. Functional protein design, e.g., generating heterodimer binders or proteins with optimally stable 3D structures, could prove transformative in T cell therapy and drug development.

Discrete search heuristics such as genetic algorithms have long been considered the standard method for sequence design (Eiben and Smith, 2015; Shukla et al., 2015; Mirjalili et al., 2020). Recently, however, deep generative models, such as variational autoencoders (VAEs) (Kingma and Welling, 2013), autoregressive models, and generative adversarial networks (GANs) (Goodfellow et al., 2014), have been adapted for biomolecules (Riesselman et al., 2019; Costello and Martin, 2019; Repecka et al., 2019). Methods based on directed evolution have been proposed to condition such generative models for a target biological property (Gupta and Zou, 2019; Brookes et al., 2019).

Alternatively, differentiable methods based on activation-maximization can directly optimize sequences for maximal fitness by gradient ascent through a neural network fitness predictor. At its core, sequence design via gradient ascent treats the input pattern as a position weight matrix (PWM). A neural network, pre-trained to predict a biological function, is used to evaluate the PWM fitness. The gradient of the fitness score with respect to the PWM parameters is used to iteratively optimize the PWM by gradient ascent. This class of algorithms, applied to sequences, was first employed to visualize transcription factor-binding motifs learned from chromatin immunoprecipitation sequencing (ChIP-seq) data (Lanchantin et al., 2016). A modified version of the algorithm, with gradient estimators to allow passing sampled one-hot coded patterns as input, was used to design alternative polyadenylation (APA) sites (Bogard et al., 2019). The method has also been used to indirectly optimize sequences with respect to a fitness predictor by traversing a pre-trained generative model and iteratively updating its latent input (Killoran et al., 2017).

Gradient ascent-style sequence optimization is a continuous relaxation of discrete nucleotide-swapping searches and as such makes efficient use of neural network differentiability; rather than naively trying out random changes, we follow a gradient to make stepwise local improvements on the fitness objective. Still, the basic method has a number of limitations. First, it might get stuck in local minima and the fitness of the converged patterns is dependent on PWM initialization (Bogard et al., 2019). Second, it is computationally expensive to re-run gradient ascent for every sequence to generate. Third, the method has no means of controlling the diversity of optimized sequences. However, generating large, diverse sets of sequences might be necessary, given that the predictor has been trained on finite empirical data and will likely incorrectly score certain sequences. Methods that generate diverse sequences effectively increase the likelihood that some candidates have high fitness when tested experimentally.

To address these limitations, we developed deep exploration networks (DENs), an activation-maximizing generative neural network capable of optimizing sequences for a differentiable fitness predictor. The core contribution of DENs is to explicitly control sequence diversity during training. By penalizing any two generated sequences on the basis of similarity, we force the generator to traverse local minima and explore a much larger region of the cost landscape, effectively maximizing both sequence fitness and diversity (Figure 1A). Because DENs are parametric models, we can efficiently sample many sequences after having trained the generator. The architecture shares similarities with Killoran et al. (2017) but instead of optimizing the latent input seed of a pre-trained GAN, we optimize the weights of the generator itself for any pair of input seeds.

Figure 1. DEN Architecture.

(A) A sequence produced by an input seed to a generative model (red-blue) shares the cost landscape with other generated sequences (orange). Patterns are penalized by similarity during training, resulting in an updated generator that transforms the red-blue seed into a different sequence, away from other patterns and potentially toward a new local minimum.

(B) Sequences are optimized on the basis of a pre-trained fitness predictor for some target function. We consider five different engineering applications: maximizing gene transcription, maximizing APA isoform selection, targeting 3′ cleavage positions, designing differential splicing between organisms, and improving green fluorescent proteins.

(C) In DENs, the generator is executed twice (independently) on two random seeds (z1, z2), producing two sequence patterns. One of the patterns is evaluated by the predictor, resulting in a fitness cost. The two patterns are also penalized on the basis of a similarity cost, and the generator is updated to minimize both costs.

(D) The PWM is multiplied by a mask (zeroing fixed nucleotides), and a template is added (encoding fixed letters). One-hot-coded patterns are outputted by sampling nucleotides from stochastic neurons, and gradients are propagated by straight-through estimation.

See also Figure S1.

For specific design tasks, the fitness predictor might quickly lose its predictive accuracy when the generated sequences move away from the training data in sequence space, making unbounded optimization of the predictor ill-suited. For example, stably folding proteins reside along a narrowly defined manifold in the space of all possible sequences and most protein datasets only contain measurements on this manifold. To overcome this issue, we integrate importance sampling of VAEs into the differentiable training pipeline of DENs, which allows us to maintain the likelihood ratio, or confidence, in generated sequences with respect to the measured data.

We evaluate the utility of DENs on several synthetic biology applications (Figure 1B): first, we develop a basic model to generate 3′ UTR sequences with target APA isoform abundance. Second, we extend the model to do conditional multi-class generation in the context of guiding 3′ cleavage position. Third, we apply DENs to construct splice regulatory sequences that are predicted to result in maximal differential splicing between two organisms. Fourth, on the level of DNA regulation, we benchmark DENs against competing methods when designing maximally transcriptionally active gene enhancer sequences. Finally, we use DENs for rational protein design and demonstrate state-of-the-art results on the task of designing green fluorescent protein (GFP) sequences.

RESULTS

Exploration in Deep Generative Models

The DEN architecture is based around a generative neural network and a differentiable fitness predictor (Figure 1C). Given an input pattern x, is used to predict a property that we wish to design new patterns for. Here, x is a DNA or protein sequence represented as a one-hot-coded matrix x ∈ {0, 1}N×M, where N denotes sequence length and M the number of nucleotides (4) or amino acids (20). Given a D-dimensional seed vector as input, the generator outputs an approximate one-hot-coded pattern , where f transforms the real-valued nucleotide scores generated by into an approximate one-hot-coded representation, mask matrix M zeroes out fixed sequence positions, and template matrix T encodes fixed nucleotides (Figure 1D). The predictor output is used to define a fitness cost , and the overall goal is to optimize such that the generated sequences minimize this cost. Only is optimized, having pre-trained to accurately predict the target biological function.

By strictly minimizing , the generator will likely only learn to produce one single pattern, regardless of z, given that it is trivially optimal to always output the pattern located at the bottom of the local fitness cost minimum. There might however exist much better minima. Additionally, as the predictor might be inaccurate for certain sequences, the generator should ideally learn to sample many diverse patterns with maximal fitness scores. The distinguishing feature of a DEN is to force the generator to map randomly sampled vectors from the D-dimensional uniform distribution U(−1, 1)D to many different sequences with maximal fitness score. This is achieved by making the generator compete with itself; we run twice at each step of the optimization for a pair of independently sampled seeds z(1), z(2) ~ U(−1, 1)D and penalize the generator on the basis of both the fitness cost and a diversity cost evaluated on the generated patterns x(z(1)), x(z(2)):

| (Equation 1) |

This monte-carlo optimization differs from a classical GAN (Goodfellow et al., 2014), which is typically trained to minimize some cost such that an adversarial discriminator cannot distinguish between the real data and the distribution generated by . Also note that, in contrast to Killoran et al. (2017) where optimization is done on a single input seed of a pre-trained GAN, , we optimize the generator itself for all pairs of generator seeds. As training progresses, the generator will become injective over different seeds z ~ U(−1, 1)D. Consequently, we can sample diverse high-fitness sequences by drawing samples from U(−1, 1)D and transforming them through .

We investigate several cost functions for . First, a cosine distance is used to directly penalize one-hot patterns on the basis of the fraction of identical nucleotides, considering multiple ungapped alignments (Figures S1A and S1B). We found that allowing a fraction of the sequences to be identical up to an allowable margin without incurring any cost gives the best results. We also evaluate a latent diversity penalty, where sequences are implicitly penalized on the basis of similarity in a (differentiable) latent space. Here, we use one of the fully connected layers of the predictor as a latent feature space, coupled with a cosine similarity cost function (Figures S1C and S1D).

The generator can be trained to minimize the compound cost of Equation 1 by using any gradient-based optimizer. We built the DEN in Keras (Chollet, 2015) and optimized the generator with Adam (Kingma and Ba, 2014). For all design tasks, we set the diversity cost coefficient (1 − λ) to a sufficiently large value such that the cost approaches the allowable similarity margin early in the training. Function f used above to transform the real-valued nucleotide scores generated by into an approximate one-hot-coded representation x(z) must maintain differentiability. We investigate two methods for achieving this: (1) representing x(z) as a softmax-relaxed PWM (Killoran et al., 2017; Stewart et al., 2018), and (2) representing x(z) by discrete one-hot-coded samples and approximating the gradient by using a softmax straight-through estimator (Bengio et al., 2013; Courbariaux et al., 2016; Chung et al., 2016; Bogard et al., 2019). See STAR Methods for further details on cost functions, differentiable sequence representations, and DEN training.

Engineering APA Isoforms

We first demonstrate DENs in the context of APA. APA is a post-transcriptional 3′ end processing event where competing polyadenylation (polyA) signals (PASs) in the same 3′ UTR give rise to multiple mRNA isoforms (Figure 2A) (Di Giammartino et al., 2011; Tian and Manley, 2017). A typical PAS consists of a core sequence element (CSE), often the hexamer AATAAA, as well as diverse upstream and downstream sequence elements (USE, DSE). Cleavage and polyadenylation occur approximately 17 nt downstream of the CSE within the DSE. In a competitive situation with multiple PASs in the same 3′UTR, the sequence of each PAS is the major determinant of isoform selection. We used APA REgression NeT (APARENT)—a neural network for predicting APA isoform abundance—as the predictor (Bogard et al., 2019) (Figure S2A). The generator followed a DC-GAN architecture (Radford et al., 2015) (Figure S2B).

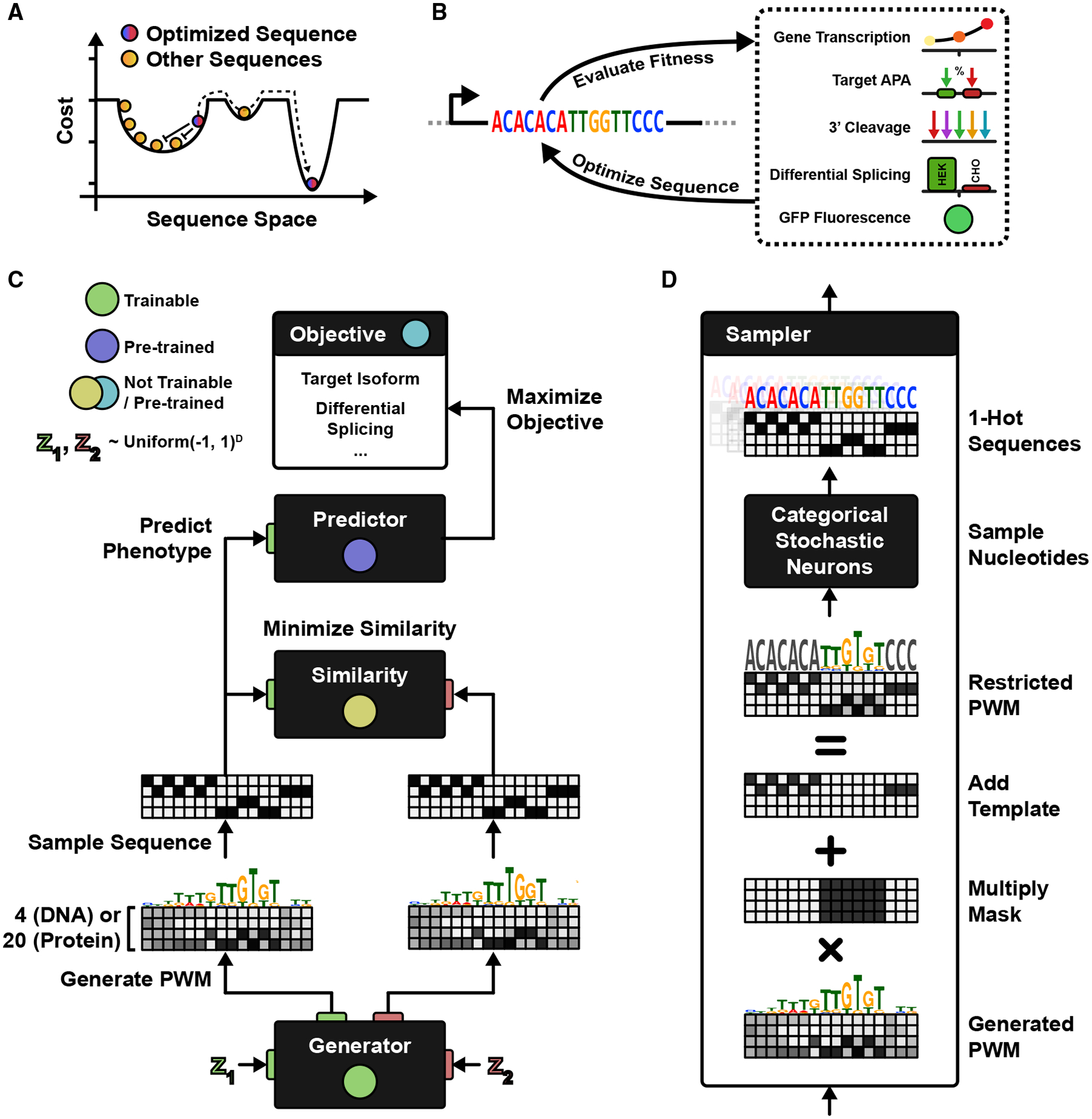

Figure 2. Engineering APA Isoforms.

(A) Two PASs in a 3′ UTR compete for polyadenylation. The generative task is to design proximal PASs, which are selected at a target proportion.

(B) Five DENs were trained to generate sequences according to the APA isoform targets 5%, 25%, 50%, 75%, and 100% (“max”), with a sequence similarity margin (the fraction of nucleotides allowed to be identical) of 30% for the first four DENs and 50% for the max-target DEN. (Top) Predicted isoform proportions (n = 1,000 sequences per generator). Mean isoform proportion per generator (target in parenthesis): (5%) 5.25%, (25%) 25.06%, (50%) 50.6%, (75%) 74.2%, and (“max”) 99.98%. (Bottom) Pixel grid where rows denote sequences and columns nucleotide position (n = 20 sequences per generator). 0% duplication rate at 100,000 sampled sequences by any generator.

(C) The max-target DEN was re-trained with a low diversity cost coefficient (left) and a high coefficient (right). (Top) Predicted isoform proportions (n = 1,000 sequences). (Bottom) Sequence pixel grid (n = 100 sequences). Low coefficient (left): mean isoform log odds = 6.06, 99.5% duplication rate (n = 100,000 sequences). High coefficient (right): mean isoform log odds = 8.91, 0% duplication rate (n = 100,000 sequences).

(D) The max-target DEN was retrained for different values of the allowable sequence similarity margin. Plotted are the 50th/99th percentile of predicted fitness scores (isoform log odds) and pairwise normalized edit distances (n = 4,096 sequences per generator).

(E) Experiment validating the performance of the generated sequences. Two max-target sequences generated by the DEN were synthesized as either the proximal or distal PASon, a minigene reporter in competition with baseline gradient ascent-generated sequences (Bogard et al., 2019). Isoform odds ratios (preference fold changes) of the DEN PAS were assayed by using qPCR. Shown are the δδ cycle threshold values and associated odds ratios.

We trained 5 DENs, each tasked with generating PASs according to the following target-isoform proportions: 5%, 25%, 50%, 75%, and maximal use (“max”); there was a 30% allowable similarity margin for the first four DENs and a 50% margin for the max-target DEN. In order to fit each generator to its target, we defined the fitness cost as the symmetric Kullback–Leibler (KL) divergence between the predicted and target-isoform proportion (Figure S2C; see STAR Methods for details). After training, each DEN could accurately generate sequences according to its objective (Figure 2B, top), as the mean of each generated isoform distribution was within 1% from the target proportion. The generated sequences for the max-target objective were predicted to be extremely efficient PASs (on average 99.98% predicted use). All five DENs exhibited a high degree of diversity (Figures 2B, bottom, S2D, and S2E; Videos S1 and S2); when sampling 100,000 sequences per generator, no two sequences were ever identical (0% duplication rate). Estimated from 1,000 sampled sequences, each generator had a hexamer entropy between 9.11 and 10.0 bits (of 12 bits maximum).

The optimization trajectories show that, as training progresses, the DENs immediately enforce sequence diversity (35%–45% normalized edit distance) whereas the fitness scores quickly converge to their optima within only 5,000 updates (Figure S2H). The trajectories also highlight differences when using one-hot samples with straight-through gradients, the continuous softmax relaxation, or a combination of both as input to the predictor. In general, the straight-through method consistently outperformed the softmax relaxation. The overall best configuration was achieved by using both representations and walking down the average gradient, with an explicit PWM entropy penalty. Using a nearest neighbor (NN) search, we also directly compared the DEN-generated sequences with PASs with measured isoform proportions from one of the massively parallel reporter assay (MPRA) training datasets (Bogard et al., 2019) (Figures S2F and S2G). The NN-inferred mean isoform proportion of each generator was close to its respective target (6.00%, 25.1%, 53.2%, and 73.7% respectively for targets 5%, 25%, 50%, and 75%), with a low standard deviation (between 3.03% and 7.18%). For the max-isoform target, the NN-inferred mean proportion was above the 99th percentile of measured values.

To evaluate the importance of exploration during training, we re-trained the max-isoform DEN with two different parameter settings; in one instance, we lowered the diversity cost coefficient to a small value, and in another instance, we increased it (Figure 2C). With a low coefficient, the generator only learned to sample few, low-diversity sequences, all of similar isoform log odds (Figure 2C, left; mean isoform log odds = 6.06, 99.5% duplication rate at 100,000 samples). With an increased coefficient, generated sequences became much more diverse and the mean isoform odds increased almost 20-fold (Figure 2C, right; mean isoform log odds = 8.91, 0% duplication rate). These results indicate that exploration during training drastically improves the final fitness of the generator. To find the optimal similarity penalty (for this design problem), we re-trained the max-isoform DEN under different similarity margins (the fraction by which we allow sequences to be similar), measuring the 50th and 99th percentile of fitness scores and sequence edit distances of each converged generator (Figure 2D). Both fitness and diversity increased up to a sequence dissimilarity of 40%. Beyond that point, the generated fitness scores monotonically decreased.

Finally, we evaluated the utility of promoting diversity in latent feature spaces. To this end, we re-trained the max-isoform DEN with an additional cosine similarity penalty enforced on the latent feature space of the fully connected hidden layer of APARENT (Figure S2I). Although the median fitness score dropped, the 99th percentile of generated scores remained above the 75th percentile of the original generator. Furthermore, the median normalized sequence edit distance increased from 38% to 50%. Interestingly, diversity in the latent space of an independently trained APA VAE also increased.

Experimental Validation of Deep Exploration Sequences

As suggested in Figures 2C and 2D, exploration increases the capability of generating high-fitness sequences. Next, we characterized DEN-generated PASs experimentally and compared their fitness with sequences generated by the baseline gradient ascent method. To that end, we synthesized APA reporters with two adjacent PASs (Figure 2E): each reporter contained one of the newly generated max-target PASs as well as one of the strongest gradient ascent-optimized signals from (Bogard et al., 2019). It is believed that the proximal PAS is slightly preferred compared with the distal PAS (Bentley, 2014). In order to discount this bias, we experimentally assayed both signal orientations where the DEN-generated PAS was either the proximal signal or the distal signal. The reporters were cloned onto plasmids and delivered to HEK293 cells. We quantified the expressed RNA isoform levels by using a qPCR assay, measuring the Ct values of total and distal RNA, respectively. Using Ct differences to estimate odds ratio lower bounds, we found that the DEN-generated sequences were on average 11.6-fold more preferred (usage odds increase) than the gradient ascent-generated sequences (Figure 2E). To put this in perspective, the strongest gradient ascent sequence had usage odds of 127:1 (99.22%) in relation to a distal bGH PAS separated by 200 nt. The DEN sequences would have usage odds of 1,481:1 (99.93%) in relation to the same signal.

A Comparison of Generative Models for Sequence Design

We carried out extensive benchmark comparisons between DENs and competing methods on three tasks (Figure 3A): (1) designing PASs for maximal polyadenylation isoform abundance (APARENT) (Bogard et al., 2019), (2) designing gene enhancer sequences for maximal transcriptional activity (MPRA-DragoNN) (Movva et al., 2019; Ernst et al., 2016), and (3) designing longer (1,000 nt) gene enhancer regions with maximal SPI1-binding score (DragoNN). We compared DENs with 6 competing methods: (1) GANs trained on the subset of the predictor’s data with the highest measured fitness score (Wang et al., 2019b), (2) activation-maximization of GANs (AM-GAN) (Killoran et al., 2017), (3) feedback-GANs (FB-GANs) (Gupta and Zou, 2019), (4) optimizing a single softmax-relaxed PWM by gradient ascent and sampling sequences from it (the baseline method), (5) optimizing several PWMs by gradient ascent, and (6) simulated annealing with the Metropolis acceptance criterion (Kirkpatrick et al., 1983; Metropolis et al., 1953). For FB-GAN, we tried using both a fixed feedback threshold (as in the original publication) as well as an adaptive threshold that was adjusted at each iteration. Only the two most competitive methods, DENs and simulated annealing, were compared on the third design task. See STAR Methods for further details. Fitness scores were compared to the median score of the baseline method (PWM gradient ascent), where the median score of random sequences was treated as 0% (i.e., −100%) of the baseline value.

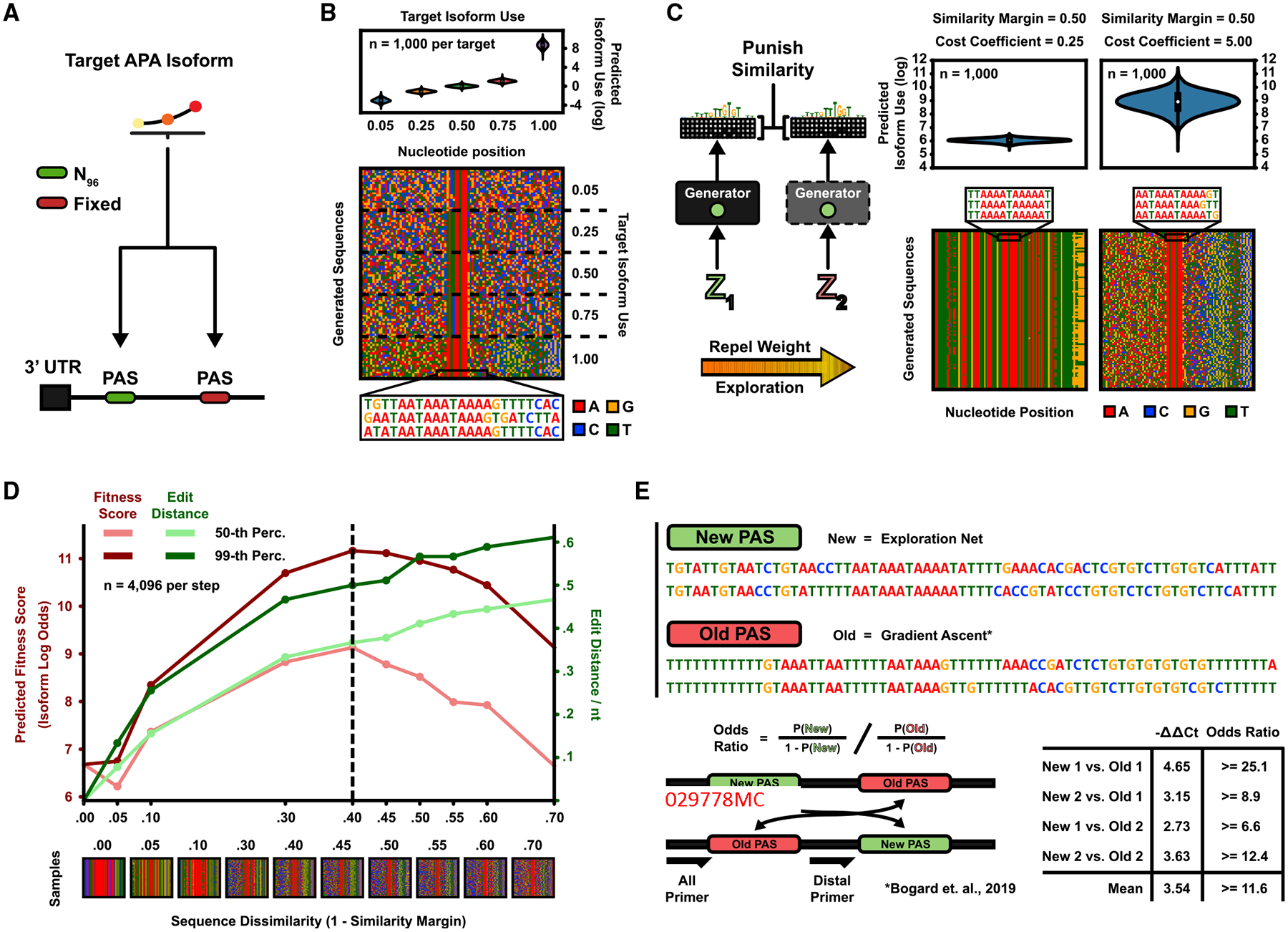

Figure 3. Comparison of Sequence Design Methods.

(A) Design methods were benchmarked on three tasks: (1) designing PASs with maximal isoform abundance (APARENT) (Bogard et al., 2019), (2) designing enhancer sequences for maximal transcription activity (MPRA-DragoNN) (Movva et al., 2019), and (3) designing sequences for maximal SPI1 binding (DragoNN).

(B) Seven design methods were evaluated (listed in top legend). Parametric models (GAN, FB-GAN, and DEN) were trained to convergence and subsequently used to sample n = 4,096 sequences. The GAN was trained on high-fitness data for each design task. FB-GAN and AM-GAN were based on GANs trained on a uniform subset of data. FB-GAN used an adaptive feedback threshold. DEN was trained with 50% sequence similarity margin for the first two design tasks (APARENT and MPRA-DragoNN). For the final task (DragoNN), we tried two latent penalties (70% or 90% margin). Per-sequence methods (AM-GAN, PWM Gradient Ascent, and simulated annealing) were re-initialized and optimized n = 4,096 times. (Top) Predicted fitness scores. (Bottom) Normalized pairwise sequence edit distances. Dashed lines indicate DEN median scores. See also Figures S3C–S3F.

(C) Extrapolated cumulative number of iterations (total sequence budget; y axis) required by DEN and simulated annealing to generate 1,000, 100,000, and 10,000,000 sequences (x axis) with a median predicted fitness score of 80% of the maximum value. Extrapolations were based on 960 optimized samples per method. We trained and evaluated two versions of DEN, where the number of one-hot samples used for the straight-through approximation during training was either 1 (yellow) or 10 (orange).

See also Figure S3.

For the APA design task, methods DEN, FB-GAN (with adaptive feedback threshold), and simulated annealing generated sequences with fitness score medians that were approximately +115% compared with baseline (Figures 3B, left, S3C, and S3D). However, sequence diversity was lower for FB-GAN (15% median normalized edit distance) compared with DEN (35%) and simulated annealing (45%). DEN-generated diversity could be increased by changing the allowable similarity margin, at the cost of diminished fitness scores (Figure S3C, left). However, the fitness scores were still higher than the majority of competing methods. Although sequence diversity increased for FB-GAN with fixed feedback threshold (45% median edit distance; Figure S3C, left), its median fitness score dropped significantly (−23% of the baseline median). Similarly, fitness scores were low for GAN and activation-maximization of GAN (−61.5% and −46.1% below baseline, respectively). Measured as the total number of sequences sampled during optimization, DEN converges to near-optimal fitness scores in fewer iterations than all other methods (Figure S3C, right). The results were more pronounced for the gene enhancer design task (Figures 3B, middle, S3E, and S3F): fitness score medians were +600% and +660% compared with baseline for DEN and simulated annealing respectively, whereas FB-GAN had a median score of +200%. DEN had approximately 3% lower median edit distance compared with baseline, but it was 17% higher compared with FB-GAN. Overall, DEN and simulated annealing generated sequences of comparable fitness, but simulated annealing was more diverse (between 7% and 10% increased edit distance). In fact, simulated annealing is a meta-heuristic known to find near-global minima given enough iterations (Wales and Doye, 1997), and the method optimally finds diverse basins by starting from random sequences. But simulated annealing is fundamentally more computationally expensive when generating many samples; the method must be re-initialized and optimized for every sequence to make. For the first two design tasks, extrapolations show that DEN can generate 100,000 sequences in fewer iterations (total sequence budget) than simulated annealing, which requires a similarly sized budget to produce only 1,000–10,000 sequences of comparable fitness (Figures 3C and S3G; the exact improvement depends on whether we use multiple one-hot samples for the straight-through approximation during DEN training). For the third task where sequences are longer, DEN can generate 10 million sequences with the same sequence budget simulated annealing requires for 1,000–10,000 sequences (Figures 3B, right, and S3G, right).

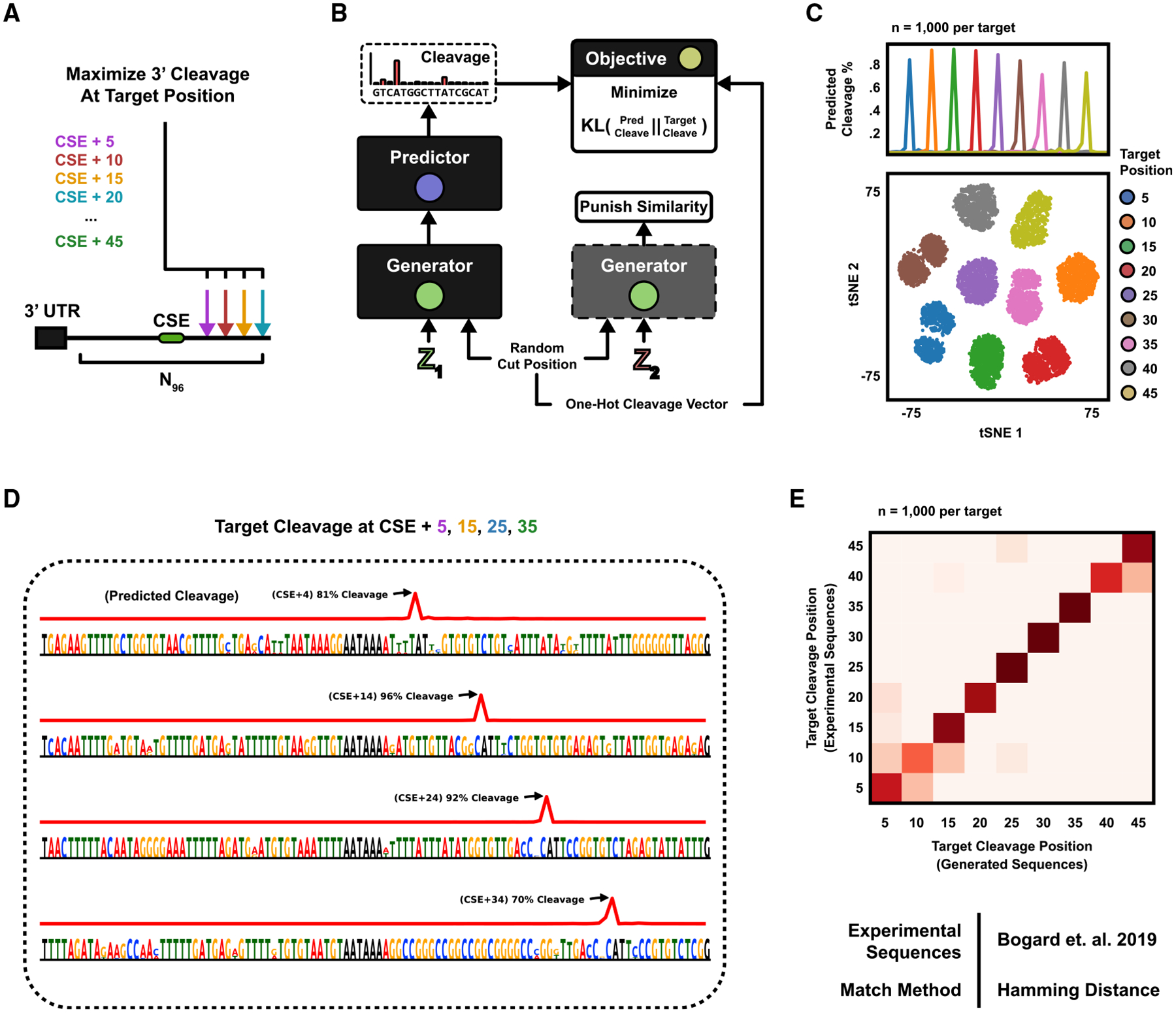

Engineering 3′ Cleavage Position

The next design task is closely related to APA, but rather than multiple competing PASs, we here concern ourselves with the position of 3′ cleavage within a single signal (Figure 4A). Cleavage occurs downstream of the central polyadenylation element—the CSE hexamer—however, the exact position and magnitude are tightly regulated by a complex code (Elkon et al., 2013). The predictor used for APA above—APARENT—can also predict the 3′ cleavage distribution, and so we re-use the model here (Figures S4A and S4B). Tasked with generating sequences that maximize cleavage at 9 distinct positions, we constructed a multi-class exploration network with a generator architecture similar to class-conditional GANs (Mirza and Osindero, 2014) (Figures 4B and S4C), where an embedding layer transforms the class label (target cut position) into a feature vector, which is concatenated onto every layer of the generator. We specify the fitness cost as the negative log likelihood of cleaving at the target position (given the predicted cleavage distribution of generated sequences).

Figure 4. Engineering 3′ Cleavage Positions.

(A) The 3′ mRNA cleavage position is governed by a cis-regulatory code within the PAS. The generative task is formulated as designing PASs, which maximize cleavage at target nucleotide positions downstream of the central hexamer (CSE) AATAAA.

(B) A class-conditional DEN is optimized to generate sequences that are predicted to cleave according to a randomly sampled target cut position. The sampled cut position is also supplied as input to the generator.

(C) The DEN was trained to generate sequences with maximal cleavage at 9 positions, +5 to +45 nt downstream of the CSE, with 50% similarity margin. (Top) Mean predicted cleavage profile (n = 1,000 sequences per target position). Predicted versus target cut position R2 = 0.998. (Bottom) All 9,000 sequences were clustered in tSNE and colored by target position.

(D) Example sequences generated for target positions +5, +15, +25, and +35. 0% duplication rate (n = 100,000 sequences). Hexamer entropy = 9.07 of 12 bits.

(E) The newly generated sequences were compared against gradient ascent-generated sequences for the same target (Bogard et al., 2019) by defining each cluster centroid as the mean one-hot pattern of all DEN-generated sequences and assigning each gradient ascent-pattern to the closest centroid on the basis of L1 distance. Agreement = 0.87.

After training, the generator could sample diverse sequences with highly specific cleavage distributions given an input target position (Figures 4C and 4D; predicted versus target cut position R2 = 0.998, 0% duplication rate at 100,000 sampled sequences; Figures S4D–S4F; Videos S3 and S4). When clustering the sequences in t-distributed stochastic neighbor embedding (tSNE) (Maaten and Hinton, 2008) (Figure 4C, bottom), we observe clearly separated clusters based on the target cleavage position. We further confirmed the function of the sequences by comparing them with a set of gradient ascent-optimized sequences, which had previously been validated experimentally with RNA-seq (Bogard et al., 2019) (Figure 4E; nearest neighbor agreement = 87%).

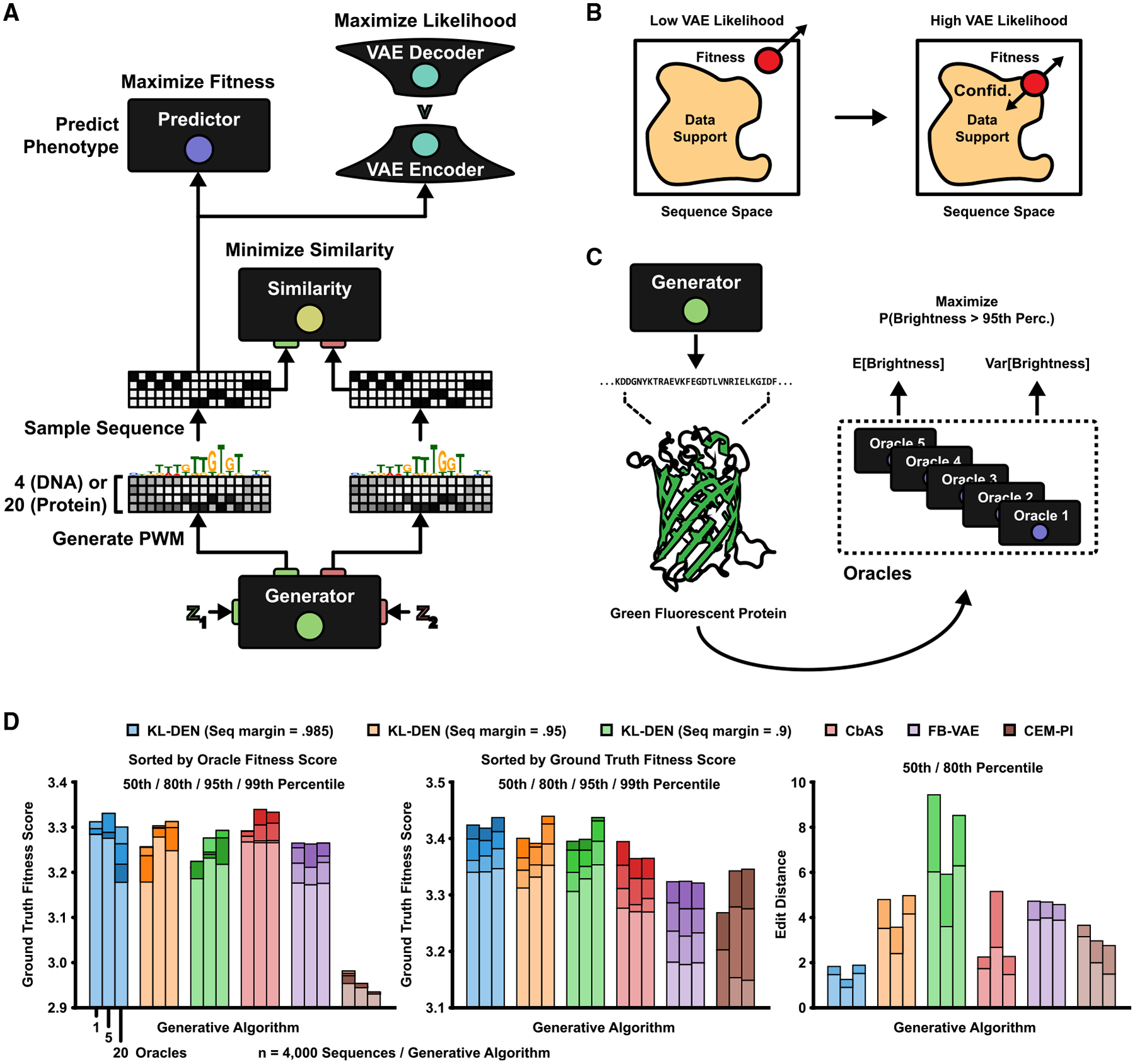

Engineering Proteins with Likelihood-Bounded Exploration Networks

The current DEN formulation maximizes fitness and diversity without regard to how confident the predictor (or any other model) is in the generated sequences. However, assuming the predictor loses its predictive power as the generated sequences drift from the measured training data in design space, it becomes necessary to maintain the marginal likelihood of sequences with respect to the data. This problem is particularly evident in protein design, where functional sequences are thought to reside in a manifold much smaller than the space of all possible sequences, and where measured training data only span this manifold. A related design method based on in silico directed evolution, conditioning by adaptive sampling (CbAS) (Brookes et al., 2019), controls the likelihood by adaptively sampling and retraining a VAE. Here, we integrate VAEs in the DEN framework (Figures 5A and S5A), enabling direct control of the approximate likelihood ratio of generated sequences with respect to a reference likelihood estimated on the training data. This allows us to tune, during backpropagation, how “confident” the DEN should be in the generated sequences (Figure 5B). We estimate the expected log likelihood of generated sequences by using importance sampling (Owen, 2013; Kingma and Welling, 2013; Blei et al., 2017) (see STAR Methods for details) and apply straight-through gradient estimation to optimize the generator for an additional likelihood ratio penalty with respect to the median training data likelihood. We validated this likelihood cost on the maximal APA design task (Figures S5B–S5D). By training two VAE instances on a subset of low- and high-fitness sequences, respectively, we confirmed that the likelihood cost restricted DEN optimization only when using the low-fitness VAE (which is expected when tasked with generating high-fitness samples).

Figure 5. Engineering Fluorescent Proteins with Likelihood-Bounded DENs.

(A) The integration of a VAE in the DEN framework (KL-DEN). The one-hot-coded sequence patterns sampled from the generator are passed to the VAE. Gradients are backpropagated by using a straight-through estimator (Chung et al., 2016).

(B) Left: unbounded DEN optimization along the fitness gradient. Right: bounded optimization with a gradient pointing toward the VAE likelihood of the measured data.

(C) The task is to generate GFP sequence variants that maximize the predicted probability of their brightness being above a target (95th percentile of training data). Predictions are made by using an ensemble of oracles (Lakshminarayanan et al., 2017; Brookes et al., 2019).

(D) GFP benchmark comparison (methods listed in top legend). The KL-DEN was trained with a minimum likelihood ratio margin of 1/10 compared with the median training data likelihood, with three different sequence similarity margins: 98.5%, 95%, and 90%. Each method was used to sample 100 sequences per training epoch, for a total of 40 epochs (after 10 epochs of “warm up” training), resulting in n = 4,000 sequences. (Left) Sequences were sorted on oracle fitness scores. Shown are the “ground truth” scores for the 50th, 80th, 95th, and 99th percentile of oracle scores, as estimated by a GP regression model (Shen et al., 2014). (Middle) The 50th, 80th, 95th, and 99th percentile of ground truth scores (regardless of oracle value). (Right) 50th and 80th percentile of sequence edit distances.

See also Figure S5.

This KL-bounded DEN (abbreviated KL-DEN—given that its KL divergence with respect to the measured data are bounded) was benchmarked on the task of designing GFP sequences for maximal brightness, using variant data from Sarkisyan et al. (2016). Replicating the analysis of Brookes et al. (2019), we evaluated three increasingly large predictor ensembles (referred to as oracles) that enabled predicting the mean and variance of normalized brightness (Lakshminarayanan et al., 2017) (Figures 5C and S5E). We also changed the generator architecture by appending an long short term memory (LSTM) layer, as it increased convergence speed (Figure S5F). We tasked the KL-DEN with maximizing the probability of the predicted brightness being above the 95th percentile of the training data, while simultaneously enforcing generated sequences to be at least 1/10th as likely as the training data. We trained three KL-DEN versions with 98.5%, 95%, and 90% sequence similarity margins respectively. Performance was evaluated by using an independent Gaussian process regression model (Brookes et al., 2019; Shen et al., 2014) (considered the ground truth). We compared KL-DEN against CbAS, FB-VAE (a VAE-based version of FB-GAN) (Gupta and Zou, 2019), and CEM-PI (probability of improvement) (Snoek et al., 2012). The results showed that although KL-DEN was less consistent than CbAS (Figures 5D, left and S5G), it generated higher overall ground truth scores than those generated by CbAS, FB-VAE, and CEM-PI (Figures 5D, middle and S5H; 50th percentile of KL-DEN ground truth scores were consistently equal to or higher than the 80th percentile of CbAS scores). Furthermore, KL-DEN could generate more diverse sequences than all competing methods (Figures 5D, right, S5I; 80th percentile of edit distances were consistently higher for KL-DEN with 90% similarity margin). Finally, we compared against a regular DEN with no likelihood regularization penalty (Figure S5J). Although the predicted oracle scores increased rapidly, the DEN-generated ground truth values flatlined, highlighting the importance of maintaining the data likelihood to avoid overfitting to the oracle.

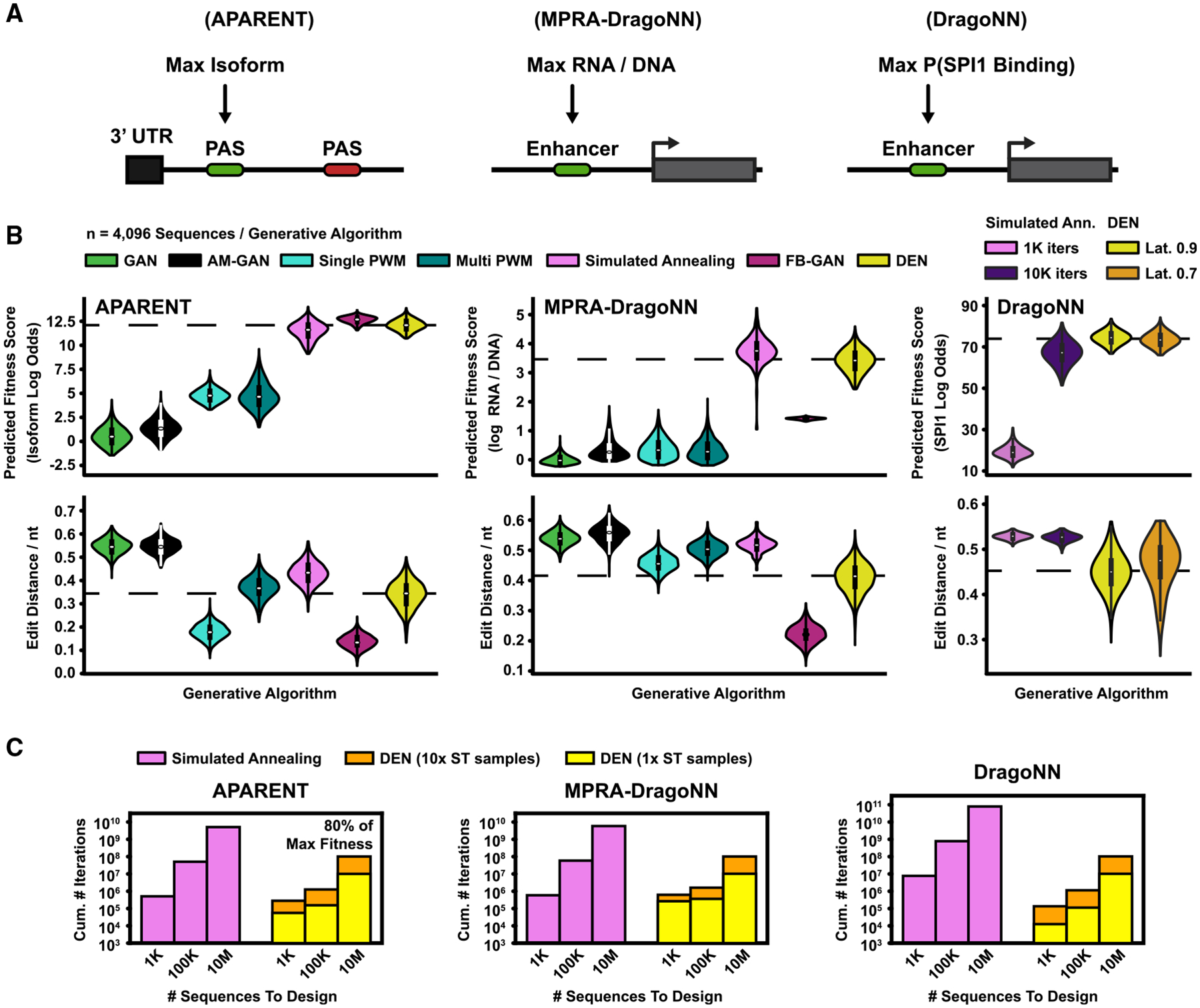

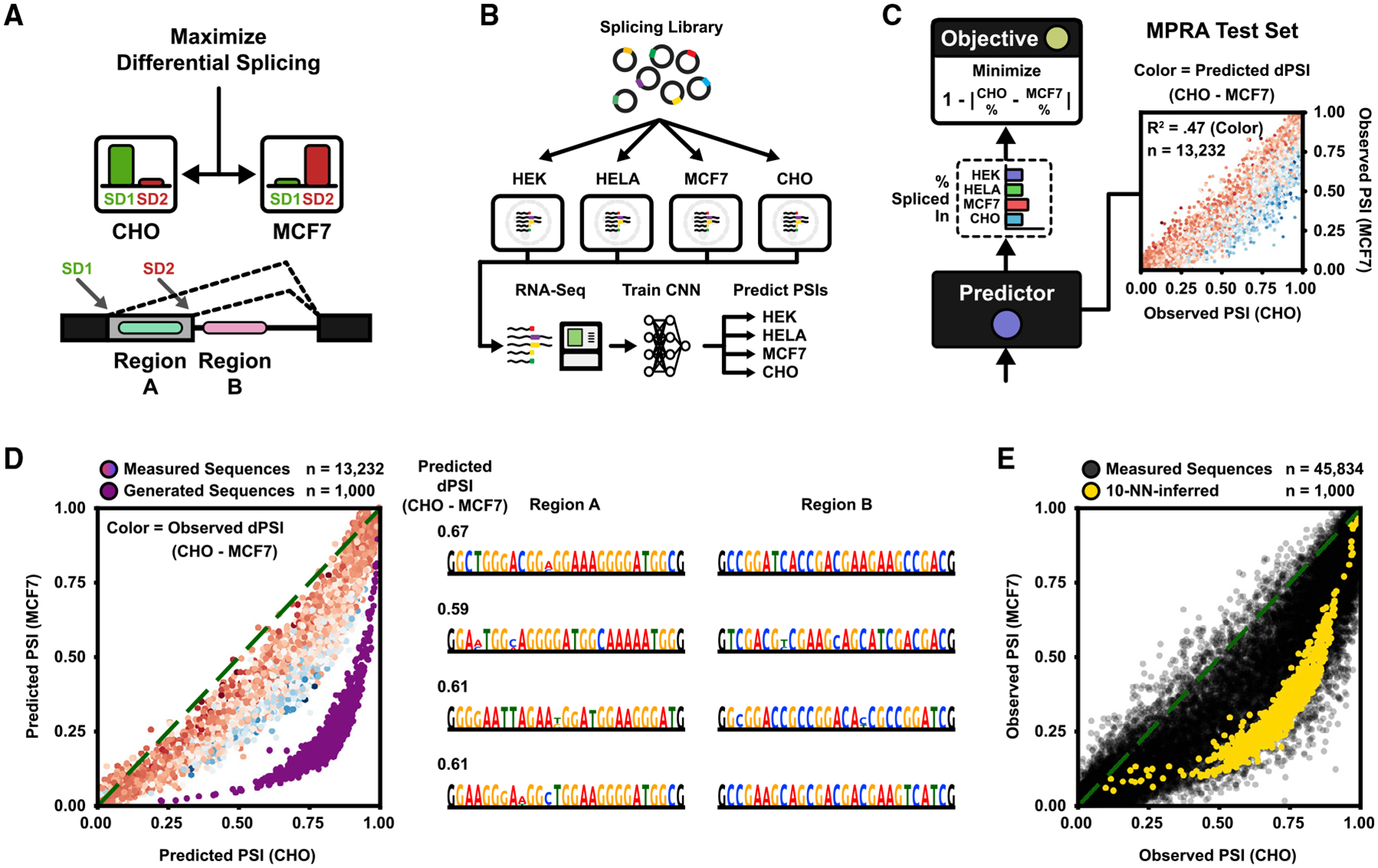

Engineering Organism-Specific Differential Splicing

Although precise cis-regulatory control in a single organism, cell type, or other condition has important applications, one of the hardest yet perhaps most interesting problems in genomics and synthetic biology is to code cis-regulatory functions that are differentially expressed across multiple cell types or conditions. Here, we consider the task of engineering organism-specific differential splice forms (Blencowe, 2006; Roca et al., 2013; Lee and Rio, 2015) (Figure 6A). Specifically, we define the task as maximizing the difference in splice donor usage (PSI) for an alternative 5′ splicing event in two different cell lines, by designing the regulatory sequences (25 nt) downstream of each alternative donor. This particular splicing construct has been studied in the context of HEK293 cells (Rosenberg et al., 2015), where MPRA data measuring hundreds of thousands of variants were collected. To study differential effects across multiple cell lines and organisms, we report additional MPRA measurements of this splicing library in HELA, MCF7, and CHO cells, which we used together with the original HEK data to train a cell-line-specific 5′ splice site usage prediction network (Figures 6B and S6A). The trained network could accurately predict splicing isoform proportions on a held-out test (Figure S6B; mean R2 = 0.88 across cell lines). The predicted difference in splice site usage between cell lines had a strong correlation with measured differences (Figure S6C; predicted versus measured dPSI R2 ranged between 0.35 and 0.47 depending on cell line pair). We focused on MCF7 and chinese hamster ovary (CHO), as the largest average differential trend was observed between these two cell lines.

Figure 6. Engineering Differential Splicing across Organisms.

(A) Two 5′ splice donors compete for splicing. The task is to design two sequence regions, region A, which is located between the donors, and region B, which is located downstream of the 3′-most donor, to maximize differential usage (PSI) of donor 1 between two cell lines.

(B) Summary of the experimental pipeline. The MPRA of Rosenberg et al. (2015) was originally measured in HEK293 cells. Here, the library was replicated in additional cell lines HELA, MCF7, and CHO and measured by RNA-seq. A neural network (CNN) was trained on all four cell-line datasets to predict PSI per cell line given only the DNA sequence as input.

(C) (Left) The predicted MCF7 and CHO PSIs are used to maximize absolute difference. (Right) Measured MPRA test set PSIs for MCF7 and CHO. Color indicates predicted dPSI (blue or red = more or less used in CHO, respectively). Predicted versus measured delta PSI (dPSI) R2 = 0.47.

(D) The DEN was trained to maximize dPSI between MCF7 and CHO, with 50% sequence similarity margin. (Left) Predicted PSI in MCF7 and CHO for generated sequences (purple; n = 1,000). Plotted are also the predicted PSI for MPRA test sequences (color indicates measured dPSI; n = 13,232). Mean predicted dPSI of test sequences = 0.08. Mean predicted dPSI of generated sequences = 0.56. (Right) Example generated sequences. 4% duplication rate (n = 100,000 sequences). Hexamer entropy = 8.31 of 12 bits.

(E) Validation of 1,000 generated sequences against the RNA-seq measured MPRA (n = 45,834) using nearest neighbors. The first dense layer of the fitness predictor was used as feature space (256 features). Measured PSIs of the entire MPRA (black) are plotted with the interpolated PSIs of the generated sequences (yellow), estimated from 10 neighbors. Mean MPRA dPSI = 0.07. Mean dPSI of generated sequences = 0.38.

See also Figure S6.

Next, we trained a DEN with the same generator architecture as in Figure 2B to maximize the difference in predicted organism-specific PSI (dPSI) between MCF7 and CHO (Figure 6C). We used the trained generator to sample 1,000 sequences, the majority of which were predicted to be far more differentially spliced than any of the sequences from the MPRA (Figure 6D; mean predicted dPSI of generated sequences = 0.56, compared with the average dPSI = 0.08 of the MPRA test set). For validation, we compared the generated sequences with the measured MPRA by using an NN search. We found that the DEN indeed learned to sample regulatory sequences centered on maximal differential splicing between the target cell lines (Figures 6E and S6D; mean NN-dPSI of generated sequences = 0.38, mean measured dPSI of MPRA sequences = 0.07).

Finally, we replicated the analysis by using a linear logistic regression model with hexamer counts as features rather than a convolutional neural network fitness predictor. By reducing the regression model to a set of differentiable tensor operations, we could seamlessly integrate the model in the DEN pipeline (Figures S6E and S6F). Allowing both high- and low-variance models enable more flexibility in tailoring the predictor properties for the given design task. In some applications it might even be suitable to compose predictor ensembles to increase rigidity. In our case, we could retrain the DEN to jointly maximize the neural network and hexamer regression predictors, striking a balance between the two models (Figure S6G).

DISCUSSION

We developed an end-to-end differentiable generative network architecture, DENs, capable of synthesizing large, diverse sets of sequences with high fitness. The model could generate PASs, which precisely conformed to target-isoform ratios and 3′ cleavage positions, differentially spliced sequences between two organisms (human MCF7 cells and hamster CHO cells respectively), maximally transcriptionally active gene enhancers, and even functional GFP variants. DENs control exploration during training by sampling two sequence patterns given two random seeds as input and penalizing sequence pairs that are similar above a certain threshold. Our analysis showed that the magnitude by which we punish similarity almost entirely determines final generator diversity and also largely determines the final fitness of the generated patterns. During training, the optimizer trades off exploring (repelling similar patterns) with exploiting (maximizing pattern fitness) on the basis of the temperature (diversity cost coefficient) until convergence is reached.

Benchmark comparisons showed that DENs produce more diverse, higher-fitness sequences than competing parametric generative models. Another concern in sequence design is computational efficiency; for some applications, we might want to generate millions of candidate patterns for high-throughput screening in the lab. As we demonstrated on several design tasks, DENs are fundamentally more efficient than per-sequence optimization methods, such as simulated annealing because, after paying the initial cost of training the DEN, we can sample new sequences with a single forward pass (scaling additively with the number of target sequences). For example, using a Tesla K80 GPU, it takes approximately 60 ms to predict a 64-sequence batch with the DragoNN model or 0.9 ms per sequence. Assuming that predictions make up most of the computational cost and that a single generator training pass takes 5 times longer than a batch prediction, then using the DEN from Figure 3C to generate 1 million sequences would take approximately 25,000 × 60 × 5 (training time) + 1,000,000 × 0.9 (design time) = 8,400,000 ms or 140 min. Using simulated annealing, which needed 10,000 iterations per sequence, would require roughly 1,000,000 × 10,000 × 0.9 = 9 billion ms or 100 days to finish.

DENs can be thought of as invertible networks for a highly surjective function value (namely maximal predicted fitness score). In fact, we can turn DENs into fully invertible regression models by making a few minor architectural changes. We demonstrate a proof of principle of an “inverse regression” DEN in Figure S7 (see STAR Methods for details). Current architectures of invertible neural networks are based around learning a bijective one-to-one mapping of the input data distribution to a latent code (Ardizzone et al., 2018). Inverse regression DENs similarly learn to map a simple latent space to the inverse domain (sequence) but without restrictions on the latent space or generator architecture. We also showed that DENs could be optimized to promote diversity not only by sequence-level comparison but also by latent similarity metrics. In particular, penalizing latent similarity in one of the fully connected predictor layers implicitly promoted diversity in the “true” latent feature space, as indicated by an independently trained VAE. In the case of differential splice sites, DENs could optimize sequences for both a neural network and hexamer regression predictor. The hexamer regression model, by its low-variance design, provides regularization. We further generalized the notion of regularization during DEN training, by incorporating a VAE. This allowed us to tune the likelihood ratio of generated sequences with respect to training data. We demonstrated competitive results against state-of-the-art cross entropy methods, such as CbAS (Brookes et al., 2019) and FB-GANs (Gupta and Zou, 2019). However, it is worth noting that the intended design cycle of CbAS differs fundamentally from DENs. CbAS is very sample efficient, which is important if we intend to experimentally measure generated sequences during training (the “oracle” is an actual experiment). By contrast, for DENs, we do not care much about the number of calls made to the oracle during training, as we assume it is always a differentiable predictor. Also note that, although simulated annealing was competitive with DENs when strictly maximizing a predictor, it is unclear if that method would even be applicable for this design task, as it is not obvious how a VAE would be incorporated.

In future work, there are several technical aspects to explore. First, the sequence diversity cost coefficient is currently kept constant. Although this efficiently enforces exploration, it might be too rigid in cases where cost landscapes have “pointy”—deep but narrow—valleys. By treating the diversity penalty as a temperature to anneal, or by changing the latent input distribution of the generator to allow a dynamic range of sequence similarity margins, DENs might be able to discover the pointy valleys during high-temperature periods of training and descend these during low-temperature periods. We also note that replacing the DC-GAN generator with a more complex model, for example, a deep residual network (He et al., 2016), might further increase the DEN’s capacity to learn diverse sequences.

Experimental assays provide us with powerful tools to validate sequences produced by generative models. Here, we tested a subset of our generated PASs. We observed that the new PASs were orders of magnitude stronger than any previously known sequence. In some applications, the initial predictor might not be sufficiently accurate, and the generated samples might reveal incorrect predictions once tested in the lab. Similar to earlier work on generative models employed for molecular design (Segler et al., 2018; Sample et al., 2019), DENs could be used with active learning, where the network generates a large set of candidate sequences, which, after synthesis and high-throughput measurements, provide augmented training data for the predictor, and this cycle is repeated until the generated patterns are in concordance with real biology. Beyond the design tasks covered here, there are many suitable biological applications for DEN. DENs could be used together with gene expression data to engineer cell-type-specific enhancer or promoter sequences with differential affinities. DENs might also prove useful for generating candidate CRISPR-Cas9 guide RNA with minimal off-target effects (Lin and Wong, 2018; Chuai et al., 2018; Wang et al., 2019a). Rational design of heterodimer protein pairs with orthogonal interaction has recently been demonstrated (Chen et al., 2019). As more data are collected and used to train functional models of interaction, we would be able to use DENs for generation of candidate orthogonal binder sets or even generalized interaction graphs. Finally, DENs could be coupled with models of protein structure (Senior et al., 2020; AlQuraishi, 2019) to generate stably folded proteins.

STAR★METHODS

RESOURCE AVAILABILITY

Lead Contact

Further information and requests for resources and reagents should be directed to and will be fulfilled by the Lead Contact, Johannes Linder (jlinder2@cs.washington.edu).

Materials Availability

This study did not generate new materials. The APA reporter plasmid backbone from (Bogard et al., 2019) was used for the polyadenylation qPCR assay. The plasmid backbone from (Rosenberg et al., 2015) was used for the splicing MPRA.

Data and Code Availability

The splicing MPRA dataset generated during this study is available at GitHub (https://github.com/johli/splirent). All original code is freely available for download at https://github.com/johli/genesis.

EXPERIMENTAL MODELS AND SUBJECT DETAILS

Four cell lines were used in this study: Human embryonic kidney cells (HEK293; female), human cervical cancer cells (HeLa; female), human breast cancer cells (MCF7; female), and chinese hamster ovary cells (CHO; female).

METHOD DETAILS

Experimental Methods

Here we describe the physical experiments reported in this study, including the polyadenylation qPCR reporter assay and the cell line-specific 5’ alternative splicing MPRA.

APA qPCR Reporter Assay

To evaluate the performance of the DEN-generated pA sequences, we experimentally compared two of the strongest newly generated sequences to two of the strongest gradient ascent-generated sequences from (Bogard et al., 2019).

Experiment

Each of the two DEN-generated pA sequences were constructed on plasmid reporters with one of the gradient ascent-sequences, such that they competed for polyadenylation when expressed in cells. Proximal pA signals have a preferential bias of selection compared to distal pA signals, so to discount this phenomenon we constructed two orientations of each reporter: In one orientation, the DEN-generated PAS was used as the proximal signal and the gradient ascent-generated PAS was the distal signal. In the other orientation, the gradient ascent-generated PAS was used as the proximal signal and the DEN-generated PAS was the distal signal. The plasmid reporters were identical to the APA reporters of (Bogard et al., 2019). See the Key Resources Table for plasmid maps.

KEY RESOURCES TABLE

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |

|---|---|---|

| Chemicals, Peptides and Recombinant Proteins | ||

| DMEM, high glucose, pyruvate | ThermoFisher Scientific | SKU#11995–065 |

| Fetal Bovine Serum | Atlanta Biologicals | Cat#S11150 |

| NEBNext Poly(A) mRNA Magnetic Isolation Module | New England Biolabs | Cat#E7490S |

| Fast Evagreen qPCR Master Mix | Biotium | Cat#31003 |

| Opti-MEM Reduced Serum Medium | Invitrogen | Cat#22600050 |

| Lipofectamine LTX Reagent with PLUS Reagent | Invitrogen | Cat#A12621 |

| RNeasy | QIAGEN | Cat#74104 |

| PolyA Spin mRNA Isolation Kit | New England Biolabs | Cat#S1560 |

| MultiScribe Reverse Transcriptase | Invitrogen | Cat#4311235 |

| Deposited Data | ||

| 5’ Alternative Splicing MPRA | This study and Rosenberg et al., 2015 | https://github.com/johli/splirent |

| APA Reporter Plasmids (qPCR Assay) | This study | https://github.com/johli/genesis/tree/master/analysis/apa/qpcr_experiment |

| Experimental Models: Cell Lines | ||

| HEK293FT | Invitrogen | Cat#R70007 |

| HELA | ATCC | Cat#CCL-2.2 |

| MCF7 | ATCC | Cat#HTB-22 |

| CHO-K1 | ATCC | Cat#CCL-61 |

| Software and Algorithms | ||

| DEN Software & Analysis | This study | https://github.com/johli/genesis |

| APA Predictor Software | Bogard et al., 2019 | https://github.com/johli/aparent |

| Splicing Predictor Software | This study | https://github.com/johli/splirent |

| Feedback-GAN Software | Gupta and Zou, 2019 | https://github.com/av1659/fbgan |

| CbAS Software & Benchmark | Brookes et al., 2019 | https://github.com/dhbrookes/CbAS/ |

| MPRA-DragoNN Software | Movva et al., 2019 | https://github.com/kundajelab/MPRA-DragoNN |

| DragoNN (SPI1) Software | Kundaje Lab | https://github.com/kundajelab/dragonn |

For each pair of competing signals, we measured the odds ratio of proximal isoform abundance of orientation 1 (DEN-signal is proximal) with respect to orientation 2 (gradient ascent-signal is proximal) using a qPCR assay, since this is equivalent to the fold change in selection preference for the DEN-generated signals. The plasmids were transfected in HEK293 cells and expressed for 48 hours before RNA extraction, following the protocol used in (Bogard et al., 2019). The total mRNA from each reporter were split into two samples – in one sample, we amplified both polyadenylation isoforms and in the other sample we selectively amplified only the distal isoform. For each sample, we used a universal forward primer (qPCR_FWD) and a variable reverse primer for PCR amplification. The reverse primer for each sample targeted either a sequence in the proximal PAS (qPCR_REV_upstream; amplifying both proximal and distal isoforms), or a sequence downstream of the proximal cleavage region (qPCR_REV_dnstream_A – qPCR_REV_dnstream_D; amplifying only the distally polyadenylated isoforms). See Table S1 for primer sequences. Cycle threshold (Ct) values of each qPCR experiment were read on a Biorad CFX.

Ct Difference Lower Bound On Isoform Odds Ratio

For each pair of reporters, we measure four different qPCR cycle threshold values:

, the Ct for both proximal and distal RNA in reporter orientation 1.

, the Ct for both distal RNA only in reporter orientation 1.

, the Ct for both proximal and distal RNA in reporter orientation 2.

, the Ct for both distal RNA only in reporter orientation 2.

Each cycle threshold value is inversely proportional to the log2 of the RNA count, and so we can compute the following ΔCt values of log ratios:

Here, p1 and d1 are the proximal and distal RNA count of reporter orientation 1, p2 and d2 are the proximal and distal RNA count of reporter orientation 2, and ΔCt1 and ΔCt2 are the two reporters’ respective isoform log ratios. In the rest of this section, we prove that is a lower bound on the isoform fold change, or Odds Ratio, of reporter 1 w.r.t. reporter 2. Using the definitions of ΔCt1 and ΔCt2 above, we have:

Next, define variables x1 and x2, the proximal isoform proportions of reporter 1 and 2:

We can rewrite our expression for 2−ΔΔCt using only these variables:

Next, we multiply both of the smaller fractions with x1 and x2 on both sides, respectively:

We can now solve for the Odds Ratio in terms of 2−ΔΔCt. At first glance, it appears we get a rest term x1/x2 that we cannot solve. However, we know from our experiments that and we previously derived that. In order for both these equations to be true, then, and as a consequence x1/x2>1. Hence, our measurements are proven to be lower bounds of the isoform odds ratio:

Cell Line-Specific Splicing MPRA

Previously unpublished repeat experiments of the alternative 5’ splicing MPRA from (Rosenberg et al., 2015) in additional cell lines HELA, MCF7 and CHO were used in this paper to study differential splicing trends and design maximally differentially used splice sites between CHO and MCF7. We outline the assay below and refer to the original publication for further details on the experimental protocol.

Experiment

The Citrine-based plasmid reporter library from (Rosenberg et al., 2015) was used, which contains two competing 5’ alternative splice donors with 25 degenerate bases inserted downstream of each splice donor. A degenerate barcode region is located in the Citrine 3’ UTR to enable mapping spliced mRNA back to originating plasmids. See the original publication for the plasmid map. Cell lines MCF7, HELA and CHO were cultured in DMEM on coated plates and transfected with the splicing library as described in (Rosenberg et al., 2015). RNA was extracted 24 hours after transfection and prepared for sequencing (Rosenberg et al., 2015). The purified mRNA library was sequenced on Illumina HiSeq2000, capturing both the 5’ splice junction of the degenerate intron and the barcode in the 3’ UTR. Finally, spliced and unspliced RNA reads were associated with the originating DNA plasmid based on the barcode from the sequenced 3’ UTR, enabling isoform count aggregation and Percent Spliced-In (PSI) estimation per unique library member.

After mapping and aggregating mRNA reads, the number of library members with non-zero read count in MCF7, CHO and HELA were 265, 016, 265, 010 and 264, 792 respectively. The mean read depth in these cell lines was 66.7, 72.0 and 29.1 respectively. (The previously described HEK293 dataset contained 265, 044 non-zero members with on average 50.0 reads per member).

Computational Methods

Here we present the computational methods developed and applied in this paper. We first describe the Deep Exploration Network (DEN) architecture, differentiable sequence representations, pattern masking operations and cost function definitions.

Next, we describe the sequence design tasks considered in this paper. For each task, we describe the predictor model used, the data it was trained on and the specific generator architecture used for training the DEN. Finally, for the benchmark comparison, we describe the details and parameter configuration of each design method tested.

Notation

The following notation is used:

Scalars – Lowercase italic letters, e.g. c.

Vectors and tensors – Lowercase bold italic letters, e.g. x.

Scalar constants – Uppercase italic letters, e.g. N.

Matrix and tensor constants – Uppercase bold letter, e.g. M.

Subscripts are used to reference tensor elements, e.g xij.

Parentheses are used to show implicit functional dependence, e.g. that x depends on z: x(z).

Definitions

The list below briefly summarizes the most important variables and entities defined in section ”Deep Exploration Network (DEN)” below. Note that there sometimes exist multiple independent instances of some of these variables, and we distinguish between variable instances using superscripts encased in parentheses (for example: x(1) and x(2)).

– Generator model function, i.e. .

z – Generator seed input.

l – Generator output matrix (nucleotide log probabilities).

– Predictor model function, i.e. .

x – Sequence pattern (Either as PWM σ(l) or as one-hot sample δ(l)).

σ(l) – PWM matrix obtained by softmax-normalizing l.

δ(l) – one-hot-coded pattern sampled from σ(l).

Modeling and Optimization Software

We used the auto-differentiation and deep learning package Keras in Python for all neural network implementation and training (Chollet, 2015). For training the generator networks, we used the Adam optimizer (Kingma and Ba, 2014) with default parameters. To implement certain operations required during DEN training, we wrote custom code in Tensorflow (Abadi et al., 2016). The Tensorflow implementation for categorical straight-through gradient estimation (the one-hot sampling operation for sequence PWMs) was based on (Pitis, 2017).

Deep Exploration Network

Here we describe the model, cost function and training procedure of Deep Exploration Networks (DENs). For generator or predictor details, see the sections further below concerning specific applications.

Generator Architecture

The generator model is a feed-forward neural network which receives a D-dimensional latent seed vector as input and outputs a real-valued pattern . Here N denotes the number of letters of the generated sequence pattern and M denotes the number of channels (the alphabet size). In the context of genomics, the alphabet is the set of nucleotides and M = 4. The generated pattern l(z) is treated as a matrix of nucleotide logits. For some applications presented in this paper, the generator model takes auxiliary information as input in order to produce class-conditional patterns, in which case the formula can be described as , where c is either an integer representing the class index () or a real-valued scalar representing a target output value (; for inverse regression).

An approximate one-hot-coded pattern x(z) is obtained by transforming the generated logit matrix l(z) through the formula:

| (Equation 1) |

Here f is a differentiable function which transforms the nucleotide logit matrix l(z) into an approximate one-hot-coded representation (function f detailed below). M and T are matrices used to mask and template the sequence pattern with fixed nucleotide content. This is useful if we want to bias the generation by locking particular motifs in the sequence. To support this, M masks the pattern by zeroing out fixed positions and T encodes the fixed sequence. M is constructed by encoding 1’s at changeable nucleotide positions and 0’s at fixed positions:

| (Equation 2) |

T is constructed by encoding 0’s at changeable nucleotide positions and a 1’s at every fixed position and column corresponding to the specific nucleotide identity:

| (Equation 3) |

The exact architecture of the generator network depends on the application, but the overall design remains the same throughout the paper and is based on Deep Convolutional GANs (DC-GANs) (Radford et al., 2015) (see Figure S2B for a high-level illustration of the model). First, a dense layer with ReLU activations transforms the input seed into a high-dimensional vector. This vector is reshaped into a two-dimensional matrix, where the first dimension encodes sequence position and the second dimension encodes sequence channel. The position dimension is initially scaled down to a fraction of the final target sequence length, such that it can be upsampled by strided deconvolutions in subsequent layers. The channel dimension starts with a large number of channels and is gradually compressed to smaller numbers throughout the generator network, until the final layer outputs a sequence with only 4 channels (4 nucleotides). The de-convolutional layers are followed by convolutional layers. The filters vary in width between 6 and 8. All convolutional layers except the final layer have ReLU activations; the final layer has linear activations, corresponding to nucleotide log probabilities. We perform batch normalization between every convolutional layer.

Predictor Architecture

The goal of DENs is to generate diverse sequences which maximize some predicted biological fitness score. Given a one-hot coded sequence pattern x ∈ {0, 1}N×M, predicts a property , which can be a real-valued score () or proportion (), and can be either scalar or multi-dimensional ( for som K). The efficacy of x(z) is evaluated by a fitness cost function , based on the predicted property (further details below).

Any predictor is compatible with Deep Exploration Networks as long as it is differentiable, i.e. the gradient must be defined (Simonyan et al., 2013; Lanchantin et al., 2016).

Training Procedure

In each forward pass, we sample two latent seed vectors z(1), z(2) from the D-dimensional uniform distribution, z(1),z(2) ~ U (−1, 1)D. The seeds are independently passed as input to , generating two output patterns (nucleotide logit matrices) and . Approximate one-hot-coded patterns x(z(1)) and x(z(2)) are obtained by transforming l(z(1)) and l(z(2)) through Equation 1. In its most basic form, the weights of the generator are trained to minimize the following compound fitness and diversity cost function:

| (Equation 4) |

Here, is the fitness cost evaluated on the predicted property and is the diversity cost evaluated on the patterns x(z(1)),x(z(2)). In addition to these two cost components, DENs can optionally minimize two auxiliary losses: An entropy cost defined on a softmax relaxation σ(l(z(1))) and a regularization cost (details below). We define the total DEN cost function as:

| (Equation 5) |

λF, λD, λE, λR are the coefficients of each respective cost component.

The gradients and with respect to generator weights can be computed with auto-differentiation in Keras, since the generator is a regular convolutional ReLU network. Also note that and are differentiable since we require the one-hot transform f from Equation 1 to be differentiable.

Differentiable Pattern Representation for Sequences

Equation 1 transforms the generated, real-valued, nucleotide logits into an approximate one-hot-coded representation x(z) through function f. This representation must be carefully chosen in order to maintain differentiability with respect to . We investigate two different methods: (1) representing x(z) as a continuous, differentiable distribution, and (2) representing x(z) by discrete samples and approximating the gradient. The first pattern representation is referred to here as RIFR (Relaxed Input Form Representation), and is equivalent to a softmax-relaxed sequence PWM (Position Weight Matrix). We define the nucleotide-wise softmax function σ(lij) as:

| (Equation 6) |

Equation 6 transforms the generated logit matrices l(z(1)) and lz(2) into corresponding softmax-relaxed PWMs σ(l(z(1))) and σ(l(z(2))) (Killoran et al., 2017). In RIFR, the PWMs σ(l(z(1))) and σ(l(z(2))) are used as approximations for one-hot-coded patterns x(z(1)) and x(z(2)) in the DEN cost function . We redefine Equation 1 as:

| (Equation 7) |

Note that in RIFR, the (masked) softmax-relaxed PWM σ(l(z(1))) is directly used as input to . Since Equation 7 is differentiable , an auto-differentiation package like Keras can compute the gradients and . RIFR has a fundamental drawback: The predictor has never been trained on real-valued patterns and may perform poorly on high-entropy PWMs. We can push the PWMs toward a one-hot-coded state during training by minimizing PWM entropy in the cost function. However, the gradient approaches zero as we explicitly minimize the entropy of the softmax probabilities. Put differently, we optimize the system for vanishing gradients, which may halt convergence.

The second pattern representation is referred to as SIFR (Sampled Input Form Representation) and consists of K discrete, one-hot-coded samples drawn from the PWMs σ(l(z(1))) and σ(l(z(2))). Specifically, The nucleotide logits l(z)ij are used as parameters to N independent categorical distributions , where the probability of sampling nucleotide j at position i is equal to the softmax value σ(l(z)ij). Formally, we define the discrete one-hot sampling function δ as:

| (Equation 8) |

Using Equation 8, we sample K one-hot patterns x(z(1))(k), x(z(2))(k) from l(z(1)) and l(z(2)):

| (Equation 9) |

Finally, the cost function (Equation 5) is redefined as an empirical mean across all K sampled pairs and :

| (Equation 10) |

The gradients and are approximated using the straight-through estimator of (Chung et al., 2016), where the gradient of δ is replaced by that of the softmax:

| (Equation 11) |

We implement custom operations in Keras/Tensorflow to compute Equation 11 during training (Bengio et al., 2013; Courbariaux et al., 2016; Pitis, 2017). The increased sample variance of SIFR can be mitigated by increasing the number of samples drawn (K). However, optimization can be noisy even with infinitely many samples (K → ∞), since straight-through gradients may at times be poor estimates of the gradient. As our results indicate in Figure S2H, combining both methods and walking down the average gradient (Dual Input Form Representation, or DIFR) can reduce variance and estimation artifacts.

Cost Functions

We minimize 4 costs when training a DEN:

A fitness cost

A diversity cost

An entropy cost

A regularization cost .

Cost term:

The predictor output is used to define a fitness cost . The choice of is detailed in later sections for each specific design problem.

Cost term:

The diversity cost can be any differentiable comparator function of the two generated patterns x(z(1)) and x(z(2)). The standard diversity cost used throughout the paper is a sequence-level cosine similarity metric, which directly penalizes the patterns proportional to the fraction of identical nucleotides. To support translational invariance, we penalize patterns by the maximum cosine similarity across multiple offsets σ, ranging from σ = − σmax to σmax. The penalty is defined as a margin loss, allowing patterns to be identical up to a margin ϵ without incurring any cost. Given two masked one-hot coded patterns and , we define the sequence similarity cost as:

| (Equation 12) |

Here, x(1) and x(2) are masked by M (to zero out fixed positions) but not templated by T since fixed nucleotides should not affect the diversity penalty, and N* refers to the number of non-zeroed positions by M. N refers to the total sequence length and M the number of one-hot channels. Theoretically, we should set σmax = N − 1 to test all possible offsets, however practically we found that σmax = 1 was enough to remove offset artifacts.

When using RIFR (x(1) and x(2) are softmax-relaxed PWMs), is similar to an L2 penalty, where two sequence letters are penalized proportional to the magnitude of their PWM entries. When using SIFR (x(1) and x(2) are one-hot samples), corresponds to a sparse L1 penalty. For the tasks considered in this paper, we found that SIFR worked best. For all design tasks, we set the diversity cost coefficient to a sufficiently large value such that the cost reaches the allowable similarity margin ϵ early in the training. The margin ϵ is chosen by training the DEN for a range of values and selecting the setting with minimal fitness cost.

We also evaluate a latent diversity cost, which is defined on a pair of latent feature vectors u(1) and u(2) obtained from the generated sequence patterns x(1) and x(2) by applying some (differentiable) feature transform and . In theory, can be any type of encoder model, e.g. a variational autoencoder (VAE). In the paper, we let be the subnetwork of predictor ending at the first fully connected (dense) layer, i.e. we let the first fully connected layer of be the latent feature space for u(1) and u(2). We define the latent similarity cost as the cosine similarity margin loss between u(1) and u(2):

| (Equation 13) |

Cost term:

We explicitly control the entropy of the generated softmax-relaxed PWM σ(l(z)) by fitting the Shannon Entropy to a target (or minimum) value. When fitting to a target conservation tbits (bits), we minimize a squared error between the average nucleotide entropy and tbits:

| (Equation 14) |

When enforcing a minimum average conservation mbits, we minimize a margin cost instead:

| (Equation 15) |

Cost term:

We optionally penalize or reward specific motifs in the generated pattern by shifted multiplication with itself to mask out sub-patterns. This is useful for example to repress known artifacts of the predictor. Same as , the regularization cost can either be used with SIFR (sampled discrete one-hot patterns) to provide sparse regularization or with RIFR (softmax-relaxed PWMs), to support L2 penalties. We find that SIFR gives better results when penalizing motifs and RIFR gives better convergence for promoting motifs.

For each one-hot-coded motif F of length L, we either add or subtract to/from , to penalize or promote generation of F respectively in positions [a, b] of x(z):

| (Equation 16) |

Here argmax (Fj) corresponds to the nucleotide identity at the j:th position of F.

Variational Inference and KL-Bounded DENs

In the analysis presented in Figures 5 and S5, a variational autoencoder (VAE) was used to approximate and maintain the marginal likelihood pData(x(z)) of generated sequences x(z) with respect to the measured data. By specifying a minimum target likelihood ratio that must be upheld by the DEN during backpropagation, we avoid drift into low-confidence regions of sequence design space. Specifically, assuming we have trained a VAE such that pVAE(x) ≈ pData(x), ∀x ∈ {0, 1}N×M (see below for VAE training details), we estimate the expected log-likelihood of x(z) using importance sampling during the forward pass, and optimize for all seeds z(1), z(2) ~ U(−1, 1)D by gradient descent to minimize:

| (Equation 17) |

Here is a cost function defined in terms of the estimated expected log likelihood of x(z). The likelihood weight λL is to a sufficiently large value such that quickly reaches 0 during training.

The expected log-likelihood is approximated in the forward pass during DEN training as follows: The (differentiable) one-hot sequence pattern x(z) generated by (Equation 1) is used as input to the VAE encoder producing a mean vector μ(z) ∈ RD and a log-variance vector ϵ(z) ∈ RD. We sample S latent vectors . Each vector v(s) is decoded by the VAE decoder into a reconstructed matrix of nucleotide logits and passed through a softmax layer σ to obtain S reconstructed softmax-relaxed PWMs σ(r(s)). Next, we use importance-weighted inference to estimate the log likelihood log pVAE(x(z)) (Owen, 2013; Kingma and Welling, 2013; Blei et al., 2017). We approximate log pVAE(x(z)) as an importance-weighted average of the S samples (Owen, 2013):

| (Equation 18) |

The summands may be very small at the start of optimization, as is randomly initialized. To maintain numerical stability, we calculate Equation 18 in log space using the log-sum-exp trick:

| (Equation 19) |

We compute the importance-weighted probability ps(x(z)) of each sample s in log space:

| (Equation 20) |

Here, is the decoder reconstruction probability of PWM σ(r(s)):

| (Equation 21) |

is the density of latent sample v(s) under the standard normal distribution:

| (Equation 22) |

Finally, is the density of v(s) under the importance sampling distribution:

| (Equation 23) |

Note that all quantities are differentiable with respect to x(z): Equation 19 is computed using the log-sum-exp-trick, which is (sub-) differentiable. Equation 21 is directly differentiable w.r.t. x(z) and Equations 22 and 23 are differentiable through latent vector v(s) via the VAE encoder and the reparameterization trick of (Kingma and Welling, 2013). Finally, x(z) is approximately differentiable w.r.t. the generator weights by applying the straight-through estimator of Equation 11.

Given the differentiable estimate of log pVAE(x(z)) above, we could theoretically use this quantity to form a cost function that we optimize with respect to the generator , enabling control of the likelihood ratio of generated sequences during training. Specifically, using a logarithmic margin loss, we can bound the likelihood ratio with respect to the mean likelihood of the training data pref by a factor 10−ρ (assuming log10-base):

| (Equation 24) |

However, there is a problem with this cost function: It skews the distribution log pVAE(x(z)) when taken over many seeds z. This is because every value log pVAE(x(z)) crossing the allowable margin will be penalized by the cost function, which means that the expected log likelihood will be shifted far beyond the margin log pref − ρ to accommodate the worst-case samples. Rather, what we want is for the center of the generated distribution, , to lie against log pref − ρ.

We estimate during each forward pass of backpropagation as an empirical mean over mini-batches generator seeds. Specifically, we optimize the DEN on a batch of L seeds z [l] ~ U(−1, 1)D (the brackets denote batch index). We break the L-sized batch into H-sized mini-batches, and compute V = L/H independent estimates of :

| (Equation 25) |

We now have an L/H -sized batch of estimates , which are broadcasted back to the original batch size L and used with each respective seed z[l] of the batch to compute the expected likelihood ratio cost function:

| (Equation 26) |

Inverse Regression Models Based on DENs

Up until now, we have focused on optimizing the DEN for a fixed fitness objective (for example: maximal APA isoform abundance), which is achieved by minimizing the cost function of Equation 5 for all randomly sampled pairs of seeds z(1), z(2) ~ U(−1, 1)D. However, by supplying an additional real-valued regression target as input, DENs can be trained to stochastically sample sequences according to the supplied target, effectively becoming a type of invertible regression model. This approach is similar to work by (Ardizzone et al., 2018), but the training scheme lifts any restriction on the choice of network architecture or latent space. During training, we randomly sample new target regression values t, feed it to the generator as input and simultaneously specify the same target in the cost function (Figure S7A). As a result, the generator learns to sample diverse sequences which fulfill whatever target regression value was supplied as input.

We provide a supplementary analysis in Figures S7B–S7D where we demonstrate the inverse regression DEN on the APA isoform design task. Details about the training procedure can be found in the ”APA Inverse Regression” section below.

APA Predictor Model (CNN)