Abstract

Background

Inadequate screening and diagnostic testing in the United States throughout the first several months of the COVID-19 pandemic led to undetected cases transmitting disease in the community and an underestimation of cases. Though testing supply has increased, maintaining testing uptake remains a public health priority in the efforts to control community transmission considering the availability of vaccinations and threats from variants.

Objective

This study aimed to identify patterns of preferences for SARS-CoV-2 screening and diagnostic testing prior to widespread vaccine availability and uptake.

Methods

We conducted a discrete choice experiment (DCE) among participants in the national, prospective CHASING COVID (Communities, Households, and SARS-CoV-2 Epidemiology) Cohort Study from July 30 to September 8, 2020. The DCE elicited preferences for SARS-CoV-2 test type, specimen type, testing venue, and result turnaround time. We used latent class multinomial logit to identify distinct patterns of preferences related to testing as measured by attribute-level part-worth utilities and conducted a simulation based on the utility estimates to predict testing uptake if additional testing scenarios were offered.

Results

Of the 5098 invited cohort participants, 4793 (94.0%) completed the DCE. Five distinct patterns of SARS-CoV-2 testing emerged. Noninvasive home testers (n=920, 19.2% of participants) were most influenced by specimen type and favored less invasive specimen collection methods, with saliva being most preferred; this group was the least likely to opt out of testing. Fast-track testers (n=1235, 25.8%) were most influenced by result turnaround time and favored immediate and same-day turnaround time. Among dual testers (n=889, 18.5%), test type was the most important attribute, and preference was given to both antibody and viral tests. Noninvasive dual testers (n=1578, 32.9%) were most strongly influenced by specimen type and test type, preferring saliva and cheek swab specimens and both antibody and viral tests. Among hesitant home testers (n=171, 3.6%), the venue was the most important attribute; notably, this group was the most likely to opt out of testing. In addition to variability in preferences for testing features, heterogeneity was observed in the distribution of certain demographic characteristics (age, race/ethnicity, education, and employment), history of SARS-CoV-2 testing, COVID-19 diagnosis, and concern about the pandemic. Simulation models predicted that testing uptake would increase from 81.6% (with a status quo scenario of polymerase chain reaction by nasal swab in a provider’s office and a turnaround time of several days) to 98.1% by offering additional scenarios using less invasive specimens, both viral and antibody tests from a single specimen, faster turnaround time, and at-home testing.

Conclusions

We identified substantial differences in preferences for SARS-CoV-2 testing and found that offering additional testing options would likely increase testing uptake in line with public health goals. Additional studies may be warranted to understand if preferences for testing have changed since the availability and widespread uptake of vaccines.

Keywords: SARS-CoV-2, testing, discrete choice experiment, latent class analysis, COVID-19, pattern, trend, preference, cohort, United States, discrete choice, diagnostic, transmission, vaccine, uptake, public health

Introduction

Screening and diagnostic testing for SARS-CoV-2 infection is a critical tool in the public health response to the COVID-19 pandemic, as early detection allows for the implementation of isolation and quarantine measures to reduce community transmission [1]. Negative tests are often required for work, school, and leisure activities. The importance of testing has been well demonstrated globally, such as in South Korea, where a “test, trace, isolate” strategy was largely credited for rapidly controlling transmission in spring 2020 [2]. Unfortunately, insufficient SARS-CoV-2 testing in the United States throughout the first several months of the pandemic led to both undetected cases transmitting disease in the community and an underestimation of the burden of COVID-19 [3]. Though the SARS-CoV-2 diagnostic testing supply has increased, maintaining testing uptake remains a major US public health priority in the efforts to control community transmission in the current pandemic phase of vaccinations and variants [4-6]. Currently, the US Centers for Disease Control and Prevention recommends diagnostic testing for individuals with symptoms of COVID-19 and unvaccinated individuals in close contact with a confirmed or suspected COVID-19 case; they also recommend screening tests for unvaccinated people, for example, for work, school, or travel [7-9]. Hereafter, we define SARS-CoV-2 testing as including both screening and diagnostic testing.

Individuals’ preferences about testing, specifically about the test itself or the service model that delivers the test, are important to consider in determining strategies to increase and maintain the uptake of SARS-CoV-2 testing in the vaccine era. In other contexts, individual preferences about a health-related product or service have been shown to be predictive of adoption of health-related behaviors [10]. Discrete choice experiments (DCEs), which are surveys that elicit stated preferences to identify trade-offs that a person makes with a product or service, have emerged as a tool to understand patient preferences and barriers to health care engagement [11], and are increasingly being used to inform patient-centered health care [12,13]. We previously conducted a DCE to understand SARS-CoV-2 testing preferences and found strong preferences for both viral and antibody testing, less invasive specimen collection, and rapid result turnaround time [14]. However, as observed in DCEs on other topics, patient preferences are often heterogeneous, and there may be distinct patterns of preferences within a population [15].

If indeed preferences are relevant to SARS-CoV-2 testing uptake and different patterns of preferences exist, these patterns may also be characterized by distinct demographic profiles. Previous work has documented demographic disparities in SARS-CoV-2 testing uptake [16-19]. For example, among individuals receiving care at US Department of Veterans Affairs sites, overall SARS-CoV-2 testing rates were lowest among non-Hispanic White individuals, especially among those who were male and those who lived in rural settings; however, testing rates per positive case were lowest among non-Hispanic Black and Hispanic individuals [17]. Patterns of preferences may also differ based on experience with a product or service, as observed in preferences for HIV self-testing, which varied with the frequency of past testing [20]. Concern or perceived risk is another component involved in making decisions about health, and since it is not uniformly distributed, it may also differ by patterns of preferences [21].

We hypothesized that discernible patterns of SARS-CoV-2 testing preferences would emerge and that individuals in these patterns would have distinct demographic profiles, SARS-CoV-2 testing history, and concern about infection. Identifying and characterizing heterogeneous testing preferences could facilitate the design and implementation of an array of services and ultimately enhance testing uptake and engagement.

Methods

Recruitment and Study Ethics

The survey design of our DCE has been previously described [14], but here we provide a summary. Participants enrolled in the CHASING COVID (Communities, Households, and SARS-CoV-2 Epidemiology) Cohort Study [22] who completed a routine follow-up assessment from July 30 through September 8, 2020, were invited to complete the DCE via a unique survey link at the end of the follow-up assessment. A US $5 Amazon gift card incentive was offered to participants completing the DCE. All study procedures were approved by the City University of New York (CUNY) Graduate School of Public Health and Health Policy Institutional Review Board.

DCE Design

The DCE was designed and implemented using Lighthouse Studio 9.8.1 (Sawtooth Software). Prior to the main DCE tasks, participants who agreed to complete the DCE were presented with a sample task related to ice cream preferences to help demonstrate the method and orient them to the DCE format. Each participant was then presented with 5 choice tasks with illustrations where they were asked to indicate which of 2 different SARS-CoV-2 testing scenarios was preferable or if neither was acceptable, imagining that “...the number of people hospitalized or dying from the coronavirus in your community was increasing” (see Multimedia Appendix 1 for a sample choice task). Specific testing attributes and levels examined are described in Multimedia Appendix 2. These attributes and levels were based on the current options for SARS-CoV-2 testing in the United States at the time of study design, as well as aspirational features hypothesized by the study investigators to be relevant to individual preferences. The combination and order of attribute levels presented to each participant were randomized for a balanced and orthogonal design using Sawtooth’s Balanced Overlap method [23,24]. However, we did constrain the combination of certain levels to reflect real-world possibilities. For example, we did not allow the nasopharyngeal (NP) swab specimen type level to be combined with either of the at-home specimen collection venue levels. The survey was tested internally by study team members prior to deployment.

Latent Class Analysis

For the unsegmented analysis reported previously [14], we estimated individual-level part-worth utilities for each attribute level and relative importance for each attribute using a hierarchical Bayesian multinomial logit (MNL) model, which iterates through the upper aggregate level of the hierarchy and the lower individual level of the hierarchy until convergence [25,26]. For this analysis, however, we used a latent class MNL model to identify different segments of respondents based on their response patterns. Latent class MNL estimation first selects random estimates of each segment’s utility values, then uses those values to fit each participant’s data and estimate the relative probability of each respondent belonging to each class [27]. Next, using probabilities as weights, logit weights are re-estimated for each pattern and log-likelihoods are accumulated across all classes. This process repeats until reaching the convergence limit. We estimated individual-level utilities as the weighted average of the group utilities weighted by each participant’s likelihood of belonging to each group, and zero-centered the utilities using effects coding, so that the reference level is the negative sum of the preferences of the other levels within each attribute [28-31]. Compared to aggregate logit, the effect of the independence of irrelevant alternatives assumption is reduced in latent class MNL [28]. We calculated relative importance at the individual level as the range of utilities within an attribute over the sum of the ranges of utilities of all attributes, and then calculated the mean and 95% CI for each segment as the mean ± 1.96 × SE. We calculated the mean of the weighted utilities by segment, and 95% CIs in the same manner as previously described [29,32].
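The post-estimation arithmetic described above can be illustrated with a minimal Python sketch. It assumes class-level utilities and membership probabilities are already available from the latent class MNL; the function names, attribute names, and all numbers are hypothetical, and this is not the Lighthouse Studio implementation.

```python
import math
from statistics import mean, stdev


def complete_effects_coding(free_levels):
    """Return all levels of an attribute: the estimated levels plus the
    reference level, set to the negative sum of the others (zero-centered)."""
    return list(free_levels) + [-sum(free_levels)]


def individual_utilities(class_utilities, membership_probs):
    """Average the class-level utilities, weighted by one respondent's
    probability of belonging to each class."""
    n_levels = len(class_utilities[0])
    return [
        sum(p * u[k] for p, u in zip(membership_probs, class_utilities))
        for k in range(n_levels)
    ]


def relative_importance(utilities_by_attribute):
    """Range of utilities within each attribute divided by the sum of the
    ranges across all attributes, expressed as a percentage."""
    ranges = {a: max(u) - min(u) for a, u in utilities_by_attribute.items()}
    total = sum(ranges.values())
    return {a: 100.0 * r / total for a, r in ranges.items()}


def mean_ci(values, z=1.96):
    """Mean and normal-approximation interval: mean +/- z * SE."""
    m = mean(values)
    se = stdev(values) / math.sqrt(len(values))
    return m, m - z * se, m + z * se


# Hypothetical two-class example for a three-level attribute.
class_a = complete_effects_coding([40.0, 10.0])
class_b = complete_effects_coding([-20.0, 5.0])
person = individual_utilities([class_a, class_b], [0.75, 0.25])
importance = relative_importance({"specimen": person, "venue": [10.0, -10.0]})
```

In practice, `relative_importance` would be applied to each participant, and `mean_ci` to the resulting per-participant values within each segment.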

We ran the latent class analysis with 2 to 10 classes, 5 replications per class, and 100 iterations per replication to facilitate convergence. We used the Akaike information criterion, Bayesian information criterion, and log-likelihood to inform best model selection. In addition, we sought a segmentation model that balanced statistical fit with interpretability and reasonably sized groups. We ran the latent class analysis with multiple starting seeds to facilitate finding the globally best-fit solution.
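As a rough sketch of how the fit statistics named above relate to the log-likelihood, the following Python snippet computes the Akaike and Bayesian information criteria and ranks candidate solutions by the latter. The parameter count and the choice of what counts as an observation (`n_obs`) are assumptions made for illustration, and the function names are hypothetical; the software used in the study may count both differently.

```python
import math


def n_parameters(n_classes, n_utilities_per_class):
    """Assumed count: class-specific utilities plus free class-size terms."""
    return n_classes * n_utilities_per_class + (n_classes - 1)


def information_criteria(log_likelihood, n_params, n_obs):
    """Return (AIC, BIC) for a fitted model; lower values indicate better fit."""
    aic = -2.0 * log_likelihood + 2.0 * n_params
    bic = -2.0 * log_likelihood + n_params * math.log(n_obs)
    return aic, bic


def rank_by_bic(fits, n_utilities_per_class, n_obs):
    """fits maps number of classes -> best log-likelihood across replications.
    Returns the class counts ordered from lowest to highest BIC."""
    scored = []
    for k, ll in fits.items():
        n_params = n_parameters(k, n_utilities_per_class)
        aic, bic = information_criteria(ll, n_params, n_obs)
        scored.append((bic, aic, k))
    return [k for _, _, k in sorted(scored)]


# Made-up log-likelihoods for 2-, 3-, and 4-class solutions.
ranking = rank_by_bic({2: -1000.0, 3: -950.0, 4: -948.0},
                      n_utilities_per_class=10, n_obs=500)
```

A ranking of this kind is only one input; as described above, interpretability and class size were weighed alongside statistical fit.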

Respondent Quality

To assess respondent quality, we computed DCE exercise completion time statistics and examined straightlining behavior (always picking the left-hand alternative or always picking the right-hand alternative) [33]. We reran the latent class analysis excluding participants who exhibited straightlining behavior, had completion times in the 5th or 10th percentile of all participants, or showed a combination of the two, to determine whether these participants affected the final model. All latent class analyses were done using Lighthouse Studio 9.8.1.
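The two quality checks can be sketched in Python as follows. The record layout, the function names, and the nearest-rank percentile definition are assumptions made for illustration and do not reproduce the exact procedure used in the study.

```python
import math


def is_straightliner(choices):
    """True if every task was answered with the same side ('left' or 'right')."""
    return len(set(choices)) == 1 and choices[0] in ("left", "right")


def percentile_cutoff(values, pct):
    """Nearest-rank percentile of a list of numbers."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def quality_flags(records, pct=5):
    """Map respondent id -> (straightliner, speeder) flags, where a speeder
    has a completion time at or below the pct-th percentile."""
    cutoff = percentile_cutoff([r["seconds"] for r in records], pct)
    return {
        r["id"]: (is_straightliner(r["choices"]), r["seconds"] <= cutoff)
        for r in records
    }


# Hypothetical respondents with 5 choice tasks each.
records = [
    {"id": "p1", "seconds": 20, "choices": ["left"] * 5},
    {"id": "p2", "seconds": 95, "choices": ["left", "right", "none", "left", "right"]},
    {"id": "p3", "seconds": 140, "choices": ["right"] * 5},
    {"id": "p4", "seconds": 60, "choices": ["none"] * 5},
]
flags = quality_flags(records, pct=25)
```

Keeping the two flags separate makes it straightforward to rerun a model excluding straightliners only, speeders only, or both.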

Simulation

We then extrapolated the 2-alternative choice task to a multiscenario simulation to estimate preferences for 5 testing approaches, summarized in Table 1, using the individual-level part-worth utility estimates from the latent class MNL analysis and stratifying by the latent class segments. Our simulations included the following:

Table 1.

Testing approaches used in the simulations.

| Testing scenario | Test | Specimen type | Venue | Result turnaround time | Included in simulation 1 | Included in simulation 2 |
| --- | --- | --- | --- | --- | --- | --- |
| 1. Standard testing, drive-through | PCR<sup>a</sup> | NP<sup>b</sup> swab | Drive-through community testing site | 48 hours | ✓<sup>c</sup> | ✓ |
| 2. Standard testing, walk-in | PCR | NP swab | Walk-in community testing site | 48 hours | ✓ | ✓ |
| 3. Less invasive testing | PCR | Spit sample | Walk-in community testing site | 48 hours | | ✓ |
| 4. Dual testing | PCR and serology | Finger prick | Walk-in community testing site | 48 hours | | ✓ |
| 5. At-home testing | PCR | Shallow nasal swab | Home collection, receiving and returning the kit via mail | Within 5 days | | ✓ |
| 6. None | N/A | N/A | N/A | N/A | ✓ | ✓ |

<sup>a</sup>PCR: polymerase chain reaction.

<sup>b</sup>NP: nasopharyngeal.

<sup>c</sup>✓: Check marks indicate whether the testing scenario was included in each simulation.

Scenarios 1 and 2: “standard testing” scenarios were based on major health departments’ testing programs in fall 2020—viral test (NP swab) and a result turnaround time of 48 hours. We included two unique scenarios to cover two variations in venue [34,35]—drive-through testing site and walk-in community testing site. Result turnaround time was based on reports from major health departments (eg, >85% of results within 2 days in California) [35].

Scenario 3: “less invasive testing” was based on some jurisdictions offering less invasive specimen collection, such as saliva [34]—viral test, saliva specimen, walk-in community testing site, and a result turnaround time of 48 hours.

Scenario 4: “dual testing” consisted of both viral and antibody testing and would necessitate a finger prick [36]—viral and antibody test, finger prick specimen, walk-in community testing site, and a result turnaround time of 48 hours.

Scenario 5: “at-home testing” was based on a commercially available at-home testing kit [37]—viral test, shallow nasal swab, home collection, receiving and returning the kit in the mail, and a result turnaround time within 5 days, as additional time would be required for mailing the specimen.

We conducted two sets of simulations to predict testing uptake: (1) the 2 standard testing scenarios, with a “no test” option to capture the proportion of participants in each class who would opt out of testing altogether, given the choices, and (2) the 2 standard testing scenarios as well as the less invasive, dual testing, and at-home testing scenarios, including a “no test” option. Predicted uptake for the 3 total options in the first simulation and the 6 total options in the second simulation was generated using the Randomized First Choice (RFC) method with utilities from the latent class MNL as inputs [25,38,39]. The RFC approach assumes that participants would choose the testing scenario with the highest total utility summed across attributes using each participant’s own individual estimated utilities, with some perturbation around the utilities to account for similarities between test scenarios and to reduce the independence of irrelevant alternatives problem. The simulator performs thousands of simulated draws per participant, then computes the proportion of participants who would choose each testing scenario based on its total utility. The simulations were done using Lighthouse Studio 9.8.1.
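The general idea of a randomized first-choice simulation can be sketched in Python. This is a simplified illustration, not the Lighthouse Studio simulator: it perturbs only the part-worths, and the normal noise distribution, the `noise_sd` scale, the data layout, and all utilities are assumptions. The name `"none"` is reserved here for the opt-out option.

```python
import random


def total_utility(partworths, scenario):
    """Sum the part-worths of the levels that make up one scenario."""
    return sum(partworths[attr][level] for attr, level in scenario.items())


def simulate_shares(respondents, scenarios, draws=1000, noise_sd=10.0, seed=0):
    """Share of simulated first choices for each scenario and for 'none'."""
    rng = random.Random(seed)
    counts = {name: 0 for name in scenarios}
    counts["none"] = 0
    for person in respondents:
        for _ in range(draws):
            # Perturb each level once per draw, so scenarios that share a
            # level also share its error and compete more closely.
            noisy = {
                attr: {lvl: u + rng.gauss(0.0, noise_sd) for lvl, u in levels.items()}
                for attr, levels in person["partworths"].items()
            }
            best, best_u = "none", person["none"]
            for name, scenario in scenarios.items():
                u = total_utility(noisy, scenario)
                if u > best_u:
                    best, best_u = name, u
            counts[best] += 1
    n = len(respondents) * draws
    return {name: c / n for name, c in counts.items()}


# Hypothetical scenarios and respondents (utilities are made up).
scenarios = {
    "standard": {"specimen": "np_swab", "venue": "walk_in"},
    "at_home": {"specimen": "nasal", "venue": "home"},
}
partworths = {
    "specimen": {"np_swab": -90.0, "nasal": 20.0},
    "venue": {"walk_in": -40.0, "home": 50.0},
}
respondents = [
    {"partworths": partworths, "none": -200.0},
    {"partworths": partworths, "none": 500.0},
]
shares = simulate_shares(respondents, scenarios, draws=200, noise_sd=0.0)
```

Predicted uptake in this sketch is one minus the share for `"none"`. Because the error is attached to levels rather than to whole scenarios, two scenarios that differ only in venue draw share mostly from each other, which is the property that softens the independence of irrelevant alternatives assumption.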

Additional Measures

Other measures of interest were merged from participants’ responses from the CHASING COVID Cohort Study [22] baseline interview (age, gender, race/ethnicity, education, region, urbanicity, comorbidities) and a combination of baseline, visit 1, and visit 2 follow-up interviews (employment, concern about infection, previous SARS-CoV-2 testing) [40] (see Multimedia Appendix 3 for details on how the variables were defined).

We computed descriptive statistics (frequencies and proportions) for these characteristics by class and compared the distributions of these variables using Pearson chi-square tests. An alpha level of .05 was the criterion for statistical significance. The descriptive statistics and bivariate analyses were done using SAS 9.4 (SAS Institute).
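For readers unfamiliar with the test, the Pearson chi-square statistic for a cross-tabulation of counts can be computed as in the following Python sketch. The counts are hypothetical and the function name is illustrative; the study's analyses were run in SAS, not with this code.

```python
def pearson_chi_square(table):
    """Return (statistic, degrees of freedom) for a table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of rows and columns.
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, dof


# Hypothetical counts: rows are two preference patterns, columns are two
# levels of a characteristic (for example, previously tested: yes / no).
statistic, dof = pearson_chi_square([[30, 70], [50, 50]])
```

The statistic is then compared with the chi-square distribution with `dof` degrees of freedom; with 1 degree of freedom, the critical value at an alpha level of .05 is 3.841.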

Results

Participant Demographic Characteristics

Of the 5098 invited cohort participants, 4793 participants completed the DCE (response rate 94.0%). The median age was 39 (IQR 30-53) years, 51.5% (n=2468) were female, 62.8% (n=3009) were non-Hispanic White, 16.4% (n=788) were Hispanic, 9.2% (n=442) were non-Hispanic Black, 7.4% (n=361) were Asian or Pacific Islander, and 3.9% (n=189) were another race.

Respondent Quality

We assessed respondent quality and reran the 5-group latent class analysis four times, excluding in turn participants who exhibited straightlining behavior (n=392), completion times in the 5th (n=239) or 10th (n=473) percentile of all responders, or combinations of straightlining and speeding. Though there was some volatility in the part-worth utility estimates for the smallest class in the models with exclusions, there were no qualitative differences in class sizes or relative attribute importance. Therefore, we used the model that retained all 4793 participants (see Multimedia Appendix 4 for additional details).

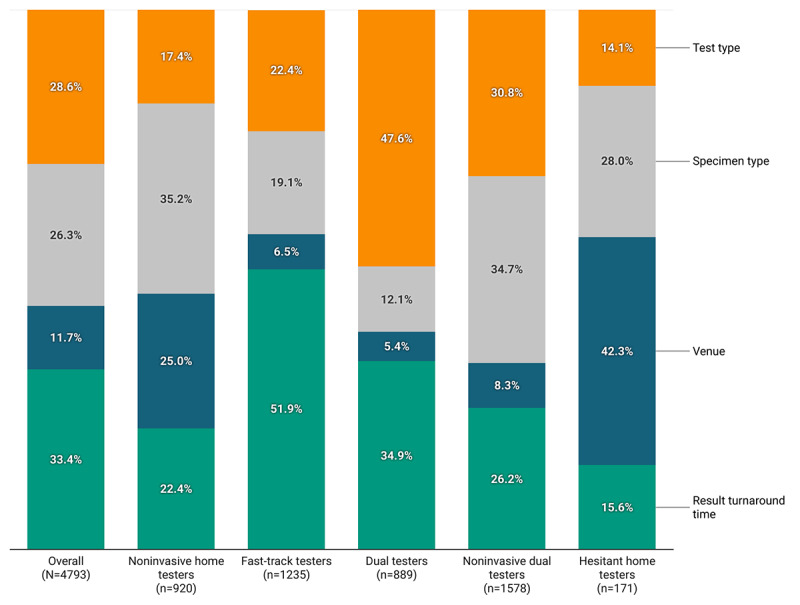

Patterns of Preferences: Relative Attribute Importance and Preferences for Levels of Attributes

Among the 4793 participants who completed the DCE, 5 distinct classes were identified balancing quantitative measures of model fit (Akaike information criterion, Bayesian information criterion, and log-likelihood) with class size and the ability to interpret the final solution. Multimedia Appendix 5 presents a summary of these criteria. Each class had a distinct profile or pattern of attribute relative importance (Figure 1) and preferences for specific levels of attributes (Table S1, Multimedia Appendix 6). We characterized the patterns based on the preferences within each class: noninvasive home testers (n=920, 19.2%), fast-track testers (n=1235, 25.8%), dual testers (n=889, 18.5%), noninvasive dual testers (n=1578, 32.9%), and hesitant home testers (n=171, 3.6%).

Figure 1.

Mean relative attribute importance for SARS-CoV-2 testing by preference pattern.

To elaborate, among noninvasive home testers, specimen type had the highest relative importance (35.2%, 95% CI 34.8%-35.5%), followed by venue (25.0%, 95% CI 24.4%-25.5%), result turnaround time (22.4%, 95% CI 22.0%-22.9%), and test type (17.4%, 95% CI 16.9%-17.9%). Participants in this pattern favored less invasive specimen types, with saliva being most preferred (utility 48.6) and NP swab and blood draw specimen types being least preferred (utilities –92.1 and –41.3, respectively). They preferred home sample collection, either returning the sample for testing by mail (utility 55.3) or to a collection site (utility 42.7), and least preferred testing at a doctor’s office or urgent care clinic (utility –41.6) or walk-in community testing site (utility –44.5). Participants in this pattern most preferred a fast turnaround time for their results (immediate, utility 42.0; same day, utility 30.4) and antibody and viral tests together (utility 39.5). The none option had a large negative utility (–299.6).

The attribute with the highest relative importance for fast-track testers was result turnaround time (51.9%, 95% CI 51.6%-52.3%), followed by test type (22.4%, 95% CI 22.1%-22.8%) and specimen type (19.1%, 95% CI 18.8%-19.4%); venue was least important (6.5%, 95% CI 6.4%-6.6%). Participants in this pattern had the most extreme range of values for relative importance. They most preferred an immediate (utility 98.6) and same-day (utility 63.8) result turnaround time and both antibody and viral tests (utility 53.0). They preferred less invasive specimen types, with a cheek swab being most preferred (utility 25.4) and NP swab and blood draw being least preferred (utilities –51.1 and –20.7, respectively). Although venue was the least important attribute for this group, among the specific options, testing at a community drive-through was most preferred (utility 10.8). Similar to noninvasive home testers, the fast-track testers had a large negative utility for the none option (–238.0).

Among dual testers, test type had the highest relative importance (47.6%, 95% CI 47.2%-47.9%), followed by result turnaround time (34.9%, 95% CI 34.7%-35.2%), with specimen type being less important (12.2%, 95% CI 11.8%-12.4%) and venue being least important (5.4%, 95% CI 5.3%-5.5%). Participants in this pattern most preferred both antibody and viral tests (utility 93.3) and fast turnaround times for results (immediate: utility 64.9; same day: utility 40.5). Less invasive specimen types were preferred, with saliva (utility 14.2) and cheek swab (utility 16.4) being most preferred, and NP swab being least preferred (utility –30.5). Regarding venue, testing at drive-through community sites was most preferred (utility 12.2). Dual testers had a large negative utility for the none opt-out choice (utility –217.4).

Among noninvasive dual testers, specimen type had the highest relative importance (34.7%, 95% CI 34.5%-34.8%), followed by test type (30.8%, 95% CI 30.7%-31.0%) and then result turnaround time (26.2%, 95% CI 26.0%-26.5%), with venue least important (8.3%, 95% CI 8.2%-8.4%). In this pattern, the most preferred specimen types were saliva (utility 28.1) and cheek swab (utility 37.8), and the least preferred was NP swab (utility –100.9). Both antibody and viral tests were preferred (utility 73.3), as well as fast turnaround times for results (immediate: utility 54.7; same day: utility 25.1). Regarding venue, home collection with returning the sample to a collection site was most preferred (utility 16.8). Similar to the previous three patterns, noninvasive dual testers had a large negative utility for the none opt-out choice (–226.6).

Finally, among participants in the hesitant home testers pattern, venue had the highest relative importance (42.3%, 95% CI 41.4%-43.1%) followed by specimen type (28.0%, 95% CI 27.7%-28.2%); result turnaround time (15.6%, 95% CI 14.9%-16.4%) and test type (14.1%, 95% CI 13.8%-14.5%) were similarly less important. In contrast to the other 4 patterns, hesitant home testers had a positive utility (32.5) for the none option, hence the use of “hesitant” in this pattern’s name. Participants in this pattern preferred less invasive specimens, including urine (utility 33.5), finger prick (utility 24.5), cheek swab and saliva (utilities 25.6 and 18.7, respectively), and least preferred NP swab (utility –77.7) and blood draw (utility –21.1). They most preferred home sample collection either returning the sample for testing by mail (utility 93.2) or to a collection site (utility 60.2), and least preferred testing at a walk-in community site (utility –75.9) or a doctor’s office/urgent care clinic (utility –44.6). Although test type and turnaround time were the least important attributes for hesitant home testers, participants with this pattern preferred both antibody and viral tests (utility 36.1) and fast turnaround times for results (immediate: utility 32.5; same day: utility 21.2).

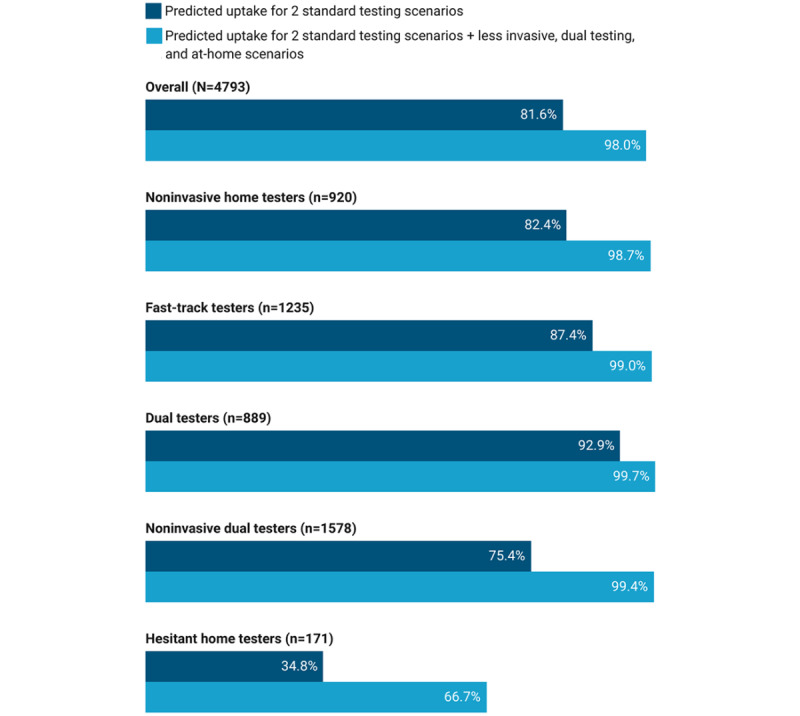

Simulated Preferences for Standard Testing, Less Invasive Testing, Dual Testing, and At-Home Testing

Predicted testing uptake for the 2 standard scenarios among all participants was 81.6%, ranging from 34.8% for hesitant home testers to 92.9% for dual testers (Figure 2). By including less invasive testing, dual testing, and at-home testing scenarios in our second simulation, predicted testing uptake among all participants increased by 16.5 percentage points to 98.1%. The addition of these 3 scenarios had the biggest impact on hesitant home testers, with an increase in uptake from 34.8% to 66.7% (31.9 percentage points), and noninvasive dual testers, with an increase in uptake from 75.4% to 99.4% (24.0 percentage points).

Figure 2.

Simulated uptake of SARS-CoV-2 testing for 2 standard testing scenarios versus the addition of less invasive, dual testing, and at-home testing scenarios, overall and by preference pattern.

In our simulation of all 6 scenarios (Table S2, Multimedia Appendix 6), the standard testing scenarios generally had the lowest predicted uptake, though with higher uptake for the drive-through option (overall 6.6%) compared with the walk-in community site option (overall 0.9%). Of the 3 additional scenarios, the dual testing scenario combining polymerase chain reaction and serology had the highest predicted uptake overall (61.8%) and was highest for fast-track testers (60.8%), dual testers (65.0%), and noninvasive dual testers (80.9%). The at-home testing scenario had the highest predicted uptake for noninvasive home testers (37.9%) and hesitant home testers (38.2%); however, for noninvasive home testers, there was also similar uptake for the dual testing scenario (35.5%). For hesitant home testers, one-third (33.3%) were predicted to opt out of testing altogether.

Demographic Characteristics of Participants by Preference Pattern

There were statistically significant differences by preference pattern in age group, gender, race/ethnicity, education, and employment, but not in geographic region, urbanicity, or presence of any comorbidity (Table S3, Multimedia Appendix 6). Hesitant home testers were older with less representation in the youngest age group of 18 to 39 years (69/171, 40.4%) compared with participants in other patterns (range 49.3%-56.1%). Fast-track testers were less often female (583/1235, 47.2%), especially when compared with hesitant home testers (100/171, 58.5%) and to a lesser extent when compared with participants in other patterns (range 50.5%-53.6%). Dual testers (625/889, 70.3%) and noninvasive dual testers (1059/1578, 67.1%) were more often non-Hispanic White, especially compared with hesitant home testers (85/171, 49.7%) and to a lesser extent with noninvasive home testers (516/920, 56.1%) and fast-track testers (725/1235, 58.6%). Dual testers tended to be college graduates (628/889, 70.6%), especially compared with noninvasive home testers (508/920, 55.2%) and to a lesser extent when compared with participants in other patterns (range 59.1%-64.3%). Regarding employment, hesitant home testers were more often out of work (37/171, 21.6%) compared with participants in other patterns (range 9.3%-13.8%).

Previous SARS-CoV-2 Testing, COVID-19 Diagnosis, and Infection Concern by Preference Pattern

There were statistically significant differences by preference pattern for previous SARS-CoV-2 testing, reported COVID-19 diagnosis, concern about getting infected, concern about loved ones getting infected, concern about hospitals being overwhelmed, personally knowing someone who had died from COVID-19, and submitting a dried blood spot (DBS) for testing as part of our cohort study (Table S3, Multimedia Appendix 6). Fast-track testers (424/1235, 34.3%) and dual testers (298/889, 33.5%) more often had previously tested for SARS-CoV-2 compared with participants in other patterns (range 23.4%-25.9%). Reporting a previous laboratory-confirmed diagnosis of COVID-19 was lowest among dual testers (35/889, 3.9%) and noninvasive dual testers (51/1578, 3.2%) compared with participants in other patterns (range 5.3%-7.5%). Among those who did not report being previously diagnosed with COVID-19, dual testers were less often not at all worried or not too worried about getting infected with SARS-CoV-2 (179/889, 21.0%), especially when compared with hesitant home testers (52/171, 32.1%) and to a lesser extent participants in other patterns (range 23.9%-29.6%). A similar pattern was observed for concern about loved ones getting infected with SARS-CoV-2, where dual testers had the lowest proportion of people who reported being not at all worried or not too worried about loved ones getting infected (82/889, 9.2%) compared with hesitant home testers (38/171, 22.2%) and to a lesser extent compared with participants in other patterns (range 13.0%-17.5%). Likewise, dual testers had the lowest proportion of people who reported being not at all worried or not too worried about hospitals being overwhelmed by COVID-19 (112/889, 12.6%) compared to participants in other patterns (range 19.3%-32.2%).

Fast-track testers were more likely to personally know someone who had died from COVID-19 (320/1235, 25.9%) than hesitant home testers (34/171, 19.9%) and participants in other patterns (range 21.0%-22.5%). Noninvasive dual testers (1323/1578, 83.8%) and dual testers (741/889, 83.4%) were more likely to have submitted at least one DBS specimen for serology as part of our cohort study, compared with hesitant home testers (121/171, 70.8%), noninvasive home testers (671/920, 72.9%), and fast-track testers (915/1235, 74.1%).

Discussion

Principal Results

A one-size-fits-all approach to SARS-CoV-2 testing may alienate or exclude segments of the population with preferences for different testing modalities. If the goal is to increase and maintain testing uptake and engagement, then the identification of patterns of heterogeneous testing preferences could inform the design and implementation of complementary testing services that support greater coverage.

We identified substantial differences in preferences for aspects of SARS-CoV-2 testing, as shown by the differences in attribute relative importance and part-worth utilities. Overall, participants preferred getting both antibody and viral tests with less invasive specimens and fast turnaround time for results; however, the degree to which these features influenced participants’ choices varied across patterns. Our 6-scenario simulation showed that offering additional venues and test type options would increase testing uptake at a time when case numbers were increasing in many parts of the United States, with dual viral and antibody testing expected to have the biggest uptake. Though identifying previous infections via antibody tests does not help control transmission, including antibody tests with diagnostic testing could incentivize some people to get tested. An at-home testing option would also be expected to increase uptake, especially for participants in the noninvasive home testers and hesitant home testers patterns, which comprised about one-fifth of the sample.

Since this study was undertaken, there have been developments in SARS-CoV-2 viral and serologic testing that could address many of the distinct preferences across patterns, including the expansion of at-home specimen collection, affordable fully at-home tests commercially available without a prescription, rapid point-of-care tests, and less invasive specimens [41-44]. Though not yet approved by the US Food and Drug Administration, a promising saliva-based antibody test is in development [45], which may, in the future, allow for a single saliva specimen to be used for both serology and molecular testing. In our study, noninvasive home testers and hesitant home testers, who placed most importance on specimen type and venue, respectively, were more often non-Hispanic Black and Hispanic. These testing developments may provide a pathway to increase lower testing rates among Black and Latino individuals, who have experienced a disproportionate burden of cases, hospitalization, and deaths due to COVID-19 [46].

We also observed differences in sample characteristics by preference pattern, including demographic characteristics, previous testing, COVID-19 diagnosis, and concern about infection. These differences could be leveraged to promote testing through media tools and campaigns targeting specific populations or specific behaviors. An example of the former is “The Conversation: Between Us, About Us” [47], created by the Kaiser Family Foundation’s Greater than COVID and the Black Coalition Against COVID and designed to address “some of the most common questions and concerns Black people have about COVID-19 vaccines.” An example of the latter is New York City’s Test and Trace Corps’ “Do it for them. Get Tested for COVID-19” advertisements on Twitter, bus shelters, and pizza boxes [48-50], which feature pictures of families of different races and ethnicities, sometimes multigenerational.

Across most of the preference patterns, the large negative utilities that we observed for the no-test option indicated a willingness to test. The exception was the hesitant home testers pattern, which had a positive utility for the none option, suggesting that this group of participants would be more likely to opt out of testing altogether compared to participants in the other patterns. Though hesitant home testers were least prevalent in our study, qualitative work may be warranted to understand the factors that influence their willingness to test.
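To make the link between the sign of the none-option utility and opting out concrete, the following is a minimal sketch using the standard multinomial logit share rule. It is not the Randomized First Choice simulation used in the study, and the utility values are hypothetical raw logit-scale numbers chosen only for illustration; they are not the estimates reported in Table S1.

```python
import math


def choice_shares(utilities):
    """Multinomial logit shares: exp(u_i) / sum_j exp(u_j)."""
    # Subtract the maximum utility before exponentiating for numerical stability.
    u_max = max(utilities.values())
    exp_u = {name: math.exp(u - u_max) for name, u in utilities.items()}
    total = sum(exp_u.values())
    return {name: value / total for name, value in exp_u.items()}


# Hypothetical total utilities for one offered testing scenario versus opting out.
# A pattern with a large negative none utility (most patterns in the study):
willing = {"test": 1.0, "none": -2.0}
# A pattern with a positive none utility (as described for hesitant home testers):
hesitant = {"test": 1.0, "none": 1.5}

willing_shares = choice_shares(willing)
hesitant_shares = choice_shares(hesitant)

print(f"Opt-out share, negative none utility: {willing_shares['none']:.3f}")
print(f"Opt-out share, positive none utility: {hesitant_shares['none']:.3f}")
```

Under these assumed values, the predicted opt-out share is small when the none utility is strongly negative and exceeds one-half when the none utility is larger than the utility of the offered test, which is the qualitative pattern described above.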

Limitations

Our results should be interpreted in the context of their limitations. An important limitation of our analysis is related to latent class analysis in general, as best practices for using it to study heterogeneity in preferences in health-related research are still evolving [15]. We selected 5 classes after comparing sample size, fit statistics, and overall interpretability of 2 to 10 classes. On the one hand, the hesitant home testers pattern was small relative to the other patterns, and one could argue that it could have been combined with a larger class in a solution with fewer groups. However, in every lower-dimension solution, a similarly small class was identified that was strongly influenced by venue and specimen type and had a nonnegative utility for opting out of testing. On the other hand, it is possible that additional distinct patterns of preference remained undetected with only 5 classes.
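As an illustration of how fit statistics can be compared across candidate class solutions, the sketch below computes two widely used information criteria, the Akaike information criterion (AIC) and the Bayesian information criterion (BIC). This section does not state which fit statistics were used, so this is an assumption made for illustration only. The log-likelihoods and the number of part-worth parameters per class are hypothetical, and treating the 4793 respondents as the sample size is likewise an assumption.

```python
import math


def information_criteria(log_lik, n_params, n_obs):
    """Return (AIC, BIC) for a fitted model; lower values indicate better fit."""
    aic = -2.0 * log_lik + 2.0 * n_params
    bic = -2.0 * log_lik + n_params * math.log(n_obs)
    return aic, bic


def n_parameters(n_classes, params_per_class):
    """Part-worths for each class plus (n_classes - 1) free class-size parameters."""
    return n_classes * params_per_class + (n_classes - 1)


# Hypothetical values, not taken from the study's latent class output.
PARAMS_PER_CLASS = 12
N_OBS = 4793  # respondents who completed the DCE; use as n is an assumption
hypothetical_log_lik = {2: -52000.0, 3: -50500.0, 4: -49800.0, 5: -49300.0, 6: -49270.0}

criteria = {}
for k, log_lik in hypothetical_log_lik.items():
    criteria[k] = information_criteria(log_lik, n_parameters(k, PARAMS_PER_CLASS), N_OBS)

best_by_aic = min(criteria, key=lambda k: criteria[k][0])
best_by_bic = min(criteria, key=lambda k: criteria[k][1])

for k, (aic, bic) in sorted(criteria.items()):
    print(f"{k} classes: AIC={aic:.1f}, BIC={bic:.1f}")
print(f"Lowest AIC: {best_by_aic} classes; lowest BIC: {best_by_bic} classes")
```

With these made-up inputs, the two criteria favor different numbers of classes, which is one reason class size and interpretability are usually weighed alongside fit statistics rather than relying on a single number.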

Another potential limitation is that the stated preferences regarding SARS-CoV-2 testing in our DCE may not necessarily align with actual behavior (ie, revealed preferences); however, a systematic review and meta-analysis found that, in general, stated preferences in DCEs did align with revealed preferences [10]. To minimize cognitive burden, DCE design must balance the inclusion of relevant and actionable attributes and levels with the complexity of each choice task [51]. However, one reason for lack of concordance between stated and revealed preferences in general is the omission of attributes in DCEs that may influence real-life decisions [10,29]. Aspects of accessibility including cost, transportation time, availability of testing, and wait time could be explored in future studies, as well as how participants’ prior knowledge of test options may have influenced their decisions, the effects of operator error, and test validity (ie, sensitivity and specificity). Nevertheless, the different patterns of preferences for features of the test and testing experience as ascertained in our study could be used to inform the development of strategies deployed by public health agencies, who can account for the operating characteristics of tests. In some instances, even a less accurate test implemented at scale could have a larger public health impact than a more accurate test with lower uptake [52].
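The trade-off between accuracy and uptake in the last sentence above can be made concrete with simple arithmetic. The sketch below approximates the fraction of infections detected as uptake multiplied by test sensitivity, which is a deliberate simplification that ignores testing frequency, turnaround time, and differences in who gets tested. The uptake figures are the simulated values reported in the abstract; both sensitivity values are hypothetical.

```python
def fraction_detected(uptake, sensitivity):
    """Approximate share of infections detected: uptake multiplied by sensitivity."""
    return uptake * sensitivity


# Simulated uptake from the abstract: status quo versus additional testing scenarios.
STATUS_QUO_UPTAKE = 0.816
EXPANDED_UPTAKE = 0.981

# Hypothetical sensitivities, for illustration only.
ACCURATE_TEST_SENSITIVITY = 0.95
CONVENIENT_TEST_SENSITIVITY = 0.85

status_quo = fraction_detected(STATUS_QUO_UPTAKE, ACCURATE_TEST_SENSITIVITY)
expanded = fraction_detected(EXPANDED_UPTAKE, CONVENIENT_TEST_SENSITIVITY)

# Sensitivity at which the expanded-options strategy would match the status quo.
break_even_sensitivity = status_quo / EXPANDED_UPTAKE

print(f"Status quo, more accurate test: {status_quo:.3f}")
print(f"Expanded options, less accurate test: {expanded:.3f}")
print(f"Break-even sensitivity for expanded options: {break_even_sensitivity:.3f}")
```

Under these assumptions, the higher-uptake strategy detects a larger share of infections despite the less sensitive test, and it would continue to do so as long as its sensitivity stayed above the break-even value.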

Although our sample was large and geographically diverse, it was not a nationally representative sample, so it may be that there are additional patterns of preferences that exist beyond our study in other populations. Not all testing options are available in every jurisdiction, and different patterns of testing preferences may emerge in different settings. Furthermore, most participants (78.3%) in the DCE had already completed at-home self-collection of a DBS specimen as part of our larger cohort study, which may have influenced preferences regarding the venue of testing.

Lastly, participants’ preferences about SARS-CoV-2 testing may change over time as the pandemic continues to evolve. Research on other topics has demonstrated that choices stated in a DCE are generally consistent, with good test-retest reliability [53,54]; however, knowledge about SARS-CoV-2 and COVID-19 has rapidly evolved and is widely disseminated in mainstream media [55], which could plausibly impact preferences. The first report of reinfection and the potential waning of antibodies appeared in the United States in October 2020 [56], approximately 1 month after the completion of our DCE, and could influence current preferences about antibody testing. In addition, the availability of highly efficacious vaccines starting in December 2020 [57] could have an impact on testing service preferences more globally, potentially causing more people to opt out of testing when case numbers, hospitalizations, and deaths decrease. It will also be important to reexamine preferences now that new testing modalities have become available, such as fully at-home molecular tests that provide rapid results [58-60], and as vaccine uptake increases.

Conclusions

Our study may inform ways to better design and deliver SARS-CoV-2 testing services in line with pandemic response goals. The heterogeneity in preferences observed across patterns highlights that having more options available (and educating the public about their availability) is one way to increase testing uptake in an emerging and ongoing pandemic. Importantly, our analysis highlights that preferences for SARS-CoV-2 testing differ by population characteristics, including demographics, which must also be considered in the context of existing health disparities in the United States. Even as increasing proportions of the population are vaccinated, we anticipate that testing will remain a critical tool in the pandemic response until vaccine coverage and herd immunity are sufficiently high to reduce transmission and control more pathogenic or virulent variants; offering a mix of testing options is an important aspect of increasing and maintaining testing uptake.

Acknowledgments

This study was funded by the National Institute of Allergy and Infectious Diseases of the National Institutes of Health (NIH), award number 3UH3AI133675-04S1 (multiple principal investigators: authors DN and CG); the City University of New York (CUNY) Institute for Implementation Science in Population Health; the COVID-19 Grant Program of the CUNY Graduate School of Public Health and Health Policy; and National Institute of Child Health and Human Development grant P2C HD050924 (Carolina Population Center). The NIH had no role in the publication of this manuscript, nor does it necessarily endorse the findings.

The authors wish to thank the participants of the CHASING COVID Cohort Study. We are grateful for their contributions to the advancement of science around the SARS-CoV-2 pandemic. We thank Patrick Sullivan and MTL Labs for local validation work on the serologic assays for use with dried blood spots that greatly benefited our study. We are also grateful to MTL Labs for processing specimen collection kits and serologic testing of our cohort’s specimens.

Abbreviations

- CHASING COVID Cohort Study: Communities, Households, and SARS-CoV-2 Epidemiology Cohort Study
- CUNY: City University of New York
- DBS: dried blood spot
- DCE: discrete choice experiment
- MNL: multinomial logit
- NIH: National Institutes of Health
- NP: nasopharyngeal
- RFC: Randomized First Choice

Desktop example of SARS-CoV-2 testing preferences choice task.

SARS-CoV-2 testing discrete choice experiment attributes and levels.

Definition of variables from the CHASING COVID Cohort Study.

Respondent quality details.

Summary of best replications of latent class analysis multinomial logit.

Supplementary tables on zero-centered part-worth utilities for SARS-CoV-2 testing attribute levels by preference pattern (Table S1); simulated uptake of standard testing, less invasive testing, dual testing, and at-home testing scenarios by preference pattern (Table S2); and sample characteristics, previous testing, COVID-19 diagnosis, and concern about infection stratified by preference pattern for SARS-CoV-2 testing (Table S3).

CHERRIES (Checklist for Reporting Results of Internet E-Surveys) checklist.

Footnotes

Authors' Contributions: RZ, SGK, AB, WY, CM, DAW, AMP, LW, MSR, SK, MMR, ARM, CG, and DN designed and conducted the cohort study and discrete choice experiment. MLR and RZ conducted the statistical analysis. RZ, MLR, and DN interpreted the results. RZ and MLR drafted the manuscript. All authors critically reviewed the manuscript and approved the final version.

Conflicts of Interest: None declared.

References

- 1.Dinnes J, Deeks J, Berhane S, Taylor M, Adriano A, Davenport C, Dittrich S, Emperador D, Takwoingi Y, Cunningham J, Beese S, Domen J, Dretzke J, Ferrante di Ruffano L, Harris I, Price M, Taylor-Phillips S, Hooft L, Leeflang M, McInnes M, Spijker R, Van den Bruel A, Cochrane COVID-19 Diagnostic Test Accuracy Group. Rapid, point-of-care antigen and molecular-based tests for diagnosis of SARS-CoV-2 infection. Cochrane Database Syst Rev. 2021 Mar 24;3:CD013705. doi: 10.1002/14651858.CD013705.pub2. http://europepmc.org/abstract/MED/33760236 .

- 2.Dighe A, Cattarino L, Cuomo-Dannenburg G, Skarp J, Imai N, Bhatia S, Gaythorpe KAM, Ainslie KEC, Baguelin M, Bhatt S, Boonyasiri A, Brazeau NF, Cooper LV, Coupland H, Cucunuba Z, Dorigatti I, Eales OD, van Elsland SL, FitzJohn RG, Green WD, Haw DJ, Hinsley W, Knock E, Laydon DJ, Mellan T, Mishra S, Nedjati-Gilani G, Nouvellet P, Pons-Salort M, Thompson HA, Unwin HJT, Verity R, Vollmer MAC, Walters CE, Watson OJ, Whittaker C, Whittles LK, Ghani AC, Donnelly CA, Ferguson NM, Riley S. Response to COVID-19 in South Korea and implications for lifting stringent interventions. BMC Med. 2020 Oct 09;18(1):321. doi: 10.1186/s12916-020-01791-8. https://bmcmedicine.biomedcentral.com/articles/10.1186/s12916-020-01791-8 .

- 3.Wu SL, Mertens AN, Crider YS, Nguyen A, Pokpongkiat NN, Djajadi S, Seth A, Hsiang MS, Colford JM, Reingold A, Arnold BF, Hubbard A, Benjamin-Chung J. Substantial underestimation of SARS-CoV-2 infection in the United States. Nat Commun. 2020 Sep 09;11(1):4507. doi: 10.1038/s41467-020-18272-4.

- 4.Biden J. National strategy for the COVID-19 response and pandemic preparedness. The White House. 2021. Jan 21, [2021-06-23]. https://www.whitehouse.gov/wp-content/uploads/2021/01/National-Strategy-for-the-COVID-19-Response-and-Pandemic-Preparedness.pdf .

- 5.Morrison S. What Covid-19 testing looks like in the age of vaccines. Vox. 2021. Apr 01, [2021-06-23]. https://www.vox.com/recode/22340744/covid-19-coronavirus-testing-vaccines-rapid-home-antigen .

- 6.Meek K. What is the utility of COVID-19 laboratory testing in vaccinated individuals? ARUP Laboratories. 2021. Feb 10, [2021-06-23]. https://www.aruplab.com/news/2-10-2021/utility-of-covid-19-laboratory-testing-in-vaccinated-individuals .

- 7.Overview of testing for SARS-CoV-2 (COVID-19): coronavirus disease 2019 (COVID-19) Centers for Disease Control and Prevention. 2020. [2020-10-18]. https://www.cdc.gov/coronavirus/2019-ncov/hcp/testing-overview.html .

- 8.COVID-19 and your health--Domestic travel during COVID-19. Centers for Disease Control and Prevention. 2020. [2021-05-21]. https://www.cdc.gov/coronavirus/2019-ncov/travelers/travel-during-covid19.html .

- 9.COVID-19 and your health--international travel during COVID-19. Centers for Disease Control and Prevention. 2020. [2021-05-21]. https://www.cdc.gov/coronavirus/2019-ncov/travelers/international-travel-during-covid19.html .

- 10.Quaife M, Terris-Prestholt F, Di Tanna GL, Vickerman P. How well do discrete choice experiments predict health choices? A systematic review and meta-analysis of external validity. Eur J Health Econ. 2018 Nov;19(8):1053–1066. doi: 10.1007/s10198-018-0954-6.

- 11.Lancsar E, Louviere J. Conducting discrete choice experiments to inform healthcare decision making: a user's guide. Pharmacoeconomics. 2008;26(8):661–77. doi: 10.2165/00019053-200826080-00004.

- 12.Viney R, Lancsar E, Louviere J. Discrete choice experiments to measure consumer preferences for health and healthcare. Expert Rev Pharmacoecon Outcomes Res. 2002 Aug;2(4):319–26. doi: 10.1586/14737167.2.4.319.

- 13.Janus SIM, Weernink MGM, van Til JA, Raisch DW, van Manen JG, IJzerman MJ. A systematic review to identify the use of preference elicitation methods in health care decision making. Value Health. 2014 Nov;17(7):A515–6. doi: 10.1016/j.jval.2014.08.1596. https://linkinghub.elsevier.com/retrieve/pii/S1098-3015(14)03526-8 .

- 14.Zimba R, Kulkarni S, Berry A, You W, Mirzayi C, Westmoreland D, Parcesepe A, Waldron L, Rane M, Kochhar S, Robertson M, Maroko A, Grov C, Nash D. SARS-CoV-2 testing service preferences of adults in the United States: discrete choice experiment. JMIR Public Health Surveill. 2020 Dec 31;6(4):e25546. doi: 10.2196/25546. https://publichealth.jmir.org/2020/4/e25546/

- 15.Zhou M, Thayer WM, Bridges JFP. Using latent class analysis to model preference heterogeneity in health: a systematic review. Pharmacoeconomics. 2018 Feb;36(2):175–187. doi: 10.1007/s40273-017-0575-4.

- 16.Lieberman-Cribbin W, Tuminello S, Flores RM, Taioli E. Disparities in COVID-19 testing and positivity in New York City. Am J Prev Med. 2020 Sep;59(3):326–332. doi: 10.1016/j.amepre.2020.06.005. http://europepmc.org/abstract/MED/32703702 .

- 17.Rentsch CT, Kidwai-Khan F, Tate JP, Park LS, King JT, Skanderson M, Hauser RG, Schultze A, Jarvis CI, Holodniy M, Lo Re V, Akgün KM, Crothers K, Taddei TH, Freiberg MS, Justice AC. Patterns of COVID-19 testing and mortality by race and ethnicity among United States veterans: a nationwide cohort study. PLoS Med. 2020 Sep;17(9):e1003379. doi: 10.1371/journal.pmed.1003379. https://dx.plos.org/10.1371/journal.pmed.1003379 .

- 18.Dryden-Peterson S, Velásquez GE, Stopka TJ, Davey S, Lockman S, Ojikutu BO. Disparities in SARS-CoV-2 testing in Massachusetts during the COVID-19 pandemic. JAMA Netw Open. 2021 Feb 01;4(2):e2037067. doi: 10.1001/jamanetworkopen.2020.37067. https://jamanetwork.com/journals/jamanetworkopen/fullarticle/10.1001/jamanetworkopen.2020.37067 .

- 19.Mody A, Pfeifauf K, Bradley C, Fox B, Hlatshwayo MG, Ross W, Sanders-Thompson V, Joynt Maddox K, Reidhead M, Schootman M, Powderly WG, Geng EH. Understanding drivers of coronavirus disease 2019 (COVID-19) racial disparities: a population-level analysis of COVID-19 testing among Black and White populations. Clin Infect Dis. 2021 Nov 02;73(9):e2921–e2931. doi: 10.1093/cid/ciaa1848. http://europepmc.org/abstract/MED/33315066 .

- 20.Ong JJ, De Abreu Lourenco R, Street D, Smith K, Jamil MS, Terris-Prestholt F, Fairley CK, McNulty A, Hynes A, Johnson K, Chow EPF, Bavinton B, Grulich A, Stoove M, Holt M, Kaldor J, Guy R. The preferred qualities of human immunodeficiency virus testing and self-testing among men who have sex with men: a discrete choice experiment. Value Health. 2020 Jul;23(7):870–879. doi: 10.1016/j.jval.2020.04.1826. https://linkinghub.elsevier.com/retrieve/pii/S1098-3015(20)32061-1 .

- 21.Harrison M, Rigby D, Vass C, Flynn T, Louviere J, Payne K. Risk as an attribute in discrete choice experiments: a systematic review of the literature. Patient. 2014;7(2):151–70. doi: 10.1007/s40271-014-0048-1.

- 22.Robertson MM, Kulkarni SG, Rane M, Kochhar S, Berry A, Chang M, Mirzayi C, You W, Maroko A, Zimba R, Westmoreland D, Grov C, Parcesepe AM, Waldron L, Nash D, CHASING COVID Cohort Study Team. Cohort profile: a national, community-based prospective cohort study of SARS-CoV-2 pandemic outcomes in the USA-the CHASING COVID Cohort study. BMJ Open. 2021 Sep 21;11(9):e048778. doi: 10.1136/bmjopen-2021-048778. https://bmjopen.bmj.com/lookup/pmidlookup?view=long&pmid=34548354 .

- 23.Getting started with interviewing. Sawtooth Software. [2020-07-28]. https://legacy.sawtoothsoftware.com/help/lighthouse-studio/manual/

- 24.Orme BK. Fine-tuning CBC and adaptive CBC questionnaires. Sawtooth Software. 2009. [2020-07-28]. https://sawtoothsoftware.com/resources/technical-papers/fine-tuning-cbc-and-adaptive-cbc-questionnaires-2009 .

- 25.Orme BK, Chrzan K. Becoming an Expert in Conjoint Analysis. Provo, UT: Sawtooth Software, Inc; 2017. 326 pp.

- 26.CBC/HB technical paper. Sawtooth Software. 2009. [2020-08-06]. https://sawtoothsoftware.com/resources/technical-papers/cbc-hb-technical-paper-2009 .

- 27.Latent Class v4.5 Software for latent class estimation for CBC data. Sawtooth Software. 2012. [2020-11-01]. https://content.sawtoothsoftware.com/assets/cd185165-1b9f-4213-bac0-bddb5510da03 .

- 28.The Latent Class Technical Paper V4.8. Sawtooth Software. 2021. [2021-04-30]. https://sawtoothsoftware.com/resources/technical-papers/latent-class-technical-paper .

- 29.Orme BK. Getting Started With Conjoint Analysis. Manhattan Beach, CA: Research Publishers, LLC; 2014. p. 234.

- 30.Chrzan K. Raw utilities vs zero-centered diffs. Sawtooth Software. 2014. [2020-09-24]. https://legacy.sawtoothsoftware.com/forum/7160/raw-utilities-vs-zero-centered-diffs?show=7160#q7160 .

- 31.Sawtooth Software, Inc Scaling conjoint part worths: points vs zero-centered diffs. Sawtooth Solutions. 1999. [2020-09-25]. https://legacy.sawtoothsoftware.com/about-us/news-and-events/sawtooth-solutions/ss10-cb/1201-scaling-conjoint-part-worths-points-vs-zero-centered-diffs .

- 32.Rosner B. Fundamentals of Biostatistics, 5th edition. Pacific Grove, CA: Duxbury Press; 2000. p. 792.

- 33.Orme BK. Consistency cutoffs to identify "Bad" respondents in CBC, ACBC, and MaxDiff. Sawtooth Software. 2019. [2020-09-01]. https://sawtoothsoftware.com/resources/technical-papers/consistency-cutoffs-to-identify-bad-respondents-in-cbc-acbc-and-maxdiff .

- 34.New York State COVID-19 testing. Novel Coronavirus (COVID-19) [2020-11-11]. https://coronavirus.health.ny.gov/covid-19-testing .

- 35.Testing. California Department of Public Health. [2020-11-11]. https://www.cdph.ca.gov/Programs/CID/DCDC/Pages/COVID-19/Testing.aspx .

- 36.Coronavirus disease 2019 testing basics. US Food and Drug Administration. 2021. Apr 16, [2020-11-11]. https://www.fda.gov/consumers/consumer-updates/coronavirus-disease-2019-testing-basics .

- 37.pixel by Labcorp COVID-19 test (at-home collection kit) Labcorp OnDemand. [2020-11-12]. https://www.pixel.labcorp.com/at-home-test-kits/covid-19-test-home-collection-kit .

- 38.Randomized First Choice. Sawtooth Software. [2020-08-21]. https://sawtoothsoftware.com/help/lighthouse-studio/manual/hid_randomizedfirstchoice.html .

- 39.Orme BK, Huber J. Improving the value of conjoint simulations. Marketing Res. 2000;12(4):12–20.

- 40.CHASING COVID research study, study materials and resources. CUNY Institute for Implementation Science in Population Health. [2021-05-21]. https://cunyisph.org/chasing-covid/

- 41.Sheridan C. Coronavirus testing finally gathers speed. Nat Biotechnol. 2020 Nov 05;:1. doi: 10.1038/d41587-020-00021-z.

- 42.In vitro diagnostics EUAs - antigen diagnostic tests for SARS-CoV-2. US Food and Drug Administration. 2021. May 21, [2021-06-04]. https://www.fda.gov/medical-devices/coronavirus-disease-2019-covid-19-emergency-use-authorizations-medical-devices/in-vitro-diagnostics-euas-antigen-diagnostic-tests-sars-cov-2 .

- 43.In vitro diagnostics EUAs - serology and other adaptive immune response tests for SARS-CoV-2. US Food and Drug Administration. 2021. Jun 01, [2021-06-04]. https://www.fda.gov/medical-devices/coronavirus-disease-2019-covid-19-emergency-use-authorizations-medical-devices/in-vitro-diagnostics-euas-serology-and-other-adaptive-immune-response-tests-sars-cov-2 .

- 44.In vitro diagnostics EUAs - molecular diagnostic tests for SARS-CoV-2. US Food and Drug Administration. 2021. Jun 01, [2021-06-04]. https://www.fda.gov/medical-devices/coronavirus-disease-2019-covid-19-emergency-use-authorizations-medical-devices/in-vitro-diagnostics-euas-molecular-diagnostic-tests-sars-cov-2 .

- 45.Pisanic N, Randad PR, Kruczynski K, Manabe YC, Thomas DL, Pekosz A, Klein SL, Betenbaugh MJ, Clarke WA, Laeyendecker O, Caturegli PP, Larman HB, Detrick B, Fairley JK, Sherman AC, Rouphael N, Edupuganti S, Granger DA, Granger SW, Collins MH, Heaney CD. COVID-19 serology at population scale: SARS-CoV-2-specific antibody responses in saliva. J Clin Microbiol. 2020 Dec 17;59(1):e02204-20. doi: 10.1128/JCM.02204-20. http://europepmc.org/abstract/MED/33067270 .

- 46.Risk for COVID-19 infection, hospitalization, and death by race/ethnicity. Centers for Disease Control and Prevention. [2020-12-09]. https://www.cdc.gov/coronavirus/2019-ncov/covid-data/investigations-discovery/hospitalization-death-by-race-ethnicity.html .

- 47.The conversation: between us, about us. Greater Than COVID. [2021-06-04]. https://www.greaterthancovid.org/theconversation/toolkit/

- 48.DiGioia P. The Wave. Rockaway Beach, NY: 2021. Feb 11, [2021-06-04]. The Glorified Tomato: pandemic pizza box. https://www.rockawave.com/articles/the-glorified-tomato-150/

- 49.City of New York Do it for them! Get a FREE COVID-19 test and make sure your family stays healthy and safe. Twitter. 2021. Feb 03, [2021-06-04]. https://twitter.com/nycgov/status/1357174048132849668 .

- 50.Kliff S. The Upshot: coronavirus tests are supposed to be free. the surprise bills come anyway. The New York Times. 2020. Sep 09, [2021-06-04]. https://www.nytimes.com/2020/09/09/upshot/coronavirus-surprise-test-fees.html .

- 51.Reed Johnson F, Lancsar E, Marshall D, Kilambi V, Mühlbacher A, Regier DA, Bresnahan BW, Kanninen B, Bridges JFP. Constructing experimental designs for discrete-choice experiments: report of the ISPOR Conjoint Analysis Experimental Design Good Research Practices Task Force. Value Health. 2013;16(1):3–13. doi: 10.1016/j.jval.2012.08.2223. https://linkinghub.elsevier.com/retrieve/pii/S1098-3015(12)04162-9 .

- 52.Larremore DB, Wilder B, Lester E, Shehata S, Burke JM, Hay JA, Tambe M, Mina MJ, Parker R. Test sensitivity is secondary to frequency and turnaround time for COVID-19 screening. Sci Adv. 2021 Jan;7(1):eabd5393. doi: 10.1126/sciadv.abd5393. http://europepmc.org/abstract/MED/33219112 .

- 53.Gamper E, Holzner B, King MT, Norman R, Viney R, Nerich V, Kemmler G. Test-retest reliability of discrete choice experiment for valuations of QLU-C10D health states. Value Health. 2018 Aug;21(8):958–966. doi: 10.1016/j.jval.2017.11.012. https://linkinghub.elsevier.com/retrieve/pii/S1098-3015(18)30192-X .

- 54.Skjoldborg US, Lauridsen J, Junker P. Reliability of the discrete choice experiment at the input and output level in patients with rheumatoid arthritis. Value Health. 2009;12(1):153–8. doi: 10.1111/j.1524-4733.2008.00402.x. https://linkinghub.elsevier.com/retrieve/pii/S1098-3015(10)60687-0 .

- 55.Ali SH, Foreman J, Tozan Y, Capasso A, Jones AM, DiClemente RJ. Trends and predictors of COVID-19 information sources and their relationship with knowledge and beliefs related to the pandemic: nationwide cross-sectional study. JMIR Public Health Surveill. 2020 Oct 08;6(4):e21071. doi: 10.2196/21071. https://publichealth.jmir.org/2020/4/e21071/

- 56.Tillett RL, Sevinsky JR, Hartley PD, Kerwin H, Crawford N, Gorzalski A, Laverdure C, Verma SC, Rossetto CC, Jackson D, Farrell MJ, Van Hooser S, Pandori M. Genomic evidence for reinfection with SARS-CoV-2: a case study. Lancet Infect Dis. 2021 Jan;21(1):52–58. doi: 10.1016/S1473-3099(20)30764-7. http://europepmc.org/abstract/MED/33058797 .

- 57.Oliver SE, Gargano JW, Marin M, Wallace M, Curran KG, Chamberland M, McClung N, Campos-Outcalt D, Morgan RL, Mbaeyi S, Romero JR, Talbot HK, Lee GM, Bell BP, Dooling K. The Advisory Committee on Immunization Practices' interim recommendation for use of Pfizer-BioNTech COVID-19 Vaccine - United States, December 2020. MMWR Morb Mortal Wkly Rep. 2020 Dec 18;69(50):1922–1924. doi: 10.15585/mmwr.mm6950e2.

- 58.Lucira COVID-19 All-In-One Test Kit. US Food and Drug Administration. 2020. Nov 17, [2020-11-18]. https://www.fda.gov/media/143810/download .

- 59.Ellume COVID-19 Home Test. US Food and Drug Administration. 2020. Dec 15, [2021-06-04]. https://www.fda.gov/media/144457/download .

- 60.BinaxNOW COVID-19 Ag Card Home Test. US Food and Drug Administration. 2021. Apr 12, [2021-06-04]. https://www.fda.gov/media/144576/download .
