Abstract

Objectives

The aim of our study was to determine and enhance physicians’ acceptance, performance expectancy and credibility of health apps for chronic pain patients. We further investigated predictors of acceptance.

Design

Randomised experimental trial with a parallel-group repeated measures design.

Setting and participants

248 physicians working in various, mainly outpatient settings in Germany.

Intervention and outcome

Physicians were randomly assigned to either an experimental group (short video about health apps) or a control group (short video about chronic pain). The primary outcome measure was acceptance; performance expectancy and credibility of health apps were secondary outcomes. In addition, we assessed 101 medical students to evaluate the effectiveness of the video intervention in young professionals.

Results

In general, physicians’ acceptance of health apps for chronic pain patients was moderate (M=9.51, SD=3.53, scale ranges from 3 to 15). All primary and secondary outcomes were enhanced by the video intervention: A repeated-measures analysis of variance yielded a significant interaction effect for acceptance (F(1, 246)=15.28, p=0.01), performance expectancy (F(1, 246)=6.10, p=0.01) and credibility (F(1, 246)=25.61, p<0.001). The same pattern of results was evident among medical students. Linear regression analysis revealed credibility (β=0.34, p<0.001) and performance expectancy (β=0.30, p<0.001) as the two strongest factors influencing acceptance, followed by scepticism (β=−0.18, p<0.001) and intuitive appeal (β=0.11, p=0.03).

Conclusions and recommendations

Physicians’ acceptance of health apps was moderate, and was strengthened by a 3 min video. Besides performance expectancy, credibility seems to be a promising factor associated with acceptance. Future research should focus on ways to implement acceptability-increasing interventions into routine care.

Keywords: pain management, medical education & training, education & training (see medical education & training)

Strengths and limitations of this study.

This is the first study to examine physicians’ acceptance and expectations about health apps for chronic pain.

A strength of the study is the investigation of both practitioners and medical students as future physicians.

The study has a strong active control group.

A limitation is the online-only data collection, which may have introduced selection bias.

Introduction

Since the Global Burden of Disease Study was first conducted in the 1990s, chronic pain has been identified as the leading cause of years lived with disability.1 Chronic pain has various negative health consequences and adverse impacts on quality of life.2–4 Although there are effective treatments for chronic pain,5 6 effect sizes tend to be small.7 Further, the sustained efficacy of treatments is uncertain.8 This is problematic because chronic pain raises costs dramatically for healthcare systems9 10 and is a significant contributor to work disability.11 The likelihood of returning to work correlates with the duration of pain: the longer patients are out of work, the less likely they are to return to full-time employment.12 13 Therefore, pain treatment should start as early as possible. However, many people, especially in rural areas, have no access to adequate pain treatment,14 15 even though such access is considered a human right.16

eHealth offerings can help to alleviate these problems and provide patients with evidence-based interventions.17 Smartphone apps, which fall under the mHealth category, have particularly great potential for both practitioners and patients.18 First, because smartphones are widely used, apps can reach patients with chronic pain at a low threshold.19 Second, they can help patients better manage their pain, for example as a treatment adjunct or in the absence of a pain expert.20–22 Pain apps offer a wide range of applications, from diary functions for monitoring pain to specific interventions. Two recent meta-analyses concluded that pain apps can reduce patients’ pain, with a small effect,23 and have a small positive effect on depression and short-term pain catastrophising.24 Despite this potential, however, most pain apps have not yet been scientifically evaluated, and privacy protection is often not sufficiently guaranteed.25 Besides these problems, there are various other barriers to the implementation of health apps into clinical practice.

One barrier lies on the practitioners’ side, because they act as gatekeepers for electronic forms of treatment.26 Even if physicians consider health apps to be helpful,27 the integration of health apps into their daily work is slow.28 Although many patients are eager to try health apps,29 health professionals seldom recommend them.30 31 One potential reason for this is their moderate acceptance of eHealth.32 There is ample evidence that acceptance is an important prerequisite for implementing new technologies into practice.33 34 Across studies, an important factor influencing acceptance (ie, the intention to use new technology) is performance expectancy.32 35–38

Video interventions designed to enhance acceptance have proven effective in patients and health practitioners.33 39 40 However, not all studies were able to increase practitioners’ acceptance,41 42 suggesting that the presentation and content of educational videos are relevant.33

Because previous research has mainly investigated eHealth in general, with a focus on internet interventions, little is known about the acceptance of mobile health apps. The main aims of this study were to assess physicians’ acceptance of health apps and to increase their acceptance, performance expectancy and credibility via a short video intervention. A further aim was to identify variables that influence physicians’ acceptance of health apps for chronic pain. To the best of our knowledge, this is the first experimental study assessing and modifying physicians’ acceptance of health apps in the context of chronic pain.

Methods

Study design

This study is a web-based experimental trial with a parallel-group design using a simple randomisation procedure (1:1 allocation ratio). Self-report questionnaires were used to assess outcomes before and after the intervention.

Completing the survey took an average of 14 min. Measurements were collected online via the software platform Unipark (Enterprise Feedback Suite survey, version Fall 2020, Questback). Randomisation was performed within the Unipark software. All procedures complied with the German Psychological Society’s ethical guidelines.

Participants

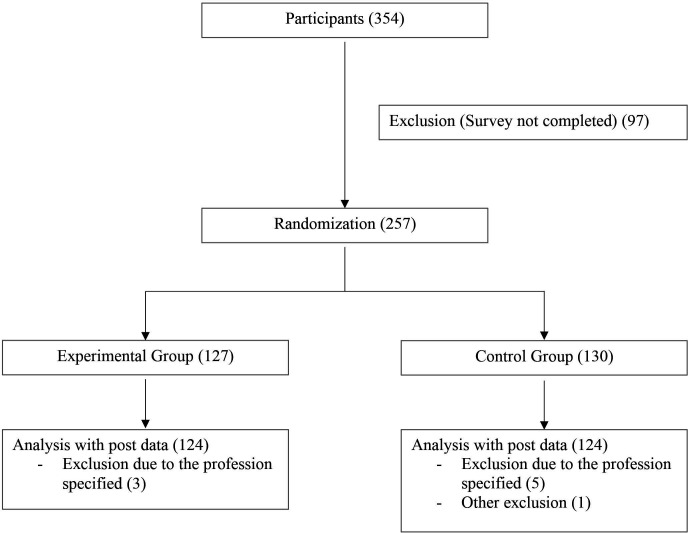

Data collection was performed between December 2020 and April 2021. The sample size was determined with an a priori power analysis using G*Power (V.3.1.9.3).43 Following a similar preceding study,33 we based our calculations on a small effect between groups (expected f=0.16; power=0.8; alpha error probability=0.05), resulting in a necessary sample size of 230. Assuming a 10% drop-out rate, we planned to survey 253 participants. We recruited physicians via email distribution lists, physician networks and emails to practices. Because of these different recruitment methods, we can only estimate the number of physicians contacted: we assume that we reached approximately 10 000 physicians, of whom 354 started the survey. This response rate is comparable to that of a similar study.33 A total of 257 participants completed the questionnaires at postintervention, yielding a completer rate of 73% (figure 1). Inclusion criteria were being employed as a physician and sufficient knowledge of the German language. Participants were recruited online through practices, hospitals and medical communities. In addition, we recruited a sample of 101 medical students via Facebook groups for medical students and email distribution lists of medical schools.
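The sample-size arithmetic above can be approximated in code. This is a minimal sketch using SciPy's noncentral F distribution and rests on an assumption the text does not state: that G*Power's "ANOVA: repeated measures, between factors" procedure was used with two groups, two measurements and G*Power's default correlation of 0.5 among repeated measures, so that the noncentrality parameter is λ = f²·N·m/(1+(m−1)ρ). The function names are hypothetical. Because G*Power searches and rounds in its own way, the N found here should land close to, but need not exactly equal, the reported 230. The planned 253 corresponds to inflating 230 by 10%, and the completer rate is 257 of 354 starters.

```python
from scipy.stats import f as f_dist, ncf


def rm_between_power(n_total, effect_f, n_groups=2, n_measures=2, rho=0.5, alpha=0.05):
    """Power of the between-groups test in a repeated-measures ANOVA.

    Assumes the G*Power 'repeated measures, between factors' noncentrality:
    lambda = f^2 * N * m / (1 + (m - 1) * rho).
    """
    df1 = n_groups - 1
    df2 = n_total - n_groups
    lam = effect_f ** 2 * n_total * n_measures / (1 + (n_measures - 1) * rho)
    f_crit = f_dist.ppf(1 - alpha, df1, df2)
    # Probability that the noncentral F statistic exceeds the central critical value
    return ncf.sf(f_crit, df1, df2, lam)


def required_n(effect_f, target_power=0.80, n_groups=2, **kwargs):
    """Smallest total N (a multiple of n_groups) that reaches the target power."""
    n = n_groups * 2
    while rm_between_power(n, effect_f, n_groups=n_groups, **kwargs) < target_power:
        n += n_groups
    return n


n_needed = required_n(effect_f=0.16)
n_planned = round(230 * 1.10)   # 10% drop-out allowance on the reported N of 230
completer_rate = 257 / 354      # completers divided by participants who started

print(n_needed, n_planned, f"{completer_rate:.0%}")
```

Changing `rho` shows how sensitive the required N is to the assumed correlation between the pre and post measurements.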

Figure 1.

Flow chart.

Measures

Primary outcome

Acceptance as defined in the Unified Theory of Acceptance and Use of Technology (UTAUT) model34 was our primary outcome. In the UTAUT model, acceptance is conceived as the intention to use (new) technologies. The three acceptance items (table 1) were summed into a cumulative score ranging from 3 to 15. To make our data easier to interpret, we classified scores as low (3–6), moderate (7–11) or high (12–15), similar to other studies.32 33 Cronbach’s alpha was 0.93.
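The scoring and banding rule described above can be expressed in a few lines. This is an illustrative sketch: the function names are hypothetical, and it assumes each acceptance item is rated on the 5-point response scale used for the UTAUT items.

```python
ACCEPTANCE_ITEMS = 3  # three items, each rated from 1 (totally disagree) to 5 (totally agree)
CATEGORY_BOUNDS = [(3, 6, "low"), (7, 11, "moderate"), (12, 15, "high")]


def acceptance_score(responses):
    """Sum the three 5-point acceptance items into a 3-15 cumulative score."""
    if len(responses) != ACCEPTANCE_ITEMS:
        raise ValueError("expected exactly three item responses")
    if any(not 1 <= r <= 5 for r in responses):
        raise ValueError("item responses must lie between 1 and 5")
    return sum(responses)


def acceptance_category(score):
    """Map a cumulative score onto the low/moderate/high bands."""
    for lower, upper, label in CATEGORY_BOUNDS:
        if lower <= score <= upper:
            return label
    raise ValueError(f"score {score} is outside the 3-15 range")


# Hypothetical responses from one participant
score = acceptance_score([4, 3, 3])
print(score, acceptance_category(score))  # 10 moderate
```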

Table 1.

UTAUT items

| UTAUT scale | Items |
|---|---|
| Acceptance | 1. I can basically imagine prescribing a health app. |
| | 2. I would prescribe health apps regularly. |
| | 3. I would recommend health apps to colleagues. |
| Performance expectancy | 1. Using health apps would improve the effectiveness of my work. |
| | 2. Using health apps would help me in my work and increase my productivity. |
| | 3. Overall, health apps would help me treat my patients. |
| Effort expectancy | 1. Using health apps would be easy. |
| | 2. Using health apps would be easy for me. |
| | 3. The use of health apps would be clear and understandable to me. |
| Social influence | 1. Colleagues would advise me to use health apps. |
| | 2. My supervisors and/or experienced colleagues would recommend that I use health apps. |
| Facilitating conditions | 1. I would get support for technical problems with health apps. |
| | 2. I have the necessary technical skills to use health apps. |

Notes. Items are adapted from refs 39 45 46.

UTAUT, Unified Theory of Acceptance and Use of Technology.

Secondary outcomes

Performance expectancy from the UTAUT model was a secondary outcome. It was assessed with three items (table 1). Performance expectancy is conceptualised as the expectation that an intervention will be beneficial.

An additional secondary outcome was the credibility of health apps, which we assessed with the Credibility/Expectancy Questionnaire (CEQ).44 The credibility scale (eg, ‘How logical does the medical use of health apps for chronic pain seem to you?’) includes three items that ask about treatment credibility on a 9-point response scale (ranging from 1=not at all useful to 9=very useful). Cronbach’s alpha for the credibility scale was 0.91.

Primary and secondary outcomes were measured both before and after the intervention. In the medical student sample, only the primary and secondary outcomes were assessed, not the predictors of acceptance.

Predictors of acceptance

To examine predictors of acceptance, we used baseline acceptance as the dependent variable and multiple predictors as independent variables (see the Statistical analysis section).

Sociodemographic variables included age, gender, daily smartphone time and smartphone use in a professional context. All of the following instruments were slightly adapted for the purpose of this study.

We assessed the four main constructs of the UTAUT model.34 According to this established model, four constructs influence acceptance and the intention to use (new) technologies: performance expectancy (Cronbach’s alpha=0.94), effort expectancy (Cronbach’s alpha=0.84), facilitating conditions (two items; Spearman’s correlation=0.17) and social influence (two items; Spearman’s correlation=0.79). The scales consist of statements (table 1) rated on a 5-point response scale (from 1=totally disagree to 5=totally agree). Higher values indicate a higher level of the construct. Items were adapted from different studies.39 45 46

From the Attitudes towards Psychological Online Interventions questionnaire (APOI),47 we used the scepticism and perception of risks scale, which contains four statements (eg, ‘It is difficult for patients to effectively integrate health apps into their daily lives.’) rated on a 5-point scale (ranging from 1=totally agree to 5=totally disagree). We excluded one item because its content did not fit the survey (‘By using a POI (Psychological Online Intervention), I do not receive professional support.’). Cronbach’s alpha for this scale was 0.57.

Openness (eg, ‘I would use new treatments to help my patients.’) and intuitive appeal (eg, ‘If you learned about a new health app, how likely would you be to use it if it appealed to you intuitively?’) were assessed with the Evidence-based Practice Attitude Scale-36 (EBPAS).48 The EBPAS measures barriers to and facilitators of implementing evidence-based treatment approaches. Both scales consist of four statements or questions rated on a 5-point response scale (ranging from 0=not at all to 4=to a very great extent). Cronbach’s alpha was 0.84 for openness and 0.87 for intuitive appeal.
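The internal-consistency values reported above follow the standard formula for Cronbach's alpha, α = k/(k−1)·(1 − Σσ²item/σ²total) for a k-item scale. The sketch below implements that formula with the Python standard library; the example responses are hypothetical, and the function name is ours. For the two-item facilitating conditions and social influence scales, the text reports Spearman correlations instead.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for one scale.

    item_scores: one list per item, each holding the respondents' scores
    in the same respondent order.
    """
    k = len(item_scores)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(item_scores[0])
    if any(len(item) != n for item in item_scores):
        raise ValueError("all items need the same number of respondents")
    totals = [sum(item[i] for item in item_scores) for i in range(n)]
    sum_item_var = sum(variance(item) for item in item_scores)
    # alpha = k/(k-1) * (1 - sum of item variances / variance of the total score)
    return k / (k - 1) * (1 - sum_item_var / variance(totals))


# Hypothetical responses of five participants to a three-item scale (1-5)
items = [
    [4, 2, 5, 3, 1],
    [4, 3, 5, 3, 2],
    [5, 2, 4, 3, 1],
]
print(round(cronbach_alpha(items), 2))  # 0.95
```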

Before starting the survey, we gave participants a brief definition of health apps and instructed them that all questions related to health apps for patients with chronic pain.

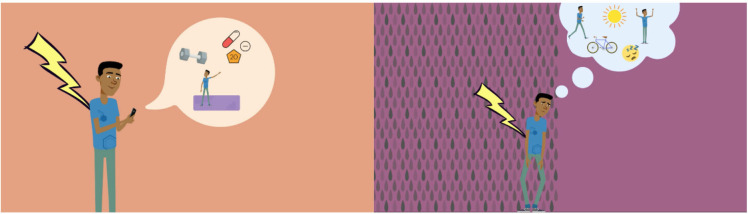

Intervention

The control group (CG) watched a video (3:10 min) providing general information about chronic pain (eg, prevalence, costs for the healthcare system and psychosocial consequences for people suffering from chronic pain). The experimental group (EG) watched a video (3:23 min) that discussed the content of health apps (eg, how they can be used and the results of recent studies). Both videos used simple language and gave only a general overview of the topic without going into detail. The videos were matched in terms of visuals (figure 2). The survey software did not allow participants to skip the video. We produced the videos with the commercial software Powtoon (2012–2021 Powtoon), and a professional narrator recorded the audio track. An English translation of the spoken text is provided in the online supplemental material.

Figure 2.

Screenshots of the video interventions. Left: Video of the EG describing possible applications of pain apps; Right: Video of the CG describing psychosocial consequences of chronic pain. CG, control group; EG, experimental group.


Statistical analysis

We used IBM SPSS Statistics V.26 for statistical analyses. There were no missing data because the survey software required participants to answer all questions before proceeding to the next page. For all analyses, we used a type I error level of 5%.

Both Mahalanobis distance and Cook’s distance were used to detect multivariate outliers.49 Following Pituch and Stevens, we identified univariate outliers using standardised values.49 We checked the data for plausibility before excluding any cases. In addition, we checked participants’ comments at the end of the survey for possible bias.
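The Mahalanobis-distance screen for multivariate outliers can be sketched as follows. The sketch assumes the common convention of comparing squared distances with a χ² critical value at p<0.001, with degrees of freedom equal to the number of variables; the study does not report its cutoff, and the data below are simulated. Cook's distance, which requires a fitted regression model, is not shown.

```python
import numpy as np
from scipy.stats import chi2


def mahalanobis_outliers(data, alpha=0.001):
    """Flag rows whose squared Mahalanobis distance exceeds a chi-square cutoff.

    data: array of shape (n_participants, n_variables). The alpha=0.001
    cutoff is an assumed convention, not a value reported in the study.
    """
    x = np.asarray(data, dtype=float)
    centred = x - x.mean(axis=0)
    cov_inv = np.linalg.inv(np.cov(x, rowvar=False))
    # Squared distance of each row from the centroid: d_i^2 = c_i^T S^-1 c_i
    d2 = np.einsum("ij,jk,ik->i", centred, cov_inv, centred)
    cutoff = chi2.ppf(1 - alpha, df=x.shape[1])
    return np.flatnonzero(d2 > cutoff), d2, cutoff


# Hypothetical data: 200 simulated respondents on three scales plus one extreme case
rng = np.random.default_rng(seed=1)
scores = rng.normal(loc=3.0, scale=0.8, size=(200, 3))
scores = np.vstack([scores, [9.0, -3.0, 9.0]])
flagged, d2, cutoff = mahalanobis_outliers(scores)
print(flagged, round(cutoff, 2))
```

Flagged rows would then be checked for plausibility rather than dropped automatically, in line with the procedure described above.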

To detect baseline differences between conditions, we conducted a multivariate analysis of variance for age, APOI, EBPAS, CEQ and the UTAUT variables. We assessed gender differences using a χ² test.

The video’s influence on our primary and secondary outcomes was assessed with a 2 (condition) × 2 (time) repeated-measures analysis of variance. As suggested by Richardson,50 we used partial eta squared (η²p) as the effect size measure and classified effect sizes according to Richardson’s benchmarks, which are based on Cohen.51 To control alpha error inflation, we applied a Bonferroni correction to the secondary outcomes.52
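Partial eta squared can be recovered from an F statistic as η²p = F·df_effect/(F·df_effect + df_error). The sketch below applies this to the secondary-outcome interaction effects reported in the Results and computes the per-test alpha of a Bonferroni correction. It assumes the correction was applied across the two secondary outcomes (performance expectancy and credibility); the text does not state the divisor explicitly, and the function names are ours.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared implied by an F statistic and its degrees of freedom."""
    return f_value * df_effect / (f_value * df_effect + df_error)


def bonferroni_alpha(alpha, n_tests):
    """Per-test significance level after a Bonferroni correction."""
    return alpha / n_tests


# Time x condition interaction F values for the secondary outcomes, df = (1, 246)
interactions = {"performance expectancy": 6.10, "credibility": 25.61}
for outcome, f_value in interactions.items():
    print(outcome, round(partial_eta_squared(f_value, 1, 246), 2))

# Assumed: correction across the two secondary outcomes
print(bonferroni_alpha(0.05, len(interactions)))
```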

We examined the variables influencing acceptance of health apps using linear regression, adding predictor groups blockwise: first, demographic variables (age, gender, daily smartphone time and smartphone use in a working context); second, the APOI, EBPAS and CEQ scales; and last, the four UTAUT predictors. Baseline acceptance was the dependent variable.32 Because a large number of predictors inflates R², we report adjusted R² (R²adj).53
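The blockwise (hierarchical) procedure can be sketched with NumPy least squares. The block structure mirrors the description above (four demographic variables, then four attitude scores from APOI, EBPAS and CEQ, then four UTAUT predictors), but the data, effect sizes and function names are hypothetical and purely illustrative; standardised β coefficients and significance tests are omitted.

```python
import numpy as np


def adjusted_r2(r2, n, k):
    """Adjusted R^2 for n observations and k predictors."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


def fit_ols(y, X):
    """Ordinary least squares with an intercept; returns (R^2, adjusted R^2)."""
    design = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ coef
    r2 = 1 - (resid @ resid) / np.sum((y - y.mean()) ** 2)
    return r2, adjusted_r2(r2, len(y), X.shape[1])


def blockwise_regression(y, blocks):
    """Enter predictor blocks cumulatively and refit after each block."""
    results, entered = [], []
    for name, block in blocks:
        entered.append(block)
        r2, r2_adj = fit_ols(y, np.column_stack(entered))
        results.append((name, r2, r2_adj))
    return results


# Hypothetical simulated data (not the study data): 248 respondents,
# three blocks of four predictors; the outcome depends mainly on block 2.
rng = np.random.default_rng(seed=7)
n = 248
demographics = rng.normal(size=(n, 4))
attitudes = rng.normal(size=(n, 4))  # stands in for APOI, EBPAS (two scales) and CEQ
utaut = rng.normal(size=(n, 4))
acceptance = 0.3 * demographics[:, 0] + 1.0 * attitudes[:, 0] + rng.normal(size=n)

blocks = [("demographics", demographics), ("APOI/EBPAS/CEQ", attitudes), ("UTAUT", utaut)]
for name, r2, r2_adj in blockwise_regression(acceptance, blocks):
    print(f"{name}: R2={r2:.2f}, adjusted R2={r2_adj:.2f}")
```

Comparing R²adj across blocks, rather than raw R², penalises the extra parameters each block adds, so a block of uninformative predictors can leave R²adj flat or lower it.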

Patient and public involvement

No patients were involved.

Results

Sample characteristics

After data inspection, we excluded one participant who stated that he had filled in the questionnaires arbitrarily. Eight further participants were excluded because they had stated ‘psychological psychotherapist’ as their specialty, which in Germany indicates that they were psychologists rather than physicians. This reduced our sample to 248 (38.71% female; nEG=124, nCG=124). The average age was 49.56 years (SD=11.51). There were no baseline differences between conditions. The most common specialties were general practice (89), surgery (39), anaesthesiology (29) and neurology and psychiatry (23). Baseline acceptance across both conditions was moderate (M=9.51, SD=3.53), with 21.4% of participants in the low range, 47.1% in the moderate range and 31.5% in the high range. Table 2 lists the most frequent medical specialties, additional demographic variables and baseline values of all measures.

Table 2.

Demographic characteristics

| Variables | Experimental group | Control group |
|---|---|---|
| Age, years | 49.65±11.57 | 49.47±11.49 |
| n (% female) | 124 (35.50) | 124 (41.90) |
| Professional environment, n (%) | | |
| Outpatient | 89 (71.8) | 77 (62.1) |
| Inpatient | 30 (24.2) | 33 (26.6) |
| Other | 5 (4.0) | 14 (11.3) |
| Medical specialty, n (%)* | | |
| General medicine | 49 (39.5) | 40 (32.3) |
| Surgery | 17 (13.7) | 22 (17.7) |
| Neurology | 17 (13.7) | 6 (4.8) |
| Anaesthesiology | 11 (8.9) | 18 (14.5) |
| Orthopaedics | 6 (4.8) | 8 (6.5) |
| Paediatrics | 5 (4.0) | 8 (6.5) |
| Other | 19 (15.3) | 22 (17.7) |
| CEQ | | |
| Credibility | 5.28±1.78 | 5.14±1.96 |
| APOI | | |
| Scepticism and perception of risks | 2.66±0.74 | 2.68±0.81 |
| EBPAS | | |
| Openness | 3.65±0.87 | 3.66±0.93 |
| Intuitive appeal | 3.64±0.88 | 3.57±0.93 |
| UTAUT | | |
| Acceptance | 9.73±3.33 | 9.30±3.72 |
| Performance expectancy | 8.60±3.00 | 8.30±3.10 |
| Effort expectancy | 11.03±2.47 | 10.73±2.42 |
| Social influence | 5.80±2.10 | 5.40±1.95 |
| Facilitating conditions | 7.60±1.71 | 7.48±1.95 |

Notes. Values are means ± SD or n (%).

*Only medical specialties representing more than 5% of at least one of the two groups are listed.

APOI, Attitudes towards Psychological Online Interventions; CEQ, Credibility/Expectancy Questionnaire; EBPAS, Evidence-based Practice Attitude Scale-36; UTAUT, Unified Theory of Acceptance and Use of Technology.

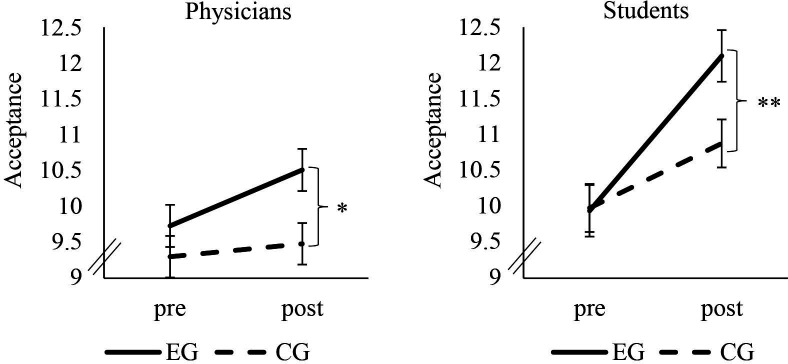

Primary outcome

Acceptance increased over time (significant main effect of time (F(1, 246)=15.28, p<0.001, η²p=0.06)). Furthermore, participants in the EG showed larger increases than those in the CG (significant time × condition interaction (F(1, 246)=15.28, p=0.01, η²p=0.02)). After the intervention, the EG (M=10.51, SD=3.28) had higher acceptance scores than the CG (M=9.48, SD=3.57) (t(246)=−2.37, p=0.01). The group comparison of postassessment data revealed a small effect (Cohen’s d=0.30). Figure 3 compares the medical student sample with the physicians.

Figure 3.

Change in acceptance. Error bars indicate SEs. *P<0.05; **P<0.005. CG, control group; EG, experimental group; pre, measurement before the video; post, measurement after the video.

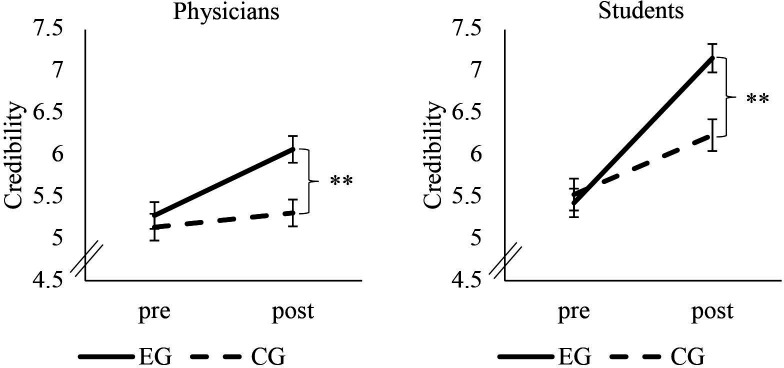

Secondary outcomes

Performance expectancy also increased over time (main effect of time (F(1, 246)=66.85, p<0.001, η²p=0.21)). Again, the increase was larger in the EG than in the CG (significant time × condition interaction (F(1, 246)=6.10, p=0.01, η²p=0.02)). The EG (M=9.94, SD=3.16) had higher postintervention performance expectancy scores than the CG (M=9.02, SD=3.34) (t(246)=−2.23, p=0.01). Again, the group comparison of postassessment data revealed a small effect (Cohen’s d=0.28).

We found the same pattern of results for credibility. It increased over time (significant main effect of time (F(1, 246)=64.47, p<0.001, η²p=0.21)), with a larger increase in the EG (significant time × condition interaction (F(1, 246)=25.61, p<0.001, η²p=0.09)). Postintervention values of the EG (M=6.07, SD=1.87) were higher than those of the CG (M=5.31, SD=2.14) (t(246)=−2.95, p=0.002). The postassessment group comparison revealed a small to moderate effect for credibility (Cohen’s d=0.38). Figure 4 compares the medical student sample with the physicians in terms of credibility.
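The Cohen's d values reported for the three outcomes can be reproduced from the postintervention means and SDs by standardising the mean difference with the pooled SD. The sketch below also converts d into the implied independent-samples |t| via t = d·√(n₁n₂/(n₁+n₂)); these values can be compared with the reported t statistics, allowing for rounding of the published means and SDs. The function names are ours.

```python
from math import sqrt


def cohens_d(mean1, sd1, n1, mean2, sd2, n2):
    """Cohen's d for two independent groups, standardised by the pooled SD."""
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    return (mean1 - mean2) / sqrt(pooled_var)


def t_from_d(d, n1, n2):
    """Independent-samples t statistic implied by d and the group sizes."""
    return d * sqrt(n1 * n2 / (n1 + n2))


# Postintervention means and SDs (EG first, then CG), n=124 per group
post = {
    "acceptance": (10.51, 3.28, 9.48, 3.57),
    "performance expectancy": (9.94, 3.16, 9.02, 3.34),
    "credibility": (6.07, 1.87, 5.31, 2.14),
}
for outcome, (m_eg, sd_eg, m_cg, sd_cg) in post.items():
    d = cohens_d(m_eg, sd_eg, 124, m_cg, sd_cg, 124)
    print(f"{outcome}: d={d:.2f}, |t|={t_from_d(d, 124, 124):.2f}")
```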

Figure 4.

Change in credibility. Error bars indicate SEs. **P<0.01. CG, control group; EG, experimental group; pre, measurement before the video; post, measurement after the video.

The medical students showed the same pattern of results (see online supplemental material for a detailed presentation of results and demographic variables). The time × condition interaction had an effect size of η²p=0.13 for acceptance (figure 3), η²p=0.09 for performance expectancy and η²p=0.21 for credibility (figure 4).


Predictors of acceptance

Linear regression with the predictors from the first block was significant (R²adj=0.14, F(4, 242)=11.01, p<0.001). Age (β=−0.23, p=0.001) and smartphone use in a professional context (β=0.20, p=0.002) were related to acceptance.

The model improved when we added the second block with the APOI, EBPAS and CEQ scales (R²adj=0.70, F(8, 238)=72.35, p<0.001). Credibility (β=0.51, p<0.001) was the strongest predictor, followed by scepticism (β=−0.24, p<0.001) and intuitive appeal (β=0.13, p=0.01). None of the predictors from the first block were significant.

The model improved marginally after adding the UTAUT variables (R²adj=0.73, F(12, 234)=56.24, p<0.001). Again, credibility was the best predictor (β=0.34, p<0.001), followed by performance expectancy (β=0.30, p<0.001), scepticism (β=−0.18, p<0.001) and intuitive appeal (β=0.11, p=0.03). None of the other predictors were significant. A table with all predictors is provided in the online supplemental material.
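The adjusted R² values above can be cross-checked against the reported F statistics: for the overall test of a regression with k predictors, R² = F·k/(F·k + df_error), and R²adj = 1 − (1 − R²)(n − 1)/(n − k − 1). The sketch below takes n from the reported error degrees of freedom (n = df_error + k + 1); it is a consistency check on the published values, not a re-analysis of the data.

```python
def r2_from_f(f_value, k, df_error):
    """R^2 implied by the overall F test of a regression with k predictors."""
    return f_value * k / (f_value * k + df_error)


def adjusted_r2(r2, n, k):
    """Adjusted R^2 for n observations and k predictors."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


# Overall model tests as reported: (F, number of predictors, error df)
blocks = {
    "block 1": (11.01, 4, 242),
    "block 2": (72.35, 8, 238),
    "block 3": (56.24, 12, 234),
}
for name, (f_value, k, df_error) in blocks.items():
    n = df_error + k + 1  # observations implied by the error degrees of freedom
    r2 = r2_from_f(f_value, k, df_error)
    print(name, round(r2, 2), round(adjusted_r2(r2, n, k), 2))
```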


Discussion

This study is the first to explicitly investigate physicians’ acceptance of health apps focusing on chronic pain. Our results complement preceding studies by adding the physicians’ perspective within an outpatient setting. The main aims of this study were to survey physicians' current acceptance of health apps for patients with chronic pain and to increase their acceptance. In general, physicians’ and medical students’ acceptance of health apps was moderate, which indicates higher openness than reported in previous studies.32 The experimental intervention successfully increased acceptance, performance expectancy and credibility of health apps among physicians and medical students. Our additional aim was to identify variables that influence acceptance. Credibility and performance expectancy were the strongest predictors of acceptance, followed by scepticism and intuitive appeal.

We found that our physicians’ moderate acceptance of health apps was higher than that reported in previous studies: a survey conducted between 2015 and 2016 among various healthcare professionals observed rather low acceptance rates for electronic health interventions.32 According to a recent study, psychotherapists exhibited mixed acceptance of blended care (a combination of internet- and mobile-based interventions and face-to-face therapy).33 However, the 2015–2016 survey was conducted several years ago, and perceptions of eHealth may have changed in the meantime. In particular, the COVID-19 pandemic may have influenced opinions about electronic health interventions.54 Also, unlike the studies mentioned above, we specifically asked about health apps in our survey.

Our results indicate that brief, visually appealing educational videos may be an effective acceptance-facilitating intervention for physicians. Results of acceptance-enhancing interventions in other studies were inconclusive: some researchers demonstrated positive effects,33 while others found no effects.41 42 Most researchers employed video interventions to increase acceptance of eHealth interventions in general (eg, online interventions) rather than focusing on apps in particular. Another potential explanation for our positive findings is the specific focus on chronic pain, as the perceived usefulness of eHealth and mHealth interventions could be disorder-specific.

The larger effect sizes in the student sample lead us to cautiously conclude that the intervention may be more effective with students. Although young age does not automatically lead to higher digital health competencies,55 young professionals appear to be more receptive to interventions that promote the acceptance of health apps. This could be due to younger people’s generally greater familiarity with smartphones and their preference for this medium for obtaining health information.56 Since high acceptance does not automatically lead to action,57 long-term studies examining the actual use of health apps among (prospective) physicians would be worthwhile.

The strong association we detected between performance expectancy and acceptance is in line with other research findings. Across studies, performance expectancy has consistently been shown to be one of the most important predictors of acceptance of new technologies in the healthcare sector.32 37 This strong association suggests that physicians’ acceptance can be increased by highlighting the benefits of health apps for their patients and themselves. This is also supported by a study which found that physicians are more likely to use mobile devices with drug reference software if they believe it will help their patients.58 In contrast to Hennemann et al,32 we found no impact of social influence on acceptance, nor did we find any influence of facilitating conditions, as Liu et al did.37 Note that the participants in those two studies were surveyed in inpatient settings, whereas we mainly surveyed physicians in outpatient settings. Our physicians were therefore probably relying less on their employer’s facilitation, because they are often self-employed. The same might apply to social influence: medical practices employ far fewer staff than hospitals, which may have made this construct less relevant in our sample. Additionally, the two studies above did not specifically survey acceptance of health apps and were conducted a few years ago. The relevance of certain constructs, such as facilitating conditions, may have lessened since then.

The association we found between credibility and acceptance also concurs with previous research findings. A study with college students concluded that credibility positively influences perceptions of health apps.59 The credibility of new technologies in the healthcare field is important60 because it increases the likelihood that the technology will be used in the short and long term.61 62 Accordingly, the low prescription rates (or the paucity of recommendations) of health apps by physicians could be partly attributable to their lack of credibility. One potential reason for this is the low quality of many health apps on the market.63 The source of information about new electronic health measures is important for its credibility: websites controlled by editors are perceived as more credible, as is information from independent medical experts.64 Because the source of the material appears to be more important than its design,65 independent research institutes can play an important role in disseminating evidence-based information about electronic healthcare interventions. By including highly visible videos on their websites, they could increase both the acceptance and awareness of health apps. Our results indicate that such an approach holds particular promise for medical students, which supports the call for establishing eHealth curricula in medical education.60 66

Technological influences will continue to make strong inroads into medicine,67 which requires healthcare professionals to adapt flexibly to new technologies. Especially given the rapid technological progress in this area, the evidence from earlier studies and from ours highlights the importance of communicating with physicians, psychotherapists and other professional groups in the healthcare sector about eHealth in general and health apps in particular. Video interventions can be an effective and cost-saving method of communicating the potential, opportunities and limitations of these new technologies. They reach the target group at a low threshold, for example, by being included on informational websites, in newsletters or at training courses. Such informational material should emphasise both the performance expectancy and the credibility of the intervention being addressed.

In addition to increasing acceptance of health apps, it is also important to provide physicians with specific recommendations on which apps are best used for which patients. Given the size of the still-growing market, it is hardly possible for individual physicians to obtain a comprehensive overview of the health apps available. It therefore seems sensible to establish guidelines for physicians on which apps can be helpful for which problems, just as there are guidelines on which medications to use for which diseases. To achieve this, a recent study suggests specific recommendations from medical associations or scientific societies, as well as special training in this area.68 This could help physicians integrate health apps into their workflows.69

Limitations

Our study has some limitations. First, because of our broad definition of pain apps, participants may have assumed different usage scenarios for health apps, which could have influenced their acceptance. Future studies could therefore investigate attitudes toward specific apps, for example, psychological intervention apps. Second, the data collection method may have introduced a selection bias: physicians who were already open to and interested in mHealth may have been more likely to participate, which would restrict the generalisability of our results. Furthermore, our results relied solely on self-report. Most of our items were adaptations of previously tested items or questionnaire scales. This approach was necessary due to the lack of appropriate health app-specific questionnaires, but it remains a limitation. In addition, the two items of the facilitating conditions scale correlated only weakly (Spearman’s correlation=0.17), so results for this scale should be interpreted with caution. Because of the survey’s brevity, we could not assess many other potentially relevant constructs, such as technologisation threat47 or previous experience with health apps. Because self-regulatory deficits70 mean that acceptance does not guarantee that intentions will translate into action,57 longitudinal studies examining whether video interventions increase actual recommendations or prescriptions of the respective technologies should be one of the next steps in research.

Strengths

To our knowledge, this is the first study that investigated and increased physicians’ acceptance of health apps for managing chronic pain. This professional group is of particular interest because of the gatekeeper role it plays in the healthcare system. Furthermore, we based the UTAUT questionnaires on previous studies to increase comparability. In addition, we used a strong active CG whose video was matched to the intervention video in length, visuals and audio. Despite the brevity of the survey and our strong CG, we found a superior effect of the intervention video. The video intervention was very short and can be integrated at a low threshold into different platforms.

Conclusion

Our results show that physicians are open to using health apps for patients with chronic pain, as most demonstrated moderate to high acceptance. Our study also shows that performance expectancy and credibility were the strongest predictors of acceptance. As low-threshold tools, brief video interventions can strengthen these constructs and reach a large number of health professionals. They can thus help overcome certain barriers to implementing mobile health interventions in clinical practice. Future studies should examine the long-term effects of acceptance-facilitating interventions and their impact on behavioural measures.

Acknowledgments

We would like to thank Nora Jander for her excellent voice-over on the video and Benno Glöckler and Kari Fuhrmann for their support in recruiting the physicians.

Footnotes

Contributors: HJH, JAG, WR and JR: Conception and design of the study; HJH: data collection, analysis and interpretation, manuscript preparation; JAG, WR and JR: supervision, manuscript editing and reviewing; JR: project administration and guarantor. All authors approved the final manuscript.

Funding: The authors have not declared a specific grant for this research from any funding agency in the public, commercial or not-for-profit sectors.

Competing interests: None declared.

Patient and public involvement: Patients and/or the public were not involved in the design, or conduct, or reporting, or dissemination plans of this research.

Provenance and peer review: Not commissioned; externally peer reviewed.

Supplemental material: This content has been supplied by the author(s). It has not been vetted by BMJ Publishing Group Limited (BMJ) and may not have been peer-reviewed. Any opinions or recommendations discussed are solely those of the author(s) and are not endorsed by BMJ. BMJ disclaims all liability and responsibility arising from any reliance placed on the content. Where the content includes any translated material, BMJ does not warrant the accuracy and reliability of the translations (including but not limited to local regulations, clinical guidelines, terminology, drug names and drug dosages), and is not responsible for any error and/or omissions arising from translation and adaptation or otherwise.

Data availability statement

Data are available on reasonable request. A request can be made to the corresponding author.

Ethics statements

Patient consent for publication

Not applicable.

Ethics approval

This study involves human participants and was approved by the Ethics Committee of the Philipps University of Marburg (reference number: 2020-72k-2).

References

1. GBD 2015 Disease and Injury Incidence and Prevalence Collaborators. Global, regional, and national incidence, prevalence, and years lived with disability for 310 diseases and injuries, 1990–2015: a systematic analysis for the Global Burden of Disease Study 2015. Lancet 2016;388:1545–602. 10.1016/S0140-6736(16)31678-6

2. Graham JE, Streitel KL. Sleep quality and acute pain severity among young adults with and without chronic pain: the role of biobehavioral factors. J Behav Med 2010;33:335–45. 10.1007/s10865-010-9263-y

3. Ratcliffe GE, Enns MW, Belik S-L, et al. Chronic pain conditions and suicidal ideation and suicide attempts: an epidemiologic perspective. Clin J Pain 2008;24:204–10. 10.1097/AJP.0b013e31815ca2a3

4. Ryan S, Hill J, Thwaites C, et al. Assessing the effect of fibromyalgia on patients’ sexual activity. Nurs Stand 2008;23:35–41. 10.7748/ns2008.09.23.2.35.c6669

5. Veehof MM, Trompetter HR, Bohlmeijer ET, et al. Acceptance- and mindfulness-based interventions for the treatment of chronic pain: a meta-analytic review. Cogn Behav Ther 2016;45:5–31. 10.1080/16506073.2015.1098724

6. Glombiewski JA, Holzapfel S, Riecke J, et al. Exposure and CBT for chronic back pain: an RCT on differential efficacy and optimal length of treatment. J Consult Clin Psychol 2018;86:533–45. 10.1037/ccp0000298

7. Williams AC de C, Fisher E, Hearn L, et al. Psychological therapies for the management of chronic pain (excluding headache) in adults. Cochrane Database Syst Rev 2021;2020.

8. Morley S. Relapse prevention: still neglected after all these years. Pain 2008;134:239–40. 10.1016/j.pain.2007.12.004

9. Gaskin DJ, Richard P. The economic costs of pain in the United States. J Pain 2012;13:715–24. 10.1016/j.jpain.2012.03.009

10. Leadley RM, Armstrong N, Lee YC, et al. Chronic diseases in the European Union: the prevalence and health cost implications of chronic pain. J Pain Palliat Care Pharmacother 2012;26:310–25. 10.3109/15360288.2012.736933

11. Landmark T, Romundstad P, Dale O, et al. Chronic pain: one year prevalence and associated characteristics (the HUNT pain study). Scand J Pain 2013;4:182–7. 10.1016/j.sjpain.2013.07.022

12. Patel S, Greasley K, Watson PJ. Barriers to rehabilitation and return to work for unemployed chronic pain patients: a qualitative study. Eur J Pain 2007;11:831–40. 10.1016/j.ejpain.2006.12.011

13. Turner JA. Pain and disability. Clinical, behavioral, and public policy perspectives (Institute of Medicine Committee on Pain, Disability, and Chronic Illness Behavior). Pain 1988;32:385–6.

14. Austrian JS, Kerns RD, Reid MC. Perceived barriers to trying self-management approaches for chronic pain in older persons. J Am Geriatr Soc 2005;53:856–61. 10.1111/j.1532-5415.2005.53268.x

15. Becker WC, Dorflinger L, Edmond SN, et al. Barriers and facilitators to use of non-pharmacological treatments in chronic pain. BMC Fam Pract 2017;18:41. 10.1186/s12875-017-0608-2

16. International Pain Summit of the International Association for the Study of Pain. Declaration of Montréal: declaration that access to pain management is a fundamental human right. J Pain Palliat Care Pharmacother 2011;25:29–31. 10.3109/15360288.2010.547560

17. Holmes EA, Ghaderi A, Harmer CJ, et al. The Lancet Psychiatry Commission on psychological treatments research in tomorrow’s science. Lancet Psychiatry 2018;5:237–86. 10.1016/S2215-0366(17)30513-8

18. Luxton DD, McCann RA, Bush NE, et al. mHealth for mental health: integrating smartphone technology in behavioral healthcare. Prof Psychol Res Pract 2011;42:505–12.

19. Demiris G, Afrin LB, Speedie S, et al. Patient-centered applications: use of information technology to promote disease management and wellness. A white paper by the AMIA Knowledge in Motion Working Group. J Am Med Inform Assoc 2008;15:8–13.

20. Alexander JC, Joshi GP. Smartphone applications for chronic pain management: a critical appraisal. J Pain Res 2016;9:731–4. 10.2147/JPR.S119966

21. Chhabra HS, Sharma S, Verma S. Smartphone app in self-management of chronic low back pain: a randomized controlled trial. Eur Spine J 2018;27:2862–74. 10.1007/s00586-018-5788-5

22. Toelle TR, Utpadel-Fischler DA, Haas K-K, et al. App-based multidisciplinary back pain treatment versus combined physiotherapy plus online education: a randomized controlled trial. NPJ Digit Med 2019;2:34. 10.1038/s41746-019-0109-x

23. Pfeifer A-C, Uddin R, Schröder-Pfeifer P, et al. Mobile application-based interventions for chronic pain patients: a systematic review and meta-analysis of effectiveness. J Clin Med 2020;9:3557. 10.3390/jcm9113557

24. Moman RN, Dvorkin J, Pollard EM, et al. A systematic review and meta-analysis of unguided electronic and mobile health technologies for chronic pain: is it time to start prescribing electronic health applications? Pain Med 2019;20:2238–55. 10.1093/pm/pnz164

25. Terhorst Y, Messner E-M, Schultchen D, et al. Systematic evaluation of content and quality of English and German pain apps in European app stores. Internet Interv 2021;24:100376. 10.1016/j.invent.2021.100376

26. Cowan KE, McKean AJ, Gentry MT, et al. Barriers to use of telepsychiatry: clinicians as gatekeepers. Mayo Clin Proc 2019;94:2510–23. 10.1016/j.mayocp.2019.04.018

27. Kayyali R, Peletidi A, Ismail M, et al. Awareness and use of mHealth apps: a study from England. Pharmacy 2017;5:33. 10.3390/pharmacy5020033

28. Anastasiadou D, Folkvord F, Serrano-Troncoso E, et al. Mobile health adoption in mental health: user experience of a mobile health app for patients with an eating disorder. JMIR Mhealth Uhealth 2019;7:e12920. 10.2196/12920

29. Parker SJ, Jessel S, Richardson JE, et al. Older adults are mobile too! Identifying the barriers and facilitators to older adults’ use of mHealth for pain management. BMC Geriatr 2013;13:43. 10.1186/1471-2318-13-43

30. Byambasuren O, Beller E, Glasziou P. Current knowledge and adoption of mobile health apps among Australian general practitioners: survey study. JMIR Mhealth Uhealth 2019;7:e13199. 10.2196/13199

31. Ross EL, Jamison RN, Nicholls L, et al. Clinical integration of a smartphone app for patients with chronic pain: retrospective analysis of predictors of benefits and patient engagement between clinic visits. J Med Internet Res 2020;22:e16939. 10.2196/16939

32. Hennemann S, Beutel ME, Zwerenz R. Ready for eHealth? Health professionals' acceptance and adoption of eHealth interventions in inpatient routine care. J Health Commun 2017;22:274–84. 10.1080/10810730.2017.1284286

33. Baumeister H, Terhorst Y, Grässle C, et al. Impact of an acceptance facilitating intervention on psychotherapists’ acceptance of blended therapy. PLoS One 2020;15:e0236995. 10.1371/journal.pone.0236995

34. Venkatesh V, Morris MG, Davis GB, et al. User acceptance of information technology: toward a unified view. MIS Q 2003;27:425. 10.2307/30036540

35. Gagnon M-P, Ngangue P, Payne-Gagnon J, et al. m-Health adoption by healthcare professionals: a systematic review. J Am Med Inform Assoc 2016;23:212–20. 10.1093/jamia/ocv052

36. Lazuras L, Dokou A. Mental health professionals’ acceptance of online counseling. Technol Soc 2016;44:10–14.

37. Liu L, Miguel Cruz A, Rios Rincon A, et al. What factors determine therapists’ acceptance of new technologies for rehabilitation – a study using the unified theory of acceptance and use of technology (UTAUT). Disabil Rehabil 2015;37:447–55. 10.3109/09638288.2014.923529

38. Sezgin E, Özkan-Yildirim S, Yildirim S. Investigation of physicians’ awareness and use of mHealth apps: a mixed method study. Health Policy Technol 2017;6:251–67. 10.1016/j.hlpt.2017.07.007

39. Baumeister H, Seifferth H, Lin J, et al. Impact of an acceptance facilitating intervention on patients' acceptance of Internet-based pain interventions: a randomized controlled trial. Clin J Pain 2015;31:528–35. 10.1097/AJP.0000000000000118

40. Lin J, Faust B, Ebert DD, et al. A web-based acceptance-facilitating intervention for identifying patients' acceptance, uptake, and adherence of internet- and mobile-based pain interventions: randomized controlled trial. J Med Internet Res 2018;20:e244. 10.2196/jmir.9925

41. Donovan CL, Poole C, Boyes N, et al. Australian mental health worker attitudes towards cCBT: what is the role of knowledge? Are there differences? Can we change them? Internet Interv 2015;2:372–81. 10.1016/j.invent.2015.09.001

42. Schuster R, Pokorny R, Berger T, et al. The advantages and disadvantages of online and blended therapy: survey study amongst licensed psychotherapists in Austria. J Med Internet Res 2018;20:e11007. 10.2196/11007

43. Faul F, Erdfelder E, Lang A-G, et al. G*Power 3: a flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav Res Methods 2007;39:175–91. 10.3758/bf03193146

44. Devilly GJ, Borkovec TD. Psychometric properties of the credibility/expectancy questionnaire. J Behav Ther Exp Psychiatry 2000;31:73–86. 10.1016/s0005-7916(00)00012-4

45. Baumeister H, Nowoczin L, Lin J, et al. Impact of an acceptance facilitating intervention on diabetes patients’ acceptance of Internet-based interventions for depression: a randomized controlled trial. Diabetes Res Clin Pract 2014;105:30–9. 10.1016/j.diabres.2014.04.031

46. Ebert DD, Berking M, Cuijpers P, et al. Increasing the acceptance of internet-based mental health interventions in primary care patients with depressive symptoms. A randomized controlled trial. J Affect Disord 2015;176:9–17. 10.1016/j.jad.2015.01.056

47. Schröder J, Sautier L, Kriston L, et al. Development of a questionnaire measuring attitudes towards psychological online interventions: the APOI. J Affect Disord 2015;187:136–41. 10.1016/j.jad.2015.08.044

48. Rye M, Torres EM, Friborg O, et al. The evidence-based practice attitude scale-36 (EBPAS-36): a brief and pragmatic measure of attitudes to evidence-based practice validated in US and Norwegian samples. Implement Sci 2017;12:1–11. 10.1186/s13012-017-0573-0

49. Pituch KA, Stevens JP. Applied multivariate statistics for the social sciences: analyses with SAS and IBM’s SPSS. 2016.

50. Richardson JTE. Eta squared and partial eta squared as measures of effect size in educational research. Educ Res Rev 2011;6:135–47.

51. Cohen J. Statistical power analysis for the behavioral sciences. New York: Academic Press, 1969.

52. Armstrong RA. When to use the Bonferroni correction. Ophthalmic Physiol Opt 2014;34:502–8. 10.1111/opo.12131

53. Miles J. R-squared, adjusted R-squared. Encycl Stat Behav Sci 2005;4:1655–7.

54. Wind TR, Rijkeboer M, Andersson G, et al. The COVID-19 pandemic: the ‘black swan’ for mental health care and a turning point for e-health. Internet Interv 2020;20:100317. 10.1016/j.invent.2020.100317

55. Machleid F, Kaczmarczyk R, Johann D, et al. Perceptions of digital health education among European medical students: mixed methods survey. J Med Internet Res 2020;22:e19827. 10.2196/19827

56. Granger D, Vandelanotte C, Duncan MJ, et al. Is preference for mHealth intervention delivery platform associated with delivery platform familiarity? BMC Public Health 2016;16:619. 10.1186/s12889-016-3316-2

57. Sheeran P, Webb TL. The intention-behavior gap. Soc Personal Psychol Compass 2016;10:503–18. 10.1111/spc3.12265

58. Handler SM, Boyce RD, Ligons FM, et al. Use and perceived benefits of mobile devices by physicians in preventing adverse drug events in the nursing home. J Am Med Dir Assoc 2013;14:906–10. 10.1016/j.jamda.2013.08.014

59. Cho J, Lee HE, Quinlan M. Complementary relationships between traditional media and health apps among American college students. J Am Coll Health 2015;63:248–57. 10.1080/07448481.2015.1015025

60. van Gemert-Pijnen JEWC, Wynchank S, Covvey HD, et al. Improving the credibility of electronic health technologies. Bull World Health Organ 2012;90:323. 10.2471/BLT.11.099804

61. Shin D-H, Lee S, Hwang Y. How do credibility and utility play in the user experience of health informatics services? Comput Human Behav 2017;67:292–302. 10.1016/j.chb.2016.11.007

62. Lin J, Wang B, Wang N, et al. Understanding the evolution of consumer trust in mobile commerce: a longitudinal study. Inf Technol Manag 2014;15:37–49. 10.1007/s10799-013-0172-y

63. Reynoldson C, Stones C, Allsop M, et al. Assessing the quality and usability of smartphone apps for pain self-management. Pain Med 2014;15:898–909. 10.1111/pme.12327

64. Hu Y, Shyam Sundar S. Effects of online health sources on credibility and behavioral intentions. Communic Res 2010;37:105–32. 10.1177/0093650209351512

65. Chang Y-S, Zhang Y, Gwizdka J. The effects of information source and eHealth literacy on consumer health information credibility evaluation behavior. Comput Human Behav 2021;115:106629. 10.1016/j.chb.2020.106629

66. Gordon WJ, Landman A, Zhang H, et al. Beyond validation: getting health apps into clinical practice. NPJ Digit Med 2020;3:14. 10.1038/s41746-019-0212-z

67. Seyhan AA, Carini C. Are innovation and new technologies in precision medicine paving a new era in patients centric care? J Transl Med 2019;17:114. 10.1186/s12967-019-1864-9

68. Jacob C, Sanchez-Vazquez A, Ivory C. Social, organizational, and technological factors impacting clinicians' adoption of mobile health tools: systematic literature review. JMIR Mhealth Uhealth 2020;8:e15935. 10.2196/15935

69. Dahlhausen F, Zinner M, Bieske L, et al. Physicians’ attitudes toward prescribable mHealth apps and implications for adoption in Germany: mixed methods study. JMIR Mhealth Uhealth 2021;9:e33012. 10.2196/33012

70. Gollwitzer PM, Sheeran P. Implementation intentions and goal achievement: a meta-analysis of effects and processes. Adv Exp Soc Psychol 2006;38:69–119.

Supplementary Materials

bmjopen-2021-060020supp001.pdf (68.7KB, pdf)

bmjopen-2021-060020supp002.pdf (316.6KB, pdf)

bmjopen-2021-060020supp003.pdf (38.3KB, pdf)
