Abstract

Extant literature suggests that performance on visual arrays tasks reflects limited-capacity storage of visual information. However, there is also evidence to suggest that visual arrays task performance reflects individual differences in controlled processing. The purpose of this study is to empirically evaluate the degree to which visual arrays tasks are more closely related to memory storage capacity or to measures of attention control. To this end, we conducted new analyses on a series of large data sets that incorporate various versions of a visual arrays task. Based on these analyses, we suggest that the degree to which visual arrays performance is related to memory storage ability or to effortful attention control may be task-dependent. Specifically, when versions of the task require participants to ignore elements of the target display, individual differences in controlled attention reliably provide unique predictive value. Therefore, at least some versions of the visual arrays task can be used as valid indicators of individual differences in attention control.

Keywords: attention control, change detection, visual arrays, working memory capacity

The visual arrays task, also known as the change-detection task, is one of the most commonly used tools for understanding the cognitive and neurophysiological nature of visual working memory (Fukuda et al., 2010; Luck & Vogel, 1997). The task is typically interpreted as a fairly pure measure of visual memory storage capacity. However, the mechanisms reflected by these measures have been questioned. Engle and colleagues (Draheim et al., 2021; Shipstead et al., 2014) have suggested that the standard visual storage interpretation may be incomplete. In particular, they have shown a strong relationship between visual arrays tasks and attention control at the latent construct level that does not align with a strict visual working memory explanation. Although this finding does not preclude interpreting the visual arrays as a memory storage (i.e., working memory capacity) task, it does warrant further exploration of how the task relates to constructs other than visual storage. Additionally, visual arrays tasks differ in design in ways that may alter which construct(s) causally contribute to change-detection accuracy. Therefore, our question is: Which theoretical construct(s) does the visual arrays task reflect, and does this depend on the task design?

We approach this question by reviewing a subset of the literature on the visual arrays task and by using data collected in our lab over the last 10+ years to empirically assess the extent to which performance on visual arrays metrics is uniquely predicted by working memory capacity and by attention control at the latent level. Crucially, our review is selective and informed by a theoretical approach which assumes that the working memory system comprises both mechanisms for memory storage and attentional control processes related to the active maintenance and manipulation of the contents of working memory (Engle et al., 1999; Engle & Martin, 2018). Our intent is not to create the impression that no viable theoretical alternatives exist (e.g., Oberauer & Lin, 2017) but rather to narrow the scope of this project to an evaluation of the prevailing interpretation of visual arrays tasks as measures of visual storage capacity. We do, however, discuss alternative interpretations of our results based on multiple frameworks of attention and working memory.

Visual Arrays Tasks: An Introduction

The general procedure of a visual arrays task is to briefly present a target array of items (e.g., colored squares) on a computer monitor, typically for a duration of 100 ms. After a short interval, a probe array appears and the test-taker must decide whether or not one of the items has changed on some dimension (e.g., color) relative to the target array. When the target array includes 3–4 items, accuracy tends to be nearly perfect. As further items are added to the display, performance declines in a linear manner (Luck & Vogel, 1997). This trend is typically interpreted as an indication that people can store a finite number of information chunks in visuospatial memory (Cowan et al., 2005; Rouder et al., 2011; but see Ma et al., 2014). Scores on these tasks are commonly computed with an equation originally developed by Pashler (1988), which yields a capacity score (denoted k) for an individual’s performance on the task. This k capacity score is thought to reflect the number of items one can store in visual working memory (Cowan, 2010). Moreover, k values reach an asymptote at around three or four, suggesting that most individuals can maintain three to four chunks in visual working memory at a time, a value that accords well with estimates of memory storage capacity derived from other measures (Cowan, 2010).
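To make the link between falling accuracy and a plateauing k concrete, the Python sketch below assumes a simple fixed-capacity ("slot") model, itself one of the contested accounts (see Ma et al., 2014): an observer stores min(K, N) of the N items, always detects a change to a probed item that is in memory, and otherwise guesses "change" with probability g. The capacity K = 4, the guess rate g = 0.5, and all function names are hypothetical, and k is estimated with the single-probe formula of Cowan et al. (2005) rather than Pashler's whole-display version.

```python
"""Illustrative slot-model sketch (hypothetical parameters, not study data)."""


def slot_model_rates(set_size: int, capacity: float, guess: float) -> tuple[float, float]:
    """Return (hit rate, false-alarm rate) for a single-probe trial.

    Assumes the probed item is in memory with probability min(K, N) / N;
    otherwise the observer guesses "change" with probability `guess`.
    """
    p_stored = min(capacity, set_size) / set_size
    hit_rate = p_stored + (1 - p_stored) * guess
    false_alarm_rate = (1 - p_stored) * guess
    return hit_rate, false_alarm_rate


def accuracy(hit_rate: float, false_alarm_rate: float) -> float:
    """Proportion correct when change and no-change trials are equally likely."""
    return 0.5 * hit_rate + 0.5 * (1 - false_alarm_rate)


def k_single_probe(set_size: int, hit_rate: float, false_alarm_rate: float) -> float:
    """Single-probe estimate: k = N * (hits + correct rejections - 1)."""
    correct_rejection_rate = 1 - false_alarm_rate
    return set_size * (hit_rate + correct_rejection_rate - 1)


if __name__ == "__main__":
    for n in (2, 4, 6, 8):
        h, fa = slot_model_rates(n, capacity=4, guess=0.5)
        print(f"N={n}: accuracy={accuracy(h, fa):.3f}, k={k_single_probe(n, h, fa):.2f}")
```

Under these assumptions, accuracy falls from 1.0 at four items to 0.75 at eight items, while k stays at 4 for every set size of four or more.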

However, and critical to our empirical tests, there are different versions of the visual arrays task that may change which cognitive processes the resulting k capacity score represents. In particular, in visual arrays tasks with a selection component, participants are cued to focus on only a subset of the items presented in the target array. Participants are asked to attend to only these cued items rather than the entire array, and the cued dimension can be location, color, size, shape, and so forth. We will refer to the traditional visual arrays task as nonselective visual arrays and to those that require ignoring some elements of the target array as selective visual arrays.

If the nonselective and selective visual arrays tasks are fundamentally the same (that is, they measure exactly the same construct[s] to the same degree), then k scores should be equivalent across the two. In fact, mean k scores on selective visual arrays are typically lower, about half the three to four k scores typically observed with nonselective visual arrays (Shipstead et al., 2014; Shipstead & Yonehiro, 2016). To explain this dissociation, task-specific aspects must be considered. Specifically, nonselective visual arrays tasks have no explicit attentional filtering/selection component and no distractors. By contrast, in selective visual arrays tasks, ineffective selection/filtering would lead to nontarget elements of the target array being represented in working memory, effectively doubling the set-size of items attended and resulting in a lower k score (i.e., performance on a set-size of three items becomes equivalent to performance on a set-size of six items). Thus, the source of individual variability may differ depending on the nature of the visual arrays task in question (i.e., nonselective vs. selective tasks). Currently, there are two proposed sources of variability: storage capacity, which we have addressed (Cowan et al., 2005; Rouder et al., 2011), and attention control (Draheim et al., 2021; Shipstead et al., 2014), to which we now turn.
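The halving argument can be checked with a deliberately simplified slot model, assumed here purely for illustration: an observer with capacity K encodes items at random from everything they attend to, so perfect filtering means attending only to the N targets, whereas a complete filtering failure means also encoding an equal number of distractors. The function names and the values K = 4 and N = 4 are hypothetical.

```python
"""Sketch: how a filtering failure lowers k under a simple slot model (hypothetical values)."""


def p_target_stored(n_targets: int, capacity: float, n_distractors_encoded: int = 0) -> float:
    """Probability that a probed target is in memory when slots are spread
    uniformly over the targets plus any distractors that slipped through."""
    n_attended = n_targets + n_distractors_encoded
    return min(capacity, n_attended) / n_attended


def expected_k(n_targets: int, capacity: float, n_distractors_encoded: int = 0) -> float:
    """Expected k, scored relative to the N targets only (as in the selective tasks)."""
    return n_targets * p_target_stored(n_targets, capacity, n_distractors_encoded)


if __name__ == "__main__":
    K = 4
    print(expected_k(4, K))     # perfect filtering: 4.0
    print(expected_k(4, K, 4))  # all four distractors encoded: 2.0
    # Three targets plus three encoded distractors behave like six filtered targets.
    print(p_target_stored(3, K, 3) == p_target_stored(6, K))  # True
```

Real observers presumably filter only partially, which would place k between these two bounds; the sketch only shows why a selective-task mean can approach half of the nonselective mean.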

Attention Control

The ability to control and direct attention is necessary for the successful execution of many tasks (Redick et al., 2016; Shipstead et al., 2016). However, the degree to which a specific task reflects this ability to control attention varies.1 According to the executive attention account of working memory capacity, the executive attention system is responsible for maintaining task-relevant information and ignoring distraction in the service of executing a given task (Engle et al., 1999; Shipstead et al., 2016). In storage-based tasks, a principal function of the executive attention system is to actively maintain information in working memory and to reduce interference by preventing the storage of irrelevant information. More interference diminishes the storage available for relevant memory items, leading to lower scores on capacity tasks (Oberauer, 2002).

A critical feature of this theoretical approach is that there may be multiple sources of interference, some more obvious than others. For instance, interference can occur while trying to actively maintain items in memory by requiring the completion of a secondary task at the same time, such as in complex memory span tasks (Conway et al., 2005). Interference can also occur proactively, when memory items from previous trials interfere with memory items on the current trial (Kane & Engle, 2000; Lustig et al., 2001). Interference can even occur as a result of intrusive and off-task thoughts (McVay & Kane, 2010). Given this executive attention account of working memory capacity, it would be expected that even nonselective visual arrays would depend on the executive attention system to reduce interference from previous trials or from intrusive and off-task thoughts. We will now consider evidence for the role of attention control in nonselective and selective visual arrays tasks.

Attention Control in Nonselective Visual Arrays

First, most studies assessing individual variability in visual arrays performance include set-sizes exceeding the average capacity limit of three to four items. From a pure storage capacity interpretation, low- and high-capacity individuals should be similarly impacted by larger set-sizes: If k merely represents storage capacity independent of controlled processing, then k scores should plateau at each subject’s maximum limit. In a large sample (N = 495), Fukuda et al. (2015) examined the change in k scores from a set-size of four to a set-size of eight. Whereas the mean k score was only slightly lower for a set-size of eight, the variability in k scores nearly doubled. The main reason for this increased variability was that low-capacity subjects performed much worse on set-size eight than on set-size four, whereas high-capacity subjects showed only a small decrement at set-size eight.

The variability in performance decrements at larger set-sizes is more readily explained within an attentional control framework. Fukuda et al. (2015) suggest that upon the initial presentation of a target array, there is a global attentional capture to all of the elements comprising the array. This capture becomes overwhelming at large set-sizes since not all items can be stored in working memory, and controlled processing is required to reorient attention to only a manageable subset of items. High-capacity individuals engage these control processes more quickly and effectively, leading to fairly stable performance across larger set-sizes. For low-capacity individuals, however, the ineffective execution of controlled processing causes a performance decrement at larger set-sizes.

To explore this interpretation further, Fukuda et al. (2015) increased the duration for which the target array was presented. Consistent with their controlled processing explanation, only low-capacity subjects benefited from the longer exposure. High-capacity individuals already reorient their attention effectively during the encoding phase and therefore show no benefit from the extra time. This suggests that low-capacity individuals are unable to engage controlled processing effectively unless given more time to do so and therefore show lower k scores even on nonselective visual arrays tasks. These results corroborate those of Fukuda and Vogel (2011), who found that low-capacity individuals were much slower than high-capacity individuals at disengaging from attention capture on a variant of a visual arrays task. Therefore, although these results are difficult to reconcile with a pure storage capacity interpretation of nonselective visual arrays performance, they follow naturally from an attention control explanation.

In addition, there may be other aspects of attention control at play in visual arrays tasks besides dealing with larger set-sizes. Shipstead and Engle (2013) manipulated the length of the intertrial interval (ITI; the delay between the probe for trial n and the target for trial n + 1) and the interstimulus interval (ISI; the delay between the target array and the probe array within the same trial). They assumed that shorter ITIs would create more proactive interference. Consistent with this prediction, the correlation between working memory capacity and nonselective visual arrays capacity, k, was highest when the ITI was short and the ISI was long. This pattern fits the executive attention view of working memory capacity: When proactive interference is high and items must be maintained longer, k should depend more on the ability to control interference, which working memory capacity is thought to index. Nonselective visual arrays performance showed a strikingly different relationship with fluid intelligence, which was most strongly related to k capacity scores with long ITIs and long ISIs. This finding suggests that individuals high in fluid intelligence took advantage of the longer ITI to reduce proactive interference from previous trials (cf., Shipstead et al., 2016). This study provides some evidence that even nonselective visual arrays tasks do not provide a pure measure of storage capacity but also reflect individual differences in reducing interference.

Finally, asserting that individual differences in attention control are a determining factor in nonselective visual arrays capacity scores is consistent with theoretical accounts of other working memory capacity measures (see Shipstead et al., 2014). For instance, in complex working memory span tasks there is a long retention interval during which a secondary task is performed. In nonselective visual arrays tasks, the retention interval is very short and there is no explicit interference or distractors. Given these differences, one would expect nonselective visual arrays to provide a purer measure of storage capacity in working memory and complex-span tasks to reflect more active maintenance and interference reduction (i.e., controlled processing). The differences between these measures of working memory capacity have likely lent face-validity to the interpretation of the nonselective visual arrays as a pure measure of visual working memory capacity. If this interpretation were correct, complex-span measures of working memory capacity should be more highly related to tasks that measure the control of attention than nonselective visual arrays are. To the contrary, Shipstead et al. (2015) found that a nonselective visual arrays factor contributed unique variance to an attention control factor (i.e., antisaccade, Stroop, and flanker tasks) above and beyond complex-span measures of working memory capacity. Therefore, despite the face-validity of the visual arrays as a pure measure of storage capacity, there is evidence that individual variability in visual arrays performance reflects the controlled processing also tapped by non-memory-based attention tasks.

Attention Control in Selective Visual Arrays

Even though selective visual arrays tasks have more face-validity as measures involving attention control, they are also typically considered relatively pure measures of visual storage capacity. However, there is physiological and behavioral evidence that is more consistent with an attention control interpretation. A commonly used EEG signature in visual arrays tasks is the contralateral delay activity. It is obtained in selective visual arrays tasks in which participants are cued to attend to either the left or the right side of the target array, and it is characterized by a negative slow-wave event-related potential (ERP) over the hemisphere contralateral (opposite) to the attended items. If contralateral delay activity simply tracked arousal, effort, or task difficulty, all of which should keep increasing as more memory items are presented in the target array, it would continue to grow with set-size; instead, it reaches a maximum at around three to four items, mirroring the capacity indicated by behavioral indices such as the k score (Vogel & Machizawa, 2004). This provides evidence that contralateral delay activity is sensitive to the number of items stored in visual working memory (Feldmann-Wüstefeld et al., 2018). Fukuda et al. (2015) observed a similar contralateral ERP response across larger set-sizes for both low- and high-capacity subjects (as indexed by k scores). However, they also observed that low-capacity subjects showed an increase in ipsilateral ERP response (corresponding to the side with irrelevant items) with increasing set-size, whereas high-capacity subjects did not. This suggests that low-capacity individuals stored irrelevant items from the uncued side of the display, whereas high-capacity individuals effectively filtered out the irrelevant items. Therefore, in selective visual arrays tasks, attention control may determine k scores because failing to filter out the irrelevant items would lead to a lower k score.

Additionally, Vogel, McCollough, et al. (2005) assessed whether high- and low-capacity individuals (as determined by k scores) were equally capable of selectively attending to elements of a target array. To do so, they manipulated the set-size and the number of irrelevant items in a selective visual arrays task in which participants had to respond to the orientation of a probed rectangle, but only for rectangles in the target color, red (dark gray) or blue (light gray). A cue before the target array indicated whether to attend to the red (dark gray) or the blue (light gray) rectangles. There were three conditions: (a) a two-item array with no distractors (e.g., two red [dark gray] rectangles), (b) a two-item array with two distractors (e.g., two red [dark gray] rectangles and two blue [light gray] rectangles), and (c) a four-item array with no distractors (e.g., four red [dark gray] rectangles). To the extent that an individual effectively ignores distractors, the contralateral delay activity for the first two conditions should be equivalent, because both contain two relevant items. To the extent that an individual does not effectively ignore distractors and stores them in working memory, the contralateral delay activity for the second and third conditions should be equivalent, because both have four total items in the display. High-capacity individuals exhibited the former pattern, whereas low-capacity individuals exhibited the latter.

These studies converge on the interpretation that, for selective visual arrays tasks, high-capacity individuals are those who can effectively ignore irrelevant items in the target array. Low-capacity individuals cannot ignore irrelevant items and tend to store them in working memory, diminishing available capacity. Based on the different patterns of contralateral delay activity as a function of whether or not items were successfully selected, Vogel, McCollough, et al. (2005) concluded that controlled processes were an important determinant of which items are stored in visual working memory, and thereby of one’s visual working memory capacity. Several other EEG investigations indicate that filtering efficiency in selective visual arrays is sensitive to the effects of sleep deprivation (Drummond et al., 2012), aging (Jost et al., 2011), and Parkinson’s disease (Lee et al., 2010), further supporting the notion that selective visual arrays are affected by attentional processes.

Finally, domain-generality is a core feature of how attention control has been conceptualized (cf., Kane et al., 2004), and therefore is an important benchmark for viewing the selective visual arrays as reflecting attention control.2 Shipstead and Yonehiro (2016) found that selective visual arrays performance reflects two factors, one domain-general and one visuospatial. The domain-general factor correlated with reasoning ability, regardless of whether reasoning occurred in the visual or verbal domains. The visuospatial factor, meanwhile, was strictly related to visual reasoning. Shipstead et al. (2014) also found that, despite their heavily visuospatial nature, selective visual arrays performance correlated with verbal retrieval (i.e., secondary memory; see Unsworth & Engle, 2007). Moreover, this retrieval ability also partially mediated the correlation between selective visual arrays performance and fluid intelligence. Therefore, there is evidence that selective visual arrays performance reflects a unique domain-general resource beyond static visual storage capacity.3

The Current Study

The evidence reviewed so far calls into question the interpretation that visual arrays tasks reflect only individual differences in visual storage capacity and are therefore relatively pure measures of capacity in visual working memory. With two diverging interpretations of what abilities the visual arrays task reflects (visual storage capacity and/or attention control), we are confronted with two questions, both theoretical and practical in nature:

Are visual arrays tasks more closely related to storage-based measures of working memory capacity or resistance-to-interference aspects of attention control?

Does the nature of the visual arrays task (i.e., nonselective or selective) influence which constructs it primarily reflects?

Four potential answers follow from these questions:

All visual arrays tasks primarily reflect visual storage capacity related to working memory capacity measures.

All visual arrays tasks primarily reflect differences in controlled attention independent of storage.

All visual arrays tasks reflect both visual storage capacity and differences in controlled attention to the same degree.

The degree to which a visual arrays task primarily reflects visual storage capacity or controlled attention depends on the task (specifically, on whether it includes a selection component).

To answer these questions, we conducted three sets of analyses on four entirely separate data sets collected over an 11-year period by different groups of graduate students, postdoctoral researchers, and undergraduate research personnel. Common to all these data sets are measures of working memory capacity (mainly but not exclusively defined by complex span tasks), attention control (specifically the antisaccade, flanker, and Stroop tasks), and at least one visual arrays task. Each set of analyses was intended to identify whether various visual arrays tasks were more closely related to working memory capacity or to attention control. These analyses are all novel; none of these results has previously been published in a journal or presented at a conference. The same set of analyses was applied to each of the four data sets.

We began with an exploratory factor analysis, a data-driven approach to defining the latent factor structure in a set of tasks. If visual arrays tasks are more closely related to working memory capacity, then they should load more strongly with those tasks than with measures of attention control. Alternatively, if visual arrays tasks are more closely related to attention control, then they should load more consistently with measures of attention control than with measures of working memory capacity.

Next, we fit a structural equation model in which working memory capacity and attention control latent factors uniquely predict individual differences in visual arrays k capacity scores. This allowed us to assess whether individual differences in k capacity scores from the visual arrays tasks reflect primarily storage capacity, attention control, or both.
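When two correlated latent predictors jointly predict an outcome, the standardized structural paths equal the partial regression weights implied by the latent correlation matrix. The Python sketch below shows that arithmetic only; the correlation values and the function name are hypothetical placeholders, not estimates from our data sets, and a full analysis would estimate these correlations within the measurement model using SEM software rather than take them as given.

```python
"""Sketch: standardized paths and R^2 for an outcome predicted by two correlated factors."""


def standardized_paths(r_y1: float, r_y2: float, r_12: float) -> tuple[float, float, float]:
    """Return (beta_1, beta_2, R^2) from the correlations among y, x1, and x2.

    beta_1 is the unique (partial) contribution of x1 holding x2 constant,
    and vice versa; R^2 is the variance in y explained by both together.
    """
    if abs(r_12) >= 1:
        raise ValueError("predictors must not be perfectly correlated")
    denom = 1 - r_12 ** 2
    beta_1 = (r_y1 - r_y2 * r_12) / denom
    beta_2 = (r_y2 - r_y1 * r_12) / denom
    r_squared = beta_1 * r_y1 + beta_2 * r_y2
    return beta_1, beta_2, r_squared


if __name__ == "__main__":
    # Hypothetical latent correlations: VA with WMC, VA with AC, and WMC with AC.
    b_wmc, b_ac, r2 = standardized_paths(r_y1=0.50, r_y2=0.60, r_12=0.60)
    print(f"beta_WMC={b_wmc:.3f}, beta_AC={b_ac:.3f}, R^2={r2:.3f}")
```

With these made-up inputs the paths come out at roughly 0.22 for working memory capacity and 0.47 for attention control; a path near zero would indicate that a factor adds no unique prediction once the other is in the model.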

Finally, we fit additional structural equation models to further understand the processes underlying individual differences in visual arrays performance. In two separate models, we tested (a) whether visual arrays performance predicts attention control over and above working memory capacity and (b) whether visual arrays performance predicts working memory capacity over and above attention control.
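The "over and above" question asks how much variance a second predictor adds once the first is already in the model. With two predictors, this increment equals the squared semipartial correlation, which the Python sketch below computes from three correlations. The values and the function name are hypothetical and stand in for latent correlations that the structural models would estimate.

```python
"""Sketch: incremental variance (Delta R^2) of one predictor beyond another."""


def incremental_r2(r_y_new: float, r_y_base: float, r_new_base: float) -> float:
    """Variance in y explained by `new` beyond `base` (squared semipartial correlation)."""
    if abs(r_new_base) >= 1:
        raise ValueError("predictors must not be perfectly correlated")
    return (r_y_new - r_y_base * r_new_base) ** 2 / (1 - r_new_base ** 2)


if __name__ == "__main__":
    # Hypothetical correlations among visual arrays (VA), WMC, and attention control (AC).
    r_va_wmc, r_va_ac, r_wmc_ac = 0.50, 0.60, 0.60
    # (a) Does VA predict AC over and above WMC?
    gain_a = incremental_r2(r_y_new=r_va_ac, r_y_base=r_wmc_ac, r_new_base=r_va_wmc)
    # (b) Does VA predict WMC over and above AC?
    gain_b = incremental_r2(r_y_new=r_va_wmc, r_y_base=r_wmc_ac, r_new_base=r_va_ac)
    print(f"(a) Delta R^2 = {gain_a:.3f}; (b) Delta R^2 = {gain_b:.3f}")
```

Comparing the two increments shows which direction of prediction leaves more unique variance; in a latent-variable model the same comparison is made through the estimated structural paths.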

Method

We analyzed data from four different data sets collected over 11 years (the total number of subjects is listed for each set of analyses, and the full population details for each data set are outlined in Appendix A and Appendix B). In each data set, there were three or more measures of working memory capacity, three measures of attention control, and at least one visual arrays task. Participants for each data set were recruited from the Georgia Institute of Technology, surrounding universities, and the greater Atlanta community. At least half of our participants were recruited from outside Georgia Tech to provide a sample with diverse backgrounds in socioeconomic status, race, gender, and education.

The studies from which these data are drawn were all approved by the Georgia Institute of Technology IRB. The data were collected under four independent protocol numbers/titles, listed here from most recent to oldest: (a) Protocol #H17116 “Understanding the nature of attention control,” (b) #H16322 “Differentiating between working memory capacity and fluid intelligence (Part II),” (c) #H12234 “The relationships among working memory tasks and their relations to fluid intelligence and higher-order cognition,” and (d) #H11309 “Relating the scope and control of attention within working memory.”

Visual Arrays Tasks

Four variations of the visual arrays task were used (see Figure 1). Two tasks explicitly involved a selection component (VA-color-S and VA-orient-S) that required participants to ignore specific distractor items (either those of a given color or those on one side of the array). Two did not (VA-color and VA-orient). The category listed after VA is the dimension on which individuals make a yes/no change judgment (i.e., did a box change color, or did a bar change orientation). In calculating the dependent variable, k, N was always defined as the number of valid target items on a screen. Thus, if 10 items are presented but 5 are to be ignored, then N equals 5. Two tasks required test-takers to decide whether a relevant characteristic of any item in the display had changed (VA-orient and VA-color-S). For these tasks, k was calculated using the whole-display correction of Pashler (1988): k = N × (%hits − %false alarms)/(1 − %false alarms). Two tasks required test-takers to respond as to whether a relevant characteristic of a probed item had changed (VA-color and VA-orient-S). For these tasks, k was calculated using the single-probe correction of Cowan et al. (2005): k = N × (%hits + %correct rejections − 1). In all cases, k was first computed for each set size, and the resulting values were then averaged across set sizes. In all tasks, participants responded via keypress. Change and no-change trials each occurred with 50% probability and, along with set sizes, were randomly distributed. Items were presented within a silver 19.1° × 14.3° visual field at a viewing distance of roughly 45 cm. Items were separated from one another by at least 2° and were all at least 2° from a central fixation point.
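A minimal Python sketch of this scoring procedure follows. The function names and the example hit and false-alarm rates are hypothetical; the sketch applies the whole-display (Pashler, 1988) or single-probe (Cowan et al., 2005) formula at each set size and then averages across set sizes, assuming rates have already been computed per set size and applying no correction for rates of exactly 0 or 1.

```python
"""Sketch of the k scoring described above (hypothetical rates, not study data)."""
from statistics import mean


def pashler_k(n_targets: int, hit_rate: float, fa_rate: float) -> float:
    """Whole-display correction (Pashler, 1988): k = N * (H - FA) / (1 - FA)."""
    if fa_rate >= 1:
        raise ValueError("false-alarm rate must be below 1")
    return n_targets * (hit_rate - fa_rate) / (1 - fa_rate)


def cowan_k(n_targets: int, hit_rate: float, cr_rate: float) -> float:
    """Single-probe correction (Cowan et al., 2005): k = N * (H + CR - 1)."""
    return n_targets * (hit_rate + cr_rate - 1)


def task_k(rates_by_set_size: dict[int, tuple[float, float]], whole_display: bool) -> float:
    """Compute k at each set size, then average across set sizes.

    Keys are N, the number of relevant targets (to-be-ignored items excluded);
    values are (hit rate, false-alarm rate) pairs.
    """
    ks = []
    for n, (hit_rate, fa_rate) in rates_by_set_size.items():
        if whole_display:  # e.g., VA-orient and VA-color-S
            ks.append(pashler_k(n, hit_rate, fa_rate))
        else:  # e.g., VA-color and VA-orient-S; correct rejections = 1 - FA
            ks.append(cowan_k(n, hit_rate, 1 - fa_rate))
    return mean(ks)


if __name__ == "__main__":
    # Hypothetical rates for a single-probe task with set sizes 4, 6, and 8.
    single_probe = {4: (0.90, 0.10), 6: (0.80, 0.15), 8: (0.70, 0.20)}
    print(f"k = {task_k(single_probe, whole_display=False):.2f}")
```

Averaging per-set-size k values, rather than pooling trials across set sizes, keeps each estimate on the scale of its own N.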

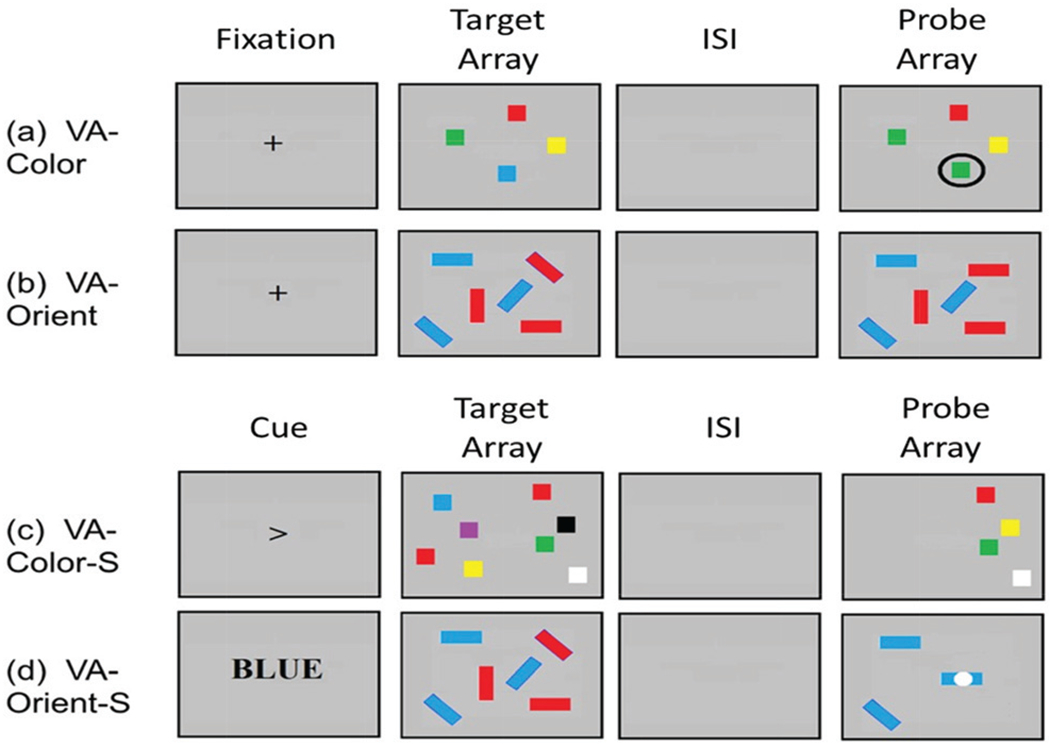

Figure 1. Examples of Visual Arrays Tasks Used in the Present Study.

Note. Visual arrays, with either a color change judgment or an orientation change judgment. The labeling of each task is based on the following criteria: VA-[the category of the change-based judgment]-[is there a selection component]. The two potential judgments for change are color or orientation (i.e., has a square changed color, or has a bar changed orientation). The selection components direct an individual to pay attention to half of the array (either one side [the right or left subset] or one subset of stimuli [blue (light gray) or red (dark gray) bars only]). Going forward, (a) and (b) will be referred to as nonselection versions because all array information is needed for retrieval. In versions (c) and (d), the -S indicates a selection component, as evidenced by the cue in lieu of a fixation. (a) and (b) begin with fixation, which is followed by a target array of to-be-remembered items, then an interstimulus interval (ISI). For (a) the test-taker must indicate whether the encircled box has changed color. For (b) the test-taker must indicate whether any bar has changed its orientation. (c) and (d) begin with a cue that indicates which information will be relevant. This is followed by the array of to-be-remembered items, along with distractors. After the ISI, the probe array appears with only the cued information presented. For (c) the test-taker must indicate whether any box has changed color. For (d) the test-taker must indicate whether the bar with the white dot has changed orientation. VA = visual arrays. See the online article for the color version of this figure.

VA-Color (Color Judgment Task)

Arrays contained four, six, or eight colored blocks. Possible colors included white, black, red (dark gray), yellow, green, blue (light gray), and purple. Arrays were presented for 250 ms, followed by a 900-ms ISI. Participants responded as to whether or not one circled item had changed color. Twenty-eight trials of each set size were included; 14 were no-change and 14 were change trials (see Figure 1a).

VA-Orient (Orientation Judgment Task)

The orientation judgment task was based on one of the conditions used by Luck and Vogel (1997). Arrays consisted of five or seven colored bars, each of which was either horizontal, vertical, or slanted 45° to the right or left. Participants needed to judge whether any bar had changed orientation. Colors included red (dark gray) and blue (light gray) and did not change within a trial. Forty trials of each set size were included; 20 were no-change and 20 were change (see Figure 1b).

VA-Color-S (Selective Color Judgment Task)

This task was based on Experiment 2 from Vogel, Woodman, et al. (2005) and was speeded relative to other tasks. Each trial began with a left- or right-pointing arrow at the center of a computer monitor indicating which side of the array participants needed to focus on. This arrow was presented for 100 ms, followed by a 100-ms interval. Next, two equally sized arrays of colored blocks were presented on the right and left sides of the screen for 100 ms. The array on each side contained either four, six, or eight items. After a 900-ms delay, the boxes on the side indicated by the arrow reappeared. Participants indicated whether any of these relevant boxes had changed color. Twenty-eight trials of each set size were included; 14 were no-change, 14 were change, and they occurred equally often on the right and left sides of the screen (see Figure 1c).

VA-Orient-S (Selective Orientation Judgment Task)

This task was based on the first experiment of Vogel, Woodman, et al. (2005). A single-probe report was used. Each trial began with the presentation of a word, either RED (dark gray) or BLUE (light gray), for 200 ms, indicating the color of the items to be attended (the selection instruction), followed by a 100-ms interval. Next, 10 or 14 bars were presented for 250 ms. Half of all bars were printed in the to-be-attended color; that is, the set size was either five or seven. Following a 900-ms delay, only the to-be-attended bars returned. The critical item was identified at test by a white dot superimposed on one of the bars. Test-takers judged whether the orientation of the item indicated by the dot had changed relative to the initial presentation. No other changes could occur within the display. Forty trials of each set size were included; 20 were change and 20 were no-change trials (see Figure 1d).

Working Memory Capacity Tasks

Operation Span (OSpan; Kane et al., 2004; Turner & Engle, 1989)

This task required subjects to remember a series of letters presented in alternation with simple math equations that they were required to solve. On each trial, subjects first solved a simple math equation in which they decided whether a solution was correct (e.g., “[2 × 2] + 1 = 5”) or not (e.g., “[3 × 4] − 3 = 8”), followed by the presentation of a single letter. After a variable set size, participants attempted to recall the letters in their correct serial order. There was a total of 14 trials (two blocks of seven trials), set-sizes ranged from three to eight,4 and each set-size occurred twice (once in each block). The dependent variable was the partial span score, which is the total number of letters recalled in proper serial position (Conway et al., 2005).
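As a sketch of the partial span scoring rule just described, assume each trial is stored as the presented sequence paired with the participant's recalled sequence, with None marking a skipped position (this data layout and the function name are hypothetical). The score counts items recalled in their correct serial position, summed over all trials; the symmetry and rotation span tasks use the same rule with locations or arrows in place of letters.

```python
"""Sketch of partial span scoring (hypothetical data layout)."""
from typing import Iterable, Optional, Sequence


def partial_span_score(
    trials: Iterable[tuple[Sequence[str], Sequence[Optional[str]]]],
) -> int:
    """Total number of items recalled in their correct serial position across trials."""
    total = 0
    for presented, recalled in trials:
        # zip stops at the shorter sequence, so omitted trailing responses score 0.
        total += sum(1 for shown, response in zip(presented, recalled) if shown == response)
    return total


if __name__ == "__main__":
    trials = [
        ("FHJ", "FJH"),                # only F is in its correct position -> 1
        ("KLMN", "KLMN"),              # perfect recall -> 4
        ("PQRST", ["P", None, "R"]),   # a skipped position and an early stop -> 2
    ]
    print(partial_span_score(trials))  # 7
```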

Symmetry Span (SymSpan; Unsworth et al., 2009)

This task required subjects to judge whether a 16 × 16 matrix of black and white squares was symmetrical about the vertical midline while memorizing the locations of a red square in a 4 × 4 matrix. Participants first made the symmetry judgment and were then presented with the to-be-remembered spatial location. This alternation continued until a variable set-size of spatial locations had been presented. There was a total of 12 trials (two blocks of six trials), set-sizes ranged from two to seven, and each set-size occurred twice (once in each block). The dependent variable was the partial span score, which is the total number of square locations recalled in their proper serial position.

Rotation Span (RotSpan; Kane et al., 2004)

This task required subjects to solve a mental rotation problem in which they had to mentally rotate a letter and decide whether it was mirror reversed. Afterward, subjects were presented with a to-be-remembered arrow with a specific direction (eight possible directions: the four cardinal and four ordinal directions) and a specific size (small or large). This alternation continued until a variable set-size of arrows had been presented, at which point participants attempted to recall the arrows in their correct serial order. There was a total of 12 trials (two blocks of six trials), set-sizes ranged from two to seven, and each set-size occurred twice (once in each block). The dependent variable was the partial span score, which is the total number of arrows recalled in their proper serial position.

Running Letter Span (Broadway & Engle, 2010)

The automated running letter span presented a series of five to nine letters and required participants to remember the last three to seven. Participants were informed of how many items they would need to remember at the beginning of each block of three trials. Blocks were presented in random order. There was a total of 15 trials. Items were presented for 300 ms, each followed by a 200-ms pause.

Running Spatial Span (Harrison et al., 2013)

The running-spatial-span task was identical to the running-letter-span task, except that matrix locations on a 4 × 4 matrix were the to-be-remembered stimuli.

Rapid Running Digit Span (Cowan et al., 2005)

The automated running digit span presented a series of 12–20 digits and required participants to remember the last 6. Participants performed 18 critical trials. Digits were presented at the rate of four per second via headphones.

Attention Control Tasks

Antisaccade (Hallett, 1978; Hutchison, 2007; Kane et al., 2001)

Subjects saw a central fixation cross lasting a random duration between 2,000 and 3,000 ms, followed by an alerting tone for 300 ms. After the alerting tone, an asterisk appeared for 300 ms at 12.3° of visual angle to the left or right of central fixation, followed immediately by a target “Q” or “O” presented for 100 ms on the opposite side of the screen. The locations of the asterisk and the target letter were both masked for 500 ms by “##.” The subject’s goal was to avoid looking at the asterisk and instead look to the opposite side of the screen to catch the target “Q” or “O.” Subjects had as much time as needed to indicate which letter had appeared by pressing the associated key on the keyboard. Subjects completed 72 trials, with 500 ms of trial-by-trial feedback following each response and then a 1,000-ms waiting period before the fixation cross reappeared to signal the start of a new trial. The dependent variable was the number of correctly identified target letters.

Arrow Flanker (RT Flanker; Eriksen & Eriksen, 1974)

Subjects were presented with a target arrow in the center of the screen pointing left or right, flanked by two arrows on each side. The flanking arrows either all pointed in the same direction as the central target (congruent trial; for example, ← ← ← ← ←) or all pointed in the opposite direction (incongruent trial; for example, ← ← → ← ←)5. Subjects indicated the direction of the central arrow by pressing the “z” (left) or “/” (right) key. These keys had the words LEFT and RIGHT taped onto them to assist with response mapping. A total of 144 trials were administered, 96 congruent and 48 incongruent, with a randomized 400- to 700-ms ITI. The dependent variable was the flanker interference effect: the RT cost of incongruent trials, calculated by subtracting each subject’s mean RT on congruent trials from their mean RT on incongruent trials, excluding inaccurate trials.

Color Stroop (RT Stroop; Stroop, 1935)

Subjects were shown the word “red,” “green,” or “blue” printed in red, green, or blue font. The words were either congruent with the font color (for example, the word RED printed in red) or incongruent with it (for example, the word BLUE printed in a color other than blue). The subject’s task was to indicate the color in which the word was printed by pressing the “1,” “2,” or “3” key on the number pad. To assist with response mapping, the keys had a piece of paper of the corresponding color taped onto them. A total of 144 trials were administered, 96 congruent and 48 incongruent, with a randomized 400- to 700-ms ITI and a 5,000-ms response deadline. The dependent variable was the Stroop interference effect: the RT cost of incongruent trials, calculated by subtracting each subject’s mean RT on congruent trials from their mean RT on incongruent trials, excluding inaccurate trials.
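The flanker and Stroop interference effects share the same computation. This is a minimal sketch, assuming trial-level records with `condition`, `rt` (ms), and `correct` fields (hypothetical names); it applies no RT trimming because none is described here, and it simply ignores trials of any other condition, such as the neutral Stroop trials in Data Set 2.

```python
from statistics import mean


def interference_effect(trials):
    """Mean correct-trial RT on incongruent trials minus congruent trials.

    trials: iterable of dicts with keys 'condition' ('congruent',
    'incongruent', or another label such as 'neutral'), 'rt' (ms), and
    'correct' (bool). Other conditions and all inaccurate trials are ignored.
    """
    trials = list(trials)
    congruent = [t["rt"] for t in trials if t["condition"] == "congruent" and t["correct"]]
    incongruent = [t["rt"] for t in trials if t["condition"] == "incongruent" and t["correct"]]
    return mean(incongruent) - mean(congruent)


if __name__ == "__main__":
    # Hypothetical trials for one subject.
    example = [
        {"condition": "congruent", "rt": 500, "correct": True},
        {"condition": "congruent", "rt": 520, "correct": True},
        {"condition": "congruent", "rt": 900, "correct": False},  # excluded: error
        {"condition": "incongruent", "rt": 600, "correct": True},
        {"condition": "incongruent", "rt": 640, "correct": True},
        {"condition": "neutral", "rt": 550, "correct": True},     # excluded: neutral
    ]
    print(interference_effect(example))  # 110
```

Larger values indicate more interference, which is why these measures load negatively alongside accuracy-based attention control measures such as the antisaccade.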

Data Analysis

For each data set, we ran the same general set of analyses. Before analysis, we removed subjects who had missing data on any of the visual arrays tasks. We used the same criteria across all data sets and visual arrays tasks. See Appendix A Table A1 for task reliabilities and Appendix B Tables B1–B4 for correlation tables.

Exploratory Factor Analysis

For each data set, we included all the working memory capacity, attention control, and visual arrays tasks that were available from that study. We used an oblimin rotation, and the number of factors was determined by weighing several methods: the Kaiser criterion (eigenvalues greater than 1), the scree plot, and parallel analysis.
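Two of these criteria can be sketched directly from the data. This is a minimal sketch using NumPy, assuming the principal-components variant of Horn's parallel analysis with a 95th-percentile benchmark; psych's `fa.parallel` also offers a factor-based variant, so counts from this sketch need not match its output. The function names and the example correlation matrix are hypothetical.

```python
import numpy as np


def kaiser_count(corr):
    """Number of eigenvalues of a correlation matrix greater than 1."""
    return int(np.sum(np.linalg.eigvalsh(corr) > 1.0))


def parallel_analysis(data, n_iter=100, percentile=95, seed=0):
    """Principal-components version of Horn's parallel analysis.

    Retains leading components whose observed eigenvalue exceeds the chosen
    percentile of eigenvalues from uncorrelated normal data of the same shape.
    """
    rng = np.random.default_rng(seed)
    n_obs, n_vars = data.shape
    observed = np.sort(np.linalg.eigvalsh(np.corrcoef(data, rowvar=False)))[::-1]
    simulated = np.empty((n_iter, n_vars))
    for i in range(n_iter):
        noise = rng.standard_normal((n_obs, n_vars))
        simulated[i] = np.sort(np.linalg.eigvalsh(np.corrcoef(noise, rowvar=False)))[::-1]
    threshold = np.percentile(simulated, percentile, axis=0)
    n_retain = 0
    for obs, ref in zip(observed, threshold):
        if obs <= ref:  # stop at the first eigenvalue not beating random data
            break
        n_retain += 1
    return n_retain


if __name__ == "__main__":
    # Hypothetical correlation matrix: two blocks of three tasks, r = .5 within blocks.
    block = np.full((3, 3), 0.5) + 0.5 * np.eye(3)
    corr = np.block([[block, np.zeros((3, 3))], [np.zeros((3, 3)), block]])
    print(kaiser_count(corr))  # 2
```

The scree plot remains a visual judgment, which is one reason the three criteria can disagree, as they do in Data Sets 2 and 3.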

Structural Equation Models

For our primary analyses, we used structural equation modeling. For each data set, we estimated four models to better understand the processes related to individual differences in visual arrays performance. (a) We estimated a structural equation model with working memory capacity and attention control latent factors as correlated predictors of each visual arrays task. Rather than forming a latent visual arrays factor (which would have been possible only in Data Sets 3 and 4), we used each visual arrays task as a separate dependent variable. This model allowed us to assess the unique contributions of working memory capacity and attention control to individual differences in k capacity scores at the task level for each visual arrays task.6 In Data Sets 3 and 4, we essentially have an experimental manipulation in which the only difference between task versions (besides the judgment dimension) is whether there is a selective component. This allowed us to evaluate whether the degree to which a visual arrays task primarily reflects visual storage capacity or controlled attention depends on the presence of a selective component. (b) We tested whether visual arrays performance can predict attention control over and above working memory capacity, and (c) whether visual arrays performance can predict working memory capacity over and above attention control. For these models, in Data Sets 3 and 4, we formed latent nonselective and selective visual arrays factors. (d) Finally, we estimated additional structural equation models testing whether working memory capacity and processing speed mediate the relationship between attention control and visual arrays performance. For processing speed, we used the mean RT from Flanker and Stroop congruent trials. This allowed us to assess whether speed of processing is a viable explanation for any relationship between attention control and visual arrays performance.
This is important because no task or latent factor is “process pure.” Therefore, by establishing incremental validity, we can rule out potential confounding factors.

Analyses were conducted in R statistical software (R Core Team, 2018). The R package psych (Revelle, 2018) was used to conduct the exploratory factor analyses, and the R package lavaan (Rosseel, 2012) was used for all structural equation models, with missing values handled by full-information maximum likelihood. Where necessary, we statistically compared models using the Bayesian information criterion (BIC) to approximate the Bayes factor, that is, the relative likelihood of the data under one model versus another (Bollen et al., 2014; Wagenmakers, 2007).
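Following Wagenmakers (2007), the BIC difference between two models can be converted into an approximate Bayes factor and, assuming equal prior odds, into a posterior model probability. The P(H0 | Data) values reported below are consistent with this conversion (for example, BF01 = 10.63 gives .91). This is a minimal sketch; the function names are hypothetical, and the BICs themselves would come from the fitted lavaan models.

```python
import math


def bf01_from_bic(bic_h0, bic_h1):
    """BIC approximation to the Bayes factor favoring H0 over H1.

    BF01 is approximately exp((BIC_H1 - BIC_H0) / 2); lower BIC means better support.
    """
    return math.exp((bic_h1 - bic_h0) / 2)


def posterior_prob(bf):
    """Posterior probability of the favored model, assuming equal prior odds."""
    return bf / (1 + bf)


if __name__ == "__main__":
    # A BIC difference of about 4.73 corresponds to BF01 of about 10.6.
    print(round(posterior_prob(10.63), 2))  # 0.91
```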

Results

Data Set 1

Data Set 1 consisted of OSpan, SymSpan, and RotSpan for working memory capacity; antisaccade, flanker, and Stroop for attention control; and VA-orient-S for visual arrays. There was a total of 397 subjects, with no more than 5% missing data for any one task. The data analyzed in this study were part of a larger data collection effort conducted from 2017 to 2018. The following link has a summary of the larger data collection procedure and a reference list of all publications to come out of this data collection sample with information on which tasks were used for each publication: https://osf.io/yc48s/.

Exploratory Factor Analysis

We conducted an exploratory factor analysis using principal axis factoring with two factors and an oblimin rotation (Table 1). Two factors were specified because two factors had eigenvalues greater than 1, and both the scree plot and parallel analysis suggested two factors. The OSpan, SymSpan, and RotSpan loaded most strongly onto the first factor (a working memory capacity factor). The VA-orient-S, antisaccade, flanker, and Stroop all loaded most strongly onto the second factor (an attention control factor), although the flanker loaded poorly on both factors (absolute loadings under .30). The two factors were moderately correlated, r = .6. The exploratory factor analysis thus supported the interpretation of VA-orient-S as being more similar to attention control measures.

Table 1.

Data Set 1 – Exploratory Factor Analysis With Oblimin Rotation

| Variable | Factor 1 | Factor 2 |
|---|---|---|
| OSpan | 0.74 | −0.13 |
| SymSpan | 0.76 | 0.06 |
| RotSpan | 0.62 | 0.24 |
| VA-orient-S | 0.12 | 0.57 |
| Antisaccade | 0.08 | 0.59 |
| Flanker | 0.02 | −0.29 |
| Stroop | 0.20 | −0.43 |

Note. VA = visual arrays.

Structural Equation Models

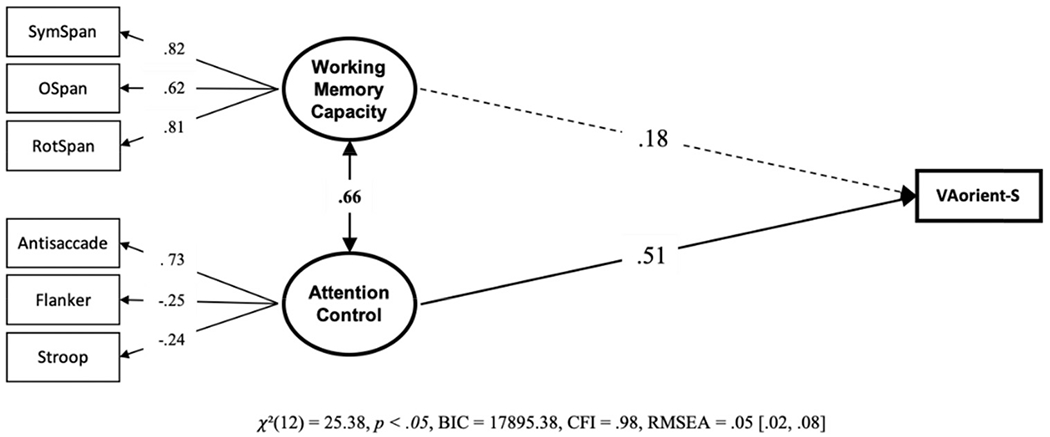

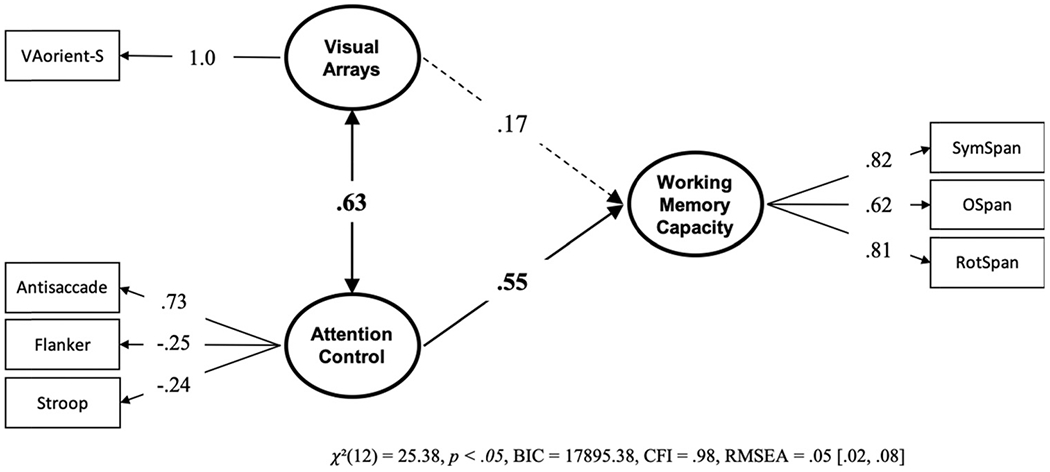

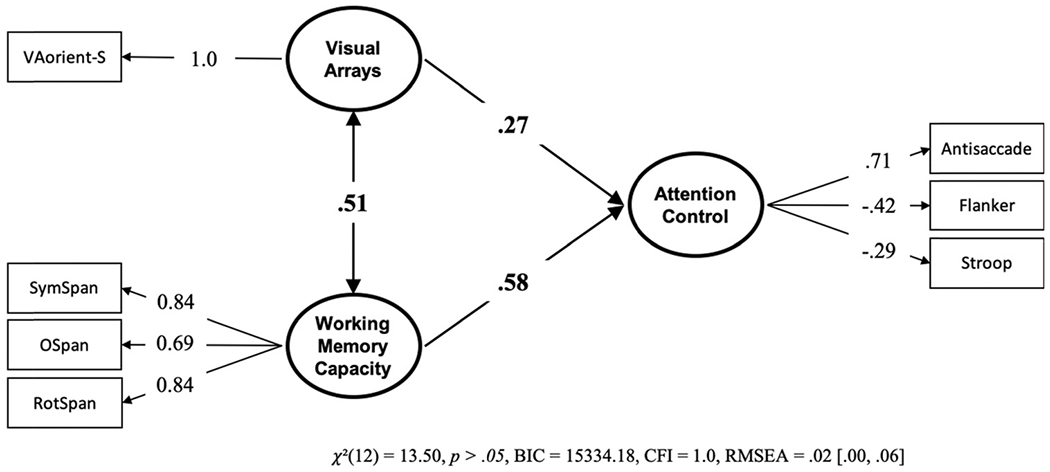

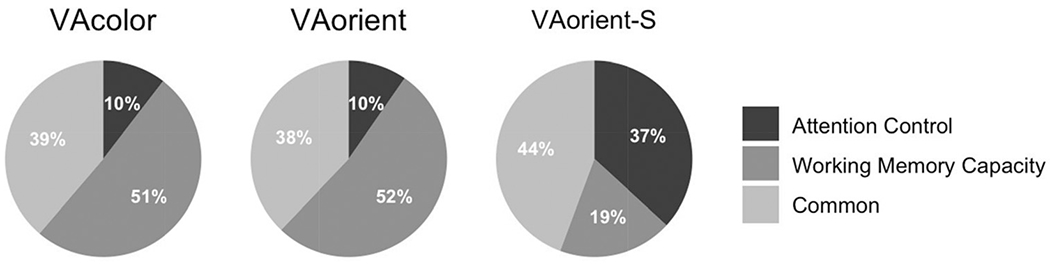

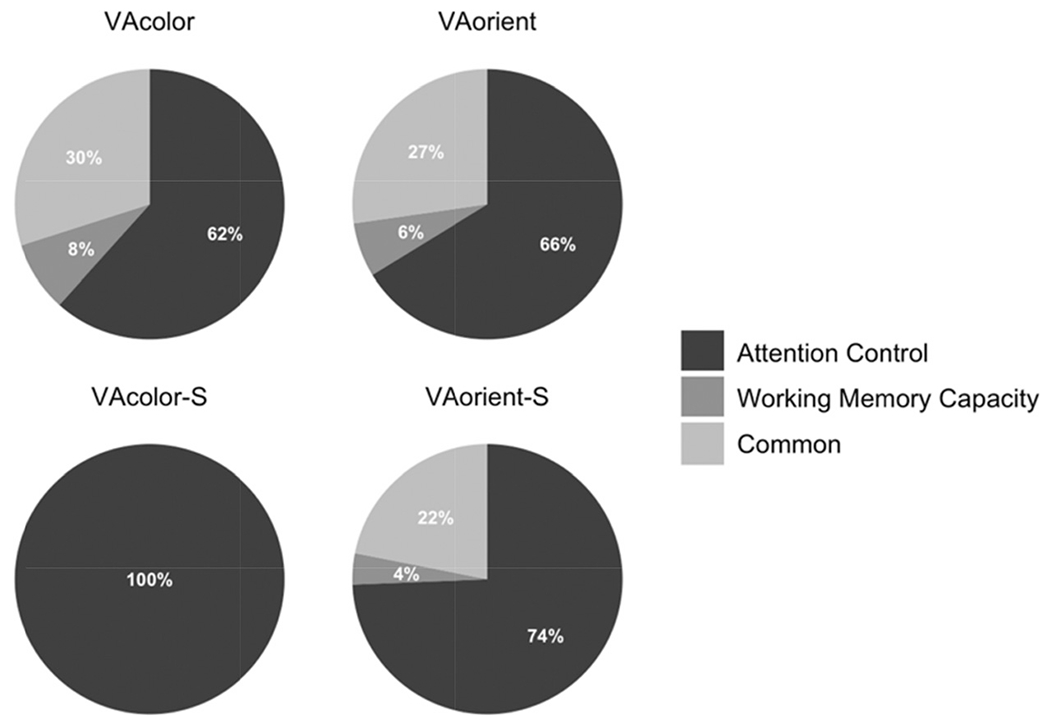

Our primary question of interest is: are individual differences in visual arrays k capacity scores explained more by differences in working memory capacity, attention control, or both? To answer this, we estimated a structural equation model with working memory capacity and attention control predicting k capacity scores on the VA-orient-S task (see Figure 2). Attention control, but not working memory capacity, uniquely predicted k capacity scores on the VA-orient-S task. We compared this model with a “null” model in which the working memory capacity – VA-orient-S path was set to zero. The “null” model was 10.63 times more likely, BF01 = 10.63, P(H0 | Data) = .91. We also compared this model with a model in which the predictive paths for attention control and working memory capacity were constrained to equality. This constrained model was also preferred, being 6.03 times more likely than the freely estimated model, BF01 = 6.03, P(H0 | Data) = .86. We thus find evidence both for the hypothesis that the predictive path from working memory capacity is statistically unnecessary and for the hypothesis that it does not reliably differ from the significant predictive path from attention control. To resolve this apparent contradiction, we compared the two constrained models directly. The null model was slightly preferred over the model with equality constraints, BF01 = 1.76, P(H0 | Data) = .64, although the magnitude of this preference is negligible. Figure 3 visualizes the contributions of working memory capacity and attention control relative to the 41% of explained variance in VA-orient-S.
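Because a Bayes factor is a ratio of marginal likelihoods, two models that were each compared with the same freely estimated model can be compared with each other by dividing their Bayes factors. The sketch below reproduces the null-versus-equality comparison from the two rounded Bayes factors reported above; the function name is hypothetical.

```python
def bf_between(bf_a_vs_ref, bf_b_vs_ref):
    """Bayes factor for model A over model B when both are compared to the same reference."""
    return bf_a_vs_ref / bf_b_vs_ref


if __name__ == "__main__":
    bf_null_vs_free = 10.63   # reported BF01, null vs. freely estimated model
    bf_equal_vs_free = 6.03   # reported BF01, equality-constrained vs. freely estimated model
    bf_null_vs_equal = bf_between(bf_null_vs_free, bf_equal_vs_free)
    print(round(bf_null_vs_equal, 2))                            # 1.76
    print(round(bf_null_vs_equal / (1 + bf_null_vs_equal), 2))  # 0.64
```

Working from rounded Bayes factors rather than the underlying BICs can shift the last digit; the analogous Data Set 2 calculation, 13.79 / 6.07, gives about 2.27 against the reported 2.26.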

Figure 2. Structural Equation Model From Data Set 1 With the Unique Relationships of Working Memory Capacity and Attention Control to VA-Orient-S.

Note. Bold numbers indicate significant values based on p < .05. VA = visual arrays.

Figure 3. Pie Chart Representing the Contributions Uniquely From Working Memory Capacity/Attention Control and Common Variance in the 41% of Explained Variance in VA-Orient-S.

Note. VA = visual arrays.

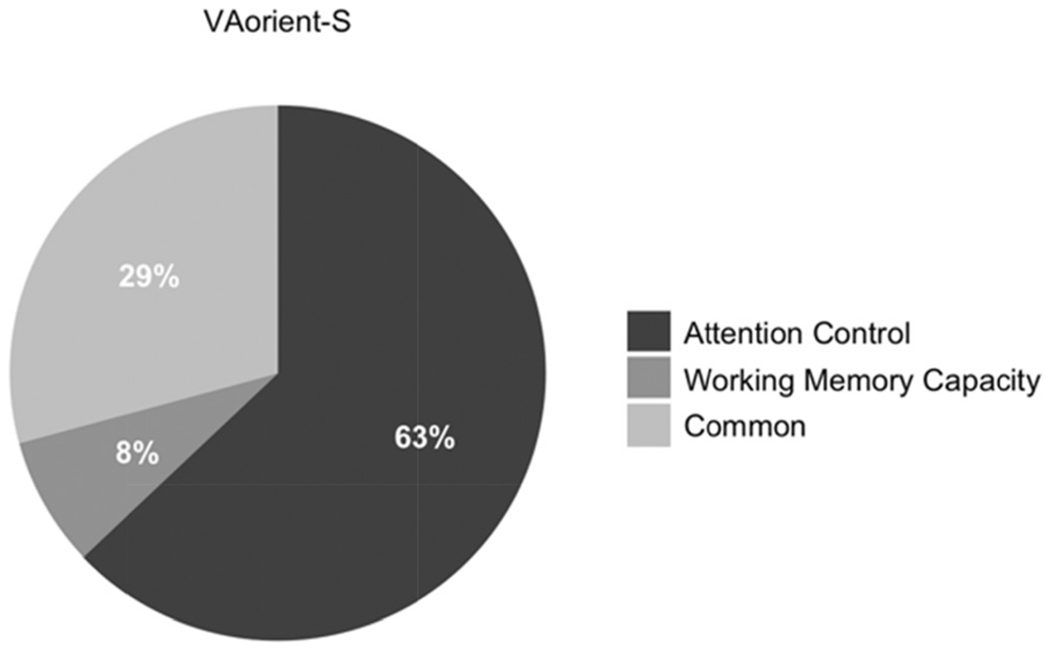

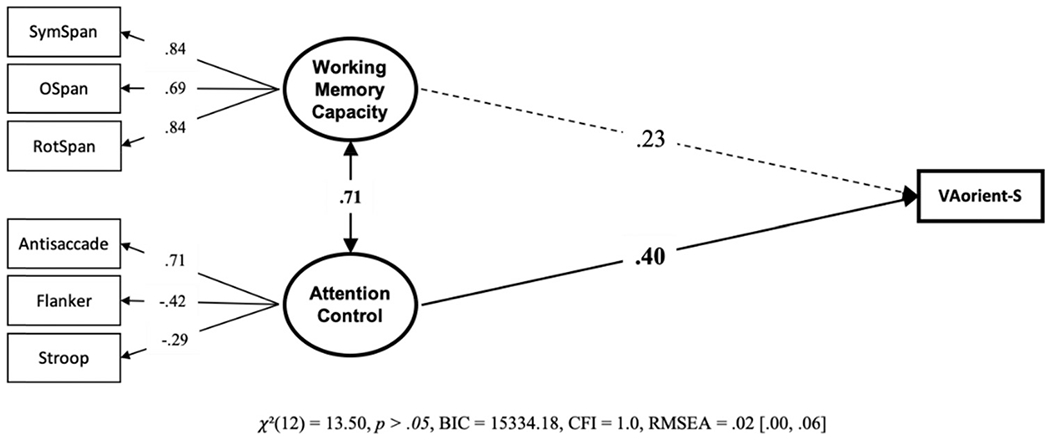

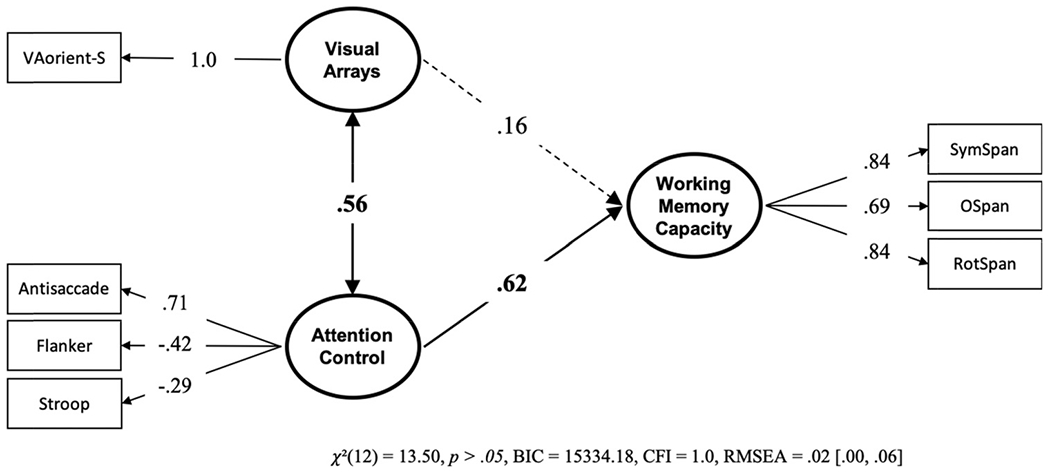

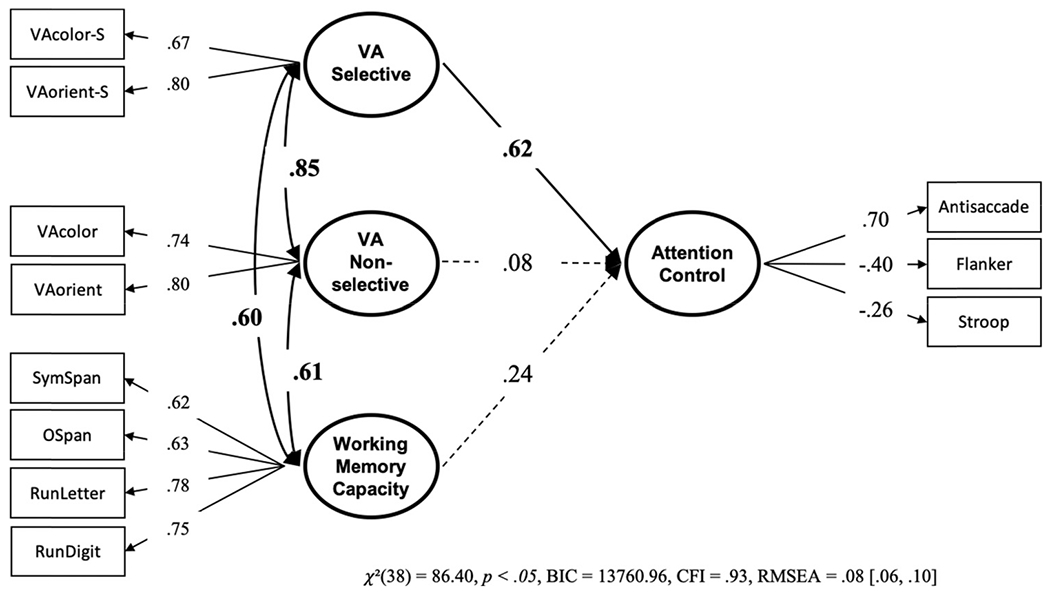

To better understand what individual variability in visual arrays performance reflects, we tested the relationships of unique variance in visual arrays with working memory capacity and attention control.7 First, we tested whether visual arrays capacity can predict attention control uniquely from working memory capacity. If variance in visual arrays reflects nothing more than working memory capacity, then visual arrays should not predict unique variance in attention control. However, the model (see Figure 4) shows that VA-orient-S uniquely predicts attention control above and beyond working memory capacity, accounting for 21.2% of the variance in attention control (based on squaring the path value of .46 between visual arrays and attention control). Furthermore, this freely estimated model is strongly preferred over a model in which the selective visual arrays task and the working memory tasks load on a single predictive factor, BF01 = 119,593.70, P(H0 | Data) > .999. This is consistent with the notion that individual differences in selective visual arrays performance represent more than just the number of items stored in visual working memory.

Figure 4. Structural Equation Model From Data Set 1 With the Unique Relationships of Visual Arrays and Working Memory Capacity to Attention Control.

Note. Bold numbers indicate significant values based on p < .05. VA = visual arrays.

Next, we estimated a model with visual arrays and attention control uniquely predicting working memory capacity. If storage capacity, independent of attention control, is reflected in the visual arrays capacity score, then it would be expected to predict working memory capacity uniquely from attention control. The model (see Figure 5) suggests that this is not the case. The k capacity score on VA-orient-S did not predict working memory capacity above and beyond attention control, and a model with a null predictive path from the selective visual arrays factor was preferred over a model in which this path was estimated, BF01 = 10.633, P(H0 | Data) = .914.8 However, a model in which the two predictive paths were constrained to equality was also preferred to the freely estimated model, BF01 = 3.279, P(H0 | Data) = .766, indicating that the two paths are not reliably different from one another.

Figure 5. Structural Equation Model From Data Set 1 With the Unique Relationships of Visual Arrays and Attention Control to Working Memory Capacity.

Note. Bold numbers indicate significant values based on p < .05. VA = visual arrays.

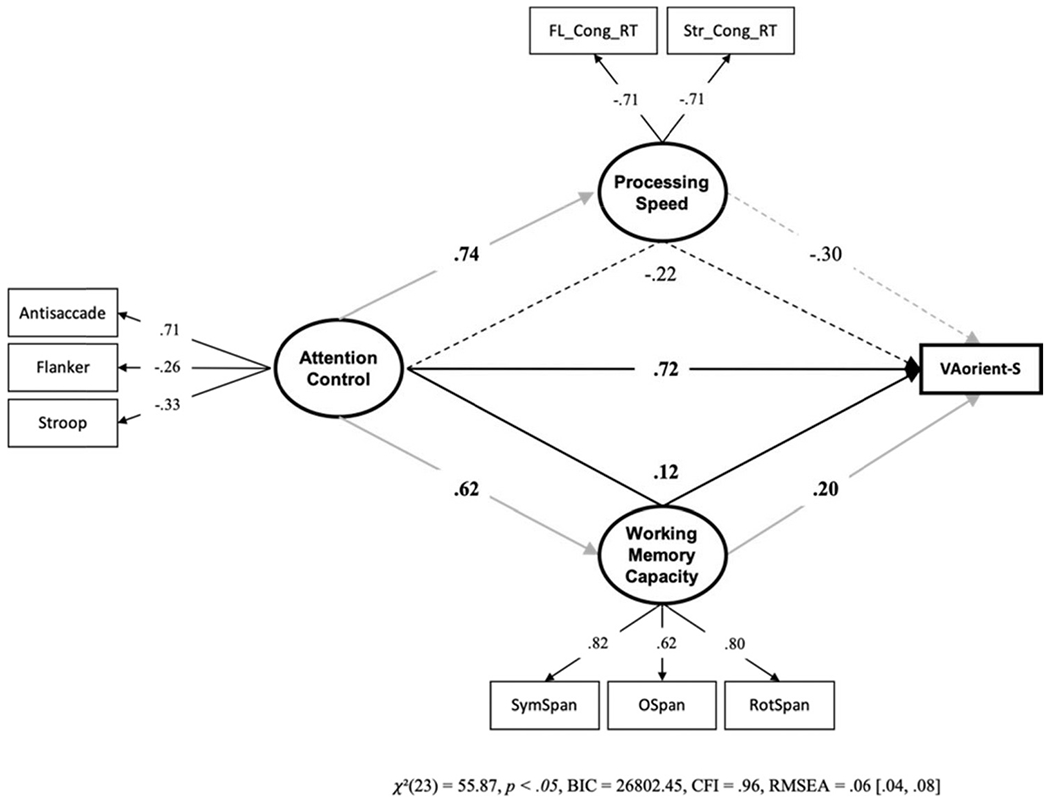

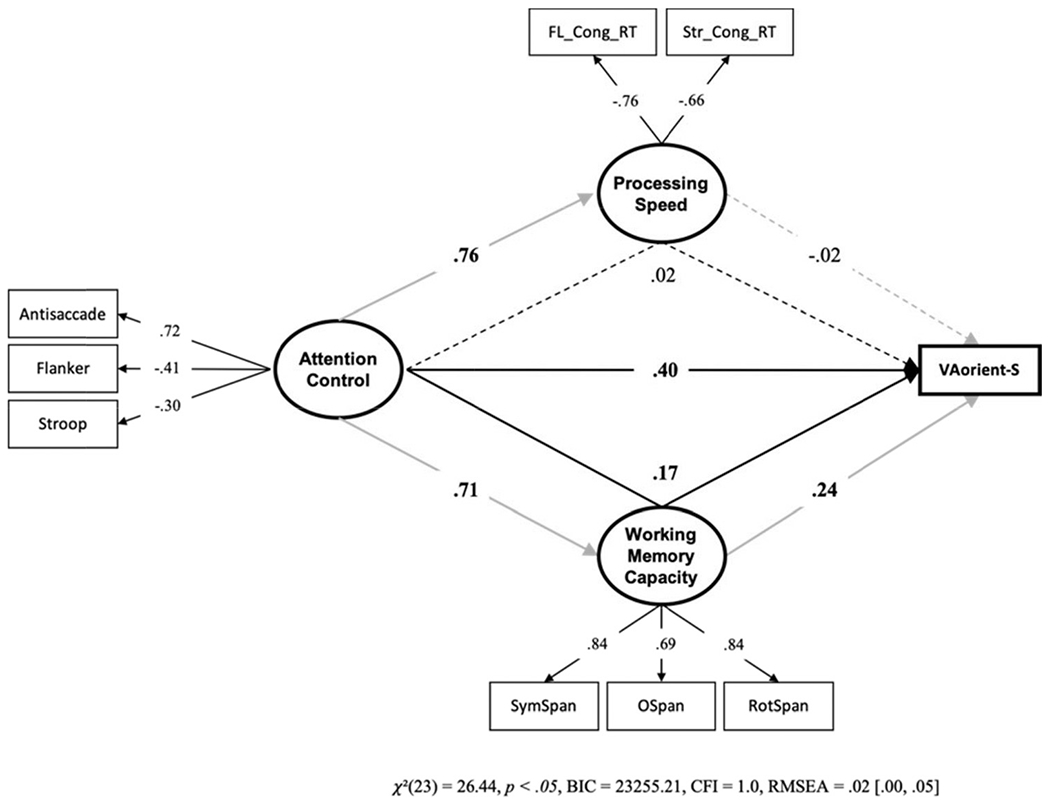

We conducted a final structural equation model to rule out processing speed as a potential confounding factor. We tested whether processing speed and working memory capacity can mediate the attention control – VA-orient-S relationship (see Figure 6).9 Working memory capacity only partially mediated the path from attention control to VA-orient-S; processing speed did not mediate the path from attention control to VA-orient-S. Therefore, the direct effect of attention control on individual differences in k capacity scores cannot be attributed to processing speed or working memory capacity.
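In a linear mediation model, the indirect effect through each mediator is the product of its two paths (a: attention control to mediator; b: mediator to VA-orient-S, controlling for attention control), and the total effect is the direct effect plus all indirect effects. This is a minimal sketch of that point-estimate arithmetic only, with hypothetical path values rather than the estimates in Figure 6; in lavaan such products are typically defined as new parameters with the `:=` operator, and their significance is usually assessed with delta-method or bootstrap standard errors, which this sketch does not compute.

```python
def decompose_effect(direct, mediator_paths):
    """Split a total effect into direct and indirect parts.

    direct: path from X to Y controlling for the mediators (c').
    mediator_paths: dict of mediator name -> (a, b), where a is X -> M and
    b is M -> Y controlling for X.
    """
    indirect = {name: a * b for name, (a, b) in mediator_paths.items()}
    total = direct + sum(indirect.values())
    return indirect, total


if __name__ == "__main__":
    # Hypothetical standardized paths, not the estimates in Figure 6.
    indirect, total = decompose_effect(
        direct=0.40,
        mediator_paths={"wmc": (0.60, 0.30), "speed": (0.20, 0.05)},
    )
    print({k: round(v, 3) for k, v in indirect.items()}, round(total, 3))
    # {'wmc': 0.18, 'speed': 0.01} 0.59
```

Partial mediation corresponds to a nonzero indirect effect alongside a direct effect that remains reliably different from zero.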

Figure 6. Structural Equation Model With Processing Speed and Working Memory Capacity Mediating the Attention Control – VA-Orient-S Relationship.

Note. FL_Cong_RT = mean reaction time on congruent trials in the Flanker task; Str_Cong_RT = mean reaction time on congruent trials in the Stroop task. We multiplied the FL_Cong_RT and Str_Cong_RT values by −1 to reflect shorter reaction times as higher processing speed. To make this evident in the figure, the loadings onto the processing speed factor are shown to be negative. Dotted lines represent paths that were not statistically significant, p > .05. VA = visual arrays. Bold numbers indicate significant values based on p < .05. The indirect effect through working memory capacity, but not processing speed, was statistically significant.

In summary, the exploratory analysis revealed that the selective visual arrays task loaded more strongly with measures of attention control than with measures of working memory capacity, and the structural equation model revealed that only attention control, not working memory capacity, uniquely predicted variance in VA-orient-S. Likewise, after controlling for working memory capacity, VA-orient-S predicted additional variance in attention control. Finally, processing speed was not able to account for the attention control – VA-orient-S relationship. Overall, the results from Data Set 1 suggest that the VA-orient-S task shares substantial variance with attention control independently of working memory capacity. These findings are consistent with studies by Fukuda and Vogel (2009, 2011) showing an important role for attention control processes in visual arrays performance.

Data Set 2

The tasks used in Data Set 1 and Data Set 2 were very similar. There were some differences in the number of trials in the flanker and Stroop tasks. Also, the Stroop task in Data Set 2 included neutral trials (though they were excluded from the present analysis). Other than that, there were no major differences in the administration of the tasks. In Data Set 2 there were a total of 342 subjects with no more than 7% missing values for any one task. These data were collected from 2015–2017 and are associated with the following publications: Draheim et al. (2018) and Tsukahara et al. (2016). The same set of analyses was conducted for these data as for Data Set 1.

Exploratory Factor Analysis

We conducted an exploratory factor analysis using principal axis factoring with two factors and an oblimin rotation (Table 2). Two factors were specified because the scree plot and parallel analysis suggested two factors, although only one factor had an eigenvalue greater than 1. The OSpan, SymSpan, and RotSpan loaded most strongly onto the first factor (a working memory capacity factor). The antisaccade and flanker both loaded most strongly onto the second factor (an attention control factor). The Stroop task loaded poorly on both factors (absolute loadings under .20). The VA-orient-S, meanwhile, loaded very similarly on both factors. The two factors were moderately correlated, r = .6. The exploratory factor analysis suggests that the VA-orient-S task shares considerable variance with putative measures of attention control independent of working memory capacity, but that it relates to both constructs to a similar degree.

Table 2.

Data Set 2 – Exploratory Factor Analysis With Oblimin Rotation

| Variable | Factor 1 | Factor 2 |
|---|---|---|
| OSpan | 0.71 | −0.03 |
| SymSpan | 0.84 | −0.03 |
| RotSpan | 0.80 | 0.06 |
| VA-orient-S | 0.31 | 0.33 |
| Antisaccade | −0.01 | 0.78 |
| Flanker | −0.04 | −0.37 |
| Stroop | −0.16 | −0.13 |

Note. VA = visual arrays.

Structural Equation Models

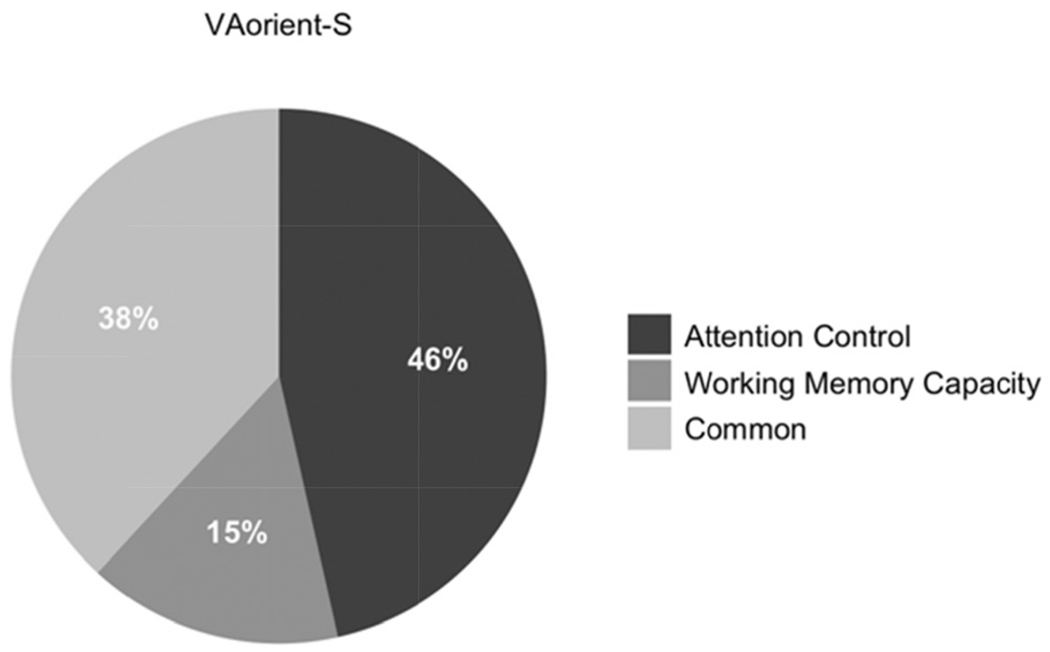

We conducted a structural equation model with working memory capacity and attention control predicting k capacity scores on the VA-orient-S task (see Figure 7). Only attention control uniquely predicted variance in the VA-orient-S task. We compared this model to a “null” model in which the working memory capacity – VA-orient-S path was set to zero. The “null” model was 6.07 times more likely; BF01 = 6.07, P(H0 | Data) = .86. However, as in Data Set 1, the predictive paths did not reliably differ and a model where both paths were constrained to equality was preferred to the freely estimated model, BF01 = 13.79, P(H0 | Data) = .93. Comparing the “null” and “equal” models, the latter was slightly preferred, BF10 = 2.26, P(H1 | Data) = .70. Figure 8 visualizes the relative contributions of working memory capacity and attention control to the 34% of explained variance in VA-orient-S.

Figure 7. Structural Equation Model From Data Set 2 With the Unique Relationships of Working Memory Capacity and Attention Control to VA-Orient-S.

Note. VA = visual arrays. Bold numbers indicate significant values based on p < .05.

Figure 8. Pie Chart Representing the Contributions Uniquely From Working Memory Capacity/Attention Control and Common Variance Between the Two in the 34% of Explained Variance in VA-Orient-S.

Note. VA = visual arrays.

Next, we tested whether visual arrays capacity can predict attention control uniquely from working memory capacity. If variance in visual arrays reflects nothing more than working memory capacity, then visual arrays should not predict unique variance in attention control. However, the model (see Figure 9) shows that VA-orient-S uniquely predicts attention control above and beyond working memory capacity. Furthermore, the predictive factors could not be combined without loss of fit, and the depicted model was strongly preferred, BF10 = 25.81, P(H1 | Data) = .96. This is further evidence that individual differences in VA-orient-S performance represent more than just the number of items stored in visual working memory.

Figure 9. Structural Equation Model From Data Set 2 With the Unique Relationships of Visual Arrays and Working Memory Capacity to Attention Control.

Note. VA = visual arrays. Bold numbers indicate significant values based on p < .05.

We then conducted a model with visual arrays and attention control uniquely predicting working memory capacity. If storage capacity, independent of attention control, is reflected in visual arrays capacity score, then it would be expected to predict working memory capacity uniquely from attention control. The model (see Figure 10) suggests that this is not the case. The k capacity score on VA-orient-S did not predict working memory capacity above and beyond attention control. Furthermore, a “null” model where the predictive visual arrays path was set to zero was preferred to the freely estimated model, BF01 = 6.06, P(H0 | Data) = .86.

Figure 10. Structural Equation Model From Data Set 2 With the Unique Relationships of Visual Arrays and Attention Control to Working Memory Capacity.

Note. VA = visual arrays. Bold numbers indicate significant values based on p < .05.

Additionally, and contrary to Data Set 1, the freely estimated model was slightly preferred to a model with the predictive paths constrained to equality, BF10 = 2.09, P(H1 | Data) = .68. Thus, for Data Set 2, estimating the predictive path from visual arrays to working memory capacity was statistically superfluous. Put differently, attention control accounted for the relationship between selective visual arrays and working memory capacity in this model.

Finally, we estimated a structural equation model to rule out processing speed as a potential confounding factor.10 We tested whether processing speed and working memory capacity can mediate the attention control – VA-orient-S relationship (see Figure 11). Working memory capacity only partially mediated the path from attention control to VA-orient-S, and processing speed did not mediate it. Therefore, the direct effect of attention control on individual differences in k capacity scores cannot be attributed to processing speed or working memory capacity.

Figure 11. Structural Equation Model With Processing Speed and Working Memory Capacity Mediating the Attention Control – VA-Orient-S Relationship.

Note. FL_Cong_RT = mean reaction time on congruent trials in the Flanker task; Str_Cong_RT = mean reaction time on congruent trials in the Stroop task. We multiplied the FL_Cong_RT and Str_Cong_RT values by −1 to reflect shorter reaction times as higher processing speed. To make this evident in the figure, the loadings onto the processing speed factor are shown to be negative. Dotted lines represent paths that were not statistically significant, p > .05. VA = visual arrays. Bold numbers indicate significant values based on p < .05. The indirect effect through working memory capacity, but not processing speed, was statistically significant.

Overall, the results replicated those of Data Set 1, although the unique relationship of attention control was numerically weaker than in Data Set 1. Converging evidence from all four models suggested that VA-orient-S reflects attention control to a greater degree than storage capacity. The exploratory analysis was not as clean as in Data Set 1, but it still suggested that VA-orient-S tended to load with measures of attention control (no memory storage demand) just as much as with working memory capacity tasks (high memory storage demand). The structural equation models showed that attention control, but not working memory capacity, uniquely predicted variance in k capacity scores on the VA-orient-S task. The total explained variance in VA-orient-S was lower than in Data Set 1 (34% vs. 41%), and a larger share of the explained variance was common to attention control and working memory capacity. On the one hand, our theoretical position attributes much of the variability in working memory capacity to individual differences in attention control (Engle et al., 1999). On the other hand, we hesitate to endorse this explanation for the pattern of results in Data Set 2 because the current data do not permit a stringent test, and potential disconfirmation, of that hypothesis. Finally, processing speed was not able to account for the attention control – visual arrays relationship.

Data Set 3

The tasks in Data Set 3 are more diverse than in Data Sets 1 and 2. There were three different versions of the visual arrays task: VA-color, VA-orient, and VA-orient-S. There were also two additional measures of working memory capacity, the Running Spatial and Running Digit Spans. The attention control tasks were the same as those used in Data Set 2. Because there were additional visual arrays tasks, we were able to test additional models to better understand what factors might affect the degree to which visual arrays performance reflects visual working memory or attention control. In Data Set 3, there were 568 subjects and no more than 2% missing values on any task. These data were collected from 2011–2013 and are associated with the following publications: Draheim et al. (2016, 2018), Martin et al. (2019), and Shipstead et al. (2016).

Exploratory Factor Analysis

We conducted an exploratory factor analysis using principal axis factoring with two factors and an oblimin rotation (Table 3). Only one factor had an eigenvalue greater than 1, the scree plot suggested two factors, and parallel analysis suggested three factors. When a three-factor model was specified, only the Stroop task loaded onto the third factor, so we settled on a two-factor model. The OSpan, SymSpan, RotSpan, RunSpatial, and RunDigit loaded most strongly onto the first factor (a working memory capacity factor). The visual arrays tasks all loaded strongly onto the second factor. The antisaccade loaded about equally on both factors. The flanker and Stroop did not load well onto either factor (absolute loadings under .30). The two factors were highly correlated, r = .8.

Table 3.

Data Set 3 – Exploratory Factor Analysis With Oblimin Rotation

| Variable | Factor 1 | Factor 2 |
|---|---|---|
| OSpan | 0.80 | −0.11 |
| SymSpan | 0.72 | 0.06 |
| RotSpan | 0.76 | 0.02 |
| RunSpatial | 0.49 | 0.37 |
| RunDigit | 0.57 | 0.11 |
| VA-color | 0.06 | 0.72 |
| VA-orient | −0.08 | 0.81 |
| VA-orient-S | 0.11 | 0.69 |
| Antisaccade | 0.33 | 0.31 |
| Flanker | −0.07 | −0.18 |
| Stroop | −0.28 | −0.04 |

Note. VA = visual arrays.

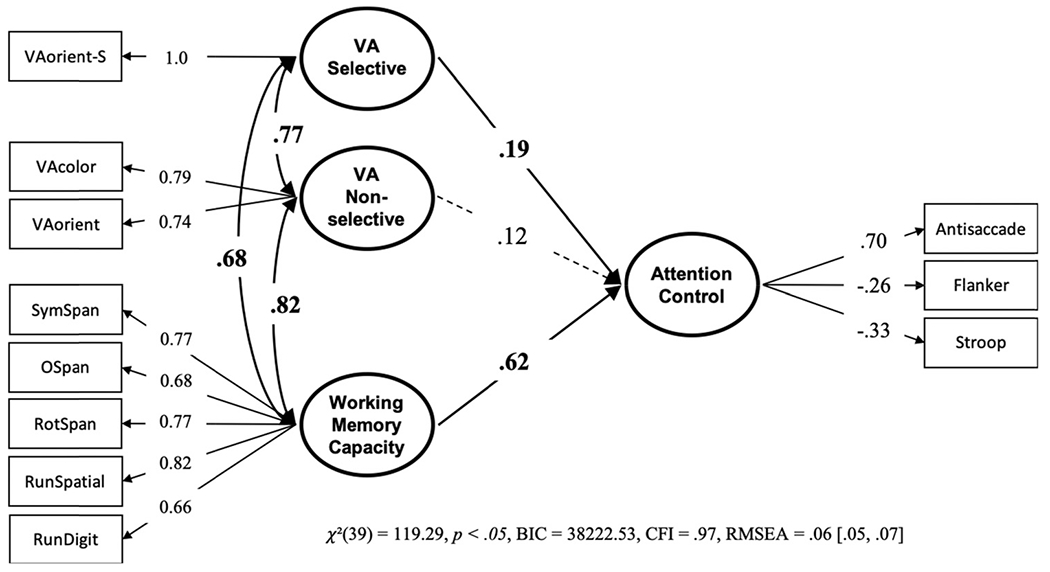

Structural Equation Models

We estimated a structural equation model with working memory capacity and attention control predicting k capacity scores on each visual arrays task (see Figure 12). The nonselective visual arrays tasks, VA-color and VA-orient, were uniquely predicted only by working memory capacity. The selective visual arrays task, VA-orient-S, was uniquely predicted only by attention control. We tested this model against a “null” model in which all the nonsignificant paths were set to zero. The “null” model was more than 1,100 times more likely than the freely estimated model, BF01 = 1115.78, P(H0 | Data) > .99. We also tested a model in which the predictive paths from attention control, and separately those from working memory capacity, were constrained to equality across the three visual arrays tasks. This model was also strongly preferred to the freely estimated model, BF01 = 3596.19, P(H0 | Data) > .99. Thus, the magnitude of prediction from working memory capacity versus attention control cannot be said to vary across task type.

Figure 12. Structural Equation Model From Data Set 3 With the Unique Relationships of Working Memory Capacity and Attention Control to Each Visual Arrays Task.

Note. VA = visual arrays. Bold numbers indicate significant values based on p < .05.

With the important caveat that the magnitudes of the working memory capacity and attention control predictive paths do not reliably differ for selective versus nonselective visual arrays, the pattern of incremental validity favors the interpretation that attention control adds prediction over and above working memory capacity only for selective visual arrays, not for nonselective visual arrays. For nonselective visual arrays, the pattern is reversed. This is not to say that attention control plays no role in nonselective visual arrays tasks, nor that individual differences in working memory capacity are orthogonal to selective visual arrays performance. There is, after all, a considerable amount of common variance explaining performance in each task type. However, the pattern is consistent with the notion that having a selective component in the visual arrays task increases attention control demands. The unique and common contributions of working memory capacity and attention control relative to the amount of explained variance in each visual arrays task are illustrated in Figure 13. In the model, working memory capacity and attention control explained 45% of variance in the VA-color task, 37% of variance in the VA-orient task, and 52% of variance in the VA-orient-S task.
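The text does not spell out how the unique and common portions shown in the pie charts were computed. One standard way to partition R² for two correlated predictors is commonality analysis, in which each predictor's unique contribution is the drop in R² when it is removed, b²(1 − r12²) for standardized paths, and the common portion is what remains. This is a minimal sketch under that assumption, with hypothetical path values; note that the common portion can be negative when suppression is present.

```python
def commonality_two_predictors(b1, b2, r12):
    """Partition R^2 from two correlated standardized predictors.

    b1, b2: standardized regression (path) coefficients.
    r12: correlation between the two predictors.
    """
    r_squared = b1**2 + b2**2 + 2 * b1 * b2 * r12
    unique1 = b1**2 * (1 - r12**2)  # R^2 lost if predictor 1 were dropped
    unique2 = b2**2 * (1 - r12**2)  # R^2 lost if predictor 2 were dropped
    common = r_squared - unique1 - unique2
    return {"r_squared": r_squared, "unique1": unique1, "unique2": unique2, "common": common}


if __name__ == "__main__":
    # Hypothetical values: b_WMC = .30, b_AC = .40, latent correlation = .60.
    parts = commonality_two_predictors(0.30, 0.40, 0.60)
    print({k: round(v, 4) for k, v in parts.items()})
```

With these hypothetical inputs, R² is .394, of which .0576 is unique to the first predictor, .1024 is unique to the second, and .234 is common, illustrating how highly correlated predictors can leave most of the explained variance in the common portion.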

Figure 13. Pie Chart Representing the Contributions Uniquely From Working Memory Capacity/Attention Control and Common Variance Between the Two.

Note. The model explained 45% of variance in the VA-color task, 37% of variance in the VA-orient task, and 52% of variance in the VA-orient-S task. VA = visual arrays.

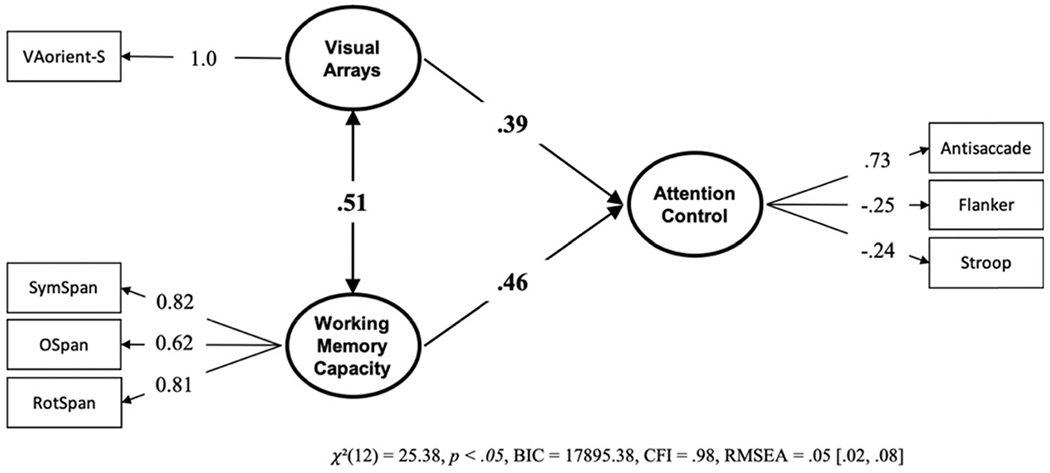

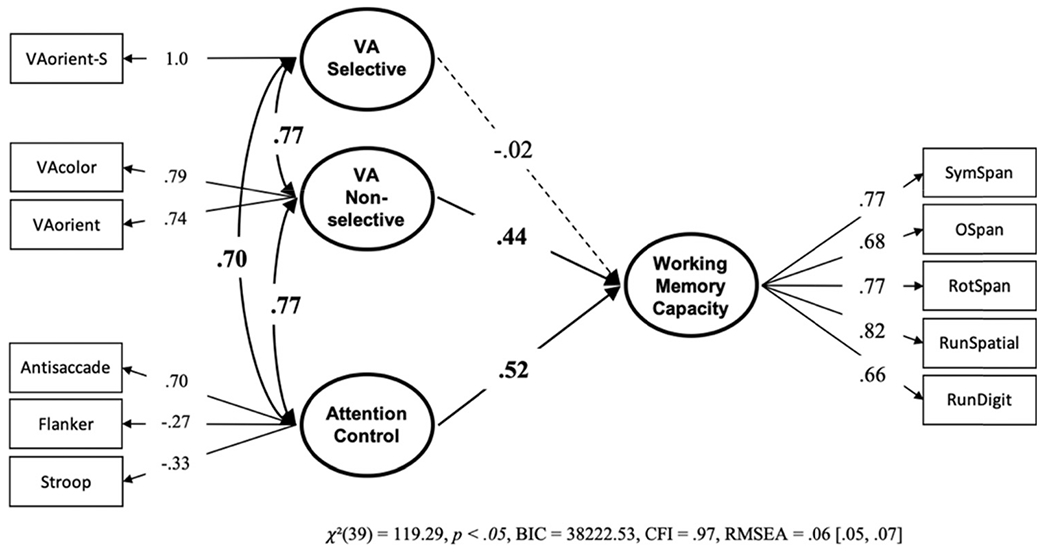

As discussed in the Introduction, there may be differences between versions of the visual arrays task. The VA-color and VA-orient do not require selecting or filtering out any items in the display, whereas the VA-color-S and VA-orient-S do. Vogel and colleagues have shown that the ability to effectively filter out distractor items from target items in visual arrays tasks differentiates high-capacity and low-capacity individuals (Vogel & Machizawa, 2004; Vogel, McCollough, et al., 2005). To test for a differentiation between the selective and nonselective visual arrays tasks, we estimated a structural equation model with VA-orient-S loading onto its own VA Selective factor and VA-color and VA-orient loading onto a VA Nonselective factor. The results of the model (see Figure 14) showed that working memory capacity and the VA Selective factor uniquely predicted attention control, but the VA Nonselective factor did not, although the path values do not reliably differ, BF01 = 22.43, P(H0 | Data) = .96. This suggests that performance on the selective visual arrays task predicts attention control over and above the nonselective versions.

Figure 14. Structural Equation Model From Data Set 3 Testing the Unique Relationships of VA Selective, VA Nonselective, and Working Memory Capacity to Attention Control.

Note. VA = visual arrays. Bold numbers indicate significant values based on p < .05.

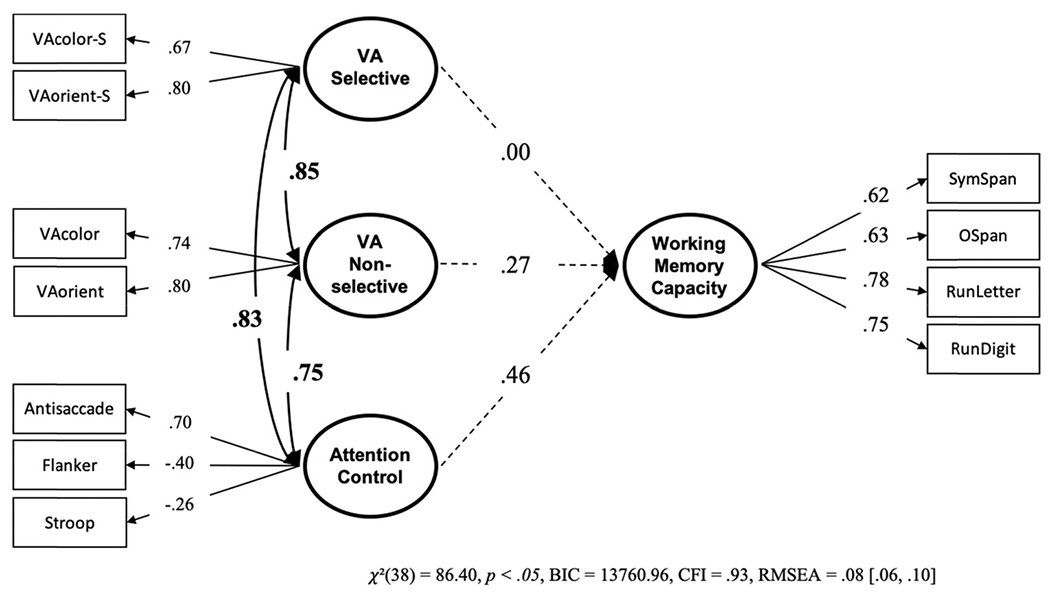

Next, we estimated a model with visual arrays and attention control uniquely predicting working memory capacity. If visual arrays capacity scores reflect storage capacity independent of attention control, then they should predict working memory capacity over and above attention control. Again, we split the visual arrays task into selective and nonselective factors. The model showed that only attention control and nonselective visual arrays were uniquely predictive of working memory capacity (see Figure 15). Setting the nonsignificant path from selective visual arrays to zero did not harm model fit, BF01 = 22.83, P(H0 | Data) = .96. We also tested a model in which the predictive paths for the two visual arrays factors were constrained to be equal. Although this constrained model was slightly preferred over the freely estimated model, BF01 = 2.66, P(H0 | Data) = .73, adding this constraint did decrease model fit according to a chi-square difference test, Δχ²(1) = 4.39, p = .04.¹¹
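The chi-square difference test compares nested models: the constrained model's χ² minus the free model's χ², evaluated on degrees of freedom equal to the number of added constraints. With a single constraint, the p-value needs only the complementary error function, as in the sketch below. The function name is ours; the statistic is the Δχ²(1) = 4.39 reported above.

```python
import math


def chi2_sf_df1(x: float) -> float:
    """Upper-tail p-value of a chi-square statistic with 1 degree of freedom.

    A chi-square(1) variable is the square of a standard normal Z, so
    P(X > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    """
    return math.erfc(math.sqrt(x / 2.0))


# Difference test for one added equality constraint:
# delta_chi2 = chi2(constrained) - chi2(free), with df = 1.
delta_chi2 = 4.39
p = chi2_sf_df1(delta_chi2)
print(f"p = {p:.3f}")
```

The sketch gives p of about .036, consistent with the reported p = .04. It also shows why the two criteria can disagree: the chi-square test treats any reliable loss of fit as evidence against the constraint, whereas a Bayes factor additionally rewards the constrained model for its parsimony.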

Figure 15. Structural Equation Model From Data Set 3 Testing the Unique Relationships of VA Selective, VA Nonselective, and Attention Control to Working Memory Capacity.

Note. VA = visual arrays. Bold numbers indicate significant values based on p < .05.

Data Set 4

Data Set 4 included a total of 215 subjects, each with less than 3% missing data for any task. These data were collected between 2009 and 2010 and are related to the following publications: Shipstead et al. (2012, 2014, 2015).

The tasks in Data Set 4 are similar to those in Data Set 3. The main difference is that there was an additional visual arrays task, for a total of four versions of the task: VA-color, VA-orient, VA-color-S, and VA-orient-S. This is the only data set with more than one task of each visual arrays type (selective and nonselective), which allows a stronger test of the differentiation between these versions. However, the sample size is relatively small. Data Set 4 also does not contain the Rotation Span or Running Spatial Span, but it does include the Running Letter Span.

Exploratory Factor Analysis

In an exploratory factor analysis (Table 4), three factors had an eigenvalue greater than 1, the scree plot suggested two factors, and parallel analysis suggested three factors. The visual arrays tasks and antisaccade loaded primarily onto the first factor. The RunLetter and RunDigit loaded onto their own factor (the second factor), and the OSpan, SymSpan, and Stroop loaded together on the third. The flanker did not load well onto any factor, with every loading below .30 in absolute value. The factors correlated between .4 and .5 with each other. Although the exploratory factor analysis suggests that the running span and complex span tasks can be treated as separate factors, for the sake of comparison with the previous data sets we estimated further models combining these tasks onto a single working memory capacity factor. We also ran the analyses with them separated, and the overall interpretation of the models remained the same.

Table 4.

Data Set 4 – Exploratory Factor Analysis With Oblimin Rotation

| Variable | F1 | F2 | F3 |
|---|---|---|---|
| OSpan | −0.08 | 0.31 | 0.52 |
| SymSpan | 0.20 | 0.04 | 0.64 |
| RunLetter | −0.05 | 0.69 | 0.23 |
| RunDigit | 0.13 | 0.82 | −0.08 |
| VA-color | 0.64 | 0.04 | 0.06 |
| VA-orient | 0.72 | 0.05 | −0.02 |
| VA-color-S | 0.67 | −0.02 | −0.01 |
| VA-orient-S | 0.74 | 0.04 | −0.01 |
| Antisaccade | 0.54 | 0.01 | 0.13 |
| Flanker | −0.29 | 0.10 | −0.21 |
| Stroop | −0.16 | 0.22 | −0.38 |

Note. VA = visual arrays.
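The verbal summary of Table 4 applies a simple rule: assign each task to the factor with its largest absolute loading, and treat it as not loading well anywhere if that loading falls below .30. The sketch below applies this rule to the transcribed loadings; the .30 cutoff comes from the text, while the function is only an illustration.

```python
# Oblimin-rotated loadings transcribed from Table 4, in the order (F1, F2, F3).
LOADINGS = {
    "OSpan": (-0.08, 0.31, 0.52),
    "SymSpan": (0.20, 0.04, 0.64),
    "RunLetter": (-0.05, 0.69, 0.23),
    "RunDigit": (0.13, 0.82, -0.08),
    "VA-color": (0.64, 0.04, 0.06),
    "VA-orient": (0.72, 0.05, -0.02),
    "VA-color-S": (0.67, -0.02, -0.01),
    "VA-orient-S": (0.74, 0.04, -0.01),
    "Antisaccade": (0.54, 0.01, 0.13),
    "Flanker": (-0.29, 0.10, -0.21),
    "Stroop": (-0.16, 0.22, -0.38),
}
LABELS = ("F1", "F2", "F3")


def primary_factor(loadings, threshold=0.30):
    """Return the label of the factor with the largest absolute loading,
    or None if no loading reaches the threshold in absolute value."""
    best = max(range(len(loadings)), key=lambda i: abs(loadings[i]))
    if abs(loadings[best]) < threshold:
        return None
    return LABELS[best]


assignments = {task: primary_factor(row) for task, row in LOADINGS.items()}
for task, factor in assignments.items():
    print(f"{task:12s} -> {factor}")
```

Under this rule the flanker task is unassigned (largest absolute loading .29), and the Stroop task joins OSpan and SymSpan on the third factor through its negative loading. OSpan also has a secondary loading of .31 on the second factor, which the rule ignores.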

Structural Equation Models

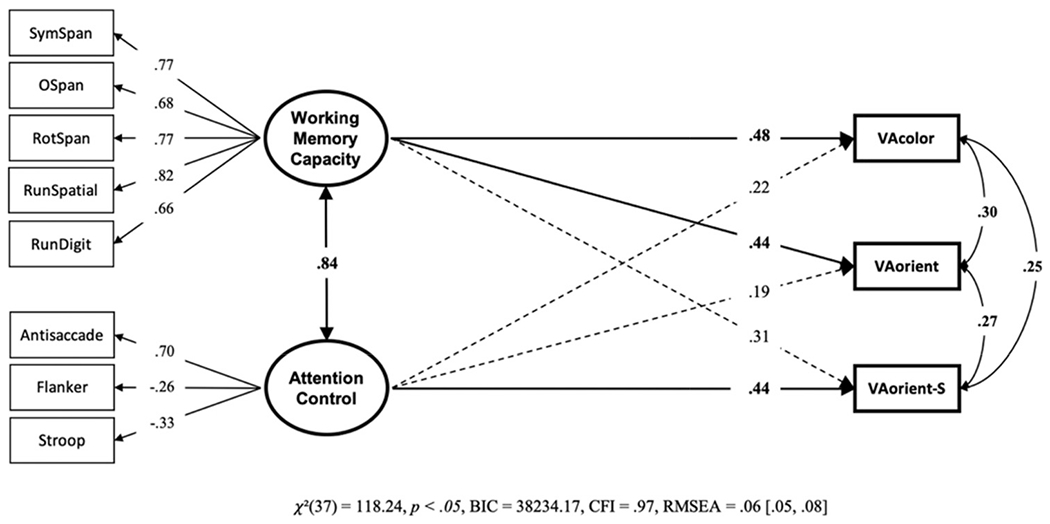

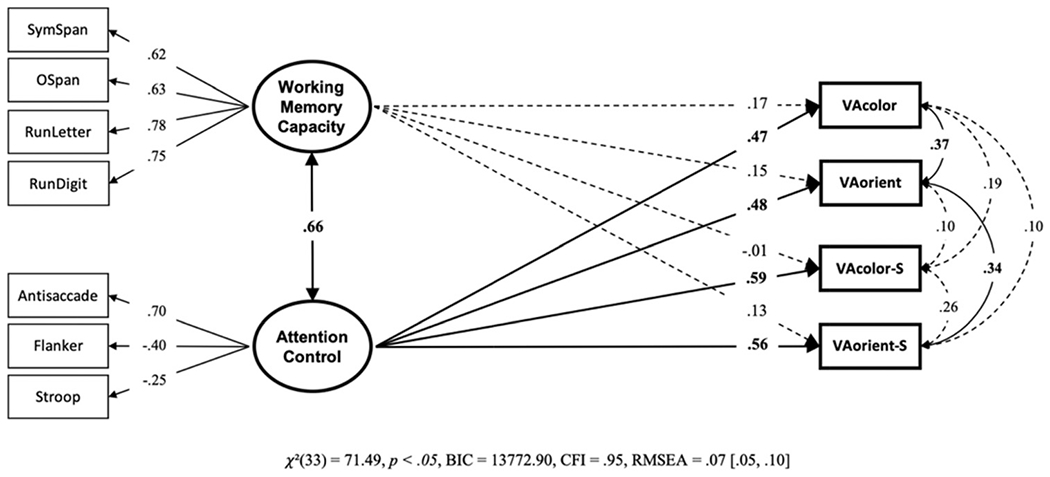

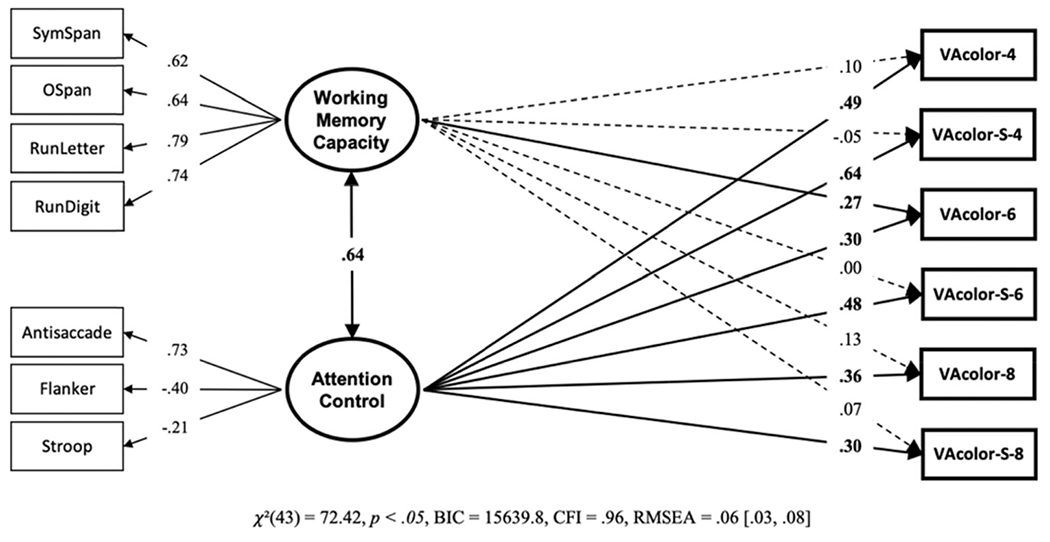

We estimated a structural equation model with working memory capacity and attention control predicting k capacity scores on each visual array task (see Figure 16). Only attention control, not working memory capacity, uniquely predicted individual differences in visual arrays k capacity scores, regardless of the type of visual arrays task. We compared this model against a “null” model in which all the nonsignificant working memory capacity paths were set to zero; the “null” model was strongly preferred, BF01 = 13,889.01, P(H0 | Data) > .99. However, as with Data Set 3, constraining the paths from working memory capacity and attention control to equality for each visual array task did not worsen model fit, BF01 = 2,098.20, P(H0 | Data) > .99. Of the two constrained models, though, the “null” model was preferred, BF01 = 6.62, P(H0 | Data) = .87. The unique and common contributions of working memory capacity and attention control relative to the amount of explained variance in each visual array task are illustrated in Figure 17. In the model, working memory capacity and attention control explained 35% of variance in the VA-color task, 34% of variance in the VA-orient task, 35% of variance in the VA-color-S task, and 42% of variance in the VA-orient-S task. The model (see Figure 16) also accounts for most of the shared variance among the visual arrays tasks, as indicated by the residual correlations on the far right of the model.
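The models in Figure 16 use k capacity scores, but the formula is not given here. One widely used estimate for single-probe change detection is Cowan's k = N × (H − FA), where N is the set size, H the hit rate, and FA the false alarm rate. The sketch below assumes that formula and, as a further hypothetical choice, averages k across set sizes; the rates are invented for illustration.

```python
def cowan_k(set_size: int, hit_rate: float, false_alarm_rate: float) -> float:
    """Cowan's k for single-probe change detection: k = N * (H - FA)."""
    return set_size * (hit_rate - false_alarm_rate)


# Hypothetical (hit rate, false alarm rate) pairs for set sizes 4, 6, and 8.
rates = {4: (0.85, 0.10), 6: (0.75, 0.15), 8: (0.65, 0.20)}

# k at each set size, then a simple mean across set sizes (a hypothetical
# scoring choice; other studies use the maximum or a single set size).
k_by_size = {n: cowan_k(n, h, fa) for n, (h, fa) in rates.items()}
mean_k = sum(k_by_size.values()) / len(k_by_size)
print(k_by_size, round(mean_k, 2))
```

If a whole-display probe were used instead, a different correction (such as Pashler's formula) would usually be preferred, so this sketch should not be read as the scoring used for these data.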

Figure 16. Structural Equation Model From Data Set 4 With the Unique Relationships of Working Memory Capacity and Attention Control to Each Visual Array Task.

Note. VA = visual arrays. Bold numbers indicate significant values based on p < .05.

Figure 17. Pie Chart Representing the Unique Contributions From Working Memory Capacity/Attention Control and Common Variance Between the Two.

Note. The model explained 35% of variance in the VA-color task, 34% of variance in the VA-orient task, 35% of variance in the VA-color-S task, and 42% of variance in the VA-orient-S task. VA = visual arrays.

The VA-color and VA-color-S both contained trials with set-sizes four, six, and eight. This allowed us to compare the effects of set-size and of the selective component of the task. We estimated a structural equation model with the set-size and selective components separated out as VA-color-4, VA-color-6, VA-color-8, VA-color-S-4, VA-color-S-6, and VA-color-S-8 (see Figure 18). Overall, the model does not suggest much of an effect of set-size; in fact, working memory capacity and attention control are slightly more related to visual arrays performance at smaller set-sizes, contrary to a purely storage account of visual arrays. Numerically, the largest path, from attention control to visual arrays, occurred at set-size four with a selective component. Although this interpretation is admittedly post hoc, it makes sense from an attention control view of visual arrays. Successful filtering of nonrelevant items at a set-size of four (eight total items in the display) can, at most, reduce the load from eight items to four. Given that performance tends to peak at around four items, successful filtering of nonrelevant items can lead to optimal performance. Compare this to successful filtering of nonrelevant items at a set-size of eight (16 total items in the display). Successful filtering would, at most, reduce the load from 16 items to eight. While this would likely improve performance, participants would still face a non-optimal load regardless of whether the task has a selective component. Therefore, although attention control would be involved in performance at both lower and higher set-sizes, its impact on performance would be greatest at lower set-sizes. However, this pattern would need to be studied more systematically in future studies before any strong conclusions can be drawn.
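The load argument above can be made concrete. The sketch assumes, as in the set-size-four example, that each selective display contains as many distractors as targets, and it treats four items as a rough capacity limit, following the statement that performance peaks at around four items. Both are simplifications, not a model of the task.

```python
CAPACITY = 4  # rough capacity limit, taken from "performance tends to peak at around four items"


def filtering_effect(set_size: int, capacity: int = CAPACITY):
    """Memory load with and without perfect filtering on a selective trial.

    Assumes the display holds `set_size` targets plus an equal number of
    distractors (e.g., eight items in total at set-size four).
    Returns (load_unfiltered, load_filtered, excess_unfiltered, excess_filtered),
    where "excess" is the number of items beyond the assumed capacity.
    """
    load_unfiltered = 2 * set_size  # targets plus distractors all encoded
    load_filtered = set_size        # distractors perfectly ignored
    excess_unfiltered = max(0, load_unfiltered - capacity)
    excess_filtered = max(0, load_filtered - capacity)
    return load_unfiltered, load_filtered, excess_unfiltered, excess_filtered


for n in (4, 6, 8):
    print(n, filtering_effect(n))
```

Under these assumptions, perfect filtering brings the load within capacity only at set-size four; at set-sizes six and eight, two and four items, respectively, still exceed it.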

Figure 18. Structural Equation Model From Data Set 4 With the Unique Relationships of Working Memory Capacity and Attention Control to Visual Arrays Broken Down Into Set-Sizes and Selective Components.

Note. VA = visual arrays. Bold numbers indicate significant values based on p < .05.

Next, we tested the same structural equation models as in Data Set 3, in which we separated out selective and nonselective factors. In this data set, however, we had two task indicators on the selective factor instead of just one. The model (see Figure 19) showed that only the selective visual arrays latent factor had unique variance predictive of attention control. Meanwhile, the nonselective visual arrays and working memory capacity factors were not uniquely related to attention control. Setting all nonsignificant paths to zero did not decrease model fit, and this model was preferred to the freely estimated one, BF01 = 40.61, P(H0 | Data) = .98. Further, although constraining the paths from the visual arrays factors to equality also did not harm model fit, BF01 = 8.67, P(H0 | Data) = .90, the “null” model was the preferred model, BF01 = 4.66, P(H0 | Data) = .82.
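The comparison between the two constrained models follows from their separate comparisons with the freely estimated model, because Bayes factors against a shared reference divide out. Assuming, as the text implies, that both reported Bayes factors use the same freely estimated model as the reference, the sketch recovers the Data Set 4 value of BF01 = 6.62 for the Figure 16 models from 13,889.01 and 2,098.20. Applied to the Figure 19 values, 40.61 / 8.67 is about 4.7, close to the reported 4.66.

```python
def bf_between(bf_a_vs_ref: float, bf_b_vs_ref: float) -> float:
    """Bayes factor of model A over model B, given each model's BF over a shared reference.

    Bayes factors are ratios of marginal likelihoods, so
    BF(A:B) = [p(D|A) / p(D|ref)] / [p(D|B) / p(D|ref)].
    """
    return bf_a_vs_ref / bf_b_vs_ref


# Data Set 4, Figure 16 comparisons against the freely estimated model:
# "null" model BF01 = 13,889.01; equality-constrained model BF01 = 2,098.20.
bf_null_vs_constrained = bf_between(13889.01, 2098.20)
print(f"{bf_null_vs_constrained:.2f}")
```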

Figure 19. Structural Equation Model From Data Set 4 Testing the Unique Relationships of VA Selective, VA Nonselective, and Working Memory Capacity to Attention Control.

Note. VA = visual arrays. Bold numbers indicate significant values based on p < .05.

In a model in which selective and nonselective visual arrays, along with attention control, predicted working memory capacity, neither set of visual arrays tasks uniquely predicted working memory capacity (see Figure 20). Note, however, the path values for attention control and VA nonselective (.46 and .27, respectively). The nonsignificance of these path values may reflect limited power, as this data set included only 215 subjects. This interpretation is supported by the fact that the path values in Data Set 4 are similar to those in Data Set 3, in which they were significant. This would support the conclusion that the nonselective visual arrays tasks do include storage capacity related variance that is independent of attention control, whereas the selective visual arrays tasks may not.¹²

Figure 20. Structural Equation Model From Data Set 4 Testing the Unique Relationships of VA Selective, VA Nonselective, and Attention Control to Working Memory Capacity.

Note. VA = visual arrays. Bold numbers indicate significant values based on p < .05.

Summary

We began this project as an empirical test of the theoretical nature of visual arrays tasks. Specifically, we tested whether:

visual arrays tasks are more closely related to storage-based measures of working memory capacity or to resistance-to-interference aspects of attention control, and

the nature of the visual arrays task (i.e., nonselective or selective) influences which constructs that type of visual arrays task primarily reflects.

To address these questions, we conducted a series of statistical tests across four independently collected data sets. The conservative interpretation of the overall pattern that emerged across all four data sets is that visual arrays tasks (as a collective) are, at the very least, not a pure measure of working memory capacity but are multiply determined by attention control and working memory capacity. The more liberal interpretation is that visual arrays tasks will reflect attention control more than working memory capacity when a selection component is included in the task. Our pattern of results suggests that whereas nonselective measures of visual arrays reflect some attention control properties in addition to the storage properties of working memory capacity, selective visual arrays tasks reflect the ability to control and manipulate attention more so than nonselective versions. Although the archival nature of these data limits the ability to make causal interpretations, the body of results as a whole, and its consistent replication, call into question the traditional interpretation of visual arrays tasks as measures of visual storage capacity. Results and implications are outlined below.

In all four data sets, the exploratory factor analyses showed that both nonselective and selective visual arrays tasks loaded onto a separate factor from established working memory capacity tasks, preferring to load with other attention control tasks, or at least with the antisaccade task (our most reliable measure of attention control). Model fit and parsimony were generally optimized when the selective visual arrays task was predicted by an attention control factor only (i.e., when the path from working memory capacity to visual arrays was set to zero), rather than by both attention control and working memory capacity, although these simultaneously estimated paths were not statistically different from one another. Furthermore, selective visual arrays tasks uniquely predicted attention control over and above working memory capacity and nonselective visual arrays. In contrast, nonselective visual arrays tasks uniquely predicted working memory capacity over and above attention control and selective visual arrays. These results suggest that variance related to attention control is at least as predictive of selective visual arrays performance as variance related to working memory capacity, if not more so. This is not necessarily the case for nonselective visual arrays tasks.

Discussion

We have already outlined areas of support for the role of attention control in selective and nonselective visual arrays, including domain generality, set-size and timing manipulations, and neurophysiological data. We do not revisit that evidence here; instead, we highlight a few additional interpretations that are particularly relevant to the overlap of storage and attention control processes in nonselective and selective visual arrays.

Generally speaking, we believe these results call into question the idea that visual arrays measures are strictly visuospatial storage tasks (Luck & Vogel, 1997). Our results echo other findings that initially broadened the interpretation of the nature of nonselective visual arrays tasks. Morey and Cowan (2004; see also Saults & Cowan, 2007), for example, found that when a high verbal load was added, nonselective visual arrays performance decreased. As they argued, maintaining the higher verbal load required central resources (see Baddeley & Hitch, 1974), and when these resources were occupied, less attention was available for the visual arrays task, as reflected in the performance decrements. In other words, visual arrays performance appeared to draw on central resources rather than on visual-specific working memory resources.