Abstract

Deep generative models of molecules have grown immensely in popularity. Trained on relevant datasets, these models are used to search through chemical space. The downstream utility of generative models for the inverse design of novel functional compounds depends on their ability to learn a training distribution of molecules. The simplest example is a language model that takes the form of a recurrent neural network and generates molecules using a string representation. Since their initial use, subsequent work has shown that language models are very capable; in particular, recent research has demonstrated their utility in the low-data regime. In this work, we investigate the capacity of simple language models to learn more complex distributions of molecules. For this purpose, we introduce several challenging generative modeling tasks by compiling larger, more complex distributions of molecules, and we evaluate the ability of language models on each task. The results demonstrate that language models are powerful generative models, capable of adeptly learning complex molecular distributions. Language models can accurately generate distributions of the highest-scoring penalized LogP molecules in ZINC15, multi-modal molecular distributions, and the largest molecules in PubChem. The results highlight the limitations of some of the most popular and recent graph generative models, many of which cannot scale to these molecular distributions.

Subject terms: Cheminformatics, Machine learning, Computational chemistry, Method development

Generative models for de novo molecular design attract enormous interest for exploring chemical space. Here the authors investigate the application of chemical language models to challenging modeling tasks, demonstrating their capability of learning complex molecular distributions.

Introduction

The efficient exploration of chemical space is one of the most important objectives in all of science, with numerous applications in therapeutics and materials discovery. However, exploration efforts have only probed a very small subset of the synthetically accessible chemical space1, therefore developing new tools is essential. The rise of artificial intelligence may provide the methods to unlock the mysteries of the chemical universe, given its success in other challenging scientific questions like protein structure prediction2.

Very recently, deep generative models have emerged as one of the most promising tools for this immense challenge3. These models are trained on relevant subsets of chemical space and can generate novel molecules similar to their training data. Their ability to learn the training distribution and generate valid, similar molecules is important for success in downstream applications like the inverse design of functional compounds.

The first models re-purposed recurrent neural networks (RNNs)4 to generate molecules as SMILES strings5. These language models can be used to generate molecular libraries for drug discovery6 or built into variational autoencoders (VAEs)3,7, where Bayesian optimization can be used to search through the model’s latent space for drug-like molecules. Other models generate molecules as graphs, either sequentially8–14 using graph neural networks15,16 or as whole molecules in one shot17–20. Two of the most popular, CGVAE and JTVAE, can be directly constrained to enforce valency restrictions. Other models generate molecules as point clouds in 3D space21.

Language models have been widely applied22 with researchers using them for ligand-based de novo design23. A few recent uses of language models include: targeting natural-product-inspired retinoid X receptor modulators24, designing liver X receptor agonists25, generating hit-like molecules from gene expression signatures26, designing drug analogs from fragments27, composing virtual quasi-biogenic compound libraries28 and many others. Additional studies have highlighted the ability of language models in the low-data regime29,30 with improved performance using data augmentation31.

Initially, the brittleness of the SMILES string representation meant that a single incorrect character could produce an invalid molecule. This problem has been largely solved with more robust molecular string representations32–35. Additionally, with improved training methods, deep generative models based on RNNs consistently generate a high proportion of valid molecules using SMILES6,9,36. One area that has not been studied is the ability of language models and other generative models to generate larger, more complex molecules, or to generate from chemical spaces with large ranges in size and structure. This matters because of the growing interest in larger, more complex molecules for therapeutics37.

To test the ability of language models, we formulate a series of challenging generative modeling tasks by constructing training sets of more complex molecules than exist in standard datasets3,36,38. In particular, we focus on the ability of language models to learn the distributional properties of the target datasets. We train language models on all tasks and also benchmark many graph generative models, although we focus on CGVAE and JTVAE. The results demonstrate that language models are powerful generative models and can learn complex molecular distributions better than most graph generative models.

Results

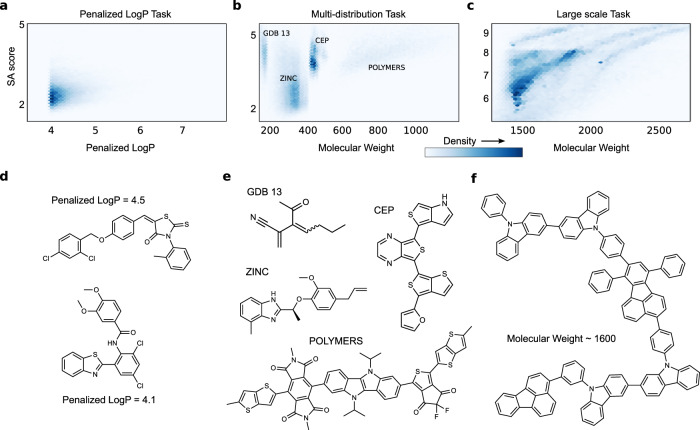

We define three tasks: generating (1) distributions of molecules with high penalized LogP3 scores (Fig. 1a, d), (2) multi-modal distributions of molecules (Fig. 1b, e), and (3) the largest molecules in PubChem (Fig. 1c, f). Each task is defined by learning to generate from the distribution of molecules in a dataset, so we build three datasets from relevant subsets of larger databases.

Fig. 1. The generative modeling tasks.

a–c The molecular distributions defining the three complex molecular generative modeling tasks. a The distribution of penalized LogP vs. SA score of the training data in the penalized LogP task. b The four modes of differently weighted molecules in the training data of the multi-distribution task. c The molecular weight training distribution of the large-scale task. d–f Examples of molecules from the training data in each of the generative modeling tasks. d The penalized LogP task. e The multi-distribution task. f The large-scale task.

Table 1 summarizes the number of atoms and rings per molecule in all three datasets, compared with two standard datasets, ZINC3 and MOSES36. All tasks involve larger molecules with more substructures and span a wider range of atom and ring counts per molecule.

Table 1.

Dataset statistics for all three tasks compared to standard datasets.

| Dataset | # Atoms (min) | # Atoms (mean) | # Atoms (max) | # Rings (min) | # Rings (mean) | # Rings (max) |
|---|---|---|---|---|---|---|
| ZINC | 6 | 23.2 | 38 | 0 | 2.8 | 9 |
| MOSES | 8 | 21.6 | 27 | 0 | 2.6 | 8 |
| LogP | 12 | 34.7 | 78 | 0 | 4.2 | 37 |
| Multi | 7 | 31.1 | 106 | 0 | 5.3 | 23 |
| Large | 101 | 140.1 | 891 | 0 | 11.2 | 399 |

For each task, we assess performance by plotting the distribution of the training molecules' properties alongside the distributions learned by the language models and graph models. We use a histogram for the training molecules and fit a Gaussian kernel density estimator (KDE) to it by tuning its bandwidth parameter. We then plot KDEs of molecular properties for all models using the same bandwidth parameter.
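As a minimal sketch of the shared-bandwidth comparison, assuming NumPy and a hand-written Gaussian kernel (the helper `fixed_bandwidth_kde`, the synthetic data and the bandwidth value are hypothetical, not the authors' code), the function below fixes the bandwidth in the units of the property itself, so every model's curve is smoothed identically.

```python
import numpy as np


def fixed_bandwidth_kde(samples, bandwidth):
    """Return a Gaussian KDE with an absolute (not data-relative) bandwidth.

    Hypothetical helper: one `bandwidth`, tuned on the training histogram,
    can be reused for the property values of every model.
    """
    samples = np.asarray(samples, dtype=float)
    norm = samples.size * bandwidth * np.sqrt(2.0 * np.pi)

    def density(x):
        x = np.atleast_1d(np.asarray(x, dtype=float))
        # Standardized distance between every evaluation point and every sample.
        z = (x[:, None] - samples[None, :]) / bandwidth
        return np.exp(-0.5 * z**2).sum(axis=1) / norm

    return density


# Example: smooth synthetic training and generated molecular weights with one bandwidth.
rng = np.random.default_rng(0)
train_mw = rng.normal(350.0, 40.0, size=2000)
generated_mw = rng.normal(360.0, 45.0, size=2000)
h = 10.0  # assumed value; in practice tuned against the training histogram
grid = np.linspace(150.0, 550.0, 801)
train_curve = fixed_bandwidth_kde(train_mw, h)(grid)
generated_curve = fixed_bandwidth_kde(generated_mw, h)(grid)
```

One design note: `scipy.stats.gaussian_kde` also accepts a scalar `bw_method`, but that scalar is a factor multiplying each dataset's own covariance, so passing the same scalar for every model would not give the same absolute bandwidth.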

From each model, we initially generate 10K (10,000) molecules, compute their properties, and use them to produce all plots and metrics. For a fair comparison of learned distributions, we use the same number of generated molecules from every model after removing duplicates and training molecules.
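The filtering and equal-size subsampling can be sketched as follows. The function name is hypothetical, and `canonicalize` is an assumed callable that returns a canonical string or None for an invalid one (for example, a wrapper returning None when RDKit's `Chem.MolFromSmiles` fails and `Chem.MolToSmiles` of the parsed molecule otherwise). Dropping invalid strings is also an assumption, since properties cannot be computed for them.

```python
import random


def equalize_samples(generated_by_model, training, canonicalize=lambda s: s, seed=0):
    """Deduplicate each model's samples, drop training molecules, then subsample
    every model to the same size.

    `canonicalize` maps a string to a canonical form, or returns None for an
    invalid string; the identity default is only a placeholder.
    """
    train_set = {c for c in map(canonicalize, training) if c is not None}
    cleaned = {}
    for name, samples in generated_by_model.items():
        seen, kept = set(), []
        for s in samples:
            c = canonicalize(s)
            if c is None or c in seen or c in train_set:
                continue  # invalid, duplicate, or copied from the training set
            seen.add(c)
            kept.append(c)
        cleaned[name] = kept
    # Every model is compared on the largest sample size they all share.
    common = min(len(kept) for kept in cleaned.values())
    rng = random.Random(seed)
    return {name: rng.sample(kept, common) for name, kept in cleaned.items()}
```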

To quantify each model's ability to learn its training distribution, we compute the Wasserstein distance between the property values of generated molecules and those of training molecules. We also compute the Wasserstein distance between different samples of training molecules to obtain an oracle baseline (TRAIN) against which the models can be compared.
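A minimal sketch of the TRAIN oracle, assuming that "different samples" means two disjoint random subsets of equal size (the paper does not say how the subsets are drawn). With equal sizes and uniform weights, the 1D Wasserstein distance reduces to the mean absolute difference between sorted values. Both helper names are hypothetical.

```python
import numpy as np


def w1_equal_size(a, b):
    """1D Wasserstein distance between two equally sized samples.

    With equal sizes and uniform weights, the optimal transport plan pairs the
    i-th smallest value of `a` with the i-th smallest value of `b`.
    """
    a = np.sort(np.asarray(a, dtype=float))
    b = np.sort(np.asarray(b, dtype=float))
    if a.shape != b.shape:
        raise ValueError("samples must have the same size")
    return float(np.mean(np.abs(a - b)))


def train_oracle(train_values, n, seed=0):
    """Distance between two disjoint random subsets of n training values each."""
    values = np.asarray(train_values, dtype=float)
    if 2 * n > values.size:
        raise ValueError("need at least 2 * n training values")
    order = np.random.default_rng(seed).permutation(values.size)
    return w1_equal_size(values[order[:n]], values[order[n:2 * n]])
```

Because both subsets come from the same distribution, the oracle value reflects only sampling noise, which is why it serves as a lower reference for the model rows.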

As molecular properties, we consider the quantitative estimate of drug-likeness (QED)39, synthetic accessibility score (SA)40, octanol–water partition coefficient (LogP)41, exact molecular weight (MW), Bertz complexity (BCT)42 and natural product likeness (NP)22. We also use standard metrics, namely validity, uniqueness and novelty, to assess each model’s ability to generate a diverse set of real molecules distinct from the training data.

Our main model is a chemical language model: a recurrent neural network with long short-term memory (LSTM)43 trained on either SMILES (SM-RNN) or SELFIES (SF-RNN). We also train two of the most popular deep graph generative models: the junction tree variational autoencoder (JTVAE)10 and the constrained graph variational autoencoder (CGVAE)9.
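The LSTM consumes a sequence of token indices. A minimal sketch of that preprocessing, assuming a character-level vocabulary for SMILES (in the spirit of char-rnn-style models) and one token per bracketed symbol for SELFIES; the special token names and helper functions are hypothetical. Under this scheme, a two-letter element such as Cl becomes two tokens that the model must learn to emit together. The network is then trained to predict each next token from the preceding ones, and molecules are sampled token by token until the end token appears.

```python
import re

SPECIALS = ("<pad>", "<bos>", "<eos>")
SELFIES_TOKEN = re.compile(r"\[[^\]]*\]")


def tokenize(string, selfies=False):
    """Characters for SMILES; bracketed symbols such as "[C]" or "[=O]" for SELFIES."""
    return SELFIES_TOKEN.findall(string) if selfies else list(string)


def build_vocab(strings, selfies=False):
    """Map every token seen in `strings` (plus special tokens) to an integer index."""
    tokens = sorted({t for s in strings for t in tokenize(s, selfies)})
    itos = list(SPECIALS) + tokens
    return {t: i for i, t in enumerate(itos)}, itos


def encode(string, stoi, selfies=False):
    # Wrap in start/end markers so the model learns where a molecule ends.
    return [stoi["<bos>"]] + [stoi[t] for t in tokenize(string, selfies)] + [stoi["<eos>"]]


def decode(ids, itos):
    """Join tokens back into a string, skipping special tokens."""
    return "".join(itos[i] for i in ids if itos[i] not in SPECIALS)
```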

Penalized LogP Task

For the first task, we consider one of the most widely used benchmarks for searching chemical space, the penalized LogP task: finding molecules with high LogP44 penalized by synthesizability40 and unrealistic rings. We consider a generative modeling version of this task, where the goal is to learn distributions of molecules with high penalized LogP scores. Finding individual molecules with good scores (above 3.0) is a standard challenge, but learning to generate directly from this part of chemical space, so that every molecule produced by the model has a high penalized LogP, adds another degree of difficulty. For this, we build a training dataset by screening the ZINC15 database45 for molecules with penalized LogP values exceeding 4.0. Many machine learning approaches can find only a handful of molecules in this range; for example, JTVAE10 found 22 in total across all of its attempts. After screening, the top-scoring molecules in ZINC amounted to roughly 160K molecules for the training data in this task. Thus, the training distribution is sharply peaked, with most of the density falling between 4.0 and 4.5 penalized LogP (Fig. 1a), and most training molecules resemble the examples shown in Fig. 1d. However, around 10% of the training molecules have even higher penalized LogP scores, adding a subtle tail to the distribution.
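The screening rule can be sketched as below, assuming the unnormalized form of penalized LogP (LogP minus SA score minus a penalty of one unit per ring atom beyond six in the largest ring). Some implementations instead standardize each term with ZINC statistics before combining them, and the paper does not state which variant it uses. The inputs are plain numbers here; in practice LogP would come from RDKit's Crippen estimate and SA from the SA score40. All names and the example records are hypothetical.

```python
def ring_penalty(ring_sizes):
    """Penalty for rings larger than six atoms: (largest ring size - 6), else 0."""
    largest = max(ring_sizes, default=0)
    return max(largest - 6, 0)


def penalized_logp(logp, sa_score, ring_sizes):
    """Unnormalized penalized LogP: LogP minus SA score minus large-ring penalty."""
    return logp - sa_score - ring_penalty(ring_sizes)


def screen(molecules, threshold=4.0):
    """Keep molecules whose penalized LogP strictly exceeds `threshold`."""
    return [m for m in molecules
            if penalized_logp(m["logp"], m["sa"], m["ring_sizes"]) > threshold]


# Hypothetical records: molecule 3 scores exactly 4.0 and is therefore excluded.
candidates = [
    {"id": 1, "logp": 8.0, "sa": 2.5, "ring_sizes": [6]},
    {"id": 2, "logp": 6.0, "sa": 3.0, "ring_sizes": [5, 6]},
    {"id": 3, "logp": 9.0, "sa": 2.0, "ring_sizes": [9]},
]
kept = screen(candidates)
```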

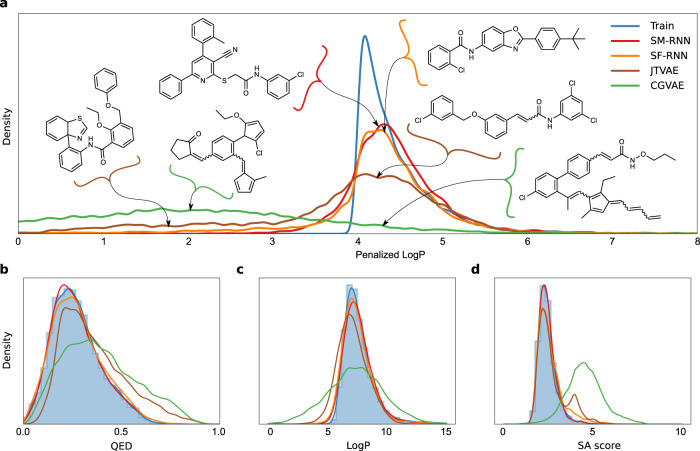

The results of training all models are shown in Figs. 2 and 3. The language models perform better than the graph models, with the SELFIES RNN producing a slightly closer match to the training distribution in Fig. 2a. The CGVAE and JTVAE learn to produce a large number of molecules with penalized LogP scores that are substantially worse than the lowest training scores. Notably, the examples of these lower-scoring molecules shown in Fig. 2a are quite similar to the molecules from the main mode of the training distribution, which highlights the difficulty of learning this distribution. In Fig. 2b–d, JTVAE and CGVAE produce more molecules with larger SA scores than the training data. All models learn the main mode of LogP in the training data, but the RNNs produce closer distributions, and similar results hold for QED. These results carry over to the quantitative metrics: both RNNs achieve lower Wasserstein distances than the CGVAE and JTVAE (Table 2), with the SMILES RNN coming closest to the TRAIN oracle.

Fig. 2. Penalized LogP Task I.

a The distribution of penalized LogP scores of molecules from the training data (TRAIN), the SM-RNN trained on SMILES, the SF-RNN trained on SELFIES and the graph models CGVAE and JTVAE. For the graph models, we display molecules from the out-of-distribution mode at lower penalized LogP scores, as well as molecules from all models with penalized LogP scores in the main mode [4.0, 4.5]. b–d Distribution plots of the molecular properties QED, LogP and SA score for all models and the training data.

Fig. 3. Penalized LogP Task II.

a–d Histograms of penalized LogP, atom number, ring number and length of the largest carbon chain (all per molecule) for molecules generated by all models or from the training data that have penalized LogP ≥ 6.0. e 2D histograms of penalized LogP and SA score for molecules generated by the models or from the training data that have penalized LogP ≥ 6.0. f A few molecules generated by all models or from the training data that have penalized LogP ≥ 6.0.

Table 2.

Wasserstein distance metrics for LogP, SA, QED, MW, BCT and NP between molecules from the training data and molecules generated by the models for all three tasks.

| Task | Samples | LogP | SA | QED | MW | BCT | NP |
|---|---|---|---|---|---|---|---|
| LogP | TRAIN | 0.020 | 0.0096 | 0.0029 | 1.620 | 7.828 | 0.013 |
| | SM-RNN | 0.095 | 0.0312 | 0.0068 | 3.314 | 21.12 | 0.054 |
| | SF-RNN | 0.177 | 0.2903 | 0.0095 | 6.260 | 25.00 | 0.209 |
| | JTVAE | 0.536 | 0.2886 | 0.0811 | 35.93 | 76.81 | 0.164 |
| | CGVAE | 1.000 | 2.1201 | 0.1147 | 69.26 | 141.2 | 1.965 |
| Multi | TRAIN | 0.048 | 0.0158 | 0.0020 | 2.177 | 14.149 | 0.010 |
| | SM-RNN | 0.081 | 0.0246 | 0.0059 | 5.483 | 21.118 | 0.012 |
| | SF-RNN | 0.286 | 0.1791 | 0.0227 | 11.35 | 68.809 | 0.079 |
| | JTVAE | 0.495 | 0.2737 | 0.0343 | 27.71 | 171.87 | 0.109 |
| | CGVAE | 1.617 | 1.8019 | 0.0764 | 30.31 | 183.58 | 1.376 |
| Large | TRAIN | 0.293 | 0.030 | 0.0003 | 18.92 | 85.04 | 0.005 |
| | SM-RNN | 1.367 | 0.213 | 0.0034 | 124.49 | 363.0 | 0.035 |
| | SF-RNN | 1.095 | 0.342 | 0.0099 | 67.322 | 457.5 | 0.111 |
| | JTVAE | – | – | – | – | – | – |
| | CGVAE | – | – | – | – | – | – |

TRAIN is an oracle baseline; values closer to it are better.

We further investigate the highest penalized LogP region of the training data, with values exceeding 6.0, which forms the subtle tail of the training distribution. The 2D distributions (Fig. 3e) show clearly that both RNNs learn this subtle aspect of the training data, while the graph models almost completely ignore it and only learn molecules closer to the main mode. In particular, CGVAE learns molecules with larger SA scores than the training data. Furthermore, the molecules with the highest penalized LogP scores in the training data typically contain very long carbon chains and fewer rings (Fig. 3b, d), and the RNNs are capable of picking up on this. This is very apparent in the samples the models produce, a few of which are shown in Fig. 3f: the RNNs produce mostly molecules with long carbon chains, while the CGVAE and JTVAE generate molecules with many rings that have penalized LogP scores near 6.0. The language models learn distributions that are close to the training distribution in the histograms of Fig. 3a–d. Overall, the language models learn distributions of molecules with high penalized LogP scores better than the graph models.

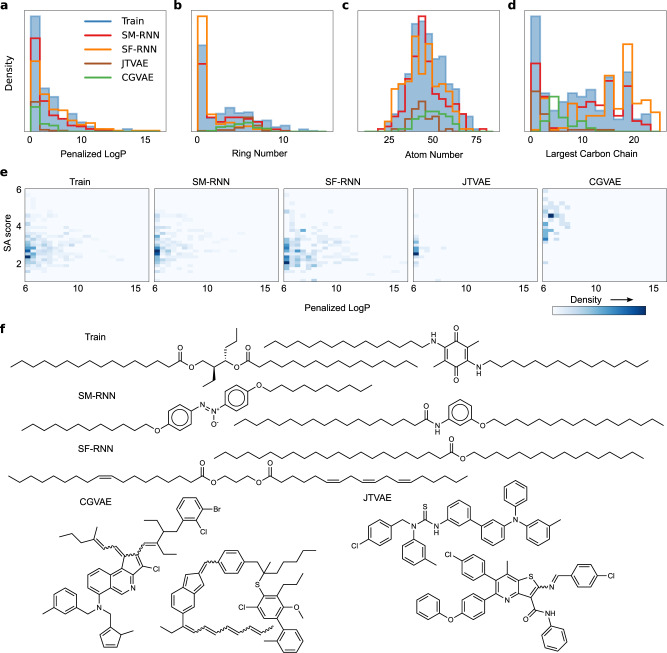

Multi-distribution task

For the next task, we created a dataset by combining subsets of (1) GDB1346 molecules with molecular weight (MW) ≤ 185, (2) ZINC3,45 molecules with 185 ≤ MW ≤ 425, (3) Harvard Clean Energy Project (CEP)47 molecules with 460 ≤ MW ≤ 600, and (4) POLYMERS48 molecules with MW > 600. The training distribution has four modes (Figs. 1b, e and 4a). Of the ∼200K training molecules, CEP and GDB13 together make up one third, while ZINC and POLYMERS each make up one third.
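The per-source filtering step can be sketched as below, assuming molecular weights have already been computed (for example with RDKit) and that each source is given as a list of (SMILES, MW) pairs. The rule table, function name and placeholder records are hypothetical, and the sketch omits the subsampling used to reach the stated proportions.

```python
# MW windows (in daltons) for each source, as listed in the text.
MW_RULES = {
    "GDB13": lambda mw: mw <= 185,
    "ZINC": lambda mw: 185 <= mw <= 425,
    "CEP": lambda mw: 460 <= mw <= 600,
    "POLYMERS": lambda mw: mw > 600,
}


def build_multi_distribution(sources):
    """Filter each source by its MW window and pool the results.

    `sources` maps a source name to (smiles, molecular_weight) pairs; this
    input format is hypothetical. Returns (smiles, source) pairs.
    """
    pooled = []
    for name, records in sources.items():
        keep = MW_RULES[name]
        pooled.extend((smiles, name) for smiles, mw in records if keep(mw))
    return pooled
```

Keeping the source name next to each molecule makes it easy to check afterwards which mode a generated molecule most resembles.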

Fig. 4. Multi-distribution Task.

a The histogram and KDE of the molecular weight of training molecules, along with KDEs of the molecular weight of molecules generated by all models. Three training molecules from each mode are shown. b–d The histograms and KDEs of QED, LogP and SA scores of training molecules, along with KDEs for molecules generated by all models. e 2D histograms of molecular weight and SA score of training molecules and of molecules generated by all models.

In the multi-distribution task, both RNN models capture the data distribution quite well and learn every mode in the training distribution (Fig. 4a). On the other hand, JTVAE entirely misses the first mode from GDB13 and poorly learns ZINC and CEP. Likewise, CGVAE learns GDB13 but underestimates ZINC and entirely misses the mode from CEP. Further evidence that the RNN models learn the training distribution more closely is apparent in Fig. 4e, where CGVAE and JTVAE barely distinguish the main modes. Additionally, the RNN models generate molecules that better resemble the training data (Supplementary Table 4). Despite this, all models except CGVAE capture the training distributions of QED, SA score and Bertz complexity (Fig. 4b–d). Lastly, in Table 2, the RNN trained on SMILES has the lowest Wasserstein distances, followed by the SELFIES RNN, then JTVAE and CGVAE.

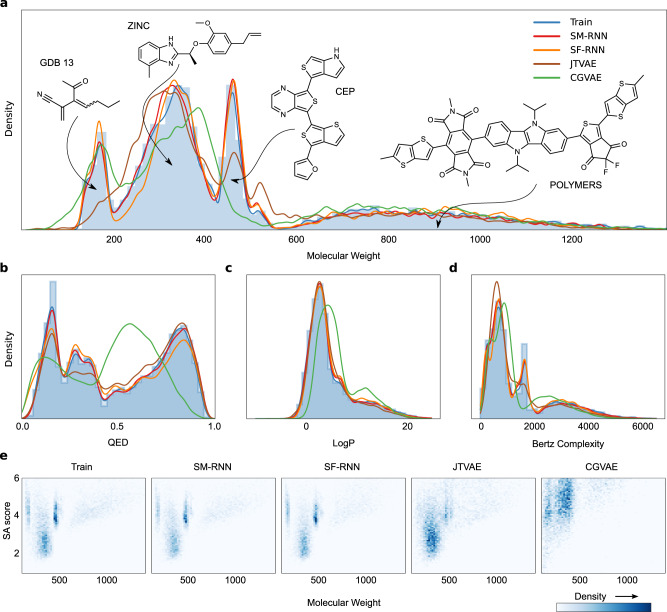

Large-scale task

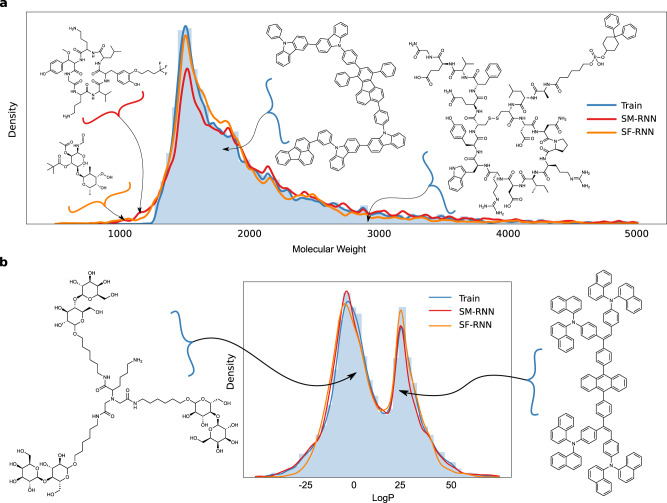

The last generative modeling task tests the ability of deep generative models to learn large molecules, the largest molecules relevant to generative models that use SMILES/SELFIES string representations or graphs. For this, we turn to PubChem49 and screen for the largest molecules, those with more than 100 heavy atoms, producing ~300K molecules. These are molecules of various kinds: small biomolecules, photovoltaics and others. They also span a wide range of molecular weight, from 1250 to 5000, although most molecules fall in the 1250–2000 range (Fig. 1c).
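The screening criterion (more than 100 heavy atoms) is simple to state in code. In practice one would parse each molecule with RDKit and call `GetNumHeavyAtoms()` on the resulting molecule. The dependency-free sketch below instead counts atom tokens in the SMILES text, an approximation that assumes well-formed SMILES and is only meant to illustrate the rule. The function names are hypothetical.

```python
import re

# Bracket atoms first, then two-letter organic-subset elements, then one-letter ones.
ATOM_TOKEN = re.compile(r"\[[^\]]+\]|Br|Cl|[BCNOPSFI]|[bcnops]")
BRACKET_ELEMENT = re.compile(r"\[\d*([A-Za-z][a-z]?)")


def count_heavy_atoms(smiles):
    """Rough heavy-atom count from SMILES text alone (no chemistry toolkit).

    Counts every atom token except bracketed hydrogens such as [H] or [2H].
    Implicit hydrogens are never written as atom tokens, so they are not counted.
    """
    count = 0
    for token in ATOM_TOKEN.findall(smiles):
        if token.startswith("["):
            match = BRACKET_ELEMENT.match(token)
            if match and match.group(1) == "H":
                continue  # explicit hydrogen atom, not a heavy atom
        count += 1
    return count


def is_large(smiles, min_heavy_atoms=100):
    """True for molecules with more than `min_heavy_atoms` heavy atoms."""
    return count_heavy_atoms(smiles) > min_heavy_atoms
```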

This task was the most challenging for the graph models: both failed to train and were entirely incapable of learning the training data. In particular, JTVAE’s tree decomposition algorithm applied to the training data produced a fixed vocabulary of ∼11,000 substructures. However, both RNN models were able to learn to generate molecules as large and as varied as the training data. The training molecules correspond to very long SMILES and SELFIES strings; in this case, the SELFIES representation provided an additional advantage, and the SELFIES RNN matched the data distribution more closely (Fig. 5a). Learning to generate valid molecules is substantially more difficult with the SMILES grammar, because these molecules require many more characters and therefore give the model more opportunities to make a mistake and produce an invalid string. In contrast, every SELFIES string decodes to a valid molecule. Interestingly, even when the RNN models generated molecules that were out of distribution and substantially smaller than the training molecules, these molecules still had similar substructures and resembled the training molecules (Fig. 5a). In addition, the training molecules appear to be divided into two modes with lower and higher LogP values (Fig. 5b): biomolecules define the lower mode, and molecules with more rings and longer carbon chains define the higher LogP mode (more example molecules can be seen in Supplementary Fig. 8). Both RNN models were able to learn the bimodal nature of the training distribution.
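A back-of-the-envelope calculation shows why string length matters for SMILES validity. Under the simplifying (and unrealistic) assumption that each generated token independently breaks the string with a fixed probability, the chance of an error-free string decays geometrically with length. The error rate used below is illustrative only, not a value measured for the SM-RNN, and the function name is hypothetical.

```python
def p_all_tokens_ok(per_token_error, length):
    """Probability that `length` tokens contain no error, assuming each token
    independently goes wrong with probability `per_token_error`."""
    return (1.0 - per_token_error) ** length


for length in (50, 500, 1000):
    print(length, round(p_all_tokens_ok(0.001, length), 3))
```

With a 0.1% per-token error rate, about 95% of 50-token strings but only about 37% of 1000-token strings would be error-free, which is the regime of the PubChem molecules in this task.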

Fig. 5. Large-scale Task I.

a The histogram and KDE of the molecular weight of training molecules, along with the KDEs of the molecular weight of molecules generated by the RNNs. Two molecules generated by the RNNs with lower molecular weight than the training molecules are shown on the left of the plot. In addition, two training molecules from the mode and tail of the molecular weight distribution are displayed on the right. b The histogram and KDE of LogP of training molecules, along with the KDEs of LogP of molecules generated by the RNNs. On either side of the plot, we display one training molecule for each mode of the LogP distribution.

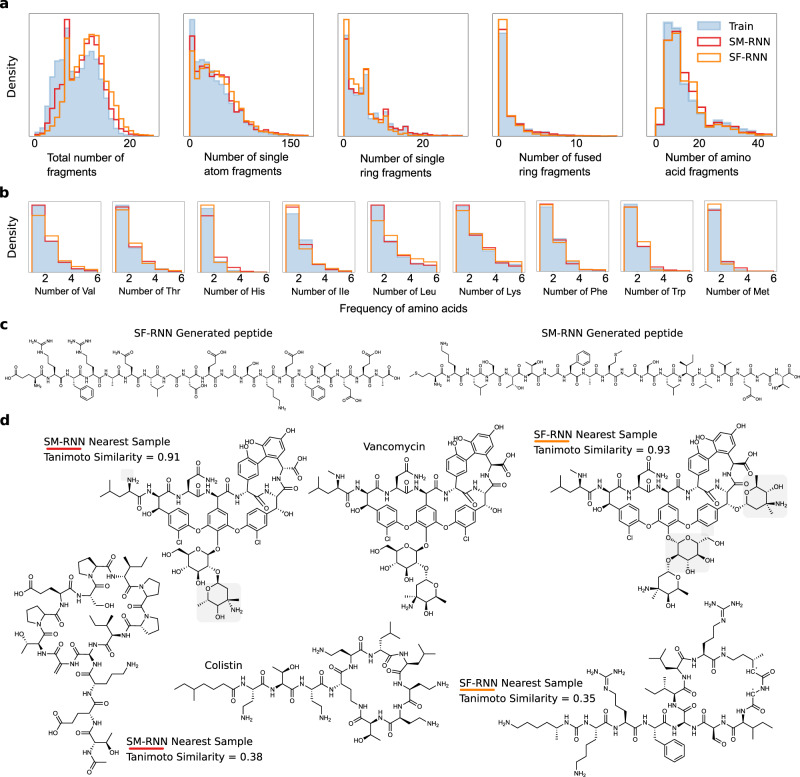

The training data contain a variety of different molecules and substructures. In Fig. 6a, the RNN models adequately learn the distribution of substructures arising in the training molecules, specifically the per-molecule number of fragments, single-atom fragments, single-ring fragments, fused-ring fragments and amino acid fragments. As the training molecules become larger and rarer, both RNN models still learn to generate them (Fig. 5a, molecular weight > 3000).

Fig. 6. Large-scale Task II.

a Histograms of fragment number, single-atom fragment number, single-ring fragment number, fused-ring fragment number and amino acid fragment number (all per molecule) for molecules generated by the RNN models or from the training data. b Histograms of the number of each specific amino acid per molecule generated by the RNNs or from the training data. c A peptide generated by the SM-RNN (MKLSTTGFAMGSLIVVEGT, right) and one generated by the SF-RNN (ERFRAQLGDEGSKEFVEEA, left). d Molecules generated by the SF-RNN and SM-RNN that are closest in Tanimoto similarity to colistin and vancomycin. The light gray shaded regions highlight differences from vancomycin.

The dataset in this task contains a number of peptides and cyclic peptides that arise in PubChem, so we visually analyze the samples from the RNNs to see whether they preserve backbone chain structure and natural amino acids. We find that the RNNs often sample snippets of backbone chains that are usually disjoint, broken up by other atoms, bonds and structures. In addition, these chains usually carry the standard side chains of the main amino acid residues, although atypical side chains also arise. In Fig. 6c, we show two examples of peptides generated by the SM-RNN and SF-RNN. While there are many examples where both models fail to preserve the backbone and produce unusual side chains, it is likely that, if trained entirely on relevant peptides, these models could be used for peptide design. Moreover, since these language models are not restricted to generating amino acid sequences, they could be used to design any biochemical structure that mimics the structure of peptides or even replicates their biological behavior. This makes them well suited to designing modified peptides50, other peptide mimetics and complex natural products51,52. The only requirement would be for a domain expert to construct a training dataset for specific targets. We also study how well the RNNs learn the biomolecular structures in the training data: in Fig. 6b, both RNNs match the distribution of essential amino acids (found using a substructure search). Lastly, it is also likely that the RNNs could be used to design cyclic peptides. To highlight the promise of language models for this task, we display the molecules generated by the RNNs with the largest Tanimoto similarity to colistin and vancomycin (Fig. 6d). The results in this task demonstrate that language models could be used to design more complex biomolecules.
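Picking the generated molecules closest to colistin and vancomycin amounts to a nearest-neighbour search under Tanimoto similarity. The sketch assumes fingerprints have already been computed (for instance Morgan fingerprints from RDKit) and represents each as a set of on-bit indices. The paper does not state which fingerprint was used, and the helper names are hypothetical.

```python
def tanimoto(fp_a, fp_b):
    """Tanimoto similarity of two fingerprints given as sets of on-bit indices."""
    a, b = set(fp_a), set(fp_b)
    if not a and not b:
        return 0.0  # convention chosen here for two empty fingerprints
    shared = len(a & b)
    return shared / (len(a) + len(b) - shared)


def most_similar(reference_fp, generated):
    """Return (name, similarity) of the generated fingerprint closest to the reference."""
    name = max(generated, key=lambda k: tanimoto(reference_fp, generated[k]))
    return name, tanimoto(reference_fp, generated[name])
```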

We also evaluate models on standard metrics from the literature: validity, uniqueness and novelty. Using the same 10K molecules generated by each model for each task, we compute the following statistics, defined in ref. 17, and report them in Table 3: (1) validity, the ratio between the number of valid and generated molecules; (2) uniqueness, the ratio between the number of unique (non-duplicate) molecules and valid molecules; (3) novelty, the ratio between the number of unique molecules that are not in the training data and the total number of unique molecules. In the first two tasks (Table 3), JTVAE and CGVAE have better metrics, with very high validity, uniqueness and novelty (all close to 1); the SMILES and SELFIES RNNs perform worse, but the SELFIES RNN comes close. The SMILES RNN has the worst metrics, owing to the fragility of the SMILES grammar, but it is not substantially worse than the other models.
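The three ratios translate directly into code. In this sketch, `canonicalize` is an assumed callable that returns a canonical string or None for an invalid molecule (in practice an RDKit-based canonicalizer); the test uses a toy stand-in, and the function name is hypothetical.

```python
def standard_metrics(generated, training, canonicalize):
    """Validity, uniqueness and novelty as defined in the text.

    `canonicalize` maps a string to a canonical form, or to None if invalid.
    """
    valid = [c for c in map(canonicalize, generated) if c is not None]
    unique = set(valid)
    train_set = {c for c in map(canonicalize, training) if c is not None}
    novel = unique - train_set
    return {
        "validity": len(valid) / len(generated),
        "uniqueness": len(unique) / len(valid) if valid else 0.0,
        "novelty": len(novel) / len(unique) if unique else 0.0,
    }
```

Note that each ratio uses the previous stage as its denominator, so a model with low validity can still score well on uniqueness and novelty.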

Table 3.

Standard metrics validity, uniqueness and novelty of molecules generated by all models in every task.

| Task | Metric | SM-RNN | SF-RNN | JTVAE | CGVAE |
|---|---|---|---|---|---|
| LogP | Validity | 0.941 | 1.000 | 1.000 | 1.000 |
| | Uniqueness | 0.987 | 1.000 | 0.982 | 1.000 |
| | Novelty | 0.721 | 0.871 | 0.980 | 1.000 |
| Multi | Validity | 0.969 | 1.000 | 0.999 | 0.999 |
| | Uniqueness | 0.996 | 0.989 | 0.998 | 0.996 |
| | Novelty | 0.937 | 0.950 | 0.998 | 1.000 |
| Large | Validity | 0.876 | 1.000 | – | – |
| | Uniqueness | 0.999 | 0.994 | – | – |
| | Novelty | 0.999 | 0.999 | – | – |

Closer to 1.0 indicates better performance.

We also evaluated many additional graph generative model baselines8,12,17,19,53–58 on all tasks. These include GANs11,19, autoregressive models8,53,57, normalizing flows54,58 and single-shot models17. Most do not scale at all, and the few baselines that do could handle only the LogP and multi-distribution tasks, where they do not perform better than the language models. Results are shown in Supplementary Tables 1 and 2 and Supplementary Fig. 1.

Discussion

In this work, in an effort to test the ability of chemical language models, we introduce three complex modeling tasks for deep generative models of molecules. Language models and graph baselines perform each task, which entails learning to generate molecules from a challenging dataset. The results demonstrate that language models are very powerful, flexible models that can learn a variety of very different complex distributions, while the popular graph baselines are much less capable.

Comparing SELFIES and SMILES, both the SM-RNN and SF-RNN perform well in all tasks, better than the baselines. The SF-RNN has better standard metrics overall (Table 3), with perfect validity in every task, but the SM-RNN has better Wasserstein distance metrics (Table 2). Furthermore, the SF-RNN has better novelty than the SM-RNN, which may mean that the SELFIES grammar leads to less memorization of the training dataset in language models. This could also help explain why the SF-RNN has better standard metrics but worse Wasserstein metrics than the SM-RNN. In addition, data augmentation with randomized SMILES31 could be used to improve the novelty of the SM-RNN. In the future, it would be valuable to have a more comprehensive evaluation of SMILES and SELFIES representations in deep generative models.

The results show that the main baseline graph generative models, JTVAE and CGVAE, are not as flexible as language models. For the penalized LogP task, the difference between a molecule that scores 2 and one that scores 4 can often be very subtle. Sometimes changing a single carbon or other atom can cause a large drop in score, which likely explains why the CGVAE severely misfits the main training mode. For the multi-distribution task, the difficulties of JTVAE and CGVAE are clear and understandable. JTVAE has to learn a wide range of tree types: many have no large substructures like rings (the GDB13 molecules), while others consist entirely of rings (CEP and POLYMERS). CGVAE has to learn a wide range of very different generation traces, which is difficult, especially since it uses only one sample trace during learning. For the same reasons, these models were incapable of training on the largest molecules in PubChem.

The language models also perform better than the additional graph generative baselines, which share the limitations of JTVAE and CGVAE. This is largely expected, as graph generative models have the more difficult task of generating both atom and bond information, while a language model only has to generate a single sequence. Given this, it is natural that language models display such flexible capacity and the evaluated graph generative models do not. Outside of molecular design, some graph generative models have attempted to scale to larger graphs59,60, but these models have not been adapted for molecules. The results here highlight that many widely used graph generative models are designed only for small drug-like39 molecules and do not scale to larger, more complex molecules. On the other hand, while language models can scale and flexibly generate larger molecules, graph generative models are more interpretable53,57, which is important for drug and material discovery.

Based on the experiments conducted, language models are very powerful generative models for learning complex molecular distributions and should see even more widespread use. However, there is still room for improvement, as these models cannot account for other important information such as molecular geometry. In addition, we hope that the molecular modeling tasks and datasets introduced here can motivate new generative models for these larger, more complex molecules. Future work will explore how capable chemical language models are at learning ever larger snapshots of chemical space.

Methods

Hyper-parameter optimization

For hyper-parameter optimization, we use random search61, a simple and effective method. We sample from discrete grids of hyper-parameters, with an equal probability of selection for each value in a grid. The values are roughly equally spaced, with 3–5 values in each grid. The upper and lower bounds for each hyper-parameter are defined as such: learning rate , hidden units , layer number , dropout (probability) in [0.0,0.5]. We do not optimize the number of epochs; we simply use the default value for the baseline models used during training on other datasets (MOSES, ZINC or ChEMBL).
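A minimal sketch of random search over discrete grids follows. The grid values are hypothetical placeholders (the paper's bounds are not recoverable here, apart from dropout lying in [0.0, 0.5]), and `train_and_evaluate` stands in for training a model with a given configuration and returning the metrics used for selection.

```python
import random

# Hypothetical grids; only the dropout range [0.0, 0.5] comes from the text.
GRIDS = {
    "learning_rate": [1e-4, 5e-4, 1e-3],
    "hidden_units": [256, 400, 512, 600, 800],
    "num_layers": [1, 2, 3],
    "dropout": [0.0, 0.125, 0.25, 0.375, 0.5],
}


def random_search(train_and_evaluate, grids, n_trials, seed=0):
    """Sample each hyper-parameter uniformly from its grid, once per trial."""
    rng = random.Random(seed)
    trials = []
    for _ in range(n_trials):
        config = {name: rng.choice(values) for name, values in grids.items()}
        trials.append((config, train_and_evaluate(config)))
    return trials
```

Sampling each hyper-parameter independently keeps the number of trials decoupled from the grid sizes, which is the usual argument for random search over a full grid sweep.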

Model selection criteria

Many model selection criteria are possible; for example, the MOSES benchmark36 suggests the Fréchet ChemNet Distance, but this and other performance metrics have been shown to have issues62. We evaluate and select models using all of the metrics employed, in combination with the distribution plots. First, we compile the top 10% of models with the highest validity, uniqueness and novelty. Then we plot the distribution of the main property of interest (i.e. penalized LogP for the LogP task and molecular weight for the others) and take the model that has the closest distribution to the training distribution and the lowest value on the largest number of the six Wasserstein distance metrics.
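The quantitative part of this procedure can be sketched as follows; the visual comparison of distribution plots is not automated. Ranking candidates by the mean of validity, uniqueness and novelty is an assumption, since the text does not say how the three metrics are combined, and the function name and candidate format are hypothetical.

```python
import math


def select_model(candidates, top_fraction=0.1):
    """Shortlist by standard metrics, then pick the most Wasserstein "wins".

    Each candidate is a dict with "name", "validity", "uniqueness", "novelty"
    and "wasserstein" (property name -> distance). Averaging the three
    standard metrics to rank candidates is an assumption of this sketch.
    """
    ranked = sorted(
        candidates,
        key=lambda c: (c["validity"] + c["uniqueness"] + c["novelty"]) / 3,
        reverse=True,
    )
    shortlist = ranked[: max(1, math.ceil(top_fraction * len(ranked)))]
    wins = {c["name"]: 0 for c in shortlist}
    for prop in shortlist[0]["wasserstein"]:
        best = min(shortlist, key=lambda c: c["wasserstein"][prop])
        wins[best["name"]] += 1
    # The visual check of the main property's distribution is not automated here.
    return max(shortlist, key=lambda c: wins[c["name"]])["name"]
```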

Further details

Language models are implemented in Python 3 with PyTorch63; molecules are processed and relevant properties are computed using RDKit64. Wasserstein distances are computed with SciPy65 using scipy.stats.wasserstein_distance, based on ref. 66. Also known as the earth mover’s distance, it can be viewed as the minimum amount of distribution weight that must be moved, multiplied by the distance it is moved, to transform samples from one distribution into samples from the other.
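For two 1D empirical distributions with CDFs U and V, this distance equals W1(u, v) = ∫ |U(x) − V(x)| dx, the formulation given in SciPy's documentation for `scipy.stats.wasserstein_distance`. The NumPy sketch below evaluates that integral for unweighted samples, for illustration only. It is not SciPy's source, the function name is hypothetical, and with SciPy installed `scipy.stats.wasserstein_distance(u, v)` should return the same value.

```python
import numpy as np


def wasserstein_1d(u_values, v_values):
    """First Wasserstein distance between two 1D empirical distributions.

    Integrates |U(x) - V(x)| over x, where U and V are the empirical CDFs;
    both CDFs are constant between consecutive pooled sample values.
    """
    u = np.sort(np.asarray(u_values, dtype=float))
    v = np.sort(np.asarray(v_values, dtype=float))
    pooled = np.sort(np.concatenate([u, v]))
    widths = np.diff(pooled)
    # Empirical CDF values on each interval [pooled[i], pooled[i + 1]).
    u_cdf = np.searchsorted(u, pooled[:-1], side="right") / u.size
    v_cdf = np.searchsorted(v, pooled[:-1], side="right") / v.size
    return float(np.sum(np.abs(u_cdf - v_cdf) * widths))
```

For example, shifting every value of a sample by 5 moves all of its weight a distance of 5, so `wasserstein_1d([0, 1, 3], [5, 6, 8])` evaluates to 5.0.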

Penalized LogP task details

For the SM-RNN, we used an LSTM with 2 hidden layers of 400 units, dropout in the last layer with probability 0.2 and a learning rate of 0.0001. For the SF-RNN, we used an LSTM with 2 hidden layers of 600 units, dropout in the last layer with probability 0.4 and a learning rate of 0.0002. The CGVAE used 8 propagation layers, a hidden layer size of 100, a KL weight annealed to 0.1 and a learning rate of 0.0015. The JTVAE used a learning rate of 0.001 and 3 GNN layers with a hidden size of 356.

Multi-distribution task

For the SM-RNN, we used an LSTM with 3 hidden layers of 512 units, dropout in the last layer with probability 0.5 and a learning rate of 0.0001. For the SF-RNN, we used an LSTM with 2 hidden layers of 500 units, dropout in the last layer with probability 0.2 and a learning rate of 0.0003. The CGVAE used 8 propagation layers, a hidden layer size of 100, a KL weight annealed to 0.1 and a learning rate of 0.001. The JTVAE used a learning rate of 0.0001 and 3 GNN layers with a hidden size of 356.

Large-scale task

For the SM-RNN we used an LSTM network with 2 hidden layers with 512 units and dropout in the last layer with prob = 0.25 and learning rate of 0.001. For the SF-RNN we used an LSTM network with 2 hidden layers with 800 units and dropout in the last layer with prob = 0.4 and learning rate of 0.0001.

Supplementary information

Acknowledgements

A.A.-G. acknowledges funding from Dr. Anders G. Frøseth. A.A.-G. also acknowledges support from the Canada 150 Research Chairs Program, the Canada Industrial Research Chair Program, and from Google, Inc. Models were trained using the Canada Computing Systems67.

Author contributions

D.F.-S. conceived the overall project, designed the experiments, prepared the datasets and wrote the paper. D.F.-S. and K.Z. trained the models and analyzed results. A.A.-G. led the project and provided overall directions.

Peer review

Peer review information

Nature Communications thanks the anonymous reviewers for their contribution to the peer review of this work. Peer reviewer reports are available.

Data availability

The processed data used in this study are available at https://github.com/danielflamshep/genmoltasks.

Code availability

The code used to train models is publicly available. JTVAE: https://github.com/wengong-jin/icml18-jtnn. CGVAE: https://github.com/microsoft/constrained-graph-variational-autoencoder. The RNN models were trained using the char-rnn code from https://github.com/molecularsets/moses. Trained models are available upon request.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Daniel Flam-Shepherd, Email: danielfs@cs.toronto.edu.

Alán Aspuru-Guzik, Email: alan@aspuru.com.

Supplementary information

The online version contains supplementary material available at 10.1038/s41467-022-30839-x.

References

- 1. Bohacek RS, McMartin C, Guida WC. The art and practice of structure-based drug design: a molecular modeling perspective. Med. Res. Rev. 1996;16:3. doi: 10.1002/(SICI)1098-1128(199601)16:1<3::AID-MED1>3.0.CO;2-6.

- 2. Jumper J, et al. Highly accurate protein structure prediction with AlphaFold. Nature. 2021;596:583. doi: 10.1038/s41586-021-03819-2.

- 3. Gómez-Bombarelli R, et al. Automatic chemical design using a data-driven continuous representation of molecules. ACS Cent. Sci. 2018;4:268. doi: 10.1021/acscentsci.7b00572.

- 4. Sutskever, I., Martens, J. & Hinton, G. E. Generating text with recurrent neural networks. In International Conference on Machine Learning (2011).

- 5. Weininger D. SMILES, a chemical language and information system. 1. Introduction to methodology and encoding rules. J. Chem. Inf. Comput. Sci. 1988;28:31. doi: 10.1021/ci00057a005.

- 6. Segler MH, Kogej T, Tyrchan C, Waller MP. Generating focused molecule libraries for drug discovery with recurrent neural networks. ACS Cent. Sci. 2018;4:120. doi: 10.1021/acscentsci.7b00512.

- 7. Kingma, D. P. & Welling, M. Auto-encoding variational Bayes. In International Conference on Learning Representations (2014).

- 8. Li, Y., Vinyals, O., Dyer, C., Pascanu, R. & Battaglia, P. Learning deep generative models of graphs. In International Conference on Machine Learning (2018).

- 9. Liu, Q., Allamanis, M., Brockschmidt, M. & Gaunt, A. in Advances in Neural Information Processing Systems 7795–7804 (2018).

- 10. Jin, W., Barzilay, R. & Jaakkola, T. Junction tree variational autoencoder for molecular graph generation. In International Conference on Machine Learning (2018).

- 11. You, J., Liu, B., Ying, Z., Pande, V. & Leskovec, J. Graph convolutional policy network for goal-directed molecular graph generation. Advances in Neural Information Processing Systems 31 (2018).

- 12. Seff, A., Zhou, W., Damani, F., Doyle, A. & Adams, R. P. in Advances in Neural Information Processing Systems.

- 13. Samanta, B. et al. NeVAE: a deep generative model for molecular graphs. In AAAI Conference on Artificial Intelligence (2019).

- 14. Mahmood O, Mansimov E, Bonneau R, Cho K. Masked graph modeling for molecule generation. Nat. Commun. 2021;12:1. doi: 10.1038/s41467-020-20314-w.

- 15. Duvenaud, D. et al. in Neural Information Processing Systems (2015).

- 16. Flam-Shepherd, D., Wu, T. C., Friederich, P. & Aspuru-Guzik, A. Neural message passing on high order paths. Mach. Learn.: Sci. Technol. (2021).

- 17. Simonovsky, M. & Komodakis, N. in International Conference on Artificial Neural Networks 412–422 (Springer, 2018).

- 18. Ma, T., Chen, J. & Xiao, C. in Advances in Neural Information Processing Systems 7113–7124 (2018).

- 19. De Cao, N. & Kipf, T. MolGAN: an implicit generative model for small molecular graphs. Preprint at arXiv:1805.11973 (2018).

- 20. Flam-Shepherd, D., Wu, T. & Aspuru-Guzik, A. MPGVAE: improved generation of small organic molecules using message passing neural nets. Mach. Learn.: Sci. Technol. 2, 045010 (2021).

- 21. Gebauer, N., Gastegger, M. & Schütt, K. Symmetry-adapted generation of 3D point sets for the targeted discovery of molecules. Adv. Neural Inf. Process. Syst. 32 (2019).

- 22. Ertl P, Roggo S, Schuffenhauer A. Natural product-likeness score and its application for prioritization of compound libraries. J. Chem. Inf. Model. 2008;48:68. doi: 10.1021/ci700286x.

- 23. Perron, Q. et al. Deep generative models for ligand-based de novo design applied to multi-parametric optimization. J. Comput. Chem. 43, 10 (2022).

- 24. Merk D, Friedrich L, Grisoni F, Schneider G. De novo design of bioactive small molecules by artificial intelligence. Mol. Inform. 2018;37:1700153. doi: 10.1002/minf.201700153.

- 25. Grisoni F, et al. Combining generative artificial intelligence and on-chip synthesis for de novo drug design. Sci. Adv. 2021;7:eabg3338. doi: 10.1126/sciadv.abg3338.

- 26. Méndez-Lucio O, Baillif B, Clevert D-A, Rouquié D, Wichard J. De novo generation of hit-like molecules from gene expression signatures using artificial intelligence. Nat. Commun. 2020;11:1. doi: 10.1038/s41467-019-13807-w.

- 27. Awale M, Sirockin F, Stiefl N, Reymond J-L. Drug analogs from fragment-based long short-term memory generative neural networks. J. Chem. Inf. Model. 2019;59:1347. doi: 10.1021/acs.jcim.8b00902.

- 28. Zheng S, et al. QBMG: quasi-biogenic molecule generator with deep recurrent neural network. J. Cheminform. 2019;11:1. doi: 10.1186/s13321-019-0328-9.

- 29. Skinnider, M. A., Stacey, R. G., Wishart, D. S. & Foster, L. J. Deep generative models enable navigation in sparsely populated chemical space. (2021).

- 30. Moret M, Friedrich L, Grisoni F, Merk D, Schneider G. Generative molecular design in low data regimes. Nat. Mach. Intell. 2020;2:171. doi: 10.1038/s42256-020-0160-y.

- 31. Arús-Pous J, et al. Randomized SMILES strings improve the quality of molecular generative models. J. Cheminform. 2019;11:1. doi: 10.1186/s13321-018-0323-6.

- 32. Kusner, M. J., Paige, B. & Hernández-Lobato, J. M. in International Conference on Machine Learning (2017).

- 33. Dai, H., Tian, Y., Dai, B., Skiena, S. & Song, L. Syntax-directed variational autoencoder for structured data. In International Conference on Learning Representations (2018).

- 34. O’Boyle, N. & Dalke, A. DeepSMILES: an adaptation of SMILES for use in machine-learning of chemical structures. (2018).

- 35. Krenn, M., Häse, F., Nigam, A., Friederich, P. & Aspuru-Guzik, A. Self-referencing embedded strings (SELFIES): a 100% robust molecular string representation. Mach. Learn.: Sci. Technol. 1, 045024 (2020).

- 36. Polykovskiy D, et al. Molecular sets (MOSES): a benchmarking platform for molecular generation models. Front. Pharmacol. 2020;11:1931. doi: 10.3389/fphar.2020.565644.

- 37. Atanasov AG, Zotchev SB, Dirsch VM, Supuran CT. Natural products in drug discovery: advances and opportunities. Nat. Rev. Drug Discov. 2021;20:200. doi: 10.1038/s41573-020-00114-z.

- 38. Gaulton A, et al. ChEMBL: a large-scale bioactivity database for drug discovery. Nucleic Acids Res. 2012;40:D1100. doi: 10.1093/nar/gkr777.

- 39. Bickerton GR, Paolini GV, Besnard J, Muresan S, Hopkins AL. Quantifying the chemical beauty of drugs. Nat. Chem. 2012;4:90. doi: 10.1038/nchem.1243.

- 40. Ertl P, Schuffenhauer A. Estimation of synthetic accessibility score of drug-like molecules based on molecular complexity and fragment contributions. J. Cheminform. 2009;1:1. doi: 10.1186/1758-2946-1-8.

- 41. Wildman SA, Crippen GM. Prediction of physicochemical parameters by atomic contributions. J. Chem. Inf. Comput. Sci. 1999;39:868. doi: 10.1021/ci990307l.

- 42. Bertz SH. The first general index of molecular complexity. J. Am. Chem. Soc. 1981;103:3599. doi: 10.1021/ja00402a071.

- 43. Hochreiter S, Schmidhuber J. Long short-term memory. Neural Comput. 1997;9:1735. doi: 10.1162/neco.1997.9.8.1735.

- 44. Ghose AK, Crippen GM. Atomic physicochemical parameters for three-dimensional structure-directed quantitative structure–activity relationships I. Partition coefficients as a measure of hydrophobicity. J. Comput. Chem. 1986;7:565. doi: 10.1002/jcc.540070419.

- 45. Irwin JJ, Shoichet BK. ZINC – a free database of commercially available compounds for virtual screening. J. Chem. Inf. Model. 2005;45:177. doi: 10.1021/ci049714+.

- 46. Blum LC, Reymond J-L. 970 million druglike small molecules for virtual screening in the chemical universe database GDB-13. J. Am. Chem. Soc. 2009;131:8732. doi: 10.1021/ja902302h.

- 47. Hachmann J, et al. The Harvard clean energy project: large-scale computational screening and design of organic photovoltaics on the world community grid. J. Phys. Chem. Lett. 2011;2:2241. doi: 10.1021/jz200866s.

- 48. St. John PC, et al. Message-passing neural networks for high-throughput polymer screening. J. Chem. Phys. 2019;150:234111. doi: 10.1063/1.5099132.

- 49. Kim S, et al. PubChem substance and compound databases. Nucleic Acids Res. 2016;44:D1202. doi: 10.1093/nar/gkv951.

- 50. Bisht GS, Rawat DS, Kumar A, Kumar R, Pasha S. Antimicrobial activity of rationally designed amino terminal modified peptides. Bioorg. Med. Chem. Lett. 2007;17:4343. doi: 10.1016/j.bmcl.2007.05.015.

- 51. Reker D, et al. Revealing the macromolecular targets of complex natural products. Nat. Chem. 2014;6:1072. doi: 10.1038/nchem.2095.

- 52. Sorokina M, Merseburger P, Rajan K, Yirik MA, Steinbeck C. COCONUT online: collection of open natural products database. J. Cheminform. 2021;13:1. doi: 10.1186/s13321-020-00478-9.

- 53. Mercado R, et al. Graph networks for molecular design. Mach. Learn.: Sci. Technol. 2021;2:025023.

- 54. Lippe, P. & Gavves, E. Categorical normalizing flows via continuous transformations. In International Conference on Learning Representations (2020).

- 55. Jin, W., Barzilay, R. & Jaakkola, T. in International Conference on Machine Learning 4839–4848 (PMLR, 2020).

- 56. Popova, M., Shvets, M., Oliva, J. & Isayev, O. Molecular-RNN: generating realistic molecular graphs with optimized properties. Preprint at arXiv:1905.13372 (2019).

- 57. Li Y, Zhang L, Liu Z. Multi-objective de novo drug design with conditional graph generative model. J. Cheminform. 2018;10:1. doi: 10.1186/s13321-018-0287-6.

- 58. Madhawa, K., Ishiguro, K., Nakago, K. & Abe, M. GraphNVP: an invertible flow model for generating molecular graphs. Preprint at arXiv:1905.11600 (2019).

- 59. Dai, H., Nazi, A., Li, Y., Dai, B. & Schuurmans, D. in International Conference on Machine Learning 2302–2312 (PMLR, 2020).

- 60. Liao, R. et al. Efficient graph generation with graph recurrent attention networks. Adv. Neural Inf. Process. Syst. 32 (2019).

- 61. Bergstra, J. & Bengio, Y. Random search for hyper-parameter optimization. J. Mach. Learn. Res. 13 (2012).

- 62. Renz P, Van Rompaey D, Wegner JK, Hochreiter S, Klambauer G. On failure modes in molecule generation and optimization. Drug Discov. Today Technol. 2019;32:55. doi: 10.1016/j.ddtec.2020.09.003.

- 63. Paszke, A. et al. PyTorch: an imperative style, high-performance deep learning library. Adv. Neural Inf. Process. Syst. 32 (2019).

- 64. Landrum, G. RDKit: a software suite for cheminformatics, computational chemistry, and predictive modeling. (2013).

- 65. Virtanen P, et al. SciPy 1.0: fundamental algorithms for scientific computing in Python. Nat. Methods. 2020;17:261. doi: 10.1038/s41592-019-0686-2.

- 66. Vaserstein LN. Markov processes over denumerable products of spaces, describing large systems of automata. Probl. Pereda. Inf. 1969;5:64.

- 67. Baldwin, S. in Journal of Physics: Conference Series, Vol. 341, 012001 (IOP Publishing, 2012).
