Abstract

In recent work, Wang et al introduced the “Sum of Single Effects” (SuSiE) model, and showed that it provides a simple and efficient approach to fine-mapping genetic variants from individual-level data. Here we present new methods for fitting the SuSiE model to summary data, for example to single-SNP z-scores from an association study and linkage disequilibrium (LD) values estimated from a suitable reference panel. To develop these new methods, we first describe a simple, generic strategy for extending any individual-level data method to deal with summary data. The key idea is to replace the usual regression likelihood with an analogous likelihood based on summary data. We show that existing fine-mapping methods such as FINEMAP and CAVIAR also (implicitly) use this strategy, but in different ways, and so this provides a common framework for understanding different methods for fine-mapping. We investigate other common practical issues in fine-mapping with summary data, including problems caused by inconsistencies between the z-scores and LD estimates, and we develop diagnostics to identify these inconsistencies. We also present a new refinement procedure that improves model fits in some data sets, and hence improves overall reliability of the SuSiE fine-mapping results. Detailed evaluations of fine-mapping methods in a range of simulated data sets show that SuSiE applied to summary data is competitive, in both speed and accuracy, with the best available fine-mapping methods for summary data.

Author summary

The goal of fine-mapping is to identify the genetic variants that causally affect some trait of interest. Fine-mapping is challenging because the genetic variants can be highly correlated due to a phenomenon called linkage disequilibrium (LD). The most successful current approaches to fine-mapping frame the problem as a variable selection problem, and here we focus on one such approach based on the “Sum of Single Effects” (SuSiE) model. The main contribution of this paper is to extend SuSiE to work with summary data, which is often accessible when the full genotype and phenotype data are not. In the process of extending SuSiE, we developed a new mathematical framework that helps to explain existing fine-mapping methods for summary data, why they work well (or not), and under what circumstances. In simulations, we show that SuSiE applied to summary data is competitive with the best available fine-mapping methods for summary data. We also show how different factors such as accuracy of the LD estimates can affect the quality of the fine-mapping.

Introduction

Fine-mapping is the process of narrowing down genetic association signals to a small number of potential causal variants [1–4], and it is an important step in the effort to understand the genetic causes of diseases [5, 6]. However, fine-mapping is a difficult problem due to the strong and complex correlation patterns (“linkage disequilibrium”, or LD) that exist among nearby genetic variants. Many different methods and algorithms have been developed to tackle the fine-mapping problem [2, 7–19]. In recent work, Wang et al [17] introduced a new approach to fine-mapping, SuSiE (short for “SUm of SIngle Effects”), which has several advantages over existing approaches: it is more computationally scalable; and it provides a new, simple way to calculate “credible sets” of putative causal variants [2, 20]. However, the algorithms in [17] also have an important limitation—they require individual-level genotype and phenotype data. In contrast, many other fine-mapping methods require access only to summary data, such as z-scores from single-SNP association analyses and an estimate of LD from a suitable reference panel [7, 8, 11–13, 15, 16, 21]. Requiring only summary data is useful because individual-level data are often difficult to obtain, both for practical reasons, such as the need to obtain many data sets collected by many different researchers, and for reasons to do with consent and privacy. By comparison, summary data are much easier to obtain, and many publications share such summary data [22].

In this paper, we introduce new variants of SuSiE for performing fine-mapping from summary data; we call these variants SuSiE-RSS (RSS stands for “regression with summary statistics” [23]). Our work exploits the fact that (i) the multiple regression likelihood can be written in terms of a particular type of summary data, known as sufficient statistics (explained below), and (ii) these sufficient statistics can be approximated from the types of summary data that are commonly available (e.g., z-scores from single-SNP association tests and LD estimates from a suitable reference panel). In the special case where the sufficient statistics themselves are available, the approximation in (ii) is unnecessary and SuSiE-RSS yields the same results as SuSiE applied to the original individual-level data; otherwise, it yields an approximation. By extending SuSiE to deal with widely available summary statistics, SuSiE-RSS greatly expands the applicability of the SuSiE fine-mapping approach.

Although our main goal here is to extend SuSiE to work with summary data, the approach we use, and the connections it exploits, are quite general, and could be used to extend other individual-level data methods to work with summary data. This general approach has two nice features. First, it deals simply and automatically with non-invertible LD matrices, which arise frequently in fine-mapping. We argue, both through theory and example, that it provides a simpler and more effective solution to this issue than some existing approaches. Second, it shows how individual-level results can be obtained as a special case of summary-data analysis, by using the sufficient statistics as summary data.

By highlighting the close connection between the likelihoods for individual-level and summary data, our work generalizes results of [11], who showed a strong connection between Bayes Factors, based on specific priors, from individual-level data and summary data. Our results highlight that this connection is fundamentally due to a close connection between the likelihoods, and so will apply whatever prior is used (and will also apply to non-Bayesian approaches that do not use a prior). By focussing on likelihoods, our analysis also helps clarify differences and connections between existing fine-mapping methods such as FINEMAP version 1.1 [12], FINEMAP version 1.2 [21] and CAVIAR [7], which can differ in both the prior and likelihood used.

Finally, we introduce several other methodological innovations for fine-mapping. Some of these innovations are not specific to SuSiE and could be used with other statistical methods. We describe methods for identifying “allele flips”—alleles that are (erroneously) encoded differently in the study and reference data—and other inconsistencies in the summary data. (See also [24] for related ideas.) We illustrate how a single allele flip can lead to inaccurate fine-mapping results, emphasizing the importance of careful quality control when performing fine-mapping using summary data. We also introduce a new refinement procedure for SuSiE that sometimes improves estimates from the original fitting procedure.

Description of the method

We begin with some background and notation. Let $y \in \mathbb{R}^N$ denote the phenotypes of N individuals in a genetic association study, and let $X \in \mathbb{R}^{N \times J}$ denote their corresponding genotypes at J genetic variants (SNPs). To simplify the presentation, we assume the y are quantitative and approximately normally distributed, and that both y and the columns of X are centered to have mean zero, which avoids the need for an intercept term in (1) [25]. We elaborate on treatment of binary and case-control phenotypes in the Discussion below.

Fine-mapping from individual-level data is usually performed by fitting the multiple linear regression model

$$ y = Xb + e, \tag{1} $$

where b = (b1, …, bJ)⊺ is a vector of multiple regression coefficients, e is an N-vector of error terms distributed as $e \sim \mathcal{N}_N(0, \sigma^2 I_N)$, with (typically unknown) residual variance σ² > 0, IN is the N × N identity matrix, and $\mathcal{N}_r(\mu, \Sigma)$ denotes the r-variate normal distribution with mean μ and variance Σ.

In this multiple regression framework, the question of which SNPs are affecting y becomes a problem of “variable selection”; that is, the problem of identifying which elements of b are not zero. While many methods exist for variable selection in multiple regression, fine-mapping has some special features—in particular, very high correlations among some columns of X, and very sparse b—that make Bayesian methods with sparse priors a preferred approach (e.g., [7–9]). These methods specify a sparse prior for b, and perform inference by approximating the posterior distribution p(b ∣ X, y). In particular, the evidence for SNP j having a non-zero effect is often summarized by the “posterior inclusion probability” (PIP),

$$ \mathrm{PIP}_j := \Pr(b_j \neq 0 \mid X, y). \tag{2} $$

The Sum of Single Effects (SuSiE) model

The key idea behind SuSiE [17] is to write b as a sum,

$$ b = \sum_{l=1}^{L} b_l, \tag{3} $$

in which each vector bl = (bl1, …, blJ)⊺ is a “single effect” vector; that is, a vector with exactly one non-zero element. The representation (3) allows that b has at most L non-zero elements, where L is a user-specified upper bound on the number of effects. (Consider that if single-effect vectors b1 and b2 have a non-zero element at the same SNP j, b will have fewer than L non-zeros.)

The special case L = 1 corresponds to the assumption that a region has exactly one causal SNP; i.e., exactly one SNP with a non-zero effect. In [17], this special case is called the “single effect regression” (SER) model. The SER is particularly convenient because posterior computations are analytically tractable [9]; consequently, despite its limitations, the SER has been widely used [2, 26–28].
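To make the tractability of the SER concrete, here is a minimal sketch that computes PIPs under a single-effect model directly from z-scores. It assumes standardized data, a uniform prior over which SNP is causal, and a normal prior on the non-zero effect. The ratio `w` of prior effect variance to sampling variance is a hypothetical fixed value chosen for illustration (in practice the prior variance would be estimated), and the function name is illustrative rather than part of any package.

```python
import math

def ser_pips(z, w=25.0):
    """PIPs under a single-effect regression, computed from z-scores.

    w is the ratio of the prior effect variance to the sampling variance of
    the marginal estimate (an assumed, fixed value in this sketch).
    """
    # Log Bayes factor of "SNP j has a normal effect" against "no effect".
    log_bf = [-0.5 * math.log(1.0 + w) + 0.5 * zj * zj * w / (1.0 + w)
              for zj in z]
    # With a uniform prior over SNPs, PIPs are normalized Bayes factors.
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(log_bf)
    weights = [math.exp(v - m) for v in log_bf]
    total = sum(weights)
    return [v / total for v in weights]

# Illustrative z-scores for three SNPs (made-up values).
pips = ser_pips([2.0, 5.0, 4.0])
```

Note that the LD matrix never appears in this calculation: under the single-effect assumption, each SNP's Bayes factor depends only on its own z-score.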

For L > 1, Wang et al [17] introduced a simple model-fitting algorithm, which they called Iterative Bayesian Stepwise Selection (IBSS). In brief, IBSS iterates through the single-effect vectors l = 1, …, L, at each iteration fitting bl while keeping the other single-effect vectors fixed. By construction, each step thus involves fitting an SER, which, as noted above, is straightforward. Wang et al [17] showed that IBSS can be understood as computing an approximate posterior distribution p(b1, …, bL ∣ X, y, σ2), and that the algorithm iteratively optimizes an objective function known as the “evidence lower bound” (ELBO).

Summary data for fine-mapping

Motivated by the difficulties in accessing the individual-level data X, y from most studies, researchers have developed fine-mapping approaches that work with more widely available “summary data.” Here we develop methods that use various combinations of the summary data.

- Vectors $\hat{b} = (\hat{b}_1, \ldots, \hat{b}_J)^\top$ and $\hat{s} = (\hat{s}_1, \ldots, \hat{s}_J)^\top$ containing estimates of marginal association for each SNP j, and corresponding standard errors, from a simple linear regression:

  $$ \hat{b}_j := \frac{x_j^\top y}{x_j^\top x_j}, \tag{4} $$

  $$ \hat{s}_j := \sqrt{\frac{\hat{\sigma}_j^2}{x_j^\top x_j}}, \tag{5} $$

  where $x_j$ is the jth column of X and $\hat{\sigma}_j^2$ is the residual variance estimated in the simple regression of y on $x_j$. An alternative to $(\hat{b}, \hat{s})$ is the vector of z-scores,

  $$ \hat{z}_j := \hat{b}_j / \hat{s}_j. \tag{6} $$

  Many studies provide $\hat{b}$ and $\hat{s}$ (see [22] for examples), and many more provide the z-scores, or data that can be used to compute the z-scores (e.g., $\hat{z}_j$ can be recovered from the p-value and the sign of $\hat{b}_j$ [29]). Note that it is important that all $\hat{b}_j$ and $\hat{s}_j$ be computed from the same N samples.

- An estimate, $\hat{R}$, of the in-sample LD matrix, R, where R is the J × J SNP-by-SNP sample correlation matrix,

  $$ R := D_{xx}^{-1/2} X^\top X D_{xx}^{-1/2}, \tag{7} $$

  and where Dxx ≔ diag(X⊺X) is a diagonal matrix that ensures the diagonal entries of R are all 1. Often, the estimate $\hat{R}$ is taken to be an “out-of-sample” LD matrix; that is, the sample correlation matrix of the same J SNPs in a suitable reference panel, chosen to be genetically similar to the study population, possibly with additional shrinkage or banding steps to improve accuracy [14].

- Optionally, the sample size N and the sample variance of y. (Since y is centered, the sample variance of y is simply vy ≔ y⊺y/N.) Knowing these quantities is obviously equivalent to knowing y⊺y and N, so for brevity we will use the latter. These quantities are not required, but they can be helpful as we will see later.
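As a small illustration of the summary data listed above, the sketch below computes the marginal estimate, standard error and z-score for one SNP, and recovers a z-score from a two-sided p-value and the sign of the estimated effect. Two choices here are assumptions of the sketch rather than statements from the text: the residual variance uses N − 2 degrees of freedom (conventions differ across software), and the p-value is assumed to come from a standard normal reference distribution. Function names are hypothetical.

```python
import math
from statistics import NormalDist

def marginal_stats(x, y):
    """Simple-regression estimate, standard error and z-score for one SNP.

    x and y are centered lists of equal length N. The residual variance uses
    N - 2 degrees of freedom, which is an assumption of this sketch.
    """
    n = len(y)
    xtx = sum(v * v for v in x)
    xty = sum(a * b for a, b in zip(x, y))
    b_hat = xty / xtx
    rss = sum((yi - b_hat * xi) ** 2 for xi, yi in zip(x, y))
    s_hat = math.sqrt(rss / (n - 2) / xtx)
    return b_hat, s_hat, b_hat / s_hat

def z_from_pvalue(p, b_hat):
    """Recover a z-score from a two-sided p-value (0 < p < 1) and sign of b_hat."""
    magnitude = NormalDist().inv_cdf(1.0 - p / 2.0)
    return math.copysign(magnitude, b_hat)

# Tiny made-up example: both vectors are already centered.
x = [-1.5, -0.5, 0.5, 1.5]
y = [-1.0, -1.0, 0.5, 1.5]
b_hat, s_hat, z_hat = marginal_stats(x, y)
z_rec = z_from_pvalue(0.05, -0.3)
```

Very small p-values are often reported as exactly zero, in which case the z-score cannot be recovered this way and the original statistics are needed.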

We caution that if the summary statistics come from a meta-analysis, the summary statistics should be computed carefully to avoid the pitfalls highlighted in [24]. Importantly, SNPs that are not analyzed in all the individual studies in the meta-analysis should not be included in the fine-mapping.

SuSiE with summary data

A key question—and the question central to this paper—is, how do we use summary data to estimate the coefficients b in a multiple linear regression (1)? And, more specifically, how do we use them to estimate the single-effect vectors b1, …, bL in SuSiE (3)? Here, we tackle these questions in two steps. First, we consider a special type of summary data, called “sufficient statistics,” which contain the same information about the model parameters as the individual-level data X, y. Given such sufficient statistics, we develop an algorithm that exactly reproduces the results that would have been obtained by running SuSiE on the original data X, y. Second, we consider the case where we have access to summary data that are not sufficient statistics; these summary data can be used to approximate the sufficient statistics, and therefore approximate the results from individual-level data.

The IBSS-ss algorithm

The IBSS algorithm of [17] fits the SuSiE model to individual-level data X, y. The data enter the SuSiE model only through the likelihood, which from (1) is

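$$ \ell(b, \sigma^2; X, y) := \mathcal{N}_N(y; Xb, \sigma^2 I_N) = (2\pi\sigma^2)^{-N/2} \exp\left\{ -\frac{1}{2\sigma^2} \| y - Xb \|^2 \right\}. \tag{8} $$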

This likelihood depends on the data only through X⊺X, X⊺y, y⊺y and N. Therefore, these quantities are sufficient statistics. (These sufficient statistics can be computed from other combinations of summary data, which are therefore also sufficient statistics; we discuss this point below.) Careful inspection of the IBSS algorithm in [17] confirms that it depends on the data only through these sufficient statistics. Thus, by rearranging the computations we obtain a variant of IBSS, called “IBSS-ss”, that can fit the SuSiE model from sufficient statistics; see S1 Text.

We use IBSS(X, y) to denote the result of applying the IBSS algorithm to the individual-level data, and IBSS-ss(X⊺X, X⊺y, y⊺y, N) to denote the results of applying the IBSS-ss algorithm to the sufficient statistics. These two algorithms will give the same result,

$$ \text{IBSS}(X, y) = \text{IBSS-ss}(X^\top X, X^\top y, y^\top y, N). \tag{9} $$

However, the computational complexity of the two approaches is different. First, computing the sufficient statistics requires computing the J × J matrix X⊺X, which is a non-trivial computation, requiring O(NJ²) operations. However, once this matrix has been computed, IBSS-ss requires O(J²) operations per iteration, whereas IBSS requires O(NJ) operations per iteration. (The number of iterations should be the same.) Therefore, when N ≫ J, which is often the case in fine-mapping studies, IBSS-ss will usually be faster. In practice, choosing between these workflows also depends on whether one prefers to precompute X⊺X, which can be done conveniently in programs such as PLINK [30] or LDstore [31].
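The claim that the likelihood depends on the data only through X⊺X, X⊺y, y⊺y and N can be checked numerically. The sketch below evaluates the Gaussian log-likelihood once from simulated individual-level data and once from the sufficient statistics. The data, the coefficient vector and the function names are made up for illustration; this is not the IBSS-ss algorithm itself, only the likelihood identity it relies on.

```python
import numpy as np

def loglik_individual(b, sigma2, X, y):
    """Log-likelihood of y ~ N(Xb, sigma2 * I), from individual-level data."""
    n = y.shape[0]
    r = y - X @ b
    return -0.5 * n * np.log(2 * np.pi * sigma2) - 0.5 * (r @ r) / sigma2

def loglik_ss(b, sigma2, XtX, Xty, yty, n):
    """The same log-likelihood, written in terms of sufficient statistics."""
    quad = yty - 2.0 * (b @ Xty) + b @ XtX @ b
    return -0.5 * n * np.log(2 * np.pi * sigma2) - 0.5 * quad / sigma2

# Simulated, centered data (illustrative only).
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 4))
X -= X.mean(axis=0)
y = X @ np.array([0.0, 0.5, 0.0, 0.0]) + rng.normal(size=50)
y -= y.mean()

b = np.array([0.1, 0.4, 0.0, -0.2])   # arbitrary point at which to evaluate
exact = loglik_individual(b, 1.3, X, y)
from_ss = loglik_ss(b, 1.3, X.T @ X, X.T @ y, y @ y, len(y))
```

The first function costs O(NJ) per evaluation and the second O(J²), which mirrors the per-iteration costs discussed above.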

SuSiE with summary data: SuSiE-RSS

In practice, sufficient statistics may not be available; in particular, when individual-level data are unavailable, the matrix X⊺X is also usually unavailable. A natural approach to deal with this issue is to approximate the sufficient statistics, then to proceed as if the sufficient statistics were available by inputting the approximate sufficient statistics to the IBSS-ss algorithm. We call this approach “SuSiE-RSS”.

For example, let $\hat{V}_{xx}$ denote an approximation to the sample covariance $V_{xx} := X^\top X / N$, and assume the other sufficient statistics X⊺y, y⊺y, N are available exactly. (These are easily obtained from commonly available summary data; see S1 Text.) Then SuSiE-RSS is the result of running the IBSS-ss algorithm on the sufficient statistics but with $N\hat{V}_{xx}$ replacing X⊺X; that is, SuSiE-RSS is IBSS-ss($N\hat{V}_{xx}$, X⊺y, y⊺y, N).

In practice, we found that estimating σ² sometimes produced very inaccurate estimates, presumably due to inaccuracies in $\hat{V}_{xx}$ as an approximation to Vxx. (This problem did not occur when $\hat{V}_{xx} = V_{xx}$.) Therefore, when running the IBSS-ss algorithm on approximate sufficient statistics, we recommend fixing the residual variance at σ² = y⊺y/N rather than estimating it.

Interpretation in terms of an approximation to the likelihood

We defined SuSiE-RSS as the application of the IBSS-ss algorithm to the sufficient statistics or approximations to these statistics. Conceptually, this approach combines the SuSiE prior with an approximation to the likelihood (8).

To formalize this, we write the likelihood (8) explicitly as a function of the sufficient statistics,

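$$ \ell_{\mathrm{ss}}(b, \sigma^2; X^\top X, X^\top y, y^\top y, N) := (2\pi\sigma^2)^{-N/2} \exp\left\{ -\frac{1}{2\sigma^2} \left( y^\top y - 2 b^\top X^\top y + b^\top X^\top X b \right) \right\}, \tag{10} $$

so that

$$ \ell(b, \sigma^2; X, y) = \ell_{\mathrm{ss}}(b, \sigma^2; X^\top X, X^\top y, y^\top y, N). \tag{11} $$

Replacing Vxx with an estimate $\hat{V}_{xx}$ is therefore the same as replacing the likelihood (11) with

$$ \ell_{\mathrm{RSS}}(b, \sigma^2) := \ell_{\mathrm{ss}}(b, \sigma^2; N\hat{V}_{xx}, X^\top y, y^\top y, N). \tag{12} $$

Note that when $\hat{V}_{xx} = V_{xx}$, the approximation is exact; that is, ℓRSS(b, σ²) = ℓ(b, σ²; X, y). Thus, applying SuSiE-RSS with Vxx is equivalent to using the individual-data likelihood (8), and applying it with $\hat{V}_{xx}$ is equivalent to using the approximate likelihood (12). Finally, fixing $\sigma^2 = y^\top y / N$ is equivalent to using the following likelihood:

$$ \ell_{\mathrm{RSS}}(b) := \ell_{\mathrm{ss}}(b, y^\top y / N; N\hat{V}_{xx}, X^\top y, y^\top y, N). \tag{13} $$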

General strategy for applying regression methods to summary data

The strategy used here to extend SuSiE to summary data is quite general, and could be used to extend essentially any likelihood-based multiple regression method for individual-level data X, y to summary data. Operationally, this strategy would involve two steps: (i) implement an algorithm that accepts as input sufficient statistics and outputs the same result as the individual-level data; (ii) apply this algorithm to approximations of the sufficient statistics computed from (non-sufficient) summary data (optionally, fixing the residual variance to σ² = y⊺y/N). This involves replacing the exact likelihood (8) with an approximate likelihood, either (12) or (13).

Special case when X, y are standardized

In genetic association studies, it is common practice to standardize both y and the columns of X to have unit variance (that is, y⊺y = N and $x_j^\top x_j = N$ for all j = 1, …, J) before fitting the model (1). Standardization results in some simplifications that facilitate connections with existing methods, so we consider this special case in detail. (See [32, 33] for a discussion on the choice to standardize.)

When X, y are standardized, the sufficient statistics are easily computed from the in-sample LD matrix R, the single-SNP z-scores $\hat{z}$, and the sample size, N:

$$ X^\top X = N R, \tag{14} $$

$$ X^\top y = \sqrt{N}\, \tilde{z}, \tag{15} $$

$$ y^\top y = N, \tag{16} $$

where we define

$$ \tilde{z} := D_z^{1/2} \hat{z}, \tag{17} $$

and we define Dz to be the diagonal matrix in which the jth diagonal element is $N / (N + \hat{z}_j^2)$ [21]. Note the elements of Dz have the interpretation as being one minus the estimated PVE (“Proportion of phenotypic Variance Explained”), so we refer to $\tilde{z}$ as the vector of the “PVE-adjusted z-scores.” If all the effects are small, the estimated PVEs will be close to zero, the diagonal of Dz will be close to one, and $\tilde{z} \approx \hat{z}$.
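A minimal sketch of the PVE adjustment follows. It assumes the jth diagonal element of Dz is N/(N + ẑj²), so each z-score is multiplied by the square root of one minus its estimated PVE; software implementations may use slightly different finite-sample constants. The function name and the example values are illustrative.

```python
import math

def pve_adjusted_z(z, n):
    """Multiply each z-score by sqrt(n / (n + z^2)), i.e. sqrt(1 - estimated PVE)."""
    return [zj * math.sqrt(n / (n + zj * zj)) for zj in z]

# Made-up z-scores: two small effects and one very strong association.
z_hat = [0.5, -2.0, 12.0]
z_tilde = pve_adjusted_z(z_hat, n=1000)
```

With N = 1000, the small z-scores are almost unchanged, while the z-score of 12 is shrunk noticeably, which is the situation where ignoring the adjustment matters most.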

Substituting Eqs (14)–(16) into (11) gives

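$$ \ell(b, \sigma^2; X, y) = (2\pi\sigma^2)^{-N/2} \exp\left\{ -\frac{N}{2\sigma^2} \left( 1 - \frac{2}{\sqrt{N}}\, b^\top \tilde{z} + b^\top R\, b \right) \right\}. \tag{18} $$

When the in-sample LD matrix R is not available, and is replaced with $\hat{R}$, the SuSiE-RSS likelihood (13) becomes

$$ \ell_{\mathrm{RSS}}(b; \tilde{z}, \hat{R}, N) := (2\pi)^{-N/2} \exp\left\{ -\frac{N}{2} \left( 1 - \frac{2}{\sqrt{N}}\, b^\top \tilde{z} + b^\top \hat{R}\, b \right) \right\}. \tag{19} $$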

These expressions are summarized in Table 1.

Table 1. Summary of SuSiE and SuSiE-RSS, the different data they accept, and the corresponding likelihoods.

In the “likelihood” column, $\tilde{z}$ is the vector of adjusted z-scores; see (17). In this summary, we assume X, y are standardized, which is common practice in genetic association studies. Note that when SuSiE-RSS is applied to sufficient statistics and σ² is estimated (second row), the likelihood is identical to the likelihood for SuSiE applied to the individual-level data (first row). See https://stephenslab.github.io/susieR/articles/susie_rss.html for an illustration of how these methods are invoked in the R package susieR.

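| method | data type | data | σ² | likelihood | algorithm |
|---|---|---|---|---|---|
| SuSiE | individual | X, y | fit | ℓ(b, σ²) = ℓ(b, σ²; X, y) | IBSS |
| SuSiE-RSS | sufficient | $\hat{z}$, R, N | fit | $\ell(b, \sigma^2; \tilde{z}, R, N)$, Eq (18) | IBSS-ss |
| SuSiE-RSS | summary | $\hat{z}$, $\hat{R}$, N | 1 | $\ell_{\mathrm{RSS}}(b; \tilde{z}, \hat{R}, N)$, Eq (19) | IBSS-ss |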

Connections with previous work

The approach we take here is most closely connected with the approach used in FINEMAP (versions 1.2 and later) [21]. In essence, FINEMAP 1.2 uses the same likelihoods (18, 19) as we use here, but the derivations in [21] do not clearly distinguish the case where the in-sample LD matrix is available from the case where it is not. In addition, the derivations in [21] focus on Bayes Factors computed with particular priors, rather than focussing on the likelihood. Our derivations emphasize that, when the in-sample LD matrix is available, results from “summary data” should be identical to those that would have been obtained from individual-level data. Our focus on likelihoods draws attention to the generality of this strategy; it is not specific to a particular prior, nor does it require the use of Bayesian methods.

Several other previous fine-mapping methods (e.g., [7, 8, 12, 16]) are based on the following model:

$$ \hat{z} \mid z, \hat{R} \sim \mathcal{N}_J(\hat{R} z, \hat{R}), \tag{20} $$

where z = (z1, …, zJ)⊺ is an unobserved vector of scaled effects, sometimes called the noncentrality parameters (NCPs),

$$ z_j := \sqrt{N}\, b_j / \sigma. \tag{21} $$

(Earlier versions of SuSiE-RSS were also based on this model [34].) To connect our method with this approach, note that, when $\hat{R}$ is invertible, the likelihood (19) is equivalent to the likelihood for b in the following model:

$$ \tilde{z} \mid z, \hat{R} \sim \mathcal{N}_J(\hat{R} z, \hat{R}). \tag{22} $$

(See S1 Text for additional notes.) This model was also used in Zhu and Stephens [23], where the derivation was based on the PVE-adjusted standard errors, which gives the same PVE-adjusted z-scores. Model (22) is essentially the same as (20) but with the observed z-scores, $\hat{z}$, replaced with the PVE-adjusted z-scores, $\tilde{z}$. In other words, when $\hat{R}$ is invertible, these previous approaches are the same as our approach except that they use the z-scores, $\hat{z}$, instead of the PVE-adjusted z-scores, $\tilde{z}$. Thus, these previous approaches are implicitly making the approximation $X^\top y \approx \sqrt{N}\, \hat{z}$, whereas our approach uses the identity $X^\top y = \sqrt{N}\, \tilde{z}$ (Eq 15). If all effect sizes are small (i.e., PVE ≈ 0 for all SNPs), then $\tilde{z} \approx \hat{z}$, and the approximation will be close to exact; on the other hand, if the PVE is not close to zero for one or more SNPs, then the use of the PVE-adjusted z-scores is preferred [21]. Note that the PVE-adjusted z-scores require knowledge of N; in rare cases where N is unknown, replacing $\tilde{z}$ with $\hat{z}$ may be an acceptable approximation.

Approaches to dealing with a non-invertible LD matrix

One complication that can arise in working directly with models (20) or (22) is that $\hat{R}$ is often not invertible. For example, if $\hat{R}$ is the sample correlation matrix from a reference panel, $\hat{R}$ will be singular (i.e., not invertible) whenever the number of individuals in the panel is less than J, or whenever any two SNPs are in complete LD in the panel. In such cases, these models do not have a density (with respect to the Lebesgue measure). Methods using (20) have therefore required workarounds to deal with this issue. One approach is to modify (“regularize”) $\hat{R}$ to be invertible by adding a small, positive constant to the diagonal [7]. In another approach, the data are transformed into a lower-dimensional space [35, 36], which is equivalent to replacing $\hat{R}^{-1}$ with the pseudoinverse of $\hat{R}$ (see S1 Text). Our approach is to use the likelihood (19), which circumvents these issues because the likelihood is defined whether or not $\hat{R}$ is invertible. (The likelihood is defined even if $\hat{R}$ is not positive semi-definite, but its use in that case may be problematic as the likelihood may be unbounded; see [37].) This approach has several advantages over the data transformation approach: it is simpler; it does not involve inversion or factorization of a (possibly very large) J × J matrix; and it preserves the property that results under the SER model do not depend on LD (see Results and S1 Text). Also note that this approach can be combined with modifications to $\hat{R}$, such as adding a small constant to the diagonal. The benefits of regularizing $\hat{R}$ are investigated in the experiments below.
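The point that likelihood (19) remains well defined for a singular LD matrix can be illustrated with a short sketch. It assumes standardized data with σ² = 1, so that the sufficient statistics are N times the LD matrix, √N times the PVE-adjusted z-scores, and N; the z-scores, sample size and effect sizes below are made-up values, and the function name is hypothetical.

```python
import numpy as np

def loglik_rss(b, z_tilde, R_hat, n):
    """Log of the summary-data likelihood with sigma^2 = 1 (standardized data).

    Only matrix-vector products with R_hat are needed; no inverse is taken.
    """
    quad = n - 2.0 * np.sqrt(n) * (b @ z_tilde) + n * (b @ R_hat @ b)
    return -0.5 * n * np.log(2 * np.pi) - 0.5 * quad

R_hat = np.ones((2, 2))            # two SNPs in complete LD: a singular matrix
z_tilde = np.array([3.0, 4.0])     # illustrative values only
n = 1000
b1 = np.array([0.1, 0.0])          # effect placed on SNP 1
b2 = np.array([0.0, 0.1])          # same effect placed on SNP 2
ll1 = loglik_rss(b1, z_tilde, R_hat, n)
ll2 = loglik_rss(b2, z_tilde, R_hat, n)
```

Both log-likelihoods are finite even though the matrix has determinant zero, and the configuration placing the effect on the SNP with the larger z-score receives the higher likelihood.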

New refinement procedure for more accurate CSs

As noted in [17], the IBSS algorithm can sometimes converge to a poor solution (a local optimum of the ELBO). Although this is rare, it can produce misleading results when it does occur; in particular it can produce false positive CSs (i.e., CSs containing only null SNPs that have zero effect). To address this issue, we developed a simple refinement procedure for escaping local optima. The procedure is heuristic, and is not guaranteed to eliminate all convergence issues, but in practice it often helps in those rare cases where the original IBSS had problems. The refinement procedure applies equally to both individual-level data and summary data.

In brief, the refinement procedure involves two steps: first, fit a SuSiE model by running the IBSS algorithm to convergence; next, for each CS identified from the fitted SuSiE model, rerun IBSS to convergence after first removing all SNPs in the CS (which forces the algorithm to seek alternative explanations for observed associations), then try to improve this fit by running IBSS to convergence again, with all SNPs. If these refinement steps improve the objective function, the new solution is accepted; otherwise, the original solution is kept. This process is repeated until the refinement steps no longer make any improvements to the objective. By construction, this refinement procedure always produces a solution whose objective is at least as good as the original IBSS solution. For full details, see S1 Text.

Because the refinement procedure reruns IBSS for each CS discovered in the initial round of model fitting, the computation increases with the number of CSs identified. In data sets with many CSs, the refinement procedure may be quite time consuming.
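The control flow of the refinement procedure can be sketched as follows. This is one reading of the description above, not the implementation in S1 Text or in susieR: `fit_ibss` is a hypothetical callable standing in for an IBSS run, its return format (a dict with an ELBO and a list of CSs) is assumed, and choosing the best candidate across all CSs in each round is a design choice of this sketch. The stub `toy_fit` exists only so the example runs.

```python
def refine(fit_ibss, all_snps):
    """Heuristic refinement loop around a user-supplied IBSS fitting routine.

    fit_ibss(snps, init) is a hypothetical callable returning a dict with keys
    "elbo" (float) and "cs" (list of sets of SNPs); init is a previous fit
    used for initialization, or None.
    """
    best = fit_ibss(all_snps, None)
    improved = True
    while improved:
        improved = False
        candidates = []
        for cs in best["cs"]:
            # Step 1: refit after removing the SNPs in this CS.
            reduced = [s for s in all_snps if s not in cs]
            fit_reduced = fit_ibss(reduced, None)
            # Step 2: refit with all SNPs, initialized from the reduced fit.
            candidates.append(fit_ibss(all_snps, fit_reduced))
        if candidates:
            challenger = max(candidates, key=lambda f: f["elbo"])
            # Accept only strict improvements, so the objective never decreases.
            if challenger["elbo"] > best["elbo"]:
                best, improved = challenger, True
    return best

def toy_fit(snps, init):
    """Stand-in for IBSS: a cold start reaches a worse optimum than a warm start."""
    if init is None:
        return {"elbo": -10.0, "cs": [{snps[0]}]}
    return {"elbo": -5.0, "cs": [{1}]}

result = refine(toy_fit, [0, 1, 2])
```

Each round costs two IBSS runs per CS, which is why the procedure becomes expensive when many CSs are found.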

Other improvements to fine-mapping with summary data

Here we introduce additional methods to improve accuracy of fine-mapping with summary data. These methods are not specific to SuSiE and can be used with other fine-mapping methods.

Regularization to improve consistency of the estimated LD matrix

Accurate fine-mapping requires $\hat{R}$ to be an accurate estimate of R. When $\hat{R}$ is computed from a reference panel, the reference panel should not be too small [31], and should be of similar ancestry to the study sample. Even when a suitable panel is used, there will inevitably be differences between $\hat{R}$ and R. A common way to improve estimation of covariance matrices is to use regularization [38], replacing $\hat{R}$ with $\hat{R}_\lambda$,

$$ \hat{R}_\lambda := (1 - \lambda) \hat{R} + \lambda I, \tag{23} $$

where $\hat{R}$ is the sample correlation matrix computed from the reference panel, and λ ∈ [0, 1] controls the amount of regularization. This strategy has previously been used in fine-mapping from summary data (e.g., [8, 37, 39]), but in previous work λ was usually fixed at some arbitrarily small value, or chosen via cross-validation. Here, we estimate λ by maximizing the likelihood under the null (z = 0),

$$ \hat{\lambda} := \underset{\lambda \in [0, 1]}{\operatorname{argmax}}\; \mathcal{N}_J\big(\tilde{z};\, 0,\, (1 - \lambda) \hat{R} + \lambda I\big). \tag{24} $$

The estimated $\hat{\lambda}$ reflects the consistency between the (PVE-adjusted) z-scores and the LD matrix $\hat{R}$; if the two are consistent with one another, $\hat{\lambda}$ will be close to zero.
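One simple way to carry out this maximization is a grid search over λ, sketched below. The sketch assumes the regularized matrix is (1 − λ) times the LD estimate plus λ times the identity, so a single eigendecomposition of the LD estimate gives the eigenvalues for every λ. The grid search, the function name and the two-SNP example are illustrative choices, not a description of any package's optimizer.

```python
import numpy as np

def estimate_lambda(z_tilde, R_hat, grid_size=1001):
    """Grid-search MLE of lambda under z_tilde ~ N(0, (1 - lambda) R_hat + lambda I)."""
    d, U = np.linalg.eigh(R_hat)
    d = np.clip(d, 0.0, None)          # guard against tiny negative eigenvalues
    u2 = (U.T @ z_tilde) ** 2          # squared projections onto the eigenvectors
    best_lam, best_ll = 0.0, -np.inf
    for lam in np.linspace(0.0, 1.0, grid_size):
        v = (1.0 - lam) * d + lam      # eigenvalues of the regularized matrix
        if np.any(v <= 0.0):
            continue                   # singular at this lambda, so skip it
        # Gaussian log-density up to an additive constant.
        ll = -0.5 * (np.sum(np.log(v)) + np.sum(u2 / v))
        if ll > best_ll:
            best_lam, best_ll = lam, ll
    return best_lam

R_hat = np.array([[1.0, 0.9], [0.9, 1.0]])
lam_ok = estimate_lambda(np.array([3.0, 2.9]), R_hat)    # z-scores agree with LD
lam_bad = estimate_lambda(np.array([3.0, -3.0]), R_hat)  # z-scores contradict LD
```

In this toy case the consistent z-scores give an estimate at or near zero, while the contradictory pair pushes the estimate toward one.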

Detecting and removing large inconsistencies in summary data

Regularizing $\hat{R}$ can help to address subtle inconsistencies between $\hat{R}$ and R. However, regularization does not deal well with large inconsistencies in the summary data, which, in our experience, occur often. One common source of such inconsistencies is an “allele flip” in which the alleles of a SNP are encoded one way in the study sample (used to compute $\hat{z}$) and in a different way in the reference panel (used to compute $\hat{R}$). Large inconsistencies can also arise from using z-scores that were obtained using different samples at different SNPs (which should be avoided by performing genotype imputation [23]). Anecdotally, we have found large inconsistencies like these often cause SuSiE to converge very slowly and produce misleading results, such as an unexpectedly large number of CSs, or two CSs containing SNPs that are in strong LD with each other. We have therefore developed diagnostics to help users detect such anomalous data. (We note that similar ideas were proposed in the recent paper [40].)

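Under model (22), the conditional distribution of $\tilde{z}_j$ given the other PVE-adjusted z-scores is

$$ \tilde{z}_j \mid \tilde{z}_{-j}, b_j \sim \mathcal{N}\left( \frac{\sqrt{N}\, b_j}{\Omega_{jj}} - \frac{1}{\Omega_{jj}} \Omega_{j,-j}\, \tilde{z}_{-j},\; \frac{1}{\Omega_{jj}} \right), \tag{25} $$

where $\Omega := \hat{R}^{-1}$, $\tilde{z}_{-j}$ denotes the vector $\tilde{z}$ excluding $\tilde{z}_j$, and Ωj,−j denotes the jth row of Ω excluding Ωjj. This conditional distribution depends on the unknown bj. However, provided that the effect of SNP j is small (i.e., bj ≈ 0), or that SNP j is in strong LD with other SNPs, which implies 1/Ωjj ≈ 0, we can approximate (25) by

$$ \tilde{z}_j \mid \tilde{z}_{-j} \sim \mathcal{N}\left( -\frac{1}{\Omega_{jj}} \Omega_{j,-j}\, \tilde{z}_{-j},\; \frac{1}{\Omega_{jj}} \right). \tag{26} $$

This distribution has been previously used to impute z-scores [41], and it is also used in DENTIST [40].

An initial quality control check can be performed by plotting the observed $\tilde{z}_j$ against its conditional expectation in (26), with large deviations potentially indicating anomalous z-scores. Since computing these conditional expectations involves the inverse of $\hat{R}$, this matrix must be invertible. When $\hat{R}$ is not invertible, we replace $\hat{R}$ with the regularized (and invertible) matrix $\hat{R}_{\hat{\lambda}}$ following the steps described above. Note that while we have written (25) and (26) in terms of the PVE-adjusted z-scores, $\tilde{z}$, it is valid to use the same expressions for the unadjusted z-scores, $\hat{z}$, so long as the effect sizes are small (DENTIST uses z-scores instead of the PVE-adjusted z-scores).

A more quantitative measure of the discordance of $\tilde{z}_j$ with its expectation under the model can be obtained by computing standardized differences between the observed and expected values,

$$ t_j := \frac{\tilde{z}_j - \mathrm{E}[\tilde{z}_j \mid \tilde{z}_{-j}]}{\sqrt{\mathrm{Var}(\tilde{z}_j \mid \tilde{z}_{-j})}} = \sqrt{\Omega_{jj}} \left( \tilde{z}_j + \frac{1}{\Omega_{jj}} \Omega_{j,-j}\, \tilde{z}_{-j} \right). \tag{27} $$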

SNPs j with largest tj (in magnitude) are most likely to violate the model assumptions, and are therefore the top candidates for followup. When any such candidates are detected, the user should check the data pre-processing steps and fix any errors that cause inconsistencies in summary data. If there is no way to fix the errors, removing the anomalous SNPs is a possible workaround. Sometimes removing a single SNP is enough to resolve the discrepancies—for example, a single allele flip can result in inconsistent z-scores among many SNPs in LD with the allele-flip SNP. We have also developed a likelihood-ratio statistic based on (26) specifically for identifying allele flips; see S1 Text for a derivation of this likelihood ratio and an empirical assessment of its ability to identify allele-flip SNPs in simulations. After one or more SNPs are removed, one should consider re-running these diagnostics on the filtered summary data to search for additional inconsistencies that may have been missed in the first round. Alternatively, DENTIST provides a more automated approach to filtering out inconsistent SNPs [40].

We caution that computing these diagnostics requires inverting or factorizing a J × J matrix, and may therefore involve a large computational expense—potentially a greater expense than the fine-mapping itself—when J, the number of SNPs, is large.
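The diagnostic described in this section can be sketched in a few lines. The sketch assumes the conditional mean of each z-score given the others is minus the off-diagonal part of the precision-matrix row applied to the other z-scores, divided by the diagonal entry, with conditional variance equal to the reciprocal of that diagonal entry. The optional `lam` argument applies the diagonal regularization before inversion. The function name, the three-SNP LD matrix and the z-scores are made up for illustration.

```python
import numpy as np

def zscore_diagnostics(z, R_hat, lam=0.0):
    """Conditional means and standardized differences t_j for each z-score."""
    J = len(z)
    R_reg = (1.0 - lam) * R_hat + lam * np.eye(J)   # regularize if needed
    omega = np.linalg.inv(R_reg)                    # precision matrix
    omega_diag = np.diag(omega)
    # Omega_{j,-j} z_{-j} equals (Omega z)_j minus the diagonal contribution.
    off_diag = omega @ z - omega_diag * z
    expected = -off_diag / omega_diag
    t = (z - expected) * np.sqrt(omega_diag)
    return expected, t

# Three SNPs in strong LD (made-up values).
R_hat = np.array([[1.0, 0.9, 0.81],
                  [0.9, 1.0, 0.9],
                  [0.81, 0.9, 1.0]])
z_ok = np.array([5.0, 5.2, 5.0])
z_flip = np.array([5.0, -5.2, 5.0])   # sign of the second SNP reversed
_, t_ok = zscore_diagnostics(z_ok, R_hat)
_, t_flip = zscore_diagnostics(z_flip, R_hat)
```

In this toy case the flipped SNP has the largest standardized difference, and its neighbors also show inflated values, which matches the observation that one allele flip can make many nearby z-scores look inconsistent.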

Verification and comparison

Fine-mapping with inconsistent summary data and a non-invertible LD matrix: An illustration

A technical issue that arises when developing fine-mapping methods for summary data is that the LD matrix is often not invertible. Several approaches to dealing with this have been suggested including modifying the LD matrix to be invertible, transforming the data into a lower-dimensional space, or replacing the inverse with the “pseudoinverse” (see “Approaches to dealing with a non-invertible LD matrix” above). In SuSiE-RSS, we avoid this issue by directly approximating the likelihood, so SuSiE-RSS does not require the LD matrix to be invertible. We summarize the theoretical relationships between these approaches in S1 Text. Here we illustrate the practical advantage of the SuSiE-RSS approach in a toy example.

Consider a very simple situation with two SNPs, in strong LD with each other, with observed z-scores $\hat{z} = (\hat{z}_1, \hat{z}_2)^\top$. Both SNPs are significant, but the second SNP is more significant. Under the assumption that exactly one of these SNPs has an effect (which allows for exact posterior computations), the second SNP is the better candidate, and should have a higher PIP. Further, we expect the PIPs to be unaffected by LD between the SNPs (see S1 Text). However, the transformation and pseudoinverse approaches, which are used by msCAVIAR [42] and in previous fine-mapping analyses [35, 36], and are also used in DENTIST to detect inconsistencies in summary data [40], do not guarantee that either of these properties is satisfied. For example, suppose the two SNPs are in complete LD in the reference panel, so $\hat{R}$ is a 2 × 2 (non-invertible) matrix with all entries equal to 1. Here, $\hat{R}$ is inconsistent with the observed $\hat{z}$ because complete LD between SNPs implies their z-scores should be identical. (This could happen if the LD in the reference panel used to compute $\hat{R}$ is slightly different from the LD in the association study.) The transformation approach effectively adjusts the observed data $\hat{z}$ to be consistent with the LD matrix before drawing inferences; here it would adjust $\hat{z}$ so that its two entries are equal, removing the observed difference between the SNPs and forcing them to be equally significant, which seems undesirable. The pseudoinverse approach turns out to be equivalent to the transformation approach (see S1 Text), and so behaves the same way. In contrast, our approach avoids this behaviour, and correctly maintains the second SNP as the better candidate; applying SuSiE-RSS to this toy example yields PIPs of 0.0017 for the first SNP and 0.9983 for the second SNP, and a single CS containing the second SNP only. To reproduce this result, see the examples accompanying the susie_rss function in the susieR R package.

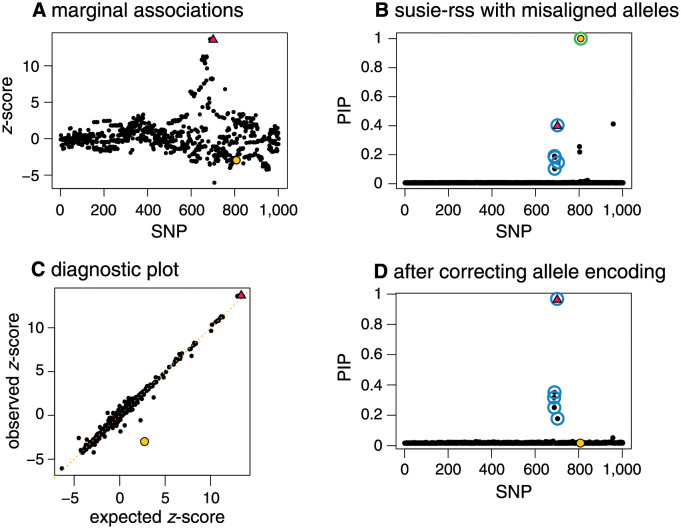

Effect of allele flips on accuracy of fine-mapping: An illustration

When fine-mapping is performed using z-scores from a study sample and an LD matrix from a different reference sample, it is crucial that the same allele encodings are used. In our experience, “allele flips,” in which different allele encodings are used in the two samples, are a common source of fine-mapping problems. Here we use a simple simulation to illustrate this problem, and the steps we have implemented to diagnose and correct the problem.

We simulated a fine-mapping data set with 1,002 SNPs, in which one out of the 1,002 SNPs was causal, and we deliberately used different allele encodings in the study sample and reference panel for a non-causal SNP (see S1 Text for more details). The causal SNP is among the SNPs with the highest z-scores (Fig 1, Panel A), and SuSiE-RSS correctly includes this causal SNP in a CS (Fig 1, Panel B). However, SuSiE-RSS also wrongly includes the allele-flip SNP in a second CS (Fig 1, Panel B). This happens because the LD between the allele-flip SNP and other SNPs is incorrectly estimated. Fig 1, Panel C shows a diagnostic plot comparing each z-score against its expected value under model (22). The allele-flip SNP stands out as a likely outlier (yellow circle), and the likelihood ratio calculations identify this SNP as a likely allele flip: LR = 8.2 × 10³ for the allele-flip SNP, whereas all the other 262 SNPs with z-scores greater than 2 in magnitude have likelihood ratios less than 1. (See S1 Text for a more systematic assessment of the use of these likelihood ratios for identifying allele-flip SNPs.) After correcting the allele encoding to be the same in the study and reference samples, SuSiE-RSS infers a single CS containing the causal SNP, and the allele-flip SNP is no longer included in a CS; see Fig 1, Panel D.
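The idea behind the diagnostic can be illustrated with a deliberately simplified two-SNP calculation. The sketch below is not the likelihood ratio defined in S1 Text; it assumes only two SNPs with correlation `r` (with |r| < 1), that SNP j has no effect of its own, and that the z-scores are jointly normal with the LD matrix as covariance, so that z_j given the other z-score is normal with mean r × z_other and variance 1 − r². The function names are hypothetical.

```python
import math

def normal_logpdf(x, mean, var):
    """Log density of a normal distribution with the given mean and variance."""
    return -0.5 * (math.log(2.0 * math.pi * var) + (x - mean) ** 2 / var)

def expected_z(z_other, r):
    """Conditional mean and variance of z_j given one neighbouring z-score,
    for two SNPs with correlation r, assuming SNP j has no effect itself."""
    return r * z_other, 1.0 - r ** 2

def flip_log_lr(z_j, z_other, r):
    """Log likelihood ratio comparing the sign-flipped z_j with the observed z_j.
    Positive values favour the hypothesis that the allele encoding is flipped."""
    mean, var = expected_z(z_other, r)
    return normal_logpdf(-z_j, mean, var) - normal_logpdf(z_j, mean, var)

# Two SNPs in strong positive LD (r = 0.9); the neighbour has z = 5.
consistent = flip_log_lr(4.2, 5.0, 0.9)   # sign agrees with the LD pattern
flipped = flip_log_lr(-4.2, 5.0, 0.9)     # sign contradicts the LD pattern
print(consistent, flipped)
```

In this simplified setting, a z-score whose sign contradicts what the LD pattern predicts yields a large positive log likelihood ratio, which mirrors how the allele-flip SNP stands out in the diagnostic plot.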

Fig 1. Example illustrating importance of identifying and correcting allele flips in fine-mapping.

In this simulated example, one SNP (red triangle) affects the phenotype, and one SNP (yellow circle) has a different allele encoding in the study sample (the data used to compute the z-scores) and the reference panel (the data used to compute the LD matrix). Panel A shows the z-scores for all 1,002 SNPs. Panel B summarizes the results of running SuSiE-RSS on the summary data; SuSiE-RSS identifies a true positive CS (blue circles) containing the true causal SNP, and a false positive CS (green circles) that incorrectly contains the mismatched SNP. The mismatched SNP is also incorrectly estimated to have an effect on the phenotype with high probability (PIP = 1.00). The diagnostic plot (Panel C) compares the observed z-scores against the expected z-scores. In this plot, the mismatched SNP (yellow circle) shows the largest difference between observed and expected z-scores, and therefore appears furthest away from the diagonal. After fixing the allele encoding and recomputing the summary data, SuSiE-RSS identifies a single true positive CS (blue circles) containing the true-causal SNP (red triangle), and the formerly mismatched SNP is (correctly) not included in a CS (Panel D). This example is implemented as a vignette in the susieR package.

Simulations using UK Biobank genotypes

To systematically compare our new methods with existing methods for fine-mapping, we simulated fine-mapping data sets using the UK Biobank imputed genotypes [43]. The UK Biobank imputed genotypes are well suited to illustrate fine-mapping with summary data due to the large sample size, and the high density of available genetic variants after imputation. We randomly selected 200 regions on autosomal chromosomes for fine-mapping, such that each region contained roughly 1,000 SNPs (390 kb on average). Due to the high density of SNPs, these data sets often contain strong correlations among SNPs; on average, a data set contained 30 SNPs with correlation exceeding 0.9 with at least one other SNP, and 14 SNPs with correlations exceeding 0.99 with at least one other SNP.

For each of the 200 regions, we simulated a quantitative trait under the multiple regression model (1) with X comprising genotypes of 50,000 randomly selected UK Biobank samples, and with 1, 2 or 3 causal variants explaining a total of 0.5% of variation in the trait (total PVE of 0.5%). In total, we simulated 200 × 3 = 600 data sets. We computed summary data from the real genotypes and synthetic phenotypes. To compare how choice of LD matrix affects fine-mapping, we used three different LD matrices: an in-sample LD matrix computed from the 50,000 individuals (R), and two out-of-sample LD matrices computed from randomly sampled reference panels of 500 or 1,000 individuals. The samples randomly chosen for each reference panel had no overlap with the study sample but were drawn from the same population, which mimicked a situation where the reference sample was well matched to the study sample.
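To make the role of the total PVE concrete, the following Python sketch shows one way to choose the residual variance so that the genetic component explains a target proportion of trait variance. It is an illustration only, not the simulation code used here (see S1 Text and the linked repository for that); it assumes PVE is defined as var(Xb)/var(y) with noise independent of Xb, so that the residual variance equals var(Xb)(1 − PVE)/PVE. The genotypes, effects and function names are made up for the example.

```python
import math
import random
import statistics

def residual_variance_for_pve(genetic_values, pve):
    """Residual variance such that var(g) / (var(g) + sigma^2) equals pve."""
    var_g = statistics.pvariance(genetic_values)
    return var_g * (1.0 - pve) / pve

def simulate_trait(genotypes, effects, pve, rng):
    """Simulate y = X b + e, with e scaled to achieve the target PVE."""
    g = [sum(b * x for b, x in zip(effects, row)) for row in genotypes]
    sigma = math.sqrt(residual_variance_for_pve(g, pve))
    return [gi + rng.gauss(0.0, sigma) for gi in g]

rng = random.Random(1)
# Toy genotype matrix: 200 individuals, 5 SNPs coded 0/1/2 (made-up data).
genotypes = [[rng.choice([0, 1, 2]) for _ in range(5)] for _ in range(200)]
effects = [0.0, 1.0, 0.0, 0.0, -0.5]   # two causal SNPs (illustrative)
y = simulate_trait(genotypes, effects, pve=0.005, rng=rng)
```

With a PVE as small as 0.5%, the residual variance is roughly 200 times the genetic variance, which is why large samples are needed to detect such effects.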

Refining SuSiE model fits improves fine-mapping performance

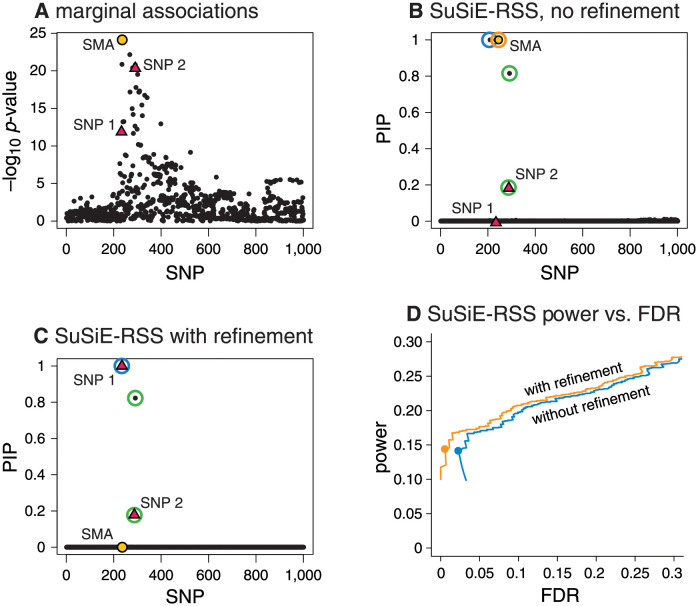

Before comparing the methods, we first demonstrate the benefits of our new refinement procedure for improving SuSiE model fits. Fig 2 shows an example drawn from our simulations where the regular IBSS algorithm converges to a poor solution and our refinement procedure improves the solution. The example has two causal SNPs in moderate LD with one another, which have opposite effects that partially cancel out each others’ marginal associations (Panel A). This example is challenging because the SNP with the strongest marginal association (SMA) is not in high LD with either causal SNP; it is in moderate LD with the first causal SNP, and low LD with the second causal SNP. Although [17] showed that the IBSS algorithm can sometimes deal well with such situations, that does not happen in this case; the IBSS algorithm yields three CSs, two of which are false positives that do not contain a causal SNP (Panel B). Applying our refinement procedure solves the problem; it yields a solution with higher objective function (ELBO), and with two CSs, each containing one of the causal SNPs (Panel C).

Fig 2. Refining SuSiE model fits improves fine-mapping accuracy.

Panels A, B and C show a single example, drawn from our simulations, that illustrates how refining a SuSiE-RSS model fit improves fine-mapping accuracy. In this example, there are 1,001 candidate SNPs, and two SNPs (red triangles “SNP 1” and “SNP 2”) explain variation in the simulated phenotype. The strongest marginal association (yellow circle, “SMA”) is not a causal SNP. Without refinement, the IBSS-ss algorithm (applied to sufficient statistics, with estimated σ²) returns a SuSiE-RSS fit identifying three 95% CSs (blue, green and orange circles); two of the CSs (blue, orange) are false positives containing no true effect SNP, one of these CSs contains the SMA (orange), and no CS includes SNP 1. After running the refinement procedure, the fit is much improved, as measured by the “evidence lower bound” (ELBO); it increases the ELBO by 19.06 (−70837.09 vs. −70818.03). The new SuSiE-RSS fit (Panel C) identifies two 95% CSs (blue and green circles), each containing a true causal SNP, and neither contains the SMA. Panel D summarizes the improvement in fine-mapping across all 600 simulations; it shows power and false discovery rate (FDR) for SuSiE-RSS with and without using the refinement procedure as the PIP threshold for reporting causal SNPs is varied from 0 to 1. (This plot is the same as a precision-recall curve after flipping the x-axis because precision = 1 − FDR and recall = power.) Circles are drawn at a PIP threshold of 0.95.

Although this sort of problem was not common in our simulations, it occurred often enough that the refinement procedure yielded a noticeable improvement in performance across many simulations (Fig 2, Panel D). In this plot, power and false discovery rate (FDR) are calculated as power = TP/(TP + FN) and FDR = FP/(TP + FP), where FP, TP, FN, TN denote, respectively, the number of false positives, true positives, false negatives and true negatives. In our remaining experiments, we therefore always ran SuSiE-RSS with refinement.
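The power and FDR calculation at a given PIP threshold can be sketched as follows. This is a minimal illustration, assuming that a SNP is reported when its PIP is greater than or equal to the threshold (whether the comparison is strict is an assumption) and that FDR is taken to be 0 when nothing is reported; the PIPs and causal labels are made up.

```python
def power_and_fdr(pips, is_causal, threshold):
    """Power = TP / (TP + FN) and FDR = FP / (TP + FP) at a PIP threshold."""
    tp = fp = fn = 0
    for pip, causal in zip(pips, is_causal):
        reported = pip >= threshold
        if reported and causal:
            tp += 1
        elif reported:
            fp += 1
        elif causal:
            fn += 1
    power = tp / (tp + fn) if tp + fn > 0 else float("nan")
    fdr = fp / (tp + fp) if tp + fp > 0 else 0.0
    return power, fdr

# Made-up PIPs for five SNPs, two of which are truly causal.
pips = [0.99, 0.97, 0.60, 0.10, 0.02]
is_causal = [True, False, True, False, False]

strict = power_and_fdr(pips, is_causal, 0.95)    # one TP, one FP, one FN
lenient = power_and_fdr(pips, is_causal, 0.50)   # two TPs, one FP, no FN
print(strict, lenient)
```

Sweeping the threshold from 0 to 1 and plotting the resulting (FDR, power) pairs gives a curve of the kind shown in the power vs. FDR plots.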

Impact of LD accuracy on fine-mapping

We performed simulations to compare SuSiE-RSS with several other fine-mapping methods for summary data: FINEMAP [12, 21], DAP-G [14, 16] and CAVIAR [7]. These methods differ in the underlying modeling assumptions, the priors used, and in the approach taken to compute posterior quantities. For these simulations, SuSiE-RSS, FINEMAP and DAP-G were all very fast, usually taking no more than a few seconds per data set (Table 2); by contrast, CAVIAR was much slower because it exhaustively evaluated all causal SNP configurations. Other Bayesian fine-mapping methods for summary data include PAINTOR [8], JAM [15] and CAVIARBF [11]. FINEMAP has been shown [12] to be faster than, and at least as accurate as, PAINTOR and CAVIARBF. JAM is comparable in accuracy to FINEMAP [15] and is most beneficial when jointly fine-mapping multiple genomic regions, which we did not consider here.

Table 2. Runtimes on simulated data sets with in-sample LD matrix.

Average runtimes are taken over 600 simulations. All runtimes are in seconds. All runtimes include the time taken to read the data and write the results to files.

| method | min. | average | max. |
|---|---|---|---|
| SuSiE-RSS, estimated σ, no refinement | 0.65 | 1.33 | 18.89 |
| SuSiE-RSS, estimated σ, with refinement | 1.62 | 5.50 | 72.57 |
| SuSiE-RSS, fixed σ, no refinement | 0.40 | 1.40 | 18.61 |
| SuSiE-RSS, fixed σ, with refinement | 1.44 | 4.81 | 62.34 |
| SuSiE-RSS, fixed σ, with refinement, L = true | 0.37 | 1.52 | 4.95 |
| DAP-G | 0.66 | 5.70 | 371.76 |
| FINEMAP | 1.67 | 16.11 | 39.27 |
| FINEMAP, L = true | 1.00 | 12.92 | 42.93 |
| CAVIAR, L = true | 3.54 | 1,516.91 | 4,831.95 |

We compared methods based on both their posterior inclusion probabilities (PIPs) [44] and credible sets (CSs) [2, 17]. These quantities have different advantages. PIPs have the advantage that they are returned by most methods, and can be used to assess familiar quantities such as power and false discovery rates. CSs have the advantage that, when the data support multiple causal signals, this is explicitly reflected in the number of CSs reported. Uncertainty in which SNP is causal is reflected in the size of a CS.

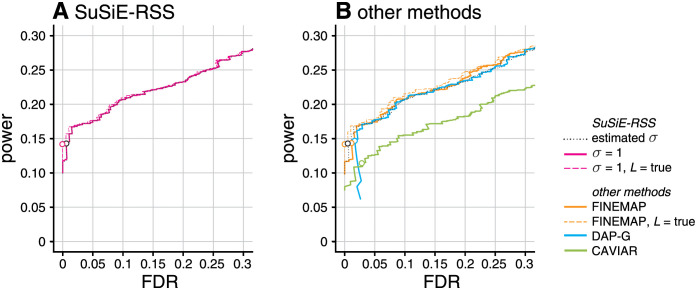

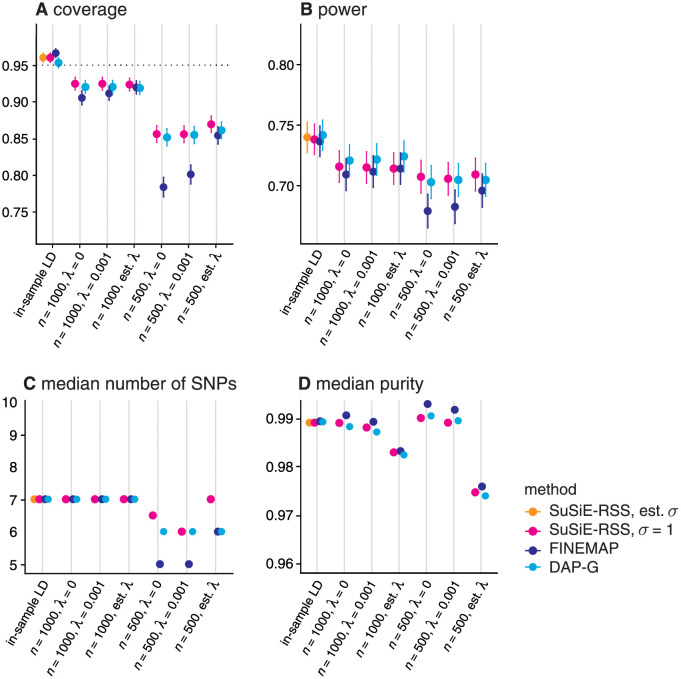

First, we assessed the performance of summary-data methods using the in-sample LD matrix. With an in-sample LD matrix, SuSiE-RSS applied to sufficient statistics (with estimated σ²) will produce the same results as SuSiE on the individual-level data, so we did not include SuSiE in this comparison. The results show that SuSiE-RSS, FINEMAP and DAP-G have very similar performance, as measured by both PIPs (Fig 3) and CSs (“in-sample LD” columns in Fig 4). Further, all four methods produced CSs whose coverage was close to the target level of 95% (Panel A in Fig 4). The main difference between the methods is that DAP-G produced some “high confidence” (high PIP) false positives, which hindered its ability to produce very low FDR values. Both SuSiE-RSS and FINEMAP require the user to specify an upper bound on the number of causal SNPs L. Setting this upper bound to the true value (“L = true” in the figures) only slightly improved their performance, demonstrating that, with an in-sample LD matrix, these methods are robust to overstating this bound. We also compared the sufficient-data (estimated σ²) and summary-data (fixed σ²) variants of SuSiE-RSS (see Table 1). The performance of the two variants was very similar, likely owing to the fact that the PVE was close to zero in all simulations, and so σ² = 1 was not far from the truth. CAVIAR performed notably less well than the other methods for the PIP computations. (Note the CSs computed by CAVIAR are defined differently from CSs computed by other methods, so we did not include CAVIAR in Fig 4.)

Fig 3. Discovery of causal SNPs using posterior inclusion probabilities—in-sample LD.

Each curve shows power vs. FDR in identifying causal SNPs when the method (SuSiE-RSS, FINEMAP, DAP-G or CAVIAR) was provided with the in-sample LD matrix. FDR and power are calculated from 600 simulations as the PIP threshold is varied from 0 to 1. Open circles are drawn at a PIP threshold of 0.95. Two variants of FINEMAP and three variants of SuSiE-RSS are also compared: when L, the maximum number of estimated causal SNPs, is the true number of causal SNPs, or larger than the true number; and, for SuSiE-RSS only, when the residual variance σ² is estimated (“sufficient data”) or fixed to 1 (“summary data”); see Table 1. The results for SuSiE-RSS with estimated σ² are shown in both Panels A and B to aid in comparing results. Note that power and FDR are virtually identical for all three variants of SuSiE-RSS, so the three curves almost completely overlap in Panel A.

Fig 4. Assessment of 95% credible sets from SuSiE-RSS, FINEMAP and DAP-G with different LD estimates, and different LD regularization methods.

For in-sample LD, two variants of SuSiE-RSS were also compared (see Table 1): when the residual variance σ² was estimated (“sufficient data”), or fixed to 1 (“summary data”). We evaluate the estimated CSs using the following metrics: (A) coverage, the proportion of CSs that contain a true causal SNP; (B) power, the proportion of true causal SNPs included in a CS; (C) median number of SNPs in each CS; and (D) median purity, where “purity” is defined as the smallest absolute correlation among all pairs of SNPs within a CS. These statistics are taken as the mean (A, B) or median (C, D) over all simulations; error bars in A and B show two times the standard error. The target coverage of 95% is shown as a dotted horizontal line in Panel A. Following [17], we discarded all CSs with purity less than 0.5.
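The CS metrics defined in this caption can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the evaluation code used for the figure: CSs are represented as lists of SNP indices, the correlation matrix is a nested list, a single-SNP CS is assigned purity 1 (an assumption, since it contains no pairs), and the example data are made up.

```python
from itertools import combinations

def purity(cs, corr):
    """Smallest absolute correlation among all pairs of SNPs within a CS."""
    if len(cs) < 2:
        return 1.0  # assumption: a single-SNP CS is treated as perfectly pure
    return min(abs(corr[i][j]) for i, j in combinations(cs, 2))

def filter_and_score(credible_sets, corr, causal, min_purity=0.5):
    """Discard low-purity CSs, then compute coverage and power.

    coverage: proportion of retained CSs that contain a true causal SNP
    power:    proportion of true causal SNPs included in a retained CS
    """
    kept = [cs for cs in credible_sets if purity(cs, corr) >= min_purity]
    covered = sum(1 for cs in kept if any(j in causal for j in cs))
    coverage = covered / len(kept) if kept else float("nan")
    found = {j for cs in kept for j in cs if j in causal}
    power = len(found) / len(causal) if causal else float("nan")
    return kept, coverage, power

# Made-up correlation matrix for four SNPs; SNPs 1 and 2 are causal.
corr = [
    [1.00, 0.95, 0.10, 0.20],
    [0.95, 1.00, 0.15, 0.30],
    [0.10, 0.15, 1.00, 0.40],
    [0.20, 0.30, 0.40, 1.00],
]
credible_sets = [[0, 1], [2, 3]]
kept, coverage, power = filter_and_score(credible_sets, corr, causal={1, 2})
print(kept, coverage, power)
```

In this made-up example the second CS has purity 0.4 and is discarded, so the causal SNP it contains counts against power even though the retained CS has full coverage.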

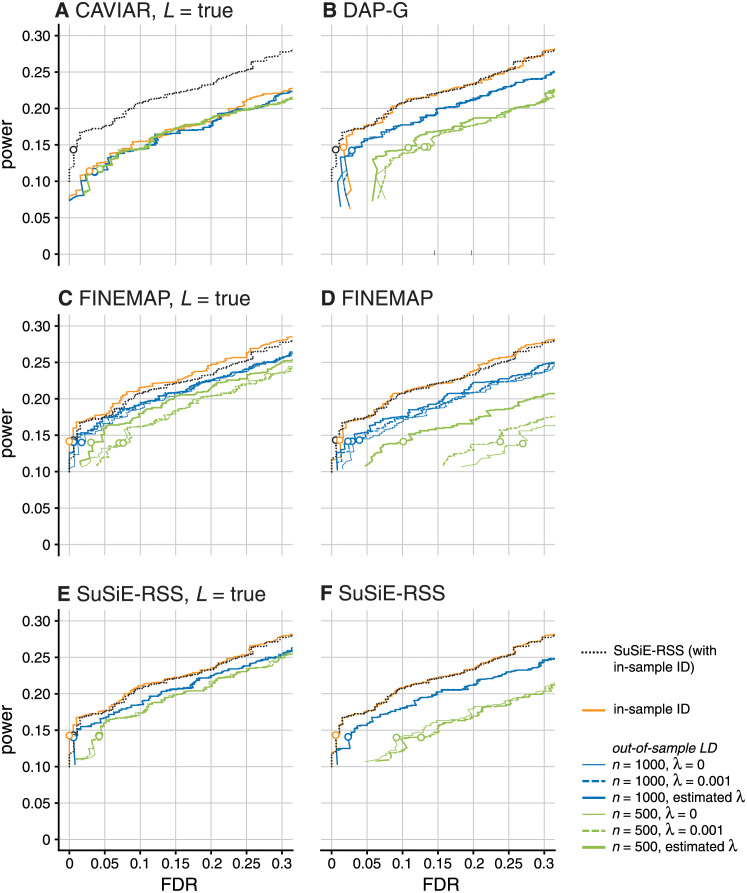

Next, we compared the summary data methods using different out-of-sample LD matrices, again using SuSiE-RSS with in-sample LD (and estimated σ²) as a benchmark. For each method, we computed out-of-sample LD matrices using two different panel sizes (n = 500, 1,000) and three different values for the regularization parameter, λ (no regularization, λ = 0; weak regularization, λ = 0.001; and λ estimated from the data). As might be expected, the performance of SuSiE-RSS, FINEMAP and DAP-G all degraded with out-of-sample LD compared with in-sample LD; see Figs 4 and 5. Notably, the CSs no longer met the 95% target coverage (Panel A in Fig 4). In all cases, performance was notably worse with the smaller reference panel, which highlights the importance of using a sufficiently large reference panel [31]. Regarding regularization, SuSiE-RSS and DAP-G performed similarly at all levels of regularization, and so do not appear to require regularization; in contrast, FINEMAP required regularization with an estimated λ to compete with SuSiE-RSS and DAP-G. Since estimating λ is somewhat computationally burdensome, SuSiE-RSS and DAP-G have an advantage in this situation. All three methods benefited more from increasing the size of the reference panel than from regularization, again emphasizing the importance of sufficiently large reference panels. Interestingly, CAVIAR’s performance was relatively insensitive to choice of LD matrix; however, the other methods clearly outperformed CAVIAR with the larger (n = 1,000) reference panel.
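As an illustration of what LD regularization does, the sketch below shrinks an LD matrix toward the identity. The specific form used, (1 − λ) × LD + λ × I, is an assumption made for this example (a common convex-combination choice that leaves the matrix unchanged at λ = 0); the regularized matrix actually used in these comparisons, and the procedure for estimating λ, are described in S1 Text. The function names are hypothetical.

```python
def regularize_ld(ld, lam):
    """Shrink an LD (correlation) matrix toward the identity:
    (1 - lam) * ld + lam * I. With lam = 0 the matrix is returned unchanged."""
    p = len(ld)
    return [
        [(1.0 - lam) * ld[i][j] + (lam if i == j else 0.0) for j in range(p)]
        for i in range(p)
    ]

def det2(m):
    """Determinant of a 2 x 2 matrix."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]

# Two SNPs in complete LD: the matrix is singular (determinant 0).
ld = [[1.0, 1.0], [1.0, 1.0]]
ld_weak = regularize_ld(ld, 0.001)   # weak regularization

print(det2(ld), det2(ld_weak))
```

Even weak regularization of this form makes a singular LD matrix invertible while keeping the diagonal at 1 and changing the off-diagonal entries only slightly.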

Fig 5. Discovery of causal SNPs using posterior inclusion probabilities—Out-of-sample LD.

Plots compare power vs. FDR for fine-mapping methods with different LD matrices, across all 600 simulations, as the PIP threshold is varied from 0 to 1. Open circles indicate results at PIP threshold of 0.95. Each plot compares performance of one method (CAVIAR, DAP-G, FINEMAP or SuSiE-RSS) when provided with different LD estimates: in-sample (R), or out-of-sample LD from a reference panel with either 1,000 samples or 500 samples. For out-of-sample LD, different levels of the regularization parameter λ are also compared: λ = 0; λ = 0.001; and estimated λ. Panels C–F show results for two variants of FINEMAP and SuSiE-RSS: in Panels C and E, the maximum number of causal SNPs, L, is set to the true value (“L = true”); in Panels D and F, L is set larger than the true value (L = 5 for FINEMAP; L = 10 for SuSiE-RSS). In each panel, the dotted black line shows the results from SuSiE-RSS with in-sample LD and estimated σ², which provides a baseline for comparison (note that all the other SuSiE-RSS results were generated by fixing σ² to 1, which is the recommended setting for out-of-sample LD; see Table 1). Some power vs. FDR curves may not be visible in the plots because they overlap almost completely with another curve, such as some of the SuSiE-RSS results at different LD regularization levels.

The fine-mapping results with out-of-sample LD matrix also expose another interesting result: if FINEMAP and SuSiE-RSS are provided with the true number of causal SNPs (L = true), their results improve (Fig 5, Panels C vs. D, Panels E vs. F). This improvement is particularly noticeable for the small reference panel. We interpret this result as indicating a tendency of these methods to react to misspecification of the LD matrix by sometimes including additional (false positive) signals. Specifying the true L reduces their tendency to do this because it limits the number of signals that can be included. This suggests that restricting the number of causal SNPs, L, may make fine-mapping results more robust to misspecification of the LD matrix, even for methods that are robust to overstating L when the LD matrix is accurate. Priors or penalties that favor smaller L may also help. Indeed, when none of the methods are provided with information about the true number of causal SNPs, DAP-G slightly outperforms FINEMAP and SuSiE-RSS, possibly reflecting a tendency for DAP-G to favour models with smaller numbers of causal SNPs (either due to the differences in prior or differences in approximate posterior inference). Further study of this issue may lead to methods that are more robust to misspecified LD.

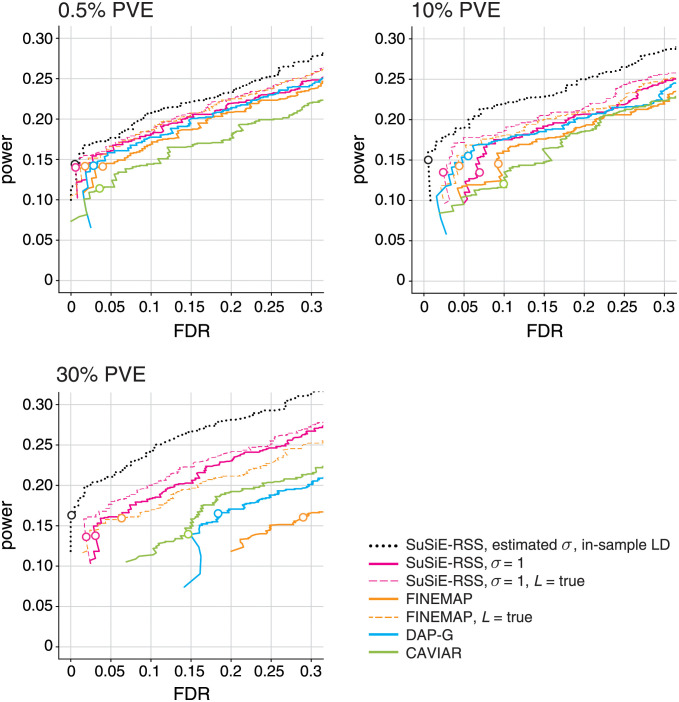

Fine-mapping causal SNPs with larger effects

Above, we evaluated the performance of fine-mapping methods in simulations when the simulated effects of the causal SNPs were small (total PVE of 0.5%). This was intended to mimic the typical situation encountered in genome-wide association studies [45, 46]. Here we scrutinize the performance of fine-mapping methods when the effects of the causal SNPs are much larger, which might be more representative of the situation in expression quantitative trait loci (eQTL) studies [47–49]. FINEMAP and SuSiE—and therefore SuSiE-RSS with sufficient statistics—are expected to perform well in this setting [17, 21], but, as mentioned above, some summary-data methods make the (implicit) assumption that the effects are small (see “Connections with previous work”), and this assumption may affect performance in settings where this assumption is violated.

To assess the ability of the fine-mapping methods to identify causal SNPs with larger effects, we performed an additional set of simulations, again using the UK Biobank genotypes, except that here we simulated the 1–3 causal variants so that they explained, in total, a much larger proportion of variance in the trait (PVE of 10% and 30%). To evaluate these methods at roughly the same level of difficulty (i.e., power), we simulated these fine-mapping data sets with much smaller sample sizes, N = 2,500 and N = 800, respectively (the out-of-sample LD matrix was calculated using 1,000 samples).

The results of these high-PVE simulations are summarized in Fig 6. As expected, SuSiE-RSS with in-sample LD matrix performed consistently better than the other methods, which use an out-of-sample LD matrix, and therefore provides a baseline against which other methods can be compared. Overall, increasing PVE tended to increase variation in performance among the methods. In all PVE settings, SuSiE-RSS with out-of-sample LD was among the top performers, and it most clearly outperformed other methods in the highest PVE setting (30% PVE), where all of FINEMAP, DAP-G, and CAVIAR showed a notable decrease in performance. For DAP-G and CAVIAR, this decrease in performance was expected due to their implicit modeling assumption that the effect sizes are small. For FINEMAP, this drop in performance was unexpected since FINEMAP also uses the PVE-adjusted z-scores to account for larger effects. Although this situation is unusual in fine-mapping studies—that is, it is unusual for a handful of SNPs to explain such a large proportion of variance in the trait—we examined these FINEMAP results more closely to understand why this was happening. (We also prepared a detailed working example illustrating this result; see https://stephenslab.github.io/finemap/large_effect.html.) We confirmed that this performance drop only occurred with an out-of-sample LD matrix; with an in-sample LD matrix, FINEMAP’s performance was very similar to SuSiE-RSS’s with an in-sample LD matrix (results not shown). A partial explanation for the much worse performance with out-of-sample LD was that FINEMAP often overestimated the number of causal SNPs; in 17% of the simulations, FINEMAP assigned highest probability to configurations with more causal SNPs than the true number. By contrast, SuSiE-RSS overestimated the number of causal SNPs (i.e., the number of CSs) in only 1% of the simulations. 
Fortunately, in settings where causal SNPs might have larger effects, FINEMAP’s performance can be greatly improved by telling it the true number of causal SNPs (“L = true”), which is consistent with our earlier finding that restricting L in SuSiE-RSS and FINEMAP can improve fine-mapping with an out-of-sample LD matrix.

Fig 6. Discovery of causal SNPs using posterior inclusion probabilities—Out-of-sample LD and larger effects.

Each curve shows power vs. FDR for identifying causal SNPs with different effect sizes (total PVE of 0.5%, 10% and 30%). Each panel summarizes results from 600 simulations; FDR and power are calculated from the 600 simulations as the PIP threshold is varied from 0 to 1. Open circles depict power and FDR at a PIP threshold of 0.95. In addition to comparing different methods (SuSiE-RSS, FINEMAP, DAP-G, CAVIAR), two variants of FINEMAP and SuSiE-RSS are also compared: when L, the maximum number of estimated causal SNPs, is set to the true number of causal SNPs; and when L is larger than the true number. SuSiE-RSS with estimated residual variance σ² and in-sample LD (dotted black line) is shown as a “best case” method against which other methods can be compared. All other methods are given an out-of-sample LD matrix computed from a reference panel with 1,000 samples, and with no regularization (λ = 0). The simulation results for 0.5% PVE (top-left panel) are the same as the results shown in previous plots (Figs 3 and 5), but presented differently here to facilitate comparison with the results of the higher-PVE simulations.

Discussion

We have presented extensions of the SuSiE fine-mapping method to accommodate summary data, with a focus on marginal z-scores and an out-of-sample LD matrix computed from a reference panel. Our approach provides a generic template for how to extend any full-data regression method to analyze summary data: develop a full-data algorithm that works with sufficient statistics, then apply this algorithm directly to summary data. Although it is simple, as far as we are aware this generic template is novel, and it avoids the need for any special treatment of non-invertible LD matrices.
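To illustrate the link between individual-level data, sufficient statistics and summary data, the following Python sketch computes sufficient statistics from a small made-up data set and then derives single-SNP z-scores and an LD matrix from those statistics alone. It is a sketch under stated assumptions, not the susieR implementation: the columns of X and the vector y are centered, each z-score comes from a simple linear regression with n − 2 residual degrees of freedom, and all function names are hypothetical.

```python
import math

def sufficient_statistics(X, y):
    """Return (XtX, Xty, yty, n) after centering each column of X and y."""
    n, p = len(X), len(X[0])
    col_means = [sum(row[j] for row in X) / n for j in range(p)]
    y_mean = sum(y) / n
    Xc = [[row[j] - col_means[j] for j in range(p)] for row in X]
    yc = [yi - y_mean for yi in y]
    XtX = [[sum(r[j] * r[k] for r in Xc) for k in range(p)] for j in range(p)]
    Xty = [sum(r[j] * yi for r, yi in zip(Xc, yc)) for j in range(p)]
    yty = sum(yi * yi for yi in yc)
    return XtX, Xty, yty, n

def marginal_z_scores(XtX, Xty, yty, n):
    """Single-SNP z-scores computed from the sufficient statistics only."""
    z = []
    for j in range(len(Xty)):
        xtx = XtX[j][j]
        bhat = Xty[j] / xtx                      # least-squares slope
        rss = yty - bhat * Xty[j]                # residual sum of squares
        se = math.sqrt(rss / ((n - 2) * xtx))    # standard error of the slope
        z.append(bhat / se)
    return z

def ld_matrix(XtX):
    """Sample correlation (LD) matrix obtained by rescaling XtX."""
    d = [math.sqrt(XtX[j][j]) for j in range(len(XtX))]
    return [[XtX[j][k] / (d[j] * d[k]) for k in range(len(XtX))]
            for j in range(len(XtX))]

# Made-up data: 6 individuals, 2 SNPs; y tracks the first SNP closely.
X = [[0, 1], [1, 0], [2, 1], [0, 2], [1, 1], [2, 0]]
y = [0.1, 1.0, 2.1, -0.1, 1.1, 1.9]
XtX, Xty, yty, n = sufficient_statistics(X, y)
z = marginal_z_scores(XtX, Xty, yty, n)
R = ld_matrix(XtX)
print(z, R)
```

An algorithm written in terms of these quantities never needs the individual-level X and y, which is what makes it possible to substitute z-scores and a reference-panel LD matrix for the in-sample statistics.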

In simulations, we found that our new method, SuSiE-RSS, is competitive in both accuracy and computational cost with the best available methods for fine-mapping from summary data, DAP-G and FINEMAP. Whatever method is used, our results underscore the importance of accurately computing out-of-sample LD from an appropriate and large reference panel (see also [31]). Indeed, for the best performing methods, performance depended more on choice of LD matrix than on choice of method. We also emphasize the importance of computing z-scores at different SNPs from the exact same samples, using genotype imputation if necessary [50]. It is also important to ensure that alleles are consistently encoded in study and reference samples.

Although our derivations and simulations focused on z-scores computed from quantitative traits with a simple linear regression, in practice it is common to apply summary-data fine-mapping methods to z-scores computed in other ways, e.g., using logistic regression on a binary or case-control trait, or using linear mixed models to deal with population stratification and relatedness. The multivariate normal assumption on z-scores, which underlies all the methods considered here, should also apply to these settings, although as far as we are aware a theoretical derivation of the precise form (20) is lacking in these settings (but see [12, 51, 52]). Since the model (20) is already only an approximation, one might expect that the additional effect of such issues might be small, particularly compared with the effect of allele flips or small reference panels. Nonetheless, since our simulations show that model misspecification can hurt performance of existing methods, further research to improve robustness of fine-mapping methods to model misspecification would be welcome.

Supporting information

These plots summarize the likelihood ratios LRj for SNPs j in simulated fine-mapping data sets, separately for allele-flip SNPs with an effect (top row, right-hand side), without an effect on the trait (top row, left-hand side), and for SNPs without a flipped allele that affect the trait (middle row, right-hand side) and do not affect the trait (middle row, left-hand side). The two histograms in the bottom row show likelihood ratios after restricting to SNPs with z-scores greater than 2 in magnitude. The bar heights in the histograms in the middle and bottom rows are drawn on the logarithmic scale to better visualize the smaller numbers of SNPs with likelihood ratios greater than 1 (i.e., log LRj > 0).

(PDF)

More description of the methods, including: the single effect regression (SER) model with summary statistics; the IBSS-ss algorithm; computing the sufficient statistics; approaches to dealing with a non-invertible LD matrix; estimation of λ in the regularized LD matrix; likelihood ratio for detecting allele flips; SuSiE refinement procedure; detailed calculations for toy example; and more details on the UK Biobank simulations.

(PDF)

Acknowledgments

We thank Kaiqian Zhang for her contributions to the development and testing of the susieR package. We thank the University of Chicago Research Computing Center and the Center for Research Informatics for providing high-performance computing resources used to run the numerical experiments. This research has been conducted using data from UK Biobank, a major biomedical database (UK Biobank Application Number 27386).

Data Availability

The genotype data used in our analyses are available from UK Biobank (https://www.ukbiobank.ac.uk). All code implementing the simulations, and the raw and compiled results generated from our simulations, are available at https://github.com/stephenslab/dsc_susierss, and were deposited on Zenodo (https://doi.org/10.5281/zenodo.5611713). The methods are implemented in the R package susieR, available for download at https://github.com/stephenslab/susieR, and on CRAN at https://cran.r-project.org/package=susieR.

Funding Statement

MS acknowledges support from NIH NHGRI grant R01HG002585 (https://www.genome.gov) and a grant from the Gordon and Betty Moore Foundation (https://www.moore.org). GW acknowledges support from NIH NIA grant U01AG072572 (https://www.nia.nih.gov) and funding from the Thompson Family Foundation (TAME-AD; https://www.neurology.columbia.edu/research/research-programs-and-partners/thompson-family-foundation-initiative-columbia-university-tffi). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Hutchinson A, Asimit J, Wallace C. Fine-mapping genetic associations. Human Molecular Genetics. 2020;29(R1):R81–R88. doi: 10.1093/hmg/ddaa148 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2. Maller JB, McVean G, Byrnes J, Vukcevic D, Palin K, Su Z, et al. Bayesian refinement of association signals for 14 loci in 3 common diseases. Nature Genetics. 2012;44(12):1294–1301. doi: 10.1038/ng.2435 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3. Kote-Jarai Z, Saunders EJ, Leongamornlert DA, Tymrakiewicz M, Dadaev T, Jugurnauth-Little S, et al. Fine-mapping identifies multiple prostate cancer risk loci at 5p15, one of which associates with TERT expression. Human Molecular Genetics. 2013;22(12):2520–2528. doi: 10.1093/hmg/ddt086 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4. Schaid DJ, Chen W, Larson NB. From genome-wide associations to candidate causal variants by statistical fine-mapping. Nature Reviews Genetics. 2018;19(8):491–504. doi: 10.1038/s41576-018-0016-z [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5. Spain SL, Barrett JC. Strategies for fine-mapping complex traits. Human Molecular Genetics. 2015;24(R1):R111–R119. doi: 10.1093/hmg/ddv260 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6. Visscher PM, Wray NR, Zhang Q, Sklar P, McCarthy MI, Brown MA, et al. 10 years of GWAS discovery: biology, function, and translation. American Journal of Human Genetics. 2017;101(1):5–22. doi: 10.1016/j.ajhg.2017.06.005 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7. Hormozdiari F, Kostem E, Kang EY, Pasaniuc B, Eskin E. Identifying causal variants at loci with multiple signals of association. Genetics. 2014;198(2):497–508. doi: 10.1534/genetics.114.167908 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8. Kichaev G, Yang WY, Lindstrom S, Hormozdiari F, Eskin E, Price AL, et al. Integrating functional data to prioritize causal variants in statistical fine-mapping studies. PLoS Genetics. 2014;10(10):e1004722. doi: 10.1371/journal.pgen.1004722 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9. Servin B, Stephens M. Imputation-based analysis of association studies: candidate regions and quantitative traits. PLoS Genetics. 2007;3(7):e114. doi: 10.1371/journal.pgen.0030114 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10. Yang J, Ferreira T, Morris AP, Medland SE, Madden PAF, Heath AC, et al. Conditional and joint multiple-SNP analysis of GWAS summary statistics identifies additional variants influencing complex traits. Nature Genetics. 2012;44(4):369–375. doi: 10.1038/ng.2213 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11. Chen W, Larrabee BR, Ovsyannikova IG, Kennedy RB, Haralambieva IH, Poland GA, et al. Fine mapping causal variants with an approximate Bayesian method using marginal test statistics. Genetics. 2015;200(3):719–736. doi: 10.1534/genetics.115.176107 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12. Benner C, Spencer CCA, Havulinna AS, Salomaa V, Ripatti S, Pirinen M. FINEMAP: efficient variable selection using summary data from genome-wide association studies. Bioinformatics. 2016;32(10):1493–1501. doi: 10.1093/bioinformatics/btw018 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13. Li Y, Kellis M. Joint Bayesian inference of risk variants and tissue-specific epigenomic enrichments across multiple complex human diseases. Nucleic Acids Research. 2016;44(18):e144. doi: 10.1093/nar/gkw627 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14. Wen X, Lee Y, Luca F, Pique-Regi R. Efficient integrative multi-SNP association analysis via deterministic approximation of posteriors. American Journal of Human Genetics. 2016;98(6):1114–1129. doi: 10.1016/j.ajhg.2016.03.029 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15. Newcombe PJ, Conti DV, Richardson S. JAM: a scalable Bayesian framework for joint analysis of marginal SNP effects. Genetic Epidemiology. 2016;40(3):188–201. doi: 10.1002/gepi.21953

- 16. Lee Y, Luca F, Pique-Regi R, Wen X. Bayesian multi-SNP genetic association analysis: control of FDR and use of summary statistics. bioRxiv. 2018. doi: 10.1101/316471

- 17. Wang G, Sarkar A, Carbonetto P, Stephens M. A simple new approach to variable selection in regression, with application to genetic fine mapping. Journal of the Royal Statistical Society, Series B. 2020;82(5):1273–1300. doi: 10.1111/rssb.12388

- 18. Sesia M, Katsevich E, Bates S, Candès E, Sabatti C. Multi-resolution localization of causal variants across the genome. Nature Communications. 2020;11:1093. doi: 10.1038/s41467-020-14791-2

- 19. Wallace C, Cutler AJ, Pontikos N, Pekalski ML, Burren OS, Cooper JD, et al. Dissection of a complex disease susceptibility region using a Bayesian stochastic search approach to fine mapping. PLoS Genetics. 2015;11(6):e1005272. doi: 10.1371/journal.pgen.1005272

- 20. Hutchinson A, Watson H, Wallace C. Improving the coverage of credible sets in Bayesian genetic fine-mapping. PLoS Computational Biology. 2020;16(4):e1007829. doi: 10.1371/journal.pcbi.1007829

- 21. Benner C, Havulinna AS, Salomaa V, Ripatti S, Pirinen M. Refining fine-mapping: effect sizes and regional heritability. bioRxiv. 2018. doi: 10.1101/318618

- 22. Pasaniuc B, Price AL. Dissecting the genetics of complex traits using summary association statistics. Nature Reviews Genetics. 2017;18(2):117–127. doi: 10.1038/nrg.2016.142

- 23. Zhu X, Stephens M. Bayesian large-scale multiple regression with summary statistics from genome-wide association studies. Annals of Applied Statistics. 2017;11(3):1561–1592. doi: 10.1214/17-AOAS1046

- 24. Kanai M, Elzur R, Zhou W, Global Biobank Meta-analysis Initiative, Daly MJ, Finucane HK. Meta-analysis fine-mapping is often miscalibrated at single-variant resolution. medRxiv. 2022. doi: 10.1101/2022.03.16.22272457

- 25. Chipman H, George EI, McCulloch RE. The practical implementation of Bayesian model selection. In: Model Selection. vol. 38 of IMS Lecture Notes. Institute of Mathematical Statistics; 2001. p. 65–116.

- 26. Veyrieras JB, Kudaravalli S, Kim SY, Dermitzakis ET, Gilad Y, Stephens M, et al. High-resolution mapping of expression-QTLs yields insight into human gene regulation. PLoS Genetics. 2008;4(10):e1000214. doi: 10.1371/journal.pgen.1000214

- 27. Pickrell JK. Joint analysis of functional genomic data and genome-wide association studies of 18 human traits. American Journal of Human Genetics. 2014;94(4):559–573. doi: 10.1016/j.ajhg.2014.03.004

- 28. Giambartolomei C, Vukcevic D, Schadt EE, Franke L, Hingorani AD, Wallace C, et al. Bayesian test for colocalisation between pairs of genetic association studies using summary statistics. PLoS Genetics. 2014;10(5):e1004383. doi: 10.1371/journal.pgen.1004383

- 29. Willer CJ, Li Y, Abecasis GR. METAL: fast and efficient meta-analysis of genomewide association scans. Bioinformatics. 2010;26(17):2190–2191. doi: 10.1093/bioinformatics/btq340

- 30. Chang CC, Chow CC, Tellier LC, Vattikuti S, Purcell SM, Lee JJ. Second-generation PLINK: rising to the challenge of larger and richer datasets. GigaScience. 2015;4(1):s13742-015-0047-8. doi: 10.1186/s13742-015-0047-8

- 31. Benner C, Havulinna AS, Järvelin MR, Salomaa V, Ripatti S, Pirinen M. Prospects of fine-mapping trait-associated genomic regions by using summary statistics from genome-wide association studies. American Journal of Human Genetics. 2017;101(4):539–551. doi: 10.1016/j.ajhg.2017.08.012

- 32. Stephens M, Balding DJ. Bayesian statistical methods for genetic association studies. Nature Reviews Genetics. 2009;10(10):681–690. doi: 10.1038/nrg2615

- 33. Wakefield J. Bayes factors for genome-wide association studies: comparison with P-values. Genetic Epidemiology. 2009;33(1):79–86. doi: 10.1002/gepi.20359

- 34. Zou Y, Carbonetto P, Wang G, Stephens M. Fine-mapping from summary data with the “Sum of Single Effects” model. bioRxiv. 2021.

- 35. Lozano JA, Hormozdiari F, Joo JWJ, Han B, Eskin E. The multivariate normal distribution framework for analyzing association studies. bioRxiv. 2017. doi: 10.1101/208199

- 36. Park Y, Sarkar AK, He L, Davila-Velderrain J, De Jager PL, Kellis M. A Bayesian approach to mediation analysis predicts 206 causal target genes in Alzheimer’s disease. bioRxiv. 2017. doi: 10.1101/219428

- 37. Mak TSH, Porsch RM, Choi SW, Zhou X, Sham PC. Polygenic scores via penalized regression on summary statistics. Genetic Epidemiology. 2017;41(6):469–480. doi: 10.1002/gepi.22050

- 38. Ledoit O, Wolf M. A well-conditioned estimator for large-dimensional covariance matrices. Journal of Multivariate Analysis. 2004;88(2):365–411. doi: 10.1016/S0047-259X(03)00096-4

- 39. Pasaniuc B, Zaitlen N, Shi H, Bhatia G, Gusev A, Pickrell J, et al. Fast and accurate imputation of summary statistics enhances evidence of functional enrichment. Bioinformatics. 2014;30(20):2906–2914. doi: 10.1093/bioinformatics/btu416

- 40. Chen W, Wu Y, Zheng Z, Qi T, Visscher PM, Zhu Z, et al. Improved analyses of GWAS summary statistics by reducing data heterogeneity and errors. Nature Communications. 2021;12:7117. doi: 10.1038/s41467-021-27438-7

- 41. Lee D, Bigdeli TB, Riley BP, Fanous AH, Bacanu SA. DIST: direct imputation of summary statistics for unmeasured SNPs. Bioinformatics. 2013;29(22):2925–2927. doi: 10.1093/bioinformatics/btt500

- 42. LaPierre N, Taraszka K, Huang H, He R, Hormozdiari F, Eskin E. Identifying causal variants by fine mapping across multiple studies. bioRxiv. 2020. doi: 10.1101/2020.01.15.908517

- 43. Bycroft C, Freeman C, Petkova D, Band G, Elliott LT, Sharp K, et al. The UK Biobank resource with deep phenotyping and genomic data. Nature. 2018;562(7726):203–209. doi: 10.1038/s41586-018-0579-z

- 44. Guan Y, Stephens M. Bayesian variable selection regression for genome-wide association studies and other large-scale problems. Annals of Applied Statistics. 2011;5(3):1780–1815. doi: 10.1214/11-AOAS455

- 45. Bodmer W, Bonilla C. Common and rare variants in multifactorial susceptibility to common diseases. Nature Genetics. 2008;40(6):695–701. doi: 10.1038/ng.f.136

- 46. Ioannidis JPA, Trikalinos TA, Khoury MJ. Implications of small effect sizes of individual genetic variants on the design and interpretation of genetic association studies of complex diseases. American Journal of Epidemiology. 2006;164(7):609–614. doi: 10.1093/aje/kwj259

- 47. Barbeira AN, Bonazzola R, Gamazon ER, Liang Y, Park Y, Kim-Hellmuth S, et al. Exploiting the GTEx resources to decipher the mechanisms at GWAS loci. Genome Biology. 2021;22:49. doi: 10.1186/s13059-020-02252-4

- 48. The GTEx Consortium. The Genotype-Tissue Expression (GTEx) pilot analysis: multitissue gene regulation in humans. Science. 2015;348(6235):648–660. doi: 10.1126/science.1262110

- 49. Wen X, Luca F, Pique-Regi R. Cross-population joint analysis of eQTLs: fine mapping and functional annotation. PLoS Genetics. 2015;11(4):e1005176. doi: 10.1371/journal.pgen.1005176

- 50. Zhu Z, Zhang F, Hu H, Bakshi A, Robinson MR, Powell JE, et al. Integration of summary data from GWAS and eQTL studies predicts complex trait gene targets. Nature Genetics. 2016;48(5):481–487. doi: 10.1038/ng.3538

- 51. Han B, Kang HM, Eskin E. Rapid and accurate multiple testing correction and power estimation for millions of correlated markers. PLoS Genetics. 2009;5(4):e1000456. doi: 10.1371/journal.pgen.1000456

- 52. Pirinen M, Donnelly P, Spencer CCA. Efficient computation with a linear mixed model on large-scale data sets with applications to genetic studies. Annals of Applied Statistics. 2013;7(1):369–390. doi: 10.1214/12-AOAS586