Abstract

A key aspect of neuroscience research is the development of powerful, general-purpose data analyses that process large datasets. Unfortunately, modern data analyses have a hidden dependence upon complex computing infrastructure (e.g. software and hardware), which acts as an unaddressed deterrent to analysis users. While existing analyses are increasingly shared as open source software, the infrastructure and knowledge needed to deploy these analyses efficiently still pose significant barriers to use. In this work we develop Neuroscience Cloud Analysis As a Service (NeuroCAAS ): a fully automated open-source analysis platform offering automatic infrastructure reproducibility for any data analysis. We show how NeuroCAAS supports the design of simpler, more powerful data analyses, and that many popular data analysis tools offered through NeuroCAAS outperform counterparts on typical infrastructure. Pairing rigorous infrastructure management with cloud resources, NeuroCAAS dramatically accelerates the dissemination and use of new data analyses for neuroscientific discovery.

eTOC Blurb:

Computing infrastructure is a fundamental part of neural data analysis. Abe et al. present an open source, cloud based platform called NeuroCAAS to automatically build reproducible computing infrastructure for neural data analysis. They show that NeuroCAAS supports novel analysis design and can improve the efficiency of popular existing methods.

1. Introduction

Driven by the constant evolution of new recording technologies and the vast quantities of data that they generate, neural data analysis — which aims to build the path from these datasets to scientific understanding — has grown into a centrally important part of modern neuroscience, enabling significant new insights into the relationships between neural activity, behavior, and the external environment (Paninski and Cunningham, 2018). Accompanying this growth however, neural data analyses have become much more complex. Historically, the software implementation of a data analysis (what we call the core analysis- Figure 1A) was typically a small, isolated code script with few dependencies. In stark contrast, modern core analyses routinely incorporate video processing algorithms (Pnevmatikakis et al., 2016, Pachitariu et al., 2017, Mathis et al., 2018, Zhou et al., 2018, Giovannucci et al., 2019), deep neural networks (Batty et al., 2016, Gao et al., 2016, Lee et al., 2017, Parthasarathy et al., 2017, Mathis et al., 2018, Pandarinath et al., 2018, Giovannucci et al., 2019), sophisticated graphical models (Yu et al., 2009, Wiltschko et al., 2015, Gao et al., 2016; Wu et al., 2020), and other cutting-edge machine learning methods (Pachitariu et al., 2016, Lee et al., 2017) to create general purpose tools applicable to many datasets.

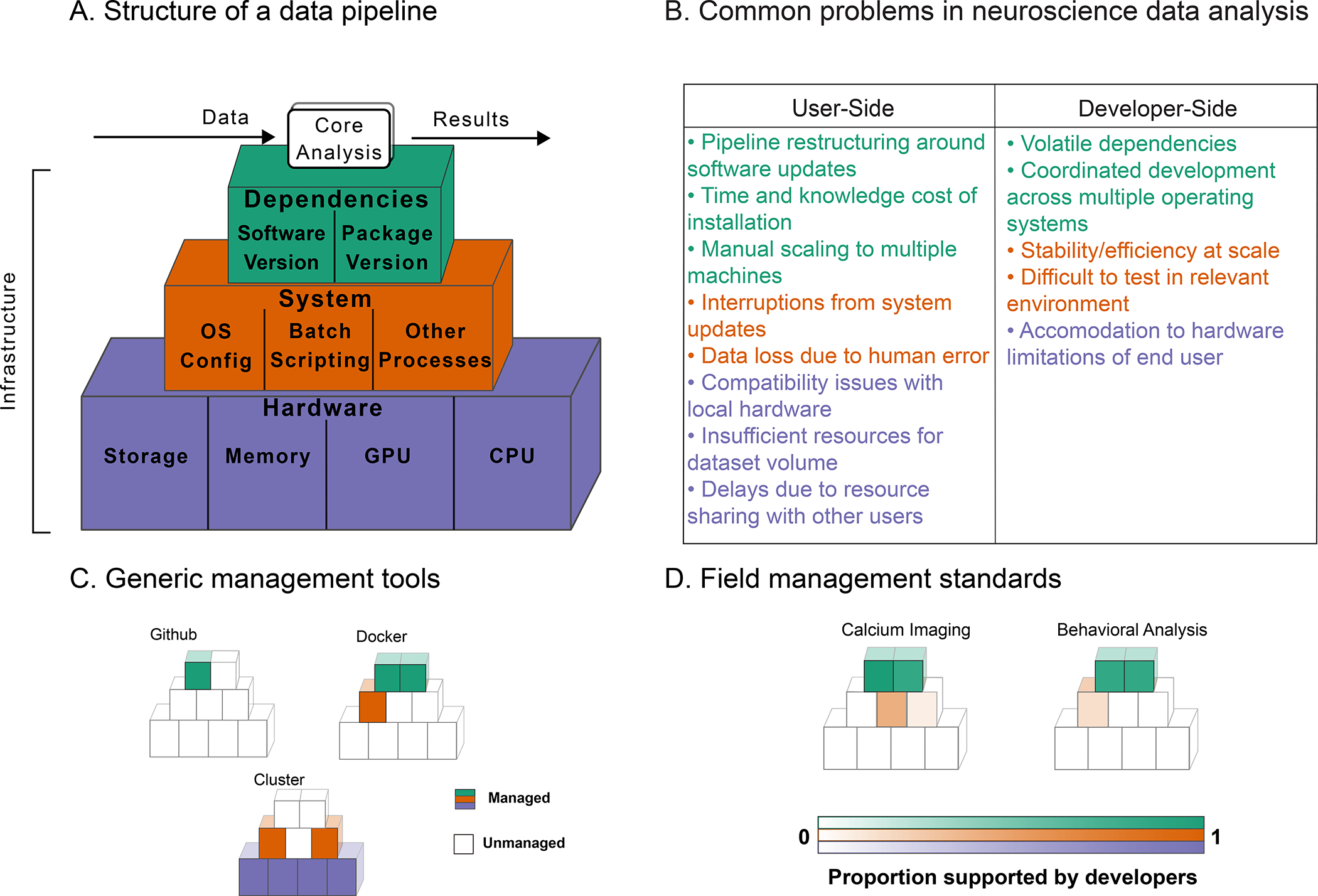

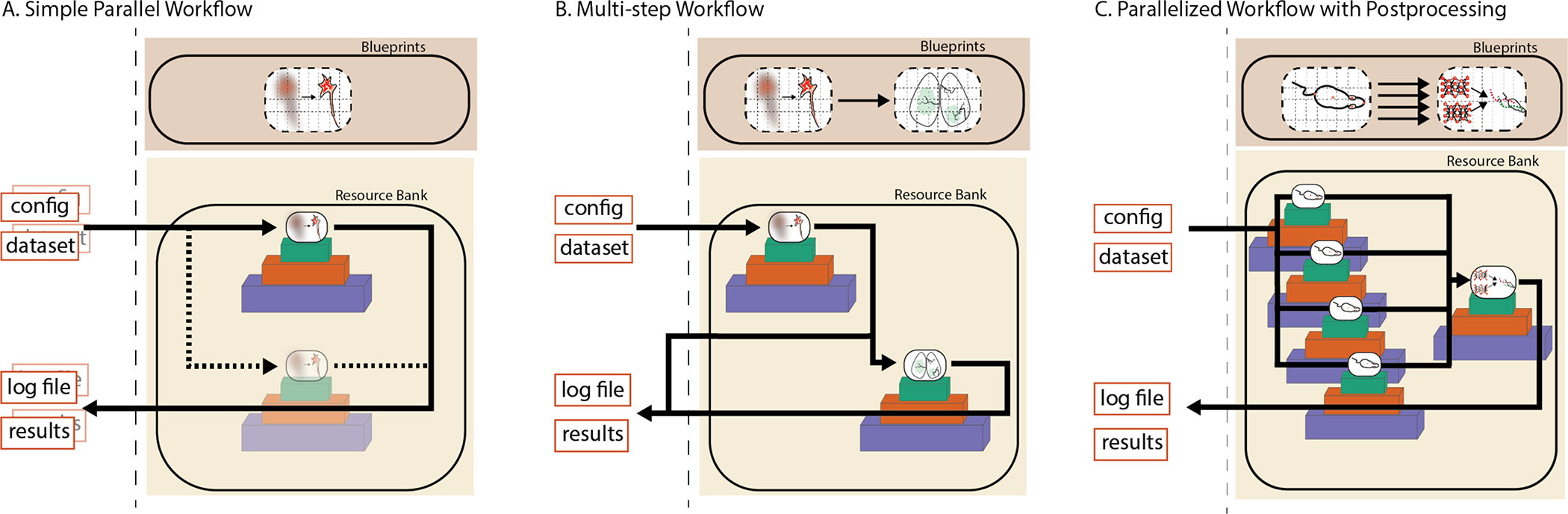

Figure 1: Data Analysis Infrastucture.

A. Core analysis code depends upon an infrastructure stack. B. Common problems arise at each layer of this infrastructure stack for analysis users and developers. C. Many common management tools deal only with one or two layers in the infrastructure stack, leaving gaps that users and developers must fill manually. D. In common neural data analysis tools for calcium imaging and behavioral analysis many infrastructure components are not managed by analysis developers and implicitly delegated to the user (see §9 for full details and supporting data in Tables S2,S1).

To support this increasing complexity, core analysis software is increasingly coupled to underlying analysis infrastructure (Figure 1A): software dependencies like the deep learning libraries PyTorch and TensorFlow (Abadi et al., 2016, Paszke et al., 2019), system level dependencies to manage jobs and computing resources (Merkel, 2014), and hardware dependencies such as a precisely configured CPU (central processing unit), access to a GPU (graphics processing unit), or a required amount of device memory. Figure 1A shows how these individual components form a full infrastructure stack: the necessary, but largely ignored foundation of resources enabling all data analyses (Demchenko et al., 2013, Jararweh et al., 2016, Zhou et al., 2016).

Neglected infrastructure has immediate implications already familiar to the neuroscience community: for every novel analysis, analysis users must spend labor and financial resources on hardware setup, software troubleshooting, unexpected interruptions during long analysis runs, processing constraints due to limited “on-premises” computational resources, and more (Figure 1B). However, far from simply being a nuisance, neglected infrastructure has wide reaching and urgent scientific consequences. Most prominently, infrastructure impacts analysis reproducibility. As data analyses become more dependent on complex infrastructure stacks, it becomes extremely difficult for analysis developers to work reproducibly (Monajemi et al., 2019, Nowogrodzki, 2019). The current treatment of analysis infrastructure is a major contributor to the endemic lack of reproducibility suffered by modern data analysis (Crook et al., 2013, Hinsen, 2015, Stodden et al., 2018, Krafczyk et al., 2019, Raff, 2019), and infrastructure-based barriers have been noted to impede the proliferation of new neuroscientific tools (Magland et al., 2020). Specific cases where seemingly small infrastructure issues directly affect the representation of data-derived quantities have been documented across the biological sciences (Ghosh et al., 2017, Miller, 2006, Glatard et al., 2015). Analogously, in machine learning, infrastructure components can dictate model performance (Sculley et al., 2015, Radiuk, 2017) and a recent survey of this literature observed that although local compute clusters claim to address the issue of hardware availability, none of the studies that required use of a compute cluster were reproducible (Raff, 2019).

Major efforts have been made by journals (Donoho, 2010, Hanson et al., 2011, ) and funding agencies (Carver et al., 2018) to encourage the sharing of core analysis software. Additionally, new tools have been developed to address related neuroscientific challenges like the formatting (Teeters et al., 2015, Rübel et al., 2019, Rübel et al., 2021) and storage of data (Dandi Team, 2019), or workflow management on existing infrastructure (Yatsenko et al., 2015, Gorgolewski et al., 2011) (see §3 for a detailed overview). However, these important efforts still neglect key issues in the configuration of infrastructure stacks. Despite calls to improve standards of practice in the field (Vogelstein et al., 2016), and work in fields such as astronomy, genomics, and high energy physics (Hoffa et al., 2008, Riley, 2010, Goecks et al., 2010, Zhou et al., 2016, Chen et al., 2019, Monajemi et al., 2019), there has been little concrete progress in our field towards a scientifically acceptable infrastructure solution for many popular core analyses. Some tools – compute clusters, versioning tools like Github (https://github.com), and containerization services like Docker (Merkel, 2014) – provide various infrastructure components (Figure 1C), but it is nontrivial to combine these components into a complete infrastructure stack. The ultimate effect of these partially used toolsets is a hodgepodge of often slipshod infrastructure practices (Figure 1D; supporting data in Tables S1, S2).

Critically, management of these issues most often falls upon trainees who are neither scientifically rewarded (Landhuis, 2017, Chan Zuckerberg Initiative, 2014), nor specifically instructed (Merali, 2010) on how to set up increasingly complex core analyses with infrastructure stacks, reliant on whatever resources are available on hand. We term this conventional model Infrastructure-as-Graduate-Student, or IaGS – infrastructure stacks treated as a scientific afterthought, delegated entirely to underresourced trainees and operating as a silent source of errors and inefficiency. The IaGS status quo fails any reasonable standard of scientific rigor, reduces the accessibility of valuable analytical tools, and impedes scientific training and progress.

Of course, infrastructure challenges are not specific to neuroscience, or even science generally. Entities that deploy software at industrial scale have recently adopted the Infrastructure-as-Code (IaC) paradigm, automating the creation and management of infrastructure stacks (Morris, 2016, Aguiar et al., 2018). In contrast to IaGS approaches, the IaC paradigm begins with a code document that completely specifies the infrastructure stack supporting any given core software. From this code document, the corresponding infrastructure stack can be assembled automatically (most often on a cloud platform), in a process called deployment. After deployment, anyone with access to the platform can use the core software in question without knowledge of its underlying infrastructure stack, while still having the assurance that core software is functioning exactly as indicated in the corresponding code document. Altogether, IaC enables reproducible usage at scale, skirting all of the issues shown in Figure 1B. Despite these benefits, there has been no previous effort to extend IaC to general-purpose neuroscience data analyses and associated infrastructure stacks.

In response, we developed Neuroscience Cloud Analysis as a Service (NeuroCAAS ), an IaC platform that pairs core analyses for neuroscience data with bespoke infrastructure stacks through deployable code documents. NeuroCAAS assigns each core analysis a corresponding infrastructure stack, using a set of modular components concisely specified in code (see §2.1 for details). NeuroCAAS stores the specification of this core analysis and infrastructure stack in a code document called a blueprint, which any analysis user can then deploy to analyze their data. To maximize the scale and accessibility benefits of our platform, we provide an open source web interface to NeuroCAAS (§2.2), available to the neuroscience community at large. The result is scalable, reproducible, drag-and-drop usage of neural data analysis: neuroscientists can log on to the NeuroCAAS website, set some parameters for an analysis, and simply submit their data. A new infrastructure stack is then deployed on the cloud according to a specified blueprint and autonomously produces analysis results, which are returned to the user. This aspect of NeuroCAAS warrants emphasis, as it diverges starkly from traditional scientific practice: NeuroCAAS is not only a platform design, or suggestion that the reader can attempt to recreate on their own; instead, NeuroCAAS is offered as an open source infrastructure platform available for immediate use, via a website (www.neurocaas.org).

We first describe IaC analysis infrastructure on NeuroCAAS (§2.1), and how it addresses common engineering challenges related to analysis reproducibility, accessibility and scale (§2.2). In (§2.3) we compare NeuroCAAS ‘s solution to these engineering challenges with features of existing data analysis platforms. Next, in §2.4,2.5, we show how NeuroCAAS can enable novel analyses designed to take advantage of the platform’s infrastructure benefits. Finally, in §2.6, we quantify the performance of popular data analyses on NeuroCAAS, and find that analyses encoded in blueprints are cheaper and faster than analogues run on local infrastructure (e.g. a compute cluster).

2. Results

NeuroCAAS ‘s primary technical contribution is a method to precisely specify the entire infrastructure stack underlying any core analysis, and reproduce it on demand. Treating core analysis and infrastructure as a unified whole within NeuroCAAS makes analyses more reproducible and accessible at scale than existing alternatives.

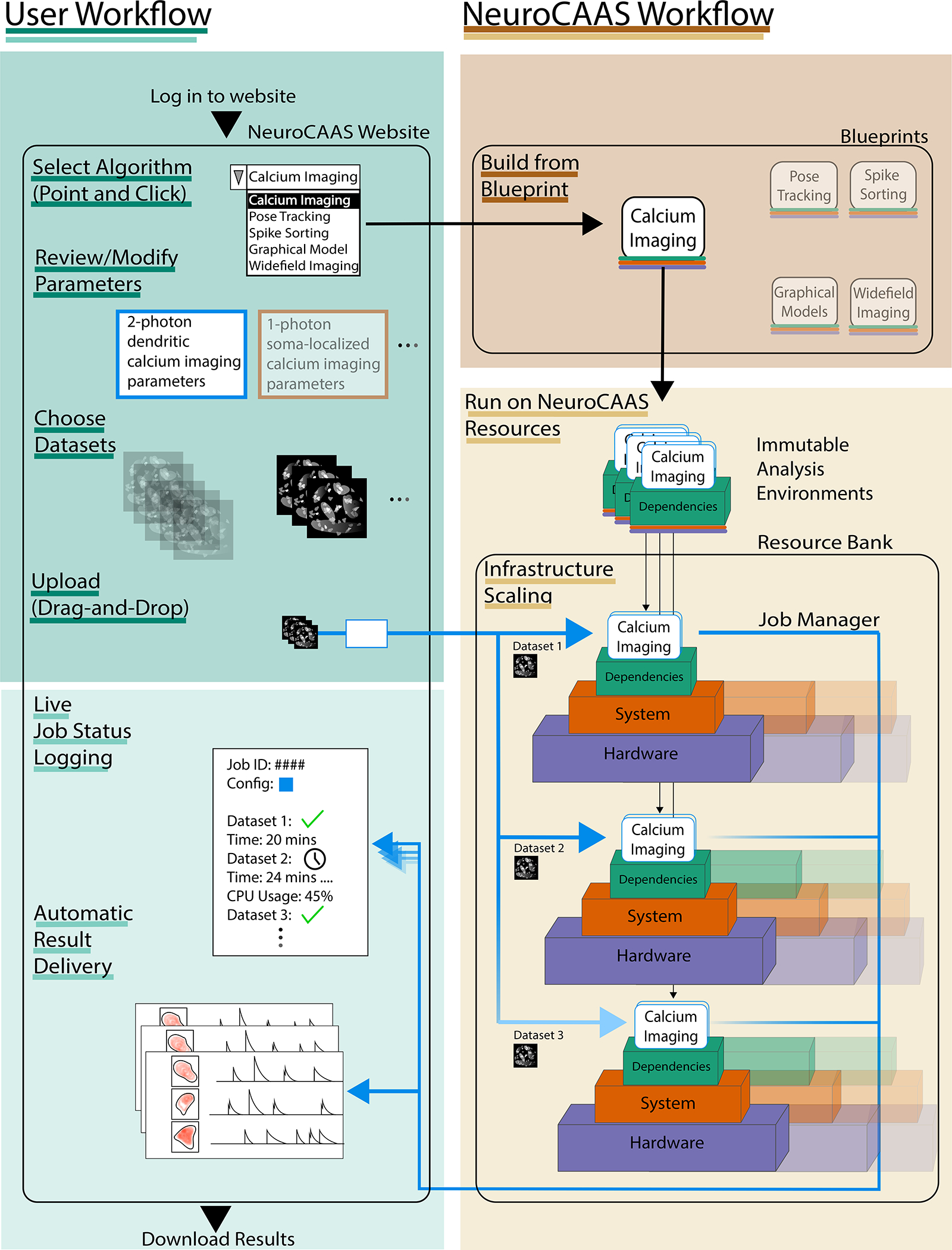

In the simplest use case, users simply log in to the platform and drag and drop their dataset(s) into a web browser (Figure 2, top left), sending it to cloud based user storage. After specifying a set of developer-defined parameters to apply to the selected dataset(s), they can submit a NeuroCAAS “job.” No further user input is needed: given the relevant datasets(s) and parameters, NeuroCAAS sets up core analysis for each dataset on an entirely new infrastructure stack from the corresponding blueprint (Figure 2, black arrows). NeuroCAAS then pulls data and parameters independently into each infrastructure stack (Figure 2, blue arrows), providing scalable and reproducible computational processing as needed (Figure 2, bottom right). Analysis outputs (including live status logs and a complete record of the job’s inputs and infrastructure) are then delivered back to timestamped folders in user storage for inspection by analysis users (Figure 2, bottom left), and finally infrastructure stacks are dissolved when data processing is complete (Figure 2, bottom right). As an example, Supplementary Video 1 shows how users can train three separate DeepGraphPose models (Wu et al., 2020) on three separate datasets simultaneously using the NeuroCAAS web interface.

Figure 2: Overview of NeuroCAAS User Workflow.

Left indicates the user’s experience; right indicates the work that NeuroCAAS performs. The user chooses from the analyses encoded in NeuroCAAS. They then modify corresponding configuration parameters as needed. Finally, the user uploads dataset(s) and a configuration file for analysis. NeuroCAAS detects upload event and deploys the requested analysis using an infrastructure blueprint (§2.1.4). NeuroCAAS builds the appropriate number of IAEs (§2.1.1) and corresponding hardware instances (§2.1.3). Multiple infrastructure stacks may be deployed in parallel for multiple datasets and the job manager (§2.1.2) automatically handles input and output scaling. The deployed resources persist only as necessary, and results, as well as diagnostic information, are automatically routed back to the user. See Figure S1 for comparison with IaGS, and Figure S3 for IAE list.

2.1. NeuroCAAS Builds Complete Infrastructure Stacks

The structure of NeuroCAAS naturally solves the issues of reproducibility, accessibility, and scale that burden existing infrastructure tools and platforms. NeuroCAAS partitions a complete infrastructure stack into three decoupled parts that together are sufficient to support virtually any given core analysis. First, to address all software level infrastructure, NeuroCAAS offers all analyses in immutable analysis environments (§2.1.1). Second, to address system configuration, each NeuroCAAS analysis has a built-in job manager (§2.1.2) that automates all of the logistical tasks associated with analyzing data: configuring hardware, logging outputs, parallelizing jobs and more. Third, to provide reproducible computing hardware on demand, NeuroCAAS manages a resource bank (§2.1.3) built on the public cloud, making the service globally accessible at unmatched scale. For a given core analysis, the configuration of these three infrastructure components is concisely summarized in a NeuroCAAS blueprint, from which it can be automatically rebuilt (§2.1.4). We describe component implementation in further depth in §9.2.

2.1.1. Immutable Analysis Environments for Software Infrastructure

On NeuroCAAS, all core analyses run inside immutable analysis environments (IAEs). An IAE is an isolated computing environment containing the installed core analysis code and all necessary software dependencies, similar to a Docker container (Merkel, 2014). Importantly, an IAE also contains a single script that parses input and parameters in a prescribed way, and runs the steps of core analysis workflow (Figure 2, right; Figure S6, top left). The fact that analysis workflow is entirely governed by this script (i.e. non-interactive) makes our analysis environments immutable. IAEs eliminate the possibility of bugs resulting from incompatible dependencies, mid-analysis misconfiguration (Figure S1A, installation and troubleshooting), or other so-called “user degrees of freedom” and ensure that analyses are run within developer-defined workflows. Immutability has a long history as a principle of effective programming and resource management in computer science (Bloch, 2008, Morris, 2016), and in this context is closely related to the view that data analysis should be automated as much as possible (Tukey, 1962, Waltz and Buchanan, 2009). These views are justified by observed benefits to analysis at scale, which we leverage in (§2.4.,2.5).

Each IAE has a unique ID, and analysis updates can be recorded in IAEs linked through blueprint versions (see §2.1.4 for details). We have currently implemented 22 analyses in a series of immutable analysis environments (Table S3) and are actively developing more (see www.neurocaas.org for current options).

2.1.2. Job Managers for System Infrastructure

Given a dataset and analysis parameters, how does NeuroCAAS set up the right IAE and computing hardware to process these inputs? This configuration is the responsibility of the NeuroCAAS job manager, which monitors analysis progress and returns timestamped job outputs to user storage from the IAE (including live job logs). Although similar in these regards to a cluster workload manager like slurm (Yoo et al., 2003) (Figure 2, blue arrows) the NeuroCAAS job manager does not assign jobs to running infrastructure, but rather set up all other infrastructure components “on the fly,” removing the need for manual infrastructure maintenance (Figure 2; black arrows). The job manager for each analysis functions according to a code “protocol” that describes what steps should be taken when a new NeuroCAAS job is requested. Importantly, protocols can be customized for each analysis, allowing developers to implement simple features like input parsing, or complex multi-stack workflows as shown in §2.4.,2.5.

2.1.3. Resource Banks for Hardware Infrastructure

To automatically reproduce infrastructure on demand, we crucially need a way to create identical hardware configurations across multiple users of the same analysis, who may be analyzing data simultaneously at many different locations around the world. This key requirement is handled by the NeuroCAAS resource bank. The NeuroCAAS resource bank can make hardware available through pre-specified instances: bundled collections of virtual CPUs, memory, and GPUs that can emulate any number of familiar hardware configurations (e.g. personal laptop, workstation, on premise cluster). However unlike these persistent computing resources, the NeuroCAAS resource bank is built upon globally available, virtualized compute hardware offered through the public cloud (currently Amazon Web Services). At any time, the resource bank can provide a large number of effectively identical hardware instances to execute a particular task (Figure 2, bottom right). The reproducible nature of hardware instances in the resource bank complements the immutability of workflows imposed by IAEs. By default, we fix a single instance type per analysis in order to facilitate reproducibility (see 9.2 for details).

2.1.4. Blueprints for Instant Reproducibility

For any given analysis, each of NeuroCAAS ‘s infrastructure components (§2.1.1–2.1.3) has a specification in code (IAEs and resource bank instances have IDs, job managers have protocol scripts). The collection of all infrastructure identifying code associated with a given NeuroCAAS analysis is stored in the blueprint of that analysis (Figure 2, top right- for an example see Figure S2), from which new instances of the infrastructure stack can be deployed at will, providing reproducibility by design. Despite sustained efforts to promote reproducible research, (Buckheit and Donoho, 1995), in many typical cases data analysis remains frustratingly non-reproducible (Crook et al., 2013, Gorgolewski et al., 2017, Stodden et al., 2018, Raff, 2019). NeuroCAAS sidesteps all of the typical barriers to reproducible research by tightly coupling the creation and function of infrastructure stacks to their documentation. For transparency, NeuroCAAS stores all currently available and developing analysis blueprints in a public code repository (see §9.2). Updates made to any component of an infrastructure stack on NeuroCAAS (IAEs, job managers, or hardware instances) can only be implemented through subsequent deployments of updated blueprints. We record these changes systematically using simple version control on the blueprint itself, ensuring a publicly visible record of analysis development.

2.2. NeuroCAAS Supports Simple Use and Development

Users analyze data on NeuroCAAS solely through interactions with cloud storage. Therefore, NeuroCAAS supports any interface that allows users to transfer data files to and from cloud storage. The standard interface to NeuroCAAS is a website, www.neurocaas.org, where users can sign up for an account, browse analyses, deposit data and monitor analysis progress until results are returned to them as described in Figure 2. We will describe other interfaces to NeuroCAAS in §2.4 and §3. Regardless of interface, there is no need to manage persistent compute resources during or after analysis, and costs directly reflect usage time.

For comparison, IaGS begins with a number of time-consuming manual steps, including hardware acquisition, hardware setup, and software installation. With a functional infrastructure stack in hand, the user must prepare datasets for analysis, manually recording analysis parameters and monitoring the system for errors as they work. While parallel processing is possible, it must be scripted by the user, and in many cases datasets are run serially. What results from IaGS is massive inefficiency of time and resources. Users must also support the cost of new hardware “up front,” before ever seeing the scientific value of the infrastructure that they are purchasing. Likewise, labs or institutions must pay support costs to maintain infrastructure when it is not being used, and replace components when they fail or become obsolete (see Figure S1 for a side-by-side comparison with NeuroCAAS ). Two editorial remarks bear mentioning at this point: first, the stark difference laid out in these workflows is the essence of IaGS vs IaC, and explains the dominance of IaC in modern industrial settings. Second, NeuroCAAS is and will remain an open-source tool for the scientific community, in keeping with its sole purpose of improving the reproducibility and dissemination of neuroscience analysis tools.

For analysis developers interested in NeuroCAAS, we designed a developer workflow and companion python package that streamlines the process of migrating an existing analysis to the NeuroCAAS platform (see §9.2 and Figure S6 for an overview). Our developer workflow abstracts away the cloud infrastructure that NeuroCAAS is built on, allowing for analysis development entirely from the developer’s command line. We highlight several key features of this workflow here. Importantly, we do not expect any previous experience in containerization technology, cloud tools, or IaC from developers.

Curated deployments.

After setting up an IAE and initializing a corresponding blueprint, developers submit blueprints and test data to our code repository in publicly visible pull requests. NeuroCAAS team members then review the submitted blueprint and deploy the corresponding infrastructure stack in “test mode,” reviewing outputs, requesting updates, and ensuring stated function in a public forum before releasing the analysis to users.

Improving analysis robustness.

A central challenge of building general-purpose data analysis is the difficulty of anticipating all analysis use cases as a developer and ensure robustness to all possible datasets. This is true even with the testing, review, and development practices that NeuroCAAS puts in place. However, NeuroCAAS ‘s design has several features intended to accelerate the process of improving analysis robustness both during and after initial deployment. When errors occur, users can refer the analysis developer to version controlled analysis outputs using standard interfaces like Github issues, greatly simplifying error replication. Developers can then set up fixes on the same infrastructure, and update the analysis blueprint in subsequent deployments. Since infrastructure is rebuilt from the blueprint for each NeuroCAAS job, an updated blueprint fixes the bug, for all future analysis runs of all analysis users. Importantly, updates can be made to a public analysis without influencing the reproducibility of past results (see 9.2 and Figure S6 for details, and §9.4 for links to a full developer guide).

2.2.1. Testing the NeuroCAAS Usage Model

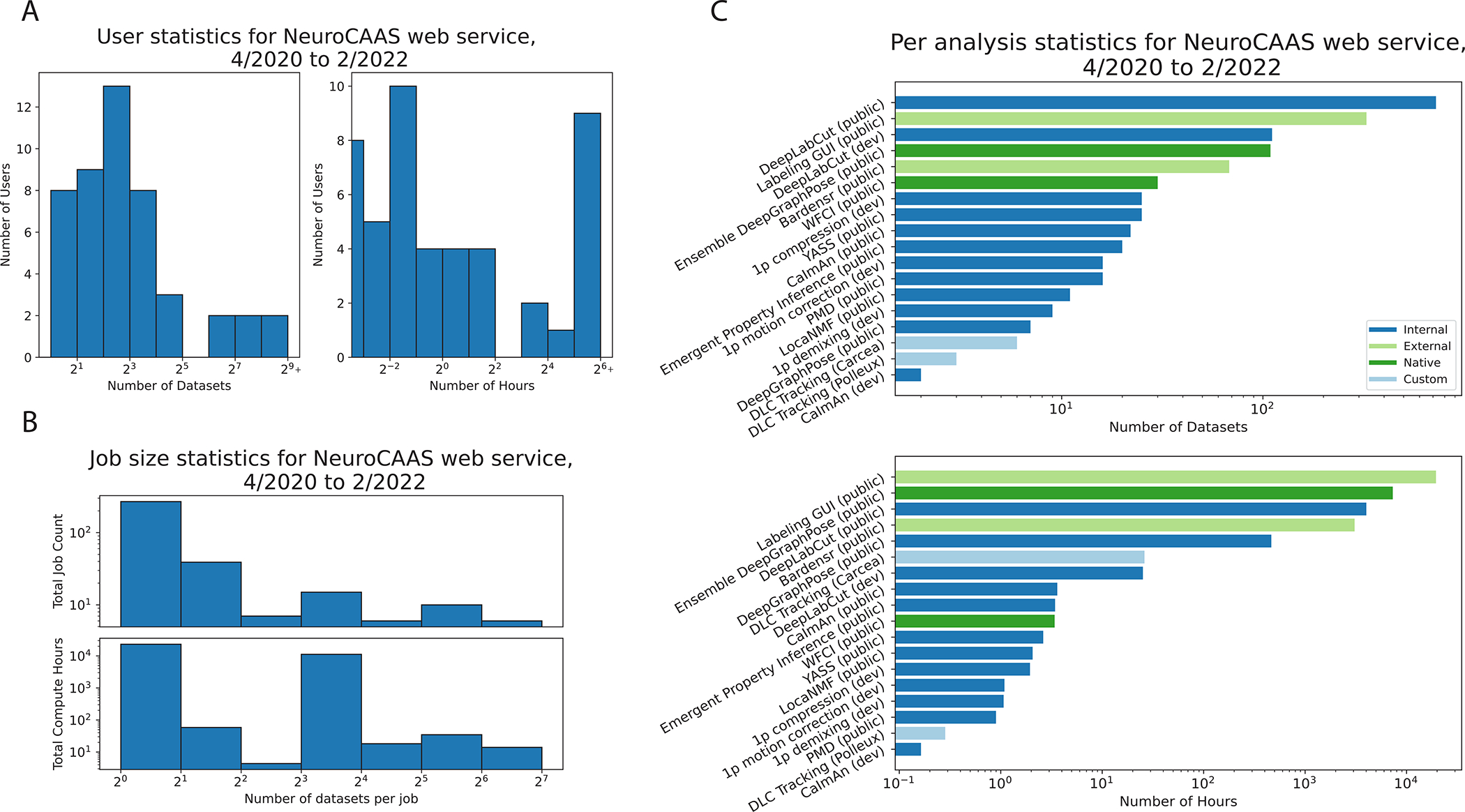

Next, we study how the design of NeuroCAAS translates into quantifiable analysis benefits. We confirmed the accessibility of data analyses on NeuroCAAS by opening the platform to a group of alpha testers (users and developers) over a period of 22 months. In Figure 3A, we see that while some users analyzed a handful of datasets, others analyzed hundreds and spent days of compute with the platform. Figure 3B further studies the co-occurrence of different usage patterns: a large number of single dataset jobs are suggestive of one-off exploratory use, while there is also a considerable proportion of jobs that leverage NeuroCAAS ‘s capacity for parallelism, analyzing anywhere from 2 to 70 datasets in a single job. We also grouped usage by data analysis (Figure 3C). We classified different analyses as follows: dark blue bars indicate existing analysis adapted for NeuroCAAS by manuscript authors. These analyses were developed collaboratively, and in many cases, we iterated on an initial “dev” version of an analysis adapted for NeuroCAAS with feedback from users, before releasing a “public” version that was robust to various differences in workflow and dataset type. Light green bars indicate analyses developed by independent researchers following our developer workflow. We highlight the fact that analyses built following the developer workflow are well used, indicating the viability of the workflow that we have built. Dark green bars indicate analyses that we introduce in this paper specifically for NeuroCAAS infrastructure, described further in §2.4, 2.5. Finally, light blue bars indicate “custom” analyses that we built for particular user groups. NeuroCAAS authors built custom analyses through simple copying and editing of existing, general purpose blueprints. While per-user custom analyses are not a focus of our platform, these results demonstrate the ease with which different variants of an analysis can be provided within NeuroCAAS ‘s design, and we discuss how users can leverage NeuroCAAS for custom use cases in §3.

Figure 3: Usage statistics NeuroCAAS Platform.

Usage data over a 22-month alpha test period. A. Histogram for number of datasets (left) and corresponding compute hours (right) spent by each active user of NeuroCAAS. B. Histograms for job size indicates the number of datasets (top) and corresponding compute hours (bottom) concurrently analyzed in jobs. C. Usage grouped by platform developer. Dark blue: analyses adapted for NeuroCAAS by paper authors. Light green: analyses that were not developed by NeuroCAAS authors. Dark green: NeuroCAAS native analyses (§2.4, 2.5). Light blue: custom versions of generic analyses built for individual alpha users. We exclude usage attributed to NeuroCAAS team members.

Next we confirmed that the design of data analysis using NeuroCAAS ‘s IaC approach does indeed provide robust reproducibility. We selected two analyses available on our platform and we analyzed the similarity of analysis outputs across multiple runs in Table 2 (see Figure S3 for in depth analysis, and Figure S4 for corresponding analysis of timings). Fixing a single dataset, configuration file, and blueprint for each analysis, we evaluated reproducibility of results in terms of differences between analysis outputs (see Table 2 caption for metrics.) First, we observe that over multiple runs conducted by the same researcher in the United States, (Table 2, vs Run 2), results showed no scientifically relevant differences. Second, we varied the identity and physical location of the person requesting NeuroCAAS jobs: compared to jobs started by independent researchers in India and Switzerland (Table 2, vs Run 5, Run 10 respectively) we once again note no meaningful differences in the outputs of these analyses. Whereas physical location might bias or restrict researchers to use specific analysis infrastructure on other platforms, they have access to the exact same analysis infrastructure through NeuroCAAS. Finally, we conducted a test to measure if our platform was truly IaC: given dataset, configuration file, and analysis blueprint, there should be no reliance on the compute resources that we used to develop these analysis and perform reference runs. For a final run, we automatically deployed a complete clone of the NeuroCAAS platform on a new set of cloud resources, as any user of our platform can do in a few simple steps (details in §3, Figure S5). We then ran jobs with the blueprints, datasets and configuration files for the corresponding analyses (Table 2, vs Run 14), showing that results from this cloned platform are indistinguishable from those generated by the original platform.

Table 2: Quantifying reproducibility via output comparisons for two analyses on NeuroCAAS.

For CaImAn, (an algorithm to analyze calcium imaging data) we independently characterized differences in the spatial and temporal components recovered by the model. Differences in spatial components are measured by the average Jaccard Distance over pairs of spatial components. A Jaccard distance of 0 corresponds to two spatial components that perfectly overlap. Differences in temporal components were calculated as the average root mean squared error (RMSE) taken over paired time series of component activity. For Ensemble DeepGraphPose (an algorithm to track body parts of animals during behavior from video), we considered multiple sets of outputs from a single, pretrained model. RMSE takes units of pixels, so differences of order 1e-8 are not relevant for behavioral quantification. For both analyses, we fixed a single dataset, configuration file and blueprint across runs. See Figures S3,S4 for more.

| Reference Run Output | vs. Run 2 | vs. Run 5 | vs. Run 10 | vs. Run 14 | |

|---|---|---|---|---|---|

| Analysis | (Comparison Metric) | (US) | (India) | (Switzerland) | (Platform Clone) |

| Spatial Components | 0.0 | 0.0 | 0.0 | 0.0 | |

| CaImAn | (Jaccard Distance) | ||||

| (Giovannucci et al., 2019) | Temporal Components | 0.0 | 0.0 | 0.0 | 0.0 |

| (RMSE) | |||||

| Ensemble | Body Part Traces | 1.2e-8 | 1.2e-8 | 2.3e-8 | 1.4e-8 |

| DeepGraphPose (§2.5) | (RMSE) |

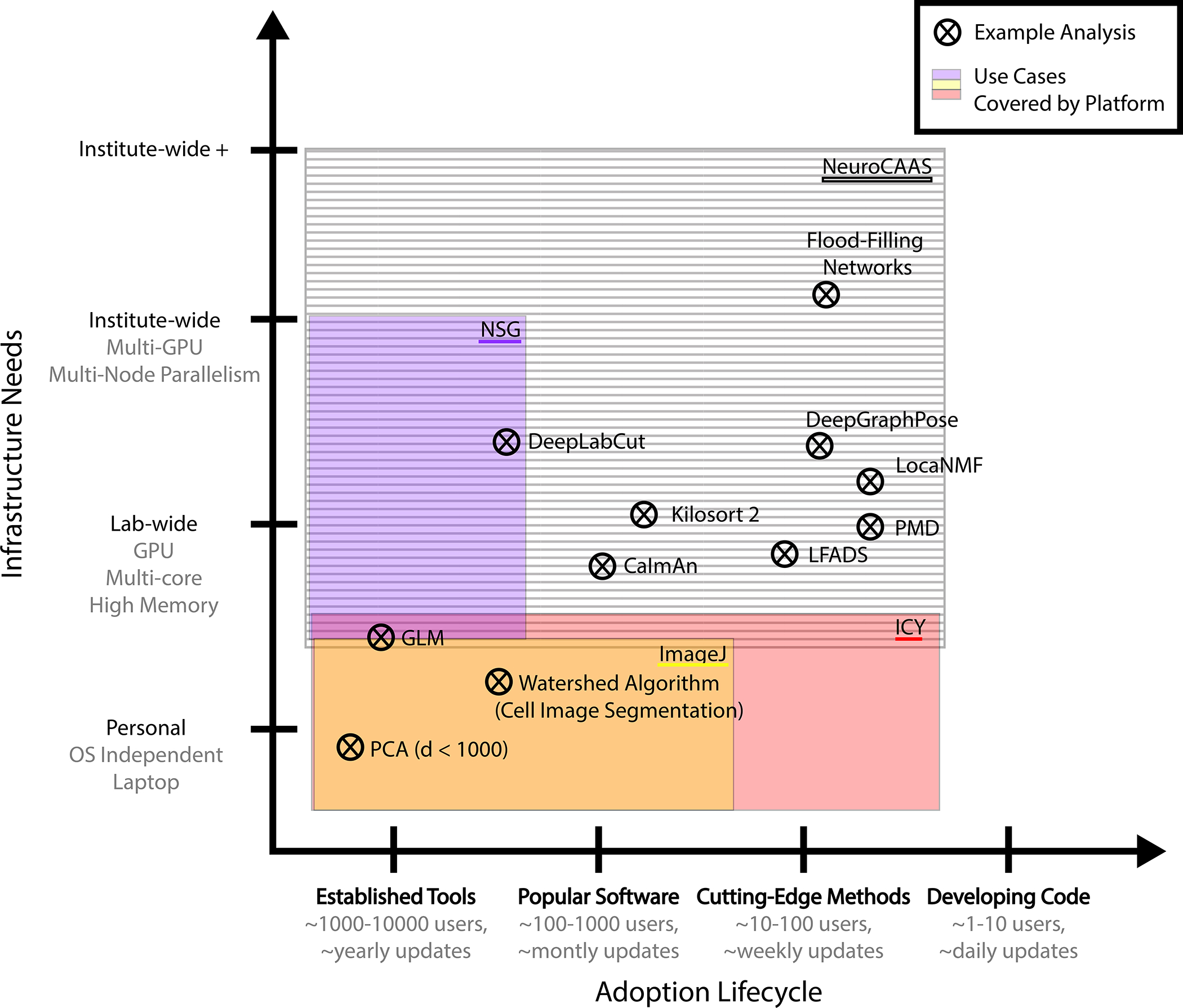

2.3. Existing Platforms Leave Infrastructure Gaps

Although we do not attempt an exhaustive review of existing analysis platforms in neuroscience here, we characterize some exemplars in order to contrast NeuroCAAS from typical alternatives. In Figure 4, we plot a variety of popular neuroscience analyses onto a space defined by 1) their place in the adoption lifecycle and 2) corresponding infrastructure needs. We overlay several exemplar platforms on this graph, with shading representing the kinds of analyses they are able to support. The degree to which a platform’s support extends to the right defines its accessibility, or the ease with which developers and users can configure analyses on the platform, and begin to process data. Accessibility is a key feature for analyses that are still early in the adoption lifecycle with active development and a growing user base. Likewise, the degree to which a platform’s support extends upwards defines its scale, a one dimensional approximation of the infrastructure needs for which it can provide. While the exact positioning of these analyses and platforms is subjective and dynamic, there are general features of the analysis platform landscape that we discuss in what follows.

Figure 4: Landscape of Cellular/Circuit-Level Neuroscience Analysis Platforms.

Crosses: popular analyses in terms of their place in the adoption lifecycle (number of users, rate of software updates), and their infrastructure needs. Coloring: representative platforms, indicating the parts of analysis space that are covered by a given platform. (Example analyses: (Goodman and Brette, 2009; Pnevmatikakis et al., 2016; Mathis et al., 2018; Pachitariu et al., 2016; Pandarinath et al., 2018; Januszewski et al., 2018; Saxena et al., 2020; Buchanan et al., 2018; Graving et al., 2019); Representative platforms: (Sanielevici et al., 2018; Chaumont et al., 2012; Schneider et al., 2012).

Local platforms like CellProfiler (Carpenter et al., 2006), Ilastik (Sommer et al., 2011) (cell-based image processing), Icy (Chaumont et al., 2012), ImageJ (Schneider et al., 2012) (generic bioimage analysis), BIDS Apps (Gorgolewski et al., 2017) (MRI analyses for Brain Imaging Data Structure format), and Bioconductor (Amezquita et al., 2020) (genomics) have all achieved success in the field by packaging together popular analyses with necessary software dependencies and intuitive, streamlined user interfaces. Most of these local platforms also have an open contribution system for interested developers. Local platforms are thus highly accessible to both developers and users, but are in the large majority of cases designed only for use on a user’s local hardware, limiting their scale (Figure 4, bottom).

In contrast, remote platforms like the Neuroscience Gateway (NSG) (Sanielevici et al., 2018) (specializing in neural simulators), Flywheel (flywheel.io) (emphasizing fMRI and medical imaging), and neuroscience-focused research computing clusters offer powerful hardware through the XSEDE (Extreme Science and Engineering Discovery Environment) portal (Towns et al., 2014), the public cloud and on-premises hardware, respectively. These remote platforms offer powerful compute, but at the cost of accessibility to users, who must adapt their software and workflow to new conventions (i.e. wait times for jobs to run on shared resources, hardware specific installation, custom scripting environments, limitations on concurrency) in order to make use of offered hardware. As a particular example, NSG requires users to submit a script that they would like to have run on existing compute nodes in the XSEDE cluster, making it more similar to a traditional on-premises cluster in usage than NeuroCAAS. NSG also restricts jobs to run serially, and does not have an open system for contributing new analyses, making it incompatible with the usage model and analyses that we present here. Likewise, while Flywheel () (with a focus on human brain imaging tools) offers the option of cloud compute, the platform is not structured in terms of infrastructure stacks for given analyses, in effect leaving many infrastructure design choices to individual users. Altogether, remote platforms are best for committed, experienced users who are already familiar with the analyses available on the remote platform and understand how to optimize them for available hardware. It is also more difficult to contribute new analyses to these platforms than their locally hosted counterparts (see Figure 4, left side). This difficulty makes them less suitable for actively developing or novel analyses, as updates may be slow to be incorporated, or introduce breaking changes to user-written scripts, making remote platforms altogether less accessible than local ones.

Some platforms provide both local or remote style usage: Galaxy (Goecks et al., 2010) and Brainlife (Avesani et al., 2019) offer a set of default compute resources, but can also be used to run analyses on personal computing resources, or the cloud. These mixed-compute models provide a useful way to increase the accessibility of many analyses, and can provide levels of reproducibility similar to that provided by the IAE and job manager of NeuroCAAS. However, without having an IaC framework that makes a reproducible configuration of compute resources available to all analysis users, we lose the guarantee that all platform users will be able to use analyses that depend on specific infrastructure configurations. As noted in (Goecks et al., 2010), it is a challenge even for these mixed-compute platforms to ensure accessibility for an analysis developed on a given set of local compute resources-significant work must be done to make this analysis functional on other computing platforms, or to maintain these local compute resources in order to ensure that others can use them whenever needed. These challenges are only exacerbated by the increasing reliance of analysis tools upon more powerful and specific infrastructure configurations, such as high performance GPUs.

Although undeniably useful, all available platforms operate on a tradeoff that forces researchers to choose between accessibility and scale. While these platforms often concentrate on applications that mitigate the effects of this tradeoff, there are many popular analyses that would not be suitable for existing analysis platforms (see Figure 4, center). Furthermore, critically for reproducibility, across all existing platforms analysis users and developers are still required to manually configure analysis infrastructure, whether by installing new tools onto one’s personal infrastructure, or porting code and dependencies to run in a remote (and sometimes variably allocated) infrastructure stack.

Some platforms in cellular and molecular biology (Riley, 2010), as well as bioinformatics (Simonyan and Mazumder, 2014,,Terra, 2022) have shown that IaC approaches are feasible to handle these infrastructure issues. A notable difference in the design of our platform is that these other platforms assume that individual users will themselves manually configure analyses- that they will choose relevant resources to support the analyses that they want to conduct, or compose an analysis of interest out of a set of small modular parts, in each case performing the work of a NeuroCAAS developer. While resulting analyses can be shared with other individuals on a case-by-case basis, this is very different from NeuroCAAS. Our platform is geared towards a heterogeneous community of researchers, where some researchers are developing general purpose analyses that only need to be configured or updated once before being used by a large group of potential users.

Next, we concretely demonstrate how the infrastructure benefits of the NeuroCAAS platform address ongoing challenges in neuroscience data analysis. We show two examples of NeuroCAAS native analyses that would not be feasible without the simultaneous benefits to accessibility, scale, and reproducibility that we provide.

2.4. NeuroCAAS Simplifies Large Data Pipelines: Widefield Imaging Protocol

Often, big data pipelines demand many individual preprocessing steps, creating the need for unwieldy multi-analysis infrastructure stacks— infrastructure stacks that support the needs of multiple core analyses at the same time. A notable example is widefield calcium imaging (WFCI)— a high-throughput imaging technique that can collect activity dependent fluorescence signals across the entire dorsal cortex of an awake, behaving mouse (Couto et al., 2021), potentially generating terabytes of data across chronic experiments. The protocol paper Couto et al. (2021) describes a complete WFCI analysis that links together cutting-edge data compression/denoising with demixing techniques designed explicitly for WFCI (via Penalized Matrix Decomposition, or PMD (Buchanan et al., 2018) and LocaNMF (Saxena et al., 2020), respectively). Each of these analyses depends upon its own specialized hardware and installation, creating many competing requirements on a multi-analysis infrastructure stack that are difficult to satisfy in practice. While we offer a NeuroCAAS implementation of the described WFCI analysis in Couto et al. (2021), we do not discuss how NeuroCAAS addresses the issue of multi-analysis infrastructure stacks, which can pose IaGS challenges even to our blueprint based infrastructure.

Instead of working with multi-analysis infrastructure stacks, for this analysis NeuroCAAS extends the function of a standard job manager (see Figure 5A) to trigger multiple jobs, built from separate blueprints, in sequence (Figure 5B). We employ this design to dramatically simplify the infrastructure requirements for a complete WFCI pipeline. First the initial steps of motion correction, denoising, and hemodynamic correction of the data are run from a blueprint that emphasizes multicore parallelism (64 CPU cores) to suit the matrix decomposition algorithms employed by PMD. Upon termination of this first step, analysis results are not only returned to user storage, but also used as inputs to a second job, performing demixing with LocaNMF on infrastructure supporting a high performance GPU. This modular organization improves the performance and efficiency of each analysis component (see Figure 7), and also allows users to run steps individually if desired, giving them the freedom to interleave existing analysis pipelines with the components offered here. As an alternative to the standard NeuroCAAS interface, this WFCI analysis can be controlled from a custom-built graphical user interface (GUI). This GUI further extends NeuroCAAS ‘s accessibility with features such as interactive alignment of a brain atlas to user data as part of parameter configuration (in the process validating input data as well). Following parameter configuration, this GUI interacts with NeuroCAAS programmatically, using locally run code scripts to perform data upload and job submission, and to detect and retrieve results once analysis is complete. Results can be visualized directly in this GUI as well. Altogether, this GUI can be used as a model for researchers who would like to take advantage of our computational infrastructure within a more sophisticated user interface, or integrate NeuroCAAS programmatically with other software tools. Importantly, despite its interactivity, the performance of our WFCI analysis does not depend on the infrastructure available to the user. For example, users could simultaneously launch many analyses and have them run in parallel through this GUI, easily conducting a hyperparameter search over their entire multi-step analysis. Researchers can find the GUI for this WFCI analysis with NeuroCAAS integration at https://github.com/jcouto/wfield.

Figure 5:

NeuroCAAS Supports Multi-Stack Design Patterns. A. Default workflow: If more than one dataset is submitted, NeuroCAAS automatically creates separate infrastructure for each. B. Chained workflow: Multiple analysis components with different infrastructure needs are seamlessly combined on demand. Intermediate results are returned to the user so that they can be examined and visualized as well (§2.4). C. Parallelism + chained workflow: Workflows A and B can also be combined to support batch processing pipelines with a separate postprocessing step (§2.5).

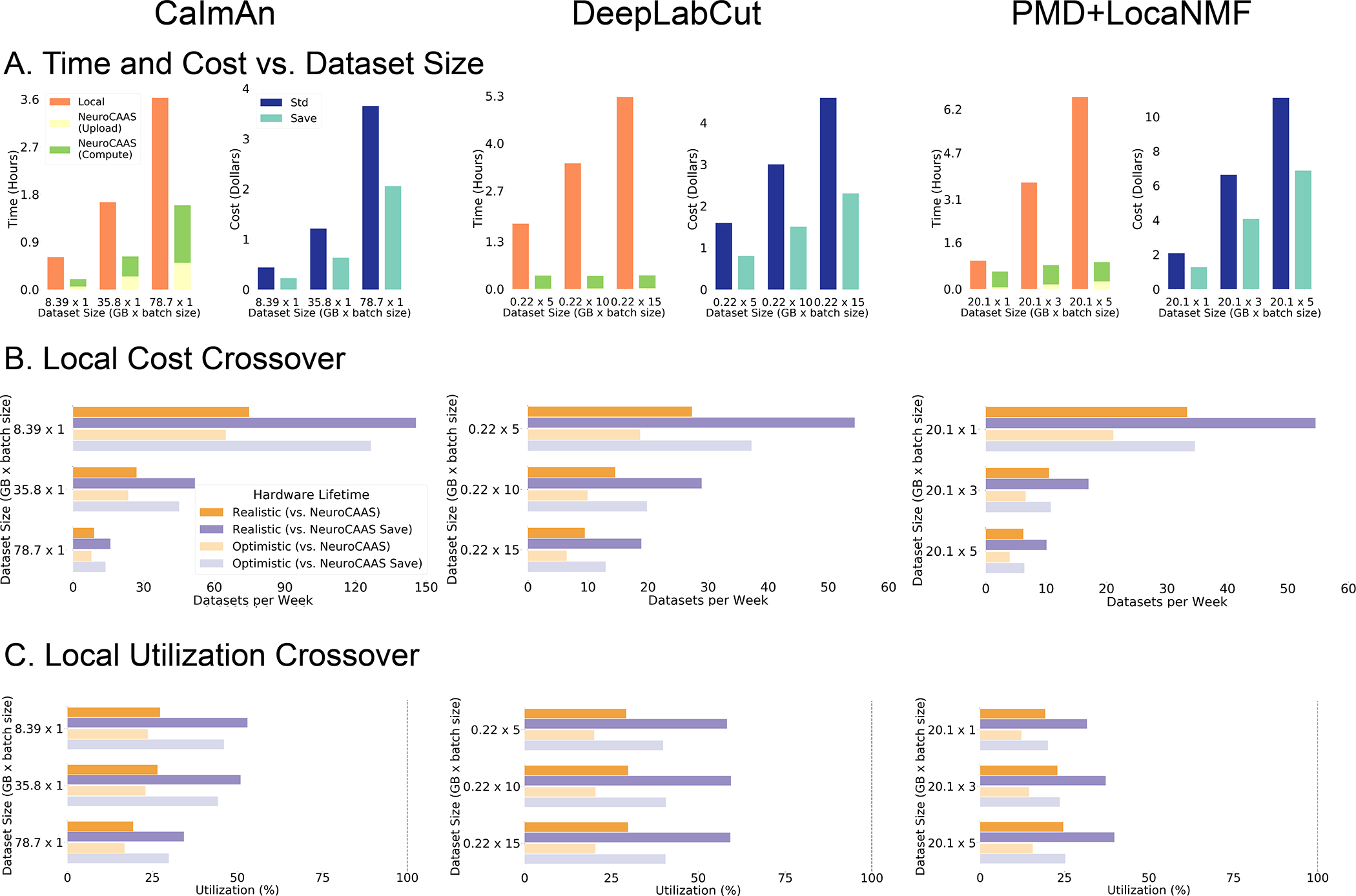

Figure 7: Quantitative Comparison of NeuroCAAS vs. Local Processing for Three Different Analyses.

A. Simple quantifications of NeuroCAAS performance. Left graphs compare total processing time on NeuroCAAS vs. local infrastructure (orange). NeuroCAAS processing time is broken into two parts: Upload (yellow) and Compute (green). Right graphs quantify cost of analyzing data on NeuroCAAS with two different pricing schemes: Standard (dark blue) or Save (light blue). B. Cost comparison with local infrastructure (LCC). Figure compares local pricing against both Standard and Save prices, with Realistic (2 year) and Optimistic (4 year) lifecycle times for local hardware. C. Achieving Crossover Analysis Rates. Local Utilization Crossover gives the minimum utilization required to achieve crossover rates shown in B. Dashed vertical line indicates maximum feasible utilization rate at 100% (utilizing local infrastructure 24 hours, every day). See Figure S7 for cluster analysis, and Tables S4–S8 for supporting data.

For developers, this analysis presents a counterpart to existing domain specific projects such as CaImAn (Giovannucci et al., 2019) for cellular resolution calcium imaging or SpikeInterface (Buccino et al., 2020) for electrophysiology, which explicitly make multi-step data analyses compatible with optimized hardware. In contrast, our WFCI analysis is made directly available to users on powerful remote hardware without the need for anyone to revise existing analysis or infrastructure. To our knowledge, there is no other neuroscience platform with the accessibility, scale, and support for reproducibility to link together cutting-edge analyses across separate infrastructures, and make this exact configuration available directly to the research community.

2.5. NeuroCAAS Stabilizes Deep Learning Models: Ensemble Markerless Tracking

The black box nature of deep learning can generate sparse, difficult to detect errors that reduce the benefits of deep learning based tools in sensitive applications. For modern markerless tracking analyses built on deep neural networks (Mathis et al., 2018, Graving et al., 2019, Nilsson et al., 2020, Wu et al., 2020), these errors can manifest as “glitches” (Wu et al., 2020), where a marker point will jump to an incorrect location, often without registering as an error in the network’s generated likelihood metrics (see Figure 6).

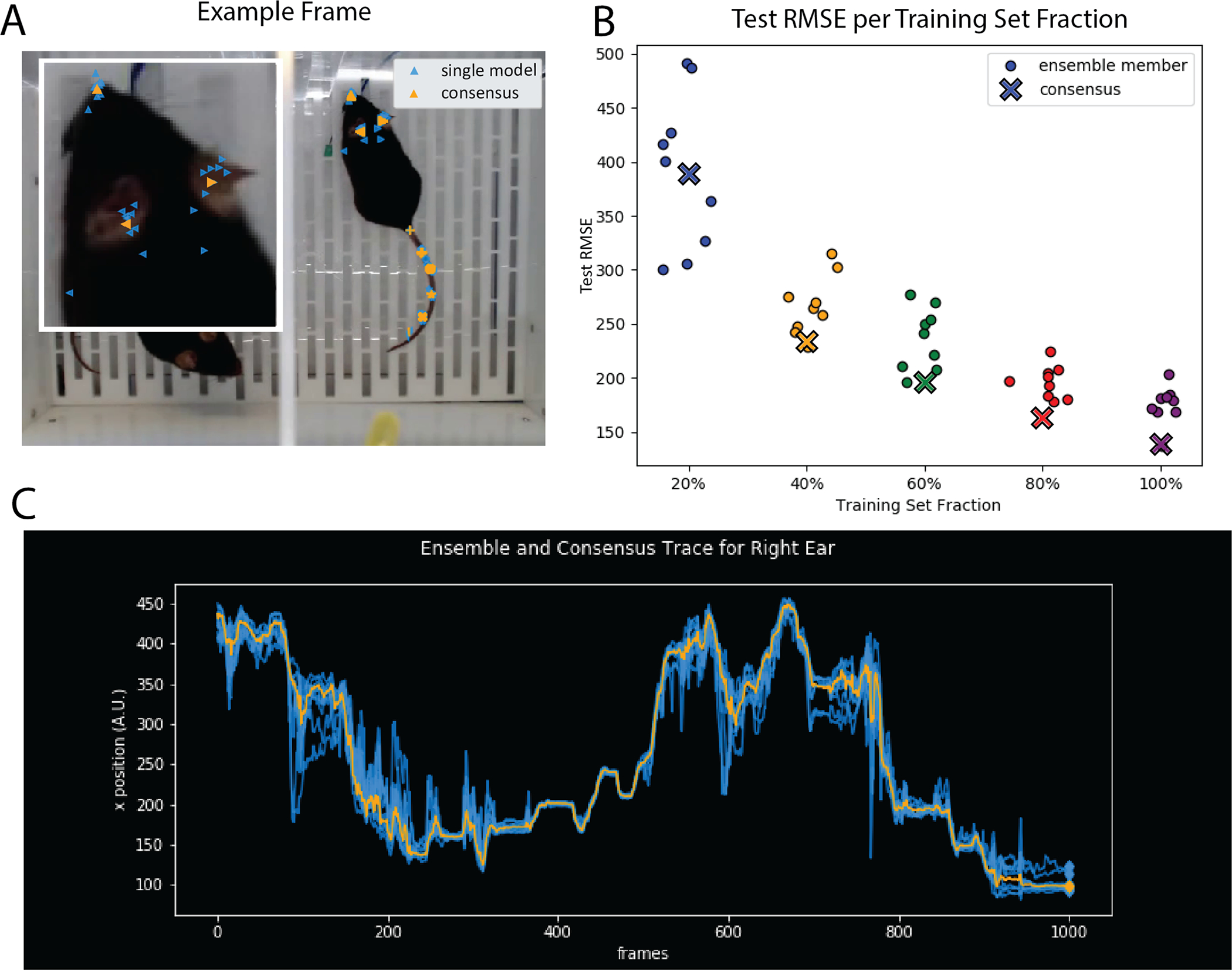

Figure 6: Ensemble Markerless Tracking.

A. Example frame from mouse behavior dataset (courtesy of Erica Rodriguez and C. Daniel Salzman) tracking keypoints on the top down view of a mouse, as analyzed in Wu et al. (2020). Marker shapes track different body parts: blue markers representing the output of individual tracking models, and orange markers representing the consensus. Inset image shows tracking performance on the nose and ears of the mouse. B. consensus test performance vs. test performance of individual networks on a dataset with ground truth labels as measured via root mean squared error (RMSE). C. traces from 9 networks (blue) + consensus (orange). Across the entire figure, ensemble size = 9. A and C correspond to traces taken from the 100% split in B corresponding to 20 training frames.

One general purpose approach to combat the unreliable nature of individual machine learning models is ensembling (Dietterich, 2000): instead of working with a single model, a researcher simultaneously prepares multiple models on the same task, subsequently aggregating their outputs into a single consensus output. Ensemble methods have been shown to be effective for deep networks in a variety of contexts, (Lakshminarayanan et al., 2017, Fort et al., 2019, Ovadia et al., 2019), but they confer a massive infrastructure burden if run on limited local compute resources: researchers must simultaneously train, manage, and aggregate outputs across many different deep learning models, incurring either prohibitively large commitments to deep learning specific infrastructure and/or infeasibly long wait times.

In contrast, NeuroCAAS enables easy and routine implementation of ensemble methods. By modifying the NeuroCAAS job manager, we designed an analysis which takes input training data, and distributes it to N identical sets of IAEs and resource bank instances (Figure 5C). For the application shown here, we used an IAE with DeepGraphPose (Wu et al., 2020) as our core analysis; the N infrastructures differ only in the minibatch order of data used to train models. The results from each trained model are then used to produce a consensus tracking output, taking each individual model’s estimate of part location across the entire image (i.e. the confidence map output) and averaging these estimates. Even with this relatively simple approach, we find the consensus tracking output is robust to the errors made by individual models (Figure 6A,C). This consensus performance is maintained even when we significantly reduce the size of the training set (Figure 6B). Finally, in Figure 6C, we can see that there are portions of the dataset where the individual model detections fluctuate around the consensus detection. This fluctuation offers an empirical readout of tracking difficulty within any given dataset; frames with large diversity in the ensemble outputs are good candidates for further labeling, and could be easily incorporated in an active learning loop. After training, models can be kept in user storage, and used to analyze further behavioral data, without moving these models out of NeuroCAAS. Overall, Figure 6 shows that with the scale of infrastructure available on NeuroCAAS, ensembling can easily improve the robustness of markerless tracking, naturally complementing the infrastructure reproducibility provided by the platform.

NeuroCAAS is uniquely capable of providing the flexible infrastructure necessary to support a generally available, on-demand ensemble markerless tracking application. To our knowledge, none of the platforms with the scale to support markerless tracking on publicly available resources (e.g. on premise clusters, Google Colab, Galaxy Goecks et al. (2010), NSG (Sanielevici et al., 2018), Brainlife Avesani et al. (2019)) can satisfactorily alleviate the burden of a deep ensembling approach, still forcing the user to accept either long wait times or manual management of infrastructure. These limitations also prohibit use cases involving the quantification of ensemble behavior across different parameter settings (c.f. Figure 6B, where we trained 45 networks simultaneously).

2.6. NeuroCAAS is Faster and Cheaper than IaGS Analogues

NeuroCAAS offers a number of major advantages over IaGS : reproducibility, accessibility, and scale, whether we compare against a personal workstation or resources allocated from a locally available cluster. However, since NeuroCAAS is based on a cloud computing architecture, one might worry that data transfer times (i.e., uploading and downloading data to and from the cloud) could potentially lead to slower overall processing or that the cost of cloud compute could outweigh that of local infrastructure.

Figure 7 considers this question quantitatively, comparing NeuroCAAS to a simulated personal workstation (see §9.3 for details). For the analogous comparisons (with similar conclusions) against a simulated local cluster, see Figure S7. Figure 7 presents time and cost benchmark results on four popular analyses that cover a variety of data modalities: CaImAn (Giovannucci et al., 2019) for cellular resolution calcium imaging; DeepLabCut (DLC) (Mathis et al., 2018) for markerless tracking in behavioral videos; and a two-step analysis consisting of PMD (Buchanan et al., 2018) and LocaNMF (Saxena et al., 2020) for analysis of widefield imaging data. To be (extremely) conservative, we assume local infrastructure is set up, neglecting all of the time associated with installing and maintaining software and hardware.

Across all analyses and datasets considered in Figure 7, analyses run on NeuroCAAS were significantly faster than those run on the selected local infrastructure, even accounting for the time taken to stage data to the cloud (Figure 7A, left panes). We batched data to take advantage of both compute optimization offered by individual core analyses, and NeuroCAAS ‘s scale (see 9.3 for details). These examples show that many analyses can be used efficiently on NeuroCAAS regardless of the degree to which they have been intrinsically optimized for parallelism. Additionally NeuroCAAS upload time can be ignored if analyzing data that is already in a user storage — for example if there is a need to reprocess data with an updated algorithm or parameter setting — leading to further speedups. Finally, although we found download speeds negligible (see 9.3 for full details of timing quantifications) this could vary significantly based on user internet speeds and analyses. Across our platform, we have attempted to design analyses with much smaller outputs than input data- a point we will return to in the discussion (§3).

Next we turn to cost analyses. Over the range of algorithms and datasets considered here, we found that the total baseline NeuroCAAS analysis cost was on the order of a few US dollars per dataset (Figure 7A, right panels)- see Table S7 for pricing details. We observe that for the most part, costs are approximately linear in compute time, ensuring that even compute intensive operations like training a deep network for DLC (~12 hours on the same machines used here) can be accomplished for ~ $10-trained networks can be maintained in cloud storage to reduce data transfer cost. In addition to our baseline implementation, we also offer an option to run analyses at a significantly lower price (indicated as “Std” and “Save” respectively in the cost barplots in Figure 7), if the user can upper bound the expected runtime of their analysis to anything lower than 6 hours (i.e. from previous runs of similar data).

Finally, we compare the cost of NeuroCAAS directly to the cost of purchasing local infrastructure. We use a total cost of ownership (TCO) metric (Morey and Nambiar, 2009) that includes the purchase cost of local hardware, plus reasonable maintenance costs over estimates of hardware lifetime; see §9.3 for full details. We first ask how frequently one would have to run the analyses presented in Figure 7 before it becomes worthwhile to purchase dedicated local infrastructure. This question is answered by the Local Cost Crossover (LCC): the threshold weekly rate at which a user would have to analyze data for NeuroCAAS costs to exceed the TCO of local hardware. As an example, the top two bars of Figure 7B, left, show that in order for a local machine to be cost effective for CaImAn, one must analyze ~ 100 datasets of 8.39 GB per week, every week for several years (see Table S4 for a conversion to data dimensions). In all use cases, the LCC rates in Figure 7B show that a researcher would have to consistently analyze ~ 10–100 datasets per week for several years before it becomes cost effective to use local infrastructure. While such use cases are certainly feasible, managing these use cases on local infrastructure via IaGS would involve an significant amount of human labor.

In Figure 7C, we characterize this labor cost via the Local Utilization Crossover (LUC): the actual time cost of analyzing data on a local machine at the corresponding LCC rate. Across the analyses that we considered, local infrastructure would have to be dedicated to the indicated analysis for 25–50% of the infrastructure’s total lifetime (i.e. ~ 6–12 hours per day, every day) to achieve its corresponding LCC threshold, requiring an inordinate amount of work on the part of the researcher to manually run datasets, monitor analysis progress for errors, or build the computing infrastructure required to automate this process– in essence forcing researchers to perform by hand the large scale infrastructure management that NeuroCAAS achieves automatically. These calculations demonstrate that even without considering all of the IaGS issues that our solution avoids, or explicitly assigning a cost to researcher time, it is difficult to use local infrastructure more efficiently than NeuroCAAS for a variety of popular analyses. Given the diversity of IaGS solutions, we also provide a tool for users to benchmark their available infrastructure options against NeuroCAAS (see the instructions at https://github.com/cunningham-lab/neurocaas).

2.7. NeuroCAAS is Offered as a Free Service for Many Users

In many cases, researchers may use infrastructure available on hand to test out analyses before purchasing components for a dedicated infrastructure stack. Given the low per-dataset cost and the major advantages summarized above of NeuroCAAS compared to the current IaGS status quo, we have decided to mirror this model on the NeuroCAAS platform, and subsidize a large part of NeuroCAAS usage by the community. Users do not need to set up any billing information or worry about incurring any costs when starting work on NeuroCAAS; we cover all costs up to a per-user cap (initially set at $300). This subsidization removes one final friction point that might slow adoption of NeuroCAAS, and protects NeuroCAAS as a non-commercial open-source effort. Since NeuroCAAS is relatively inexpensive, many users will not hit the cap; thus, for these users, NeuroCAAS is offered as a free service. We note that we are also open to consider budget increases for researchers as they become necessary.

3. Discussion

NeuroCAAS integrates rigorous infrastructure practices into neural data analysis while also respecting current development and use practices. The fundamental choice made by NeuroCAAS is to provide analysis infrastructure with as much automation as possible. This choice naturally makes NeuroCAAS into a service, and in the simplest case neither analysis users nor analysis developers have to manage infrastructure directly; rather, NeuroCAAS removes the infrastructure burden entirely. However, as an open source project, NeuroCAAS also acknowledges the possibility that some users may want to accept some degree of responsibility for computing infrastructure, in return for a greater degree of flexibility in how they use the platform. We highlight two notable alternative use cases here:

Working at scale: large datasets/many jobs.

Although our drag-and-drop console removes the need for users to have previous experience with coding, some users may find the console restrictive when working with large datasets, or managing many jobs at once- both important facets of analysis use that NeuroCAAS is poised to improve. These restrictions can be reduced by working with NeuroCAAS from the command line, or by integrating calls to NeuroCAAS within locally run applications, as is done in §2.4. Since NeuroCAAS can be used solely by interacting with cloud storage, these interfaces to NeuroCAAS are easily supported by general purpose data transfer tools. We provide instructions for this use case in our developer documentation (see §9.4).

In order to streamline data transfer in cases where input or output data are unavoidably large, we have also implemented a “storage bypass” option for our CLI interface. Using this option, public data stored elsewhere in the AWS cloud can be analyzed, and results can be written back directly to this location without incurring additional data transfer time and costs, laying the groundwork for the integration of NeuroCAAS analyses with external data sources. This option is intended for analyses which handle especially large input or output data, where CLI use is preferable, but we plan to extend this functionality to all analyses and our standard interface soon. We believe these additional features will better equip NeuroCAAS to handle the ever increasing scale of neuroscience data (e.g. Steinmetz et al., 2021; Couto et al., 2021), as well as methods that consider multiple data modalities simultaneously (e.g. Batty et al., 2019), and faciliate sharing of analysis outputs across many users.

Working independently: private management of costs/compute resources.

A major benefit of NeuroCAAS ‘s IaC construction is that the entire platform (except private user data) can be reconstructed automatically given the code in the NeuroCAAS source repository (§9.2, Figure S5): there is no dependence of the platform upon specifics of infrastructure configuration that are not recorded in a blueprint. This benefit means that if users anticipate very high costs, or would like to use IaC management for their own custom analyses, it is easy for them to switch from using our public implementation of NeuroCAAS, to one that they pay for themselves, maintaining all the benefits of NeuroCAAS ‘s infrastructure management. We provide detailed instructions on this process in our developer documentation (§9.4), describing platform setup and cloning of individual analyses.

Finally, we revisit NeuroCAAS ‘s stated objectives of supporting reproducible, accessible, and scalable data analyses. These are fundamentally multifaceted issues, and will manifest in different ways across a variety of use cases. To this end, we identify strengths and limitations of NeuroCAAS ‘s approach to these issues (and related costs) so that researchers can evaluate the suitability of NeuroCAAS to their particular use case.

Reproducibility.

What are the benefits and limits of analysis reproducibility in NeuroCAAS? In section §2.2.1, we show that a dataset, configuration file, and analysis blueprint form a set of sufficient resources to reproduce an analysis output against a set of practically relevant interventions. We note some qualifications to this performance: First, it can be non-trivial to maintain a dataset across multiple analysis runs. Importantly, when data is uploaded it will not be versioned by default, creating the potential for it to be overwritten. For dataset provenance, we recommend data infrastructure projects like DANDI Dandi Team (2019). Integration with a data archive is an important future direction to extend reproducibility for NeuroCAAS. Second, we are limited by the inherent computational reproducibility of the core analysis we offer- for example, random computations can introduce significant differences from run to run (although ensemble methods can mitigate these issues). Finally, we can consider the lifecycle of different resources on the AWS cloud. For example, reproducibility could be affected if support for certain hardware instances become deprecated, and can no longer be used to run analyses. Given the large scale reliance of industrial applications on the AWS cloud, such events are very rare and announced well in advance, but we can take steps to address such a contingency. In particular, an important future direction is to consider how we can expand our approach outside of a particular cloud provider (see 9.2 for details).

Accessibility.

NeuroCAAS aims to improve the accessibility of data analysis by removing the need for users to independently configure infrastructure stacks, as is the de facto standard with IaGS approaches. By default, NeuroCAAS does not aim to improve other aspects of usability, such as the scientific use of core analysis algorithms. For example, if a user has data that is incorrectly formatted for a particular algorithm, the same error will happen with NeuroCAAS as it would with conventional usage, although curated deployments and blueprint based updates can significantly mitigate these issues.

Another approach towards achieving robust and general purpose analyses focuses on the explicit standardization of data formats and workflow. As mentioned, we plan to integrate with data archiving projects like DANDI (distributed archives for neurophysiology data integration) (Dandi Team, 2019) which enforces the NWB (Teeters et al., 2015, Rübel et al., 2019, Rübel et al., 2021) data standard, providing both a stable set of expectations for analysis developers, while also improving reproducibility of analysis results. Likewise, workflow management systems for neuroscience such as Datajoint (Yatsenko et al., 2015) or more general tools like snakemake (Koster and Rahmann, 2012) and the Common Workflow Language (Amstutz et al., 2016) codify the sequential steps that make up a data analysis on given infrastructure, ensuring data integrity and provenance. Other platforms both within (NeuroScout, 2022, Avesani et al., 2019) and outside of neuroscience (Seven Bridges, 2019) provide well-designed examples of how standardized data formats paired with workflow management systems can be used to make analyses more modular and easy to use.

Scale.

Although NeuroCAAS offers analyses at scale, it does not offer unstructured access to cloud computational resources. The concept of IAEs should clarify this fact: NeuroCAAS serves a set of analyses that are configured to a particular specification, as established by the analysis developer. This constraint is often ideal, since the specification is in many cases established by the analysis method’s original authors. Without specific structure to manage the near infinite scale of resources available on the cloud, the management of resources on the cloud easily becomes susceptible to the issues of IaGS that motivated the development of NeuroCAAS to begin with (Monajemi et al., 2019). The constraint of immutability distinguishes NeuroCAAS from interactive data analysis offerings that offer cloud computing like Pan-neuro (Rokem et al., 2021) or Google Colab, in keeping with their differing intended use cases. While interactive computing plays a key role in data analysis applications, we believe there is fundamental value in immutable data analyses as well.

Importantly, immmutability does not suggest that analyses on NeuroCAAS are a black box. All NeuroCAAS analyses are built from open source projects, the workflow scripts used to parse datasets and config files inside an IAE are made available to all analysis users, and jobs constantly print live status logs back to users. Furthermore, our novel analyses show that there are means of comprehensively characterizing analysis performance that only become available at scale (i.e. full parameter searches over a multi-step analysis, or ensembling to evaluate reliability of analysis outputs).

Cost.

The cost quantifications that we present in this manuscript are intended to demonstrate that the cost of using NeuroCAAS ‘s computing infrastructure is practically feasible when compared against the cost of computing on typical IaGS infrastructure. One point to note is that for individual research groups, the cost of using local infrastructure may vary significantly across institutions. Our quantifications are best fit to the case where a research group is supporting its own computing costs and resources. While the relative cost of using NeuroCAAS may thus differ from group to group, it is our hope that offering analyses at a uniform (and highly subsidized) cost will increase analysis accessibility to a significant portion of the neuroscience community, and potentially provide a more concrete understanding of the costs associated with the development and adoption of new analysis tools.

Beyond compute, we do not discuss the costs of storing and retrieving data from the cloud in depth. Without restricting data sizes on user storage, we found that data storage costs were small enough that we could support them without counting them towards user budgets. A common theme of the analyses that we discuss is that we can minimize data retrieval costs by designing workflows such that analysis results that the user actually needed to retrieve were far smaller than input data, specifically by modifying IAEs, and by maintaining large intermediate results on the cloud for use in future analyses. NeuroCAAS ‘s cost benefits may be reduced if these conditions are harder to achieve for a given analysis, although we believe that alternative use cases, such as our CLI interface with “storage bypass” are well poised to handle these contingencies, especially when paired with future directions such as integration with a data archive. Importantly, on private resources users will have to pay for cloud storage. This cost can be minimized by deleting input data and storing all results locally when not in use.

Beyond our proposed improvements above, NeuroCAAS will naturally continue to evolve by virtue of its open source code and public cloud construction. First, we hope to build a community of developers who will add more analysis algorithms to NeuroCAAS, with an emphasis on subfields of computational analysis that we do not yet support. Throughout this manuscript, we focus largely on analyses in systems neuroscience and neurophysiology, in accordance with the previous experience of the authors, and the opinion that analyses in this area are in great need of the platform design implemented by NeuroCAAS. We also plan to add support for real-time processing (e.g., Giovannucci et al. (2017) for calcium imaging, or Schweihoff et al. (2021), Kane et al. (2020) for closed-loop experiments, or Lopes et al. (2015) for the coordination of multiple data streams), using blueprint based methods to design fast, reliable infrastructure for closed loop analyses, in the same spirit as these batch mode analyses. Second, other tools have brought large-scale distributed computing to neural data analyses (Freeman, 2015, Rocklin, 2015) in ways that conform to more traditional high performance computing ideas of scalability for applications that are less easily parallelized than those presented here. Integrating more elaborate scaling into NeuroCAAS while maintaining development accessibility will be an important goal going forwards. Third, we aim to take inspiration from other computing platforms both within and beyond neuroscience to improve the usability of our platform, such as reporting the expected runtime and success rate of analyses Avesani et al. (2019), indicating the compatibility of different analysis steps in a sequence (Seven Bridges, 2019), or improving user and developer resources to include forums and full time support Goecks et al. (2010). We also aim to identify platforms and tools that could potentially be integrated with NeuroCAAS resources, in order to provide the infrastructure reliability that we prioritize. Finally, a major opportunity for future work is the integration of NeuroCAAS with modern visualization tools. We have emphasized above that immutable analysis environments on NeuroCAAS are designed with the ideal of fully automated data analyses in mind, because of the virtues that automation brings to data analyses. However, we recognize that for some of the core analyses on NeuroCAAS, and indeed most of those popular in the field, some user interaction is required to speed up analysis and optimize results. We have already demonstrated the compatibility of interactive interfaces with NeuroCAAS in our widefield calcium imaging analysis, and we will aim to establish a general purpose interface toolbox for developers in the same spirit, without sacrificing the benefits of cost efficiency, scalability, and reproducibility that distinguish NeuroCAAS in its current form.

Longer term, we hope to build a sustainable and open source user and developer community around NeuroCAAS. We welcome suggestions for improvements from users, and new analyses as well as extensions from interested developers, with the goal of creating a sustainable community-driven resource that will enable new large-scale neural data science in the decade to come.

9. STAR Methods

9.1. Resource Availability

Lead Contact

Further information and requests for resources should be directed to and will be fulfilled by the lead contact, John P. Cunningham (jpc2181@columbia.edu)

Materials Availability

This study did not generate new unique reagents.

Data/Code Availability

Quantifications of performance and reproducibility of NeuroCAAS have been deposited at Zenodo and are publicly available as of the date of publication. DOIs are listed in the key resources table.

This paper analyzes existing, publicly available data. These accession numbers for the datasets are listed in the key resources table.

All other data reported in this paper will be shared by the lead contact upon request.

All original code has been deposited at Zenodo and is publicly available as of the date of publication. DOIs are listed in the key resources table.

KEY RESOURCES TABLE.

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |

|---|---|---|

| Deposited Data | ||

| Raw data used for the benchmarking of CaImAn (Giovannucci et al. 2019) | Zenodo | DOI: 10.5281/zenodo.1659149 |

| Performance quantification data used to report timing and cost of analyses on NeuroCAAS (related to Table 1, Figure 8) | Zenodo | DOI: 10.5281/zenodo.6512118 |

| Raw data used for to test WFCI analysis. | Cold Spring Harbor Repository | DOI: 10.14224/1.38599 |

| Software and Algorithms | ||

| Source repository used to build analyses from blueprints. | Zenodo | DOI: 10.5281/zenodo.6512118 |

| Contributor repository used to help developers add analyses to NeuroCAAS. | Zenodo | DOI: 10.5281/zenodo.6512121 |

| Interface repository used to build the website www.neurocaas.org | Zenodo | DOI: 10.5281/zenodo.6512125 |

| Repository used to generate ensemble outputs from individually trained models | Zenodo | DOI: 10.5281/zenodo.6513057 |

9.2. Method Details

NeuroCAAS architecture specifics

The software supporting the NeuroCAAS platform has been divided into three separate Github repositories. The first, https://github.com/cunningham-lab/neurocaas is the main repository that hosts the Infrastructure-as-Code implementation of NeuroCAAS. We will refer to this repository as the source repo throughout this section. The source repo is supported by two additional repositories: https://github.com/cunningham-lab/neurocaas_contrib hosts contribution tools to assist in the development and creation of new analyses on NeuroCAAS, and https://github.com/jjhbriggs/neurocaas_frontend hosts the website interface to NeuroCAAS. We will refer to these as the contrib repo and the interface repo respectively throughout this section. We discuss the relationship between these repositories in the following section, and in Figure S5.

Source Repo

Section 2.1 gives an overview of how NeuroCAAS encodes individual analyses into blueprints, and deploys them into full infrastructure stacks, following the principle of Infrastructure-as-Code (IaC). This section presents blueprints in more depth and show how the whole NeuroCAAS platform can be managed through IaC, encoding features such as user data storage, credentials, and logging infrastructure in code documents analogous to analysis blueprints as well. All of these code documents, together with code to deploy them, make up NeuroCAAS ‘s source repo. There is a one-to-one correspondence between NeuroCAAS ‘s source repo and infrastructure components: deploying the source repo provides total coverage of all the infrastructure needed to analyze data on NeuroCAAS (Figure S5, bottom). Although much of the code to translate blueprints and other infrastructure code necessarily references AWS resources, NeuroCAAS blueprints and other IaC artefacts are not tied to AWS, except in their reliance on particular hardware instance configurations. We can potentially recreate these hardware instances in other public clouds, using existing tools to support cloud-agnostic IaC approaches, as suggested by Brikman (2019). Doing so will further improve the scale and robustness of our platform.

Within the source repo, each NeuroCAAS blueprint (see Figure S2 for an example) is formatted as a JSON document with predefined fields. The expected values for most of these fields identify a particular cloud resource, such as the ID for an immutable analysis environment, or a hardware identifier to specify an instance within the resource bank (Lambda.LambdaConfig.AMI and Lambda.LambdaConfig.INSTANCE_TYPE in Figure S2, respectively). Upon deployment, these fields determine the creation of certain cloud resources: AWS EC2 Amazon Machine Images in the case of IAE IDs, and AWS EC2 Instances in the case of hardware identifiers. One notable exception is the protocol specifying behavior of a corresponding NeuroCAAS job manager (Lambda.CodeUri and Lambda.Handler in Figure S2). Instead of identifying a particular cloud resource, each blueprint’s protocol is a python module within the source repo that contains functions to execute tasks on the cloud in response to user input. The ability to specify protocols in python allows NeuroCAAS to support the complex workflows shown in Figure 5. Job managers are deployed from these protocols as AWS Lambda functions that execute the protocol code for a particular analysis whenever users submit data and parameters. Since all parts of NeuroCAAS workflow can be managed with python code (i.e. through a programmatic interface, job manager protocol, or within the IAE itself), external workflow management tools can easily be integrated to analyses on a case-by-case basis in order to deploy the scale of NeuroCAAS in parallel or sequentially, as needed.

Another major aspect of NeuroCAAS ‘s source repo that is not discussed in §2 is the management of individual users. NeuroCAAS applies the same IaC principles to user creation and management as it does to individual analyses. To add a new user to the platform, NeuroCAAS first creates a corresponding user profile in the source repo (Figure S5, right), that specifies user budgets, creates private data storage space, generates their (encrypted) security credentials, and identifies other users who they collaborate with. Users resources are created using the AWS Identity and Accesss Management (IAM) service.

Contrib and Interface Repos.

Given only the NeuroCAAS source repo, analyses can be hosted on the NeuroCAAS platform and new users can be added to the platform simply by deploying the relevant code documents. However, interacting directly with resources provided by the NeuroCAAS source repo can be challenging for both analysis users and developers. For developers, the steps required to fill in a new analysis blueprint may not be clear, and the scripting steps necessary within an IAE to retrieve user data and parameters requires knowledge of specific resources on the Amazon Web Services cloud. For users, the NeuroCAAS source repo on its own does not support an intuitive interface or analysis documentation, requiring users to interact with NeuroCAAS through generic cloud storage browsers, forcing them to engage in tedious tasks like navigating file storage and downloading logs before examining them. Collectively, these tasks lower the accessibility that is a key part of NeuroCAAS ‘s intended design. To handle these challenges, we created two additional code repositories, the NeuroCAAS contrib repo and interface repo, for developers and users, respectively.