Abstract

We consider modeling the dependence of sensitivity and specificity on the disease prevalence in diagnostic accuracy studies. Many meta-analyses compare test accuracy across studies and fail to incorporate the possible connection between the accuracy measures and the prevalence. We propose a Pearson type correlation coefficient and an estimating equation–based regression framework to help understand such a practical dependence. The results we derive may then be used to better interpret the results from meta-analyses. In the biomedical examples analyzed in this paper, the diagnostic accuracy of biomarkers are shown to be associated with prevalence, providing insights into the utility of these biomarkers in low- and high-prevalence populations.

Keywords: Correlation analysis, Diagnostic accuracy, Disease prevalence, Estimating equation, Meta-analysis

1. INTRODUCTION

Sensitivity is the probability for a diagnostic test to correctly identify diseased subjects, while specificity is the probability to correctly identify healthy subjects (Zhou and others, 2002, Pepe 2003, Kranowski and Hand, 2009). These two components have long been regarded as the intrinsic accuracy measures of a test independent of the prevalence of the disease (p). That is, the accuracy remains constant across different populations with different disease prevalence. However, in practice such a belief may not be correct. It is sometimes useful to consider the dependence of these accuracy measures on p. In the following, for ease of presentation, we focus on sensitivity, although the results given in this paper are equally applicable to specificity.

The independence of the sensitivity and prevalence has been scrutinized, with many empirical studies suggesting that the disease prevalence may impact the sensitivity. See Brenner and Gefeller (1997) for a comprehensive historical review. Prevalence could influence analyses of accuracy at three levels. First, low prevalence of disease might lead to small number of diseased subjects (denoted by N) included in a study sample. Thus, the estimator might have a low precision relative to an estimator obtained from a population with high disease prevalence. Second, as argued in Brenner and Gefeller (1997), the disease prevalence might potentially affect the number of correctly identified diseased subjects (denoted by X). One might assume that the occurrence of the disease is linked to the event that some underlying continuous variable crosses a threshold and that such a latent variable might also determine the binary classification of the diagnostic test. Such dependence might be explained by the disease spectrum being linked to prevalence, for example higher percentages of severe cases in populations with higher prevalences. Third, a human factor might play a role. If prevalence is high, as in a clinical setting, the medical staff who is judging an image might be more aware that the patient might be diseased than in a screening setting with few diseased, but thousands of disease-free individuals. This would result in a positive correlation between prevalence and sensitivity and a negative correlation between prevalence and specificity.

In a given population from a particular study, the distribution of X given N is binomial with probability of success equal to a fixed se. The estimator of sensitivity is naturally . However, as we examine sensitivity across multiple studies corresponding to multiple populations, we may view both sensitivity and p as random variables which may be associated. In this paper, we propose methods for understanding how prevalence influences sensitivity. We exclude consideration of case–control studies since they cannot provide the estimation of prevalence.

Meta-analysis provides a convenient avenue for this objective since it synthesizes the results of many studies pertaining to the performance of a diagnostic test. There is a rich literature on statistical methodology for meta-analysis of diagnostic accuracy measures. Moses and others (1993) introduced a useful summary measure to combine multiple studies by plotting the sensitivity against specificity in a single graph. Rutter and Gatsonis (1995, 2001) proposed the regression modeling approach for the meta-analysis. Lijmer and others (2002) further extended the regression method to control confounding factors. Dukic and Gatsonis (2003) examined the role of different thresholds used by different studies. Reitsma and others (2005) analyzed bivariate distribution of sensitivity and specificity across populations via a parametric random-effects approach. Harbord and others (2007) provide a recent review of progress of meta-analysis methodology. However, none of these earlier authors considered modeling the sensitivity as a function of the prevalence. Chu and others (2009) considered joint models for sensitivity and prevalence using a special random-effects structure. Such analyses permit an indirect assessment of associations between sensitivity and prevalence, but do not address these issues using standard tools like correlation and regression analysis. Our goal is to directly apply standard association methods to analyze the bivariate relationships and to develop a rigorous framework for how such methods perform in different scenarios.

The methods developed in this paper are motivated by data commonly encountered in biomedical meta-analyses. We consider two recent examples that are typical of such research. The first, Kang and others (2010), evaluates biomarkers for ovarian cancer, while the second, Kwee and Kwee (2009), assesses the use of imaging for cancer detection. In these publications, it was noted that sensitivities and specificities varied across studies, but the influence of prevalence on such heterogeneity was not explored. Since these systematic reviews involved studies from populations with very different disease spectra, it seems likely that the prevalence of the disease may help explain these observed differences in diagnostic accuracies. The methods we propose will be used to directly evaluate the impact of prevalence in these meta-analyses.

To assess the dependence of diagnostic accuracy measures on the prevalence, we have to resolve several obstacles. First, the true sensitivity (se), a fixed parameter for any given population, is usually unknown and has to be estimated by . Replacing se with could influence the study of its dependence on the prevalence due to the estimation uncertainty. The other difficulty is regarding the unknown prevalence value. In many cross-sectional or cohort studies, it may be possible to estimate p using the observed data and an assessment of the effect of p on se may be carried out using in place of the true unknown p. Using such an estimated prevalence introduces another source of variability for the analysis. We develop results that demonstrate how the estimation uncertainty of these population-specific parameters influences the inferential procedures.

2. SETTINGS AND KNOWN RESULTS

Suppose se∈Θ⊂[0,1] is the sensitivity for the test, on average, across all different populations. The quantity se may take random value Si in the ith study population, whereas the prevalence of the disease for that population is pi∈𝒫⊂(0,1),i = 1,⋯,n. One may regard se as the expectation of the n i.i.d. Si's. The prevalence pi might vary across studies and thus are random variables across populations. For the n studies, we assume pi's are i.i.d. with a probability distribution Π, with μp = ∫pdΠ(p) denoting the mean of pi.

When Si and pi are not known a priori we have to estimate them from the observed samples. Denote Ni and Mi as the number of observations used for estimation in study i, respectively. In the simplest design, Mi is the sample size of the ith study and Ni is number of diseased individuals included in this study. We notice that usually a case–control study selects subjects according to their disease status and thus does not provide a valid estimate for the prevalence. Since our primary goal is to show the relationship between the two important population-specific parameters across different studies, we exclude any such kind of studies where the estimates for either Si or pi is not available.

We denote to be the estimated sensitivity of the ith study, usually computed as the fraction of positive test recipients among all cases. We use the estimated sensitivity to replace Si as needed. We assume that such an estimator is conditionally unbiased in that for the ith study. Such a property can be verified easily for the proportion estimator introduced in Section 1. For such estimator, we also have .

If the ith study is a cross-sectional or cohort study with total sample size Mi, can be obtained easily as Ni/Mi, which is the fraction of total number of diseased subjects among the total number of subjects examined. Sometimes one may acquire more complicated estimates via statistical modeling (Walter and Irwig, 1988) or combining information from similar observational studies for the particular study population. We assume that 's are independent and that each converges in probability to the true pi as the sample size Mi based on which is constructed tends to infinity. Denote the variance of this estimator as . For a cohort or nested case–control study, λi = pi(1 − pi).

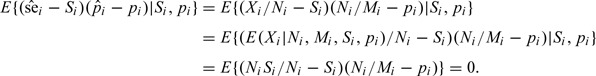

We argue that and are conditionally uncorrelated. This property will be proven by the following equations. Assuming that they are based on the same sample, as with cohort or nested case–control studies, we notice for the ith study (thus Si and pi fixed)

|

Such a fact often leads people to ignore any further examination of the relationship between Si and pi. It only claims the conditional uncorrelatedness of two estimators and this conditional uncorrelatedness does not imply their independence. That is, it says nothing about the dependence between Si and pi since these two quantities are treated as fixed parameters in the above equations.

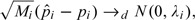

Inference for individual pi and Si can be made by considering the conditional asymptotical distributions of their estimators. Conditional on pi, as Mi→∞, we have

|

(2.1) |

and conditional on Si, as Ni→∞

|

(2.2) |

In this paper, we assume that asymptotically Mi − 1 = Op(Ni − 1), where X = Op(Y) means X = RY and R is bounded in probability (chapter 2, van der Vaart, 1998). Such a requirement is usually satisfied when the estimations of disease prevalence and sensitivity are based on the same sample.

Of course, it is well known that unconditionally

|

Depending on the choice of estimation methods, the unconditional asymptotic distribution of may assume a complicated structure, being a mixture of normal distributions with an overall mean equal to μp (chapter 17, van der Vaart, 1998).

The purpose of this paper was to explore the relationship between pi and Si across different studies. In Section 3, we study a simple correlation measure, while in Section 4, we consider linear/nonlinear regression for predicting sensitivity using disease prevalence.

3. CORRELATION ANALYSIS

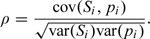

A measure of correlation between the random Si and pi across populations is

|

(3.1) |

Such a Pearson type correlation coefficient is easy to understand and capable of assessing the linear dependence between Si and pi. It is important to recognize that the correlation of Si and pi is defined with respect to their unconditional joint distribution, with means se and μp. In practice, we estimate (3.3) by

|

(3.2) |

where the weights wi > 0 are chosen to represent the fraction of each specific study population in the overall population, and are the sample means of and , respectively. The weights may be chosen to be the inverses of the study variances and can be normed such that ∑i = 1nwi = 1. A good choice of weights may reduce the estimation variability of the correlation coefficient. In cases where Si's or pi's are known, we plug-in their exact values in (3.4) instead of estimates.

When all study populations are treated with equal emphasis, we may put wi = 1 uniformly across i. In general, we assume wi's to be i.i.d. bounded positive variables with a finite expectation μw and independent of and pi. However, we realize that there are some situations that this assumption is violated when the investigators tend to place higher emphasis on studies with larger estimated prevalence values. The consistency of the estimate (3.4) is unaffected but the asymptotic distribution might be more complicated than what we report below.

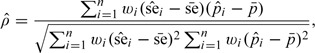

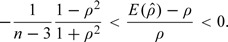

The exact mean of is not equal to ρ. Even in the most ideal case where pi and Si are known, it is shown in Meng (2005) that for a finite sample size n ≥ 4 and equal weights,

|

The absolute relative bias is bounded by n − 1 and therefore usually of no practical concern for a relatively large sample. An unbiased estimator can be constructed by following Olkin and Pratt (1958). The strong consistency of (3.4) is given in the following theorem.

THEOREM 3.1

Suppose E(Si2) > 0 and E(pi2) > 0. If n→∞, Mi→∞, and Ni→∞, then the estimate (3.4) converges to (3.3) with probability 1.

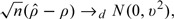

The proof of this theorem is provided in the supplementary materials (available at Biostatistics online). Next, by using multivariate central limit theorem on n pairs of independent observations , we can show that under the same conditions in Theorem 3.3,

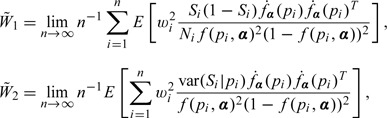

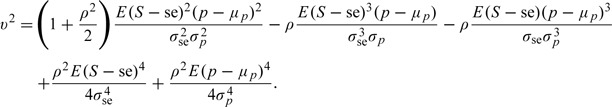

|

where

|

(3.3) |

We note that μp is the mean prevalence across populations, not the mean prevalence in the combined population, which may differ, depending on the relative contributions of each population to the combined population. The quantity S is a generic notation for a random sensitivity. The standard deviation terms involved in the expression above are defined according to σse = {E(S − se)2}1/2 and σp = {E(p − μp)2}1/2.

One should recognize that the variance v2 is not influenced by uncertainty in estimation of pi and Si. That is, replacing these quantities with their estimators does not influence the variance (3.5). The only quantities influencing this variance are moments from the underlying joint distribution of pi and Si. Additional details regarding these results are given in the supplementary materials (available at Biostatistics online). This differs from results in Section 4 when fitting regression models relating pi to Si.

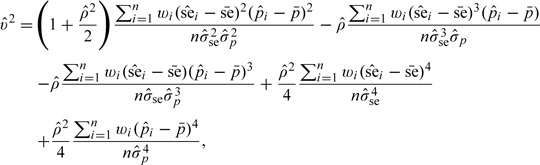

The variance can be estimated by plugging in sample versions of various moments. The empirical estimator of v2 is

|

(3.4) |

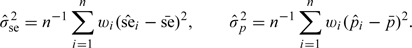

where

|

The estimator is consistent to the true variance by applying the law of large number.

4. REGRESSION ANALYSIS

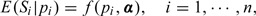

We note that the correlation coefficient can only measure the linear dependence and therefore may be rather limited. A finer analysis for the relationship between Si and pi can be carried out with a formal regression. We consider the following mean model

|

(4.1) |

where α∈𝒜⊂ℝk is an unknown parameter and f is a known link function. We are interested in finding the regression parameter α to determine the relationship between population-specific sensitivity and the prevalence of the population. We assume that variance var(Si|pi) exists for all i in this paper.

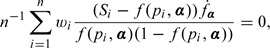

We seek to estimate the regression parameter by solving the following quasi-likelihood type estimating equations:

|

(4.2) |

where is the derivative of f(pi,α) with respect to α. Since pi and Si are generally unknown in practice, their estimators are substituted in the estimating equations. Denote the solution of the estimating equations (4.8) to be .

Depending on the form of f, we may choose either linear or nonlinear optimization programs to find the numerical solution . Provided with the first- and second-order gradient information, most solvers in common statistical computing packages such as R or Matlab can converge quickly to the optimum.

In order to show that the estimator is consistent, we have to show that (4.8) is asymptotically unbiased. The proof for the following theorem is contained in the Appendix.

THEOREM 4.1

Suppose the parameter space 𝒜 is compact and is continuous and bounded. If n→∞ and Mi→∞, we have that the solution exists and converges to the true parameters α with probability 1.

When pi and Si are known, we only need n to go to infinity in the above theorem. The additional requirement for Mi→∞ is necessary when pi are estimated by . Basically we need to be close enough to the real pi in the estimation equation so that the regression parameters can be estimated consistently. Otherwise there will be nonignorable biases in the estimates. We do not necessarily need Ni→∞ in practice even though are used to replace Si. This occurs because of the finitesample unbiasedness property of , as shown in the proof in the supplementary materials (available at Biostatistics online).

The following theorem provides the asymptotic distribution property of the estimates.

THEOREM 4.2

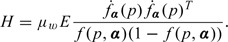

Assume the same condition as Theorem 4.1, further assume that , the second derivative of f with respect to α, is continuous and bounded. Suppose in probability that . If the matrix is nonsingular, then the sequence

is asymptotically normal with mean zero and covariance matrix . where

and

When pi and Si are known, the covariance matrix reduces to . In the above results, reflects the estimation variation for the conditional analysis of Si on pi. corresponds to the additional variation due to replacing Si with its estimator . If at least one Ni is considered far from being infinity, does not vanish and affects the overall variance even if n tends to infinity. The covariance matrix can be approximated by the empirical estimator in practice.

5. SIMULATION STUDIES

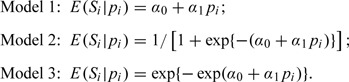

To evaluate the performance of the proposed methods, we conduct simulation studies in this section. We set the number of studies n = 15 and 30 and generated pi from a uniform distribution on [0.1,0.9]. For each study, we fix the total sample size Mi = 200 and simulated the number of cases Ni from a binomial distribution with parameters Mi and pi. We then considered the following three models for sensitivity:

|

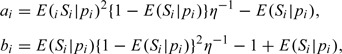

They represent three typical regression models: linear regression, logit link binary regression and complementary log–log link binary regression. The sensitivity Si is generated from a Beta distribution (Gupta and Nadarajah, 2004) with two parameters given by

|

where the variance parameter η is chosen so that the Monte Carlo signal-to-noise ratio is roughly 4:1. One can easily verify that the mean of this beta distribution is E(Si|pi). For each study, we simulated the number of true-positive Xi from a binomial distribution with parameters Ni and Si. A total number of 1000 simulations were conducted.

We summarized in Table 1 the results regarding estimation of the correlation coefficient and α in the correctly specified regression model. These results include biases and empirical and model-based variances. Only results for the practically relevant case of unknown Si and pi are provided, in which and are utilized in the estimation. The correlation estimates have a slight downward bias. In fact, linear correlation is not a sensible measure for Models 2 and 3 since the underlying relationship is not linear. The biases decrease as we enlarge the sample size n. One can see that all the regression parameters and standard errors can be consistently estimated with the proposed methods. Therefore for a study with a small or moderate sample size the regression model approach seems to outperform the simple correlation coefficient since the regression parameters can be estimated more accurately, when the model is correctly specified.

Table 1.

Estimation of correlation and regression parameters under three models over 1000 simulations

| Model | Parameter | True | n | Est. | SD | SE |

| 1 | ρ | 0.85 | 15 | 0.78 | 0.108 | 0.114 |

| 30 | 0.82 | 0.082 | 0.086 | |||

| α0 | 0.5 | 15 | 0.49 | 0.004 | 0.003 | |

| 30 | 0.50 | 0.002 | 0.002 | |||

| α1 | 0.5 | 15 | 0.50 | 0.014 | 0.013 | |

| 30 | 0.50 | 0.010 | 0.009 | |||

| 2 | ρ | 0.88 | 15 | 0.77 | 0.13 | 0.15 |

| 30 | 0.83 | 0.11 | 0.12 | |||

| α0 | 1 | 15 | 0.89 | 0.69 | 0.75 | |

| 30 | 0.95 | 0.55 | 0.60 | |||

| α1 | 3 | 15 | 3.09 | 3.20 | 3.54 | |

| 30 | 2.98 | 2.15 | 2.26 | |||

| 3 | ρ | 0.94 | 15 | 0.85 | 0.12 | 0.10 |

| 30 | 0.90 | 0.095 | 0.093 | |||

| α0 | − 0.5 | 15 | − 0.54 | 0.035 | 0.038 | |

| 30 | − 0.48 | 0.022 | 0.026 | |||

| α1 | − 1.5 | 15 | − 1.46 | 0.12 | 0.12 | |

| 30 | − 1.51 | 0.092 | 0.097 |

True, fixed true parameter values; n, sample size; Est., sample means of the estimated parameters; SD, empirical standard deviations of the parameter estimates; SE, means of the estimated standard errors.

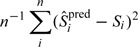

To understand the impact of model misspecification, we next consider fitting a model in which f may be misspecified. That is, the sensitivity is simulated from Model i, while we use Model j to estimate parameters (i,j = 1,2,3;i¬j). We investigate how the predicted sensitivity based on the wrong model might differ from the true sensitivity. The results for the prediction error

|

are summarized in Table 2. It is noted that when linear model (Model 1) was mistakenly chosen for nonlinearly generated data (Models 2 and 3), the prediction errors were somewhat smaller. Nonetheless, in all settings, the prediction errors for misspecified models are close to the prediction errors for correctly specified models. The procedures are thus to some degree insensitive to the model choices. A similar phenomenon has been witnessed in the analysis of experimental design (chapter 12, Wu and Hamada, 2000) that for a small sample size n usually there may be little qualitative difference between the use of various models or parameterizations. In practice, since we cannot evaluate the true prediction errors due to the lack of knowledge of Si, we choose to use a leave-one-out cross-validation (CV) procedure to estimate the prediction error and select among candidate models. We may replace with the predicted sensitivity for the ith study based on a model built with data excluding the ith study and replace Si with .

Table 2.

Prediction errors for three model specifications and the percentages of the model being selected by CV (in parentheses)

| Actual model | n | Specified model |

||

| 1 | 2 | 3 | ||

| 1 | 15 | 0.0062 | 0.0068 | 0.0069 |

| (65.0) | (16.5) | (18.5) | ||

| 30 | 0.0059 | 0.0065 | 0.0067 | |

| (72.8) | (15.3) | (11.9) | ||

| 2 | 15 | 0.0029 | 0.0029 | 0.0032 |

| (15.7) | (61.1) | (23.2) | ||

| 30 | 0.0026 | 0.0025 | 0.0026 | |

| (8.3) | (72.5) | (19.2) | ||

| 3 | 15 | 0.0033 | 0.0033 | 0.0032 |

| (29.4) | (19.3) | (51.3) | ||

| 30 | 0.0028 | 0.0028 | 0.0027 | |

| (19.1) | (12.6) | (69.3) | ||

We thus also report the frequency that CV correctly selects the true model that generates the data in the simulations. The results are reported in the brackets in Table 2, suggesting that CV indeed pinpoints the correct model with high probability. The performance of CV improves as sample size increases.

6. EXAMPLES

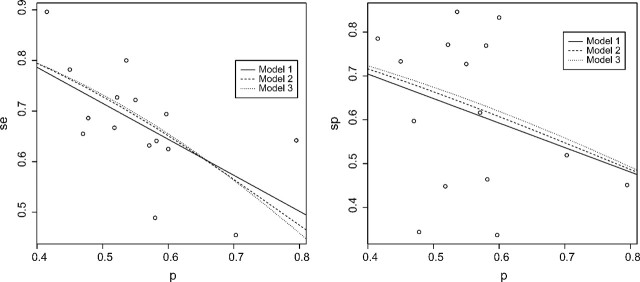

Example 1: We consider a meta-analysis for evaluating the diagnostic performance of preoperative CA-125 levels in the assessment of suboptimal cytoreduction in ovarian cancer. Kang and others (2010) reviewed 15 prospective studies that reported sensitivities, specificities, and prevalences. These data are included in Table 1 of the supplementary materials (available at Biostatistics online). The scatter plots of sensitivity and specificity versus prevalence are given in Figure 1. One observes that the specificities of the test are generally quite high regardless of prevalence, while the sensitivities show a decreasing trend.

Fig. 1.

Fitted models for sensitivity (left panel) and specificity (right panel) for Example 1.

The Pearson correlation coefficient between the estimated sensitivity and prevalence is − 0.62 (95% confidence interval [ − 0.86 to − 0.16]), while that between the estimated specificity and prevalence is − 0.30 (95% confidence interval [ − 0.70 to 0.24]). There is no significant linear correlation between specificity and prevalence but a rather strong negative correlation between sensitivity and prevalence, which is statistically significant. To better understand the dependence of accuracy measures on prevalence, we conducted a regression analysis.

For both sensitivity and specificity, we explored using the identity, logit, and complementary log–log link functions (Models 1–3 given in Section 5) to model the association with the prevalence. The estimated parameters and variance estimates are given in Table 3. The fitted response curves were superimposed in the scatter plots of the observed sensitivity–specificity versus the prevalence in Figure 1. For sensitivity, for all three models, the coefficients α1 are significant in Table 3. These results indicate a strong decreasing relationship between se and prevalence. The CVs for predicting the sensitivity are 0.0125, 0.0145, and 0.0171 for the three models, respectively. The simple linear model may be the most appropriate choice over the observed range of prevalences, although the CV values are fairly small for all models. For specificity, we found no dependence on prevalence since in Table 3 none of the coefficients α1 was significant. The CVs for predicting the specificity are 0.0325, 0.0329, and 0.0330 for the three models, respectively. These values are quite large in comparison with those for specificity and indicate that the three models perform similarly, as one would expect if prevalence and specificity are not strongly associated.

Table 3.

Fitted results for two examples

| Model 1 |

Model 2 |

Model 3 |

|||||||

| Est. | SE | P value | Est. | SE | P value | Est. | SE | P value | |

| Example 1 | |||||||||

| se | |||||||||

| α0 | 1.07 | 0.14 | < 0.0001 | 2.80 | 0.70 | 0.0016 | − 2.69 | 0.59 | 0.005 |

| α1 | − 0.71 | 0.24 | 0.0131 | − 3.63 | 1.25 | 0.0121 | 3.05 | 1.05 | 0.0125 |

| sp | |||||||||

| α0 | 0.92 | 0.27 | 0.0048 | 1.90 | 1.24 | 0.1500 | − 1.90 | 0.98 | 0.0739 |

| α1 | − 0.55 | 0.48 | 0.2698 | − 2.44 | 2.20 | 0.286 | 1.95 | 1.73 | 0.2804 |

| Example 2 | |||||||||

| se | |||||||||

| α0 | 0.68 | 0.10 | < .0001 | 0.81 | 1.22 | 0.5290 | − 1.01 | 1.18 | 0.4110 |

| α1 | 0.45 | 0.24 | 0.0846 | 4.03 | 2.32 | 0.0830 | − 3.72 | 2.38 | 0.0970 |

| sp | |||||||||

| α0 | 0.65 | 0.05 | < .0001 | − 0.79 | 0.63 | 0.2309 | 0.59 | 0.60 | 0.3446 |

| α1 | 0.48 | 0.12 | 0.0024 | 7.13 | 1.42 | 0.0005 | − 6.84 | 1.37 | 0.0005 |

Est., estimated regression coefficient; SE, standard error for the estimated parameter; P value, corresponding p value for testing whether the parameter is different from zero.

In summary, by aggregating evidence from multiple studies, we can conclude that sensitivity and specificity have different relationships with disease prevalence. The sensitivity is clearly decreasing as prevalence increases, with a simple linear model providing a reasonable approximation to this relationship, as evidenced in Figure 1. Results for specificity are consistent with the linear correlation analysis—the relationship between specificity and prevalence is weak, even when considering nonlinear association models.

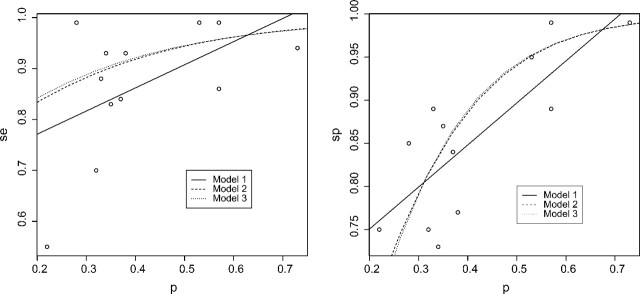

Example 2: We consider another meta-analysis for the accuracy of combined 18F-fluoro-2-deoxyglucose positron emission tomography/computed tomography (FDF-PET/CT) in the detection of primary tumors where 11 prospective studies were investigated (Kwee and Kwee, 2009). The data for sensitivities, specificities, and prevalences from this study are included in Table 2 of the supplementary materials (available at Biostatistics online). The scatter plots of sensitivity and specificity versus prevalence are given in Figure 2 along with the fitted superimposed regression curves from the three models.

Fig. 2.

Fitted models for sensitivity (left panel) and specificity (right panel) for Example 2.

The Pearson correlation coefficient between the estimated sensitivity and prevalence is 0.52 (95% confidence interval [ − 0.08 to 0.84]), while that between the estimated specificity and prevalence is 0.79 (95% confidence interval [0.39–0.94]). The correlation between sensitivity and prevalence is moderately strong but not statistically significant, while that between specificity and prevalence is quite strong and statistically significant.

The estimated regression coefficients under three different models are also given in Table 3. For sensitivity, the coefficients α1 are significant at level 0.10 but not significant at level 0.05. There is only a moderate dependence between sensitivity and prevalence. The CVs for predicting the sensitivity are 0.0183, 0.0180, and 0.0182 for the three models. For specificity, we found the slope coefficients α1 are significant under three models. The p values are much more significant for logistic and complementary log–log link models than the simple linear model. The models with these more complicated links are thus more likely to detect the association between the specificity and the prevalence. The CVs for predicting the specificity are 0.0038, 0.0032, and 0.0033 for the three models, respectively. The logistic model seems superior, particulary relative to the linear model, confirming the nonlinear pattern in Figure 2. One may conclude that the specificity of the test tends to increase as the prevalence increases and that the increasing trend may be well described using the logistic link model.

7. DISCUSSION

Our focus has been on the dependence of the sensitivity and specificity on the prevalence. There are other intrinsic accuracy measures such as the receiver operating characteristic (ROC) curve and the area under the ROC curve (AUC) for a diagnostic test. Since ROC and AUC are derived from the sensitivity and specificity, their dependence on the prevalence can be inferred subsequently based on the known results for the sensitivity and specificity.

We propose our methodology mainly for studies where an estimate of pi is available. In a retrospective study, the prevalence cannot be directly estimated. Lack of an estimator muddies the waters, especially when external or historical information for the prevalence is unattainable for the study. A potential statistical framework is to jointly model the distribution of Si and pi, which may enable the use of studies without .

We have analyzed the prevalence dependence separately for sensitivity and specificity. It has been advised in Rutter and Gatsonis (1995, 2001) and Reitsma and others (2005) that a more coherent analysis might involve analyzing the dependence of sensitivity and specificity on the prevalence jointly. A limitation of existing joint modeling approaches is that they may not provide simple assessments of the association between diagnostic accuracy and prevalence. Random effects models have been studied (Arends and others, 2008; Chu and others, 2009; Reitsma and others, 2005), with the dependencies implicitly captured via latent variables. In general, data analysts who are interested in assessing the prevalence dependence of diagnostic accuracy measures may find the simplicity of interpretation and the ease of implementation of the proposed methods in the current paper. Another practical concern for the joint model is the potential model misspecification. If the joint model is incorrectly specified, nonignorable bias might enter the model-based parameter estimates.

SUPPLEMENTARY MATERIALS

Supplementary material is available at http://biostatistics.oxfordjournals.org.

FUNDING

Academic Research Funding (R-155-000-109-112) and National Medical Research Council (NMRC/NIG/0054/2009).

Supplementary Material

References

- Arends LR, Hamza TH, van Houwelingen JC, Heijenbrok-Kal MH, Hunink MGM, Stijnen T. Bivariate random effects meta-analysis of ROC curves. Medical Decision Making. 2008;28:621–638. doi: 10.1177/0272989X08319957. [DOI] [PubMed] [Google Scholar]

- Brenner H, Gefeller O. Variation of sensitivity, specificity, likelihood ratios and predictive values with disease prevalence. Statistics in Medicine. 1997;16:981–991. doi: 10.1002/(sici)1097-0258(19970515)16:9<981::aid-sim510>3.0.co;2-n. [DOI] [PubMed] [Google Scholar]

- Chu H, Nie L, Cole SR, Poole C. Meta-analysis of diagnostic accuracy studies accounting for disease prevalence: alternative parameterizations and model selection. Statistics in Medicine. 2009;28:2384–2399. doi: 10.1002/sim.3627. [DOI] [PubMed] [Google Scholar]

- Dukic V, Gatsonis C. Meta-analysis of diagnostic test accuracy assessment studies with varying number of thresholds. Biometrics. 2003;59:936–946. doi: 10.1111/j.0006-341x.2003.00108.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gupta AK, Nadarajah S. Handbook of Beta Distribution and Its Applications. New York: Marcel Dekker, Inc; 2004. [Google Scholar]

- Harbord RM, Deeks JJ, Egger M, Whiting P, Sterne JA. A unification of models for meta-analysis of diagnostic accuracy studies. Biostatistics. 2007;8:239–251. doi: 10.1093/biostatistics/kxl004. [DOI] [PubMed] [Google Scholar]

- Kang S, Kim TJ, Nam BH, Seo SS, Kim BG, Bae DS, Park SY. Preoperative serum CA-125 levels and risk of suboptimal cytoreduction in ovarian cancer: a meta-analysis. Journal of Surgical Oncology. 2010;101:13–17. doi: 10.1002/jso.21398. [DOI] [PubMed] [Google Scholar]

- Kranowski WJ, Hand DJ. ROC Curves for Continuous Data. Boca Raton, FL: CRC Press; 2009. [Google Scholar]

- Kwee TC, Kwee RM. Combined FDG-PET/CT for the detection of unknown primary tumors: systematic review and meta-analysis. European Radiology. 2009;19:731–744. doi: 10.1007/s00330-008-1194-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lijmer JG, Bossuyt PM, Heisterkamp SH. Exploring sources of heterogeneity in systematic reviews of diagnostic tests. Statistics in Medicine. 2002;21:1525–1537. doi: 10.1002/sim.1185. [DOI] [PubMed] [Google Scholar]

- Meng X-L. From unit root to Stein's estimator to Fisher's k statistics: if you have a moment, I can tell you more. Statistical Science. 2005;20:141–162. [Google Scholar]

- Moses LE, Shapiro D, Littenberg B. Combining independent studies of a diagnostic test into a summary ROC curve: data-analytic approaches and some additional considerations. Statistics in Medicine. 1993;12:1293–1316. doi: 10.1002/sim.4780121403. [DOI] [PubMed] [Google Scholar]

- Olkin I, Pratt JW. Unbiased Estimation of Certain Correlation Coefficients. Annals of Mathematical Statistics. 1958;29:201–211. [Google Scholar]

- Pepe MS. The Statistical Evaluation of Medical Tests for Classification and Prediction. Oxford: Oxford University Press; 2003. [Google Scholar]

- Reitsma JB, Glas AS, Rutjes AW, Scholten RJ, Bossuyt PM, Zwinderman AH. Bivariate analysis of sensitivity and specificity produces informative summary measures in diagnostic review. Journal of Clinical Epidemiology. 2005;58:982–990. doi: 10.1016/j.jclinepi.2005.02.022. [DOI] [PubMed] [Google Scholar]

- Rutter CM, Gatsonis CA. Regression methods for meta-analysis of diagnostic test data. Academic Radiology. 1995;2:S48–56. [PubMed] [Google Scholar]

- Rutter CM, Gatsonis CA. A hierarchical regression approach to meta-analysis of diagnostic test accuracy evaluators. Statistics in Medicine. 2001;20:2865–2884. doi: 10.1002/sim.942. [DOI] [PubMed] [Google Scholar]

- van der Vaart AW. Asymptotic Statistics. Cambridge: Cambridge University Press; 1998. [Google Scholar]

- Walter SD, Irwig LM. Estimation of test error rates, disease prevalence and relative risk from misclassified data: a review. Journal of Clinical Epidemiology. 1988;41:923–937. doi: 10.1016/0895-4356(88)90110-2. [DOI] [PubMed] [Google Scholar]

- Wu CFJ, Hamada M. Experiments: Planning, Analysis, and Parameter Design Optimization. New York: John Wiley & Sons, Inc; 2000. [Google Scholar]

- Zhou XH, Obuchowski NA, McClish DK. Statistical Methods in Diagnostic Medicine. New York: Wiley; 2002. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.