Abstract

Motivation

Genomic data is frequently stored as segments or intervals. Because this data type is so common, interval-based comparisons are fundamental to genomic analysis. As the volume of available genomic data grows, developing efficient and scalable methods for searching interval data is necessary.

Results

We present a new data structure, the Augmented Interval List (AIList), to enumerate intersections between a query interval q and an interval set R. An AIList is constructed by first sorting R as a list by the interval start coordinate, then decomposing it into a few approximately flattened components (sublists), and then augmenting each sublist with the running maximum interval end. The query time for AIList is $O(\log_2 N + n + m)$, where n is the number of overlaps between R and q, N is the number of intervals in the set R and m is the average number of extra comparisons required to find the n overlaps. Tested on real genomic interval datasets, AIList code runs 5–18 times faster than standard high-performance code based on augmented interval-trees, nested containment lists or R-trees (BEDTools). For large datasets, the memory usage for AIList is 4–60% of other methods. The AIList data structure, therefore, provides a significantly improved fundamental operation for highly scalable genomic data analysis.

Availability and implementation

An implementation of the AIList data structure with both construction and search algorithms is available at http://ailist.databio.org.

Supplementary information

Supplementary data are available at Bioinformatics online.

1 Introduction

A genomic interval r is defined by the two coordinates that represent the start and end locations of a feature on a chromosome. The general interval search problem is defined as follows.

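Given a set of N intervals $R = \{r_i\}$ for $i = 1, \ldots, N$, and a query interval q, find the subset S of R whose members intersect q. If we define all intervals to be half-open, S can be represented as:

$$S(q) = \{\, r \in R \mid r.\mathrm{start} < q.\mathrm{end} \ \wedge\ r.\mathrm{end} > q.\mathrm{start} \,\}$$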

If the order of intervals in R remains the same when the elements are sorted based on interval start or based on interval end, then S can be computed using a single binary search followed by another n + 1 comparisons, where n is the number of overlaps between R and q. We refer to R in this case as being flat. The strategy of a binary search followed by sequential comparisons becomes sub-optimal when intervals in R possess a coverage or containment relationship, i.e. one interval covers or contains another interval in R. Such non-flat interval lists require extra comparisons beyond n + 1 after the binary search.
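The flat case can be illustrated with a minimal Python sketch. The function names `is_flat` and `search_flat` and the example intervals are hypothetical, chosen only for illustration; intervals are half-open `(start, end)` tuples.

```python
from bisect import bisect_left

def is_flat(ivs):
    """True if sorting by start also leaves the ends in non-decreasing order."""
    ivs = sorted(ivs)
    return all(a[1] <= b[1] for a, b in zip(ivs, ivs[1:]))

def search_flat(ivs, q_start, q_end):
    """Overlaps of [q_start, q_end) with a flat list sorted by start.

    Returns the hits and the number of end comparisons made after the
    binary search (n + 1 unless the scan runs off the front of the list).
    """
    starts = [s for s, _ in ivs]          # would be precomputed in practice
    t = bisect_left(starts, q_end) - 1    # right-most interval with start < q_end
    hits, comparisons = [], 0
    while t >= 0:
        comparisons += 1
        if ivs[t][1] <= q_start:          # ends ascend, so nothing further left overlaps
            break
        hits.append(ivs[t])
        t -= 1
    return hits, comparisons

# Hypothetical flat list: both starts and ends are ascending.
flat = [(1, 3), (2, 5), (4, 8), (7, 9), (10, 12)]
hits, comparisons = search_flat(flat, 4, 8)
```

Because the ends are ascending, the first non-overlapping interval met while stepping backwards terminates the scan, which is where the n + 1 count comes from.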

The interval search problem is fundamental to genomic data analysis (Giardine et al., 2005; Jalili et al., 2018; Layer et al., 2018; Li and Durbin, 2011) and several approaches have been developed to do this efficiently (Alekseyenko and Lee, 2007; Cormen et al., 2001; Kent et al., 2002; Neph et al., 2012; Quinlan and Hall, 2010; Richardson, 2006). Currently, the most popular data-structures are the nested containment list (NCList) by Alekseyenko and Lee (2007), the augmented interval-tree (AITree) by Cormen et al. (2001) and the R-tree by Kent et al. (2002). The design of these data-structures can be conceptualized as minimizing the additional comparisons in the search strategy we described earlier. NCList and AITree require $O(N \log_2 N)$ time to build the data-structures, and their query time complexities are $O(\log_2 N + n + m)$, where m is the average number of extra comparisons (comparisons that do not yield overlapping results) required to find the n overlaps. The R-tree has a complexity of $O(N)$ in construction and $O(n + m)$ in query. For genomic interval datasets, N is usually several orders of magnitude larger than n or m.

NCLists, AITrees and R-trees differ both in the time needed to build the data structure and in the time needed to search it. As shown in Alekseyenko and Lee (2007), both average construction time and search time can vary significantly among the methods in practice. The search time differences are determined by the extra comparison value m, which depends on the approach each algorithm takes: for NCList, many sublists may be involved in a query, which requires many extra binary searches; for AITree, one must compare all interval nodes marked by the augmenting value, not all of which intersect the query; and for R-tree, all intervals in an indicated bin are scanned, although many of these may not overlap the query.

In this paper, we present a new data structure, the Augmented Interval List (AIList). The AIList search algorithm reduces the number of extra comparisons (m) and thereby achieves better performance.

2 AIList data structure and query algorithm

2.1 A simple AIList and query algorithm

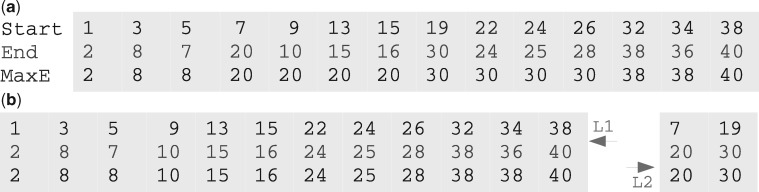

To begin, we sort R based on the interval start to create an interval list. We then augment the list with the running maximum end (MaxE) value, which stores for each interval the maximum end among that interval and all preceding intervals (Fig. 1a), to create the AIList. MaxE reflects the containment relationship among the intervals: because the second interval [3, 8) contains the third interval [5, 7), they have the same MaxE value (8); similarly, the fourth interval [7, 20) contains the next three intervals and they all have the same MaxE value (20). MaxE therefore indicates a list of containment groups. The coverage length of a containment group can be defined as the number of sequential identical MaxE values minus 1. A variation of this approach instead augments the list with the sorted end value, SortedE, which we detail in the Supplementary Material.

Fig. 1.

(a) An interval list sorted by Start and augmented with MaxE, the running maximum end counted from the first interval. (b) Interval list decomposition: intervals in the above list whose End value is larger than that of the three following intervals are put into a separate list. The two component lists L1 and L2 are both flattened. Two queries, [9, 12) and [17, 21), are discussed in the text.

Once an AIList is constructed, we seek to search it with a query interval q. To find the overlaps of a query with the AIList, we first do a binary search using the query interval end against the sorted list of database interval starts to find the index IE of the right-most interval r in the AIList where $r.\mathrm{start} < q.\mathrm{end}$. We then step backwards from IE to test for each interval whether $r.\mathrm{end} > q.\mathrm{start}$. Since MaxE is in ascending order, and $\mathrm{MaxE} \ge r.\mathrm{end}$ for every interval, when we find the first interval with $\mathrm{MaxE} \le q.\mathrm{start}$, we are guaranteed that all remaining intervals do not overlap the query and can be skipped. For example, if our query was [9, 12), we first binary search to find the index IE of the last interval that has $r.\mathrm{start} < 12$, which is the fifth interval [9, 10), with MaxE = 20. We know all intervals on the right side will not overlap the query since their $r.\mathrm{start} \ge 12$. We then step backwards to the fifth, then the fourth interval, but we can stop at the third interval, [5, 7) with MaxE = 8, since $8 \le 9$, which ensures that no further intervals on the left side will overlap the query. This is very efficient since we only checked one extra interval.

However, this strategy becomes less efficient in the presence of long coverage groups. For example, if the query was [17, 21), then we need to check six intervals: from the eighth, [19, 30) with MaxE = 30, back to the third, [5, 7) with MaxE = 8, which requires four extra comparisons. This example query is the worst case for this list. To mitigate the issue of containment, we next extend the AIList by decomposing the list.
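The two example queries can be traced with a minimal Python sketch of the undecomposed AIList. This is an illustration rather than the authors' C implementation: the function names are hypothetical, and only the second, third, fourth, fifth and eighth intervals of `EXAMPLE` are taken from the text; the remaining intervals are assumed fill-ins chosen to be consistent with the described behaviour.

```python
from bisect import bisect_left

def build_simple_ailist(intervals):
    """Sort half-open (start, end) intervals by start and add the running max end."""
    ivs = sorted(intervals)
    starts = [s for s, _ in ivs]
    max_e, running = [], float("-inf")
    for _, end in ivs:
        running = max(running, end)
        max_e.append(running)
    return ivs, starts, max_e

def query_simple(ailist, q_start, q_end):
    """Return (hits, visited): overlaps plus every interval examined, including
    the one whose MaxE triggers the stop."""
    ivs, starts, max_e = ailist
    t = bisect_left(starts, q_end) - 1       # index IE: right-most start < q_end
    hits, visited = [], []
    while t >= 0:
        visited.append(ivs[t])
        if max_e[t] <= q_start:              # nothing at or left of t can overlap
            break
        if ivs[t][1] > q_start:
            hits.append(ivs[t])
        t -= 1
    return hits, visited

# Intervals 2, 3, 4, 5 and 8 follow the text; the others are assumptions.
EXAMPLE = [(1, 2), (3, 8), (5, 7), (7, 20), (9, 10), (13, 15), (15, 16),
           (19, 30), (22, 24), (24, 25), (26, 28)]
ail = build_simple_ailist(EXAMPLE)
hits_a, visited_a = query_simple(ail, 9, 12)
hits_b, visited_b = query_simple(ail, 17, 21)
```

Under these assumptions the query [9, 12) visits three intervals for two overlaps, while [17, 21) visits six intervals for two overlaps, matching the one and four extra comparisons described above.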

2.2 Decomposition of an interval list

Because long coverage groups lead to extra comparisons, we seek to reduce the length of these groups. We achieve this by extracting containing intervals into a second list. In the simple case shown in Figure 1a, we can define the coverage length len of a list interval i as the number of the immediately following intervals (i + 1, i + 2, …) that are covered by interval i; so in Figure 1a, len(2) = 1 and len(4) = 3, for example. Then, we can extract all intervals that have coverage length larger than or equal to a criterion (minimum coverage length MinL). In the above example, we can set MinL = 3 to decompose the list into two sublists, L1 and L2 (Fig. 1b). Then we add MaxE to each of L1 and L2 independently and attach L2 to the end of L1 to form an improved AIList with the same size as the original list. The start of each sublist in the new AIList is maintained in a header list hSub. Now in L1 there are only two containment groups, both of length 1, and there is no containment in L2.

For a more complex dataset, the L2 sublist may in turn have long containments, in which case the decomposition is repeated recursively as long as L2 is decomposable. The practical implementation of this decomposition may need to relax our definition of the coverage length len to include more general cases. For example, if interval i does not contain interval i + 1, but it contains a long run of the intervals that follow, we may still want to extract interval i. The AIList data structure can accommodate different ways to implement the decomposition depending on how len is defined. In our implementation, we define the coverage length for interval item i as the number of intervals, within a fixed-size window of the intervals that immediately follow i, that are covered by interval i. Therefore, to find len(i) we can simply check the intervals in that window and count how many of them are covered by interval i. Limiting the search range to this window reduces construction time.

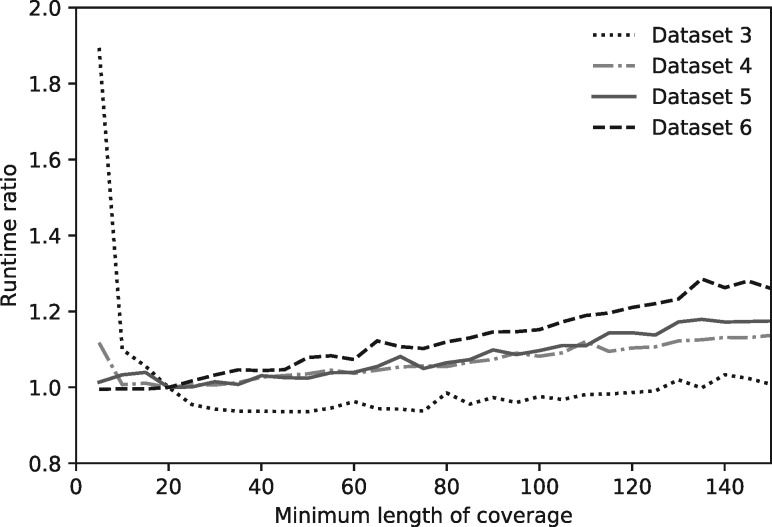

Selection of MinL determines the extent of decomposition, with greater values leading to less decomposition. The optimal MinL differs mildly among datasets (Fig. 2). It is clear from Figure 2 that MinL = 20 is near optimal for datasets we tested, and more importantly, that the runtime is relatively robust to substantial variation in MinL choice, so it is not necessary to find the optimal MinL for each dataset. We have set the default MinL to 20, which results in the number of components nSub being <10 for all the datasets we have tested (see Table 1).

Fig. 2.

Runtime ratio as a function of MinL value for four different datasets with a fixed query dataset. Since the runtimes for the four differently sized datasets differ significantly, each curve is scaled to its own runtime at MinL = 20.

Table 1.

Genomic interval datasets used as database or query for performance evaluation

| Genomic dataset | File name (.bed format) | N (×1000) | Average width | dcRatio (%) | nSub | nHits (×1000) |
|---|---|---|---|---|---|---|
| Dataset 1 | fBrain-DS14718 | 199 | 460 | 0.0 | 1 | 321 |
| Dataset 2 | exons | 439 | 311 | 0.2 | 2 | 2633 |
| Dataset 3 | chainOrnAna1 | 1957 | 3688 | 28.1 | 6 | 1 086 692 |
| Dataset 4 | chainVicPac2 | 7684 | 913 | 13.1 | 8 | 3 892 116 |
| Dataset 5 | chainXenTro3Link | 50 981 | 68 | 7.0 | 7 | 18 432 255 |
| Dataset 6 | chainMonDom5Link | 128 187 | 70 | 5.6 | 7 | 27 741 145 |
| Dataset 0 | chainRn4 | 2351 | 2113 | 22.2 | 6 | 1 375 224 |

Note: N is the number of intervals, dcRatio is the ratio of the number of intervals that cover their immediate next over N, nSub is the number of total sublists for AIList, nHits is the number of overlaps with query Dataset 0, Datasets 1 and 2 are from BEDTools, and others are from UCSC.

Algorithm 1 lists a simplified $O(N \log_2 N)$ algorithm for constructing an AIList, including both decomposition and augmentation. The function AddRunningMax is a simple linear scan to determine the MaxE value.

Algorithm 1.

AIList Construction Algorithm

Input: interval list iL, MinL

Output: aiL, hSub

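1: procedure AIListConstruction(iL, MinL)

2: sortListByStart(iL) ▹ sort

3: aiL ← [ ], hSub ← [0], L ← iL

4: repeat

5: L1, L2 ← Decompose(L, MinL)

6: aiL.append(L1)

7: hSub.append(length(aiL)) ▹ start of the next sublist

8: L ← L2

9: until L is empty

10: return aiL, hSub

11: end procedure

1: procedure Decompose(L, MinL)

2: L1 ← [ ], L2 ← [ ]

3: for i ← 0 to length(L) − 1 do

4: if L[i] covers at least MinL intervals then ▹ find len(i), see text

5: L2.append(L[i])

6: else

7: L1.append(L[i])

8: end if

9: end for

10: AddRunningMax(L1) ▹ Augmentation

11: return L1, L2

12: end procedure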
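As a hedged illustration of the construction steps described in Section 2.2, the following Python sketch sorts, repeatedly decomposes and augments a list of half-open `(start, end)` tuples. It is not the authors' C code: the function names, the explicit `window` parameter (the number of following intervals inspected when counting coverage) and the example data are assumptions made for this sketch.

```python
def coverage_length(ivs, i, window):
    """Count intervals among the next `window` entries that interval i covers.
    The list is sorted by start, so only the ends need to be compared."""
    end_i = ivs[i][1]
    return sum(1 for _, end in ivs[i + 1 : i + 1 + window] if end <= end_i)

def decompose(ivs, min_len, window):
    """Split into (kept, extracted); extracted intervals cover >= min_len others."""
    kept, extracted = [], []
    for i, iv in enumerate(ivs):
        if coverage_length(ivs, i, window) >= min_len:
            extracted.append(iv)
        else:
            kept.append(iv)
    return kept, extracted

def add_running_max(ivs):
    """Augment each (start, end) with the running maximum end (MaxE)."""
    out, running = [], float("-inf")
    for start, end in ivs:
        running = max(running, end)
        out.append((start, end, running))
    return out

def build_ailist(intervals, min_len, window):
    """Return (ail, h_sub); sublist k occupies ail[h_sub[k]:h_sub[k + 1]]."""
    if min_len < 1:
        raise ValueError("min_len must be at least 1")
    rest = sorted(intervals)
    ail, h_sub = [], [0]
    while rest:
        kept, rest = decompose(rest, min_len, window)
        ail.extend(add_running_max(kept))
        h_sub.append(len(ail))             # start of the next sublist
    return ail, h_sub

# Hypothetical data: only some of these intervals are named in the text.
EXAMPLE = [(1, 2), (3, 8), (5, 7), (7, 20), (9, 10), (13, 15), (15, 16),
           (19, 30), (22, 24), (24, 25), (26, 28), (32, 38), (34, 36)]
ail, h_sub = build_ailist(EXAMPLE, min_len=3, window=6)
```

With these inputs the two long containers [7, 20) and [19, 30) move to a second sublist, and each sublist carries its own MaxE. The loop always terminates because the last interval of any list covers nothing and therefore stays in the kept part.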

2.3 The AIList query algorithm including decomposed sublists

Queries against the decomposed list structure are similar to the original case, but are now done independently on each sublist. The decomposition process has divided the original list into two or more flattened or nearly flattened sublists, so queries in each sublist are close to optimal. The cost of this improvement is that we now require additional binary searches to get the index IE for each sublist. This approach thus implements a tradeoff between the number of binary search comparisons and the number of extra interval comparisons due to interval containment. Similar to other algorithms mentioned above, the query time complexity for AIList is $O(\log_2 N + n + m)$, but the average number of extra comparisons m is minimized. The search algorithm is listed in Algorithm 2.

Algorithm 2.

AIList Search Algorithm

Input: AIList aiL, sublist header hSub, query

Output: Overlaps H

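1: procedure AIListSearch(aiL, hSub, q)

2: H ← [ ]

3: for k ← 0 to length(hSub) − 2 do

4: t ← BinarySearch(aiL, hSub[k], hSub[k + 1], q.end) ▹ right-most t in sublist k with aiL[t].start < q.end

5: while t ≥ hSub[k] and aiL[t].MaxE > q.start do

6: if aiL[t].end > q.start then

7: H.append(aiL[t])

8: end if

9: t ← t − 1

10: end while

11: end for

12: return H

13: end procedure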
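The per-sublist search can be sketched in Python as follows. This is a hedged illustration, not the authors' C implementation: `search_ailist` is a hypothetical name, entries are `(start, end, MaxE)` tuples, and the hard-coded `AIL` and `H_SUB` describe an assumed two-sublist decomposition loosely modelled on the Figure 1 example.

```python
def search_ailist(ail, h_sub, q_start, q_end):
    """Collect overlaps of [q_start, q_end) from every sublist of a decomposed AIList."""
    hits = []
    for k in range(len(h_sub) - 1):
        lo, hi = h_sub[k], h_sub[k + 1]
        # Binary search inside sublist k for the first entry with start >= q_end.
        left, right = lo, hi
        while left < right:
            mid = (left + right) // 2
            if ail[mid][0] < q_end:
                left = mid + 1
            else:
                right = mid
        t = left - 1                         # right-most entry with start < q_end
        # Step backwards until MaxE shows that nothing further left can overlap.
        while t >= lo and ail[t][2] > q_start:
            if ail[t][1] > q_start:
                hits.append(ail[t][:2])
            t -= 1
    return hits

# Assumed decomposition: sublist 0 is ail[0:11], sublist 1 is ail[11:13].
AIL = [(1, 2, 2), (3, 8, 8), (5, 7, 8), (9, 10, 10), (13, 15, 15), (15, 16, 16),
       (22, 24, 24), (24, 25, 25), (26, 28, 28), (32, 38, 38), (34, 36, 38),
       (7, 20, 20), (19, 30, 30)]
H_SUB = [0, 11, 13]

hits_a = search_ailist(AIL, H_SUB, 9, 12)
hits_b = search_ailist(AIL, H_SUB, 17, 21)
```

For the query [17, 21), the first sublist is dismissed after a single MaxE test and the second yields both overlaps directly, so the non-overlapping intervals inside the long container are never visited. The price is one binary search per sublist.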

3 Results

AIList is implemented in C. To evaluate the efficiency of AIList, we compared its performance with AITree, NCList and R-trees. For AITree we used the rbtree-based interval tree from the Linux kernel (see Supplementary Material); for NCList we used the C code implemented by Alekseyenko and Lee (2007); and for R-tree we used the popular C++ implementation BEDTools v2.25.0 by Quinlan and Hall (2010). AIList outperforms the other approaches on all datasets that we have tested so far. Table 1 lists seven real and representative genomic datasets ranging in size from 199 000 to 128 million intervals. In each of the experiments we describe below, we used Dataset 0 as the query set, with the other six used as the database interval set. All experiments were run on a computer with a 2.8 GHz CPU and 16 GB of memory, and the reported runtime for each method includes the time required for data loading, data structure construction, searching and result output.

For all datasets, AIList outperformed all other methods (Table 2). The improvement was more dramatic for the datasets with more complex containment structure: for flat Dataset 1 and near-flat Dataset 2, AIList is roughly 1.2–1.5 times as fast as AITree and NCList and about twice as fast as BEDTools. For datasets with greater containment, AIList is up to about five times as fast as AITree and NCList, and up to 18 times as fast as BEDTools. Furthermore, AIList consumed substantially less memory than the other algorithms (Table 3).

Table 2.

Runtime (seconds) of AIList, AITree, NCList and BEDTools for datasets listed in Table 1

| Runtime (s) | Dataset 1 | Dataset 2 | Dataset 3 | Dataset 4 | Dataset 5 | Dataset 6 |
|---|---|---|---|---|---|---|
| AIList | 0.916 | 1.023 | 7.189 | 19.465 | 78.640 | 141.986 |
| AITree | 1.235 | 1.532 | 24.053 | 73.670 | 368.177 | 581.189 |
| NCList | 1.080 | 1.192 | 26.094 | 101.796 | 419.106 | 661.759 |
| BEDTools | 1.741 | 2.073 | 46.533 | 139.8467 | 1430.620 | NA |

Note: Datasets 1–6 are used as the database and Dataset 0 is used as the query set. No result is reported for BEDTools on Dataset 6 because it consumed nearly all of the machine memory (16 GB) and was terminated.
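The speedups quoted in the text can be recomputed from Table 2 with a few lines of Python. This is only an arithmetic aid; the dictionary `RUNTIME` and the helper `speedup_over` are names introduced here for illustration, and the numbers are copied from the table as printed.

```python
# Runtimes in seconds from Table 2, Datasets 1-6; None marks the missing BEDTools run.
RUNTIME = {
    "AIList":   [0.916, 1.023, 7.189, 19.465, 78.640, 141.986],
    "AITree":   [1.235, 1.532, 24.053, 73.670, 368.177, 581.189],
    "NCList":   [1.080, 1.192, 26.094, 101.796, 419.106, 661.759],
    "BEDTools": [1.741, 2.073, 46.533, 139.8467, 1430.620, None],
}

def speedup_over(method):
    """Runtime of `method` divided by the AIList runtime, one ratio per dataset."""
    return [
        None if t is None else t / base
        for t, base in zip(RUNTIME[method], RUNTIME["AIList"])
    ]

for method in ("AITree", "NCList", "BEDTools"):
    ratios = speedup_over(method)
    print(method, ["NA" if r is None else f"{r:.2f}" for r in ratios])
```

A ratio of 1.0 would mean parity with AIList; larger values mean the other method took proportionally longer on that dataset.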

Table 3.

Memory-usage (%, memory used by a program divided by the total machine memory) of AIList, AITree, NCList and BEDTools for large datasets on a computer with a total memory of 16 GB

| Dataset | AIList | AITree | NCList | BEDTools |
|---|---|---|---|---|
| Dataset 5 | 3.7 | 19.6 | 6.2 | 95.4 |
| Dataset 6 | 9.2 | 49.2 | 19.1 | NA |

Note: BEDTools was terminated for Dataset 6 because it took all machine memory.

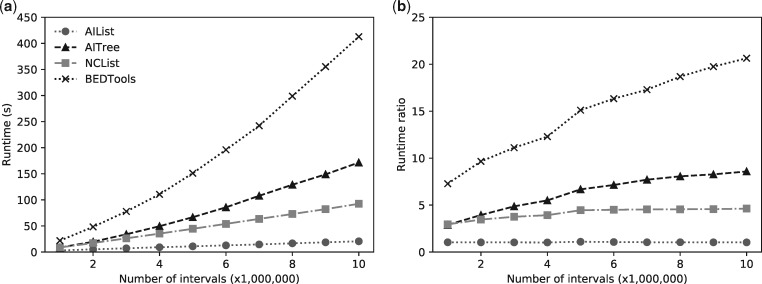

To evaluate how AIList, AITree, NCList and R-tree scale in practice for differing sizes of input dataset, we selected a single comparison (Dataset 0 queried against Dataset 5 as input dataset) and then downsampled the input dataset in increments of 1 000 000 intervals. Figure 3a shows four curves of runtime versus input dataset size. Figure 3b shows the ratio of the runtime compared to AIList. As input dataset size increases from 1 to 10 million, the runtime ratio increases from 2.8 to 8.3 for AITree, from 2.8 to 4.5 for NCList and from 6.9 to 20 for BEDTools, demonstrating the superior scaling of AIList.

Fig. 3.

Performance comparison of AIList with AITree, NCList and BEDTools as a function of the size of the target dataset. Dataset 0 is the query set; target datasets are subsets of Dataset 5 obtained by sampling. (a) Runtime in seconds plotted against increasing number of intervals; (b) runtime ratio of each tool compared to the AIList runtime.

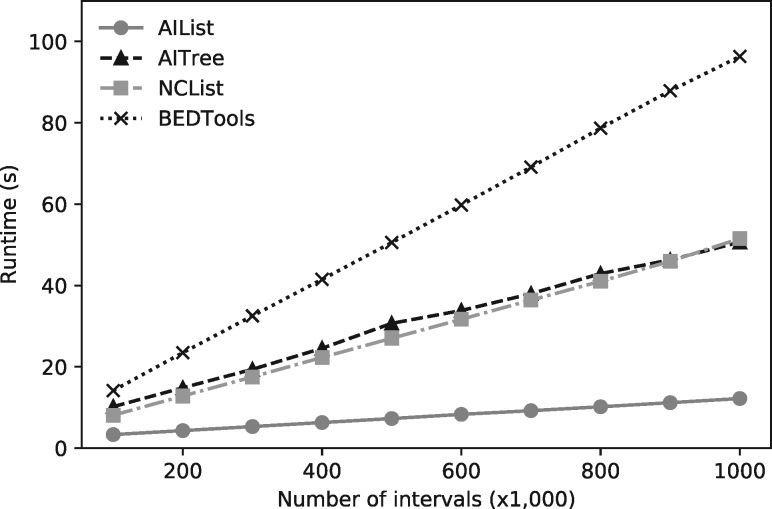

We also performed a similar experiment by subsampling the query set (Fig. 4). As expected, the runtime of every algorithm scales linearly with the size of the query set, but AIList has the smallest coefficient.

Fig. 4.

Performance comparison of AIList with AITree, NCList and BEDTools as a function of the size of the query interval set. Dataset 4 is the target dataset, query sets are subsets of Dataset 0 by sampling

4 Conclusion

We defined a novel data structure that dramatically improves on the enumeration of interval overlaps. Our method pairs the techniques of list augmentation and list decomposition to provide tighter terminal conditions for overlap checking, which reduces the number of extra comparisons that must be made. We demonstrated that this method outperforms existing methods across a series of datasets that range from flat structure to highly nested interval containment.

Similar to the NCList, the AIList data structure contains only one extra data element MaxE, so it is more efficient than the AITree (three extra elements) and the R-tree (contains duplicate elements); but the AIList header size is negligible, while the NCList header size can be comparable to database size (see Supplementary Material for details). Another advantage of the AIList is that inserting a new interval element requires simply checking the few sublists to find roughly where it belongs, which is much more flexible than NCList, which can require reconstructing the whole data structure. Because of its simple data structure, AIList is also the simplest to implement (see Algorithms 1 and 2, and source code). Finally, as shown in Table 3, AIList takes the least memory.

Taken together, these results show that AIList provides a significantly improved fundamental operation for highly scalable genomic data analysis.

Funding

This work was supported by the University of Virginia 4-VA and by National Institutes of Health grant [1R35GM128636-01] (to N.C.S.).

Conflict of Interest: none declared.

Supplementary Material

References

- Alekseyenko A.V., Lee C.J. (2007) Nested Containment List (NCList): a new algorithm for accelerating interval query of genome alignment and interval databases. Bioinformatics, 23, 1386–1393.

- Cormen T.H. et al. (2001) Introduction to Algorithms, Second Edition. The MIT Press, Cambridge, MA, USA.

- Giardine B. et al. (2005) Galaxy: a platform for interactive large-scale genome analysis. Genome Res., 15, 1451–1455.

- Jalili V. et al. (2018) Next generation indexing for genomic intervals. In: IEEE Transactions on Knowledge and Data Engineering. pp. 1.

- Kent W.J. et al. (2002) The human genome browser at UCSC. Genome Res., 12, 996–1006.

- Layer R.M. et al. (2018) GIGGLE: a search engine for large-scale integrated genome analysis. Nat. Methods, 15, 123–126.

- Li H., Durbin R. (2011) Fast and accurate short read alignment with Burrows-Wheeler transform. Bioinformatics, 25, 1754–1760.

- Neph S. et al. (2012) BEDOPS: high-performance genomic feature operations. Bioinformatics, 28, 1919–1920.

- Quinlan A.R., Hall I.M. (2010) BEDTools: a flexible suite of utilities for comparing genomic features. Bioinformatics, 26, 841–842.

- Richardson J.E. (2006) fjoin: simple and efficient computation of feature overlaps. J. Comput. Biol., 13, 1457–1464.
