Abstract

The brain performs various cognitive functions by learning the salient spatiotemporal features of the environment. This learning requires unsupervised segmentation of hierarchically organized spike sequences, but the underlying neural mechanism remains poorly understood. Here, we show that a recurrent gated network of neurons with dendrites can efficiently solve difficult segmentation tasks. In this model, multiplicative recurrent connections learn a context-dependent gating of dendro-somatic information transfers to minimize the error in the dendritic prediction of somatic responses. Consequently, these connections filter out redundant input features that are represented by the dendrites but unnecessary in the given context. The model was tested on both synthetic and real neural data. In particular, the model successfully segmented multiple cell assemblies repeating in large-scale calcium imaging data containing thousands of cortical neurons. Our results suggest that recurrent gating of dendro-somatic signal transfers is crucial for cortical learning of context-dependent segmentation tasks.

Author summary

The brain learns about the environment from continuous streams of information to generate adequate behavior. This is not easy when sensory and motor sequences are hierarchically organized. Some cortical regions jointly represent multiple levels of a sequence hierarchy, but how local cortical circuits learn hierarchical sequences remains largely unknown. Evidence shows that the dendrites of cortical neurons learn more redundant representations of sensory information than the soma, suggesting a filtering process within a neuron. Our model proposes that recurrent synaptic inputs multiplicatively regulate this intracellular process by gating dendrite-to-soma information transfers depending on the context of sequence learning. Furthermore, our model provides a powerful tool for analyzing the spatiotemporal patterns of neural activity in large-scale recording data.

Introduction

The ability of the brain to learn hierarchically organized sequences is fundamental to various cognitive functions such as language acquisition, motor skill learning, and memory processing [1–8]. To adequately process the cognitive implications of sequences, the brain has to generate context-dependent representations of sequence information. For instance, in language processing the brain may recognize "nueron" as a misspelling of "neuron" if it knows the word "neuron" but not the word "nueron". However, the brain recognizes "affect" and "effect" as different words even though the two words are very similar. "Break" and "brake" are also different words although they combine the same letters in different serial orders. The brain can also recognize the same word presented with different durations. All these examples illustrate the inherent flexibility of context-dependent sequence learning in the brain. However, the neural mechanisms underlying this flexible learning, which occurs in an unsupervised manner, remain elusive.

Segmentation or chunking of sensory and motor information is at the core of the context-dependent analysis of hierarchically organized sequences [9–12]. However, little is known about the neural representations and learning mechanisms of hierarchical sequences. Recently, neurons encoding long-range temporal correlations in the song structure were found in the higher vocal center of songbirds [13]. These neurons responded differently to the same syllables (the basic elements of bird song) depending on the preceding phrases (each consisting of several syllables) or the succeeding phrases. Different responses of the same neurons in different sequential contexts were also found in the monkey supplementary motor area [14,15]. Hierarchical sequences are often assumed to mirror the hierarchical organization of brain regions. However, the human premotor cortex jointly represents movement chunks and their sequences [16], and linguistic processing in humans also lacks an orderly anatomical representation of sequential context [17].

Unsupervised, context-dependent segmentation is difficult for computational models. Recurrent network models can generate rich sequential dynamics, but these networks are typically trained by supervised methods. Spike-timing-dependent plasticity was used for unsupervised segmentation of input sequences in a recurrent network model [18]. However, while that model worked for simple hierarchical spike sequences, it could not learn context-dependent representations of overlapping spike sequences. Single-cell computation with dendrites could solve a variety of temporal feature analysis tasks, including the unsupervised segmentation of hierarchical spike sequences [19], supporting the role of dendrites in sequence processing [20]. However, context-dependent segmentation was also difficult for this model. Thus, although the segmentation problem has been partially solved, recurrent connections alone or dendrites alone are insufficient for solving difficult segmentation tasks such as those exemplified at the beginning of this article.

Here, we demonstrate that combining dendritic computation with recurrent gating dramatically improves the ability of neural networks to segment hierarchical spike sequences in a context-dependent manner. Our central hypothesis is that recurrent synaptic input multiplicatively regulates the degree of gating of instantaneous current flows from the dendrites to the soma. We derive an optimal learning rule for afferent and recurrent synapses to minimize a prediction error. The resultant dendrites generally learn redundant representations of multiple sequence elements, while recurrent input learns to selectively gate the dendritic activity suitable for the given context. Recurrent networks with gating synapses were recently used in supervised sequence learning [21].

There is an increasing need for efficient methods to detect and analyze the characteristic spatiotemporal patterns of activity in large-scale neural recording data. We demonstrate that the proposed model can efficiently detect cell-assembly structures in large-scale calcium imaging data. We show two example analyses, in the hippocampus and the visual cortex of behaving rodents. In particular, the latter dataset contains the activity of a very large number of neurons (~6,500), and analyzing the fine-scale spatiotemporal structure of activity patterns in such data is computationally costly and difficult for other methods. In contrast, the data size hardly affected the performance and speed of learning in our model. Surprisingly, the efficiency was even somewhat higher for larger data sizes. These results highlight the crucial role of recurrent gating in amplifying the weak signature of cell-assembly structure detected by the dendrites.

Results

Dendritic computation in a recurrent gated network

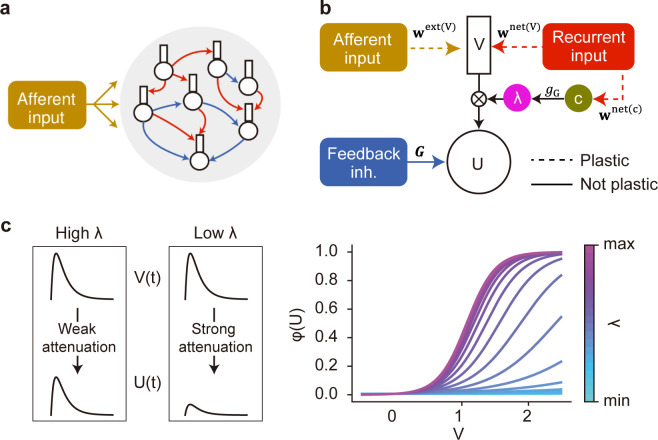

Our recurrent network model consists of two-compartment neurons with somatic and dendritic compartments (Fig 1A and 1B). The dendritic compartments receive hierarchically structured afferent input and recurrent synaptic input, and the sum of these inputs drives the activity of the dendritic compartment. Afferent and recurrent connections onto the dendritic compartment are plastic and can be either excitatory or inhibitory. Before training, the weights of these connections are drawn from a Gaussian distribution with zero mean (see Methods). The somatic compartment receives non-plastic, uniform feedback inhibition, which induces competition among neurons. A similar two-compartment model without recurrent inputs has been studied in segmentation problems [19]. Here, we hypothesize that recurrent synaptic input multiplicatively amplifies or attenuates the current flow from the dendrite to the soma in an input-dependent fashion: the stronger the recurrent input, the larger the dendro-somatic current flow. This "gating" effect is described by a nonlinear function of the recurrent input to the neuron and controls the instantaneous impact of dendritic activity on the soma (Fig 1C). As in the previous models [19,22], all synaptic weights were trained to minimize the prediction error between the two compartments. However, we considered the gated rather than the raw dendritic activity in the error term. The rule derived for afferent synapses is the same as our previous rule [19] except for the gated dendritic activity in the error term. The learning rule for gating recurrent connections is novel and depends on the raw dendritic activity prior to gating. We will show below that recurrent-driven gating plays a pivotal role in the learning of flexible segmentation. Unless otherwise stated, the results below are shown for network models with multiplicative recurrent inputs but no additive ones. In this setting, afferent inputs can evoke large somatic responses if and only if both the dendritic activity and the gating effect are sufficiently strong. A network model with both additive and multiplicative recurrent inputs will be considered later.
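The structure of the gated prediction-error rule described above can be illustrated for a single neuron. This is a minimal sketch under our own assumptions, not the rule from Methods: the dendritic potential is a weighted sum v = w·x of afferent input, the gating factor is a logistic sigmoid λ = σ(m·r) of recurrent input, the gated dendrite predicts the somatic rate as φ(λv) with a sigmoid φ, and the mismatch is a squared error with the somatic response s treated as a fixed target. All function names are hypothetical. What the sketch shows is the shape of the two gradients: the afferent gradient is scaled by the gate λ, whereas the gating gradient contains the raw, pre-gating dendritic potential v.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def gated_prediction(w, m, x, r):
    """Hypothetical single neuron: returns (prediction, raw dendritic v, gate lam)."""
    v = w @ x                # raw dendritic potential from afferent input x
    lam = sigmoid(m @ r)     # gating factor driven by recurrent input r (assumed sigmoid)
    pred = sigmoid(lam * v)  # gated dendritic prediction of the somatic rate
    return pred, v, lam


def loss(w, m, x, r, s):
    """Squared prediction error between the somatic response s and the gated dendrite."""
    pred, _, _ = gated_prediction(w, m, x, r)
    return 0.5 * (s - pred) ** 2


def gradients(w, m, x, r, s):
    """Analytic gradients of `loss` w.r.t. afferent (w) and gating (m) weights."""
    pred, v, lam = gated_prediction(w, m, x, r)
    err = s - pred                    # soma minus gated dendritic prediction
    dphi = pred * (1.0 - pred)        # sigmoid derivative evaluated at lam * v
    grad_w = -err * dphi * lam * x    # afferent rule: error scaled by the gate
    grad_m = -err * dphi * v * lam * (1.0 - lam) * r  # gating rule: uses raw v
    return grad_w, grad_m


rng = np.random.default_rng(0)
x, r = rng.random(5), rng.random(4)
w, m = rng.normal(size=5), rng.normal(size=4)
s = 0.8  # somatic response, treated as the target
grad_w, grad_m = gradients(w, m, x, r, s)
w_new = w - 0.1 * grad_w  # one gradient-descent step on afferent weights
m_new = m - 0.1 * grad_m  # one gradient-descent step on gating weights
```

A finite-difference comparison against `loss` is a quick way to confirm that the analytic gradients are consistent with the assumed model.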

Fig 1. A recurrent gated network of compartmentalized neurons.

(a) A network of randomly connected compartmentalized neurons is considered. The red and blue arrows indicate recurrent connections to the dendrite and the feedback inhibition to the soma, respectively. (b) Each neuron model consists of a somatic compartment and a dendritic compartment. Dashed and solid arrows indicate plastic and non-plastic synaptic connections, respectively. Unless otherwise specified, the dendrite only receives afferent inputs. The somatic compartment integrates the dendritic activity and inhibition from other neurons. The gating factor λ is determined by recurrent inputs and regulates the instantaneous fraction of dendritic activity propagated to the soma. (c) The effect of the gating factor on the somatic response is schematically illustrated (left). The relationship between the dendritic potential and the somatic firing rate is shown for various values of the gating factor (right).

The role of recurrent-driven gating in complex segmentation tasks

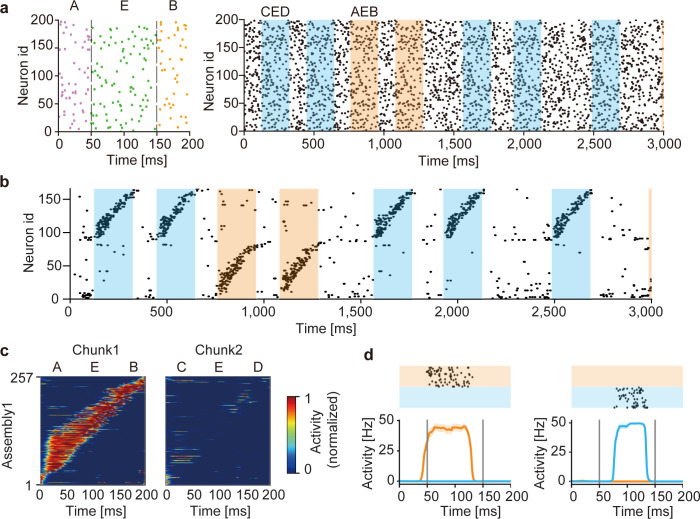

We first demonstrate the segmentation of two spike pattern sequences (chunks) repeated in input spike trains (Fig 2A). Each chunk was a combination of three of five fixed spike patterns, “A”, “B”, “C”, “D” and “E”, where the component pattern “E” appeared in both chunks. Therefore, the two chunks overlapped. Throughout this paper, we fixed the average firing rate of each input neuron at 5 Hz over the entire period of simulations, and chunks were separated by random spike trains of variable lengths from 50 to 400 ms. As training proceeded, the correlation between the somatic response and the gated dendritic activity increased (S1 Fig). Interestingly, the trained network generated two distinct cell assemblies, each of which selectively responded to one of the chunks (Fig 2B and 2C). Notably, each cell assembly responded to the pattern “E” in its preferred chunk but not in its non-preferred chunk (Fig 2C and 2D). A network with a constant gating function trained on the same afferent input failed to discriminate the pattern “E” in different chunks and consequently could not learn the chunks (S2 Fig). This result suggests the crucial role of recurrent-driven gating in the segmentation task.
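The input construction for this task can be sketched as follows. The sketch assumes a 1 ms time step, a Bernoulli approximation of Poisson firing, 200 input neurons, and a uniform random choice between the two chunks; these are our assumptions, not values stated in this section, and the function name is hypothetical. Each component pattern is frozen (drawn once and reused at every repetition), whereas the random separators are drawn afresh each time, so every input neuron fires at about 5 Hz throughout.

```python
import numpy as np


def make_input(n_inputs=200, n_chunks=100, rate_hz=5.0, dt=1e-3, seed=0):
    """Return (spikes, labels); labels: 0 = random segment, 1 = "AEB", 2 = "CED"."""
    rng = np.random.default_rng(seed)
    p = rate_hz * dt  # spike probability per 1 ms bin (Bernoulli approx. of Poisson)
    lengths = {"A": 50, "B": 50, "C": 50, "D": 50, "E": 100}  # durations in ms (= bins)
    # Frozen patterns: drawn once and reused at every presentation
    patterns = {k: rng.random((n_inputs, n)) < p for k, n in lengths.items()}
    chunks = ["AEB", "CED"]
    segments, labels = [], []
    for _ in range(n_chunks):
        gap = rng.integers(50, 401)  # random separator of 50-400 ms
        segments.append(rng.random((n_inputs, gap)) < p)
        labels.append(np.zeros(gap, dtype=int))
        c = rng.integers(len(chunks))
        for key in chunks[c]:
            segments.append(patterns[key])
            labels.append(np.full(lengths[key], c + 1))
    return np.concatenate(segments, axis=1), np.concatenate(labels)


spikes, labels = make_input()
print(spikes.mean() / 1e-3)  # empirical mean rate in Hz, close to 5
```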

Fig 2. Learning of overlapping chunks.

(a) Two chunks “AEB” (orange shade) and “CED” (blue shade) were repeated in input Poisson spike trains (left). The chunks were separated by random spike trains with variable lengths of 50 to 400 ms (unshaded). All input neurons had the same firing rate of 5 Hz. Example spike trains during the initial 3 seconds are shown (right). In each chunk, the component patterns “A”, “B”, “C”, and “D” were 50 ms long and the shared component “E” was 100 ms long. (b) Output spike trains of the trained network model. Neurons were sorted according to their onset response times, and only 160 of the 500 neurons are shown for visualization purposes. A selective cell assembly emerged for each chunk. (c) The average responses of the “AEB”-selective assembly to chunks “AEB” (left) and “CED” (right) are shown. The responses were averaged over 20 presentations of the chunks and normalized by the maximal response to the preferred chunk (i.e., chunk 1). (d) Responses of an “AEB”-selective neuron (left) and a “CED”-selective neuron (right) in the trained network are shown. The raster plots show responses over 20 trials.

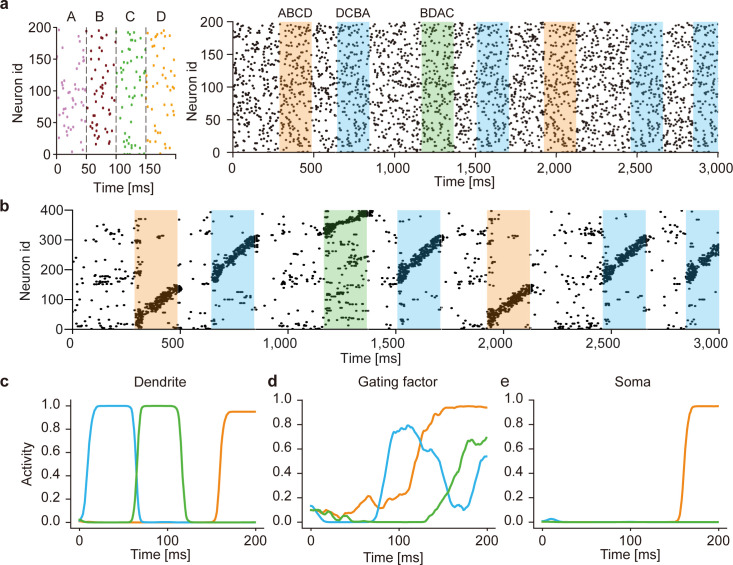

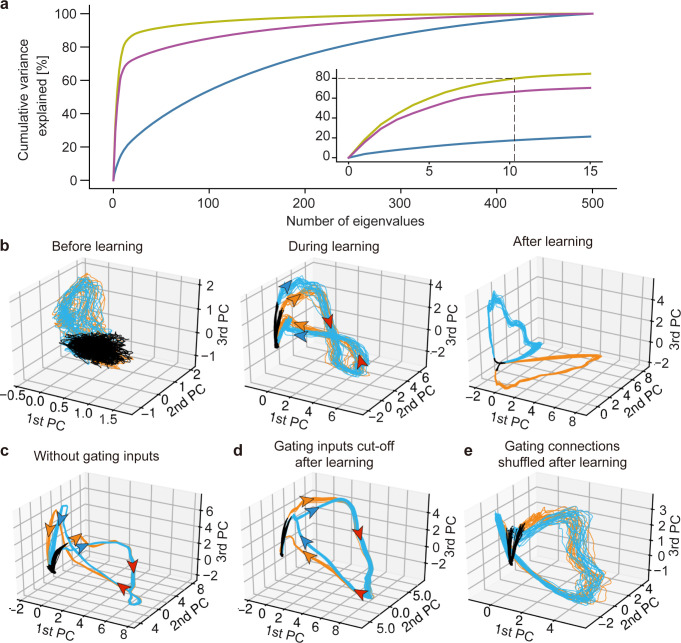

The above results suggest that this model can discriminate the context of sequences (i.e., the relationship between “E” and other component patterns in a chunk). However, it is also possible that the model separated the overlapping chunks merely by relying on the component patterns that were not shared between the two chunks and/or on unequal occurrence probabilities among the component patterns. To exclude these possibilities, we examined a case in which different chunks shared all component patterns with equal frequencies (Fig 3A, left). In other words, the same component patterns occurred in different chunks in different orders (i.e., “ABCD”, “DCBA”, “BDAC”). During learning, the model was exposed to irregular spike trains in which the three chunks recurred intermittently (Fig 3A, right). The model developed distinct cell assemblies, each responding selectively to one of these chunks (Fig 3B and S3A and S3B Fig), thus successfully discriminating the same components belonging to different chunks.
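The logic of this control can be checked with a few lines of bookkeeping; this is an illustration of the stimulus design, not part of the model. Every component occurs equally often across the three chunks, yet the component that precedes a given pattern (here "D", the preferred pattern of the neuron in Fig 3C-E) differs between chunks, and no transition between consecutive components is shared by two chunks. Telling the chunks apart therefore requires information about preceding context.

```python
from collections import Counter

chunks = ["ABCD", "DCBA", "BDAC"]

# First-order statistics: how often each component occurs across all chunks
unigrams = Counter("".join(chunks))

# Transitions between consecutive components within each chunk
bigrams = [c[i:i + 2] for c in chunks for i in range(len(c) - 1)]

# Context of component "D": the component preceding it in each chunk (None if first)
preceding_d = {c: (c[c.index("D") - 1] if c.index("D") > 0 else None) for c in chunks}

print(preceding_d)  # {'ABCD': 'C', 'DCBA': None, 'BDAC': 'B'}
```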

Fig 3. Learning of complex spike sequences with recurrent gating.

(a) Three chunks were presented, each consisting of the same four component patterns in a specific order (left). Example input spike trains during the first three seconds are shown (right). The regions filled in orange, blue and green represent the times when chunks "ABCD", "DCBA" and "BDAC" were presented, respectively. All input neurons generated Poisson spikes at a constant rate of 5 Hz. Random spike sequences were presented in the unshaded areas. (b) Output spike trains of the trained network are shown. Neurons were sorted according to their onset response times, and only 400 of the 1200 neurons are shown for visualization purposes. (c-e) The time evolution of dendritic activity, gating factor, and somatic response is shown for an example neuron. Orange, blue and green traces represent the responses to its preferred component pattern "D" when the corresponding chunks were presented. The activities were averaged over 20 trials.

The network mechanism of context-dependent gating

In this model, recurrent-driven gating provides the context-dependent signals necessary for segmenting overlapping chunks. To gain insight into the role of recurrent gating in learning, we investigated how the somatic and dendritic compartments and the gating factors of individual neurons behave during training. The dendritic compartments of these neurons responded to a preferred component pattern irrespective of the chunk in which the component appeared, showing that the dendrites were unable to discriminate the same component pattern when it was shared by different chunks (Fig 3C). In contrast, the gating factors responded differently to the same component pattern appearing in different chunks, depending on the preceding component patterns (Fig 3D). This selective gating is thought to arise from a memory effect that is generated by recurrent synaptic input and determined by the previous state of the network. As a consequence, the somatic compartment could selectively respond to the particular chunk that strongly activated the gating factor during the presentation of the preferred component pattern (Fig 3E). Similar results are shown for other neurons in the trained network (S3C Fig).

To explore the mechanism of context-dependent computation in our model, we analyzed the structure of recurrent connections in the trained model. We first classified network neurons into 12 sub-groups according to their selectivity for the 4 component patterns in the 3 chunks. We then calculated the average weight between each pair of sub-groups to quantify the average strength of gating recurrent connections between them (S4A Fig). The diagonal elements of the resulting matrix are greater than the off-diagonal elements, suggesting that neurons within the same sub-group are connected by strong excitatory synapses. A further analysis of the interactions between different assemblies (S4B Fig) shows that each assembly has excitatory connections to the assembly corresponding to the next component in the same chunk (e.g., “A” to “B” in chunk 1) and inhibitory connections to the assemblies for the previous component (e.g., “B” to “A” in chunk 1). Further, strong inhibition was formed among the assemblies selective for the same component in different chunks (e.g., "B" in chunk 1 and "B" in chunk 2). Thus, connectivity consistent with the sequence structure emerges from context-dependent learning.
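The sub-group averaging can be sketched as block means of the gating weight matrix. The sketch assumes that `W[i, j]` is the weight from neuron j to neuron i, that neurons have already been assigned to sub-groups by their selectivity, and that self-connections are not excluded; none of these conventions are specified in this section, and the function name is hypothetical.

```python
import numpy as np


def group_mean_weights(W, groups, n_groups):
    """G[a, b] = mean weight from neurons in group b onto neurons in group a."""
    G = np.full((n_groups, n_groups), np.nan)  # NaN marks empty groups
    for a in range(n_groups):
        for b in range(n_groups):
            block = W[np.ix_(groups == a, groups == b)]  # rows: targets, cols: sources
            if block.size:
                G[a, b] = block.mean()
    return G


# Toy check: strong within-group and weak negative between-group weights
groups = np.array([0, 0, 1, 1, 2, 2])
W = np.where(groups[:, None] == groups[None, :], 1.0, -0.5)
G = group_mean_weights(W, groups, 3)
```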

The single-cell model proposed previously [19] could not perfectly segment similar sequence patterns such as those shown in Figs 2 and 3. Although consistency between somatic and dendritic activities forces the neuron to respond to a specific input pattern, that model could not perfectly discriminate similar patterns involving overlapping components. In the recurrent gating network, recurrent input to each neuron selectively passes one of several similar input patterns from the dendrite to the soma, enabling context-dependent segmentation. This cooperative function of recurrent synaptic input is difficult to prove analytically but is understandable because both afferent and recurrent synapses are trained by the same learning rule to achieve the same goal, that is, an optimally predictable somatic response.

As with other learning models, the performance of the proposed model depends on the values of multiple parameters. First, we evaluated how many sequences a network of a given size can learn. Performance degraded as the number of input sequences increased, and the number of learnable sequences was larger in a network of 600 neurons than in one of 300 neurons (S5A Fig). Second, we measured how the performance depends on the parameter γ, which determines the hysteresis effects in the mean and variance of past neuronal activities (Eqs (5) and (6) in Methods). As we have shown previously [19], standardization with the moments of membrane potentials is crucial for avoiding a trivial solution. We found that learning performance deteriorated as γ increased (S5B Fig). This result is reasonable because a larger value of γ weakens the hysteresis effect, making the standardization less stable. Finally, we explored to what extent the strength of the static recurrent inhibitory connections affects model performance. We measured the performance at various values of the scaling parameter J and found the best performance at J = 0.5 (S5C Fig). Because the performance did not peak sharply at particular parameter values, these results indicate that the model does not require fine-tuning of parameters.
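Eqs (5) and (6) are not reproduced in this section, so the following is an illustration only. It assumes that the running mean and variance are exponential moving averages with update rate γ, which matches the qualitative statement that a larger γ shortens the memory of past activity and weakens the hysteresis. The class name is hypothetical and the actual equations in Methods may differ.

```python
import numpy as np


class RunningStandardizer:
    """Standardize a signal by exponentially weighted running moments (assumed form)."""

    def __init__(self, gamma, eps=1e-6):
        self.gamma = gamma  # update rate: larger gamma = shorter memory, weaker hysteresis
        self.mean = 0.0
        self.var = 1.0
        self.eps = eps      # avoids division by zero

    def __call__(self, v):
        g = self.gamma
        self.mean = (1 - g) * self.mean + g * v
        self.var = (1 - g) * self.var + g * (v - self.mean) ** 2
        return (v - self.mean) / np.sqrt(self.var + self.eps)


rng = np.random.default_rng(1)
std = RunningStandardizer(gamma=1e-3)
z = np.array([std(v) for v in rng.normal(3.0, 2.0, size=20000)])
```

With a small γ the running estimates settle close to the true mean (3) and standard deviation (2) of this toy signal, so the standardized output `z` is centered near zero after a warm-up period.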

Our model developed low-dimensional representations that strongly reflect the temporal structures of chunks. Principal component analysis (PCA) of the network responses to the overlapping chunks shown in Fig 2 revealed that, as training progressed, fewer principal components explained a larger cumulative variance (Fig 4A). At different stages of learning, the low-dimensional trajectories represented the chunks differently. Before learning, the two chunks and unstructured input segments (i.e., random spike trains) occupied almost the same portions of the low-dimensional trajectories (Fig 4B, left). At the middle stage of learning, the portions representing the chunks grew while those representing the random segments shrank (Fig 4B, middle). Neural states evolved along separate trajectories in the initial (corresponding to “A” and “C”) and final (corresponding to “B” and “D”) parts of the chunk-representing portions, whereas the middle part (corresponding to “E”) was tangled. After sufficient learning, the trajectories were completely separated (Fig 4B, right). As shown above, recurrent gating crucially contributed to this separation. Indeed, the network generated almost overlapping trajectories for the pattern "E" if we fixed recurrent gating during both learning and testing (Fig 4C) or if we trained the model with recurrent gating but clamped it during testing (Fig 4D). The two trajectories for the overlapping chunks were not clearly separable, owing to large fluctuations, if we randomly shuffled the learned recurrent connections to destroy their connectivity pattern (Fig 4E). Thus, context-dependent gating depends crucially on the learned fine structure of recurrent connections.
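Cumulative explained variance and low-dimensional trajectories of the kind shown in Fig 4 can be obtained from a singular value decomposition of the mean-centered activity matrix. A minimal sketch, assuming activity is arranged as time bins × neurons; the function names are hypothetical.

```python
import numpy as np


def cumulative_explained_variance(X):
    """X: (time bins, neurons). Cumulative fraction of variance explained by the PCs."""
    Xc = X - X.mean(axis=0)                  # center each neuron's activity
    s = np.linalg.svd(Xc, compute_uv=False)  # singular values, in descending order
    var = s ** 2                             # proportional to the PC variances
    return np.cumsum(var) / var.sum()


def project_top_pcs(X, k=3):
    """Project centered activity onto the first k PCs (e.g., PC1 to PC3 as in Fig 4B)."""
    Xc = X - X.mean(axis=0)
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt[:k].T


# Toy data confined to a 2-D subspace of a 10-neuron space
rng = np.random.default_rng(2)
X = rng.normal(size=(500, 2)) @ rng.normal(size=(2, 10))
cum = cumulative_explained_variance(X)
traj = project_top_pcs(X, k=3)
```

For this toy data the first two components explain essentially all of the variance, which is the kind of concentration that Fig 4A tracks over training.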

Fig 4. Principal component analysis of the trained network.

(a) Cumulative variance explained by the PCs of network activity before (blue), during (purple), and after (yellow) learning. The inset is an expanded view of the leading components. (b) The PCA-projected trajectories of network activity before, during, and after training are shown in the space spanned by PC1 to PC3. The network was trained with the same task as in Fig 2. The black, orange, and blue trajectories represent the periods during which random spike input, chunk 1 and chunk 2 were presented, respectively. (c) Recurrent gating was fixed in all neurons during the whole simulation. (d) The gating factor was clamped after the network in (b) was trained. (e) Recurrent connections were randomly shuffled after the network in (b) was trained. The directions of state evolution are indicated by arrows along the PCA-projected trajectories. The arrows are colored according to the corresponding chunks on the separated parts of the trajectories, and in red on unseparated parts.

While the network model could learn noisy chunks as long as the jitter in spike times was not too large (S6A and S6B Fig), the magnitude of jitter strongly influenced learning speed. This was indicated by the slow saturation, during learning, of the normalized mutual information (see Methods) between network responses and the true labels of chunks (S6C Fig). The normalized mutual information remained near its maximum value (≈ 1) as long as the variance of jitter was within the chunk length (50 ms), and it dropped rapidly beyond the chunk length (S6D Fig).
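Normalized mutual information between network responses and chunk labels can be computed from a contingency table. The exact definition is given in Methods; this sketch assumes the arithmetic-mean normalization MI / ((H(T) + H(P)) / 2), which is also the default of scikit-learn's `normalized_mutual_info_score`, and assumes that network responses have already been converted into one discrete label per time bin. Function names are hypothetical.

```python
import numpy as np


def entropy(counts):
    """Shannon entropy (nats) of a vector of counts."""
    p = counts[counts > 0] / counts.sum()
    return -np.sum(p * np.log(p))


def normalized_mutual_information(labels_true, labels_pred):
    """NMI with arithmetic-mean normalization (assumed); 1 = identical partitions."""
    _, t = np.unique(labels_true, return_inverse=True)
    _, q = np.unique(labels_pred, return_inverse=True)
    joint = np.zeros((t.max() + 1, q.max() + 1))
    np.add.at(joint, (t, q), 1)        # contingency table of label pairs
    h_t = entropy(joint.sum(axis=1))
    h_q = entropy(joint.sum(axis=0))
    h_tq = entropy(joint.ravel())
    mi = h_t + h_q - h_tq
    denom = 0.5 * (h_t + h_q)
    return mi / denom if denom > 0 else 1.0


# Relabeled but identical partitions give NMI = 1; independent ones give 0
print(normalized_mutual_information([0, 0, 1, 1, 2, 2], [5, 5, 3, 3, 9, 9]))
```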

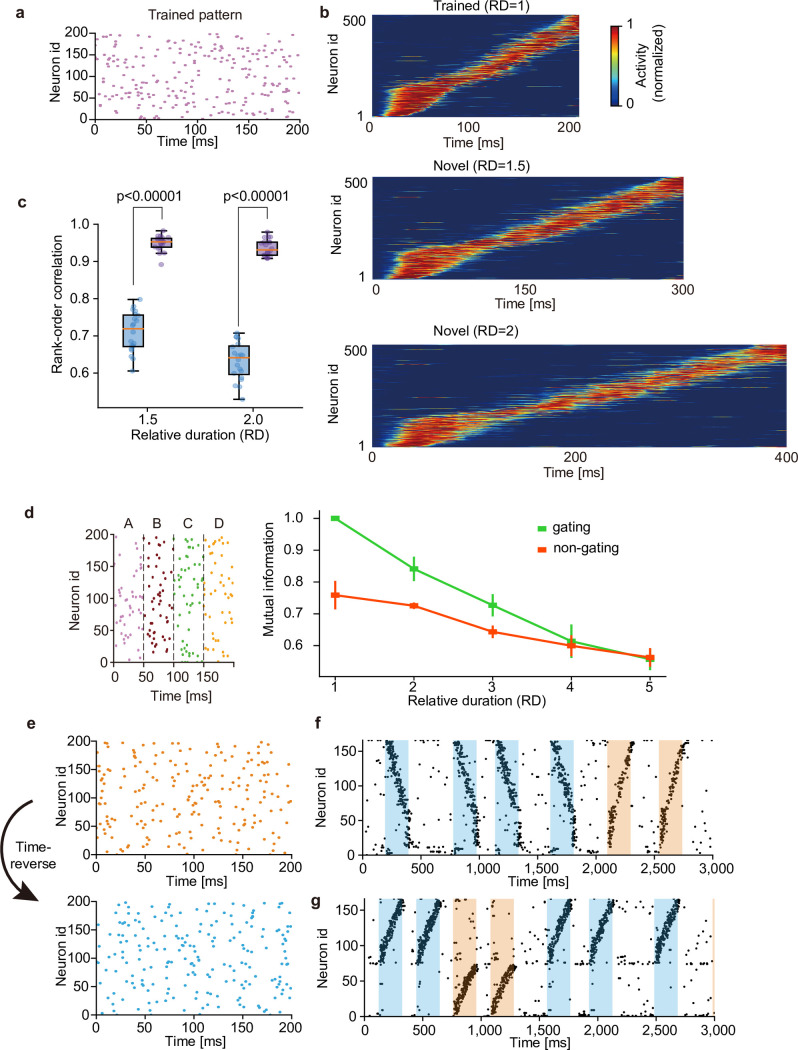

Just as the brain can recognize a learned sequence irrespective of the duration of its presentation, the network can detect a learned pattern even if the pattern is presented with a duration different from the learned one (Fig 5A and 5B). We quantified the similarity of network responses to otherwise identical input patterns with different durations. By calculating the rank-order correlations between responses to stimuli presented with three different durations, we measured to what extent the serial order of neural responses was preserved across conditions. In the network that learned the original pattern, the similarity increased significantly after learning for all three durations of stimulus presentation (Fig 5C), suggesting that our model learns the manifold of temporal spike patterns rather than individual specific patterns. We also examined the relative duration (RD) of the input sequence beyond which the gating and non-gating models lose the ability to recognize a learned sequence, using the spike pattern of Fig 3. As shown in Fig 5D, performance gradually deteriorated in both models as RD increased. While the gating model outperformed the non-gating model for RDs less than 3, this advantage disappeared when RD reached 4. These results demonstrate that the gating model recognizes sequences with RDs up to about 3.

The robustness shown above raises the question of whether the present model can discriminate precise temporal spike patterns. We found that the network model clearly discriminated between similar but different input patterns when the inputs were learned as separate chunks. To study this, we trained the network model with random spike trains involving a repeated temporal pattern and stimulated the learned model with the original pattern (Fig 5E, top) and its time-reversed version (Fig 5E, bottom). The cell assembly that learned only the original pattern also responded to the reversed pattern, in reversed temporal order, meaning that the two temporal patterns were not discriminable in this case (Fig 5F). Interestingly, the same network model trained with both the original and time-reversed patterns self-organized distinct cell assemblies selective for the individual patterns (Fig 5G). This result may account for the discrimination between "break" and "brake" when these words are learned as separate entities.
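The rank-order similarity used above can be sketched as a Spearman correlation between the response times of individual neurons under two conditions. The sketch assumes that each neuron's response is summarized by a single time (for example, its peak time) and that there are no ties; the helper names are hypothetical. It also shows why such a measure suits time warping: uniformly stretching a pattern by RD preserves serial order exactly, whereas time reversal inverts it.

```python
import numpy as np


def warp(times, rd):
    """Uniformly stretch spike or response times by relative duration rd (1 = original)."""
    return np.asarray(times, dtype=float) * rd


def reverse(times, duration):
    """Time-reverse a pattern of the given duration."""
    return duration - np.asarray(times, dtype=float)


def rank(a):
    return np.argsort(np.argsort(a))  # rank of each element; assumes no ties


def rank_order_similarity(times_a, times_b):
    """Spearman correlation between per-neuron response times in two conditions."""
    return np.corrcoef(rank(times_a), rank(times_b))[0, 1]


peaks = np.array([10.0, 30.0, 20.0, 50.0])  # toy per-neuron peak times (ms)
print(rank_order_similarity(peaks, warp(peaks, 2.0)))      # stretched: order preserved
print(rank_order_similarity(peaks, reverse(peaks, 60.0)))  # reversed: order inverted
```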

Fig 5. Context-dependent learning of sequence information.

(a) For testing on time-warped patterns, the network was trained on random spike trains embedding a single pattern. (b) The trained network responded sequentially to the original pattern and to stretched patterns with two untrained lengths (i.e., relative durations RD of 1.5 and 2). (c) Similarities of sequential order between the responses to the original and the two untrained patterns were measured before (blue) and after (purple) learning. Independent simulations were performed 20 times, and p-values were calculated by two-sided Welch’s t-test. (d) The input spike pattern used in the task of Fig 3 (left) was used to quantify the degree of time warping that our network model can tolerate. The performance of gating and non-gating models trained on input patterns with various relative durations is shown. (e) A time-inverted spike pattern (bottom) was generated from an original pattern (top). (f) The network was exposed to the original pattern in (e) during learning, and its responses to both the original and time-inverted patterns were tested after learning. Both patterns activated a single cell assembly. (g) The network was exposed to both the original and time-inverted patterns during learning as well as testing. Two assemblies with different preferred patterns were formed. For visualization purposes, only 160 of the 500 neurons are shown in (f) and (g).
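Panel (c) uses a two-sided Welch's t-test, which does not assume equal variances in the two groups. A minimal sketch of the statistic and the Welch-Satterthwaite degrees of freedom follows; the two-sided p-value can then be obtained from the t distribution, for example with SciPy's `scipy.stats.ttest_ind(a, b, equal_var=False)`. The function name is ours.

```python
import numpy as np


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    va = a.var(ddof=1) / a.size  # squared standard error of the mean of a
    vb = b.var(ddof=1) / b.size  # squared standard error of the mean of b
    t = (a.mean() - b.mean()) / np.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (a.size - 1) + vb ** 2 / (b.size - 1))
    return t, df


t, df = welch_t([1.0, 2.0, 3.0], [4.0, 5.0, 6.0, 7.0])
```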

Cell assembly detection in large-scale calcium imaging data

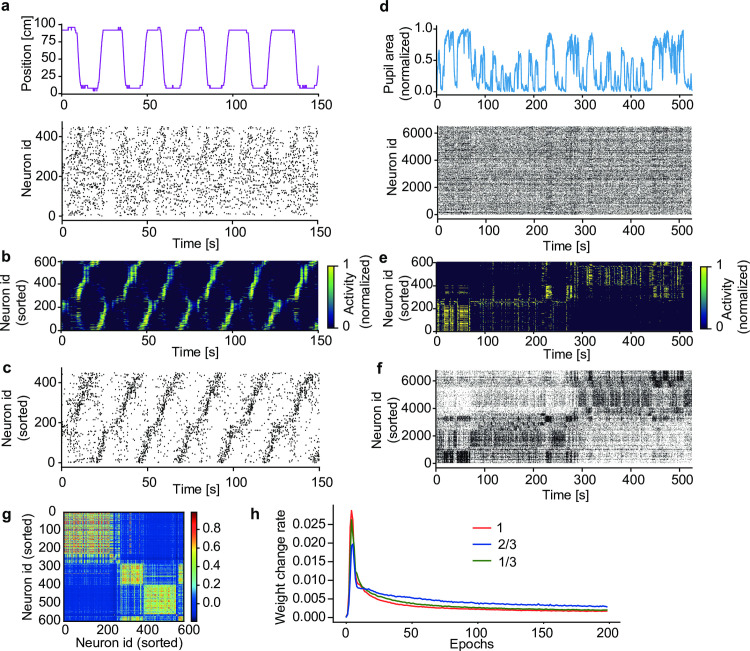

A virtue of our model is its applicability to the analysis of large-scale neural recording data. We show this using two calcium imaging datasets. The first dataset contains the activities of 452 hippocampal CA1 neurons recorded from mice running back and forth along a linear track between two rewarded sites (Fig 6A, top) [23]. Repetitive sequential activations of place cells were previously reported in these data (https://github.com/zivlab/island). For use with our model, we binarized the data by thresholding the activity of each neuron at 50% of its maximal intensity (Fig 6A, bottom). After training, model neurons detected groups of input spike trains that tended to arrive in sequences, each of which was preferentially observed at a particular position on the track in a particular running direction (Fig 6B). Sorting the activities of hippocampal neurons according to the sequential firing of model neurons (Methods) revealed place-cell sequences without reference to the behavioral data (Fig 6C).
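The preprocessing and sorting steps can be sketched as follows. Binarization follows the text (a per-neuron threshold at 50% of the maximum); whether the comparison is strict is not stated, so `>=` is an assumption. For sorting, each recorded neuron is assigned to the model neuron with the highest Pearson correlation (the correlation measure is also an assumption) and then ordered by that model neuron's position in the onset-time ordering. Function names are hypothetical.

```python
import numpy as np


def binarize(F, frac=0.5):
    """F: (neurons, time) calcium traces; threshold each neuron at frac of its own max."""
    return (F >= frac * F.max(axis=1, keepdims=True)).astype(np.uint8)


def zscore_rows(X):
    X = X - X.mean(axis=1, keepdims=True)
    sd = X.std(axis=1, keepdims=True)
    return X / np.where(sd > 0, sd, 1.0)  # leave constant rows at zero


def sort_by_model(data, model, model_order):
    """Order recorded neurons by the onset order of their best-matching model neuron.

    data: (recorded neurons, T); model: (model neurons, T);
    model_order: model-neuron indices sorted by onset response time.
    """
    C = zscore_rows(data) @ zscore_rows(model).T / data.shape[1]  # Pearson correlations
    best = C.argmax(axis=1)  # most correlated model neuron for each recorded neuron
    position = np.empty(len(model_order), dtype=int)
    position[model_order] = np.arange(len(model_order))
    return np.argsort(position[best], kind="stable")


# Toy example: three model neurons active in successive windows
model = np.zeros((3, 30))
model[0, :10] = model[1, 10:20] = model[2, 20:] = 1.0
data = model[[1, 2, 0]]  # recorded neurons are shuffled copies
order = sort_by_model(data, model, model_order=np.array([2, 0, 1]))
```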

Fig 6. Detecting salient activity patterns in calcium imaging data.

(a) The positions of a mouse (top) on a linear track and calcium imaging data of activity of 452 hippocampal CA1 neurons (bottom) were obtained from previously recorded data [23]. (b) The learned activities of model neurons were sorted according to their onset response times. (c) Each CA1 neuron was associated with the model neuron having the highest correlation with that CA1 neuron. Then, the CA1 neurons were sorted according to the serial order of model neurons shown in (b). (d) The time course of the normalized pupil area (top) and simultaneously recorded activities of 6,532 visual cortical neurons (bottom) were calculated from previously recorded data [24,25]. (e) Activities of the trained network model were sorted according to onset response times (Methods). (f) Activities of the cortical neurons were sorted as in (c). (g) The correlation matrix of the population of network neurons is shown. (h) Learning curves over 200 epochs are shown for various numbers of input neurons. Red, blue and green traces show learning curves for 1, 2/3, and 1/3 times the original 6,532 neurons, respectively. The weight change rate was calculated as the ratio of the sum of the absolute values of synaptic changes to the sum of the absolute values of all synaptic weights.
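The learning-curve measure in panel (h) is a simple ratio. In the sketch below, which synaptic weights form the denominator (before or after an update) is not stated in the caption, so the pre-update weights are assumed; the function name is hypothetical.

```python
import numpy as np


def weight_change_rate(w_before, w_after):
    """Sum of absolute synaptic changes divided by the sum of absolute weights.

    Assumes the denominator uses the pre-update weights.
    """
    w_before = np.asarray(w_before, dtype=float)
    w_after = np.asarray(w_after, dtype=float)
    return np.abs(w_after - w_before).sum() / np.abs(w_before).sum()


w0 = np.array([[1.0, -1.0], [2.0, 0.0]])
w1 = np.array([[1.1, -1.0], [2.0, 0.0]])
rate = weight_change_rate(w0, w1)  # 0.1 / 4
```

Tracked once per epoch, this ratio decays toward zero as learning converges, which is how a learning curve like panel (h) can be read.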

Our second example is from the visual cortex of mice running on an air-floating ball [24]. The 525-s-long dataset [25] contains the activity of 6,532 neurons recorded by two-photon calcium imaging from the visual cortex, as well as behavioral data (running speed, pupil area, and whisking) monitored simultaneously with an infrared camera (Fig 6D) (https://figshare.com/articles/dataset/Recordings_of_ten_thousand_neurons_in_visual_cortex_during_spontaneous_behaviors/6163622/4). Because of the large data size, detecting cell assemblies in this dataset is computationally challenging. After training, the network model formed several neural ensembles, each of which displayed distinct spatiotemporal response patterns (Fig 6E). Interestingly, these neural ensembles showed their maximal responses at different periods of time, and the pupil area also changed depending on which neural ensembles were active (Fig 6D and 6E). By sorting cortical neurons according to the response patterns of model neurons (Methods), we found repetitions of distinct cell assemblies in the visual cortex (Fig 6F). The active cell assemblies changed between the early (< 280–290 s) and late epochs of spontaneous behavior, although there was no clear difference in behavior (S7 Fig). To find the cell-assembly structures, we grouped co-activated model neurons (Fig 6G; see Methods). Unexpectedly, the time necessary for learning did not change much with data size, or was even slightly shorter for larger data sizes (Fig 6H). Presumably, this counterintuitive result arose because each cell assembly was represented by more neurons in larger data [19].
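The grouping procedure for Fig 6G is specified in Methods, which are not reproduced here. As a hypothetical stand-in, the sketch below links model neurons whose response correlation exceeds a threshold and returns the connected components of that graph as groups. The threshold of 0.5 and the function name are assumptions, and the paper's actual procedure may differ.

```python
import numpy as np


def coactive_groups(R, threshold=0.5):
    """R: (model neurons, T) responses. Returns one group label per neuron.

    Neurons are linked when their correlation exceeds `threshold`;
    groups are the connected components of the resulting graph.
    """
    C = np.corrcoef(R)
    adj = C > threshold
    n = C.shape[0]
    labels = -np.ones(n, dtype=int)
    current = 0
    for start in range(n):
        if labels[start] >= 0:
            continue
        stack = [start]              # depth-first search over linked neurons
        labels[start] = current
        while stack:
            i = stack.pop()
            for j in np.flatnonzero(adj[i]):
                if labels[j] < 0:
                    labels[j] = current
                    stack.append(j)
        current += 1
    return labels


# Toy responses: neurons 0 and 1 co-active early, neurons 2 and 3 co-active late
R = np.zeros((4, 30))
R[0, :10] = R[1, :10] = 1.0
R[2, 20:] = R[3, 20:] = 1.0
groups = coactive_groups(R)
```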

Because the gating mechanism is crucial for context-dependent computation, we studied the effect of gating on the analysis of neural recording data. We first applied the model without recurrent gating (which is equivalent to the previous feedforward network [19]) to the hippocampal CA1 data. Interestingly, without recurrent gating, the model could not separate the two sequences corresponding to forward and backward runs (S8A Fig). Next, we applied the non-gating model to the data recorded from the mouse visual cortex. The model showed structured activity patterns (S8B Fig) and detected cell assemblies (S8C Fig). However, compared to the gating model, the non-gating model generated more monotonous responses, suggesting that the detected cell assemblies were contaminated (S8D Fig). Further, the responses of the gating model were significantly more correlated with the various behaviors of the mice than those of the non-gating model (S8E Fig). These results show the crucial contribution of recurrent gating to cell assembly detection.

Redundant information representations on dendrites

Evidence from the visual cortex [26], retrosplenial cortex [27], and hippocampus [28] suggests that the representations of sensory and environmental information in cortical neurons are more redundant on the dendrites than on the soma. The dendrites can have multiple receptive fields, while the soma generally represents only one of these receptive fields. The soma is likely to access information represented in a subset of the dendritic branches that share the same receptive field. Similar redundant coding emerges in our model of somato-dendritic sequence learning.

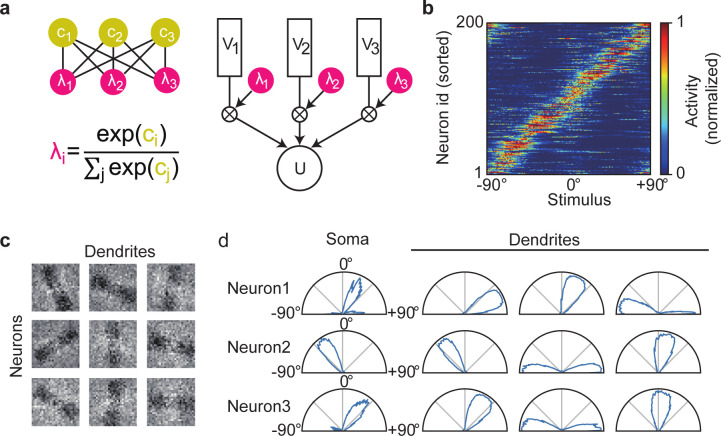

To show this, we constructed a recurrent network of neurons with three dendritic compartments and simulated how the model learns orientation tuning. The individual dendritic branches were assumed to undergo independent recurrent gating and to compete with each other through a softmax function (Fig 7A; see Methods). Every 80 ms, we presented a 40-ms-long random sequence of noisy binary images of oriented bars. Each image was 28×28 (= 784) pixels, and each bar had a width of 7 pixels. Each pixel was flipped with a probability of 0.1, and a circular mask was applied to the images to suppress edge artifacts. Input neurons encoded the current value of a pixel by firing at 10 Hz.
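The stimulus described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: the function names are hypothetical, the bar is assumed to pass through the image centre, "on" pixels are assumed to fire at 10 Hz while "off" pixels stay silent, and spikes are drawn with a 1-ms Bernoulli approximation to a Poisson process.

```python
import numpy as np


def oriented_bar_image(theta_deg, size=28, width=7, flip_p=0.1, rng=None):
    """Noisy binary image of a bar through the image centre at angle theta_deg.

    Hypothetical helper: the bar geometry and the mask radius are assumptions.
    """
    rng = np.random.default_rng() if rng is None else rng
    c = size // 2                      # integer centre so a 7-pixel bar is symmetric
    y, x = np.mgrid[0:size, 0:size]
    xs, ys = x - c, y - c
    th = np.deg2rad(theta_deg)
    # Perpendicular distance of each pixel from the bar's centre line.
    dist = np.abs(-np.sin(th) * xs + np.cos(th) * ys)
    img = (dist < width / 2.0).astype(np.uint8)
    # Flip every pixel independently with probability flip_p.
    flips = rng.random(img.shape) < flip_p
    img = np.where(flips, 1 - img, img)
    # Circular mask: pixels outside the inscribed circle are set to 0.
    mask = xs ** 2 + ys ** 2 <= (size / 2.0) ** 2
    return (img * mask).astype(np.uint8)


def poisson_input(image, rate_hz=10.0, duration_ms=40.0, dt_ms=1.0, rng=None):
    """Spike raster of shape (time steps, pixels); 'on' pixels fire at rate_hz.

    Silent 'off' pixels and the Bernoulli (rate * dt) approximation are assumptions.
    """
    rng = np.random.default_rng() if rng is None else rng
    n_steps = int(round(duration_ms / dt_ms))
    p_spike = image.ravel().astype(float) * rate_hz * dt_ms * 1e-3
    return rng.random((n_steps, p_spike.size)) < p_spike
```

For example, `poisson_input(oriented_bar_image(45.0))` returns a 40 × 784 boolean raster for one noisy 45° bar. How the images are concatenated into the 40-ms random sequence is not specified here and is left out of the sketch.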

Fig 7. Redundant dendritic representations of preferred sensory features.

(a) A schematic illustration of the neuron model with three dendritic compartments. The dendritic branches have independent gating factors, which compete with each other by softmax. (b) Somatic responses are shown for all neurons in the trained network. (c) Trained weight matrices are displayed for afferent inputs to three dendritic branches of three example neurons. (d) Somatic and dendritic activities of the three neurons in (c) are shown.

During learning, the competition suppressed dendritic activities that were less correlated with the somatic responses. In the self-organized network, each somatic compartment acquired a unique preferred orientation (Fig 7B). In contrast, the dendritic branches of some neurons displayed different preferred orientations (Fig 7C and 7D). Such redundant representations were not found in neurons with three dendritic compartments without gating (S9 Fig). Thus, the learning rule and recurrent gating proposed in this study may underlie the somatic selection among redundant dendritic representations.

Role of the conventional recurrent synaptic input

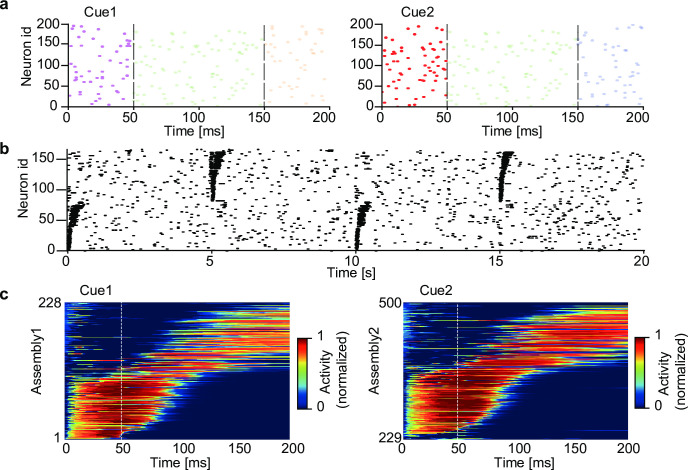

While the multiplicative recurrent input (i.e., recurrent gating) is crucial for segregating complex chunks, what is the role of the additive (i.e., conventional) recurrent input? We show that the additive component is still needed for retrieving chunked sequences, that is, for pattern completion. We simulated a network model with both recurrent gating and non-vanishing additive recurrent inputs. The non-gating recurrent connections were trained with the same rule as the afferent connections. The network received a temporal input containing two mutually overlapping chunks (Fig 8A), and all synaptic connections underwent learning. The trained network formed two cell assemblies responding selectively to either chunk, as did the previous network without additive recurrent connections (S10 Fig). Because only the additive recurrent input, and not recurrent gating, can activate postsynaptic cells, the additive input can generate reverberating activity, which may in turn assist the retrieval of learned sequences. This is indeed what occurred in our simulations: a cue stimulus consisting of the first component pattern of one of the learned chunks enabled the trained network to retrieve the subsequent component patterns of that chunk (Fig 8B and 8C). Thus, in our network model, recurrent gating and additive recurrent inputs contribute to the learning and the retrieval of segmented sequence memory, respectively.

Fig 8. Spontaneous completion of learned sequences.

The two chunks shown in Fig 2 were used for training a network with both recurrent gating and additive recurrent inputs. (a) In the testing phase, only the first component pattern of each chunk (cue 1 or cue 2; dark raster plots), and not the subsequent component patterns (light raster plots), was presented to the network. (b) The raster plot of network activity in the testing phase is shown. Cue 1 and cue 2 were presented alternately every 5 seconds. Neurons were sorted according to their onset response times, and only 160 of the 500 neurons are shown for visualization. (c) Sequential responses of the two assemblies were averaged over 20 trials. Vertical lines indicate the end of cue presentation. The sequential responses were evoked in the learned order.

Discussion

In this study, we constructed a recurrent network of compartmentalized neuron models to explore the neural mechanisms for segmenting temporal input. A major finding is the crucial role of recurrent gating in the context-dependent chunking of complex sequences. Recurrent gating enables the instantaneous network state to regulate the degree of dendro-somatic information transfer in single neurons according to context. In contrast, simple segmentation tasks do not necessarily require recurrent connections. With the help of recurrent gating, the model can detect the fine structure of cell assemblies in large-scale neural recording data.

Our model describes one possible way of integrating dendritic computation into network-level computation. Learning in our model minimizes the prediction error between the soma and dendrites, thus improving the consistency of the responses to synaptic input between the input terminal (dendrites) and the output terminal (soma) of single neurons. This enables the neurons to learn repeated patterns in synaptic input in a self-supervised manner. Previous theoretical studies used the local dendritic potential with a fixed gating factor to predict somatic spike responses [19,22]. We extended the previous learning rule to recurrent connections so that recurrent input helps the dendritic compartment predict the somatic responses by regulating the degree of signal transfer to the soma in a network state-dependent manner. As a consequence of recurrent gating, the soma can respond differently to the same sequence component depending on the preceding element in the sequence, whereas the dendrites respond similarly to the same component. Similarly to our model, some neurons in the premotor nucleus HVC of canaries change their responses to a song element depending on the preceding phrases [13]. However, the responses of HVC neurons can also vary according to the following phrases. Such response modulations are likely to represent action planning, which was not considered in this study. Previous experimental and theoretical studies suggested that dendritic inhibition implements a gating operation on synaptic input [29–32]. The role of inhibition in the context-dependent segmentation of input should be investigated further.

Previously, spike-timing-dependent plasticity was used to detect recurring patterns in input spike trains in a recurrent neural network without recurrent gating [18]. While that model successfully discriminated relatively simple sequences, it could not discriminate complex sequences involving, for instance, overlapping spatiotemporal patterns. Our results suggest that additive recurrent connections are unlikely to be crucial for learning hierarchically organized sequences: these connections are necessary for retrieving chunked sequences but not for learning them. Instead, such learning crucially relies on recurrent gating and its context-dependent tuning. Thus, multiplicative and additive recurrent connections have a clear division of labor in the present model. Recurrent synapses were shown to amplify the responses of cortical neurons having similar receptive fields [33], and this amplification resembles the selective amplification of a particular sequence component shown in this study. Further, postsynaptic inhibition was previously shown to multiplicatively modulate the membrane potential of visual neurons in the locust [34]. In addition, a recent study suggests that thalamocortical feedback is necessary for reliable propagation of a synaptically evoked dendritic depolarization to the soma in layer-5 pyramidal neurons [35]. This phenomenon is reminiscent of the gating mechanism proposed in this study, suggesting that such a mechanism may be realized on a large spatial scale by cortico-thalamocortical recurrent circuits. However, the biological mechanisms of recurrent gating remain open for future studies.

Some neural network models in artificial intelligence also use gating operations. A well-known example is Long Short-Term Memory (LSTM) for sequence learning and control [36]. The most general form of LSTM contains three types of gates, i.e., input, output, and forget gates, which are optimized through supervised learning. In an interesting attempt within the learning-to-learn paradigm [37–39], LSTM was coupled with another network model for self-supervised learning of visual features [40]. In another LSTM-inspired model, neural dynamics with oscillatory gated recurrent input were used to convert spatial activity patterns into temporal sequences in working memory and motor control [21]. In contrast to LSTM, our model learns the optimal gating of dendro-somatic information transfer without supervision, by seeking a self-consistent solution to the optimization problem. Our model also has only a single type of gate, which likely corresponds to the input gate of LSTM, to regulate the current flow into the output terminal (soma) of the neuron. In LSTM, however, the forget gate is thought to have the greatest influence on learning performance [41,42]. It is intriguing to ask whether and how recurrent networks can learn an optimal forget gate, in addition to the proposed gate, for unsupervised learning of hierarchical sequences.

When multiple dendritic branches compete for repeated patterns of synaptic input to a single neuron, recurrent gating enables these branches to learn different input features. Consequently, in each neuron, the dendrites learn more redundant representations of input information than the soma. In pyramidal neurons in the rodent primary visual cortex, the dendritic branches have heterogeneous orientation preferences while the somata have unique orientation preferences [26]. Similarly, in retrosplenial cortex [27] and place cells in the hippocampal CA3 [28], the dendritic branches have multiple receptive fields whereas the somata have unique receptive fields. Our model provides a possible neural mechanism for these redundant representations on the dendrites. When the environment suddenly changes, such redundancy may allow neural networks to quickly remodel their responses to adapt to the novel situation. However, the functional benefit of this redundancy has yet to be clarified.

A practically interesting feature of our model is its applicability to large-scale neural recording data. Various mathematical tools have been proposed for this purpose based on methods from computer science and machine learning [43–47]. However, many of these methods suffer from the time-consuming combinatorial search needed to exhaustively find activity patterns in a neural population. In contrast, our model with a biologically inspired learning rule is free from this problem, presumably for the same reason that cortical circuits do not face it. Indeed, the present data from the mouse visual cortex contain more than 6,000 active neurons, yet our analysis revealed clear evidence of cell-assembly structures. This result is interesting because it suggests that cell assemblies underlie the multidimensional neural representations of spontaneous behavior in mice [24]. As the size of neural recording data increases rapidly, the low computational burden of this model and its high sensitivity to structured activity patterns are major advantages.

Methods

Neural network model

Our network model consists of Nin input neurons and N recurrently connected neurons. Each neuron in the recurrent network consists of two compartments: a somatic and a dendritic compartment. Following a previous single-neuron model [22], the somatic response can be approximated as an attenuated version of the dendritic potential V. In our recurrent network model, the dendro-somatic signal transfer is regulated by the gating factor λ, which depends on recurrent synaptic inputs through the local potential c as follows:

| (1) |

| (2) |

| (3) |

where the subscript i is the neuron index, are the N-dimensional weight vector of recurrent gating on the local potential c, and the N-dimensional weight vector of additive recurrent connections on the dendrite of the i-th neuron. In Eq (2), gG and will be defined later. The Nin-dimensional vector represents the weights of afferent inputs. Except in Fig 8, we set as . The variables enet and eext are the post-synaptic potentials evoked by recurrent and afferent inputs, respectively. The initial values of and were generated by Gaussian distributions with zero mean and the standard deviations of and , respectively. All three types of connections are fully connected.

The dynamics of the somatic membrane potential are described as

| (4) |

where τ = 15 ms is the membrane time constant. The last term in Eq (4) represents a peri-somatic recurrent inhibition with uniform inhibitory weights of the strength , with J = 0.5 in all simulations. No self-inhibition is considered. Further, and are the standardized potentials calculated as

| (5) |

| (6) |

where and are exponentially decaying averages of the membrane potential and its square of the gating compartment,

| (7) |

| (8) |

respectively (0<γ<1). The values of and are calculated from Vi in a similar fashion. As we have shown previously [19], the standardization enables the model to avoid a trivial solution. Without standardization, our learning rule can minimize the error between the somatic and dendritic activities to zero by making both activities simultaneously zero (see Eq (18)). This trivial solution occurs when all synaptic weights vanish after learning. The standardization prevents the trivial solution by maintaining temporal fluctuations of O(1) in the somatic membrane potential, thus ensuring successful learning of nontrivial temporal features. In Eq (2), the gating function gG(x) is defined as

| (9) |

where g0 = 0.7, βG = 5 and θG = 0.5.
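The online standardization of Eqs (5)–(8) can be sketched as below. Because the equations themselves are not reproduced in this text, the discrete updates m ← (1 − γ)m + γx for the running mean and m2 ← (1 − γ)m2 + γx² for the running mean square, and the choice to update the averages before standardizing, are assumptions; `RunningStandardizer` is a hypothetical name.

```python
import numpy as np


class RunningStandardizer:
    """Standardize a signal with exponentially decaying mean and mean-square estimates."""

    def __init__(self, gamma=3e-4, mean0=0.0, sq0=1.0, eps=1e-8):
        self.gamma = gamma   # decay rate, 0 < gamma < 1
        self.mean = mean0    # running average of x
        self.sq = sq0        # running average of x**2
        self.eps = eps       # floor on the variance to avoid division by zero

    def __call__(self, x):
        g = self.gamma
        self.mean = (1.0 - g) * self.mean + g * x
        self.sq = (1.0 - g) * self.sq + g * x * x
        var = np.maximum(self.sq - self.mean ** 2, self.eps)
        return (x - self.mean) / np.sqrt(var)
```

Applied to a vector of potentials, the same object standardizes every neuron independently, because all operations are elementwise. A small γ, such as the 0.0003 used in most simulations, averages over roughly 1/γ ≈ 3,300 time steps, so the standardized potential keeps fluctuations of order one, which is what prevents the trivial all-zero solution discussed above.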

The somatic compartment generates a Poisson spike train with saturating instantaneous firing rate given as

| (10) |

where ϕ0 = 0.05 kHz, β = 5 and θ = 1 throughout the present simulations.
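The spike generation of Eq (10) can be sketched as below. The logistic form ϕ(x) = ϕ0 / (1 + exp(−β(x − θ))) is an assumption consistent with the description of a saturating rate with parameters ϕ0, β, and θ; the exact form of Eq (10) is not reproduced here. Spikes are drawn with a Bernoulli approximation, which is accurate only when ϕ0·dt ≪ 1 (here at most 0.05 per 1-ms step).

```python
import numpy as np

PHI0 = 0.05   # maximal rate in kHz (0.05 kHz = 50 Hz), from the text
BETA = 5.0    # slope, from the text
THETA = 1.0   # threshold, from the text


def firing_rate(u_hat, phi0=PHI0, beta=BETA, theta=THETA):
    """Assumed logistic rate function (kHz) of the standardized somatic potential."""
    return phi0 / (1.0 + np.exp(-beta * (np.asarray(u_hat, dtype=float) - theta)))


def sample_spikes(u_hat, dt_ms=1.0, rng=None):
    """One time step of Poisson spiking: P(spike) ~= rate * dt (needs rate * dt << 1)."""
    rng = np.random.default_rng() if rng is None else rng
    rate = firing_rate(u_hat)
    return rng.random(rate.shape) < rate * dt_ms
```

At the threshold (standardized potential equal to θ = 1) the assumed rate is half of ϕ0, i.e., 25 Hz.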

Afferent inputs are described as Poisson spike trains of Nin input neurons:

| (11) |

where δ is the Dirac’s delta function and is the time of the q-th spike generated by the k-th input neuron. The postsynaptic potential evoked by the k-th input is calculated as

| (12) |

| (13) |

where τs = 5 ms and e0 = 25. Note that the parameter τ in Eqs (12) and (13) is the membrane time constant used in Eq (4). The scaling by τ−1 ensures that the last term in Eq (12) has units of current. The postsynaptic potentials induced by recurrent inputs, enet, are calculated similarly.
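A postsynaptic potential with the time constants quoted above can be generated by two coupled first-order equations, as sketched below. This particular construction, a synaptic current that jumps by e0/τ at each spike and decays with τs, driving a membrane-like variable that decays with τ, is an assumption chosen to illustrate the role of the τ−1 scaling; it is not necessarily identical to Eqs (12) and (13). For a single spike at t = 0 it yields the difference-of-exponentials kernel e(t) = e0 τs/(τ − τs) (exp(−t/τ) − exp(−t/τs)), which can be used to check the integration.

```python
import numpy as np

TAU = 15.0    # membrane time constant (ms), from the text
TAU_S = 5.0   # synaptic time constant (ms), from the text
E0 = 25.0     # PSP scale, from the text


def psp_trace(spike_times_ms, t_max_ms=100.0, dt_ms=0.01, tau=TAU, tau_s=TAU_S, e0=E0):
    """Euler integration of an assumed two-variable synaptic filter:
        dI/dt = -I / tau_s + (e0 / tau) * sum_q delta(t - t_q)
        de/dt = -e / tau + I
    Returns the time axis and the postsynaptic potential e(t)."""
    n = int(round(t_max_ms / dt_ms))
    t = np.arange(n) * dt_ms
    spikes_per_step = np.zeros(n, dtype=int)
    for s in spike_times_ms:
        k = int(round(s / dt_ms))
        if 0 <= k < n:
            spikes_per_step[k] += 1
    current, e = 0.0, 0.0
    out = np.empty(n)
    for k in range(n):
        current += spikes_per_step[k] * e0 / tau   # each spike adds a jump of e0/tau
        out[k] = e
        e += dt_ms * (-e / tau + current)          # membrane-like variable, decays with tau
        current += dt_ms * (-current / tau_s)      # synaptic current, decays with tau_s
    return t, out
```

Because the filter is linear, the trace for a spike train is the sum of single-spike kernels, so the same routine serves both afferent and recurrent inputs.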

The optimal learning rule for recurrent gated neural networks

We derive an optimal learning rule for the gating recurrent neural network in the spirit of minimization of regularized information loss (MRIL), which we recently proposed for single neurons [19]. The objective function is the KL-divergence between two Poisson distributions associated with the somatic and dendritic activities:

| (14) |

where the angle brackets denote averaging over input spike trains, and WV and Wc are the weight matrices of synaptic inputs onto the dendrite V and onto the local potential c for recurrent gating, respectively. The gated dendritic potential is defined as

| (15) |

where gL = τ−1. The crucial point in Eq (15) is that the degree of gating depends on c, and hence on network states through Eq (1).
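The objective in Eq (14) rests on the closed-form KL divergence between two Poisson distributions, D_KL(Poisson(λp) ‖ Poisson(λq)) = λp log(λp/λq) − λp + λq. The sketch below evaluates this expression and checks it against a direct sum over spike counts. Which of the somatic and dendritic distributions plays the role of p is not restated here, so the function is written for a generic pair of rates; the function names are hypothetical.

```python
import math


def kl_poisson(lam_p, lam_q):
    """Closed form of KL(Poisson(lam_p) || Poisson(lam_q))."""
    if lam_q <= 0:
        raise ValueError("lam_q must be positive")
    if lam_p == 0:
        return lam_q          # only k = 0 has mass under p, contributing log(1 / exp(-lam_q))
    return lam_p * math.log(lam_p / lam_q) - lam_p + lam_q


def kl_poisson_bruteforce(lam_p, lam_q, k_max=200):
    """Direct sum of p(k) * log(p(k) / q(k)) over spike counts k (lam_p > 0)."""
    total = 0.0
    for k in range(k_max + 1):
        log_p = k * math.log(lam_p) - lam_p - math.lgamma(k + 1)
        log_q = k * math.log(lam_q) - lam_q - math.lgamma(k + 1)
        total += math.exp(log_p) * (log_p - log_q)
    return total
```

The divergence vanishes only when the two rates coincide, which is why minimizing it drives the dendritic prediction toward the somatic activity.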

The weights of all synaptic connections on the dendritic compartment (i.e., the weights of both afferent input and additive recurrent input) obey learning rules similar to the previously derived rule [19] except that the degree of gating is no longer constant in the present model:

| (16) |

where the function is defined as

| (17) |

The learning rule for recurrent gating is novel and is derived by gradient descent as follows:

| (18) |

where the function is defined as

| (19) |

In the present simulations, we used an online version of the above learning rules:

| (20) |

| (21) |

where the learning rates were given as , and .
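To illustrate how a rule of the form of Eqs (16) and (20) can follow from gradient descent on a Poisson KL divergence, the sketch below treats a simplified case that is an assumption, not the model's full rule: a fixed somatic rate λs serves as the target, the dendritic rate is λd = ϕ(w·e) with a logistic ϕ (assumed here, using ϕ0 = 0.05, β = 5, θ = 1), and gating and standardization are omitted. Differentiating λs log(λs/λd) − λs + λd with respect to w gives the descent step Δw = η(λs − λd) ϕ′(w·e)/ϕ(w·e) e, which has the familiar form of dendritic prediction-error learning [22].

```python
import numpy as np


def phi(v, phi0=0.05, beta=5.0, theta=1.0):
    """Assumed logistic rate function."""
    return phi0 / (1.0 + np.exp(-beta * (v - theta)))


def dphi(v, phi0=0.05, beta=5.0, theta=1.0):
    """Derivative of the assumed logistic rate function."""
    s = 1.0 / (1.0 + np.exp(-beta * (v - theta)))
    return phi0 * beta * s * (1.0 - s)


def kl_loss(w, e, rate_soma):
    """KL(Poisson(rate_soma) || Poisson(phi(w . e))) for one time bin."""
    rate_dend = phi(w @ e)
    return rate_soma * np.log(rate_soma / rate_dend) - rate_soma + rate_dend


def kl_descent_step(w, e, rate_soma, eta=1e-3):
    """One gradient-descent step on kl_loss with respect to the weights w."""
    v = w @ e
    rate_dend = phi(v)
    return w + eta * (rate_soma - rate_dend) * dphi(v) / rate_dend * e
```

In the online setting, e would be the vector of postsynaptic potentials at the current time step and λs an estimate of the somatic activity, so the update is applied at every step with a small learning rate.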

The optimal learning rule for multi-dendrite neuron model

For the multi-dendrite neuron model used in Fig 7, the membrane potential of the k-th dendrite of the i-th neuron and the dynamics of the corresponding somatic potential were calculated as

| (22) |

| (23) |

where K is the number of dendrites in each neuron. In this study, K = 3 for all neurons. We assumed that the dendritic compartments compete for the somatic activity of each neuron, governed by recurrent gating with a softmax function:

| (24) |

where ci,k is calculated as

| (25) |

Since , it is straightforward to derive the update rule for connections onto the dendrites:

| (26) |

where

| (27) |

Using the fact that we can derive the update rule for recurrent gating as follows:

| (28) |

where

| (29) |
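With softmax gating, the gate of each dendrite depends on all K local potentials of the neuron. The sketch below computes the softmax gates and their Jacobian, ∂λk/∂cl = λk(δkl − λl), which is the standard derivative of the softmax and presumably the kind of identity used in deriving Eq (28). The function names are hypothetical, and how the gated dendritic potentials are combined in Eq (23) is not reproduced here.

```python
import numpy as np


def softmax_gates(c):
    """Gating factors lambda_k = exp(c_k) / sum_l exp(c_l) for one neuron's K dendrites."""
    z = np.exp(c - np.max(c))   # subtracting the max avoids overflow
    return z / z.sum()


def softmax_jacobian(c):
    """J[k, l] = d lambda_k / d c_l = lambda_k * (delta_kl - lambda_l)."""
    lam = softmax_gates(c)
    return np.diag(lam) - np.outer(lam, lam)
```

Because the gates always sum to one, each column of the Jacobian sums to zero: raising one branch's local potential can only shift gating weight away from the other branches, which is the competition between dendrites described in the Results.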

Normalized mutual information score

In S3 Fig, we determined the estimated labels of the output response by Affinity Propagation [48], and then calculated the normalized mutual information score [49] between the estimated labels X and the true label Y as

| (30) |

where I(X;Y) is the mutual information between X and Y and H(X) is the entropy of X.
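The score of Eq (30) can be computed directly from label counts, as sketched below. Because Eq (30) is not reproduced here, the normalization by the geometric mean √(H(X)H(Y)) is an assumption; with that choice the result should agree with scikit-learn's `normalized_mutual_info_score(..., average_method="geometric")`. The clustering step (Affinity Propagation [48]) is not included, and the function names are hypothetical.

```python
import numpy as np


def entropy(labels):
    """Shannon entropy (nats) of a discrete labeling."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return -np.sum(p * np.log(p))


def mutual_information(x, y):
    """Mutual information (nats) between two labelings of the same samples."""
    _, xi = np.unique(np.asarray(x), return_inverse=True)
    _, yi = np.unique(np.asarray(y), return_inverse=True)
    joint = np.zeros((xi.max() + 1, yi.max() + 1))
    np.add.at(joint, (xi, yi), 1)            # contingency table
    joint /= joint.sum()
    expected = joint.sum(axis=1, keepdims=True) @ joint.sum(axis=0, keepdims=True)
    nz = joint > 0
    return np.sum(joint[nz] * np.log(joint[nz] / expected[nz]))


def nmi(x, y):
    """Assumed normalization: I(X;Y) / sqrt(H(X) H(Y))."""
    hx, hy = entropy(x), entropy(y)
    if hx == 0 or hy == 0:
        return 1.0 if hx == hy else 0.0      # convention for constant labelings
    return mutual_information(x, y) / np.sqrt(hx * hy)
```

The score is invariant to relabeling, so an estimated clustering that matches the true labels up to a permutation of label names scores 1.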

The Spearman’s rank-order correlation

In Fig 5C, we quantified the extent to which the order of sequential responses was preserved in network activity. To this end, we calculated the Spearman’s rank-order correlation [50] between network responses as

$$\rho = 1 - \frac{6\sum_{n=1}^{N} D_n^{2}}{N\,(N^{2}-1)} \qquad (31)$$

where N is the number of neurons in the network and Dn is the difference in the ranks of the n-th neuron between two datasets when sorted according to their onset response times.
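Eq (31) can be evaluated from onset times as sketched below, assuming no tied onset times (with ties, rank averaging as in `scipy.stats.spearmanr` would be needed). The helper names are hypothetical.

```python
import numpy as np


def onset_ranks(onset_times):
    """Rank (1..N) of each neuron when sorted by onset response time; assumes no ties."""
    order = np.argsort(onset_times)
    ranks = np.empty(len(onset_times), dtype=int)
    ranks[order] = np.arange(1, len(onset_times) + 1)
    return ranks


def spearman_rho(onsets_a, onsets_b):
    """Spearman rank-order correlation between two onset-time orderings."""
    d = onset_ranks(onsets_a) - onset_ranks(onsets_b)   # D_n in Eq (31)
    n = len(d)
    return 1.0 - 6.0 * np.sum(d ** 2) / (n * (n ** 2 - 1))
```

A value of 1 means that the order of sequential responses is fully preserved between the two datasets, and −1 means that it is exactly reversed.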

The capacity of gating recurrent network

In S5A Fig, we considered networks of sizes 300 and 600. For each network, learning was performed with 3, 5, and 7 sequences. These sequences were randomly selected from the 4! = 24 permutations of the sequence a-b-c-d. After training, we evaluated the performance of the model by calculating the index SI of feature selectivity as

where K is the number of stimuli (or the number of the corresponding assemblies) and rk is the population-averaged activity of the k-th assembly. Note that 0≤SI≤1. The brackets <r>k and <r>\k refer to temporal averages during the presentation of the k-th stimulus or all stimuli except the k-th stimulus, respectively.
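The task sequences can be drawn as sketched below. That the 3, 5, or 7 sequences are distinct (sampled without replacement) is an assumption, as is the helper name `sample_sequences`.

```python
import itertools
import random


def sample_sequences(n_sequences, components="abcd", seed=None):
    """Draw n_sequences distinct orderings of the components (without replacement)."""
    perms = list(itertools.permutations(components))   # 4! = 24 orderings for a-b-c-d
    rng = random.Random(seed)
    return ["-".join(p) for p in rng.sample(perms, n_sequences)]
```

For example, `sample_sequences(5, seed=0)` returns five distinct strings such as orderings of "a-b-c-d"; because all sequences share the same four components, they overlap heavily, which is what makes the task demanding.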

Neural sorting algorithms

In all figures except Fig 6E, neurons in the trained network were sorted according to the onset response times of these neurons. In Fig 6E, we first grouped neurons such that all pairs in a group had a correlation coefficient greater than 0.2. We then sorted the resultant groups based on their onset response times. In Fig 6C and 6F, we first sorted model neurons based on their peak response times. We then sorted the experimental data by associating each cortical neuron with a model neuron showing the highest correlation.
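The association step used for Fig 6C and 6F can be sketched as below: model neurons are ordered by peak response time, each recorded neuron is assigned to the model neuron with the highest correlation, and recorded neurons inherit the order of their assigned model neurons. Using the Pearson correlation over time bins is an assumption, the function names are hypothetical, and the correlation-threshold grouping used for Fig 6E is not included.

```python
import numpy as np


def zscore_rows(a):
    """Z-score each row over time; constant rows are left at zero."""
    a = a - a.mean(axis=1, keepdims=True)
    sd = a.std(axis=1, keepdims=True)
    return a / np.where(sd > 0, sd, 1.0)


def sort_by_association(model_act, data_act):
    """model_act: (n_model, T) model responses; data_act: (n_data, T) recorded activity.

    Returns the model order (by peak time), the data order, and each recorded
    neuron's best-matching model neuron.
    """
    model_order = np.argsort(np.argmax(model_act, axis=1), kind="stable")
    rank_of_model = np.empty(len(model_order), dtype=int)
    rank_of_model[model_order] = np.arange(len(model_order))
    # Pearson correlation between every recorded neuron and every model neuron.
    corr = zscore_rows(data_act) @ zscore_rows(model_act).T / data_act.shape[1]
    best_model = np.argmax(corr, axis=1)
    # Recorded neurons inherit the serial position of their associated model neuron.
    data_order = np.argsort(rank_of_model[best_model], kind="stable")
    return model_order, data_order, best_model
```

Because the expensive step is a single correlation matrix between the recorded and model neurons, the sorting scales to thousands of recorded neurons without any combinatorial search.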

Simulation parameters

The values of the parameters used in the present simulations are as follows:

- Figs 2, 4, 5, and 8 and S1, S2, S6, and S10 Figs: N = 500, Nin = 2,000, and γ = 0.0003;
- Fig 3 and S3 and S4 Figs: N = 1,200, Nin = 2,000, and γ = 0.0003;
- Fig 6A–6C and S4A Fig: N = 600, Nin = 452, and γ = 0.0003;
- Fig 6D–6F and S8B and S8C Figs: N = 600, Nin = 6,532, and γ = 0.00005;
- Fig 7 and S9 Fig: N = 200, Nin = 28×28, and γ = 0.0003.

Usually, the network was trained for 1,000 seconds. In Fig 6, the input spike trains constructed from experimental data were repeated 200 times during training.

Data and Code

All numerical datasets necessary to replicate the results shown in this article can easily be generated by numerical simulations with the software code provided below. No datasets were generated during this study. All code was written in Python 3 with numpy 1.17.3 and scipy 0.18.1. Example program code used for the present numerical simulations and data analysis is available at https://github.com/ToshitakeAsabuki/dendritic_gating.

Supporting information

The correlation coefficient between somatic and dendritic activity during learning in the task considered in Fig 2 is shown. Here, the 1,000-s-long learning period was divided into multiple training sections, and the correlation coefficient between somatic and dendritic activity was calculated in each section. The solid line and shaded area (too narrow to be visible) represent the mean and s.d. of the correlation over 10 independent simulations.

(PDF)

(a) Output spike trains of the trained recurrent network are shown. Neurons were sorted according to their onset response times. (b) The responses to the two chunks were averaged over 20 trials. (c) PCA was applied to obtain the low-dimensional trajectories of the trained network. The black, orange, and blue portions indicate the periods of random spike input, chunk 1 and chunk 2, respectively. The two trajectories corresponding to the two chunks were inseparable and the network failed to learn the chunks.

(PDF)

(a) The responses of the first cell assembly to its preferred (left) and non-preferred (middle, right) chunks are shown. These responses were averaged and normalized as in Fig 2C. (b) Preferred responses of two neurons are shown as examples. Top and bottom traces show the responses of chunk2-D and chunk3-D selective neurons, respectively. (c) As in Fig 3C–3E, trial-averaged responses of dendrite, gating factor and soma are shown for two other neurons.

(PDF)

(a) The average weights between 12 assemblies, defined according to their selective responses to the 4 component patterns of the 3 chunks, are shown. (b) Mean weights between groups of neurons in three cases are shown. Cyan and green indicate connections projecting to assemblies that correspond to the previous and next component patterns within a chunk, respectively, while magenta indicates interactions between assemblies that correspond to the same component pattern but belong to different chunks.

(PDF)

(a) Performances of networks of sizes 600 and 300 are shown for different numbers of chunks. Error bars show s.d.s. (b) Learning performances of the network of size 600 are shown for various values of the parameter γ (see Eqs (7) and (8)). Error bars show s.d.s. (c) Same as in (b), but for various strengths of inhibition.

(PDF)

(a) Responses of the networks trained on input spike trains with timing jitters of 70 ms (top) and 100 ms (bottom) are shown. Here, spike times within chunks were shifted by amounts drawn from a Gaussian distribution with zero mean and an s.d. equal to the jitter strength, and these jitters were present during both learning and testing. Neurons were sorted according to the onset times of their responses during chunks, and only 160 of the 500 neurons are shown for visualization. (b) The normalized average activities of the two assemblies with timing jitters of 70 ms (left) and 100 ms (right) are shown. (c) Learning curves are shown for average jitters of 0 ms (purple), 70 ms (green), and 100 ms (blue). The solid lines and shaded areas represent the means and s.d.s over 20 trials, respectively. Learning performance was measured by the normalized mutual information between network activity and target labels (Methods). (d) The performance measures averaged over 20 trials are shown for various jitter sizes. Error bars indicate the s.d.

(PDF)

Running speed (top), whisking (middle), and pupil area (bottom) of freely behaving mouse are shown.

(PDF)

(a) The positions of a mouse on a linear track [23] (top) and the activities of model neurons trained without recurrent gating (bottom) are shown. Model neurons were sorted according to their onset response times. No separation between the two sequences corresponding to forward and backward runs is visible (cf. Fig 6B). (b) Activities of model neurons trained without recurrent gating on the neural data recorded from the mouse visual cortex [24,25] are shown. The model neurons were sorted according to their onset response times. (c) Each cortical neuron was associated with the model neuron having the highest correlation with it, and the cortical neurons were then sorted according to the serial order of the model neurons shown in (b). (d) Population-averaged activities of the network model trained on the visual cortex data with (left) and without (right) gating are shown. (e) Correlations between the average activities shown in (d) and various behaviors are shown. Blue and magenta plots correspond to the gating and non-gating models, respectively. For each type of network, 10 independent simulations were performed.

(PDF)

(a) A schematic illustration of the neuron model with three dendritic compartments without gating. (b) Trained weight matrices are displayed for afferent inputs to three dendritic branches of three example neurons. (c) (d) Somatic and dendritic activities of the three neurons in (b) are shown.

(PDF)

Dendrites received additive recurrent inputs as well as afferent inputs and the dendritic activity underwent recurrent gating. (a) As in Fig 2, the trained network segmented two overlapping chunks in the presence of additive recurrent inputs. (b) Normalized average responses of two emergent assemblies during the presentations of chunk 1 and chunk 2. (c) PCA showed that the different chunks were distinguishable by different low-dimensional trajectories, of which the black, orange, and blue portions indicate the periods of random spike input, chunk 1 and chunk 2, respectively.

(PDF)

Acknowledgments

The authors express their sincere thanks to Thomas Burns for technical assistance.

Funding Statement

This work was partly supported by KAKENHI (nos. 19H04994 and 18H05213) to T.F. T.A. was supported by the SRS Research Assistantship of OIST. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1.Saffran JR, Aslin RN, Newport EL. Statistical learning by 8-month-old infants. Science. 1996;274: 1926–1928. doi: 10.1126/science.274.5294.1926

- 2.Wiestler T, Diedrichsen J. Skill learning strengthens cortical representations of motor sequences. Elife. 2013;2: e00801. doi: 10.7554/eLife.00801

- 3.Waters-Metenier S, Husain M, Wiestler T, Diedrichsen J. Bihemispheric transcranial direct current stimulation enhances effector-independent representations of motor synergy and sequence learning. J. Neurosci. 2014;34: 1037–1050. doi: 10.1523/JNEUROSCI.2282-13.2014

- 4.Dehaene S, Meyniel F, Wacongne C, Wang L, Pallier C. The neural representation of sequences: from transition probabilities to algebraic patterns and linguistic trees. Neuron. 2015;88: 2–19. doi: 10.1016/j.neuron.2015.09.019

- 5.Leonard MK, Bouchard KE, Tang C, Chang EF. Dynamic encoding of speech sequence probability in human temporal cortex. J. Neurosci. 2015;35: 7203–7214. doi: 10.1523/JNEUROSCI.4100-14.2015

- 6.Naim M, Katkov M, Recanatesi S, Tsodyks M. Emergence of hierarchical organization in memory for random material. Sci. Rep. 2019;9: 1–10.

- 7.Henin S, Turk-Browne NB, Friedman D, Liu A, Dugan P, Flinker A, et al. Learning hierarchical sequence representations across human cortex and hippocampus. Sci. Adv. 2021;7: eabc4530. doi: 10.1126/sciadv.abc4530

- 8.Franklin NT, Norman KA, Ranganath C, Zacks JM, Gershman SJ. Structured Event Memory: A neuro-symbolic model of event cognition. Psychol. Rev. 2020;127: 327–361. doi: 10.1037/rev0000177

- 9.Miller GA. The magical number seven, plus or minus two: Some limits on our capacity for processing information. Psychol. Rev. 1956;101: 343–52.

- 10.Ericsson KA, Chase WG, Faloon S. Acquisition of a memory skill. Science. 1980;208: 1181–1182. doi: 10.1126/science.7375930

- 11.Orbán G, Fiser J, Aslin RN, Lengyel M. Bayesian learning of visual chunks by human observers. Proc. Natl Acad. Sci. USA. 2008;105: 2745–2750. doi: 10.1073/pnas.0708424105

- 12.Christiansen MH, Chater N. The now-or-never bottleneck: A fundamental constraint on language. Behav. Brain Sci. 2016;39: e62. doi: 10.1017/S0140525X1500031X

- 13.Cohen Y, Shen J, Semu D, Leman DP, Liberti 3rd WA, Perkins, et al. Hidden neural states underlie canary song syntax. Nature. 2020;582: 539–544. doi: 10.1038/s41586-020-2397-3

- 14.Baldauf D, Cui H, Andersen RA. The posterior parietal cortex encodes in parallel both goals for double-reach sequences. J. Neurosci. 2008;28: 10081–10089. doi: 10.1523/JNEUROSCI.3423-08.2008

- 15.Tanji J, Shima K. Role for supplementary motor area cells in planning several movements ahead. Nature. 1994;371: 413–416. doi: 10.1038/371413a0

- 16.Yokoi A, Diedrichsen J. Neural organization of hierarchical motor sequence representations in the human neocortex. Neuron. 2019;103: 1178–1190. doi: 10.1016/j.neuron.2019.06.017

- 17.Ding N, Melloni L, Zhang H, Tian X, Poeppel D. Cortical tracking of hierarchical linguistic structures in connected speech. Nat. Neurosci. 2016;19: 158–164. doi: 10.1038/nn.4186

- 18.Klampfl S, Maass W. Emergence of dynamic memory traces in cortical microcircuit models through STDP. J. Neurosci. 2013;33: 11515–11529. doi: 10.1523/JNEUROSCI.5044-12.2013

- 19.Asabuki T, Fukai T. Somatodendritic consistency check for temporal feature segmentation. Nat. Commun. 2020;11: 1554. doi: 10.1038/s41467-020-15367-w

- 20.Branco T, Clark BA, Häusser M. Dendritic discrimination of temporal input sequences in cortical neurons. Science. 2010;329: 1671–1675. doi: 10.1126/science.1189664

- 21.Heeger DJ, Mackey WE. Oscillatory recurrent gated neural integrator circuits (ORGaNICs), a unifying theoretical framework for neural dynamics. Proc. Natl Acad. Sci. USA. 2019;116: 22783–22794. doi: 10.1073/pnas.1911633116

- 22.Urbanczik R, Senn W. Learning by the Dendritic Prediction of Somatic Spiking. Neuron. 2014;81: 521–528. doi: 10.1016/j.neuron.2013.11.030

- 23.Rubin A, Sheintuch L, Brande-Eilat N, Pinchasof O, Rechavi Y, Geva N, et al. Revealing neural correlates of behavior without behavioral measurements. Nat. Commun. 2019;10: 4745. doi: 10.1038/s41467-019-12724-2

- 24.Stringer C, Pachitariu M, Steinmetz N, Reddy CB, Carandini M, Harris KD. Spontaneous behaviors drive multidimensional, brainwide activity. Science. 2019;364: 255. doi: 10.1126/science.aav7893 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25. Stringer C, Pachitariu M, Reddy CB, Carandini M, Harris KD. Recordings of ten thousand neurons in visual cortex during spontaneous behaviors; 2018. [cited 2022 May 20]. Database: figshare [internet]. Available from: https://doi.org/10.25378/janelia.6163622.v4

- 26. Jia H, Rochefort NL, Chen X, Konnerth A. Dendritic organization of sensory input to cortical neurons in vivo. Nature. 2010;464: 1307–1312. doi: 10.1038/nature08947

- 27. Voigts J, Harnett MT. Somatic and dendritic encoding of spatial variables in retrosplenial cortex differs during 2D navigation. Neuron. 2020;105: 237–245. doi: 10.1016/j.neuron.2019.10.016

- 28. Rashid SK, Pedrosa V, Dufour MA, Moore JJ, Chavlis S, Delatorre RG, et al. The dendritic spatial code: branch-specific place tuning and its experience-dependent decoupling. bioRxiv [Preprint]. 2020. bioRxiv 916643 [posted 2020 Jan 24; cited 2022 May 20]: [29 p.]. Available from: https://www.biorxiv.org/content/10.1101/2020.01.24.916643v1

- 29. Wang XJ, Tegner J, Constantinidis C, Goldman-Rakic PS. Division of labor among distinct subtypes of inhibitory neurons in a cortical microcircuit of working memory. Proc. Natl Acad. Sci. USA. 2004;101: 1368–1373. doi: 10.1073/pnas.0305337101

- 30. Jadi M, Polsky A, Schiller J, Mel BW. Location-dependent effects of inhibition on local spiking in pyramidal neuron dendrites. PLoS Comput. Biol. 2012;8: e1002550. doi: 10.1371/journal.pcbi.1002550

- 31. Sridharan D, Knudsen EI. Selective disinhibition: a unified neural mechanism for predictive and post hoc attentional selection. Vision Res. 2015;116: 194–209. doi: 10.1016/j.visres.2014.12.010

- 32. Yang GR, Murray JD, Wang XJ. A dendritic disinhibitory circuit mechanism for pathway-specific gating. Nat. Commun. 2016;7: 12815. doi: 10.1038/ncomms12815

- 33. Peron S, Pancholi R, Voelcker B, Wittenbach JD, Ólafsdóttir HF, Freeman J, et al. Recurrent interactions in local cortical circuits. Nature. 2020;579: 256–259. doi: 10.1038/s41586-020-2062-x

- 34. Gabbiani F, Krapp HG, Koch C, Laurent G. Multiplicative computation in a visual neuron sensitive to looming. Nature. 2002;420: 320–324. doi: 10.1038/nature01190

- 35. Suzuki M, Larkum M. General anesthesia decouples cortical pyramidal neurons. Cell. 2020;180: 666–676. doi: 10.1016/j.cell.2020.01.024

- 36. Hochreiter S, Schmidhuber J. Long short-term memory. Neural Comput. 1997;9: 1735–1780. doi: 10.1162/neco.1997.9.8.1735

- 37. Schmidhuber J, Zhao J, Wiering M. Shifting inductive bias with success-story algorithm, adaptive Levin search, and incremental self-improvement. Machine Learning. 1997;28: 105–130.

- 38. Thrun S, Pratt L. Learning to learn: Introduction and overview. In: Thrun S, Pratt L, editors. Learning to learn. Boston: Springer; 1998. pp. 3–17.

- 39. Finn C, Abbeel P, Levine S. Model-agnostic meta-learning for fast adaptation of deep networks. Int. Conf. Machine Learning. 2017;70: 1126–1135.

- 40. Wortsman M, Ehsani K, Rastegari M, Farhadi A, Mottaghi R. Learning to learn how to learn: Self-adaptive visual navigation using meta-learning. Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2019. doi: 10.1109/CVPR.2019.00691

- 41. Gers FA, Schmidhuber J, Cummins F. Learning to forget: Continual prediction with LSTM. Neural Comput. 2000;12: 2451–2471. doi: 10.1162/089976600300015015

- 42. Greff K, Srivastava RK, Koutník J, Steunebrink BR, Schmidhuber J. LSTM: A search space odyssey. IEEE Trans. Neural Netw. Learn. Syst. 2016;28: 2222–2232. doi: 10.1109/TNNLS.2016.2582924

- 43. Abeles M, Bergman H, Margalit E, Vaadia E. Spatiotemporal firing patterns in the frontal cortex of behaving monkeys. J. Neurophysiol. 1993;70: 1629–1638. doi: 10.1152/jn.1993.70.4.1629

- 44. Euston DR, Tatsuno M, McNaughton BL. Fast-forward playback of recent memory sequences in prefrontal cortex during sleep. Science. 2007;318: 1147–1150. doi: 10.1126/science.1148979

- 45. Shimazaki H, Amari S, Brown EN, Grün S. State-space analysis of time-varying higher-order spike correlation for multiple neural spike train data. PLoS Comput. Biol. 2012;8: e1002385. doi: 10.1371/journal.pcbi.1002385

- 46. Mackevicius EL, Bahle AH, Williams AH, Gu S, Denisenko NI, Goldman MS, et al. Unsupervised discovery of temporal sequences in high-dimensional datasets, with applications to neuroscience. eLife. 2019;8: e38471. doi: 10.7554/eLife.38471

- 47. Watanabe K, Haga T, Tatsuno M, Euston DR, Fukai T. Unsupervised detection of cell-assembly sequences by similarity-based clustering. Front. Neuroinform. 2019;13: 39. doi: 10.3389/fninf.2019.00039

- 48. Frey BJ, Dueck D. Clustering by passing messages between data points. Science. 2007;315: 972–976. doi: 10.1126/science.1136800

- 49. Kvalseth TO. Entropy and correlation: Some comments. IEEE Transactions on Systems, Man, and Cybernetics. 1987;17: 517–519.

- 50. Spearman C. The proof and measurement of association between two things. American Journal of Psychology. 1904;15: 72–101.