Abstract

Background

Computational state space models (CSSMs) enable the knowledge-based construction of Bayesian filters for recognizing intentions and reconstructing activities of human protagonists in application domains such as smart environments, assisted living, or security. Computational, i. e., algorithmic, representations allow the construction of increasingly complex human behaviour models. However, the symbolic models used in CSSMs potentially suffer from combinatorial explosion, rendering inference intractable outside of the limited experimental settings investigated in present research. The objective of this study was to obtain data on the feasibility of CSSM-based inference in domains of realistic complexity.

Methods

A typical instrumental activity of daily living was used as a trial scenario. As primary sensor modality, wearable inertial measurement units were employed. The results achievable by CSSM methods were evaluated by comparison with those obtained from established training-based methods (hidden Markov models, HMMs) using Wilcoxon signed rank tests. The influence of modeling factors on CSSM performance was analyzed via repeated measures analysis of variance.

Results

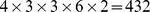

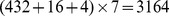

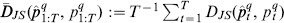

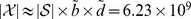

The symbolic domain model was found to have several hundred million states, exceeding the complexity of models considered in previous research by at least three orders of magnitude. Nevertheless, if factors and procedures governing the inference process were suitably chosen, CSSMs outperformed HMMs. Specifically, the inference methods used in previous studies (particle filters) were found to perform substantially worse than a marginal filtering procedure.

Conclusions

Our results suggest that the combinatorial explosion caused by rich CSSM models does not inevitably lead to intractable inference or inferior performance. This means that the potential benefits of CSSM models (knowledge-based model construction, model reusability, reduced need for training data) are available without performance penalty. However, our results also show that research on CSSMs needs to consider sufficiently complex domains in order to understand the effects of design decisions such as choice of heuristics or inference procedure on performance.

Introduction

1.1 Motivation

Recently, a number of different approaches to representing the transition models of probabilistic state space models (SSMs) by computational means have been proposed as a method for building intention recognition systems, from somewhat different research perspectives and conceptual backgrounds [1]–[5]. Computational state space models (CSSMs) are probabilistic models where the transition model of the underlying dynamic system can be described by any computable function using compact algorithmic representations. The objective of the study reported in this paper is to evaluate the applicability of CSSMs for the purpose of sequential state estimation in dynamic systems with very large state spaces and dense transition models. Such domains are difficult to handle with conventional methods relying on the explicit enumeration of states or paths, such as hidden Markov models (HMMs) and their various extensions [6], probabilistic context-free grammars [7], or (libraries of) (partially ordered) plans [8]. We specifically consider CSSMs for the objective of recognizing activities, goals, plans, and intentions of autonomous non-deterministic agents, such as human protagonists. These recognition tasks frequently arise in application domains like smart environments [9], [10], security and surveillance [11], man-machine-collaboration [2], and assistive systems [12], [13].

Researchers have chosen computational state space models for applications in activity and intention recognition for a range of different reasons. CSSMs have been considered because they make it possible to

substitute training data by symbolic prior knowledge [3],

replace explicit enumerations of possible action sequences by on-the-fly-synthesis of plans [5],

enable the flexible introduction of additional state variables that allow inferences about, for instance, the cognitive state of a person [2],

exchange observation models without affecting the system model in response to changing sensor setups [14].

While these properties seem desirable from the viewpoint of model development and model reusability, they come at a price: using computational symbolic descriptions, it is very easy to produce models with a very large state space. This is an immediate effect of the generalization and abstraction power of the computational representations: a model that considers not only an explicit enumeration of action sequences, but rather all sequences that achieve the same objective, will have a larger set of states. From the viewpoint of probabilistic inference, a large state space is first of all not an asset but a liability. Considering the bias-variance trade-off [15], a large state-space might produce a weaker performance (due to variance) than a smaller, potentially less flexible and more biased state space.

The use of CSSMs for activity and intention recognition has so far been investigated only in comparatively limited scenarios with small state spaces and only a few activities to distinguish. It remains unclear how well this approach scales to larger problems and to what extent inference in large state spaces is tractable. The objective of the study presented in this paper is to answer this question. Our findings suggest that such problems can indeed be successfully tackled by CSSMs.

The further structure of this paper is as follows: as CSSMs are not yet widely established, a brief overview of the pertinent concepts of CSSMs is given in Sec. 1.2. A review of current empirical investigations of CSSMs for activity and intention recognition, including an assessment of the experimental scenarios, is contained in Sec. 1.3. In Sec. 1.4 we explain the experimental setting we used, the overall CSSM model structure used for activity reconstruction, as well as the inference algorithms. In Sec. 2.4.2 we present the quantitative data obtained from our experiments. A discussion of these results, our reasoning why we think these results justify the claim that CSSMs are capable of tackling real-world scenarios, and the limitations of our study are given in Sec. 3.4. Notational conventions and abbreviations are summarized in Appendix S1; the remaining appendices contain supplemental material.

1.2 Computational state space models

This section provides a brief review of the pertinent notions of CSSMs from the perspective of intention recognition (IR) and activity recognition (AR). A detailed introduction and discussion is provided in Appendix S2.

We consider dynamic systems whose behavior can be formally captured by the notion of labeled transition systems (LTS). An LTS is a triple $(S, A, \rightarrow)$ where $S$ is a set of states, $A$ a set of action labels and $\rightarrow \subseteq S \times A \times S$ a labeled transition relation. It is easy to arrive at LTS where $S$ and $\rightarrow$ are infinite, even though $A$ is finite – for instance by introducing states that represent counter values and actions that increment such counters. In the domain of intelligent assistive systems, protagonist activities such as setting a table by incrementally moving items from the kitchen to the dining room result in this counting behavior. Such LTS cannot be represented by explicit enumeration of states and transitions, but if defined in a suitable algorithmic language (which we call “computational action language”), these LTS also have a finite representation. As long as only a finite subset of states needs to be considered in a given intention recognition task, computations on such latently infinite systems remain feasible.
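The idea of a latently infinite LTS with a finite algorithmic description can be illustrated with a small sketch. The action names, the state encoding `(items_in_kitchen, items_on_table)`, and the helper functions below are hypothetical and chosen only for illustration; they are not the computational action language used in this study.

```python
from collections import deque

# Hypothetical mini "action language": each action is a triple
# (label, precondition, effect) over states (items_in_kitchen, items_on_table).
# The action set is finite, but "fetch_item" can be applied without bound,
# so the set of reachable states is infinite.
ACTIONS = [
    ("move_item", lambda s: s[0] > 0, lambda s: (s[0] - 1, s[1] + 1)),
    ("fetch_item", lambda s: True, lambda s: (s[0] + 1, s[1])),
]


def successors(state):
    """Yield (label, next_state) for every action applicable in `state`."""
    for label, precondition, effect in ACTIONS:
        if precondition(state):
            yield label, effect(state)


def reachable(initial, max_depth):
    """Breadth-first enumeration of the states reachable within `max_depth` actions."""
    seen = {initial}
    frontier = deque([(initial, 0)])
    while frontier:
        state, depth = frontier.popleft()
        if depth == max_depth:
            continue
        for _, nxt in successors(state):
            if nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, depth + 1))
    return seen
```

Only the finite portion of the state space that is reachable within the chosen horizon is ever materialized, which is the property the text relies on when it speaks of a finite subset of states.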

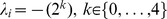

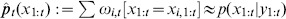

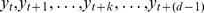

State space models (SSMs) [16] are a general class of probabilistic models that allow the hidden state of an LTS (e. g. the current activity or location of items) to be inferred from a sequence of observations (sensor readings). Let $S$ be some set of states, and let $X_{1:T}$ be a sequence of random variables with value domain $S$. Furthermore, let $O$ be a set of observations and $Y_{1:T}$ a sequence of random variables with value domain $O$. Then the joint distribution $p(x_{1:T}, y_{1:T})$ can be described by an SSM if it recursively (over time $t$) factorizes into a transition model $p(x_t \mid x_{t-1})$ and an observation model $p(y_t \mid x_t)$, that is $p(x_{1:T}, y_{1:T}) = \prod_{t=1}^{T} p(y_t \mid x_t)\, p(x_t \mid x_{t-1})$, where $p(x_1 \mid x_0)$ denotes the initial state distribution. The underlying idea of computational state space models (CSSMs) is to use computational action languages for representing the transition distribution. This approach is interesting when the process under observation can be considered as performing some kind of sequential “computation”, including such phenomena as goal-directed behavior of human protagonists.
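The factorization into a transition model and an observation model leads to the usual predict/update recursion of a discrete Bayes filter. The following is a minimal sketch under the assumption that the transition model is supplied as a function (in the spirit of a computational representation) and that the belief is stored sparsely as a dictionary; the function name `filter_step` and its parameters are illustrative and do not correspond to the implementation used in this study.

```python
def filter_step(belief, transition, likelihood, observation):
    """One predict/update step of a discrete Bayes filter.

    belief:      dict mapping state -> probability (sums to 1)
    transition:  function state -> dict mapping next_state -> probability
    likelihood:  function (observation, state) -> p(observation | state)
    """
    # Predict: push the belief through the transition model.
    predicted = {}
    for state, p in belief.items():
        for nxt, q in transition(state).items():
            predicted[nxt] = predicted.get(nxt, 0.0) + p * q

    # Update: weight each predicted state by the observation likelihood.
    unnormalized = {s: p * likelihood(observation, s) for s, p in predicted.items()}
    total = sum(unnormalized.values())
    if total == 0.0:
        raise ValueError("observation has zero probability under the model")
    return {s: p / total for s, p in unnormalized.items()}
```

Because the belief only holds states with non-zero probability, the cost of one step depends on the size of the current support rather than on the size of the full state space.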

The distribution over the actions that are applicable in a given state is called the action selection distribution. It models the non-deterministic behavior of protagonists in the case that multiple actions are applicable to a given situation. Such distributions can encompass decision-theoretic quantities, such as an “action's utility” in reaching the goal from the given state [17], as well as situation-based conflict resolution strategies such as “specificity” [14].
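One way to picture an action selection distribution is as a normalized weighting of the applicable actions by a goal-distance heuristic. The sketch below is purely illustrative: the exponential weighting, the `weight` parameter, and the `(label, precondition, effect)` action format are assumptions made for this example and are not taken from the models evaluated in this study.

```python
import math


def action_selection(state, actions, goal_distance, weight=1.0):
    """Return a dict label -> probability over the actions applicable in `state`.

    actions:       iterable of (label, precondition, effect) triples (hypothetical format)
    goal_distance: function state -> estimated number of steps to the goal
    weight:        assumed sharpness parameter; 0 gives a uniform distribution
    """
    scores = {}
    for label, precondition, effect in actions:
        if precondition(state):
            # Actions leading closer to the goal receive a larger score.
            scores[label] = math.exp(-weight * goal_distance(effect(state)))
    if not scores:
        return {}
    total = sum(scores.values())
    return {label: score / total for label, score in scores.items()}
```

With `weight=0.0` every applicable action is equally likely, which corresponds to ignoring the heuristic altogether.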

1.3 Feasibility of CSSMs for large scenarios: Current research

As discussed above, CSSMs provide a number of desirable properties. However, an analysis of current literature reviews on activity and intention recognition [18]–[20] shows that currently there is little empirical evidence for their applicability to detailed models of everyday situations. Current studies either use models that do not employ computational mechanisms, or they use scenarios of very limited detail and/or simulations. Below we summarize the results of this literature analysis.

1.3.1 Survey criteria

We identified different features (factors) for assessing and comparing capabilities of the methods and complexities of experiments. Table 1 gives a brief explanation of the factors used for evaluation. The F factors represent the different properties provided by CSSMs; they show to what extent the approach used in the respective study can be considered a CSSM. The N factors quantify the complexity of the experimental setting used for evaluation in the respective study. N.subjects indicates whether sensor data obtained from human subjects has been used, or simulations drawn from the model. The factor N.states gives a rough quantitative estimate of the model's detail level.

Table 1. Factors for analyzing empirical studies on activity recognition.

| Factor | Description |
| --- | --- |
| F.latent.infty | Method allows inference in latently infinite state spaces (typically employing a computational action language). |
| F.plan.synth | Plan synthesis is supported. Otherwise, the approach requires plan libraries to be created by explicit enumeration. |
| F.duration | Durative actions are supported. (This will significantly increase inference complexity, as the starting time for an action becomes another state variable, which has a large value space. See Appendix S4.) |
| F.action.sel | Explicit mechanisms for modeling human action selection based on opportunistic and/or goal driven features are supported. |
| F.probability | Method provides (an approximation of) the posterior probability distribution over states (or actions, depending on the mechanism). This is a prerequisite for selecting assistive interventions using decision-theoretic methods (i. e., that aim at maximizing the expected utility). |
| F.struct.state | The state maintained by inference provides a structured representation of the environment state. This allows the formulation of state predicates and the dynamic synthesis of contingency plans. (Otherwise the state typically represents the action currently executed.) |
| F.non.monoton | Non-monotonous action sequences are considered, that – temporarily – may increase goal distance. (This affects the number of plans that need to be considered. Methods using explicit plan enumeration usually avoid non-monotonicity.) |
| F.complexity | Filter step complexity (computational complexity of the filtering step from $t-1$ to $t$). If greater than $O(1)$, for instance $O(t)$, then online filtering is essentially intractable. |
| Method | Type of inference method used. |
| Scenario | Scenarios considered in experimental tasks. |
| N.states | Number of states considered. (See text for further explanation.) |
| N.plan.length | Lengths of plans considered in study. |
| N.classes | Number of classes in classification target used for performance evaluation. |
| N.subjects | Number of subjects participating in trials (or “sim” in case evaluation is based on simulated observations). |
| M.accuracy | Accuracy is provided as performance measure. |
| M.conf.based | Other quantities based on confusion matrices (true–positive rate, precision, etc.) are provided as performance measures. |

We note that N.states has substantial methodological drawbacks. First, this number (in fact, any number quantifying the level of detail of the respective experimental scenario) is often not given explicitly and has to be inferred from the study description. Secondly, if highly discriminative observations are available (such as the ground action label used in some of the simulation studies), only a small portion of the potential state space will have non-vanishing support. In this case, inference in fact only needs to consider a small subset of the state space, based on unrealistic assumptions on observation quality. Finally, a continuous state space obviously would have an infinite number of possible states. Concerning this last point, the real quantity of interest would be the representation complexity of the statistical model for the state distribution, as inference algorithms operate on finite representations of distributions. A continuous state space modeled by a Gaussian can be represented by a point $(\mu, \sigma)$ in 2-d space. A categorical state space with $n$ category labels is represented by a point on an $(n-1)$-d simplex. So, although the latter state space is finite, its representational complexity is higher than for the continuous Gaussian model. Fortunately, all studies in the literature survey used some kind of categorical state space. Therefore, despite its deficiencies, for the purpose of this study N.states was considered a useful surrogate measure for model complexity.

1.3.2 Survey results

A total of 21 studies were analyzed in the survey. These include the studies contained in [18]–[20], with the addition of new results we regarded as relevant for the topic of this study. An overview of the analysis results is given in Table 2. There were two studies with more than 100,000 states (studies 6 and 8). Seven studies (33%) considered no more than 1,000 states. The median plan length used in trials was 15 (with interquartile range  ). Concerning trial sizes, the median number of subjects was 3 ( ).

Table 2. Quantitative and qualitative properties of selected studies on activity and intention recognition.

| # | Reference | F.latent.infty | F.plan.synth | F.duration | F.action.sel | F.probability | F.struct.state | F.non.monoton | F.complexity | Method | Scenario | N.states | N.plan.length | N.classes | N.subjects | M.accuracy | M.conf.based |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 1 | [1] [4] | ▪ | ▪ | □ | ▪ | ▪ | ▪ | ▪ | 1 | BD | M | 70,000† | 20 | 3 | 23 | □ | □ |
| 2 | [2] [56] | ▪ | ▪ | □† | ▪ | ▪ | ▪ | ▪† | 1 | BD | OM | – | – | ◊ | sim | □ | □ |
| 3 | [3]– | ▪ | ▪ | ▪ | ▪ | ▪ | ▪ | ▪ | 1 | BPF | O | 70,000† | 15† | 10 | 6 | ▪ | ▪ |
| 4 | [4] [20] | ▪ | ▪ | □ | ▪ | ▪ | ▪ | □ | t | BPl | K | 10,000† | – | 3 | sim | □ | ▪ |
| 5 | [5] [20] | ▪ | ▪ | □ | ▪ | ▪ | ▪ | ▪ | 1 | BP | K | 70,000 | 6 | 5 | sim | ▪ | ▪ |
| 6 | [57] [19] | ▪ | □ | □ | □ | ▪ | ▪ | □ | 1 | BD | A | 200,000 | 5† | 6 | 6 | □ | ▪ |
| 7 | [12] [19] | ▪ | □ | □ | ▪ | ▪ | ▪ | □ | 1 | BD | K | 70,000 | 40 | ◊ | 2 | □ | □ |
| 8 | [21] [18] | □ | ▪ | □ | □ | ▪ | ▪ | ▪ | 1 | BD | O | 250,000† | – | 5 | 5 | ▪ | □ |
| 9 | [58] [59] | □ | ▪ | □ | □ | ▪ | ▪ | ▪ | t | NBN | M | 1,000† | – | 15† | sim | □ | ▪ |
| 10 | [60]– | □ | ▪ | ▪ | □ | ▪ | □ | ▪ | 1 | BH | K | 28 | 6 | 6 | – | ▪ | ▪ |
| 11 | [61]– | □ | ▪ | □ | □ | ▪ | □ | ▪ | 1 | BH | A | 300† | 12† | 15 | 3 | ▪ | □ |
| 12 | [62] [19] | □ | ▪ | ▪ | □ | ▪ | □ | ▪ | 1 | BRP | K | 96 | – | 13 | 2 | □ | ▪ |
| 13 | [63] [19] | □ | ▪ | ▪ | □ | ▪ | □ | ▪ | 1 | BRP | O | 3,500† | 3 | 3 | 2† | □ | □ |
| 14 | [64] [56] | □ | ▪ | □ | □ | ▪ | ▪ | ▪ | t | OML | M | – | 20† | 4 | 14 | ▪ | ▪ |
| 15 | [29] [19] | □ | ▪ | ▪ | □ | ▪ | ▪ | ▪ | 1 | BD | AK | 528† | – | 33 | 3† | ▪ | ▪ |
| 16 | [65] [19] | □ | □ | ▪ | □ | ▪ | □ | □ | 1 | NMH | O | 720† | – | 2 | 1 | □ | ▪ |
| 17 | [66] [19] | □ | □ | □ | □ | □ | □ | □ | 1 | LDL | K | – | 15 | 6 | sim | □ | ▪ |
| 18 | [67] [19] | □ | □ | □ | □ | □ | □ | □ | 1 | LDL | AK | – | 24† | 8 | 3 | ▪ | □ |
| 19 | [7]– | □ | □ | □ | □ | □ | □ | □ | $t^2$ | OG | M | – | 50† | ◊ | 2† | □ | □ |
| 20 | [68] [19] | □ | □ | □ | □ | ▪ | □ | □ | 1 | LP | A | 100† | 40† | 7† | 6 | ▪ | □ |
| 21 | [8] [18] | □ | □ | ▪ | □ | ▪ | □ | □ | 1 | BMF | A | 20,000 | 14† | 14 | 3 | ▪ | ▪ |

“▪” = feature included in study.

“□” = feature not included.

“x †” = value/property x not explicitly stated in study description.

“–” = value unknown.

“◊” = property not meaningful considering target of study.

Method codes: L: logic-based (DL = description logic, P = combined with possibility theory). B: using some variant of sequential Bayesian filtering (exact: H = HMM or extension, D = other DBN, Pl = transformation into a planning problem, P = partially observable Markov decision process; approximate: PF = particle filter, RP = Rao-Blackwellized particle filter, MF = marginal filter). N = Non-sequential Bayesian inference (MH = Metropolis-Hastings, BN = unrolled Bayes Net). O = other exact method (G = some kind of grammar, ML = Markov Logic net). Scenario codes: K = kitchen task, A = other activities of daily living, O = office, M = miscellaneous other scenario.

We consider the first five studies as CSSM-like approaches.

It can indeed be observed that CSSMs have been evaluated only in simpler scenarios, and that complex experimental settings have only been used for testing simpler inference methods. There was a single study using a model supporting all features (study 3). Studies including durative actions used at most 70,000 states. All studies that supported latently infinite state spaces and plan synthesis were considered CSSM-like approaches. Of the resulting five studies (studies 1–5), only a single one (again, study 3) used non-simulated observation data and durative actions.

While non-CSSM methods have been evaluated with a median of 6.5 ( ) different activity classes, experiments using CSSMs distinguish a median of only 4 ( ) activity classes. When fewer target classes have to be discriminated, it is easier to achieve higher recognition accuracies. The plan length to be recognized is also related to the supported features: more complex models are usually evaluated with shorter plans. For instance, approaches employing plan synthesis (studies 1–5, 8–15) have been evaluated with a median plan length of 12 ( ), while a median of 24 ( ) actions had to be recognized without plan synthesis. Similarly, when action durations are modeled, the plans have a median length of 10 ( ), whereas plans of median length 20 ( ) are used when actions are modeled without durations.
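The medians quoted in this section can be re-derived from the plan length and class count columns of Table 2. The sketch below transcribes those two columns together with the F.plan.synth and F.duration flags; the tuple layout and the helper `med` are illustrative choices, and entries marked “–” or “◊” in the table are represented as `None` and ignored.

```python
from statistics import median

# (study, plan_synth, durative, plan_length, n_classes), transcribed from Table 2.
# None stands for "–" (unknown) or "◊" (not meaningful).
STUDIES = [
    (1, True, False, 20, 3), (2, True, False, None, None),
    (3, True, True, 15, 10), (4, True, False, None, 3),
    (5, True, False, 6, 5), (6, False, False, 5, 6),
    (7, False, False, 40, None), (8, True, False, None, 5),
    (9, True, False, None, 15), (10, True, True, 6, 6),
    (11, True, False, 12, 15), (12, True, True, None, 13),
    (13, True, True, 3, 3), (14, True, False, 20, 4),
    (15, True, True, None, 33), (16, False, True, None, 2),
    (17, False, False, 15, 6), (18, False, False, 24, 8),
    (19, False, False, 50, None), (20, False, False, 40, 7),
    (21, False, True, 14, 14),
]


def med(values):
    """Median of the known (non-None) values."""
    return median(v for v in values if v is not None)


plan_all = med(s[3] for s in STUDIES)
plan_synth = med(s[3] for s in STUDIES if s[1])
plan_no_synth = med(s[3] for s in STUDIES if not s[1])
plan_durative = med(s[3] for s in STUDIES if s[2])
plan_non_durative = med(s[3] for s in STUDIES if not s[2])
classes_all = med(s[4] for s in STUDIES)
classes_cssm = med(s[4] for s in STUDIES if s[0] <= 5)      # studies 1-5
classes_non_cssm = med(s[4] for s in STUDIES if s[0] > 5)   # studies 6-21
```

The interquartile ranges are not reproduced here, since their value depends on the quantile definition used, which the text does not state.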

The single most frequently used performance measure was accuracy (10 studies, 48%). 16 studies (76%) used some kind of performance measure derived from the confusion matrix (accuracy, precision, recall, etc.). Four studies reported no performance data. No study used performance measures sensitive to the sequential (or causal) structure of the sequence of estimates (cf. Sec. 2.4.2.). The median number of target classes used in performance evaluations was 6 ( ). Activities of daily living represented the majority of scenarios (12 studies, 57%). The most frequent single scenario was kitchen activities (8 studies, 38%).

Considering inference methods, if approximate methods were employed and if on-line filtering was possible (i.e., where complexity = $O(1)$), variants of particle filters were used in all cases, with the exception of [8] (study 21). Approximate methods are inevitable if large state spaces have to be supported.

1.3.3 Assessment

While we have been successful in using CSSMs for intention recognition and state estimation in scenarios of a complexity comparable to those reported in Table 2, our experience was that achieving success with CSSM-based methods in larger settings, such as those occurring in certain domains of everyday activities, is not as straightforward as their intuitive appeal implies. When applying the CSSM approach to the scenario outlined in Sec. 2.1.1 (with an average plan length of 91.6 actions), our initial attempt did not yield a model that was able to compete with a simple hidden Markov model applied to the same estimation task. The CSSM models were found to contain several hundred million states. This indicates a potential scalability problem of the method due to combinatorial explosion.

As the above survey results indicate, current studies have considered scenarios of substantially smaller size. For instance, Ramírez and Geffner [5] infer the plan of a (software) agent solving a problem in a kitchen environment (among others). They show how the plan can be inferred when observing the actions executed by the agent. The model contains about 70,000 states, including the location of four ingredients. There are fewer than 10 different action classes, including interactions with kitchen utensils and ingredients such as frying and mixing. Baker et al. [1] use a Markov Decision Process to model agent or human behaviour. The model describes an agent moving on a 2-dimensional grid towards a goal, avoiding obstacles. The state is the position of the agent and locations of obstacles, resulting in approximately 70,000 states. One of three goals is recognized by Bayesian filtering. Krüger et al. [3] recognize human activities in a presentation scenario. A state in their model contains the location of up to three people and the currently executed activity. They distinguish 10 activities (sitting, presenting, discussing, walking to different locations) using a particle filter. As an example of inference without CSSM modeling, Dai et al. [21] recognize different meeting activities of three to five persons. They manually modelled a Dynamic Bayesian Network with two activity nodes (discussion, presentation, and various sub-activities), and different context nodes (e. g. locations and roles). They perform exact inference using an exact Bayes filter adapted to this particular DBN, showing that 250,000 states can be handled without approximations in this scenario. Accuracies have been evaluated for different nodes independently, distinguishing up to five different activity classes.

While the first two operate only on simulated data and action observations, the last two examples use real sensor data. Nonetheless, none of the scenarios contains fine-grained activities, where more than 10 classes of activities are distinguished. As opposed to 91.6 actions on average for our scenario, the scenarios presented here do not exhibit more than 20 actions that are executed. As a consequence, the results reported in previous studies may not be applicable to the larger scenarios arising from the use of CSSM methods. The question is whether the scalability issues we encountered can be resolved by suitable design decisions. To answer this question, we conducted a study on the feasibility of CSSM methods for reconstructing activity sequences of durative actions from noisy observation data in scenarios with millions of states. Considering the studies discussed above, this is the first attempt to analyze whether the CSSM method is applicable to problems of this scale. We therefore provide important evidence regarding the general applicability of the method. In addition, we provide data on the impact of several important modeling considerations – such as the choice of inference method – on the performance of the resulting system.

1.4 Study objectives

The objective of this study was to evaluate the applicability of CSSMs for the purpose of sequential state estimation in dynamic systems with very large state spaces and dense transition models. The focus was the application domain of tracking human activities. The study aimed to answer the following two consecutive research questions:

Is it possible to achieve successful state estimation using CSSM models of everyday activities with large state spaces (containing hundreds of millions of states)?

Which modeling factors (duration model, action selection heuristics, inference algorithm, etc.) are relevant for achieving a good performance in CSSM-based inference?

These questions were reframed into two research hypotheses:

$H_1$: A suitably parameterized CSSM model for a typical activity of daily living will achieve the same accuracy on average on a given estimation task as a conventional state space model built from training data when applied to the same task.

$H_2$: All CSSM modeling factors and their interactions have significant effects on the average accuracy achieved in state estimation using the CSSM model.

The study focused on the applicability of the method, not on superiority with respect to other methods considering measures such as accuracy. The primary motivation for using CSSM-based methods is not that they yield higher performance, but rather that they provide additional benefits for model construction such as use of prior knowledge and reusability (cf. Sec. 1.1). It was also not an objective to build a model with specific reusability properties or to show that CSSMs indeed provide the claimed benefits. A deeper investigation of these aspects is of interest only once it has been established that CSSMs are applicable to the inference task itself.

Materials and Methods

This section explains the methods and design of the empirical study. The study and all procedures were approved by the institutional review board of Rostock University (A 2014-0057). Participation was voluntary and all participants provided written informed consent. Data presented in this study is anonymous and does not allow identification of individual characteristics of participants.

The roadmap for testing both research hypotheses is: collect real-world data of an activity of daily living, build a suitable CSSM model, and evaluate performance of the model against the recorded data. Sec. 2.1 presents the trial setting, experimental procedure, and annotation methodology. The statistical model used for the experiments is described in Sec. 2.2, including the sensor model, action durations, and the DBN structure. Sec. 2.3 describes the two main inference methods used for the experiments: the particle filter and the marginal filter. The concrete experimental setup (choice of model and parameter combinations) and evaluation methodology for testing the research hypotheses $H_1$ and $H_2$ are described in Sec. 2.4.

2.1 Empirical data

The use of empirical trial data was chosen for the following considerations:

Using simulated data (presumably from the same model that is used for inference) will exaggerate accuracy and overestimate the effect of action selection heuristics: if actions are simulated according to the same selection heuristics used for inference, the heuristic essentially knows which actions are executed. This is highly undesirable, as it will guide research on heuristics in the wrong direction.

Evaluating model behavior with respect to sensor data obtainable in real settings requires that such data be available for use as observations.

As long as we do not know whether prior knowledge provides enough information for building CSSM models, it seems prudent to use samples of real-world behavior as starting points for model construction, in order to arrive at symbolic models that have realistic structural complexity with respect to everyday behavior.

A certain amount of training data was required for building the baseline classifiers, against which the CSSM model was to be compared.

2.1.1 Trial setting

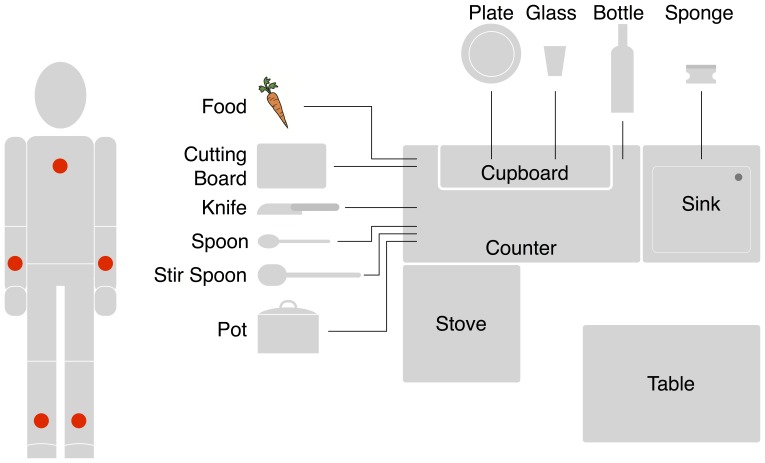

A typical meal time routine was selected as trial setting, consisting of the following major tasks: (i) Prepare meal (prepare ingredients; cook meal). (ii) Set table. (iii) Eat meal. (iv) Clean up and put away utensils. (A symbolic map of the spatial structure of the trial domain and the involved domain objects are given in Fig. 1; a more detailed task sequence is given in Table S1). Selection of this scenario is based on the following considerations:

Figure 1. Instrumentation and trial setting.

Left: Instrumentation of participants (red points indicate IMU positions). Right: Conceptual spatial layout (view from above) and domain objects of trial setting.

It combines a relevant activity of daily living (eating) and a relevant instrumental activity of daily living (meal preparation and cleanup) [22]. Assisting (instrumental) activities of daily living is an important application domain of assistive systems.

It covers as subtask (meal preparation) an activity that is used as functional measure for recording the level of cognitive support required by a person suffering from cognitive decline (the Kitchen Task Assessment, [23]). In addition, there is evidence that action languages can be used to model erroneous behavior specifically for this setting [24].

Kitchen activities are frequently used as trial settings for activity recognition methods (see Sec. 1.3.2 and [25]). A successful use of CSSM for this setting should allow many researchers to reproduce the results of this study in a similar environment.

The task has a non-trivial causal structure combining regions of high dispersion, where many different routes exist (often caused by permutation effects, when actions can be executed in any order, such as setting the table or cleaning up kitchen utensils), with regions of low dispersion (preparing food and cooking food are strictly sequential), making the use of CSSM techniques meaningful.

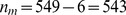

2.1.2 Subjects and sample size

The target of the study was the comparison of the CSSM approach to standard methods in a scenario of realistic complexity. Therefore, as merely relative comparisons between methods were required, the representativeness of the subjects chosen for the trials was not an issue, allowing the use of a convenience sample of volunteers. As CSSMs are conceptually not built from training data but from prior knowledge, the main purpose of the empirical samples is to provide data for model comparison. Seven subjects were considered sufficient to detect relevant effects on accuracy, such as consistent inferiority of CSSM in comparison to standard methods, at the chosen significance level. The rationale here is that if CSSM cannot be proven to be inferior at this level, then this justifies spending the effort on a larger scale experiment.

With respect to designing the symbolic component of the CSSM system model, seven data sets were considered sufficient to allow a system designer to detect all relevant causal dependencies. Although there is no direct data on how much experimental data is required for building successful causal models, a weak argument can be found in the domain of usability research, where it is established that in interactive software five to seven subjects are sufficient to identify most usability problems, that is, most situations where system behavior does not meet user expectations [26]. Furthermore, the causal model is not subject to the $1/\sqrt{n}$ law regarding the standard error of a parameter estimate, as at the symbolic level a single example is sufficient to infer a causal link.

For methodologically obvious reasons, a leave-one-out cross-validation would have been infeasible considering CSSM model construction: it would have required the availability of seven model engineers of identical qualification. Therefore, in order not to place the baseline models at a disadvantage relative to the CSSM model, they were also built on the complete data. Thus, CSSM and baseline performance can be expected to be exaggerated in absolute terms due to overfitting. However, as this study focuses on a comparison of modeling factors, absolute performance is of minor interest. Indeed, as the training-based baseline has more parameters than the CSSM model (cp. Sec. 4.2), this exaggeration should favor the baseline. This bias is actually desirable, as it is an additional safeguard against type I errors considering the model comparison hypothesis.

2.1.3 Experimental procedure

Considering the study objectives, neither absolute motion trajectories nor absolute action duration were of relevance. Therefore, it was possible to use a simplified motion capturing environment where some of the kitchen utensils (for instance, the stove) were replaced by physical props (cp. [27]), and some actions (e.g., cooking) were shortened to bound overall experiment duration. (See Fig. S1 for the physical setup.)

The experimenter presented the experimental task verbally to the participants and explained the stage, the props, and their use. Afterwards, the participants were instrumented with motion capturing equipment. After the “start” signal, the participants would execute the task; the experimenter would monitor task execution and prompt the next step in case the participant got stuck. Within the causal dependencies of actions (food needs to be prepared before being cooked) the participants were free to choose the sequence of actions. The experiment was simultaneously recorded by a documenter on video to enable later annotation.

2.1.4 Sensor data and preprocessing

As a prototypical example of a realistic sensor setup, a motion capturing system based on wearable inertial measurement units (IMUs) was chosen. This choice over other setups such as RFID labeling [28], cameras [18], or various multi-modal setups [29] was motivated by the following considerations:

This sensor setup is used in several experiments by various researchers [30]–[32], simplifying a translation of the CSSM method to other available data sets.

As IMUs do not require the instrumentation of the environment, they are a technically and economically feasible choice for everyday environments.

As IMUs monitor a specific individual, identification problems do not arise (although such problems by design did not arise in the trial setting, they become relevant when translating results into the application domain). Likewise, environmental factors such as lighting conditions have no influence.

If absolute accuracy is not a major target, it is comparatively easy to set up IMU sensor models with reasonable performance.

Some researchers claim that “wearable sensors are not suitable for monitoring activities that involve complex physical motions and/or multiple interactions with the environment” [19]. It is therefore especially interesting to see whether a more refined system model is able to alleviate these problems.

The participants were instrumented with five IMUs, fixed at the lower legs, lower arms, and upper back. These sensor locations were chosen to be compatible with sensor data available from other experiments [32]. For each sensor, three-axis acceleration and angular rates were recorded at a sampling rate of 120 Hz. Although provided by the sensing platform, magnetometer readings as well as higher-order features (such as joint angles) were discarded, as such features may not be available in low-cost equipment. The resulting data stream of 30 signals (five sensors with six channels each) was segmented into frames using a simple window-based segmentation with a window size of 128 samples and 75% overlap, giving a frame rate of 3.75 Hz. For each frame, mean, variance, skew, kurtosis, peak, and energy were computed for each signal. This stream of 180-dimensional feature vectors at 3.75 Hz was then subjected to dimension reduction by applying principal component analysis to the full set of feature vectors, choosing the loadings of the factors corresponding to the $k$ largest eigenvalues as effective observations. (See below for the method of choosing $k$.)
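The framing arithmetic and the per-frame statistics described above can be sketched as follows. This is a minimal illustration, not the study's preprocessing code: the hop of 32 samples is what a 3.75 Hz frame rate at 120 Hz implies, the exact definitions of "peak" and "energy" are assumptions, the input signal is synthetic, and the PCA step is omitted.

```python
import math

SAMPLING_RATE_HZ = 120
WINDOW = 128
HOP = 32  # assumed hop; 120 Hz / 32 samples = 3.75 frames per second

def frames(signal, window=WINDOW, hop=HOP):
    """Yield overlapping windows of the signal."""
    for start in range(0, len(signal) - window + 1, hop):
        yield signal[start:start + window]

def frame_features(frame):
    """Return [mean, variance, skew, kurtosis, peak, energy] for one frame."""
    n = len(frame)
    mean = sum(frame) / n
    centered = [x - mean for x in frame]
    var = sum(c * c for c in centered) / n
    std = math.sqrt(var)
    if std > 0:
        skew = sum(c ** 3 for c in centered) / (n * std ** 3)
        kurt = sum(c ** 4 for c in centered) / (n * std ** 4)
    else:
        skew, kurt = 0.0, 0.0
    peak = max(abs(x) for x in frame)       # assumed definition of "peak"
    energy = sum(x * x for x in frame) / n  # assumed definition of "energy"
    return [mean, var, skew, kurt, peak, energy]

frame_rate_hz = SAMPLING_RATE_HZ / HOP
overlap = 1 - HOP / WINDOW

# Synthetic 4-second signal standing in for one IMU channel.
signal = [math.sin(2 * math.pi * 2.0 * i / SAMPLING_RATE_HZ) for i in range(480)]
feature_rows = [frame_features(f) for f in frames(signal)]

n_signals = 5 * 6            # five IMUs, three acceleration and three angular rate axes
feature_dim = n_signals * 6  # six statistics per signal
```

With these settings, each signal contributes six values per frame, which yields the 180-dimensional feature vector mentioned in the text.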

Additionally, the collected data was annotated based on the video logs. This is done in order to provide a target label for every observation such that methods for supervised learning can be applied. Furthermore, comparing target values with the values estimated from observation data is used for quantifying the performance of the estimation procedure. These labels are called “ground truth”, as they conceptually provide a symbolic representation of the true state of the world at a given time. As CSSMs provide a causal representation of human behavior, the underlying annotation has to be causally correct, too. However, in reality, labels form a finite set on which no algebraic structure other than the equality relation exists. For that reason one has to ensure that the produced annotation represents a causal structure. The detailed procedure of annotating the data and ensuring its causal correctness can be found in Appendix S3. The annotations for subject S1 are provided in Table S3.

2.2 Statistical model

The statistical model formally describes the framework and assumptions of the model for the kitchen task scenario. This section briefly describes the model for the inference LTS (iLTS), including the sensor model, duration model, and action selection heuristics. For a discussion of the difference between the inference LTS and the annotation LTS (aLTS) we refer to Appendix S3. A detailed discussion of the statistical model and its development process is given in Appendix S4. Finally, the baseline models (QDA and HMM), which serve as a comparison for the CSSM model, are presented briefly.

2.2.1 CSSM model structure

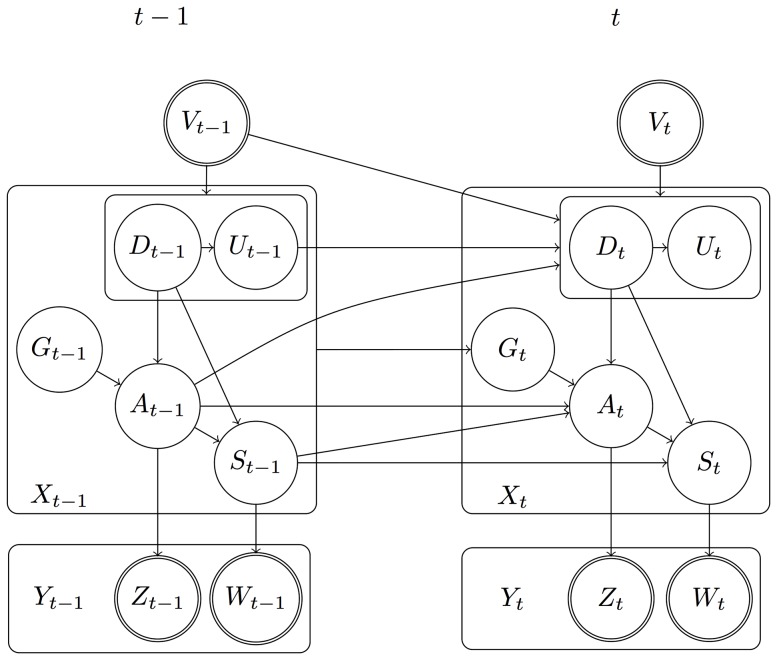

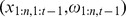

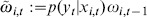

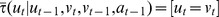

For the probabilistic model of Sec. 1.2 (see Appendix S2 for details), a DBN with the structure given in Fig. 2 was used.  is the observation data for time step

is the observation data for time step  , i. e. the sensor data as discussed in Sec. 2.1.4.

, i. e. the sensor data as discussed in Sec. 2.1.4.  is the associated time stamp, required to be strictly increasing.

is the associated time stamp, required to be strictly increasing.  defines the hidden state. For this study,

defines the hidden state. For this study,  , the current goal, could be assumed to be constant, namely that the user has prepared the meal, eaten, and cleaned afterwards. A new action is selected according to the action selection heuristic

, the current goal, could be assumed to be constant, namely that the user has prepared the meal, eaten, and cleaned afterwards. A new action is selected according to the action selection heuristic  , which incorporates the distance from the current state to the goal (for more details on action selection heuristics, see Sec. 4.1.5 of Appendix S4).

, which incorporates the distance from the current state to the goal (for more details on action selection heuristics, see Sec. 4.1.5 of Appendix S4).  is the LTS state for time step

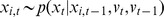

is the LTS state for time step  : either the result of applying the new action to the previous state, or by carrying over the old state. For the purpose of this study, actions could be assumed to be deterministic and with instantaneous effect. In contrast to the model defined in Sec. 1.2, actions in our model may last longer than a single time step. A model was chosen where multiple observations may correspond to a single action. This model introduces a real-valued random variable

: either the result of applying the new action to the previous state, or by carrying over the old state. For the purpose of this study, actions could be assumed to be deterministic and with instantaneous effect. In contrast to the model defined in Sec. 1.2, actions in our model may last longer than a single time step. A model was chosen where multiple observations may correspond to a single action. This model introduces a real-valued random variable  representing the starting time of an action

representing the starting time of an action  and a boolean random variable

and a boolean random variable  signaling termination status of the previous action

signaling termination status of the previous action  .

.

Figure 2. CSSM DBN structure.

Boxes represent tuples of random variables. An arc starting/ending at a box ( = a tuple) represents a set of arcs connected to the tuple's components. Nodes with double outline signify observed random variables.

In the sensor model, all actions of a given class share the same observation distribution, each being a multivariate normal distribution with unconstrained covariance. Although there is no reason to believe that the observation data is particularly well represented by this model, it was found to perform reasonably well in the baseline models, justifying its further use in this study. The 16 action classes and their empirical frequencies can be seen in Fig. S3. For example, an action class is TAKE, while the actions belonging to it are take carrot, take bottle, take spoon etc. As an alternative to the IMU sensors, a location-based model was set up, giving categorical observations (place names) of the corresponding state component. The observations themselves were taken from the annotations of each subject, where the locations of the protagonist (3 places) and the food (6 places) were used (see Table S5).

For simplification it was assumed that all actions of a given class share the same action duration distribution. Note that duration distributions with large, possibly infinite, support increase inference complexity. In order to determine this effect, an instance-based and a parametric duration model were built. The instance-based model was given by the corresponding empirical distribution function, that is, by the observed action durations from the annotations. For the parametric models, the distribution giving the maximum likelihood was selected from a set of candidate distributions (including Cauchy, exponential, and lognormal), the parameters of which were fitted to the observed class durations.
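The two kinds of duration model can be illustrated with a small sketch. It is a simplified stand-in under stated assumptions: only the exponential and lognormal candidates are fitted (both have closed-form maximum likelihood estimates; the Cauchy candidate is omitted), and the duration values are made up for illustration rather than taken from the study's annotations.

```python
import math

def empirical_cdf(durations):
    """Instance-based model: the empirical distribution function."""
    ordered = sorted(durations)
    n = len(ordered)
    return lambda d: sum(1 for x in ordered if x <= d) / n

def fit_exponential(durations):
    """Closed-form maximum likelihood fit of an exponential distribution."""
    rate = len(durations) / sum(durations)
    loglik = sum(math.log(rate) - rate * d for d in durations)
    return {"name": "exponential", "params": (rate,), "loglik": loglik}

def fit_lognormal(durations):
    """Closed-form maximum likelihood fit of a lognormal distribution."""
    logs = [math.log(d) for d in durations]
    mu = sum(logs) / len(logs)
    sigma2 = sum((v - mu) ** 2 for v in logs) / len(logs)
    sigma = math.sqrt(sigma2)
    loglik = sum(
        -v - math.log(sigma) - 0.5 * math.log(2 * math.pi)
        - (v - mu) ** 2 / (2 * sigma2)
        for v in logs
    )
    return {"name": "lognormal", "params": (mu, sigma), "loglik": loglik}

def select_duration_model(durations):
    """Parametric model: pick the candidate with the highest likelihood."""
    candidates = [fit_exponential(durations), fit_lognormal(durations)]
    return max(candidates, key=lambda c: c["loglik"])

# Hypothetical durations (in seconds) for one action class.
take_durations = [1.9, 2.1, 2.0, 2.3, 1.8, 2.2, 2.0]
best = select_duration_model(take_durations)
cdf = empirical_cdf(take_durations)
```

For durations that cluster tightly around a typical value, as in this made-up sample, the lognormal candidate attains the higher likelihood. The empirical model has finite support by construction, whereas both parametric candidates have infinite support.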

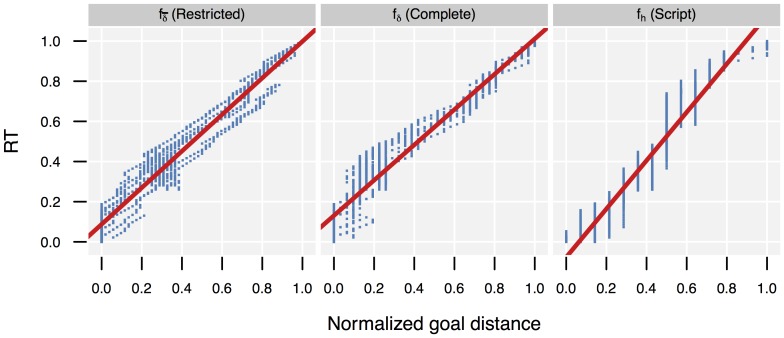

As the primary goal-driven action selection feature, the goal distance feature discussed in Appendix S2 was chosen. As computing goal distances may become intractable for large models, two approximations were considered in this study:

A goal distance heuristic that assigns heuristic distances to LTS states based on prior knowledge. It identifies 14 serial task steps that were used to define a map from LTS states to remaining script steps.

A restricted goal distance feature. Here, only those LTS states were considered that are visited when using the annotations as exact observations. The restricted goal distance should give an upper limit to the gain achievable by a goal distance measure.

To gain insight into the effect of weight factors, each of these features was tested with a series of weight values chosen by exponential probing.
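To make the notion of a weighted goal distance feature concrete, the following sketch computes exact goal distances on a toy LTS by breadth-first search and turns them into action selection probabilities. Everything in it is hypothetical: the three table-setting actions, the non-progressing `wander` action, and the log-linear form `exp(-weight * distance)` are assumptions for illustration; the study's actual heuristic is specified in its appendices.

```python
import math
from collections import deque

ACTIONS = ("place_plate", "place_spoon", "place_glass")  # hypothetical actions

def successors(state):
    """Deterministic effects; 'wander' leaves the state unchanged."""
    options = {a: state | {a} for a in ACTIONS if a not in state}
    options["wander"] = state
    return options

def reachable_states(start):
    """Enumerate all LTS states reachable from the start state."""
    seen = {start}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        for nxt in successors(state).values():
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen

def goal_distances(start, goal):
    """Breadth-first search backwards from the goal over the reachable LTS."""
    states = reachable_states(start)
    predecessors = {s: [] for s in states}
    for s in states:
        for nxt in successors(s).values():
            predecessors[nxt].append(s)
    dist = {goal: 0}
    queue = deque([goal])
    while queue:
        state = queue.popleft()
        for prev in predecessors[state]:
            if prev not in dist:
                dist[prev] = dist[state] + 1
                queue.append(prev)
    return dist

def action_probabilities(state, dist, weight):
    """Assumed log-linear selection: smaller goal distance, larger probability."""
    scores = {a: math.exp(-weight * dist[nxt])
              for a, nxt in successors(state).items() if nxt in dist}
    total = sum(scores.values())
    return {a: v / total for a, v in scores.items()}

start = frozenset()
goal = frozenset(ACTIONS)
dist = goal_distances(start, goal)
uniform = action_probabilities(start, dist, weight=0.0)
focused = action_probabilities(start, dist, weight=2.0)
```

A weight of zero ignores the goal distance and gives a uniform choice, while larger weights shift probability mass towards actions that reduce the distance. The exhaustive state enumeration in `reachable_states` is exactly what becomes intractable for large models, which motivates the two approximations above.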

2.2.2 Baseline models

Two baseline models for estimating the action class from observation data were built: a quadratic discriminant model (QDA) with one category for each action class and a hidden Markov model (HMM) with one state for each class. The QDA model was constructed from the class-wise sensor models and the class priors, given by the frequencies of the action classes in the data set annotations. The HMM transition matrix was computed by counting the class-transition frequencies in the data set annotations; the class-wise sensor models were used as observation model. The baseline models were used to establish target values for estimation accuracy and to select between alternative observation models (see also Sec. 2.4).
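The construction of the HMM baseline from annotations can be sketched as follows. This is a toy illustration under assumptions: the label sequences, the class names other than TAKE, and the per-frame likelihood values are invented, and the likelihoods stand in for evaluations of the class-wise Gaussian sensor models.

```python
from collections import Counter

def class_priors(label_sequences, classes):
    """Class frequencies in the annotations."""
    counts = Counter(label for seq in label_sequences for label in seq)
    total = sum(counts.values())
    return {c: counts[c] / total for c in classes}

def transition_matrix(label_sequences, classes):
    """Row-normalized counts of class-to-class transitions."""
    counts = Counter()
    for seq in label_sequences:
        for prev, cur in zip(seq, seq[1:]):
            counts[(prev, cur)] += 1
    matrix = {}
    for a in classes:
        row_total = sum(counts[(a, b)] for b in classes)
        matrix[a] = {
            b: counts[(a, b)] / row_total if row_total else 1 / len(classes)
            for b in classes
        }
    return matrix

def forward_filter(prior, trans, likelihoods):
    """HMM filtering; likelihoods[t][c] plays the role of p(observation_t | c)."""
    belief = None
    beliefs = []
    for lik in likelihoods:
        if belief is None:
            predicted = prior
        else:
            predicted = {c: sum(belief[p] * trans[p][c] for p in belief)
                         for c in prior}
        unnormalized = {c: predicted[c] * lik[c] for c in prior}
        z = sum(unnormalized.values())
        belief = {c: v / z for c, v in unnormalized.items()}
        beliefs.append(belief)
    return beliefs

classes = ["TAKE", "WALK", "PUT"]  # WALK and PUT are hypothetical class names
annotations = [
    ["TAKE", "TAKE", "WALK", "WALK", "PUT"],
    ["TAKE", "WALK", "PUT", "PUT"],
]
prior = class_priors(annotations, classes)
trans = transition_matrix(annotations, classes)

likelihoods = [  # hypothetical sensor model outputs for three frames
    {"TAKE": 0.7, "WALK": 0.2, "PUT": 0.1},
    {"TAKE": 0.3, "WALK": 0.5, "PUT": 0.2},
    {"TAKE": 0.1, "WALK": 0.3, "PUT": 0.6},
]
beliefs = forward_filter(prior, trans, likelihoods)
estimates = [max(b, key=b.get) for b in beliefs]
```

A QDA-style estimate corresponds to the first filter step applied independently to every frame, that is, prior times likelihood without the transition matrix.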

2.3 Inference methods

Due to the expected size of the state space, exact methods are infeasible and approximate methods for inference were selected. In order to assess the effect of inference method on performance, two methods were compared: a particle filter (PF) and a marginal filter (MF), a variant of the D-condensation algorithm described in [8].

2.3.1 The particle filter

The PF maintains a vector of $N$ weighted samples $(x_t^{(i)}, w_t^{(i)})$, $i = 1, \dots, N$, where $w_t^{(i)} \geq 0$ and $\sum_i w_t^{(i)} = 1$, such that the density $\sum_i w_t^{(i)}\, \delta_{x_t^{(i)}}$ approximates the joint filtering distribution. A standard bootstrap filter was used [33] where the system model serves as proposal function: given a sample vector for time $t-1$ and a new observation $y_t$, a new sample vector was produced by drawing $x_t^{(i)} \sim p(x_t \mid x_{t-1}^{(i)})$, setting $\tilde{w}_t^{(i)} = w_{t-1}^{(i)}\, p(y_t \mid x_t^{(i)})$, and normalizing $w_t^{(i)} = \tilde{w}_t^{(i)} / \sum_j \tilde{w}_t^{(j)}$. The effective number of samples is computed from the weights as $N_{\mathrm{eff}} = 1 / \sum_i (w_t^{(i)})^2$ [34]. If this number drops below a threshold, resampling is performed. The filter step complexity of the PF grows with the number of samples $N$; if $N$ is fixed, the cost per filter step is constant.

Essentially, a PF represents the probability of a point in state space by the density of samples in the vicinity of this point. This works very well in continuous state spaces where a meaningful concept of “distance” can be defined. In these domains, all particles typically occupy different points in state space (with the exception of resampling time, when particles are copied); there are as many different state samples as there are particles. In discrete categorical spaces, this no longer holds. There is no “distance” between points in state space. Probabilities have to be represented by particle weights, and as a PF strives for all particles to have equal weight, probabilities end up being represented by the number of particles in a given state (explaining the “particle clinging” phenomenon often observed in PF applications). In discrete categorical spaces, there are usually many more particles than states. The PF thus may perform suboptimally in discrete categorical spaces with high complexity, which are exactly the spaces created by CSSM models.
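A bootstrap filter step with resampling triggered by the effective sample size can be sketched as follows. The four-step toy system model, the likelihood values, the observation sequence, and the threshold are assumptions chosen for illustration, not the study's configuration.

```python
import random

LAST_STEP = 3  # hypothetical toy task with steps 0..3

def transition_sample(state, rng):
    """Toy system model: stay or advance with equal probability."""
    return min(state + (1 if rng.random() < 0.5 else 0), LAST_STEP)

def likelihood(observation, state):
    """Toy observation model: the observed step is usually the true one."""
    return 0.8 if observation == state else 0.1

def effective_sample_size(weights):
    """Effective number of samples for normalized weights."""
    return 1.0 / sum(w * w for w in weights)

def bootstrap_step(particles, weights, observation, threshold, rng):
    """Propose from the system model, reweight, normalize, maybe resample."""
    proposed = [transition_sample(x, rng) for x in particles]
    unnormalized = [w * likelihood(observation, x)
                    for w, x in zip(weights, proposed)]
    total = sum(unnormalized)
    new_weights = [u / total for u in unnormalized]
    if effective_sample_size(new_weights) < threshold:
        proposed = rng.choices(proposed, weights=new_weights, k=len(proposed))
        new_weights = [1.0 / len(proposed)] * len(proposed)
    return proposed, new_weights

rng = random.Random(0)
n_particles = 100
particles = [0] * n_particles
weights = [1.0 / n_particles] * n_particles
for observation in [0, 1, 1, 2]:
    particles, weights = bootstrap_step(
        particles, weights, observation, threshold=n_particles / 2, rng=rng)

distinct_states = len(set(particles))
```

In this toy setting the 100 particles can occupy at most four distinct states, which illustrates the point made above: in a categorical space, most particles are redundant copies, and probabilities are effectively encoded by particle counts.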

2.3.2 The marginal filter

The MF is tailored towards categorical discrete spaces, where there is no notion of distance, but where there is a chance for trajectories to end up in the same state (consider  actions that need to be done in any order: there are

actions that need to be done in any order: there are  trajectories, all ending in the same state). The MF uses a finite set of states for approximating the marginal filtering distribution by maintaining a density

trajectories, all ending in the same state). The MF uses a finite set of states for approximating the marginal filtering distribution by maintaining a density  with finite support,

with finite support,  . Being finite,

. Being finite,  can be represented by a set of ordered pairs (implementationally, these sets were built using tries, see Sec. 4.2.1 of Appendix S4). The value of

can be represented by a set of ordered pairs (implementationally, these sets were built using tries, see Sec. 4.2.1 of Appendix S4). The value of  is computed by summing over all trajectories that arrive in

is computed by summing over all trajectories that arrive in  at time

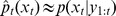

at time  . The MF therefore should give a better approximation than the PF in categorical domains, specifically with high dispersion, as here many different trajectories could lead to the same state. MF inference proceeds in two stages. First, prediction

. The MF therefore should give a better approximation than the PF in categorical domains, specifically with high dispersion, as here many different trajectories could lead to the same state. MF inference proceeds in two stages. First, prediction  and uncorrected posterior

and uncorrected posterior  are computed using

are computed using

| (1) |

| (2) |

If  and

and  have finite support (specifically, if

have finite support (specifically, if  is deterministic), then both computations remain tractable since only a finite number of states is reachable from each state in

is deterministic), then both computations remain tractable since only a finite number of states is reachable from each state in  . Thus

. Thus  is finite too. The second stage computes

is finite too. The second stage computes  from

from  . In general,

. In general,  will contain more than

will contain more than  states, requiring pruning. For this study,

states, requiring pruning. For this study,  was computed from

was computed from  by selecting the

by selecting the  most probable states from

most probable states from  , normalizing the values so that

, normalizing the values so that  sums to one. The bias introduced by this method was considered negligible for the purpose of this study.

sums to one. The bias introduced by this method was considered negligible for the purpose of this study.
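The two MF stages (prediction and correction with merging of identical states, followed by pruning and renormalization) can be sketched as follows. This is a minimal illustration under assumptions: a plain dictionary replaces the trie-based representation, the three-action toy LTS with a 0.5 probability of staying in the current state is hypothetical, and the observation model is uninformative so that the merging effect is easy to verify.

```python
ACTIONS = frozenset({"plate", "spoon", "glass"})  # hypothetical actions

def successors(state):
    """Toy system model: stay with probability 0.5, else do a remaining action."""
    remaining = ACTIONS - state
    if not remaining:
        return [(state, 1.0)]
    advance = 0.5 / len(remaining)
    return [(state, 0.5)] + [(state | {a}, advance) for a in remaining]

def uninformative(observation, state):
    """Placeholder observation model that does not discriminate states."""
    return 1.0

def marginal_filter_step(belief, observation, successors, likelihood, max_states):
    # Stage 1a: prediction; trajectories arriving in the same state are summed.
    predicted = {}
    for state, prob in belief.items():
        for nxt, p_trans in successors(state):
            predicted[nxt] = predicted.get(nxt, 0.0) + prob * p_trans
    # Stage 1b: uncorrected posterior (not yet pruned or normalized).
    posterior = {s: p * likelihood(observation, s) for s, p in predicted.items()}
    # Stage 2: keep the max_states most probable states and renormalize.
    kept = sorted(posterior.items(), key=lambda kv: kv[1], reverse=True)[:max_states]
    total = sum(p for _, p in kept)
    return {s: p / total for s, p in kept}

belief = {frozenset(): 1.0}
for _ in range(3):
    belief = marginal_filter_step(belief, None, successors, uninformative,
                                  max_states=100)

pruned = marginal_filter_step(belief, None, successors, uninformative,
                              max_states=2)
```

After three steps, the six orderings of the three actions have all been merged into the single state in which every action is done, so the filter tracks eight states rather than one entry per trajectory. The sort in the pruning stage is the source of the logarithmic factor in the step cost.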

If the number of actions applicable to a state can be considered constant, the filter step complexity of the MF is $O(N \log N)$, where $N$ is the number of states maintained by the filter; this is constant if $N$ is fixed. The $\log N$ term is due to the sorting procedure implicit to the pruning process. For computing the approximate marginal smoothing density and the MAP (maximum a-posteriori) sequence using an adapted Viterbi algorithm [35], a complexity that is linear in the number of time steps for fixed $N$ can be achieved.

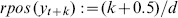

In general, the support of the distribution of action starting times will not be finite. However, the frame rate was considered high enough to render the error introduced by approximating this distribution with a suitable point distribution, positioned for instance at the mean or at the new time stamp, negligible. For both PF and MF, the approximation that places the starting time at the new time stamp was used in this study. Using the time stamp values fixed by observation as potential starting time values supports state identification in the MF.

The PF was parameterized with a fixed number of particles and a fixed resampling threshold. The MF used a fixed number of states, with one setting for discrete empirical timing and another for parametric timing. (In each case, this number is the count of “representation units” available to the respective filter.) These MF and PF parameters had shown reasonable results in preliminary tests.

2.4 Experimental analysis

The experimental design and evaluation methodology used for establishing the two research hypotheses (Sec. 1.4) are described in this section. In this context, an experiment refers to the application of one of the inference algorithms to all data sets, followed by a performance analysis. This includes the set-up of different algorithms (marginal or particle filter) and parameters (e. g. observation models), as well as the choice of analysis methods for testing the hypotheses.

2.4.1 Experimental design

The experimental design targeted the following objectives

Selection of the observation models relevant to analyzing CSSM performance.

Comparison of CSSM and baseline models (test of

).

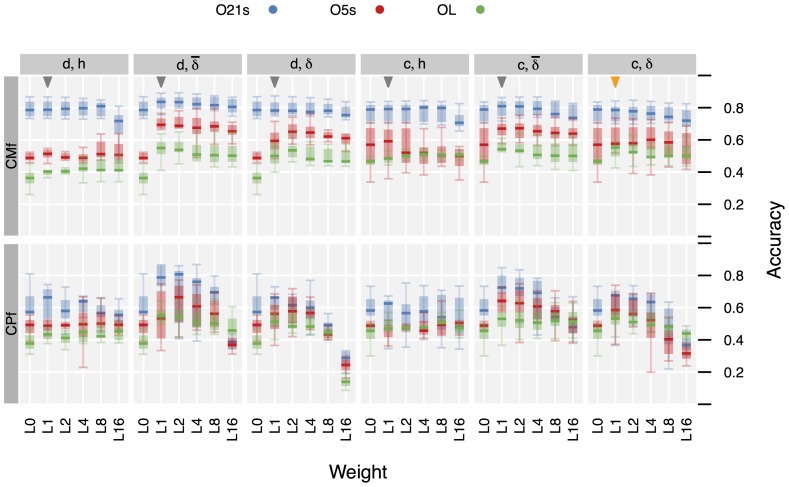

).Factor analysis of the effect of CSSM configuration parameters on CSSM performance (test of

).

).

For model comparison (first hypothesis), using the best result of some standard parameter search procedure would be sufficient. However, understanding how parameter variations affect model performance and how parameters interact (second hypothesis) requires a systematic multi-factorial experimental design.

Table 3 lists all factors and levels that determine the experimental configurations resulting from the discussions in the preceding sections. Target gives the distribution (or MAP sequence) that is estimated by the inference process. Model describes the system model used for representing temporal correlations. Mode is the inference mode used for the system model based on the CSSM approach. Observations describes the different models for (continuous) IMU observations, using either original or scrambled (ensuring i.i.d. observations, see Sec. 4.1.3 of Appendix S4) sequences, and the categorical location model. For evaluating the effect of $k$, the number of principal components, a selection according to the Fibonacci series was chosen (Fibonacci probing). Distance, Weight, and Duration represent the different tuning parameters of the CSSM system model discussed above.

Table 3. Factors and levels for experimental configurations.

| Factor | Level | Comment |
| --- | --- | --- |
| Target | f | filtering distribution |
| | s | smoothing distribution |
| | v | MAP-sequence |
| Model | QDA | (no system model) |
| | HMM | HMM transition matrix |
| | C | CSSM model |
| Mode | M | Marginal filter |
| | P | Particle filter |
| Observations | Oko | IMU data using $k$ principal components |
| | Oks | IMU data, scrambled |
| | OL | Locations (categorical) |
| Distance | | True goal distance, complete state space |
| | | True goal distance, restricted state space |
| | | Heuristic goal distance, using script |
| Weight | L | |
| Duration | | continuous parametric duration models |
| | | discrete duration models based on empirical distribution function |

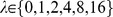

Target, Model, and Mode are combined to a Method factor with the valid levels QDA, HMMf, HMMs, CMf, CMs, CMv, CPf, where for instance “HMMs” means an HMM model with smoothing distribution as estimation target, “CPf” a CSSM model with particle filter and filtering distribution as target, and “CMv” a CSSM model with target MAP-sequence computed using the Viterbi algorithm. A detailed summary of all factor meanings is given in Table 3.

Finally, the factor “Subject” with levels “S$i$”, where $i \in \{1, \dots, 7\}$, represents the seven data sets available for experiments.

Using these factors, the following experimental configurations were selected:

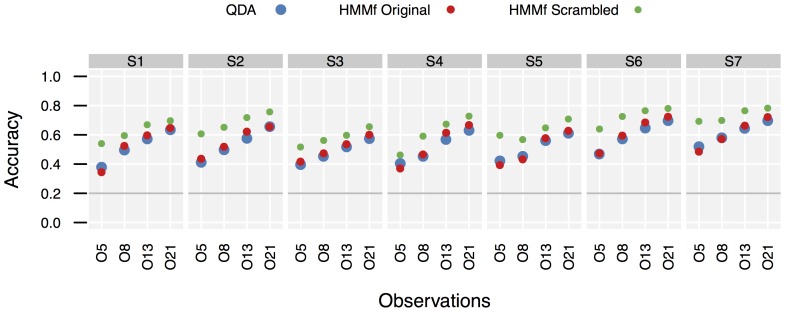

– Baseline establishing discriminative power of observations. As this model does not use any information on temporal correlations, using original or scrambled observations will have no influence on performance. (4 Configurations)


– Baseline for establishing the impact (i) of information on temporal correlations, (ii) of different observation models, and (iii) of scrambling. The outcome of these experiments was used for selecting the relevant observation models for the CSSM experiments. For each target, the best performing HMM baseline was selected as the basis of comparison for the CSSM experiments. ( Configurations)

– CSSM evaluation experiments. The restriction to  results from the baseline analysis. OL implements an observation model based on the locations of two objects. For comparing the marginal filter and the particle filter, the f target alone was considered sufficient for a substantiated judgment. This results in  configurations.

A within-subjects design was used where each configuration was applied to all of the data sets, resulting in  experiments. (We report these numbers to provide an intuition for the number of data points shown in the plots of Sec. 2.4.2.)


2.4.2 Evaluation methods

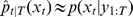

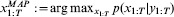

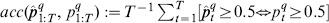

Performance comparisons for testing research hypothesis  were based on point estimates for the action class at time

were based on point estimates for the action class at time  , given by the obvious method. (This means choosing the most probable action class for each time

, given by the obvious method. (This means choosing the most probable action class for each time  given the estimated filtering resp. smoothing distribution for f and s targets. For the MAP sequence the action class at time

given the estimated filtering resp. smoothing distribution for f and s targets. For the MAP sequence the action class at time  is directly given by the action selected for time

is directly given by the action selected for time  .) The estimates were collected into a confusion matrix

.) The estimates were collected into a confusion matrix  , where

, where  is the number of time-steps where the action class was actually

is the number of time-steps where the action class was actually  and action class

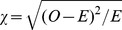

and action class  has been estimated. From the resulting confusion matrices, the accuracy

has been estimated. From the resulting confusion matrices, the accuracy  ) was used as primary comparison measure. The target “action class” was chosen in favor of the more complex targets such as “ground action” in order to allow the training-based baseline models (HMM) a sufficient amount of training data for estimating the transition model. For the QDA model, a finer grained target than provided by the observation model would have been meaningless anyway as the QDA model does not support temporal information and therefore can not disambiguate actions indistinguishable by the observation model.

) was used as primary comparison measure. The target “action class” was chosen in favor of the more complex targets such as “ground action” in order to allow the training-based baseline models (HMM) a sufficient amount of training data for estimating the transition model. For the QDA model, a finer grained target than provided by the observation model would have been meaningless anyway as the QDA model does not support temporal information and therefore can not disambiguate actions indistinguishable by the observation model.
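The evaluation pipeline described above (point estimates, confusion matrix, accuracy) can be sketched as follows. The function names and the toy probabilities are assumptions for illustration; only the action class names TURN_ON, TURN_OFF, and WASH occur in the study.

```python
from collections import Counter


def point_estimates(distributions):
    """Choose the most probable action class at every time step.

    `distributions` is a list with one dict per time step, mapping
    action class -> estimated (filtering or smoothing) probability.
    """
    return [max(dist, key=dist.get) for dist in distributions]


def confusion_matrix(truth, estimate):
    """Count time steps per (true class, estimated class) pair."""
    if len(truth) != len(estimate):
        raise ValueError("sequences must have equal length")
    return Counter(zip(truth, estimate))


def accuracy(confusion):
    """Fraction of time steps on the diagonal of the confusion matrix."""
    total = sum(confusion.values())
    if total == 0:
        raise ValueError("empty confusion matrix")
    correct = sum(n for (true, est), n in confusion.items() if true == est)
    return correct / total


# Toy data, invented for this example only.
truth = ["TURN_ON", "WASH", "WASH", "TURN_OFF"]
estimated = [
    {"TURN_ON": 0.7, "WASH": 0.2, "TURN_OFF": 0.1},
    {"TURN_ON": 0.5, "WASH": 0.4, "TURN_OFF": 0.1},
    {"TURN_ON": 0.1, "WASH": 0.8, "TURN_OFF": 0.1},
    {"TURN_ON": 0.1, "WASH": 0.2, "TURN_OFF": 0.7},
]
confusion = confusion_matrix(truth, point_estimates(estimated))
print(accuracy(confusion))
```

For the MAP-sequence target, the call to `point_estimates` would be skipped, since the estimated sequence already consists of one action class per time step.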

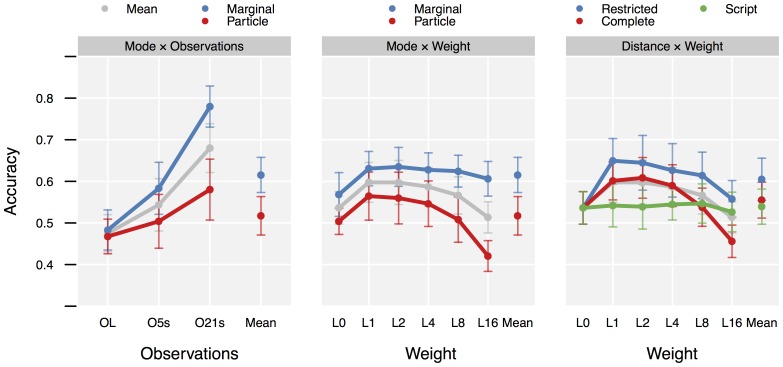

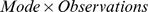

Concerning research hypothesis $H_2$, multi-factorial repeated measures analysis of variance (rANOVA) was used to analyze the effects of the configuration factors Mode, Observations, Distance, Weight, and Duration on Accuracy for CSSM configurations.

Accuracy corresponds to assuming a 0-1 loss function for a decision theoretic comparison of classifier performance, where the loss of a classification decision at time $t$ is counted independently of previous and following decisions. If no additional knowledge is available, this correctly reflects system performance. Accuracy is also the performance measure most frequently used in present research (cp. Sec. 1.3.2). Therefore, it was selected as the primary measure for comparing model performance.

Although it has the advantage of being a well established and widely understood performance measure, Accuracy has the drawback that it assigns the same value to causally different sequences. Consider the true sequence (on, off, off), for which two hypothetical estimates $e_1 = (\text{on}, \text{on}, \text{off})$ and $e_2 = (\text{on}, \text{off}, \text{on})$ are given. Both estimates have the same accuracy (2/3), but their causal consequences are different. In addition, $e_1$ consists of two actions, while $e_2$ has three actions. Thus, $e_1$ can be regarded as “better” than $e_2$, since $e_1$ mistakes just the action durations while $e_2$ gets the causal sequence wrong. Metrics for measuring sequence alignment, such as edit distances (e.g., the Levenshtein distance) or dynamic time warping (DTW), may provide a more appropriate measure with respect to these considerations (cp. [36]). However, as it is not yet known to what extent the implicit assumptions of these metrics agree with the reality of the application domain considered in this study, performance comparisons focused on accuracy. (A short analysis of edit distance and DTW is given in Fig. S7.)
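The difference between accuracy and an alignment-based measure can be illustrated on the true sequence (on, off, off). In the sketch below, the two estimates are assumptions chosen to match the description (one errs only in durations, the other in the causal order), and applying the Levenshtein distance to sequences with consecutive repeats merged is one possible design choice, not necessarily the analysis of Fig. S7.

```python
from itertools import groupby


def accuracy(truth, estimate):
    """Fraction of time steps with a correct estimate (0-1 loss)."""
    return sum(t == e for t, e in zip(truth, estimate)) / len(truth)


def collapse(sequence):
    """Merge consecutive repeats, leaving the sequence of distinct actions."""
    return [key for key, _ in groupby(sequence)]


def levenshtein(a, b):
    """Edit distance with unit costs for insert, delete, and substitute."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, 1):
        curr = [i]
        for j, y in enumerate(b, 1):
            curr.append(min(prev[j] + 1,              # delete x
                            curr[j - 1] + 1,          # insert y
                            prev[j - 1] + (x != y)))  # substitute or match
        prev = curr
    return prev[-1]


truth = ["on", "off", "off"]
est_duration = ["on", "on", "off"]  # assumed: wrong duration, right order
est_causal = ["on", "off", "on"]    # assumed: wrong causal sequence

for est in (est_duration, est_causal):
    print(accuracy(truth, est), levenshtein(collapse(truth), collapse(est)))
```

Both estimates reach the same accuracy, but only the second one has a non-zero edit distance to the true action sequence.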

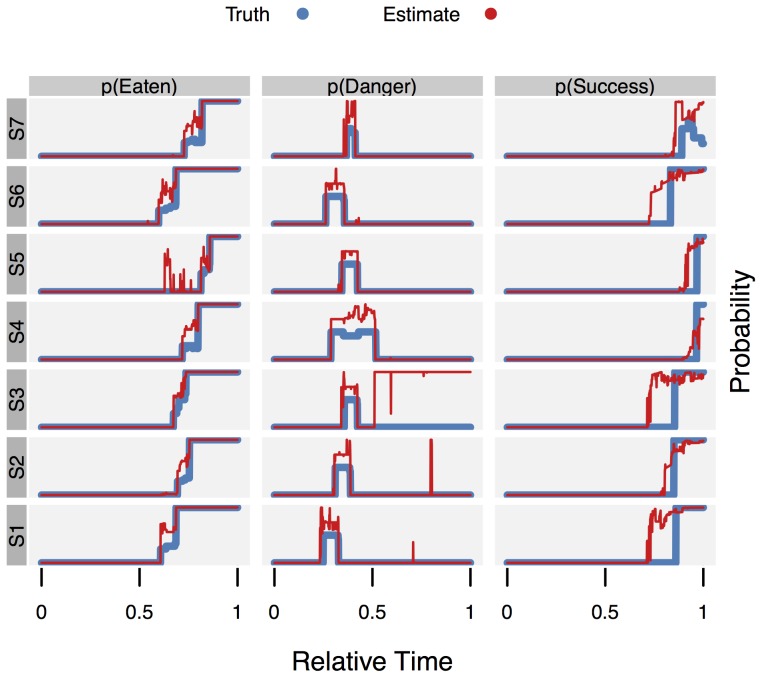

Besides estimating the action (class) sequence, the CSSM method also allows estimating the probability of states and, more generally, the truth value of predicates on states. To provide data on the validity of the estimates obtainable, three sample predicates corresponding to three situations of potential interest were established:

“Danger”: a potentially dangerous situation exists (i.e., the stove is on).

“Eaten”: the protagonist has finished the dinner.

“Success”: the protagonist has finished the complete routine (and may be engaged with additional cleanup).

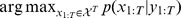

The ground truth for these predicates was computed by the same method used for generating the location observations. For each time step, this ground truth is a Bernoulli distribution whose parameter gives the probability of the predicate being true. Usually the ground truth is deterministic, with the parameter being either 0 or 1, except for situations where the aLTS model is ambiguous given the observed actions.

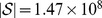

The estimated filtering probability of a predicate being true at time $t$ is given by the corresponding parameter estimate, i.e., the probability mass that the filtering distribution at time $t$ assigns to the states satisfying the predicate. For the smoothing target, the smoothing distribution is used in place of the filtering distribution. For comparing the estimated distribution with the true distribution, the Jensen-Shannon distance was used. (The Jensen-Shannon distance is the square root of the Jensen-Shannon divergence [37].) As measure for the sequence of distributions, the mean Jensen-Shannon distance was used, given by the average of the per-time-step distances over all time steps. In addition, a conventional accuracy value was computed. This uses point estimates, assuming the predicate to be true if its probability is at least 0.5.
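For Bernoulli distributions, the two predicate measures reduce to a few lines. The sketch below rests on assumptions not stated in the text: logarithms are taken to base 2 (so distances lie in [0, 1]), the ground truth is thresholded at 0.5 in the same way as the estimate, and all function names are hypothetical.

```python
import math


def kl_bernoulli(p, q):
    """KL divergence KL(Bern(p) || Bern(q)) in bits, using 0 * log 0 = 0."""
    total = 0.0
    for a, b in ((p, q), (1.0 - p, 1.0 - q)):
        if a > 0.0:
            total += a * math.log2(a / b)
    return total


def js_distance(p, q):
    """Square root of the Jensen-Shannon divergence of Bern(p) and Bern(q)."""
    m = (p + q) / 2.0  # mixture parameter; never zero where p or q is non-zero
    divergence = 0.5 * kl_bernoulli(p, m) + 0.5 * kl_bernoulli(q, m)
    return math.sqrt(max(divergence, 0.0))  # guard against rounding below 0


def mean_js_distance(estimated, truth):
    """Average per-time-step distance over a sequence of parameters."""
    return sum(js_distance(p, q) for p, q in zip(estimated, truth)) / len(truth)


def predicate_accuracy(estimated, truth, threshold=0.5):
    """Accuracy of point estimates: true iff probability >= threshold."""
    hits = sum((p >= threshold) == (q >= threshold)
               for p, q in zip(estimated, truth))
    return hits / len(truth)


# Toy parameter sequences, invented for this example.
estimated = [0.9, 0.4, 0.5]
truth = [1.0, 0.0, 0.0]
print(mean_js_distance(estimated, truth), predicate_accuracy(estimated, truth))
```

Unlike the accuracy value, the mean Jensen-Shannon distance also penalizes estimates that lie on the correct side of the threshold but are poorly calibrated.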

Results

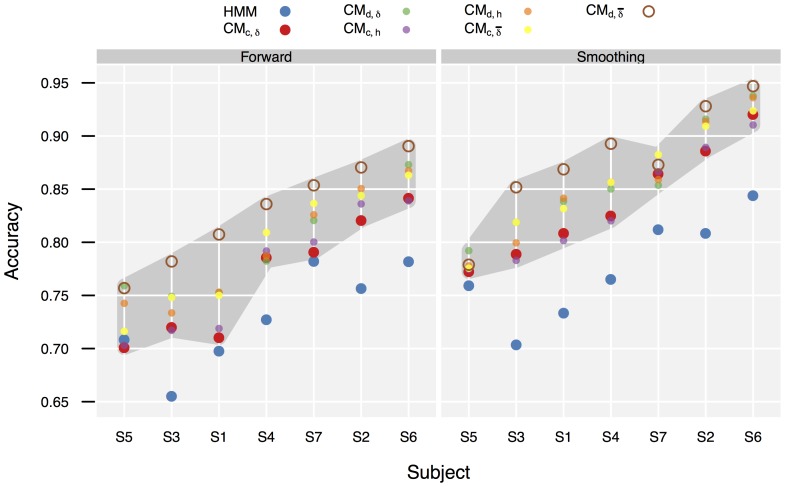

This section provides the results of the experiments described in Sec. 2.4. First, some empirical data on the model and the annotations themselves are given. They provide some intuition about the complexity of the experimental setting. Then, the performance of the baseline models is compared with the CSSM models and inference algorithms. The key result is the confirmation of hypothesis $H_1$. Hypothesis $H_2$ can be accepted after assessing the influence of the parameters (factors presented in Sec. 2.4.1). Finally, results on the ability of state predicate estimation are given.

3.1 Empirical data

3.1.1 Data sets and annotations

The data sets obtained have a mean length of 91.6 (standard deviation  ) action steps and a mean duration of 950 ( ) observation steps (cf. Table S2). The shortest observed plan had a length of 66 causal actions (that is, not counting “WAIT” actions). This is considerably longer than has been found in previous research (cp. Sec. 1.3).

Plots of the preprocessed observation data (PCA transformed) and action classes for the ground truth annotation are given in Fig. S4. The detailed annotations for subject 1 are given in Table S3.

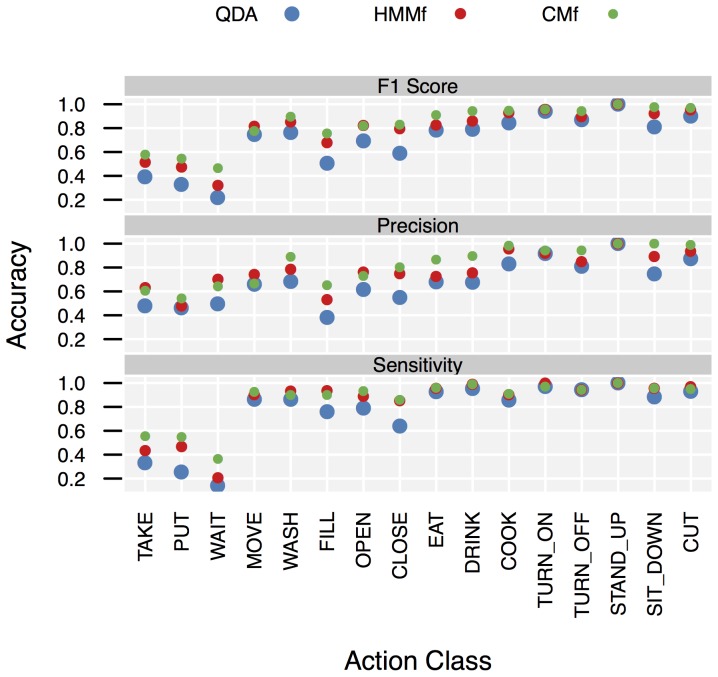

3.1.2 aLTS and iLTS model

The aLTS model defines 16 action classes and 82 action instances. Thus performance evaluations would be based on 16 target classes. This is in the highest quartile of N.classes and it exceeds the number of target classes that have been considered in previous studies on CSSMs (cp. Sec. 1.3.2). The action classes occupied between 0.5% of total time (TURN_ON, TURN_OFF) and 19.8% (WASH). See Fig. S3 for details.

In the iLTS model, these were translated into 44 action schemata (of which 9 were parameterized), resulting in 99 ground actions, produced by the instantiation of action schemata with iLTS domain constants (objects and slot values). The iLTS model defines 18 state variables based on 14 domain objects and 11 slot types (not all objects use all slot types). For further details, see Table S4. In total  iLTS states were reachable from the initial state using simple breadth-first search (BFS). The median branching factor was  (interquartile range  ). The BFS tree depth was 66 steps, which means that all action sequences taking 66 or more steps will allow all iLTS states to become possible. (The fact that the shortest observed action sequence took 66 steps is pure coincidence.) If all actions have a non-zero probability for a single-step duration (which is the case for parametric duration models), this means that already after 66 observation steps, all states have non-zero probability. The maximum goal distance was 40.
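The reachability analysis can be sketched as a plain breadth-first search over a successor function. The toy domain below (three switches that can only be turned on) is purely hypothetical and stands in for the iLTS model; the branching factor is counted here as the number of successor states per expanded state, which is an assumption about how the reported figure was obtained.

```python
from collections import deque
from statistics import median


def explore(initial_state, successors):
    """Breadth-first exploration of the reachable state space.

    Returns a dict mapping each reachable state to its BFS depth, and the
    list of branching factors (successor counts) of all expanded states.
    """
    depth = {initial_state: 0}
    branching = []
    queue = deque([initial_state])
    while queue:
        state = queue.popleft()
        next_states = successors(state)
        branching.append(len(next_states))
        for nxt in next_states:
            if nxt not in depth:  # first visit yields the shortest distance
                depth[nxt] = depth[state] + 1
                queue.append(nxt)
    return depth, branching


def toy_successors(state):
    """Hypothetical toy domain: any switch that is off may be turned on."""
    return [state[:i] + (1,) + state[i + 1:]
            for i, bit in enumerate(state) if bit == 0]


depth, branching = explore((0, 0, 0), toy_successors)
print(len(depth), max(depth.values()), median(branching))
```

The number of keys in `depth` is the count of reachable states, and the largest depth value corresponds to the BFS tree depth: after that many steps, every reachable state can have been visited.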