Abstract

Studies of neuron-behaviour correlation and causal manipulation have long been used separately to understand the neural basis of perception. Yet these approaches sometimes lead to drastically conflicting conclusions about the functional role of brain areas. Theories that focus only on choice-related neuronal activity cannot reconcile those findings without additional experiments involving large-scale recordings to measure interneuronal correlations. By expanding current theories of neural coding and incorporating results from inactivation experiments, we demonstrate here that it is possible to infer decoding weights of different brain areas at a coarse scale without precise knowledge of the correlation structure. We apply this technique to neural data collected from two different cortical areas in macaque monkeys trained to perform a heading discrimination task. We identify two opposing decoding schemes, each consistent with data depending on the nature of correlated noise. Our theory makes specific testable predictions to distinguish these scenarios experimentally without requiring measurement of the underlying noise correlations.

Author summary

The neocortex is structurally organized into distinct brain areas. The role of specific brain areas in sensory perception is typically studied using two kinds of laboratory experiments: those that measure correlations between neural activity and reported percepts, and those that inactivate a brain region and measure the resulting changes in percepts. The two types of experiments have generally been interpreted in isolation, in part because no theory has been able to combine their outcomes. Here, we describe a mathematical framework that synthesizes both kinds of results, giving us a new way to assess how different brain areas contribute to perception. When we apply our framework to experiments on behaving monkeys, we discover two models that can explain the perplexing finding that one brain area can predict an animal’s reported percepts, even though the percepts are not affected when that brain area is inactivated. The two models ascribe dramatically different efficiencies to brain computation. We show that these two models could be distinguished by a proposed experiment that measures correlations while inactivating different brain areas.

Introduction

Although much is known about how single neurons encode information about stimuli, how neurons contribute to reported percepts is less well understood[1]. The latter, called the “decoding problem”, seeks to identify how the brain uses the information contained in neuronal activity. Although some studies have sought to understand principled ways to decode population responses in the presence of correlated noise [2–12], the rules by which the brain actually integrates information across noisy neurons remain unclear.

Neuroscientists have traditionally investigated this question using two distinct approaches: causal or correlational. In causal approaches, experimenters selectively activate or inactivate brain regions of interest, and measure resulting perceptual or behavioural changes. In correlational approaches, experimenters measure correlations between behavioural choices and neuronal activity, typically quantified by ‘choice probability’ (reviewed in Ref. [13]) or, more straightforwardly, by ‘choice correlation’ (CC)[14,15]. If CCs reflect a functional link between neurons and behaviour, one would expect brain areas with greater CCs to contribute more strongly to behaviour. This naïve view is contradicted by recent results that reveal a striking dissociation between the magnitude of CCs and the effects of inactivation across brain systems in rodents[16,17] and primates[18,19]. In hindsight, this apparent disagreement is not all that surprising because the two techniques, on their own, yield results whose interpretation is fraught with major difficulties.

For instance, the CC of a neuron depends not only on its direct influence on behaviour but also on the influence of all the other neurons with which it is correlated. As an extreme example, a neuron that is not decoded at all could be correlated with one that is, and thus exhibit choice-related activity[9]. Recent theoretical results show that it is possible, in principle, to use knowledge of noise correlations to extract decoding weights from CCs[14]. However, directly measuring the correlational structures that matter for decoding may be extremely difficult[20]. This problem is compounded by the fact that behaviourally relevant information may be distributed across neurons in multiple brain areas, so neuronal CCs in one area may depend on activity in other areas. Moreover, in causal approaches, inactivation of one brain area could lead to a dynamic recalibration of decoding weights from other areas. Therefore, changes in behavioural thresholds following inactivation may not be commensurate with the contribution of the area.

When analysed in conjunction, however, results from correlational and causal studies may together provide constraints that can be used to precisely determine the relative contributions of the brain areas involved. In this work, we extend recent theories[14,15,20] and propose a general framework for inferring decoding weights of neurons across multiple brain areas using CCs and changes in behavioural threshold following inactivation. The two quantities together provide a direct estimate of the relative contributions of different areas without needing to precisely measure the correlation structure. This analysis is based on coarse-grained models of decoded neural noise that is correlated across populations. We demonstrate our technique by applying it to data from macaque monkeys trained to perform a heading discrimination task. In this task, there is a known discrepancy[18,21–23] between CCs and the effects of inactivating two brain areas: although neurons in the ventral intraparietal (VIP) area were found to be substantially better predictors of the animal’s choices than dorsal medial superior temporal (MSTd) neurons, performance is impaired by inactivating MSTd but not VIP. We use our framework to extract key properties of the decoder that can account for these counter-intuitive results. To our surprise, we find that, depending on the structure of correlated noise, experimental data are consistent with two opposing schemes that attribute either too much or too little weight to VIP. We use our theory to make specific testable predictions to distinguish these schemes using CCs measured during inactivation, again without measuring the detailed noise correlations.

Results

Our framework for understanding neural decoding involves three main ingredients: an analysis of choice correlations and discrimination thresholds, two classes of models for noise correlations with different information content, and coarse-grained descriptions of those models for multiple populations. Our analysis proceeds as follows. We begin in the section Decoding framework with some core definitions for neural population responses and estimation tasks based on decoding from multiple populations. Then, in the section Analysis of choice correlations, we describe the expected patterns of choice-related activity under the assumptions of optimal and suboptimal decoding. These patterns depend on the structure of neural noise, so in the section Models of neural variability we next describe two fundamentally different noise models, whose information content is either extensive (i.e. growing with population size) or limited. We then refine these models for multiple populations in the section Coarse-grained noise models for multiple populations. Next we return to choice correlations to explore consequences of this coarse-grained description in the section Coarse-grained choice correlations. Our general theoretical analysis concludes in the section Combining choice correlations and inactivation effects to infer decoding of distinct populations. Finally, we specialize this theory to two populations as we apply it to experimental data.

Readers who wish to skip the mathematical details may read the sections Decoding framework, which sets out the basic concepts we invoke, and Models of neural variability, which describes the two main noise models we contrast, before jumping to Application to neural data.

Decoding framework

We consider a linear feedforward network in which the firing rates r = [r1,…,rN] of the N neurons are tuned to the stimulus s as f(s) = 〈r|s〉, where the angle brackets denote an average over trials conditioned on the stimulus. The responses on a single trial differ from their averages by some noise with variance $\sigma_k^2$ for neuron k, and exhibit a covariance Σ = 〈rrT|s〉 − f(s)f(s)T that we assume is stimulus-independent. These neural responses are combined linearly using weights w to yield a locally unbiased estimate of the stimulus according to $\hat{s} = s_0 + \mathbf{w}^\top(\mathbf{r} - \mathbf{f}(s_0))$. Here local means that the stimulus is near a reference s0, which we will now take to be 0 without loss of generality, and f(s0) is the mean population response to that reference. Unbiased estimation means that the estimate is accurate on average, so that $\langle \hat{s} \mid s \rangle = s$. In the experiments we model, the animals are indeed unbiased after training.

The performance of a decoder is often characterized by the variance ε of its estimate:

$$\varepsilon = \mathrm{Var}(\hat{s} \mid s) = \mathbf{w}^\top \Sigma\, \mathbf{w} \qquad (1)$$

Other common measures of performance are the discrimination threshold ϑ, the sensitivity index d′, and the Fisher information J. These measures are all closely related. We will often refer to the discrimination threshold ϑ, which is the stimulus difference Δs required for reliable binary discrimination between two categories when discrimination is based on an estimator with finite variance. When 'reliable' means 68% correct, this threshold is just the estimate's standard deviation, $\vartheta = \sqrt{\varepsilon}$. This definition coincides with a sensitivity index $d' = \Delta\mu/\sigma = 1$, that is, when the mean difference Δμ between estimates for the two stimuli is the same size as the standard deviation σ of those estimates. When the neural response mean f(s) is tuned to the stimulus, but other statistics do not provide additional information (i.e. for responses drawn from the exponential family), then the Fisher information J is exactly equal to the inverse variance of an unbiased, locally optimal linear estimator: J = 1/ε (also assuming differentiable tuning curves and non-singular noise covariance).
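The relations between ε, ϑ and J can be checked numerically. The sketch below is a minimal illustration only, assuming Gaussian noise with a hypothetical random covariance `Sigma` and hypothetical tuning derivatives `f_prime` standing in for f′(s0); it builds locally optimal unbiased weights w ∝ Σ−1f′, computes the estimator variance of Eq (1) and the threshold ϑ = √ε, confirms that J = f′TΣ−1f′ equals 1/ε, and compares against another unbiased readout that ignores correlations.

```python
import numpy as np

rng = np.random.default_rng(0)
N = 50

# Hypothetical tuning-curve derivatives f'(s0) and a random positive-definite noise covariance
f_prime = rng.normal(size=N)
A = rng.normal(size=(N, N))
Sigma = A @ A.T / N + np.eye(N)


def estimator_variance(w, Sigma):
    """Variance of the linear estimate s_hat = s0 + w^T (r - f(s0)), as in Eq (1)."""
    return float(w @ Sigma @ w)


# Locally optimal unbiased weights: w ∝ Σ^{-1} f', normalized so that w^T f' = 1
w_opt = np.linalg.solve(Sigma, f_prime)
w_opt /= w_opt @ f_prime

eps_opt = estimator_variance(w_opt, Sigma)
threshold = np.sqrt(eps_opt)                         # ϑ = sqrt(ε)
fisher = f_prime @ np.linalg.solve(Sigma, f_prime)   # J = f'^T Σ^{-1} f' for Gaussian noise

# Another unbiased readout (w ∝ f', ignoring correlations) for comparison
w_naive = f_prime / (f_prime @ f_prime)
eps_naive = estimator_variance(w_naive, Sigma)
```

Both readouts satisfy wTf′ = 1, but only the one that accounts for Σ attains the minimum variance 1/J.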

Many experiments assess performance using a two-alternative forced-choice (2AFC) task. They quantify performance by the discrimination threshold ϑ, which is the stimulus difference required for reliable binary discrimination (68% correct) (see Methods), and assess neural decoding based on choice probabilities[24]. However, theoretical results about decoding are much simpler when applied to continuous estimation (which we will consider to be a continuous ‘choice’). Conveniently, local continuous estimation and fine discrimination are closely related. For example, as mentioned above, the discrimination threshold ϑ is equal to the standard deviation of an unbiased local estimator, $\vartheta = \sqrt{\varepsilon}$, if the output variability is Gaussian. Under the same assumptions, choice correlation has a simple near-affine relation to choice probability (see Methods, [15]). We thus first describe the theory in terms of a local estimation task, and later apply the suitable transformations when we analyze data from binary discrimination tasks.

If the brain decodes signals linearly from multiple populations of neurons, its overall estimate can always be expressed as a linear combination of unbiased estimates from each population separately:

$$\hat{s} = \sum_{x=1}^{Z} a_x\, \hat{s}_x = \mathbf{a}^\top \hat{\mathbf{s}} \qquad (2)$$

where $\hat{\mathbf{s}} = [\hat{s}_1, \ldots, \hat{s}_Z]^\top$ is a vector of separate estimates from each of Z populations, and a is a vector of scaling factors for each estimate to create one overall estimate. We call these ‘scaling factors’ to distinguish them from the weights given to individual neurons. Thus the problem of decoding multiple populations can be viewed as one of scaling and combining estimates from individual populations. Note that this is equivalent to a single linear decoder of all populations together using w = [a1w1 ⋯ aZwZ].

For locally linear decoding, the assumption of no bias implies a normalization constraint on the weights and scaling factors. An unbiased estimate should match the stimulus on average, and so a change in the estimate should match the change in the stimulus on average: $\mathbf{w}^\top \mathbf{f}' = 1$. Analogously, unbiased scaling factors of individually unbiased estimates satisfy $\mathbf{a}^\top \mathbf{1} = 1$, where 1 is a vector of all ones and where each population estimate obeys the normalization $\mathbf{w}_x^\top \mathbf{f}'_x = 1$.
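The equivalence between scaling per-population estimates (Eq (2)) and using one concatenated weight vector w = [a1w1 ⋯ aZwZ] can be verified directly. This is a minimal sketch assuming two hypothetical populations, hypothetical sensitivities, per-population weights chosen simply as wx ∝ f′x, and s0 = 0; it checks that both routes give the same estimate on a single trial and that aT1 = 1 keeps the combined decoder unbiased.

```python
import numpy as np

rng = np.random.default_rng(1)
sizes = [30, 20]                     # hypothetical sizes of Z = 2 populations
f_primes = [rng.normal(size=n) for n in sizes]

# Per-population unbiased weights: w_x^T f'_x = 1 (here simply w_x ∝ f'_x)
ws = [fp / (fp @ fp) for fp in f_primes]

# Scaling factors sum to one so the combined estimate stays unbiased
a = np.array([0.7, 0.3])

# Equivalent single decoder of all neurons: w = [a_1 w_1, ..., a_Z w_Z]
w_full = np.concatenate([ax * wx for ax, wx in zip(a, ws)])
f_full = np.concatenate(f_primes)

# One trial's deviation from the reference response, r - f(s0), with s0 = 0
dr = rng.normal(size=sum(sizes))
chunks = np.split(dr, np.cumsum(sizes)[:-1])
s_pop = np.array([wx @ c for wx, c in zip(ws, chunks)])   # per-population estimates
s_combined = a @ s_pop                                     # Eq (2)
s_direct = w_full @ dr                                     # single concatenated decoder
```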

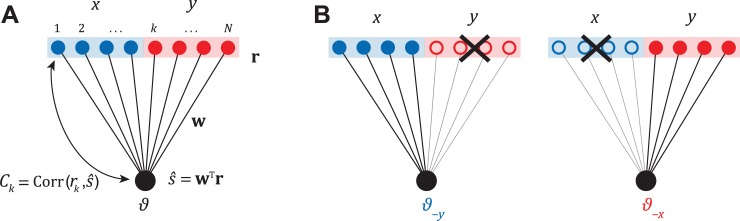

Using this decomposition into populations, we can dissociate how the weight patterns within each subpopulation (wx) and their scaling factors (ax) affect the output of the decoder. This mathematical separation is also appealing because it provides a common framework to synthesize results from experiments conducted at two fundamentally different levels of granularity. One class of experiments involves making fine measurements such as the correlation between trial-by-trial fluctuations in the activity rk of an individual neuron k and the animal’s decision (Fig 1A). The second class of experiments studies causation by measuring behavioural effects of inactivating certain candidate brain areas. For perceptual discrimination tasks, this is done by comparing coarse measures such as the animal’s behavioural performance before (ϑ) and after (ϑ−x) inactivating population x (Fig 1B).

Fig 1. Experimental strategies.

(A) An illustration of a feedforward network with linear readout. The decoder linearly combines the activity r of neurons in two populations x and y with weights w to produce an estimate $\hat{s}$ of the stimulus. The activity rk of individual neurons is correlated with $\hat{s}$, and this correlation is quantified by either the choice probability CPk or the closely related choice correlation Ck. In an optimal system, the weights w generate choice correlations that satisfy Eq (4). (B) In inactivation experiments, the neurons from each population are inactivated and the resulting changes in behavioural threshold are recorded.

We would like to use these experimental measurements to identify the relative behavioural contributions of various brain areas. Therefore we will present a technique to infer neuronal readout weights in multiple brain areas, focusing primarily on how to extract the scaling factors, ax, of the brain areas rather than the fine structures, wx, of their decoding weights.

Analysis of choice correlations

Choice correlation of a neuron k is defined as the correlation coefficient between its response rk and the animal’s estimate of the stimulus $\hat{s}$, across repeated trials with the same stimulus s. Substituting the estimate into this correlation, we find:

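$$C_k = \frac{\mathrm{Cov}(r_k, \hat{s} \mid s)}{\sqrt{\mathrm{Var}(r_k \mid s)\,\mathrm{Var}(\hat{s} \mid s)}} = \frac{(\Sigma \mathbf{w})_k}{\sigma_k \sqrt{\mathbf{w}^\top \Sigma\, \mathbf{w}}} \qquad (3)$$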

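where the noise variance for neuron k is $\sigma_k^2 = \Sigma_{kk}$. All neurons' choice correlations can then be expressed together in vector form as $\mathbf{C} = S^{-1} \Sigma \mathbf{w} / \sqrt{\mathbf{w}^\top \Sigma\, \mathbf{w}}$, where S is a diagonal matrix of the standard deviations σk.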

These choice correlations follow a particularly simple pattern if readout weights are locally optimal [15], as obtained from linear regression: w ∝ Σ−1f′. If we substitute these optimal weights into Eq (3), the inverse covariance from the weights cancels the covariance driving the choice correlations:

$$C_{k,\mathrm{opt}} = \frac{\vartheta}{\vartheta_k} \qquad (4)$$

where Ck,opt is the choice correlation of neuron k expected from optimal decoding, $\vartheta_k = \sigma_k / f'_k$ is the discrimination threshold of neuron k (or, equivalently, the standard deviation of an unbiased estimator based only on that neuron’s response), and ϑ is the behavioural discrimination threshold. If decoding were optimal, this behavioural threshold would match the standard deviation of a locally optimal unbiased estimator based on the whole population, ϑ = (f′TΣ−1f′)−1/2. By itself, such a match would be strong evidence for optimal decoding, but testing it would require recording from all relevant neurons in the brain. The relationship in Eq (4) is thus a far more practical test for optimal decoding.
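The cancellation that leads to Eq (4) can be confirmed numerically. This minimal sketch assumes a hypothetical random covariance and hypothetical sensitivities; it evaluates the vector form of Eq (3) for optimal weights and compares the result with ϑ/ϑk. Here ϑk = σk/f′k keeps the sign of f′k, so neurons with negative sensitivity get negative predicted choice correlations.

```python
import numpy as np

rng = np.random.default_rng(2)
N = 40
f_prime = rng.normal(size=N)
A = rng.normal(size=(N, N))
Sigma = A @ A.T / N + 0.5 * np.eye(N)
sigma = np.sqrt(np.diag(Sigma))          # per-neuron noise SDs (diagonal of S)


def choice_correlations(w, Sigma):
    """Vector form of Eq (3): C = S^{-1} Σ w / sqrt(w^T Σ w)."""
    sd = np.sqrt(np.diag(Sigma))
    return (Sigma @ w) / (sd * np.sqrt(w @ Sigma @ w))


# Locally optimal unbiased weights
w_opt = np.linalg.solve(Sigma, f_prime)
w_opt /= w_opt @ f_prime
C = choice_correlations(w_opt, Sigma)

theta = np.sqrt(w_opt @ Sigma @ w_opt)   # behavioural threshold under optimal decoding
theta_k = sigma / f_prime                # single-neuron thresholds ϑ_k = σ_k / f'_k
C_opt_predicted = theta / theta_k        # Eq (4)
```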

If all neurons from multiple populations satisfy the above equation, this gives us strong evidence that the neuronal weights — and consequently also the relative scaling factors a of different populations — are optimal. As we will see later, the exact values of a can then be directly extracted from the behavioural thresholds following inactivation of those areas.

The pattern of choice correlations generated by any generic suboptimal decoder is more complicated, as it depends explicitly on the structure of noise covariance and the readout weights [14]. For a population of N neurons, the noise covariance Σ describes, for a fixed stimulus, the power along N orthogonal modes of variation. Each of these modes could contribute to the overall choice correlation, depending on how strongly that mode is decoded. We express the decoding weights of a suboptimal decoder in terms of the covariance, as w = (Σ−1g)/f′TΣ−1g where g could be any vector in $\mathbb{R}^N$. The normalization ensures that this decoder is locally unbiased, satisfying wTf′ = 1.

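Substituting these weights into Eq (3) gives, for $\mathbf{f}'^\top \Sigma^{-1} \mathbf{g} > 0$,

$$C_k = \frac{g_k}{\sigma_k \sqrt{\mathbf{g}^\top \Sigma^{-1} \mathbf{g}}} \qquad (5)$$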

Note that this recovers the optimal expression given by Eq (4) if g is replaced by f′. We now rewrite g in the basis of the eigenmodes ui of the covariance Σ, using $\mathbf{g} = \sum_i (\mathbf{u}_i^\top \mathbf{g})\, \mathbf{u}_i$. By multiplying and dividing by $\mathbf{u}_i^\top \mathbf{f}'$, we can decompose the choice correlations for a suboptimal decoder into a weighted combination of optimal choice correlation patterns arising from each eigenmode:

$$C_k = \sum_i \beta_i\, C^{\mathrm{opt}}_{ik} \qquad (6)$$

where

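$$C^{\mathrm{opt}}_{ik} = \frac{u_{ik}\, (\mathbf{u}_i^\top \mathbf{f}')}{\sigma_k \sqrt{\mathbf{f}'^\top \Sigma^{-1} \mathbf{f}'}} \qquad (7)$$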

is essentially the i'th noise mode ui rescaled by the individual neural sensitivity, and $\beta_i = \dfrac{\mathbf{u}_i^\top \mathbf{g}}{\mathbf{u}_i^\top \mathbf{f}'} \sqrt{\dfrac{\mathbf{f}'^\top \Sigma^{-1} \mathbf{f}'}{\mathbf{g}^\top \Sigma^{-1} \mathbf{g}}}$. These multipliers βi reflect the extent of suboptimality. When decoding weights are optimal, the readout direction (again in units of the covariance) is g = f′, leading to βi = 1 for all i. Thus, for optimal decoding the above equation reduces to Eq (4).
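The eigenmode decomposition can be checked by building a suboptimal decoder from an arbitrary readout direction g. This sketch assumes a hypothetical covariance, hypothetical sensitivities and a random g; it computes per-mode optimal patterns Copt (one column per mode) and multipliers βi, then confirms that their weighted sum reproduces the choice correlations of Eq (3), and that summing the columns alone recovers the optimal pattern. Flipping the sign of g leaves the normalized decoder unchanged, so we fix f′TΣ−1g > 0 to match the sign convention of Eq (5).

```python
import numpy as np

rng = np.random.default_rng(3)
N = 25
f_prime = rng.normal(size=N)
A = rng.normal(size=(N, N))
Sigma = A @ A.T / N + 0.5 * np.eye(N)
sigma = np.sqrt(np.diag(Sigma))
lam, U = np.linalg.eigh(Sigma)            # columns of U are the eigenmodes u_i

# Arbitrary readout direction for a suboptimal decoder
g = rng.normal(size=N)
if f_prime @ np.linalg.solve(Sigma, g) < 0:
    g = -g                                # same normalized decoder, consistent sign

w = np.linalg.solve(Sigma, g)
w /= w @ f_prime                          # unbiased: w^T f' = 1
C = (Sigma @ w) / (sigma * np.sqrt(w @ Sigma @ w))   # Eq (3)

J = f_prime @ np.linalg.solve(Sigma, f_prime)        # f'^T Σ^{-1} f'
Q = g @ np.linalg.solve(Sigma, g)                    # g^T Σ^{-1} g
proj_f = U.T @ f_prime                               # u_i^T f'
proj_g = U.T @ g                                     # u_i^T g

# Per-mode optimal choice-correlation patterns (column i for mode i)
C_opt = U * proj_f[None, :] / (sigma[:, None] * np.sqrt(J))
beta = (proj_g / proj_f) * np.sqrt(J / Q)           # multipliers β_i

C_reconstructed = C_opt @ beta                       # weighted sum over modes
```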

In principle, the multipliers βi, and thus properties of the decoding weights, can be estimated by regressing measured choice correlations against individual columns of the matrix of choice correlations Copt predicted by optimal decoding. In practice, it is very difficult to estimate all of the multipliers βi because the components depend on the individual noise modes of Σ (Eq (7)). Directly measuring Σ is a notoriously challenging task [20] that involves simultaneously recording the activity of a large population of neurons, and is nearly impossible for certain areas due to the geometry of the brain. Even if such recordings could be performed, it would be challenging to get an accurate assessment of the fine structure of the covariance with limited data, since the number of parameters to measure increases with population size faster than the number of measurements. Fortunately, since neuronal choice correlations are measurably large, it follows that one can infer the animal’s decoding weights with reasonable precision by estimating the few leading multipliers that depend only on the most dominant modes of covariance. This is because if the correlated noise modes with small variance were to dominate the decoder, then only a tiny fraction of each neuron’s variations would propagate to the decision, leading to immeasurably small choice correlations[15] (S1 Fig). It is possible to model properties of the leading modes of covariance without large-scale recordings, and we will consider two different noise models: extensive information and limited information.
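The argument that low-variance modes produce tiny choice correlations has a simple quantitative form: if the decoder reads out only eigenmode ui, Eq (3) gives |Ck| = √λi |uik|/σk, so choice correlations scale with the square root of that mode's variance. The sketch below illustrates this under a hypothetical covariance with three large modes and many small ones; the spectrum and sensitivities are invented for illustration.

```python
import numpy as np

rng = np.random.default_rng(4)
N = 100

# Hypothetical covariance: three large noise modes, the rest small
Q, _ = np.linalg.qr(rng.normal(size=(N, N)))          # orthonormal eigenmodes (columns)
lam = np.concatenate([[20.0, 10.0, 5.0], np.full(N - 3, 0.05)])
Sigma = (Q * lam) @ Q.T
sigma = np.sqrt(np.diag(Sigma))
f_prime = rng.normal(size=N)


def cc_for_mode(i):
    """Choice correlations (Eq 3) when the decoder reads out only eigenmode u_i."""
    u = Q[:, i]
    w = u / (u @ f_prime)                              # unbiased readout confined to mode i
    return (Sigma @ w) / (sigma * np.sqrt(w @ Sigma @ w))


rms_top = np.sqrt(np.mean(cc_for_mode(0) ** 2))        # largest-variance mode
rms_bottom = np.sqrt(np.mean(cc_for_mode(N - 1) ** 2)) # a smallest-variance mode
```

With these numbers, decoding the largest mode yields choice correlations roughly √(20/0.05) = 20 times larger than decoding a smallest mode.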

Models of neural variability

Extensive information model

A common way to measure important components of the covariance structure is through pairwise recordings. Noise covariance measured between pairs of neurons can be modeled as a function of their response properties, such as the difference in their preferred stimulus or the similarity of their tuning functions, to obtain empirical models of noise.

One such model is limited-range noise correlations[25–30], so called because they are proportional to signal correlation and thereby limited in range to pairs with similar tuning. We use this model to approximate a full noise covariance for all neurons in the population[31,32]. Specifically, we assume that the typical noise correlation coefficient between responses of two neurons i and j is given by

$$\rho^{\mathrm{noise}}_{ij} = m\, \rho^{\mathrm{signal}}_{ij}, \quad i \neq j \qquad (8)$$

where $\rho^{\mathrm{signal}}_{ij}$ is the signal correlation, i.e. the correlation coefficient between neurons' mean responses over a uniform distribution of stimuli s, and the proportionality m between signal and noise correlations can be empirically determined (see Methods). To match Poisson-like properties of neural responses, model variances are set equal to the mean responses, and this scaling produces a covariance of $\Sigma_{ij} = \rho^{\mathrm{noise}}_{ij} \sqrt{f_i(s_0)\, f_j(s_0)}$, with $\rho^{\mathrm{noise}}_{ii} = 1$. This has been a common noise model in the study of population codes[25–30]. Although the resulting covariance matrix is unlikely to capture fine details accurately, if the model is reasonable then most of the variance would be captured by the leading modes.
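A limited-range covariance of this kind can be assembled from tuning curves alone. The sketch below is illustrative, assuming hypothetical von Mises tuning curves, a circular stimulus variable, and an invented slope m = 0.2 (the paper determines m empirically); it computes signal correlations over a uniform stimulus grid, applies Eq (8), and scales by Poisson-like standard deviations √f. Setting the diagonal to 1 makes the correlation matrix m·ρsignal + (1 − m)I, which is positive definite.

```python
import numpy as np

N = 64
prefs = np.linspace(-np.pi, np.pi, N, endpoint=False)    # preferred stimuli
stims = np.linspace(-np.pi, np.pi, 360, endpoint=False)  # uniform stimulus grid


def tuning(s):
    """Hypothetical von Mises tuning curves (mean spike counts), shape (len(s), N)."""
    return 2.0 + 20.0 * np.exp(2.0 * (np.cos(s[:, None] - prefs[None, :]) - 1.0))


mean_resp = tuning(stims)                  # (n_stimuli, N)
rho_signal = np.corrcoef(mean_resp.T)      # signal correlations across the stimulus set

m = 0.2                                    # hypothetical signal-to-noise correlation slope
rho_noise = m * rho_signal                 # Eq (8) for i != j
np.fill_diagonal(rho_noise, 1.0)

s0 = 0.0
f0 = tuning(np.array([s0]))[0]             # mean responses at the reference stimulus
sd = np.sqrt(f0)                           # Poisson-like: variance equals mean
Sigma = rho_noise * np.outer(sd, sd)       # Σ_ij = ρ_ij sqrt(f_i f_j)

lam = np.linalg.eigvalsh(Sigma)[::-1]      # eigenvalues, largest first
frac_top = lam[:5].sum() / lam.sum()       # fraction of variance in the five leading modes
```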

In an extensive information model, the amount of information encoded by the neural activity grows with population size [33–35], hence the name. If the brain extracts information by a decoder restricted only to the noisiest subspace given by these leading noise modes, this would recover just a tiny fraction of the total available information. Although this is radically suboptimal, this is the only way an extensive information model can explain the large magnitude of neuronal choice correlations[15].

Limited information model

Extensive information models are based on measurements of neural populations but, as we mentioned above, current recordings are not sufficient to measure or even infer the covariance matrix in vivo. It is therefore possible that information in cortex is not extensive. Indeed, the extensive information model conflicts with the fact that cortical neurons receive their inputs from a smaller population of neurons. The cortex must then inherit not only the input signal but also any noise in that input. This generates information-limiting correlations [15,20] in the cortex, a form of correlated noise that looks exactly like the signal and thus cannot be averaged away by adding more cortical neurons. Since inferring the brain’s decoding weights from choice-related activity depends on the noise covariance, we also consider the consequences of information-limiting correlations.

For fine discrimination between two neighboring stimuli s and s + δs, the signal is given by the change in mean population responses f(s + δs) − f(s) ≈ δs f′(s). Information-limiting correlations for this task thus fluctuate along the direction f′, generating a covariance containing differential correlations [20] — that is, a covariance component proportional to f′f′T. The constant of proportionality, which we denote as ε, represents the variance of information-limiting correlations. According to this model, the total noise covariance ΣIL for the information-limiting model can be decomposed into a general noise covariance Σ (which we assume follows the extensive information model) and the information-limiting component:

$$\Sigma_{\mathrm{IL}} = \Sigma + \varepsilon\, \mathbf{f}' \mathbf{f}'^\top \qquad (9)$$

The variance of a locally optimal linear estimator based on a neural population with this noise covariance is given by [20]:

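$$\left(\mathbf{f}'^\top \Sigma_{\mathrm{IL}}^{-1} \mathbf{f}'\right)^{-1} = \left(\mathbf{f}'^\top \Sigma^{-1} \mathbf{f}'\right)^{-1} + \varepsilon \qquad (10)$$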

where we have used the Sherman-Morrison lemma to invert ΣIL. The estimator variance due to the extensive information term (f′TΣ−1f′)−1 shrinks with population size [20,33,34], and is eventually dominated by the information-limiting noise variance ε. With increasing population size, both the signal f′ and the information-limiting component εf′f′T grow identically, eventually resulting in no further improvement in signal-to-noise ratio, and thus no improvement in discriminability. In general, ε could be very small, and hence information-limiting correlations may be very hard to detect with limited data as they are easily swamped by noise arising from other sources. Nevertheless, this noise has enormous implications for decoding large populations because it limits the total information to 1/ε.
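The saturation described above is easy to see numerically. This sketch assumes, for simplicity, an extensive-information part Σ made of independent noise with hypothetical variances, random hypothetical sensitivities, and an invented ε = 0.5; for each population size it inverts ΣIL directly and compares with the Sherman-Morrison form of Eq (10). As N grows, the optimal variance approaches ε instead of shrinking to zero.

```python
import numpy as np

rng = np.random.default_rng(5)
eps = 0.5                                  # hypothetical information-limiting variance ε


def optimal_variance(N, eps):
    """Optimal linear estimator variance for Σ_IL = Σ + ε f' f'^T, computed two ways."""
    f_prime = rng.normal(size=N)
    Sigma = np.diag(rng.uniform(1.0, 3.0, size=N))        # extensive part (independent noise here)
    Sigma_IL = Sigma + eps * np.outer(f_prime, f_prime)   # Eq (9)
    direct = 1.0 / (f_prime @ np.linalg.solve(Sigma_IL, f_prime))
    via_sm = 1.0 / (f_prime @ np.linalg.solve(Sigma, f_prime)) + eps   # Eq (10)
    return direct, via_sm


results = {N: optimal_variance(N, eps) for N in (10, 100, 1000)}
```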

Coarse-grained noise models for multiple populations

In this section we describe these two noise correlation models coarsely, at the population level, so that we can use the shared fluctuations between populations to reveal the decoder's scaling factors. To attribute scaling factors to each of Z decoded populations, one must consider at least Z modes of the noise covariance, one per population. We will restrict our attention to decoders inhabiting only these leading modes. If there are Z dominant noise modes and they are correlated across populations, then we can approximate Σ with a rank-Z noise covariance matrix composed of both independent and correlated noise between the populations.

Multi-population limited information model

When dealing with multiple populations (e.g., in different brain areas), one has to keep in mind that although they may together receive limited information, they need not inherit it from exactly the same upstream neurons. Therefore, we construct a more general model allowing the different populations to receive both distinct and shared information. To describe this, we separate a low-rank information-limiting component from a general noise covariance Σ (which we assume follows the extensive information model),

$$\Sigma_{\mathrm{IL}} = \Sigma + \mathbf{F} \mathbf{E} \mathbf{F}^\top \qquad (11)$$

Here F is an N×Z block-diagonal matrix

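$$\mathbf{F} = \begin{pmatrix} \mathbf{f}'_1 & \mathbf{0} & \cdots & \mathbf{0} \\ \mathbf{0} & \mathbf{f}'_2 & \cdots & \mathbf{0} \\ \vdots & & \ddots & \vdots \\ \mathbf{0} & \mathbf{0} & \cdots & \mathbf{f}'_Z \end{pmatrix} \qquad (12)$$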

and $\mathbf{f}'_z$ is a vector of stimulus sensitivities for all neurons in population z, with elements $f'_{zk} = \partial f_{zk}(s)/\partial s$ evaluated at the reference stimulus, and E is a Z×Z covariance for information-limiting noise in each population. The covariance between two neurons in this more general information-limiting model would still be proportional to the product of the derivatives of their tuning curves. However, the constant of proportionality varies depending on whether the neurons are both from the same population x (εxx), both from y (εyy), or from different populations (εxy), where εxy denotes the (x, y) element of E:

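$$(\Sigma_{\mathrm{IL}})_{ij} = \Sigma_{ij} + \varepsilon_{xy}\, f'_i f'_j, \qquad i \in x,\; j \in y \qquad (13)$$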

Analogous to the information-limiting noise variance ε in the single population case (Eq (10)), elements of E once again determine the variance of the locally optimal linear estimators (and thus optimal discrimination thresholds) for individual populations, as well as for all populations together (S2 Text). We call the noise in each population x “locally information-limiting noise” because it is local to one population x. For large populations with this noise structure, the total information content within population x alone is limited to 1/εxx.
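For a numerical illustration of the multi-population model, the sketch below builds F as in Eq (12) and ΣIL as in Eq (11) for two hypothetical populations with an invented E and an independent-noise extensive part. By the Woodbury identity, with K = FTΣ−1F, the optimal variance for all populations together is [1T(K−1 + E)−1 1]−1, which approaches 1/(1TE−1 1) for large populations; this is our own derivation shown for illustration and is not claimed to reproduce S2 Text. Restricting to one population recovers Eq (10) with ε replaced by εxx.

```python
import numpy as np

rng = np.random.default_rng(6)
sizes = [40, 60]                                  # hypothetical population sizes (Z = 2)
Z, N = len(sizes), sum(sizes)
f_primes = [rng.normal(size=n) for n in sizes]

# Block-diagonal F (Eq 12): column z holds f'_z in the rows of population z
F = np.zeros((N, Z))
start = 0
for z, fp in enumerate(f_primes):
    F[start:start + len(fp), z] = fp
    start += len(fp)

E = np.array([[0.4, 0.1],
              [0.1, 0.9]])                        # hypothetical information-limiting covariance
Sigma = np.diag(rng.uniform(1.0, 3.0, size=N))    # extensive part (independent noise here)
Sigma_IL = Sigma + F @ E @ F.T                    # Eq (11)

f_prime = F @ np.ones(Z)                          # full-population sensitivity vector
var_all = 1.0 / (f_prime @ np.linalg.solve(Sigma_IL, f_prime))

# Woodbury form and its large-population limit
K = F.T @ np.linalg.solve(Sigma, F)
ones = np.ones(Z)
var_woodbury = 1.0 / (ones @ np.linalg.solve(np.linalg.inv(K) + E, ones))
var_limit = 1.0 / (ones @ np.linalg.solve(E, ones))

# Population 0 alone: Eq (10) with ε replaced by ε_00
n0, f0 = sizes[0], f_primes[0]
var_pop0 = 1.0 / (f0 @ np.linalg.solve(Sigma_IL[:n0, :n0], f0))
var_pop0_eq10 = 1.0 / (f0 @ np.linalg.solve(Sigma[:n0, :n0], f0)) + E[0, 0]
```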

By itself, this local noise does not guarantee that the complete population is globally information-limited: that depends on how the noise in different populations is correlated. For example, input from another brain area might add some locally information-limiting noise[36], which could in principle be removed again by appropriately decoding both brain areas together. Depending on the covariance between information-limiting noise across populations, εxy, different populations may contain completely redundant, independent, or synergistic information [37,38]. However, the information in all populations together may be limited as well, ultimately by the f′f′T component of the covariance Σ. We call this component “globally information-limiting noise”.

Correlations that limit information also cause redundancy. As a consequence, many different decoding weights extract essentially the same information. The population is then robust to some amount of suboptimal decoding, which makes it easier to achieve near-optimal behavioural performance [15]. In the locally information-limited noise model for multiple populations described above, this robustness also holds within each population individually. In this case, a separate decoder for each population x produces an estimate that is near-optimal for the corresponding area. Importantly, however, these estimates may have different variances, and may even covary, so they need to be properly combined to produce a good single estimate according to Eq (2). While information-limiting correlations within each area would make the system generally robust to the choice of weight patterns wx, suboptimality could yet arise from an incorrect scaling ax of each individually near-optimal estimate. This is because after the dimensionality reduction from large redundant populations down to a single unbiased estimate per population, most of the redundancy has been squeezed out: just one degree of freedom per population remains for the decoder, so different ways of combining the estimates are not equivalent.
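The cost of mis-scaling near-optimal population estimates can be made concrete. This sketch assumes a hypothetical 2×2 covariance E of the two population estimates; the combined estimate aTŝ has variance aTEa, minimized under aT1 = 1 by a ∝ E−11 (standard minimum-variance weighting). It compares this with equal scaling and with the optimal factors assigned to the wrong areas.

```python
import numpy as np

E = np.array([[0.4, 0.1],
              [0.1, 0.9]])        # hypothetical covariance of the per-population estimates
ones = np.ones(2)


def combined_variance(a, E):
    """Variance a^T E a of the combined estimate a^T s_hat (Eq 2), assuming a^T 1 = 1."""
    return float(a @ E @ a)


a_opt = np.linalg.solve(E, ones)
a_opt /= a_opt @ ones             # minimum-variance unbiased scaling: a ∝ E^{-1} 1
a_equal = np.array([0.5, 0.5])    # naive equal scaling
a_swapped = a_opt[::-1].copy()    # right magnitudes, assigned to the wrong areas

variances = {name: combined_variance(a, E)
             for name, a in [("optimal", a_opt), ("equal", a_equal), ("swapped", a_swapped)]}
```

Here the optimal scaling gives variance 0.35/1.1 ≈ 0.318, equal scaling 0.375, and swapped scaling about 0.545, even though each population estimate is itself unchanged.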

Multi-population extensive information model

For the extensive information model, we can also define a useful rank-Z approximation of the relevant components of the noise covariance Σ. Let ux denote the leading eigenvector of population x's covariance Σxx, with corresponding eigenvalue λx. Note that these are not the eigenvectors of the full covariance matrix, just of the covariances for each population separately. If, in the full covariance, the leading modes of different populations x and y interact to produce correlated noise with strength λxy, then we approximate the full covariance by Σ = ULUT where, analogously with Eq (12),

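$$\mathbf{U} = \begin{pmatrix} \mathbf{u}_1 & \mathbf{0} & \cdots & \mathbf{0} \\ \mathbf{0} & \mathbf{u}_2 & \cdots & \mathbf{0} \\ \vdots & & \ddots & \vdots \\ \mathbf{0} & \mathbf{0} & \cdots & \mathbf{u}_Z \end{pmatrix} \qquad (14)$$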

and the Z×Z matrix

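$$\mathbf{L} = \begin{pmatrix} \lambda_1 & \lambda_{12} & \cdots & \lambda_{1Z} \\ \lambda_{12} & \lambda_2 & \cdots & \lambda_{2Z} \\ \vdots & & \ddots & \vdots \\ \lambda_{1Z} & \lambda_{2Z} & \cdots & \lambda_Z \end{pmatrix} \qquad (15)$$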

In the extensive information model, an optimal decoder would largely avoid the largest noise modes. However, optimal decoding of the extensive model is thoroughly ruled out by experimental measurements described below (see section ‘Test for Optimality’). Thus, for our coarse-grained multi-population model, we assume the brain's decoder is limited to the noisiest mode for each population, while it has complete freedom to combine the estimates derived in this way from each population. Future refinements of this coarse-grained framework could consider decoding other modes per population instead, or more modes.

Unlike elements of information-limiting noise E in Eq (13), elements of L cannot be directly related to the variance of the output estimator because the latter depends not only on the magnitude of noise (λx) but also on the signal ($\mathbf{u}_x^\top \mathbf{f}'_x$). But we can rescale each element of L to obtain E, and express a low-rank approximation of the covariance Σ in terms of E as:

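$$\Sigma \approx \mathbf{U} (\mathbf{U}^\top \mathbf{F})\, \mathbf{E}\, (\mathbf{F}^\top \mathbf{U})\, \mathbf{U}^\top \qquad (16)$$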

where E = (UTF)−1L(UTF)−1, so the elements of E are related to L as $\varepsilon_{xx} = \lambda_x / (\mathbf{u}_x^\top \mathbf{f}'_x)^2$ and $\varepsilon_{xy} = \lambda_{xy} / [(\mathbf{u}_x^\top \mathbf{f}'_x)(\mathbf{u}_y^\top \mathbf{f}'_y)]$. Just like the case of information-limiting noise, the elements of E again determine optimal thresholds according to S2 Text (Eqn (S2.1) – (S2.2)), but with one key distinction: whereas those thresholds correspond to the output of optimal decoding for each population in the case of information-limiting noise, these correspond to outputs of optimal decoding only within the subspace of the Z populations' leading modes in the case of the extensive information model. Note that we can use the formulation in Eq (16) to derive information-limiting noise (Eq (11)) as a special case by using $\mathbf{u}_x = \mathbf{f}'_x / \lVert \mathbf{f}'_x \rVert$ to recover Σ = FEFT.
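The rescaling from L to E, and the special case that recovers FEFT, can be checked with small matrices. This sketch assumes two hypothetical populations with random unit-norm leading modes ux and an invented L; the helper `block_columns` is a hypothetical utility for stacking per-population vectors into the block-diagonal layout of Eqs (12) and (14). Because U and F share the same block structure, UTF is diagonal with entries uxTf′x.

```python
import numpy as np

rng = np.random.default_rng(7)
sizes = [30, 30]


def block_columns(vectors):
    """Hypothetical helper: stack per-population vectors into an N x Z block-diagonal matrix."""
    M = np.zeros((sum(len(v) for v in vectors), len(vectors)))
    start = 0
    for z, v in enumerate(vectors):
        M[start:start + len(v), z] = v
        start += len(v)
    return M


f_primes = [rng.normal(size=n) for n in sizes]
modes = [rng.normal(size=n) for n in sizes]
F = block_columns(f_primes)
U = block_columns([u / np.linalg.norm(u) for u in modes])   # unit-norm leading modes u_x

L = np.array([[4.0, 1.5],
              [1.5, 2.0]])                   # hypothetical λ_x on the diagonal, λ_xy off it

D = U.T @ F                                  # diagonal, entries u_x^T f'_x
E = np.linalg.inv(D) @ L @ np.linalg.inv(D)  # E = (U^T F)^{-1} L (U^T F)^{-1}
Sigma_lowrank = U @ L @ U.T                  # rank-Z approximation Σ ≈ U L U^T
Sigma_from_E = (U @ D) @ E @ (U @ D).T       # Eq (16)

# Special case u_x = f'_x / ||f'_x||: the same construction gives F E F^T
U_f = block_columns([fp / np.linalg.norm(fp) for fp in f_primes])
D_f = U_f.T @ F
E_f = np.linalg.inv(D_f) @ L @ np.linalg.inv(D_f)
```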

Coarse-grained choice correlations

These coarse-grained representations of population variability reflect the dominant decoded mode in each population. This level of description allows us to focus on how information is combined between populations. If the brain indeed combines activity from different areas suboptimally, then simplifying Eq (6) in the presence of information-limiting correlations gives choice correlations within each area that are not equal to the optimal choice correlations, but are still proportional to them.

$$C_k = \beta_x\, \frac{\vartheta}{\vartheta_k}, \qquad k \in x \qquad (17)$$

where $\beta_x = (\mathbf{E}\mathbf{a})_x / \vartheta^2$ and $\vartheta^2 = \mathbf{a}^\top \mathbf{E}\, \mathbf{a}$. Under conditions of suboptimality, choice correlations in different brain areas x may have different multipliers βx which depend on the scaling of the brain areas and on the covariance between the estimates that can be derived from them. These multipliers βx can be directly identified by regressing measured choice correlations against ϑ/ϑk, the choice correlations predicted for optimal decoding. S4 Text shows that a similar relation holds for the extensive information model when only the leading mode of each population is decoded (S4 Text – Eqn (S4.1)).
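The regression step can be sketched with simulated data. This example assumes that each population estimate fluctuates with a hypothetical covariance E and that neurons in area x share the population noise in proportion to f′k, in which case Eq (3) gives βx = (Ea)x/(aTEa); it then generates hypothetical measured choice correlations as βx·(ϑ/ϑk) plus measurement noise and recovers each βx as a least-squares slope through the origin. By construction, Σx axβx = 1, and optimal scaling a ∝ E−11 gives βx = 1 in every area.

```python
import numpy as np

rng = np.random.default_rng(8)
E = np.array([[0.4, 0.1],
              [0.1, 0.9]])          # hypothetical covariance of population estimates
a = np.array([0.3, 0.7])            # hypothetical (suboptimal) scaling factors, summing to 1
theta2 = a @ E @ a                  # ϑ² = a^T E a under this coarse-grained model
beta_true = (E @ a) / theta2        # β_x = (E a)_x / ϑ²

# Hypothetical recordings: optimal-decoding predictions ϑ/ϑ_k for neurons in each area
n_per_area = 200
C_pred = [rng.uniform(0.0, 0.3, size=n_per_area) for _ in range(2)]
C_meas = [b * c + rng.normal(scale=0.02, size=n_per_area) for b, c in zip(beta_true, C_pred)]

# Least-squares slope through the origin, one per area
beta_hat = np.array([(c @ y) / (c @ c) for c, y in zip(C_pred, C_meas)])

# Reference point: optimal scaling makes every multiplier equal to 1
a_opt = np.linalg.solve(E, np.ones(2))
a_opt /= a_opt.sum()
beta_opt = (E @ a_opt) / (a_opt @ E @ a_opt)
```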

Combining choice correlations and inactivation effects to infer decoding of distinct populations

In the previous section, we showed how to reduce the fine structure of choice correlations down to one number for each population, the slope βx of its choice correlation. We will now show how these multipliers can be used, together with the behavioural thresholds ϑ following inactivations of different brain areas, to infer the relative scaling of their weights a. First we describe the main approach in the general setting with multiple populations, and then we specialize to the particular case of two populations and apply it to our data.

Previous work has shown how one can combine knowledge of choice correlations and neural noise correlations to estimate the decoding weights of individual neurons[14]. If decoded neural responses in each population are dominated by a single mode, then we can extend this concept to the population level. The population-level analog of a neural response rk is an estimate derived from population x. The analog of choice correlations Ck are the slopes βx that relate observed and optimal choice correlations, and the analog of noise covariance Σij between neurons i and j is the covariance εxy (Eqs (11) & (14)) between estimates and derived from distinct populations.

Unlike neural noise correlations, the population-level noise correlations E cannot be measured directly. Nonetheless, we can infer them indirectly from inactivation experiments, in which behavioural thresholds are measured after the decoder scaling afforded to each brain area x is altered by a factor ρxϕ in inactivation experiment ϕ. In our feedforward linear model, scaling the activity of area x by ρxϕ is mathematically equivalent to scaling its decoder scaling ax by the same factor. Totally inactivating an area is equivalent to setting its scaling to zero, but here we also permit partial inactivation of multiple brain areas. For now, we assume these inactivation factors are controlled by the experimenter, and thus known, although later we will incorporate some uncertainty about these inactivations.

Each such experiment provides one constraint on the unknown population properties, according to

| (18) |

where θϕ is the behavioural threshold during the ϕ’th inactivation experiment, aϕ is the vector of decoder scaling factors for the different populations with components axϕ = axρxϕ, and the l1-normalization ensures that the decoder remains unbiased after inactivation (as observed experimentally[18,22]). In such experiments one could also measure the slopes βxϕ of the choice correlations for multiple populations to provide additional measurement constraints:
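To make these constraints concrete, the sketch below implements a forward model under assumptions of our own rather than a transcription of Eqs (18) and (19). Each population estimate ŝx is locally unbiased. The combined estimate is Σx axϕ ŝx / Σx axϕ (the denominator equals the l1 norm when all scalings are positive). The squared threshold is the variance of that estimate, aϕᵀEaϕ/(Σx axϕ)². Each slope is βxϕ = (E aϕ)x (Σy ayϕ)/(aϕᵀ E aϕ), which follows if neuron k in population x covaries with ŝy only through fk′εxy. Two sanity checks follow from these forms: optimal weights a ∝ E⁻¹1 give βx = 1 for every population, and silencing all but one population returns that population's εxx. The values of E and a are hypothetical.

```python
import numpy as np

def predict(E, a, rho):
    """Squared threshold and CC slopes after scaling decoder weights by rho.

    E   : (Z, Z) covariance of the population estimates s_x (assumed unbiased)
    a   : (Z,) intact decoder scalings a_x
    rho : (Z,) inactivation factors (1 = intact, 0 = fully silenced)
    """
    a_phi = np.asarray(a, dtype=float) * np.asarray(rho, dtype=float)
    total = a_phi.sum()                      # normalization keeps the estimate unbiased
    quad = a_phi @ E @ a_phi
    theta_sq = quad / total**2               # variance of the combined estimate
    beta = (E @ a_phi) * total / quad        # slope of measured vs optimal CCs per area
    return theta_sq, beta

E = np.array([[5.0, 3.0],
              [3.0, 12.0]])                  # hypothetical population-level noise
a = np.array([2.0, 1.0])                     # hypothetical intact scalings (aM, aV)

# Slopes of a fully silenced area are returned but could not be measured in practice.
for rho in ([1, 1], [1, 0.5], [1, 0], [0, 1]):
    theta_sq, beta = predict(E, a, rho)
    print(rho, round(theta_sq, 3), np.round(beta, 3))
```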

| (19) |

Notice that Eqs (18) and (19) can be written as multivariate polynomials up to cubic order jointly in the unknowns E and a. Altogether there are Z(Z+1)/2 unknowns for the covariance matrix E, and another Z unknowns for the intact brain's decoder scaling factors a. As long as the number of independent threshold and slope measurements is at least as large as the number of unknowns, the system of Eqs (18) and (19) can be solved numerically (S2 Fig), revealing the correct decoder scaling for multiple populations. Measuring the slopes of choice correlations during inactivation experiments provides more data points from a given set of inactivation experiments than measuring the thresholds alone.
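As a sketch of such a numerical solution for Z = 2, the code below fits synthetic measurements with scipy.optimize.least_squares. It uses an assumed forward model (squared thresholds aϕᵀEaϕ/(Σx axϕ)² and slopes (Eaϕ)x(Σy ayϕ)/(aϕᵀEaϕ)), which is our assumption rather than the exact form of Eqs (18) and (19). Two further choices are ours. E is parameterized through a Cholesky factor so that it stays positive semidefinite. aV is fixed to 1 because, under these forms, thresholds and slopes do not change when a is rescaled, so only the ratio aM/aV is identifiable. Slopes are dropped for fully silenced areas, whose neurons cannot be recorded. The inactivation factors and ground truth are hypothetical.

```python
import numpy as np
from scipy.optimize import least_squares

def predict(E, a, rho):
    """Assumed forward model: squared threshold and CC slopes for weights a * rho."""
    a_phi = a * rho
    total = a_phi.sum()
    quad = a_phi @ E @ a_phi
    return quad / total**2, (E @ a_phi) * total / quad

def unpack(p):
    """Parameters -> (E, a). E = L L^T stays PSD; aV is fixed to 1 (scale invariance)."""
    l11, l21, l22, r = p
    chol = np.array([[l11, 0.0], [l21, l22]])
    return chol @ chol.T, np.array([r, 1.0])

# Hypothetical experiments: inactivation factors (rho_M, rho_V).
experiments = [np.array(x, dtype=float)
               for x in ([1, 1], [1, 0.5], [0.5, 1], [1, 0], [0, 1])]

# Synthetic "measurements" from a known ground truth.
E_true = np.array([[5.0, 3.0], [3.0, 12.0]])
a_true = np.array([2.0, 1.0])
data = [predict(E_true, a_true, rho) for rho in experiments]

def residuals(p):
    E, a = unpack(p)
    res = []
    for rho, (theta_sq_obs, beta_obs) in zip(experiments, data):
        theta_sq, beta = predict(E, a, rho)
        res.append(theta_sq - theta_sq_obs)
        active = rho > 0                      # silenced areas have no measurable CCs
        res.extend(beta[active] - beta_obs[active])
    return np.array(res)

# Initial guess: full inactivations give eps_MM and eps_VV directly.
p0 = [np.sqrt(data[3][0]), 0.0, np.sqrt(data[4][0]), 1.0]
fit = least_squares(residuals, p0, xtol=1e-12, ftol=1e-12, gtol=1e-12)
E_hat, a_hat = unpack(fit.x)
print(np.round(E_hat, 4), round(a_hat[0] / a_hat[1], 4))
```

The system is polynomial, so it can have several exact roots; starting from the values implied by the full inactivations is a simple way to stay near the physically sensible one.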

Two population solution

When only two populations of neurons, x and y, are relevant for a particular task, this general approach to identifying their relative scaling can be simplified. We next describe this simpler two-population theory, and then apply it to data from the vestibular system.

If we can completely inactivate one brain area, then from Eq (1), the animal’s total estimate would be equal to either or , depending on which area is inactivated. The resultant behavioural threshold would simply reflect the variance of the remaining estimate, which is equal to the magnitude of dominant decoded noise within the active area, so and . If populations x and y are uncorrelated (εxy = 0), then the ratio of weight scaling factors can be factorized into a product of ratios (S5 Text):

| (20) |

where the two independent factors represent outcomes of correlational and causal studies. If readout is optimal, then the multipliers βx and βy are both equal to one, so . This is consistent with the general belief that the behavioural effects of inactivating a brain area must be commensurate with its contribution to the behaviour. A departure from optimality could break this relationship, so the effects of causal manipulation may not match the relative sensitivities of the brain areas (S3 Fig). Even in purely feedforward networks, the magnitude of neuronal choice correlations need not equal the effects of inactivation. Thus, disagreements between the two experimental outcomes should not be entirely surprising and do not undermine the functional significance of either.
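A short derivation shows how such a factorization can arise. It assumes, as our own modelling choice rather than a transcription of S5 Text, that the choice-correlation slope of population x is βx = (Ea)x (ax + ay)/(aᵀEa), and that εxx and εyy are read off as the squared thresholds measured after inactivating y and x, respectively.

```latex
\begin{aligned}
\beta_x &= \frac{(E a)_x\,(a_x + a_y)}{a^{\top} E a},
\qquad \varepsilon_{xy} = 0 \;\Rightarrow\; (E a)_x = \varepsilon_{xx}\, a_x ,\\
\frac{\beta_x}{\beta_y} &= \frac{\varepsilon_{xx}\, a_x}{\varepsilon_{yy}\, a_y}
\;\;\Longrightarrow\;\;
\frac{a_x}{a_y}
= \underbrace{\frac{\beta_x}{\beta_y}}_{\text{correlational}}
\times
\underbrace{\frac{\varepsilon_{yy}}{\varepsilon_{xx}}}_{\text{causal}}
\end{aligned}
```

Setting βx = βy = 1 recovers inverse-variance weighting, ax/ay = εyy/εxx, which is the optimal case described above.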

Eq (20) thus shows how one can combine choice correlations and behavioural thresholds to infer the contributions of two uncorrelated areas. If the areas are correlated, however, one must explicitly account for the magnitude of correlation between areas εxy, and the ratio of scales no longer factorizes:

| (21) |

where γ = εxy/εxx is the magnitude of correlated noise between the two populations’ estimates relative to the variance of estimates from x alone. Note that one can also use Eqs (20) and (21) to compute the optimal weight scaling factors simply by setting both βx and βy to 1. Therefore, we can use these equations not only to determine the relative weights of brain areas but also to evaluate precisely how suboptimal those weights are.
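The correlated case can be sketched the same way. Under the assumed slope model βx = (Ea)x (Σz az)/(aᵀEa) (our assumption), solving βx/βy = (εxx ax + εxy ay)/(εxy ax + εyy ay) for ax/ay gives ((βx/βy)(εyy/εxx) − γ)/(1 − γβx/βy), which reduces to the uncorrelated product when γ = 0. This is a reconstruction consistent with the definitions above, not necessarily the exact form of Eq (21). The helper names and example values are hypothetical. The round trip checks that the inversion recovers known weights, and setting both slopes to 1 reproduces the optimal ratio implied by a ∝ E⁻¹1.

```python
import numpy as np

def scaling_ratio(beta_x, beta_y, eps_xx, eps_yy, eps_xy=0.0):
    """a_x / a_y from CC slopes and population noise (form derived under stated assumptions).

    Reduces to (beta_x / beta_y) * (eps_yy / eps_xx) when eps_xy = 0; undefined
    when gamma * beta_x / beta_y == 1.
    """
    b = beta_x / beta_y
    gamma = eps_xy / eps_xx
    return (b * eps_yy / eps_xx - gamma) / (1.0 - b * gamma)

def slopes(E, a):
    """Assumed forward model for the CC slopes: beta = (E a) * sum(a) / (a^T E a)."""
    return (E @ a) * a.sum() / (a @ E @ a)

# Round trip on hypothetical values: generate slopes from known weights, then invert.
E = np.array([[5.0, 3.0],
              [3.0, 12.0]])
a = np.array([2.0, 1.0])
beta_x, beta_y = slopes(E, a)
ratio = scaling_ratio(beta_x, beta_y, E[0, 0], E[1, 1], E[0, 1])

# Optimal ratio: set both slopes to 1 (inverse-covariance weighting, a proportional to E^-1 1).
ratio_opt = scaling_ratio(1.0, 1.0, E[0, 0], E[1, 1], E[0, 1])
print(ratio, ratio_opt)
```

Comparing ratio with ratio_opt is one way to quantify how far the inferred weighting departs from the optimum, in the spirit of the paragraph above.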

Application to data

We now use the techniques developed so far to infer the relative contributions of two brain areas in macaque monkeys to heading discrimination. Data were collected from monkeys trained to discriminate their direction of self-motion in the horizontal plane (Fig 2A) using vestibular (inertial motion) and/or visual (optic flow) cues (see Methods; see also refs. [21,23]). At the end of each trial, the animal reported whether their perceived heading was leftward () or rightward () relative to straight ahead.

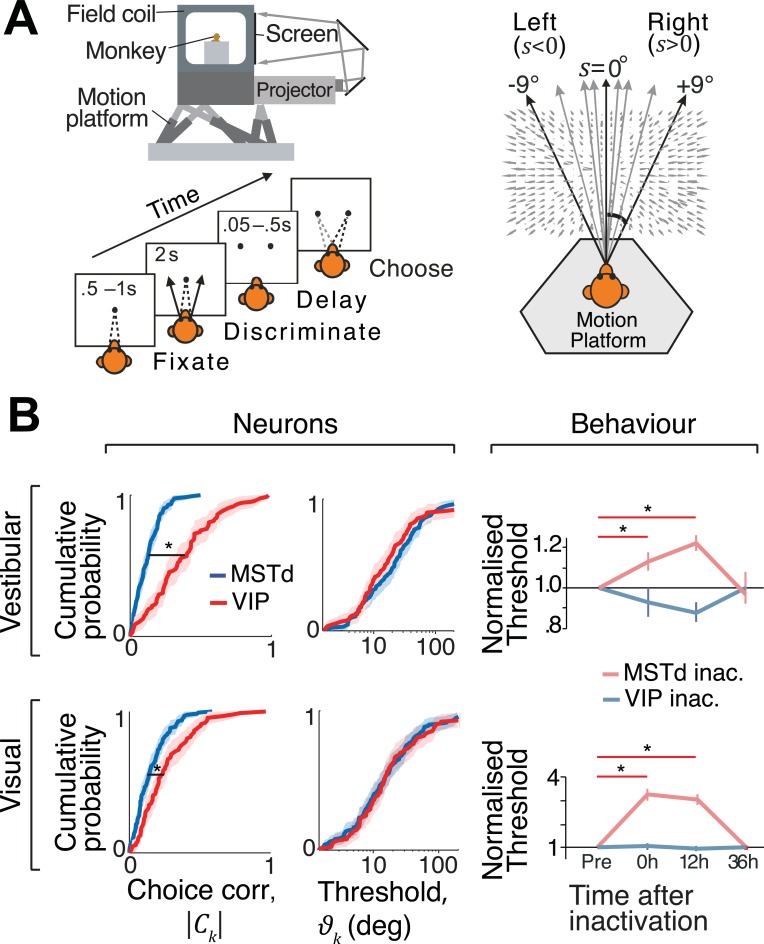

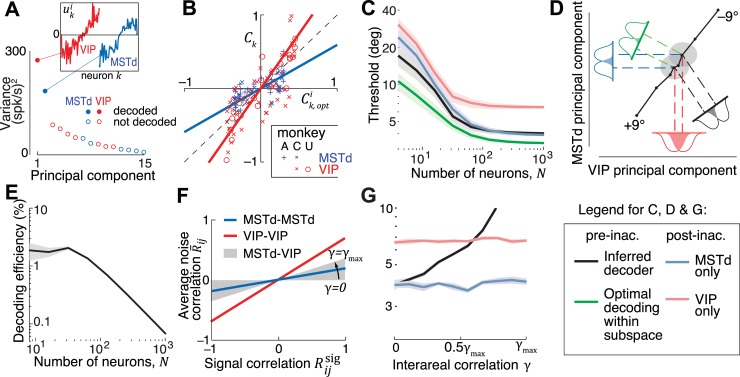

Fig 2. Choice-related activity and effects of inactivation.

(A) Behavioural task: the monkey sits on a motion platform facing a screen. He fixates on a small target at the center of the screen, and then we induce a self-motion percept by moving the platform (vestibular condition) or by displaying an optic flow pattern on the screen (visual condition). The fixation target then disappears and the monkey reports his percept by making a saccade to one of two choice targets. (B) Left: Neurons in both MSTd (n=129) and VIP (n=88) exhibited significant choice correlations (CCs). The median CC of VIP neurons was significantly greater than that of MSTd neurons (*p<0.001, Wilcoxon rank-sum test) in both vestibular (top) and visual (bottom) conditions. Middle: Median neuronal thresholds were not significantly different between areas (vestibular: p=0.94, visual: p=0.86, Wilcoxon rank–sum test). Right: Average discrimination thresholds at different times relative to inactivation of VIP (unsaturated blue) and MSTd (unsaturated red). All threshold values were normalized by the corresponding baseline thresholds (“pre”). Shaded regions and error bars denote standard errors of the mean (SEM); asterisks indicate significant differences (*p<.05, t–test). Neural data re-analyzed from refs. [21,23]. Inactivation data reproduced from refs. [18,22].

Discrepancy between correlation and causal studies

Responses of single neurons were recorded from either area MSTd (monkeys A and C; n=129) or area VIP (monkeys C and U; n=88) during the heading discrimination task (see Methods). Basic aspects of these responses were analyzed and reported in earlier work[21,23]. Briefly, neurons in VIP had substantially greater choice correlations (CCs) than those in MSTd (Fig 2B – left) for both the vestibular and visual conditions. This difference in CCs between areas could not be attributed to differences in neuronal thresholds ϑk (Fig 2B – middle), defined as the stimulus magnitude that can be discriminated correctly 68% of the time (d′=1) from neuron k’s response rk (Methods; S3 Fig). Based on its greater CCs, one might expect that VIP plays a more important role in heading discrimination than MSTd. In striking contrast to this expectation, a recent study showed that there was no significant change in heading thresholds following VIP inactivation for either the visual or vestibular stimulus conditions[18] (Fig 2B – right (blue); monkeys B and J). On the other hand, inactivation of MSTd using a nearly identical experimental protocol led to substantial deficits in heading discrimination performance[22] (Fig 2B – right (red); monkeys C, J, and S). The neural and inactivation studies in VIP used non-overlapping subject pools, so the observed dissociation between CCs and inactivation effects could reflect idiosyncrasies of the subjects’ brains. To rule this out, we repeated the inactivation experiment in another monkey, specifically targeting muscimol injections to sites in area VIP that were previously found to contain neurons with high CCs, and obtained similar results (S5 Fig).

These findings reveal a striking dissociation between choice correlations and effects of causal manipulation: VIP has much greater CCs than MSTd yet inactivating VIP does not impair performance. One may be tempted to simply conclude that VIP does not contribute to heading perception. We will now show that this is not necessarily true. Depending on the structure of correlated noise and the decoding strategy, neurons in both areas may be read out in a manner that is entirely consistent with the observed effects of inactivation.

Test for optimality

We first asked whether the above results could be explained simply by the brain allocating weights optimally to the two areas. To answer this, we tested whether neuronal choice correlations satisfied Eq (4). Binary discrimination experiments typically do not measure choice correlations because they do not have direct access to the animal’s continuous stimulus estimate ; they only track the animal’s binary choice. Instead, they measure a related quantity known as choice probability, defined as the probability that a rightward choice is associated with an increase in the response of neuron k according to where is a response of neuron k when the animal chooses ±1. We therefore first transformed the measured choice probabilities to choice correlations using a known relation[14] before further analyses (Methods). Alternatively, one could measure the correlation between the neural response and the binary choice, which [15] showed is ≈ 0.8Ck. Note that the above definition gives choice correlations that are either positive or negative depending on whether a rightward choice is associated with an increase or decrease in neuronal response. We therefore adjusted Eq (4) to generate predictions for optimal CCs that accounted for this convention (see Methods).
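The sketch below illustrates the CP-to-CC conversion. We assume (our assumption; the exact relation used in [14] and Methods should be checked) the Gaussian form CP = 1/2 + (2/π)·arcsin(Ck/√2), which holds exactly when the response and the animal's continuous estimate are jointly Gaussian and the choice is the sign of that estimate. Under the same assumptions the correlation between the response and the binary choice is √(2/π)·Ck ≈ 0.80·Ck, consistent with the factor quoted above. A Monte Carlo simulation of a hypothetical neuron checks both relations.

```python
import numpy as np

def cc_to_cp(cc):
    """Choice probability from choice correlation (jointly Gaussian, sign-of-estimate choices)."""
    return 0.5 + (2.0 / np.pi) * np.arcsin(np.asarray(cc) / np.sqrt(2.0))

def cp_to_cc(cp):
    """Inverse of cc_to_cp."""
    return np.sqrt(2.0) * np.sin(0.5 * np.pi * (np.asarray(cp) - 0.5))

# Hypothetical neuron whose response correlates with the continuous estimate s
# at rho = 0.3; the binary choice is sign(s).
rng = np.random.default_rng(0)
rho, n = 0.3, 200_000
s = rng.normal(size=n)
r = rho * s + np.sqrt(1 - rho**2) * rng.normal(size=n)
choice = np.sign(s)

# CP = P(response on a +1 trial > response on a -1 trial), from independent pairs.
r_pos, r_neg = r[choice > 0], r[choice < 0]
m = min(len(r_pos), len(r_neg))
cp_mc = np.mean(r_pos[:m] > r_neg[:m])

# Correlation with the binary choice is about sqrt(2/pi) * rho.
binary_cc = np.corrcoef(r, choice)[0, 1]
print(cp_mc, cc_to_cp(rho), binary_cc, np.sqrt(2 / np.pi) * rho)
```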

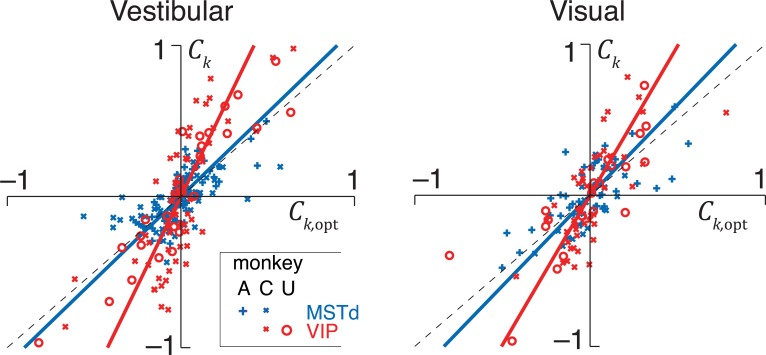

Fig 3 compares experimentally measured CCs against the CCs predicted by optimal decoding for all neurons recorded in the vestibular (left panel) and visual (right panel) conditions (see S6 Fig for data from individual animals). Our data are consistent with optimal decoding of MSTd, since the predicted and measured CCs are significantly correlated (vestibular: Pearson’s r =0.65, p<10⁻³; visual: r =0.70, p<10⁻³) with a slope not significantly different from 1 (vestibular: slope = 1.11, 95% confidence interval (CI) =[0.83 1.54]; visual: slope = 1.24, 95% CI =[0.94 1.78]). For VIP, although the predicted and measured CCs are again strongly correlated (vestibular: r = 0.80, p<10⁻³; visual: r = 0.75, p<10⁻³), the regression slope deviates substantially from unity (vestibular: slope=2.37, 95% CI =[1.97 3.08]; visual: slope=1.98, 95% CI =[1.41 2.74]), demonstrating that the VIP data are inconsistent with optimal decoding. Note that if VIP is decoded suboptimally, the overall decoding, based on both VIP and MSTd, is suboptimal as well, because the decoder fails to use all of the information available across both populations. This raises two questions: first, how much information is lost by suboptimal decoding, and second, how is this information lost? To answer them, we now determine how the brain weights activity in MSTd and VIP to perform heading discrimination.
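The optimal prediction used on the horizontal axes can be sketched for a toy population. For an optimal, locally unbiased linear decoder w = Σ⁻¹f′/(f′ᵀΣ⁻¹f′), the covariance between each response and the estimate is Σw = f′/J, so the exact correlation equals sign(fk′)·ϑ/ϑk with ϑ = J^(−1/2) and ϑk = σk/|fk′|. Treating the sign of fk′ as the choice sign convention is our assumption; the convention in Methods may differ. The covariance and tuning slopes below are random placeholders.

```python
import numpy as np

rng = np.random.default_rng(0)
N = 50
fprime = rng.normal(size=N)                       # hypothetical tuning slopes f'_k
A = rng.normal(size=(N, N))
Sigma = A @ A.T / N + np.eye(N)                   # hypothetical noise covariance

# Optimal unbiased linear decoder: w = Sigma^-1 f' / (f'^T Sigma^-1 f').
Sinv_f = np.linalg.solve(Sigma, fprime)
J = fprime @ Sinv_f                               # linear Fisher information
w = Sinv_f / J
theta = 1.0 / np.sqrt(J)                          # behavioural threshold (d' = 1)

sigma_k = np.sqrt(np.diag(Sigma))
theta_k = sigma_k / np.abs(fprime)                # neuronal thresholds
cc_exact = (Sigma @ w) / (sigma_k * np.sqrt(w @ Sigma @ w))   # corr(r_k, s_hat)
cc_opt = np.sign(fprime) * theta / theta_k        # signed optimal prediction
print(np.max(np.abs(cc_exact - cc_opt)))
```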

Fig 3. Readout is not optimal.

Whereas the experimentally measured choice correlations (Ck) of neurons in MSTd (blue) for both the vestibular (left) and the visual (right) condition are well described by the optimal predictions (Ck,opt), those of VIP neurons are systematically greater (red). This observation was consistent across all monkeys (see S5A Fig for monkey X). Solid lines correspond to the best linear fit. Vestibular data replotted from Ref.[15] with different sign convention (see Methods).

Inferring readout weights

Throughout this section, we use subscripts M and V to denote MSTd and VIP instead of the generic subscripts x and y used to describe the methods. For clarity, we restrict our focus to the vestibular condition; results for the visual condition are presented in the supporting information. To determine decoding weights, we constructed two kinds of covariance structures that implied either extensive or limited information, as explained earlier.

In the extensive information case, we modeled noise covariance using data from pairwise recordings within MSTd and VIP reported previously [21,29]. Those experiments established that noise correlation between neurons in these areas tends to increase linearly with the similarity of their tuning functions, or signal correlation (Eq (8)). This relationship between noise and signal correlations has a substantially steeper slope in VIP than in MSTd (MSTd: mM = 0.19±0.08; VIP: mV = 0.70±0.16, S7 Fig). We used these empirical relationships to extrapolate noise correlations between all pairs of independently recorded neurons within each of the two populations, using only their tuning curves, and assuming that any stimulus-dependent changes in correlation were negligible. Although the neural sensitivities were comparable in the two brain areas, the stronger correlations in VIP gave it higher information content than MSTd: since the dominant noise modes point away from the signal direction, greater correlations lead to less noise variance along the signal direction, and hence more information [35]. Since correlations between VIP and MSTd populations were not measured experimentally, we explored different correlation matrices (see Methods, Eq (24)).
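The extrapolation step can be sketched as follows. We assume von Mises-like heading tuning, Poisson-like variances (Fano factor 1), and a zero-intercept linear rule in which the noise correlation of each pair equals m times its signal correlation; Eq (8) may include terms not modelled here. Writing the correlation matrix as (1 − m)I + mS, with S the signal-correlation matrix, keeps it positive semidefinite for 0 ≤ m ≤ 1. The slopes 0.19 and 0.70 are the values quoted above for MSTd and VIP; every other parameter is made up, so the printed information values illustrate the computation only.

```python
import numpy as np

rng = np.random.default_rng(0)
headings = np.deg2rad(np.arange(-180, 180, 5))   # stimulus grid for signal correlations

def population(n, kappa=1.5, base=5.0):
    """Hypothetical von Mises-like heading tuning: curves, rate f(0) and slope f'(0)."""
    pref = rng.uniform(-np.pi, np.pi, size=n)
    amp = rng.uniform(10.0, 40.0, size=n)
    curves = base + amp[:, None] * np.exp(kappa * (np.cos(headings[None, :] - pref[:, None]) - 1))
    f0 = base + amp * np.exp(kappa * (np.cos(pref) - 1))
    fprime = amp * kappa * np.sin(pref) * np.exp(kappa * (np.cos(pref) - 1))
    return curves, f0, fprime

def noise_covariance(curves, f0, m):
    """Sigma = D R D with noise corr = m * signal corr and Poisson-like variances."""
    S = np.corrcoef(curves)                  # signal correlations between tuning curves
    R = (1 - m) * np.eye(len(f0)) + m * S    # convex mix of PSD matrices -> PSD
    D = np.diag(np.sqrt(f0))                 # assumes Fano factor 1
    return D @ R @ D

def fisher_info(Sigma, fprime):
    return fprime @ np.linalg.solve(Sigma, fprime)

curves, f0, fprime = population(80)
for m in (0.19, 0.70):                       # slopes quoted for MSTd and VIP
    Sigma = noise_covariance(curves, f0, m)
    J_half = fisher_info(Sigma[:40, :40], fprime[:40])
    J_full = fisher_info(Sigma, fprime)
    print(m, round(J_half, 2), round(J_full, 2))
```

Because the first 40 neurons form a sub-population of the full 80, J_half can never exceed J_full, whatever the correlation slope.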

In the limited information case, we added correlations that limited the total information content across the two populations (Eq (13)). For this latter case, we relied on behavioural thresholds before and after inactivation, and choice correlations, to determine the magnitudes of noise within (εMM and εVV) and between (εMV) areas (see Methods). In both cases, we constructed covariances for many different population sizes N by sampling equal numbers of neurons from both areas with replacement. The choice of distributing neurons equally among the two areas was made only for convenience and has no bearing on the result as explained later.

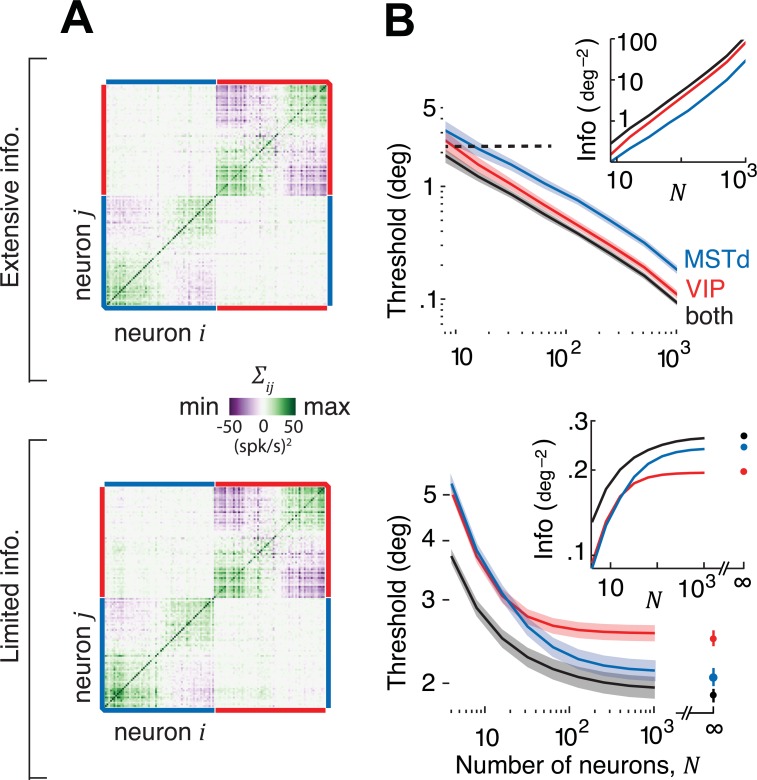

Fig 4A shows example covariance matrices for both extensive and limited information models for a population of 128 neurons. The two structures look visually similar because the additional fluctuations caused by information-limiting correlations are quite subtle. Nevertheless, the two models differ enormously in their information content (Fig 4B). In the extensive model, information grows linearly with N, implying that these brain areas have enough information to support behavioural thresholds that are orders of magnitude better than those typically observed. However, when information-limiting correlations are added, information saturates rapidly, suggesting that behavioural thresholds may not be much lower than population thresholds even if the decoding weights are fine-tuned for best performance. We will now infer scaling factors aM and aV of decoding weights using both noise models and examine their implications.
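The saturation can be illustrated with a toy model, assuming (our assumption) that the information-limiting component enters as Σ = Σ₀ + FEFᵀ, where column x of F holds population x's tuning-curve derivatives, and that Σ₀ = I. Because f′ = F1, the Woodbury identity gives J = 1ᵀ(K⁻¹ + E)⁻¹1 with K = FᵀΣ₀⁻¹F, which can never exceed the ceiling 1ᵀE⁻¹1 however large N becomes. The noise magnitudes are the illustrative values from Fig 4A; the tuning slopes are random.

```python
import numpy as np

rng = np.random.default_rng(0)
E = np.diag([4.2, 7.0])                           # eps_MM, eps_VV; eps_MV = 0 (Fig 4A values)
ones = np.ones(2)
J_limit = ones @ np.linalg.solve(E, ones)         # information ceiling 1^T E^-1 1

def information(n_per_area):
    """Fisher information without and with information-limiting noise (toy model)."""
    N = 2 * n_per_area
    fprime = rng.normal(size=N)
    F = np.zeros((N, 2))
    F[:n_per_area, 0] = fprime[:n_per_area]       # MSTd block of f'
    F[n_per_area:, 1] = fprime[n_per_area:]       # VIP block of f'
    Sigma0 = np.eye(N)                            # independent noise: extensive information
    Sigma = Sigma0 + F @ E @ F.T                  # add information-limiting component
    J0 = fprime @ np.linalg.solve(Sigma0, fprime)
    J = fprime @ np.linalg.solve(Sigma, fprime)
    K = F.T @ np.linalg.solve(Sigma0, F)          # Z x Z projected information
    J_woodbury = ones @ np.linalg.solve(np.linalg.inv(K) + E, ones)
    return J0, J, J_woodbury

results = {n: information(n) for n in (8, 32, 128, 512)}
for n, (J0, J, _) in results.items():
    print(f"N={2 * n:5d}  extensive J0={J0:8.1f}  limited J={J:.4f}  ceiling={J_limit:.4f}")
```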

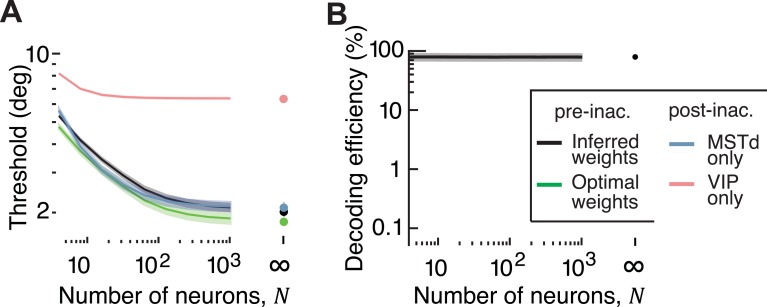

Fig 4. Covariance structure of extensive and limited information models.

(A) Matrix of covariances Σij among neurons in MSTd and VIP (N=128). Top: Extensive information model constructed by sampling according to the empirical relationship in S7 Fig, for the case when the two areas are uncorrelated on average. Bottom: Limited information model adds a small amount of information-limiting correlations with magnitudes (εMM = 4.2, εVV = 7, εMV = 0) chosen arbitrarily for illustration. (B) Inset shows the effect of population size on the information content implied by the two kinds of noise in MSTd (blue), VIP (red) and in both areas together (black). If decoded optimally, behavioural thresholds implied by the extensive information model would decrease with N resulting in performance levels that are vastly superior to those actually observed in monkeys (black dashed line). Information-limiting correlations cause information to saturate with N.

Extensive information model

We have already seen that the pattern of choice correlations is not consistent with optimal decoding of MSTd and VIP. In fact, for the extensive information model, optimal decoding would lead to extremely small CCs by suppressing response components that lie along the leading noise modes, as these carry very little information (S8A Fig). Ironically, the magnitude of CCs found in our data could only have emerged if response fluctuations along those leading modes substantially influenced the animal’s choice (S8B Fig). This means that the decoder must be largely confined to the subspace spanned by those modes. We therefore restricted our focus to the two leading eigenvectors u1 and u2 of the covariance matrix. When the two populations are uncorrelated, each of these vectors lies exclusively within the subspace spanned by neurons of a single area, MSTd or VIP (Fig 5A). In our case, vectors u1 and u2 corresponded to uV and uM. Although decoding only this subspace is not optimal with respect to the total information content in the two areas, a decoder could still be optimal within that subspace. To test this, we estimated the choice correlations and that would be expected from optimally weighting the two areas within this subspace (Eq (7)). The observed CCs were proportional (MSTd: Pearson’s r =0.55, p<10⁻³; VIP: r =0.76, p<10⁻³) to these optimal predictions, implying that the leading noise modes of the extensive information model capture the basic structure of choice-related activity in both areas (Fig 5B). However, the slopes βM and βV were significantly different from 1 (βM = 0.73, 95% CI =[0.63 0.84]; βV = 2.38, 95% CI = [2.2 2.57]), implying that the weight scaling factors aM and aV must be suboptimal even within the two-dimensional subspace. Since we knew the magnitudes of εMM and εVV for this noise model from pairwise recordings (Table 1), we applied the exact rather than approximate form of Eq (20) and obtained a scaling ratio aM/aV = 0.8 ± 0.1.

Fig 5. Decoder inferred using the extensive information model.

(A) Decoding weights were inferred in the subspace of the 2 leading principal components of noise covariance (solid circles). Inset: These components lie entirely within the space spanned by neurons in one of the two brain regions. Components are color coded according to the brain region each inhabits (red=VIP; blue=MSTd). (B) Experimentally measured choice correlations (Ck) of individual neurons in VIP (red) and MSTd (blue) are plotted against their respective components and of choice correlations generated from optimally decoding responses within the subspace of 2 leading principal components. (C) Unlike the optimal decoder in Fig 4B, the behavioural threshold predicted by the inferred weights (black) saturates at a population size of about 100 neurons. The green line indicates the performance of an optimal decoder within the two-dimensional subspace. Inactivating VIP is correctly predicted to have no effect on behavioural performance for large N (blue), while MSTd inactivation increases the threshold (red). (D) A schematic of the inferred decoding solution projected onto the first principal component of noise in VIP and MSTd. The solid colored lines correspond to the readout directions for the four cases shown in (C). The long diagonal black line is the projection of the mean population responses for headings from –9° to +9°, and the two gray ellipses correspond to the noise distribution at heading directions of ±2°. The colored Gaussians correspond to the projections of this signal and noise onto each of the four readout directions, and the overlap between these Gaussians corresponds to the probability of discrimination errors. (E) The percentage of available information read out by the inferred decoder (the decoding efficiency) decreases with population size, because the decoded information saturates while the total information is extensive. (F) Correlations between MSTd and VIP were not measured experimentally. We modeled these correlations according to the same linear trend that on average described correlations within each population, but with different slopes, yielding different interareal correlations parametrized by γ = εMV/εMM (Methods). This slope reaches its maximum allowable value , the geometric mean of the slopes for MSTd and VIP. (G) For each value of γ, we used the resultant covariance and CCs to infer the decoder, and plotted its behavioural thresholds. Thresholds are shown for a population of 256 neurons, by which point the performance had saturated to its asymptotic value for all γ. Shaded regions in (C), (E), and (G) represent ±1 SEM.

Table 1. Model parameters and predicted changes in CCs following inactivation for the two covariance models, shown as median ± central quartile range.

(†Values correspond to when decoder is inferred using a rank-two approximation of the covariance.).

| Model | Quantity | Extensive information model† | Limited information model |
|---|---|---|---|
| Model parameters | Noise magnitudes | εMM = 15, εVV = 45, εMV = 0 | εMM = 5, εVV = 38, εMV = 10 |
| | Multiplicative scaling of CCs relative to optimal | βM = 0.44, βV = 1.4 | βM = 1.1, βV = 2.4 |
| | Optimal weights | \|aM/aV\| = 2.8 ± 0.5 | \|aM/aV\| = 9 ± 4 |
| | Inferred weights | \|aM/aV\| = 0.8 ± 0.1 | \|aM/aV\| = 14 ± 7 |
| Model predictions | Multiplicative change in CCs following inactivation | ζM = 2.2 ± 0.3; ζV = 1.3 ± 0.1 | ζM = 0.9 ± 0.4; ζV = 1.3 ± 0.4 |

To test whether the inferred scaling was meaningful, we compared behavioural thresholds implied by the resulting decoding scheme against experimental findings of inactivation. The threshold prior to inactivation is related to the variance of the estimator whose decoding weights w are along the direction specified by aMuM + aVuV. Inactivating either area is equivalent to setting the corresponding scaling factor to zero, so post-inactivation thresholds are given by the variance along the leading noise mode specific to the active area (uM or uV). We computed pre- and post-inactivation thresholds and found they were qualitatively consistent with experimental results: for large populations, MSTd inactivation is predicted to produce a large increase in threshold (Fig 5C, red vs black) whereas VIP inactivation is predicted to have little or no effect (Fig 5C, blue vs black; see S9 Fig for visual condition). This correspondence to experimental inactivation results is remarkable because the procedure to deduce scaling factors aM and aV was not constrained in any way by behavioural data, but rather informed entirely by neuronal measurements. We also confirmed that the threshold expected from optimal scaling factors (Table 1) was smaller than that produced by inferred weights (Fig 5C, green vs black), implying that the brain indeed weighted the two areas suboptimally.

The above findings are explained graphically in Fig 5D by projecting the relevant quantities (tuning curves f(s), noise covariance Σ, decoding weights w) onto the subspace of the first two principal components (uM and uV) of the noise covariance Σ. The colored lines indicate different readout directions, determined by the scaling (aM and aV) of weights for the two populations. A ratio of |aM/aV| > 1 corresponds to greater weight on the estimate derived from MSTd activity, and the associated readout direction will be closer to the principal component of MSTd. The response distributions are depicted as gray ellipses (isoprobability contours) for the two stimuli to be discriminated. The discrimination threshold for different decoders can be obtained simply by projecting these ellipses onto the readout direction of the specified decoder and examining the overlap between the projections. Within this subspace, the ratio |aM/aV| of the decoder inferred from CCs was much smaller than the optimal ratio (Table 1), meaning that MSTd was given too little weight. Consequently, the response distributions have more overlap along the direction corresponding to the decoder inferred from neuronal CCs (black) than along the optimal direction in that subspace (green). This means that the outputs are less discriminable and thus that the decoding is suboptimal. VIP inactivation (aV = 0) corresponds to decoding only from MSTd (blue). This happens to produce no deficit because the overlap of the response distributions is similar to that along the original decoder direction. On the other hand, inactivating MSTd (aM = 0) corresponds to decoding only from VIP (red), where the two response distributions have greater overlap leading to a larger threshold.

It is important to keep in mind that decoding the noisiest two-dimensional subspace, which throws away all signal components in the remaining low-noise N–2 response dimensions, is a much more severe suboptimality than misweighting the two areas’ signals within that restricted subspace, which loses less than half the information (Fig 5C). As illustrated in Fig 5E, the efficiency of this decoder, defined as the fraction of available linear Fisher information it recovers (η = Jdecoded/Jopt), drops precipitously with the number of neurons (η ~ 2.5N⁻¹). Moreover, for this model, a steeper relationship between signal and noise correlations leads to greater CCs. This is because the model is only consistent with suboptimal decoding that fails to remove the strong noise correlations; these noise correlations are decoded to drive the choice, and thus correlate neurons not only with each other but also with that choice. Thus, in the extensive information model, high CCs are a consequence of decoding a restricted subspace of neural activity, a radically suboptimal strategy for the brain.
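A toy version of the readout geometry behind Fig 5C–E can be computed directly. The sketch assumes two uncorrelated areas with random covariances and tuning slopes (hypothetical), embeds each area's leading noise mode in the full response space, signs each mode so that uᵀf′ > 0, and evaluates the d′ = 1 threshold √(wᵀΣw)/|wᵀf′| for the intact readout w = aM uM + aV uV and for each single-area readout. It also computes the best readout within span{uM, uV} and the efficiency η = Jdecoded/Jopt. The scalings aM and aV are placeholders, not the inferred values.

```python
import numpy as np

rng = np.random.default_rng(0)

def threshold(w, Sigma, fprime):
    """d'=1 threshold of the linear readout w^T r: sqrt(w^T Sigma w) / |w^T f'|."""
    return np.sqrt(w @ Sigma @ w) / abs(w @ fprime)

# Hypothetical uncorrelated areas: MSTd = first n neurons, VIP = last n neurons.
n = 30
blocks = []
for _ in range(2):
    A = rng.normal(size=(n, n))
    blocks.append(A @ A.T / n + 0.1 * np.eye(n))
Sigma = np.zeros((2 * n, 2 * n))
Sigma[:n, :n] = blocks[0]
Sigma[n:, n:] = blocks[1]
fprime = rng.normal(size=2 * n)

# Leading noise mode of each area, embedded in the full space and signed so u^T f' > 0.
uM = np.zeros(2 * n)
uM[:n] = np.linalg.eigh(blocks[0])[1][:, -1]
uV = np.zeros(2 * n)
uV[n:] = np.linalg.eigh(blocks[1])[1][:, -1]
uM *= np.sign(uM @ fprime)
uV *= np.sign(uV @ fprime)

aM, aV = 0.8, 1.0                                   # hypothetical scaling factors
intact = threshold(aM * uM + aV * uV, Sigma, fprime)
vip_off = threshold(uM, Sigma, fprime)              # aV = 0: only the MSTd mode is read out
mstd_off = threshold(uV, Sigma, fprime)             # aM = 0: only the VIP mode is read out

# Best readout within span{uM, uV}, and efficiency relative to the full optimum.
U = np.column_stack([uM, uV])
SU, fU = U.T @ Sigma @ U, U.T @ fprime
J_sub = fU @ np.linalg.solve(SU, fU)                # information of the best subspace readout
J_opt = fprime @ np.linalg.solve(Sigma, fprime)     # linear Fisher information of all neurons
eta = (1.0 / intact**2) / J_opt                     # eta = J_decoded / J_opt
print(intact, vip_off, mstd_off, 1.0 / np.sqrt(J_sub), eta)
```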

Behavioural predictions of this model were robust to assumptions about the exact size of the decoded subspace (S10 Fig), but were found to depend on the magnitude of noise correlations between the VIP and MSTd populations. Since interareal correlations were not measured, we systematically varied the strength of these correlations by changing γ (Fig 5F), and used Eq (21) to infer scaling factors for each case. We used these scaling factors to generate behavioural predictions for different values of γ. Predictions for one example value of these correlations are shown in S11 Fig. Behavioural predictions progressively worsened as a function of the strength of noise correlations between MSTd and VIP: for this model, even weak but nonzero interareal correlations imply that inactivating area VIP should improve behavioural performance (Fig 5G).

Limited information model

In the presence of information-limiting correlations, choice correlations must be proportional to the ratio of behavioural to neuronal thresholds (Eq (17)). This was indeed the case both in MSTd and VIP as we showed already in Fig 3. Those slopes correspond to the multipliers βM and βV for this model, and were found to be different for the two areas (Table 1).

As we noted earlier, unlike the leading modes of noise in the extensive information model, the magnitudes of information-limiting correlations (εMM, εVV and εMV) are difficult to measure. Nevertheless, we can deduce them from behaviour because behavioural precision is ultimately limited by these correlations. Briefly, using behavioural thresholds after inactivation of each area, along with βM and βV derived from choice correlations as additional constraints, we can simultaneously infer the magnitude of information-limiting correlation within each area (εMM and εVV), the correlated component of the noise (εMV), and scaling factors (aM and aV) (see Methods). A model based on these inferred parameters correctly predicted that the behavioural threshold before inactivation would not be significantly different from the threshold following VIP inactivation (Fig 6A; see S12 Fig for visual condition). This was because the scaling of weights in MSTd was much larger than in VIP according to this model (aM ≫ aV, Table 1), so inactivating VIP had little impact on the output of the decoder and left behaviour nearly unaffected. Unlike the decoder inferred for the extensive information model, the efficiency η of this decoder did not depend on the size of the population being decoded (Fig 6B, ) because neurons in this model carry largely redundant information.

Fig 6. Decoder inferred using the limited information model.

(A) Like decoding in the presence of extensive information, this decoder is suboptimal (black vs green), and can account for the behavioural effects of inactivation. (B) Unlike decoding in the extensive information model, the efficiency of this decoder (expressed in percentage) is high and insensitive to population size. Shaded areas represent ±1 SEM.

Effect of temporal variability

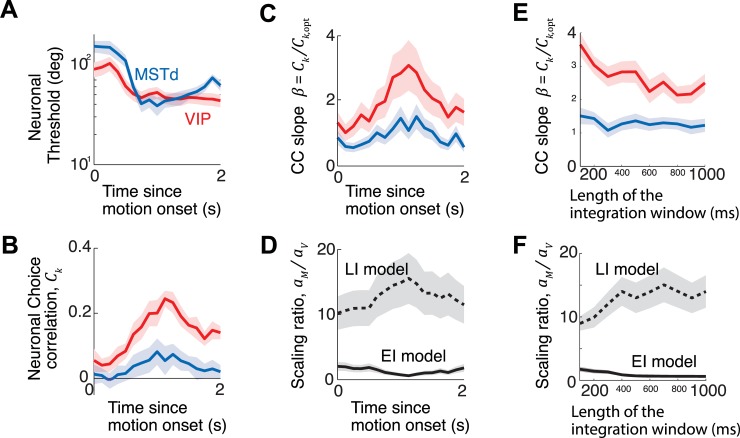

All analyses above were performed on neural data in the central 400ms of the trials following earlier work. This corresponds to an implicit assumption that monkeys made their decisions based solely on the information available during the period of the trial where the stimulus amplitude was highest (Gaussian stimulus profile). However, the experiments did not measure the monkeys’ psychophysical kernel, so we do not know if the above assumption is strictly valid. Moreover, both stimulus and choice-related activity typically vary across time in MSTd [23] and VIP [21], so it is unclear if our conclusions about the relative decoding weights hold outside of the time-window considered in the above analysis. To test this, we repeated our analysis using a sliding window to estimate decoding weights across time. As expected, both neuronal thresholds (Fig 7A) and choice correlations (Fig 7B) were variable across time. Transiently higher firing rates at stimulus onset provide more information early in a trial, but choice correlations peak in the middle of the stimulus. Consequently, the slopes relating observed and optimal choice correlations also varied over time in both areas (Fig 7C). Nevertheless, the time-course of the ratio of scaling factors was much less variable and the qualitative differences in the extensive and limited information models described above are still found to hold throughout the trial (Fig 7D). A full model of the time course of these signals will likely require recurrence for temporal integration (see Discussion). However, temporal integration of independent evidence would yield choice correlations that should grow monotonically with time, so the observed dynamics already indicate another form of suboptimality. Decoding weights may also depend on the length of the integration window and past studies have proposed ways to simultaneously infer the length of integration window and decoding weights from neural data [32]. 
Although we did not infer the size of the integration window, we found that the slopes of choice correlations in VIP were larger than those in MSTd across the range of analysis window lengths we examined, implying that our conclusions are robust to the duration of the analysis window (Fig 7E).
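The sliding-window quantities can be sketched as follows. This is a minimal illustration under stated assumptions, not the analysis code used here: it assumes spike counts pre-binned into a trials × bins array, computes a choice correlation in each window as the Pearson correlation across trials between the window's spike count and the binary choice (coded ±1) at a single stimulus value, and estimates the slope β by least squares through the origin. The function names `sliding_window_counts`, `choice_correlation` and `cc_slope`, and the synthetic data, are hypothetical.

```python
import numpy as np


def sliding_window_counts(binned, bin_ms, window_ms, step_ms):
    """Sum binned spike counts (trials x bins) inside a moving window.

    Returns counts with shape (trials, windows) and window centres in ms.
    """
    width = int(round(window_ms / bin_ms))
    step = int(round(step_ms / bin_ms))
    starts = list(range(0, binned.shape[1] - width + 1, step))
    counts = np.stack([binned[:, t:t + width].sum(axis=1) for t in starts], axis=1)
    centres = np.array([(t + width / 2) * bin_ms for t in starts])
    return counts, centres


def choice_correlation(counts, choices):
    """Pearson correlation across trials between counts and choices (+1/-1), per window.

    Assumes all trials share one stimulus value, so the correlation reflects
    choice-related rather than stimulus-driven variability.
    """
    c = choices - choices.mean()
    r = counts - counts.mean(axis=0)
    return (r * c[:, None]).sum(axis=0) / np.sqrt((r ** 2).sum(axis=0) * (c ** 2).sum())


def cc_slope(cc_obs, cc_opt):
    """Least-squares slope beta in cc_obs = beta * cc_opt (regression through the origin)."""
    cc_obs = np.asarray(cc_obs, dtype=float)
    cc_opt = np.asarray(cc_opt, dtype=float)
    return np.dot(cc_opt, cc_obs) / np.dot(cc_opt, cc_opt)


if __name__ == "__main__":
    # Synthetic example: 200 trials, 100 bins of 10 ms, firing that depends on choice.
    rng = np.random.default_rng(0)
    choices = rng.choice([-1, 1], size=200)
    binned = rng.poisson(1.0, size=(200, 100)) + (choices[:, None] > 0)
    counts, centres = sliding_window_counts(binned, bin_ms=10, window_ms=250, step_ms=50)
    print(centres)
    print(choice_correlation(counts, choices).round(2))
```

Each window yields one choice correlation per neuron; a slope per window and area would then be obtained by passing the observed and optimal CCs of all neurons in that window to `cc_slope`.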

Fig 7. Readout weights do not vary drastically across time.

Neuronal thresholds (A) and choice correlations (B) were computed for each neuron across the duration of the trial using a 250 ms moving window and averaged across neurons. Note that these readouts predict the choice based only on a single time window per data point, and do not perform a weighted sum of responses in multiple windows. Neuronal thresholds in both brain areas were comparable at all times, yet the choice correlations (CCs) differed between VIP and MSTd in a consistent manner over time. Although CCs in both areas peaked around the middle of the trial, those in VIP were proportionally larger at almost all times. (C) Consequently, the slopes β = Ck/Ck,opt relating observed and optimal choice correlations were generally greater in area VIP than in MSTd. (D) The readout weights inferred using the two models remain largely constant throughout the trial, and are qualitatively consistent with the conclusions drawn from the analyses presented in the main text: the extensive information model implies that area MSTd is underweighted, whereas the limited information model predicts the opposite. Symbols aM and aV denote the scaling of readout weights in areas MSTd and VIP, respectively. (E) Regression slopes are minimally affected by the length of the analysis window. Both the observed neuronal choice correlations and those implied by optimal decoding of MSTd and VIP populations increased similarly with the length of the analysis window, leaving the regression slopes β = Ck/Ck,opt largely invariant with window length for both VIP (red) and MSTd (blue). (F) The qualitative difference between the readout weights inferred using the two noise models is consistent across different lengths of analysis window. Error bars denote ±1 standard deviation. See S13 Fig for the visual condition.

Likewise, the variance of our estimates also depends on the size of the neural recording. Although we extrapolated our data to larger populations by resampling from a set of about 100 neurons recorded in each area, our results are not attributable to the limited size of the recording (S14 Fig). We also extended our model to account for the possibility that muscimol only partially inactivated the two brain areas, and found that our conclusions hold over a wide range of partial inactivations (S7 Text; S15 Fig). Finally, we assumed that inactivating one area leaves responses in the other area unaffected, as would be the case in a purely feedforward network model. While an exhaustive treatment of recurrent networks is beyond the scope of this work, we find that our conclusions can still hold at equilibrium when this assumption is violated by certain types of recurrent connections between MSTd and VIP (S8 Text; S16 Fig).
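One generic way to see how the spread of an estimate depends on the number of neurons is to bootstrap over neurons. The sketch below is an illustration under stated assumptions, not the resampling procedure behind S14 Fig: it treats each neuron as a pair (observed CC, optimal CC), draws n neurons with replacement, and reports the standard deviation of the through-origin slope β across resamples. The function name `bootstrap_slope_sd` and the synthetic data are hypothetical.

```python
import numpy as np


def bootstrap_slope_sd(cc_obs, cc_opt, n_neurons, n_boot=2000, seed=0):
    """Std. dev. of the through-origin slope beta across bootstrap resamples of neurons."""
    rng = np.random.default_rng(seed)
    cc_obs = np.asarray(cc_obs, dtype=float)
    cc_opt = np.asarray(cc_opt, dtype=float)
    # Each row is one resampled "population" of n_neurons drawn with replacement.
    idx = rng.integers(0, len(cc_obs), size=(n_boot, n_neurons))
    x, y = cc_opt[idx], cc_obs[idx]
    slopes = (x * y).sum(axis=1) / (x * x).sum(axis=1)  # one beta per resample
    return slopes.std(ddof=1)


if __name__ == "__main__":
    # Synthetic recording of 100 neurons with a true slope of 1.5 plus noise.
    rng = np.random.default_rng(1)
    cc_opt = rng.uniform(-0.2, 0.2, size=100)
    cc_obs = 1.5 * cc_opt + rng.normal(0.0, 0.05, size=100)
    for n in (20, 100, 400):
        print(n, round(bootstrap_slope_sd(cc_obs, cc_opt, n), 4))
```

Resampling more neurons than were recorded (n = 400 here) shrinks the spread roughly as 1/√n, but it cannot add information that the recorded set does not already contain.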

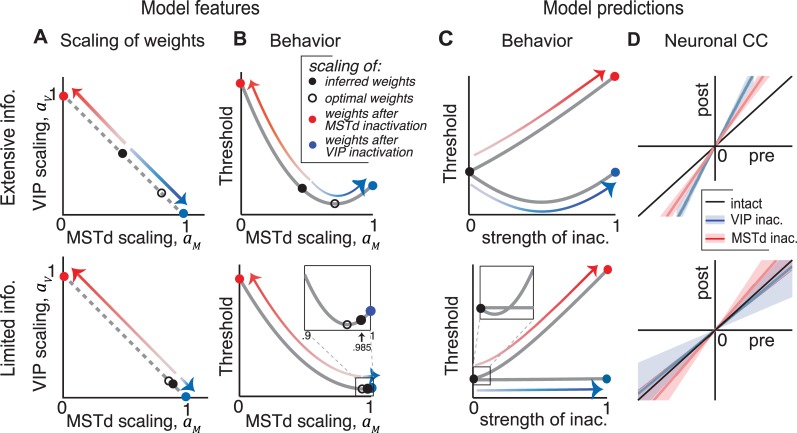

Comparison of the two decoding strategies

We inferred decoding weights under two fundamentally different noise models: the extensive information model and the limited information model. Both decoders could account for the behavioural effects of selectively inactivating either MSTd or VIP, albeit with very different readout schemes. In the extensive information model, neurons in area VIP were weighted more heavily than optimal, whereas in the presence of information-limiting noise the opposite was true (Table 1, Fig 8A). Why do the two models assign such different weights? Both noise models have larger noise in VIP than in MSTd, but they differ in the correlations between the two areas. In the extensive information model, interareal correlations must be nearly zero to be consistent with the behavioural data (Fig 5G), so the neuronal weights in VIP must be high to account for the high CCs there. In the limited information model, significant interareal correlations explain the large CCs in VIP, even with a readout mostly confined to MSTd.
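The contrast between the two explanations can be illustrated with the standard expression for the choice correlation of neuron k under a linear readout ŝ = wᵀr with response covariance Σ, namely C_k = (Σw)_k / √(Σ_kk wᵀΣw), the relation attributed to Haefner et al. [14]. In the toy example below, which uses arbitrary, hypothetical population sizes and noise amplitudes rather than values fitted to the data, the readout is confined to MSTd. When shared noise stays within each area, VIP neurons have zero CCs; when one shared noise mode spans both areas, VIP neurons acquire substantial CCs despite carrying no readout weight.

```python
import numpy as np


def choice_correlations(cov, w):
    """C_k = (cov @ w)_k / sqrt(cov_kk * w^T cov w) for the linear readout s_hat = w @ r."""
    cw = cov @ w
    return cw / np.sqrt(np.diag(cov) * (w @ cw))


n_m, n_v = 5, 5                                   # hypothetical population sizes
u_m = np.r_[np.ones(n_m), np.zeros(n_v)]          # indicator of MSTd neurons
u_v = np.r_[np.zeros(n_m), np.ones(n_v)]          # indicator of VIP neurons
w = u_m.copy()                                    # readout confined to MSTd

# Shared noise confined within each area (no interareal correlations).
cov_within = np.eye(n_m + n_v) + 0.5 * np.outer(u_m, u_m) + 0.5 * np.outer(u_v, u_v)
# A single shared noise mode spanning both areas (interareal correlations).
u_both = u_m + u_v
cov_across = np.eye(n_m + n_v) + 0.5 * np.outer(u_both, u_both)

cc_within = choice_correlations(cov_within, w)
cc_across = choice_correlations(cov_across, w)
print(cc_within[n_m:].round(3))  # VIP CCs: all zero
print(cc_across[n_m:].round(3))  # VIP CCs: about 0.488
```

In the first case, the only way to give VIP neurons high CCs would be to put readout weight on them, which mirrors the extensive information model; in the second, the shared noise alone does the work, as in the limited information model.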

Fig 8. Decoding strategy and model predictions for the extensive information model and the limited information model.

(A) Optimal (open black) and inferred (filled black) scaling of weights in MSTd (aM) and VIP (aV). Inactivating either MSTd (red) or VIP (blue) confines the readout to the remaining active area, whose scaling factor then becomes 1. Red and blue arrows indicate the transformations resulting from inactivating MSTd and VIP, respectively. The scaling factors always sum to 1. (B) Behavioural threshold ϑ as a function of aM. Whereas ϑ increases following MSTd inactivation in both models (red), following partial VIP inactivation (blue) it initially improves in the extensive information model (top) but remains unchanged in the limited information model (bottom). (C) The same curves replotted as a function of the strength of inactivation of MSTd (red) or VIP (blue), yielding behavioural predictions for partial inactivation of each area. (D) Choice correlations (CCs) of neurons in MSTd (blue) and VIP (red) before and after inactivation of VIP and MSTd, respectively. Again, the results following MSTd inactivation do not discriminate between the two information models, but the predictions for VIP inactivation differ, showing increased CCs for the extensive information model and decreased CCs for the limited information model. Slopes of the lines correspond to ζM and ζV in Eq (25), and shaded regions indicate ±1 s.d. of uncertainty.

How could such fundamentally different strategies lead to the same behavioural consequences? For a given noise model, an optimal decoder achieves the lowest possible behavioural threshold by scaling the weights of neurons in the two areas according to a particular optimal ratio aM/aV. Ratios that are either smaller or larger than this optimum both increase the behavioural threshold, producing a U-shaped performance curve. Under specific conditions, the baseline weighting and the weighting after complete inactivation of one area lie on opposite sides of the optimum at the same height on this curve, so the inactivation leaves behavioural performance unchanged. This is the case for VIP inactivation according to the extensive information model (Fig 8B – top). Alternatively, if an area's weight is already too small to influence behaviour, then inactivating it may not appreciably change performance, as in the limited information model (Fig 8B – bottom).
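The U-shaped curve can be reproduced with a deliberately simplified two-area model. We assume here, for illustration only, that each area provides an unbiased estimate with variance `var_m` or `var_v` and interareal correlation `rho`, that the combined estimate is ŝ = aM ŝM + aV ŝV with aM + aV = 1, and that the behavioural threshold is proportional to the standard deviation of ŝ. The variance is then quadratic in aM and symmetric about its minimiser a*, so a baseline weighting aM = 2a* − 1, which overweights VIP, gives exactly the same threshold as aM = 1 (complete VIP inactivation), while intermediate values improve performance. The parameter values and function names are hypothetical.

```python
import numpy as np


def decoder_variance(a_m, var_m, var_v, rho):
    """Variance of s_hat = a_m * s_M + (1 - a_m) * s_V for two unbiased area estimates."""
    a_v = 1.0 - a_m
    cov = rho * np.sqrt(var_m * var_v)
    return a_m ** 2 * var_m + a_v ** 2 * var_v + 2.0 * a_m * a_v * cov


def threshold(a_m, var_m, var_v, rho):
    """Behavioural threshold, assumed proportional to the decoder's standard deviation."""
    return np.sqrt(decoder_variance(a_m, var_m, var_v, rho))


def optimal_a_m(var_m, var_v, rho):
    """Value of a_m minimising decoder_variance subject to a_m + a_v = 1."""
    cov = rho * np.sqrt(var_m * var_v)
    return (var_v - cov) / (var_m + var_v - 2.0 * cov)


var_m, var_v, rho = 1.0, 4.0, 0.0          # arbitrary illustrative values
a_opt = optimal_a_m(var_m, var_v, rho)     # 0.8 for these values
a_base = 2.0 * a_opt - 1.0                 # mirror point that overweights VIP
for a_m in (a_base, 0.7, a_opt, 0.9, 1.0): # gradual VIP inactivation raises a_m
    print(f"a_M = {a_m:.2f}  threshold = {threshold(a_m, var_m, var_v, rho):.3f}")
```

Stepping aM from the baseline towards 1 traces the extensive-information prediction: the threshold first falls, reaches its minimum at a*, and then rises back to its baseline value.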

Model predictions

According to the extensive information model, the brain loses almost all of its information by poorly weighting its available signals. Moreover, even beyond this poor overall decoding, the model brain gives VIP too much weight. As a consequence, this model makes the counterintuitive prediction that gradually inactivating VIP should improve behavioural performance! A hint of this might already be visible in Fig 2D and S5B Fig for the vestibular condition (both 0 and 12 h), although the difference was not statistically significant. Beyond a certain level of inactivation, once VIP's weight falls below its optimal value, performance should worsen again (Fig 8C – top). According to the extensive information model, the brain just happens to overweight VIP under normal conditions by about the same amount as it underweights VIP after inactivation. Suboptimal decoding in the limited information model has the opposite effect, giving too little weight to VIP while overweighting MSTd. However, according to this model, VIP carries little information, as shown by the substantially worse behavioural thresholds when MSTd is inactivated (Fig 8C – bottom). Thus the suboptimality due to underweighting VIP is mild (around 80% in both visual and vestibular conditions, as described above), and the predicted improvement following partial MSTd inactivation is negligible because gradual inactivation quickly overshoots the optimum. Graded inactivation of brain areas can be accomplished by varying the concentration of muscimol as well as the number of injections. In fact, we have previously reported that behavioural thresholds increase gradually with the extent of inactivation of area MSTd [22]. Unfortunately, those results do not distinguish the two models, as there is no qualitative difference between the model predictions for partial MSTd inactivation (Fig 8C, red).
Future experiments involving graded inactivation of VIP should be able to distinguish between the models due to the stark difference in their behavioural predictions.

The decoding strategies implied by the two models also have different consequences for how CCs should change during inactivation experiments (Methods, Eq (25)). According to the extensive information model, VIP and MSTd are nearly independent and both are decoded, so inactivating either area must scale up neuronal CCs in the other area (Fig 8D – top). In the limited information model, inactivating either area produces no significant change in the other area's CCs (Fig 8D – bottom). This absence of change has different origins for MSTd and VIP. Although inactivating MSTd confines the readout to VIP, it also eliminates the high-variance noise components that VIP shared with MSTd; these two effects approximately cancel, leaving CCs in VIP essentially unaffected. The results of VIP inactivation are simpler to understand: CCs in MSTd do not change much because VIP has little influence on behaviour to begin with.
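The extensive-information prediction follows directly from the choice-correlation expression C_k = (Σw)_k / √(Σ_kk wᵀΣw). The toy sketch below assumes two statistically independent areas (block-diagonal covariance) with arbitrary, hypothetical population sizes, noise levels and per-area weights, and compares the readout w = (aM wM, aV wV) before inactivation with the readout after VIP inactivation (aM = 1, aV = 0). It is not the model fitted in this study and does not reproduce Eq (25).

```python
import numpy as np


def choice_correlations(cov, w):
    """C_k = (cov @ w)_k / sqrt(cov_kk * w^T cov w) for the linear readout s_hat = w @ r."""
    cw = cov @ w
    return cw / np.sqrt(np.diag(cov) * (w @ cw))


def shared_cov(n, private_var, shared_var):
    """Covariance with private noise plus one noise mode shared by all n neurons."""
    return private_var * np.eye(n) + shared_var * np.ones((n, n))


n_m, n_v = 6, 6                                  # hypothetical population sizes
cov = np.zeros((n_m + n_v, n_m + n_v))
cov[:n_m, :n_m] = shared_cov(n_m, 1.0, 0.3)      # MSTd block
cov[n_m:, n_m:] = shared_cov(n_v, 2.0, 0.6)      # noisier VIP block; no interareal terms
w_m, w_v = np.ones(n_m) / n_m, np.ones(n_v) / n_v


def readout(a_m, a_v):
    """Neuronal weights obtained by scaling each area's weights by its area scale."""
    return np.r_[a_m * w_m, a_v * w_v]


cc_before = choice_correlations(cov, readout(0.4, 0.6))[:n_m]
cc_after = choice_correlations(cov, readout(1.0, 0.0))[:n_m]   # VIP inactivated
print((cc_after / cc_before).round(3))           # identical gain for every MSTd neuron
```

Because the areas are independent, removing VIP only removes its contribution to the decoder variance, so every MSTd CC is multiplied by the same factor √(1 + aV² Var(ŝV) / (aM² Var(ŝM))), which is √5.5 for these values.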

Discussion

Several recent experiments show that silencing brain areas with high decision-related activity does not necessarily affect decision-making [16–19]. To explain these puzzling results, we have developed a general, unified decoding framework that synthesizes the outcomes of experiments measuring decision-related activity in individual neurons with those measuring the behavioural effects of inactivating entire brain areas. We know from the influential work of Haefner et al. [14] how the behavioural impact (readout weights) of single neurons relates to their decision-related activity (choice correlations) in a standard feedforward network. We built on this theoretical foundation by adding three new elements that helped us relate the influence of multiple brain areas both to the magnitude of choice correlations and to the behavioural effects of inactivating those areas.

First, we generalised their readout scheme to include multiple correlated brain areas by formulating the output of the decoder as a weighted sum of estimates derived from decoding the responses of individual areas. In this scheme, the weight on each area's estimate can be identified directly as the scaling of neuronal weights in the corresponding area, providing a way to quantify the relative contribution of different brain areas. Second, we postulated that, in both the extensive and limited information models, readout weights are mostly confined to the low-dimensional subspace of neural responses that carries the highest response covariance. This postulate was instrumental in developing a theory of decoding that focuses on the relationship between the overall scales of choice-related activity and neuronal weights, rather than on their fine structure. Besides its mathematical simplicity, the resulting coarse-grained formulation confers an important practical advantage: we can apply it without precisely knowing the fine structure of the response covariance. Third, we used a straightforward relation between behavioural threshold and the variance of the decoder to explicitly link the relative scaling of weights across areas to the behavioural effects of inactivating them.
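Schematically, and under the simplifying assumptions that each area's estimate is a linear readout of its own responses and that the combined estimate is unbiased, the first and third elements can be summarised as below. This restatement is illustrative and does not reproduce the numbered equations of the Methods; the proportionality constant in the threshold relation depends on the threshold criterion.

```latex
\hat{s} = a_M \hat{s}_M + a_V \hat{s}_V, \qquad a_M + a_V = 1, \qquad
\hat{s}_M = \mathbf{w}_M^{\top}\mathbf{r}_M, \quad \hat{s}_V = \mathbf{w}_V^{\top}\mathbf{r}_V
\;\Longrightarrow\;
\hat{s} =
\begin{pmatrix} a_M \mathbf{w}_M \\ a_V \mathbf{w}_V \end{pmatrix}^{\!\top}
\begin{pmatrix} \mathbf{r}_M \\ \mathbf{r}_V \end{pmatrix},
\qquad
\vartheta \propto \sqrt{\operatorname{Var}(\hat{s})}
```

In this notation, inactivating VIP corresponds to (aM, aV) = (1, 0) and inactivating MSTd to (aM, aV) = (0, 1).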

Our theoretical result linking the behavioural influence of brain areas to their CCs and inactivation effects (Eqs (20) and (21)) is applicable only when neuronal weights within each area are mostly confined to the leading dimension of their response covariance. Although this requirement looks stringent, it is needed to explain the high CCs seen in experiments [15]. This claim might appear to be at odds with the fact that some earlier studies successfully predicted CCs that plateaued close to experimental levels using pooling models that did not explicitly impose this confinement [6,9]. However, closer examination reveals that these studies based each decision on the average response of neuronal pools that were all uniformly correlated, a combination of model assumptions that in fact satisfies our requirement. Similar explanations apply to other simulation studies that used support-vector machines or alternative schemes that inadvertently restricted decoding weights to low-frequency modes of population response where shared variability was highest [12,30]. Thus our postulate is fully compatible with earlier work and in fact points to a more general class of models that can describe the magnitude of CCs in those data.
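The pooling argument can be checked numerically for the simplest case. The sketch assumes a single pool of N neurons with unit variance and uniform pairwise noise correlation rho (values chosen arbitrarily). For this covariance the leading eigenvector is the uniform vector, so an averaging readout lies entirely in the leading dimension and its CCs approach √rho as N grows, whereas a readout of the same norm orthogonal to that dimension yields CCs of only √((1 − rho)/N). It uses the same choice-correlation expression as above and is an illustration, not a re-implementation of the cited studies.

```python
import numpy as np


def choice_correlations(cov, w):
    """C_k = (cov @ w)_k / sqrt(cov_kk * w^T cov w) for the linear readout s_hat = w @ r."""
    cw = cov @ w
    return cw / np.sqrt(np.diag(cov) * (w @ cw))


n, rho = 500, 0.1                                       # arbitrary illustrative values
cov = (1.0 - rho) * np.eye(n) + rho * np.ones((n, n))   # unit variances, uniform correlation

eigvals, eigvecs = np.linalg.eigh(cov)      # eigenvalues returned in ascending order
leading = eigvecs[:, -1]                    # proportional to the uniform vector

w_pool = np.ones(n) / n                     # average response of the pool
w_orth = np.tile([1.0, -1.0], n // 2) / n   # zero-sum weights, orthogonal to the leading mode

cc_pool = choice_correlations(cov, w_pool)
cc_orth = choice_correlations(cov, w_orth)
print(abs(leading @ np.ones(n)) / np.sqrt(n))   # close to 1: the leading mode is uniform
print(cc_pool.mean(), np.sqrt(rho))             # pooled CCs near the sqrt(rho) plateau
print(np.abs(cc_orth).max())                    # small, equal to sqrt((1 - rho) / n)
```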