Abstract

Agents living in volatile environments must be able to detect changes in contingencies while refraining from adapting to unexpected events that are caused by noise. In Reinforcement Learning (RL) frameworks, this requires learning rates that adapt to the past reliability of the model. The observation that behavioural flexibility in animals tends to decrease following prolonged training in stable environments provides experimental evidence for such adaptive learning rates. However, in classical RL models, the learning rate is either fixed or scheduled and thus cannot adapt dynamically to environmental changes. Here, we propose a new Bayesian learning model, using variational inference, that achieves adaptive change detection through Stabilized Forgetting, updating its current belief based on a mixture of fixed, initial priors and previous posterior beliefs. The weight given to these two sources is optimized alongside the other parameters, allowing the model to adapt dynamically to changes in environmental volatility and to unexpected observations. This approach is used to implement the “critic” of an actor-critic RL model, while the actor samples the resulting value distributions to choose which action to undertake. We show that our model can emulate different adaptation strategies to contingency changes, depending on its prior assumptions of environmental stability, and that model parameters can be fit to real data with high accuracy. The model also exhibits trade-offs between flexibility and computational costs that mirror those observed in real data. Overall, the proposed method provides a general framework to study learning flexibility and decision making in RL contexts.

Author summary

In stable contexts, animals and humans exhibit automatic behaviour that allows them to make fast decisions. However, these automatic processes exhibit a lack of flexibility when environmental contingencies change. In the present paper, we propose a model of behavioural automatization that is based on adaptive forgetting and that emulates these properties. The model builds an estimate of the stability of the environment and uses this estimate to adjust its learning rate and the balance between exploration and exploitation policies. The model performs Bayesian inference on latent variables that represent relevant environmental properties, such as reward functions, optimal policies or environment stability. From there, the model makes decisions in order to maximize long-term rewards, with a noise proportional to environmental uncertainty. This rich model encompasses many aspects of Reinforcement Learning (RL), such as Temporal Difference RL and counterfactual learning, and accounts for the reduced computational cost of automatic behaviour. Using simulations, we show that this model leads to interesting predictions about the efficiency with which subjects adapt to sudden change of contingencies after prolonged training.

Introduction

Learning agents must be able to deal efficiently with surprising events when trying to represent the current state of the environment. Ideally, agents’ response to such events should depend on their belief about how likely the environment is to change. When expecting a steady environment, a surprising event should be considered as an accident and should not lead to updating previous beliefs. Conversely, if the agent assumes the environment is volatile, then a single unexpected event should trigger forgetting of past beliefs and relearning of the (presumably) new contingency. Importantly, assumptions about environmental volatility can also be learned from experience.

Here, we propose a general model that implements this adaptive behaviour using Bayesian inference. This model is divided into two parts: the critic, which learns the environment, and the actor, which makes decisions on the basis of the learned model of the environment.

The critic side of the model (called Hierarchical Adaptive Forgetting Variational Filter, HAFVF [1]) discriminates contingency changes from accidents on the basis of past environmental volatility, and adapts its learning accordingly. This learner is a special case of Stabilized Forgetting (SF) [2]: practically, learning is modulated by a forgetting factor that controls the relative influence of past data with respect to a fixed prior distribution reflecting the naive knowledge of the agent. At each time step, the goal of the learner is to infer whether the environment has changed or not. In the former case, she erases her memory of past events and resets her prior belief to her initial prior knowledge. In the latter, she can learn a new posterior belief of the environment structure based on her previous belief. The value of the forgetting factor encodes these two opposite behaviours: small values tend to bring parameters back to their original prior, whereas large values tend to keep previous posteriors in memory. The first novel contribution of our work lies in the fact that the posterior distribution of the forgetting factor depends on the estimated stability of past observations. The second and most crucial contribution lies in the hierarchical structure of this forgetting scheme: indeed, the posterior distribution of the forgetting factor is itself subject to a certain forgetting, learned in a similar manner. This leads to a 3-level hierarchical organization in which the bottom level learns to predict the environment, the intermediate level represents its volatility and the top level learns how likely the environment is to change its volatility. We show that this model implements a generalization of classical Q-learning algorithms.

The actor side of the model is framed as a full Drift-Diffusion Model of decision making [3] (Normal-Inverse-Gamma Diffusion Process; NIGDM) that samples from the value distributions inferred by the critic in order to select actions in proportion to their probability of being the most valued. We show that this approach predicts plausible results in terms of exploration-exploitation policy balance, reward rate, reaction times (RT) and cognitive cost of decision. Using simulated data, we also show that the model can uncover specific features of human behaviour in single and multi-stage environments. The whole model is outlined in Algorithm 1: the agent first selects an action given an (approximate) Q-sampling policy, which is temporally implemented as a Full DDM [3] with variable drift rate and accumulation noise, then learns from the return of the executed action (reward r(sj, aj) and transition s′ = T(sj, aj)). Then, the critic updates its approximate posterior belief about the state of the environment qj ≈ p.

Algorithm 1: AC-HAFVF sketch. a represents actions, r stands for rewards, s stands for states and x stands for observations. μr is the expected value of the reward. q represents the approximate posterior of the latent variable z and θ0 stands for the prior parameter values of the distribution of z.

1 for j = 1 to J do

2 Actor: NIGDM Line 8;

3 select action aj;

4 Observe xj = {r(sj, aj), sj+1};

5

6 Critic: HAFVF Line 8;

7 update qj(z(sj, aj)) ≈ p(z(sj, aj)|xj;x<j, θ0);

8 end

We apply the proposed approach to Model-Free RL contexts (i.e. to agents limiting their knowledge of the environment to a set of reward functions) in an extensive manner. We explore in detail the application of our algorithm to Temporal Discounting RL, in which we study the possibility of learning the discounting factor as a latent variable of the model. We also highlight various methods for accounting for unobserved events in a changing environment. Finally, we show that the way our algorithm optimizes the exploration-exploitation balance is similar to Q-Value Sampling when using large DDM thresholds.
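The Q-Value Sampling policy mentioned above can be illustrated with a minimal Python sketch. It assumes independent Gaussian value posteriors for each action, which is a simplification and not the NIGDM actor of the paper; the function names are hypothetical. Each action is chosen with a frequency that approximates its probability of being the most valued.

```python
import random


def q_value_sampling(means, stds, rng):
    """Draw one value per action from its (assumed Gaussian) posterior
    and return the index of the action with the largest draw."""
    draws = [rng.gauss(m, s) for m, s in zip(means, stds)]
    return max(range(len(draws)), key=draws.__getitem__)


def choice_frequencies(means, stds, n_draws=10000, seed=0):
    """Estimate how often each action is selected under Q-value sampling."""
    rng = random.Random(seed)
    counts = [0] * len(means)
    for _ in range(n_draws):
        counts[q_value_sampling(means, stds, rng)] += 1
    return [c / n_draws for c in counts]


# Uncertain posteriors lead to exploration of the lower-valued action,
# whereas confident posteriors lead to near-deterministic exploitation.
uncertain = choice_frequencies([1.0, 0.0], [1.0, 1.0])
confident = choice_frequencies([1.0, 0.0], [0.1, 0.1])
```

In this sketch, the balance between exploration and exploitation is governed entirely by the width of the value posteriors: no separate temperature parameter is needed.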

Importantly, the proposed approach is very general, and even though we apply it here only to Model-Free Reinforcement Learning, it could be also extended to Model-Based RL [4], where the agent models a state-action-state transition table together with the reward functions. Additionally, other machine-learning algorithms can also benefit from this approach [1].

The paper is structured as follows: first (Related work section) we briefly review the state of the art and place our work within the context of the current literature. In the Methods section, we present the mathematical details of the model. We derive the analytical expressions of the learning rule, and frame them in a biological context. We then show how this learning scheme directly translates into a decision rule that constitutes a special case of the Sequential Sampling family of algorithms. In the Results section, we show various predictions of our model in terms of learning and decision making. More importantly, we show that despite its complexity, the model can be fitted to behavioural data. We conclude by reviewing the contributions of the present work, highlighting its limitations and putting it in a broader perspective.

Related work

The adaptation of learning to contingency changes and noise has numerous connections to various scientific fields from cognitive psychology to machine learning. A classical finding in behavioural neuroscience is that instrumental behaviours tend to be less and less flexible as subjects repeatedly receive positive reinforcement after selecting a certain action in a certain context, both in animals [5–8] and humans [9–13]. This suggests that biological agents indeed adapt their learning rate to inferred environmental stability: when the environment appears stable (e.g. after prolonged experience of a rewarded stimulus-response association), they show increased tendency to maintain their model of the environment unchanged despite reception of unexpected data.

Most studies on such automatization of behaviour have focused on action selection. However, weighting new evidence against previous belief is also a fundamental problem for perception and cognition [14–16]. Predictive coding [17–22] provides a rich, global framework that has the potential to tackle this problem, but an explicit formulation of cognitive flexibility is still lacking. For example, whereas [22] provides an elegant Kalman-like Bayesian filter that learns the current state of the environment based on its past observations and predicts the effect of its actions, it assumes a stable environment and cannot, therefore, adapt dynamically to contingency changes. The Hierarchical Gaussian Filter (HGF) proposed by Mathys and colleagues [23, 24] provides a mathematical framework that implements learning of a sensory input in a hierarchical manner, and that can account for the emergence of inflexibility in various situations. This model deals with the problem of flexibility (framed as expected “volatility”) by building a hierarchy of random variables: each of these variables follows a Gaussian distribution with a mean equal to the value of this variable at the previous trial and a variance equal to a non-linear transform of the variable at the level above. Each level encodes the distribution of the volatility of the level below. Although the HGF has shown its efficiency in numerous applications [25–30], a major limitation of this model, within the context of our present concern, is that, while it accommodates a dynamically varying volatility, it assumes that the precision of the likelihood at the lowest level is static. To understand why this is the case, one should first observe that in the HGF the variance at each level is the product of two factors: a “tonic” component, which is constant throughout the experiment, and a “phasic” component that is time-varying and controlled by the level above.
These terms recall the concepts of “expected” and “unexpected” uncertainty [31, 32], and in the present paper, we will refer to these as variance (of the observation) and volatility (of the contingency). Now consider an experiment with two distinct successive signals, one with a low variance and one with a high variance. When fitted to this dataset, the HGF will consider the lower variance as the first tonic component, and all the extra variance in the second part of the signal will be assigned to the “phasic” part of the volatility, thus wrongfully considering noise of the signal as a change of contingency (see Fig 1). In summary, the HGF will have difficulties accounting for changes in the variance of the observations. Moreover, the HGF model cannot forget past experience after changes of contingency, but can only adapt its learning to the current contingency. This contrasts with the approach we propose, where the assessment of a change of contingency is made with the use of a reference, naive prior that plays the role of a “null hypothesis”. This way of making the learning process gravitate around a naive prior allows the model to actively forget past events and to eventually come back to a stable learning state even after very surprising events. These caveats limit the applicability of the HGF to a certain class of datasets in which contingency changes affect the mean rather than the variance of observations and in which the training set contains all possible future changes that the model may encounter at testing.

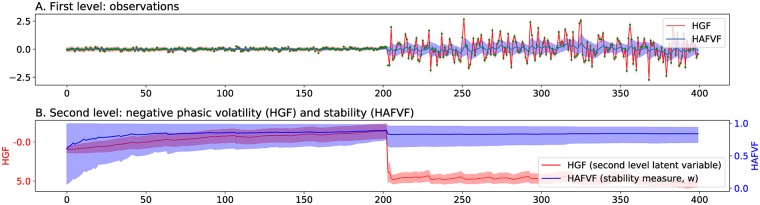

Fig 1. Fitting of HGF model on dataset with changing variance.

Two signals with a low (0.1) and high (1) variance were successively simulated for 200 trials each. A two-level HGF and the HAFVF were fitted to this simple dataset. A. The HGF considered the lower variance component as a “tonic” factor whereas all the additional variance of the second part of the signal was assigned to the “phasic” (time-varying) volatility component. This corresponded to a high second-level activation during the second phase of the experiment (B.) reflecting a low estimate of signal stability. The corresponding Maximum a Posteriori (MAP) estimate of the HAFVF had a much better variance estimate for both the first and second part of the experiment (A.), and, in contrast to the HGF, the stability measure (B.) decreased only at the time of the change of contingency. Shaded areas represent the 95% (approximate) posterior confidence interval of the mean. Green dots represent the value of the observations.

As will be shown in detail below, in the model proposed in the present paper, volatility is not only a function of the variance of the observations. If a new observation falls close enough to previous estimates, the agent will refine its posterior estimate of the variance and will forget less (i.e. it will move its prior away from the fixed initial prior and closer to the learned posterior from the previous trial). If, instead, the new observation is unlikely given this posterior estimate, the agent will forget more (i.e. it will move its prior closer to the fixed initial prior) and the model will tend to update to a novel state (because of the low precision of the initial prior). In the results of this manuscript, we show that our model outperforms the HGF in such situations.

In Machine Learning and in Statistics, too, the question of whether new unexpected data should be classified as an outlier or as an environmental change is important [33]. This problem of “denoising” or “filtering” the data is ubiquitous in science, and usually relies on arbitrary assumptions about environmental stability. In signal processing and system identification, adaptive forgetting is a broad field where optimality is highly context (and prior)-dependent [2]. Bayesian Filtering (BF) [34], and in particular the Kalman Filter [35], often lacks the flexibility necessary to model real-life signals that are, by nature, changing. One can distinguish two approaches to deal with this problem: whereas Particle Filtering (PF) [36–38] is computationally expensive, the SF family of algorithms [2, 39], of which our model is a special case, usually has greater accuracy for a given amount of resources [36] (for more information, we refer to [35], where SF is reviewed). Most previous approaches in SF have used a truncated exponential prior [40, 41] or a fixed, linear mixture prior to account for the stability of the process [37]. Our approach is innovative in this field in two ways: first, we use a Beta prior on the mixing coefficient (unusual but not unique [42]), and we adapt the posterior of this forgetting factor on the basis of past observations, the prior of this parameter and its own adaptive forgetting factor. Second, we introduce a hierarchy of forgetting that stabilizes the learning when the training length is long.

We therefore intend to focus our present research on the very question of flexibility. We will show how flexibility can be implemented in a Bayesian framework using an adaptive forgetting factor, and what prediction this framework makes when applied to learning and decision making in Model-Free paradigms.

Methods

Bayesian Q-learning and the problem of flexibility

Classical RL [43], or Bayesian RL [44, 45] cannot discriminate learners that are more prone to believe in a contingency change from those who tend to disregard unexpected events and consider them as noise. To show this, we take the following example: let p(ρ|r≤j) = Beta(α, β) be the posterior probability at trial j of a binary reward rj ∼ Bern(ρ) with prior probability ρ ∼ Beta(α0, β0). It can be shown that, at the trial j = vj + uj, where vj is the number of successes and uj the number of failures, the posterior probability parameters read {αj = α0 + vj, βj = β0 + uj}. This can be easily mapped to a classical RL algorithm if one considers that, at each update of v and u, the posterior expectation of ρ is updated by

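$$
\begin{aligned}
\mathbb{E}[\rho \mid r_{\le j}] &= \frac{\alpha_j}{\alpha_j + \beta_j} && (1)\\
&= \frac{\alpha_{j-1} + r_j}{\alpha_{j-1} + \beta_{j-1} + 1} && (2)\\
&= \frac{\alpha_{j-1}}{\alpha_{j-1} + \beta_{j-1}} + \frac{1}{\alpha_{j-1} + \beta_{j-1} + 1}\left(r_j - \frac{\alpha_{j-1}}{\alpha_{j-1} + \beta_{j-1}}\right) && (3)\\
&= \mathbb{E}[\rho \mid r_{<j}] + \frac{1}{\alpha_j + \beta_j}\left(r_j - \mathbb{E}[\rho \mid r_{<j}]\right) && (4)
\end{aligned}
$$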

which has the form of a classical myopic Q-learning algorithm with a decreasing learning rate.
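The equivalence between the conjugate Beta-Bernoulli update and a delta rule with a decreasing learning rate can be checked numerically. The following Python sketch is only an illustration: the function name and the default prior Beta(1, 1) are assumptions, not part of the original model specification.

```python
def beta_bernoulli_delta_rule(rewards, alpha0=1.0, beta0=1.0):
    """Track E[rho] both with a delta rule and with the Beta posterior counts.

    Returns one tuple (delta_rule_mean, conjugate_mean, learning_rate)
    per observed reward.
    """
    alpha, beta = alpha0, beta0
    mean = alpha / (alpha + beta)  # prior expectation of rho
    history = []
    for r in rewards:
        # Conjugate update: a success increments alpha, a failure increments beta.
        alpha += r
        beta += 1 - r
        # The learning rate is the inverse of the total (pseudo-)count,
        # so it shrinks as evidence accumulates.
        learning_rate = 1.0 / (alpha + beta)
        # Delta rule: move the expectation towards the observed reward.
        mean = mean + learning_rate * (r - mean)
        history.append((mean, alpha / (alpha + beta), learning_rate))
    return history


history = beta_bernoulli_delta_rule([1, 1, 0, 1, 0, 0, 1])
```

Both estimates coincide at every trial, and the learning rate decreases monotonically, which is what makes this scheme insensitive to late contingency changes.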

The drawback of this fixed-schedule learning rate is that, if the number of observed successes greatly outnumbers the number of failures (v ≫ u) at the time of a contingency change in which failures suddenly become more frequent, the agent will need v − u + 1 failures to start considering that p(rj = 0|r≤j) > p(rj = 1|r≤j). This behaviour is obviously sub-optimal in a changing environment, and Dearden [44] suggests adding a constant forgetting factor to the updates of the posterior, thereby making the agent progressively blind to past outcomes. Consider the case in which

with w ∈ [0; 1] being the forgetting factor. We can easily see that, in the limit case of α0 = 0 and β0 = 0, as j → ∞. We can define as the efficient memory of the agent, which provides a bound on the effective memory, represented by the total amount of trials taken into account so far (e.g. αj+ βj in the previous example). This produces an upper and lower bound to the variance of the posterior estimate of p(ρ|r≤j). This can be seen from the variance of the beta distribution which is maximized when αj = βj, and minimized when either αj = α0 or βj = β0. In a steady environment, agents with larger memory are advantaged since they can better estimate the variance of the observations. But when the environment changes, large memory becomes disadvantageous because it requires longer time to adapt to the new contingency. Here, we propose a natural solution to this problem by having the agent erase its memory when a new observation (or a series of observations) is unlikely given the past experience.
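The effect of a constant forgetting factor can be sketched in Python. The update used here, in which the previous counts are multiplied by w before the new outcome is added, is an assumption made for illustration (with α0 = β0 = 0 by default); under this assumption the total count α + β converges to 1/(1 − w), which plays the role of a bounded memory.

```python
def forgetful_beta_update(rewards, w, alpha0=0.0, beta0=0.0):
    """Beta-Bernoulli counts with a constant forgetting factor w (assumed form).

    Returns the final (alpha, beta) and the trajectory of alpha + beta.
    """
    alpha, beta = alpha0, beta0
    memory = []
    for r in rewards:
        # Past counts are discounted by w before the new outcome is added.
        alpha = w * alpha + r
        beta = w * beta + (1 - r)
        memory.append(alpha + beta)
    return alpha, beta, memory


# 200 successes followed by a contingency change with 20 failures.
rewards = [1] * 200 + [0] * 20

a_forget, b_forget, mem_forget = forgetful_beta_update(rewards, w=0.9)
a_full, b_full, mem_full = forgetful_beta_update(rewards, w=1.0)

mean_forget = a_forget / (a_forget + b_forget)  # follows the new contingency
mean_full = a_full / (a_full + b_full)          # still dominated by the past
```

With w = 1 the agent never forgets and its estimate remains dominated by the 200 initial successes, whereas with w = 0.9 the estimate follows the new contingency after a few failures, at the price of a permanently limited memory.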

General framework

Our framework is based on the following assumptions:

Assumption 1 The environment is fully Markovian: the probability of the current observation given all the past history is equal to the probability of this observation given the previous observation.

Assumption 2 At a given time point, all the observations (rewards, state transitions, etc.) are i.i.d. and follow a distribution p(x|z) that is issued from the exponential family and has a conjugate prior that also belongs to the exponential family p(z|θ0).

For conciseness, the latent variables z (i.e. action value, transition probability etc.) and their prior θ will represent the natural parameters of the corresponding distributions in what follows.

Assumption 3 The agent builds a hierarchical model of the environment, where each of the distributions at the lower level (reward and state transitions) are independent, i.e. the reward distribution for one state-action cannot be predicted from the distribution of the other state-actions.

Assumption 4 The agent can only observe the effects of the action she performs.

Finally, an important assumption that will guide the development of the model is that the evolution of the environment is unpredictable (i.e. transition probabilities are uniformly distributed for all states of the environment) with the notable exception that it is more or less likely to stay in the same state than to switch to another state. Formally:

Assumption 5 Let be a set of environment states, with A ≫ 0 and a, b, c ∈ {1, 2, …, A}, a ∉ {b, c}. We assume that the transition probabilities are uniformly distributed for b, c ∈ {1: A}¬a, which reads:

Assumption 5 implies that any attempt to learn new transitions from state to state based on a uniform prior over these transitions will harm the performance of the predictive model, and the best strategy one could adopt is to learn the probability of staying in the same state and group the probabilities of changing to any other state together. Then, the only two transition probabilities to learn are:

This is what the “critic” part of the AC-HAFVF we propose achieves. Of course, the model could be improved by learning the other transition probabilities, if needed, but we leave this for future work (see for instance [35]).

Model specifications

We are interested in deriving the posterior probability of some datapoint-specific measure pj(z ∣ x≤j, θ0), where j indicates the point in time, given the past and current observations x≤j and some prior belief θ0. Bayes theorem states that this is equal to

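$$
p_j(z \mid x_{\le j}, \theta_0) = \frac{p(x_{\le j} \mid z)\, p(z \mid \theta_0)}{p(x_{\le j} \mid \theta_0)} \tag{5}
$$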

We now consider the case of a subject that observes the stream of data and updates her posterior belief on-line as data are gathered. According to Assumption 2, one can express the posterior of z given the current observation xj

$$
p(z \mid x_j, x_{<j}) \propto p(x_j \mid z)\, p(z \mid x_{<j}) \tag{6}
$$

It appears immediately that the prior p(z|x<j) has the same form as the previous posterior, so that our posterior probability function can be easily estimated recursively using the last posterior estimate as a prior (yesterday’s posterior is today’s prior) until p(z|θ0) is reached. Assumption 2 implies that the posterior p(z|xj, x<j) will be tractable: since p(x|z) is from the exponential family and has a conjugate prior p(z|θ0), the posterior probability has the same form as the prior, and has a convenient expression:

| (7) |

where T(xi) is the sufficient statistics of the ith sample, and where we have made explicit the fact that can be partitioned in two parts, from which represents the prior number of observations. Consequently, is equivalent to the effective memory introduced above.

This simple form of recursive posterior estimate suffers from the drawbacks we want to avoid, i.e. it does not forget past experience. Let us therefore assume that zj can be different from zj−1 with a given probability, which we first assume to be known. We introduce a two-component mixture prior where the previous posterior is weighted against the original prior belief:

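$$
p(z \mid x_{<j}, \theta_0, w) = \frac{p(z \mid x_{<j})^{w}\, p(z \mid \theta_0)^{1-w}}{Z(w, x_{<j}, \theta_0)} \tag{8}
$$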

The exponential weights on this prior mixture allow us to easily write its logarithmic form, but it still demands that we compute the normalizing constant Z(w, x<j, θ0) = ∫ p(z|x<j)w p(z|θ0)1−w d z. This constant has, however, a closed-form if both the prior and the previous posterior are from the same distribution, issued from the exponential family.
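For exponential-family densities, raising the previous posterior and the initial prior to the powers w and 1 − w amounts to a convex combination of their parameters, which is why the normalizing constant remains available in closed form. The Python sketch below illustrates this with Beta densities, chosen purely for simplicity; the function names are hypothetical, and the numerical integration is included only as a sanity check.

```python
import math


def log_beta_fn(a, b):
    """Logarithm of the Beta function B(a, b)."""
    return math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)


def mix_beta(prev, prior, w):
    """Parameters of the normalized density Beta(prev)^w * Beta(prior)^(1 - w)."""
    (a, b), (a0, b0) = prev, prior
    return w * a + (1 - w) * a0, w * b + (1 - w) * b0


def log_normalizer(prev, prior, w):
    """Closed-form log Z(w) = log of the integral of Beta(prev)^w * Beta(prior)^(1 - w)."""
    a_mix, b_mix = mix_beta(prev, prior, w)
    return (log_beta_fn(a_mix, b_mix)
            - w * log_beta_fn(*prev)
            - (1 - w) * log_beta_fn(*prior))


def beta_pdf(z, a, b):
    """Beta density evaluated at z in (0, 1)."""
    return math.exp((a - 1) * math.log(z) + (b - 1) * math.log(1 - z)
                    - log_beta_fn(a, b))


def numeric_z(prev, prior, w, n=20000):
    """Midpoint-rule estimate of Z(w), used to check the closed form."""
    h = 1.0 / n
    total = 0.0
    for i in range(n):
        z = (i + 0.5) * h
        total += beta_pdf(z, *prev) ** w * beta_pdf(z, *prior) ** (1 - w)
    return total * h


previous_posterior = (30.0, 5.0)  # confident belief learned from past data
initial_prior = (1.0, 1.0)        # naive, flat prior
weighted_prior = mix_beta(previous_posterior, initial_prior, w=0.7)
```

Setting w = 1 recovers the previous posterior and w = 0 recovers the initial prior, in line with the interpretation of small and large values of the forgetting factor given in the Introduction.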

In Eq 8, we assumed that the forgetting factor was known. However, it is more likely that the learner will need to infer it from the data at hand. Putting a beta prior on this parameter, and under the assumption that the posterior probability factorizes (Mean-Field assumption), the joint probability at time j reads:

| (9) |

where ϕ0 is the vector of the parameters of the beta prior of w. The model in Eq 9 is not conjugate, and the posterior is therefore not guaranteed to be tractable anymore.

Hierarchical filter

Let us now analyze the expected behaviour of an agent using a model similar to the one just described, in a steady environment: if all belong to the same, unknown distribution p(x|z), the value of will progressively converge to its true value as the prior (or the previous posterior) over w will eventually put a lot of weight on the past experience (i.e. it favours high values of w), since the distribution from which x is drawn is stationary. We have shown that such models rapidly tend to an overconfident posterior over w [1]. In practice, when the previous posterior of w is confident on the value that w should take (i.e. has low variance), it tends to favor updates that reduce variance further, corresponding to values of pj(w|x≤j) that match pj−1(w|x<j), even if this means ignoring an observed mismatch between pj−1(z|x<j) and pj(z|x≤j). In order to deal with this issue, we enrich our model by introducing a third level in the hierarchy.

We re-define the prior over w as a two-component mixture of priors:

and the full joint probability has the form

| (10) |

This additional hierarchical level allows the model to forget w as a function of observed data (i.e. not at a fixed rate), providing it with the capacity to adapt the approximate posterior distribution over w with greater flexibility [1]. The latent variable b can be seen as a regularizer for pj(w ∣ x≤j).

The prior parameters of the HAFVF and their interpretation are outlined in Table 1.

Table 1. HAFVF prior parameters in the case of normally distributed variables.

Horizontal lines separate the various levels.

| General identifier | Parameter | Domain | Name | Interpretation |
|---|---|---|---|---|
| θ0 | | | Prior mean | Expected value of the observations |
| | | | Prior number of observations (over μ) | Importance of the prior belief of μ |
| | | | Gamma shape parameter | Importance of the prior belief of σ |
| | | | Gamma rate parameter | Sum of squared residuals |
| ϕ0 | | | Beta shape parameter | Stability belief of {μ, σ} |
| | | | Beta shape parameter | Volatility belief of {μ, σ} |
| β0 | | | Beta shape parameter | Stability belief of w |
| | | | Beta shape parameter | Volatility belief of w |

Variational Inference

Eqs 6–10 involve the posterior probability distributions of the parameters given the previous observations. When these quantities have no closed-form formula, two classes of methods can be used to estimate them. Simulation-based algorithms [46] such as importance sampling, particle filtering or Markov Chain Monte Carlo, are asymptotically exact but computationally expensive, especially in the present case where the estimate has to be refined at each time step. The other class of methods, approximate inference [47, 48], consists in formulating, for a model with parameters y and data x, an approximate posterior q(y), that we will use as a proxy to the true posterior p(y|x). Roughly, approximate inference can be partitioned into Expectation Propagation and Variational Bayes (VB) methods. Let us consider in more detail VB, as it is the core engine of our learning model. In VB, optimizing the approximate posterior amounts to computing a lower-bound to the log model evidence (ELBO) , whose distance from the true log model evidence can be reduced by gradient descent [49]. Hybrid methods, that combine sampling methods with approximate inference, also exist (e.g. Stochastic Gradient Variational Bayes [50] or Markov Chain Variational Inference [51]). With the use of refined approximate posterior distributions [52–54], they allow for highly accurate estimates of the true posterior with possibly complex, non-conjugate models.

We define a variational distribution over y with parameters υ: q(y|υ), which we will use as a proxy to the real, but unknown, posterior distribution p(y|x). The two distributions match exactly when their Kullback-Leibler divergence is equal to zero, i.e.

where we have omitted the approximate posterior parameters υ for conciseness. Given some arbitrary constraints on q(y), we can choose (for mathematical convenience) to minimize DKL[q(y)||p(y|x)] with respect to q(y). Formally, this can be written as

We can now substitute log p(y|x) by its rhs in the log-Bayes formula

| (11) |

Because log p(x) does not depend on the model parameters, it is fixed for a given dataset. Therefore, as we maximize the ELBO in Eq 11, we decrease the divergence DKL[q(y)||p(y|x)] between the approximate and the true posterior. When a maximum is reached, we can consider that (1) we have obtained the most accurate approximate posterior given our initial assumptions about q(y) and (2) the ELBO provides a lower bound to log p(x). It should be noted here that the more flexible q(y) is, the closer we can hope to get to the true posterior, but this generally comes at the expense of tractability and/or computational resources.
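The relation between the ELBO, the KL divergence and the log model evidence can be verified on a toy problem. The Python sketch below assumes a discrete latent variable with three states and an arbitrary joint probability table; these numbers and the function name are illustrative only.

```python
import math


def elbo_and_kl(joint, q):
    """Return (ELBO, KL[q || p(y|x)], log p(x)) for a discrete latent y.

    joint[y] holds p(x, y) for the observed x, and q[y] is the
    approximate posterior over y.
    """
    evidence = sum(joint)                      # p(x), obtained by marginalization
    posterior = [p / evidence for p in joint]  # exact posterior p(y | x)
    # ELBO: expected log joint plus entropy of q.
    elbo = sum(qy * (math.log(py) - math.log(qy))
               for qy, py in zip(q, joint) if qy > 0)
    # KL divergence from q to the exact posterior.
    kl = sum(qy * (math.log(qy) - math.log(py))
             for qy, py in zip(q, posterior) if qy > 0)
    return elbo, kl, math.log(evidence)


joint = [0.10, 0.25, 0.05]
elbo, kl, log_evidence = elbo_and_kl(joint, [0.2, 0.5, 0.3])

# When q equals the exact posterior, the bound becomes tight.
exact = [p / sum(joint) for p in joint]
elbo_exact, kl_exact, _ = elbo_and_kl(joint, exact)
```

For any choice of q, the ELBO and the KL divergence sum to log p(x), so raising one necessarily lowers the other.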

The ELBO in Eq 11 is the sum of the expected log joint probability and the entropy of the approximate posterior. In order for the former to be tractable, one must carefully choose the form of the approximate posterior. The Mean-field assumption we have made allows us to select, for each factor of the approximate posteriors, a distribution with the same form as their conjugate prior, which is the best possible configuration in this context [55].

Applying now this approach to Eq 10, our spherical approximate posterior looks like:

In addition, in order to recursively estimate the current posterior probability of the model parameters given the past, we make the natural approximation that the true previous posterior can be substituted by its variational approximation:

$$
p(z \mid x_{<j}) \approx q(z \mid \theta_{j-1}) \tag{12}
$$

and similarly for p(w|x<j) and p(b|x<j). The use of this distribution as a proxy to the posterior greatly simplifies the optimization of qj(z, w, b).

The full, approximate joint probability distribution at time j therefore looks like

where θj−1, ϕj−1 and βj−1 are the variational parameters at the last trial for z, w and b respectively. A further advantage of the approximation made in Eq 12 is that the prior of z and w simplifies elegantly:

| (13) |

(see Appendix A for the full derivation).

A conjugate distribution for p(w) is hard to find. Šmídl and Quinn [56] propose a uniform prior and a truncated exponential approximate posterior over w. They interpolate the normalizing constant between two fixed values of w, which allows them to perform closed-form updates of this parameter. Here, we choose p(w|ϕ0) and q(w|ϕj) to both be beta distributions, a choice that does not impair our ability to perform closed-form updates of the variational parameters, as we will see in the Update equation section.

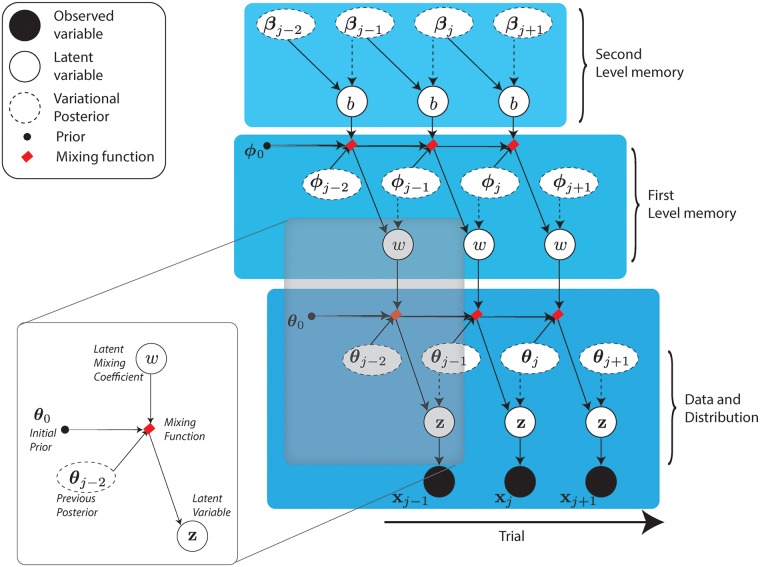

In this model (see Fig 2), named the Hierarchical Adaptive Forgetting Variational Filter [1], specific prior configurations will bend the learning process to categorize surprising events either as contingency changes, or as accidents. In contrast with other models [57], w and b are represented with a rich probability distribution where both the expected values and variances have an impact on the model’s behaviour. For a given prior belief on z, a confident prior over w, centered on high values of this parameter, will lead to a lack of flexibility that would not be observed with a less confident prior, even if they have the same expectation.

Fig 2. Directed Acyclic Graph of the HAFVF model.

Plain circles represent observed variables, white circles represent latent variables and dots represent prior distribution parameters. Dashed circles and dashed arrows represent approximate posteriors and approximate posterior dependencies. A weighted prior latent node is highlighted.

The critic: HAFVF as a reinforcement learning algorithm

Application of this learning scheme to the RL case is straightforward if one considers x as being the observed rewards and z as the parameters of the distribution of these rewards. In the following, we will assume that the agent models a normally distributed state-action reward function xj = r(s, a), from which she tries to estimate the posterior distribution of the natural parameters z ≜ η(μ(s, a), σ(s, a)), where η(⋅) is the natural parameter vector of the normal distribution. In this context, an intuitive choice for the prior (and the approximate posterior) of these parameters is a Normal Inverse-Gamma distribution: for the prior, we have

and the approximate posterior can be defined similarly with a normal component and a gamma component .

Update equation

Even though the model is not formally conjugate, the use of a mixture of priors with exponential weights makes the variational update equations easy to implement for the first level. Let us first state a few basic principles of the Mean-Field Variational Inference framework: it can be shown that, under the assumption that the approximate posterior factorizes as q(y1, y2) = q(y1)q(y2), the optimal distribution q*(y1) given our current estimate of q(y2) is given by

| (14) |

where log Z is some log-normalizer that does not depend on y. Eq 14 states that each set of variational parameters can be updated independently given the current value of the others: this usually leads to an approach similar to EM [46], where one iterates through the updates of the variational posteriors successively until convergence.

Fortunately, thanks to the conjugate form of the lower level of the HAFVF, Eq 14 can be unpacked to a form that recalls Eq 7 where the update of the variational parameters of z reads:

| (15) |

where

is the weighted prior of z (see Appendix A). This update scheme can be mapped onto and be interpreted in terms of Q-learning [43] (see Appendix B). Again, is the updated effective memory of the subject, which is bounded on the long term by the (approximate) efficient memory . One can indeed see that the actual efficient memory, which reads

is undefined when b ≤ 1. To solve this issue, we used the first order Taylor approximation

which is a biased but consistent estimator of the efficient memory, as it approaches its true value for large values of ϕ.

More specifically, for the approximate posterior of a single observation stream with corresponding parameters , we can apply this principle easily, as the resulting distribution has the form of a with parameters:

| (16) |

where we have used .
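To make the structure of this update concrete, the following is a minimal sketch of a forgetting-based Normal Inverse-Gamma update. It is a simplification under stated assumptions, not the update derived in this paper: the forgetting factor `w` is held fixed instead of being inferred, the weighted prior is taken to be the geometric mixture of the previous posterior and the initial prior, and the names `NIG`, `weighted_prior`, `update` and `run` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class NIG:
    """Normal-Inverse-Gamma parameters (mu, kappa, alpha, beta)."""
    mu: float
    kappa: float
    alpha: float
    beta: float


def weighted_prior(prev: NIG, init: NIG, w: float) -> NIG:
    """Geometric mixture prev**w * init**(1 - w), which is again a NIG.

    Assumption: w is a fixed number in [0, 1], not a random variable.
    """
    kappa = w * prev.kappa + (1.0 - w) * init.kappa
    mu = (w * prev.kappa * prev.mu + (1.0 - w) * init.kappa * init.mu) / kappa
    alpha = w * prev.alpha + (1.0 - w) * init.alpha
    # The quantity beta + kappa * mu**2 / 2 is the one that mixes linearly.
    mixed = (w * (prev.beta + 0.5 * prev.kappa * prev.mu ** 2)
             + (1.0 - w) * (init.beta + 0.5 * init.kappa * init.mu ** 2))
    beta = mixed - 0.5 * kappa * mu ** 2
    return NIG(mu, kappa, alpha, beta)


def update(prior: NIG, x: float) -> NIG:
    """Standard conjugate NIG update for a single Gaussian observation x."""
    kappa = prior.kappa + 1.0
    mu = (prior.kappa * prior.mu + x) / kappa
    alpha = prior.alpha + 0.5
    beta = prior.beta + 0.5 * prior.kappa * (x - prior.mu) ** 2 / kappa
    return NIG(mu, kappa, alpha, beta)


def run(xs, init: NIG, w: float) -> NIG:
    """Filter a stream of observations with a fixed forgetting factor."""
    post = init
    for x in xs:
        post = update(weighted_prior(post, init, w), x)
    return post
```

Under these assumptions, the number of effective observations follows κj = w κj−1 + (1 − w) κ0 + 1, so it saturates at κ0 + 1/(1 − w) in a long stable stream. This is one way to see why the memory of the learner stays bounded for any w < 1.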

Deriving updates for the approximate posterior over the mixture weights w and b is more challenging, as the optimal approximate posterior in Eq 14 does not have the same form as the beta prior due to the non-conjugacy of the model. Fortunately, non-conjugate variational message passing (NCVMP) [58] can be used in this context. In short, NCVMP minimizes an approximate KL divergence in order to find the values of the approximate posterior parameters that maximize the ELBO. Although NCVMP convergence is not guaranteed, this issue can be somewhat alleviated by damping the updates (i.e. updating the variational parameters to a value lying in between their previous value and the value computed using NCVMP, see [58] for more details). The need for a closed-form formula of the expected log-joint probability constitutes another obstacle to the naive application of NCVMP to the present problem: indeed, computing the expected value of the log-partition functions log Z(w) and log Z(b) involves a weighted sum of the past variational parameters θj−1 and the prior θ0, which are known, with a weight w, which is unknown. The expectation of this expression given q(w) does not, in general, have an analytical expression. To solve this problem, we used the second order Taylor expansion around (see Appendix A).
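The damping step mentioned above can be sketched in a few lines. This is only an illustration: the function name `damped_update` and the default step size are assumptions, and the parameterization in which damping is applied in [58] may differ from the plain parameter vector used here.

```python
def damped_update(old, proposed, step=0.5):
    """Move a fraction `step` of the way from `old` towards `proposed`.

    step = 1 recovers the undamped update; smaller values are more cautious.
    """
    if not 0.0 < step <= 1.0:
        raise ValueError("step must lie in (0, 1]")
    return [(1.0 - step) * o + step * p for o, p in zip(old, proposed)]
```

A smaller step trades convergence speed for stability, which is the usual motivation for damping fixed-point iterations that are not guaranteed to converge.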

The derivation of the update equations of ϕ and β can be found in [1].

Counterfactual learning

As an agent performs a series of choices in an environment, she must also keep track of the actions not chosen and update her belief accordingly: ideally, the variance of the approximate posterior of the reward function associated with a given action should increase when that action is not selected, to reflect the increased uncertainty about its outcome during the period when no outcome was observed. This requirement implies counterfactual learning capability [59–61].

Two options will be considered here. The first option consists in updating the approximate posterior parameters of the non-selected action at each time step with an update scheme that pulls the approximate posterior progressively towards the prior θ0, with a speed that depends on w, i.e. as a function of the belief the agent has about environment stability. The second approach consists in updating the approximate posterior of the actions only when they are actually selected, while accounting for the past trials during which that action was not selected. The mathematical details of these approaches are given in Appendix C.

Delayed updating

Even though the agent learns only about the actions that are selected, it can adapt its learning rate as a function of how long ago the action was last selected. Formally, this approach considers that, if the posterior probability had been updated at each time step and the forgetting factor w had been stable, the influence of the observations made n trials back in time would have decreased geometrically, with a rate ω ≜ wⁿ. We can then substitute the prior over z by:

| (17) |

which is identical to Eq 8 except that w has been substituted by ω.

We name this strategy Delayed Approximate Posterior Updating.
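As an illustration of the delayed strategy, the sketch below computes the effective forgetting factor ω = wⁿ and applies it to a weighted prior. It assumes that w is a fixed number and that the weighted prior is a convex combination of the previous and initial natural parameters; the function names are hypothetical.

```python
def delayed_weight(w: float, n: int) -> float:
    """Effective forgetting factor after n trials since the last update."""
    if not 0.0 <= w <= 1.0:
        raise ValueError("w must lie in [0, 1]")
    if n < 0:
        raise ValueError("n must be non-negative")
    return w ** n


def delayed_prior(theta_prev, theta0, w: float, n: int):
    """Mix previous and initial natural parameters with weight omega = w**n."""
    omega = delayed_weight(w, n)
    return [omega * p + (1.0 - omega) * q for p, q in zip(theta_prev, theta0)]
```

With n = 1 this reduces to the usual trial-by-trial mixture, and as n grows the weighted prior relaxes towards the initial prior θ0.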

Continuous updating

When an action is not selected, the agent can infer what value it would have had given the observed stability of the environment. In practice, this is done by updating the variational parameters of the selected and non-selected action using the observed reward for the former, and the expected reward and variance of this reward for the latter.

This approach can be beneficial for the agent in order to optimize her exploration/exploitation balance. In Appendix C.1, it is shown that if the agent has the prior belief that the reward variance is high, then the probability of exploring the non-chosen option will increase as the lag between the current trial and the last observation of the reward associated with this option increases.

This feature makes this approach intuitively more suited for exploration among multiple alternatives in changing environments, and we therefore selected it for the simulations performed in this paper.

Temporal difference learning

An important feature required for an efficient Model-Free RL algorithm is the ability to account for future rewards in order to make choices that might seem suboptimal to a myopic agent, but that make sense in the long run. This is especially useful when large rewards (or the avoidance of large punishments) can be expected in the near future.

In order to do this, one can simply add the expected value of the next state to the current reward when updating the reward distribution parameters. However, because the evolution of the environment is somewhat chaotic, it is usually considered wiser to slightly decay the future rewards by a discount rate γ. This mechanism is in accordance with many empirical observations of animal behaviours [62–64], neurophysiological processes [65–67] and theories [68–70].

As the optimal value of γ is unknown to the agent, we can assume that she will try to estimate its posterior distribution from the data as she does for the mean and variance of the reward function. Appendix D shows how this can be implemented in the current context. An example of TD learning in a changing environment is given in the TD learning with the HAFVF section.

We now focus on the problem of decision making under the HAFVF.

The actor: Decision making under the HAFVF

Bayesian policy

In a stable environment where the distributions of the action values are known precisely, the optimal choice (i.e. the choice that will maximize reward in the long run) is the choice with the maximum expected value: indeed, it is easy to see that if , then (here and for the next few paragraphs, we will restrict our analysis to the case of single stage tasks, and omit the s input in the reward function). However, in the context of a volatile environment, the agent has no certainty that the reward function has not changed since the last time she visited this state, and she has no precise estimate of the reward distribution. This should motivate her to devote part of her choices to exploration rather than exploitation. In a randomly changing environment, there is no general, optimal balance between the two, as there is no way to know how similar the environment is to that of the last trials. The best thing an agent can do is therefore to update her current policy wrt her current estimate of the uncertainty of the latent state of the environment.

Various policies have been proposed in order to use the Bayesian belief the agent has about its environment to make a decision that maximizes expected rewards in the long run. Here we will focus more particularly on Q-value sampling, or Q-sampling (QS) [71]. Note that we use the terminology Q-value sampling in accordance with Dearden [44, 71], but one should recall that QS is virtually indistinguishable from Thompson sampling [72, 73]. Our framework can also be connected to another algorithm used in the study of animal RL [74, 75], based on the Value of Perfect Information (VPI) [44, 45, 76], which we describe in Appendix E.

QS [71] is an exploration policy based on the posterior predictive probability that the value of an action exceeds that of all the other available actions:

| (18) |

The expectation of Eq 18 provides a clear policy to the agent. The QS approach is compelling in our case: in general, the learning algorithm we propose will produce a trial-wise posterior probability that should, most of the time, be easy to sample from.
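A Monte Carlo version of QS can be sketched as follows. This is a hedged illustration and not the procedure used in the paper: it assumes that each action has a Normal Inverse-Gamma posterior given as a tuple `(mu, kappa, alpha, beta)`, that the sampled action value is the latent mean, and it relies on NumPy. The function names are hypothetical.

```python
import numpy as np


def sample_nig(rng, mu, kappa, alpha, beta, size):
    """Draw (mean, variance) pairs from a Normal-Inverse-Gamma posterior."""
    # If G ~ Gamma(shape=alpha, rate=beta), then 1 / G ~ Inverse-Gamma(alpha, beta).
    var = 1.0 / rng.gamma(shape=alpha, scale=1.0 / beta, size=size)
    mean = rng.normal(mu, np.sqrt(var / kappa))
    return mean, var


def q_sampling_probs(posteriors, n_samples=20000, seed=0):
    """Estimate, for each action, the probability that its sampled value is the highest."""
    rng = np.random.default_rng(seed)
    draws = np.stack(
        [sample_nig(rng, *p, size=n_samples)[0] for p in posteriors]
    )
    winners = np.argmax(draws, axis=0)
    return np.bincount(winners, minlength=len(posteriors)) / n_samples
```

Selecting the action whose single sampled value is the largest amounts to drawing from these probabilities, which is why QS is so closely related to Thompson sampling.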

In bandit tasks, the policy dictated by QS is optimal provided that the subject has an equal knowledge of all the options she has. If the environment is only partly and unequally explored, the value of some actions may be overestimated (or underestimated), in which case QS will fail to detect that exploration might be beneficial. QS can lead to the same policy in a context where two actions (a1 and a2) have similar uncertainty associated with their reward distributions (σ1 = σ2) but different means (μ1 > μ2), and in a context where one action has a much larger expected reward (μ1 ≫ μ2) but also larger uncertainty (σ1 ≫ σ2) (see [44] for an example). This can be sub-optimal, as the action with the larger uncertainty could lead to a higher (or lower) reward than expected: in this specific case, choosing the action with the largest expected reward should be even more encouraged due to the lack of knowledge about its true reward distribution, which might be much higher than expected. A strategy to solve this problem is to give each action value a bonus, the Value of Perfect Information, that reflects the expected information gain that will follow the selection of an action. This approach, and its relationship to our algorithm, is discussed in Appendix E.

Q-sampling as a stochastic process

Let us get back to the case of QS, and consider an agent solving this problem using a gambler's ruin strategy [77]. We assume that, in the case of a two-alternative forced choice task, this agent has equal initial expectations that either a1 or a2 will lead to the highest reward, represented by a start point z0 = ζ/2, where ζ will be described shortly. The gambler's ruin process works as follows: the agent samples a value r(a1) and a value r(a2) and assesses which one is higher. If r(a1) beats r(a2), she updates the number of wins of a1 as z1 = z0 + 1, and she displaces her belief the other way (z0 − 1) if a2 beats a1. Then, she starts again and moves in the direction indicated by sign(r(a1) − r(a2)) until she reaches one of the two arbitrary thresholds situated at 0 or ζ, which symbolize the two available actions. It is easy to see that the number of wins and losses generated by this procedure gives a Monte Carlo sample of p(r(a1) > r(a2)|x<j). We show in Appendix F.1 that this process tends to deterministically select the best option as the threshold grows.
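The discrete process described above can be simulated directly. The sketch below is an illustration under simplifying assumptions: the probability `p_win` that a sample of a1 beats a sample of a2 is taken as fixed and known, and the threshold ζ is an even integer so that the start point ζ/2 is an integer. The closed-form expression is the classical gambler's ruin absorption probability for a symmetric start point; function names are hypothetical.

```python
import random


def ruin_choice(p_win: float, zeta: int, rng: random.Random) -> int:
    """Run one walk from zeta / 2; return 1 if zeta is reached, 2 if 0 is reached."""
    if zeta <= 0 or zeta % 2:
        raise ValueError("zeta must be a positive even integer")
    z = zeta // 2
    while 0 < z < zeta:
        z += 1 if rng.random() < p_win else -1
    return 1 if z == zeta else 2


def p_choose_a1(p_win: float, zeta: int) -> float:
    """Probability of absorption at zeta for a walk started at zeta / 2."""
    if not 0.0 < p_win < 1.0:
        raise ValueError("p_win must lie strictly between 0 and 1")
    ratio = (1.0 - p_win) / p_win
    return 1.0 / (1.0 + ratio ** (zeta / 2))
```

For ζ = 2 the choice probability equals `p_win` itself, which is plain Q-sampling, whereas larger thresholds push the choice probability of the better option towards 1 at the price of more samples per decision.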

So far, we have studied the gambler ruin problem as a discrete process. If the interval between the realization of two samples tends to 0, this accumulation of evidence can be approximated by a continuous stochastic process [77]. When the rewards are normally distributed, as in the present case, this results in a Wiener process, or Drift-Diffusion model (DDM, [78]), since the difference between two normally distributed random variables follows a normal distribution. This stochastic accumulation model has a displacement rate (drift) that is given by

see [79].

Crucially, it enjoys the same convergence property of selecting almost surely the best option for high thresholds (see Appendix F.2).

Sequential Q-sampling as a Full-DDM model

This simple case of a fixed-parameter DDM, however, is not the one we have to deal with, as the agent does not know the true value of {μ(a), σ²(a)} for each available action a, and can only approximate it based on her posterior estimate. Assuming that the approximate posterior over the latent mean and variance of the reward distribution is a Normal Inverse-Gamma distribution, and keeping the original statement , we have

| (19) |

and where, for the sake of sparsity of the notation, the indices are used to indicate the corresponding action-related variable.

To see how such an evidence accumulation process evolves, one can discretize Eq 19: this would be equivalent to sampling at each time t a displacement

| (20) |

where Δx stands for xt − xt−1. The drift in Eq 20 is sampled as the difference between two sampled means and the squared noise is sampled as the sum of the two sampled variances . At each time step (or at each trial, quite similarly as we will show), the tuple of parameters is drawn from the current posterior distribution.

Hereafter, we will refer to this process as the Normal-Inverse-Gamma Diffusion Process, or NIGDM.
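A discretized NIGDM trial can be sketched as below. This is an illustrative Euler scheme under stated assumptions, not the simulation code of the paper: the parameters are drawn once per trial, each posterior is passed as a tuple `(mu, kappa, alpha, beta)`, the time step `dt` and the step cap `max_steps` are arbitrary, and the non-decision time is ignored. The function name is hypothetical.

```python
import numpy as np


def nigdm_trial(post1, post2, zeta=2.0, z0=None, dt=1e-3,
                max_steps=100_000, rng=None):
    """Simulate one trial; return (choice, decision_time).

    choice is 1 (upper bound zeta), 2 (lower bound 0) or 0 (no bound reached).
    """
    rng = np.random.default_rng() if rng is None else rng
    z = zeta / 2.0 if z0 is None else z0
    draws = []
    for mu, kappa, alpha, beta in (post1, post2):
        var = 1.0 / rng.gamma(alpha, 1.0 / beta)       # inverse-gamma variance
        mean = rng.normal(mu, np.sqrt(var / kappa))    # mean given that variance
        draws.append((mean, var))
    drift = draws[0][0] - draws[1][0]                  # difference of sampled means
    noise = np.sqrt(draws[0][1] + draws[1][1])         # sum of sampled variances
    for step in range(1, max_steps + 1):
        z += drift * dt + noise * np.sqrt(dt) * rng.normal()
        if z <= 0.0 or z >= zeta:
            return (1 if z >= zeta else 2), step * dt
    return 0, max_steps * dt
```

Repeating such trials for a pair of posteriors gives both a choice frequency and a distribution of decision times, which is what makes the process usable as a joint model of choices and reaction times.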

NIGDM as an exploration rule

Importantly, and similarly to QS, this process has the desired property of selecting actions with a probability proportional to their probability of being the best option. This favours exploratory behaviour since, assuming equivalent expected rewards, actions that are associated with large reward uncertainty will tend to be selected more often. We show that the NIGDM behaves like QS in Appendix F.3. There, it is shown (Proposition 3) that, as the threshold grows, the NIGDM choice pattern resembles more and more the QS algorithm. For lower values of the threshold, this algorithm is less accurate than QS (see further discussion of the property of NIGDM for low thresholds in Appendix E).

Cognitive cost optimization under the AC-HAFVF

Algorithm 2 summarizes the AC-HAFVF model. This model ties together a learning algorithm—that adapts how fast it forgets its past knowledge on the basis of its assessment of the stability of the environment—with a decision algorithm that makes full use of the posterior uncertainty of the reward distribution to balance exploration and exploitation.

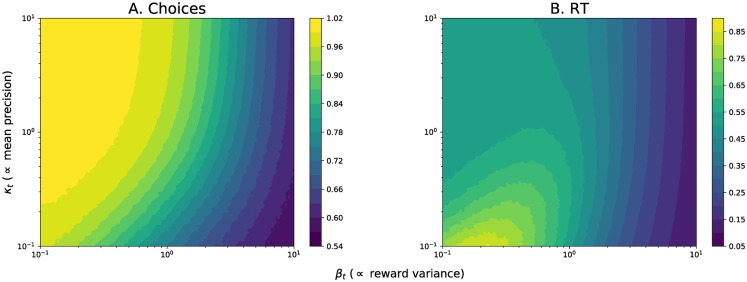

Importantly, these algorithms make time and resource costs explicit: for instance, time constraints can make decisions less accurate, because they will require a lower decision threshold. Fig 3 illustrates this interpretation of the AC-HAFVF by showing how accuracy and speed of the model vary as a function of the variance of the reward estimates: the cognitive cost of making a decision using the AC-HAFVF is high when the agent is uncertain about the reward mean (low κj) but has a low expectation of the variance (low βj). In these situations, the choices were also more random, allowing the agent to explore the environment. Decisions are easier to make when the difference in mean rewards is clearer or when the rewards were more noisy.

Fig 3. Simulated policies for the AC-HAFVF as a function of reward variance βj and number of effective observations κj, for a fixed value of posterior mean rewards (μ1 = −μ2 = 1), shape parameter α1 = α2 = 3, threshold ζ = 2, start point z0 = ζ/2 and τ = 0.

A. Choices were more random for noisier reward distributions (i.e. high values of βj) and for mean estimates with a higher variance (i.e. with a lower number of observations κj). B. Decisions were faster when the difference of the means was clearer (high κj) and when the reward distributions were noisy (high βj). Subjects were slower to decide what to do for noisy mean values but precise rewards, reflecting the high cognitive cost of the decision process in these situations.

Besides the decision stage, the computational cost of the inference step can also be determined. To this end, one must first consider that the HAFVF updates the variational posterior using a natural gradient-based [80] approach with a Fisher preconditioning matrix [58, 81, 82]: the learner computes a gradient of the ELBO wrt the variational parameters, then moves in the direction of this gradient with a step length proportional to the posterior variance of the loss function. Because of this, the divergence between the true posterior probability p(z|x≤j) and the prior probability p(z) (i.e. the mixture of the default distribution and previous posterior) directly conditions the expected number of updates required for the approximate posterior to converge to a minimum DKL[q||p] (see for instance [83]). Also, from a more frequentist perspective and at the between-trial time scale, the convergence rate of the posterior probability towards the true (if any) model configuration is faster when the KL divergence between this posterior and the prior is small [84]: in other words, in a stable environment, a higher confidence in past experience will require fewer observations for the same rate of convergence, because it will tighten the distance between the prior and posterior at each time step.

These three aspects of computational cost (for decision, for within-trial inference and for across-trial inference) can justify the choice (or emergence) of less flexible behaviours, as they ultimately maximize the reward rate [74, 85]: indeed, such strategies will lead in a stable environment to faster decisions, to faster inference at each time step and to a more stable and accurate posterior.

Algorithm 2: AC-HAFVF. For simplicity, the NIGDM process has been discretized.

input: prior belief {θ0, ϕ0, β0}

1 for j = 1 to J do

2 Actor: NIGDM;

input: Start point z0, threshold ζ, non-decision time τ

3 k ← 0;

4 sample for i = {1, 2};

5 while 0 < zk < ζ do

6 k += 1;

7 sample ;

8 move zk += δz;

9 end

10 select ;

11 Get reward rj = r(sj, aj), Observe transition sj+1 = j(sj, aj);

12

13 Critic: HAFVF;

14 ELBO ← −∞;

15 while |δL| > 10⁻³ do

16 update using CVMP;

17 update {ϕj, βj} using NCVMP;

18 ;

19 ;

20 end

21 end

Fitting the AC-HAFVF

So far, we have provided all the necessary tools to simulate behavioural data using the AC-HAFVF. It is now necessary to show how to fit model parameters to an acquired dataset. We will first describe how this can be done in a Maximum Likelihood framework, before generalizing this method to Bayesian inference using variational methods.

The problem of fitting the AC-HAFVF to a dataset can be seen as a State-Space model fitting problem. We consider the following family of models:

| (21) |

and tj,n stands for the reaction time associated with the state-action pair (s, a) of the subject n at the trial j. Unlike many State-Space models, we have made the assumption in Eq 21 that the transition model Ωj,n = f(Ωj−1,n, Ω0,n, xj−1,n) is entirely deterministic given the subject prior Ω0,n and the observations x<j, which is in accordance with the model of decision making presented in the section The actor: Decision making under the HAFVF. Note that the Bayesian procedure we will adopt hereafter is formally identical to considering that the drift and noise are drawn according to the rules defined in that section, making this model equivalent to:

Quite importantly, we have made the assumption in Eq 21 that the threshold, the non-decision time and the start-point were fixed for each subject throughout the experiment. This is a strong assumption, that might be relaxed in practice. To simplify the analysis, and because it is not a mandatory feature of the model exposed above, we do not consider this possibility here and leave it for further developments.

We can now treat the problem of fitting the AC-HAFVF to behavioural data as two separate sub-problems: first, we will need to derive a differentiable function that, given an initial prior Ω0,n and a set of observations xn produces a sequence , and second (Maximum a Posteriori estimate of the AC-HAFVF section) a function that computes the probability of the observed behaviour given the current variational parameters.

Maximum a Posteriori estimate of the AC-HAFVF

The update equations described in the Update equation section enable us to generate a differentiable sequence of approximate posterior parameters Ωj,n given some prior Ω0,n and a sequence of choices-rewards x. We can therefore reduce Eq 21 to a loss function of the form

whose gradient wrt Ω0,n can be efficiently computed using the chain rule:

Recall that is the Jacobian (i.e. the matrix of partial derivatives) of Ωj,n wrt each of the elements of Ω0,n that are optimized, and is the gradient of the loss function (i.e. the NIGDM) wrt the output of f(⋅).

As the variational updates that lead to the evaluation of Ωj,n are differentiable, the use of VB makes it possible to use automatic differentiation to compute the Jacobian of Ωj,n wrt Ω0,n.

The next step is to derive the loss function log p(yj,n|Ωj,n, ζn, z0n, τn). In this log-probability density function, the local, trial-wise parameters

| (22) |

have been marginalized out. This makes its evaluation hard to implement with conventional techniques. Variational methods can be used to retrieve an approximate Maximum A Posteriori (MAP) estimate in these cases [86]. The method is detailed in Appendix G. Briefly, VB is used to compute a lower bound (ℓj,n ≤ log p(yj,n|Ωj,n, ζn, z0n, τn)) to the marginal posterior probability described above for each trial. Instead of optimizing each variational parameter independently, we optimize the parameters ρ of an inference network [87] that maps the current HAFVF approximate posterior parameters Ωj,n and the data yj,n to each trial-specific approximate posterior. This greatly amortizes the cost of the optimization (hence the name Amortized Variational Inference), as the nonlinear mapping (e.g. multilayered perceptron) h(yj,n;ρ) can provide the approximate posterior parameters of any datapoint, even if it has not been observed yet. We chose qρ(χj,n|yj,n) to be a multivariate Gaussian distribution, which leads to the following form of variational posterior:

| (23) |

and is the lower Cholesky factor of . Another consideration is that, in order to use the multivariate normal approximate posterior, the sampled variances, which are bounded below by zero, must be mapped to an unbounded space. We used the inverse softplus transform λ ≜ log(exp(⋅) − 1) because, in contrast with the exponential mapping, the softplus function has a bounded gradient, which prevents numerical overflow. We found that this simple trick could greatly regularize the optimization process. However, this transformation of the normally distributed variables by λ⁻¹(⋅) requires us to correct the ELBO by the log-determinant of the Jacobian of the transform [54], which for the softplus transform of x is simply −log(1 + exp(−x)).
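The positivity transform discussed above can be written in a numerically stable way. The sketch below is a generic illustration rather than the implementation used in the paper; the function names are hypothetical, and the log-Jacobian uses the fact that the derivative of the softplus is the logistic sigmoid.

```python
import math


def softplus(x: float) -> float:
    """log(1 + exp(x)), computed without overflow for large |x|."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def inverse_softplus(y: float) -> float:
    """log(exp(y) - 1) for y > 0, computed without overflow."""
    if y <= 0.0:
        raise ValueError("y must be strictly positive")
    return y + math.log(-math.expm1(-y))


def log_jacobian_softplus(x: float) -> float:
    """log of d softplus(x) / dx, i.e. log sigmoid(x) = -softplus(-x)."""
    return -softplus(-x)
```

Because the sigmoid never exceeds 1, the gradient of the softplus stays bounded, whereas the gradient of an exponential mapping grows without limit for large inputs.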

The same transformation can be used for the parameters of θ0 that are required to be greater than 0 (i.e. all parameters except μ0), which obviously do not require any log-Jacobian correction.

In Eq 22, we have made explicit the fact that we used three latent variables: the drift rate and the two action-specific noise parameters. This is due to the fact that, unfortunately, the distribution of the sum of two Inverse-Gamma distributed random variables does not have a closed-form formula, making the use of a single random variable challenging, whereas it can be done easily for ξj,n = μj,n(a1) − μj,n(a2), which is normally distributed (see Eq 19).

The final step to implement a MAP estimation algorithm is to set a prior for the parameters of the model. We used a simple L2 regularization scheme, which consists trivially in a normal prior over all parameters, mapped onto an unbounded space if needed.

Algorithm 3 shows how the full optimization proceeds.

Algorithm 3: MAP estimate of AC-HAFVF parameters.

input: Data x = {rj,n, yj,n for j = 1 to J, n = 1 to N}

1 initialize Ωn = {θ0, ϕ0, β0}n for n = 1 to N and IN§ weights ρ;

2 repeat

3 set L ← 0;

4 for n = 1 to N do

5 Set ;

6 for j = 1 to J do

7 Learning Step: HAFVF;

8 Get Ωj,n = f(Ωj−1,n, Ω0,n, xj−1,n)

9 and Jacobian wrt Ω0,n: using FAD*;

10 Decision Step: NIGDM;

11 Get ELBO ℓj,n of using AVI†;

12 and corresponding gradient using RAD‡;

13 Increment L += ℓj,n;

14 Compute gradient wrt Ω0,n using the chain rule:

15 ;

16 Increment gradient of DDM and IN parameters

17 ;

18 end

19 L2-norm regularization:

20 ;

21 ;

22 end

23 Perform gradient step for a small η;

24 until Some convergence criterion is met;

§ IN = Inference Network, * FAD = Forward Automatic Differentiation, † AVI = Amortized Variational Inference, ‡ RAD = Reverse Automatic Differentiation (i.e. backpropagation).

Results

We now present four simulated examples of the (AC-)HAFVF in various contexts. The first example compares the performance of the HAFVF to the HGF [23, 24] in a simple contingency change scenario. The second example provides various case scenarios in a changing environment, illustrating the trade-off between flexibility and the precision of the predictions (Learning and flexibility assessment section), including cases where agents fail to adapt to contingency changes following prolonged training in a stable environment, as commonly observed in behavioural experiments [5]. The third example shows that the HAFVF can be efficiently fitted to an RL dataset using the method described in the Fitting the AC-HAFVF section. The fourth and final example shows how this model behaves in multi-stage environments, and compares various implementations.

Adaptation to contingency changes and comparison with the HGF

In order to compare the performance of our model to the HGF, we generated a simple dataset consisting of a noisy square-wave signal of two periods of 200 trials, alternating between two normally distributed random variables ( and ). We fitted both a Gaussian HGF and the HAFVF to this simple dataset by finding the MAP parameter configuration for both models. The default configuration of the HGF was used, whereas in our case we put a normal hyperprior of on the parameters (with inverse softplus transform for parameters needing positive domains).

This fit constituted the first part of our experiment, which is displayed on the left part of Fig 4. We compared the quadratic approximations of the Maximum Log-model evidences [88] for both models, which for the HAFVF reads

where H is the Hessian of the log-joint at the mode and M is the number of parameters of the model. We found a value of −186.42 for the HAFVF and −204.73 for the HGF, making the HAFVF a better model of the data, with a Bayes Factor [89] greater than 8 × 10⁷.
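The relation between the two log-evidence values and the reported Bayes Factor can be checked with a short script. The sketch below also includes the textbook form of the quadratic (Laplace) approximation, log p(x) ≈ log p(x, θ*) + (M/2) log 2π − ½ log|−H|; this form is stated here as an assumption, since it may differ in presentation from the expression used in the paper. Function names are hypothetical and NumPy is assumed.

```python
import math

import numpy as np


def laplace_log_evidence(log_joint_at_mode, hessian):
    """Textbook Laplace approximation of the log model evidence."""
    H = np.asarray(hessian, dtype=float)
    eigenvalues = np.linalg.eigvalsh(-H)
    if eigenvalues.min() <= 0.0:
        raise ValueError("the Hessian must be negative definite at the mode")
    log_det = float(np.sum(np.log(eigenvalues)))
    M = H.shape[0]
    return log_joint_at_mode + 0.5 * M * math.log(2.0 * math.pi) - 0.5 * log_det


def bayes_factor(log_evidence_a, log_evidence_b):
    """Ratio of model evidences computed from their logarithms."""
    return math.exp(log_evidence_a - log_evidence_b)
```

Calling `bayes_factor(-186.42, -204.73)` exponentiates a difference of 18.31 in log evidence, which gives a ratio just under 9 × 10⁷, consistent with the bound quoted in the text.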

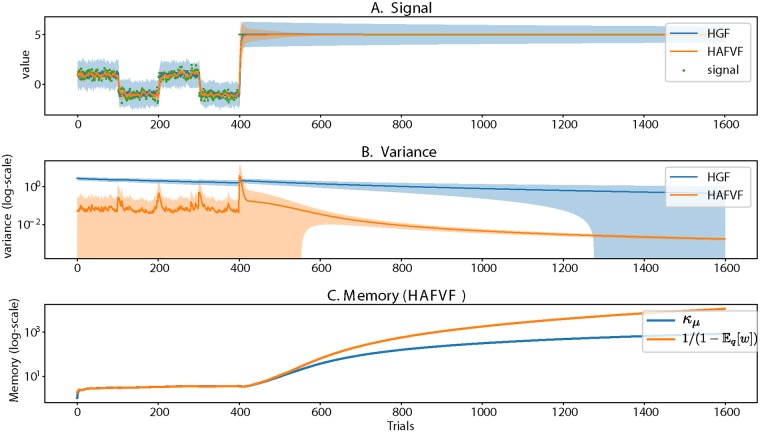

Fig 4. HAFVF and HGF performance on the same dataset.

Shaded areas represent the ±3 standard error interval. The two models were fitted to the first 400 trials, and then tested on the whole trace of observations. A. Observations, mean and standard error of the mean estimated by both models. B. The variance estimates show that the HAFVF adapted better to the variance in the first part of the experiment, reflected better the surprise at the contingency change and successfully adapted its estimate when the environment was highly stable. The HGF, on the contrary, rapidly degenerated its estimate of the variance, and did not show a significant trace of surprise when the contingency was altered. C. Effective memory of the HAFVF, represented by the approximate posterior parameter κμ, and maximum memory (efficient memory, see Bayesian Q-Learning and the problem of flexibility) allowed by the model at each trial.

The second part of the experiment consisted in adding to this 400-trial signal a 1200-trial signal of input situated at y = 5. We evaluated for both models the quality of the fit obtained when using the parameter configurations resulting from the fit of the first part of the experiment (first 400 trials, or training dataset) (Fig 4, right part), on the remaining dataset (following 1200 trials, i.e. testing dataset). An optimal agent in such a situation should first account for the surprise associated with the sudden contingency change, and then progressively reduce its expected variance estimate to reflect the steadiness of the environment. We considered the capacity of both models to account for new data for a given parameter configuration as a measure of their flexibility. This test was motivated by the observation that a change detection algorithm has to be able to detect changes at test time that might be qualitatively different from changes at training time. A financial crisis is for instance an event that is in essence singular and unseen in the past (otherwise it would have been prevented). The algorithm should nevertheless be able to detect it efficiently.

The HGF was unable to exhibit the expected behaviour: it hardly adapted its estimated variance to the contingency change and did not adjust it significantly afterwards. This contrasted with the HAFVF, in which we observed initially an increase in the variance estimate at the point of contingency change (reflecting a high surprise), followed by progressively decreasing variance estimate, reflecting the adaptation of the model to the newly stable environment.

Together, these results are informative of the comparative performance of the two algorithms. The model evidence was larger for the HAFVF than for the HGF by several orders of magnitude, showing that our approach modelled the data at hand better than the HGF. Moreover, the lack of generalization of the HGF to a simple, new signal not used to fit the parameters shows that this model tended to overfit the data, as can be seen from the estimated variance at the time of the contingency change.

Importantly, this capability of the HAFVF to account for unseen volatility changes did not need to be instructed through the selection of the model hyperparameters: it is a built-in feature of the model.

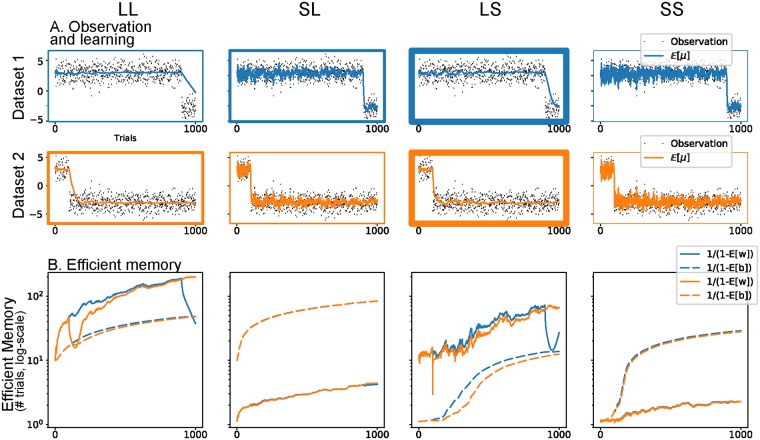

Learning and flexibility assessment

In the following datasets, we simulated the learning process of four hypothetical subjects differing in their prior distribution parameters ϕ0, β0, whereas we kept θ0 fixed for all of them (Table 2). The subject parameters were chosen to generate limit and opposite cases of each expected behaviour. With these simulations, we aimed to show how the prior belief on the two levels of forgetting conditioned the adaptation of the subject in case of a contingency change (CC, Experiment 1) or of an isolated unexpected event (Experiment 2).

Table 2. This table summarizes the parameters of the beta prior of the two forgetting factors w and b used in the Learning and flexibility assessment section, as well as the initial prior over the mean and variance.

A low value of the initial number of observations κ0 was used, in order to give the learner a large prior variance over the value of the mean. Each subject will be referred to by its expected memory at the lower and higher level (i.e. L = long, S = short memory). For instance, subject number 3 (LS) is expected to have a long first-level memory but a short second-level memory, which should make her more flexible after a long training than subject 2 (SL), who has a short first-level memory but a long second-level memory.

| Subject | Memory | μ0 | κ0 | α0 | β0 | w prior, shape 1 | w prior, shape 2 | b prior, shape 1 | b prior, shape 2 |
|---|---|---|---|---|---|---|---|---|---|
| (1) | LL | 0 | 0.1 | 1 | 1 | 4.5 | 0.5 | 4.5 | 0.5 |
| (2) | SL | 0 | 0.1 | 1 | 1 | 0.5 | 4.5 | 4.5 | 0.5 |
| (3) | LS | 0 | 0.1 | 1 | 1 | 4.5 | 0.5 | 0.5 | 4.5 |
| (4) | SS | 0 | 0.1 | 1 | 1 | 0.5 | 4.5 | 0.5 | 4.5 |
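
To make the link between the beta prior parameters and the subject labels concrete, the following minimal sketch computes the prior expected value of each forgetting factor from the values in Table 2. It assumes that the first pair of beta parameters in each row belongs to the first-level factor w and the second pair to the second-level factor b, and that each pair is ordered so that the prior mean is a / (a + b); the names `PRIORS`, `beta_mean` and `prior_expectations` are illustrative only.

```python
# Beta-prior shape parameters read from Table 2.
# ASSUMPTION: the first pair is the prior over w (first level),
# the second pair is the prior over b (second level).
PRIORS = {
    "LL": ((4.5, 0.5), (4.5, 0.5)),
    "SL": ((0.5, 4.5), (4.5, 0.5)),
    "LS": ((4.5, 0.5), (0.5, 4.5)),
    "SS": ((0.5, 4.5), (0.5, 4.5)),
}


def beta_mean(a, b):
    """Mean of a Beta(a, b) distribution."""
    return a / (a + b)


def prior_expectations(priors=PRIORS):
    """Return {label: (E[w], E[b])} under the beta priors."""
    return {
        label: (beta_mean(*w), beta_mean(*b))
        for label, (w, b) in priors.items()
    }


if __name__ == "__main__":
    for label, (e_w, e_b) in prior_expectations().items():
        print(f"{label}: E[w] = {e_w:.1f}, E[b] = {e_b:.1f}")
```

Under these assumptions, an "L" corresponds to a prior expected forgetting factor of 0.9 and an "S" to 0.1, which matches the long and short memory labels.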

In both experiments, these agents were confronted with a stream of univariate random variables from which they had to learn the trial-wise posterior distribution of the mean and standard deviation.

In Experiment 1, we simulated the learning of these agents in a steady environment followed by an abrupt CC, occurring either after a long (900 trials) or a short (100 trials) training. The signal r = {r1, r2, …, rn} was generated according to a Gaussian noise with mean μ = 3 before the CC and μ = −3 after the CC, and a constant standard deviation σ = 1. Fig 5 summarizes the results of this first simulation. During the training phase, the subjects with a long memory on the first level learned the observation value faster than others. Conversely, the SS subject took a long time to learn the current distribution. More interesting is the behaviour of the four subjects after the CC. In order to see which strategy reflected best the data at hand, we computed the average of the ELBOs for each model. The winning agent was the Long-Short memory, irrespective of training duration, because it was better able to adapt its memory to the contingency.
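
The observation stream of Experiment 1 can be sketched as follows. This is only an illustration of the generative process described above (Gaussian observations with mean 3 before the CC, mean −3 after it, and σ = 1). The number of post-CC trials (`n_post`), the random seed and the function name `simulate_cc_stream` are assumptions that do not come from the text.

```python
import numpy as np


def simulate_cc_stream(n_train, n_post=200, mu_pre=3.0, mu_post=-3.0,
                       sigma=1.0, seed=0):
    """Gaussian stream whose mean switches abruptly after `n_train` trials.

    ASSUMPTION: `n_post` (number of trials after the contingency change)
    and `seed` are hypothetical; the text does not specify them.
    """
    rng = np.random.default_rng(seed)
    means = np.concatenate([
        np.full(n_train, mu_pre),   # steady environment before the CC
        np.full(n_post, mu_post),   # new contingency after the CC
    ])
    return means + sigma * rng.standard_normal(means.size)


# Long (900 trials) and short (100 trials) training before the CC.
long_training = simulate_cc_stream(n_train=900)
short_training = simulate_cc_stream(n_train=100)
```

Each array can then be fed trial by trial to any online learner in order to compare adaptation after long and short training.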

Fig 5. HAFVF predictions after a CC.

Each column displays the results of a specific hyperparameter setting. The blue traces and subplots represent learning in an experiment with a long training, whereas the orange traces and subplots show learning in a short training experiment. A. The streams of observations in the two training cases are shown together with the average posterior expected value of the mean. The box line width illustrates the ranking of the ELBO of each specific configuration for the dataset considered, with bolder borders corresponding to larger ELBOs. For both training conditions, the winning model (i.e. the model that best reflected the data) was the Long-Short memory model. This can be explained by the fact that the first two models trusted their initial knowledge too much after the CC, whereas the Short-Short learner was too cautious. B. Efficient memory (defined as ) for the first level (, plain line) and second level (, dashed line).

The two levels had a different impact on the flexibility of the subjects: the first level indicated how much a subject should trust his past experience when confronted with a new event, and the second level measured the stability of the first level. On the one hand, subjects with a low prior on first-level memory were too cautious about the stability of the environment (i.e. expected volatile environments) and failed to learn adequately the contingency at hand. On the other hand, after a long training, subjects with a high prior on second-level memory tended to over-trust environment stability, compared to subjects with a low prior on second level memory, impairing their adaptation after the CC.

The expected forgetting factors also shed light on the underlying learning process occurring in the four subjects: grew or was steady until the CC for the four subjects, even for the SL subject which showed a rapid growth of during the first trials, but failed to reduce it at the CC. In contrast, the LS subject did not exhibit this weakness, but rapidly reduced its expectation over the stability of the environment after the CC thanks to her pessimistic prior belief over b.

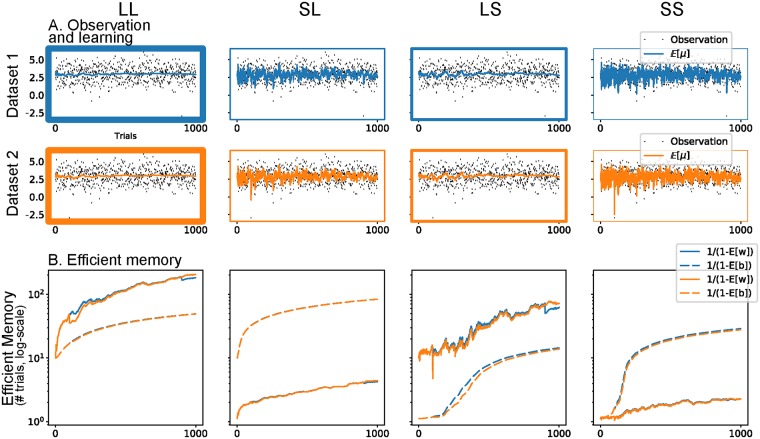

In Experiment 2, we simulated the effect of an isolated, unexpected event (rj = −3) after long and short training with the same distribution as before. For both datasets, we focused our analysis on the value of the expected forgetting factors and , as well as the effective memory of the agents, represented by the parameter κμ. As noted earlier, the value of sets an upper bound (the efficient memory) on κμ, which represented the actual number of trials kept in memory up to the current trial.

Fig 6 illustrates the results of this experiment. Here, the flexible agents (with a low memory on either the first or second memory level, or both) were disadvantaged with respect to the low-flexibility agent (mostly LL). Indeed, following the occurrence of a highly unexpected observation, one can observe that the memory of the LS learner dropped after either long or short training. The LL learner, instead, was able to cushion the effect of this outlier, especially after a long training, making it the best of the four learners on these datasets (Table 3).

Fig 6. HAFVF predictions after an isolated unexpected event.

The figure is structured as Fig 5, to which we refer for a more detailed description. Here, the winning model was the one with a high memory on the first and second levels.

Table 3. Average ELBOs for Experiment 1 and 2.

Higher ELBOs stand for more probable models.

| Dataset | Exp. 1: LL | Exp. 1: SL | Exp. 1: LS | Exp. 1: SS | Exp. 2: LL | Exp. 2: SL | Exp. 2: LS | Exp. 2: SS |
|---|---|---|---|---|---|---|---|---|
| 1 | -1.719 | -1.657 | -1.62 | -1.863 | -1.459 | -1.652 | -1.501 | -1.863 |
| 2 | -1.526 | -1.649 | -1.519 | -1.866 | -1.453 | -1.648 | -1.495 | -1.866 |
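
As a reading aid, the comparison in Table 3 amounts to selecting, for each experiment and dataset, the agent with the largest average ELBO. The short sketch below does this with the values of Table 3; the names `ELBOS` and `winners` are illustrative.

```python
# Average ELBOs copied from Table 3, keyed by (experiment, dataset).
ELBOS = {
    ("Experiment 1", 1): {"LL": -1.719, "SL": -1.657, "LS": -1.62, "SS": -1.863},
    ("Experiment 1", 2): {"LL": -1.526, "SL": -1.649, "LS": -1.519, "SS": -1.866},
    ("Experiment 2", 1): {"LL": -1.459, "SL": -1.652, "LS": -1.501, "SS": -1.863},
    ("Experiment 2", 2): {"LL": -1.453, "SL": -1.648, "LS": -1.495, "SS": -1.866},
}


def winners(elbos=ELBOS):
    """Return the agent with the highest average ELBO for each entry."""
    return {key: max(scores, key=scores.get) for key, scores in elbos.items()}


if __name__ == "__main__":
    for (experiment, dataset), agent in winners().items():
        print(f"{experiment}, dataset {dataset}: {agent}")
```

The LS agent has the highest ELBO for both datasets of Experiment 1, and the LL agent for both datasets of Experiment 2, in line with the description of Figs 5 and 6.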

Fitting the HAFVF to a behavioural task

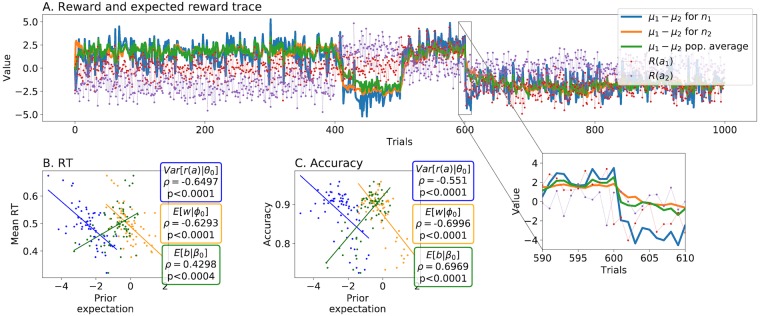

The model we propose has a large number of parameters, and overfitting could be an issue. To show that inference about the latent variables of the model depicted in the Fitting the AC-HAFVF section is possible, we simulated a dataset of 64 subjects performing a simple one-stage behavioural task, which consisted of trying to choose, at each trial, the action leading to the maximum reward. In any given trial, the two possible actions (e.g. left or right button press) were associated with different, normally distributed rewards with a varying mean and a fixed standard deviation: for the first action (a1), the reward had a mean of 0 for the first 500 trials, then switched abruptly to +2 for 100 trials and then to −2 for the rest of the experiment. The second action value was identically distributed but in the opposite order and with the opposite sign (Fig 7). This pattern was chosen in order to test the flexibility of each agent after an abrupt CC: after the first CC, an agent completely discarding exploration in favour of exploitation would miss the CC. The second and third CCs tested how fast the simulated agents adapted to the observed CC.

Fig 7. Simulated behavioral results.

A. The values of the two available rewards are shown with the dotted lines. The average drift rate μ1 − μ2 is shown in plain lines for two selected simulated subjects n1 and n2, and population average. Subject n1 was more flexible than subject n2 on both the first and the second level, making her more prone to adapt after the CCs, situated at trials 400, 500 and 600. This result is highlighted in the underlying zoomed box. B. The subjects’ expected variance (blue, log-valued) correlated negatively with the mean RT. The same correlation existed with the expected stability on the first level (orange, logit-valued), but not with the second level, which correlated positively with the average RT (green, logit-valued). Pearson correlation coefficient and respective p-values are shown in rounded boxes. C. Similarly, subjects with a higher expected variance and first-level stability had a lower average accuracy. Again, second-level memory expectation had the opposite effect.

Individual prior parameters and thresholds ζn were generated as follows: we first looked for the L2-regularized MAP estimates of these parameters that led to the maximum total reward:

using a Stochastic Gradient Variational Bayes (SGVB) [90] optimization scheme. With a negligible loss of generality, the prior mean μ0 was considered to be equal to 0 for all subjects for both the data generation and the fitting procedures.

We then simulated individual priors centered around this value with a covariance matrix arbitrarily sampled as

where is an inverse-Wishart distribution with n degrees of freedom. This choice of prior led to a large variability in the values of the AC-HAFVF parameters, except for the NIGDM threshold, whose variance was set to a sufficiently low value (hence the 0.1 value in the prior scale matrix) to keep the learning performance high.

This method ensured that each and every parameter set was centered around an unknown optimal policy. This approach was motivated by the need to avoid strong constraints on the data generation pattern while keeping behaviour close to optimal, as might be expected from a healthy population. The other DDM parameters, νn and τn, were generated according to a Gaussian distribution centered on 0 and 0.3, respectively. Simulated subjects with a performance lower than 70% were rejected and re-sampled to avoid irrelevant parameter patterns.

Learning was simulated according to the Continuous Learning strategy (see Counterfactual learning), because it was supposed to link more comprehensively the tendency to explore the environment with the choice of prior parameters Ω0. Choices and RT were then generated according to the decision process described in The actor: Decision making under the HAFVF section, using the algorithm described by [91]. Fig 7 shows two examples of the simulated behavioural data.

The behavioural results showed a clear tendency of subjects with a large expected variance in action selection to act faster and less precisely than others. This follows directly from the structure of the NIGDM: a larger variance of the drift rate leads to faster but less precise policies. More interesting is the negative correlation between the expected stability and both the reward rate and the average reaction time: this shows that the AC-HAFVF was able to encode a form of subject-wise computational complexity of the task. Indeed, a large stability expectation leads subjects to trust their past experience more, thereby decreasing the expected reward variance after a long training, but it also leads to a lower capacity to adapt to CCs. For subjects with a low expectation of stability, the second-level memory was able to instruct the first level to trust past experience when needed, as the positive correlation between accuracy and upper-level memory shows.

Fitting results

The fit was achieved with the Adam [92] Stochastic Gradient Descent optimizer with parameters s = 0.005, β1 = 0.9, β2 = 0.99, where s decreased with a rate where i is the iteration number of the SGD optimizer. We used the following annealing procedure to avoid local minima: at each iteration, the whole set of parameters was sampled from a diagonal Gaussian distribution with covariance matrix 1/i. This simple manipulation greatly improved the convergence of the algorithm.
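
The annealing procedure can be illustrated with the following minimal sketch, which applies a hand-written Adam update (s = 0.005, β1 = 0.9, β2 = 0.99) to a toy quadratic loss. Several points are assumptions rather than a description of the actual fitting code: the sampled parameters are taken to be centered on the current estimate, the gradient is evaluated at the sampled point, the step size s is kept constant (its decay schedule is not reproduced here), and the toy loss, the function name `adam_with_annealed_noise` and the number of iterations are purely illustrative.

```python
import numpy as np


def adam_with_annealed_noise(grad, x0, n_iter=3000, s=0.005,
                             beta1=0.9, beta2=0.99, eps=1e-8, seed=0):
    """Adam in which the gradient is evaluated at a noisy copy of the parameters.

    ASSUMPTIONS: the noise is centered on the current estimate, its
    covariance is I / i at iteration i, and the step size `s` is constant.
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x0, dtype=float).copy()
    m = np.zeros_like(x)  # first-moment (mean) estimate
    v = np.zeros_like(x)  # second-moment (uncentered variance) estimate
    for i in range(1, n_iter + 1):
        # Annealing step: sample parameters from N(x, I / i).
        x_sample = x + rng.standard_normal(x.shape) / np.sqrt(i)
        g = grad(x_sample)
        # Standard Adam moment updates with bias correction.
        m = beta1 * m + (1.0 - beta1) * g
        v = beta2 * v + (1.0 - beta2) * g ** 2
        m_hat = m / (1.0 - beta1 ** i)
        v_hat = v / (1.0 - beta2 ** i)
        x -= s * m_hat / (np.sqrt(v_hat) + eps)
    return x


# Toy loss 0.5 * ||x - target||^2, whose gradient is x - target.
target = np.array([1.0, -2.0])
x_fit = adam_with_annealed_noise(lambda x: x - target, x0=np.zeros(2))
```

Because the noise variance shrinks as 1/i, early iterations explore a wide region of the parameter space, whereas late iterations behave almost like plain Adam.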

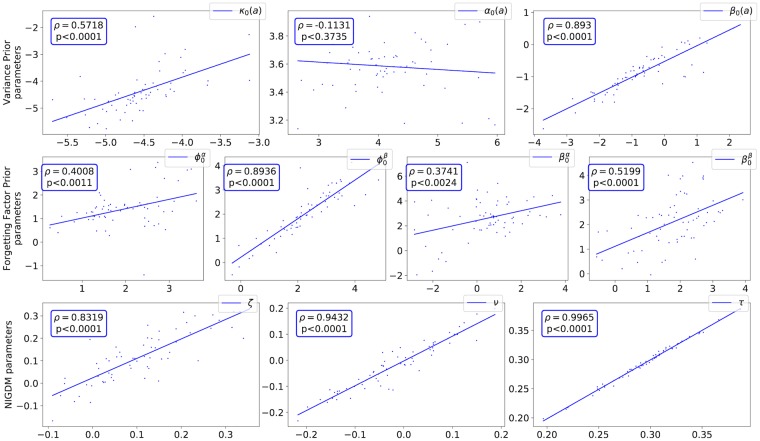

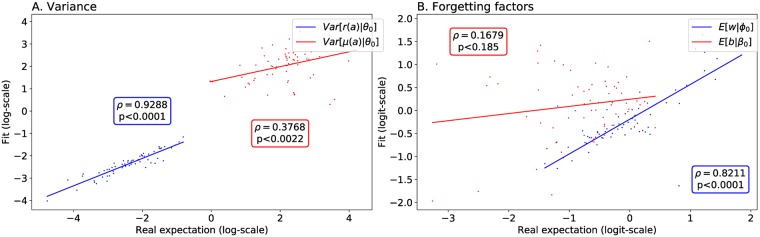

The MAP fit of the model is displayed in Fig 8. In general, the posterior estimates of the prior parameters were well correlated with their true value, except for the prior shape parameter α0. This lack of correlation did not, however, greatly harm the fit of the variance (see below), showing that the model fit was able to accurately recover the expected prior variability of each subject, which depended on α0. The NIGDM parameters were highly correlated with their original value.

Fig 8. Correlation between the true (x axis) and the posterior estimate (y axis) of the parameters of the prior distributions across subjects.

The first row displays the correlations between true value and estimated θ0. The second row focuses on ϕ0 and β0, whereas the third row shows the correlations for the NIGDM parameters (threshold, relative start-point and non-decision time). Correlation coefficients and associated p-value (with respect to the posterior expected value) are displayed in blue boxes. All parameters are displayed in the unbounded space they were generated from. Overall, all parameters correlated well with their true value, except for the α0(a).

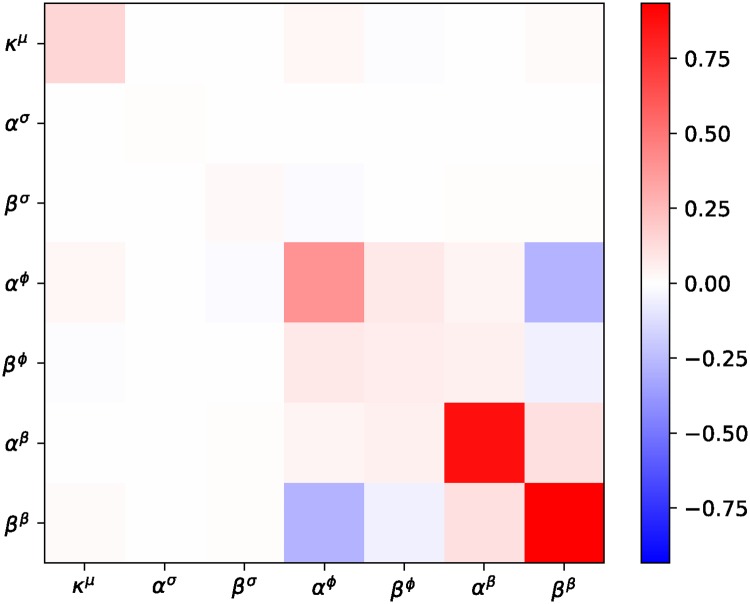

In order to evaluate the identifiability of our model, we computed the quadratic approximation to the posterior covariance of the fitted HAFVF parameters. The average result, displayed in Fig 9, shows that the covariance between model parameters was low, except at the top level. This indicates that each parameter had a distinguishable effect on the loss. The higher variance and covariance of the parameters αβ and ββ relate to the fact that the influence of these prior parameters vanishes as more and more data are observed, in accordance with the Bernstein-von Mises theorem. In practice, this means that αβ and ββ should not be given a behavioural interpretation but should rather be viewed as regularizers of the model.
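
For readers unfamiliar with this type of approximation, the sketch below shows one common way to obtain it: the posterior covariance is approximated by the inverse of the Hessian of the negative log posterior evaluated at the mode (a Laplace approximation). We assume here that this is the form of quadratic approximation intended; the finite-difference Hessian, the toy Gaussian log posterior and the function names are illustrative and unrelated to the actual HAFVF loss.

```python
import numpy as np


def numerical_hessian(f, x, h=1e-4):
    """Central finite-difference Hessian of a scalar function f at x."""
    x = np.asarray(x, dtype=float)
    n = x.size
    hess = np.zeros((n, n))
    for i in range(n):
        e_i = np.zeros(n)
        e_i[i] = h
        for j in range(n):
            e_j = np.zeros(n)
            e_j[j] = h
            hess[i, j] = (f(x + e_i + e_j) - f(x + e_i - e_j)
                          - f(x - e_i + e_j) + f(x - e_i - e_j)) / (4.0 * h ** 2)
    return hess


def laplace_covariance(neg_log_post, mode):
    """Quadratic approximation: covariance = inverse Hessian at the mode."""
    hess = numerical_hessian(neg_log_post, mode)
    hess = 0.5 * (hess + hess.T)  # enforce symmetry against rounding error
    return np.linalg.inv(hess)


# Toy example: a Gaussian negative log posterior with known covariance.
true_cov = np.array([[1.0, 0.3], [0.3, 0.5]])
precision = np.linalg.inv(true_cov)


def neg_log_post(x):
    return 0.5 * x @ precision @ x


cov = laplace_covariance(neg_log_post, np.zeros(2))
```

In this toy case the approximation recovers the true covariance up to numerical error; small off-diagonal entries of such a matrix indicate that the corresponding parameters have separable effects on the loss.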

Fig 9. Average quadratic approximation to the posterior covariance of the HAFVF parameters at the mode.

We also looked at how the true prior expected value of w and b and the variance correlated with their estimates from the posterior distribution. All of these correlated well with their generative counterparts, with the notable exception of the expected value of the second-level memory (Fig 10). This again confirms the role of b as a regularizer of w.

Fig 10. Correlation between true and expected values of the variances and forgetting factors.

All the fitted values (y axis) are derived from the expected value of θ0 (A., variance) and {ϕ0, β0} (B., forgetting factors) under the fitted approximate posterior distribution. Each dot represents a different subject. A. True (x-axis) to fit (y axis) correlation for the reward (blue) and mean reward (red) variance. Both expected values correlated well with their generative parameter, although the initial number of observations of the gamma prior α0 did not correlate well with its generative parameter. B. True (x-axis) to fit (y axis) correlation for the first (blue) and second (red) level expected forgetting factor.

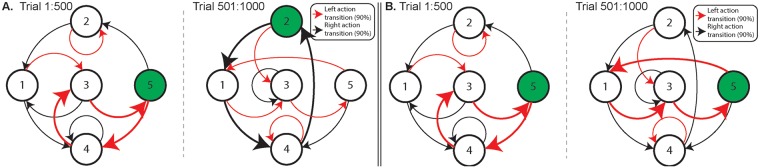

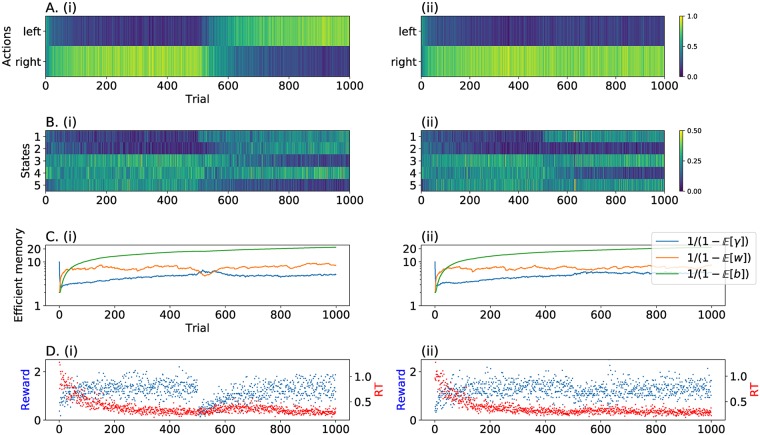

TD learning with the HAFVF